1. Introduction

Information geometry was pioneered by Rao [1] in 1945, and a more concise framework was built up by Chentsov [2], Efron [3,4], and Amari [5]. In information geometry, the object of study is the statistical manifold, which consists of a parameterized family of probability distributions with a topological structure. Given the Fisher information matrix as the Riemannian metric, the distance between any two points (probability distributions) can be calculated [6]. In such a manifold, the distance between two points is an intrinsic measure of the dissimilarity between two probability distributions [7]. As information geometry provides a new perspective on signal processing, it has found many applications. In estimation issues, the natural gradient, which is based on the Riemannian distance, has been employed [8,9,10]. The intrinsic Cramér–Rao bound, which is derived on the Grassmann manifold, is a tighter bound for both biased and unbiased estimators [11]. In addition, the geometric structure (considering the distance between all pairs of points) can be used to evaluate the quality of the observation model, which has been applied in waveform optimization [12]. In optimization problems under matrix constraints, the geometric structure has also been utilized [13,14,15]. Moreover, there are many significant works on detection based on the distance [16,17,18,19,20]. Furthermore, in image processing, target recognition in SAR (Synthetic Aperture Radar) images has been proposed based on the Grassmann manifold [21].

As this general theory has shown its capability to solve statistical problems, the further development of information geometry demands an unambiguous relationship between the geometric structure and the intrinsic characteristics of common issues. This letter focuses on the influence of signal processing on the statistical manifold in estimation issues. In estimation issues, signal processing is the common means of extracting information about a desired parameter. Signal processing changes the geometric structure of the statistical manifold to which the distribution of the observed data belongs. The purpose of this letter is to study this change of geometric structure and to propose an appropriate processing based on it.

This research is presented in the following way. First, according to the essence of estimation issues, the intrinsic parameter submanifold, which reflects the geometric characteristics of the issues, is defined. Then, we show that the statistical manifold becomes a tighter one after processing, and we give the necessary and sufficient condition for signal processing that leaves the geometric structure invariant (named isometric signal processing). For the more specific case in which the processing is linear, a construction method for linear isometric processing is proposed. Moreover, the properties of the constructed processing are presented.

The following notations are adopted in this paper: math italic $x$, lowercase bold italic $\boldsymbol{x}$, and uppercase bold $\mathbf{A}$ denote scalars, vectors, and matrices, respectively. The constant matrix $\mathbf{I}$ indicates the identity matrix. Symbols $(\cdot)^H$, $(\cdot)^T$, and $(\cdot)^*$ indicate the conjugate transpose, the transpose, and the complex conjugate, respectively. In addition, $[\mathbf{A}]_{ij}$ indicates the element in the ith row and jth column of matrix $\mathbf{A}$, and $\mathrm{rank}(\mathbf{A})$ is the rank of matrix $\mathbf{A}$. Moreover, $\mathbf{A} \succeq 0$ means that the matrix $\mathbf{A}$ is positive semidefinite. Finally, $E[\cdot]$ indicates the statistical expectation of a random variable.

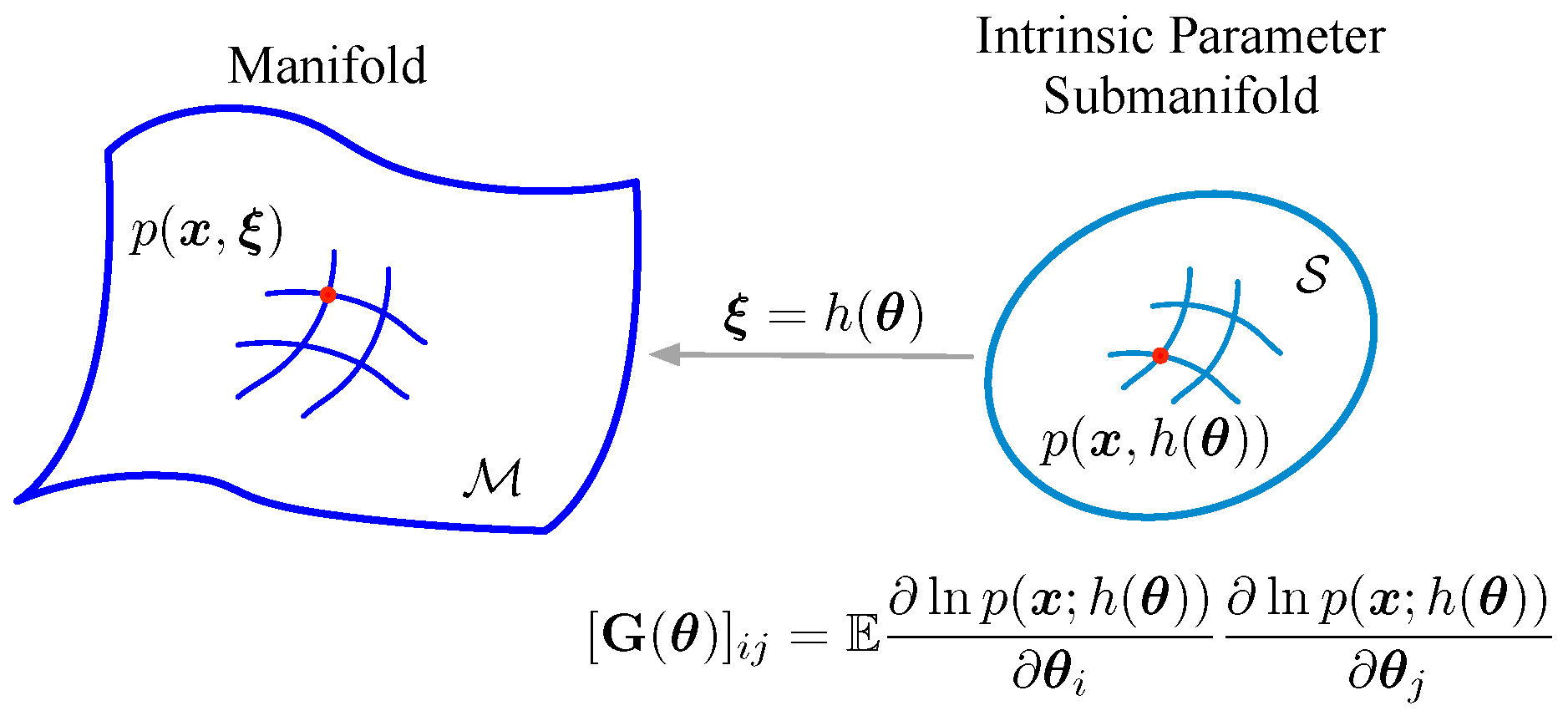

2. Intrinsic Parameter Submanifold

Let $S = \{p(\boldsymbol{x}|\boldsymbol{\theta})\}$ be a statistical manifold with coordinate system $\boldsymbol{\theta}$, which consists of a family of probability distributions. Consider an estimation issue on the statistical manifold $S$; the observed data $\boldsymbol{x}$ belong to one of the probability distributions in $S$. Suppose the desired parameter $\boldsymbol{\xi}$ is implied in parameter $\boldsymbol{\theta}$ and the relation between $\boldsymbol{\theta}$ and $\boldsymbol{\xi}$ can be expressed as a mapping, $\boldsymbol{\theta} = \boldsymbol{\theta}(\boldsymbol{\xi})$. As an instance, in the distance measurement of the pulse-Doppler radar, the desired distance r is embedded in the statistical mean of the observed data, i.e., $\mu(t) = s(t - 2r/c)$ ($s(t)$ means the pulse signal, and c is the velocity of light).

Actually, not all $\boldsymbol{\theta}$ in $S$ are concerned with the estimation issue; the considered probability distributions do not cover the whole manifold but only come from a submanifold, which is the essential manifold in the issue. In the above example, the considered distributions are screened by the pulse signal (the statistical mean can only take the form $s(t - 2r/c)$).

Definition 1 (Intrinsic parameter submanifold). The manifold $M = \{p(\boldsymbol{x}|\boldsymbol{\theta}(\boldsymbol{\xi}))\}$ is the intrinsic parameter submanifold of $S$, with coordinate system $\boldsymbol{\xi}$.

The Riemannian metric of submanifold $M$ is defined as $\mathbf{G}(\boldsymbol{\xi})$, the Fisher information matrix associated with parameter $\boldsymbol{\xi}$, as in Figure 1. The distance between two points on the submanifold is defined by using this Riemannian metric [6].

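Remark 1. When the Fisher information matrices belonging to two observation models satisfy $\mathbf{G}_1 \succeq \mathbf{G}_2$ (i.e., $\mathbf{G}_1 - \mathbf{G}_2 \succeq 0$), the observation model with $\mathbf{G}_1$ is suggested to be better than the other in terms of the estimation problem. The reason is that, by the definition of the distance on the manifold, the distance $d_1$ (defined by $\mathbf{G}_1$) between two parameters is no smaller than $d_2$ (defined by $\mathbf{G}_2$). That means the two parameters are easier to discriminate in the manifold with $\mathbf{G}_1$ than in the one with $\mathbf{G}_2$.

Furthermore, the above remark can also be explained in traditional statistical signal processing. In estimation theory, the Fisher information plays an important role through the CRLB (Cramér–Rao Lower Bound) inequality: the covariance of any unbiased estimator is bounded below by the inverse of the Fisher information matrix, so a larger Fisher information matrix yields a lower bound. Therefore, the same conclusion can be reached in traditional estimation theory.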
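As a minimal sketch of Remark 1, consider a hypothetical scalar model in which $n$ independent samples are drawn from a Gaussian with unknown mean $\theta$ and known standard deviation $\sigma$. The model and the function names below are illustrative assumptions, not taken from this letter. The Fisher information is $n/\sigma^2$, which does not depend on $\theta$, so the geodesic distance between two means is $\sqrt{n}\,|\theta_1 - \theta_2|/\sigma$, and the CRLB is $\sigma^2/n$.

```python
import math


def fisher_information(n_samples: int, sigma: float) -> float:
    """Fisher information about the mean of n i.i.d. N(theta, sigma^2) samples."""
    return n_samples / sigma**2


def fisher_rao_distance(theta1: float, theta2: float,
                        n_samples: int, sigma: float) -> float:
    """Geodesic distance on the 1-D mean submanifold (the metric is constant)."""
    return math.sqrt(fisher_information(n_samples, sigma)) * abs(theta1 - theta2)


def crlb(n_samples: int, sigma: float) -> float:
    """Cramer-Rao lower bound on the variance of an unbiased estimator of the mean."""
    return 1.0 / fisher_information(n_samples, sigma)


# Two observation models that differ only in noise level (assumed values).
d_low_noise = fisher_rao_distance(0.0, 1.0, n_samples=10, sigma=1.0)
d_high_noise = fisher_rao_distance(0.0, 1.0, n_samples=10, sigma=2.0)
```

In this sketch the low-noise model has the larger Fisher information, the larger distance between the same two parameters, and the smaller CRLB, which is the ordering the remark describes.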

3. Signal Processing on the Intrinsic Parameter Submanifold

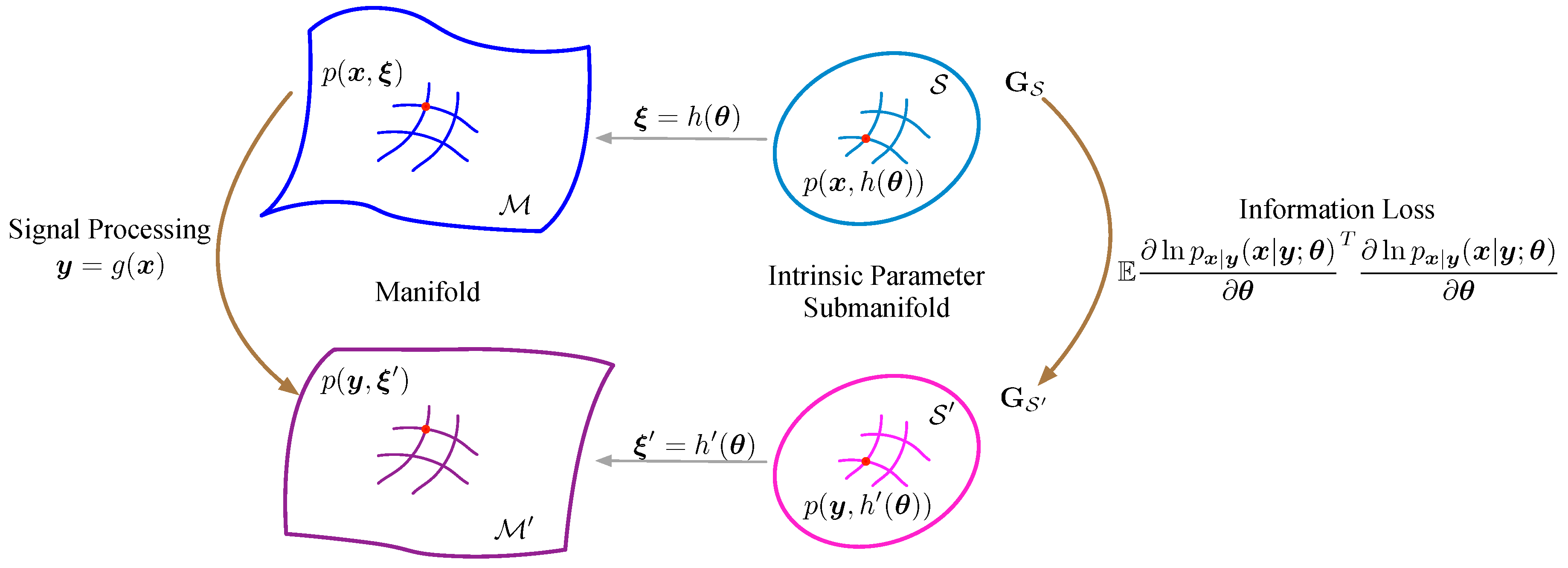

3.1. Geometric Structure Change by Signal Processing

In estimation issues, the signal is often processed into another form to obtain accurate estimates. Consider the signal processing $\boldsymbol{y} = f(\boldsymbol{x})$, where $\boldsymbol{x}$ indicates the original signal and $\boldsymbol{y}$ is the processed signal. Signal processing is often accompanied by a change of the statistical manifold, especially a change of the Riemannian metric.

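One of the most vital factors of the submanifold in terms of estimation issues is its Riemannian metric, because the distance between two parameters, which represents their dissimilarity, is defined by it. Suppose the intrinsic parameter submanifolds of $\boldsymbol{x}$ and $\boldsymbol{y}$ are $M_x$ and $M_y$, respectively. The Riemannian metrics of $M_x$ and $M_y$ are $\mathbf{G}_x(\boldsymbol{\xi})$ and $\mathbf{G}_y(\boldsymbol{\xi})$, respectively. If the PDFs (Probability Density Functions) $p(\boldsymbol{x}|\boldsymbol{\xi})$, $p(\boldsymbol{y}|\boldsymbol{\xi})$, and $p(\boldsymbol{x},\boldsymbol{y}|\boldsymbol{\xi})$ obey the boundary condition [22], then the Fisher information satisfies the following equations [22,23],

$$\mathbf{G}_{x,y}(\boldsymbol{\xi}) = \mathbf{G}_y(\boldsymbol{\xi}) + \mathbf{G}_{x|y}(\boldsymbol{\xi}), \tag{1}$$

$$\mathbf{G}_{x,y}(\boldsymbol{\xi}) \succeq \mathbf{G}_y(\boldsymbol{\xi}), \tag{2}$$

where $\mathbf{G}_{x,y}$ is the Fisher information matrix of the joint PDF $p(\boldsymbol{x},\boldsymbol{y}|\boldsymbol{\xi})$ and $\mathbf{G}_{x|y}$ is that of the conditional PDF $p(\boldsymbol{x}|\boldsymbol{y},\boldsymbol{\xi})$, averaged over $\boldsymbol{y}$. Because $\boldsymbol{y}$ is produced by $\boldsymbol{x}$ via $\boldsymbol{y} = f(\boldsymbol{x})$, the following equation holds:

$$\mathbf{G}_{x,y}(\boldsymbol{\xi}) = \mathbf{G}_x(\boldsymbol{\xi}). \tag{3}$$

Proof. Because $p(\boldsymbol{y}|\boldsymbol{x},\boldsymbol{\xi}) = \delta(\boldsymbol{y} - f(\boldsymbol{x}))$ for $\boldsymbol{y} = f(\boldsymbol{x})$, the joint PDF can be expressed as $p(\boldsymbol{x},\boldsymbol{y}|\boldsymbol{\xi}) = p(\boldsymbol{x}|\boldsymbol{\xi})\,\delta(\boldsymbol{y} - f(\boldsymbol{x}))$, in which the second factor does not depend on $\boldsymbol{\xi}$. The Fisher information can therefore be simplified:

$$[\mathbf{G}_{x,y}]_{ij} = E\!\left[\frac{\partial \ln p(\boldsymbol{x},\boldsymbol{y}|\boldsymbol{\xi})}{\partial \xi_i}\frac{\partial \ln p(\boldsymbol{x},\boldsymbol{y}|\boldsymbol{\xi})}{\partial \xi_j}\right] = E\!\left[\frac{\partial \ln p(\boldsymbol{x}|\boldsymbol{\xi})}{\partial \xi_i}\frac{\partial \ln p(\boldsymbol{x}|\boldsymbol{\xi})}{\partial \xi_j}\right] = [\mathbf{G}_x]_{ij}.$$

□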

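Then, the following lemma holds.

Lemma 1. The Riemannian metrics $\mathbf{G}_x$ and $\mathbf{G}_y$ satisfy, $\forall \boldsymbol{\xi}$:

$$\mathbf{G}_x(\boldsymbol{\xi}) = \mathbf{G}_y(\boldsymbol{\xi}) + \mathbf{G}_{x|y}(\boldsymbol{\xi}),$$

where $\mathbf{G}_{x|y}(\boldsymbol{\xi})$ is the Fisher information matrix of the conditional PDF $p(\boldsymbol{x}|\boldsymbol{y},\boldsymbol{\xi})$.

Proof. By Equations (1) and (3), and the definitions of $\mathbf{G}_x$ and $\mathbf{G}_y$, the lemma is established. □

Corollary 1. For each $\boldsymbol{\xi}$, $\mathbf{G}_x(\boldsymbol{\xi}) - \mathbf{G}_y(\boldsymbol{\xi})$ is a positive semidefinite matrix, i.e., $\mathbf{G}_x(\boldsymbol{\xi}) - \mathbf{G}_y(\boldsymbol{\xi}) \succeq 0$.

Proof. By Equation (2), Equation (3), and the definitions of $\mathbf{G}_x$ and $\mathbf{G}_y$, the corollary is established. □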

Therefore, according to Lemma 1 and its corollary, signal processing can lose Fisher information but can never gain it. As Figure 2 shows, signal processing turns the intrinsic parameter submanifold into a tighter one, i.e., discriminating two parameters becomes more difficult.
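The information loss can be illustrated with a small nonlinear example that is not taken from this letter: a single observation $x \sim N(\theta, 1)$ is reduced to its sign, $y = \mathrm{sign}(x)$ (one-bit quantization). The original Fisher information is 1, while $y$ is a Bernoulli variable with $P(y = +1) = \Phi(\theta)$, whose Fisher information is $\phi(\theta)^2 / [\Phi(\theta)(1 - \Phi(\theta))]$. The sketch below, with hypothetical function names, evaluates both.

```python
import math


def normal_pdf(z: float) -> float:
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def fisher_info_original(theta: float) -> float:
    """Fisher information about theta carried by x ~ N(theta, 1)."""
    return 1.0


def fisher_info_one_bit(theta: float) -> float:
    """Fisher information about theta carried by y = sign(x)."""
    p = normal_cdf(theta)  # P(y = +1)
    return normal_pdf(theta) ** 2 / (p * (1.0 - p))


# Loss G_x - G_y on a few assumed parameter values; it is non-negative everywhere.
losses = [fisher_info_original(t) - fisher_info_one_bit(t)
          for t in (-2.0, -1.0, 0.0, 1.0, 2.0)]
```

At $\theta = 0$ the processed signal retains $2/\pi$ of the original information, and the loss grows as $|\theta|$ increases, in line with Corollary 1.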

3.2. Isometric Signal Processing

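As discussed above, an appropriate signal processing should make the intrinsic parameter submanifold of the processed signal isometric to the original submanifold, i.e., the distance between any two parameters is not reduced.

Definition 2 (Isometry). When $\mathbf{G}_x(\boldsymbol{\xi}) = \mathbf{G}_y(\boldsymbol{\xi})$ for all $\boldsymbol{\xi}$, the two intrinsic parameter submanifolds $M_x$ and $M_y$ are isometric.

The sufficient and necessary condition for the isometry of $M_x$ and $M_y$ is as follows.

Theorem 1. $\mathbf{G}_x(\boldsymbol{\xi}) = \mathbf{G}_y(\boldsymbol{\xi})$ if and only if $\boldsymbol{y}$ is a sufficient statistic of $\boldsymbol{\xi}$.

Proof. By Lemma 1, the following relations are equivalent,

$$\mathbf{G}_x(\boldsymbol{\xi}) = \mathbf{G}_y(\boldsymbol{\xi}) \iff \mathbf{G}_{x|y}(\boldsymbol{\xi}) = \mathbf{0} \iff \frac{\partial \ln p(\boldsymbol{x}|\boldsymbol{y},\boldsymbol{\xi})}{\partial \boldsymbol{\xi}} = \mathbf{0}.$$

That means $p(\boldsymbol{x}|\boldsymbol{y},\boldsymbol{\xi})$ is irrelevant to parameter $\boldsymbol{\xi}$, i.e., $\boldsymbol{y}$ is a sufficient statistic of $\boldsymbol{\xi}$. □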

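From the information geometry point of view, the theorem suggests using a sufficient statistic to estimate the desired parameter. This conclusion is also supported by traditional estimation theory. By the Rao–Blackwell theorem [24], for any estimator $\hat{\boldsymbol{\xi}}(\boldsymbol{x})$, the estimator $\check{\boldsymbol{\xi}}(\boldsymbol{y}) = E[\hat{\boldsymbol{\xi}}(\boldsymbol{x})\,|\,\boldsymbol{y}]$ is at least as good, i.e., $E\|\check{\boldsymbol{\xi}}(\boldsymbol{y}) - \boldsymbol{\xi}\|^2 \le E\|\hat{\boldsymbol{\xi}}(\boldsymbol{x}) - \boldsymbol{\xi}\|^2$, when $\boldsymbol{y}$ is a sufficient statistic. This theorem indicates that designing the estimator using the sufficient statistic $\boldsymbol{y}$ is more appropriate, because for each estimator $\hat{\boldsymbol{\xi}}(\boldsymbol{x})$ using the original signal $\boldsymbol{x}$ as input, there exists an estimator $\check{\boldsymbol{\xi}}(\boldsymbol{y})$ using the sufficient statistic $\boldsymbol{y}$ as input that is no worse than $\hat{\boldsymbol{\xi}}(\boldsymbol{x})$. Furthermore, by the Lehmann–Scheffé theorem [25,26], when the sufficient statistic $\boldsymbol{y}$ is complete, if the estimator $\check{\boldsymbol{\xi}}(\boldsymbol{y})$ is unbiased, i.e., $E[\check{\boldsymbol{\xi}}(\boldsymbol{y})] = \boldsymbol{\xi}$, the estimator $\check{\boldsymbol{\xi}}(\boldsymbol{y})$ is the minimum-variance unbiased estimator.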

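Corollary 2. If $f$ is an invertible function, $\mathbf{G}_x(\boldsymbol{\xi}) = \mathbf{G}_y(\boldsymbol{\xi})$.

Proof. If $f$ is an invertible (and differentiable) function, the PDFs of $\boldsymbol{x}$ and $\boldsymbol{y} = f(\boldsymbol{x})$ satisfy:

$$p(\boldsymbol{x}|\boldsymbol{\xi}) = p(\boldsymbol{y}|\boldsymbol{\xi})\left|\det\frac{\partial f(\boldsymbol{x})}{\partial \boldsymbol{x}}\right|.$$

The second factor does not depend on $\boldsymbol{\xi}$. According to the Fisher–Neyman factorization theorem [27], $\boldsymbol{y}$ is a sufficient statistic of $\boldsymbol{\xi}$, so $\mathbf{G}_x(\boldsymbol{\xi}) = \mathbf{G}_y(\boldsymbol{\xi})$ by Theorem 1. □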

When the processed signal $\boldsymbol{y}$ is a sufficient statistic of $\boldsymbol{\xi}$, the signal processing is isometric. Specifically, invertible processing is always isometric; an example is the DFT (Discrete Fourier Transform), because the inverse DFT can recover the original signal. Moreover, this conclusion is also encountered in traditional estimation theory as the Rao–Blackwell theorem and the Lehmann–Scheffé theorem.
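The DFT case can be checked numerically. The sketch below assumes a circularly symmetric complex Gaussian model whose mean depends on a real parameter vector and whose covariance does not, for which the Fisher information is $2\,\mathrm{Re}\{\mathbf{J}^H \mathbf{C}^{-1} \mathbf{J}\}$ with $\mathbf{J}$ the derivative of the mean with respect to the parameters. The random derivative matrix, the noise power, and the helper name are illustrative assumptions.

```python
import numpy as np


def fisher_information(jacobian: np.ndarray, covariance: np.ndarray) -> np.ndarray:
    """2*Re(J^H C^{-1} J): complex Gaussian, parameters only in the mean."""
    return 2.0 * np.real(jacobian.conj().T @ np.linalg.solve(covariance, jacobian))


m, sigma2 = 8, 0.5  # signal length and noise power (assumed values)
rng = np.random.default_rng(0)
# Hypothetical derivative of the mean with respect to two real parameters.
J = rng.standard_normal((m, 2)) + 1j * rng.standard_normal((m, 2))

# Unitary DFT matrix: the transform of each column of the identity.
F = np.fft.fft(np.eye(m), norm="ortho")

G_x = fisher_information(J, sigma2 * np.eye(m))
# After y = F x the mean derivative is F J and the covariance is sigma2 * F F^H.
G_y = fisher_information(F @ J, sigma2 * (F @ F.conj().T))
```

Since `F` is unitary, `G_y` agrees with `G_x` up to rounding, which is the isometry that Corollary 2 states for invertible processing.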

4. Linear Form of Signal Processing

In practice, the noise is often Gaussian or asymptotically Gaussian, and the common signal processing is linear, such as the DFT, matched filtering, coherent integration, etc. This section discusses the linear form of signal processing on the Gaussian statistical manifold.

4.1. Model Formulation

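The information, as the desired parameter $\boldsymbol{\xi}$, is usually embedded in the signal, and the signal is often contaminated by noise, which can be described as $\boldsymbol{x} = \boldsymbol{s}(\boldsymbol{\xi}) + \boldsymbol{w}$, where $\boldsymbol{s}(\boldsymbol{\xi})$ is the uncontaminated signal waveform, $\boldsymbol{w}$ is the Gaussian noise, and $\boldsymbol{x}$ is the signal. Linear signal processing can be expressed in matrix form, $\boldsymbol{y} = \mathbf{H}\boldsymbol{x}$.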

4.2. Fisher Information Loss of Linear Signal Processing

Suppose the linear signal processing is $\boldsymbol{y} = \mathbf{H}\boldsymbol{x}$, where $\boldsymbol{x}$ has dimension $m$ and $\boldsymbol{y}$ has dimension $n$; then the matrix $\mathbf{H}$ has dimension $n \times m$. If $\mathrm{rank}(\mathbf{H}) < n$, there are $n - \mathrm{rank}(\mathbf{H})$ rows that are linear combinations of the rest of the rows. Therefore, the PDF of $\boldsymbol{y}$ only depends on the elements corresponding to the linearly independent rows, and the Fisher information loss is equivalent to the loss of the submatrix consisting of such rows. Therefore, for convenience, $\mathrm{rank}(\mathbf{H})$ is assumed to be $n$, i.e., matrix $\mathbf{H}$ has full row rank.

The Fisher information loss is first discussed under WGN (White Gaussian Noise). Then, the Fisher information loss under CGN (Colored Gaussian Noise) is presented based on the results under WGN.

4.2.1. White Gaussian Noise

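Suppose the noise is WGN with power $\sigma^2$; then the signal $\boldsymbol{x}$ obeys the normal distribution $\boldsymbol{x} \sim \mathcal{CN}(\boldsymbol{s}(\boldsymbol{\xi}), \sigma^2\mathbf{I})$. By the property of the normal distribution, $\boldsymbol{y} = \mathbf{H}\boldsymbol{x}$ is also normally distributed, but with different parameters, $\boldsymbol{y} \sim \mathcal{CN}(\mathbf{H}\boldsymbol{s}(\boldsymbol{\xi}), \sigma^2\mathbf{H}\mathbf{H}^H)$. Calculating the Fisher information of $\boldsymbol{x}$ and $\boldsymbol{y}$ (for circularly symmetric complex noise and a real parameter vector $\boldsymbol{\xi}$), the loss of information is:

$$\mathbf{G}_x - \mathbf{G}_y = \frac{2}{\sigma^2}\,\mathrm{Re}\left\{\left(\frac{\partial \boldsymbol{s}}{\partial \boldsymbol{\xi}}\right)^{H}\left[\mathbf{I} - \mathbf{H}^H(\mathbf{H}\mathbf{H}^H)^{-1}\mathbf{H}\right]\frac{\partial \boldsymbol{s}}{\partial \boldsymbol{\xi}}\right\}. \tag{8}$$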
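The loss under WGN can be checked numerically. The sketch below assumes circularly symmetric complex WGN and a real parameter vector, so that the Fisher information of a Gaussian with parameter-dependent mean is $2\,\mathrm{Re}\{\mathbf{J}^H\mathbf{C}^{-1}\mathbf{J}\}$; under that assumption the loss reduces to $\frac{2}{\sigma^2}\mathrm{Re}\{\mathbf{J}^H(\mathbf{I} - \mathbf{P})\mathbf{J}\}$ with $\mathbf{P} = \mathbf{H}^H(\mathbf{H}\mathbf{H}^H)^{-1}\mathbf{H}$. The random `J` and `H` and the helper names are hypothetical.

```python
import numpy as np


def fisher_information(jacobian: np.ndarray, covariance: np.ndarray) -> np.ndarray:
    """2*Re(J^H C^{-1} J): complex Gaussian, parameters only in the mean."""
    return 2.0 * np.real(jacobian.conj().T @ np.linalg.solve(covariance, jacobian))


def wgn_information_loss(jacobian: np.ndarray, H: np.ndarray, sigma2: float) -> np.ndarray:
    """Closed-form loss (2/sigma2) * Re(J^H (I - P) J), P projecting onto the rows of H."""
    m = H.shape[1]
    P = H.conj().T @ np.linalg.solve(H @ H.conj().T, H)
    return (2.0 / sigma2) * np.real(jacobian.conj().T @ (np.eye(m) - P) @ jacobian)


rng = np.random.default_rng(1)
m, n, p, sigma2 = 6, 3, 2, 0.5  # assumed sizes and noise power
J = rng.standard_normal((m, p)) + 1j * rng.standard_normal((m, p))
H = rng.standard_normal((n, m)) + 1j * rng.standard_normal((n, m))  # full row rank

G_x = fisher_information(J, sigma2 * np.eye(m))
G_y = fisher_information(H @ J, sigma2 * (H @ H.conj().T))
loss = wgn_information_loss(J, H, sigma2)
```

The directly computed difference `G_x - G_y` matches `loss`, and `loss` is positive semidefinite because $\mathbf{I} - \mathbf{P}$ is an orthogonal projector.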

4.2.2. Colored Gaussian Noise

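Suppose the noise is CGN with covariance matrix $\boldsymbol{\Sigma}$. According to the property of Hermitian positive definite matrices, the covariance matrix can be expressed as $\boldsymbol{\Sigma} = \mathbf{D}\mathbf{D}^H$, where $\mathbf{D}$ is an invertible matrix.

According to Corollary 2, after the invertible transformation $\tilde{\boldsymbol{x}} = \mathbf{D}^{-1}\boldsymbol{x}$ the Fisher information is invariant, i.e., $\mathbf{G}_{\tilde{x}} = \mathbf{G}_x$, and the noise in $\tilde{\boldsymbol{x}}$ is WGN with unit power. Performing the linear processing $\mathbf{H}$ on $\boldsymbol{x}$, the result is:

$$\boldsymbol{y} = \mathbf{H}\boldsymbol{x} = \mathbf{H}\mathbf{D}\tilde{\boldsymbol{x}},$$

and the information loss can be calculated by Equation (8), with $\mathbf{H}\mathbf{D}$ in place of $\mathbf{H}$ and $\mathbf{D}^{-1}\boldsymbol{s}$ in place of $\boldsymbol{s}$. Therefore, the loss of information is:

$$\mathbf{G}_x - \mathbf{G}_y = 2\,\mathrm{Re}\left\{\left(\frac{\partial \boldsymbol{s}}{\partial \boldsymbol{\xi}}\right)^{H}\left[\boldsymbol{\Sigma}^{-1} - \mathbf{H}^H(\mathbf{H}\boldsymbol{\Sigma}\mathbf{H}^H)^{-1}\mathbf{H}\right]\frac{\partial \boldsymbol{s}}{\partial \boldsymbol{\xi}}\right\}.$$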
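The whitening argument can be sketched numerically as follows, again assuming circularly symmetric complex Gaussian noise and a real parameter vector. The covariance is factored by a Cholesky decomposition $\boldsymbol{\Sigma} = \mathbf{D}\mathbf{D}^H$ (one possible choice of invertible factor), the signal derivative is whitened with $\mathbf{D}^{-1}$, and the loss is evaluated with the WGN projector formula applied to $\mathbf{H}\mathbf{D}$. All matrices and names below are hypothetical.

```python
import numpy as np


def fisher_information(jacobian: np.ndarray, covariance: np.ndarray) -> np.ndarray:
    """2*Re(J^H C^{-1} J): complex Gaussian, parameters only in the mean."""
    return 2.0 * np.real(jacobian.conj().T @ np.linalg.solve(covariance, jacobian))


rng = np.random.default_rng(2)
m, n, p = 6, 3, 2  # assumed sizes
J = rng.standard_normal((m, p)) + 1j * rng.standard_normal((m, p))
H = rng.standard_normal((n, m)) + 1j * rng.standard_normal((n, m))
B = rng.standard_normal((m, m)) + 1j * rng.standard_normal((m, m))
Sigma = B @ B.conj().T + m * np.eye(m)  # Hermitian positive definite covariance

D = np.linalg.cholesky(Sigma)           # Sigma = D D^H, D lower triangular
J_white = np.linalg.solve(D, J)         # mean derivative of the whitened signal D^{-1} x
H_white = H @ D                         # processing as seen by the whitened signal

G_x = fisher_information(J, Sigma)
G_x_white = fisher_information(J_white, np.eye(m))
G_y = fisher_information(H @ J, H @ Sigma @ H.conj().T)

# WGN loss formula (unit noise power) applied to the whitened quantities.
P = H_white.conj().T @ np.linalg.solve(H_white @ H_white.conj().T, H_white)
loss_whitened = 2.0 * np.real(J_white.conj().T @ (np.eye(m) - P) @ J_white)
```

Whitening leaves the Fisher information unchanged (`G_x_white` equals `G_x`), and the loss computed in the whitened domain equals `G_x - G_y` computed directly under colored noise.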

4.3. The Construction of the Isometric Linear Form of Signal Processing

In the previous section, the sufficient and necessary condition for isometric signal processing was that the processed signal is a sufficient statistic of the desired parameter. However, a sufficient statistic is often difficult to obtain, so the isometric processing should be constructed in another way. This part introduces a construction method for linear isometric signal processing.

As discussed previously, the signal under CGN can be transformed into a signal under WGN without information loss. Therefore, only the signal under WGN is discussed in this part. Under CGN, the signal can be whitened first, and the next steps are then the same as under WGN.

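The linear isometric processing can be obtained in the following way. Firstly, solve the equation for the vector $\boldsymbol{h}$ (for all $\boldsymbol{\xi}$ under consideration):

$$\left(\frac{\partial \boldsymbol{s}(\boldsymbol{\xi})}{\partial \boldsymbol{\xi}}\right)^{H}\boldsymbol{h} = \mathbf{0}. \tag{12}$$

Suppose the solution space is $\Omega$ with dimension $l$ and the orthogonal complement of $\Omega$ is $\Omega^{\perp}$ with dimension $m - l$. Then, the desired signal processing is formed as:

$$\boldsymbol{y} = \mathbf{H}\boldsymbol{x}, \qquad \mathbf{H} = [\boldsymbol{b}_1, \boldsymbol{b}_2, \ldots, \boldsymbol{b}_{m-l}]^{H},$$

where $\{\boldsymbol{b}_i\}$ is a basis of $\Omega^{\perp}$.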
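The construction can be sketched numerically for a hypothetical linear model whose signal derivative `J` does not depend on the parameters. Assuming the equation to solve is $\mathbf{J}^H\boldsymbol{h} = \mathbf{0}$, the orthogonal complement of its solution space is the column space of `J`, and an orthonormal basis of that space can be read off from the singular value decomposition. The function names, the rank tolerance, and the complex WGN Fisher information formula $2\,\mathrm{Re}\{\cdot\}$ are assumptions of this sketch.

```python
import numpy as np


def isometric_matrix(jacobian: np.ndarray, tol: float = 1e-10) -> np.ndarray:
    """Rows form an orthonormal basis (conjugated) of the column space of J."""
    U, s, _ = np.linalg.svd(jacobian, full_matrices=False)
    rank = int(np.sum(s > tol * s.max()))
    return U[:, :rank].conj().T


def fisher_information_wgn(jacobian: np.ndarray, H: np.ndarray, sigma2: float) -> np.ndarray:
    """Fisher information of y = H x when x has mean derivative J and WGN of power sigma2."""
    HJ = H @ jacobian
    C = sigma2 * (H @ H.conj().T)
    return 2.0 * np.real(HJ.conj().T @ np.linalg.solve(C, HJ))


rng = np.random.default_rng(3)
m, p, sigma2 = 16, 2, 1.0  # assumed sizes and noise power
J = rng.standard_normal((m, p)) + 1j * rng.standard_normal((m, p))

H_iso = isometric_matrix(J)                           # shape (m - l) x m, here 2 x 16
G_x = fisher_information_wgn(J, np.eye(m), sigma2)    # unprocessed signal
G_y = fisher_information_wgn(J, H_iso, sigma2)        # after isometric processing
G_short = fisher_information_wgn(J, H_iso[:1], sigma2)  # one row removed
```

Here a length-16 signal is compressed to two numbers with `G_y` equal to `G_x`, whereas dropping one of the two rows produces a strictly positive loss.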

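Proposition 1. $\boldsymbol{y} = \mathbf{H}\boldsymbol{x}$ is the isometric processing.

Proof. Let $\mathbf{P} = \mathbf{H}^H(\mathbf{H}\mathbf{H}^H)^{-1}\mathbf{H}$ and $n = m - l$. Because the non-zero eigenvalues of $\mathbf{P}$ are equal to those of $(\mathbf{H}\mathbf{H}^H)^{-1}\mathbf{H}\mathbf{H}^H = \mathbf{I}_n$, the eigenvalues of $\mathbf{P}$ are one (multiplicity $n$) and zero (multiplicity $m - n$). Therefore, the eigenvalues of $\mathbf{I} - \mathbf{P}$ are one (multiplicity $m - n$) and zero (multiplicity $n$). Then, as the matrix $\mathbf{I} - \mathbf{P}$ is Hermitian, it can be expressed as:

$$\mathbf{I} - \mathbf{P} = \mathbf{U}\begin{bmatrix}\mathbf{0}_{n} & \\ & \mathbf{I}_{m-n}\end{bmatrix}\mathbf{U}^H,$$

where $\mathbf{U} = [\boldsymbol{u}_1, \ldots, \boldsymbol{u}_m]$ is unitary. Consider the fact $(\mathbf{I} - \mathbf{P})\mathbf{H}^H = \mathbf{0}$; the columns of $\mathbf{H}^H$, which span $\Omega^{\perp}$, are eigenvectors of $\mathbf{I} - \mathbf{P}$ with eigenvalue zero. That means the first $n$ columns of $\mathbf{U}$ are a basis of $\Omega^{\perp}$, and the rest of the columns are a basis of $\Omega$, i.e.,

$$\mathbf{I} - \mathbf{P} = \sum_{i=n+1}^{m}\boldsymbol{u}_i\boldsymbol{u}_i^H, \qquad \boldsymbol{u}_i \in \Omega.$$

Because each $\boldsymbol{u}_i \in \Omega$ is a solution of Equation (12), $\left(\partial \boldsymbol{s}/\partial \boldsymbol{\xi}\right)^{H}\boldsymbol{u}_i = \mathbf{0}$ for $i > n$, then:

$$\mathbf{G}_x - \mathbf{G}_y = \frac{2}{\sigma^2}\,\mathrm{Re}\left\{\left(\frac{\partial \boldsymbol{s}}{\partial \boldsymbol{\xi}}\right)^{H}(\mathbf{I} - \mathbf{P})\frac{\partial \boldsymbol{s}}{\partial \boldsymbol{\xi}}\right\} = \mathbf{0},$$

i.e., the Fisher information loss is zero. □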

According to the proposed construction method, the following proposition can be obtained.

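Proposition 2. The matrix $\mathbf{H}$ is the isometric matrix with the minimal number of rows, i.e., the processed signal $\boldsymbol{y}$ has the minimal length.

Proof. Let $\mathbf{H}'$ be an isometric matrix with dimension $n' \times m$ and $\mathbf{P}' = \mathbf{H}'^H(\mathbf{H}'\mathbf{H}'^H)^{-1}\mathbf{H}'$. Similarly, ordering the eigenvectors with eigenvalue one first, the matrix $\mathbf{I} - \mathbf{P}'$ can be expressed as:

$$\mathbf{I} - \mathbf{P}' = \mathbf{U}'\begin{bmatrix}\mathbf{I}_{m-n'} & \\ & \mathbf{0}_{n'}\end{bmatrix}\mathbf{U}'^H,$$

where the multiplicity of eigenvalue one is $m - n'$.

As:

$$\frac{2}{\sigma^2}\,\mathrm{Re}\left\{\left(\frac{\partial \boldsymbol{s}}{\partial \boldsymbol{\xi}}\right)^{H}(\mathbf{I} - \mathbf{P}')\frac{\partial \boldsymbol{s}}{\partial \boldsymbol{\xi}}\right\} = \mathbf{0},$$

the first $m - n'$ rows of $\mathbf{U}'^H\,\partial \boldsymbol{s}/\partial \boldsymbol{\xi}$ must be zero, which means the first $m - n'$ columns of $\mathbf{U}'$ are linearly independent solutions of Equation (12). However, the solution space $\Omega$ has dimension $l$, so we get $m - n' \le l$, i.e., $n' \ge m - l$.

Therefore, the matrix $\mathbf{H}$, which has $m - l$ rows, is the isometric matrix with the minimal number of rows. □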

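Remark 2. Let $\mathbf{H}'$ be an isometric matrix with the minimal number of rows, $n' = m - l$, and let $\mathbf{P}'$ and $\mathbf{U}'$ be defined as in the proof of Proposition 2. Because the first $l$ columns of $\mathbf{U}'$ are linearly independent solutions of Equation (12), they span $\Omega$, so any element $\boldsymbol{h}$ from $\Omega$ satisfies $(\mathbf{I} - \mathbf{P}')\boldsymbol{h} = \boldsymbol{h}$, i.e., $\mathbf{H}'\boldsymbol{h} = \mathbf{0}$. Therefore, the solution space of $\mathbf{H}'\boldsymbol{h} = \mathbf{0}$ is $\Omega$. Moreover, $\mathbf{H}'$ has full row rank, so the columns of $\mathbf{H}'^H$ form a basis of $\Omega^{\perp}$. In other words, any isometric matrix with $m - l$ rows is equivalent to $\mathbf{H}$ (it differs from $\mathbf{H}$ only by an invertible left factor), which indicates that the proposed construction method can generate any isometric matrix with minimal rows.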

Sample of the Construction

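Consider the radar target detection scene: the radar emits a single-frequency signal and receives the echo to obtain the distance and RCS (Radar Cross-Section) information of the target. The observation model can be formulated as:

$$x[k] = A\,e^{j2\pi f\left(k\Delta t - 2r/c\right)} + w[k], \qquad k = 0, 1, \ldots, m-1,$$

where $j$ indicates the imaginary unit, $\Delta t$ is the sampling interval, $f$ is the frequency of the emitted signal, $c$ is the velocity of light, $w[k]$ denotes WGN, $r$ indicates the distance of the target, and $A$ is the unknown amplitude, which contains the information of RCS. The desired parameter is $\boldsymbol{\xi} = [A, r]^T$.

Firstly, the derivative is:

$$\frac{\partial \boldsymbol{s}}{\partial \boldsymbol{\xi}} = e^{-j4\pi f r/c}\left[\boldsymbol{e},\; -j\frac{4\pi f}{c}A\,\boldsymbol{e}\right], \qquad \boldsymbol{e} = \left[1, e^{j2\pi f\Delta t}, \ldots, e^{j2\pi f(m-1)\Delta t}\right]^T.$$

Solving Equation (12), the orthogonal complement of the solution space is:

$$\Omega^{\perp} = \mathrm{span}\{\boldsymbol{e}\}.$$

Therefore, the isometric processing is:

$$y = \frac{1}{\sqrt{m}}\,\boldsymbol{e}^H\boldsymbol{x} = \frac{1}{\sqrt{m}}\sum_{k=0}^{m-1}x[k]\,e^{-j2\pi f k\Delta t}.$$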
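The radar sample can be sketched numerically under an assumed form of the observation model, $x[k] = A\,e^{j2\pi f(k\Delta t - 2r/c)} + w[k]$, chosen to match the variables described above; the numerical values, the speed-of-light constant, and the function names are illustrative. Both columns of the signal derivative are then proportional to the same complex exponential, so the orthogonal complement of the solution space is one-dimensional and the isometric processing reduces to a single normalized correlation with that exponential (one DFT bin).

```python
import numpy as np

C_LIGHT = 3.0e8  # velocity of light in m/s (rounded)


def steering_vector(m: int, f: float, dt: float) -> np.ndarray:
    """e[k] = exp(j*2*pi*f*k*dt), k = 0..m-1."""
    return np.exp(1j * 2.0 * np.pi * f * np.arange(m) * dt)


def signal_jacobian(A: float, r: float, m: int, f: float, dt: float) -> np.ndarray:
    """Derivative of the assumed noiseless signal with respect to (A, r)."""
    e = steering_vector(m, f, dt)
    phase = np.exp(-1j * 4.0 * np.pi * f * r / C_LIGHT)
    ds_dA = phase * e
    ds_dr = -1j * (4.0 * np.pi * f / C_LIGHT) * A * phase * e
    return np.column_stack([ds_dA, ds_dr])


def fisher_information(J: np.ndarray, H: np.ndarray, sigma2: float) -> np.ndarray:
    """Fisher information of y = H x under complex WGN of power sigma2."""
    HJ = H @ J
    C = sigma2 * (H @ H.conj().T)
    return 2.0 * np.real(HJ.conj().T @ np.linalg.solve(C, HJ))


m, f, dt, sigma2 = 64, 1.0e6, 1.0e-7, 0.1  # assumed scenario
A, r = 2.0, 1500.0
J = signal_jacobian(A, r, m, f, dt)

# Single-row isometric matrix: normalized conjugate of the steering vector.
H_iso = (steering_vector(m, f, dt) / np.sqrt(m)).conj()[np.newaxis, :]

G_x = fisher_information(J, np.eye(m), sigma2)  # all 64 samples
G_y = fisher_information(J, H_iso, sigma2)      # one processed sample
```

Under these assumptions the 64 samples are reduced to one complex number and `G_y` equals `G_x`, consistent with the familiar practice of coherent integration at the emitted frequency.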

5. Conclusions

This letter focuses on the influence of signal processing on the geometric structure of the statistical manifold in estimation issues. Based on the intrinsic characteristics of estimation issues, the intrinsic parameter submanifold is defined. Then, the intrinsic parameter submanifold is proven to turn into a tighter one after signal processing. Moreover, we show that the geometric structure of the intrinsic parameter submanifold is invariant if and only if the processed signal is a sufficient statistic. In addition, a construction method for linear isometric signal processing is proposed. The linear processing produced by the proposed method is shown to have the minimal number of rows (when it is represented as a matrix), i.e., the processed signal has the minimal length, and the proposed method can generate all linear isometries with minimal rows.