Abstract

The Gaussian process is gaining increasing importance in different areas such as signal processing, machine learning, robotics, control and aerospace and electronic systems, since it can represent unknown system functions by posterior probability. This paper investigates multisensor fusion in the setting of Gaussian process estimation for nonlinear dynamic systems. In order to overcome the difficulty caused by the unknown nonlinear system models, we associate the transition and measurement functions with the Gaussian process regression models, then the advantages of the non-parametric feature of the Gaussian process can be fully extracted for state estimation. Next, based on the Gaussian process filters, we propose two different fusion methods, centralized estimation fusion and distributed estimation fusion, to utilize the multisensor measurement information. Furthermore, the equivalence of the two proposed fusion methods is established by rigorous analysis. Finally, numerical examples for nonlinear target tracking systems demonstrate the equivalence and show that the multisensor estimation fusion performs better than the single sensor. Meanwhile, the proposed fusion methods outperform the convex combination method and the relaxed Chebyshev center covariance intersection fusion algorithm.

1. Introduction

Estimation in nonlinear systems is extremely important because almost all practical systems involve nonlinearities of one kind or another [1,2], such as target tracking, vehicle navigation, automatic control and robotics [3,4]. In the case of nonlinearities, estimation cannot be obtained analytically in general. Some methods based on exact parametric models and ideas of the Kalman filter (KF) have been developed. The Extended Kalman filter (EKF) [5] was the most common application to nonlinear systems, which simply linearizes all nonlinear functions via Taylor-series expansion and substitutes Jacobian matrices for the linear transformations into the KF equations. The unscented transformation was introduced to address the deficiencies of linearization, namely unscented Kalman filters (UKF) [1], which provided a more direct and explicit mechanism for transforming mean and covariance matrices. Under the Bayesian framework, particle filter (PF) was presented by constructing the posterior probability density function of the state based on all available information in Reference [6]. However, for nonlinear systems, these methods were usually assumed that the nonlinear relationships are known. Lack of modeling accuracy, including the identification of the noise and the model parameters, was inevitable [7]. In many applications, most real dynamic systems are difficult to obtain the exact models and system noises due to the complexity of the target motion environment, therefore, the parameterized estimation methods may be invalid.

To overcome the limitations of parametric models, researchers have recently employed so called non-parametric methods like the Gaussian process [8] to learn models for dynamic systems. More specifically, the functional representation needs to be learned from the training data before the filtering prediction and update step [9]. Gaussian processes have been increasingly attracting the interests in machine learning, signal processing, robotics, control and aerospace and electronic systems [10,11,12]. For example, Gaussian process models are used as the surrogate models for complex physics models in Reference [13]. The advantages stemmed from the fact that Gaussian processes take both the noise in the system and the uncertainty in the model into consideration [14]. In the context of modeling the dynamic systems, Gaussian processes can be used as prior over the transition function and measurement function. By analyzing the correlation among given training data, Gaussian process models can provide the posterior distributions over functions through the combination of the prior and the data. For the cases that ground truth data are unavailable or can only be determined approximately, Gaussian process latent variable models were developed in References [15,16] and they were extended to the setting of dynamical robotics systems in Reference [17]. In order to reduce the cubic complexity of Gaussian process training for the fixed number of training points, sparse Gaussian processes were developed (see e.g., [18,19,20,21,22,23]). K-optimality was used to improve the stability of the Gaussian process prediction in Reference [24].

So far, Gaussian process models have been applied successfully to massive nonlinear dynamic systems. Motivated by modeling human motion, learning nonlinear dynamical models with Gaussian process was investigated in Reference [16]. Many filtering methods, such as the Gaussian process extended Kalman filters (GP-EKF) [25], the Gaussian process unscented Kalman filters (GP-UKF) [26], the Gaussian process particle filters (GP-PF) [27], GP-BayesFilters [14] and the Gaussian process assumed density filters (GP-ADF) [7,10] were derived by incorporating the Gaussian process transition model and Gaussian process measurement model into the classic filters. GP-ADF was an efficient form of assumed density filtering (ADF) introduced in References [28,29,30] and propagated the full Gaussian density [7]. Although Gaussian processes have been around for decades, they mainly focus on single sensor systems.

With the rapid development of the sensor technology, computer science, communication technology and information technology, multisensor systems are widely used in military and civil fields [31,32,33,34,35]. Benefited from the application of multiple sensors, multisensor data fusion makes more comprehensive and accurate decision by integrating the available information from multiple sensors and has attracted lots of research interests. Generally speaking, the multisensor estimation fusion mainly contains centralized estimation fusion and distributed estimation fusion. Centralized estimation fusion is that a central processor receives all measurement data from sensors without processing and uses them to estimate the state [36,37]. In general, many filtering algorithms in single sensor system can be applied to the multisensor systems, since measurement value can be stacked and regarded as one measurement. On the other hand, distributed estimation fusion has several advantages in terms of reliability, survivability, communication bandwidth and computational burdens [38,39,40,41,42], which make it desirable in real applications such as surveillance, tracking and battle management. In the distributed setting, each local sensor deals with its own measurement data and transmits the local state estimation to the fusion center for the purpose of more accurate estimation fusion. So far, a variety of distributed fusion methods have been investigated for different occasions, such as References [37,40,43,44,45,46]. A convex combination method was given to fuse the local estimates in Reference [43]. For the unavailable cross correlation matrix problem, a relaxed Chebyshev center covariance intersection (RCC-CI) algorithm was also provided to fuse the local estimates in Reference [37]. A current review of distributed multisensor data fusion under unknown correlation can be seen in Reference [47]. However, these multisensor estimation fusion methods are mainly based on the exact dynamic models or known nonlinear functions. For the cases in which accurate parametric models are difficult to obtain, it is worth considering integrating Gaussian processes with multisensor estimation fusion to improve the system’s performance.

In this paper, we focus on multisensor estimation fusion including the centralized and distributed fusion methods with Gaussian process for nonlinear dynamic systems. Firstly, given the training data, we learn the dynamic models with the Gaussian process and derive multisensor estimation fusion methods based on the Gaussian process models for nonlinear dynamic systems, which can avoid inappropriate parametric models and improve predictive ability. Combining with the single senor GP-ADF and GP-UKF, respectively, the prediction step and update step of the multisensor estimation fusion are provided. In general, it is hard to analyze the performance of the non-parametric fusion methods. Since the Gaussian process fusion methods have analytic mean and covariance, we show that the distributed estimation fusion is equivalent to the centralized estimation fusion with the single sensor cross terms being full column rank. Numerical examples show that the equivalence is satisfied under the condition and the multisensor estimation fusion performs better than the single sensor. We also compare the proposed fusion methods with the RCC-CI [37] algorithm and the convex combination method [43]. In the Gaussian process model setting, the simulation results show that the multisensor estimation fusion methods outperform the RCC-CI algorithm and the convex combination method, as far as the estimation accuracy is concerned.

This article also extends our earlier work [48]. Compared with the conference paper, the main differences are as follows:

- Detailed proofs are provided.

- The enhancement of the multisensor fusion algorithm with GP-UKF is presented.

- Additional set of extensive experiments are carried out.

- The equivalence condition of Proposition 1 is analyzed.

- Comparison between GP-ADF fusion and GP-UKF is given.

The rest of the paper is organized as follows. In Section 2, the problem formulation and the Gaussian process are introduced. In Section 3, the centralized estimation fusion and the distributed estimation fusion methods are presented. In Section 4, simulations are provided to confirm our analysis. Some conclusions are drawn in Section 5.

2. Preliminaries

2.1. Problem Formulation

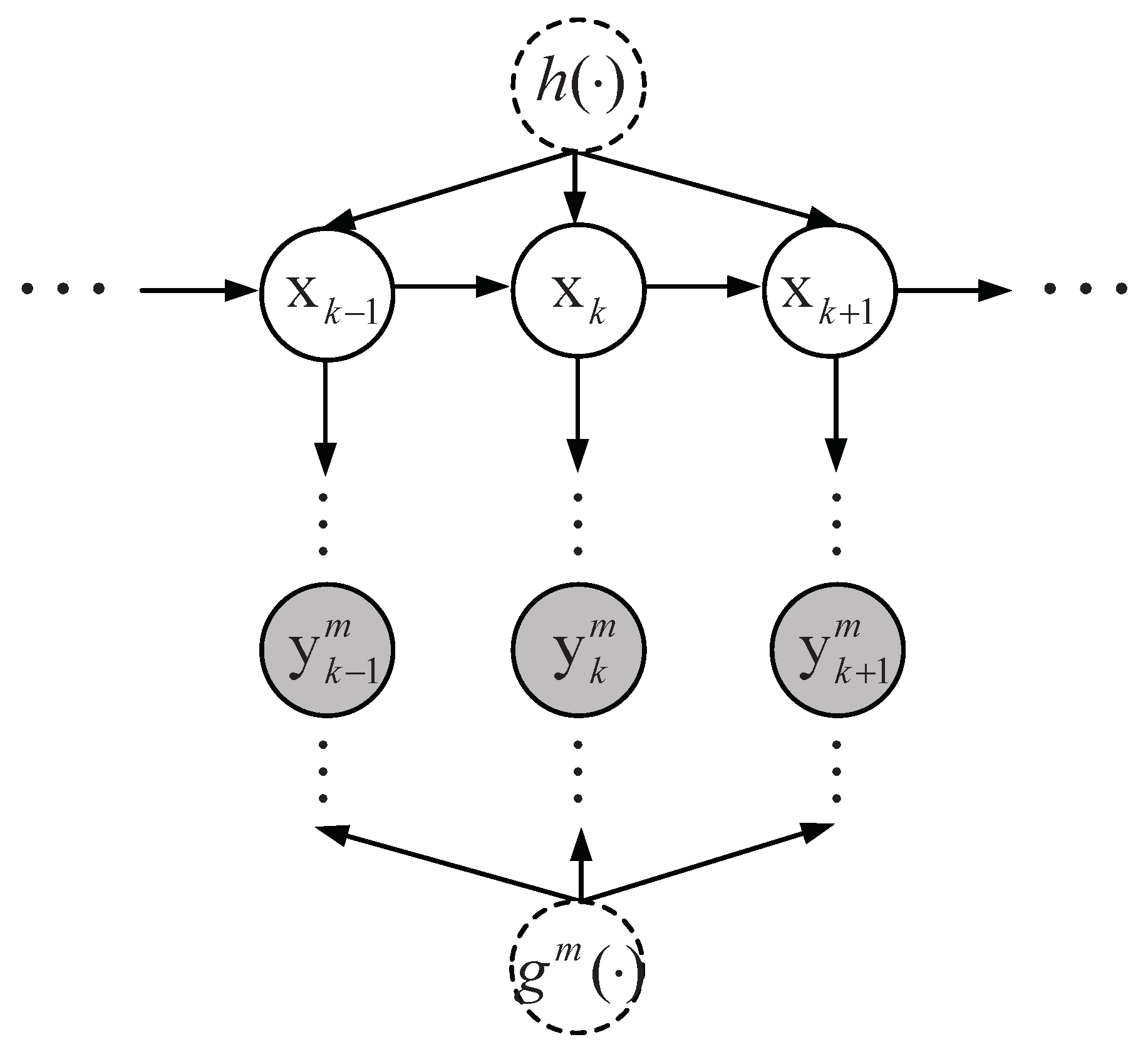

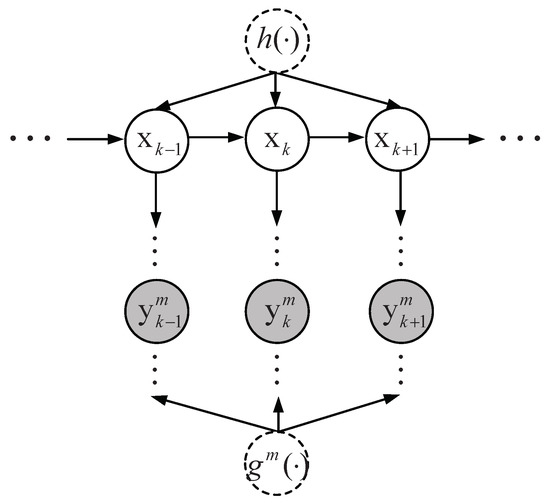

In this paper, we consider the state estimation problem of a nonlinear dynamic system with additive noise and N () sensor measurements. The multisensor nonlinear dynamic system with state equation and N measurement equations (see Figure 1) is described as follows:

where is the state of the dynamic system at time k and is the measurement of the sensor at time k, . is the transition function of the state and is the nonlinear measurement function of at the sensor. is a Gaussian system noise and is a Gaussian measurement noise, which is independent of each other.

Figure 1.

Graphical structure for multisensor nonlinear dynamic systems.

Our goal is to estimate the state from all the available sensor measurement information. Gaussian processes are regarded as the prior of the transition function and the sensor measurement function , , respectively. Then we make inference about the posterior distribution of the transition function and the sensor measurement function. The Gaussian process model represents a powerful tool to make Bayesian inference about functions [49].

2.2. Gaussian Processes

A Gaussian process is defined over functions, which is a generalization of the Gaussian probability distribution [8]. It means that we should consider inference directly in function space. Also, it gives a formal definition, “the Gaussian process is defined a collection of random variables, any finite number of which have a joint Gaussian distribution,” in [8].

Similar to a Gaussian distribution, knowledge of both mean and covariance function can specify a Gaussian process. The mean function and covariance function (also called a kernel) of a Gaussian process are defined as follows,

where denotes the expectation. Thus, we write the Gaussian process as

Unless stated otherwise, a prior mean function is usually assumed to be 0. The choice of the covariance functions depends on the application [14]. In this paper, we employ the most commonly-used squared exponential (SE) kernel in machine learning, which is the prototypical stationary covariance function and useful for modeling particularly smooth functions. It is defined as

where parameter represents the variance of the function f that controls the uncertainty of predictions in areas of less training sample density and parameter is a diagonal matrix of the characteristic length-scales of the SE kernel. Other commonly employed kernel functions can be seen in Reference [8].

A Gaussian process implies a distribution over functions based upon the obtained training data. Assume that we have obtained a set of training data . and are made up of multiple samples drawn from the following standard Gaussian process regression model

where and , denotes the normalized Gaussian probability density function. Note that Gaussian process regression uses the fact that any finite set of training data and testing data of a Gaussian process are jointly Gaussian distributed. Let , called the hyper-parameters of the Gaussian process. Using the evidence maximization method [8,50], we obtain a point estimate

from the known training data [51]. We can solve the optimization problem with numerical optimization techniques such as conjugate gradient ascent [8,14]. Next, we infer the posterior distribution over function value from training data for each inputs . In addition, the test input can be categorized into two classes, relying on whether it is uncertain or not. The corresponding predictive distribution over will be presented as follows.

(1) Predictive Distribution over Univariate Function

Suppose that the training data is =, where is a D-dimensional input vector, is a scalar output, and n is the number of training data. Next, two cases, deterministic inputs and uncertain inputs, are considered.

● Deterministic Inputs

Conditioned on the training data and the deterministic test input , the predictive distribution over is a Gaussian with the mean

and the variance

where represents the variance with respect to f,

and is the kernel matrix with each element satisfying . The uncertainty of prediction is characterized by the variance . More details can be seen in Reference [8].

● Uncertain Inputs

When the test input is uncertain, namely has a probability distribution, the prediction problem is relatively difficult. Let us consider the predictive distribution of for the uncertain input . According to the results in Reference [7] (or reviewing References [52,53]), we introduce the predictive distribution over the function value as

where the mean and variance of the distribution are provided with Equations (5) and (6), respectively. Based on the conditional expectation and variance formulae, it yields the mean and variance of the distribution in closed form. In particular, we have

and

Note that in Equation (8) is from ,

represents the trace in Equation (9) and we have

and

In general, the predictive distribution in Equation (7) cannot be analytically calculated since it leads to a non-Gaussian distribution, if a Gaussian distribution is mapped through a nonlinear function. Using moment-matching method, the distribution can be approximated by the Gaussian distribution .

(2) Predictive Distribution over Multivariate Function

For the model (4), let us turn to the multiple output case that , . Following the results in References [7,51], we need to train E Gaussian process regression models independently. The ath model is learned from the training data , , , where is the ath element of . It implies that any two targeted dimensions are conditionally independent given the input.

For any deterministic input , the mean and variance of each target dimension are obtained by Equations (5) and (6). The predictive mean of is a vector stacked by E targeted dimension mean, and the corresponding covariance is a diagonal matrix whose diagonal element is the variance of each targeted dimension.

With the uncertain input , the predictive mean of also is the stacked E predictive mean given by Equation (8), . Unlike predicting at deterministic inputs, targeted dimensions are dependent due to the uncertain input. Denote and the corresponding predictive covariance matrix is given by

It is obvious that the predictive covariance is no longer a diagonal matrix. Using Equation (9), we can obtain the diagonal element of covariance matrix (10). For each , the off-diagonal elements satisfy

Given , and are independent, then we have

where can be similarly obtained in Equation (5). For notational simplicity, define

where

Thus, we also approximate the distribution with the Gaussian distribution . More details can be found in Reference [51].

3. Multisensor Estimation Fusion

In this section, an essential prerequisite is that the transition function (1) and the measurement functions (2) are either not known or no longer accessible. Thus, we model the latent functions with Gaussian processes. For the N-sensor dynamic systems (1) and (2), suppose that we have obtained some training data of the target state and sensor measurement. In the following, we discuss the centralized and distributed estimation fusion methods to estimate the state from a sequence of noisy sensor measurements.

3.1. Centralized Estimation Fusion

First, we consider the centralized estimation fusion method. To facilitate fusion, we stack the multisensor measurement information as follows

Thus the dynamic system (1) and (2) can be rewritten as

where the covariance of the noise is given by

Considering the Gaussian process dynamic system setup, two Gaussian process models can be trained using evidence maximization. models the mapping and models the mapping .

As we know, the key of the estimation is to recursively infer the posterior distribution over the state of the dynamic system (13)–(14), which are based on all the sensor measurements , including the prediction step and the update step. In particular, the prediction step uses the posterior distribution from the previous time step to produce a predictive distribution of the state at the current time step. In the update step, the current predictive distribution is combined with current measurement information to refine the posterior distribution at the current time step. Next, centralized estimation fusion methods with GP-ADF and GP-UKF are presented, respectively.

(1) Fusion with GP-ADF

Note that some approximation methods are required to obtain an analytic posterior distribution for the nonlinear dynamic systems. In particular, for the Gaussian process dynamic system, it is easy to make some Gaussian approximation to the posterior distribution. GP-ADF exploits the fact that the true moments of the Gaussian process predictive distribution can be computed in closed form. The predictive distribution is approximated by a Gaussian with the exact predictive mean and covariance [7]. Based on the following lemma, we present the centralized estimation fusion method with GP-ADF.

Lemma 1.

Remark 1.

Since GP-ADF algorithm [7] is a state estimation method in the single sensor Gaussian process dynamic system, it is applicable to the multisensor system with all the sensor measurements stacked into a vector. Therefore, Equation (15) and Equation (16) are called the centralized estimation fusion method with GP-ADF here.

Then, let us present the prediction step and update step of the above fusion method in detail.

● Prediction Step

First, this step needs to use the previous posterior distribution to produce a current predictive distribution . We write the predictive distribution as

and is a Gaussian distribution due to and . From this, an analogy with Equation (7) yields the mean and the variance of the state conditioned on the measurement . In particular,

is a D-dimension mean vector and the computation of every target dimension can be seen in Equation (8). The corresponding covariance matrix

where is the covariance matrix of transition noise obtained by the evidence maximization method, and the computation of can be seen in Equation (10).

● Update Step

Next, we approximate the joint distribution with a joint Gaussian , where

Note that we are not aiming at approximating the distribution directly. To obtain the joint approximation Gaussian distribution, we use the Gaussian distribution to approximate the distribution , which can be done in a same way to the prediction step due to

On the other hand, the cross term satisfies

For the unknown term , we hav

where , is the normalization constant of the unnormalized SE kernel, is the mean of a new Gaussian distribution that is the product of two Gaussian density functions and is the normalization constant of the new Gaussian distribution. Consequently, from the joint distribution , we obtain the posterior distribution with the mean and variance as Equations (15) and (16), respectively.

Remark 2.

From Equations (15) and (16), we can see that the centralized fusion method with GP-ADF is similar to the unified optimal linear estimation fusion [40,54]. The difference is that the computational way of , and and they are mainly based on the training data due to the nonparametric dynamic system model.

(2) Fusion with GP-UKF

Following the single sensor GP-UKF (see e.g., References [14,26]), we present the prediction step and update step of the GP-UKF fusion.

● Prediction Step

At time , based on the unscented transform [1], we obtain containing points through the mean and covariance . Using the GP prediction model, the transformed set is given by

and the process noise is computed as

where and are the mean and covariance models with respect to the Gaussian process . The predictive mean and covariance are

where is the weight generated in the unscented transform. Using the predictive mean and covariance , we obtain through the unscented transform. Thus, based on the GP observation model,

and the observation noise matrix is determined by

Then the predicted observation and innovation covariance are calculated by

Meanwhile, the cross covariance is given by

● Update Step

Finally, the update can be obtained by the following equations:

Remark 3.

Note that GP-ADF propagates the full Gaussian density by exploiting specific properties of Gaussian process models [7]. GP-UKF maps the sigma points through the Gaussian process models instead of the parametric functions and the distributions are described by finite-sample approximations. In contrast to GP-UKF, GP-ADF is consistent and moment preserving [7]. In addition GP-EKF requires linearization of the nonlinear prediction and observation models in order to propagate the state and observation, respectively [14]. GP-PF needs to perform one Gaussian process mean and variance computation per particle and has very high computational cost. For more details about these single sensor filtering methods, it can be seen in [7,14], and so forth. Due to the linearization loss of GP-EKF and high computational cost of GP-PF, we do not consider them and only introduce the multisensor estimation fusion with GP-ADF and GP-UKF in this paper.

3.2. Distributed Estimation Fusion

In this subsection, we mainly present the distributed estimation fusion with GP-ADF. For the dynamic systems (1) and (2), assume that there is a prior on initial state and each local sensor sends its estimation to the fusion center. Thus, the posterior distribution of local state estimation is a Gaussian distribution , where and are calculated as follows

with

Then, the local posterior mean and covariance are transmitted to the fusion center to yield the posterior distribution of global state estimation.

In general, the centralized estimation fusion method with directly fusing raw data has better fusion performance than the distributed estimation fusion method based on the processed data. However, in some cases, the distributed estimation fusion is equivalent to the centralized estimation fusion. In particular, in what follows, we propose a distributed estimation fusion method and prove that the distributed estimation fusion method is equivalent to the above centralized estimation fusion method when all cross terms , , have full column rank.

Proposition 1.

Assume that all cross terms , are full column rank, the distributed estimation fusion is equivalent to the centralized estimation fusion as follows

where

and

is an appropriate partition of matrix such that Equation (35) holds. The superscript “+” stands for pseudoinverse.

Proof.

According to Equation (15), the mean of centralized fused state is given by

Based on the assumption that all have full column rank, we obtain

Thus, combining Equations (37) and (38) and Equation (39) yields

In order to obtain the centralized estimation mean, that is, fuse the mean of local sensor state estimation at the fusion center, we use Equations (32) and (40) to eliminate from Equation (36). From Equation (32), we have

Thus, substituting Equation (41) into Equation (40) and recalling Equations (36)–(38), we have

Therefore, we obtain the state mean of the distributed estimation fusion. □

Remark 4.

The proposed distributed estimation fusion formula is equivalent to the centralized estimation fusion, therefore, the two fusion methods have the same fusion performance. However, distributed estimation fusion is more desirable in real applications in terms of reliability, survivability, communication bandwidth and computational burdens. From Equation (35), we can see that , , , , can be calculated in advance and the terms , , , , need to be transmitted by local sensors in real time.

Remark 5.

From the update step (30) of the centralized estimation fusion with GP-UKF, the distributed estimation fusion method (35) is also suitable for the GP-UKF. Since the detailed description and proof for the distributed GP-UKF fusion are similar to Proposition 1, we omit the results. Note that the distributed GP-UKF fusion is different from the distributed GP-ADF fusion in the prediction step.

4. Numerical Examples

In this section, we assess the performance of our fusion methods with two different examples.

4.1. 1D Example

We consider the nonlinear dynamic system with two sensor measurements as follows:

where are given by and , respectively. This nonlinear system is popularly used in many papers (see References [7,10]) to measure the performance of nonlinear filters. We regard the first 200 samples as the training data and use the rest 50 samples called testing data to test the fusion methods. Assume that the is a Gaussian distribution with prior mean and variance . The estimation performance of the single sensor and multisensor fusion is evaluated by the average Mahalanobis distances that are also used in Reference [7]. The Mahalanobis distance, which is defined between the ground truth and the filtered mean, is as follows:

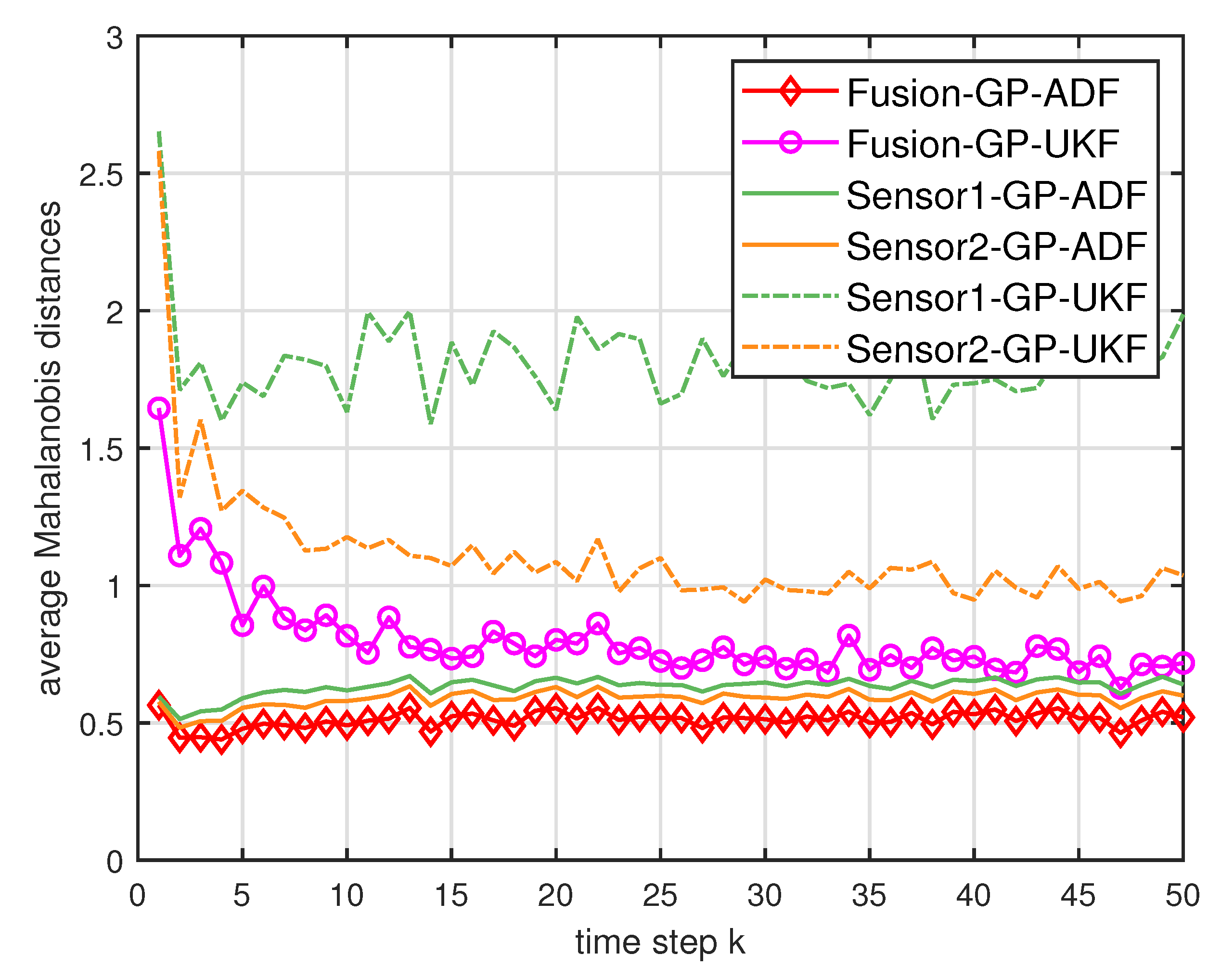

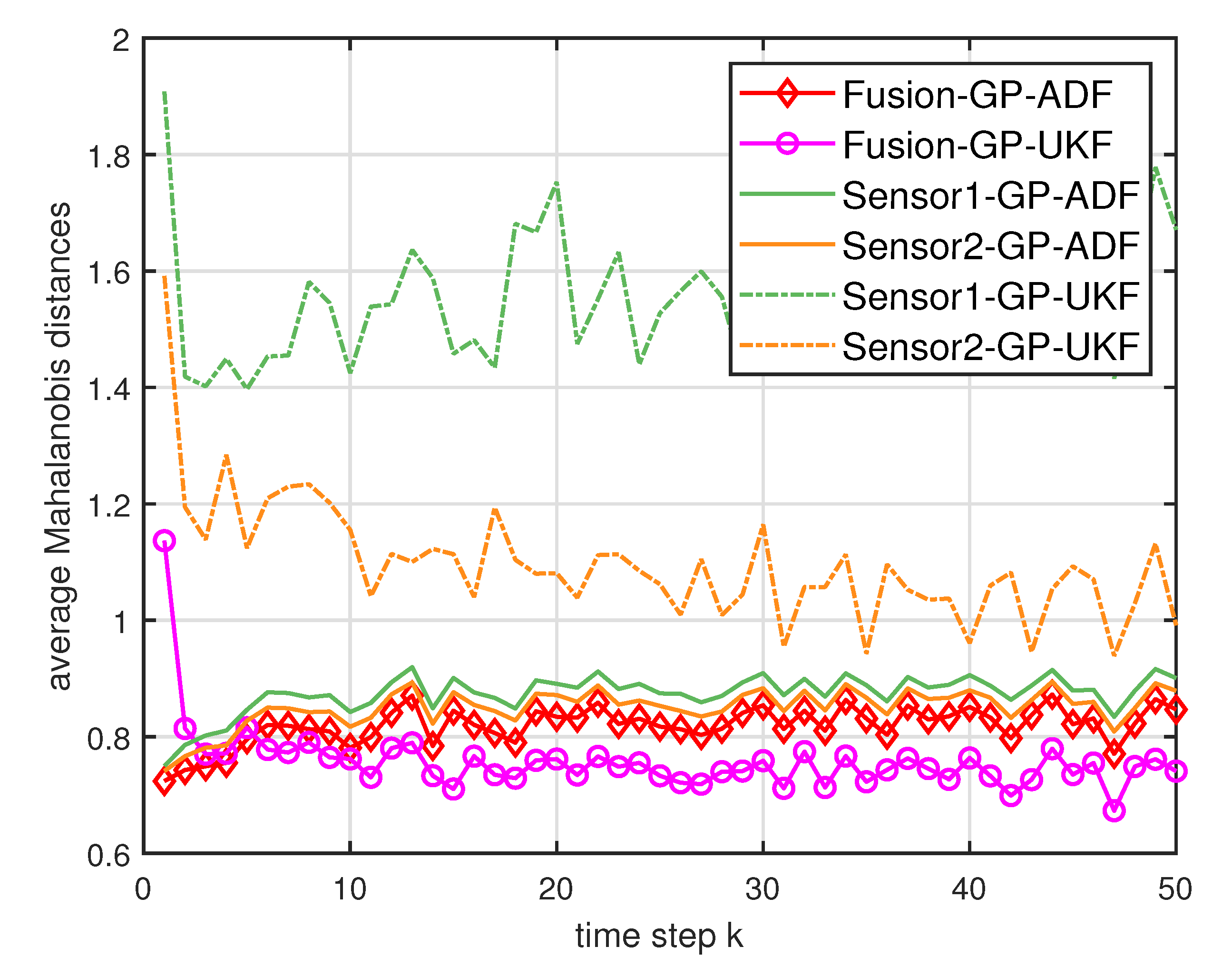

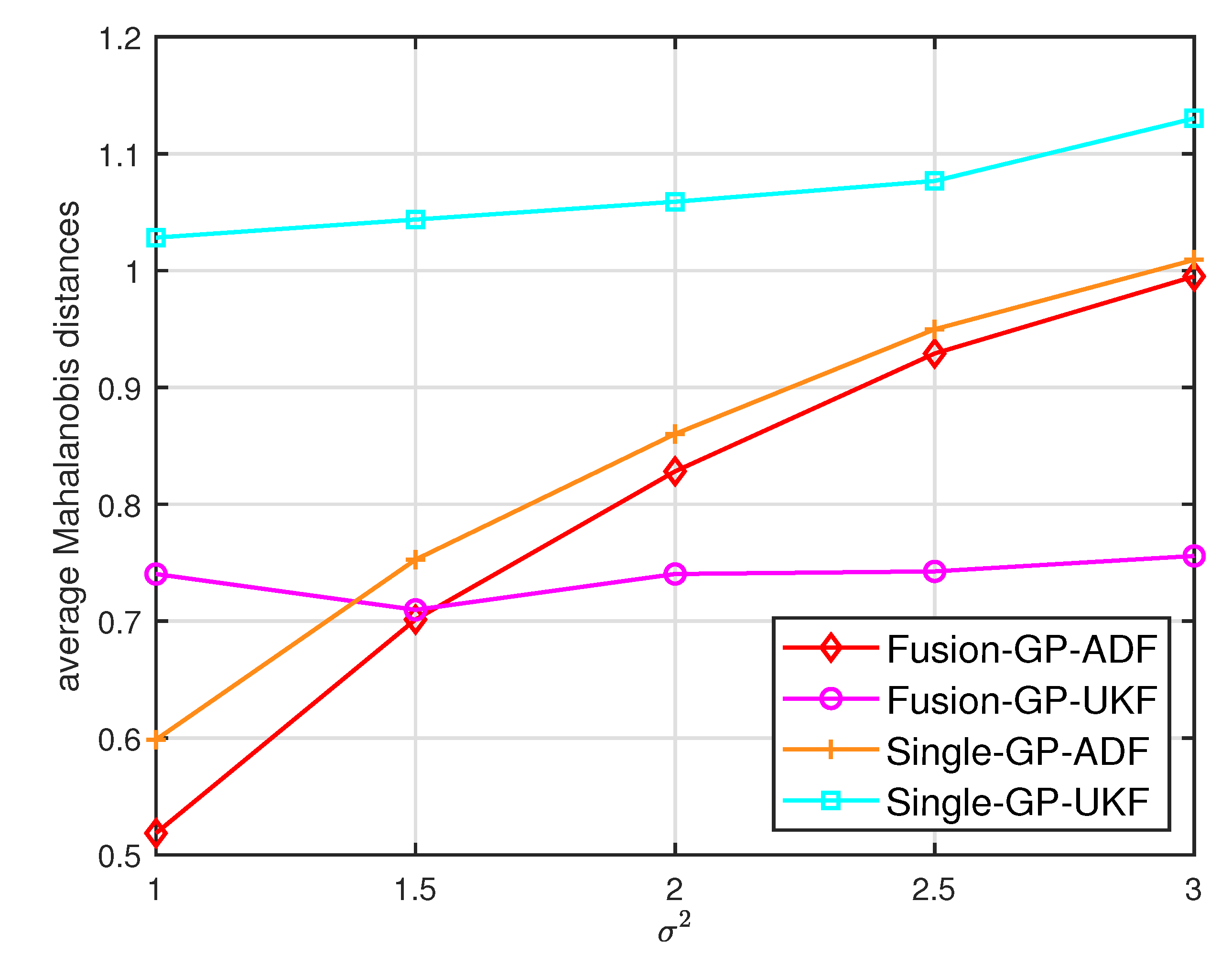

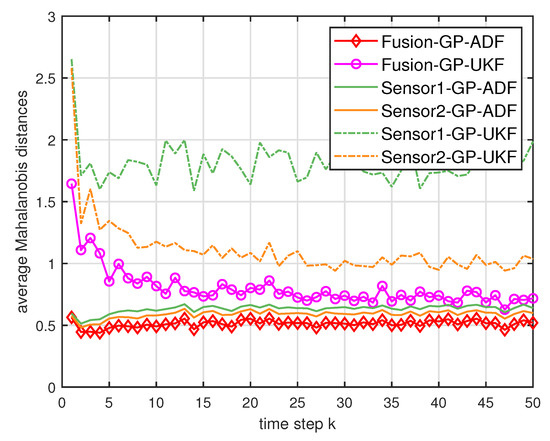

where is the estimated covariance. For the Mahalanobis distance, lower values indicate better performance [7]. Figure 2 and Figure 3 show the average Mahalanobis distances of the single filter with GP-ADF and GP-UKF, and the fusion methods after 1000 independent runs. Figure 4 shows the average Mahalanobis distances for different system noises.

Figure 2.

The average Mahalanobis distances of the single sensor and multisensor fusion with .

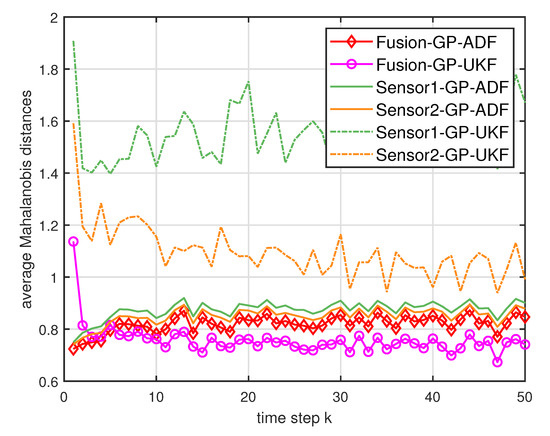

Figure 3.

The average Mahalanobis distances of the single sensor and multisensor fusion with .

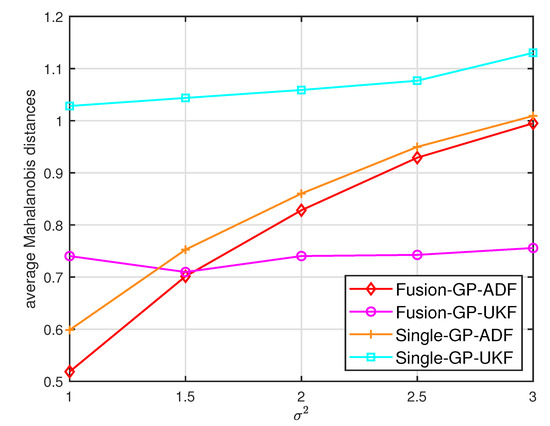

Figure 4.

The average Mahalanobis distances for different system noises .

According to the average Mahalanobis distances in Figure 2 and Figure 3, we can see that the estimation performance of Sensor 2 is better than that of Sensor 1. Furthermore, the multisensor estimation fusion methods perform better than the single sensor filters with GP-ADF and GP-UKF, respectively. Thus the effectiveness and advantages of the multisensor estimation fusion with Gaussian process can be observed. In addition, GP-ADF fusion performs better than GP-UKF fusion for the system noise and GP-UKF fusion performs better than GP-ADF fusion for the system noise . It means that GP-ADF fusion and GP-UKF fusion have their comparative advantages for different system noises. It suggests that GP-ADF fusion may be a better choice for the small system noise and GP-UKF fusion may be worth considering for the relatively large system noise. From Figure 4, we can see that the proposed fusion methods enjoy better performance for different system noises. It demonstrates the consistence of our fusion methods for different system noises.

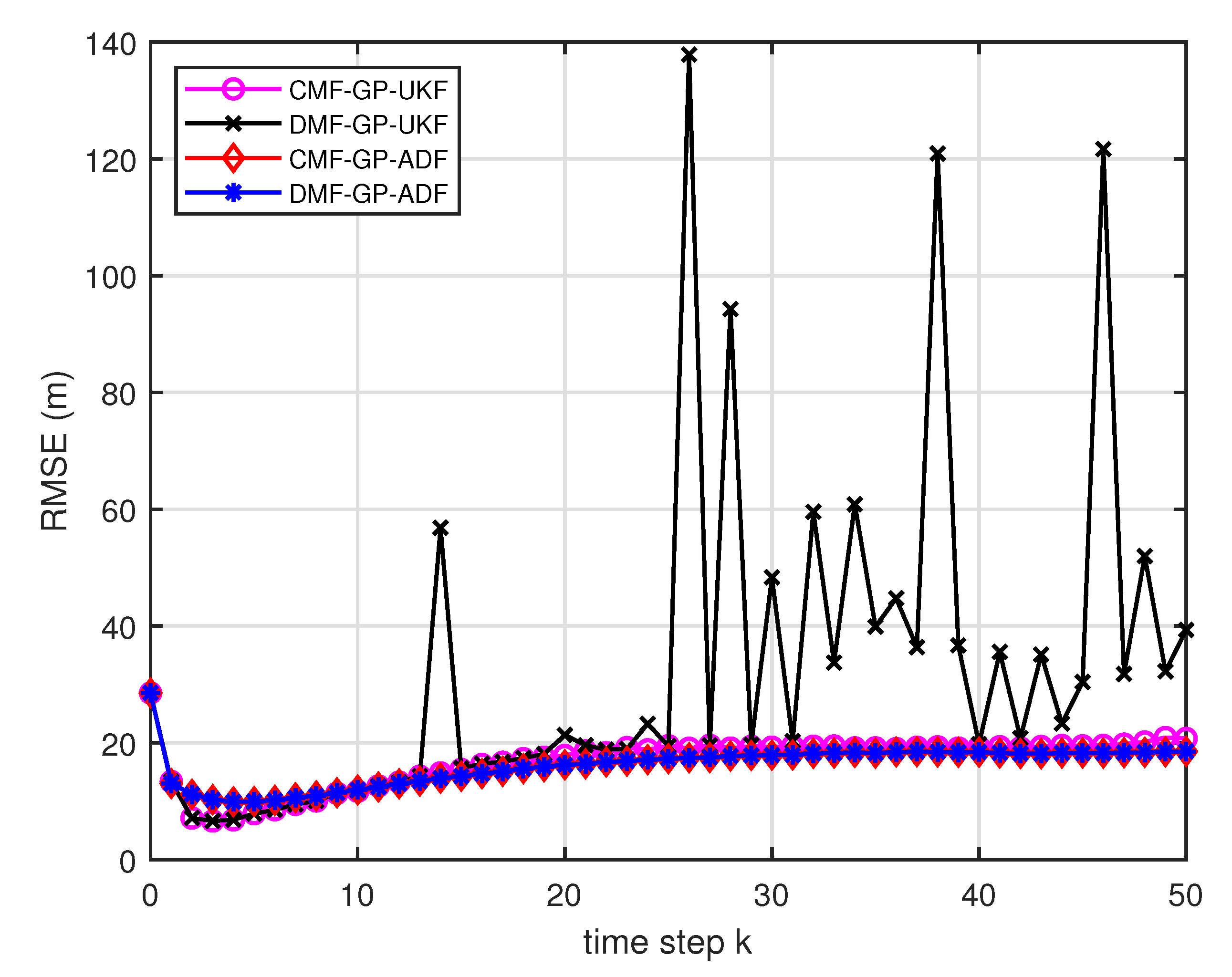

4.2. 2D Nonlinear Dynamic System

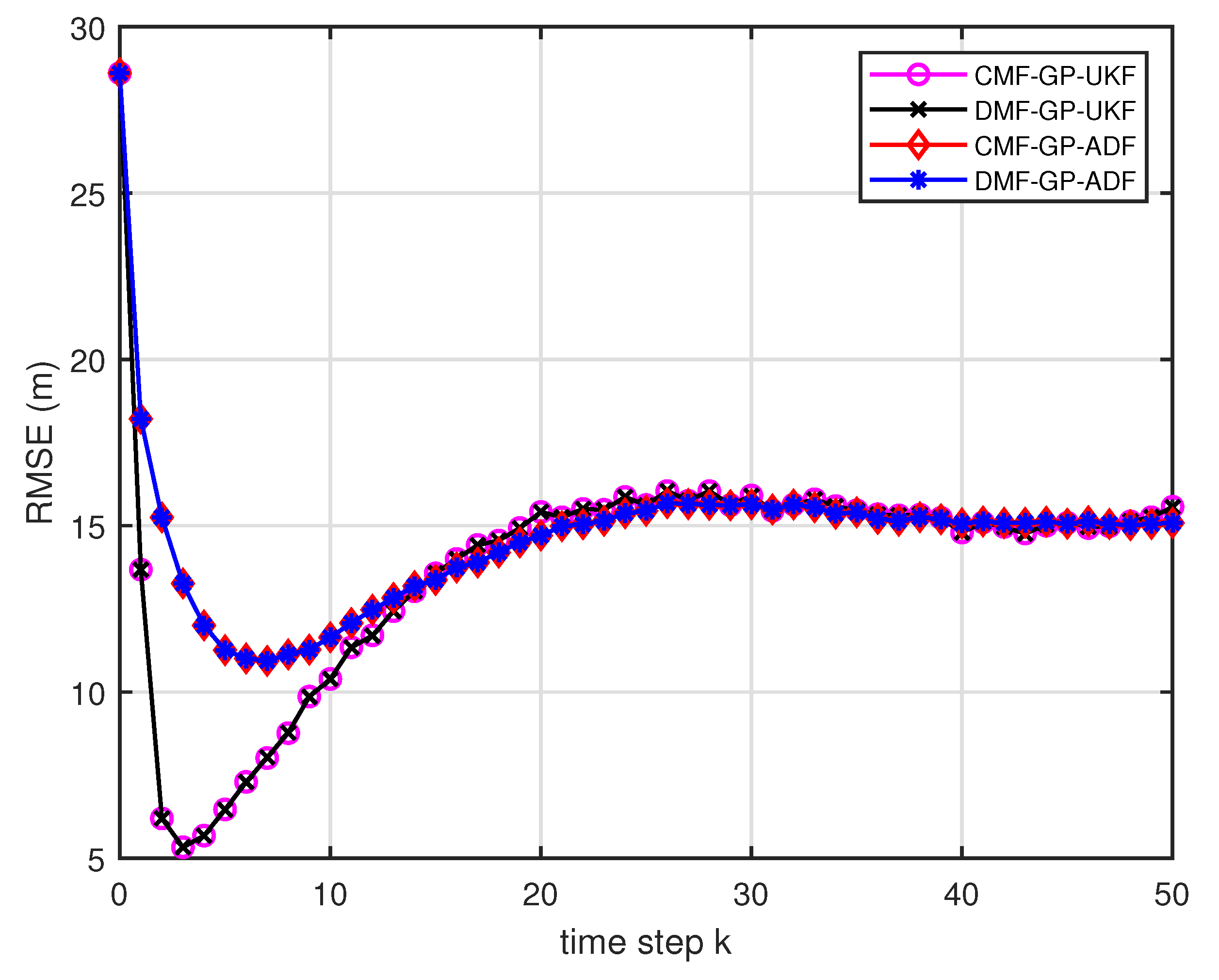

In this subsection, we elaborate the performance of the fusion methods with GP-ADF and GP-UKF, and we consider the example with constant turn motion model in target tracking. The root mean square error (RMSE) is used as the estimation performance measure. It is defined as follows:

where M is the total number of simulation runs, is the true simulated state for the jth simulation, and is the estimated state value for the jth simulation at time k, . The lower the value of the RMSE is, the better the performance of corresponding method is.

4.2.1. Experimental Setup

We consider the constant turn motion model with two sensors as follows:

where the state transition matrix is given by

with angular velocity , the covariance matrix of transition noise satisfies

and the covariance matrix of measurement noise is

The sensor positions are given by and , respectively.

We use the transition function (46) and measurement functions (47) to simulate a group of data. The values of angular velocity are given by and , respectively, which correspond to the small turn motion model and large turn motion model. The first samples are regarded as the training data and the rest 50 samples called testing data are used to test the fusion methods. The are the true simulated state and two-sensor observations used to train the models. Assume that the is a Gaussian distribution with the prior mean and the identity matrix variance . The initial value of filtering for each sensor is set as the Cartesian coordinate transformation value based on the Polar coordinate observation with a random perturbation for each dimension. We use the random perturbation for the training data case and for the training data cases, respectively. The initial value of fusion filtering is the mean of the initial values of all sensors. Based on the available observation information, the outputs of GP-ADF and GP-UKF for each single sensor are the mean and covariance terms , , , , ,, . The distributed fusion methods, the RCC-CI algorithm [37] and the convex combination method [43], are also used as a comparison with our distributed fusion method. The RCC-CI algorithm and the convex combination method directly use the estimated mean and covariance matrix to fuse the estimated results. Our methods take full advantages of the results of the single sensor Gaussian process filters such as the cross term which is easily obtained. Note that all of the fusion methods are based on the local estimates that are distilled from the measurements. Thus, the comparison is fair from the available observation information. The simulation results of the RCC-CI algorithm are based on the YALMIP toolbox [55]. We compare the fusion methods with the RCC-CI algorithm and the convex combination method by RMSE after independent simulation runs. Meanwhile, we also compare the computation time of the multisensor estimation fusion methods with the RCC-CI algorithm and the convex combination method. The ratio of full rank, defined as the percentage of full column rank of the cross term for the single sensor filtering at every time step after independent runs, is used to test the condition of equivalence. If the ratio of full rank is less than , the condition is broken. The simulations are done under Matlab (MathWorks, Inc., Natick, MA, USA) R2018b with ThinkPad W540.

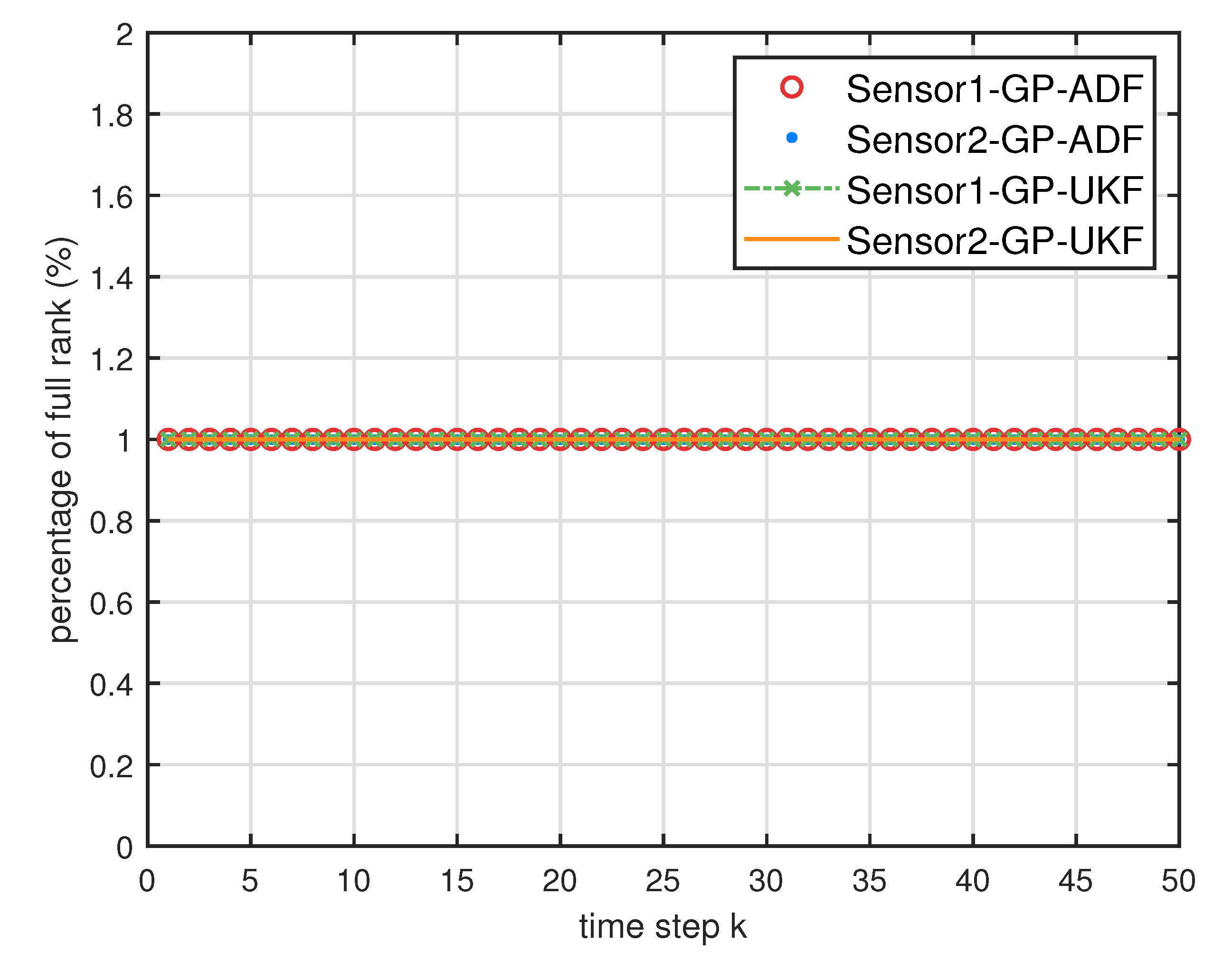

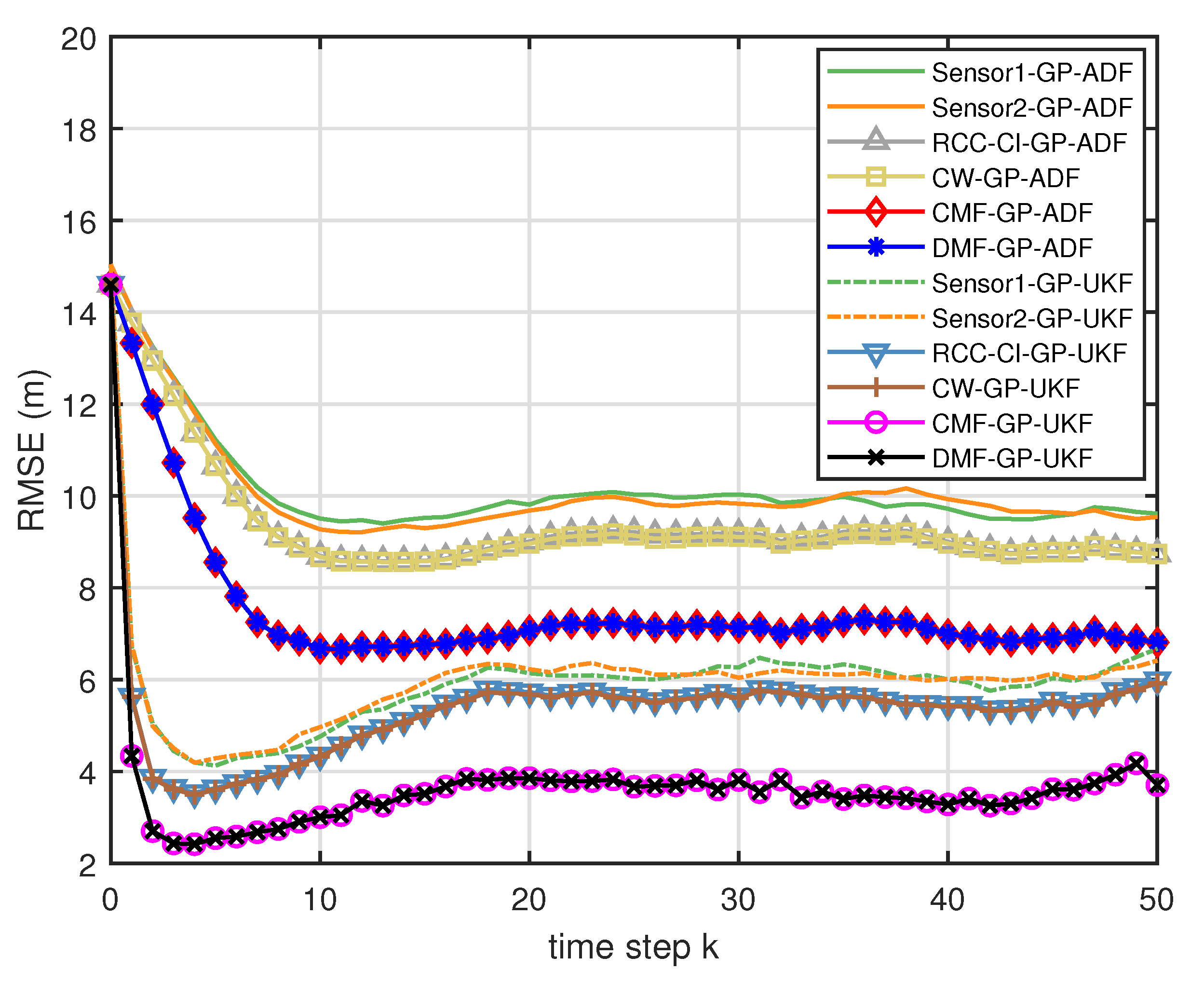

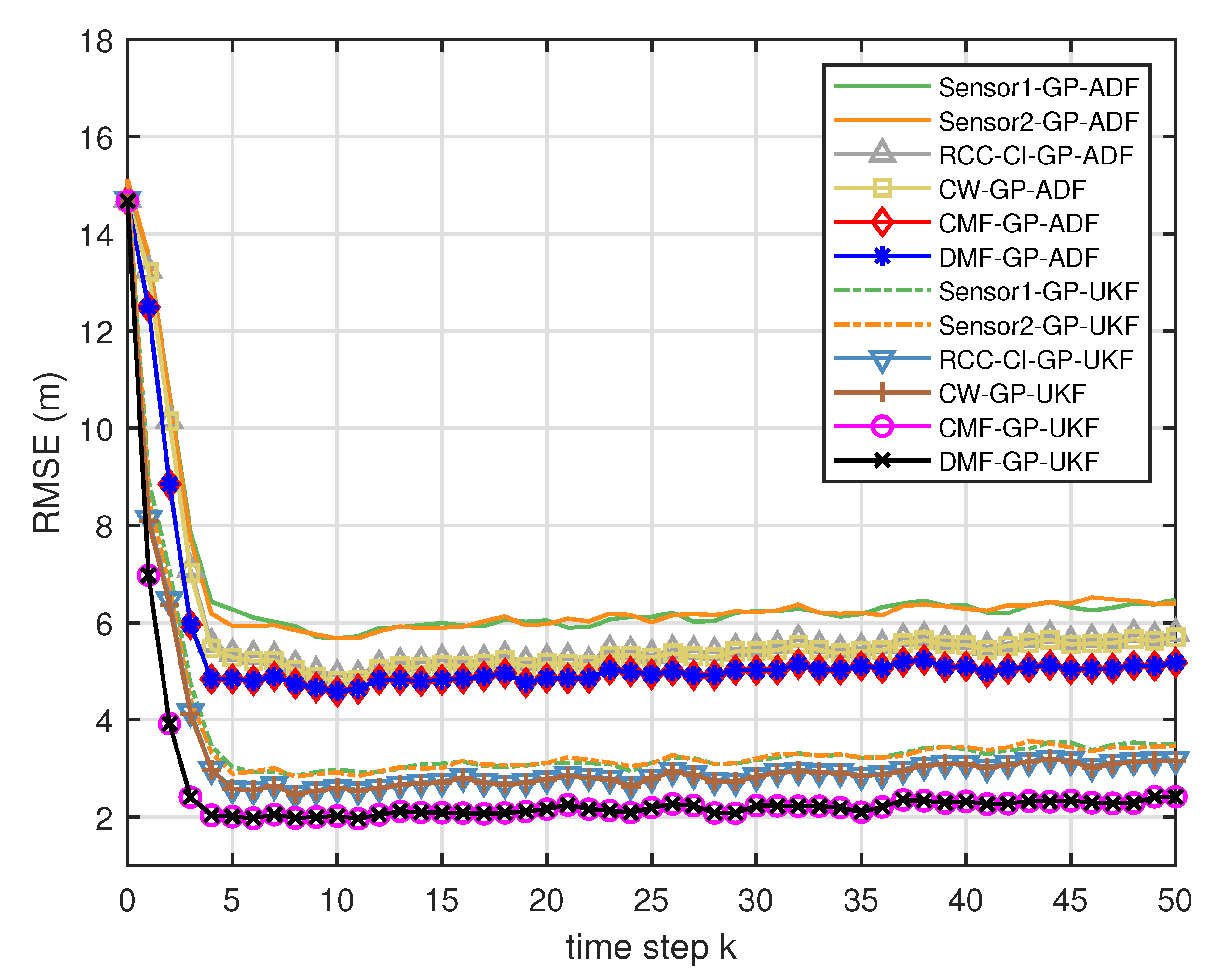

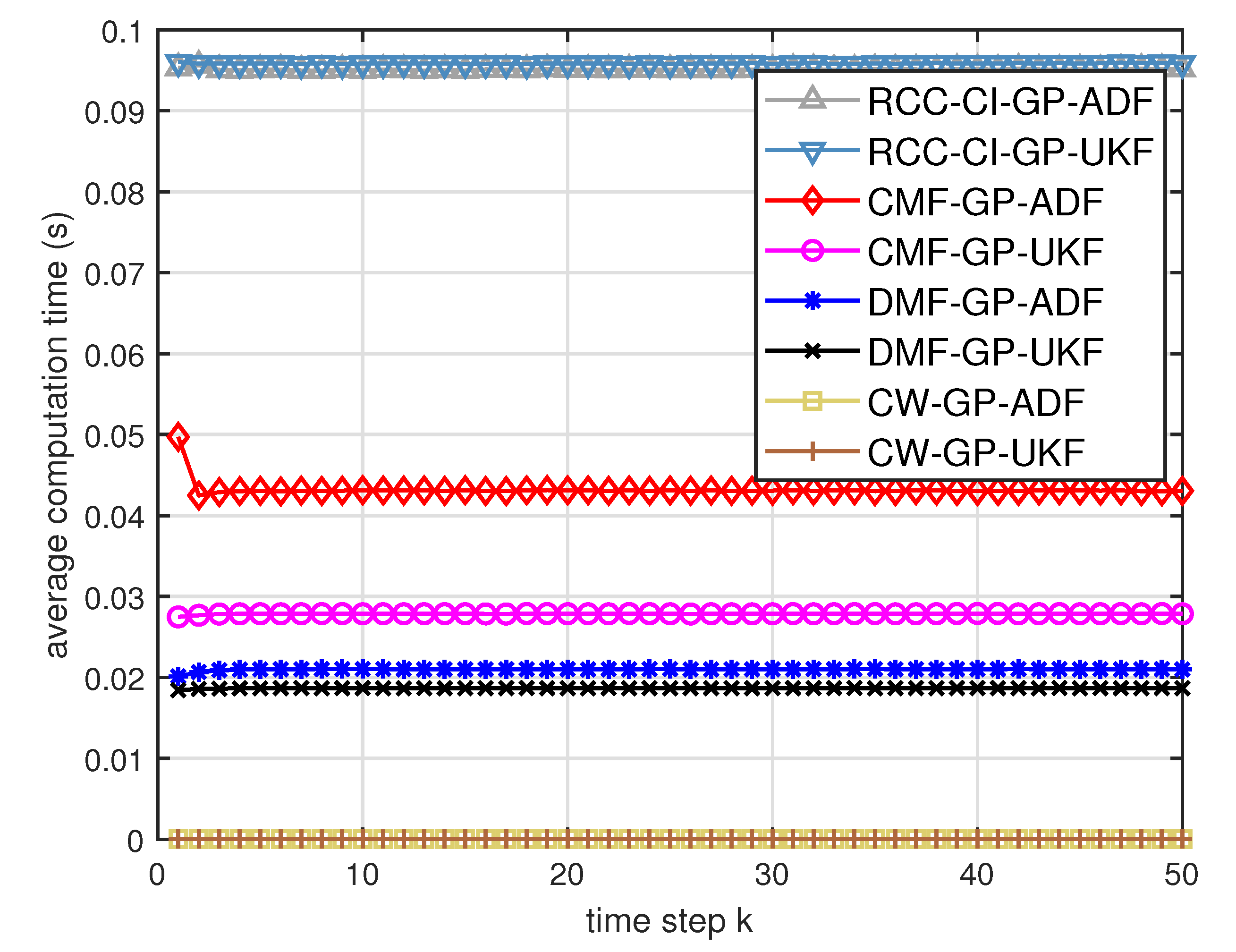

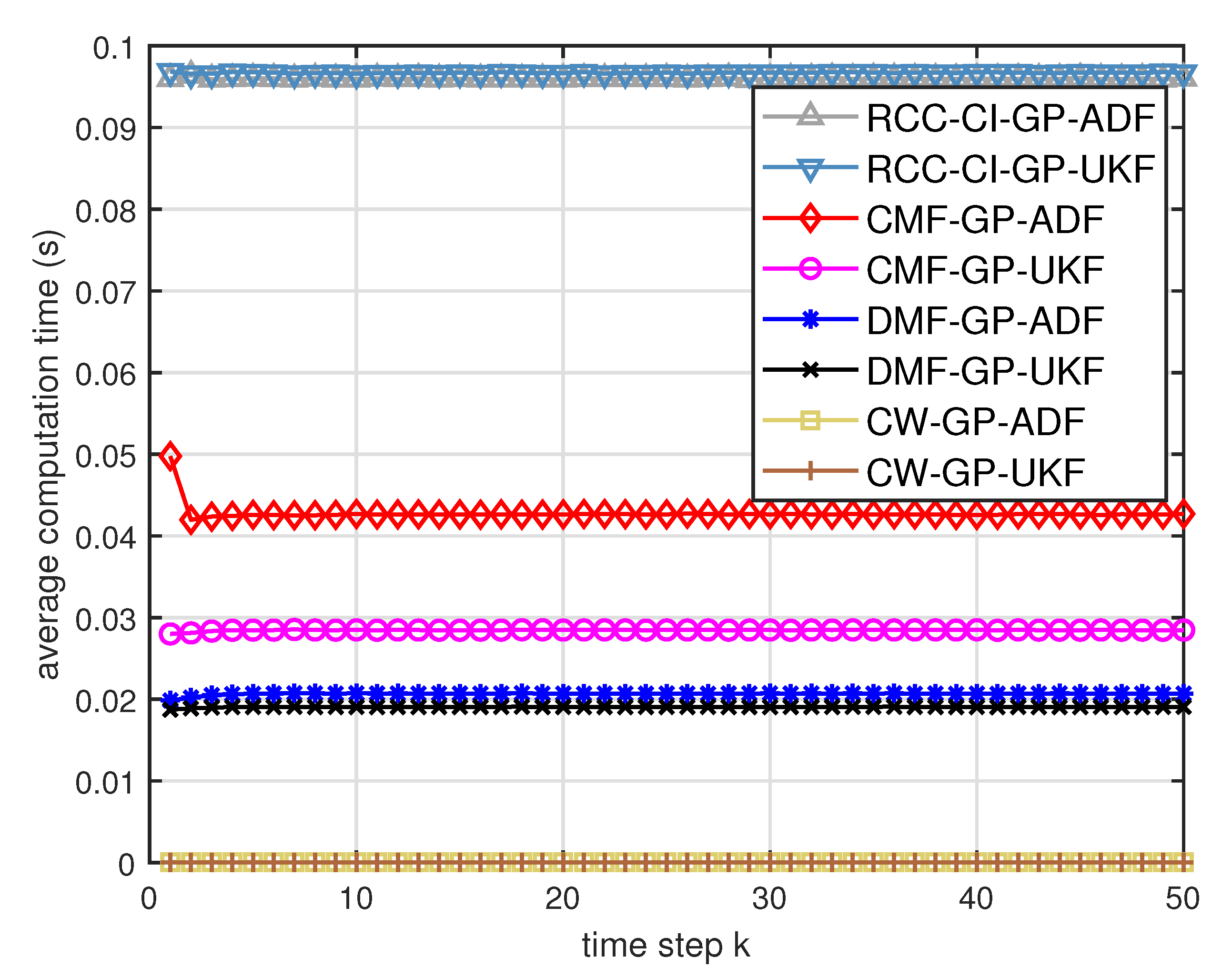

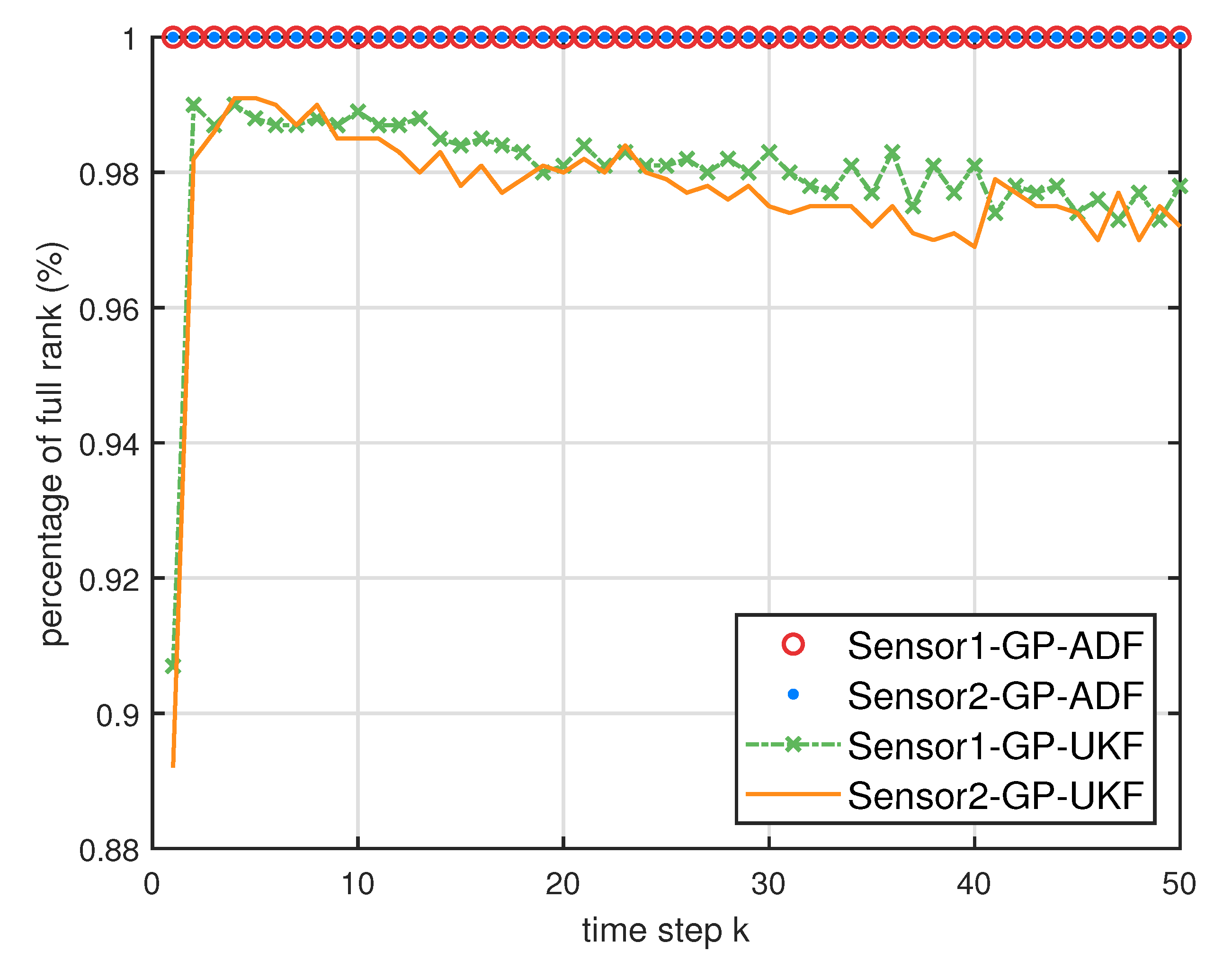

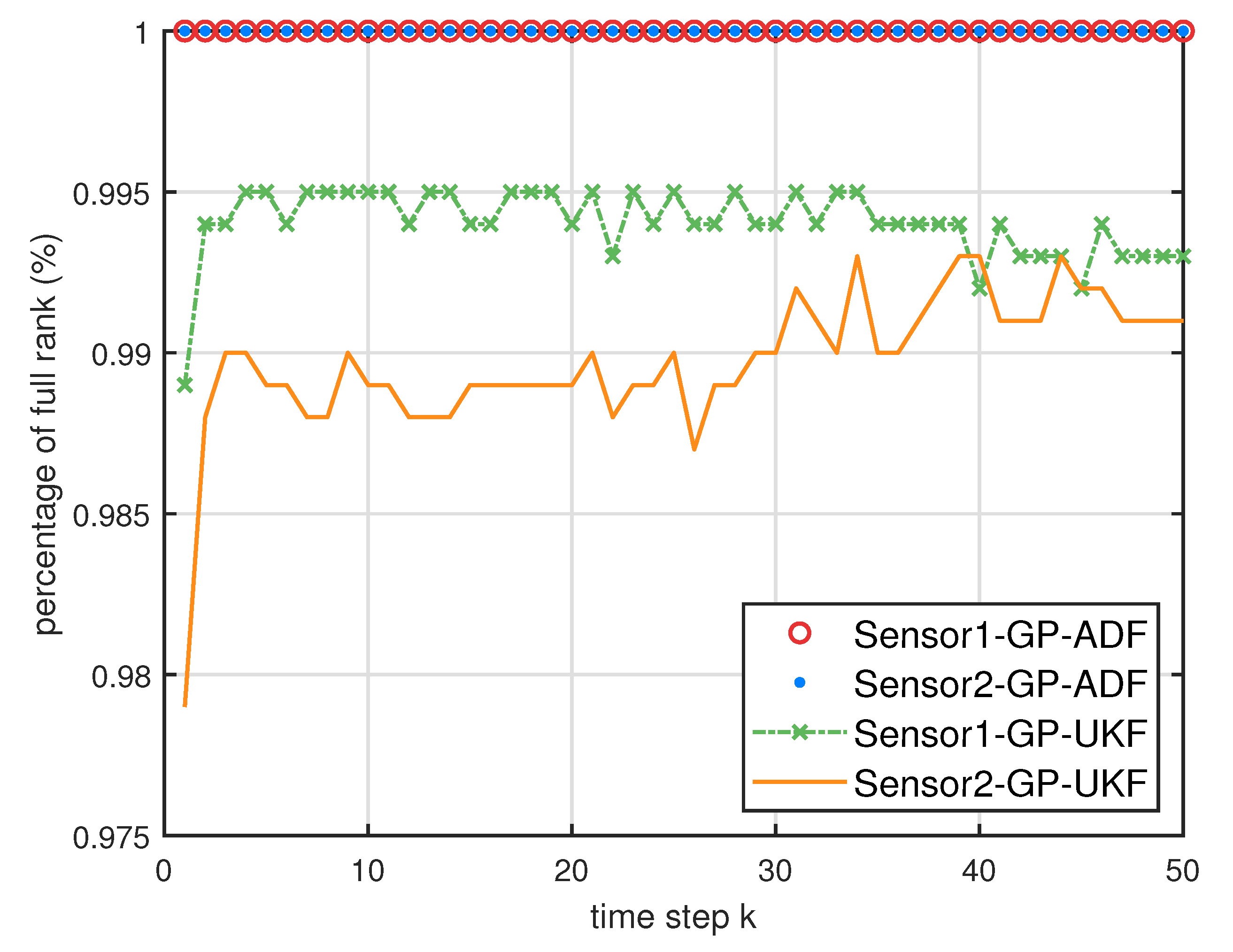

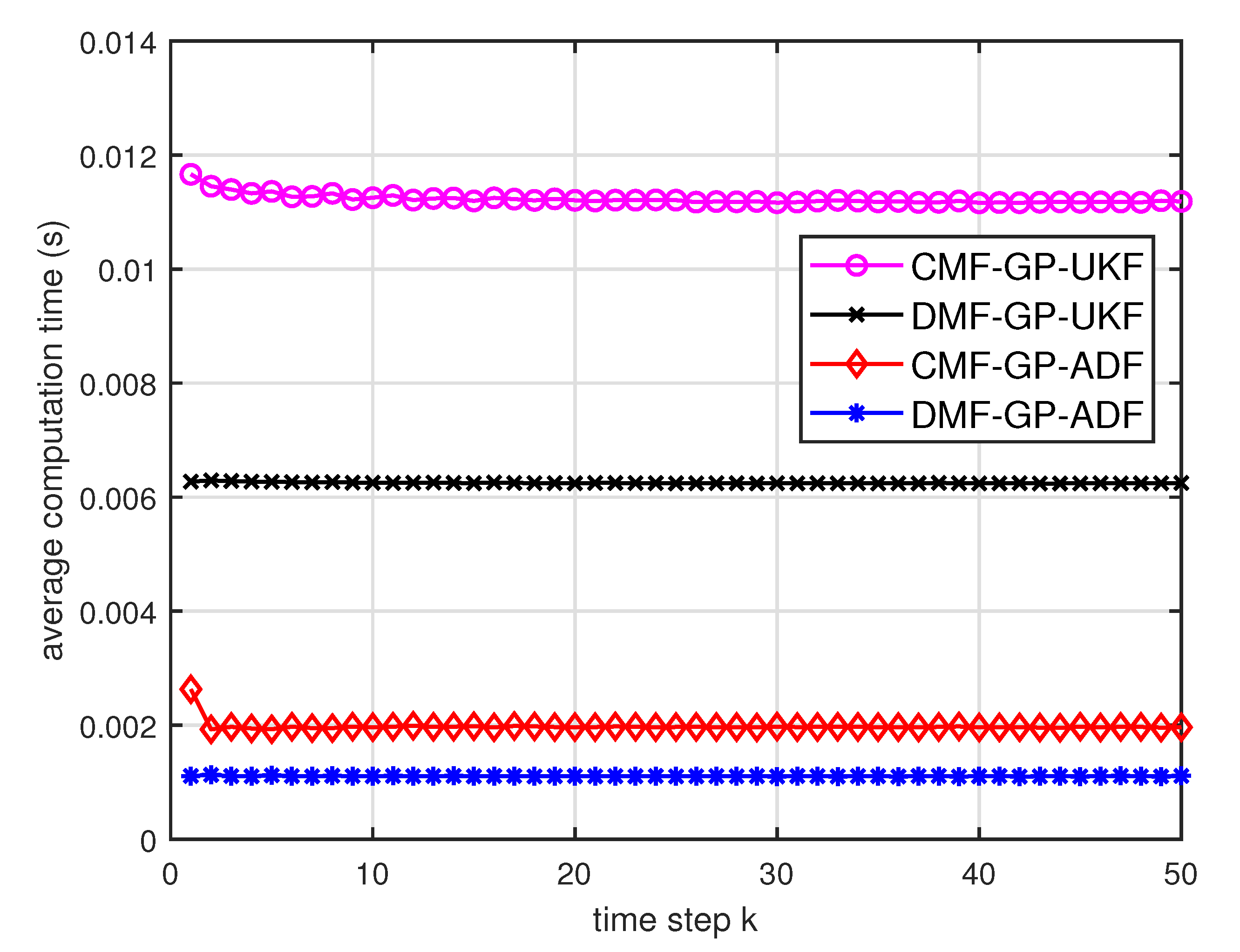

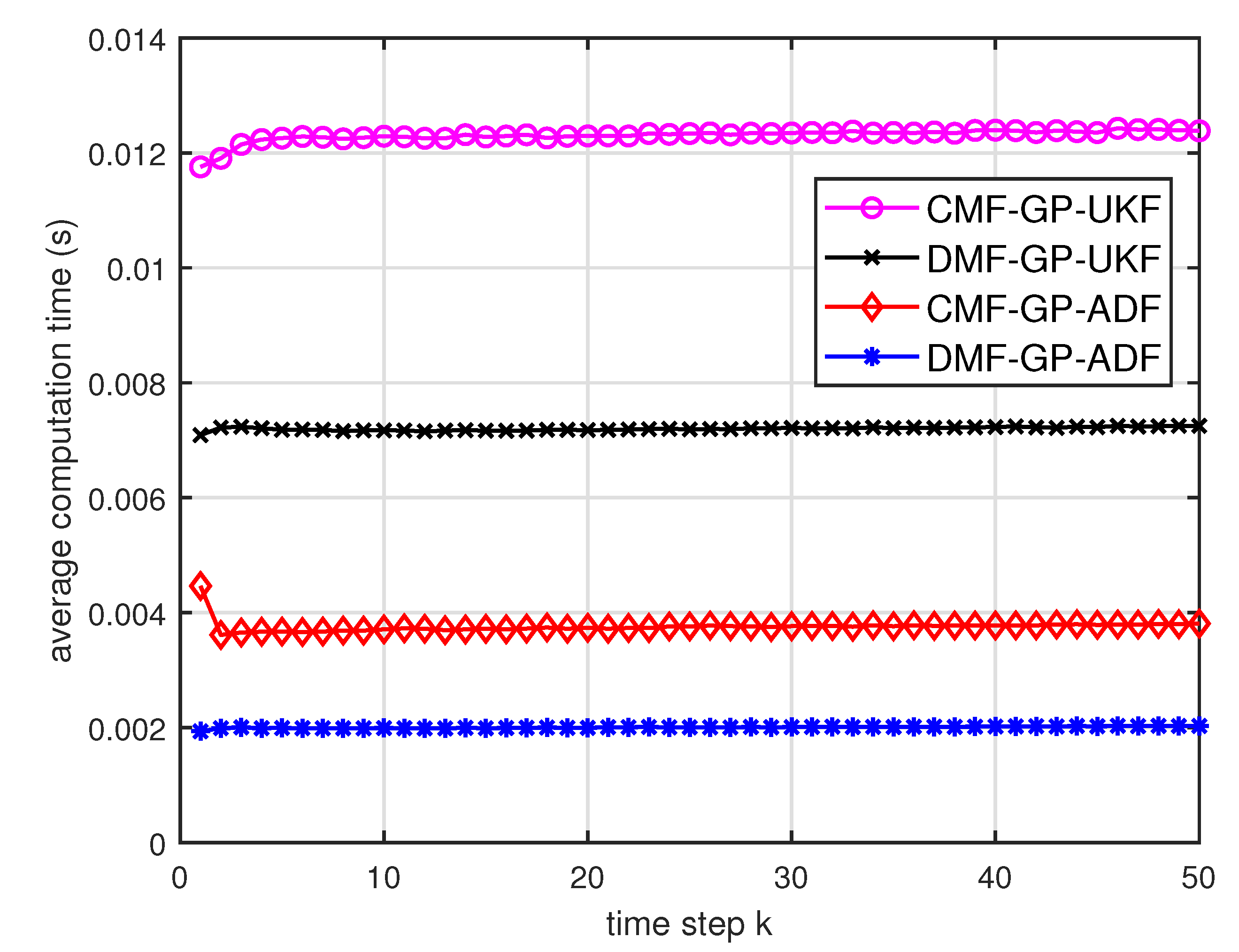

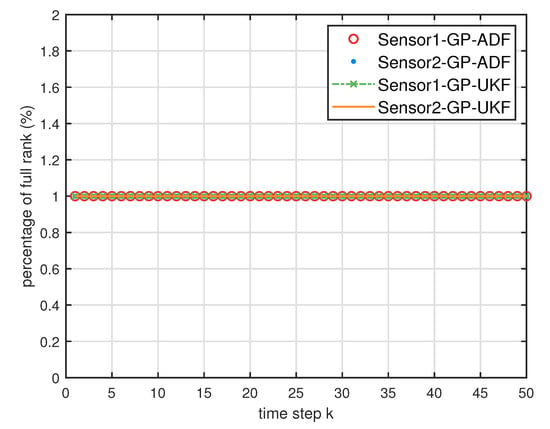

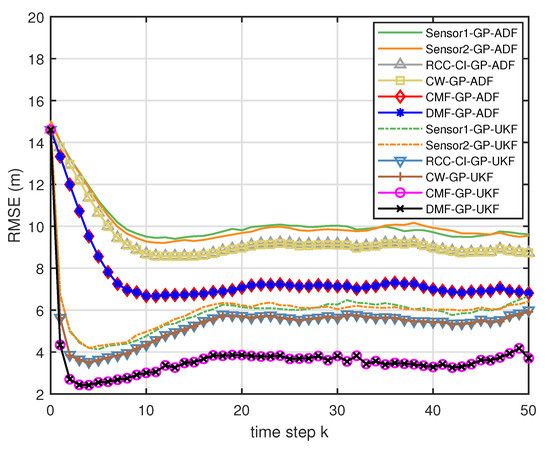

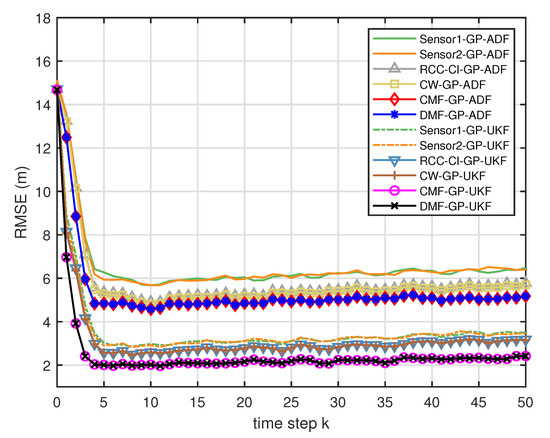

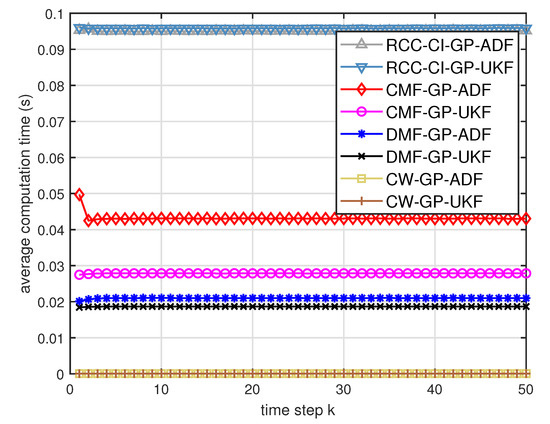

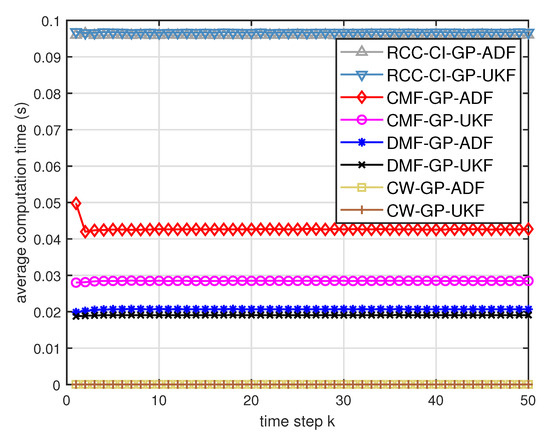

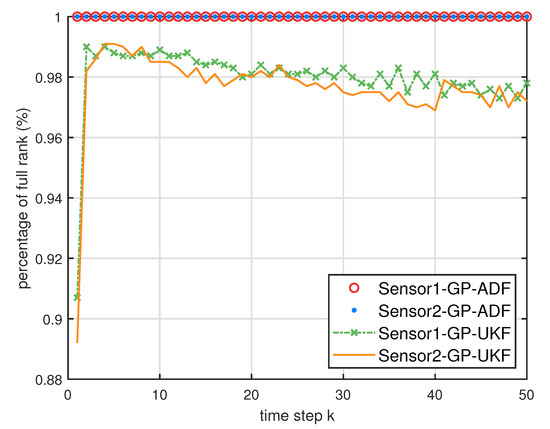

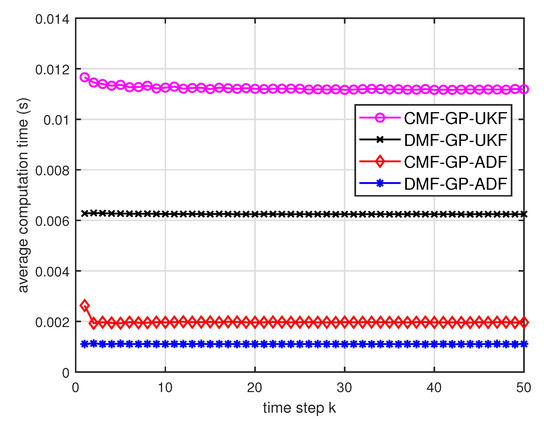

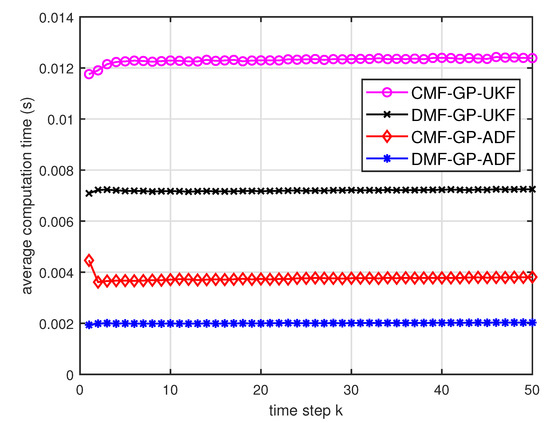

Figure 5 describes the ratios of full column rank in the single sensor case for testing data with training data and . The RMSEs for in the case of training data are depicted in Figure 6 and Figure 7, respectively. The average computation time of these fusion methods for in the case of training data after 1000 independent simulation runs are depicted in Figure 8 and Figure 9, respectively. The diamond line represents the RMSE of the centralized estimation fusion with GP-ADF (CMF-GP-ADF) and the star line represents the distributed estimation fusion with GP-ADF (DMF-GP-ADF). The circle line represents the RMSE of the centralized estimation fusion with GP-UKF (CMF-GP-UKF) and the x line represents the distributed estimation fusion with GP-UKF (DMF-GP-UKF). The upper triangle line represents the RMSE of the RCC-CI fusion algorithm with GP-ADF (RCC-CI-GP-ADF) and the lower triangle line represents the RMSE of the RCC-CI fusion algorithm with GP-UKF (RCC-CI-GP-UKF). The square line represents the RMSE of the convex combination method, that is, covariance weight, with GP-ADF (CW-GP-ADF) and the + line represents the RMSE of the convex combination method with GP-UKF (CW-GP-UKF). At the same time, solid line is used for the single sensor GP-ADF filter and dash-dotted line is used for the single GP-UKF filter. Similarly, the ratios of full column rank in the single sensor case for testing data with training data and are depicted in Figure 10 and Figure 11, respectively. The RMSEs for in the case of training data are depicted in Figure 12 and Figure 13, respectively. The average computation time of these fusion methods for testing data with training data and after 1000 independent simulation runs are depicted in Figure 14 and Figure 15, respectively.

Figure 5.

The ratios of full column rank of the cross term in the single sensor case for testing data with training data and .

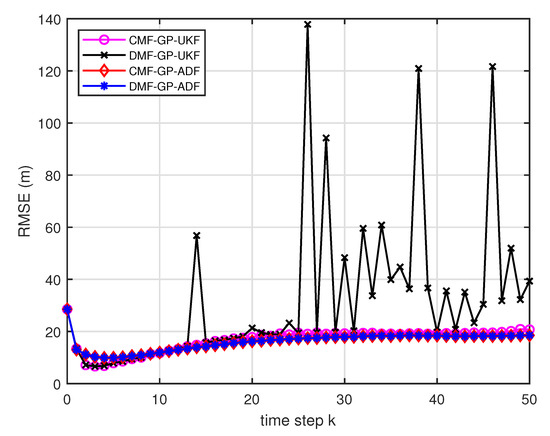

Figure 6.

The RMSE for testing data with training data and .

Figure 7.

The RMSE for testing data with training data and .

Figure 8.

Average computation time of the three fusion methods for testing data with training data and .

Figure 9.

Average computation time of the three fusion methods for testing data with training data and .

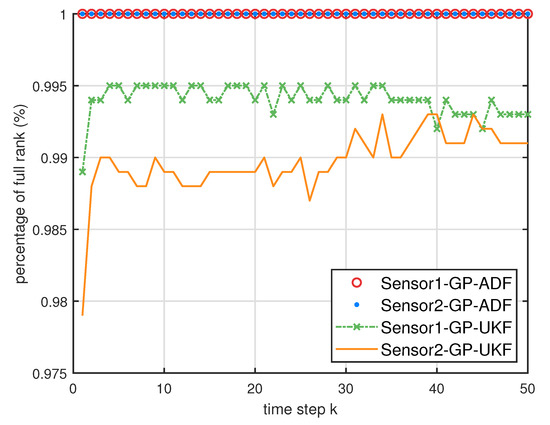

Figure 10.

The ratios of full column rank of the cross term in the single sensor case for testing data with training data and .

Figure 11.

The ratios of full column rank of the cross term in the single sensor case for testing data with training data and .

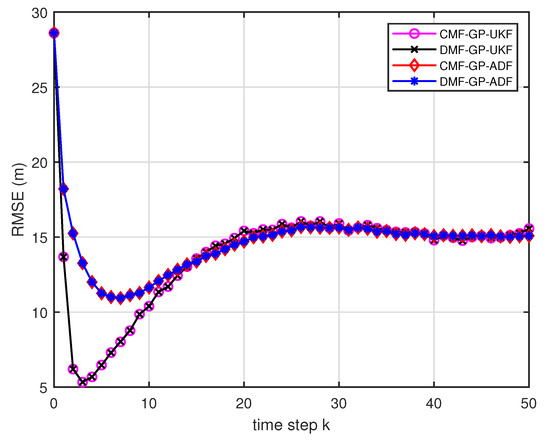

Figure 12.

The RMSE for testing data with training data and .

Figure 13.

The RMSE for testing data with training data and .

Figure 14.

Average computation time of the three fusion methods for testing data with training data and .

Figure 15.

Average computation time of the three fusion methods for testing data with training data and .

4.2.2. Experimental Analysis

We divide the experimental analysis into two cases, that is, the equivalence condition is satisfied or not. Some phenomena and analysis are described as follows:

Case 1: the equivalence condition is satisfied.

- From Figure 5, we can see that the ratios of full rank are all equal to . It means that the cross terms of the single sensor filters are all full column rank for the training data case and thus the equivalence condition of centralized fusion and distributed fusion is satisfied in Proposition 1. Meanwhile, from Figure 6 and Figure 7, the RMSE of the distributed estimation fusion is the same as that of the centralized estimation fusion based on GP-ADF and GP-UKF, respectively. It demonstrates the equivalence between the centralized estimation fusion and the distributed estimation fusion in Proposition 1 under the condition of full column rank.

- In addition, the RMSE of multisensor estimation fusion method is lower than that of the RCC-CI algorithm and the convex combination method. It implies the effectiveness of the fusion methods based on Gaussian processes. The possible reason is that our estimation fusion methods extract more extra correlation information, and the RCC-CI algorithm and the convex combination method only use the local estimates with mean and covariance.

- From Figure 8 and Figure 9, we can find that the computation time of the distributed estimation fusion is less than that of the centralized estimation fusion. It demonstrates the superiority of the distributed estimation fusion under the same fusion performance. The computation time of the proposed fusion methods is much less than that of the RCC-CI algorithm. The possible reason is due to solve a optimization problem for the RCC-CI algorithm. The convex combination method takes the least computation time, since it directly uses the weight combination with covariance.

Case 2: the equivalence condition is not satisfied.

- From Figure 10 and Figure 11, it can be seen that the ratios of full rank are both less than for the single sensor GP-UKF case with and training data. Thus, the equivalence between the centralized and distributed estimation fusion with GP-UKF is broken in Figure 12 and Figure 13, respectively. The reason may be that the Gaussian models are relatively inaccurate with less training data, which can be known from the comparison with Figure 6, Figure 12 and Figure 13 for the same methods. At the same time, the finite-sample approximation of GP-UKF seriously depends on the Gaussian process models and the computation way of the cross terms is the sum about rank-one matrices for GP-UKF. However, the equivalence is still satisfied for the GP-ADF fusion. It implies GP-ADF is more stable than GP-UKF, which is also referred to in Reference [7].

- We can also see that the performance of GP-UKF fusion is better than that of GP-ADF fusion with training data from Figure 6 and a little worse with training data from Figure 12 and Figure 13. Meanwhile, from Figure 8, Figure 14 and Figure 15, the average computation time of GP-UKF fusion is less than that of GP-ADF fusion with training data and is contrary with training data. It may inspire us that the GP-UKF fusion is suitable for the enough training data case and GP-ADF fusion does well in the small number of training data case for the turn motion systems.

In a word, distributed estimation fusion with GP-ADF is more stable, and has a better performance in the case of the small number of training data. If we have enough training data, GP-UKF fusion may be the better choice.

5. Conclusions

In the context of this paper, the property matters if the real system does not closely follow idealized models or a parametric model cannot easily be determined for nonlinear systems. A non-parametric method, the Gaussian process, is introduced to learn models from training data, since it takes both model uncertainty and sensor measurement noise into account. In order to estimate the state of the multisensor nonlinear dynamic systems, we have used the Gaussian process models as the prior of the transition and measurement function of the dynamic system. Then the transition function and measurement function have been trained with Gaussian processes, respectively. Based on the Gaussian process models for all available local sensor measurements, we have developed two fusion methods, centralized estimation fusion and distributed estimation fusion, with GP-ADF and GP-UKF, respectively. Taking full advantage of the nature of the Gaussian process, the equivalence between centralized estimation fusion and distributed estimation fusion has been derived under mild conditions. Simulations show that the equivalence is satisfied under given conditions and the multisensor estimation fusion performs better than the single sensor filters. Compared with the RCC-CI algorithm, the multisensor estimation fusion methods not only have higher accuracy, but also require less computation time. The estimation performance of multisensor estimation fusion methods is also better than that of the convex combination method. Future work may involve state initialization, Gaussian process latent variable models without ground truth states, multiple model fusion with different Gaussian processes for maneuvering target tracking and validate the methods with the real sensor data.

Author Contributions

Conceptualization, Y.L. and J.X.; Gaussian process methodology, Z.W. and Y.L.; fusion methodology, X.S. and Y.L.; data curation, J.X. and Y.L.; software, Y.L. and J.X.; validation, Y.L. and Z.W.; formal analysis, Y.L.; writing–original draft preparation, J.X. and Y.L.; writing–review and editing, Y.L.; supervision, X.S.; project administration, X.S.; funding acquisition, X.S.

Funding

This work was supported in part by the NSFC under Grant 61673282, the PCSIRT under Grant PCSIRT16R53 and the Fundamental Research Funds for the Central Universities under Grand No. 2012017yjsy140.

Acknowledgments

We would like to thank Yunmin Zhu for his insightful comments and helpful suggestions that greatly improved the quality of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Julier, S.J.; Uhlmann, J.K. Unscented filtering and nonlinear estimation. Proc. IEEE 2004, 92, 401–422. [Google Scholar] [CrossRef]

- Lan, J.; Li, X.R. Multiple conversions of measurements for nonlinear estimation. IEEE Trans. Signal Process. 2017, 65, 4956–4970. [Google Scholar] [CrossRef]

- Bar-Shalom, Y.; Li, X.R.; Kirubarajan, T. Estimation with Applications to Tracking and Navigation: Theory, Algorithms and Software; Wiley: New York, NY, USA, 2001. [Google Scholar]

- Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics; MIT Press: Cambridge, MA, USA, 2005. [Google Scholar]

- Simon, D. Optimal State Estimation: Kalman, H∞, and Nonlinear Approaches; Wiley-Interscience: New York, NY, USA, 2006. [Google Scholar]

- Arulampalam, M.S.; Maskell, S.; Gordon, N.; Clapp, T. A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking. IEEE Trans. Signal Process. 2002, 50, 174–188. [Google Scholar] [CrossRef]

- Deisenroth, M.P.; Huber, M.F.; Hanebeck, U.D. Analytic moment-based Gaussian process filtering. In Proceedings of the International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009. [Google Scholar]

- Rasmussen, C.E.; Williams, C.K.I. Gaussian Processes for Machine Learning; MIT Press: Cambridge, MA, USA, 2006. [Google Scholar]

- Huber, M.F. Nonlinear Gaussian Filtering: Theory, Algorithms, and Applications. Ph.D. Thesis, Karlsruhe Institute of Technology, Karlsruhe, Germany, 2015. [Google Scholar]

- Deisenroth, M.P.; Turner, R.D.; Huber, M.F.; Hanebeck, U.D.; Rasmussen, C.E. Robust filtering and smoothing with Gaussian processes. IEEE Trans. Automat. Control 2012, 57, 1865–1871. [Google Scholar] [CrossRef]

- Jacobs, M.A.; DeLaurentis, D. Distributed Kalman filter with a Gaussian process for machine learning. In Proceedings of the 2018 IEEE Aerospace Conference, Big Sky, MT, USA, 3–10 March 2018; pp. 1–12. [Google Scholar]

- Guo, Y.; Li, Y.; Tharmarasa, R.; Kirubarajan, T.; Efe, M.; Sarikaya, B. GP-PDA filter for extended target tracking with measurement origin uncertainty. IEEE Trans. Aerosp. Electron. Syst. 2019, 55, 1725–1742. [Google Scholar] [CrossRef]

- Preuss, R.; Von Toussaint, U. Global optimization employing Gaussian process-based Bayesian surrogates. Entropy 2018, 20, 201. [Google Scholar] [CrossRef]

- Ko, J.; Fox, D. GP-BayesFilters: Bayesian filtering using Gaussian process prediction and observation models. Autonomous Robots 2009, 27, 75–90. [Google Scholar] [CrossRef]

- Lawrence, N.D. Gaussian process latent variable models for visualisation of high dimensional data. In Proceedings of the NIPS’03 16th International Conference on Neural Information Processing Systems, Whistler, BC, Canada, 9–11 December 2003; pp. 329–336. [Google Scholar]

- Wang, J.M.; Fleet, D.J.; Hertzmann, A. Gaussian process dynamical models. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 5–8 December 2005; pp. 1441–1448. [Google Scholar]

- Ko, J.; Fox, D. Learning GP-BayesFilters via Gaussian process latent variable models. Autonomous Robots 2011, 30, 3–23. [Google Scholar] [CrossRef]

- Csató, L.; Opper, M. Sparse on-line Gaussian processes. Neural Comput. 2002, 14, 641–668. [Google Scholar] [CrossRef]

- Snelson, E.; Ghahramani, Z. Sparse Gaussian processes using pseudo-inputs. In Proceedings of the 18th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 5–8 December 2005; pp. 1257–1264. [Google Scholar]

- Seeger, M.; Williams, C.K.I.; Lawrence, N.D. Fast forward selection to speed up sparse Gaussian process regression. In Proceedings of the Workshop on AI and Statistics 9, Key West, FL, USA, 3–6 January 2003. [Google Scholar]

- Smola, A.J.; Bartlett, P. Sparse greedy Gaussian process regression. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 27 November–2 December 2000. [Google Scholar]

- Quiñonero-Candela, J.; Rasmussen, C. A unifying view of sparse approximate Gaussian process regression. J. Mach. Learn. Res. 2005, 6, 1939–1959. [Google Scholar]

- Velychko, D.; Knopp, B.; Endres, D. Making the coupled Gaussian process dynamical model modular and scalable with variational approximations. Entropy 2018, 20, 724. [Google Scholar] [CrossRef]

- Yan, L.; Duan, X.; Liu, B.; Xu, J. Bayesian optimization based on K-optimality. Entropy 2018, 20, 594. [Google Scholar] [CrossRef]

- Ko, J.; Klein, D.J.; Fox, D.; Hähnel, D. Gaussian processes and reinforcement learning for identification and control of an autonomous blimp. In Proceedings of the IEEE International Conference on Robotics and Automation, Roma, Italy, 10–14 April 2007. [Google Scholar]

- Ko, J.; Klein, D.J.; Fox, D.; Haehnel, D. GP-UKF: Unscented Kalman filters with Gaussian process prediction and observation models. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007. [Google Scholar]

- Ferris, B.; Hähnel, D.; Fox, D. Gaussian processes for signal strength-based location estimation. In Proceedings of the Robotics: Science and Systems, Philadelphia, PA, USA, 16–19 August 2006; pp. 1–8. [Google Scholar]

- Maybeck, P.S. Stochastic Models, Estimation, and Control; Academic Press, Inc.: New York, NY, USA, 1979. [Google Scholar]

- Boyen, X.; Koller, D. Tractable inference for complex stochastic processes. In Proceedings of the 14th Conference on Uncertainty in Artificial Intelligence, Madison, WI, USA, 24–26 July 1998; pp. 33–42. [Google Scholar]

- Opper, M. On-line learning in neural networks. In ch. A Bayesian Approach to On-line Learning; Cambridge University Press: Cambridge, UK, 1999; pp. 363–378. [Google Scholar]

- Liggins, M.E.; Hall, D.L.; Llinas, J. Handbook of Multisensor Data Fusion: Theory and Practice; CRC Press: Boca Raton, FL, USA, 2009. [Google Scholar]

- Bar-Shalom, Y.; Willett, P.K.; Tian, X. Tracking and Data Fusion: A Handbook of Algorithms; YBS Publishing: Storrs, CT, USA, 2011. [Google Scholar]

- Shen, X.; Zhu, Y.; Song, E.; Luo, Y. Optimal centralized update with multiple local out-of-sequence measurements. IEEE Trans. Signal Process. 2009, 57, 1551–1562. [Google Scholar] [CrossRef]

- Wu, D.; Zhou, J.; Hu, A. A new approximate algorithm for the Chebyshev center. Automatica 2013, 49, 2483–2488. [Google Scholar] [CrossRef]

- Li, M.; Zhang, X. Information fusion in a multi-source incomplete information system based on information entropy. Entropy 2017, 19, 570. [Google Scholar] [CrossRef]

- Gao, X.; Chen, J.; Tao, D.; Liu, W. Multi-sensor centralized fusion without measurement noise covariance by variational Bayesian approximation. IEEE Trans. Aerosp. Electron. Syst. 2011, 47. [Google Scholar] [CrossRef]

- Wang, Y.; Li, X.R. Distributed estimation fusion with unavailable cross-correlation. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 259–278. [Google Scholar] [CrossRef]

- Chong, C.-Y.; Chang, K.; Mori, S. Distributed tracking in distributed sensor networks. In Proceedings of the American Control Conference, Seattle, WA, USA, 18–20 June 1986; pp. 1863–1868. [Google Scholar]

- Zhu, Y.; You, Z.; Zhao, J.; Zhang, K.; Li, X.R. The optimality for the distributed Kalman filtering fusion with feedback. Automatica 2001, 37, 1489–1493. [Google Scholar] [CrossRef]

- Li, X.R.; Zhu, Y.; Jie, W.; Han, C. Optimal linear estimation fusion–Part I: Unified fusion rules. IEEE Trans. Inf. Theory 2003, 49, 2192–2208. [Google Scholar] [CrossRef]

- Duan, Z.; Li, X.R. Lossless linear transformation of sensor data for distributed estimation fusion. IEEE Trans. Signal Process. 2010, 59, 362–372. [Google Scholar] [CrossRef]

- Shen, X.J.; Luo, Y.T.; Zhu, Y.M.; Song, E.B. Globally optimal distributed Kalman filtering fusion. Sci. China Inf. Sci. 2012, 55, 512–529. [Google Scholar] [CrossRef]

- Chong, C.-Y.; Mori, S. Convex combination and covariance intersection algorithms in distributed fusion. In Proceedings of the 4th International Conference on Information Fusion, Montreal, QC, Canada, 7–10 August 2001. [Google Scholar]

- Sun, S.; Deng, Z.-L. Multi-sensor optimal information fusion Kalman filter. Automatica 2004, 40, 1017–1023. [Google Scholar] [CrossRef]

- Song, E.; Zhu, Y.; Zhou, J.; You, Z. Optimal Kalman filtering fusion with cross-correlated sensor noises. Automatica 2007, 43, 1450–1456. [Google Scholar] [CrossRef]

- Hu, C.; Lin, H.; Li, Z.; He, B.; Liu, G. Kullback–Leibler divergence based distributed cubature Kalman filter and its application in cooperative space object tracking. Entropy 2018, 20, 116. [Google Scholar] [CrossRef]

- Bakr, M.A.; Lee, S. Distributed multisensor data fusion under unknown correlation and data inconsistency. Sensors 2017, 17, 2472. [Google Scholar] [CrossRef]

- Xie, J.; Shen, X.; Wang, Z.; Zhu, Y. Gaussian process fusion for multisensor nonlinear dynamic systems. In Proceedings of the 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; pp. 4124–4129. [Google Scholar]

- Osborne, M. Bayesian Gaussian Processes for Sequential Prediction, Optimisation and Quadrature. Ph.D. Thesis, University of Oxford, Oxford, UK, 2010. [Google Scholar]

- Nguyen-Tuong, D.; Seeger, M.; Peters, J. Local Gaussian process regression for real time online model learning. In Proceedings of the International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–10 December 2008. [Google Scholar]

- Deisenroth, M. Efficient Reinforcement Learning using Gaussian Processes; KIT Scientific Publishing: Karlsruhe, Germany, 2010. [Google Scholar]

- Ghahramani, Z.; Rasmussen, C.E. Bayesian Monte Carlo. Adv. Neural Inf. Process. Syst. 2003, 15, 489–496. [Google Scholar]

- Candela, J.Q.; Girard, A.; Larsen, J.; Rasmussen, C.E. Propagation of uncertainty in Bayesian kernel models - application to multiple-step ahead forecasting. IEEE Int. Conf. Acoust. Speech Signal Process. 2003, 2, 701–704. [Google Scholar]

- Zhu, Y. Multisensor Decision and Estimation Fusion; Kluwer Academic Publishers: Boston, MA, USA, 2003. [Google Scholar]

- Öfberg, J.L. Yalmip: A toolbox for modeling and optimization in matlab. In Proceedings of the CACSD Conference, Taipei, Taiwan, 2–4 September 2004. [Google Scholar]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).