Abstract

Stochastic processes are ubiquitous in nature and laboratories, and play a major role across traditional disciplinary boundaries. These stochastic processes are described by different variables and are thus very system-specific. In order to elucidate underlying principles governing different phenomena, it is extremely valuable to utilise a mathematical tool that is not specific to a particular system. We provide such a tool based on information geometry by quantifying the similarity and disparity between Probability Density Functions (PDFs) by a metric such that the distance between two PDFs increases with the disparity between them. Specifically, we invoke the information length $\mathcal{L}(t)$ to quantify information change associated with a time-dependent PDF. $\mathcal{L}(t)$ is uniquely defined as a function of time for a given initial condition. We demonstrate the utility of $\mathcal{L}(t)$ in understanding information change and attractor structure in classical and quantum systems.

1. Introduction

Stochastic processes are ubiquitous in nature and laboratories, and play a major role across traditional disciplinary boundaries. Due to the randomness associated with stochasticity, the evolution of these systems is not deterministic but probabilistic. Furthermore, these stochastic processes are described by different variables and are thus very system-specific. This system-specificity makes it impossible to compare different processes directly. In order to understand universality or underlying principles governing different phenomena, it is extremely valuable to utilise a mathematical tool that is not specific to a particular system. Such a tool is especially needed given the diversity of stochastic processes and the growing amount of data.

Information geometry provides a powerful methodology to achieve this goal. Specifically, the similarity and disparity between Probability Density Functions (PDFs) are quantified by a metric [1] such that the distance between two PDFs increases with the disparity between them. This was the very idea behind a statistical distance [2] based on the Fisher (or Fisher–Rao) metric [3], which represents the total number of statistically different states between two PDFs in Hilbert space for quantum systems. The analysis in [2] was extended to impure (mixed-state) quantum systems using a density operator in [4]. Other related work includes [5,6,7,8,9,10,11,12]. For Gaussian PDFs, a statistically different state is attained when the physical distance exceeds the resolution set by the uncertainty (PDF width).

This paper presents a method to define such a distance for a PDF that changes continuously in time, as is often the case for non-equilibrium systems. Specifically, we invoke the information length $\mathcal{L}(t)$, given by the total number of statistically different states that a system evolves through in time. $\mathcal{L}(t)$ is uniquely defined as a function of time for a given initial condition. We demonstrate the utility of $\mathcal{L}(t)$ in understanding information change and attractor structure in classical and quantum systems [13,14,15,16,17,18,19,20,21].

2. Information Length

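Intuitively, we define the information length $\mathcal{L}$ by computing how quickly information changes in time and then measuring the clock time based on that time scale. Specifically, the time scale of information change can be computed from the correlation time $\tau(t)$ of a time-dependent PDF, say $p(x,t)$, as follows:

$$\mathcal{E} \equiv \frac{1}{\tau(t)^2} = \int dx\, \frac{1}{p(x,t)}\left[\frac{\partial p(x,t)}{\partial t}\right]^2. \tag{1}$$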

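From Equation (1), we can see that $\tau(t)$ has the dimension of time and serves as a dynamical time unit for information change. $\mathcal{L}(t)$ is the total information change between time 0 and t:

$$\mathcal{L}(t) = \int_0^t \frac{dt_1}{\tau(t_1)} = \int_0^t dt_1 \sqrt{\int dx\, \frac{1}{p(x,t_1)}\left[\frac{\partial p(x,t_1)}{\partial t_1}\right]^2}. \tag{2}$$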

In principle, $\tau$ in Equation (1) can depend on time, so we need the integral for $\mathcal{L}$ in Equation (2). To make an analogy, consider an oscillator with a period $\tau = 2$ s. Then, within the clock time 10 s, there are five oscillations. When the period changes with time, we need to integrate $dt/\tau(t)$ over the time interval.
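When $p(x,t)$ is only available on a grid, from simulations or data, Equations (1) and (2) translate directly into a numerical recipe. The sketch below is our own illustration, not code from [13,14,15,16]. It makes four simplifying choices:

- it assumes a uniform x grid;
- it approximates $\partial p/\partial t$ by finite differences;
- it skips cells where p is numerically zero;
- it integrates $1/\tau$ with the trapezoidal rule.

The function name `information_length` is hypothetical. As a check, it uses an Ornstein–Uhlenbeck PDF whose width stays fixed ($\beta_0 = \gamma/(2D)$, assuming the noise normalisation $\langle\xi(t)\xi(t')\rangle = 2D\delta(t-t')$). For that PDF only the mean moves, and $\mathcal{L}(t) = |x_0|\sqrt{\gamma/D}\,(1 - e^{-\gamma t})$.

```python
import numpy as np


def information_length(p, x, t):
    """Approximate L(t_k) from Eqs. (1)-(2) for p[k, i] = p(x_i, t_k) on a uniform x grid."""
    dx = x[1] - x[0]
    dpdt = np.gradient(p, t, axis=0)               # finite-difference dp/dt along the time axis
    safe_p = np.where(p > 1e-300, p, np.inf)       # cells with p ~ 0 contribute nothing
    E = np.sum(dpdt**2 / safe_p, axis=1) * dx      # Eq. (1): E = 1 / tau(t)^2
    rate = np.sqrt(E)                              # 1 / tau(t)
    increments = 0.5 * (rate[1:] + rate[:-1]) * np.diff(t)  # trapezoidal rule for Eq. (2)
    return np.concatenate(([0.0], np.cumsum(increments)))


if __name__ == "__main__":
    # O-U PDF with beta0 = gamma / (2D): constant width, mean x0 * exp(-gamma t)
    gamma, D, x0 = 1.0, 0.5, 1.0
    beta = gamma / (2 * D)
    x = np.linspace(-6.0, 6.0, 1201)
    t = np.linspace(0.0, 5.0, 1001)
    mean = x0 * np.exp(-gamma * t)
    p = np.sqrt(beta / np.pi) * np.exp(-beta * (x[None, :] - mean[:, None]) ** 2)
    L = information_length(p, x, t)
    exact = abs(x0) * np.sqrt(gamma / D) * (1 - np.exp(-gamma * t[-1]))
    print(L[-1], exact)
```

The same function accepts any gridded PDF, for example the output of a Fokker–Planck solver.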

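We now recall how $\tau$ and $\mathcal{L}$ in Equations (1) and (2) are related to the relative entropy (Kullback–Leibler divergence) [15,16]. We consider two nearby PDFs $p_1 = p(x,t_1)$ and $p_2 = p(x,t_2)$ at times $t_1$ and $t_2$, and take the limit of a very small $t_2 - t_1$ to Taylor expand $D[p_1,p_2] = \int dx\, p_2 \ln(p_2/p_1)$ by using

$$\partial_{t_1} D[p_1,p_2] = -\int dx\, \frac{p_2\, \partial_{t_1} p_1}{p_1}, \tag{3}$$

$$\partial_{t_1}^2 D[p_1,p_2] = \int dx\, p_2 \left[\frac{(\partial_{t_1} p_1)^2}{p_1^2} - \frac{\partial_{t_1}^2 p_1}{p_1}\right], \tag{4}$$

$$\partial_{t_2} D[p_1,p_2] = \int dx\, \partial_{t_2} p_2 \left[1 + \ln\frac{p_2}{p_1}\right], \tag{5}$$

$$\partial_{t_2}^2 D[p_1,p_2] = \int dx \left[\partial_{t_2}^2 p_2 \left(1 + \ln\frac{p_2}{p_1}\right) + \frac{(\partial_{t_2} p_2)^2}{p_2}\right]. \tag{6}$$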

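In the limit $t_2 \to t_1$ ($p_2 \to p_1$), Equations (3)–(6) give us

$$D[p_1,p_2] \to 0, \qquad \partial_{t_1} D = \partial_{t_2} D \to 0, \qquad \partial_{t_1}^2 D = \partial_{t_2}^2 D \to \int dx\, \frac{1}{p_1}\left(\frac{\partial p_1}{\partial t_1}\right)^2 = \frac{1}{\tau(t_1)^2}, \tag{7}$$

where the normalisation $\int dx\, p = 1$ has been used to drop $\int dx\, \partial_t p$ and $\int dx\, \partial_t^2 p$.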

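Up to $O((dt)^2)$, Equation (7) and $dt = t_2 - t_1$ lead to

$$D[p_1,p_2] = \frac{1}{2}\left[\int dx\, \frac{1}{p_1}\left(\frac{\partial p_1}{\partial t_1}\right)^2\right](dt)^2 = \frac{(dt)^2}{2\tau(t_1)^2}, \tag{8}$$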

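and thus the infinitesimal distance $dl(t_1)$ between $p_1$ and $p_2$ as

$$dl(t_1) = \sqrt{D[p_1,p_2]} = \frac{1}{\sqrt{2}}\,\frac{dt}{\tau(t_1)}. \tag{9}$$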

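By summing $dl(t_i)$ for $i = 0, 1, \ldots, n-1$ (where $t_i = i\,dt$ and $n = t/dt$) in the limit $dt \to 0$, we have

$$\lim_{dt\to 0}\sum_{i=0}^{n-1} dl(t_i) = \lim_{dt \to 0}\sum_{i=0}^{n-1}\sqrt{D[p(x,t_i), p(x,t_{i+1})]} = \frac{1}{\sqrt{2}}\int_0^t \frac{dt_1}{\tau(t_1)} = \frac{\mathcal{L}(t)}{\sqrt{2}}, \tag{10}$$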

where $\mathcal{L}(t)$ is the information length. Thus, $\mathcal{L}$ is related to the sum of the square roots of the infinitesimal relative entropies. It cannot be overemphasised that $\mathcal{L}$ is a Lagrangian distance between the PDFs at time 0 and t, and depends sensitively on the particular path that a system passes through to reach the final state. In contrast, the relative entropy $D[p(x,0), p(x,t)]$ depends only on the PDFs at time 0 and t, and thus does not tell us about intermediate states between the initial and final states.
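The relation between $\mathcal{L}$ and the relative entropy can be checked numerically for the Ornstein–Uhlenbeck process of Section 3.1, where the relative entropy between two Gaussians is known in closed form. This minimal sketch rests on two assumptions. The first is the noise normalisation $\langle\xi(t)\xi(t')\rangle = 2D\delta(t-t')$, for which $\beta(t) = \gamma/[2D + e^{-2\gamma t}(\gamma/\beta_0 - 2D)]$ and $\langle x\rangle = x_0 e^{-\gamma t}$. The second is that the PDF has the form $\sqrt{\beta/\pi}\,e^{-\beta(x-\langle x\rangle)^2}$.

The sketch compares two quantities:

- the sum of $\sqrt{D}$ over small time steps;
- $\mathcal{L}/\sqrt{2}$, obtained from the Gaussian form of $\mathcal{E}$, namely $\frac{1}{2}(d\ln\beta/dt)^2 + 2\beta(d\langle x\rangle/dt)^2$.

Parameter values and function names are illustrative.

```python
import numpy as np

GAMMA, D, X0, BETA0 = 1.0, 0.5, 1.0, 4.0   # illustrative parameters


def ou_state(t):
    """Mean <x(t)> and inverse temperature beta(t) of the O-U PDF (noise <xi xi> = 2D delta assumed)."""
    decay = np.exp(-2 * GAMMA * t)
    mean = X0 * np.exp(-GAMMA * t)
    beta = GAMMA / (2 * D + decay * (GAMMA / BETA0 - 2 * D))
    return mean, beta


def kl_gauss(m1, b1, m2, b2):
    """D[p1, p2] = int p2 ln(p2 / p1) dx for p_k = sqrt(b_k / pi) exp(-b_k (x - m_k)^2)."""
    v1, v2 = 0.5 / b1, 0.5 / b2                  # variances
    u = (v2 - v1) / v1
    # width part 0.5 * (u - ln(1 + u)), written with log1p to avoid cancellation for tiny steps
    return 0.5 * (u - np.log1p(u)) + (m2 - m1) ** 2 / (2 * v1)


def energy(t):
    """E = (d ln beta / dt)^2 / 2 + 2 beta (d<x>/dt)^2 for the Gaussian O-U PDF."""
    decay = np.exp(-2 * GAMMA * t)
    c = GAMMA / BETA0 - 2 * D
    mean, beta = ou_state(t)
    dlnbeta = 2 * GAMMA * c * decay / (2 * D + c * decay)
    return 0.5 * dlnbeta**2 + 2 * beta * (GAMMA * mean) ** 2


t = np.linspace(0.0, 8.0, 40001)
m, b = ou_state(t)
kl = np.maximum(kl_gauss(m[:-1], b[:-1], m[1:], b[1:]), 0.0)   # relative entropy between neighbours
sum_sqrt_kl = np.sqrt(kl).sum()                                 # left-hand side of Eq. (10)
rate = np.sqrt(energy(t))
L = np.sum(0.5 * (rate[1:] + rate[:-1]) * np.diff(t))           # Eq. (2), trapezoidal rule
print(sum_sqrt_kl, L / np.sqrt(2))
```

The two printed numbers should agree to within the $O(dt)$ corrections neglected in Equation (8). In contrast, a single relative entropy $D[p(x,0), p(x,t)]$ would not capture the path taken between the two endpoints.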

3. Attractor Structure

Since $\mathcal{L}(t)$ represents the accumulated change in information (due to the change in PDF) up to time t, $\mathcal{L}(t)$ settles to a constant value $\mathcal{L}_\infty$ when a PDF reaches its final equilibrium PDF. The smaller $\mathcal{L}_\infty$, the smaller the number of states that the initial PDF passes through to reach the final equilibrium. Therefore, $\mathcal{L}_\infty$ provides us with a unique representation of a path-dependent, Lagrangian measure of the distance between a given initial and final PDF. We will utilise this property to map out the attractor structure by considering a narrow initial PDF at different peak positions $x_0$ and measuring $\mathcal{L}_\infty$ against $x_0$. We are particularly interested in how the behaviour of $\mathcal{L}_\infty$ against $x_0$ depends on whether a system has a stable equilibrium point or is chaotic.

3.1. Linear vs. Cubic Forces

We first consider the case where a system has a stable equilibrium point in the absence of stochastic noise, and investigate how $\mathcal{L}_\infty$ is affected by different deterministic forces [15,16]. We consider the following Langevin equation [22] for a variable x:

$$\frac{dx}{dt} = F(x) + \xi. \tag{11}$$

Here, $\xi$ is a short (delta) correlated stochastic noise with the strength D as

$$\langle \xi(t)\,\xi(t')\rangle = 2D\,\delta(t - t'), \tag{12}$$

where the angular brackets denote the average over $\xi$ and $\langle \xi \rangle = 0$. We consider two types of F, both of which have a stable equilibrium point $x = 0$. The first is the linear force $F = -\gamma x$ ($\gamma$ is the frictional constant), which gives the familiar Ornstein–Uhlenbeck (O-U) process, a popular model for a noisy relaxation system (e.g., [23]). The second is the cubic force $F = -\mu x^3$, where $\mu$ represents the frictional constant. Note that, in these models, $\gamma$ (of dimension 1/time) and $\mu$ (of dimension 1/(length² × time)) have different dimensions.

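Equivalent to the Langevin equation governed by Equations (11) and (12) is the Fokker–Planck equation [22]

$$\frac{\partial p(x,t)}{\partial t} = -\frac{\partial}{\partial x}\left[F(x)\,p(x,t)\right] + D\,\frac{\partial^2 p(x,t)}{\partial x^2}. \tag{13}$$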

As an initial PDF, we consider a Gaussian PDF

$$p(x,0) = \sqrt{\frac{\beta_0}{\pi}}\, e^{-\beta_0 (x - x_0)^2}. \tag{14}$$

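Then, for the O-U process, the PDF remains Gaussian for all time with the following form [15,16]:

$$p(x,t) = \sqrt{\frac{\beta(t)}{\pi}}\, e^{-\beta(t)\,[x - \langle x(t)\rangle]^2}, \qquad \langle x(t)\rangle = x_0\, e^{-\gamma t}. \tag{15}$$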

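In Equations (14) and (15), $\langle x(t)\rangle$ is the mean position and $x_0$ is its initial value; $\beta_0$ is the inverse temperature at $t = 0$, which is related to the variance at $t = 0$ as $\langle (x - \langle x\rangle)^2\rangle = 1/(2\beta_0)$. The fluctuation level (variance) changes with time, with the time-dependent $\beta(t)$ given by

$$\beta(t) = \frac{\gamma}{2D + e^{-2\gamma t}\left(\gamma/\beta_0 - 2D\right)}. \tag{16}$$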

Note that, when $\beta_0 = \gamma/(2D)$, $\beta(t) = \beta_0$ for all t; that is, the PDF maintains the same width for all t.

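For this Gaussian process, $\beta$ and $\langle x\rangle$ constitute a parameter space on which the distance is defined with the Fisher metric tensor [3] $g_{ij}$ as [16]

$$ds^2 = \sum_{i,j} g_{ij}\, d\lambda^i\, d\lambda^j = \frac{(d\beta)^2}{2\beta^2} + 2\beta\,(d\langle x\rangle)^2, \tag{17}$$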

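where $(\lambda^1, \lambda^2) = (\beta, \langle x\rangle)$, $g_{11} = 1/(2\beta^2)$, $g_{22} = 2\beta$, and $g_{12} = g_{21} = 0$. This enables us to recast $\mathcal{E}$ in Equation (1) in terms of $\beta$ and $\langle x\rangle$ as

$$\mathcal{E} = \frac{1}{\tau^2} = \frac{1}{2\beta^2}\left(\frac{d\beta}{dt}\right)^2 + 2\beta\left(\frac{d\langle x\rangle}{dt}\right)^2 = \left(\frac{ds}{dt}\right)^2. \tag{18}$$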

The derivation of the first relation in Equation (18) is provided in Appendix A (see Equation (A2)). Using Equations (2) and (18), we can calculate $\mathcal{L}$ analytically for this O-U process (see also Appendix A).
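Equation (18) only needs $\beta(t)$ and $\langle x(t)\rangle$, so the dependence of $\mathcal{L}_\infty$ on the initial peak position $x_0$ can be scanned cheaply for the O-U process. This is the dependence discussed below with Figure 2. The following is a minimal sketch under stated assumptions:

- it again assumes $\langle\xi\xi\rangle = 2D\delta$, so that $\beta(t) = \gamma/[2D + e^{-2\gamma t}(\gamma/\beta_0 - 2D)]$;
- it uses a finite end time `t_end` as a stand-in for $t \to \infty$;
- all parameter values are illustrative;
- `ou_L_inf` is a hypothetical helper.

```python
import numpy as np


def ou_L_inf(x0, gamma=1.0, D=0.5, beta0=10.0, t_end=20.0, n=20001):
    """L(t_end), a proxy for L_inf, for the O-U process from Eqs. (2) and (18)."""
    t = np.linspace(0.0, t_end, n)
    decay = np.exp(-2 * gamma * t)
    c = gamma / beta0 - 2 * D
    beta = gamma / (2 * D + c * decay)                       # beta(t)
    dlnbeta = 2 * gamma * c * decay / (2 * D + c * decay)    # d ln(beta) / dt
    dmean = -gamma * x0 * np.exp(-gamma * t)                 # d<x>/dt with <x> = x0 exp(-gamma t)
    rate = np.sqrt(0.5 * dlnbeta**2 + 2 * beta * dmean**2)   # 1/tau from Eq. (18)
    return np.sum(0.5 * (rate[1:] + rate[:-1]) * np.diff(t)) # Eq. (2), trapezoidal rule


# Narrow initial PDF (beta0 = 10): L_inf grows with |x0| and approaches a straight line
for x0 in [0.0, 1.0, 2.0, 4.0, 8.0]:
    print(x0, ou_L_inf(x0))
```

For a narrow initial PDF, the width term gives an offset at $x_0 = 0$. The mean term dominates at large $|x_0|$, where $\mathcal{L}_\infty$ grows linearly.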

In comparison, for the cubic process, theoretical analysis can be done only in limiting cases such as small and large times [17,24]. In particular, the stationary PDF for large time is readily obtained as

$$p_s(x) = \frac{2\beta^{1/4}}{\Gamma(1/4)}\, e^{-\beta x^4}, \tag{19}$$

where $\beta = \mu/(4D)$. For the exact calculation of $\mathcal{L}$, Equation (13) must be solved numerically.
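One simple way to solve Equation (13) numerically for the cubic force is sketched below. It is not the scheme used in [16,17,24]. The scheme combines:

- a conservative finite-volume discretisation;
- first-order upwinding for the drift $F = -\mu x^3$;
- central differences for diffusion;
- zero-flux walls;
- explicit Euler time stepping.

At every step, $\mathcal{E}$ from Equation (1) is accumulated into $\mathcal{L}$ via Equation (2). The grid, time step and parameter values are illustrative assumptions, chosen to keep the explicit scheme stable and positivity-preserving. As a consistency check, the sketch compares the final state with the stationary solution $2\beta^{1/4}e^{-\beta x^4}/\Gamma(1/4)$, $\beta = \mu/(4D)$, which follows from Equation (13) with zero flux. The function names are hypothetical.

```python
import math

import numpy as np


def cubic_information_length(x0, mu=1.0, D=0.1, sigma0=0.1, t_end=15.0,
                             xmax=2.5, nx=251, dt=5e-4):
    """Solve Eq. (13) with F = -mu x^3 from a narrow Gaussian at x0; return x, final p and L(t)."""
    x = np.linspace(-xmax, xmax, nx)
    dx = x[1] - x[0]
    x_face = 0.5 * (x[1:] + x[:-1])
    F = -mu * x_face**3                              # cubic force at cell interfaces
    p = np.exp(-((x - x0) ** 2) / (2 * sigma0**2))
    p /= p.sum() * dx                                # normalise the initial PDF
    nsteps = int(round(t_end / dt))
    L = np.zeros(nsteps + 1)
    for k in range(nsteps):
        # probability flux: upwind drift + central diffusion; zero flux at both walls
        J = np.where(F > 0, F * p[:-1], F * p[1:]) - D * (p[1:] - p[:-1]) / dx
        J = np.concatenate(([0.0], J, [0.0]))
        dpdt = -(J[1:] - J[:-1]) / dx
        mask = p > 1e-300
        E = np.sum(dpdt[mask] ** 2 / p[mask]) * dx   # Eq. (1)
        L[k + 1] = L[k] + math.sqrt(E) * dt          # Eq. (2)
        p = p + dt * dpdt
    return x, p, L


def cubic_stationary(x, mu=1.0, D=0.1):
    """Stationary PDF for F = -mu x^3: 2 beta^(1/4) exp(-beta x^4) / Gamma(1/4), beta = mu / (4 D)."""
    beta = mu / (4 * D)
    return 2 * beta**0.25 / math.gamma(0.25) * np.exp(-beta * x**4)
```

To look for the power law discussed below, one option is to call `cubic_information_length(x0)` for a range of $x_0$ and plot `L[-1]` against $x_0$ on log-log axes. A finer grid is advisable for narrower initial PDFs, since upwinding adds numerical diffusion of order $|F|\,dx/2$.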

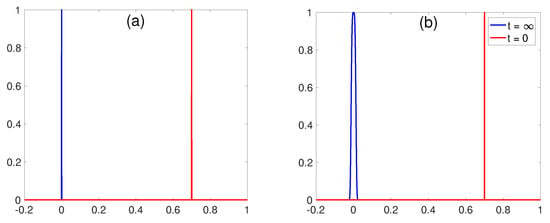

To summarise, due to the restoring force F, the equilibrium is given by a PDF around $x = 0$, Gaussian for the linear force and quartic exponential for the cubic force. If we pick any point in x, say $x_0$, we would like to know how close $x_0$ is to the equilibrium and how F affects this. To determine this, we make a narrow PDF around $x_0$ (see Figure 1) at $t = 0$ and measure $\mathcal{L}_\infty$. The question is how this $\mathcal{L}_\infty$ depends on $x_0$. We repeat the same procedure for the cubic process, as shown in Figure 1, and examine how $\mathcal{L}_\infty$ depends on $x_0$.

Figure 1.

Initial (red) and final (blue) Probability Density Functions (PDFs) for the O-U process in (a) and the cubic process in (b).

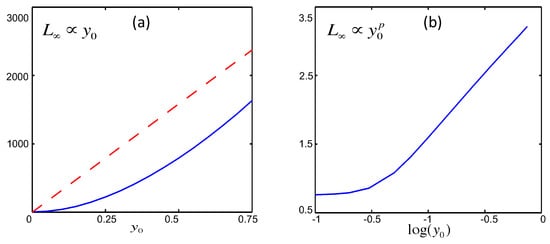

$\mathcal{L}_\infty$ as a function of $x_0$ is shown for both the linear (red dashed line) and cubic (blue solid line) processes in Figure 2. In the linear case, we can see a clear linear relation between $\mathcal{L}_\infty$ and $x_0$, meaning that the information length preserves the linearity of the system. This linear relationship holds for all D and $\beta_0$. In particular, when $\beta_0 = \gamma/(2D)$, we can show that $\mathcal{L}_\infty = |x_0|\sqrt{\gamma/D}$ by taking the limit $t \to \infty$ ($e^{-\gamma t} \to 0$) in Equation (A10).

Figure 2.

(a): $\mathcal{L}_\infty$ against $x_0$ for the linear process (red dashed line) and for the cubic process (blue solid line); (b): $\mathcal{L}_\infty$ against $x_0$ for the cubic process on a log-log scale (data from [17]).

In contrast, for the cubic process, the relation is not linear, and the log-log plot on the right in Figure 2 shows a power-law dependence $\mathcal{L}_\infty \propto x_0^p$ with the power-law index p. This power-law index p varies between 1.52 and 1.91 and depends on the width (set by $\beta_0$) of the initial PDF and the stochastic forcing amplitude D, as shown in [16]. This indicates that the nonlinear force breaks the linear scaling of the geometric structure and changes it to power-law scalings. In either case here, $\mathcal{L}_\infty$ varies smoothly with $x_0$, with its minimum value at $x_0 = 0$, since the equilibrium point 0 is stable. This will be compared with the behaviour in chaotic systems in Section 3.2.

3.2. Chaotic Attractor

Section 3.1 demonstrates that the minimum value of $\mathcal{L}_\infty$ occurs at a stable equilibrium point [15,16]. We now show that, in contrast, in the case of a chaotic attractor, the minimum value of $\mathcal{L}_\infty$ occurs at an unstable point [13]. To this end, we consider a chaotic attractor using a logistic map [13]. The latter is simply given by a rule for updating the value x at $t+1$ from its previous value at t as follows [25]:

$$x_{t+1} = a\,x_t\,(1 - x_t), \tag{20}$$

where $x_t \in [0,1]$ and a is a parameter that controls the stability of the system.

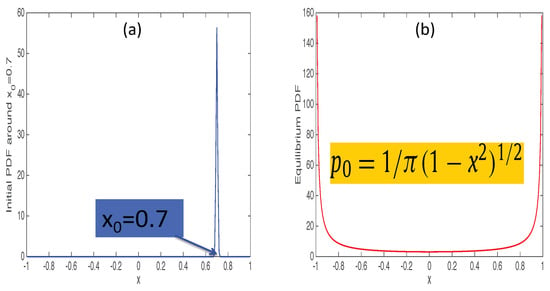

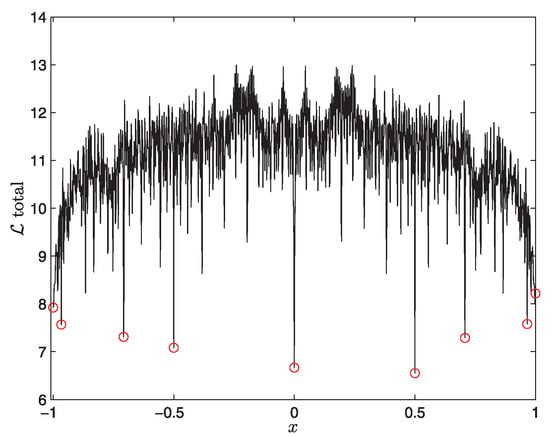

As we are interested in a chaotic attractor, we choose a value of a such that almost any initial value $x_0$ evolves to a chaotic attractor given by an invariant density (shown in the right panel of Figure 3). A key question is then whether all values of $x_0$ are similar, as they all evolve to the same invariant density in the long-time limit. To address how close a particular point $x_0$ is to equilibrium, we (i) consider a narrow initial PDF around $x_0$ at $t = 0$, (ii) evolve it until it reaches the equilibrium distribution, (iii) measure $\mathcal{L}_\infty$ between the initial and final PDFs, and (iv) repeat steps (i)–(iii) for many different values of $x_0$. For example, for one such $x_0$, the initial PDF is shown on the left and the final PDF on the right in Figure 3. We show $\mathcal{L}_\infty$ against $x_0$ in Figure 4. A striking feature of Figure 4 is an abrupt change in $\mathcal{L}_\infty$ for a small change in $x_0$. This means that the distance between $x_0$ and the final chaotic attractor depends sensitively on $x_0$: a small change in the initial condition causes a large difference in the path that a system evolves through, and thus in $\mathcal{L}_\infty$. This is a good illustration of a chaotic equilibrium and is quite similar to the sensitive dependence on initial conditions quantified by the Lyapunov exponent [25]. That is, $\mathcal{L}_\infty$ provides a new methodology to test for chaos. Another interesting feature of Figure 4 is the set of points with small values of $\mathcal{L}_\infty$, shown by red circles. In particular, the smallest value of $\mathcal{L}_\infty$ occurs at an unstable point, indicating that this unstable point is closest to the chaotic attractor. That is, an unstable point is most similar to the chaotic attractor and thus minimises $\mathcal{L}_\infty$.
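Steps (i)–(iv) can be sketched numerically. The sketch below is our own illustration, not the method of [13]. Rather than following individual trajectories, it propagates the PDF with a finite-bin approximation of the Perron–Frobenius (transfer) operator of Equation (20), known as Ulam's method. The PDF then relaxes smoothly to the discretised invariant density, without sampling noise.

The sketch makes these assumptions, all illustrative:

- each discrete step has length $dl = 2[\sum_i(\sqrt{p_{t+1,i}} - \sqrt{p_{t,i}})^2]^{1/2}$, which reduces to $dt/\tau$ from Equation (1) when consecutive PDFs are close;
- $a = 4$;
- the bin count, initial width and number of steps are arbitrary choices.

Because the bins smooth the dynamics, fine-scale structure in $\mathcal{L}_\infty(x_0)$ such as that in Figure 4 may be resolved only partially. The function names are hypothetical.

```python
import numpy as np


def ulam_matrix(a=4.0, n_bins=400, pts_per_bin=100):
    """Column-stochastic P[j, i] ~ Prob(bin i -> bin j) under x -> a x (1 - x) (Ulam's method)."""
    offsets = (np.arange(pts_per_bin) + 0.5) / pts_per_bin
    x = (np.arange(n_bins)[:, None] + offsets[None, :]) / n_bins   # evenly spaced points in each bin
    y = a * x * (1.0 - x)                                          # Eq. (20)
    src = np.repeat(np.arange(n_bins), pts_per_bin)
    dst = np.minimum((y.ravel() * n_bins).astype(int), n_bins - 1)
    counts = np.bincount(dst * n_bins + src, minlength=n_bins * n_bins)
    return counts.reshape(n_bins, n_bins) / pts_per_bin


def logistic_information_length(x0, P, width=0.005, n_steps=200):
    """Propagate a narrow PDF centred at x0 with P and accumulate the assumed discrete step length."""
    n = P.shape[0]
    centres = (np.arange(n) + 0.5) / n
    p = np.exp(-0.5 * ((centres - x0) / width) ** 2)
    p /= p.sum()                                    # probability per bin
    L = np.zeros(n_steps + 1)
    for k in range(n_steps):
        p_next = P @ p
        L[k + 1] = L[k] + 2.0 * np.sqrt(np.sum((np.sqrt(p_next) - np.sqrt(p)) ** 2))
        p = p_next
    return L, p


if __name__ == "__main__":
    P = ulam_matrix()
    for x0 in np.linspace(0.1, 0.9, 9):
        L, _ = logistic_information_length(x0, P)
        print(f"x0 = {x0:.2f}   L_inf ~ {L[-1]:.3f}")
```

The sketch builds the transfer matrix once and reuses it for every $x_0$, so scanning a fine grid of initial positions only costs matrix-vector products.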

Figure 3.

(a): an initial narrow PDF with its peak at $x_0$; (b): the invariant density of the logistic map.

Figure 4.

$\mathcal{L}_\infty$ against the peak position $x_0$ of an initial PDF in the chaotic regime of a logistic map (Reprinted from Physics Letters A, 379, S.B. Nicholson & E. Kim, Investigation of the statistical distance to reach stationary distributions, 83-88, Copyright (2015), with permission from Elsevier).

4. Music: Can We See the Music?

Our methodology is not system-specific and is applicable to any stochastic process. In particular, given any time-dependent PDFs computed from a theory, simulations or data, we can compute $\mathcal{L}$ to understand information change. As an example, we apply our theory to music data and discuss information change associated with different pieces of classical music. In particular, we are interested in understanding differences among famous classical pieces in view of information change. To gain insight, we used MIDI files [26] to compute time-dependent PDFs and the information length as a function of time [14].

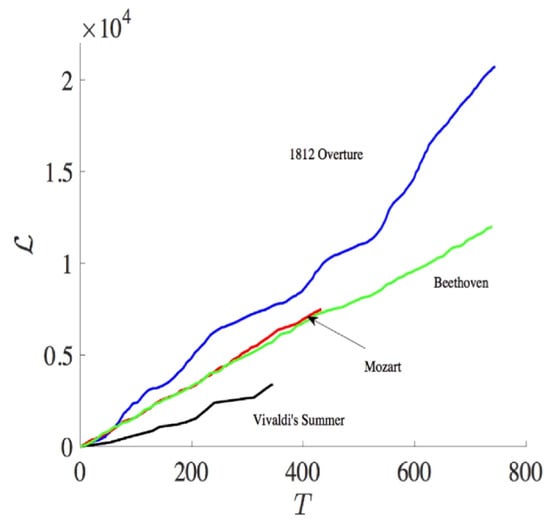

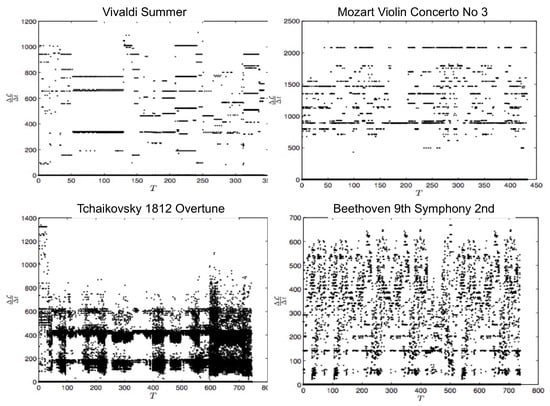

Specifically, a MIDI file stores music by MIDI number according to 12 different musical notes (C, C♯, D, D♯, E, F, F♯, G, G♯, A, A♯, B) and 11 different octaves, with a short typical time between two adjacent notes. In order to construct a PDF, we specify 129 statistically different states according to the MIDI number and one extra rest state (see Table 1 in [14]). We then calculate an instantaneous PDF (see Figure S1 in [14]) from orchestral music by measuring the frequency (the total number of times) that a particular state is played by all instruments at a given time. Thus, the time-dependent PDFs are defined at discrete time steps, and the discrete version of $\mathcal{L}$ (Equation (7) in [14]) is used in numerical computation. Figure 5 shows $\mathcal{L}$ against time for Vivaldi's Summer, Mozart, Tchaikovsky's 1812 Overture, and Beethoven's Ninth Symphony (2nd movement). We observe differences among composers; in particular, the more classical the music, the more subtle the information change. We then look at the rate of information change against time for the different pieces by calculating the gradient of $\mathcal{L}$ ($d\mathcal{L}/dT$) in Figure 6, which also shows the most subtle change in information length for Vivaldi and Mozart.
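The construction of the instantaneous PDF and of a discrete $\mathcal{L}$ can be sketched as follows. Several pieces of this sketch are assumptions made for illustration:

- **Input format:** a list of frames, each holding the MIDI numbers sounding at that time step. Parsing an actual MIDI file is not shown.
- **State layout:** MIDI numbers 0–127 plus one rest state, 129 states in total.
- **Time step:** one frame.
- **Step length:** each step contributes $2[\sum_i(\sqrt{p_{t+1,i}} - \sqrt{p_{t,i}})^2]^{1/2}$, which approaches $dt/\tau$ for small changes. This form may differ from the discrete formula (Equation (7) in [14]) used in the original analysis.

The names `frame_pdf`, `music_information_length` and `REST` are hypothetical.

```python
import numpy as np

N_STATES = 129   # assumed layout: MIDI numbers 0-127 plus one rest state
REST = 128       # hypothetical index of the rest state


def frame_pdf(notes):
    """Instantaneous PDF: relative frequency of each state among the notes played by all instruments."""
    counts = np.zeros(N_STATES)
    if len(notes) == 0:
        counts[REST] = 1.0                      # nothing sounding: the whole frame is a rest
    else:
        np.add.at(counts, np.asarray(notes, dtype=int), 1.0)
    return counts / counts.sum()


def music_information_length(frames):
    """Cumulative information length over discrete frames (time step taken as one frame)."""
    pdfs = np.array([frame_pdf(f) for f in frames])
    root = np.sqrt(pdfs)
    steps = 2.0 * np.sqrt(np.sum((root[1:] - root[:-1]) ** 2, axis=1))
    return np.concatenate(([0.0], np.cumsum(steps)))


frames = [[60, 64, 67], [60, 64, 67], [62, 65, 69], []]   # toy example: C major, C major, D minor, rest
L = music_information_length(frames)
rate = np.gradient(L)                                       # discrete analogue of dL/dT
print(L)
```

With this step definition, two consecutive frames with no notes in common give the largest possible step, $2\sqrt{2}$. Abrupt changes of harmony therefore show up as steep segments of $\mathcal{L}(T)$.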

Figure 5.

$\mathcal{L}$ against time T for different composers (from [14]).

Figure 6.

$d\mathcal{L}/dT$ for different composers shown in Figure 5 (from [14]).

5. Quantum Systems

Finally, we examine quantum effects on information length [21]. In Quantum Mechanics (QM), the uncertainty relation between position x and momentum P gives us an effect quite similar to stochastic noise. We note here that we use P to denote the momentum to distinguish it from a PDF ($p$). For instance, the trajectory of a particle in phase space is random and not smooth. Furthermore, the phase volume h plays the role of resolution in phase space, with one unit of information given by the phase volume h. Thus, the total number of states is given by the total phase volume divided by h. This observation points to a potentially different role of the width of a PDF in QM compared with classical systems, since a wider PDF in QM occupies a larger region of x in phase space, with the possibility of increasing the information.

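To investigate this, for simplicity, we consider a particle of mass m under a constant force F and assume an initial Gaussian wave function around $x = 0$ [21]

$$\psi(x,0) = \left(\frac{1}{2\pi\sigma_0^2}\right)^{1/4} \exp\left(-\frac{x^2}{4\sigma_0^2} + i k_0 x\right), \tag{21}$$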

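where $k_0$ is the wave number at $t = 0$, $\sigma_0$ is the width of the initial wave function, and $P_0 = \hbar k_0$ is the initial momentum. A time-dependent PDF $p(x,t) = |\psi(x,t)|^2$ is then found as (e.g., see [21,27]):

$$p(x,t) = \sqrt{\frac{\beta(t)}{\pi}}\, e^{-\beta(t)\,[x - \langle x(t)\rangle]^2}. \tag{22}$$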

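Here,

$$\langle x(t)\rangle = \frac{P_0\, t}{m} + \frac{F t^2}{2m}, \qquad \beta(t) = \frac{1}{2\sigma_0^2}\left[1 + \frac{\hbar^2 t^2}{4 m^2 \sigma_0^4}\right]^{-1}. \tag{23}$$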

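Equation (22) clearly shows that the PDF is Gaussian, with the mean $\langle x(t)\rangle$ and the variance

$$\langle [x - \langle x\rangle]^2 \rangle = \frac{1}{2\beta(t)} = \sigma_0^2 + \frac{\hbar^2 t^2}{4 m^2 \sigma_0^2}. \tag{24}$$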

In Equation (24), $\sigma_0^2$ is the initial variance. We note that the last term in Equation (24) increases quadratically with time t due to the quantum effect, the width of the wave function becoming larger over time. Obviously, this effect vanishes as $\hbar \to 0$.

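Since the PDF in Equation (22) is Gaussian, we can use Equation (18) to find (e.g., see [16])

$$\mathcal{E} = \frac{1}{\tau^2} = \frac{2t^2}{(T^2 + t^2)^2} + \frac{(P_0 + F t)^2\, T^2}{m^2 \sigma_0^2\, (T^2 + t^2)}, \tag{25}$$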

where $T = 2m\sigma_0^2/\hbar$ is the time scale of the broadening of the initial wave function [21]. It is interesting to note that, when there is no external force ($F = 0$), both terms in Equation (25) decrease for large time t, making $\tau$ large. The situation changes dramatically in the presence of F, as the second term in Equation (25) approaches a constant value for large time. A region with a constant value of $\tau$ signifies that the rate of change in information is constant in time; such a geodesic was argued to be an optimal path that minimises irreversible dissipation (e.g., [16]). Physically, this geodesic arises when the broadening of a PDF is compensated by the momentum, which increases with time. Mathematically, the limit $t \to \infty$ reduces Equation (25), and thus $\mathcal{L}$, to

$$\mathcal{E} \to \left(\frac{2F\sigma_0}{\hbar}\right)^2, \qquad \mathcal{L}(t) \simeq \frac{2|F|\,\sigma_0\, t}{\hbar}. \tag{26}$$

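Since $|F|t$ is the momentum gained by the particle by time t and $\sigma_0$ is the width of the wave function at $t = 0$, the product $|F|t\,\sigma_0$ in Equation (26) represents the volume in phase space spanned by this wave function. This reflects the information change associated with the coverage of phase volume in units of $\hbar$. Interestingly, similar results are also obtained in the momentum representation, where $\mathcal{L}$ is computed from the PDF in momentum space:

$$\mathcal{E} = 2\beta_P F^2, \qquad \mathcal{L}(t) = \sqrt{2\beta_P}\,|F|\, t, \tag{27}$$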

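where $\beta_P = 1/(2\sigma_P^2)$ and $\sigma_P^2$ is the (time-independent) variance of the momentum PDF. In Equation (27), $\mathcal{E}$ is obviously constant, and $\mathcal{L}$ increases linearly with time t. Equations (26) and (27) in fact coincide as $t \to \infty$ once we use $\sigma_P = \hbar/(2\sigma_0)$, the momentum width of the minimum-uncertainty initial state. In view of the complementary relation between position and momentum in quantum systems, the similar result for $\mathcal{L}$ in momentum and position space highlights the robustness of the geodesic.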
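The position-space expressions can be cross-checked numerically. The sketch below uses illustrative units $\hbar = m = 1$ and assumes a Gaussian PDF with the following moments:

- mean $\langle x(t)\rangle = P_0 t/m + F t^2/(2m)$;
- variance $\sigma_0^2 + \hbar^2 t^2/(4m^2\sigma_0^2)$.

The sketch evaluates $\mathcal{E}$ from the Gaussian form $\frac{1}{2\beta^2}(d\beta/dt)^2 + 2\beta(d\langle x\rangle/dt)^2$ with finite differences. It compares the result with the closed form $2t^2/(T^2+t^2)^2 + (P_0+Ft)^2T^2/[m^2\sigma_0^2(T^2+t^2)]$, where $T = 2m\sigma_0^2/\hbar$, and with the large-time value $(2F\sigma_0/\hbar)^2$. The large-time value also equals $2\beta_P F^2$ for $\beta_P = 2\sigma_0^2/\hbar^2$. Function names and parameter values are hypothetical.

```python
import numpy as np

HBAR, M = 1.0, 1.0   # illustrative units


def gaussian_moments(t, sigma0, P0, F):
    """Mean and inverse temperature beta = 1/(2 variance) of |psi(x, t)|^2 under a constant force F."""
    mean = P0 * t / M + F * t**2 / (2 * M)
    var = sigma0**2 + (HBAR * t) ** 2 / (4 * M**2 * sigma0**2)   # quantum broadening of the packet
    return mean, 1.0 / (2.0 * var)


def E_numerical(t, sigma0, P0, F):
    """E = (d beta/dt)^2 / (2 beta^2) + 2 beta (d<x>/dt)^2 with finite-difference derivatives."""
    mean, beta = gaussian_moments(t, sigma0, P0, F)
    dbeta, dmean = np.gradient(beta, t), np.gradient(mean, t)
    return dbeta**2 / (2 * beta**2) + 2 * beta * dmean**2


def E_closed_form(t, sigma0, P0, F):
    """Closed form with T = 2 m sigma0^2 / hbar, the broadening time of the wave packet."""
    T = 2 * M * sigma0**2 / HBAR
    return 2 * t**2 / (T**2 + t**2) ** 2 + (P0 + F * t) ** 2 * T**2 / (M**2 * sigma0**2 * (T**2 + t**2))


t = np.linspace(0.0, 200.0, 200001)
sigma0, P0, F = 1.0, 0.0, 0.5
E_num, E_cf = E_numerical(t, sigma0, P0, F), E_closed_form(t, sigma0, P0, F)
limit = (2 * F * sigma0 / HBAR) ** 2              # large-time value; equals 2 * beta_P * F**2
print(E_cf[-1], limit)
```

Setting `F = 0.0` instead shows both terms decaying at large t, the case in which $\tau$ grows without bound.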

6. Conclusions

We investigated information geometry associated with stochastic processes in classical and quantum systems. Specifically, we introduced $\tau$ as a dynamical time scale quantifying information change and calculated $\mathcal{L}$ by measuring the total clock time t in units of $\tau$. As a unique Lagrangian measure of information change, $\mathcal{L}_\infty$ was demonstrated to be a novel diagnostic for mapping out an attractor structure. In particular, $\mathcal{L}_\infty$ was shown to capture the effect of different deterministic forces through the scaling of $\mathcal{L}_\infty$ against the peak position $x_0$ of a narrow initial PDF. For a stable equilibrium, the minimum value of $\mathcal{L}_\infty$ occurs at the equilibrium point. In comparison, in the case of a chaotic attractor, $\mathcal{L}_\infty$ exhibits a sensitive dependence on initial conditions, like a Lyapunov exponent, and its minimum value occurs at an unstable point. We then showed the application of our method to characterise the information change associated with classical music (e.g., see [14]). Finally, we elucidated the effect of the width of a PDF on information length in quantum systems. Extension of this work to impure (mixed-state) quantum systems and investigation of Riemannian geometry on the space of density operators would be of particular interest for future work.

Funding

This research received no external funding.

Acknowledgments

This paper is a review and summary of work carried out over several years with numerous co-authors. Among these, I am particularly grateful to Rainer Hollerbach, Schuyler Nicholson and James Heseltine for their contributions and valuable discussions regarding different aspects of information length.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. $\mathcal{L}$ for the O-U Process

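To make this paper self-contained, we provide here the main steps for the derivation of $\mathcal{L}$ for the O-U process [15,16]. We use $p(x,t)$ in Equation (15) and differentiate it with respect to time to find

$$\frac{\partial p}{\partial t} = p\left[\frac{1}{2\beta}\frac{d\beta}{dt} - (x - \langle x\rangle)^2\,\frac{d\beta}{dt} + 2\beta\,(x - \langle x\rangle)\frac{d\langle x\rangle}{dt}\right]. \tag{A1}$$

Substituting Equation (A1) into Equation (1) and using the Gaussian moments $\langle (x-\langle x\rangle)^2\rangle = 1/(2\beta)$ and $\langle (x-\langle x\rangle)^4\rangle = 3/(4\beta^2)$ gives

$$\mathcal{E} = \int dx\, \frac{1}{p}\left(\frac{\partial p}{\partial t}\right)^2 = \frac{1}{2\beta^2}\left(\frac{d\beta}{dt}\right)^2 + 2\beta\left(\frac{d\langle x\rangle}{dt}\right)^2. \tag{A2}$$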

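We express $\beta$ in Equation (16) in terms of $\beta_\infty = \gamma/(2D)$ and $r = \beta_\infty/\beta_0 - 1$ as

$$\beta(t) = \frac{\beta_\infty}{1 + r\,e^{-2\gamma t}}. \tag{A3}$$

Differentiating this and using Equation (A3) once more then gives

$$\frac{1}{2\beta^2}\left(\frac{d\beta}{dt}\right)^2 = \frac{2\gamma^2 r^2 e^{-4\gamma t}}{(1 + r e^{-2\gamma t})^2}. \tag{A4}$$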

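Similarly, using $\langle x\rangle = x_0 e^{-\gamma t}$, $d\langle x\rangle/dt = -\gamma\langle x\rangle$, and Equation (A3), we obtain

$$\mathcal{E} = 2\gamma^2\left[\frac{r^2 T^2}{(1 + rT)^2} + \frac{qT}{1 + rT}\right]. \tag{A5}$$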

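Again, in Equation (A5), $r = \gamma/(2D\beta_0) - 1$, $q = \gamma x_0^2/(2D)$, and $T = e^{-2\gamma t}$ [15,16,17]. It is worth noting that q and r, respectively, arise from the difference in mean position at $t = 0$ and $t \to \infty$ (i.e., $x_0 - 0 = x_0$) and from the difference in PDF width at $t = 0$ and $t \to \infty$ (i.e., $r = \beta_\infty/\beta_0 - 1$, the relative difference between the initial and final variances). Thus, the first and second terms in Equation (A5) represent the information change due to the change in PDF width and the movement of the PDF, respectively. Equations (A5) and (2) then give us

$$\mathcal{L}(t) = \sqrt{2}\,\gamma\int_0^t dt_1\, \frac{\sqrt{r^2 T_1^2 + q T_1 (1 + r T_1)}}{1 + r T_1}, \tag{A6}$$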

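where $T_1 = e^{-2\gamma t_1}$. To compute Equation (A6) for $r \neq 0$, we use $dT_1 = -2\gamma T_1\, dt_1$ and integrate

$$\mathcal{L}(t) = \frac{1}{\sqrt{2}}\int_{T}^{1} dy\, \frac{\sqrt{a + q/y}}{1 + r y} \equiv \frac{H}{\sqrt{2}}, \tag{A7}$$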

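where $a = r(r+q)$ and $T = e^{-2\gamma t}$. To calculate H in Equation (A7), we need to consider the two cases where $a > 0$ or $a < 0$. In both cases, we use the change of variable $s = \sqrt{a + q/y}$, for which $1 + ry = (s^2 - r^2)/(s^2 - a)$ and

$$H = \frac{2}{r}\int_{s_0}^{s_T} ds\left[\frac{a}{s^2 - a} - \frac{r^2}{s^2 - r^2}\right],$$

with $s_0 = \sqrt{a + q}$ and $s_T = \sqrt{a + q/T}$. First, when $a > 0$, we find

$$H = \frac{1}{r}\left[\sqrt{a}\,\ln\frac{s - \sqrt{a}}{s + \sqrt{a}} - |r|\,\ln\frac{s - |r|}{s + |r|}\right]_{s_0}^{s_T}. \tag{A8}$$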

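When $a < 0$, we let $a = -b^2$ ($b > 0$) and find

$$H = \frac{1}{r}\left[-2b\,\arctan\frac{s}{b} - |r|\,\ln\frac{s - |r|}{s + |r|}\right]_{s_0}^{s_T}. \tag{A9}$$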

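When $r = 0$ ($\beta_0 = \gamma/(2D)$), $\beta(t) = \beta_0$ for all t. Thus, Equation (2) can easily be calculated directly from Equation (A5), with the result

$$\mathcal{L}(t) = \sqrt{2q}\,\left(1 - e^{-\gamma t}\right) = |x_0|\sqrt{\frac{\gamma}{D}}\left(1 - e^{-\gamma t}\right), \tag{A10}$$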

where again $q = \gamma x_0^2/(2D)$.

References

1. Gibbs, A.L.; Su, F.E. On choosing and bounding probability metrics. Int. Stat. Rev. 2002, 70, 419–435.

2. Wootters, W.K. Statistical distance and Hilbert space. Phys. Rev. D 1981, 23, 357.

3. Frieden, B.R. Science from Fisher Information; Cambridge University Press: Cambridge, UK, 2000.

4. Braunstein, S.L.; Caves, C.M. Statistical distance and the geometry of quantum states. Phys. Rev. Lett. 1994, 72, 3439.

5. Feng, E.H.; Crooks, G.E. Far-from-equilibrium measurements of thermodynamic length. Phys. Rev. E 2009, 79, 012104.

6. Ruppeiner, G. Thermodynamics: A Riemannian geometric model. Phys. Rev. A 1979, 20, 1608–1613.

7. Schlögl, F. Thermodynamic metric and stochastic measures. Z. Phys. B Condens. Matter 1985, 59, 449–454.

8. Nulton, J.; Salamon, P.; Andresen, B.; Anmin, Q. Quasistatic processes as step equilibrations. J. Chem. Phys. 1985, 83, 334–338.

9. Sivak, D.A.; Crooks, G.E. Thermodynamic metrics and optimal paths. Phys. Rev. Lett. 2012, 108, 190602.

10. Plastino, A.R.; Casas, M.; Plastino, A. Fisher's information, Kullback's measure, and H-theorems. Phys. Lett. A 1998, 246, 498–504.

11. Polettini, M.; Esposito, M. Nonconvexity of the relative entropy for Markov dynamics: A Fisher information approach. Phys. Rev. E 2013, 88, 012112.

12. Naudts, J. Quantum statistical manifolds. Entropy 2018, 20, 472.

13. Nicholson, S.B.; Kim, E. Investigation of the statistical distance to reach stationary distributions. Phys. Lett. A 2015, 379, 83–88.

14. Nicholson, S.B.; Kim, E. Structures in sound: Analysis of classical music using the information length. Entropy 2016, 18, 258.

15. Heseltine, J.; Kim, E. Novel mapping in non-equilibrium stochastic processes. J. Phys. A Math. Theor. 2016, 49, 175002.

16. Kim, E.; Lee, U.; Heseltine, J.; Hollerbach, R. Geometric structure and geodesic in a solvable model of nonequilibrium process. Phys. Rev. E 2016, 93, 062127.

17. Kim, E.; Hollerbach, R. Signature of nonlinear damping in geometric structure of a nonequilibrium process. Phys. Rev. E 2017, 95, 022137.

18. Hollerbach, R.; Kim, E. Information geometry of non-equilibrium processes in a bistable system with a cubic damping. Entropy 2017, 19, 268.

19. Kim, E.; Tenkès, L.M.; Hollerbach, R.; Radulescu, O. Far-from-equilibrium time evolution between two gamma distributions. Entropy 2017, 19, 511.

20. Tenkès, L.M.; Hollerbach, R.; Kim, E. Time-dependent probability density functions and information geometry in stochastic logistic and Gompertz models. J. Stat. Mech. Theory Exp. 2017, 2017, 123201.

21. Kim, E.; Lewis, P. Information length in quantum system. J. Stat. Mech. Theory Exp. 2018, 2018, 043106.

22. Risken, H. The Fokker–Planck Equation: Methods of Solutions and Applications; Springer: Berlin, Germany, 2013.

23. Klebaner, F. Introduction to Stochastic Calculus with Applications; Imperial College Press: London, UK, 2012.

24. Kim, E.; Hollerbach, R. Time-dependent probability density function in cubic stochastic processes. Phys. Rev. E 2016, 94, 052118.

25. Ott, E. Chaos in Dynamical Systems; Cambridge University Press: Cambridge, UK, 2002.

26. Kern Scores. Available online: http://kernscores.stanford.edu/ (accessed on 12 July 2016).

27. Andrews, M. Quantum mechanics with uniform forces. Am. J. Phys. 2010, 78, 1361–1364.

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).