Interfering Relay Channels

Abstract

:1. Introduction

1.1. Related Work

1.2. Summary of Results and Contributions

- We propose an inner bound for the capacity region of the discrete memoryless IRC. The coding technique is based on rate splitting at the transmitters and quantize-bin-forward [10] (Note that in the literature of relay channels, the term binning and hashing often have the same meaning, see, e.g., [19]) or extended hash-forward [20].

- We characterize the capacity region of a class of discrete memoryless channels, namely the semi-deterministic IRC which includes several known interference and relay channels as special cases.

- We derive an outer bound for the Gaussian IRC and use it to show constant-gaps to the capacity region or to the sum capacity in multiple scenarios: When the interference is strong, we characterize the capacity region of the real-valued Gaussian IRC to within bit. For the Gaussian IRC with high-capacity relay-receiver links, we characterize the capacity region to within bits. For other regimes of channel parameters, we show that the inner bound and outer bound are within a bounded gap as long as the interference that each transmitter induces at the neighboring relay is not unboundedly stronger than the interference induced at the neighboring receiver. Moreover, for the Gaussian IRC in the so called weak interference-relay regime, we characterize the sum capacity to within 1 bit.

- We also study a closely related channel model, the compound multiple access relay channel. We characterize capacity region of a class of the semi-deterministic compound multiple access relay channel and the capacity region of a class of Gaussian compound multiple access relay channels to within bit.

1.3. Organization

1.4. Notation and Definition

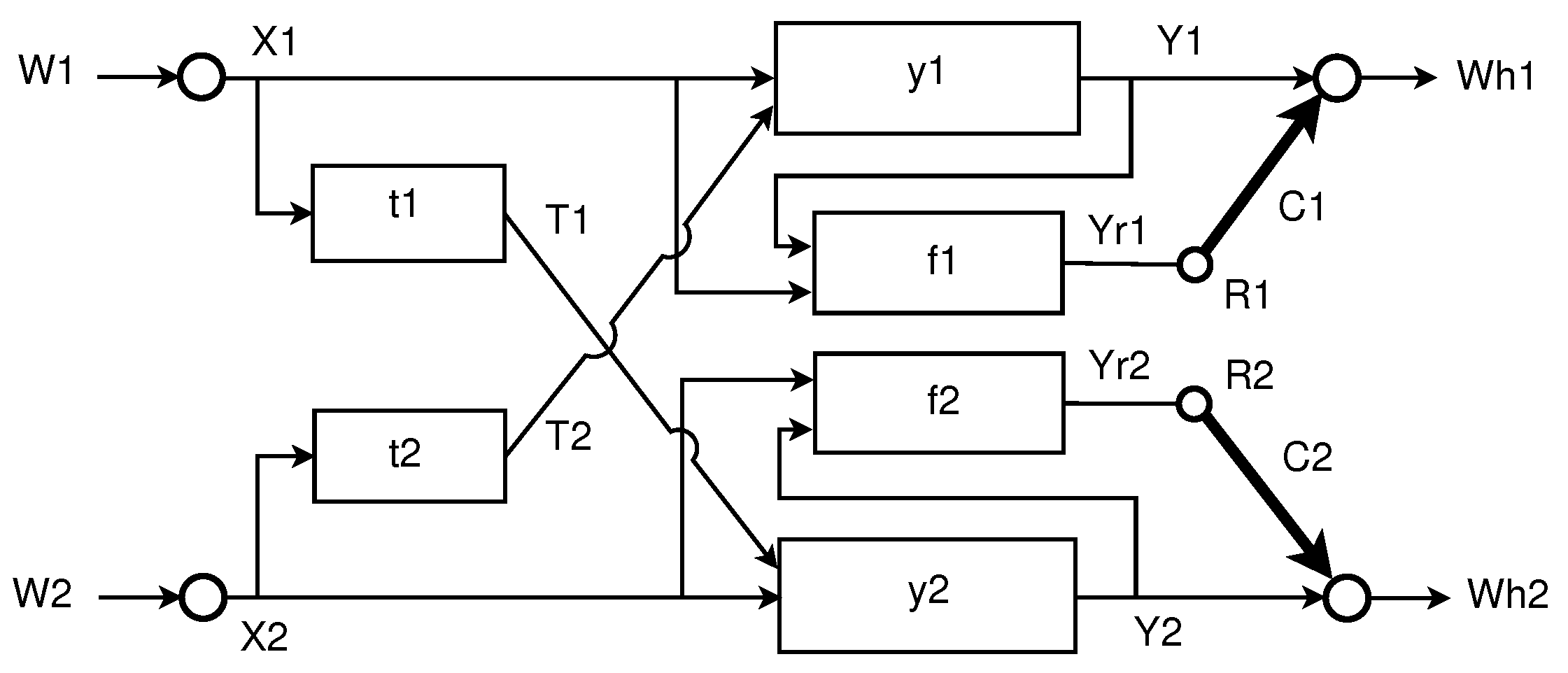

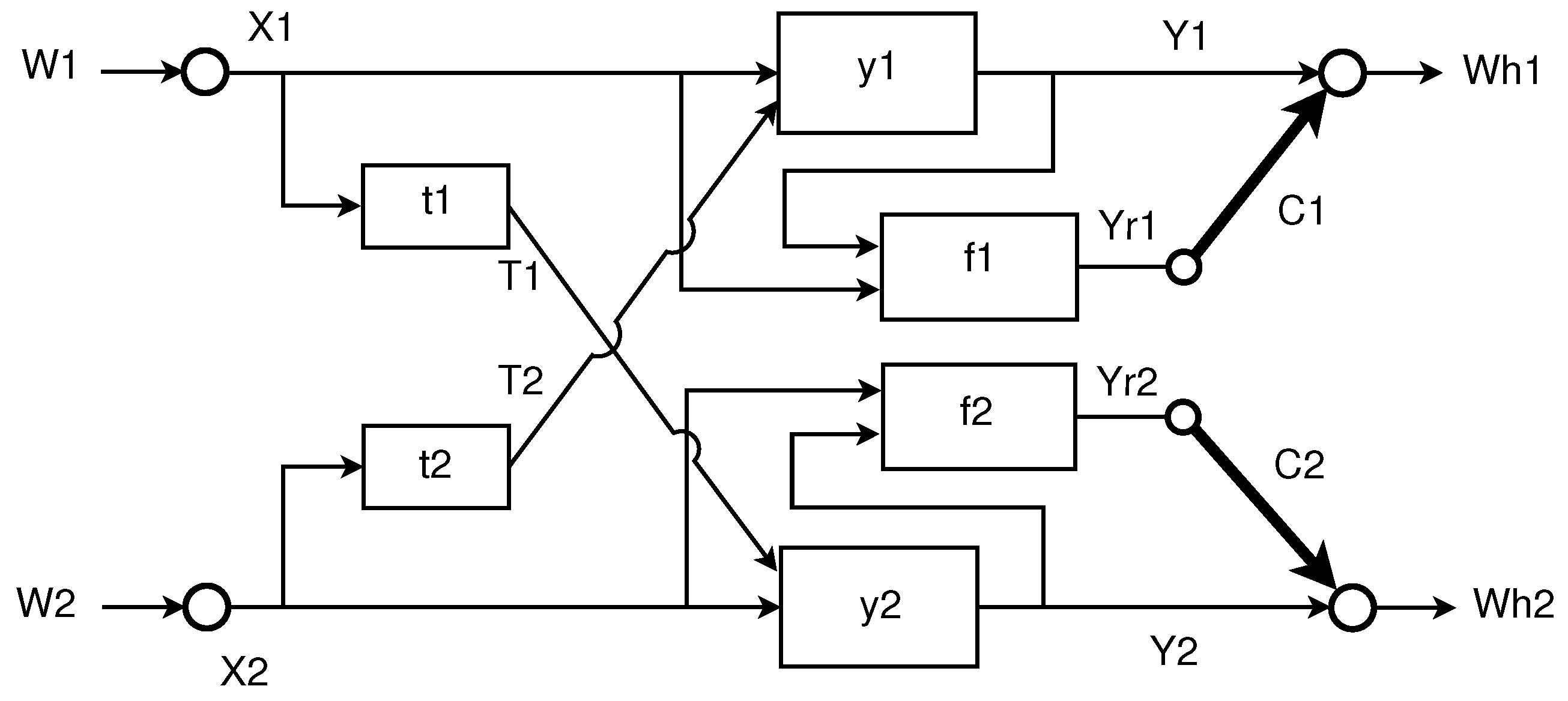

2. Discrete Memoryless IRC and A General Achievability

2.1. Problem Formulation

- a message set ;

- an encoding function that assigns a sequence to each message ;

- a relaying function that maps to a symbol for all , where denotes the received sequence at the relay k up to and including the th symbol and denotes the ith symbol sent via the digital link of capacity ;

- a decoding function that produces a guess from the received sequence and an index from the set .

2.2. An Achievable Rate Region for the Discrete Memoryless IRC

3. Capacity Region of Some Classes of Discrete Memoryless IRCs

3.1. Semi-Deterministic IRC

- : There exist deterministic functions , , , (not necessary invertible) such that:

- –

- : , ,

- –

- : , .

- : depends only on and , via the conditional distribution . Similarly for and .

- : For all positive integers N and all distributions of the form , the following conditions hold:

3.1.1. Semi-Deterministic IRC with Strong Interference

- and for some functions and ,

- and for all product distributions on (Note that due to we can rewrite as and .).

3.1.2. Deterministic IRC

- : There exist deterministic functions , , , (not necessary invertible) such that:

- –

- : , ,

- –

- : , .

- : depends only on and via deterministic function . Similarly, . Moreover there exist functions and such that and . In other words, function is invertible given ,

3.2. Compound Semi-Deterministic Multiple Access Relay Channel

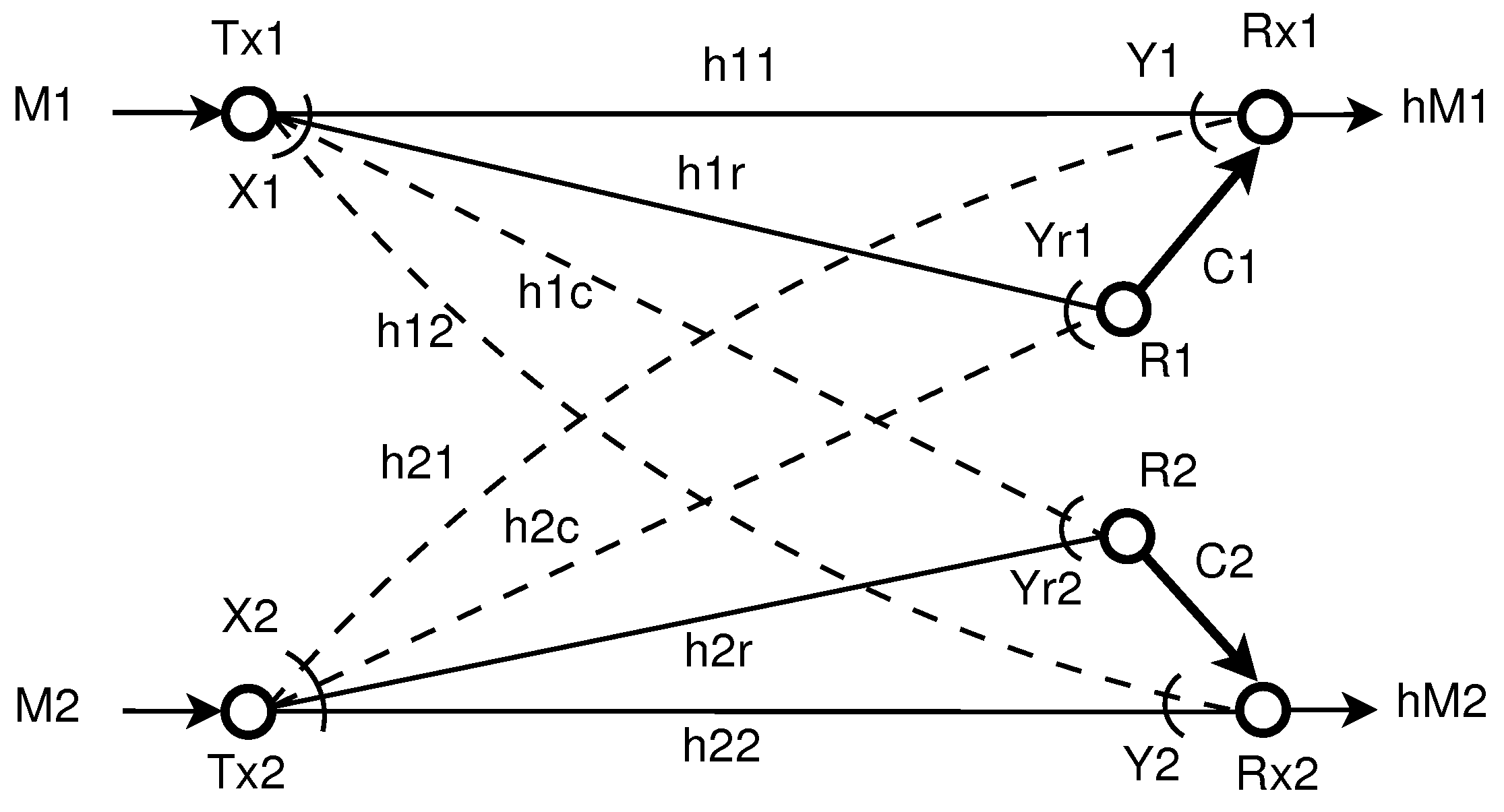

4. Gaussian Interfering Relay Channels

4.1. An Outer Bound to the Capacity Region

- Group 1: The bounds on individual rates in (50) and (51) are cut-set bounds.

- Group 5: The bounds in (64)–(67) are similarly derived as the bounds in Group 4, by symmetry.

- Group 6: The bound in (68): A genie gives to one of the two decoders 1, and give to decoder 2, where , . We also bound the sum capacity gain due to the relays by . The rest follows from the facts that conditioning does not increase entropy, the chain rule, and that Gaussian distribution maximizes differential entropy and conditional differential entropy subject to covariance constraint.

- Group 7: The bound in (69): A genie gives to one of the two decoders 1, and gives to decoder 2. We also bound the sum capacity gain due to one of the relays ’s by .

4.2. Achievability

4.3. Capacity Region within a Bounded Gap for General Interference Condition

4.4. Capacity Region within Bit for Strong Interference

4.5. Capacity Region within Bits for IRC with High-Capacity Relay-Receiver Links

- Choosing a quantization distortion at each relay such that the rate loss terms are bounded so that one can characterize the conditions on , for the second terms in the min in the RHS of (22)–(29) to be active.

- Choosing the suitable outer bounds among the ones in Theorem 3.

- Choosing a proper power allocation scheme at the transmitters such that the resulting achievable rates are within constant gaps to the corresponding chosen outer bounds.

4.6. Sum Capacity within 1 Bit in the Weak Interference-Relay Regime

4.7. Capacity Region within Bit for the Compound Multiple Access Relay Channel

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| C-MARC | Compound mutiple access relay channel |

| CS-MARC | Compound semi-deterministic multiple access relay channel |

| DM-IRC | Discrete memoryless interfering relay channels |

| IRC | Interfering relay channels |

| MARC | Mutiple access relay channel |

| MIMO IC | Multiple input multiple output interference channel |

Appendix A. Proof of Theorem 1.

Appendix B. Proof of Theorem 2.

Appendix B.1. Achievability

- is because is a function of , and depends only on ;

- is because ;

- is due to (30a) with , ;

- is due to (A14).

Appendix B.2. Converse

Appendix C. Proof of Theorem 3.

Appendix D. Proof of Theorem 4.

- (resp. ) in the RHS of (14) is within (resp. ) of the first term in the RHS of (50).Proof.We have:which is within of the outer bound . Further,which is within of the outer bound . ☐

- (resp. ) in the RHS of (14) is within (respectively ) of the second term in the RHS of (50).Proof.We have:which is within of the outer bound . Next,which is within of the outer bound . ☐

- , , are within of the RHS’s of (52)–(54), respectively.

- , , are within of the RHS’s of (56)–(58), respectively.

- , , are within of the RHS’s of (60)–(62), respectively.

- , , are within of the RHS’s of (64)–(66), respectively.

- is within of the RHS of (55).

- is within of the RHS of (59).

- is within of the RHS of (63).

- is within of the RHS of (67).

- is within of the RHS of (68).

- is within of the RHS of (69).

Appendix E. Proof of Proposition 1.

- in (A57) is within bit of the outer bound in (50).

- in (A57) is within bit of the outer bound in (50).

- in (A59) is within 1 bit of the outer boundin (61).

- in (A59) is within 1 bit of the outer boundin (57).

Appendix F. Proof of Proposition 2.

- Constraints on :

- –

- The RHS of (A69) is within of the RHS of (50).

- –

- The RHS of (A70) is within of the RHS of (50).

- Constraints on follow by symmetry.

- Constraints on :

- –

- The RHS of (A73) is within of the RHS of (56).

- –

- Similarly for the RHS of (A75).

- –

- The RHS of (A74) is within of the RHS of (58).

- Constraint on :The RHS of (A76) is within of the RHS of (59).

- Constraint on follows by symmetry.

Appendix G. Proofs of Lemma 1 and Lemma 2.

Appendix H. Proof of Proposition 4.

- in (A59) is within bit of the outer boundin (88).

- in (A59) is within bit of the outer boundin (90).

References

- Dahlman, E.; Parkvall, S.; Skold, J. 4G: LTE/LTE-Advanced for Mobile Broadband; Academic Press: Cambridge, MA, USA, 2013. [Google Scholar]

- Jha, S.C.; Sivanesan, K.; Vannithamby, R.; Koc, A.T. Dual Connectivity in LTE small cell networks. In Proceedings of the IEEE Globecom Workshops, Austin, TX, USA, 8–12 December 2014; pp. 1205–1210. [Google Scholar]

- Sahin, O.; Erkip, E. Achievable Rates for the Gaussian Interference Relay Channel. In Proceedings of the IEEE Global Telecommunications Conference, Washington, DC, USA, 26–30 November 2007; pp. 1627–1631. [Google Scholar]

- Marić, I.; Dabora, R.; Goldsmith, A. On the capacity of the interference channel with a relay. In Proceedings of the IEEE International Symposium on Information Theory, Toronto, ON, Canada, 6–11 July 2008; pp. 554–558. [Google Scholar]

- Sahin, O.; Erkip, E.; Simeone, O. Interference channel with a relay: Models, relaying strategies, bounds. In Proceedings of the Information Theory and Applications Workshop (ITA), San Diego, CA, USA, 8–13 February 2009; pp. 90–95. [Google Scholar]

- Tian, Y.; Yener, A. The Gaussian Interference Relay Channel: Improved Achievable Rates and Sum Rate Upperbounds Using a Potent Relay. IEEE Trans. Inf. Theory 2011, 57, 2865–2879. [Google Scholar] [CrossRef]

- Simeone, O.; Erkip, E.; Shamai, S. On Codebook Information for Interference Relay Channels with Out-of-Band Relaying. IEEE Trans. Inf. Theory 2011, 57, 2880–2888. [Google Scholar] [CrossRef]

- Zhou, L.; Yu, W. Incremental Relaying for the Gaussian Interference Channel With a Degraded Broadcasting Relay. IEEE Trans. Inf. Theory 2013, 59, 2794–2815. [Google Scholar] [CrossRef]

- Razaghi, P.; Hong, S.N.; Zhou, L.; Yu, W.; Caire, G. Two Birds and One Stone: Gaussian Interference Channel With a Shared Out-of-Band Relay of Limited Rate. IEEE Trans. Inf. Theory 2013, 59, 4192–4212. [Google Scholar] [CrossRef]

- Wang, I.H.; Tse, D. Interference Mitigation Through Limited Receiver Cooperation. IEEE Trans. Inf. Theory 2011, 57, 2913–2940. [Google Scholar] [CrossRef]

- Cover, T.M.; Kim, Y.H. Capacity of a Class of Deterministic Relay Channels. In Proceedings of the IEEE International Symposium on Information Theory, Nice, France, 24–29 June 2007; pp. 591–595. [Google Scholar]

- Chang, H.; Chung, S.Y.; Kim, S. Interference Channel With a Causal Relay Under Strong and Very Strong Interference. IEEE Trans. Inf. Theory 2014, 60, 859–865. [Google Scholar] [CrossRef]

- Do, H.T.; Oechtering, T.J.; Skoglund, M. Layered Coding for the Interference Channel With a Relay. IEEE Trans. Inf. Theory 2014, 60, 6154–6180. [Google Scholar] [CrossRef]

- Baik, I.J.; Chung, S.Y. Causal Relay Networks. IEEE Trans. Inf. Theory 2015, 61, 5432–5440. [Google Scholar] [CrossRef]

- Liu, T.; Tuninetti, D.; Chung, S.Y. On the DoF Region of the MIMO Gaussian Two-User Interference Channel With an Instantaneous Relay. IEEE Trans. Inf. Theory 2017, 63, 4453–4471. [Google Scholar] [CrossRef]

- Shin, W.; Lee, N.; Lee, J.; Poor, H.V. Guiding blind transmitters for K-user MISO interference relay channels with Imperfect channel knowledge. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Barcelona, Spain, 10–15 July 2016; pp. 545–549. [Google Scholar]

- Kang, H.S.; Kang, M.G.; Nosratinia, A.; Choi, W. The Degrees of Freedom of the Interference Channel with a Cognitive Relay under Delayed Feedback. IEEE Trans. Inf. Theory 2017, 63, 5299–5313. [Google Scholar] [CrossRef]

- Nokleby, M.; Aazhang, B. Cooperative Compute-and-Forward. IEEE Trans. Wirel. Commun. 2016, 15, 14–27. [Google Scholar] [CrossRef]

- Kramer, G.; Hou, J. Short-message quantize-forward network coding. In Proceedings of the 8th International Workshop on Multi-Carrier Systems Solutions, Herrsching, Germany, 3–4 May 2011; pp. 1–3. [Google Scholar]

- Kim, Y.H. Coding techniques for primitive relay channels. In Proceedings of the Forty-Fifth Annual Allerton Conference Allerton House, UIUC, Monticello, IL, USA, 26–28 September 2007. [Google Scholar]

- Carleial, A. Interference Channels. IEEE Trans. Inf. Theory 1978, 24, 60–70. [Google Scholar] [CrossRef]

- Han, T.; Kobayashi, K. A New Achievable Rate Region for the Interference Channel. IEEE Trans. Inf. Theory 1981, 27, 49–60. [Google Scholar] [CrossRef]

- Do, H.T.; Oechtering, T.J.; Skoglund, M. Noisy Network Coding Approach to the Interference Channel with Receiver Cooperation. In Proceedings of the 49th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 28–30 September 2011. [Google Scholar]

- Do, H.T.; Oechtering, T.J.; Skoglund, M. A new inner bound for the interference relay channel. In Proceedings of the 46th Annual Conference on Information Sciences and Systems (CISS), Princeton, NJ, USA, 21–23 March 2012. [Google Scholar]

- El Gamal, A.; Kim, Y.H. Network Information Theory; Cambridge University Press: Cambridge, UK, 2011. [Google Scholar]

- Lim, S.H.; Kim, Y.H.; El Gamal, A.; Chung, S.Y. Noisy Network Coding. IEEE Trans. Inf. Theory 2011, 57, 3132–3152. [Google Scholar] [CrossRef]

- Razaghi, P.; Yu, W. Universal relaying for the interference channel. In Proceedings of the Information Theory and Applications Workshop (ITA), San Diego, CA, USA, 31 January–5 February 2010. [Google Scholar]

- Kim, Y.H. Capacity of a Class of Deterministic Relay Channels. IEEE Trans. Inf. Theory 2008, 54, 1328–1329. [Google Scholar] [CrossRef]

- Costa, M.H.M.; Gamal, A. The capacity region of the discrete memoryless interference channel with strong interference (Corresp.). IEEE Trans. Inf. Theory 1987, 33, 710–711. [Google Scholar] [CrossRef]

- Gamal, A.; Costa, M. The capacity region of a class of deterministic interference channels (Corresp.). IEEE Trans. Inf. Theory 1982, 28, 343–346. [Google Scholar] [CrossRef]

- Chong, H.F.; Motani, M. The Capacity Region of a Class of Semideterministic Interference Channels. IEEE Trans. Inf. Theory 2009, 55, 598–603. [Google Scholar] [CrossRef]

- Kang, B.; Lee, S.H.; Chung, S.Y.; Suh, C. A new achievable scheme for interference relay channels. In Proceedings of the IEEE International Symposium on Information Theory Proceedings (ISIT), Istanbul, Turkey, 7–12 July 2013. [Google Scholar]

- Khormuji, M.; Skoglund, M. Capacity of Two Semideterministic Classes of Multiple-Access Relay Channels. IEEE Commun. Lett. 2012, 16, 1529–1531. [Google Scholar] [CrossRef]

- Telatar, E.; Tse, D. Bounds on the capacity region of a class of interference channels. In Proceedings of the IEEE International Symposium on Information Theory, Nice, France, 24–29 June 2007; pp. 2871–2874. [Google Scholar]

- Karmakar, S.; Varanasi, M.K. The Capacity Region of the MIMO Interference Channel and Its Reciprocity to Within a Constant Gap. IEEE Trans. Inf. Theory 2013, 59, 4781–4797. [Google Scholar] [CrossRef]

- Etkin, R.H.; Tse, D.N.C.; Wang, H. Gaussian Interference Channel Capacity to Within One Bit. IEEE Trans. Inf. Theory 2008, 54, 5534–5562. [Google Scholar] [CrossRef]

- Shang, X.; Kramer, G.; Chen, B. A New Outer Bound and the Noisy-Interference Sum-Rate Capacity for Gaussian Interference Channels. IEEE Trans. Inf. Theory 2009, 55, 689–699. [Google Scholar] [CrossRef]

- Motahari, A.; Khandani, A. Capacity Bounds for the Gaussian Interference Channel. IEEE Trans. Inf. Theory 2009, 55, 620–643. [Google Scholar] [CrossRef]

- Annapureddy, V.S.; Veeravalli, V.V. Gaussian Interference Networks: Sum Capacity in the Low-Interference Regime and New Outer Bounds on the Capacity Region. IEEE Trans. Inf. Theory 2009, 55, 3032–3050. [Google Scholar] [CrossRef]

- Chong, H.F.; Motani, M.; Garg, H.; El Gamal, H. On The Han-Kobayashi Region for the Interference Channel. IEEE Trans. Inf. Theory 2008, 54, 3188–3195. [Google Scholar] [CrossRef]

- Csiszár, I.; Körner, J. Information Theory: Coding Theorems for Discrete Memoryless Systems; Academic Press: Cambridge, MA, USA, 1981. [Google Scholar]

- Avestimehr, A.; Diggavi, S.; Tse, D. Wireless Network Information Flow: A Deterministic Approach. IEEE Trans. Inf. Theory 2011, 57, 1872–1905. [Google Scholar] [CrossRef]

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Do, H.T.; Oechtering, T.J.; Skoglund, M.; Vu, M. Interfering Relay Channels. Entropy 2017, 19, 441. https://doi.org/10.3390/e19090441

Do HT, Oechtering TJ, Skoglund M, Vu M. Interfering Relay Channels. Entropy. 2017; 19(9):441. https://doi.org/10.3390/e19090441

Chicago/Turabian StyleDo, Hieu T., Tobias J. Oechtering, Mikael Skoglund, and Mai Vu. 2017. "Interfering Relay Channels" Entropy 19, no. 9: 441. https://doi.org/10.3390/e19090441

APA StyleDo, H. T., Oechtering, T. J., Skoglund, M., & Vu, M. (2017). Interfering Relay Channels. Entropy, 19(9), 441. https://doi.org/10.3390/e19090441