1. Introduction

Generative artificial intelligence (AI) is reshaping and disrupting various industries [1,2], and online healthcare is no exception. Embedded within online healthcare platforms, generative AI draws on language models trained on real consultation data from these platforms, enabling rapid responses to patient inquiries through advanced learning and reasoning capabilities. Generative AI holds significant value on online healthcare platforms [3]. Unlike automated response systems that leverage AI technologies to assist doctors in making quick diagnoses and responses [3,4], the generative AI developed for online healthcare platforms functions as an intelligent medical assistant for patients. By learning from extensive medical data and knowledge, it can answer health-related questions, provide medical consultations, and explain disease information in a patient-friendly manner [5]. Moreover, it can assist patients in making appointments, selecting doctors, and querying information, thus serving as a convenient, efficient, and friendly tool for online healthcare services that is accessible anytime and anywhere. Additionally, generative AI can enhance patient engagement by personalizing interactions based on individual health needs and preferences, ultimately improving patients’ overall health awareness and health literacy [6,7].

Despite the many benefits of generative AI for online healthcare services, its adoption by online healthcare platforms and patients remains limited, and there is scant literature exploring patients’ intention to adopt generative AI on online healthcare platforms. Previous research on generative AI in healthcare has primarily focused on its benefits and challenges. On the benefits side, existing research has extensively explored the role of generative AI in healthcare, including its support for diagnostic decision-making by physicians [8], assistance in clinical practice and research [9], enhancement of healthcare quality and efficiency [10], optimization of operations and management [5], and reinforcement of patient education and physician training [11]. There are also concerns regarding generative AI, such as the potential for generating inaccurate content, making inappropriate diagnoses [12], issues related to privacy and security [13,14], ethical dilemmas regarding patient understanding [15], and deficiencies in legal regulation. However, research on generative AI in online healthcare remains limited, and it is still unclear which factors influence patients’ adoption of generative AI on such platforms. The value that generative AI brings to healthcare is undeniable; however, this value can only be realized if patients adopt generative AI. Therefore, exploring the factors influencing patients’ intention to adopt generative AI on online healthcare platforms is essential for promoting its broader application and maximizing its value. UTAUT2 is a well-established model for understanding technology acceptance and usage, and it has been widely applied in research on user adoption of new technologies [16,17]. In this study, generative AI is an emerging technology, patients constitute a new user group, and online healthcare represents a novel usage context. Therefore, this study employs the UTAUT2 model to investigate the factors influencing patients’ intention to adopt generative AI on online healthcare platforms.

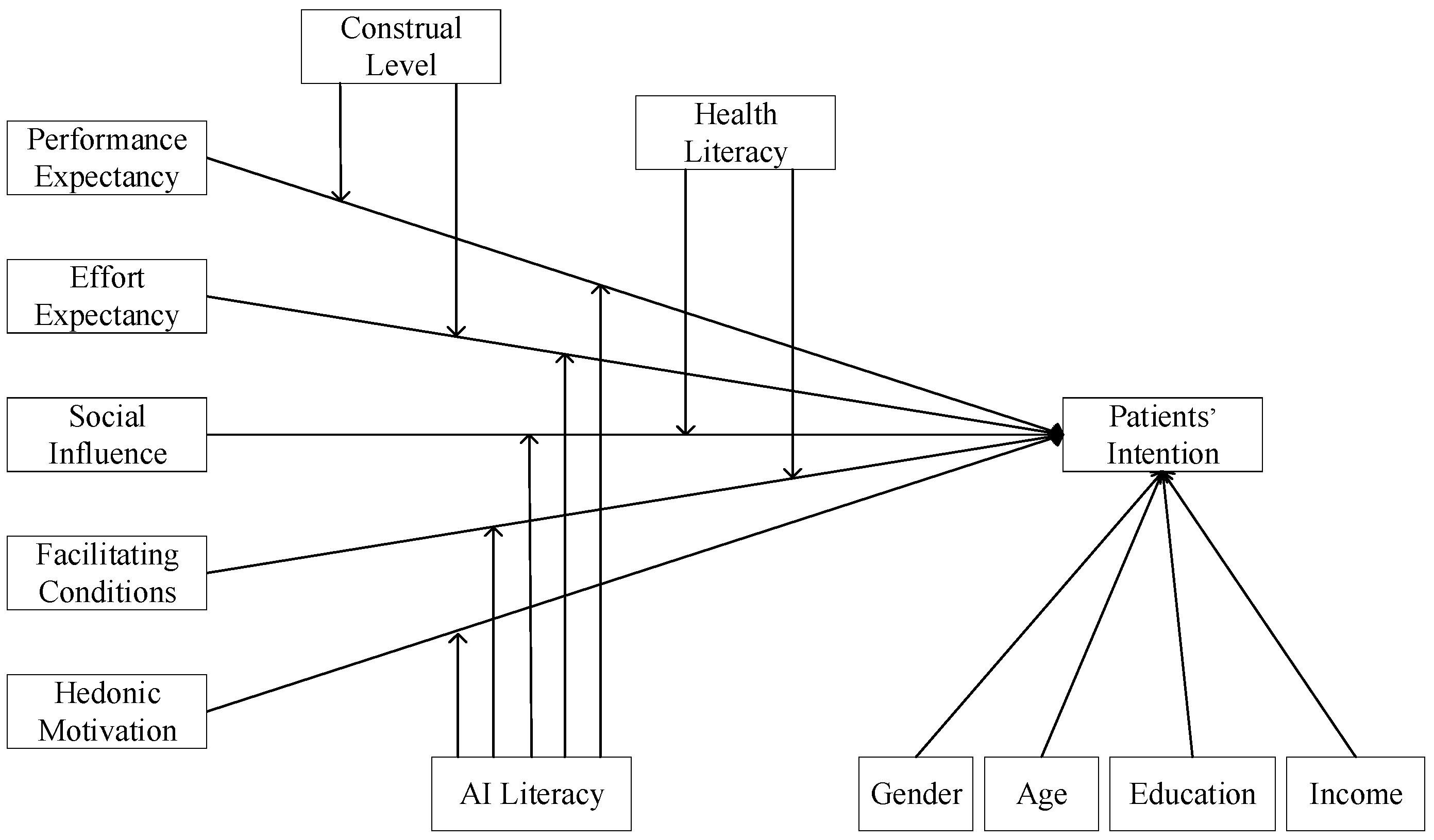

Venkatesh et al. [18] point out that moderators are critical for meaningful extensions of theories of technology acceptance. For example, individual differences in age, gender, and experience moderate the effects of the UTAUT2 constructs on behavioral intention [18]. Therefore, examining boundary conditions in patients’ intention to adopt generative AI on online healthcare platforms is crucial, as it contributes to the extension of technology adoption theories and deepens the understanding of this specific context. Performance expectancy and effort expectancy correspond to attributes situated at different construal levels [19]. Performance expectancy, as an outcome-oriented variable, aligns with a higher construal level, in which individuals adopt an abstract mode of thinking that emphasizes causes and consequences. In contrast, effort expectancy, as a process-oriented variable, is associated with a lower construal level, wherein individuals engage in a concrete mode of thinking that highlights execution and processes [20]. Accordingly, the influence of performance expectancy and effort expectancy on the adoption of generative AI may differ across individuals with different construal levels. Social influence and facilitating conditions represent the key social and technological factors through which individuals access and use health-related information. Health literacy determines both the motivation and the ability to acquire and utilize such information [21,22]. Therefore, the impact of social influence and facilitating conditions on the adoption of generative AI for health-related information is likely to vary across individuals with different levels of health literacy. Additionally, individuals with different levels of AI literacy demonstrate distinct AI-related behaviors. Specifically, variations in AI literacy shape individuals’ trust in and ability to use AI technologies [23]. Consequently, differences in AI literacy may lead to divergent perspectives on the adoption of generative AI. Taken together, this study aims to enhance the understanding of patients’ intention to adopt generative AI on online healthcare platforms by examining the moderating roles of construal level, health literacy, and AI literacy from the patient’s perspective.
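As an illustration only, a moderating role of the kind described above is commonly formalized as an interaction term in a regression on behavioral intention. This section does not specify the estimation model, so the form below is a generic sketch and the symbols are assumptions introduced for it: $\mathrm{BI}$ for behavioral intention, $\mathrm{PE}$ for performance expectancy, $\mathrm{CL}$ for construal level, and $\varepsilon$ for the error term.

$$
\mathrm{BI} = \beta_0 + \beta_1\,\mathrm{PE} + \beta_2\,\mathrm{CL} + \beta_3\,(\mathrm{PE}\times\mathrm{CL}) + \varepsilon,
\qquad
\frac{\partial\,\mathrm{BI}}{\partial\,\mathrm{PE}} = \beta_1 + \beta_3\,\mathrm{CL}.
$$

Under this sketch, a positive moderating role corresponds to $\beta_3 > 0$ (the effect of performance expectancy grows as construal level rises), and a negative moderating role corresponds to $\beta_3 < 0$. The same form would apply to the other predictor and moderator pairs by substituting the relevant variables.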

In summary, this study aims to examine the factors influencing patients’ intention to adopt generative AI on online healthcare platforms and the moderating effect of construal level, health literacy, and AI literacy. We collect data through a questionnaire via online channels. The analysis reveals that performance expectancy, social influence, and facilitating conditions are positively associated with patients’ intention to adopt generative AI on online healthcare platforms, whereas effort expectancy and hedonic motivation do not exhibit a significant effect. Furthermore, the results show that the construal level positively moderates the relationship between performance expectancy and patients’ intention. Health literacy negatively moderates the relationship between social influence and patients’ intention. AI literacy positively moderates the relationship between effort expectancy and patients’ intention but negatively moderates the relationship between social influence and patients’ intention. These findings contribute to a more nuanced understanding of patients’ intention to adopt generative AI and offer practical implications for the effective design, implementation, and dissemination of such technologies in online healthcare contexts.

6. Discussion

6.1. Principal Findings

This study aims to investigate the factors influencing patients’ intention to adopt generative AI on online healthcare platforms. First, the findings suggest that performance expectancy exerts a positive influence on patients’ intention to adopt generative AI. This implies that generative AI is a promising and effective tool that can enhance health management efficiency and healthcare utility on online healthcare platforms. Second, effort expectancy does not exhibit a significant effect on patients’ intention, which may reflect the difficulty that patients face in trusting and operating the unique features and functionalities of generative AI. Third, social influence is identified as a positive determinant of patients’ intention, as individuals’ choices and inclinations are inevitably shaped by significant others, who not only impact patients’ decisions to adopt generative AI but also care about their physical well-being. Fourth, the results reveal that facilitating conditions have a significant effect on patients’ intention to adopt generative AI. This finding can be attributed to the widespread accessibility of information and knowledge and the presence of appropriate hardware and software infrastructure, which support the utilization of generative AI. Fifth, hedonic motivation does not exhibit a significant effect on patients’ intention. Notably, while generative AI is a novel technology, its primary function here is health management and healthcare service. Therefore, hedonic motivation is not the primary motivator for adoption of such technology.

Additionally, this study examines three moderators that influence the relationship between influencing factors and patients’ intention. Firstly, the results demonstrate that the construal level plays a positive moderating role in the relationship between performance expectancy and patients’ intention. This effect is attributed to the tendency of individuals with a higher construal level to prioritize outcome-related factors that embody desirability more than individuals with a lower construal level do. However, no significant effect of the construal level is found on the relationship between effort expectancy and patients’ intention, possibly due to evolving societal values and contemporary changes, wherein even individuals with a lower construal level may not pay adequate attention to the process aspects that could impact their assessment of objects and behaviors.

Secondly, the results of this study suggest that health literacy plays a negative moderating role in the relationship between social influence and patients’ intention while having no significant effect on the relationship between facilitating conditions and patients’ intention. This indicates that individuals with lower health literacy are more vulnerable to the influence of significant others on their inclination to adopt generative AI. Nevertheless, the pervasiveness of the Internet, the widespread use of hardware and software, and the abundance of knowledge and information online have made the impact of facilitating conditions on intention independent of patients’ health literacy.

Thirdly, the findings reveal that AI literacy exerts a significant positive moderating effect on the relationship between effort expectancy and patients’ intention. This is because individuals with higher levels of AI literacy, compared with those with lower AI literacy, are better able to understand the functions and operational processes of AI, thereby strengthening the influence of perceived ease of use on their adoption intention. Additionally, AI literacy negatively moderates the relationship between social influence and patients’ intention. This is attributed to patients with higher AI literacy possessing a more profound understanding of AI technologies, which enables them to independently assess the value of AI and makes them less susceptible to external influences. However, no significant effect of AI literacy is detected on the relationship between performance expectancy and patients’ intention, which may be attributed to the powerful functionalities of generative AI: the influence of performance expectancy on patients’ intention to adopt generative AI remains stable regardless of their level of AI literacy. Similarly, AI literacy does not significantly moderate the relationship between facilitating conditions and patients’ intention, possibly because the facilitating conditions for adopting generative AI are sufficiently consistent across individuals with varying levels of AI literacy. Furthermore, no significant moderating effect of AI literacy is found on the relationship between hedonic motivation and patients’ intention, suggesting that patients’ perceived enjoyment in adopting generative AI is not contingent upon their level of AI literacy [61].
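The moderating effects discussed above can be made concrete with a small numerical sketch. The code below is not the estimation procedure used in this study (which this section does not describe); it assumes a plain ordinary least squares regression with a single interaction term, and the function names `solve_linear`, `fit_moderated_ols`, and `simple_slope` are hypothetical names introduced here for illustration.

```python
def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Move the row with the largest entry in this column into pivot position.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def fit_moderated_ols(x, m, y):
    """Fit y = b0 + b1*xc + b2*mc + b3*(xc*mc) by OLS via the normal equations.

    x is the focal predictor (e.g. effort expectancy), m the moderator
    (e.g. AI literacy), y the outcome (e.g. adoption intention).
    xc and mc are the mean-centered versions of x and m, so b1 is the
    slope of x at the average level of the moderator.
    Returns [b0, b1, b2, b3].
    """
    n = len(y)
    x_mean = sum(x) / n
    m_mean = sum(m) / n
    rows = [
        [1.0, xi - x_mean, mi - m_mean, (xi - x_mean) * (mi - m_mean)]
        for xi, mi in zip(x, m)
    ]
    k = 4
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve_linear(xtx, xty)


def simple_slope(b1, b3, centered_moderator_value):
    """Conditional slope of the focal predictor at a given centered moderator value."""
    return b1 + b3 * centered_moderator_value
```

In this sketch, a positive `b3` means the slope returned by `simple_slope` is steeper at higher moderator values, matching the pattern reported for AI literacy and effort expectancy; a negative `b3` means the slope weakens as the moderator rises, matching the pattern reported for health literacy or AI literacy and social influence. Evaluating `simple_slope` at one standard deviation above and below the moderator mean is a common way to probe such an interaction.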

6.2. Theoretical Implications

This study offers three primary contributions to research on generative AI and the UTAUT2 model. Firstly, while prior research has examined factors such as social influence, novelty value, and anthropomorphism in relation to the acceptance of ChatGPT-3.5 [62], limited attention has been given to the adoption of generative AI by patients within online healthcare settings. This study seeks to address this gap by investigating the factors that influence patients’ intention to adopt generative AI on online healthcare platforms, thereby advancing theoretical understanding and offering insights into the key determinants shaping patient behavior in this emerging context. Secondly, this study extends the UTAUT2 model to the adoption of generative AI. Our analysis reveals that effort expectancy and hedonic motivation do not significantly affect the intention to use generative AI. This finding highlights the need for a tailored approach to understanding patients’ intention regarding generative AI on online healthcare platforms, as traditional models may not fully fit new research scenarios. Thirdly, this study extends the theoretical boundaries and explanatory power of the UTAUT2 model in healthcare by introducing the construal level, health literacy, and AI literacy as moderators. These variables influence the relationships between the antecedent factors and patients’ intention. By integrating these moderating variables into the UTAUT2 model, we offer a more comprehensive understanding of the factors influencing patients’ intention to adopt generative AI in online healthcare scenarios.

6.3. Implications for Practice

This study has practical implications for the development and promotion of generative AI on online healthcare platforms. First, performance expectancy is positively associated with patients’ intention to adopt generative AI, but effort expectancy has no significant influence on patients’ intention. Thus, platform managers should invest in effective educational and promotional efforts to enhance patients’ understanding of the benefits of generative AI and their ability to use it. This is further supported by the finding that AI literacy positively moderates the relationship between effort expectancy and patients’ intention. Second, the findings indicate that social influence is positively associated with patients’ intention, especially among individuals with lower health literacy and AI literacy [63]. Platform managers should encourage patients to recommend the use of generative AI to others by providing rewards and should particularly encourage young patients to recommend it to older adults and to those living in remote areas with low health literacy and AI literacy [64]. Third, facilitating conditions are positively associated with patients’ intention to adopt generative AI, whereas hedonic motivation has no significant influence on patients’ intention. Therefore, platforms should enhance the compatibility of generative AI to facilitate patient use, while investment in entertainment functionalities may not be necessary. These strategies are likely to attract more patients and expand the value of generative AI on online healthcare platforms.

6.4. Limitations and Future Research

This study has several limitations that warrant consideration and provide directions for future research. First, patients may engage with generative AI on online healthcare platforms for varying purposes, such as seeking diagnostic support, managing chronic conditions, or accessing general health information. These differentiated purposes reflect heterogeneous expectations and value perceptions [65], which may systematically influence the relationships between antecedent factors and patients’ intention to adopt generative AI. However, this study does not account for such heterogeneity. Future research should examine the moderating role of patients’ usage purposes, thereby unpacking how different user goals shape the strength or direction of influencing factors. Second, this study does not account for additional constructs that may influence adoption behavior, such as price value and usage habits [66]. As generative AI technologies become more prevalent in online healthcare contexts, future research could incorporate these variables to capture patients’ evolving behavior. Furthermore, exploring other potentially relevant factors, such as novelty value, perceived risk, and privacy concerns, may offer a more comprehensive understanding of the facilitators and inhibitors shaping patients’ intention to adopt generative AI in an online health context [14,67]. Lastly, the data were collected in China without regional distinction and within a specific time frame, which may limit the generalizability of the findings across diverse cultural contexts and temporal settings. Future studies should collect data across multiple regions and time points to improve external validity and capture potential cultural, regional (rural vs. urban), and temporal variations in patients’ perceptions of generative AI on online healthcare platforms [68].