Abstract

Images carry rich visual semantic information, and consumers tend to look first at multimodal online reviews that include images. Research on the helpfulness of reviews on e-commerce platforms has mainly focused on text and offers little insight into the product attributes reflected in review images or the relationship between images and text. Studying this relationship can better explain consumer behavior and help consumers make purchasing decisions. Taking multimodal online review data from shopping platforms as the research object, this study proposes a research framework based on the Cognitive Theory of Multimedia Learning (CTML). It uses multiple pre-trained models, such as BLIP2, together with machine learning methods to construct metrics. A fuzzy-set qualitative comparative analysis (fsQCA) is conducted to explore the configurational effects of antecedent variables of multimodal online reviews on review helpfulness. The study identifies five configurational paths that lead to high review helpfulness. Specific review cases are used to examine how these configurations contribute to perceived helpfulness, providing a new perspective for future research on multimodal online reviews. Based on the findings, targeted recommendations are made for operators and merchants, offering theoretical support for platforms to fully leverage the potential value of user-generated content.

1. Introduction

With the rapid growth of e-commerce, online platforms have become pivotal in shaping global consumer behavior and driving the digital economy, which makes it important to understand user interactions and feedback. Unlike in offline shopping, consumers making online purchase decisions often check online reviews to learn how other consumers evaluate the product and service. Existing research shows that online reviews play an important role in promoting product sales [1] and influencing consumer decisions [2]. Merchants and platforms also offer material rewards to encourage users to write high-quality reviews, thereby promoting product sales and improving industry competitiveness.

Online reviews can effectively reduce consumers’ perceived uncertainty in the decision-making process by conveying information that is not available in the seller’s product description and by describing the actual use of the product more objectively and accurately [3]. However, because platforms lack a unified review mechanism, false, manipulated, and abusive reviews continue to proliferate, and the quality of reviews varies, which, to a certain extent, undermines the helpfulness of reviews [4].

The quality of online reviews is one of the important factors affecting their helpfulness [5]. Meanwhile, as the idea that “pictures speak louder than words” has gained popularity, image information in online reviews has gradually attracted the attention of scholars. Existing research on the helpfulness of multimodal online reviews mainly focuses on the number of images [6], image background [7], image–text consistency [8], and image quality [6,9]. Although most studies have confirmed that multimodal reviews containing images are more helpful than text-only reviews, few have explored the fundamental questions about image-based reviews: what product attributes consumers express through images in their reviews, and how these attributes are associated with the helpfulness of the review. Thus, the following research questions are proposed:

- What specific product attributes are consumers most likely to express through images in multimodal reviews, and how do these attributes influence the perceived helpfulness of the review?

- How do different combinations of product types and multimodal review features collectively contribute to the perceived helpfulness of online reviews?

- What configurations of these factors are most likely to enhance review helpfulness?

To address these research questions, and building on previous research, this work uses a variety of pre-trained models to extract multi-dimensional text and image features and constructs indicators from real multimodal online review data from JD (www.jd.com), focusing on the impact of the product attributes reflected by images and of image–text consistency on the helpfulness of reviews. Furthermore, from a configurational perspective, fuzzy-set qualitative comparative analysis (fsQCA) is used to explore how the image and text features of reviews affect their helpfulness. This not only broadens the research perspective on multimodal online reviews and deepens the understanding of review helpfulness but also provides a scientific basis for the governance of user-generated content on shopping platforms and for merchants’ product improvements in practice.

2. Related Studies

2.1. Helpfulness of Text Content in Online Reviews

Review helpfulness refers to the value consumers perceive in a review across processes such as demand identification, information search, and purchase testing [10]. Because the text content of online reviews is easy to obtain and natural language processing technology is relatively mature, tasks such as sentiment analysis [11] and topic modeling [12] can be performed on it effectively. Existing helpfulness research mainly focuses on two categories of features: directly perceptible text statistical features and semantic features mined using natural language processing technology. Text statistical features are those that consumers perceive directly from the review text, including the length of the text [10], the structural features of the text [13], and response behavior to the comments [14]. According to information adoption theory, factors such as text length, replies, and additional comments affect information quality, which in turn affects the helpfulness of review information through the central path. Existing research shows that the length of the review text and the number of replies to a review have a positive impact on perceived helpfulness [15]. However, overly long review texts may impose a cognitive burden on consumers’ information processing capabilities, triggering “information overload” that damages review helpfulness [16].

Semantic features are obtained using text mining methods. These features require consumers to perform relatively complex cognitive processing to understand them. The main contents include product feature extraction using topic models [17], sentiment analysis [18], text similarity analysis [19], and information volume [20]. Comment texts contain consumers’ evaluations of product (or service) attributes. For different product attributes, consumers’ perceived helpfulness varies [21]. In addition, the sentiment tendency of comments will also affect consumers’ perception of their helpfulness. Online comments with negative emotions are usually regarded by consumers as highly credible information sources because they are highly realistic and can effectively reveal potential problems with products or services [22].

2.2. Helpfulness of Multimodal Content of Online Reviews

Multimodal information refers to information drawn from multiple forms of data or signal sources, such as text, images, audio, and video [23]. With the development of mobile network technology and the evolution of mobile terminal performance, consumers can easily obtain and share multimodal content. The format of online reviews has shifted from a simple textual description to a multimodal, multifaceted online evaluation system, including rating systems, ranking systems, review images, review videos, and more [24]. Multimodal research on online reviews has mainly focused on images. Kübler, Lobschat, Welke, and van der Meij [6] found that reviews that include images and videos are more intuitive and persuasive, and existing research has shown that the number of images in a review has a positive impact on its perceived helpfulness. To further clarify the role of images as a factor influencing the helpfulness of reviews, subsequent studies introduced the Dual Coding Theory [25] and the Cognitive Theory of Multimedia Learning [26]. Research based on these two theories shows that consumers process text and image information through independent but interacting dual channels and integrate the information in the process. The better the integration, the more it aids memory and understanding. Therefore, the consistency of images and texts in multimodal reviews has a positive impact on helpfulness [8,27].

One of the major difficulties in semantic feature analysis of multimodal content is feature extraction and representation. With the development of computer vision technology, reliance on feature engineering from traditional digital image processing is no longer necessary; instead, features can be analyzed at the semantic level. Existing research methods and technologies fall mainly into two categories: one uses closed-source models such as Google Vision API to extract object labels from comment images, and the other uses trained neural networks such as R-CNN to detect and classify objects in comment images. Yang, Wang, and Zhao [9] used a deep learning method based on object detection to measure four dimensions of user-generated image quality and found that the accuracy and relevance of comment images positively affect the helpfulness of comments. Ceylan, Diehl, and Proserpio [8] used Vision API to extract image labels, used Doc2Vec to measure the text similarity between comments and image labels, and found that consistency between images and texts promoted the helpfulness of comments. Although existing research on the helpfulness of multimodal online reviews has made some progress, two shortcomings remain. First, existing studies mostly construct indicators from the features of a single image or from the number of images, ignoring the correlation between multiple images. When a review contains multiple images, these images may provide similar content or different perspectives. Analysis based on a single image is prone to information loss and cannot fully reveal how multiple images work together to affect consumers’ perception of review helpfulness. Second, images and texts may form different integration relationships. Some images supplement text information, while others are unrelated to the text. Existing studies usually do not fully explore how images and texts jointly influence consumer understanding and decision-making, so the overall helpfulness of reviews cannot be evaluated accurately.

2.3. Online Review Research with Qualitative Comparative Analysis Method

Qualitative comparative analysis (QCA) is based on holism and uses a configurational method to explore the complex causal relationships between antecedent variables and outcomes, explaining cases from the perspective of set theory. In this it differs from the binary view of independent and dependent variables in previous quantitative methods [28]. QCA methods are divided into four types according to the data type of the antecedent variables: crisp-set qualitative comparative analysis (csQCA), fuzzy-set qualitative comparative analysis (fsQCA), multi-value qualitative comparative analysis (mvQCA), and temporal qualitative comparative analysis (tQCA) [29]. Compared with the other methods, fsQCA is applicable to a wider range of cases and has more rigorous analysis steps, so it is chosen by more scholars. Large-sample applications have also gradually expanded in the social sciences and health research and have shown feasibility and credibility [30]. Recently, some scholars have used the fsQCA method to study issues in the field of online reviews. For example, Perdomo-Verdecia et al. [31] applied fsQCA to online survey data to explore the complex motivations of consumers in writing hotel reviews. Rassal et al. [32] applied fsQCA to 6742 validated TripAdvisor reviews and found that the systematic and heuristic processing of online reviews can each have an independent impact on consumer decision-making.

3. Materials and Methods

3.1. Research Framework

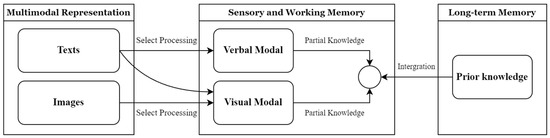

The Cognitive Theory of Multimedia Learning (CTML) was first proposed by Mayer [26] to explain how people learn from text and graphics. The theoretical model is shown in Figure 1.

Figure 1.

Basic structure of the Cognitive Theory of Multimedia Learning model.

From the perspective of CTML, consumers process the information from review text and images through dual channels, and this information is temporarily stored in sensory memory. Consumers then selectively attend to and process some of this information, forming a “visual model” of the product (such as its appearance and the effect of use) and a “verbal model” (such as the description of product performance and user experience). Finally, in the integration stage, consumers combine the visual information (such as images) with the verbal information (such as text descriptions) and retrieve relevant prior knowledge from memory (for example, past usage experience or trust in the reviewer) to help understand the content of the review, ultimately forming a perception of the review’s helpfulness.

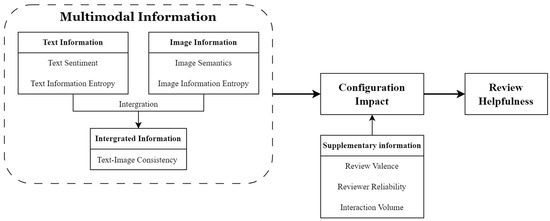

Therefore, this study divides the factors influencing the helpfulness of multimodal online reviews into four parts: text information, image information, integrated information, and supplementary information. Text information encompasses text sentiment and text information entropy. Image information includes image content and image information entropy. Integrated information includes image–text consistency. Supplementary information includes review valence, reviewer reliability, and volume of interactions. Among these factors, text information and image information are mainly involved in extraneous processing and essential processing, while integrated information and supplementary information play a role in the generative processing stage. In summary, the research framework based on CTML is shown in Figure 2.

Figure 2.

Research framework of multimodal review helpfulness analysis.

3.2. Fuzzy-Set Qualitative Comparative Analysis Method

The existing literature has verified the impact of single variables on the helpfulness of multimodal reviews. However, high review helpfulness does not result from a single variable but from a dynamic combination of multiple interacting variables. Therefore, the fsQCA method was used to explore the configurational impact of the antecedent variables of multimodal online reviews on review helpfulness, following the steps shown in Figure 3.

Figure 3.

fsQCA process flow.

The construction of the fsQCA model is based on theoretical frameworks and research hypotheses and aims to identify possible causal pathways. Variable selection should align with the research objectives and include both causal conditions and the outcome variable. The chosen variables must be theoretically justified and able to distinguish effectively between cases. Data collection typically involves surveys, literature analysis, or database retrieval and should ensure the representativeness and quality of the dataset. During data preprocessing, missing values and outliers are handled, and variables may be standardized to improve the accuracy of subsequent calibration and analysis.

Calibration is a crucial step in fsQCA: raw data are transformed into fuzzy-set membership values by defining three thresholds, namely full membership, full non-membership, and the crossover point. Calibration can be based on theoretical reasoning, expert judgment, or empirical distributions, so that causal and outcome variables meet fsQCA’s analytical requirements. Necessity analysis identifies whether a single condition variable is a necessary condition for the outcome variable. Configurational analysis uses a truth table and minimization algorithms to identify the combinations of conditions that lead to a specific outcome. Through sufficiency analysis, researchers can detect multiple pathways that result in the same outcome and differentiate between core conditions and peripheral conditions. The intermediate solution is often used to derive theoretically interpretable configurational patterns. Finally, to ensure the reliability of the findings, robustness analysis is performed by adjusting calibration thresholds, modifying consistency standards, or applying different minimization algorithms. Additionally, case comparison analysis or methodological triangulation can be conducted to enhance the credibility of the results.

On the process side, multiple antecedent variables are involved in the impact of multimodal online reviews on review helpfulness. On the result side, there are multiple equivalent configurations for high review helpfulness, that is, a situation of “multiple causes and one result”. Finally, the truth table analysis of configurations can be combined with specific review cases, so that different scenarios of high helpfulness are examined against actual reviews, making the results more explanatory.

3.3. Data Selection and Sampling

The review data were sourced from JD.com, one of China’s largest online shopping platforms, by crawling reviews of Canon 5D series digital SLR cameras. A digital SLR camera is a high-priced search product, so consumers can fully understand its attributes before purchasing. Studies have shown that the higher the price of a product, the higher consumers’ perceived risk and the more motivated they are to search further to ensure that they have enough information [33].

A review had to contain both image and text content to be included in the research dataset. The earlier a review is published, the more attention it can accumulate. To reduce the impact of publication time, a review also had to have existed for more than 6 months. Based on these premises, multimodal reviews published from 19 April 2014 to 19 March 2024 were collected. Each review record contains the review text, review images, product rating, volume of interactions, platform membership status, and number of images. A total of 1220 valid review texts and 4324 review images were obtained as the research dataset.

3.4. Data Preprocessing

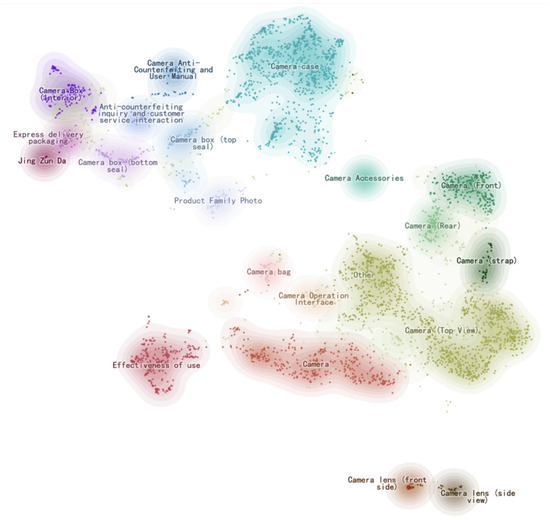

3.4.1. Image Category and Product Attribute Extraction

To explore the specific content of each review image and the product attributes involved, this study uses an unsupervised image clustering algorithm for feature recognition. The BLIP2-Chinese model, obtained by training on 200 million Chinese image–text pairs for five epochs, was used to extract image features. The model is based on BLIP2 (Bootstrapping Language-Image Pre-training 2), a multimodal pre-training model [34]. Compared with traditional image feature extraction methods, the pre-trained model captures the connection between images and texts at the semantic level, which helps to explore in depth the impact of images on the helpfulness of reviews. The model achieves efficient image–text alignment with less annotated data and has stronger cross-modal understanding capabilities than previous multimodal pre-training models. After features are extracted from a single review image, an embedding tensor with a shape of 32 × 256 is obtained. The tensor is average-pooled over its first dimension to generate a global 256-dimensional embedding vector for subsequent clustering and analysis. Then, the UMAP and HDBSCAN algorithms were used to reduce the dimensionality of the embedding vectors of the 4324 images and to cluster them, resulting in a total of 20 cluster categories and 1 outlier category, as shown in Figure 4.
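The pooling step described above can be sketched as follows. This is a minimal illustration in plain Python, not the authors' implementation: the function name `mean_pool` and the toy input are hypothetical, and a real pipeline would take the 32 × 256 tensor from the BLIP2 image encoder before passing the pooled vectors on to UMAP and HDBSCAN.

```python
from statistics import fmean


def mean_pool(token_embeddings):
    """Average a (num_tokens x dim) embedding over its first dimension.

    Hypothetical helper: the input stands in for the 32 x 256 tensor
    produced for one review image; the output is one dim-length vector.
    """
    if not token_embeddings:
        raise ValueError("expected at least one token embedding")
    dim = len(token_embeddings[0])
    if any(len(row) != dim for row in token_embeddings):
        raise ValueError("all token embeddings must have the same length")
    # zip(*rows) iterates column by column, so each column is averaged
    # across the 32 token positions.
    return [fmean(column) for column in zip(*token_embeddings)]


# Toy stand-in for a real 32 x 256 embedding (values are arbitrary).
toy_embedding = [[float(t + d) for d in range(256)] for t in range(32)]
pooled = mean_pool(toy_embedding)
```

Each image then contributes exactly one 256-dimensional vector, so the 4324 images form a 4324 × 256 matrix for dimensionality reduction and clustering.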

Figure 4.

Two-dimensional embedding distribution of images.

To obtain a more general category, the image categories are further summarized, and four types of product attributes are obtained: global attributes, local attributes, additional attributes, and experience attributes. Global attributes mean that the review image focuses on the overall characteristics of the product. Local attributes mean that the review image focuses on some characteristics of the product. Additional attributes mean that the review image mainly reflects the additional characteristics of the product, such as packaging and after-sales service. Experience attributes mean that the review image shows the camera shooting effect.

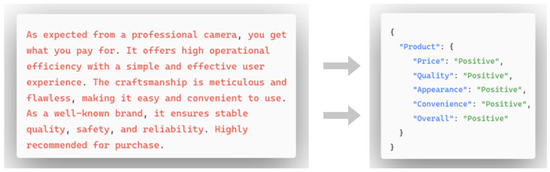

3.4.2. Product Aspect Level Text Sentiment Extraction

An aspect refers to a specific attribute or feature mentioned in an online review. It can be an attribute of an entity (such as “screen” or “battery life” in a mobile phone review) or an aspect of a service (such as “room cleanliness” or “customer service” in a hotel review). Aspects can be represented by keywords, phrases, or implicit features. To extract aspect–sentiment pairs, a pre-trained aspect-based sentiment analysis model was employed [35]. The model is pre-trained on supervised tasks under the Text2Text unified paradigm using 100 Chinese datasets and can effectively complete the aspect-level sentiment analysis task. An example is shown in Figure 5.

Figure 5.

Example of aspect-level text sentiment extraction.

3.5. Variable Measurement

According to the research framework, the factors affecting the helpfulness of multimodal online reviews were divided into four dimensions: text information, image information, integrated information, and supplementary information. The text information dimension focuses on the linguistic content and the sentiment of the review text itself. The image information dimension examines visual elements and their attributes, especially the semantic features. The integrated information dimension explores how text and images work together. The supplementary information dimension considers contextual factors beyond the review content itself. The text information dimension included two indicators: sentiment (TS) and text entropy (TE). The image dimension comprised five indicators: global attributes (GL), local attributes (LO), additional attributes (AD), experience attributes (EX), and image entropy (IE). The integrated information dimension centered on image–text consistency (IC) to evaluate the complementarity of visual and textual elements. Finally, the supplementary information dimension involved review valence (VA), interaction volume (IV), and reviewer reliability (RR) to capture the social aspects and credibility factors influencing the perceived helpfulness of multimodal reviews. The measurement methods and literature sources for the conditional variables and the outcome variable are shown in Table 1 and Table 2.

Table 1.

Dimensions and indicators of conditional variables influencing the helpfulness of multimodal online reviews.

Table 2.

Outcome variable of the helpfulness of multimodal online reviews.

3.5.1. Conditional Variables

Text Information

The voting method is used to define the overall sentiment of the text: in each comment text, the numbers of “positive”, “neutral”, and “negative” labels are counted. Scholars have usually used text length to evaluate the information content of the comment text. However, this measure cannot accurately reflect the diversity and depth of the comments and cannot fully reveal the specific information of the text at multiple levels. Therefore, following Fresneda and Gefen [36], text information entropy is included among the variables. Information entropy is usually defined as “a measure of the amount of information contained in a system”. Here, it refers to the diversity and complexity of the aspects covered by the text. The original calculation formula is

$$H = -\sum_{i=1}^{n} p_i \log p_i$$

where $p_i$ represents the probability of category (aspect) $i$, and $n$ is the number of aspects. $p_i$ can be calculated as

$$p_i = \frac{c_i}{N}$$

where $c_i$ represents the number of occurrences of aspect $i$, and $N$ represents the total number of occurrences of all aspects. Meanwhile, to measure the diversity and depth of the comment text, a compensation factor is introduced, composed of a diversity term and a depth term. The diversity term measures the diversity of aspects in the comment: as the number of aspects increases, the diversity of the comment content also increases. The depth term measures the depth of information in the comment: as the total number of aspect occurrences and the length of the comment in characters increase, the depth of the comment content also increases.

Finally, the text information entropy is computed from the original entropy, a smoothing value, and the compensation factor. The smoothing value prevents the original entropy from being 0 when the text contains only one type of aspect, which would otherwise make the overall information entropy 0. Text information entropy thus combines the entropy value, diversity, and depth of the comment text and can more accurately measure the amount of information provided by the different aspects of the comment.
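To make the construction concrete, the sketch below computes a text information entropy from a list of extracted aspects. Only the Shannon entropy over aspect frequencies follows directly from the definitions in the text. The logarithm base (2), the logarithmic form of the diversity and depth terms, the multiplicative combination, and the default smoothing value are all assumptions chosen for illustration, not the formula used in the study.

```python
import math
from collections import Counter


def shannon_entropy(aspects):
    """Shannon entropy of the aspect distribution (base 2 is an assumption)."""
    counts = Counter(aspects)
    total = sum(counts.values())
    entropy = 0.0
    for count in counts.values():
        p = count / total  # p_i = c_i / N
        entropy -= p * math.log2(p)
    return entropy


def text_information_entropy(aspects, text_length, epsilon=1e-3):
    """Illustrative text information entropy.

    ASSUMPTION: the compensation factor is modeled here as
    log(1 + n) * log(1 + N + L), and it multiplies the smoothed entropy
    (H + epsilon). The source describes these ingredients but this exact
    functional form is hypothetical.
    """
    if not aspects:
        return 0.0
    n_distinct = len(set(aspects))   # n: number of distinct aspects
    n_total = len(aspects)           # N: total aspect occurrences
    diversity = math.log(1 + n_distinct)
    depth = math.log(1 + n_total + text_length)
    return (shannon_entropy(aspects) + epsilon) * diversity * depth
```

Under these assumptions, a comment that mentions a single aspect still receives a small positive score thanks to the smoothing value, and a comment of the same length that covers more distinct aspects scores higher.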

Image Information

Previous studies have shown that different product attributes affect consumer attitudes. For example, the intrinsic attributes of a product lead to inferences about product quality, while its extrinsic attributes lead to inferences about price and performance [40]. Therefore, based on the product attribute classification results, the image content variable is set as the share of each type of product attribute in the total number of images in a review, which is used to compare whether different types of images have different effects on consumers’ helpfulness perception. In addition, image information entropy is introduced to measure the amount of image information in multimodal reviews.

Its inputs are the number of original images corresponding to each attribute, a Laplace smoothing factor, which ensures that a small positive probability is assigned to a category even if its count is zero, and the total number of images in a single review. When a review contains more images, its potential information content is greater. Image information entropy combines the uncertainty of the distribution of the four types of product attributes across the images with the number of images, and so measures more comprehensively the potential impact of the image information content in multimodal reviews on consumers’ helpfulness perception.
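The two image variables can be illustrated with a short sketch. The attribute shares follow the definition in the text. For the entropy, the additive (Laplace) smoothing of the attribute probabilities matches the description, but the smoothing value of 1, the base-2 logarithm, and the weighting of the entropy by log(1 + number of images) are assumptions made for this example only.

```python
import math

# The four product attribute types derived from the image clusters.
ATTRIBUTES = ("global", "local", "additional", "experience")


def attribute_shares(labels):
    """Share of each attribute type among the images of one review."""
    if not labels:
        raise ValueError("a multimodal review needs at least one image")
    return {attr: labels.count(attr) / len(labels) for attr in ATTRIBUTES}


def image_information_entropy(labels, alpha=1.0):
    """Illustrative image information entropy for one review.

    ASSUMPTION: probabilities use additive smoothing,
    p_k = (c_k + alpha) / (M + alpha * K), and the entropy is weighted by
    log(1 + M) to reflect the number of images. The weighting form and
    alpha = 1 are hypothetical choices.
    """
    m = len(labels)  # M: number of images in the review
    if m == 0:
        return 0.0
    k = len(ATTRIBUTES)
    entropy = 0.0
    for attr in ATTRIBUTES:
        p = (labels.count(attr) + alpha) / (m + alpha * k)
        entropy -= p * math.log2(p)
    return entropy * math.log(1 + m)
```

For a review with images labeled `["global", "global", "local"]`, `attribute_shares` assigns two thirds to global attributes and one third to local attributes, with zero for the other two types.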

Integrated Information

The BLIP2 multimodal pre-training model is used to calculate the cosine similarity between the embedding vector of the text and that of each image in the same review. If the comment contains multiple images, the cosine similarities are averaged to obtain the image–text consistency. The BLIP2 model directly learns a joint representation of images and text in an end-to-end transformer, thereby capturing richer semantic information and contextual relationships than modeling each modality separately. Compared with the method Ceylan, Diehl, and Proserpio [8] used to measure image–text consistency in reviews, BLIP2 not only avoids the information loss caused by intermediate steps but can also handle abstract concepts, emotions, and implicit information in images and texts. It is therefore expected to offer advantages in both measurement accuracy and flexibility.
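The consistency measure described above reduces to an average of cosine similarities, which can be sketched as follows. The function names are hypothetical, and the short vectors stand in for the text and image embeddings that would come from the BLIP2 model.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def image_text_consistency(text_embedding, image_embeddings):
    """Mean cosine similarity between a review text and each of its images."""
    if not image_embeddings:
        raise ValueError("a multimodal review needs at least one image")
    similarities = [
        cosine_similarity(text_embedding, image) for image in image_embeddings
    ]
    return sum(similarities) / len(similarities)


# Toy example: one image aligned with the text, one orthogonal to it.
score = image_text_consistency([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
```

In the toy example the score is 0.5, the mean of a perfectly aligned image (similarity 1) and an unrelated one (similarity 0).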

Supplementary Information

The review valence is set as the score given by the reviewer to the product, where a score of 5 indicates a good review, scores of 3 and 2 indicate medium reviews, and a score of 1 indicates a bad review. Most reviewers gave a score of 5, while fewer gave lower scores (see Table 3 for the detailed distribution). The volume of interactions is set as the total number of comments and interactions from other users or merchants attached to the review. The reviewer reliability is set according to whether the reviewer is a JD Plus member: the value is 1 if the reviewer is a member and 0 otherwise.

Table 3.

Frequency distribution of user scores.

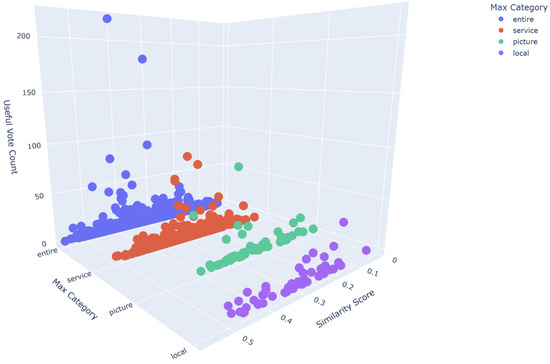

3.5.2. Outcome Variable

The number of likes a comment receives is used as the helpfulness indicator. For visualization, the image content variable is processed so that the product attribute appearing most frequently among the images of a single comment is defined as its maximum product attribute. Combined with the image–text consistency and comment helpfulness variables, the visualization result is shown in Figure 6. Overall, the relationship between the information at all levels of online reviews and review helpfulness appears complex and needs further exploration.

Figure 6.

Distribution of product attributes, text–image consistency, and review helpfulness.

3.6. Variable Calibration

Before the fuzzy set is converted into a truth table, the original data need to be calibrated. Based on the distribution characteristics of the variable data, a combination of direct and indirect calibration was used to convert the variables to fuzzy-set membership scores between 0 and 1. In the direct calibration process, considering the skewed distribution of the data, the 80th, 50th, and 20th percentiles of the sample data are used as the full membership point, crossover point, and full non-membership point, respectively. At the same time, to avoid the problem of unclear configuration attribution, and following the practice of scholars such as Du et al. [41], 0.001 is subtracted from any membership score that equals 0.5 after the initial calibration. The specific calibration results are shown in Table 4 and Table 5.
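The direct calibration step can be sketched as below. The sketch assumes the widely used logistic form of the direct method, in which the full membership and full non-membership anchors map to log-odds of +3 and -3 (memberships of about 0.95 and 0.05); the source does not state which transformation was applied, so this is an assumption, as are the function names and the inclusive quantile method.

```python
import math
import statistics


def quantile_anchors(values):
    """Return (full, crossover, non) anchors at the 80th/50th/20th percentiles."""
    cuts = statistics.quantiles(values, n=5, method="inclusive")
    return cuts[3], statistics.median(values), cuts[0]


def calibrate(x, full, crossover, non):
    """Direct calibration of a raw value into a fuzzy membership score.

    ASSUMPTION: logistic transformation with log-odds of +3 / -3 at the
    full membership / full non-membership anchors.
    """
    if not non < crossover < full:
        # Heavily skewed data can make two anchors coincide; such
        # variables need different anchors or indirect calibration.
        raise ValueError("anchors must satisfy non < crossover < full")
    if x >= crossover:
        log_odds = 3.0 * (x - crossover) / (full - crossover)
    else:
        log_odds = 3.0 * (x - crossover) / (crossover - non)
    log_odds = max(-50.0, min(50.0, log_odds))  # guard against overflow
    score = 1.0 / (1.0 + math.exp(-log_odds))
    if score == 0.5:
        score -= 0.001  # avoid the ambiguous 0.5 membership, as in the text
    return score


full, crossover, non = quantile_anchors(list(range(11)))
memberships = [calibrate(v, full, crossover, non) for v in range(11)]
```

With the toy data 0 to 10, the anchors are 8, 5, and 2, and the case sitting exactly on the crossover point receives 0.499 instead of 0.5.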

Table 4.

Calibration of outcome variables and conditional variables (direct calibration).

Table 5.

Calibration of conditional variables (indirect calibration).

4. Results

4.1. Single Variable Necessity Analysis

In set-theoretic analysis, before constructing a truth table for configuration analysis, it is necessary to evaluate whether a condition or a combination of conditions constitutes a necessary condition for the outcome. The necessary condition analysis (NCA) method was used for necessity testing. For continuous variables and categorical variables, the ceiling regression (CR) method and the ceiling envelopment (CE) method are employed for estimation, respectively. An antecedent variable is identified as a necessary condition for the outcome variable only when two criteria are met at the same time: the effect size is not at a low level, and the effect is statistically significant. The NCA package in R was used for the analysis, and the specific results are shown in Table 6. The effect size of every antecedent variable is 0, so none constitutes a necessary condition for the outcome variable, indicating that the perception of review helpfulness results from the joint influence of multiple factors.

Table 6.

Results of necessary condition analysis.

The necessary conditions function in fsQCA 3.0 was used to cross-check the NCA results, with the consistency threshold set to 0.9 [42]. The specific results are shown in Table 7. In fsQCA necessity analysis, consistency captures how closely the data approximate a relationship of necessity, while coverage indicates the relevance (or, conversely, the trivialness) of a necessary condition. The results show that the consistency of every single variable for high review helpfulness is lower than 0.9, indicating that no single antecedent variable constitutes a necessary condition, which is consistent with the NCA results.
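For reference, the two necessity measures can be computed from calibrated membership scores with the standard set-theoretic formulas: consistency is the sum of min(x, y) divided by the sum of y, and coverage is the same numerator divided by the sum of x. The sketch below uses hypothetical function names and made-up membership scores.

```python
def necessity_consistency(condition, outcome):
    """Degree to which the outcome is a subset of the condition."""
    overlap = sum(min(x, y) for x, y in zip(condition, outcome))
    return overlap / sum(outcome)


def necessity_coverage(condition, outcome):
    """Relevance of the condition as a necessary condition."""
    overlap = sum(min(x, y) for x, y in zip(condition, outcome))
    return overlap / sum(condition)


# Made-up fuzzy memberships for three reviews.
condition = [0.9, 0.6, 0.2]
outcome = [0.8, 0.7, 0.1]

consistency = necessity_consistency(condition, outcome)
coverage = necessity_coverage(condition, outcome)
is_necessary = consistency >= 0.9  # threshold used in the text
```

In this toy example the consistency is 0.9375, so the condition would pass the 0.9 threshold, whereas in the study no single variable did.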

Table 7.

Results of necessary condition testing for individual antecedent variables.

4.2. Configuration Analysis

In constructing the truth table, the minimum case frequency, raw consistency, and PRI consistency thresholds were set to 2, 0.80, and 0.60, respectively [43]. To bolster the credibility of the findings from this larger-sample QCA, a minimum case frequency threshold higher than the customary ‘1’ used in small-N studies was adopted. Following Ding’s [43] approach, the outcome of any logical condition combination that falls below these thresholds is manually coded as 0. Only the parsimonious solution is reported, for two main reasons. First, the QCA method still includes redundant elements in the generation of complex and intermediate solutions, which raises concerns about the interpretability of causal inferences and makes parsimonious solutions more reliable [44]. Second, this study yielded 12 parsimonious solutions, of which all but two have consistency scores greater than 0.8, thereby constituting sufficient conditions for the outcome.
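How the three thresholds act on a truth table can be sketched as below. This is a minimal illustration using the standard fuzzy-set formulas for raw consistency and PRI; the function name `truth_table`, the dictionary layout, and the condition labels are assumptions made for the example, and the logical minimization that fsQCA performs afterwards is not shown.

```python
from itertools import product

def truth_table(cases, conditions, outcome,
                min_freq=2, min_cons=0.80, min_pri=0.60):
    """Build truth-table rows from calibrated cases (a list of dicts)."""
    rows = {}
    for corner in product((1, 0), repeat=len(conditions)):
        n = 0
        sum_m = sum_my = sum_myn = 0.0
        for case in cases:
            # Membership in this combination: minimum over present/negated conditions.
            m = min(case[c] if bit else 1.0 - case[c]
                    for c, bit in zip(conditions, corner))
            y = case[outcome]
            n += m > 0.5                      # a case belongs to exactly one row
            sum_m += m
            sum_my += min(m, y)
            sum_myn += min(m, y, 1.0 - y)
        cons = sum_my / sum_m if sum_m else 0.0
        denom = sum_m - sum_myn
        pri = (sum_my - sum_myn) / denom if denom else 0.0
        # Rows that miss any threshold are coded 0 for the outcome.
        passed = n >= min_freq and cons >= min_cons and pri >= min_pri
        rows[corner] = {"n": n, "consistency": cons, "pri": pri,
                        "outcome": int(passed)}
    return rows
```

Each key of the returned dictionary is a tuple of 1s and 0s in the order of `conditions`, so `rows[(1, 0)]` describes the combination in which the first condition is present and the second is absent.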

The specific solution results are shown in Table 8. After removing solutions with consistency below the threshold, a total of 10 parsimonious solutions were obtained. The overall coverage is 0.536, indicating that the 10 parsimonious solution configurations can explain 53.6% of the cases with high review helpfulness. Following the derivation and categorization of the parsimonious solutions, the theoretical naming of these configurational paths was undertaken. Each label was formulated through a systematic interpretation of the combination of conditions within its respective solution group, grounded in the core mechanisms and essential attributes that these configurations exhibited in explaining high review helpfulness. Based on the occurrence of conditions, the configurational paths leading to high review helpfulness are categorized into five types: effective explanation type, unilateral negative type, insufficient integrated type, sufficient integrated type, and complementary type.

Table 8.

Analysis of configurations for high review helpfulness.

4.2.1. Effective Explanation Type

Reviews of the effective explanation type are characterized by images that show a usage effect attribute, with the number of interactions present as a condition. Configuration 1 additionally shows higher consistency between the image and the text, while configuration 2 shows that the review images contain more information. An example of this type is given in Table 9.

Table 9.

Examples of highly helpful reviews in effective explanation type.

When shopping on online platforms, consumers lack real-life product interaction and often use alternative methods to evaluate product value in pursuit of a realistic experience [45]. Showing the product usage effect in reviews can reduce consumers’ uncertainty and improve their perceived helpfulness to a certain extent. For example, in Table 9, the comments in configuration 1 show consumers’ dissatisfaction with the camera’s bad pixels, and the persuasiveness of the comments is enhanced by posting pictures of bad pixels. The comments in configuration 2 show the camera’s shooting effect, expressing consumers’ satisfaction with the product’s usage effect and requesting interaction with other consumers. Therefore, by clearly demonstrating product effects and functionality, this ‘Effective Explanation Type’ primarily helps consumers manage essential processing, as the core information about the product’s performance becomes more concrete and easier to mentally digest. According to the CTML theory, observation of product usage behavior will trigger consumers’ memory retrieval of similar behaviors and help them understand the overall content of the review.

4.2.2. Unilateral Negative Type

Unilateral negative information comments are characterized by negative textual sentiment, accompanied by either high textual information entropy or high image information entropy. Comments in configuration 3 exhibit higher image information entropy, while comments in configuration 4 exhibit higher textual information entropy. Specific examples of comments are provided in Table 10.

Table 10.

Examples of highly helpful reviews in unilateral negative type.

Negative reviews are comments in which consumers tend to express dissatisfaction with their shopping experience by conveying negative emotions, thereby warning other consumers. Existing research indicates that consumers are more likely to trust negative reviews compared to positive ones. This is because negative reviews often use text or images to provide detailed descriptions of product issues or defects. These specific issues are perceived by consumers as more objective evidence of a product’s performance, making it easier to associate such reviews with the product’s objective attributes and enhancing the perceived helpfulness of the review.

For example, in configuration 3, the review highlights dissatisfaction with the product’s shipping service, particularly the lack of attention to the packaging of valuable items. Additionally, the review is supplemented with eight images illustrating different product attributes, demonstrating high image information entropy. In configuration 4, the textual review identifies product shortcomings from multiple perspectives, such as packaging issues, quality problems, and customer service concerns. Although it includes only one review image, it reflects high textual information entropy. The ‘Unilateral Negative Type’ enhances perceived helpfulness by primarily helping consumers reduce irrelevant processing, as the direct and information-rich negative feedback (whether textual or visual) allows for a focused and efficient assessment of potential product shortcomings. Thus, negative reviews with high image or textual information entropy are likely to be more helpful.

4.2.3. Insufficient Integrated Type

The characteristics of insufficient integrated text–image reviews are that the review images focus on a single product attribute, while the integration level between text and image information is relatively low. In Configuration 5, the review images focus on the global attributes of the product, whereas in Configuration 6, the review images focus on the local attributes of the product. Specific examples of reviews are provided in Table 11.

Table 11.

Examples of highly helpful reviews in insufficient integrated type.

Text–image integration represents the alignment between image information and textual information. Previous studies suggest that lower levels of text–image integration reduce the cognitive processing fluency of readers, thereby lowering the perceived usefulness of reviews [8]. This is because when the pictorial model and the verbal model built by the reader process different concepts, people often experience confusion or a sense of conflict.

However, this study finds that reviews with lower integration levels can also result in high perceived usefulness. For example, in Configuration 5, the textual review describes various aspects such as price protection, free gifts, packaging, and fingerprints, but only the first image corresponds to the information mentioned in the review. In Configuration 6, the textual review describes defects in the camera lens and the rights protection process, but there is only one image which highlights a scratch on the lens.

In these cases, the volume of review images is relatively small, while the text mentions multiple product features or problem scenarios. As a result, the images can only reflect part of the content, making it difficult to maintain consistency with the lengthy text. The ‘Insufficient Integrated Type’, despite its lower integration, enhances review helpfulness by primarily aiding consumers to reduce irrelevant processing, as the focused, albeit limited, imagery can prevent information overload from disparate cues and guide attention to specific key points within the broader textual content. In this situation, the smaller number of images reduces the likelihood of irrelevant cognitive processing, allowing consumers to focus on the key feedback points emphasized by the reviewer and concentrate on the necessary cognitive processing.

On the other hand, the product attributes highlighted by the review images may align with the product information consumers expect to obtain, thereby enhancing the perceived usefulness of the review.

4.2.4. Sufficient Integrated Type

Sufficient integrated reviews are characterized by simultaneously high textual and image information entropy, along with high reviewer credibility and a significant number of interactions. In configuration 7, the review images focus on the additional attributes of the product. In configuration 8, the review demonstrates a high degree of text–image integration in addition to high textual and image information entropy. Specific examples of such reviews are provided in Table 12.

Table 12.

Examples of highly helpful reviews in sufficient integrated type.

Multimodal reviews can enhance consumers’ depth of processing product information through the diversity of information entropy in both text and images. When the information entropy in a review is high, consumers may expend more cognitive effort to process this information but, in return, gain a more comprehensive understanding and insight into the product. High information entropy in both images and text together creates a comprehensive and in-depth review, aiding consumers in making more effective decisions. This integration of information significantly enhances the perceived usefulness of the review. The ‘Sufficient Integrated Type’ leverages its rich, high-entropy content in both text and images, often coupled with high credibility and strong integration, to help users not only manage essential processing of this detailed information but also to actively promote generative processing, thereby facilitating a comprehensive and insightful understanding. For example, in configuration 7, the review text and images detail the buyer’s satisfaction with the product and service from multiple perspectives, including product packaging, the attitude of logistics personnel, and delivery speed. In configuration 8, the review text adopts a structured format, describing the positive shopping experience from angles such as product packaging, convenience, and other unique features. This structured approach improves the readability of the review, reduces irrelevant cognitive processing in the verbal module, and facilitates subsequent multimodal information integration. As a result, the review in configuration 8 provides diverse product information while reducing the cognitive effort required for processing, making it easier for readers to integrate the information.

Additionally, the platform membership badge of the reviewer enhances the credibility of the information source, improving the overall perceived usefulness during the integration stage where prior knowledge plays a role. Therefore, multimodal reviews with high information entropy in both text and images represent an ideal form of high-quality reviews.

4.2.5. Complementary Type

The characteristics of text–image complementary reviews lie in the conditional complementarity between textual information entropy and image information entropy. Specific examples of such reviews are provided in Table 13.

Table 13.

Examples of highly helpful reviews in complementary type.

A core principle of the CTML is that working memory capacity is limited, and information from different modalities can share the cognitive load [46]. When textual information entropy is low while image information entropy is high, images serve as the primary source of information delivery. Through the diversity and richness of visual information, they can quickly convey key messages and reduce the likelihood of irrelevant cognitive processing by readers. Conversely, when textual information entropy is high while image information entropy is low, detailed textual descriptions provide sufficient information, with images playing a supplementary or intuitive role without requiring complex or diverse visual content. In essence, the ‘Complementary Type’ optimizes cognitive load by primarily helping consumers reduce irrelevant processing, as one modality clearly carries the main informational weight while the other offers focused support without introducing unnecessary complexity or cognitive distraction.

For example, in configuration 9, the review text provides a detailed explanation of issues related to product packaging and invoice services, using only a single image for clarification. In configuration 10, the textual information in the review is relatively simple, but it is supplemented with eight images showcasing different product attributes. Thus, the complementary combination of textual and image information entropy helps optimize the way information is conveyed, thereby enhancing the overall perceived usefulness of the review.
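Image information entropy is described in this paper as reflecting the diversity of categories among a review's images. One plausible way to quantify that is Shannon entropy over the attribute categories assigned to the images; the sketch below takes that route as an assumption, since the exact formula used in the study is not given in this section. The function name `shannon_entropy` is hypothetical, and the category labels follow the four image attribute types named in the paper.

```python
import math
from collections import Counter

def shannon_entropy(labels):
    """Shannon entropy in bits of a list of category labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    # Sum of p * log2(1 / p) over the observed categories.
    return sum((n / total) * math.log2(total / n) for n in counts.values())

# Eight images that all show the same attribute carry no category diversity.
uniform_review = ["global"] * 8
# Eight images spread evenly over four attribute types reach the maximum of 2 bits.
diverse_review = ["global", "local", "additional", "usage effect"] * 2
```

Under this measure, a review with eight images contributes high image entropy only if those images cover different attribute categories, which matches the description of configurations 3 and 10.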

4.3. Robustness Check

Acknowledging the sensitivity and potential for arbitrariness inherent in the calibration of antecedent conditions and the outcome variable in fsQCA, a robustness check was performed. For this check, the PRI consistency threshold was raised to 0.7, with all other analytical parameters held constant. Table 14 displays the results. The parsimonious solution yielded five configurations that met the conditions outlined previously. Specifically, configurations 1, 2, 3, and 4 were identified as subsets of the configurations from the original analysis. Moreover, configuration 5, constituted by its antecedent conditions, showed no substantive changes. This provides a degree of confidence in the stability of the analytical findings.
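The subset relation used in this robustness check has a simple set-theoretic reading: a configuration that adds conditions to another one can only cover the same cases or fewer. The sketch below expresses that check; the function name `is_subset` is hypothetical, IV and EX are abbreviations used in this paper, and `TIE` is a made-up label introduced only for the example.

```python
def is_subset(config_a, config_b):
    """True if every case covered by config_a is also covered by config_b.

    A configuration is a dict mapping a condition name to True (present)
    or False (absent); conditions left out are "don't care".
    """
    # config_a must impose every requirement of config_b, in the same state.
    return all(config_a.get(cond) == state for cond, state in config_b.items())

original = {"IV": True, "EX": True}               # hypothetical original path
stricter = {"IV": True, "EX": True, "TIE": True}  # hypothetical robustness-check path

assert is_subset(stricter, original)      # adding a condition narrows the set
assert not is_subset(original, stricter)  # the reverse does not hold
```

For fuzzy sets the same logic holds because the minimum over a larger set of conditions can never exceed the minimum over a smaller one.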

Table 14.

Configuration analysis for adjusting PRI consistency.

To scrutinize the research findings from an alternative analytical perspective, a binary Logit regression model was introduced as a supplementary analysis. This approach aimed to estimate the impact of each antecedent condition on the likelihood of a review achieving high usefulness. If conditions identified as core or consistently important within the fsQCA configurations also demonstrated a statistically significant influence in the Logit model, this would provide corroborating evidence from a different methodological standpoint for the importance of these factors.

In constructing the Logit model, the outcome variable “Review Usefulness (RH)” was dichotomized. Specifically, when a review’s fuzzy-set membership score for RH exceeded 0.5, the dependent variable, “High Usefulness Review,” was coded as 1; otherwise, it was coded as 0. The independent variables were kept consistent with the antecedent conditions used in the fsQCA analysis, totaling 11 variables. The basic form of the model is as follows:
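In standard notation, a binary logit with 11 regressors can be written as below. The symbols are assumptions chosen for illustration rather than the authors' own notation: $Y_i$ is the dichotomized outcome for review $i$, $X_{ik}$ is the value of the $k$-th antecedent condition, $\beta_0$ is the intercept, and $\beta_k$ are the coefficients to be estimated.

```latex
\ln\!\left(\frac{P(Y_i = 1)}{1 - P(Y_i = 1)}\right)
  = \beta_0 + \sum_{k=1}^{11} \beta_k X_{ik}
```

A positive and significant $\beta_k$ means that higher values of condition $k$ are associated with higher odds of a review falling in the high-usefulness group.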

The analytical results of the Logit regression model are presented in Table 15. Both interaction volume (IV) and experience attributes (EX) demonstrate a significant positive impact on achieving high review usefulness in the Logit model. This finding aligns with their identification as core conditions in multiple high-usefulness configurations derived from the fsQCA. For instance, IV is present as a core condition in seven configurational paths, and EX appears as a core condition within the ‘Effective Explanation Type’ configuration. Consequently, the results of the Logit regression analysis, particularly in the identification of key factors, exhibit consistency and complementarity with certain findings from the fsQCA, thereby enhancing the robustness of this study’s conclusions.

Table 15.

Binary Logit regression estimation results.

5. Conclusions

5.1. Research Contributions

Leveraging the value of online reviews is crucial for the high-quality development of e-commerce. This study employed a multimodal pre-training model to process image content, identifying four types of product attributes conveyed by review images: global attributes, local attributes, additional attributes, and usage effect attributes. This study explored the intrinsic content of review images and the association of these product attributes with the perceived helpfulness of reviews. Specifically, findings suggest that consumer perceptions of helpfulness differ across various types of review images. Intrinsic attributes, such as global and local product attributes, appear to have lower verifiability for consumers. In contrast, extrinsic attributes, like usage effect attributes, which are more easily verifiable, tend to be associated with a higher degree of activation, thereby potentially enhancing perceived review helpfulness. Furthermore, drawing on the Cognitive Theory of Multimedia Learning (CTML), this research analyzed the integration relationship between review text and images. While previous studies have suggested that higher image–text consistency is conducive to information processing, the findings of this study indicate that even a relatively lower degree of image–text integration may be linked to enhanced perceived helpfulness, possibly by reducing extraneous cognitive processing. This observation aligns with the three demands of cognitive capacity within CTML, suggesting its utility in interpreting the helpfulness of multimodal reviews. These insights deepen the understanding of review image information and offer new perspectives for future multimodal online review research.

From a configurational perspective, this study expanded the dimensions of the relationship between review text and images, aiming to identify complex antecedent configurations associated with the perceived helpfulness of multimodal online reviews. Introducing CTML to construct the antecedent variables and research framework, the study identified five distinct configuration paths: effective explanation type, unilateral negative type, insufficient integrated type, sufficient integrated type, and complementary type. These configurations suggest that high review helpfulness often emerges from the combined interplay of multiple variables, potentially extending existing paradigms in online review helpfulness research. An analysis of specific configuration cases indicated that these five paths contribute to review helpfulness through differing mechanisms, likely influencing various stages of information processing (summarized in Table 16).

Table 16.

Path analysis of helpfulness contributions.

In addition, this research introduced text information entropy and image information entropy as variables from an information theory perspective. Text information entropy focuses on the diversity and depth of textual content, while image information entropy emphasizes the diversity of categories among multiple images, thereby addressing potential information omissions in studies focusing on single images. Findings suggest two manifestations of image–text information entropy, namely sufficient information and complementary information, which, under different cognitive load conditions, appear to be differentially associated with perceived helpfulness. This offers a novel angle for future investigations into the helpfulness of multimodal online reviews and broadens the application of information entropy in this domain. In conclusion, this study is primarily exploratory, designed to identify associations and configurational patterns between features of multimodal online reviews and their perceived helpfulness, as reflected in aggregate user responses (e.g., ‘helpful’ votes). The identified patterns of product attributes, image–text integration, and information entropy configurations suggest that certain combinations of features tend to receive more ‘helpful’ votes within a user population. CTML provides a useful lens for interpreting why some of these combinations might be generally perceived as more helpful, potentially because they facilitate more effective information processing and understanding in a general sense, even considering variations in individual cognitive processes.

5.2. Practical Implications

For platform operators, designing effective review recommendation systems requires translating an understanding of review images into concrete system features by leveraging the distinct helpful configurations of text–image interplay identified in this study. Specifically, they should develop algorithms to automatically categorize reviews based on these structural patterns—such as the ‘Effective Explanation Type’ providing clear demonstrations or the ‘Complementary Type’ balancing information across modalities—assessing image attributes and text–image integration to more accurately identify and prioritize genuinely helpful content for consumers. Recognizing reviews aligning with configurations like the ‘Sufficient Integrated Type’ for depth or ‘Unilateral Negative Type’ for clear issue reporting allows platforms to improve content surfacing. Furthermore, the review submission process can be enhanced with dynamic prompts that guide users toward constructing these more effective review formats. These system-level interventions directly contribute to a higher-quality shopping experience by reducing consumers’ cognitive load when searching for useful information, thereby enhancing overall platform competitiveness.

Meanwhile, platform merchants can also gain significant advantages by systematically analyzing product attributes and information patterns, as reflected in the identified helpful review configurations. Given consumer skepticism towards product claims that are difficult to verify from product descriptions alone [40], merchants should actively learn from customer reviews matching configurations like the ‘Effective Explanation Type’ or ‘Sufficient Integrated Type’, which effectively showcase such attributes. These insights can directly inform how merchants present product information in their own descriptions to build trust. More critically, by categorizing customer feedback based on these distinct review structures, merchants can derive targeted actions for product and service enhancement. For instance, ‘Unilateral Negative Type’ reviews can rapidly flag product defects or service issues for immediate attention. Such focused improvements, grounded in the structural insights of helpful reviews, are crucial for enhancing product design, service quality, and overall competitiveness.

5.3. Limitations and Future Research Directions

This study took the Cognitive Theory of Multimedia Learning (CTML) as its theoretical basis to explore the complex causal relationship between the antecedent variables of multimodal online reviews and review helpfulness. Although it has made some progress, several limitations remain. First, this study primarily focuses on search-oriented products, such as SLR cameras, and its findings may be generalizable to other high-value digital products (e.g., mobile phones and computers). However, the product attributes depicted in review images may vary across product types; for instance, review images for experience-oriented products might contain different types of information. Second, the image–text integration relationship in this research refers to the alignment of image and text features in a high-dimensional vector space, but the contribution of specific dimensions to that relationship lacks explainability; for example, it remains unclear how emotional alignment or contextual alignment affects integration. Third, the current research did not directly model individual heterogeneity in consumer preferences for specific product attributes or review styles. These issues need to be further explored in the future.

In the future, research can be extended to experience-based products to make the results more generalizable. The contribution of specific dimensions to the integration relationship, such as how emotional alignment or contextual alignment affects it, also needs further investigation. Relatedly, future research could examine image-conveyed sentiment and its congruence with textual sentiment. Directly measuring and integrating consumer heterogeneity also presents a valuable direction. For instance, experiments, consumer interviews, or focus groups could be employed to measure individual preferences and relate them to the perceived helpfulness of different types of reviews.

Author Contributions

Conceptualization, C.M. and C.Y.; methodology, C.M. and C.Y.; software, C.Y.; data curation, Y.Y.; writing—original draft preparation, C.Y.; writing—review and editing, Y.Y.; visualization, C.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 72104219, and the MOE Project of Humanities and Social Sciences, grant number 21YJC870013.

Institutional Review Board Statement

The study was approved by the Zhejiang Normal University Human Ethics Committee (approval code: ZSRT2024131) on 6 May 2024.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Xu, X. How do consumers in the sharing economy value sharing? Evidence from online reviews. Decis. Support Syst. 2020, 128, 113162.

- Paget, S. Local Consumer Review Survey 2024: Trends, Behaviors, and Platforms Explored. Available online: https://www.brightlocal.com/research/local-consumer-review-survey/ (accessed on 4 September 2024).

- Zhu, L.; Li, H.; Wang, F.-K.; He, W.; Tian, Z. How online reviews affect purchase intention: A new model based on the stimulus-organism-response (S-O-R) framework. Aslib. J. Inf. Manag. 2020, 72, 463–488.

- Salminen, J.; Kandpal, C.; Kamel, A.M.; Jung, S.-G.; Jansen, B.J. Creating and detecting fake reviews of online products. J. Retail. Consum. Serv. 2022, 64, 102771.

- Mofokeng, T.E. The impact of online shopping attributes on customer satisfaction and loyalty: Moderating effects of e-commerce experience. Cogent Bus. Manag. 2021, 8, 1968206.

- Kübler, R.V.; Lobschat, L.; Welke, L.; van der Meij, H. The effect of review images on review helpfulness: A contingency approach. J. Retail. 2024, 100, 5–23.

- Zhang, M.; Zhao, H.; Chen, H. How much is a picture worth? Online review picture background and its impact on purchase intention. J. Bus. Res. 2022, 139, 134–144.

- Ceylan, G.; Diehl, K.; Proserpio, D. Words Meet Photos: When and Why Photos Increase Review Helpfulness. J. Mark. Res. 2024, 61, 5–26.

- Yang, Y.; Wang, Y.; Zhao, J. Effect of user-generated image on review helpfulness: Perspectives from object detection. Electron. Commer. Res. Appl. 2023, 57, 101232.

- Mudambi, S.M.; Schuff, D. Research Note: What Makes a Helpful Online Review? A Study of Customer Reviews on Amazon.com. MIS Q. 2010, 34, 185–200.

- Li, L.; Goh, T.-T.; Jin, D. How textual quality of online reviews affect classification performance: A case of deep learning sentiment analysis. Neural Comput. Appl. 2020, 32, 4387–4415.

- Kar, A.K. What Affects Usage Satisfaction in Mobile Payments? Modelling User Generated Content to Develop the “Digital Service Usage Satisfaction Model”. Inf. Syst. Front. 2021, 23, 1341–1361.

- Mayzlin, D.; Dover, Y.; Chevalier, J. Promotional Reviews: An Empirical Investigation of Online Review Manipulation. Am. Econ. Rev. 2014, 104, 2421–2455.

- Jiang, W.; Wanwan, L. Identifying Reviews with More Positive Votes—Case Study of Amazon.cn. Data Anal. Knowl. Discov. 2017, 1, 16–27.

- Wang, Z.; Ji, Y.; Zhang, T.; Li, Y.; Wang, L.; Qu, S. Product competitiveness analysis from the perspective of customer perceived helpfulness: A novel method of information fusion research. Data Technol. Appl. 2023, 57, 437–464.

- Zhang, Y.; Lin, Z. Predicting the helpfulness of online product reviews: A multilingual approach. Electron. Commer. Res. Appl. 2018, 27, 1–10.

- Park, J. Combined Text-Mining/DEA method for measuring level of customer satisfaction from online reviews. Expert Syst. Appl. 2023, 232, 120767.

- Zhao, H.; Liu, Z.; Yao, X.; Yang, Q. A machine learning-based sentiment analysis of online product reviews with a novel term weighting and feature selection approach. Inf. Process. Manag. 2021, 58, 102656.

- Yang, S.; Zhou, C.; Chen, Y. Do topic consistency and linguistic style similarity affect online review helpfulness? An elaboration likelihood model perspective. Inf. Process. Manag. 2021, 58, 102521.

- Guo, R.; Li, H. Can the amount of information and information presentation reduce choice overload? An empirical study of online hotel booking. J. Travel Tour. Mark. 2022, 39, 87–108.

- Xiang, Z.; Du, Q.; Ma, Y.; Fan, W. A comparative analysis of major online review platforms: Implications for social media analytics in hospitality and tourism. Tour. Manag. 2017, 58, 51–65.

- Ren, G.; Hong, T. Examining the relationship between specific negative emotions and the perceived helpfulness of online reviews. Inf. Process. Manag. 2019, 56, 1425–1438.

- Gandhi, A.; Adhvaryu, K.; Poria, S.; Cambria, E.; Hussain, A. Multimodal sentiment analysis: A systematic review of history, datasets, multimodal fusion methods, applications, challenges and future directions. Inf. Fusion 2023, 91, 424–444.

- Li, Y.; Xie, Y. Is a Picture Worth a Thousand Words? An Empirical Study of Image Content and Social Media Engagement. J. Mark. Res. 2020, 57, 1–19.

- Ganguly, B.; Sengupta, P.; Biswas, B. What are the significant determinants of helpfulness of online review? An exploration across product-types. J. Retail. Consum. Serv. 2024, 78, 103748.

- Mayer, R.E. The Past, Present, and Future of the Cognitive Theory of Multimedia Learning. Educ. Psychol. Rev. 2024, 36, 8.

- Zhao, Z.; Guo, Y.; Liu, Y.; Xia, H.; Lan, H. The effect of image-text matching on consumer engagement in social media advertising. Soc. Behav. Personal. Int. J. 2022, 50, 1–11.

- Kumar, S.; Sahoo, S.; Lim, W.M.; Kraus, S.; Bamel, U. Fuzzy-set qualitative comparative analysis (fsQCA) in business and management research: A contemporary overview. Technol. Forecast. Soc. Change 2022, 178, 121599.

- Nikou, S.; Molinari, A.; Widen, G. The Interplay Between Literacy and Digital Technology: A Fuzzy-Set Qualitative Comparative Analysis Approach. 2020. Available online: https://informationr.net/ir/25-4/isic2020/isic2016.html (accessed on 6 September 2024).

- Elgin, D.J.; Erickson, E.; Crews, M.; Kahwati, L.C.; Kane, H.L. Applying qualitative comparative analysis in large-N studies: A scoping review of good practices before, during, and after the analytic moment. Qual. Quant. 2024, 58, 4241–4256. [Google Scholar] [CrossRef]

- Perdomo-Verdecia, V.; Garrido-Vega, P.; Sacristán-Díaz, M. An fsQCA analysis of service quality for hotel customer satisfaction. Int. J. Hosp. Manag. 2024, 122, 103793.

- Rassal, C.; Correia, A.; Serra, F. Understanding Online Reviews in All-Inclusive Hotels Servicescape: A Fuzzy Set Approach. J. Qual. Assur. Hosp. Tour. 2024, 25, 1607–1634.

- Basu, S. Information search in the internet markets: Experience versus search goods. Electron. Commer. Res. Appl. 2018, 30, 25–37.

- Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; Krause, A., Brunskill, E., Cho, K., Engelhardt, B., Sabato, S., Scarlett, J., Eds.; PMLR Proceedings of Machine Learning Research: Cambridge, MA, USA, 2023; Volume 202, pp. 19730–19742.

- Zhang, W.; Li, X.; Deng, Y.; Bing, L.; Lam, W. A Survey on Aspect-Based Sentiment Analysis: Tasks, Methods, and Challenges. IEEE Trans. Knowl. Data Eng. 2023, 35, 11019–11038.

- Fresneda, J.E.; Gefen, D. A semantic measure of online review helpfulness and the importance of message entropy. Decis. Support Syst. 2019, 125, 113117.

- Hong, S.; Pittman, M. eWOM anatomy of online product reviews: Interaction effects of review number, valence, and star ratings on perceived credibility. Int. J. Advert. 2020, 39, 892–920.

- Filieri, R.; Raguseo, E.; Vitari, C. Extremely Negative Ratings and Online Consumer Review Helpfulness: The Moderating Role of Product Quality Signals. J. Travel Res. 2021, 60, 699–717.

- Sun, S.; Wang, C.; Jia, L. Research on Helpfulness of E-commerce Platform Reviews Based on Multi-dimensional Text Features. J. Beijing Inst. Technol. (Soc. Sci. Ed.) 2023, 25, 176–188.

- Bambauer-Sachse, S.; Heinzle, P. Comparative advertising for goods versus services: Effects of different types of product attributes through consumer reactance and activation on consumer response. J. Retail. Consum. Serv. 2018, 44, 82–90.

- Du, Y.Z.; Liu, Q.C.; Chen, K.W.; Xiao, R.Q.; Li, S.S. Ecosystem of doing business, total factor productivity and multiple patterns of high-quality development of Chinese cities: A configurational research based on complex system view. J. Manag. World 2022, 38, 127–145.

- Schneider, M.R.; Schulze-Bentrop, C.; Paunescu, M. Mapping the institutional capital of high-tech firms: A fuzzy-set analysis of capitalist variety and export performance. J. Int. Bus. Stud. 2009, 41, 246–266.

- Ding, H. What kinds of countries have better innovation performance?–A country-level fsQCA and NCA study. J. Innov. Knowl. 2022, 7, 100215.

- Baumgartner, M.; Thiem, A. Often Trusted but Never (Properly) Tested: Evaluating Qualitative Comparative Analysis. Sociol. Methods Res. 2017, 49, 279–311.

- Yang, D.; Zhang, J.; Sun, Y.; Huang, Z. Showing usage behavior or not? The effect of virtual influencers’ product usage behavior on consumers. J. Retail. Consum. Serv. 2024, 79, 103859.

- Sepp, S.; Howard, S.J.; Tindall-Ford, S.; Agostinho, S.; Paas, F. Cognitive load theory and human movement: Towards an integrated model of working memory. Educ. Psychol. Rev. 2019, 31, 293–317.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).