1. Introduction

The periodic technical inspection (PTI) is a regular assessment of vehicles regarding traffic safety and environmental compliance. The PTI follows Directive 2014/45/EU [1] in the EU and is governed by the German Road Traffic Registration Ordinance (StVZO) and associated directives. The FSD Fahrzeugsystemdaten GmbH (FSD) [2] acts as the Central Agency for PTI commissioned by the Federal Republic of Germany, providing information and testing instructions. Only authorized inspectors [3] are permitted to conduct a PTI, during which the vehicle is examined thoroughly and non-destructively according to StVZO criteria. The results of the PTI depend on the identified malfunctions and their severity. If a vehicle has no malfunctions, it passes the PTI and receives a certificate and inspection sticker. Vehicles used for passenger transport must undergo re-inspection every two years [3].

With the increasing number of automated vehicles [4,5], the scope of PTI should also adapt [6,7,8,9,10]. A significant challenge is ensuring the correct functioning of environmental sensors essential for automated driving. This is crucial for safe traffic operations. Since 7 July 2024, EU Regulation 2019/2144 [11] has made installing ADAS and the necessary environmental sensors mandatory for first registrations.

The PTI, therefore, faces significant challenges [12]. New test methods are needed to test the environment sensors and the associated ADAS functions. The reliable functionality of these systems is essential, as drivers are often unable to react appropriately in the event of technical malfunctions [13].

In addition to the PTI, the vehicle continuously checks itself through the self-diagnosis provided by the manufacturer. However, related work has shown that vehicle self-diagnosis does not always work without errors [14,15].

There are already promising approaches to meet the need for new test methods for vehicle environment sensors and ADAS. In [16], camera detection, including the lighting system, is tested. The tests take place in a custom-built hall that allows reproducible variation of the light intensity, from complete darkness to full brightness. In addition, the horizontal distance of pairs of headlights to the test vehicle can be adjusted [16].

The work in [17] presents methods for testing camera systems depending on the stage of the development process. However, testing during vehicle operation is not considered. The options mentioned include simulations, hardware-in-the-loop (HiL) test benches, video interface boxes (VIB), and vehicle-in-the-loop (ViL) test benches [17].

FSD, together with DEKRA and other partners, has developed new test methods for automated driving functions and ADAS in the ErVast project [14]. The functionality of vehicle systems and environment sensors was tested in the project. A target-based approach was developed in which a dynamic target stimulates the environment sensors of a vehicle under test (VUT). It was determined whether the stimulation was correct. A framework developed in the project made it possible to connect the components and to control and evaluate test sequences. Overall, the project contributes to improving road safety through “Vision Zero”, a future without road fatalities [14].

The KÜS DRIVE (Dynamic Roadworthiness Inspection for VEhicles) [18,19] is a modern test bench for developing new test procedures for the PTI of automated vehicles. It includes various components such as radar target simulators and camera monitors to test the functionality and safety of the vehicles. A unique feature is the scenario-based efficiency test, which checks the reaction of the technical system to known stimuli without physical contact, supported by over-the-air technology. Vehicle-in-the-loop (ViL) simulation enables testing in a virtual environment. ADAS functions can also be tested. Despite its high cost and complexity, the test bench offers valuable opportunities for research and development of new test methods [19].

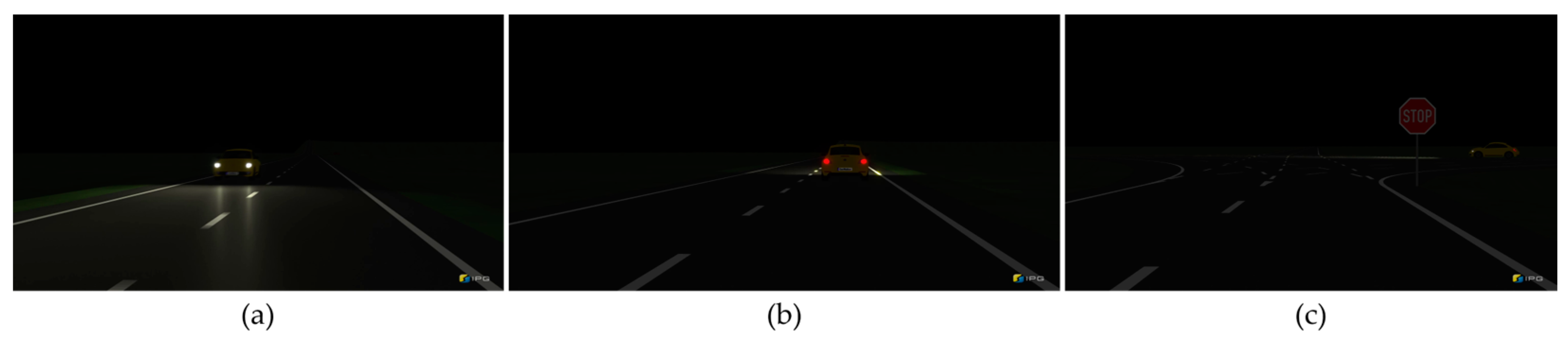

In our previous work [6], several concepts for testing a vehicle’s front camera were developed. One concept was selected as a cost-effective test method and implemented in a proof of concept. The results show that the test vehicle, a Tesla Model 3, reacts to simulation scenarios projected onto a screen in front of the vehicle, stimulating the vehicle’s front camera. With the high beam assistant activated, a complete vehicle reaction was detected by automatically switching off the high beam.

This work aims to improve the developed method and to demonstrate a proof of concept with new test series. Furthermore, the test method is to be validated with additional tests. The following research questions should, therefore, be answered with this work:

RQ1: Is it possible to stimulate a sensor for vehicle environment perception with a virtual driving environment to trigger a complete vehicle reaction?

RQ2: How realistic must the stimulation of the sensor for vehicle environment perception be to trigger a complete vehicle reaction?

4. Discussion

The results of test series 1 on the Tesla Model 3 can be compared directly with the results of the proof of concept on the Tesla Model 3 from [6] and with validation 3 on the VW ID.3. The simulation scenarios were played at constant brightness in all three test series. The stimulation was carried out via a projector in [6], via a tablet on the Tesla Model 3 in test series 1 of this work, and via a tablet on the VW ID.3 in validation 3. The stimulation with the projector worked reproducibly in all three simulation scenarios: with one exception, vehicle reactions could always be triggered. Here, the type of light source in the simulation (headlights or taillights) does not appear to influence the result. In contrast, when the Tesla Model 3 was stimulated via a tablet, only the simulation scenario with the leading vehicle triggered a vehicle reaction without exception. With the oncoming vehicle and the vehicle from the side, no vehicle reaction could be triggered, with one exception. It appears that the Tesla is particularly sensitive to the red taillights of a leading vehicle when stimulated by a tablet. Surprisingly, there is no vehicle reaction for the vehicle from the side with only one taillight. This could be because the size of the individual taillight remains the same throughout the simulation, whereas the taillights of the leading vehicle grow as the VUT approaches it. The VW ID.3, on the other hand, behaves differently. Clear results were obtained despite a high number of test trials. The larger headlights of the oncoming vehicle are presumably particularly effective here, as a vehicle reaction could always be triggered.

RQ1 can be answered with the discussed results: Yes, stimulating a sensor for vehicle environment perception with a virtual driving environment is sufficient to trigger a complete vehicle reaction. Depending on the VUT used, the simulation scenarios can be more or less effective in triggering a vehicle reaction.
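The comparison above amounts to counting, for each combination of vehicle, stimulation device, and simulation scenario, how often a vehicle reaction was triggered. A minimal sketch of such a tally is given below. The `Trial` record, its field names, and the function `trigger_rates` are hypothetical and not part of the test setup described here, and the example records are placeholders rather than measured results.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    """One test trial (hypothetical record layout, for illustration only)."""
    vehicle: str    # e.g. "Tesla Model 3"
    stimulus: str   # e.g. "projector" or "tablet"
    scenario: str   # e.g. "leading", "oncoming", "side"
    reaction: bool  # True if the high beam was switched off automatically


def trigger_rates(trials):
    """Return {(vehicle, stimulus, scenario): fraction of trials with a reaction}."""
    counts = defaultdict(lambda: [0, 0])  # key -> [reactions, total trials]
    for t in trials:
        entry = counts[(t.vehicle, t.stimulus, t.scenario)]
        entry[0] += int(t.reaction)
        entry[1] += 1
    return {key: hits / total for key, (hits, total) in counts.items()}


# Placeholder records; these are NOT the results reported in this work.
example = [
    Trial("vehicle A", "tablet", "leading", True),
    Trial("vehicle A", "tablet", "leading", True),
    Trial("vehicle A", "tablet", "oncoming", False),
    Trial("vehicle A", "tablet", "oncoming", True),
]
rates = trigger_rates(example)
```

A rate of 1.0 corresponds to "always triggered", and a rate close to 0 to "not triggered, with one exception".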

In both test series 2 and validation 1 with the color images, the results for the Samsung A8 tablet and the Google Pixel C tablet barely differ. There is only a slight trend that the Google Pixel C tablet can trigger a vehicle reaction even at lower brightness levels. This is consistent with the light intensity measurements taken in validation 1. The light intensity of the Google Pixel C tablet tends to be slightly higher than that of the Samsung A8 tablet for all colors. In particular, for the white color image, the light intensity is more than twice as high at the first brightness level and almost twice as high at the fifth brightness level as on the Samsung A8 tablet.

In test series 1, two scenarios (an oncoming vehicle and a vehicle from the side) did not trigger a vehicle reaction. The Samsung Tab A (2016) tablet was used in these tests. In test series 2, other tablets were used, namely the Samsung A8 tablet and the Google Pixel C tablet. Here, a vehicle reaction could be triggered with each simulation scenario, and not even the maximum brightness of the tablet was necessary. The Samsung Tab A (2016) may have a lower brightness than the other two tablets. Unfortunately, the light intensity was not measured for the Samsung Tab A (2016), so this remains speculation.
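The comparison of tablets by brightness level can be made explicit by determining, for each tablet, the lowest brightness level at which every trial triggered a vehicle reaction. The following sketch assumes a hypothetical data layout (a mapping from brightness level to a list of trial outcomes). The function name and the example values are illustrative placeholders, not the measurements from test series 2 or validation 1.

```python
def lowest_triggering_level(results):
    """Return the lowest brightness level at which all trials triggered a reaction.

    `results` maps a brightness level (int) to a list of booleans, one per
    trial (assumed layout). Returns None if no level triggered reliably.
    """
    for level in sorted(results):
        outcomes = results[level]
        if outcomes and all(outcomes):
            return level
    return None


# Placeholder outcomes per tablet and brightness level; NOT measured data.
example = {
    "tablet A": {1: [False, False], 2: [True, False], 3: [True, True], 4: [True, True]},
    "tablet B": {1: [False, True], 2: [True, True], 3: [True, True]},
}
thresholds = {
    tablet: lowest_triggering_level(levels) for tablet, levels in example.items()
}
```

The sketch does not assume that the reaction is monotonic in brightness. It only reports the first level at which all trials succeeded, so results at higher levels should still be checked separately.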

RQ2 can be answered with validation 1: The stimulation of a sensor for vehicle environment perception does not have to be realistic to trigger a complete vehicle reaction, at least in the case of the vehicle’s front camera. Simple color images are sufficient to trigger the reaction. Traffic scenarios for the simulation are not required to trigger a vehicle reaction using this method.

It is also interesting to note that the VW T-Cross recognized the test setup as a fault immediately after the ignition was switched on and, reasonably, deactivated the high beam assistant. In the VW ID.3, another model from the same manufacturer, and in the Tesla Model 3, the self-diagnosis shows no error. Accordingly, no assistance functions are restricted, which is questionable given that the test setup limits the camera’s field of view.

However, even after the tests, it remains unclear whether the VUT deactivates the high beam due to object recognition, i.e., actually recognizes the simulated vehicle as such, or only reacts to brightness. Adjusting the display brightness required opening the tablet’s operating system overlay in front of the front camera, which by itself changed the brightness. During the tests, the high beam was occasionally already deactivated when the operating system overlay was opened. At least for the Tesla Model 3, the CAN data confirms the assumption that the vehicle reaction is based solely on a change in the ambient brightness: in all samples from validation 2, the vehicle reaction is attributed to ambient light. A reaction based on a recognized object would have been expected to produce a CAN message with “HIGH BEAM OFF REASON MOVING VISION TARGET” or “HIGH BEAM OFF REASON HEADLIGHT”.
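The evaluation of the CAN data can be illustrated by sorting the decoded deactivation reasons into object-based reasons and all other reasons. The sketch below assumes that the reason has already been decoded from the CAN log into a plain string per sample; the decoding step is not shown. The two object-based reason strings are the ones named above, while the ambient light string, the function name, and the example samples are hypothetical placeholders.

```python
from collections import Counter

# Reasons that would indicate a reaction based on a recognized object
# (strings as named in the text above).
OBJECT_REASONS = {
    "HIGH BEAM OFF REASON MOVING VISION TARGET",
    "HIGH BEAM OFF REASON HEADLIGHT",
}


def summarize_reasons(samples):
    """Count decoded high-beam-off reasons and split them into two groups.

    `samples` is a list of reason strings, one per recorded vehicle reaction
    (assumed to be decoded from the CAN log beforehand).
    """
    counts = Counter(samples)
    object_based = sum(counts[reason] for reason in OBJECT_REASONS)
    return {
        "object_based": object_based,
        "other": len(samples) - object_based,
        "by_reason": dict(counts),
    }


# Placeholder samples; the ambient light string is a hypothetical name.
example = ["HIGH BEAM OFF REASON AMBIENT LIGHT"] * 3
summary = summarize_reasons(example)
```

If `object_based` is zero across all samples, as in the placeholder example, none of the recorded reactions can be attributed to a recognized vehicle.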

The Tesla Model 3 also allows the image from the front camera to be shown on the proprietary display in the vehicle interior. With the test setup in place, this view shows that the tablets were visibly placed in front of the front camera, but the tablet display appears blurred. The front camera is most likely not designed to focus at such a close distance, making it even more questionable whether the vehicle reaction is based on a recognized vehicle. Moreover, manually positioning the tablet by eye is difficult to reproduce accurately. While Tesla’s proprietary display with the front camera image allows for almost borderless positioning, this feature is not available in every vehicle, and using it is time-consuming.

For an independent third party with no inside knowledge of the vehicle, data processing in the vehicle can only be regarded as a black box. This is seen as the biggest problem for the test method used. For reliable tests, the tester should know what the vehicle perceives of its environment and how it interprets this. Notably, the Tesla Model 3 is equipped with several additional cameras (such as those on the side mirrors, B-pillars, and rear) that are not stimulated using this method. Since the vehicle is treated as a black box, how perceived environmental data is processed remains unknown. Sensor data fusion seems possible, in which case the front camera detection (simulation scenario) might contradict the detections from other environmental sensors (real stationary environment). This discrepancy could lead to error messages, depending on how the data is processed. The issue already arises with stereo cameras, which the method does not account for: stereo cameras that detect the tablet screen cannot derive depth information from it, which can cause problems in data processing and vehicle response. Consequently, this method cannot determine whether the high beam is deactivated at the correct time, too early, or too late. Nor can it verify whether the dynamic high beam assist dims the correct segment. If an additional sensor is unintentionally stimulated, for instance, by an interfering vehicle or a person at the test site, the test must be repeated as a precaution, because additional traffic participants are very likely to be captured by other sensors and thus directly influence the data processing. This precautionary measure is time-consuming. An interface that provides a live data feed from all vehicle environment sensors would address this problem. In addition, a readout of the vehicle’s intention or calculated behavior based on the recorded data would be desirable. Elaborating this interface and defining its scope would be helpful here.

Furthermore, the method’s validation is limited to the three vehicles used in this work. The method could be successfully validated for the Tesla Model 3 and VW ID.3; this was impossible with the VW T-Cross. Although the three test vehicles used are sufficient for this proof of concept, further research must show the method’s suitability for other vehicles and whether generally valid statements can be derived from it.

Currently, this method is unsuitable for PTI, as it cannot fully determine the vehicle reaction’s basis. In addition, the time required, especially with actual test drives, is too long for the PTI framework. An interface for activating assistance systems at a standstill without having to fulfill the usual conditions would simplify testing significantly.

However, it should always be considered that testing on its own does not guarantee error-free performance [40]. The primary goal should be to make automated vehicles safer and to ensure the functionality of environmental detection. To this end, alternative approaches should be pursued in addition to the test method described in this work. These include, for example, improving the vehicle’s own diagnostics or concretely developing standardized test methods.

5. Conclusions

In this work, we continued developing the test method from our earlier work [6] for testing a vehicle’s front camera. The aim was to stimulate the front camera of a complete vehicle by using simulation. The vehicle reaction was detected via the high beam assist: the test was passed if the high beam was automatically deactivated during the simulation.

As part of this work, the original test method was enhanced: covering the new test setup reduced the amount of ambient light and interfering factors to a minimum. In addition, the stimulation is now performed using a tablet in front of the front camera instead of a projector with a screen.

Two test series were carried out. Stimulating the front camera with simulation scenarios triggered a vehicle reaction, namely the automatic deactivation of the high beam. From this, RQ1 can be answered: The stimulation of a sensor for vehicle environment perception with a virtual driving environment is sufficient to trigger a complete vehicle reaction.

Three independent approaches were chosen to validate this test method. The first validation showed that abstract stimulation, for example, by a color image, is already sufficient to trigger a vehicle reaction. RQ2 can, therefore, be answered: The stimulation of a sensor for vehicle environment perception does not have to be realistic to trigger a complete vehicle reaction.

The second validation analyzed the CAN bus signals of the Tesla Model 3 regarding the high beam assist. As a result, it was observed that the automatic deactivation of the high beam is due to ambient light. Possible other signals, such as moving target or headlight, were not recorded. This leads to the assumption that the vehicle reaction is due to a change in brightness and not based on actual recognized objects or road users.

In a third validation phase, the method was applied to the Volkswagen ID.3. This changed both the vehicle type and the high beam assist technology, as the ID.3 comes with a dynamic light assist. During the tests, a change in the high beam cone segment was identified. Overall, the method was applied successfully to the Volkswagen ID.3. However, its application to a Volkswagen T-Cross could not be examined, as the vehicle’s self-diagnosis reported an error due to the test setup.

In general, the testing method has several limitations, which make it impractical to integrate into the current state of periodic technical inspections (PTI). The most significant problem with the method is that the data processing in the vehicle must be treated as a black box for independent third parties. It is often impossible to understand what environmental data the vehicle collects and how it is processed. It, therefore, remains mostly unclear why the vehicle reacted in our test trials. Although a reproducible reaction could be triggered with the test method, it remains theoretically conceivable that further unintentional stimulation, for example, of the other environmental sensors, could have triggered a vehicle reaction. Besides, the method is also too time-consuming.

In summary, new test methods are required for independent functional testing of vehicle environment sensors and ADAS functions to ensure their functionality throughout vehicle operation. Vehicle manufacturers will eventually have to provide more information about the vehicles so that appropriate test methods can be used.