1. Introduction

Telemedicine is broadly defined as the use of information and communications technologies to provide and support health care between participants at a distance [1]. Telemedicine encompasses a wide range of technologies, from simple voice communication to teleoperated surgical robots. Nonetheless, most systems are based on video-mediated communication, through which both the social interaction between the two parties and the acquisition of information take place. Humanoid robots and virtual reality (VR) technologies have been employed to improve the social interaction aspects of telemedicine, and various cameras and image processing techniques have been applied to acquire visual information.

One widely used camera type is the pan-tilt-zoom (PTZ) camera [2]. PTZ refers to the camera's ability to move side to side (panning) and up and down (tilting), and to enlarge or shrink the captured scene (zooming). This ability allows a remote physician to orient the camera according to the context of the communication with in situ medical practitioners, and to zoom in when a close-up view is needed, e.g., to inspect wounds or read medical instruments. However, PTZ cameras can be difficult to control. Chapman et al. [3] designed an ambulance-based telestroke platform with a PTZ camera and assessed its usability; in their evaluation, one physician commented on how difficult it was to maneuver the PTZ camera.

Another camera type popular in telemedicine is the omnidirectional camera. Unlike PTZ cameras, omnidirectional cameras capture a hemispherical or fully spherical visual field, so the view direction does not need to be maneuvered mechanically. Laetitia et al. [4] used an omnidirectional camera in a pre-hospital telemedicine scenario. The camera allowed a remote physician to see the entire scene inside an ambulance, including the patient and first responders. The camera also supported digital zooming, an image processing technique that enlarges certain areas of the image. Although an omnidirectional camera can capture the entire scene, it does not provide better resolution than a PTZ camera. Digital zooming only enlarges the pixels of an image region (possibly with additional image processing) without changing the angular resolution of the image, which remains much lower than that of a PTZ camera capable of optical zooming.
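The resolution argument can be made concrete with a small calculation. The sketch below assumes an equirectangular frame whose width maps linearly onto 360° and models digital zoom as crop-and-upscale; the function names and the 3840-pixel frame width in the usage note are hypothetical, not the camera used in this work.

```python
from typing import Tuple


def pixels_per_degree(pixels: float, fov_deg: float) -> float:
    """Average angular resolution (pixels per degree) across a field of view."""
    return pixels / fov_deg


def digital_zoom(width_px: int, fov_deg: float, factor: float) -> Tuple[float, float]:
    """Crop-and-upscale digital zoom on an equirectangular frame.

    Returns (cropped FOV in degrees, source pixels per degree inside the crop).
    Cropping keeps width_px / factor source pixels spread over fov_deg / factor;
    upscaling the crop back to width_px only interpolates between those samples,
    so the angular resolution of real scene detail does not change.
    """
    cropped_fov = fov_deg / factor
    source_px = width_px / factor
    return cropped_fov, pixels_per_degree(source_px, cropped_fov)


if __name__ == "__main__":
    print(pixels_per_degree(3840, 360.0))   # hypothetical panorama width
    print(digital_zoom(3840, 360.0, 4.0))   # 4x digital zoom: same px/deg
    print(pixels_per_degree(1920, 82.15))   # PTZ frame at 1x (HFOV 82.15 deg)
```

A hypothetical 3840-pixel-wide panorama yields about 10.7 px/° both before and after a 4× digital zoom, whereas a 1920-pixel-wide PTZ frame with the 82.15° HFOV reported in Section 3.1 already yields about 23.4 px/° before any optical zoom.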

The problem of increasing the field of view (FOV) while providing high angular resolution around the region of interest (ROI) has been the primary issue of foveated imaging research. The field takes advantage of foveation in the human visual system (HVS), which is caused by the non-uniform distribution of the photoreceptor cells (rods and cones) that line the retina. Cones are less sensitive to light than rods, but they provide color perception and resolve finer details. Thus, the visual acuity, i.e., the spatial resolution, of the human eye is highest in the region with a high concentration of cones. Cone density is highest at the fovea and drops off sharply with distance from it. Consequently, visual acuity is highest within 2° of the fovea and falls off very sharply beyond that [5].

Two approaches exist for capturing a scene for foveated imaging [6]. First, specialized imaging sensors have been designed and applied to capture the scene at different resolutions. Examples are non-uniform sampling sensors [7], foveated optical distortion of the lens [8], and multiple-channel segmented single sensors [9]. Second, two or more cameras can be used and their images post-processed to generate a unified foveated image [9,10]. Although custom imaging sensors have also been proposed for this second approach [9,11], it can exploit off-the-shelf cameras as well [10]. We follow the latter approach, in agreement with the argument of Carles et al. [6] that low-cost, high-performance computing has made post-processing an appealing alternative to the complexity of custom sensor and optics design.

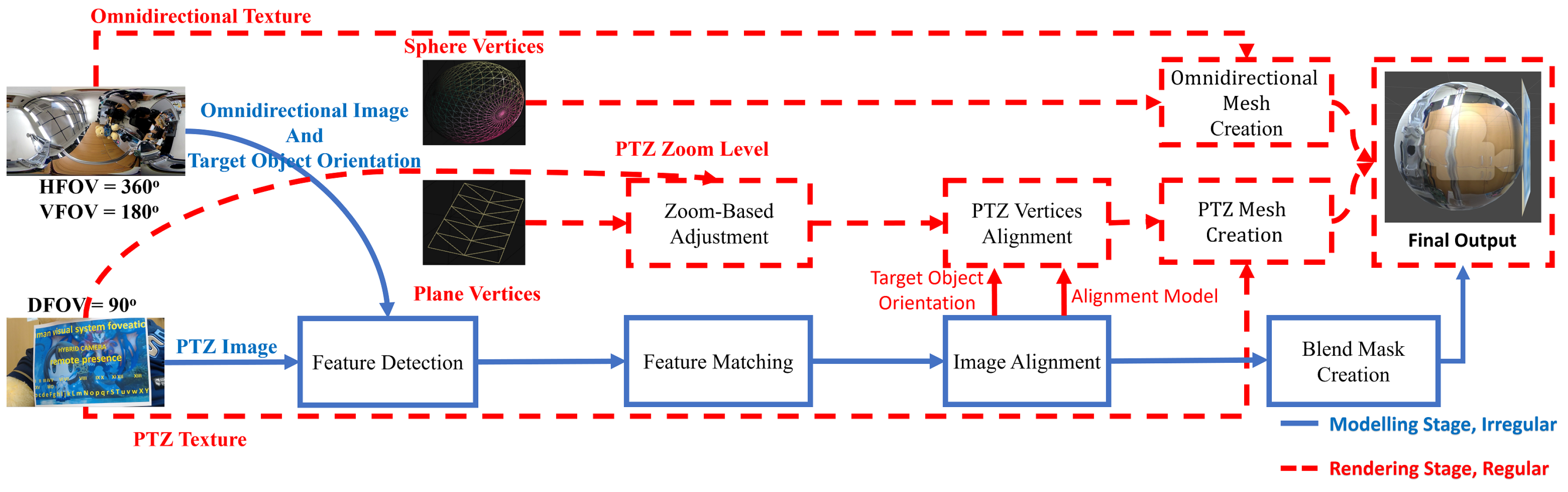

In this paper, we present a foveated imaging pipeline that integrates a wide-FOV image from an omnidirectional camera with a high-resolution ROI image from a PTZ camera. Unlike most foveated imaging research for wide FOV, we specifically designed our pipeline to support VR head-mounted displays (HMDs), thereby maximizing the benefits of the omnidirectional camera, i.e., head-synchronized view control and the consequent increase in spatial awareness [12]. To do so, we separated our pipeline into two parallel stages: modeling and rendering. Instead of integrating the two images at the pixel level, we adjust the positions of 3D geometric primitives in the modeling stage and render the camera images onto the corresponding primitives in the rendering stage. The positions of the primitives are updated only when the ROI (i.e., the PTZ orientation) changes, whereas the rendering stage continuously updates the frames from each camera, independently of the modeling stage. Thus, the computational load of the modeling stage does not affect the frame rate of the foveated view. Moreover, we apply a zoom-based adjustment and a blending mask for the seamless integration of the two images.
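The decoupling of the two stages can be illustrated with a small threading sketch. It is a hypothetical structure, not the implementation used in this work: `fit_alignment` stands in for the slow feature-based modeling step, and the returned dictionary stands in for the draw calls that texture the sphere with the omnidirectional frame and the foveal quad with the PTZ frame.

```python
import threading
from typing import Any, Callable, Dict, Optional


class FoveatedPipeline:
    """Hypothetical sketch of decoupled modeling and rendering stages."""

    def __init__(self, fit_alignment: Callable[[Any], Dict[str, Any]]):
        self._fit = fit_alignment                 # slow modeling step (placeholder)
        self._lock = threading.Lock()
        self._alignment: Optional[Dict[str, Any]] = None

    def on_roi_changed(self, roi: Any) -> threading.Thread:
        """Modeling stage: refit in the background, only when the ROI changes."""
        def job() -> None:
            result = self._fit(roi)               # may take seconds
            with self._lock:                      # publish the new primitive layout
                self._alignment = result
        worker = threading.Thread(target=job, daemon=True)
        worker.start()
        return worker

    def render_frame(self, omni_frame: Any, ptz_frame: Any) -> Dict[str, Any]:
        """Rendering stage: read the latest alignment without waiting for a refit."""
        with self._lock:
            alignment = self._alignment
        return {
            "sphere": omni_frame,                 # 360-degree background texture
            "ptz_quad": ptz_frame if alignment is not None else None,
            "alignment": alignment,
        }
```

The lock is held only while a reference is swapped or read, so a multi-second refit never stalls a frame; until the first alignment finishes, only the background sphere is drawn. A fuller version would also discard results from superseded ROI requests that finish late.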

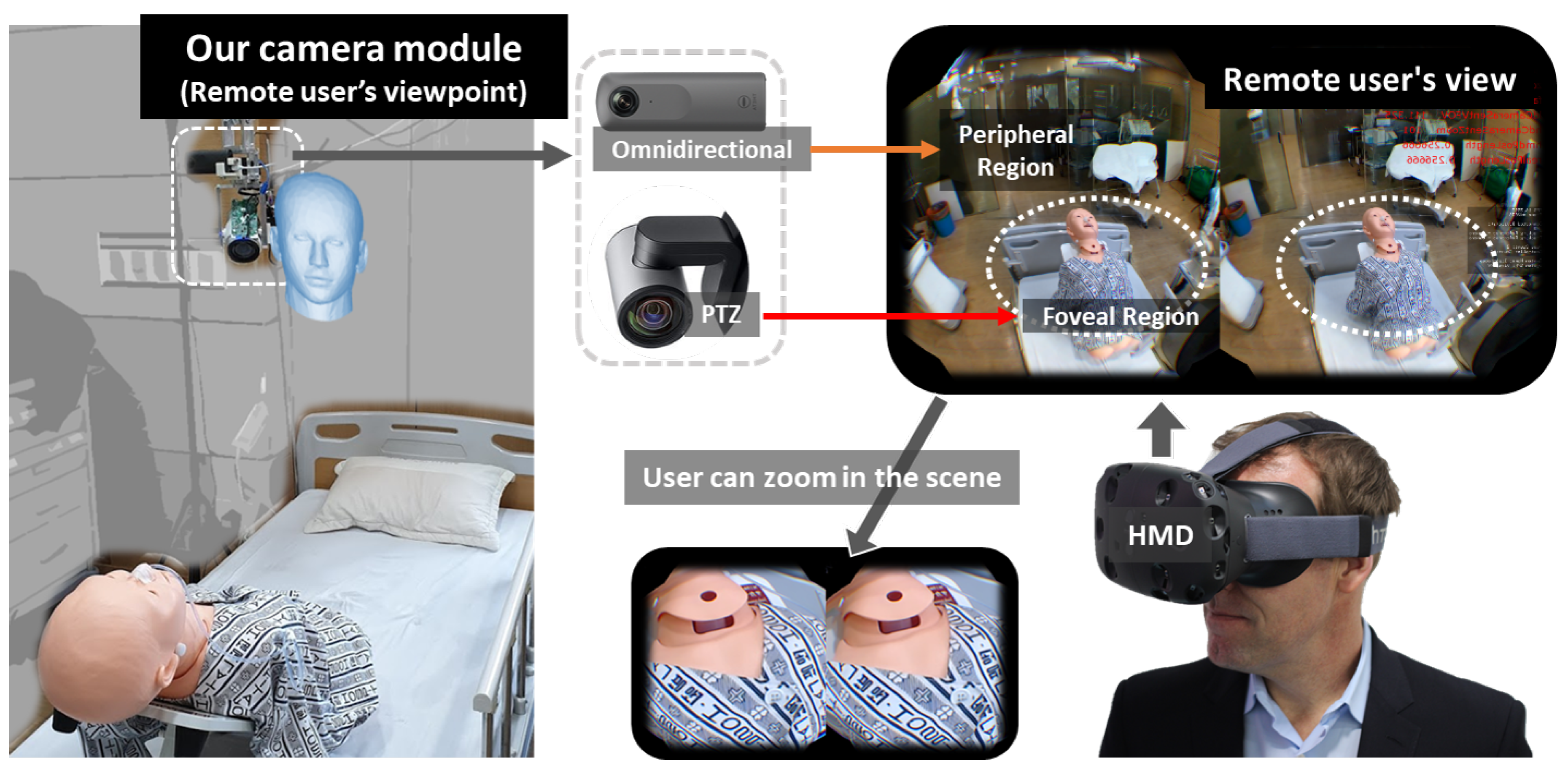

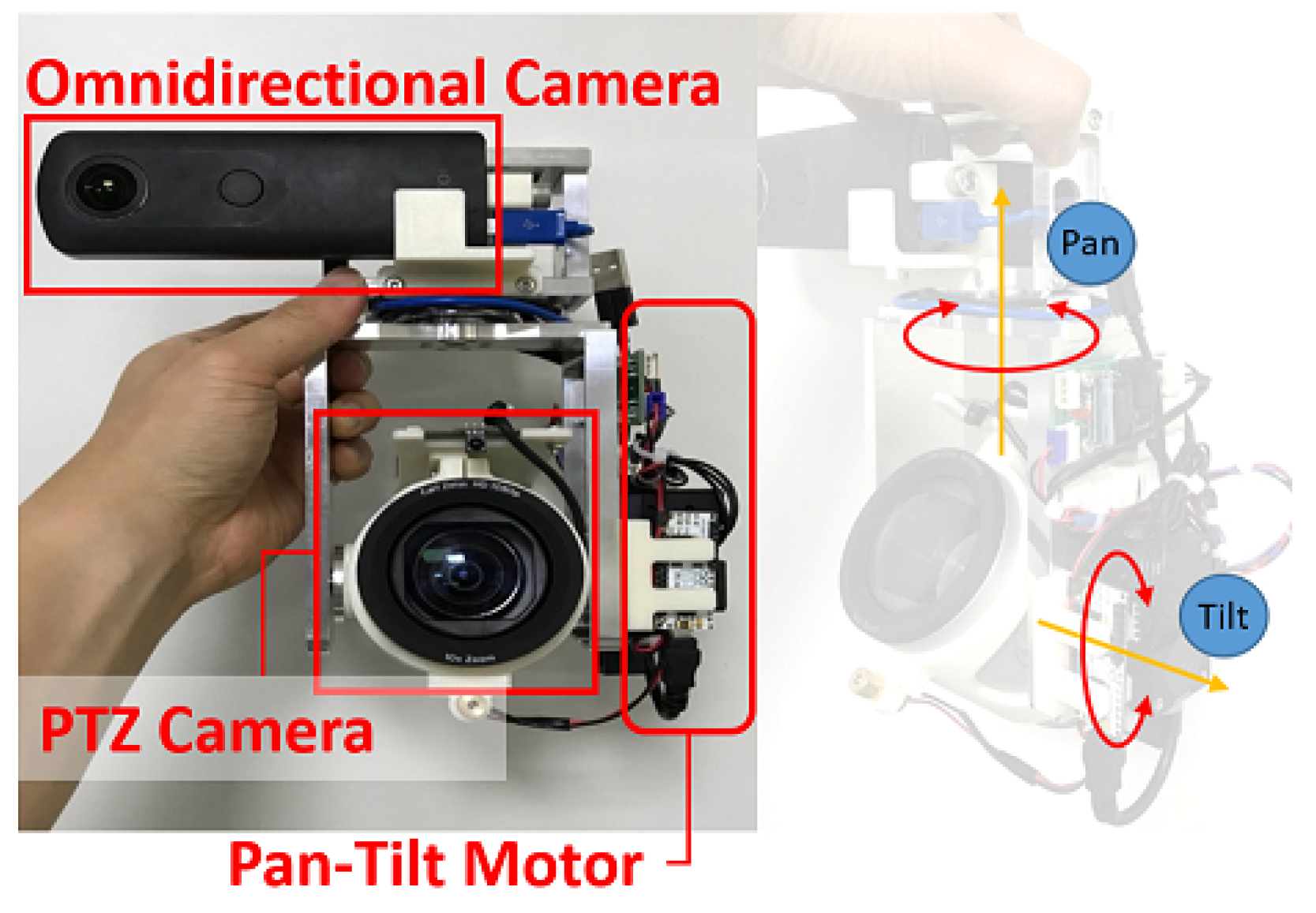

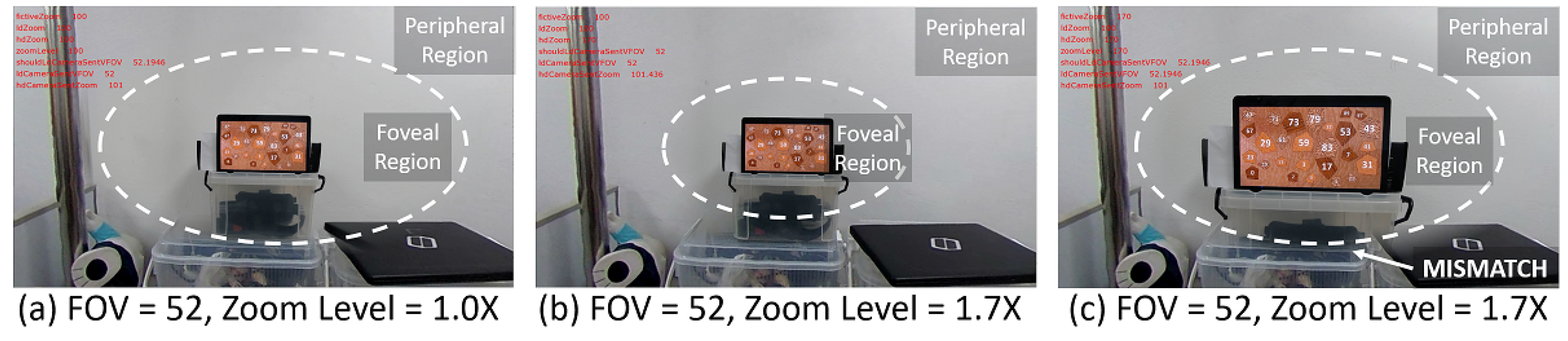

The telemedicine system we develop with our foveated imaging pipeline is shown in

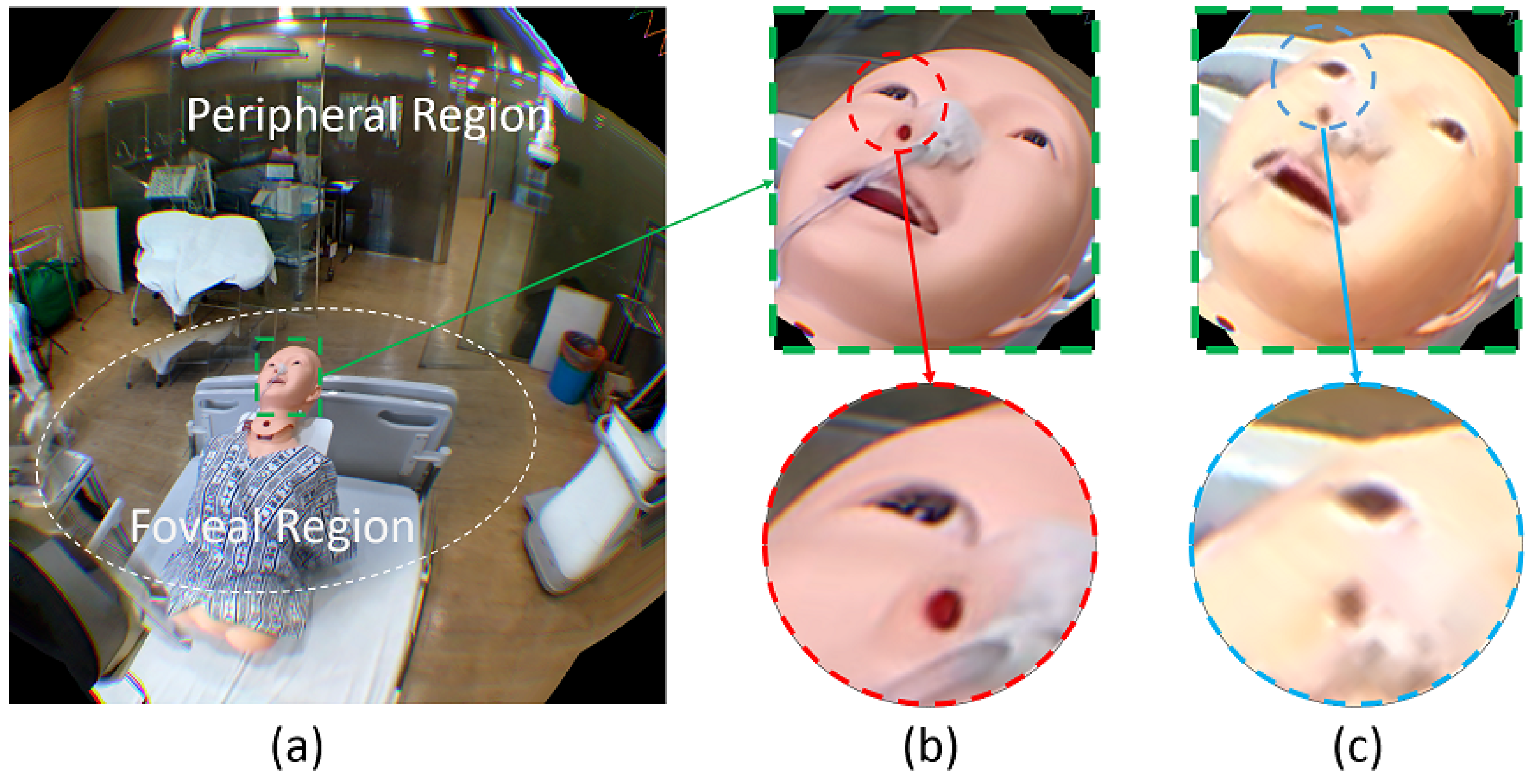

Figure 1. Our camera module consists of an omnidirectional and PTZ camera pair, and a remote physician sees the scene from the position of the camera module through a VR HMD. The foveal region (ROI) contains the target object (the patient). The foveal region is rendered in high angular resolution and smoothly superimposed onto the peripheral region, i.e., 360

background. The remote physician can zoom in the scene to inspect the wound area in detail.

Our contributions are summarized as follows. First, we propose a telemedicine system consisting of an omnidirectional camera and a high-resolution PTZ camera that supports 360° video streaming to VR HMDs with high angular resolution in the foveal region. Moreover, our system allows the remote user to zoom in on the foveal region in high detail. Second, we present a novel foveated imaging pipeline for VR HMDs with parallel modeling and rendering stages, together with additional techniques, such as zoom-based adjustment and masking, that improve the quality of the foveated image.

The rest of the paper is organized as follows. In Section 2, we review the literature on foveated imaging systems. Section 3 details our hybrid camera module and the proposed pipeline that enables real-time rendering of the foveated view on HMDs. In Section 4, we evaluate the proposed system in terms of the angular resolution of the foveal region, the overall processing time, and the achieved frame rate, and we discuss points for improvement based on the results of these evaluations. Finally, we summarize and conclude the paper in Section 5.

2. Related Works

Foveated imaging research addresses not only how to design the cameras but also how to use or view the foveated image itself. One of the early uses of such foveated camera systems was object tracking, whether with stationary setups [13], on a robot [10], or in mobile agent-based surveillance [14]. Typically, an omnidirectional image is processed with a fast algorithm to extract the ROI. The centroid of the ROI, combined with the extrinsic camera parameters, is then converted into the pan and tilt angles of the PTZ camera. The PTZ camera then either shows the object at a higher resolution or classifies it further. In these studies, the obtained images are analyzed separately, and how they are presented to a user is not essential.
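The conversion from an ROI centroid to pan and tilt angles can be sketched as follows, under simplifying assumptions of our own rather than those of the cited works: the omnidirectional frame is equirectangular (360° × 180°), the two cameras are approximately co-located, and the extrinsic calibration reduces to a hypothetical yaw and pitch offset between the panorama center and the PTZ home position. A full extrinsic model would instead rotate a 3D direction vector.

```python
from typing import Tuple


def centroid_to_pan_tilt(u: float, v: float, width: int, height: int,
                         yaw_offset_deg: float = 0.0,
                         pitch_offset_deg: float = 0.0) -> Tuple[float, float]:
    """Convert an ROI centroid (u, v) on an equirectangular frame to PTZ angles.

    Assumes longitude -180..180 deg maps left-to-right across the width and
    latitude +90..-90 deg maps top-to-bottom down the height. The offsets are
    hypothetical extrinsic corrections, valid only for small misalignments.
    """
    lon = (u / width) * 360.0 - 180.0
    lat = 90.0 - (v / height) * 180.0
    pan = (lon - yaw_offset_deg + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    tilt = lat - pitch_offset_deg
    return pan, tilt
```

For example, a centroid three quarters of the way across and a quarter of the way down a 3840 × 1920 frame maps to a pan of 90° and a tilt of 45°.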

On the other hand, several applications require more attention to how both images are presented to the user. Qin et al. [15] designed a laparoscope consisting of two fully integrated imaging probes: a wide-angle probe and a high-magnification probe. They showed the wide-FOV and high-resolution images in separate windows. However, separating the two views increases the user's workload during navigation, as the user needs to split their focus between the two windows [16].

Several researchers have also tried to integrate multi-resolution images that come from a single PTZ camera [17], or from a wide-angle camera with a less-than-360° panoramic view paired with a PTZ camera [18,19], for surveillance applications. The key problem in integrating images of different resolutions is preserving the detail of the high-resolution region. The common way to mosaic different images is to stitch them at the pixel level during graphics rendering. However, merging a high-resolution image onto a low-resolution one inevitably reduces the detail in the stitched high-resolution region: the foveal region of the resulting foveated image will not retain the angular resolution of the PTZ camera, even if we digitally zoom in on it. Conversely, merging the low-resolution image onto the high-resolution one requires upsampling the low-resolution image, which makes the rendered target image larger than the original [19]. Existing solutions for preserving high-resolution detail either construct a 3D model of the scene [17] or use a large high-resolution display [18]. Another option is to render the output of each camera on a separate display [20]. However, these viewing approaches are impractical for our application, because their resulting images cannot be rendered directly on VR HMDs.
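A back-of-the-envelope estimate shows why upsampling the panorama is costly. Assuming an equirectangular panorama upsampled uniformly to the angular resolution of a 1920 × 1080 PTZ frame at 10× zoom (HFOV 9.96°, VFOV 5.61°, as computed in Section 4.1), the canvas would need roughly:

$$W \approx 360^{\circ} \times \frac{1920\ \text{px}}{9.96^{\circ}} \approx 69{,}400\ \text{px}, \qquad H \approx 180^{\circ} \times \frac{1080\ \text{px}}{5.61^{\circ}} \approx 34{,}650\ \text{px}$$

That is about 2.4 gigapixels per frame, orders of magnitude more than an HMD panel can display.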

Other researchers are attempting to take advantage of foveation in panoramic 360° video streaming. However, their purpose is to save streaming bandwidth and computational power [21]. Thus, instead of trying to improve the quality of the 360° video in the foveal region, they reduce the resolution rendered in the peripheral region. This has direct implications for the hardware design of their cameras. For example, they use a camera rig containing several high-resolution cameras to capture the 360° scene and then stitch the images locally on the server [22,23]. They decide which region to render in high resolution based on criteria such as the user's eye-gaze orientation [23] or the saliency of each region [22,23]. They then render the foveal regions at a higher resolution and the peripheral region at a lower resolution at the pixel level [23]. Alternatively, they perform non-uniform spherical ray sampling and adjust the positions of the graphics vertices accordingly to allocate more pixels to the foveal regions [22]. However, aside from being far from real-time performance, this approach also distorts the boundary between the foveal and peripheral regions.

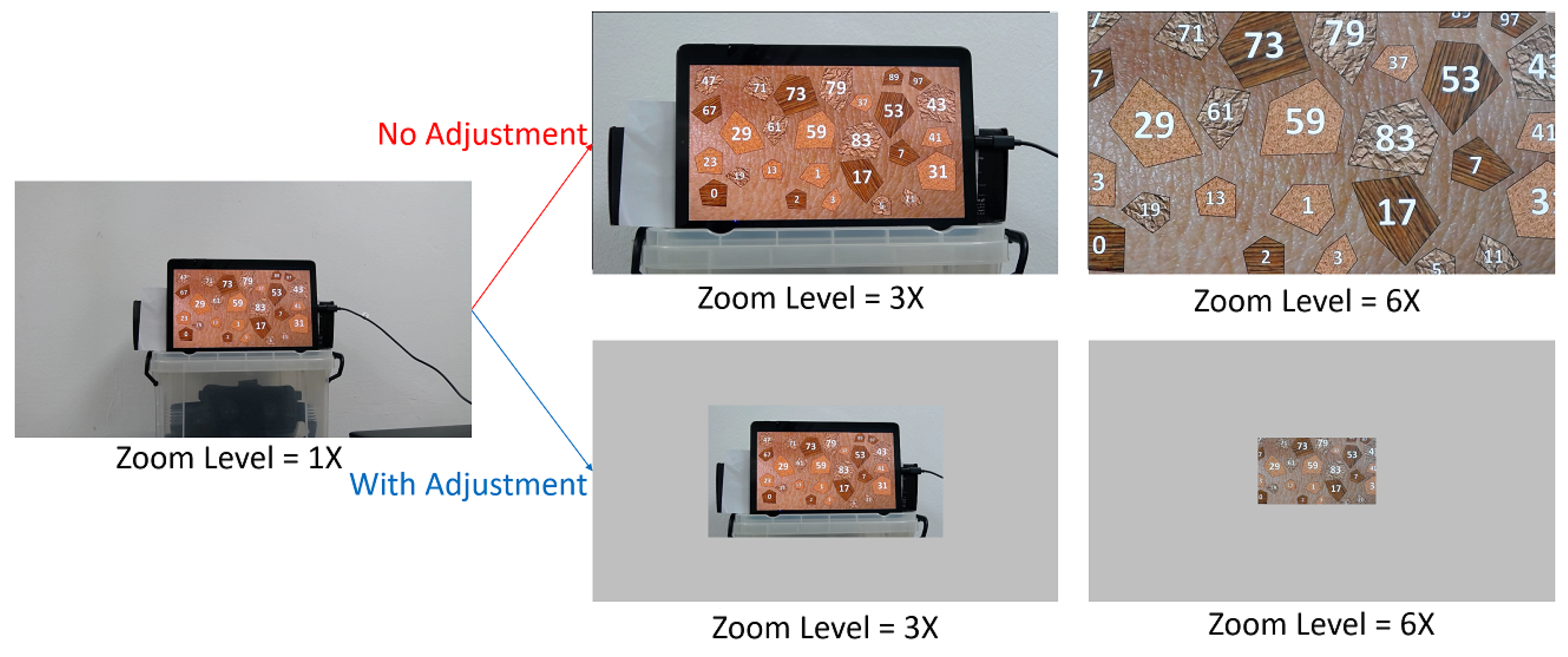

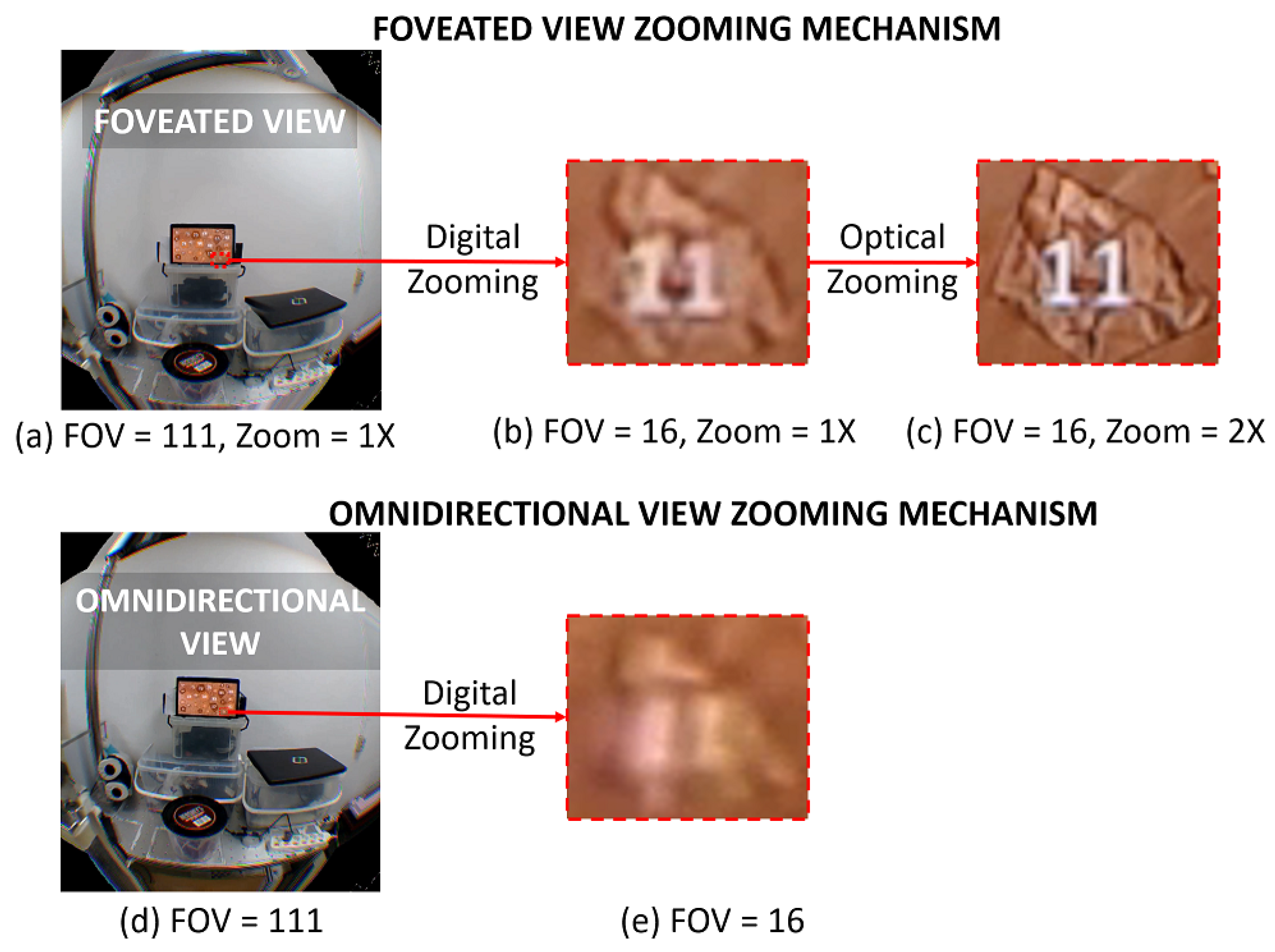

We aim to render the foveal region of a 360° video stream at high resolution while using bandwidth as efficiently as possible. Inspired by the use of hybrid cameras in surveillance systems, we use an omnidirectional (360° FOV) and PTZ camera pair for 360° streaming on VR HMDs. Compared with other foveated 360° videos [22,23], the PTZ camera in our system enables a remote user to view the foveal region up close and in detail using its zoom. However, this raises the additional challenge of preserving high-angular-resolution detail at high optical zoom values: at high optical zoom, a PTZ camera captures only a small part of the scene compared with the 360° panoramic images captured by the omnidirectional camera. While common direct pixel-level stitching techniques can render the foveated view on a high-resolution 2D monitor, the pixel resolution of a VR HMD display is not high enough to cover the whole multi-resolution foveated view while preserving the high angular resolution of the foveal region. Thus, we propose a foveated stitching pipeline that works at the vertex level. Our vertex-level stitching method separates the foveal region mesh from the peripheral region mesh, which allows the foveal region mesh to preserve the angular resolution of its texture source (the PTZ camera). We also describe the accompanying zooming mechanism so that the remote physician can inspect the foveal region in high quality while zooming in.
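The vertex-level idea can be illustrated with an idealized, hypothetical sketch: rather than resampling PTZ pixels into the panorama, place a separate quad inside the omnidirectional sphere, centered on the PTZ pan/tilt direction and sized so that it subtends the PTZ HFOV × VFOV, and texture it directly with the PTZ frame. The function name and axis conventions are assumptions, and the sketch ignores the feature-based refinement performed by the modeling stage.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ptz_quad_vertices(pan_deg: float, tilt_deg: float, hfov_deg: float,
                      vfov_deg: float, distance: float = 0.9) -> List[Vec3]:
    """Corners (TL, TR, BR, BL) of a quad that subtends hfov x vfov from the origin.

    Assumed conventions: y up, -z forward at pan = tilt = 0, positive pan turns
    right, positive tilt looks up. `distance` should be smaller than the sphere
    radius so the quad is drawn in front of the panorama texture.
    """
    p, t = math.radians(pan_deg), math.radians(tilt_deg)
    forward = (math.sin(p) * math.cos(t), math.sin(t), -math.cos(p) * math.cos(t))
    right = (math.cos(p), 0.0, math.sin(p))
    up = _cross(right, forward)
    half_w = distance * math.tan(math.radians(hfov_deg) / 2)
    half_h = distance * math.tan(math.radians(vfov_deg) / 2)
    center = tuple(distance * c for c in forward)

    def corner(sx: int, sy: int) -> Vec3:
        return tuple(center[i] + sx * half_w * right[i] + sy * half_h * up[i]
                     for i in range(3))

    return [corner(-1, 1), corner(1, 1), corner(1, -1), corner(-1, -1)]
```

Because the quad keeps the PTZ frame as its own texture, the GPU samples it at the PTZ camera's native angular resolution, regardless of the resolution of the panorama behind it.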

Table 1 shows a comparison of the previous foveated imaging systems along with ours.

4. Evaluation and Discussion

In this section, we present evaluations of the proposed system and discuss the results and potential improvements. We first discuss the approximate angular resolution of our foveated view in comparison with human visual acuity. Second, we present the computation times of the modeling and rendering stages, which support separating the two. Then, we identify the two main error sources in the foveated view pipeline: pixel registration error and zoom-based adjustment error. Lastly, we present our experimental results on the sharpness of the foveated view at various zoom levels and discuss their implications.

For the experiments, the hybrid camera module and the HTC Vive VR devices were connected to a single desktop PC, because our focus was not on network latency. The desktop PC had an Intel i7-7700K CPU and 16 GB of RAM and ran 64-bit Windows 10 Pro. We used OpenFrameworks 0.10 to access the hardware devices (hybrid camera module and HTC Vive VR devices) and OpenGL rendering functions, and OpenCV 4.0 to implement the image processing algorithms.

4.1. Angular Resolution Approximation

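We used Clark's approach [31] to approximate the angular resolution of the human eye, with a visual acuity of 1.7 line pairs per arcminute. Because two pixels are required to form one line pair, we approximate the angular resolution of the human eye in both the horizontal and vertical directions as follows:

$$\rho_{\text{eye}} = 1.7\ \frac{\text{line pairs}}{\text{arcmin}} \times 2\ \frac{\text{pixels}}{\text{line pair}} \times 60\ \frac{\text{arcmin}}{\text{degree}} = 204\ \text{pixels/degree} \qquad (1)$$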

The maximum angular resolution of our foveated view is the maximum angular resolution of the foveal region, which depends on the video resolution of the PTZ camera at the highest optical zoom level. First, we used the following function to find the FOV angle corresponding to a given "X×" zoom value:

$$\theta_{X\times} = 2\arctan\left(\frac{\tan\left(\theta_{1\times}/2\right)}{X}\right) \qquad (2)$$

where $\theta_{1\times}$ and $\theta_{X\times}$ are the FOVs at 1× and at the target X× zoom, respectively. Our PTZ camera provides up to 10× optical zoom. From Section 3.1, the PTZ camera HFOV and VFOV at 1× zoom are 82.15° and 52.24°, respectively. Inserting these values into Equation (2) gives an HFOV of 9.96° and a VFOV of 5.61° at 10× zoom. Therefore, with its 1920 × 1080 video, the PTZ camera has a maximum horizontal and vertical angular resolution of:

$$\rho_{H} = \frac{1920\ \text{pixels}}{9.96^{\circ}} \approx 192.8\ \text{pixels/degree} \qquad (3)$$

$$\rho_{V} = \frac{1080\ \text{pixels}}{5.61^{\circ}} \approx 192.5\ \text{pixels/degree} \qquad (4)$$

As Equations (3) and (4) show, at its maximum zoom level our PTZ camera has an angular resolution similar to that of the human eye.
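The calculation above can be reproduced numerically. The sketch uses the arctan zoom relation θ_X = 2 arctan(tan(θ_1/2) / X), which reproduces the reported 9.96° and 5.61° at 10× from 82.15° and 52.24° at 1×, and it assumes that Clark's 1.7 figure is expressed in line pairs per arcminute; the helper names are hypothetical.

```python
import math


def fov_at_zoom(fov_1x_deg: float, zoom: float) -> float:
    """FOV (deg) at an X-times zoom via the arctan relation.

    Assumes a rectilinear lens whose focal length scales linearly with zoom.
    """
    half = math.radians(fov_1x_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half) / zoom))


def angular_resolution(pixels: int, fov_deg: float) -> float:
    """Average pixels per degree across the field of view."""
    return pixels / fov_deg


# Human-eye reference, assuming 1.7 line pairs per arcminute and 2 px per line pair.
EYE_PX_PER_DEG = 1.7 * 2 * 60

if __name__ == "__main__":
    hfov, vfov = fov_at_zoom(82.15, 10), fov_at_zoom(52.24, 10)
    print(round(hfov, 2), round(vfov, 2))
    print(angular_resolution(1920, hfov), angular_resolution(1080, vfov), EYE_PX_PER_DEG)
```

With these inputs the sketch gives roughly 192 to 193 px/° in both directions at 10× zoom, compared with about 204 px/° for the eye under the stated unit assumption.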

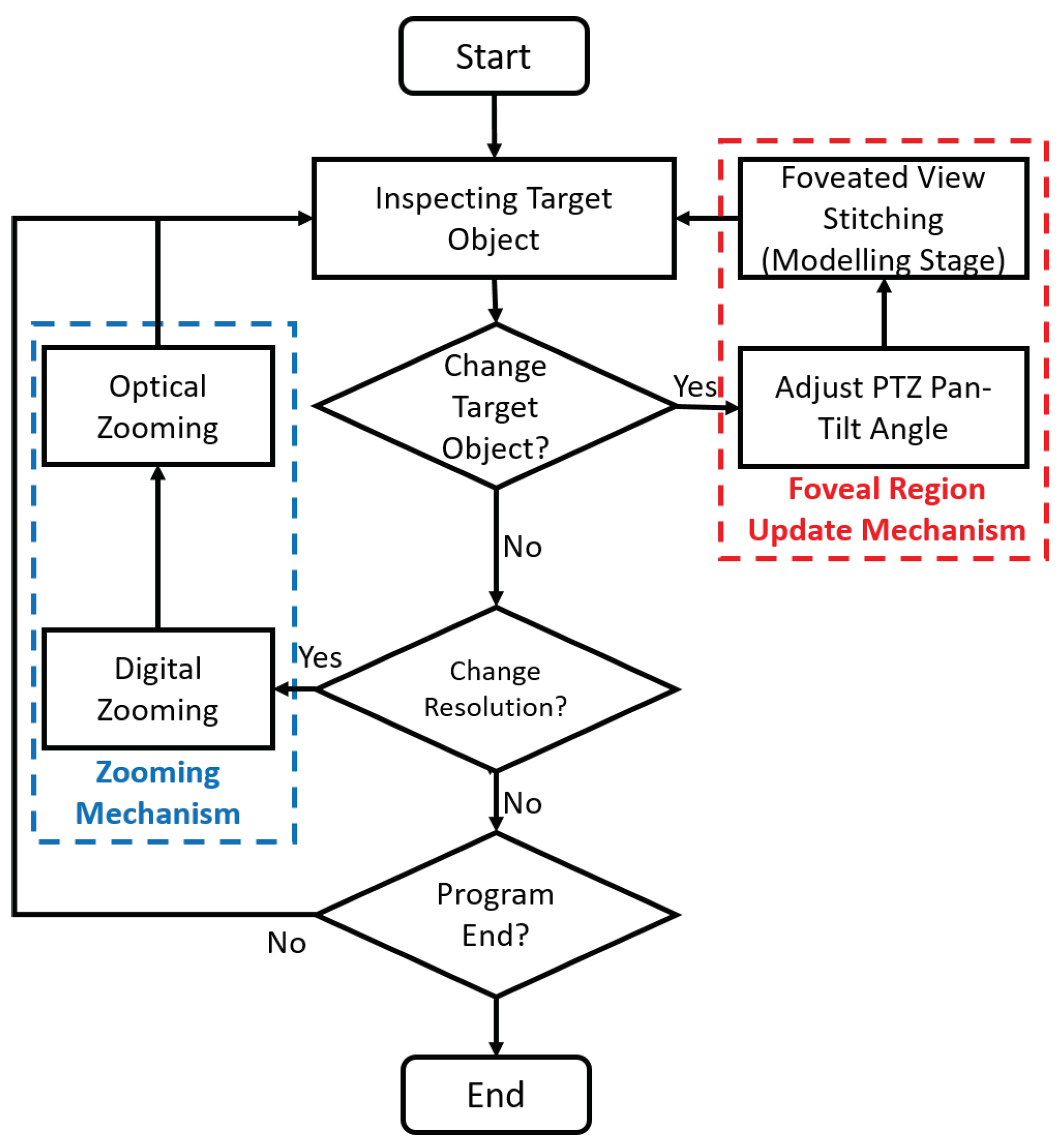

4.2. Computation Time

To measure the processing time of our foveated view stitching pipeline, we ran the image stitching process 100 times, covering 5 pan angles × 5 tilt angles, with 4 zoom levels for each pan-tilt pair.

Table 2 shows the average processing time for each block. Preprocessing in the table refers to the process required to initialize OpenCV variables. The total computation time of the modeling stage is around 2.18 s, of which about 89% was devoted to the feature detection block. Because the current implementation neither limits the number of features detected in each camera image nor accelerates the detection algorithm on the GPU, the modeling stage could potentially be sped up. For example, Bian et al. [32] demonstrated that a GPU-accelerated detection algorithm, based on SIFT and a FLANN matcher similar to ours, could detect 1000 features in tens of milliseconds.

A faster modeling stage would have little or no effect on the rendering stage, owing to the separation of the two stages in our stitching pipeline, but it might improve the user experience. In the rendering stage, the zoom-based adjustment takes about 3 ms and the PTZ vertex alignment takes 0.01 ms. Compared with a baseline in which we rendered the two videos without stitching, this additional processing time (3.01 ms) caused only a small decrease in frame rate, from 18 to 17 fps. In other words, users received constant foveated view updates even with the relatively slow modeling stage. However, if users relocate the foveal region, they need to wait around 2.18 s before the target object appears in high resolution. In this sense, a faster modeling stage would make the system more responsive.
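As a rough consistency check, assuming the 3.01 ms of extra rendering work adds directly to each frame of the 18 fps baseline:

$$\frac{1000\ \text{ms}}{18} \approx 55.6\ \text{ms}, \qquad 55.6\ \text{ms} + 3.01\ \text{ms} \approx 58.6\ \text{ms}, \qquad \frac{1000\ \text{ms}}{58.6\ \text{ms}} \approx 17.1\ \text{fps}$$

which agrees with the measured 17 fps.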

4.3. Pixel Registration Error and Zoom-Based Adjustment Error

One way to assess the quality of our foveated view is to measure how well the PTZ camera image is superimposed onto the omnidirectional camera image. However, to the best of our knowledge, there is no standardized method for measuring such quality, specifically when one of the stitched images is in equirectangular form. Therefore, we devised two proxy measures that are closely related to the final quality of our foveated view: pixel registration error and zoom-based adjustment error.

Pixel registration error, $E_{\text{reg}}$, was designed to measure the mismatch between the detected features on the omnidirectional image and the corresponding features on the PTZ image. A smaller error leads to a better 3D alignment mapping and thus to better final image quality. Although Zhao et al. [33] proposed a method to find matched features between planar and omnidirectional equirectangular images, their feature detection only operated in the equatorial region of the equirectangular image. In our case, however, users may need to locate the foveal region, i.e., the PTZ image, outside the equatorial region. Thus, we applied a rectilinear projection to the omnidirectional image and ran the SIFT feature detector on the resulting planar image. Given the $N$ detected features on the omnidirectional image, $\mathbf{p}_i$, and the corresponding features on the aligned PTZ image, $\mathbf{q}_i$, we used the root-mean-square error to calculate the average mismatch:

$$E_{\text{reg}} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left\lVert \mathbf{p}_i - \mathbf{q}_i \right\rVert^{2}} \qquad (5)$$
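The rectilinear projection described above can be sketched as an inverse gnomonic mapping: for each pixel of the desired planar view, cast a ray, rotate it by the view's tilt and pan, and look up the corresponding equirectangular coordinate. The sketch assumes a pinhole model with the principal point at the image center and full 360° × 180° coverage; the function name and axis conventions are illustrative, not the implementation used in this work.

```python
import math
from typing import Tuple


def rectilinear_to_equirect(x: float, y: float, out_w: int, out_h: int,
                            hfov_deg: float, pan_deg: float, tilt_deg: float,
                            eq_w: int, eq_h: int) -> Tuple[float, float]:
    """Map pixel (x, y) of a virtual pinhole view to equirectangular (u, v).

    Assumed conventions: world x right, y up, z forward at pan = tilt = 0;
    positive pan turns right, positive tilt looks up; the panorama spans
    longitude -180..180 deg left to right and latitude 90..-90 deg top to bottom.
    """
    f = (out_w / 2) / math.tan(math.radians(hfov_deg) / 2)  # focal length, px
    # Ray through the pixel in camera coordinates (image y grows downward).
    rx, ry, rz = x - out_w / 2, -(y - out_h / 2), f
    # Tilt: rotate about the x axis so that the forward ray pitches up.
    t = math.radians(tilt_deg)
    ry, rz = ry * math.cos(t) + rz * math.sin(t), -ry * math.sin(t) + rz * math.cos(t)
    # Pan: rotate about the y axis so that the forward ray yaws right.
    p = math.radians(pan_deg)
    rx, rz = rx * math.cos(p) + rz * math.sin(p), -rx * math.sin(p) + rz * math.cos(p)
    lon = math.atan2(rx, rz)                   # -pi..pi
    lat = math.atan2(ry, math.hypot(rx, rz))   # -pi/2..pi/2
    u = (lon / (2 * math.pi) + 0.5) * eq_w
    v = (0.5 - lat / math.pi) * eq_h
    return u, v
```

Evaluating this mapping once per output pixel yields lookup maps that can be passed to a resampling routine such as OpenCV's `cv2.remap` to produce the planar image on which SIFT is run.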

We calculated the pixel registration error for each of the 100 stitching trials and averaged the results. The average pixel registration error was 1.45 pixels. Considering that both images had a resolution of 1920 × 1080 and that one of them was upsampled, SIFT appears to have performed well; however, this performance might be partly due to the planar surface of the target object (a tablet). Further research on planar-equirectangular feature matching to improve the quality of the alignment model should be carried out.
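The error measure and its averaging over trials can be written in a few lines. This sketch assumes the matched points are already expressed in a common image frame (the rectilinear projection with the aligned PTZ image overlaid); the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def pixel_registration_error(omni_pts: Sequence[Point], ptz_pts: Sequence[Point]) -> float:
    """RMSE (pixels) between matched feature positions in a common image frame."""
    if len(omni_pts) != len(ptz_pts) or not omni_pts:
        raise ValueError("expected two equally sized, non-empty lists of matches")
    squared = sum((px - qx) ** 2 + (py - qy) ** 2
                  for (px, py), (qx, qy) in zip(omni_pts, ptz_pts))
    return math.sqrt(squared / len(omni_pts))


def average_over_trials(trials: Sequence[Tuple[Sequence[Point], Sequence[Point]]]) -> float:
    """Mean of per-trial RMSE values, e.g., over the 100 pan/tilt/zoom trials."""
    return sum(pixel_registration_error(o, z) for o, z in trials) / len(trials)
```

Averaging per-trial RMSE values, as here, weights each trial equally regardless of how many features it matched; pooling all matches into a single RMSE would instead weight feature-rich trials more heavily.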

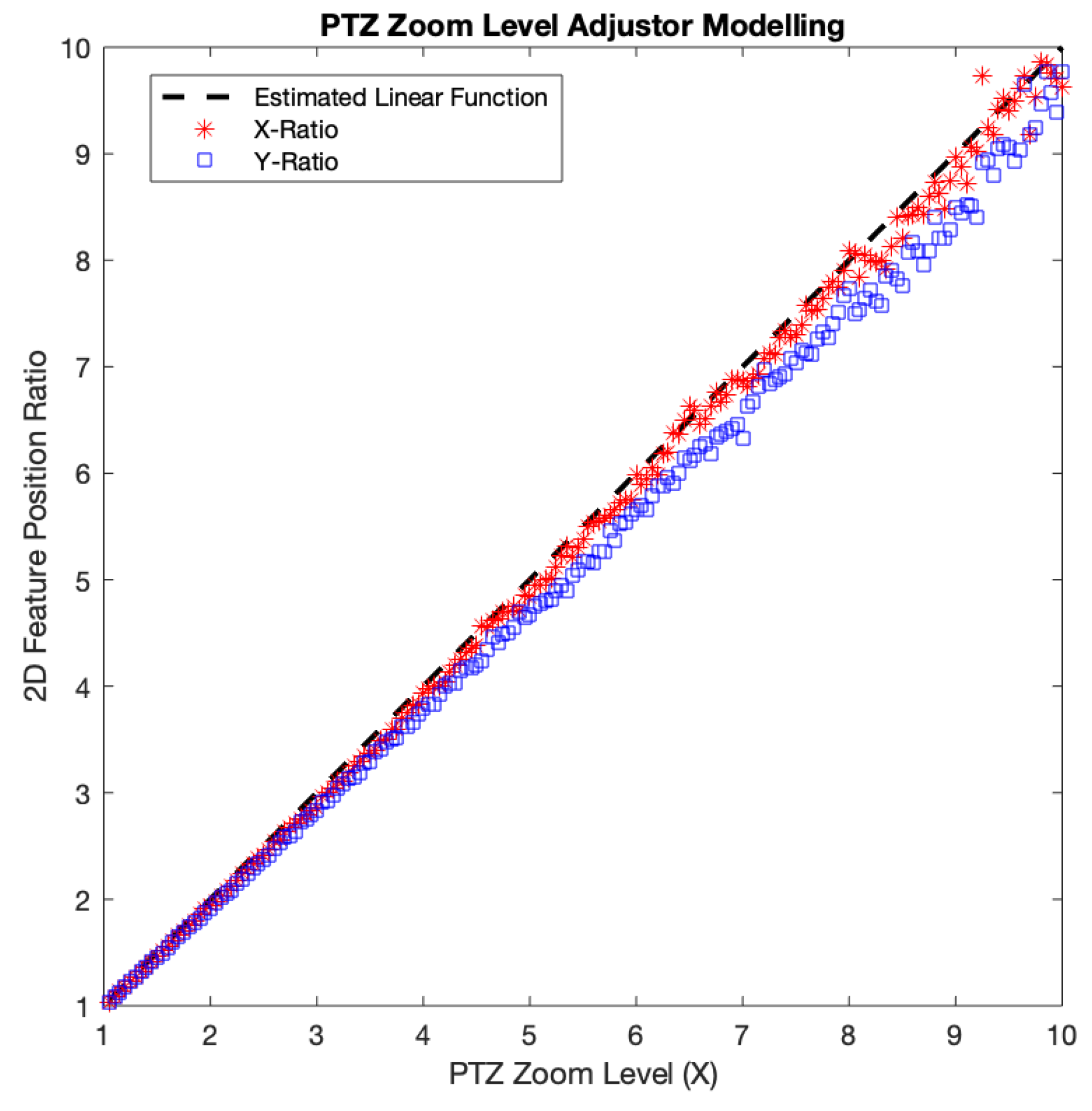

Zoom-based adjustment error originates from our approximate linear model of the PTZ image size with respect to the PTZ zoom level during zooming. We chose a model-based approach for optical zooming because of the time overhead of the feature detection that would otherwise be required to adjust the PTZ image size. To build the model, we measured the displacement of detected SIFT features at various zoom levels relative to the positions of those features at the base zoom level of 1×. We increased the PTZ camera zoom level monotonically from 1× to 10× in steps of 0.05×. At each zoom level, we acquired the x-axis positions of the SIFT features and averaged their x-axis distances from the origin (0, 0) located at the image center. Then, we divided this average distance by that at the base zoom level. We did the same for the y-axis. Both the x- and y-axis distance ratios followed an identity relation with the PTZ zoom level fairly well, with the x-axis distance ratio fitting slightly better (see Figure 9). Thus, for simplicity's sake, we used the identity function as our model, and the deviation of the measured ratios from it constitutes the zoom-based adjustment error.
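The model-fitting procedure can be sketched as follows. The helper names are hypothetical, coordinates are assumed to be already expressed relative to the image center, and the error definition (mean of |ratio - zoom| / zoom over the sampled zoom levels) is our assumption about how per-axis percentages such as those reported next could be aggregated.

```python
from statistics import mean
from typing import Dict, Sequence


def mean_axis_distance(coords: Sequence[float]) -> float:
    """Mean distance of feature coordinates (one axis) from the image center."""
    return mean(abs(c) for c in coords)


def distance_ratios(coords_by_zoom: Dict[float, Sequence[float]],
                    base_zoom: float = 1.0) -> Dict[float, float]:
    """Mean axis distance at each zoom level divided by that at the base zoom."""
    base = mean_axis_distance(coords_by_zoom[base_zoom])
    return {z: mean_axis_distance(c) / base for z, c in coords_by_zoom.items()}


def identity_model_error_pct(ratios: Dict[float, float]) -> float:
    """Mean relative error (%) of the identity model, i.e., predicted ratio = zoom."""
    return 100.0 * mean(abs(r - z) / z for z, r in ratios.items())
```

Running the same functions separately on x and y coordinates yields one error figure per axis.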

The average zoom-based adjustment error per axis was 1.49% for x and 4.45% for y. At lower PTZ zoom levels, both the x- and y-axis distance ratios fit our model, whereas at higher zoom levels they started to deviate. This axis-wise difference could be due to the non-linearity of the PTZ camera optics, as reported by Sinha et al. [17]. Incorrect feature matching and limited positional precision might also contribute to the error, especially when features are located near the image center, which is the case at high PTZ zoom levels. Features around the origin (image center) have small x- and y-axis distances. Assuming that feature position accuracy is similar across the whole PTZ image, a given position error along the x- or y-axis has a larger relative influence on features whose distance along that axis is small. Thus, imprecise feature localization around the image center is more likely to produce a higher error. Therefore, a more accurate zoom-based adjustment model that accounts for the camera optics as well as the feature locations could improve the quality of the final stitched image.

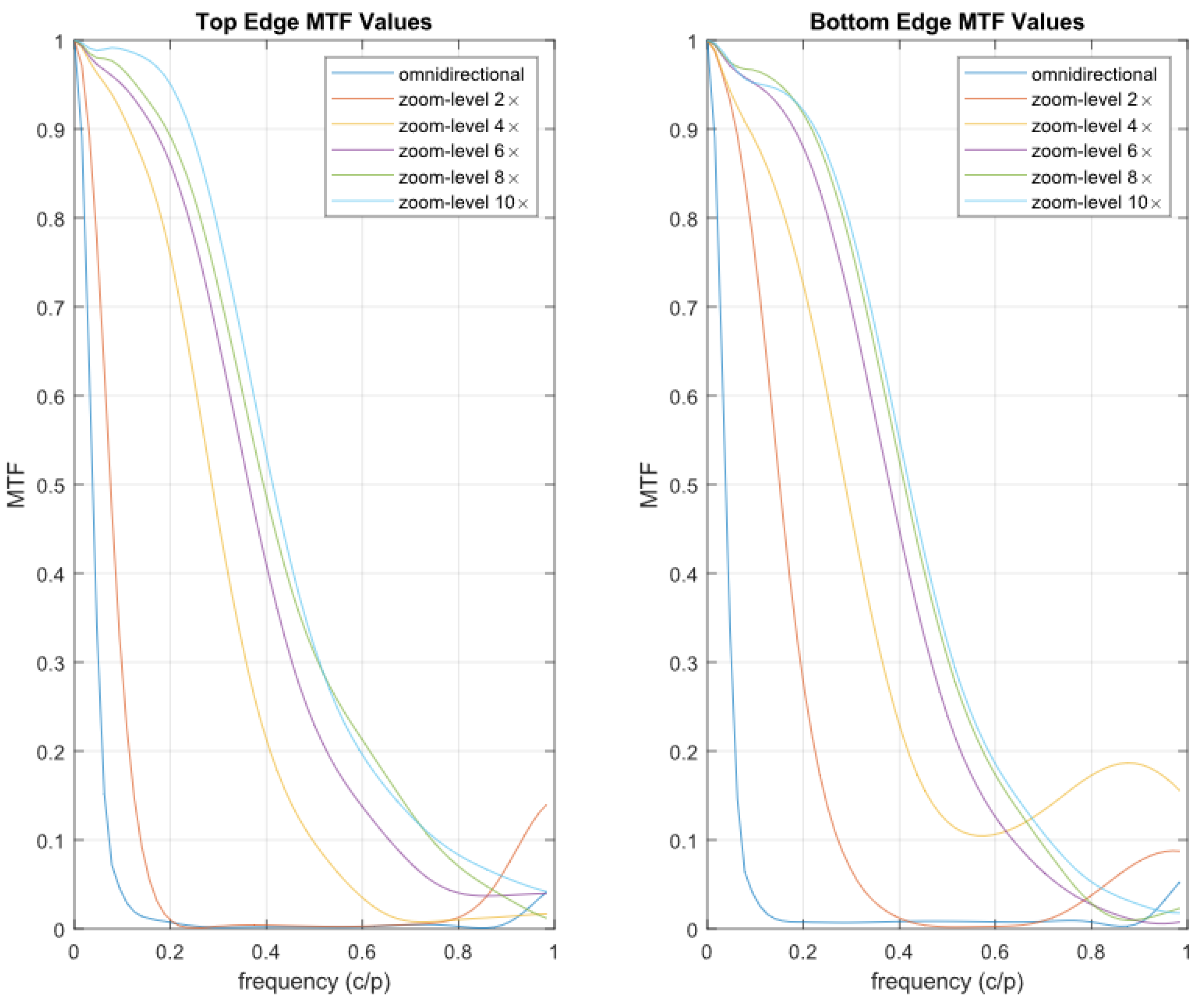

4.4. Image Sharpness Measure Experiment

Image sharpness determines how much detail an image can reproduce, which relates to one of the goals of our foveated imaging system. We performed an experiment to measure the sharpness improvement of our foveated image compared with the reference omnidirectional view. We measured the Modulation Transfer Function (MTF) curve for the foveal region of the foveated images at optical zoom levels of 1×, 2×, 3×, ..., and 10×. We also measured the MTF curve of the omnidirectional image as the reference.

We used the open-source software MTF Mapper [34] to measure the MTF with the slanted-edge method. Because of the small FOV at 10× zoom, we used a single quadrilateral printed on white paper instead of a chart consisting of multiple quadrilaterals. In addition, we kept the quadrilateral within the foveal area regardless of the zoom level. The distance between our camera module and the paper was 1 m. For comparison, we selected the top and bottom edges of the quadrilateral in both the foveated and omnidirectional images across the various zoom levels.

Figure 10 shows the MTF curves at even zoom levels for two edges of the quadrilateral (denoted “top” and “bottom”). The horizontal axis denotes frequency in cycles/pixel (c/p), while the vertical axis denotes the MTF value, i.e., the ratio of the contrast at frequency f to that at frequency 0. We also measured MTF50 (the frequency at which the MTF value drops to 0.5), presented in Table 3.
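MTF Mapper computes these values itself; the sketch below only illustrates the MTF50 definition on a sampled curve, using linear interpolation at the first downward crossing of 0.5 so that any later rise of the curve does not change the result. The function name and interpolation choice are assumptions.

```python
from typing import Optional, Sequence


def mtf50(freqs: Sequence[float], mtf: Sequence[float]) -> Optional[float]:
    """First frequency (cycles/pixel) at which the MTF falls to 0.5.

    Linearly interpolates between the two samples that bracket the first
    downward crossing; returns None if the curve never drops below 0.5.
    """
    for i in range(1, len(freqs)):
        m0, m1 = mtf[i - 1], mtf[i]
        if m0 >= 0.5 > m1:
            f0, f1 = freqs[i - 1], freqs[i]
            return f0 + (m0 - 0.5) * (f1 - f0) / (m0 - m1)
    return None
```

For a curve sampled at 0, 0.1, 0.2, and 0.3 c/p with MTF values 1.0, 0.8, 0.4, and 0.2, the crossing lies between 0.1 and 0.2 c/p and interpolates to 0.175 c/p.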

Figure 10 shows that the high-frequency component (corresponding to high x-axis values) of the MTF curve of the foveated image tends to be higher than that of the omnidirectional image. This means that the foveated image preserves high-resolution details better than the omnidirectional image.

Table 3 shows a consistent result: the MTF50 of the foveated image is higher than that of the omnidirectional image, so the foveated image better preserves high-resolution details.

Although the MTF curve appears to rise again at certain frequencies, we suspect this is because the lens was not focused on the inspected edge: during the experiment, we used the autofocus feature of the PTZ camera, so the camera was not always focused on the edges we inspected.

Another observation from the MTF curves of the foveated images is that the curves start to saturate from zoom level 8×; there is no noticeable change in the MTF curve between zoom levels 8× and 10×. This is probably due to optical aberrations, which occur particularly near the extremes of a zoom lens's range [35]. This result implies that our proposed system no longer gains sharpness from optical zooming beyond a certain zoom level. Given our pipeline design, this limit stems from the PTZ camera we used.

5. Conclusions

In this paper, we presented a foveated imaging pipeline that integrates a 360° panoramic image from an omnidirectional camera with high-resolution ROI images from a PTZ camera. To make the best use of the 360° panoramic view, we chose VR HMDs as our target viewing devices and designed the pipeline accordingly. The proposed pipeline consists of two stages, modeling and rendering, and executing them in parallel allowed our system to achieve a high frame rate for the foveated view, which is required for VR HMDs. The PTZ camera made it easy to relocate the foveal region and enabled zooming in on the foveated view, which keeps our pipeline useful even on viewing devices with lower resolution. In addition, our hybrid camera module can potentially be integrated with other telemedicine systems to provide better video quality of the local environment.

In our experiments, we showed that the foveated view can provide the remote physician with an angular resolution roughly similar to human visual acuity. We also showed that separating the modeling stage from the rendering stage allows the application to render the foveated view in near real time: even though the modeling stage required around 2.18 s to update the alignment model, the system still ran at 17 fps. The proposed pipeline also showed good alignment quality, with a pixel registration error of only 1.45 pixels and relative errors of 1.49% and 4.45% in the x- and y-coordinate ratios, respectively. From the MTF results, we showed that separating the omnidirectional sphere mesh from the PTZ planar mesh allowed the foveated view to preserve the high-frequency components of images better than the omnidirectional view while zooming in.

Future work should focus on improving the performance of our proposed system. For example, we assumed that in a medical scenario the remote physician might not need to update the ROI frequently, so the delay caused by updating the model would not significantly degrade the usability of our system. However, if our system is applied to a scenario in which a remote user keeps changing the foveal region, the user might become frustrated by the delayed response to his/her intention. Clever use of extrinsic parameters in the modeling stage [19] or GPU acceleration could speed up feature detection. Relatedly, planar-equirectangular feature detection should be investigated further. In addition, supporting motion parallax would greatly improve the user experience. Although a motorized platform could achieve this, deep-learning-based view synthesis might also be used to address this issue.

Although we targeted telemedicine, our proposed system can also be used in other applications. In remote teleoperation, rendering high angular resolution on the VR HMD will allow the remote operator to control a local mobile robot better and more precisely. For example, in a search and rescue task [36], an immersive HMD will improve the remote operator's situation awareness at the disaster site, and the high resolution of the foveal region will help the operator locate victims. Remote collaboration can also benefit from our 360° high-resolution foveated image. Researchers have recently attempted to use a 360° camera as an alternative to a 3D reconstructed virtual space or to live 2D image-based mixed reality collaboration [37]. In such a collaboration, the remote user's ability to view high-resolution imagery in 360° allows them to inspect distant objects without having to ask the local participant to carry the camera. With the proliferation of omnidirectional cameras and VR HMDs, we believe our proposed foveated imaging system can be useful in various fields.