Abstract

The practical increase of interest in intelligent technologies has caused a rapid development of all activities in terms of sensors and automatic mechanisms for smart operations. The implementations concentrate on technologies which avoid unnecessary actions on user side while examining health conditions. One of important aspects is the constant inspection of the skin health due to possible diseases such as melanomas that can develop under excessive influence of the sunlight. Smart homes can be equipped with a variety of motion sensors and cameras which can be used to detect and identify possible disease development. In this work, we present a smart home system which is using in-built sensors and proposed artificial intelligence methods to diagnose the skin health condition of the residents of the house. The proposed solution has been tested and discussed due to potential use in practice.

1. Introduction

Smart homes have become the key elements in the real estate market. In-Built electronics and home management systems are becoming more and more popular due to numerous advantages. They not only improve the comfort of human life, but also contribute to nature for example by saving energy. A classic example is light control in smart home environment. Another advantage, which is widely appreciated, is increased safety and protection through motion sensors or cameras attached to the alarming system which quickly prevents burglary. These are just a few important elements characterizing the trend of creation of intelligent homes. One of advantages which have important influence on humans is protection of the health of the household members. As protection, we mean counteracting to the development of disease, controlling and motivating while adhering to diets and sports. Such actions enable rapid detection of diseases and care for the correct and healthy body functioning.

Leaving the house results in exposure to the sunlight. This type of action might seem harmless, as long as we are not exposed during the strongest sun rays on hot days. In the last few years the risk of cancer has increased [1,2] and one of the most serious skin diseases is malignant tumor, i.e., melanoma. It can occur as pigmented nevus on our skin. Any skin change can mean a mutation of genes and the occurrence of melanoma, which is a type of malignant tumor. It is estimated that melanoma accounts for up to 10% of all human skin cancers, and on average, more than 1,300,000 cases are diagnosed yearly [3,4]. Each year, this number is growing rapidly—on average, about 5% compared to the previous year. Therefore a rapid detection of the disease at the initial stage increases patient’s survival prognosis. The later detection, the more difficult is the treatment. Moreover, the likelihood of metastasis is larger, and thus the survival rate is lower.

To increase the awareness of the household members about the existing disease, their state of health as well as visible signs on/in the body should be controlled. It can save their health by fast detection and treatment. A good solution that will not cause major inconveniences is to design an intelligent solution that can analyze this type of signs during other activities. Smart homes have in-built sensors that can improve control and alert quickly about the need for a visit to a specialist doctor. The awareness of people about the risk of cancer increases, which is evident from the example of education in schools, or information on the Internet, television and radio. Researchers from around the world are trying to create solutions that could be used in practice and do not cost a lot of computing power and money.

The latest research in the field of Internet of Things that can be classified as a help for health protection show a significant number of scientists focused on the analysis of people’s behavior and emotion in order to determine their health status. This type of analysis uses the information from various types of sensors, such as cameras or smart watches. One of the key examples of this type is presented in [5]. The authors focused on the use of image processing methods to help sick and elderly people. Obtained results are presented for selected artificial intelligence techniques such as decision trees, fuzzy logic or support vector machine, and as the greatest advantages are given an accuracy at 80% and low costs. Similar approaches are shown in [6,7], where the influence of emotions on these systems is measured. Sensors that record video, sounds or movements are very often used in monitoring. The retrieved data may indicate some deviations from the norm in the behavior of the household members. Information can be processed in terms of behavior analysis [8,9,10]. There are also very interesting research on sensing technologies for robotics which can assist elderly people in daily routine [11]. The use of machine learning methods requires a certain database on which the classifier will be trained. The extraction and segregation of data in smart homes were analyzed in [12]. For the purposes of verification of the proposed method, the authors collected data during 30 days, after which classifier’s accuracy was obtained at 85%. A useful tool is a smart phone that can integrate various sensors, measurement data and display them in an intelligible form for each person. The only problem is the configuration of the installed application in relation to location of the sensors or different construction of houses. An interesting solution is the automatic generation of code for the application on the android platform, which results in great flexibility of operation [13]. Another issue beside detection is the anticipation of the appearance of premature signs of diseases such as Alzhaimer [14] or the simplest application, such as assessing the state of current health and nutrition [15]. All sensors and the entire operating system that manages them or processes the obtained data requires some computing power and database to store the information. The solution is a special server or the use of cloud computing [16,17].

Related Works

Detection of skin diseases from the processing of images is an important topic both for sensors and sensing methods but also for Computational Intelligence and image processing. There are also research on medical symptoms of skin diseases and devoted skin markers for chemical analysis. In [18] was presented how plasmacytoid dendritic cells, inflammatory dendritic epidermal cells, and Langerhans cells influence anti-viral skin defense mechanism. In [19] was discussed the diagnostic gold standard for skin diseases detection based on the analysis of auto-antibodies and mucous membranes by the use of immunofluorescence microscopy and commercialized tools. The authors presented how the antigens influence skin characterization for serological diagnosis. In [20] was discussed a chemical method to detect atopic dermatitis, psoriasis and contact dermatitis characterized by transcriptomic profiling. Potential disease was detected by the use of protein expression levels.

In [4] was presented classification of skin lesions using Deep Convolutional Neural Network. Proposed model was trained from images, using only pixels and disease labels on the input. The research presents classification in two cases: keratinocyte carcinomas vs. seborrheic keratoses and malignant melanomas vs. nevi. In [21] was proposed a technique for melanoma skin cancer detection. Authors proposed a system which combines deep learning with proposed skin lesions ensemble method. In [22] was presented optoacoustic dermoscopy model based on a function of excitation energy and penetration depth measures for skin analysis by the use of ultra imaging. It was shown how to analyze absorption spectra at multiple wavelengths for visualization of morphological and functional skin features. An approach to detect skin lesion and melanoma based on deep learning model was proposed in [23]. The authors have discussed segmentation and feature extraction in relation to the center of melanoma by Lesion Indexing Network which uses Fully Convolutional Residual Network as a main detection method. In [24] we can find an up to date review over various machine learning methods applied to skin diagnosis and diseases detection. While in [25] we can find a wide comparison of mobile apps for skin monitoring and melanoma detection. The authors have compared several apps, discussed their potential in efficient image processing form smart phone camera and sensing technologies and also presented legal aspects of ethical, quality and transparent development of apps for medical use.

Beside to the research on imge analysis methods a constant development in sensing technology is visible. In [26] an intelligent sensing system for monitoring human skin and pathogen microbiota was presented. The system was designed as a composition of nanowire and thin film metal oxide technologies. The device uses GC–MS (Gas Chromatography–Mass Spectrometry) and SPME (Solid Phase Micro Extraction) as methods of data sampling and analysis. There are also various tests of efficiency and operability measured for cameras used in skin sensing technologies. In [27] was presented a review of hyperspectral imaging one.

In this article we propose a smart sensing for detection of skin diseases resulting from changes in nevi over body area. In our system the image from a smart home camera is analyzed by the proposed methodology. The novelty of our proposal is in efficient detection and estimation of the analyzed nevus. The method first searches over the image for any potential dangerous skin changes using key point search algorithm. After the image is clustered according to their locations. Selected clusters are forwarded to the proposed neural network architecture which analyzes them for changes in skin features. Therefore by the use of the proposed novel solution the household members have a constant monitoring of their health. The solution is very easy to use, we just need to stand in front of the camera, which in our system was placed over the mirrors to enable dynamic health control within daily routine. The image is automatically forwarded to analysis and the results are presented to the household member on the connected devices he/she choses. The solution is very efficient and the tests have shown accuracy and precision rates for our idea of 82.4% and 80% respectively.

2. Intelligent System for Monitoring Skin Diseases

Let us assume that a smart home has its own monitoring system. Proposed type of operation is using cameras with motion sensors which collect images of the people for analysis of the skin. When these cameras are used, some specific requirements should be stated. Especially, we need very good recording quality and high resolution. Unfortunately, these parameters generate higher costs. In addition, the processing of video files will be very burdensome for the server, which will have 24 frames (identified with images) per each second acquired from one camera and in the home, there will be many of them.

Of course, this solution is possible with the assumption of a good server that can handle such amounts of the data. As an alternative solution, we suggest combining a camera with a motion sensor. In the case of a motion in a given area, photos will be taken within a few seconds (selection of this parameter should depend on the available computing power). Placing this sensor is basically simple, for example, in the vicinity of the mirrors, because we stop for a moment to look while dressing or in the morning to improve something in the hair, etc. We take into account the argument that often mirrors are placed only in the bathroom. Therefore we suggest creating a picture-taking control that can be stopped using a remote control. This solution seems to be simple and intuitive even for elderly or disabled people.

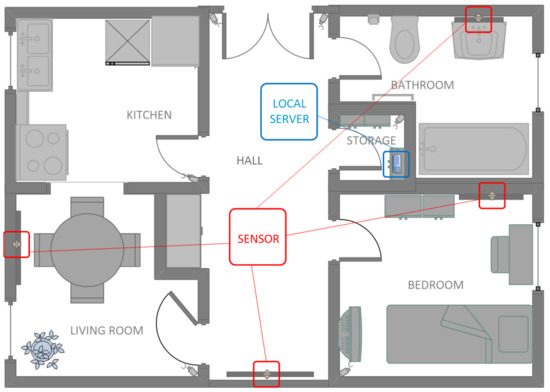

A person may want to check the condition of their skin in other places (not visible to the camera), so additionally the sensor should be small and possible to be mounted on the wall or stand on the cabinet. The choice of the place depends only on the size of the equipment—the smaller the equipment, the more convenient it will be to use. In Figure 1, there is an example of a smart home model in which monitoring cameras are located and controlled by composed motion sensors. Locations have been chosen in a way that they do not interfere with any activities of the household members. Moreover, a local server placed in a small room (here, called storage) is responsible for downloading data via local wireless network from the cameras to a database stored on that server. A small disadvantage is that motion sensors which are associated with the cameras may have a short range, i.e., it would not be possible to apply this solution in places such as a living room or hall, where a house traffic could be recorded over a distance.

Figure 1.

An example of a smart home visualization with the placement of sensors over mirrors in the house (the camera is activated by the motion sensor) and the location of a local data server.

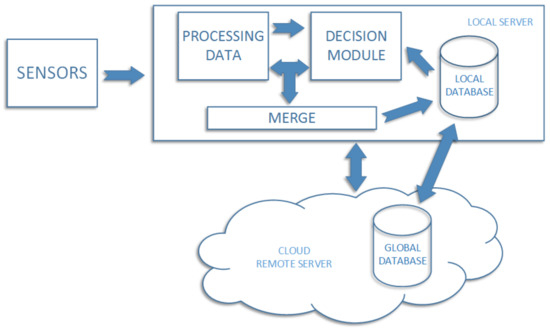

In Figure 2, we present a general information flow diagram which shows how obtained information is processed in our system. At first, sensor data is downloaded and saved on the local server. Each photo is processed by the skin analysis module, after which it is analyzed by the classifier. Each image with the decision obtained from the classifier is retained in the local database. In case when the classifier result means high probability of the skin disease, the information is sent to the user immediately. There is a risk of hardware failure, which may result in data loss. To prevent such situation, the local database is synchronized with the external one, located in the cloud (called global). An additional advantage of this solution is the situation when the user installs the system at the home, but the local classifier cannot classify images because it was not trained before. In such situation cloud system starts to work as first one, since it is previously trained, standard structure of the classifier saved on the global database. In practice, after installing the system in home, the local classifier should be updated with the latest information retrained from the cloud. On the part of the contractor, there should be a classifier in the cloud, which after a certain period of time (sometimes even weeks) will be overhauled using data received from various clients. This will allow automatically updating the global classification data for each client separately using one resource.

Figure 2.

Diagram of information flow in the home management system.

The use of a double database means that the proposed system is resistant to failures. Of course, the loss of the global database will be much more expensive, but there is a fast possibility of recovering the data by downloading them separately from all the clients and after that combining it again into one global data. Additionally, the classifier training is in the public cloud, so this process does not burden client’s local server. Another advantage is an easy modification of this installation by adding or removing additional sensors. In a case of a large data inflow, processing support will depend on the time of saving on the server’s disk.

3. Skin Analysis Module

Skin diseases can manifest themselves differently, especially when the new distinctive signs are visible. These types of changes may appear slowly, which often avoids quick observation. It is particularly important to analyze any new nevus on the skin, because this can start to change, which is one of the first symptoms of skin cancer. Changes are visible and often classified as follows

- A (asymmetry)—any two halves of the nevus are asymmetrical;

- B (border)—the boundary between the healthy tissue and the nevus is fuzzy, tilted or invisible;

- C (color)—the nevus has different shades of color, and the border between them is irregular;

- D (diameter)—the diameter is greater than 6 mm;

- E (evolving)—there is a sudden enlargement or bulge.

However any suspicious change in nevus shape, color or structure requires particular attention.

3.1. Image Processing

For a given body image, it is important to locate the changes in the skin, detect all the hallmarks and classify them as a healthy skin or suspicious disease. Let us assume that the obtained image will contain large part of body. It is necessary to extract the skin and then to analyze all suspicious areas. Having an image, we are looking for areas where we can expect some important elements.

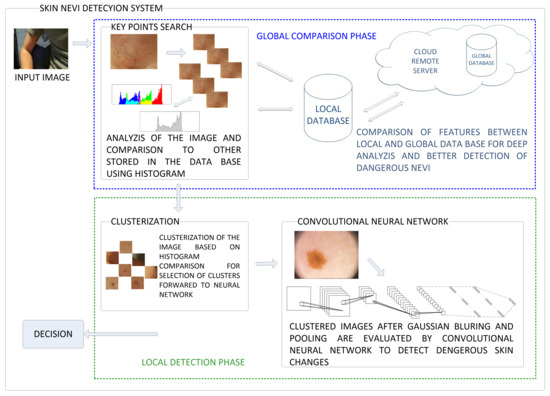

The obtained image I is colorful and two-dimensional. We should detect human skin for further analysis. The proposed approach consists of processing the image and detecting features, and then classify them. The scheme of the proposed skin nevi analysis module is presented in Figure 3. For a given image I, we search for key points (representing the features of the image) using the classic detection algorithm, and then each of these points will be analyzed in terms of the quality of the detection (containing a nevus or birthmark) and used to create a small image called cluster. This cluster will contain an area with a given feature for later classification by the use of Convolutional Neural Network. The process is shown in Figure 4.

Figure 3.

The captured image goes through the following stages of processing in the proposed system: global and local features extraction and comparison. In the first one an image is clustered into smaller parts containing suspicious symptoms over the skin. These clusters are compared by the use of histograms and selected ones are forwarded to detection phase in which Convolutional Neural Network performs evaluation of these symptoms.

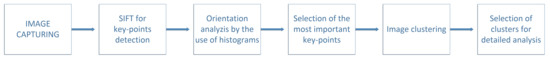

Figure 4.

The processing of input image goes through the following stages first to locate the key-points over the image and after to select among them the most important ones which will be clustered and forwarded to the detection stage in the form of a blurred nevi image.

3.2. Key Points Search

A mentioned algorithm of key points detection is called Scale Invariant Feature Transform (in short SIFT) [28,29]. The algorithm works on the rule of four steps, where the first one consists of detecting local extremes on different scales. Key-points are searched for depending on certain local features of the area and should be independent from scale or orientation. In addition, each point should correspond to the local extreme in the image after applying the Gauss filter (on different scales). Let us assume that the Gauss filter will be described as a continuous function in the following way

where is a parameter understood as a variance. The operation of applying this filter to the given image can be described as convolution in the following way

Next, Difference-of-Gaussian (DOG) must be created for different scale k as

Detection of local extremes works on the basis of the comparison of a given point with its neighbors in the square , where the analyzed point is in the middle. It is possible to increase the size of the grid, but the used photographic equipment should take pictures of very high quality because attempts to use larger dimension resulted in the selection of non-discriminating elements. A given point can be considered as an important if its value is maximal or minimal in relation to the neighbors. In the next step, points are localized by interpolating previously found extremes using Taylor series expansion as

In addition, unstable points are rejected due to the low contrast value which is verified by the following function

After, points on the edges are removed if the following condition is fulfilled

where r is a given parameter of a neighborhood and is a trace of a given Hessian matrix defined as

The third step is the orientation of all remaining points from Gaussian images. It is done by using gradient module and orientation defined as

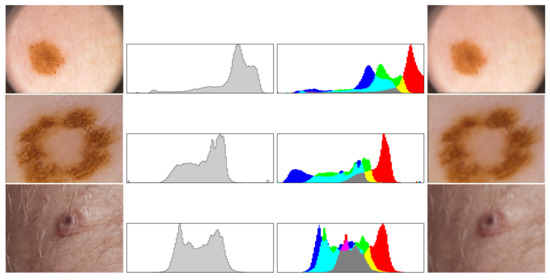

Above formulas allow creating a histogram of orientation values weighted by calculated module and function where . Further, the greatest value in histogram is selected, and all values above of selected maximum are treated as a feature orientation of this point. This action caused that key points are independent from rotation. The last step generates descriptors for this points. A certain neighborhood of the point is taken. Then all elements of neighborhood are divided into smaller, even areas. In each area, resultant gradient modules are calculated, which allows to define a descriptor. Sample images of healthy skin and nevi with their histograms in gray and color scale are presented in Figure 5 and Figure 6, while the procedure to select clusters is presented in Figure 7.

Figure 5.

Each cluster is analyzed by the use of histograms (in gray and full color), which are compared to other images stored in the data base system before blurring operation. In the rows 1–3 we see examples of suspicious nevi, both histograms and blurred cluster that will be forwarded to evaluation phase.

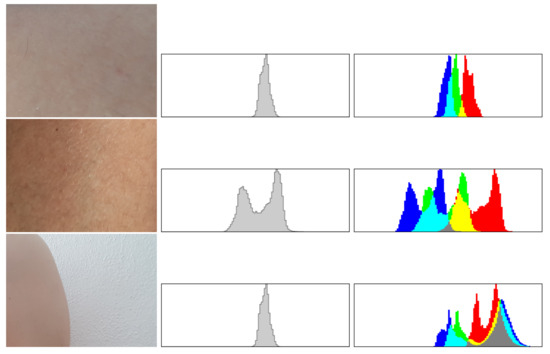

Figure 6.

If the analysis of histograms shows that the cluster presents normal skin the images is not forwarded to evaluation phase. In the rows 1–2 we see examples of regular healthy skin and in the last one a fragment of a skin over a wall. The images show a cluster and both histograms, since there are no suspicious nevi therefore all without blurring necessary for evaluation phase.

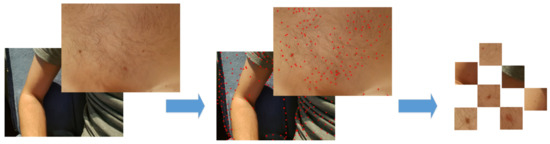

Figure 7.

The process of creating clustered image files with nevi for classification. The original image is processed by the use of SIFT method which shows potential locations of key-points. After clusters are composed, and each of them is evaluated and cropped to show the suspicious nevus in the best possible way to make the evaluation the next phase more efficient.

3.3. Selection of Found Key Points

All the points found by using SIFT will be located on the entire image, so they will adhere to various skin features. Imagine the simplest and the most likely situation when such a picture is taken in front of a mirror. The householder will not occupy the entire picture, but only a part of it. The rest of the area will concern the elements of home furnishings. The points that indicate this objects should be removed and not taken into account.

The easiest way is to take several photos earlier (with different lighting), find key points and save their coordinates with colors. In the case when the same point appears on the new image, it will be automatically deleted. We also remove these which colors do not fit the palette colors in the data base since we assume these may reflect new clothing or jewelery. Additionally border lines and other long, thin shapes are removed. Moreover, there is a chance that a given point will be characterized by a certain part of the body such as hair, eyes or lips. The exact elimination of these parts is not so desirable, because at a later stage, the classifier will decide on the validity of the element. However, in order to improve the operation of the entire system, when the number of images is smaller, the computational power needed to process them is also lower.

3.4. Creation of Suspicious Clusters

After the previous selection of points, each of them can be presented in the form of a cluster. The cluster will be called a small, separate area from the original image containing the given point. An intuitive example may be the point of the nevus, where the cluster is an image which takes a full picture of the nevus. In practice, the idea is to create a large area with a given point, which is processed to extract the localized object and trim to its size. Assume that the key point is at position . A new bitmap of size is created (where w, h correspond respectively to the width and the height of the original image). In the center is located the key-point. Then, we change all colors to gray-scale by averaging the value of each component of a given pixel as

After, we modify the images by applying gamma correction and increasing the contrast to eliminate unnecessary, small elements in the image. Modifications for a given point can be defined as

where is a coefficient of correction (). The second formula is described by

where is a parameter called level of contrast. After applying the above formulas to each pixel, the image obtained is much simplified. Taking the key point, we count all its neighbors as having the same color in a recursive manner. If the number of counted pixels is greater than , the key point is removed. Otherwise, the image is cropped relatively to the pixels that have been counted. It is easy to see if the resulting cluster is useless due to the filtering, so each of these pixels is replaced according to the original image. Additionally, in order to unify the dimensions of clusters, all images are minimized/extended to the same dimension given by the user. This process is presented in Figure 7.

Convolutional Neural Network

Convolutional neural networks are structures inspired by information transfer between neurons, which are basic units in the brain. Their operation is similar to classic artificial networks, so the structure itself in general description is the same. It is composed of several types of layers, each of them contains neurons. Neurons in one layer are connected with other units in the previous and next layer (if they are composed in the structure), and each connection is burdened with a weight. In the initial operation of the network, weights are selected in random way and modified during the training process.

The main difference between the classic network and this one is the received information, which forces a certain layer structure (see Figure 8). In the classical approach, the inputs are vectors composed of information saved as numerical values. Here, images are input data. The whole idea is inspired by cells in the primary cortex [30,31]. The first layer is called convolutional, which is responsible for extracting features from the image using an image convolution operation. To describe it, let us define a filter which is a matrix of size and is the position of the center point in the matrix (the position is related to original image). Using this position, this filter can be moved over the image by step S. This layer uses to modify the image in a way to isolate or highlight certain important features by adding certain pixels to local neighbors multiplied by a value called the kernel and described as mentioned before . In short description, in this layer, the image is only modified relatively to the given filter. Gaussian blur is one of the filters that distorts the image and is described as

Figure 8.

Sample scheme of the Convolutional Neural Network composed to process clustered images of suspicious skin nevi.

Another important filter is emboss which modifies a given pixel by replacing it with a back light or shade depending on the original one and the kernel is defined as

The second type of layer is called pooling one. It’s main task is to reduce the incoming image by selecting certain pixels. The idea is similar to the operation of convolutional layer, with the difference that on each matrix from input image, one pixel is created to replace the previous 9. Let us denote the matrix of pixels as where , then the returning pixel can be selected as the maximum element in matrix or in terms of a certain parameter like one of the value or the average color as

where , and are scales that attenuate the importance of a given color only if .

For the dimension matrix, the output matrix from this layer will be three times smaller. The last type of layers is called fully-connected and their operation is almost identical to the classical neural network. The construction of this layer can be separated into smaller elements resembling the idea of hidden and output layers in classic understanding. At the input to this layer, there is a small image. Each pixel must be described as a numerical value and as a result of such representation, the vector with numerical values is formulated. Next, a column of neurons is created where the number of units is identified with the number of pixels form the input image. Operation of the neuron can be described as a following function

where is the output from neuron, is the output from neuron in the previously layer, is the weight on the connection between j-th and k-th neurons and is the activation function defined as

which normalizes the value of the sum to the range .

Fully-connected layer can be composed of many smaller layers with neurons, where in the last one are output neurons (their number is defined depending on desired classification results).

Of course, such structure is useless before training, and one of the most popular algorithms for this purpose is back-propagation algorithm (which modifies the weights to minimize the error of the whole structure) [30,32]. To describe the actions, some markings should be defined like error function and error at the end of the network as . In addition, we specify the output from a particular neuron at position in l-th layer as . The training algorithm is using chain rule defined as follows

Equation (18) allows to define error formula for the current layer in the following manner

Based on Equations (18) and (19), it can be seen that the algorithm uses gradient technique to minimize error value, so it can be also done for calculating error in convolutional layer (this rule cannot be applied to pooling layer, which does not take part in training process) as

what gives the formula for error on previous layer determined as

4. Experimental Section

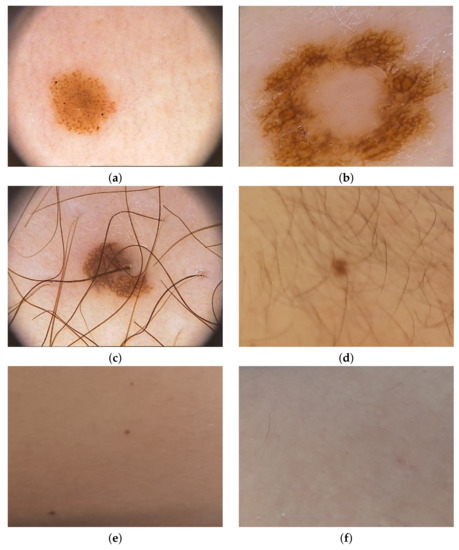

To check the operation of the proposed solution, we first tested the operation of the proposed method for creating images from photos taken in different places in six houses, where we mounted sample sensors of our system to collect new images from the household members. After we have added an open database PH2 Dataset [33] to extend our collection. PH2 Dataset contains images from the Dermatology Service of Hospital Pedro Hispano (Matosinhos, Portugal) presenting nevi in 8-bit RGB color images with a resolution of 768 × 560 pixels. PH2 Dataset contains 200 dermoscopic images of melanocytic lesions, where 80 are common nevi, 80 are atypical nevi, and 40 are melanomas. Our new images are in resolution 800 × 600, where we have collected 73 images of nevi. Figure 9 presents samples from both data sets.

Figure 9.

Selected samples used in training process—(a–c) come from PH2 Dataset, and (d–f) were obtained from the method described in this paper.

The main purpose of classification is to make decision about the input data and assign to this the value from decision set . It means that is decision class for i from 1 to n. For every element x in the input database, we verify whether the decision was right. That is, if . The test result is negative only if . Otherwise the test result is positive. We assume that the condition is the null hypothesis and the opposite to this, we call an alternative hypothesis. Let f be the classifier and take one of decision classes . Then test results can be described by 4 parameters

- TP—True Positive, when and ,

- TN—True Negative, when and ,

- FP—False Positive, when and , what means that the type I error was made—the null hypothesis was rejected even though it was correct,

- FN—False Negative, when and , what means that the type II error was made—the null hypothetis was not rejected even though it was not correct.

For our model we have two decisions: the image presents the nevus which does not cause dangerous effects to health and opposite which means the nevus is dangerous and might have consequences to health. Therefore our hypothesis is to reject that the nevus is dangerous.

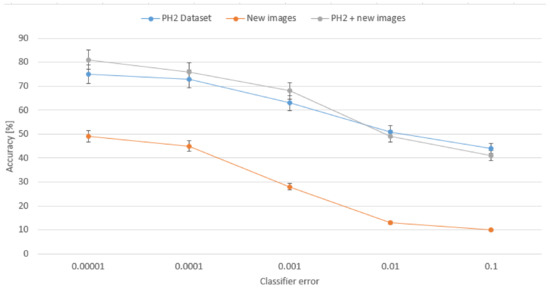

The scores we obtained in our research are shown in Table 1. We can see that the best results are achieved for merged datasets, where precision is 80% and accuracy 82.4%. These results are almost twice better in comparison to single set of new images. We can also see that for single PH2 dataset accuracy was improved of about 3% while precision decreased of 10%. This is a result of extended set of input images, since for a single origin the classifier was trained on a specific nevi detection so precision was higher while for new images new possibilities came so the precision is lowered. On the other hand, what is more important, the accuracy rate is the highest for merged sets. Figure 10 presents how the accuracy rate changes for different assumed error from our classifier for all three configurations of the input data collection. Analysis of this chart confirms that extended data collection improves the classifier for more efficient detection of dangerous nevi on the skin.

Table 1.

Quality measures of classifier trained only for the data from PH2 Dataset, only for obtained new images from proposed method and merged both dataset.

Figure 10.

Dependency between the classifier’s error and the average accuracy presented for different assumed error in the classifier for all three configurations of the input data collection.

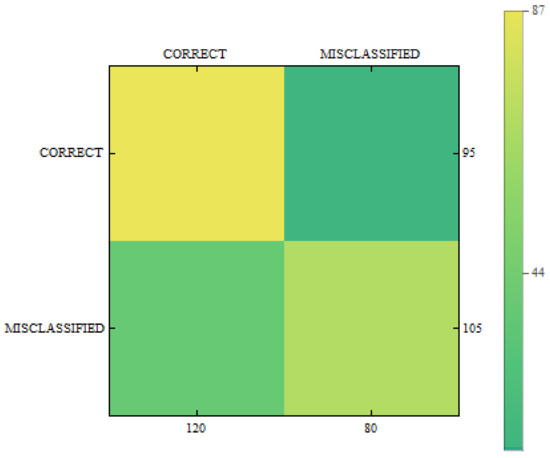

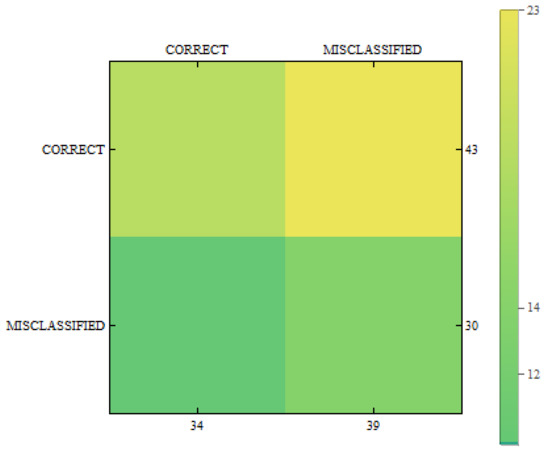

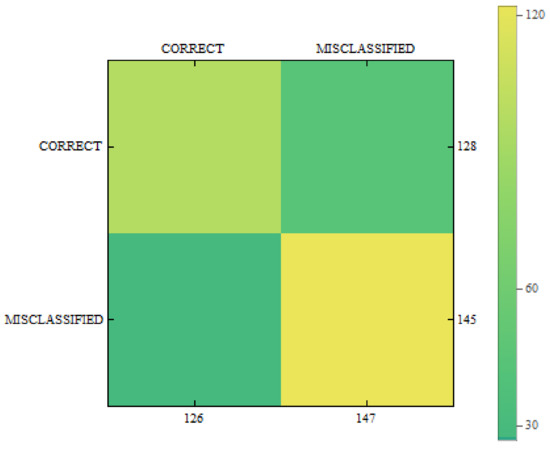

In Figure 11, Figure 12 and Figure 13 we can see confusion matrices for classification results. If we compare these charts we will see how decision classes change for each of training configurations. The best recall rates are visible for merged collections from both datasets. While in case of single training sets we can see that false positive and false negative rates influence the overall decision classes of the classifier. In Figure 11 the relation between decision classes show that we had more false positives than false negatives. In Figure 12 we see that using only new images caused the highest rates of false positive, while true positives and true negatives were at similar level but still lower. In Figure 13 we see that merged collections of PH2 Dataset and our new images improved training of the proposed classifier to reach the highest rate for true positive. At the same time, true negatives reached also good level for efficient classification. In this case, both false decision classes levels were lowered to minimum so that the classifier could reach good overall accuracy of 82.4% and precision of 80%.

Figure 11.

Confusion matrix for measurements obtained by the classifier using PH2 Dataset only.

Figure 12.

Confusion matrix for measurements obtained by the classifier using created images from the proposed method only.

Figure 13.

Confusion matrix for measurements obtained by the classifier using PH2 Dataset and created images from the proposed method.

We have also checked whether the obtained results depend on one or more factors. For this purpose, the statistical analysis of variance (ANOVA with ) was used and the scores are presented in Table 2. Comparing F-ratio values we see that merged data sets show the highest dispersion among results. Results for single data sets are much lower, while the result for our data set is the lowest among all. These results mean that merged data sets give dispersion among the results, what is obvious since in our data set we have only a few people while in PH2 there are examples from various people. These are confirmed for F-ratios for each of the sets alone. Since in our data there are the highest similarities among samples the dispersion is the lowest. This show that introduction of new images extends dependencies on various factors, which is true since in our data set we have various nevi which show mostly healthy skin. On the other hand without our new dataset the proposed classifier was working worse which was visible in lower accuracy presented in confusion matrices in Figure 11, Figure 12 and Figure 13. Therefore we assume that merging of these two collections extends dependencies between decisions and input images but improves the overall efficiency of the proposed classifier.

Table 2.

Statistical results for the ANOVA test.

Conclusions

This research has shown that the proposed classification method works well. We were able to train our system to reach overall accuracy of 82.4% and precision of 80.4%, which is very good result. Comparison to the results from other similar solutions is presented in Table 3. Analyzing the table we see that our method is not the best, since, for example, the Circular center + Support Vector Machine [34] achieved 94% accuracy but on the other hand, our solution has beaten other methods based on Support Vector Machine alone by reaching higher values. This comparison shows that our method works well, but there is still some room for improvements, i.e., by the use of better quality cameras the images would have higher resolution what would definitely improve the results. On the other hand, our system is easy to use since it is able to monitor possible skin changes during daily routine at very low costs. Household members can make evaluations of nevi just by using any mirror. The procedure is simple, since mounted cameras collect images and by the use of the proposed method select body are with clustered areas of the skin. The evaluation is using proposed methodology which compares clustered skin outlook with images of nevi stored in the database.

Table 3.

Comparison of results to other methods.

5. Final Remarks

Frequent control of any symptoms which may signal potential disease is a crucial condition for efficient medicine. During our life we are often so busy with family, work, running a house, etc. that we are leaving aside medical examinations. Therefore an automated support is a solution which can help us to maintain our health. This kind of support shall be installed in a place where we feel good and where the evaluation of the symptoms can be the most efficient. Therefore to solve these issues we propose a smart home system. At home we feel safe and proposed installation of cameras over mirrors make the use of this system very easy. Proposed methodology collects information about skin changes and if any potential risk occurs informs the users.

Experimental research have shown high efficiency and gives good start for further development. It is possible to extend the system ability to examine not only nevi on the skin but other features of our bodies. Proposed architecture can be also extended to automatically contact the doctor in case of emergency. It is also possible to develop a portable version of this solution which we can take for a holiday or a business trip.

Author Contributions

D.P. (15%), A.W. (25%), K.S. (25%), K.K. (25%), M.W. (10%) designed the method, performed experiments and wrote the paper.

Funding

This research was funded by the Polish Ministry of Science and Higher Education, No. 0080/DIA/2016/45.

Acknowledgments

The authors would like to acknowledgment the contribution to this research from the “Diamond Grant 2016” No. 0080/DIA/2016/45 funded by the Polish Ministry of Science and Higher Education.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Stewart, B.W.; Wild, C.P. World Cancer Report 2014; World Health Organization: Geneva, Switzerland, 2017. [Google Scholar]

- Matsumoto, M.; Secrest, A.; Anderson, A.; Saul, M.I.; Ho, J.; Kirkwood, J.M.; Ferris, L.K. Estimating the cost of skin cancer detection by dermatology providers in a large healthcare system. J. Am. Acad. Dermatol. 2017, 78, 701–709. [Google Scholar] [CrossRef] [PubMed]

- Gupta, S.; Tsao, H. Epidemiology of Melanoma. In Pathology and Epidemiology of Cancer; Springer: Berlin/Heidelberg, Germany, 2017; pp. 591–611. [Google Scholar]

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115. [Google Scholar] [CrossRef] [PubMed]

- Mano, L.Y.; Faiçal, B.S.; Nakamura, L.H.; Gomes, P.H.; Libralon, G.L.; Meneguete, R.I.; Geraldo Filho, P.; Giancristofaro, G.T.; Pessin, G.; Krishnamachari, B.; et al. Exploiting IoT technologies for enhancing Health Smart Homes through patient identification and emotion recognition. Comput. Commun. 2016, 89, 178–190. [Google Scholar] [CrossRef]

- Kim, M.; Cho, M.; Kim, J. Measures of Emotion in Interaction for Health Smart Home. Int. J. Eng. Technol. 2015, 7, 343. [Google Scholar] [CrossRef]

- Kim, M.J.; Lee, J.H.; Wang, X.; Kim, J.T. Health smart home services incorporating a MAR-based energy consumption awareness system. J. Intell. Robot. Syst. 2015, 79, 523–535. [Google Scholar] [CrossRef]

- Mshali, H.; Lemlouma, T.; Magoni, D. Adaptive monitoring system for e-health smart homes. Pervasive Mob. Comput. 2018, 43, 1–19. [Google Scholar] [CrossRef]

- Alemdar, H.; van Kasteren, T.; Ersoy, C. Active learning with uncertainty sampling for large scale activity recognition in smart homes. J. Ambient Intell. Smart Environ. 2017, 9, 209–223. [Google Scholar] [CrossRef]

- Civitarese, G. Behavioral monitoring in smart-home environments for health-care applications. In Proceedings of the 2017 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Kona, HI, USA, 13–17 March 2017; pp. 105–106. [Google Scholar]

- Gomez, M.A.; Chibani, A.; Amirat, Y.; Matson, E.T. IoRT cloud survivability framework for robotic AALs using HARMS. Robot. Autom. Syst. 2018, 106, 192–206. [Google Scholar] [CrossRef]

- Verma, P.; Sood, S.K. Fog Assisted-IoT Enabled Patient Health Monitoring in Smart Homes. IEEE Internet Things J. 2018, 5, 1789–1796. [Google Scholar] [CrossRef]

- Sinha, R.; Narula, A.; Grundy, J. Parametric statecharts: Designing flexible IoT apps: deploying android m-health apps in dynamic smart-homes. In Proceedings of the Australasian Computer Science Week Multiconference, Geelong, Australia, 30 January–3 February 2017; ACM: New York, NY, USA, 2017; p. 28. [Google Scholar]

- Alberdi, A.; Weakley, A.; Schmitter-Edgecombe, M.; Cook, D.J.; Aztiria, A.; Basarab, A.; Barrenechea, M. Smart Homes predicting the Multi-Domain Symptoms of Alzheimer’s Disease. IEEE J. Biomed. Health Inform. 2018. [Google Scholar] [CrossRef] [PubMed]

- Dawadi, P.N.; Cook, D.J.; Schmitter-Edgecombe, M. Automated cognitive health assessment from smart home-based behavior data. IEEE J. Biomed. Health Inform. 2016, 20, 1188–1194. [Google Scholar] [CrossRef] [PubMed]

- Tao, M.; Zuo, J.; Liu, Z.; Castiglione, A.; Palmieri, F. Multi-layer cloud architectural model and ontology-based security service framework for IoT-based smart homes. Future Gener. Comput. Syst. 2018, 78, 1040–1051. [Google Scholar] [CrossRef]

- Chaudhary, A.; Peddoju, S.K.; Peddoju, S.K. Cloud based wireless infrastructure for health monitoring. In Cloud Computing Systems and Applications in Healthcare; IGI Global: Hershey, PA, USA, 2017; pp. 19–46. [Google Scholar]

- Wollenberg, A.; Günther, S.; Moderer, M.; Wetzel, S.; Wagner, M.; Towarowski, A.; Tuma, E.; Rothenfusser, S.; Endres, S.; Hartmann, G. Plasmacytoid dendritic cells: A new cutaneous dendritic cell subset with distinct role in inflammatory skin diseases. J. Investig. Dermatol. 2002, 119, 1096–1102. [Google Scholar] [CrossRef] [PubMed]

- Schmidt, E.; Zillikens, D. Modern diagnosis of autoimmune blistering skin diseases. Autoimmun. Rev. 2010, 10, 84–89. [Google Scholar] [CrossRef] [PubMed]

- Wang, J.; Suárez-Fariñas, M.; Estrada, Y.; Parker, M.L.; Greenlees, L.; Stephens, G.; Krueger, J.; Guttman-Yassky, E.; Howell, M.D. Identification of unique proteomic signatures in allergic and non-allergic skin disease. Clin. Exp. Allergy 2017, 47, 1456–1467. [Google Scholar] [CrossRef] [PubMed]

- Codella, N.C.; Nguyen, Q.B.; Pankanti, S.; Gutman, D.; Helba, B.; Halpern, A.; Smith, J.R. Deep learning ensembles for melanoma recognition in dermoscopy images. IBM J. Res. Dev. 2017, 61, 5:1–5:15. [Google Scholar] [CrossRef]

- Schwarz, M.; Soliman, D.; Omar, M.; Buehler, A.; Ovsepian, S.V.; Aguirre, J.; Ntziachristos, V. Optoacoustic dermoscopy of the human skin: tuning excitation energy for optimal detection bandwidth with fast and deep imaging in vivo. IEEE Trans. Med. Imaging 2017, 36, 1287–1296. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.; Shen, L. Skin lesion analysis towards melanoma detection using deep learning network. Sensors 2018, 18, 556. [Google Scholar] [CrossRef] [PubMed]

- Pathan, S.; Prabhu, K.G.; Siddalingaswamy, P. Techniques and algorithms for computer aided diagnosis of pigmented skin lesions—A review. Biomed. Signal Process. Control 2018, 39, 237–262. [Google Scholar] [CrossRef]

- Chao, E.; Meenan, C.K.; Ferris, L.K. Smartphone-based applications for skin monitoring and melanoma detection. Dermatol. Clin. 2017, 35, 551–557. [Google Scholar] [CrossRef] [PubMed]

- Carmona, E.N.; Sberveglieri, V.; Ponzoni, A.; Galstyan, V.; Zappa, D.; Pulvirenti, A.; Comini, E. Detection of food and skin pathogen microbiota by means of an electronic nose based on metal oxide chemiresistors. Sens. Actuators B Chem. 2017, 238, 1224–1230. [Google Scholar] [CrossRef]

- Behmann, J.; Acebron, K.; Emin, D.; Bennertz, S.; Matsubara, S.; Thomas, S.; Bohnenkamp, D.; Kuska, M.T.; Jussila, J.; Salo, H.; et al. Specim IQ: Evaluation of a new, miniaturized handheld hyperspectral camera and its application for plant phenotyping and disease detection. Sensors 2018, 18, 441. [Google Scholar] [CrossRef] [PubMed]

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, 1999, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157. [Google Scholar]

- Jindal, R.; Vatta, S. Sift: Scale invariant feature transform. IJARIIT 2010, 1, 1–5. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; NIPS: La Jolla, CA, USA, 2012; pp. 1097–1105. [Google Scholar]

- Lawrence, S.; Giles, C.L.; Tsoi, A.C.; Back, A.D. Face recognition: A convolutional neural-network approach. IEEE Trans. Neural Netw. 1997, 8, 98–113. [Google Scholar] [CrossRef] [PubMed]

- Oquab, M.; Bottou, L.; Laptev, I.; Sivic, J. Learning and transferring mid-level image representations using convolutional neural networks. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 1717–1724. [Google Scholar]

- Mendonça, T.; Ferreira, P.M.; Marques, J.S.; Marcal, A.R.; Rozeira, J. PH 2-A dermoscopic image database for research and benchmarking. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 5437–5440. [Google Scholar]

- Grzesiak-Kopeć, K.; Nowak, L.; Ogorzałek, M. Automatic diagnosis of melanoid skin lesions using machine learning methods. In International Conference on Artificial Intelligence and Soft Computing; Springer: Berlin/Heidelberg, Germany, 2015; pp. 577–585. [Google Scholar]

- Yu, L.; Chen, H.; Dou, Q.; Qin, J.; Heng, P.A. Automated melanoma recognition in dermoscopy images via very deep residual networks. IEEE Trans. Med. Imaging 2017, 36, 994–1004. [Google Scholar] [CrossRef] [PubMed]

- Oliveira, R.B.; Marranghello, N.; Pereira, A.S.; Tavares, J.M.R. A computational approach for detecting pigmented skin lesions in macroscopic images. Expert Syst. Appl. 2016, 61, 53–63. [Google Scholar] [CrossRef]

- Kasmi, R.; Mokrani, K. Classification of malignant melanoma and benign skin lesions: implementation of automatic ABCD rule. IET Image Process. 2016, 10, 448–455. [Google Scholar] [CrossRef]

- Amelard, R.; Glaister, J.; Wong, A.; Clausi, D.A. High-level intuitive features (HLIFs) for intuitive skin lesion description. IEEE Trans. Biomed. Eng. 2015, 62, 820–831. [Google Scholar] [CrossRef] [PubMed]

- Abbas, Q.; Emre Celebi, M.; Garcia, I.F.; Ahmad, W. Melanoma recognition framework based on expert definition of ABCD for dermoscopic images. Skin Res. Technol. 2013, 19, e93–e102. [Google Scholar] [CrossRef] [PubMed]

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).