Using Process Evaluation Results to Compare Peer and Adult Leader Delivery of the PAWS (Peer-Education about Weight Steadiness) Club Program

Abstract

1. Introduction

1.1. Overview of the PAWS Club Intervention

1.2. Aim of the Paper

2. Materials and Methods

2.1. Process Evaluation Methods

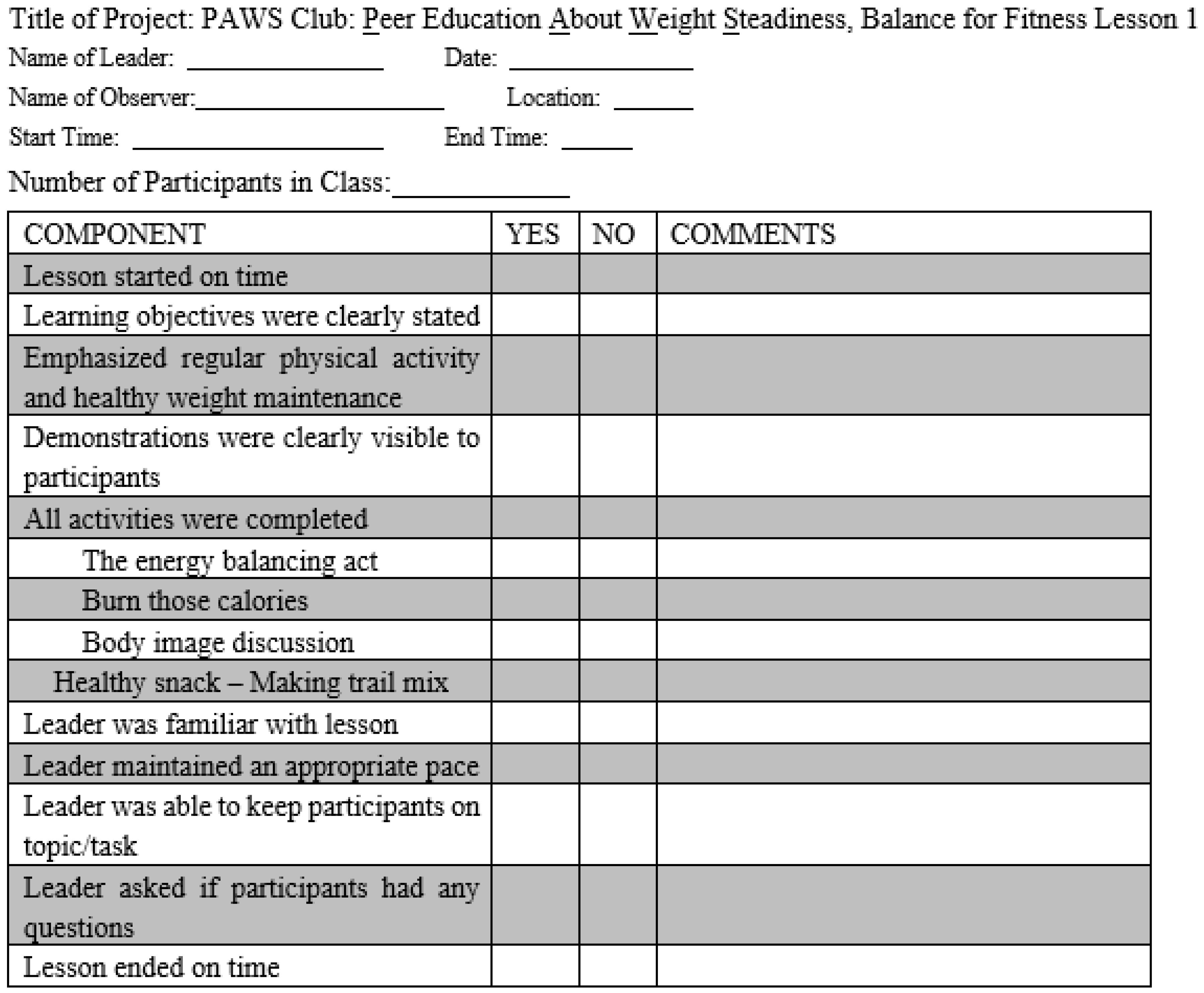

2.2. Details of the Fidelity Log Components

2.3. Scoring of the Fidelity Logs/Data Analyses

3. Results

3.1. Process Evaluation Results

3.2. Comparison between Peer-Led and Adult-Led Sessions

4. Discussion

4.1. Strengths

4.2. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Process evaluation results by session: peer-led sessions.

| Sessions | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | Ave |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Number of lessons evaluated | 13 | 12 | 13 | 12 | 13 | 12 | 12 | 12 | 12 | 10 | 12 | 12 | 12 |
| Number of participants (R) | 6 | 6 | 6 | 4 | 4 | 5 | 4 | 4 | 4 | 4 | 5 | 4 | 5 |
| Number of leaders (R) | 3 | 3 | 2 | 2 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 |
| Duration (minutes) (D) | 64 | 71 | 66 | 70 | 71 | 70 | 75 | 69 | 62 | 60 | 70 | 63 | 68 |
| Lesson started on time (%) (F) | 46 | 100 | 46 | 92 | 62 | 83 | 75 | 66 | 66 | 50 | 75 | 92 | 71 |
| Lesson objectives were clearly stated (%) (F) | 77 | 50 | 62 | 33 | 38 | 75 | 92 | 33 | 75 | 50 | 75 | 75 | 61 |
| Emphasized objectives of the lesson (%) (Q) | 85 | 83 | 46 | 33 | 62 | 58 | 83 | 42 | 66 | 40 | 75 | 75 | 62 |
| Demonstrations visible to participants (%) (F) | 69 | 100 | 92 | 66 | 85 | 83 | 92 | 58 | 83 | 70 | 83 | 75 | 80 |
| Activities completed (%) (F) | 98 | 88 | 78 | 92 | 80 | 72 | 85 | 55 | 82 | 67 | 81 | 77 | 80 |
| Leader familiar with lesson (%) (F) | 62 | 42 | 46 | 83 | 46 | 50 | 42 | 58 | 66 | 50 | 66 | 58 | 56 |
| Leader maintained an appropriate pace (%) (Q) | 69 | 83 | 77 | 92 | 38 | 50 | 58 | 75 | 66 | 50 | 58 | 75 | 66 |
| Leader kept participants on track (%) (Q) | 69 | 50 | 77 | 58 | 54 | 75 | 66 | 66 | 58 | 20 | 50 | 75 | 60 |
| Leader asked if participants had any questions (%) (Q) | 31 | 33 | 46 | 25 | 8 | 25 | 25 | 25 | 17 | 0 | 8 | 17 | 22 |
| Lesson ended on time (%) (F) | 54 | 67 | 54 | 92 | 85 | 75 | 75 | 75 | 75 | 30 | 75 | 83 | 70 |

Process evaluation results by session: adult-led sessions.

| Sessions | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | Ave |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Number of lessons evaluated | 8 | 12 | 9 | 12 | 9 | 12 | 10 | 12 | 10 | 14 | 9 | 13 | 11 |
| Number of participants (R) | 7 | 6 | 6 | 6 | 6 | 6 | 6 | 6 | 5 | 6 | 6 | 5 | 6 |
| Number of leaders (R) | 2 | 1 | 2 | 1 | 2 | 1 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| Duration (minutes) (D) | 65 | 78 | 76 | 80 | 80 | 78 | 76 | 77 | 75 | 79 | 82 | 60 | 76 |
| Lesson started on time (%) (F) | 50 | 83 | 66 | 100 | 89 | 83 | 90 | 75 | 70 | 86 | 78 | 77 | 79 |
| Lesson objectives were clearly stated (%) (F) | 63 | 42 | 89 | 66 | 78 | 83 | 90 | 75 | 100 | 64 | 89 | 69 | 76 |
| Emphasized objectives of the lesson (%) (Q) | 88 | 83 | 56 | 58 | 89 | 92 | 100 | 83 | 90 | 86 | 100 | 77 | 84 |
| Demonstrations visible to participants (%) (F) | 75 | 92 | 100 | 92 | 89 | 92 | 100 | 83 | 100 | 86 | 89 | 77 | 90 |
| Activities completed (%) (F) | 100 | 85 | 95 | 100 | 91 | 86 | 93 | 71 | 92 | 80 | 68 | 75 | 86 |
| Leader familiar with lesson (%) (F) | 75 | 66 | 100 | 92 | 89 | 100 | 100 | 92 | 100 | 93 | 89 | 69 | 89 |
| Leader maintained an appropriate pace (%) (Q) | 100 | 92 | 78 | 92 | 78 | 100 | 100 | 83 | 90 | 93 | 89 | 77 | 89 |
| Leader kept participants on track (%) (Q) | 100 | 92 | 100 | 92 | 100 | 92 | 100 | 92 | 90 | 86 | 89 | 77 | 93 |
| Leader asked if participants had any questions (%) (Q) | 88 | 75 | 78 | 66 | 56 | 83 | 90 | 75 | 90 | 79 | 89 | 54 | 77 |

| Evaluation Criteria | Peer-Led Sessions | Adult-Led Sessions | Difference between Peer- and Adult-Led Sessions | p-Value |
|---|---|---|---|---|
| Number of times each lesson evaluated | 12 | 11 | 1 | N/A |
| Number of leaders (R) | 2–5 | 1–3 | 1–2 | N/A |
| Number of participants (R) | 4–5 | 5–6 | 1 | N/A |
| Duration of the lesson (D) | 68 min | 76 min | 8 min | N/A |
| Lesson started on time (F) | 71% (medium) | 79% (high) | 8% | 0.241 |
| Lesson objectives were clearly stated (F) | 61% (medium) | 76% (high) | 15% | 0.062 |
| Emphasized objectives of lesson (Q) | 62% (medium) | 84% (high) | 22% | 0.005 * |
| Demonstrations visible to participants (F) | 80% (high) | 90% (high) | 10% | 0.031 * |
| % of activities completed (F) | 80% (high) | 86% (high) | 6% | 0.249 |
| Leader familiar with lesson (F) | 56% (medium) | 89% (high) | 33% | <0.001 * |
| Leader maintained an appropriate pace (Q) | 66% (medium) | 89% (high) | 23% | <0.001 * |
| Leader kept participants on track (Q) | 60% (medium) | 93% (high) | 33% | <0.001 * |
| Leader asked if participants had any questions (Q) | 22% (low) | 77% (high) | 55% | <0.001 * |
| Lesson ended on time (F) | 70% (medium) | 78% (high) | 8% | 0.205 |
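As an illustration of how the summary percentages and differences in the comparison table relate to the session-level tables, the following minimal Python sketch recomputes two rows from the per-session values. It assumes that the "Ave" column is the unweighted mean of the 12 session percentages rounded to the nearest whole number; the source does not state this, although the assumption reproduces the reported figures for these two rows. The names `peer_led`, `adult_led`, and `summarize` are hypothetical. The p-values are not recomputed, because the statistical test is not described in this section.

```python
from statistics import mean

# Per-session percentages copied from the session-level tables (sessions 1-12).
peer_led = {
    "Leader familiar with lesson": [62, 42, 46, 83, 46, 50, 42, 58, 66, 50, 66, 58],
    "Leader asked if participants had any questions": [31, 33, 46, 25, 8, 25, 25, 25, 17, 0, 8, 17],
}
adult_led = {
    "Leader familiar with lesson": [75, 66, 100, 92, 89, 100, 100, 92, 100, 93, 89, 69],
    "Leader asked if participants had any questions": [88, 75, 78, 66, 56, 83, 90, 75, 90, 79, 89, 54],
}


def summarize(criterion):
    """Return (peer average, adult average, adult minus peer) in whole percentage points.

    Assumption: averages are unweighted means across sessions, rounded to integers.
    """
    peer_avg = round(mean(peer_led[criterion]))
    adult_avg = round(mean(adult_led[criterion]))
    return peer_avg, adult_avg, adult_avg - peer_avg


for criterion in peer_led:
    peer_avg, adult_avg, gap = summarize(criterion)
    print(f"{criterion}: peer {peer_avg}%, adult {adult_avg}%, difference {gap} points")
```

Under this assumption, the sketch returns 56%, 89%, and 33 points for leader familiarity, and 22%, 77%, and 55 points for asking participants whether they had questions, matching the comparison table.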

| Fidelity Log Question | Summary for Peer Educators | Summary for Adult Educators |
|---|---|---|
| Lesson started on time | Most lessons started a little late because the educators or the participants arrived late, participants were finishing questionnaires, two lessons were scheduled for the same day, the peer educators were not well prepared, or a snack was served first. | Lessons started a little late most of the time because the adult educators arrived late after finishing their work for the day, the kids arrived late, snacks were served first, or the participants had to finish the study questionnaires. |
| Lesson objectives were clearly stated | The lesson objectives were not explicitly stated most of the time. Sometimes the peer educators read the overview or mentioned a couple of objectives. Mostly the peer educators read the directions for the activities from the lesson plans. Sometimes the peer educators asked the participants questions before starting the class. | Most of the time the lesson objectives were not clearly stated by the adult educators. They did explain what they would do in class that day or went over the overview of that day's lesson. Sometimes the adult educators also went over what was learned in the last session. |
| Emphasized objectives of lesson | The peer educators did not emphasize all the lesson objectives. The objectives they discussed more were how to be more physically active, the health impacts of exercise, eating breakfast, and eating fruits and vegetables. | The adult educators emphasized physical activity, key benefits of physical activity, ways to eat different types of fruits and vegetables, healthy snacks, how often Americans eat out, different restaurant foods, the amount of sugar listed on drink labels, goal setting, food labels, family mealtimes, shopping and planning for meals, and MyPlate. |
| Demonstrations visible to participants | Peer educators made the demonstrations visible to the participants. Sometimes the participants were not interested because they did not understand the activity. Peer educators did not explain the purpose of the activities but sometimes asked the participants questions afterwards. | Adult educators sometimes did the demonstrations at the front of the room instead of at the table to prevent crowding. They gave effective personal examples and clear instructions and explanations so that the participants could understand the activity and perform it on their own. |
| All activities were completed | Peer educators often missed some activities, rushed through some, or went out of order. | Adult educators completed the activities most of the time. Sometimes they missed activities or modified them. |
| % of activities completed | No comments | No comments |
| Leader familiar with lesson | Peer educators mostly were not familiar with the lesson, did the activities out of order, and read from the lesson plans during the session. They were interactive with participants and were good facilitators. They needed help or reminders from the site coordinator. Sometimes they did not know which lesson was assigned for the day. Some skipped parts of the lesson or forgot to bring program binders. | Adult educators were familiar with the lesson most of the time, sometimes added a lot of extras, and reviewed the lesson to make sure all the content was covered. |
| Leader maintained an appropriate pace | Peer educators were mostly slow in delivering the lesson: they encouraged too many distractions, lingered at snack time, needed to be told when to move on, skipped some points in the lesson, went out of order, and had gaps in the lesson because they were unprepared. Sometimes they finished the lesson quickly and without much discussion with the participants. | Adult educators maintained a good pace most of the time to ensure all the content was covered. They sometimes added extra information. They also asked the kids if they had any questions related to the content. |
| Leader kept participants on track | Most of the time the peer educators were not able to keep the participants on topic: the educators were reading from the program binders, participants started side conversations and the educators encouraged off-topic chatting, participants interrupted the lesson by distracting other children, peer leaders were on their phones, the kids were rowdy and the educators were unable to control them, and sometimes the leaders lost focus and did not cover the content sufficiently. Sometimes the peer educators were able to bring the participants back on task after the interruptions. | Adult educators did their best to keep the participants on task and on topic, asked questions to keep the participants engaged, and redirected the kids and brought them back into focus after distractions such as snack time or physical activity. Sometimes the kids did not cooperate. |
| Leader asked if participants had any questions | Most of the time peer educators just lectured or read from the lesson plans and very rarely asked questions. However, if the participants asked questions, they answered them thoughtfully. | Adult educators encouraged discussion by asking questions, discussed everything more than once, reviewed the previous lesson, answered all the questions, and turned them into learning opportunities. |
| Lesson ended on time | Most of the time the lesson ended a little early but around the expected end time; a few times it ended too late. | Most of the time the lesson ended a little early, but the adult educators gave a good review and reminders at the end. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Muzaffar, H.; Nickols-Richardson, S.M. Using Process Evaluation Results to Compare Peer and Adult Leader Delivery of the PAWS (Peer-Education about Weight Steadiness) Club Program. Nutrients 2021, 13, 3901. https://doi.org/10.3390/nu13113901
