eHealth Literacy of Australian Undergraduate Health Profession Students: A Descriptive Study

Abstract

1. Introduction

eHealth Literacy

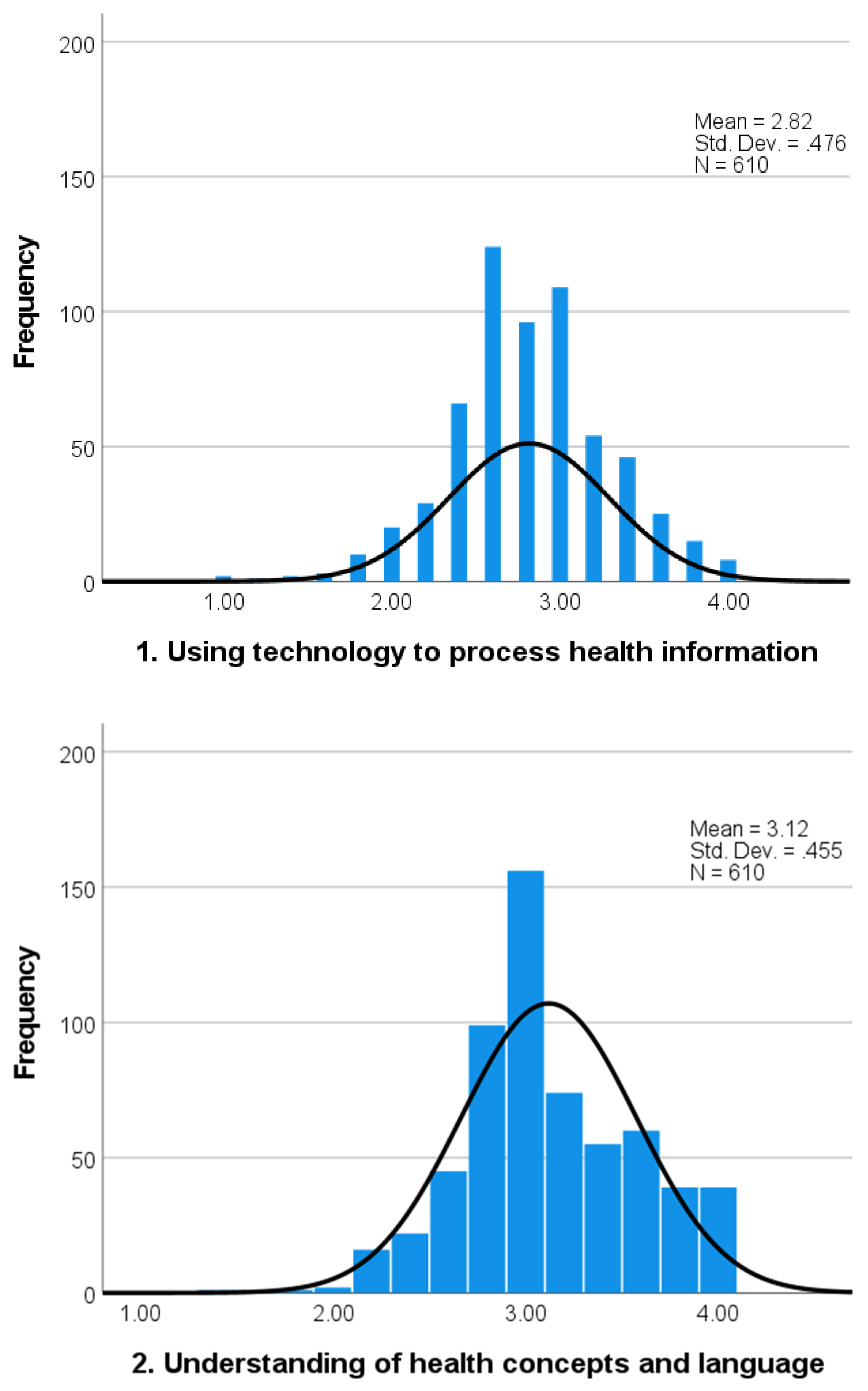

- Using technology to process health information;

- Understanding of health concepts and language;

- Ability to actively engage with digital services;

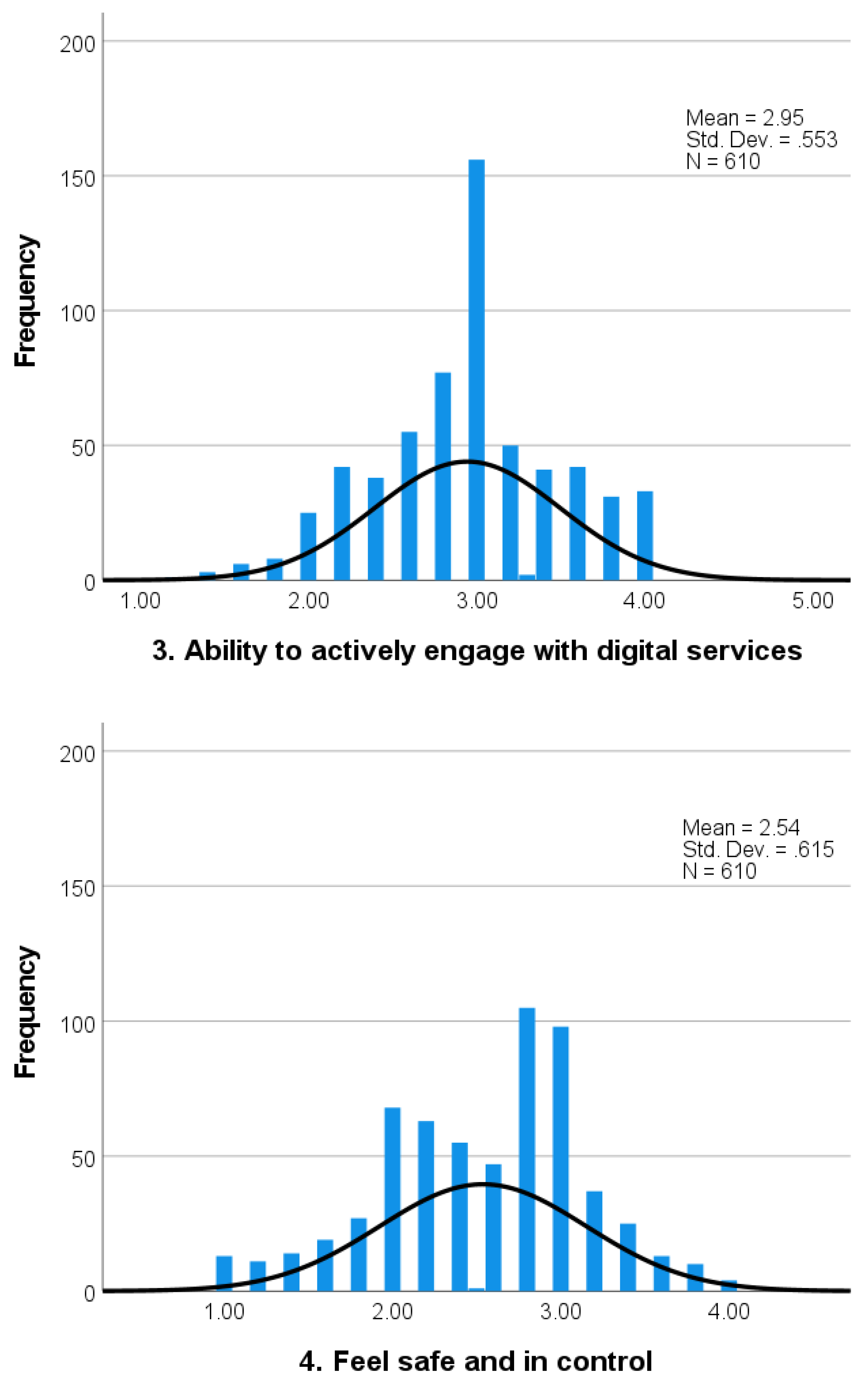

- Feel safe and in control;

- Motivated to engage with digital services;

- Access to digital services that work; and

- Digital services that suit individual needs [26].
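The eHLQ reports one score per scale rather than a single total. As a minimal sketch, assuming each scale score is the unweighted mean of its answered items on a 1–4 agreement scale, a respondent's scale profile could be computed as below. The item IDs, the item-to-scale mapping and the example responses are hypothetical and do not correspond to real eHLQ items.

```python
from statistics import mean

# Hypothetical item-to-scale mapping: item IDs are invented for illustration
# and do NOT correspond to the real eHLQ items.
SCALE_ITEMS = {
    "1. Using technology to process health information": ["q1", "q2", "q3"],
    "4. Feel safe and in control": ["q4", "q5"],
}

def scale_scores(responses, scale_items=SCALE_ITEMS):
    """Return the mean 1-4 response per scale, skipping missing (None) items.

    Assumption: a scale score is the unweighted mean of its answered items;
    a scale with no answered items is reported as None (missing).
    """
    scores = {}
    for scale, items in scale_items.items():
        answered = [responses[i] for i in items if responses.get(i) is not None]
        if any(not 1 <= r <= 4 for r in answered):
            raise ValueError(f"responses for {scale!r} must lie in 1-4")
        scores[scale] = mean(answered) if answered else None
    return scores

# Hypothetical respondent who left q5 unanswered.
example = {"q1": 3, "q2": 4, "q3": 2, "q4": 2, "q5": None}
print(scale_scores(example))
```

Keeping scales separate (instead of summing everything) mirrors the multidimensional reporting used throughout the results tables.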

- What are the eHealth literacy strengths and challenges of undergraduate health profession students as determined by the eHLQ?

- What sociodemographic factors are associated with the eHealth literacy of undergraduate health profession students?

- What are the implications for curriculum development with respect to eHealth literacy of undergraduate health profession students?

2. Materials and Methods

2.1. The eHealth Literacy Questionnaire (eHLQ)

2.2. Data Collection

2.3. Statistical Analysis

3. Results

3.1. Participant Characteristics

3.2. eHealth Literacy and Sociodemographic Factors

3.3. Effects of Sociodemographic Factors on the Combination of the Seven eHLQ Scales

3.4. Effects of Sociodemographic Factors on Individual eHLQ Scales

4. Discussion

4.1. Principal Findings

4.2. eHealth Literacy

4.3. Sociodemographic Factors

4.4. Implications for Curriculum Development

4.5. Limitations and Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

| Scale | 1. Using Tech | 2. Health Concepts | 3. Ability | 4. Feel Safe | 5. Motivated | 6. Access |
|---|---|---|---|---|---|---|
| 2. Understanding of health concepts and language | 0.58 | | | | | |
| 3. Ability to actively engage with digital services | 0.64 | 0.60 | | | | |
| 4. Feel safe and in control | 0.40 | 0.29 | 0.32 | | | |
| 5. Motivated to engage with digital services | 0.78 | 0.47 | 0.56 | 0.41 | | |
| 6. Access to digital services that work | 0.54 | 0.32 | 0.46 | 0.59 | 0.55 | |
| 7. Digital services that suit individual needs | 0.65 | 0.39 | 0.56 | 0.52 | 0.68 | 0.72 |
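The lower-triangular layout above is compact but awkward to query. A small sketch, assuming the Appendix B entries are pairwise correlations between scale scores (the table caption is not preserved here), stores the triangle as a dictionary keyed by scale pair and finds the strongest and weakest associations. The short scale labels and helper names are illustrative only.

```python
SCALES = ["Using Tech", "Health Concepts", "Ability", "Feel Safe",
          "Motivated", "Access", "Suit Needs"]

# Rows 2-7 of the Appendix B lower triangle, copied from the table above.
LOWER = [
    [0.58],
    [0.64, 0.60],
    [0.40, 0.29, 0.32],
    [0.78, 0.47, 0.56, 0.41],
    [0.54, 0.32, 0.46, 0.59, 0.55],
    [0.65, 0.39, 0.56, 0.52, 0.68, 0.72],
]

def as_pairs(scales, lower):
    """Map each (row scale, column scale) pair to its coefficient."""
    pairs = {}
    for i, row in enumerate(lower, start=1):   # row i belongs to scales[i]
        for j, value in enumerate(row):         # column j belongs to scales[j], j < i
            pairs[(scales[i], scales[j])] = value
    return pairs

def lookup(pairs, a, b):
    """Symmetric lookup, so that r(a, b) == r(b, a)."""
    return pairs.get((a, b), pairs.get((b, a)))

pairs = as_pairs(SCALES, LOWER)
strongest = max(pairs, key=pairs.get)
weakest = min(pairs, key=pairs.get)
print(strongest, pairs[strongest])  # ('Motivated', 'Using Tech') 0.78
print(weakest, pairs[weakest])      # ('Feel Safe', 'Health Concepts') 0.29
```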

Appendix C

| Comparison | 1. Using Tech | 2. Health Concepts | 3. Engage | 4. Feel Safe | 5. Motive | 6. Access | 7. Suit Needs |
|---|---|---|---|---|---|---|---|
| Age: mean diff [95% CI], p | | | | | | | |
| Early adult vs. young adult | 0.03 [−0.14, 0.19], 0.99 | 0.14 [−0.01, 0.29], 0.07 | −0.01 [−0.19, 0.17], 1.00 | 0.33 [0.12, 0.53], <0.001 | 0.01 [−0.17, 0.18], 1.00 | 0.21 [0.04, 0.37], 0.01 | 0.09 [−0.10, 0.28], 0.62 |
| Early adult vs. middle-aged adult | 0.19 [0.06, 0.33], <0.001 | 0.23 [0.10, 0.36], <0.001 | 0.27 [0.11, 0.42], <0.001 | 0.29 [0.11, 0.46], <0.001 | 0.20 [0.05, 0.35], <0.001 | 0.18 [0.03, 0.31], 0.01 | 0.23 [0.07, 0.39], <0.001 |
| Early adult vs. older adult | 0.26 [0.12, 0.39], <0.001 | 0.30 [0.17, 0.43], <0.001 | 0.41 [0.25, 0.56], <0.001 | 0.42 [0.24, 0.59], <0.001 | 0.28 [0.13, 0.43], <0.001 | 0.25 [0.11, 0.39], <0.001 | 0.38 [0.22, 0.54], <0.001 |
| Young adult vs. middle-aged adult | 0.17 [0.02, 0.32], 0.02 | 0.09 [−0.05, 0.23], 0.37 | 0.28 [0.11, 0.45], <0.001 | −0.04 [−0.23, 0.15], 0.96 | 0.19 [0.03, 0.35], 0.01 | −0.03 [−0.18, 0.12], 0.95 | 0.14 [−0.03, 0.32], 0.14 |
| Young adult vs. older adult | 0.23 [0.08, 0.38], <0.001 | 0.16 [0.02, 0.30], 0.02 | 0.42 [0.25, 0.59], <0.001 | 0.09 [−0.10, 0.28], 0.65 | 0.27 [0.11, 0.43], <0.001 | 0.04 [−0.11, 0.20], 0.88 | 0.29 [0.12, 0.47], <0.001 |
| Middle-aged adult vs. older adult | 0.06 [−0.06, 0.19], 0.59 | 0.07 [−0.05, 0.19], 0.38 | 0.14 [−0.00, 0.28], 0.05 | 0.12 [−0.03, 0.28], 0.19 | 0.08 [−0.06, 0.22], 0.42 | 0.08 [−0.05, 0.20], 0.41 | 0.15 [0.00, 0.29], 0.05 |
| Education: mean diff [95% CI], p | | | | | | | |
| Secondary or below vs. TAFE/Diploma | 0.11 [−0.01, 0.23], 0.10 | 0.22 [0.10, 0.33], <0.001 | 0.18 [0.04, 0.32], 0.01 | 0.27 [0.12, 0.42], <0.001 | 0.16 [0.03, 0.29], 0.01 | 0.16 [0.04, 0.28], <0.001 | 0.18 [0.04, 0.32], 0.01 |
| Secondary or below vs. University or above | 0.10 [−0.02, 0.22], 0.11 | 0.09 [−0.02, 0.21], 0.15 | 0.13 [−0.01, 0.27], 0.07 | 0.32 [0.17, 0.47], <0.001 | 0.14 [0.01, 0.27], 0.04 | 0.24 [0.12, 0.36], <0.001 | 0.18 [0.04, 0.32], 0.01 |
| TAFE/Diploma vs. University or above | −0.00 [−0.11, 0.10], 1.00 | −0.13 [−0.22, −0.03], 0.01 | −0.05 [−0.17, 0.07], 0.60 | 0.05 [−0.08, 0.18], 0.60 | −0.02 [−0.13, 0.09], 0.88 | 0.08 [−0.03, 0.18], 0.20 | 0.00 [−0.12, 0.12], 1.00 |
| Use of digital platform: mean diff [95% CI], p | | | | | | | |
| Low user vs. medium user | −0.26 [−0.43, −0.11], <0.001 | −0.24 [−0.40, −0.09], <0.001 | −0.19 [−0.38, −0.01], 0.04 | −0.30 [−0.51, −0.10], <0.001 | −0.32 [−0.49, −0.15], <0.001 | −0.14 [−0.30, 0.03], 0.12 | −0.27 [−0.46, −0.08], <0.01 |
| Low user vs. high user | −0.34 [−0.53, −0.15], <0.001 | −0.28 [−0.46, −0.10], <0.001 | −0.39 [−0.61, −0.17], <0.001 | −0.38 [−0.62, −0.13], <0.001 | −0.45 [−0.65, −0.24], <0.001 | −0.21 [−0.40, −0.01], 0.03 | −0.43 [−0.66, −0.21], <0.001 |
| Medium user vs. high user | −0.07 [−0.20, 0.05], 0.30 | −0.04 [−0.15, 0.08], 0.72 | −0.20 [−0.34, −0.06], <0.001 | −0.07 [−0.23, 0.09], 0.55 | −0.13 [−0.26, 0.01], 0.07 | −0.07 [−0.19, 0.06], 0.41 | −0.16 [−0.31, −0.02], 0.02 |
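Each point estimate in Appendix C is a difference between two group means reported with the univariate results (the table of F statistics and group means). A minimal sketch recomputes the six age-group comparisons for the Feel safe and in control scale from those rounded means. It does not reproduce the adjusted confidence intervals or p-values, which depend on the post hoc procedure and the raw data; the helper name is illustrative.

```python
from itertools import combinations

# Rounded "Feel safe and in control" means by age group, as reported in the
# univariate results table (group mean rows for the Age factor).
FEEL_SAFE_BY_AGE = {
    "Early adult": 2.82,
    "Young adult": 2.49,
    "Middle-aged adult": 2.53,
    "Older adult": 2.40,
}

def pairwise_differences(means):
    """Return {(group_a, group_b): mean_a - mean_b} for every pair, in insertion order."""
    return {
        (a, b): round(means[a] - means[b], 2)
        for a, b in combinations(means, 2)
    }

for (a, b), diff in pairwise_differences(FEEL_SAFE_BY_AGE).items():
    print(f"{a} vs. {b}: {diff:+.2f}")
```

Five of the six recomputed differences match Appendix C exactly; middle-aged vs. older adult comes out as 0.13 against the reported 0.12, a rounding effect of working from two-decimal means.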

References

- Australian Commission on Safety and Quality in Health Care. National Safety and Quality Health Service Standards, 2nd ed.; ACSQHC: Sydney, Australia, 2017.

- Batterham, R.W.; Hawkins, M.; Collins, P.; Buchbinder, R.; Osborne, R.H. Health literacy: Applying current concepts to improve health services and reduce health inequalities. Public Health 2016, 132, 3–12.

- Bröder, J.; Chang, P.; Kickbusch, I.; Levin-Zamir, D.; McElhinney, E.; Nutbeam, D.; Okan, O.; Osborne, R.; Pelikan, J.; Rootman, I. IUHPE position statement on health literacy: A practical vision for a health literate world. Glob. Health Promot. 2018, 25, 79–88.

- World Health Organization. Health Literacy Development for the Prevention and Control of Noncommunicable Diseases: Volume 1. Overview; World Health Organization: Geneva, Switzerland, 2022; ISBN 978-92-4-005533-9.

- Friis, K.; Lasgaard, M.; Osborne, R.H.; Maindal, H.T. Gaps in understanding health and engagement with healthcare providers across common long-term conditions: A population survey of health literacy in 29473 Danish citizens. BMJ Open 2016, 6, e009627.

- Mather, C.; Douglas, T.; Jacques, A. Health literacy of undergraduate health profession students in Australia: A comparison of the island State of Tasmania and Other Australian universities. Kontakt 2018, 20, e386–e393.

- Cheng, C.; Beauchamp, A.; Elsworth, G.R.; Osborne, R.H. Applying the electronic health literacy lens: Systematic review of electronic health interventions targeted at socially disadvantaged groups. J. Med. Internet Res. 2020, 22, e18476.

- Foley, K.; Freeman, T.; Ward, P.; Lawler, A.; Osborne, R.; Fisher, M. Exploring access to, use of and benefits from population-oriented digital health services in Australia. Health Promot. Int. 2021, 36, 1105–1115.

- World Health Organization. Global Strategy on Digital Health 2020–2025; World Health Organization: Geneva, Switzerland, 2021.

- Risoldi Cochrane, Z.; Gregory, P.; Wilson, A. Readability of consumer health information on the internet: A comparison of US government–funded and commercially funded websites. J. Health Commun. 2012, 17, 1003–1010.

- Cheng, C.; Dunn, M. Health literacy and the Internet: A study on the readability of Australian online health information. Aust. N. Z. J. Public Health 2015, 39, 309–314.

- Australian Government. Australia’s National Digital Health Strategy. In Safe, Seamless and Secure: Evolving Health and Care to Meet the Needs of Modern Australia; Australian Digital Health Agency, Ed.; Australian Government: Canberra, Australia, 2017.

- United Nations Educational Scientific and Cultural Organization. A Global Framework for Reference on Digital Literacy Skills for Indicator 4.4.2, Information Paper no 51; UNESCO: Montreal, QC, Canada, 2018.

- World Health Organization. eHealth at WHO; World Health Organization: Geneva, Switzerland, 2019; Available online: https://www.who.int/ehealth/about/en (accessed on 5 March 2022).

- Showell, C.; Cummings, E.; Turner, P. The invisibility of disadvantage: Why do we not notice? Stud. Health Technol. Inform. 2017, 235, 388–392.

- World Health Organization. Digital Health Literacy; WHO: Geneva, Switzerland, 2017.

- Baig, M.M.; GholamHosseini, H.; Moqeem, A.A.; Mirza, F.; Lindén, M. A systematic review of wearable patient monitoring systems–current challenges and opportunities for clinical adoption. J. Med. Syst. 2017, 41, 115.

- Kashgary, A.; Alsolaimani, R.; Mosli, M.; Faraj, S. The role of mobile devices in doctor-patient communication: A systematic review and meta-analysis. J. Telemed. Telecare 2017, 23, 693–700.

- Mather, C.A.; Gale, F.; Cummings, E.A. Governing mobile technology use for continuing professional development in the Australian nursing profession. BMC Nurs. 2017, 16, 17.

- Mather, C.; Cummings, E.; Gale, F. Nurses as stakeholders in the adoption of mobile technology in Australian health care environments: Interview study. JMIR Nurs. 2019, 2, e14279.

- Australian Government. National Digital Health Workforce and Education Roadmap; Australian Digital Health Agency, Ed.; Australian Digital Health Agency: Sydney, Australia, 2020.

- Kasteren, Y.; Maeder, A.; Williams, P.A.; Damarell, R. Consumer perspectives on MyHealth Record: A review. Stud. Health Technol. Inform. 2017, 239, 146–152.

- Allen-Graham, J.; Mitchell, L.; Heriot, N.; Armani, R.; Langton, D.; Levinson, M.; Young, A.; Smith, J.A.; Kotsimbos, T.; Wilson, J.W. Electronic health records and online medical records: An asset or a liability under current conditions? Aust. Health Rev. 2018, 42, 59–65.

- Walsh, L.; Hemsley, B.; Allan, M.; Adams, N.; Balandin, S.; Georgiou, A.; Higgins, I.; McCarthy, S.; Hill, S. The e-health literacy demands of Australia’s my health record: A heuristic evaluation of usability. Perspect. Health Inf. Manag. 2017, 14, 1f.

- Norman, C.D.; Skinner, H.A. eHEALS: The eHealth literacy scale. J. Med. Internet Res. 2006, 8, e27.

- Norgaard, O.; Furstrand, D.; Klokker, L.; Karnoe, A.; Batterham, R.; Kayser, L.; Osborne, R.H. The e-health literacy framework: A conceptual framework for characterizing e-health users and their interaction with e-health systems. Knowl. Manag. E-Learn. Int. J. 2015, 7, 522–540.

- Chen, Y.-C.; Cheng, C.; Osborne, R.H.; Kayser, L.; Liu, C.-Y.; Chang, L.-C. Validity Testing and Cultural Adaptation of the eHealth Literacy Questionnaire (eHLQ) Among People With Chronic Diseases in Taiwan: Mixed Methods Study. J. Med. Internet Res. 2022, 24, e32855.

- Cheng, C.; Elsworth, G.; Osborne, R.H. Validity evidence based on relations to other variables of the eHealth Literacy Questionnaire (eHLQ): Bayesian approach to test for known-groups validity. J. Med. Internet Res. 2021, 23, e30243.

- Nielsen, A.S.; Hanna, L.; Larsen, B.F.; Appel, C.W.; Osborne, R.H.; Kayser, L. Readiness, acceptance and use of digital patient reported outcome in an outpatient clinic. Health Inform. J. 2022, 28, 14604582221106000.

- Buchbinder, R.; Batterham, R.; Elsworth, G.; Dionne, C.E.; Irvin, E.; Osborne, R.H. A validity-driven approach to the understanding of the personal and societal burden of low back pain: Development of a conceptual and measurement model. Arthritis Res. Ther. 2011, 13, R152.

- Kayser, L.; Karnoe, A.; Furstrand, D.; Batterham, R.; Christensen, K.B.; Elsworth, G.; Osborne, R.H. A multidimensional tool based on the eHealth literacy framework: Development and initial validity testing of the eHealth literacy questionnaire (eHLQ). J. Med. Internet Res. 2018, 20, e36.

- Park, J.Y.; Min, J. Exploring Canadian pharmacy students’ e-health literacy: A mixed method study. Pharm. Pract. 2020, 18, 1747.

- Dashti, S.; Peyman, N.; Tajfard, M.; Esmaeeli, H. E-Health literacy of medical and health sciences university students in Mashhad, Iran in 2016: A pilot study. Electron. Physician 2017, 9, 3966.

- Rathnayake, S.; Senevirathna, A. Self-reported eHealth literacy skills among nursing students in Sri Lanka: A cross-sectional study. Nurse Educ. Today 2019, 78, 50–56.

- Sharma, S.; Oli, N.; Thapa, B. Electronic health–literacy skills among nursing students. Adv. Med. Educ. Pract. 2019, 10, 527.

- Park, H.; Lee, E. Self-reported eHealth literacy among undergraduate nursing students in South Korea: A pilot study. Nurse Educ. Today 2015, 35, 408–413.

- Kim, S.; Jeon, J. Factors influencing eHealth literacy among Korean nursing students: A cross-sectional study. Nurs. Health Sci. 2020, 22, 667–674.

- Holt, K.A.; Overgaard, D.; Engel, L.V.; Kayser, L. Health literacy, digital literacy and eHealth literacy in Danish nursing students at entry and graduate level: A cross sectional study. BMC Nurs. 2020, 19, 22.

- Tsukahara, S.; Yamaguchi, S.; Igarashi, F.; Uruma, R.; Ikuina, N.; Iwakura, K.; Koizumi, K.; Sato, Y. Association of eHealth Literacy With Lifestyle Behaviors in University Students: Questionnaire-Based Cross-Sectional Study. J. Med. Internet Res. 2020, 22, e18155.

- United Kingdom Government. Essential Digital Skills; United Kingdom Government: London, UK, 2018.

- Cheng, C.; Elsworth, G.R.; Osborne, R.H. Validity Evidence of the eHealth Literacy Questionnaire (eHLQ) Part 2: Mixed Methods Approach to Evaluate Test Content, Response Process, and Internal Structure in the Australian Community Health Setting. J. Med. Internet Res. 2022, 24, e32777.

- Australian Bureau of Statistics. 2033.0.55.001-Census of Population and Housing: Socio-Economic Indexes for Areas (SEIFA); Australian Bureau of Statistics: Canberra, Australia, 2018.

- IBM Corp. IBM SPSS Statistics for Mac, version 25.0; IBM Corp: Armonk, NY, USA, 2017.

- Huberty, C.J.; Petoskey, M.D. Multivariate analysis of variance and covariance. In Handbook of Applied Multivariate Statistics and Mathematical Modeling; Tinsley, H.E.A., Brown, S.D., Eds.; Elsevier: New York, NY, USA, 2000; pp. 183–208.

- Huberty, C.; Morris, J. Multivariate analysis versus multiple univariate analysis. Psychol. Bull. 1989, 105, 302–308.

- Tabachnick, B.; Fidell, L. Using Multivariate Statistics, 6th ed.; Pearson Education Limited: Harlow, UK, 2016.

- Bates, R.A. Multivariate research methods. In Research in Organizations: Foundations and Methods of Inquiry; Holton, E.F., Swanson, R.A., Eds.; Berrett-Koehler: San Francisco, CA, USA, 2005; pp. 115–141.

- Levine, T.R.; Hullett, C.R. Eta squared, partial eta squared, and misreporting of effect size in communication research. Hum. Commun. Res. 2002, 28, 612–625.

- Richardson, J.T. Eta squared and partial eta squared as measures of effect size in educational research. Educ. Res. Rev. 2011, 6, 135–147.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Elsevier Science: St. Louis, MO, USA, 2013.

- Lee, S.; Lee, D.K. What is the proper way to apply the multiple comparison test? Korean J. Anesthesiol. 2018, 71, 353.

- Howell, D.C. Statistical Methods for Psychology; Cengage Learning: Boston, MA, USA, 2012.

- McDonald, K. Ambulance Tasmania Data Breach Referred to Police. Pulse+IT, 2021. Available online: https://www.pulseitmagazine.com.au/australian-ehealth/5872-ambulance-tasmania-data-breach-referred-to-police (accessed on 18 January 2021).

- Australian Government. Notifiable Data Breaches Scheme 12-Month Insights Report; Office of the Information Commissioner, Ed.; Australian Government: Canberra, Australia, 2019.

- Mather, C.; Cummings, E.; Gale, F. Mobile learning in nursing: Tales from the profession. Stud. Health Technol. Inform. 2018, 252, 112–117.

- Healthconsult. ADHA National Assessment Framework and Operational Arrangements for Mobile Health Applications Consultation Background Paper; Healthconsult: Sydney, Australia, 2021.

- Trezona, A.; Rowlands, G.; Nutbeam, D. Progress in implementing national policies and strategies for health literacy—What have we learned so far? Int. J. Environ. Res. Public Health 2018, 15, 1554.

- Pleasant, A.; Griffin, K.H.; Maish, C.; O’Leary, C.; Carmona, R. Health literacy interventions for children or adolescents: An overview and insights into practical applications. Int. Handb. Health Lit. 2019, 307.

- Kadimo, K.; Kebaetse, M.B.; Ketshogileng, D.; Seru, L.E.; Sebina, K.B.; Kovarik, C.; Balotlegi, K. Bring-your-own-device in medical schools and healthcare facilities: A review of the literature. Int. J. Med. Inform. 2018, 119, 94–102.

- Roy Morgan Research. Measuring Australia’s Digital Divide: The Australian Digital Inclusion Index 2019; Roy Morgan Research: Melbourne, Australia, 2019; Available online: https://www.csi.edu.au/media/2019_ADII_Report.pdf (accessed on 5 March 2022).

- Pew Research Center. Mobile Fact Sheet; Pew Research Center: Washington, DC, USA, 2021; Available online: https://www.pewresearch.org/internet/fact-sheet/mobile (accessed on 7 April 2022).

- Mather, C.; Cummings, E. Modelling digital knowledge transfer: Nurse supervisors transforming learning at point of care to advance nursing practice. Informatics 2017, 4, 12.

- Hanik, B.; Stellefson, M. E-Health Literacy Competencies among Undergraduate Health Education Students: A Preliminary Study. Int. Electron. J. Health Educ. 2011, 14, 46–58.

- Australian Health Practitioner Regulation Agency. Social Media: How to Meet Your Obligations under the National Law; Australian Government: Canberra, Australia, 2020. Available online: https://www.ahpra.gov.au/Publications/Social-media-guidance.aspx (accessed on 8 December 2020).

- Porter, S.R.; Whitcomb, M.E.; Weitzer, W.H. Multiple surveys of students and survey fatigue. New Dir. Inst. Res. 2004, 2004, 63–73.

| Characteristics | n (%) | Missing data (n) |
|---|---|---|
| Age (range 19–90; mean (SD) 44.7 (16.2)) | 597 | 13 |
| Early adult (19–25) | 129 (21.1) | |
| Young adult (26–40) | 99 (16.2) | |
| Middle-aged adult (41–55) | 186 (30.5) | |
| Older adult (56–90) | 183 (30.0) | |
| Sex | 605 | 5 |
| Female | 487 (79.8) | |
| Male | 118 (19.3) | |
| Education | 610 | 0 |
| Secondary school or below | 138 (22.6) | |
| Certificate or Diploma | 235 (38.5) | |
| University or above | 237 (38.9) | |
| Language at home | 608 | 2 |
| English | 497 (81.5) | |
| Other | 111 (18.2) | |
| Socioeconomic status (SES) * | 574 | 36 |
| IRSD 1–2 | 135 (22.1) | |
| IRSD 3–4 | 99 (16.2) | |
| IRSD 5–6 | 77 (12.6) | |
| IRSD 7–8 | 145 (23.8) | |
| IRSD 9–10 | 118 (19.3) | |
| Longstanding illness | 608 | 2 |
| Yes | 287 (47.0) | |
| No | 321 (52.6) | |
| Perceived health status | 610 | 0 |
| Good to Excellent | 529 (86.7) | |
| Fair to Poor | 81 (13.3) | |
| Private health insurance | 610 | 0 |
| Yes | 358 (58.7) | |
| No | 252 (41.3) | |
| Ownership of digital device | 610 | 0 |
| Fewer devices (owned 1–2 devices) | 245 (40.2) | |
| More devices (owned 3–4 devices) | 365 (59.8) | |
| Owned computer/laptop | 608 (99.7) | |
| Owned mobile phone or smartphone | 604 (99.0) | |
| Owned tablet | 366 (60.0) | |
| Owned other device | 32 (5.2) | |
| Use of digital communication platform | 610 | 0 |
| Low user (used 1–2 platforms) | 54 (8.9) | |
| Medium user (used 3–5 platforms) | 458 (75.1) | |
| High user (used 6–9 platforms) | 98 (16.1) | |
| Used email | 608 (99.7) | |
| Used text message | 597 (97.9) | |
| Used Facebook | 510 (83.6) | |
| Used Twitter | 69 (11.3) | |
| Used Instagram | 245 (40.2) | |
| Used Snapchat | 181 (29.7) | |
| Used WhatsApp/WeChat | 161 (26.4) | |
| Used blogging | 25 (4.1) | |
| Used forum/chat room | 138 (22.6) | |
| Used other communication platform | 16 (2.6) | |
| Looked for online information | 610 | 0 |
| Yes | 605 (99.2) | |
| No | 5 (0.8) | |
| Monitored health digitally | 610 | 0 |
| Yes | 325 (53.3) | |
| No | 285 (46.7) | |
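The grouped rows above follow fixed cut-points (age bands 19–25, 26–40, 41–55 and 56–90; platform use 1–2, 3–5 and 6–9). The reported percentages also match counts divided by the full sample of 610 rather than by the non-missing n (129/610 = 21.1% for early adults, whereas 129/597 would give 21.6%). A minimal sketch of both steps, assuming integer ages and platform counts; the helper names are illustrative, not taken from the study.

```python
# Cut-points as listed in the participant characteristics table (inclusive bounds).
AGE_BANDS = [
    (19, 25, "Early adult"),
    (26, 40, "Young adult"),
    (41, 55, "Middle-aged adult"),
    (56, 90, "Older adult"),
]
PLATFORM_BANDS = [(1, 2, "Low user"), (3, 5, "Medium user"), (6, 9, "High user")]

def band(value, bands):
    """Return the label of the inclusive band containing value, or None if outside all bands."""
    for low, high, label in bands:
        if low <= value <= high:
            return label
    return None

def percent(count, total=610):
    """Percentage of the full sample (N = 610), rounded to one decimal place."""
    return round(100 * count / total, 1)

print(band(44, AGE_BANDS), band(7, PLATFORM_BANDS))  # Middle-aged adult High user
print(percent(129), percent(54), percent(98))        # 21.1 8.9 16.1
```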

| Mean (SD), [95% CI] * | Missing Values (n) | |

|---|---|---|

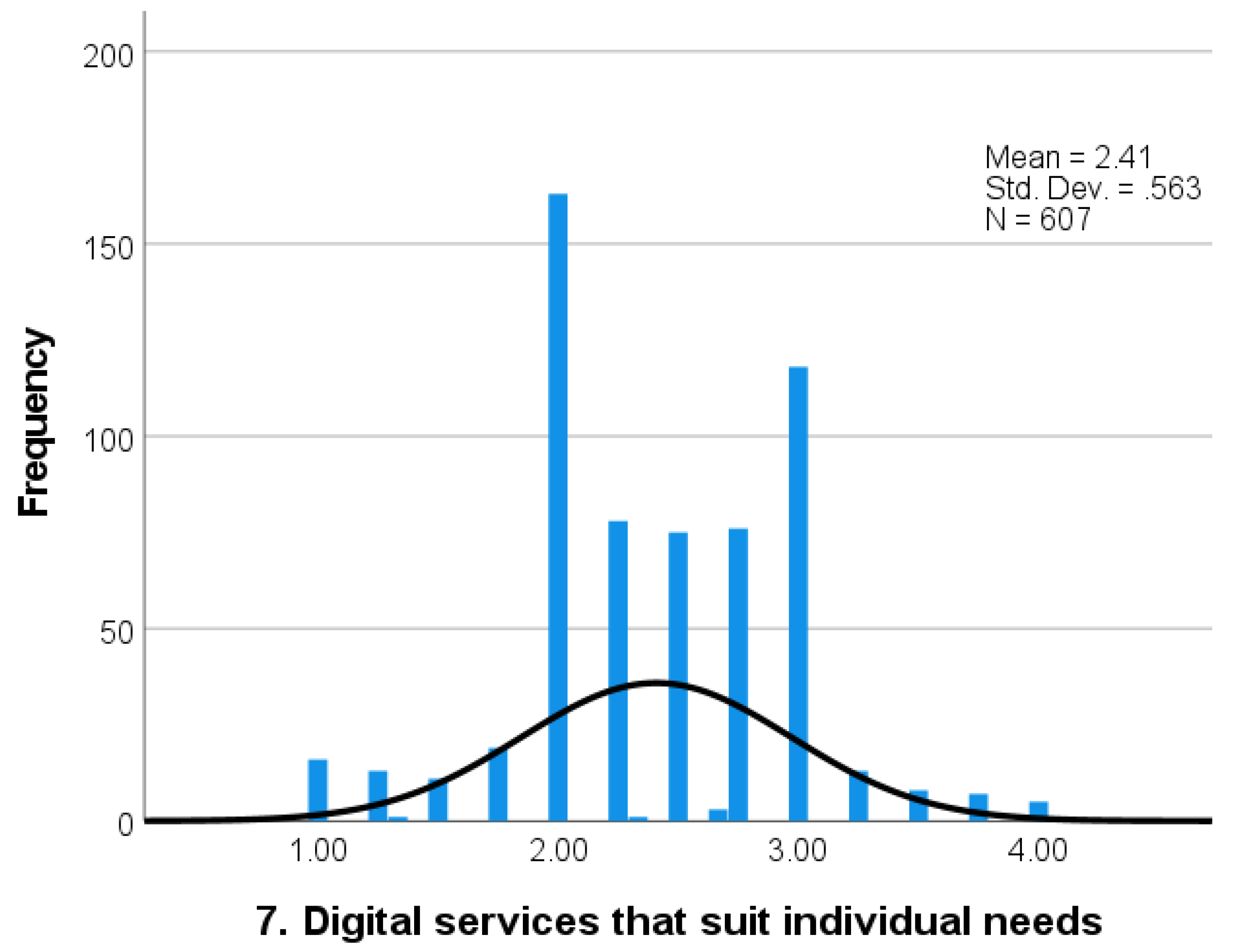

| 1. Using technology to process health information | 2.82 (0.48) [2.78–2.85] | 0 |

| 2. Understanding of health concepts and language | 3.12 (0.46) [3.0–3.16] | 0 |

| 3. Ability to actively engage with digital services | 2.95 (0.55) [2.90–2.99] | 0 |

| 4. Feel safe and in control | 2.54 (0.62) [2.49–2.59] | 0 |

| 5. Motivated to engage with digital services | 2.69 (0.52) [2.65–2.73] | 0 |

| 6. Access to digital services that work | 2.49 (0.48) [2.45–2.52] | 1 |

| 7. Digital services that suit individual needs | 2.41 (0.56) [2.36–2.45] | 3 |
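The intervals in this table are consistent with the usual large-sample interval mean ± 1.96 × SD/√n. A minimal sketch, assuming the normal critical value 1.96 (with n ≈ 610 the t critical value differs only in the third decimal) and n = 610 for scales with no missing values; the function name is illustrative.

```python
import math

def mean_ci95(mean, sd, n, z=1.96):
    """Large-sample 95% CI for a mean: mean ± z * sd / sqrt(n)."""
    half_width = z * sd / math.sqrt(n)
    return mean - half_width, mean + half_width

# Scale 4 ("Feel safe and in control"): 2.54 (0.62), no missing values.
low, high = mean_ci95(2.54, 0.62, 610)
print(f"[{low:.2f}, {high:.2f}]")  # [2.49, 2.59]

# Scale 2 ("Understanding of health concepts and language"): 3.12 (0.46).
low, high = mean_ci95(3.12, 0.46, 610)
print(f"[{low:.2f}, {high:.2f}]")  # [3.08, 3.16]
```

Because the inputs are already rounded to two decimals, recomputed limits for the other scales agree with the table to within 0.01.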

| Variable | Pillai’s Trace | F | df | Error df | p | η² * |
|---|---|---|---|---|---|---|
| Age | 0.19 | 5.61 | 21 | 1758 | <0.001 | 0.06 |
| Sex | 0.03 | 2.29 | 7 | 594 | 0.03 | 0.03 |
| Education | 0.09 | 3.94 | 14 | 1198 | <0.001 | 0.04 |
| Language at home | 0.04 | 3.35 | 7 | 597 | <0.01 | 0.04 |
| SES | 0.06 | 1.12 | 28 | 2252 | 0.30 | 0.01 |
| Longstanding illness | 0.01 | 1.05 | 7 | 597 | 0.40 | 0.01 |
| Perceived health status | 0.02 | 1.42 | 7 | 599 | 0.19 | 0.02 |
| Private health insurance | 0.03 | 2.85 | 7 | 599 | 0.01 | 0.03 |
| Ownership of digital device | 0.07 | 6.34 | 7 | 599 | <0.001 | 0.07 |
| Use of digital communication platform | 0.08 | 3.64 | 14 | 1198 | <0.001 | 0.04 |
| Monitored health digitally | 0.17 | 17.19 | 7 | 599 | <0.001 | 0.17 |
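For a one-way MANOVA on p = 7 scales with a factor of k groups, the hypothesis df in this table equals p·(k − 1), and the η² column matches V/s, where V is Pillai's trace and s = min(p, k − 1). That V/s definition of partial η² for Pillai's trace is a common one (SPSS multivariate output uses it, as far as we know); reading it into this table is an inference from the reported numbers, not a stated method. A small sketch with an illustrative function name:

```python
def pillai_summary(pillai_v, n_groups, n_dv=7):
    """Hypothesis df and partial eta squared for a one-way MANOVA.

    n_dv: number of dependent variables (the seven eHLQ scales).
    Assumption: partial eta squared = V / s with s = min(n_dv, n_groups - 1).
    """
    q = n_groups - 1                  # hypothesis df of the factor
    s = min(n_dv, q)
    hypothesis_df = n_dv * q          # numerator df of the approximate F
    return hypothesis_df, pillai_v / s

# (Pillai's trace, number of groups) taken from the table above.
for name, v, k in [("Age", 0.19, 4), ("Education", 0.09, 3),
                   ("SES", 0.06, 5), ("Monitored health digitally", 0.17, 2)]:
    df, eta = pillai_summary(v, k)
    print(f"{name}: df = {df}, partial eta^2 = {eta:.3f}")
```

This prints df values of 21, 14, 28 and 7 (matching the df column) and effect sizes of 0.063, 0.045, 0.015 and 0.170. Because V is itself rounded to two decimals, these agree with the reported η² only to within rounding.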

| Variable / group | n | 1. Using Tech | 2. Health Concepts | 3. Engage | 4. Feel Safe | 5. Motive | 6. Access | 7. Suit Needs |
|---|---|---|---|---|---|---|---|---|
| Age | | | | | | | | |
| F (3, 593) | | 10.46 | 13.18 | 22.07 | 12.82 | 10.90 | 7.37 | 14.06 |
| p | | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 |
| η² | | 0.05 | 0.06 | 0.10 | 0.06 | 0.05 | 0.04 | 0.07 |
| Group | n | Mean score (SD) [95% CI] | | | | | | |
| Early adult (age 19–25) | 129 | 2.96 (0.53) [2.87, 3.05] | 3.31 (0.45) [3.24, 3.39] | 3.16 (0.47) [3.07, 3.24] | 2.82 (0.52) [2.73, 2.91] | 2.84 (0.56) [2.75, 2.94] | 2.66 (0.47) [2.57, 2.73] | 2.62 (0.54) [2.52, 2.71] |
| Young adult (age 26–40) | 99 | 2.94 (0.44) [2.84, 3.02] | 3.17 (0.45) [3.08, 3.26] | 3.17 (0.49) [3.07, 3.26] | 2.49 (0.64) [2.36, 2.62] | 2.83 (0.49) [2.74, 2.93] | 2.45 (0.49) [2.35, 2.55] | 2.53 (0.53) [2.42, 2.63] |
| Middle-aged adult (age 41–55) | 186 | 2.77 (0.44) [2.70, 2.83] | 3.08 (0.42) [3.02, 3.14] | 2.89 (0.53) [2.81, 2.96] | 2.53 (0.59) [2.44, 2.61] | 2.64 (0.49) [2.57, 2.72] | 2.48 (0.47) [2.41, 2.55] | 2.38 (0.53) [2.31, 2.46] |
| Older adult (age 56–90) | 183 | 2.71 (0.45) [2.64, 2.77] | 3.01 (0.44) [2.95, 3.07] | 2.75 (0.58) [2.67, 2.83] | 2.40 (0.61) [2.32, 2.50] | 2.56 (0.50) [2.49, 2.64] | 2.40 (0.47) [2.34, 2.47] | 2.24 (0.56) [2.16, 2.32] |
| Sex | | | | | | | | |
| F (1, 603) | | 2.60 | 6.96 | 9.70 | 0.31 | 5.97 | 0.37 | 0.86 |
| p | | 0.12 | 0.01 | <0.01 | 0.58 | 0.02 | 0.54 | 0.35 |
| η² | | 0.00 | 0.01 | 0.02 | 0.00 | 0.01 | 0.00 | 0.00 |
| Group | n | Mean score (SD) [95% CI] | | | | | | |
| Male | 118 | 2.88 (0.51) [2.78, 2.97] | 3.22 (0.47) [3.13, 3.30] | 3.09 (0.53) [2.99, 3.18] | 2.57 (0.65) [2.46, 2.69] | 2.80 (0.52) [2.70, 2.89] | 2.51 (0.52) [2.42, 2.61] | 2.45 (0.60) [2.34, 2.56] |
| Female | 487 | 2.80 (0.47) [2.76, 2.84] | 3.10 (0.45) [3.06, 3.14] | 2.91 (0.56) [2.86, 2.96] | 2.54 (0.60) [2.48, 2.59] | 2.67 (0.52) [2.62, 2.71] | 2.48 (0.48) [2.44, 2.52] | 2.40 (0.55) [2.35, 2.45] |
| Education | | | | | | | | |
| F (2, 607) | | 2.56 | 10.85 | 4.73 | 13.29 | 4.35 | 11.00 | 5.35 |
| p | | 0.08 | <0.001 | 0.01 | <0.001 | 0.01 | <0.001 | 0.01 |
| η² | | 0.01 | 0.04 | 0.02 | 0.04 | 0.01 | 0.04 | 0.02 |
| Group | n | Mean score (SD) [95% CI] | | | | | | |
| Secondary or below | 138 | 2.90 (0.52) [2.81, 2.98] | 3.24 (0.44) [3.16, 3.31] | 3.07 (0.49) [2.98, 3.14] | 2.77 (0.59) [2.67, 2.87] | 2.81 (0.53) [2.72, 2.90] | 2.64 (0.50) [2.56, 2.73] | 2.55 (0.56) [2.45, 2.64] |
| TAFE/Diploma | 235 | 2.79 (0.46) [2.73, 2.85] | 3.02 (0.45) [2.97, 3.08] | 2.89 (0.55) [2.81, 2.96] | 2.50 (0.60) [2.42, 2.58] | 2.65 (0.50) [2.58, 2.71] | 2.48 (0.49) [2.42, 2.54] | 2.37 (0.57) [2.30, 2.44] |
| University or above | 237 | 2.79 (0.47) [2.73, 2.85] | 3.15 (0.46) [3.09, 3.21] | 2.93 (0.58) [2.86, 3.01] | 2.44 (0.61) [2.37, 2.52] | 2.67 (0.52) [2.60, 2.74] | 2.40 (0.45) [2.35, 2.46] | 2.37 (0.55) [2.30, 2.44] |
| Language at home | | | | | | | | |
| F (1, 606) | | 0.17 | 1.99 | 0.03 | 2.70 | 3.91 | 3.72 | 10.37 |
| p | | 0.68 | 0.16 | 0.86 | 0.10 | 0.05 | 0.05 | <0.01 |
| η² | | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 | 0.01 | 0.02 |
| Group | n | Mean score (SD) [95% CI] | | | | | | |
| English | 497 | 2.81 (0.48) [2.77, 2.85] | 3.13 (0.46) [3.09, 3.17] | 2.94 (0.57) [2.89, 2.99] | 2.52 (0.61) [2.47, 2.57] | 2.67 (0.53) [2.63, 2.72] | 2.47 (0.48) [2.43, 2.51] | 2.37 (0.56) [2.32, 2.42] |
| Other | 111 | 2.83 (0.44) [2.75, 2.91] | 3.07 (0.41) [2.99, 3.14] | 2.95 (0.50) [2.86, 3.05] | 2.63 (0.63) [2.51, 2.75] | 2.78 (0.48) [2.69, 2.87] | 2.57 (0.47) [2.48, 2.65] | 2.56 (0.55) [2.46, 2.67] |
| Private health insurance | | | | | | | | |
| F (1, 608) | | 0.42 | 5.19 | 0.34 | 0.12 | 0.13 | 4.92 | 4.06 |
| p | | 0.52 | 0.02 | 0.56 | 0.73 | 0.72 | 0.03 | 0.04 |
| η² | | 0.00 | 0.01 | 0.00 | 0.00 | 0.00 | 0.01 | 0.01 |
| Group | n | Mean score (SD) [95% CI] | | | | | | |
| Yes | 358 | 2.80 (0.49) [2.76, 2.86] | 3.15 (0.45) [3.10, 3.20] | 2.96 (0.57) [2.90, 3.01] | 2.53 (0.62) [2.47, 2.60] | 2.69 (0.53) [2.63, 2.74] | 2.45 (0.47) [2.40, 2.50] | 2.37 (0.56) [2.31, 2.43] |
| No | 252 | 2.83 (0.46) [2.78, 2.89] | 3.07 (0.45) [3.01, 3.13] | 2.93 (0.53) [2.86, 3.00] | 2.55 (0.61) [2.47, 2.62] | 2.70 (0.51) [2.64, 2.77] | 2.54 (0.50) [2.48, 2.60] | 2.46 (0.56) [2.39, 2.53] |
| Ownership of digital device | | | | | | | | |
| F (1, 608) | | 3.15 | 4.72 | 27.01 | 2.04 | 3.49 | 0.32 | 1.34 |
| p | | 0.08 | 0.03 | <0.001 | 0.15 | 0.06 | 0.57 | 0.25 |
| η² | | 0.01 | 0.01 | 0.04 | 0.00 | 0.01 | 0.00 | 0.00 |
| Group | n | Mean score (SD) [95% CI] | | | | | | |
| Fewer devices (1–2 devices) | 245 | 2.77 (0.45) [2.71, 2.83] | 3.07 (0.47) [3.01, 3.13] | 2.80 (0.54) [2.74, 2.87] | 2.58 (0.59) [2.51, 2.66] | 2.64 (0.50) [2.58, 2.71] | 2.50 (0.50) [2.44, 2.56] | 2.38 (0.56) [2.31, 2.45] |
| More devices (3–4 devices) | 365 | 2.84 (0.49) [2.79, 2.89] | 3.15 (0.44) [3.11, 3.20] | 3.04 (0.54) [2.98, 3.09] | 2.51 (0.63) [2.44, 2.57] | 2.73 (0.53) [2.67, 2.78] | 2.48 (0.48) [2.43, 2.53] | 2.43 (0.56) [2.37, 2.49] |
| Use of digital communication platform | | | | | | | | |
| F (2, 607) | | 9.42 | 7.85 | 9.60 | 7.17 | 13.56 | 3.14 | 10.48 |
| p | | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | 0.04 | <0.001 |
| η² | | 0.03 | 0.03 | 0.03 | 0.02 | 0.04 | 0.01 | 0.03 |
| Group | n | Mean score (SD) [95% CI] | | | | | | |
| Low user (1–2 platforms) | 54 | 2.57 (0.53) [2.42, 2.71] | 2.89 (0.53) [2.75, 3.04] | 2.74 (0.61) [2.58, 2.90] | 2.24 (0.60) [2.09, 2.41] | 2.37 (0.56) [2.23, 2.54] | 2.35 (0.54) [2.22, 2.50] | 2.14 (0.57) [1.98, 2.29] |
| Medium user (3–5 platforms) | 458 | 2.83 (0.46) [2.78, 2.87] | 3.14 (0.44) [3.10, 3.18] | 2.93 (0.55) [2.88, 2.98] | 2.55 (0.61) [2.50, 2.61] | 2.70 (0.51) [2.65, 2.75] | 2.49 (0.47) [2.44, 2.53] | 2.41 (0.56) [2.36, 2.46] |
| High user (6–9 platforms) | 98 | 2.90 (0.47) [2.81, 3.00] | 3.17 (0.45) [3.08, 3.26] | 3.13 (0.48) [3.03, 3.23] | 2.63 (0.60) [2.51, 2.75] | 2.83 (0.47) [2.73, 2.92] | 2.56 (0.51) [2.45, 2.66] | 2.57 (0.52) [2.46, 2.68] |
| Monitored health digitally | | | | | | | | |
| F (1, 608) | | 83.08 | 57.10 | 78.54 | 19.82 | 87.38 | 21.01 | 52.31 |
| p | | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 | <0.001 |
| η² | | 0.12 | 0.09 | 0.11 | 0.03 | 0.13 | 0.03 | 0.08 |
| Group | n | Mean score (SD) [95% CI] | | | | | | |
| Yes | 325 | 2.97 (0.44) [2.92, 3.02] | 3.24 (0.43) [3.20, 3.29] | 3.12 (0.48) [3.07, 3.17] | 2.64 (0.59) [2.58, 2.70] | 2.87 (0.47) [2.82, 2.92] | 2.57 (0.48) [2.52, 2.62] | 2.56 (0.52) [2.50, 2.62] |
| No | 285 | 2.64 (0.46) [2.59, 2.69] | 2.98 (0.44) [2.93, 3.03] | 2.75 (0.56) [2.68, 2.80] | 2.42 (0.63) [2.35, 2.50] | 2.50 (0.51) [2.44, 2.56] | 2.39 (0.48) [2.34, 2.45] | 2.24 (0.56) [2.18, 2.31] |
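The η² rows in this table can be recovered from each F statistic and its degrees of freedom through partial η² = F·df₁ / (F·df₁ + df₂). A minimal sketch, checked against a few reported values; it assumes one-way models for each factor, as the df in the table suggest, and the function name is illustrative.

```python
def partial_eta_squared(f_stat, df_effect, df_error):
    """Partial eta squared from an F statistic: F*df1 / (F*df1 + df2)."""
    numerator = f_stat * df_effect
    return numerator / (numerator + df_error)

# (label, F, df1, df2, reported eta squared) copied from the table above.
checks = [
    ("Age, 3. Engage", 22.07, 3, 593, 0.10),
    ("Education, 4. Feel Safe", 13.29, 2, 607, 0.04),
    ("Monitored health digitally, 5. Motive", 87.38, 1, 608, 0.13),
]
for label, f, df1, df2, reported in checks:
    eta = partial_eta_squared(f, df1, df2)
    print(f"{label}: {eta:.3f} (reported {reported:.2f})")
```

Working from F rather than from sums of squares is convenient here because the table reports F and df but not the underlying sums of squares.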

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mather, C.A.; Cheng, C.; Douglas, T.; Elsworth, G.; Osborne, R. eHealth Literacy of Australian Undergraduate Health Profession Students: A Descriptive Study. Int. J. Environ. Res. Public Health 2022, 19, 10751. https://doi.org/10.3390/ijerph191710751