Healthy vs. Unhealthy Food Images: Image Classification of Twitter Images

Abstract

1. Introduction

1.1. Background

1.2. Literature Review

1.2.1. Image Classification of Foods

1.2.2. Utilizing Social Media to Understand Health Outcomes

2. Materials and Methods

2.1. Overview

2.2. Data Collection

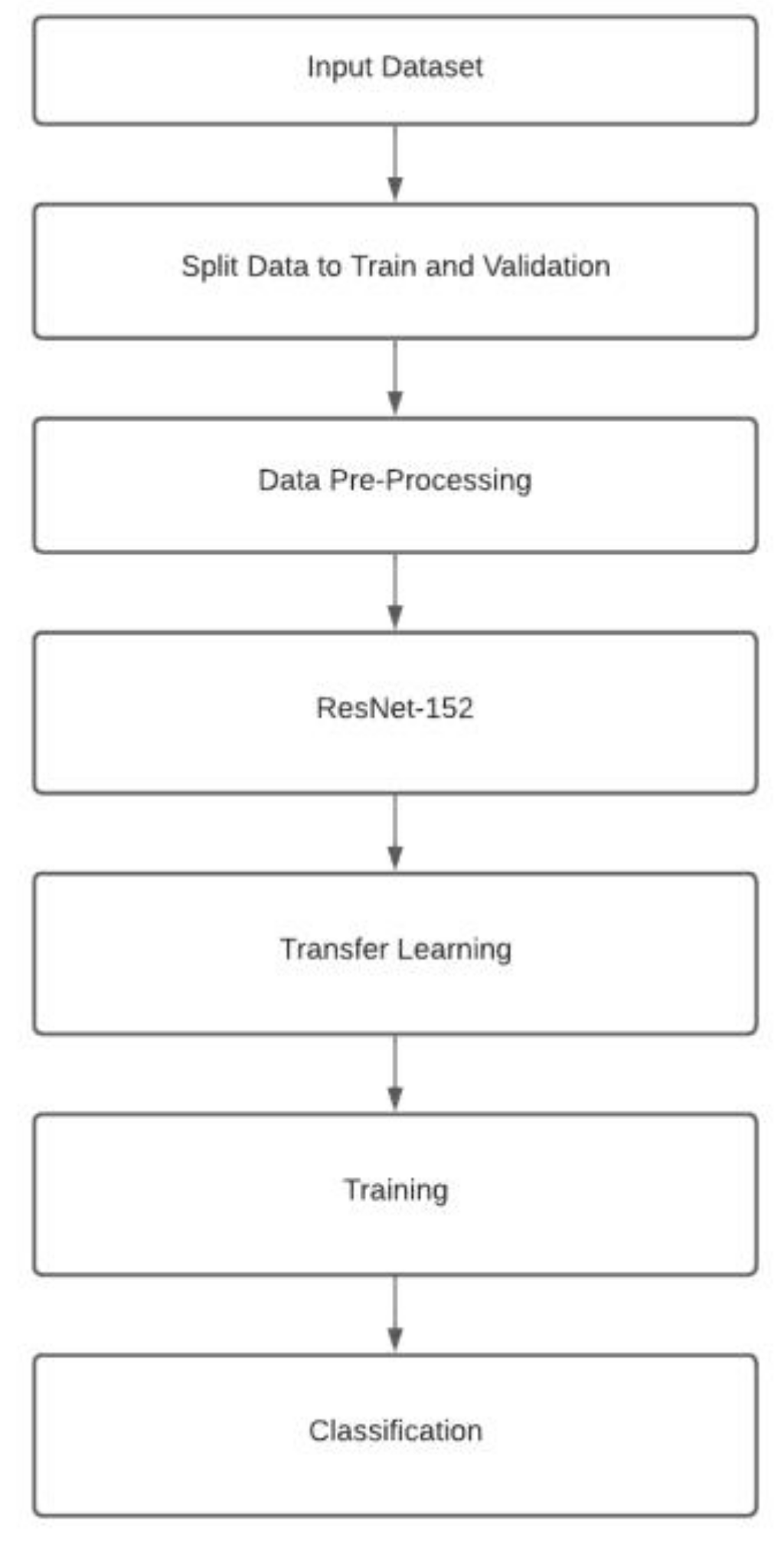

2.3. Image Classifier

3. Results

3.1. Training and Testing the Image Classifier

3.2. External Validity: Testing on Twitter Dataset

3.3. Error Analysis

4. Discussion

4.1. Principal Findings

4.2. Public Health Implications

4.3. Limitations and Future Direction

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Class | TP | FN | TN | FP | Precision (%) | Recall (%) | Accuracy (%) | F1 Score (%) |
|---|---|---|---|---|---|---|---|---|
| Healthy | 44 | 6 | 33 | 17 | 72.13 | 88.00 | 77.00 | 79.27 |
| Unhealthy | 39 | 11 | 32 | 18 | 68.42 | 78.00 | 71.00 | 72.90 |
| Definitively Healthy | 44 | 6 | 38 | 12 | 78.57 | 88.00 | 82.00 | 83.01 |
| Definitively Unhealthy | 42 | 8 | 37 | 13 | 76.36 | 84.00 | 79.00 | 79.99 |
| Overall | 169 | 31 | 140 | 60 | 73.79 | 84.50 | 77.25 | 78.78 |
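
The percentage columns in the table above follow from the four count columns. As a minimal sketch, assuming the standard one-vs-rest definitions of precision, recall, accuracy, and F1 (the article's own computation is not shown here), the values can be recomputed as follows. The names `Counts`, `metrics`, and `TABLE` are illustrative and not from the article. Recomputed values may differ from the table in the last digit, possibly because the table truncates rather than rounds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """Confusion counts for one class, treated one-vs-rest."""
    tp: int
    fn: int
    tn: int
    fp: int


def metrics(c: Counts) -> dict[str, float]:
    """Return precision, recall, accuracy, and F1 as percentages."""
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    accuracy = (c.tp + c.tn) / (c.tp + c.fn + c.tn + c.fp)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "precision": 100 * precision,
        "recall": 100 * recall,
        "accuracy": 100 * accuracy,
        "f1": 100 * f1,
    }


# Counts copied from the table above (TP, FN, TN, FP per class).
TABLE = {
    "Healthy": Counts(tp=44, fn=6, tn=33, fp=17),
    "Unhealthy": Counts(tp=39, fn=11, tn=32, fp=18),
    "Definitively Healthy": Counts(tp=44, fn=6, tn=38, fp=12),
    "Definitively Unhealthy": Counts(tp=42, fn=8, tn=37, fp=13),
    "Overall": Counts(tp=169, fn=31, tn=140, fp=60),
}

if __name__ == "__main__":
    for name, counts in TABLE.items():
        rounded = {k: round(v, 2) for k, v in metrics(counts).items()}
        print(name, rounded)
```

The "Overall" row is the column-wise sum of the four class rows, so its metrics are micro-averaged over the classes rather than a mean of the per-class percentages.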

| Class | Predicted Healthy (FN) | Predicted Healthy (FP) | Predicted Unhealthy (FN) | Predicted Unhealthy (FP) | Predicted Definitively Unhealthy (FN) | Predicted Definitively Unhealthy (FP) | Predicted Definitively Healthy (FN) | Predicted Definitively Healthy (FP) |
|---|---|---|---|---|---|---|---|---|
| Healthy | – | – | – | 4 | 2 | 4 | 4 | 9 |
| Unhealthy | 3 | 4 | – | – | 7 | 8 | 1 | 6 |
| Definitively Healthy | 4 | 6 | 1 | 3 | 1 | 3 | – | – |
| Definitively Unhealthy | 2 | 3 | 4 | 7 | – | – | 1 | 3 |
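
The breakdown above splits each class's false negatives and false positives by the class they were confused with. A minimal sketch of summing those cells into per-class totals follows, so they can be compared with the FN and FP columns of the performance table. It assumes that a "–" cell counts as 0, which the table does not state; `BREAKDOWN` and `totals` are illustrative names.

```python
# (FN, FP) pairs per true class, one pair per other predicted class,
# copied from the breakdown table. ASSUMPTION: "–" cells are treated as 0.
BREAKDOWN = {
    "Healthy": [(0, 4), (2, 4), (4, 9)],
    "Unhealthy": [(3, 4), (7, 8), (1, 6)],
    "Definitively Healthy": [(4, 6), (1, 3), (1, 3)],
    "Definitively Unhealthy": [(2, 3), (4, 7), (1, 3)],
}


def totals(pairs: list[tuple[int, int]]) -> tuple[int, int]:
    """Sum the FN and FP counts across all confused-with classes."""
    total_fn = sum(fn for fn, _ in pairs)
    total_fp = sum(fp for _, fp in pairs)
    return total_fn, total_fp


if __name__ == "__main__":
    for name, pairs in BREAKDOWN.items():
        total_fn, total_fp = totals(pairs)
        print(f"{name}: FN={total_fn}, FP={total_fp}")
```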

| Food Items | Predicted as Definitively Unhealthy | Predicted as Healthy |
|---|---|---|
| Cake (Definitively Unhealthy) | 7 | 6 |
| Baking (Healthy) | 5 | 7 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Oduru, T.; Jordan, A.; Park, A. Healthy vs. Unhealthy Food Images: Image Classification of Twitter Images. Int. J. Environ. Res. Public Health 2022, 19, 923. https://doi.org/10.3390/ijerph19020923
