2D Pose Estimation vs. Inertial Measurement Unit-Based Motion Capture in Ergonomics: Assessing Postural Risk in Dental Assistants

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

- Usually remain in the same position for long periods because of the monotonous work (holding and suctioning).

- Often spend long periods seated without a break because of patient preparation and follow-up (e.g., removal of temporaries and impressions).

- Must subordinate their sitting position to the positioning of the dentist.

- Frequently have a poor field of vision (small intraoral view; intricate working area, such as during filling placement; the dentist takes priority for the best view, and the dental assistant must adapt).

- Require additional equipment to compensate (e.g., magnifying or prism glasses, or armrests on chairs).

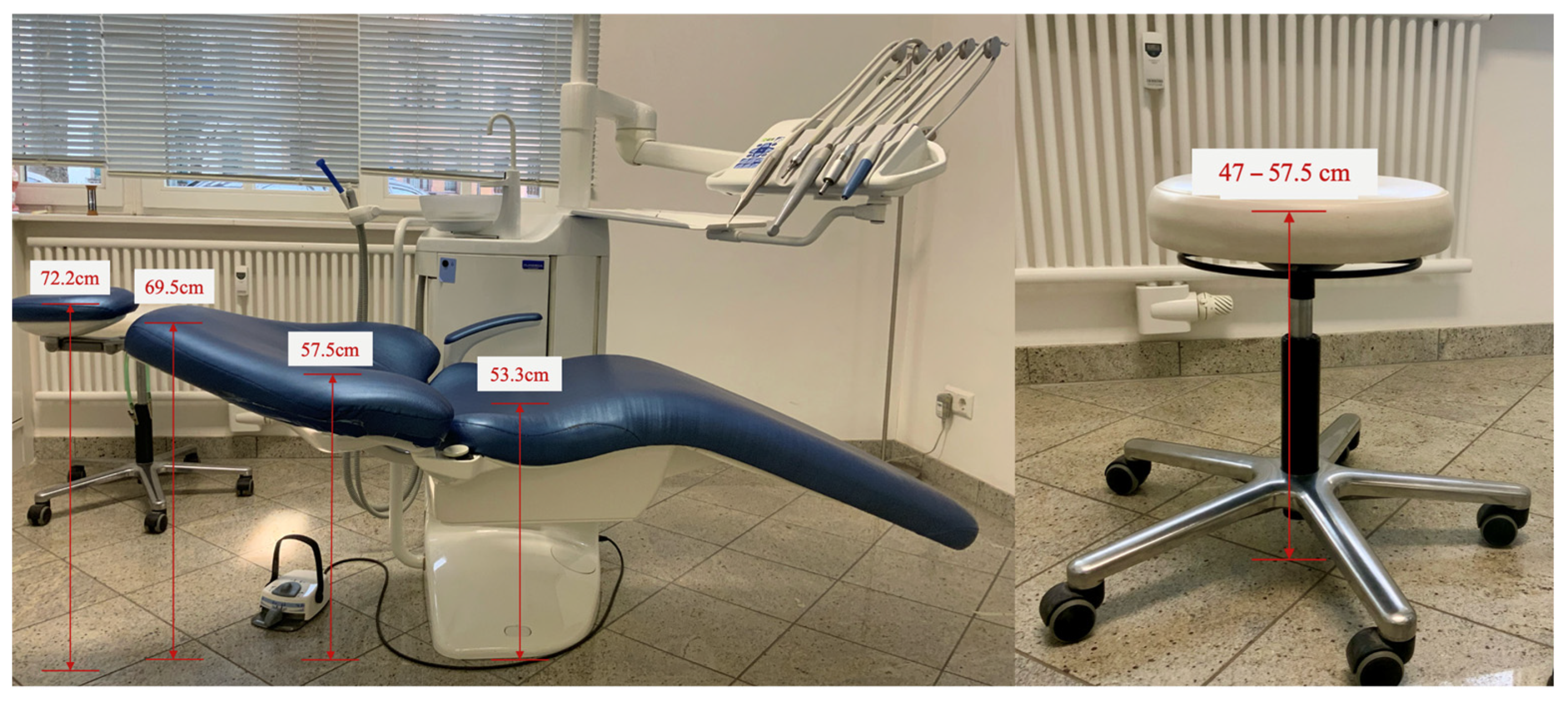

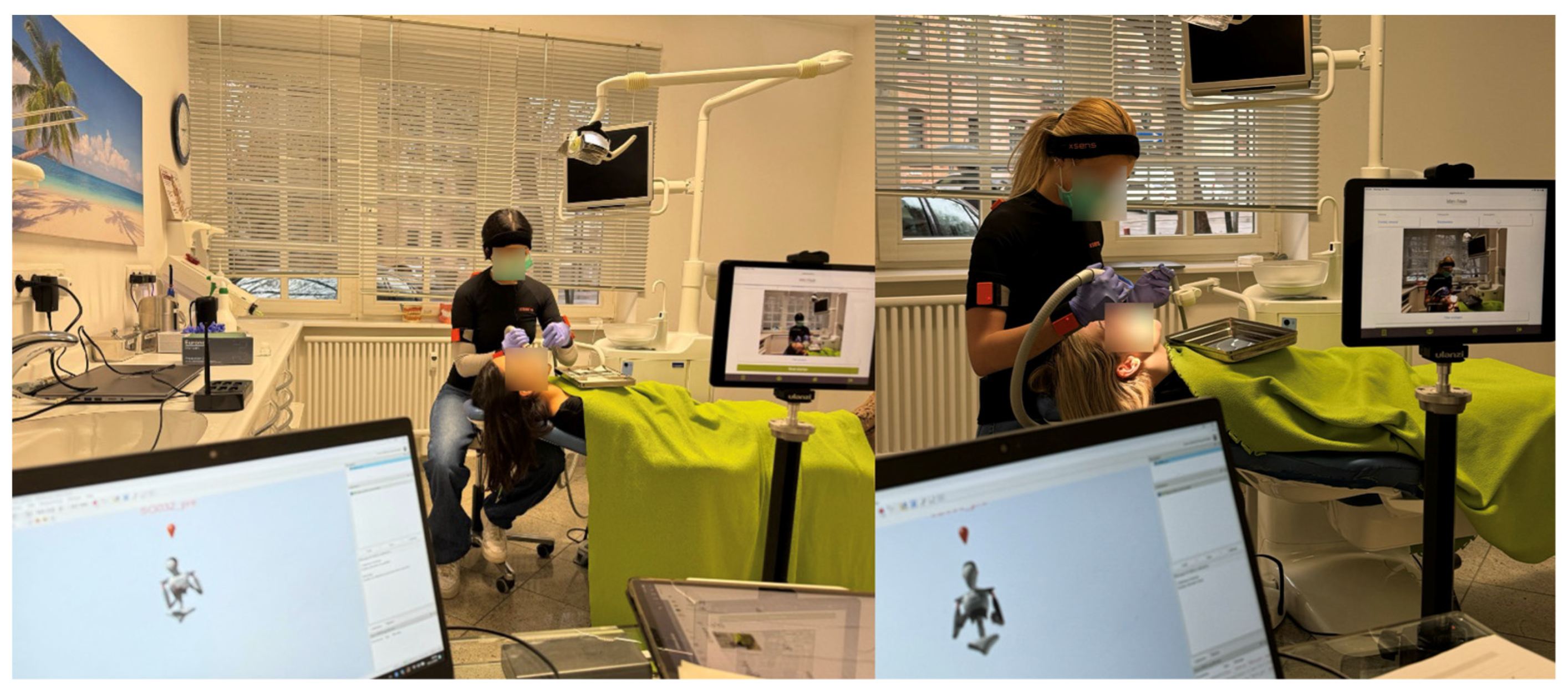

2.2. Experimental Settings

2.3. Experimental Task

- Sit in the assistant chair.

- Place a filling occlusally on tooth 36.

- Use the large suction tip to retract on the lingual side, and hold the mirror so that the dentist has a clear view of the affected tooth surface.

- Assume a position as comfortable as possible for the patient and yourself.

- Remain in this position during the acoustic signal.

- You may try out the position once.

- Finally, perform the task.

2.4. Pose Estimation and IMU-Based MoCap

- Person Center Heatmap: Detects the geometric centers of individuals.

- Keypoint Regression Field: Predicts a full set of keypoints for each person.

- Person Keypoint Heatmap: Locates keypoints independently of person instances.

- Two-Dimensional Per-Keypoint Offset Field: Computes local offsets for subpixel keypoint precision.
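The four outputs listed above are typically combined in sequence to obtain one person's keypoints. The following is a minimal sketch of that decoding idea, not the model's actual implementation: the array shapes, the function name `decode_single_pose`, and the inverse-distance weighting `1 / (1 + d)` are assumptions chosen for illustration.

```python
import numpy as np


def decode_single_pose(center_heatmap, keypoint_regression, keypoint_heatmaps, offsets):
    """Decode one person's keypoints from four MoveNet-style output maps.

    Assumed shapes (hypothetical, for this sketch only):
      center_heatmap:      (H, W)
      keypoint_regression: (H, W, K, 2), (dy, dx) from a pixel to each keypoint
      keypoint_heatmaps:   (H, W, K)
      offsets:             (H, W, K, 2), sub-pixel (dy, dx) refinements
    Returns a (K, 2) array of (y, x) positions in feature-map units.
    """
    H, W, K = keypoint_heatmaps.shape
    ys, xs = np.mgrid[0:H, 0:W].astype(float)

    # 1) Person center heatmap: prefer a strong center close to the frame center.
    dist_to_frame_center = np.hypot(ys - (H - 1) / 2, xs - (W - 1) / 2)
    weighted_centers = center_heatmap / (1.0 + dist_to_frame_center)
    cy, cx = np.unravel_index(np.argmax(weighted_centers), (H, W))

    # 2) Keypoint regression field: coarse keypoints read at the center pixel.
    coarse = np.array([cy, cx], dtype=float) + keypoint_regression[cy, cx]

    keypoints = np.zeros((K, 2))
    for k in range(K):
        # 3) Person keypoint heatmap: down-weight peaks far from the coarse
        #    estimate so that keypoints of other people are suppressed.
        dist = np.hypot(ys - coarse[k, 0], xs - coarse[k, 1])
        weighted = keypoint_heatmaps[:, :, k] / (1.0 + dist)
        ky, kx = np.unravel_index(np.argmax(weighted), (H, W))

        # 4) Per-keypoint offset field: refine the peak to sub-pixel precision.
        keypoints[k] = np.array([ky, kx], dtype=float) + offsets[ky, kx, k]
    return keypoints
```

The distance weighting in step 3 is what ties the person-agnostic heatmap peaks back to the selected person; without it, a stronger peak belonging to a second person in the frame could be picked instead.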

2.5. Data Processing

2.6. Data Analysis

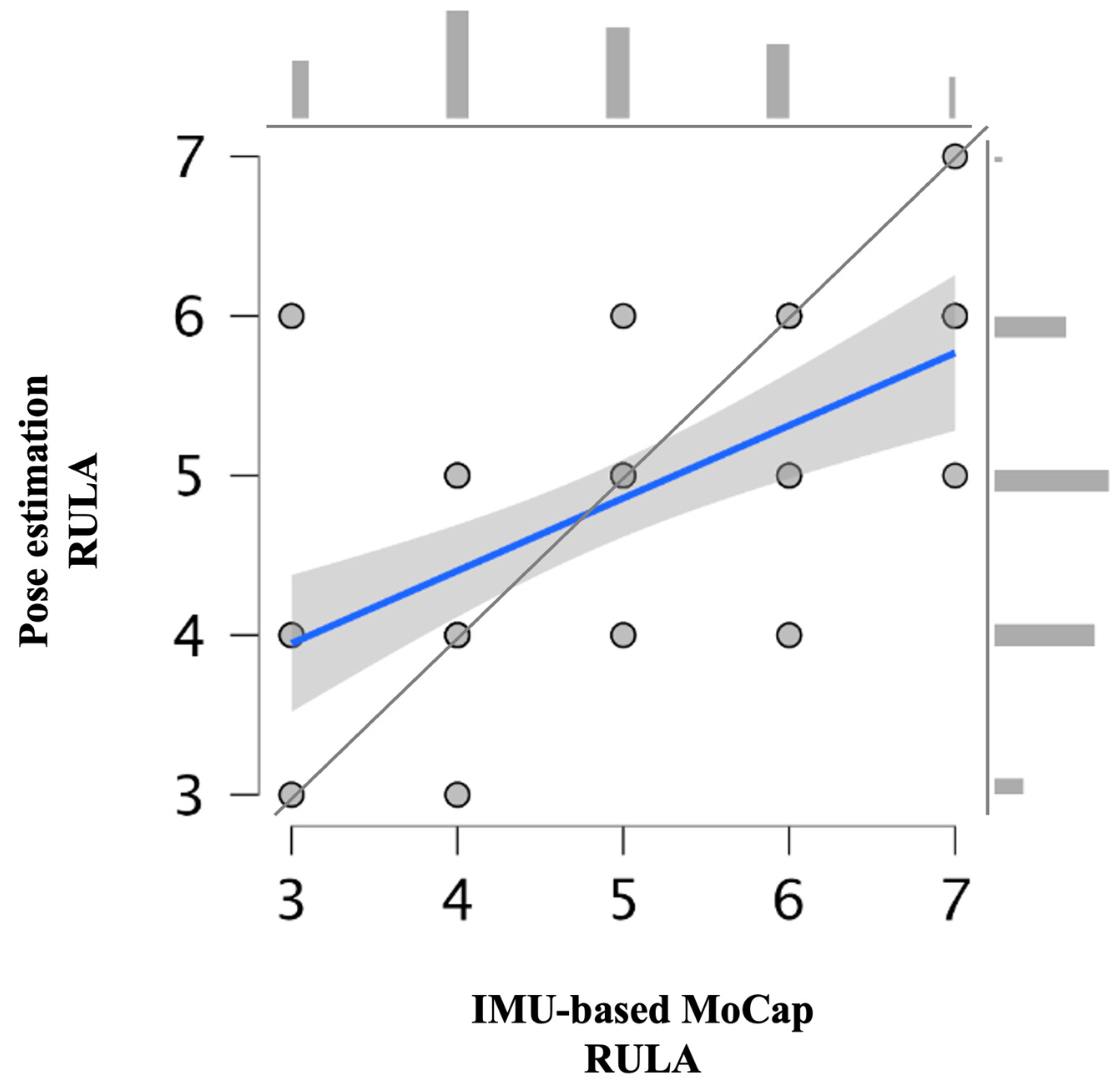

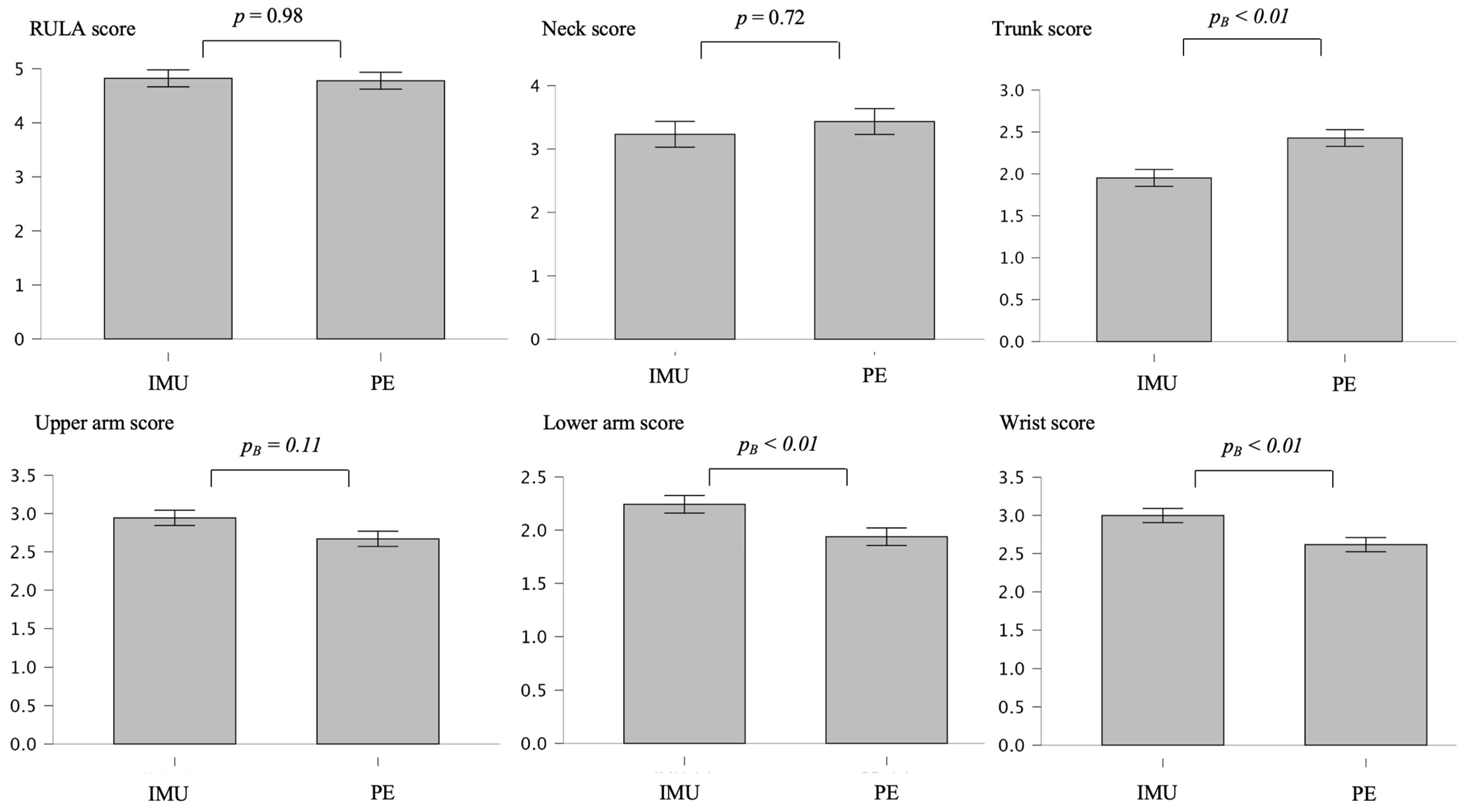

3. Results

4. Discussion

4.1. Main Findings

4.2. Methods

4.3. Future Research

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IMU | Inertial measurement unit |
| MoCap | Motion capture |
| SD | Standard deviation |
| MSD | Musculoskeletal disorder |
| 2D | Two-dimensional |
| CI | Confidence interval |
| RULA | Rapid upper limb assessment |

| | Age (y) | Weight (kg) | BMI (kg/m²) | Height (cm) | Shoulder Height (cm) | Shoulder Width (cm) | Elbow Span (cm) | Wrist Span (cm) | Arm Span (cm) |
|---|---|---|---|---|---|---|---|---|---|
| Mean | 19.56 | 63.41 | 21.56 | 165.00 | 138.74 | 37.97 | 81.42 | 126.95 | 162.79 |
| SD | 5.91 | 13.87 | 4.63 | 6.35 | 5.42 | 2.75 | 4.27 | 11.38 | 7.86 |
| Min | 15.00 | 42.20 | 14.08 | 152.20 | 125.50 | 29.80 | 72.80 | 110.50 | 145.00 |
| Max | 42.00 | 115.00 | 33.74 | 178.40 | 150.00 | 44.40 | 89.20 | 187.20 | 183.80 |

| Parameters | Modifications of RULA |
|---|---|
| Posture of the upper arm | Sagittal view: angle between shoulder–hip line and shoulder–elbow axis |
| Shoulder raising | Frontal view: application showed the inclination of the shoulder; +1 when one shoulder was raised |
| Upper arm abduction | Frontal view: angle between hip–shoulder line and shoulder–elbow axis |
| Arm supported | Application showed whether the participants were supporting themselves or not (−1) |
| Posture of the lower arm | Frontal and sagittal view: angle between shoulder–elbow line and elbow–wrist axis |
| Arm working across midline or out to side of body | +1 as soon as the wrist went beyond the center of the body |
| Wrist posture | Frontal and sagittal view: assessment of the angle between the elbow–wrist line and alignment of the hand |
| Wrist bending from midline | +1 as soon as the fingers were not an extension of the elbow–wrist line |
| Rotation of the forearm or hand | Rotation of forearm or hand was scored with +1 or +2 depending on hand posture |
| Muscle use score of arm and wrist | Static and dynamic muscle use was consistently scored as +1 |
| Force/load score | This score was fixed to 0 because there was no lifting of dental instruments >2 kg in the dental practice |
| Posture of the neck | Sagittal view: angle between shoulder–hip line and shoulder–ear axis |
| Neck twist | Frontal and sagittal view: subjective assessment of the deviating position of the eye and nose from the body midline |
| Neck tilt | Frontal view: +1 as soon as the angle of the eye line was over 0° |
| Trunk tilt | Sagittal view: subjective assessment of the alignment of the shoulder line to the hip line |
| Trunk twist | Frontal and sagittal view: subjective assessment of the alignment of the shoulder line to the hip line |
| Legs and feet supported | The value was fixed to +1 because the dental assistants remained seated during treatment, and their legs and feet were supported |
| Muscle use score of neck, trunk, and legs | Static and dynamic muscle use was consistently scored as +1 |
| Force/load score | This score was fixed to 0 because there was no lifting of dental instruments >2 kg in the dental practice |
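Several rows of the table above define a score through the angle between two lines that share a keypoint, for example the upper arm posture as the angle between the shoulder–hip line and the shoulder–elbow axis in the sagittal view. The sketch below shows one way such an angle could be computed from 2D keypoints and mapped to a base score. The function names are hypothetical, the thresholds (20°, 45°, 90°) follow the commonly published RULA worksheet rather than anything stated in this table, and the unsigned 2D angle does not distinguish flexion from extension, which is a simplification.

```python
import math


def angle_between(origin, a, b):
    """Unsigned angle in degrees at `origin` between rays origin->a and origin->b.

    Points are (x, y) pixel coordinates from a 2D pose estimate.
    """
    v1 = (a[0] - origin[0], a[1] - origin[1])
    v2 = (b[0] - origin[0], b[1] - origin[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    # atan2 of |cross| and dot is numerically stable for near-parallel rays.
    return math.degrees(math.atan2(abs(cross), dot))


def upper_arm_base_score(angle_deg):
    """Map an unsigned upper-arm angle to a RULA base score (assumed thresholds)."""
    if angle_deg <= 20:
        return 1
    if angle_deg <= 45:
        return 2
    if angle_deg <= 90:
        return 3
    return 4


# Hypothetical sagittal-view keypoints in image coordinates (y grows downward).
shoulder, hip, elbow = (100.0, 100.0), (100.0, 200.0), (150.0, 150.0)
angle = angle_between(shoulder, hip, elbow)
score = upper_arm_base_score(angle)
```

Adjustments such as shoulder raising (+1), abduction, or arm support (−1) would be applied on top of this base score, as described in the corresponding table rows.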

| | IMU RULA | PE RULA | IMU UA | PE UA | IMU LA | PE LA | IMU Wrist | PE Wrist | IMU Neck | PE Neck | IMU Trunk | PE Trunk |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 4.82 | 4.78 | 2.94 | 2.67 | 2.24 | 1.94 | 3.00 | 2.62 | 3.23 | 3.43 | 1.95 | 2.43 |
| Median | 5.00 | 5.00 | 3.00 | 2.75 | 2.50 | 2.00 | 3.02 | 2.75 | 3.10 | 3.50 | 2.00 | 2.50 |
| SD | 1.25 | 0.97 | 0.54 | 0.52 | 0.44 | 0.30 | 0.49 | 0.43 | 1.32 | 0.75 | 0.39 | 0.54 |
| Min | 3.00 | 3.00 | 1.50 | 1.50 | 1.00 | 1.25 | 1.75 | 1.50 | 1.00 | 1.50 | 1.00 | 1.00 |
| Max | 7.00 | 7.00 | 3.97 | 3.75 | 2.99 | 2.50 | 3.98 | 3.50 | 5.00 | 5.00 | 3.02 | 3.75 |
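As a small worked example, the differences between the group means of the two methods can be read directly from the Mean row of the table above. The snippet below only restates that arithmetic; it assumes that PE denotes pose estimation and that UA and LA denote upper arm and lower arm, and it is not a reconstruction of the authors' statistical analysis. A difference of group means says nothing about agreement for individual participants.

```python
# Mean scores copied from the "Mean" row of the table above.
imu_means = {"RULA": 4.82, "UA": 2.94, "LA": 2.24, "Wrist": 3.00, "Neck": 3.23, "Trunk": 1.95}
pe_means = {"RULA": 4.78, "UA": 2.67, "LA": 1.94, "Wrist": 2.62, "Neck": 3.43, "Trunk": 2.43}

# Positive values: IMU mean is higher; negative values: PE mean is higher.
mean_diff = {name: round(imu_means[name] - pe_means[name], 2) for name in imu_means}

for name, diff in mean_diff.items():
    print(f"{name}: IMU - PE = {diff:+.2f}")
```

On these values, the overall RULA means differ by only 0.04, whereas the segment scores differ more, with the largest gap at the trunk (−0.48).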

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Simon, S.; Meining, J.; Laurendi, L.; Berkefeld, T.; Dully, J.; Dindorf, C.; Fröhlich, M. 2D Pose Estimation vs. Inertial Measurement Unit-Based Motion Capture in Ergonomics: Assessing Postural Risk in Dental Assistants. Bioengineering 2025, 12, 403. https://doi.org/10.3390/bioengineering12040403
