Abstract

In statistical analyses, especially those based on a multiresponse regression model approach, one often works with a mathematical model that describes a functional relationship between more than one response variable and one or more predictor variables. The relationship between these variables is expressed by a regression function. In the multiresponse nonparametric regression (MNR) model, which belongs to the family of multiresponse regression models, estimating the regression function is the main problem. Because the responses are correlated, a symmetric weight matrix must be included in a penalized weighted least squares (PWLS) optimization during the estimation process, which makes the problem mathematically complicated. In this study, to estimate the regression function of the MNR model, we extended a PWLS optimization method for the MNR model proposed by a previous researcher, and used a reproducing kernel Hilbert space (RKHS) approach based on a smoothing spline to obtain the solution to the extended PWLS optimization. Additionally, we determined the symmetric weight matrix and the optimal smoothing parameters, and investigated the consistency of the regression function estimator. We illustrate the effects of the smoothing parameters on the estimation results using simulated data. In the future, the theory developed in this study can be extended to statistical inference, especially for testing hypotheses involving multiresponse nonparametric regression models and multiresponse semiparametric regression models, and can be used to estimate the nonparametric component of a multiresponse semiparametric regression model used to model Indonesian toddlers’ standard growth charts.

1. Introduction

Reproducing kernel Hilbert space (RKHS) theory was first introduced by Aronszajn in 1950 [1]. This theory was later developed by [1,2] to solve optimization problems in regression, especially nonparametric spline regression. The RKHS approach was used by [3] for an M-type spline estimator, and by [4] for a relaxed spline estimator.

There are many cases in daily life that require analyzing the functional relationship between different variables. In statistics, regression analysis is used to analyze the functional relationship between several variables, namely, the influence of the independent (predictor) variables on the dependent (response) variable. Regression analysis requires building a mathematical model, commonly referred to as a regression model, in which this functional relationship is expressed by a regression function. There are two basic kinds of regression model approaches: parametric regression models and nonparametric regression models. In general, the main problem in regression analysis, whether a parametric or a nonparametric regression model approach is used, is estimating the regression function. In a parametric regression model, estimating the regression function is equivalent to estimating the parameters of the model. The nonparametric case is different: estimating the regression function is equivalent to estimating an unknown smooth function contained in a Sobolev space using smoothing techniques.

There are several frequently used smoothing techniques for estimating nonparametric regression functions, for example, local linear, local polynomial, kernel, and spline estimators. The results of several previous studies have shown that smoothing techniques such as local linear, local polynomial, and kernel estimators are highly recommended for estimating nonparametric regression functions for prediction purposes. These studies include [5,6], who used local linear estimators for predicting hypertension risk and Mycobacterium tuberculosis numbers, respectively; Ref. [7] used local linear estimation to determine the boundary correction of a nonparametric regression function; Ref. [8] used local linear estimation to reduce the bias of a regression function estimate; Ref. [9] used local linear estimation to design a standard growth chart for assessing the nutritional status of toddlers; Refs. [10,11] used local polynomial estimators to estimate regression functions in the cases of errors-in-variables and correlated errors, respectively; Refs. [12,13] used local polynomial estimators to estimate the regression function for functional data and for a finite population, respectively; Ref. [14] discussed smoothing techniques using kernels; Refs. [15,16] discussed the consistency of kernel regression estimation and the estimation of regression functions with correlated errors using kernels, respectively; and Refs. [17,18] discussed estimating the covariance matrix and selecting the bandwidth using kernels, respectively. However, local linear, local polynomial, and kernel estimators are highly dependent on the bandwidth in the neighborhood of the target point. Thus, if we use these approaches to estimate a model with fluctuating data, we require a small bandwidth, which results in an estimated curve that is too rough. This means that local linear, local polynomial, and kernel approaches consider only the goodness of fit, not smoothness.
Therefore, for models with data that fluctuate in sub-intervals, local linear, local polynomial, and kernel methods are not well suited, as their estimates have a large mean square error (MSE). In contrast, spline approaches consider both goodness of fit and smoothness, as discussed by [1,19], who used splines for modeling observational data and estimating nonparametric regression functions. Furthermore, for prediction and interpretation purposes, smoothing techniques such as smoothing splines and truncated splines are better and more flexible for estimating nonparametric regression functions [20]. Because of this flexibility, many researchers have used and developed splines in several settings. For example, M-type splines were used by [21] to analyze variance for correlated data and by [22] to estimate both nonparametric and semiparametric regression functions; truncated splines were used by [23] to estimate mean arterial pressure for prediction purposes and by [24] to estimate blood pressure for prediction and interpretation purposes. Additionally, Ref. [25] developed a truncated spline for estimating a semiparametric regression model and determined the asymptotic properties of the estimator. Furthermore, Ref. [26] discussed the flexibility of B-splines and penalties in estimating regression functions; Ref. [27] analyzed current status data using penalized splines; Ref. [28] analyzed the association between the hormones cortisol and ACTH using bivariate splines; and Ref. [29] analyzed censored data using spline regression. In addition, Ref. [30] used both kernels and splines to estimate the regression function and select the optimal smoothing parameter of a uniresponse nonparametric regression (UNR) model; Refs. [31,32] developed both kernel and spline estimators for estimating the regression function and selecting the optimal smoothing parameter of a multiresponse nonparametric regression (MNR) model; and Ref. [33] discussed kernel and spline smoothing techniques for estimating the coefficients of a rates model.
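To make the bandwidth argument concrete, the following sketch computes a Gaussian-kernel (Nadaraya-Watson) estimate on simulated fluctuating data for three bandwidths and reports a crude roughness measure, the sum of squared second differences of the fitted curve. The test signal, noise level, and bandwidths are hypothetical choices for illustration only and are not taken from the cited studies.

```python
import numpy as np

def nadaraya_watson(x_grid, x, y, h):
    """Gaussian-kernel (Nadaraya-Watson) estimate of E[y | x] at the points x_grid."""
    u = (x_grid[:, None] - x[None, :]) / h      # scaled distances, shape (grid, n)
    k = np.exp(-0.5 * u**2)                     # Gaussian kernel weights
    return (k @ y) / k.sum(axis=1)              # locally weighted average

def roughness(fitted):
    """Sum of squared second differences: a crude discrete measure of wiggliness."""
    return float(np.sum(np.diff(fitted, n=2) ** 2))

rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0, 1, 200))
y = np.sin(8 * np.pi * x) + rng.normal(0, 0.3, x.size)   # hypothetical fluctuating data
grid = np.linspace(0, 1, 400)

fits = {h: nadaraya_watson(grid, x, y, h) for h in (0.005, 0.02, 0.1)}
for h, fitted in fits.items():
    print(f"h = {h:<6} roughness = {roughness(fitted):.4f}")
```

The smallest bandwidth follows the noise and gives the roughest curve, which is the behavior described above; the largest bandwidth gives a smooth curve but flattens the oscillation, so neither extreme balances fit and smoothness automatically.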

In regression modeling, a common problem involves more than one response variable observed at several values of the predictor variables, where the responses are correlated with each other. The multiresponse nonparametric regression (MNR) model approach is appropriate for modeling the functions that represent the relationship between the response variables and the predictor variables when the responses are correlated. Because of this correlation, it is necessary to construct a matrix called a weight matrix. Constructing the weight matrix is one of the things that distinguishes the MNR model approach from classical approaches, that is, parametric regression models or uniresponse nonparametric regression models. Thus, estimating the regression function requires a weight matrix in the form of a symmetric matrix, in particular a diagonal matrix. Furthermore, several smoothing techniques can be used to estimate the regression function of the MNR model, one of which is the smoothing spline approach. In recent years, smoothing splines have attracted a great deal of attention, and the methodology has been widely used in many areas of research. For example, Refs. [34,35] used smoothing splines, mixed smoothing splines, and Fourier series to estimate the regression functions of nonparametric regression models; Refs. [36,37] estimated the regression functions of a semiparametric nonlinear regression model and a semiparametric regression model, respectively; and smoothing splines in an ANOVA model were discussed by [38]. With its powerful and flexible properties, the smoothing spline estimator is one of the most popular estimators of the regression function of a nonparametric regression model.
Although the researchers mentioned above have discussed splines for estimating regression functions in many cases, none of them, apart from [34], used a reproducing kernel Hilbert space (RKHS) approach to estimate the regression function of the MNR model. Conversely, although some of the studies mentioned above used the RKHS approach for estimating regression functions, those researchers used it only for single-response (uniresponse) linear regression models. This means that, apart from [34], the RKHS approach has not been used to estimate the regression function of the MNR model based on a smoothing spline estimator. Although [34] used the RKHS approach to estimate the regression function of the MNR model and discussed a special case through a simulation study, Ref. [34] assumed that the three responses of the MNR model have the same smoothing parameter value, an assumption that is difficult to satisfy in real-life situations. In addition, Ref. [34] did not discuss the consistency of the smoothing spline regression function estimator. Therefore, in this study we provide a theoretical discussion on estimating the smoothing spline regression function of the MNR model with unequal smoothing parameter values using the RKHS approach; in other words, we treat the more general case.

2. Materials and Methods

In this section, we briefly describe the materials and methods used according to the needs of this study, following the steps in the order in which they were carried out.

2.1. Multiresponse Nonparametric Regression Model

Suppose that we are given paired observations $(t_{ri}, y_{ri})$ that satisfy the following multiresponse nonparametric regression (MNR) model:

$$y_{ri} = f_r(t_{ri}) + \varepsilon_{ri}, \quad i = 1, 2, \ldots, n_r; \; r = 1, 2, \ldots, p, \tag{1}$$

where $y_{ri}$ is the $i$th observation of the $r$th response variable; $f_r$ is an unknown nonparametric regression function of the $r$th response, which is assumed to be smooth in the sense that it is contained in a Sobolev space; $t_{ri}$ is the $i$th observation of the predictor variable for the $r$th response; and $\varepsilon_{ri}$ is the random error of the $i$th observation on the $r$th response, which is assumed to have zero mean and variance $\sigma_r^2$ (heteroscedastic). In this study, we assume that the correlation between responses is .
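As a concrete reading of model (1), the sketch below simulates data from a three-response MNR model in which each response has its own sample size $n_r$, its own smooth function $f_r$, and its own error variance $\sigma_r^2$. The functions, variances, and sample sizes are hypothetical, and the errors are drawn independently, so the sketch does not reproduce any particular correlation structure between the responses.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical smooth regression functions f_r, one per response (illustration only).
f = [lambda t: np.sin(2 * np.pi * t),
     lambda t: 1 + t**2,
     lambda t: np.exp(-3 * t)]
sigma = [0.2, 0.5, 0.1]   # unequal error standard deviations (heteroscedastic case)
n = [50, 60, 40]          # n_r observations for response r

data = []                 # list of (t_r, y_r) pairs, r = 1, ..., p
for f_r, s_r, n_r in zip(f, sigma, n):
    t_r = np.sort(rng.uniform(0, 1, n_r))           # predictor values t_ri
    y_r = f_r(t_r) + rng.normal(0, s_r, n_r)        # y_ri = f_r(t_ri) + eps_ri
    data.append((t_r, y_r))

N = sum(n)                # total number of observations over all responses
for r, ((t_r, y_r), f_r) in enumerate(zip(data, f), start=1):
    resid_sd = np.std(y_r - f_r(t_r))
    print(f"response {r}: n_r = {t_r.size}, empirical error sd = {resid_sd:.3f}")
```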

In general, the main problem in MNR modeling is how to estimate the MNR model, which is equivalent to estimating the regression functions of the MNR model. Many smoothing techniques can be used to estimate the MNR model presented in (1), for example, kernel, local linear, spline, and local polynomial estimators. One of these is the spline approach, in which the smoothing spline is the most flexible estimator for data that fluctuate on sub-intervals. The estimation method based on the smoothing spline estimator is briefly presented below; further details on the smoothing spline estimator can be found in [20].

2.2. Smoothing Spline Estimator

In this study, we estimated the regression functions $f_1, \ldots, f_p$ of the MNR model presented in (1) based on the smoothing spline estimator using the reproducing kernel Hilbert space approach, which is discussed in the following section. An estimate of the regression function of the MNR model presented in (1) can be obtained by extending the penalized weighted least squares (PWLS) optimization method proposed by [31], which was developed only for the two-response nonparametric regression model with equal error variances, namely, the homoscedastic case. We extend this PWLS optimization to nonparametric regression models with more than two responses, namely, the MNR model, with unequal error variances, which is called the heteroscedastic case. Hence, the smoothing spline estimate of the MNR model presented in (1) can be obtained by carrying out the following PWLS optimization:

$$\min_{f_1, \ldots, f_p \in W_2^m[a, b]} \left\{ \sum_{r=1}^{p} (\mathbf{y}_r - \mathbf{f}_r)^\top \mathbf{W}_r (\mathbf{y}_r - \mathbf{f}_r) + \sum_{r=1}^{p} \lambda_r \int_a^b \left( f_r^{(m)}(t) \right)^2 dt \right\} \tag{2}$$

where $\mathbf{y}_r = (y_{r1}, \ldots, y_{rn_r})^\top$ and $\mathbf{f}_r = (f_r(t_{r1}), \ldots, f_r(t_{rn_r}))^\top$; $\mathbf{W}_1, \ldots, \mathbf{W}_p$ are symmetric weight matrices; $\lambda_1, \ldots, \lambda_p$ are smoothing parameters; and $f_1, \ldots, f_p$ are unknown regression functions in the Sobolev space $W_2^m[a, b]$, defined as follows:

$$W_2^m[a, b] = \left\{ f : \int_a^b \left( f^{(m)}(t) \right)^2 dt < \infty \right\}.$$

Furthermore, to obtain the solution to the PWLS provided in (2), we used the reproducing kernel Hilbert space (RKHS) approach. In the following section, we provide a brief review of RKHS. Further details related to RKHS can be found in [39], a paper concerning the theory of RKHS, and in [40], a textbook which discusses the use of RKHS in probability and statistics.

2.3. Reproducing Kernel Hilbert Space

The need for reproducing kernel Hilbert spaces (RKHSs) arises in various fields, including statistics, approximation theory, machine learning theory, group representation theory, and complex analysis. In statistics, the RKHS method is often used to estimate a regression function based on the smoothing spline estimator for prediction purposes. In machine learning, the RKHS method is arguably the most popular approach for dealing with nonlinearity in data. Several researchers have discussed the RKHS method; for example, Refs. [41,42] discussed the use of RKHS in support vector machines (SVMs) and optimization problems, respectively, and Refs. [43,44] discussed the use of RKHS in asymptotic distributions for regression and in machine learning.

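A Hilbert space $\mathcal{H}$ is called an RKHS on a set $X$ over a field $\mathbb{F}$ if the following conditions are met [1,39]:

- (i) $\mathcal{H}$ is a vector subspace of $\mathcal{F}(X, \mathbb{F})$, where $\mathcal{F}(X, \mathbb{F})$ is the vector space over $\mathbb{F}$ of all functions from $X$ to $\mathbb{F}$;
- (ii) $\mathcal{H}$ is endowed with an inner product $\langle \cdot, \cdot \rangle$, making it into a Hilbert space;
- (iii) for every $y \in X$, the linear evaluation functional $E_y: \mathcal{H} \to \mathbb{F}$, defined by $E_y(f) = f(y)$, is bounded.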

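Furthermore, if $\mathcal{H}$ is an RKHS on $X$, then for every $y \in X$ there exists a unique vector $k_y \in \mathcal{H}$ such that $f(y) = \langle f, k_y \rangle$ for every $f \in \mathcal{H}$. This is because every bounded linear functional on a Hilbert space is given by the inner product with a unique vector in that space (the Riesz representation theorem). The function $k_y$ is called the reproducing kernel (RK) for the point $y$. The reproducing kernel for $\mathcal{H}$ is the two-variable function defined by $K(x, y) = k_y(x)$. Hence, we have $K(x, y) = k_y(x) = \langle k_y, k_x \rangle$ and $\|E_y\|^2 = \|k_y\|^2 = \langle k_y, k_y \rangle = K(y, y)$.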
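The reproducing property can be checked numerically for a concrete kernel. The sketch below assumes the Gaussian kernel $K(x, y) = \exp(-\gamma (x - y)^2)$, a standard example of a reproducing kernel that is not the spline kernel used later in this paper, and works in the span of finitely many kernel sections, where the RKHS inner product reduces to $\langle \sum_i a_i k_{u_i}, \sum_j b_j k_{v_j} \rangle = \sum_{i,j} a_i b_j K(u_i, v_j)$. The nodes, coefficients, and $\gamma$ are hypothetical.

```python
import numpy as np

def gauss_kernel(a, b, gamma=10.0):
    """Gram matrix K[i, j] = exp(-gamma * (a_i - b_j)^2) of the Gaussian kernel."""
    a, b = np.atleast_1d(a).astype(float), np.atleast_1d(b).astype(float)
    return np.exp(-gamma * (a[:, None] - b[None, :]) ** 2)

def inner(a_coef, a_nodes, b_coef, b_nodes):
    """<sum_i a_i k_{u_i}, sum_j b_j k_{v_j}>_H = a^T K(u, v) b."""
    return float(a_coef @ gauss_kernel(a_nodes, b_nodes) @ b_coef)

# f = sum_j alpha_j k_{x_j}, an element of the RKHS.
x_nodes = np.array([0.1, 0.4, 0.7, 0.9])
alpha = np.array([1.0, -2.0, 0.5, 3.0])

def f(x):
    """Pointwise evaluation f(x) = sum_j alpha_j K(x, x_j)."""
    return float(gauss_kernel(x, x_nodes)[0] @ alpha)

def f_via_inner(y):
    """Evaluation through the reproducing property: f(y) = <f, k_y>."""
    return inner(alpha, x_nodes, np.array([1.0]), np.array([y]))

for y in (0.0, 0.25, 0.55, 1.2):
    print(f"y = {y:4}: f(y) = {f(y): .6f}, <f, k_y> = {f_via_inner(y): .6f}")

# ||E_y||^2 = <k_y, k_y> = K(y, y), which equals 1 for the Gaussian kernel.
print(inner(np.array([1.0]), np.array([0.3]), np.array([1.0]), np.array([0.3])))
```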

In this study, we provide a simulation study to evaluate the performance of the proposed MNR model estimation method.

2.4. Simulation

The simulation in this study consists of two parts: a simulation to determine the optimal smoothing parameters based on the generalized cross-validation (GCV) criterion in order to obtain the best estimated MNR model, and a simulation to describe the effect of the smoothing parameters on the estimated regression functions of the MNR model, where the optimal values are those with the minimum GCV value. We generate samples from the MNR model and illustrate the effects of the smoothing parameters on the estimation results by comparing three kinds of smoothing parameter values, namely, small, optimal, and large values.

In the following section, we present the results and discussion of this study, covering the estimation of the regression function of the MNR model using the RKHS approach, the estimation of the symmetric weight matrix and the optimal smoothing parameters, a simulation study, and an investigation of the consistency of the smoothing spline regression function estimator.

3. Results and Discussions

The results and discussion presented in this section include estimating the regression function of the MNR model using RKHS, estimating the weight matrix, estimating the optimal smoothing parameter, investigating the consistency of the regression function estimator, a simulation study, and an application example using real data.

3.1. Estimating the Regression Function of the MNR Model Using the RKHS Approach

The MNR model presented in (1) can be expressed in matrix notation as follows:

where ,, , , , and .

We assume that is a zero-mean random error with covariance . In this case, the covariance matrix is symmetric; specifically, it is a diagonal matrix, which can be expressed as follows:

where is an -response covariance matrix of for .
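For a covariance matrix with this diagonal block structure, the weight matrix (its inverse) inherits the same structure, with the weight block of each response equal to the inverse of that response's covariance block. The sketch below checks this numerically, assuming for illustration that the block of response $r$ is $\sigma_r^2 \mathbf{I}_{n_r}$ (independent errors within a response, with a response-specific variance); the variances and block sizes are hypothetical.

```python
import numpy as np

def block_diag(*blocks):
    """Assemble a block-diagonal matrix from square blocks (numpy-only helper)."""
    size = sum(b.shape[0] for b in blocks)
    out = np.zeros((size, size))
    start = 0
    for b in blocks:
        k = b.shape[0]
        out[start:start + k, start:start + k] = b
        start += k
    return out

# Hypothetical error variances and sample sizes of p = 3 responses.
sigma2 = [0.04, 0.25, 0.01]
n = [3, 2, 4]

# Covariance of the stacked error vector: one block sigma_r^2 * I_{n_r} per response.
cov = block_diag(*[s2 * np.eye(k) for s2, k in zip(sigma2, n)])

# Weight matrix = inverse covariance; inverting block by block gives the same result.
W = np.linalg.inv(cov)
W_blockwise = block_diag(*[np.eye(k) / s2 for s2, k in zip(sigma2, n)])

print(np.allclose(W, W_blockwise), np.allclose(W, W.T))   # True True
```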

To determine the regression function of the MNR model (1) using the RKHS approach, we first express the MNR model as a general smoothing spline regression model [20], as follows:

where is an unknown smooth function, is a bounded linear functional, and is a Hilbert space.

Next, the Hilbert space is decomposed into the direct sum of the Hilbert subspaces and , where has basis , has basis , and is as follows:

This implies that for , , and we can express every function as follows:

Because is the basis of the Hilbert subspace and is the basis of the Hilbert subspace , the function in (7) can be expressed as follows:

where ; ; ; ; ; and .

Hence, for and , we have

Because is a bounded linear functional in the Hilbert space , according to [20] there exists a Riesz representer such that

where and denote an inner product. Next, by considering Equations (8) and (9) and applying the properties of the inner product, the function can be written as follows:

Then, based on Equation (10), we can obtain the regression functions for , which are the regression functions for the first response, the second response, …, and the pth response, as follows:

Hence, following Equation (11), we obtain the regression function for as follows:

where ; ; ; and .

Similarly, we obtain the regression functions for , which are , , …, , as follows:

Hence, based on Equations (12) and (13), we obtain the regression function of the MNR model as follows:

Thus, we can express the MNR model presented in (1) in matrix notation as follows:

where is an diagonal matrix with , ; is an vector of parameters; is an diagonal matrix; and is an vector of parameters.
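For the common cubic case ($m = 2$) on $[0, 1]$, one standard choice (Wahba's construction, assumed here since the general order $m$ is not fixed at this point) takes $\mathcal{H}_0 = \operatorname{span}\{1, t\}$ and the reproducing kernel $R_1(s, t) = \int_0^1 (s-u)_+ (t-u)_+ \, du$ for $\mathcal{H}_1$. The sketch below builds, for one response, the matrices here called $\mathbf{T}$ (null-space basis at the design points) and $\boldsymbol{\Sigma}$ (kernel matrix), names assumed for illustration, and checks numerically that for $f = \mathbf{T}\mathbf{d} + \sum_j c_j R_1(t_j, \cdot)$ the penalty satisfies $\mathbf{c}^\top \boldsymbol{\Sigma} \mathbf{c} = \int_0^1 (f''(u))^2 \, du$. For several responses, the per-response matrices would be stacked block-diagonally.

```python
import numpy as np

def r1(s, t):
    """Kernel of H_1 for m = 2 on [0, 1]: int_0^1 (s-u)_+ (t-u)_+ du = lo^2*hi/2 - lo^3/6."""
    s, t = np.asarray(s, float)[:, None], np.asarray(t, float)[None, :]
    lo, hi = np.minimum(s, t), np.maximum(s, t)
    return lo**2 * hi / 2 - lo**3 / 6

def design(t):
    """T: basis {1, t} of H_0 at the design points; Sigma: kernel matrix R1(t_i, t_j)."""
    T = np.column_stack([np.ones_like(t), t])
    Sigma = r1(t, t)
    return T, Sigma

rng = np.random.default_rng(2)
t = np.sort(rng.uniform(0, 1, 8))
T, Sigma = design(t)
c = rng.normal(size=t.size)

# f(u) = d0 + d1*u + sum_j c_j R1(t_j, u) has f''(u) = sum_j c_j (t_j - u)_+,
# so the linear part d does not enter the penalty.
u = np.linspace(0, 1, 100001)
f2 = np.clip(t[:, None] - u[None, :], 0, None).T @ c
integral = np.sum((f2[1:] ** 2 + f2[:-1] ** 2) / 2 * np.diff(u))   # trapezoid rule

print(T.shape, Sigma.shape)
print(f"c' Sigma c = {c @ Sigma @ c:.6f}, int (f'')^2 du = {integral:.6f}")
```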

Now, we can determine an estimated smoothing spline regression function of the MNR model presented in (1) using the RKHS approach by taking the solution of the following optimization:

with a constraint , where and .

Note that determining the solution to Equation (16) is equivalent to determining the solution to the following PWLS optimization:

where ; is a weighted least squares term that represents the goodness of fit, represents a penalty that measures smoothness, and represents a smoothing parameter which controls the trade-off between the goodness of fit and the penalty.

Next, we decompose the penalty presented in (17) as follows:

Because we have we are able to obtain the penalty presented in (17) or (18) as follows:

where . Furthermore, we can write the goodness of fit component in (17) as follows:

Based on Equations (19) and (20), we can express the PWLS optimization presented in (17) as follows:

The solution to (21) can be obtained by taking the partial derivatives with respect to and and setting them equal to zero. In this step, we obtain the estimates of and as follows:

where .

From this step, we obtain the estimation of the smoothing spline regression function of the MNR model presented in (1) or (15) as follows:

where , , , is an identity matrix with dimension , and .
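A minimal numerical sketch of this kind of closed-form solution for a single response is given below. It assumes the cubic case ($m = 2$) on $[0, 1]$ with the kernel $R_1$ of Wahba's construction, a diagonal weight matrix $\mathbf{W} = \operatorname{diag}(w_1, \ldots, w_n)$, and the objective $(\mathbf{y} - \mathbf{T}\mathbf{d} - \boldsymbol{\Sigma}\mathbf{c})^\top \mathbf{W} (\mathbf{y} - \mathbf{T}\mathbf{d} - \boldsymbol{\Sigma}\mathbf{c}) + n\lambda\, \mathbf{c}^\top \boldsymbol{\Sigma} \mathbf{c}$. Setting the partial derivatives to zero gives $\mathbf{c} = \mathbf{M}^{-1}(\mathbf{y} - \mathbf{T}\mathbf{d})$ and $\mathbf{d} = (\mathbf{T}^\top \mathbf{M}^{-1}\mathbf{T})^{-1}\mathbf{T}^\top \mathbf{M}^{-1}\mathbf{y}$ with $\mathbf{M} = \boldsymbol{\Sigma} + n\lambda \mathbf{W}^{-1}$. The scaling $n\lambda$, the matrix names, and the function names are illustrative choices rather than the paper's notation. Because the weight matrix is block-diagonal across responses, the multiresponse fit with separate $\lambda_r$ decouples into one such fit per response.

```python
import numpy as np

def r1(s, t):
    """Kernel of H_1 for cubic smoothing splines (m = 2) on [0, 1]."""
    s, t = np.asarray(s, float)[:, None], np.asarray(t, float)[None, :]
    lo, hi = np.minimum(s, t), np.maximum(s, t)
    return lo**2 * hi / 2 - lo**3 / 6

def fit_weighted_spline(t, y, w, lam):
    """Weighted cubic smoothing spline via the RKHS representer form.

    Minimizes (y - Td - Sc)' W (y - Td - Sc) + n*lam*c'Sc with W = diag(w).
    Returns the fitted values and the hat matrix H (fitted = H @ y).
    """
    n = t.size
    T = np.column_stack([np.ones(n), t])
    S = r1(t, t)
    M_inv = np.linalg.inv(S + n * lam * np.diag(1.0 / w))   # M = S + n*lam*W^{-1}
    D = np.linalg.solve(T.T @ M_inv @ T, T.T @ M_inv)        # d = D @ y
    C = M_inv @ (np.eye(n) - T @ D)                          # c = C @ y
    H = T @ D + S @ C
    return H @ y, H

def fit_mnr(data, weights, lams):
    """Block-diagonal W: fit each response separately with its own lambda_r."""
    return [fit_weighted_spline(t, y, w, lam)[0]
            for (t, y), w, lam in zip(data, weights, lams)]

rng = np.random.default_rng(5)
t = np.sort(rng.uniform(0, 1, 30))
y = np.sin(2 * np.pi * t) + rng.normal(0, 0.2, t.size)   # hypothetical data
w = np.full(t.size, 1 / 0.2**2)                          # W = sigma^{-2} I (assumed known)

fitted, H = fit_weighted_spline(t, y, w, lam=1e-4)
print("effective degrees of freedom tr(H) =", round(np.trace(H), 2))
```

As $\lambda \to 0$ the fit approaches interpolation of the data, and as $\lambda \to \infty$ it approaches the weighted least squares line in $\mathcal{H}_0$, which mirrors the trade-off controlled by the smoothing parameter in (17).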

3.2. Estimating the Symmetric Weight Matrix

Based on the MNR model presented in (3), the from Equation (4) is the covariance matrix of the random error . To obtain the estimated weight matrix , where the weight matrix is the inverse of the covariance matrix, we first consider paired observations , ; that follow the MNR model presented in (3). Second, supposing that is a multivariate (i.e., N-variate, where ) normally distributed random sample with mean and covariance , we obtain the following likelihood function:

Because and , the likelihood function presented in (23) can be written as follows:

Next, based on (24), the estimated weight matrix can be obtained by carrying out the following optimization:

According to [45], the maximum value of each component of the likelihood function in Equation (25) can be determined using the following equations:

We may express the estimated smoothing spline regression function presented in (22) as follows:

where , and .

Hence, the maximum likelihood estimator for the weight matrix is provided by:

This shows that the estimated weight matrix obtained above is a symmetric matrix; specifically, it is a block-diagonal matrix whose diagonal blocks are the estimated weight matrices of the first response, the second response, and so on, up to the pth response.
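Under the additional assumption, made here only for illustration, that the errors of response $r$ are independent with a common variance $\sigma_r^2$, each diagonal block reduces to $\hat{\sigma}_r^{-2}\mathbf{I}_{n_r}$ with $\hat{\sigma}_r^2 = n_r^{-1}\|\mathbf{y}_r - \hat{\mathbf{y}}_r\|^2$, the maximum likelihood estimate, which divides by $n_r$ rather than $n_r - 1$. The helper below, whose name is hypothetical, computes these blocks from observed and fitted values.

```python
import numpy as np

def estimate_weight_blocks(ys, fitted):
    """Estimate W_r = sigma_r^{-2} I_{n_r} for each response from its residuals.

    Assumes i.i.d. N(0, sigma_r^2) errors within response r (illustrative assumption).
    """
    blocks = []
    for y_r, yhat_r in zip(ys, fitted):
        resid = np.asarray(y_r, float) - np.asarray(yhat_r, float)
        sigma2_hat = resid @ resid / resid.size    # ML estimate: divide by n_r
        blocks.append(np.eye(resid.size) / sigma2_hat)
    return blocks

ys = [[1.0, 2.0, 3.0], [0.0, 1.0]]
fitted = [[1.5, 2.0, 2.5], [0.5, 0.5]]
W_blocks = estimate_weight_blocks(ys, fitted)
print([np.diag(B) for B in W_blocks])
```

In this toy input the residual variances are 1/6 and 1/4, so the blocks are $6\mathbf{I}_3$ and $4\mathbf{I}_2$.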

3.3. Estimating Optimal Smoothing Parameters

In MNR modeling, selecting the optimal smoothing parameter value λ cannot be omitted and is crucial for obtaining a good fit of the regression function of the MNR model based on the smoothing spline estimator. According to [46], several criteria can be used to select λ, including minimizing cross-validation (CV), generalized cross-validation (GCV), Mallows’ $C_p$, and Akaike’s information criterion (AIC). However, according to [47], Mallows’ $C_p$ and GCV are the most satisfactory criteria for good regression function fitting based on the spline estimator.

In this section, we determine the optimal smoothing parameter value for good regression function fitting of the MNR model (1). Taking into account Equation (26), we may express the estimated smoothing spline regression function presented in (22) as follows:

where , . The mean squared error (MSE) of the estimated smoothing spline regression function presented in (26) is provided by

Hereinafter, we define this function as follows:

Therefore, based on (27), we can obtain the optimal smoothing parameter value, , by taking the solution of the following optimization:

where $\mathbb{R}^{+}$ represents the set of positive real numbers and .

Thus, the optimal smoothing parameter value is obtained by minimizing the function in (27), which is called the generalized cross-validation (GCV) function [1].
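Because the explicit form of (27) is not reproduced here, the sketch below assumes the common weighted form $\mathrm{GCV}(\boldsymbol{\lambda}) = N^{-1}\|\mathbf{W}^{1/2}(\mathbf{I} - \mathbf{H}(\boldsymbol{\lambda}))\mathbf{y}\|^2 / [N^{-1}\operatorname{tr}(\mathbf{I} - \mathbf{H}(\boldsymbol{\lambda}))]^2$, which may differ in detail from (27). The hat matrix is block-diagonal, built from per-response weighted cubic smoothing splines in the RKHS representer form ($m = 2$ on $[0, 1]$, assumed), and the optimal $(\lambda_1, \lambda_2, \lambda_3)$ is found by a plain grid search. The data-generating functions, variances, weights, and grid are hypothetical.

```python
import itertools
import numpy as np

def r1(s, t):
    """Kernel of H_1 for cubic smoothing splines (m = 2) on [0, 1]."""
    s, t = np.asarray(s, float)[:, None], np.asarray(t, float)[None, :]
    lo, hi = np.minimum(s, t), np.maximum(s, t)
    return lo**2 * hi / 2 - lo**3 / 6

def hat_matrix(t, w, lam):
    """Hat matrix of the weighted cubic smoothing spline with penalty n*lam*c'Sc."""
    n = t.size
    T = np.column_stack([np.ones(n), t])
    S = r1(t, t)
    M_inv = np.linalg.inv(S + n * lam * np.diag(1.0 / w))
    D = np.linalg.solve(T.T @ M_inv @ T, T.T @ M_inv)
    return T @ D + S @ M_inv @ (np.eye(n) - T @ D)

def gcv(data, weights, lams):
    """Weighted GCV with a block-diagonal hat matrix (one block per response)."""
    rss, resid_df, N = 0.0, 0.0, 0
    for (t, y), w, lam in zip(data, weights, lams):
        H = hat_matrix(t, w, lam)
        r = y - H @ y
        rss += r @ (w * r)                 # contribution to ||W^{1/2}(I - H) y||^2
        resid_df += t.size - np.trace(H)   # contribution to tr(I - H)
        N += t.size
    return (rss / N) / (resid_df / N) ** 2

rng = np.random.default_rng(3)
funcs = [lambda t: np.sin(2 * np.pi * t), lambda t: t**2, lambda t: np.cos(3 * t)]
sig = [0.2, 0.4, 0.1]                      # hypothetical error standard deviations
data = []
for f_r, s in zip(funcs, sig):
    t = np.sort(rng.uniform(0, 1, 40))
    data.append((t, f_r(t) + rng.normal(0, s, t.size)))
weights = [np.full(40, 1 / s**2) for s in sig]   # W_r = sigma_r^{-2} I, treated as known

grid = 10.0 ** np.arange(-7, 0)            # candidate values for each lambda_r
best = min(itertools.product(grid, repeat=3), key=lambda lams: gcv(data, weights, lams))
print("GCV-optimal (lambda_1, lambda_2, lambda_3):", best)
print("minimum GCV:", round(gcv(data, weights, best), 4))
```

A full grid grows as (grid size)^p, so for many responses a coordinate-wise or numerical optimizer over $\log \lambda_r$ would usually be preferred; the grid keeps the sketch transparent.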

3.4. Simulation Study

In this section, we provide a simulation study for estimating the smoothing spline regression function of the MNR model, where the performance of the proposed MNR model estimation method depends on the selection of an optimal smoothing parameter value. For example, we generate samples with size from an MNR model, namely, a three-response nonparametric regression model, as follows:

where and with correlations , , and variances , , . Based on the results of this simulation, we obtain a minimum generalized cross-validation (GCV) value of 2.526286 and three optimal smoothing parameter values, which are (for the first response), (for the second response), and (for the third response).

Next, we present an illustration of the effects of the smoothing parameters on the estimation results of the MNR Model by comparing three kinds of different smoothing parameter values, namely, , , and , which represent small smoothing parameter values; , , and , which represent optimal smoothing parameter values; and , , and , which represent large smoothing parameter values. In the following table and figures, we provide the results of this simulation study.

Table 1 shows that the smoothing parameter values of , , and are the optimal smoothing parameter values, as these smoothing parameters have the lowest GCV value (2.526286) among all the others. Thus, according to (28), these smoothing parameter values are the optimal smoothing parameters. We can write them as , , and . The optimal smoothing parameters provide the best estimation results for the MNR model presented in (29).

Table 1.

Comparison of the estimation results of the MNR model in (29) for three kinds of smoothing parameter values.

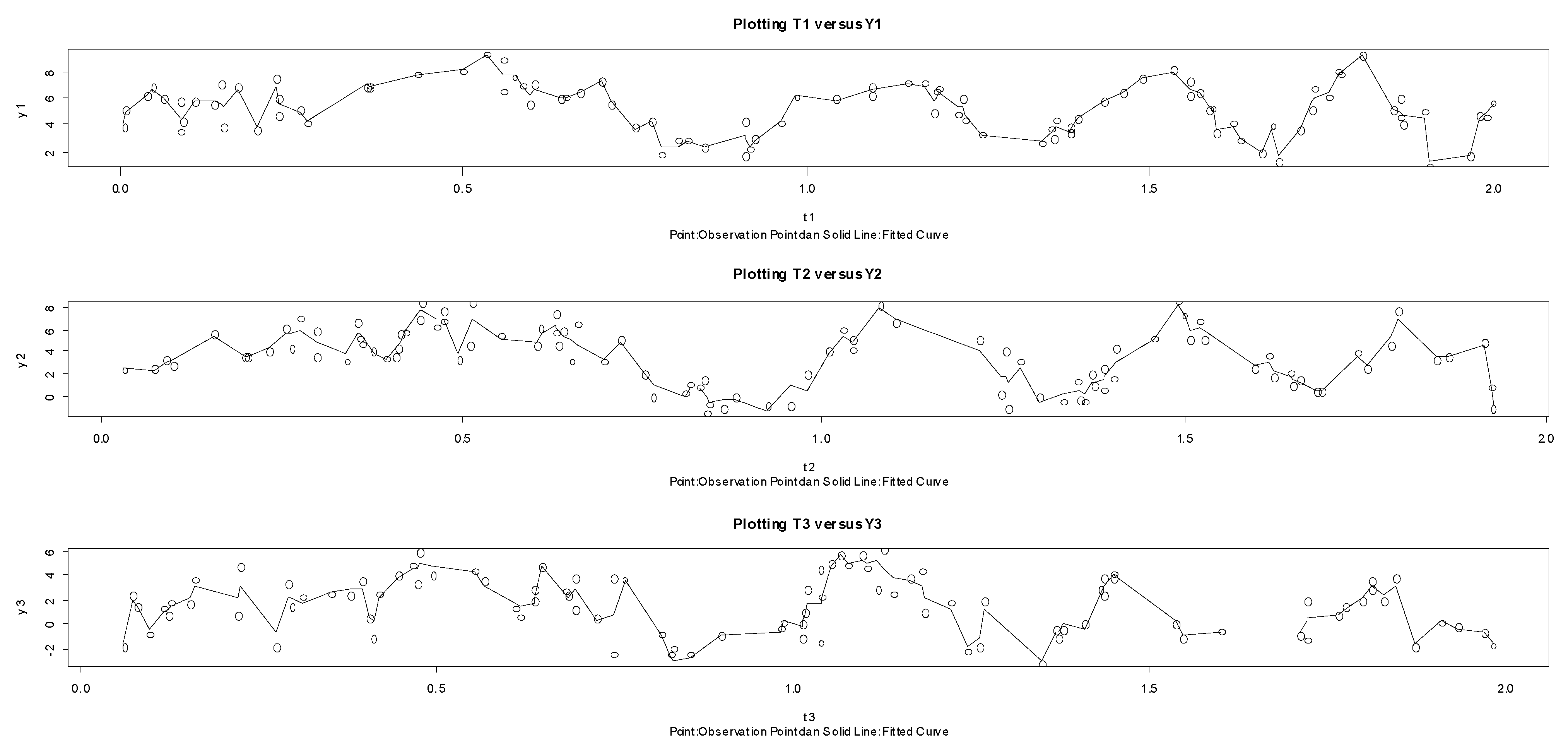

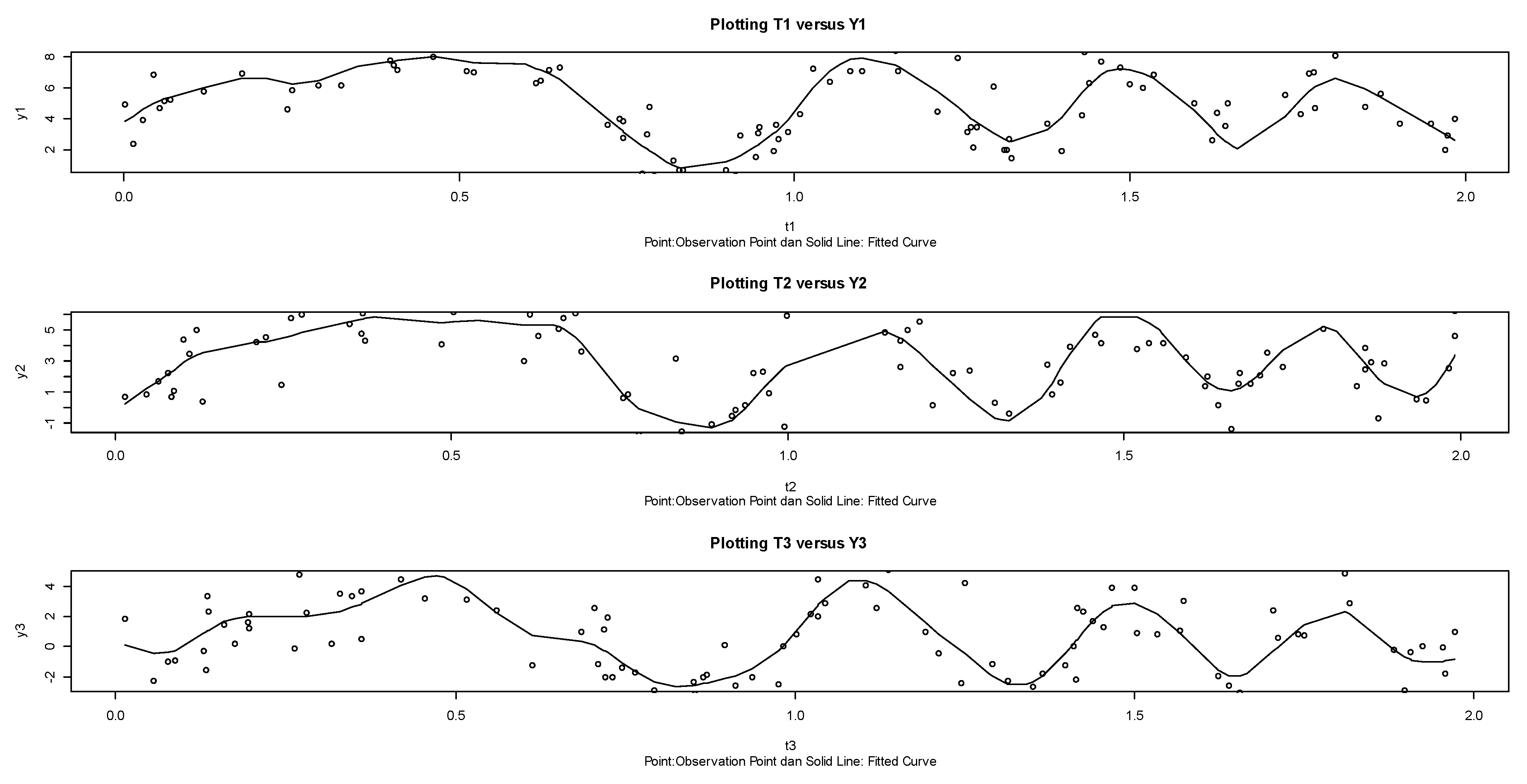

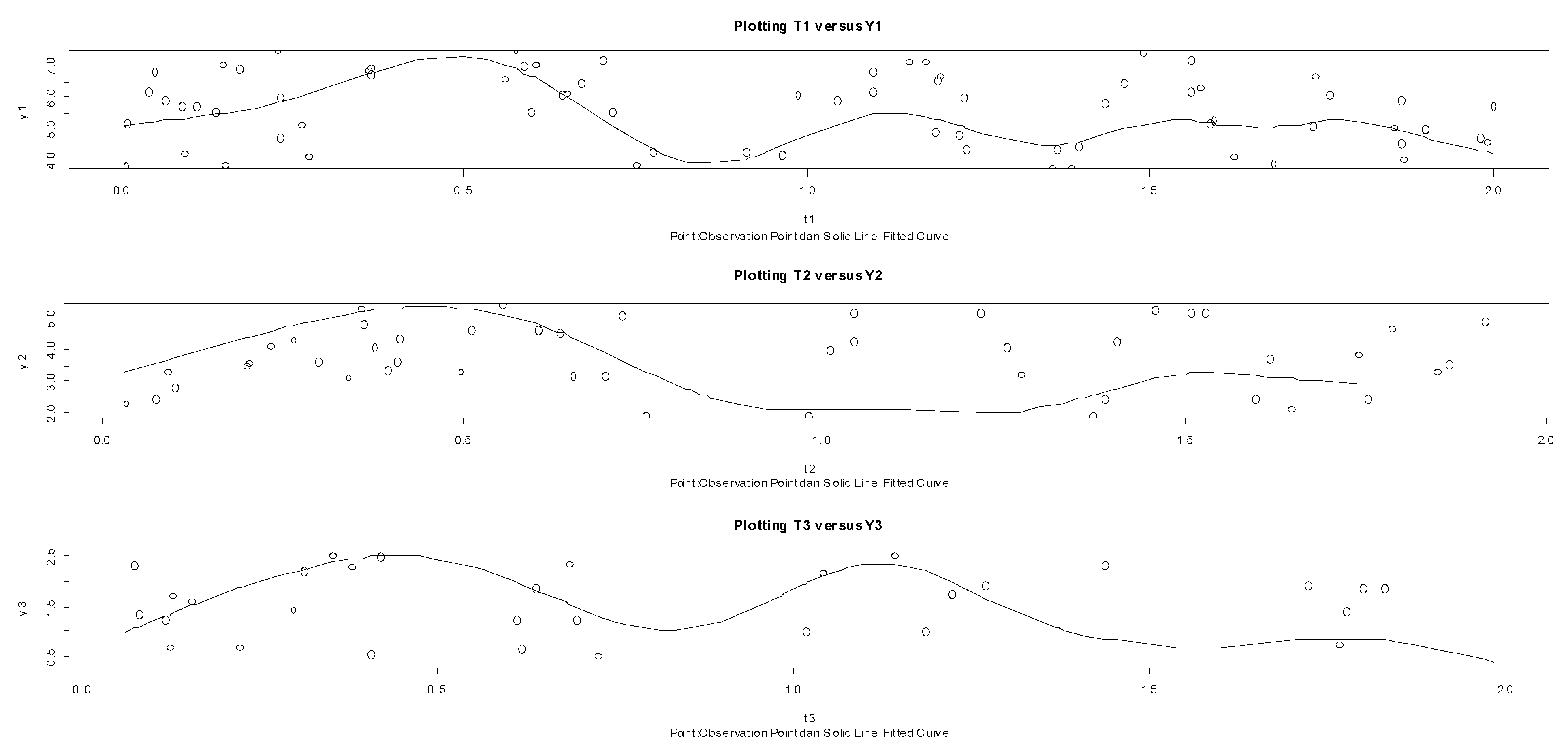

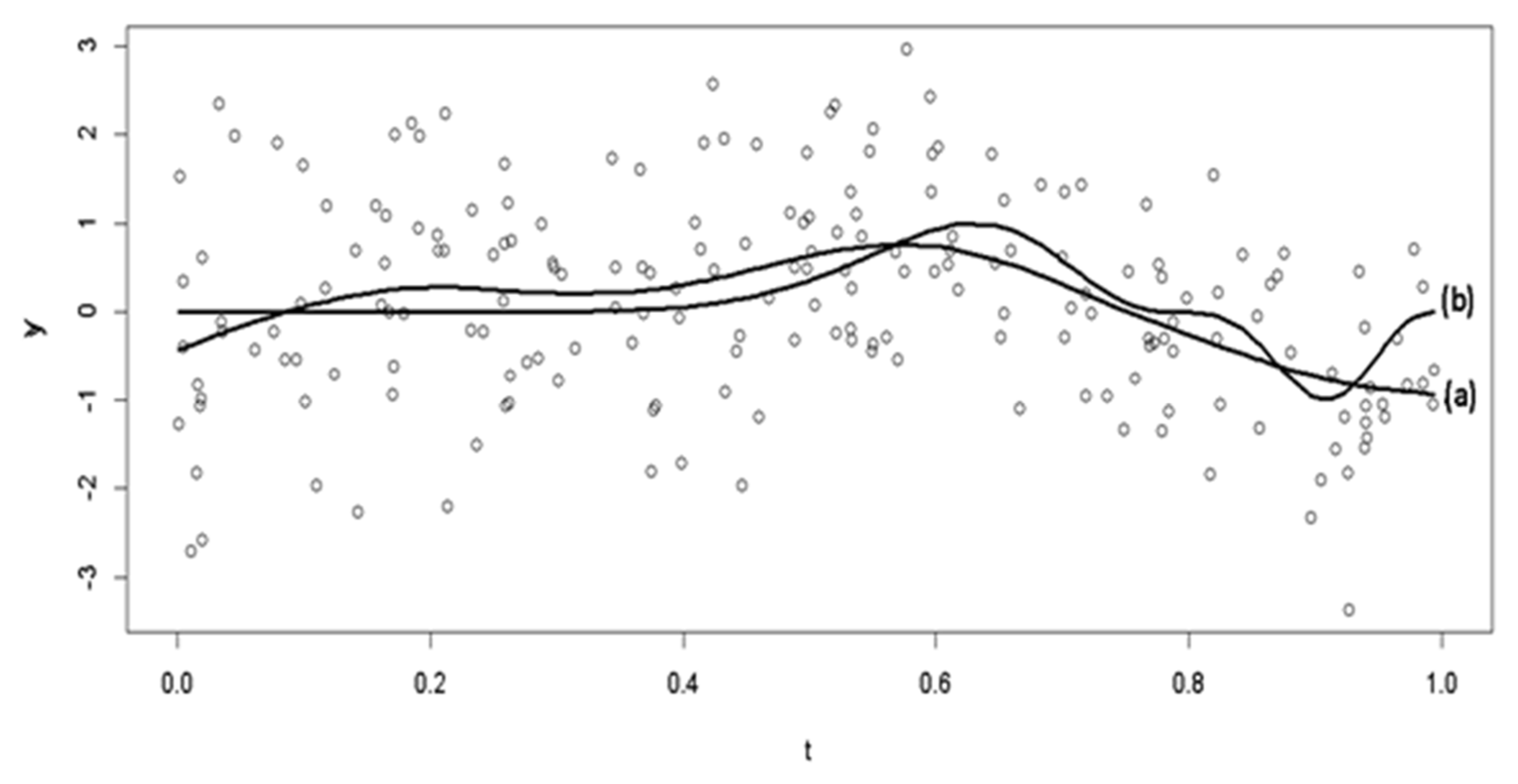

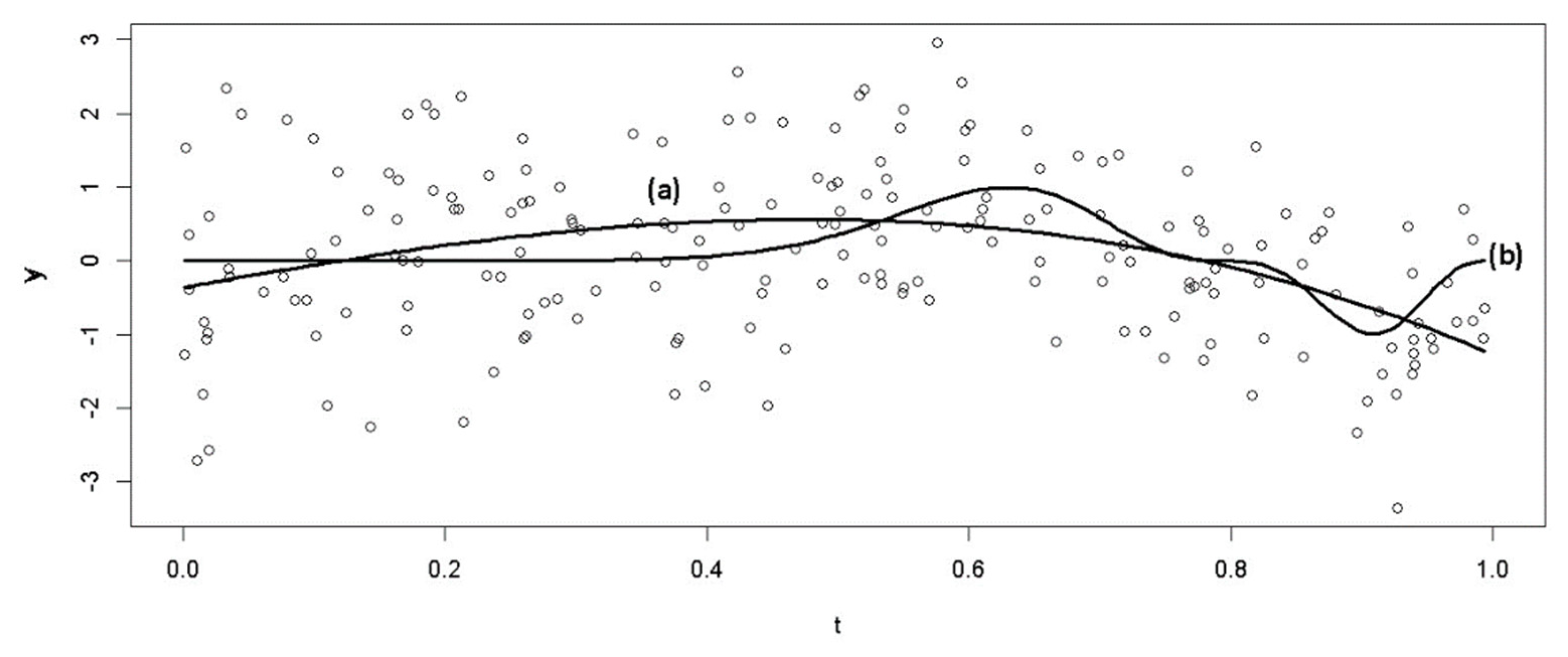

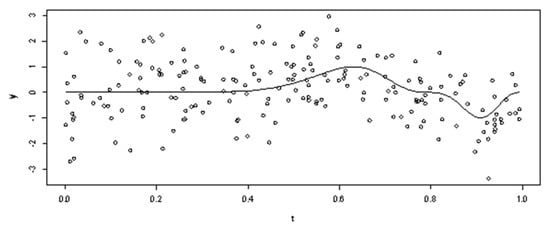

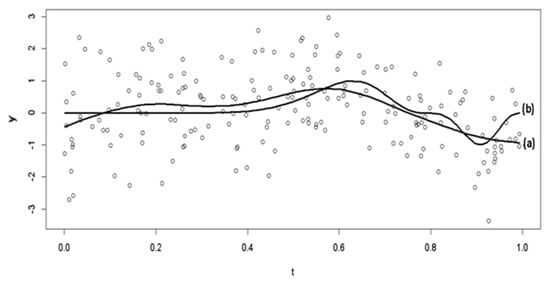

The plots of the estimated regression function of the MNR model presented in (29) for the three different smoothing parameters are shown in Figure 1, Figure 2 and Figure 3.

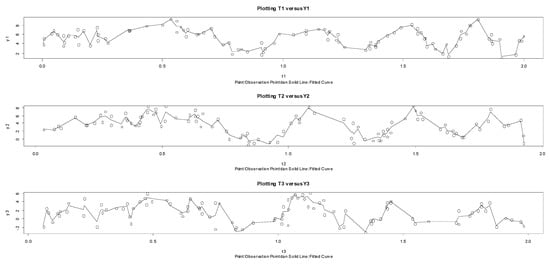

Figure 1.

Plots of the estimated MNR model in (29) for small smoothing parameters.

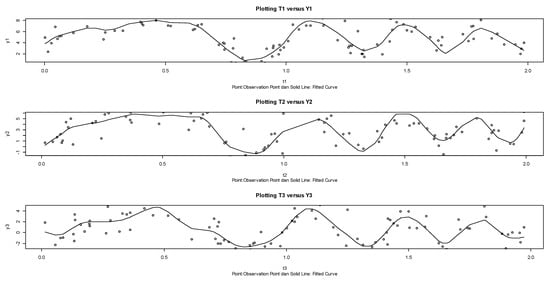

Figure 2.

Plots of estimated MNR Model in (29) for optimal smoothing parameters.

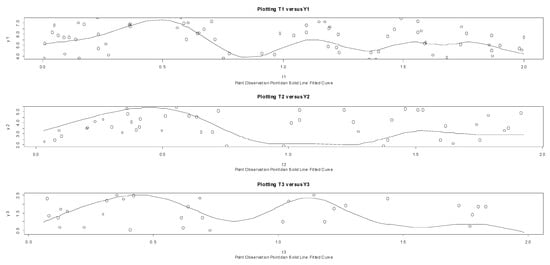

Figure 3.

Plots of the estimated MNR model in (29) for large smoothing parameters.

Figure 1 shows that for all responses the small smoothing parameter values provide estimates of the regression functions of the MNR model presented in (29) that are too rough, namely, () for the first response, () for the second response, and () for the third response.

Figure 2 shows that the optimal smoothing parameter values provide the best estimates of the regression functions of the MNR model presented in (29) for all responses, namely, () for the first response, () for the second response, and () for the third response.

Figure 3 shows that for all responses the large smoothing parameter values provide estimates of the regression functions of the MNR model presented in (29) that are too smooth, namely, () for the first response, () for the second response, and () for the third response.

3.5. Investigating the Consistency of the Smoothing Spline Regression Function Estimator

We first investigate the asymptotic properties of the smoothing spline regression function estimator based on the integrated mean square error (IMSE) criterion. We extend the IMSE proposed by [14] from the uniresponse case to the multiresponse case. Suppose that we decompose the IMSE into two components, and , as follows:

where and . Furthermore, in order to investigate the asymptotic property of , we state the solution of the PWLS optimization in the following theorem.

Theorem 1.

If is the solution that minimizes the following penalized weighted least squares (PWLS):

Proof of Theorem 1.

In Section 3.1, we obtained the solution to the PWLS in (31), that is, , where as provided in (22), , , , , and is an identity matrix. Next, if we substitute into Equation (31), we find that the value that minimizes PWLS (32) is

Thus, Theorem 1 is proved. □

Furthermore, we investigate the asymptotic property of . For this purpose, we first provide the following assumptions.

Assumptions (A):

- (A1)

- For every , and .

- (A2)

- For every , .

- (A3)

- For any continuous function and , , the following statements are satisfied [48,49]:

- (a)

- , as .

- (b)

- dt, as .

- (c)

- , as .

Next, given Assumptions (A), the asymptotic property of is stated in Theorem 2.

Theorem 2.

If the Assumptions in (A) hold, then, as .

Proof of Theorem 2.

Suppose is the value which minimizes the following penalized weighted least squares (PWLS):

Hence, we obtain . Thus, for every we have

Because , we obtain

Thus, we have the following relationship:

for every .

Because every satisfies the relationship in (33), by taking , we have

where $O(\cdot)$ denotes “big O” notation. Details about this notation can be found in [14,50].

Thus, Theorem 2 is proved. □

Furthermore, the asymptotic property of is provided in Theorem 3.

Theorem 3.

If Assumptions (A) hold and , then.

Proof of Theorem 3.

To investigate the asymptotic property of , we first define the following function:

where ; ; and .

Hence, for any we have

Next, by taking the solution to , for we have

Furthermore, if are the bases for the natural spline and , , then according to [51] this implies that

where is the rth response diagonal element of matrix H in (26).

Now, because Equation (35) holds for every , , it follows that determining the solution to Equation (35) is equivalent to determining the value of that satisfies the following equation:

We can express Equation (36) in matrix notation as follows:

where , , , and .

Hence, we obtain the estimator for in Equation (37) as follows:

where (here, is an RKHS) and is perpendicular to .

Thus, the estimator for the regression function can be expressed as follows:

Hence, for Equation (38) results in

Thus, for we have

From this step, for we have

In the next step, Refs. [51,52] provide an approximation for as follows:

Furthermore, Ref. [51] leads to the following result:

Next, using integral approximation [51], for we have

where . Thus, Theorem 3 is proved. □

Here, based on Theorems 2 and 3, we obtain the asymptotic property of the smoothing spline regression function estimator of the MNR model presented in (1) based on the integrated mean square error (IMSE) criterion, as follows:

where and .

The consistency of the smoothing spline regression function estimator of the MNR model presented in (1) is provided by the following theorem.

Theorem 4.

If is a smoothing spline estimator for regression function of the MNR model presented in (1), then is a consistent estimator for based on the integrated mean square error (IMSE) criterion.

Proof of Theorem 4.

Based on Equation (39), we have the following relationship:

Hence, according to [48], for any small positive number we have

Because , based on Equation (40) and applying properties of probability, we have

Equation (41) means that the smoothing spline regression function estimator of the multiresponse nonparametric regression (MNR) model is a consistent estimator based on the integrated mean square error (IMSE) criterion. Thus, Theorem 4 is proved. □
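The consistency statement can be illustrated numerically in a simplified setting. The sketch below assumes a single response with equal weights, an equispaced design on $[0, 1]$, a hypothetical true function $f(t) = \sin(2\pi t)$, error variance $\sigma^2 = 0.1$, and a smoothing parameter shrinking at the rate $\lambda_n \propto n^{-2m/(2m+1)} = n^{-4/5}$ for $m = 2$ (a standard choice, assumed here rather than taken from this paper). Because the estimator is linear, $\hat{\mathbf{f}} = \mathbf{H}\mathbf{y}$, the mean squared error averaged over the design points, a discrete proxy for the IMSE, can be computed exactly as the squared bias $n^{-1}\|(\mathbf{I} - \mathbf{H})\mathbf{f}\|^2$ plus the variance $n^{-1}\sigma^2 \operatorname{tr}(\mathbf{H}\mathbf{H}^\top)$, without Monte Carlo.

```python
import numpy as np

def r1(s, t):
    """Kernel of H_1 for cubic smoothing splines (m = 2) on [0, 1]."""
    s, t = np.asarray(s, float)[:, None], np.asarray(t, float)[None, :]
    lo, hi = np.minimum(s, t), np.maximum(s, t)
    return lo**2 * hi / 2 - lo**3 / 6

def hat_matrix(t, lam):
    """Hat matrix of the unweighted cubic smoothing spline (W = I), penalty n*lam*c'Sc."""
    n = t.size
    T = np.column_stack([np.ones(n), t])
    S = r1(t, t)
    M_inv = np.linalg.inv(S + n * lam * np.eye(n))
    D = np.linalg.solve(T.T @ M_inv @ T, T.T @ M_inv)
    return T @ D + S @ M_inv @ (np.eye(n) - T @ D)

def discrete_imse(n, lam, sigma2=0.1):
    """Exact average MSE over the design points: (squared bias, variance)."""
    t = np.linspace(0, 1, n)
    f = np.sin(2 * np.pi * t)                        # hypothetical true function
    H = hat_matrix(t, lam)
    bias2 = np.mean(((np.eye(n) - H) @ f) ** 2)
    var = sigma2 * np.trace(H @ H.T) / n
    return bias2, var

sizes = [25, 50, 100, 200]
results = [discrete_imse(n, 1e-4 * n ** (-0.8)) for n in sizes]
imse = [b + v for b, v in results]
for n, (b, v), total in zip(sizes, results, imse):
    print(f"n = {n:4d}: bias^2 = {b:.2e}, variance = {v:.2e}, IMSE = {total:.2e}")
```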

3.6. Illustration of Theorems

Suppose that paired observations follow the multiresponse nonparametric regression (MNR) model:

Next, for every , we assume and such that . Hence, we have a nonparametric regression model as follows:

Based on the model presented in (43), we present an illustration related to the four theorems in Section 3.5 through a simulation study with sample size of . Based on this model, let , where is generated from a uniform (0,1) distribution and is generated from a standard normal distribution. The first step is to create plots of the observation values and , as shown in Figure 4.

Figure 4.

Plots of observation values and .

It can be seen from Figure 4 that the variance is not constant: for larger values of , the variance tends to be larger. Next, the data are approximated by a weighted spline with weight , . The next step is to determine the order, number, and locations of the knots. Here, we use a weighted cubic spline model with two knots, namely, 0.5 and 0.785. This weighted cubic spline model can be written as follows:

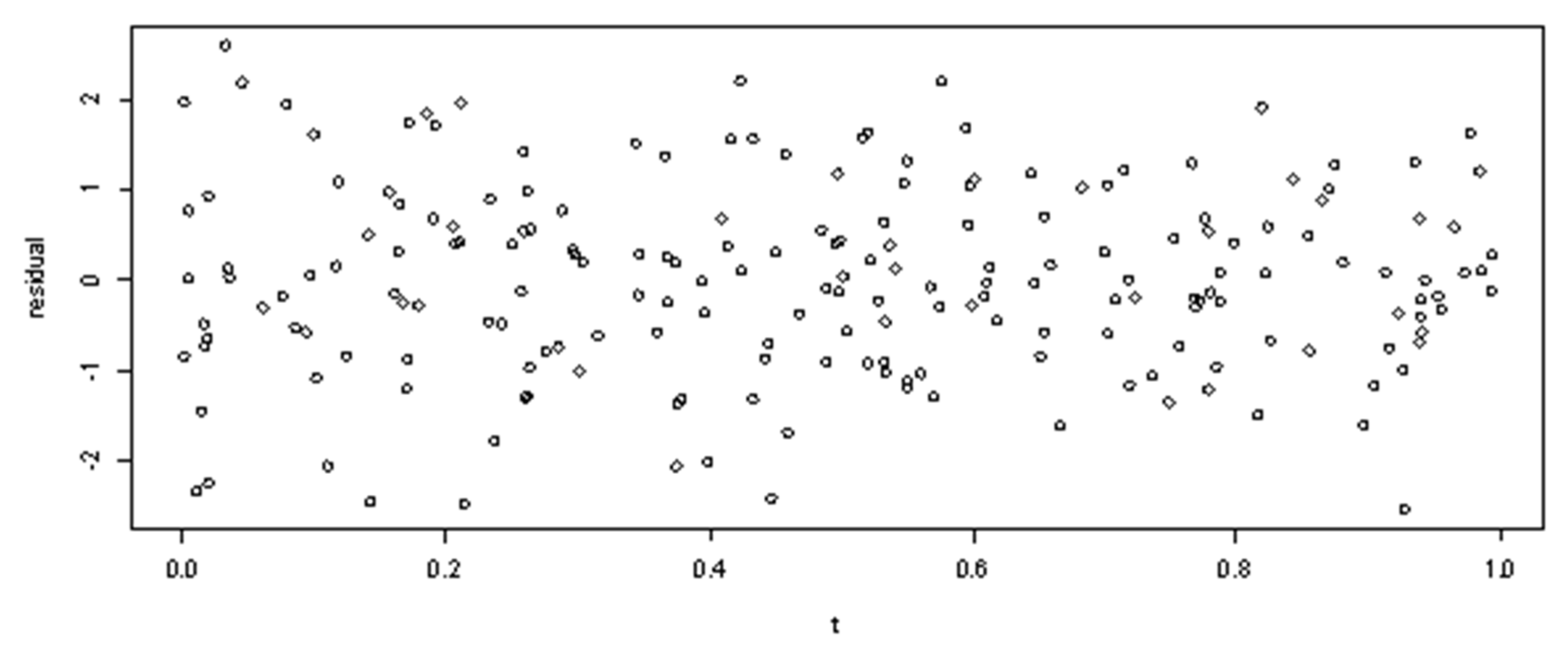

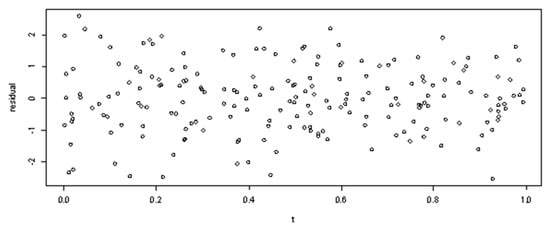

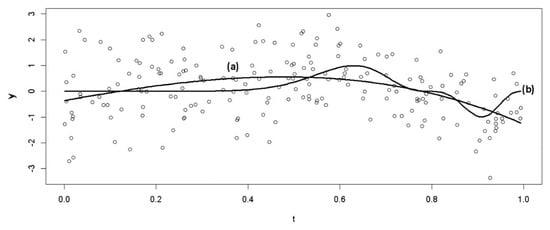

The plot of this weighted cubic spline with two knot points is shown in Figure 5. The plots of the residuals and the estimated weighted cubic spline are shown in Figure 6.

Figure 5.

Plots of (a) weighted cubic spline with two knot points and (b) curve of .

Figure 6.

Plots of residuals and estimation values of weighted cubic spline.

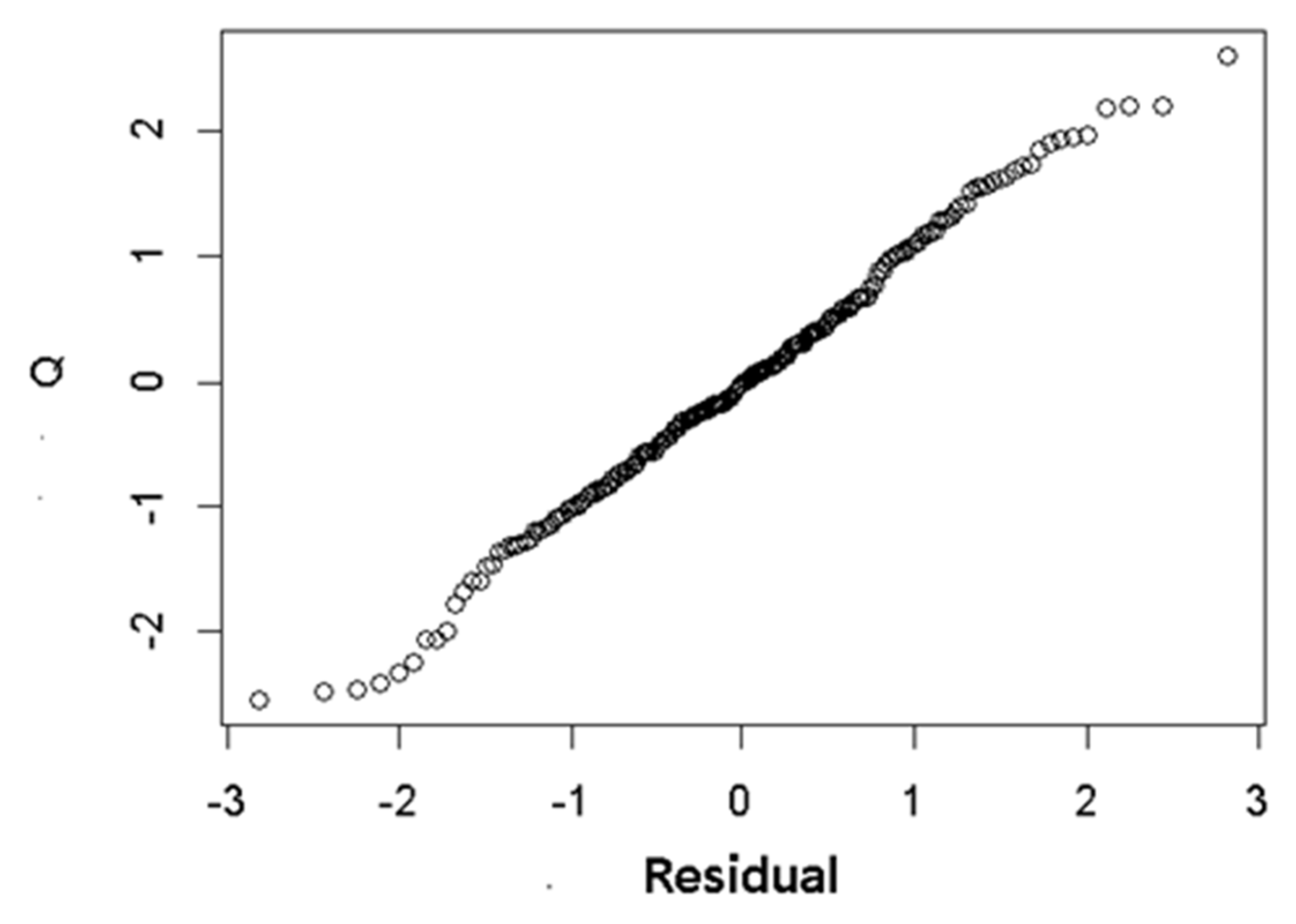

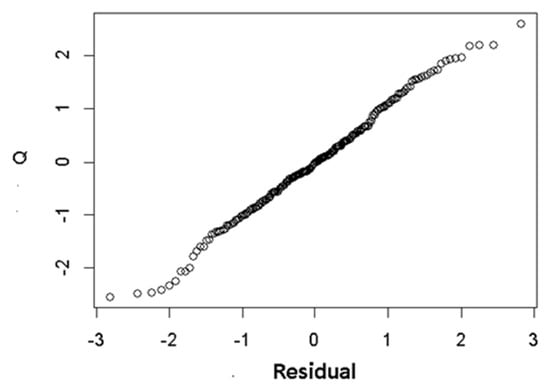

Figure 6 shows that, with weight , the variance of the response variable y tends to be constant. Figure 7 provides a normality plot of the residuals of the weighted cubic spline model; it shows no indication of deviation from the normal distribution.
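The two diagnostics described above, constant spread after weighting and approximate normality of the residuals, can also be checked numerically. This is a minimal sketch that assumes hypothetical mean and noise functions and inverse-variance weights from a known noise SD. It does two things:

- It compares the residual spread for x ≥ 0.5 with the spread for x < 0.5. A ratio near 1 suggests constant variance.
- It reports the sample skewness and excess kurtosis. Both are near 0 for normal residuals.

These are simple numeric counterparts to Figures 6 and 7, not the paper's procedure.

```python
import numpy as np

KNOTS = (0.5, 0.785)  # knot points stated in the text


def true_f(x):
    return np.sin(2 * np.pi * x) + 2 * x  # HYPOTHETICAL mean function


def noise_sd(x):
    return 0.1 + x  # HYPOTHETICAL noise SD, increasing in x


def cubic_spline_basis(x, knots=KNOTS):
    cols = [np.ones_like(x), x, x**2, x**3]
    cols += [np.maximum(x - k, 0.0) ** 3 for k in knots]
    return np.column_stack(cols)


def spread_ratio(r, x):
    """SD of residuals for x >= 0.5 divided by SD for x < 0.5 (about 1 if variance is constant)."""
    return r[x >= 0.5].std(ddof=1) / r[x < 0.5].std(ddof=1)


def skew_and_excess_kurtosis(r):
    """Sample skewness and excess kurtosis (both about 0 for normal data)."""
    z = (r - r.mean()) / r.std()
    return np.mean(z**3), np.mean(z**4) - 3.0


rng = np.random.default_rng(2)
x = np.sort(rng.uniform(0.0, 1.0, 400))
y = true_f(x) + noise_sd(x) * rng.standard_normal(x.size)
w = 1.0 / noise_sd(x) ** 2  # ASSUMPTION: inverse-variance weights
sw = np.sqrt(w)

X = cubic_spline_basis(x)
beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
raw_resid = y - X @ beta
std_resid = sw * raw_resid  # weighted (standardised) residuals

print("spread ratio, raw residuals:     ", round(spread_ratio(raw_resid, x), 2))
print("spread ratio, weighted residuals:", round(spread_ratio(std_resid, x), 2))
print("skewness, excess kurtosis:", np.round(skew_and_excess_kurtosis(std_resid), 2))
```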

Figure 7.

Plot of weighted cubic spline residual normality.

Next, for comparison, we fit model (43) with a weighted cubic polynomial model with weight , . The fit of the weighted cubic polynomial model is shown in Figure 8. Figure 8 shows that the weighted cubic polynomial approximates the function globally. In contrast, the weighted cubic spline with two knot points in (44) approximates the function more locally. Thus, the weighted cubic spline model with two knot points is an adequate approximation model for model (43).
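The comparison with a weighted cubic polynomial can be sketched by fitting both models with the same weights. This is a minimal sketch that assumes hypothetical mean and noise functions and inverse-variance weights. Because the spline basis contains the cubic polynomial columns, the spline's weighted residual sum of squares can never exceed the polynomial's. The additional knot terms are what let the spline adapt locally.

```python
import numpy as np

KNOTS = (0.5, 0.785)  # knot points stated in the text


def true_f(x):
    return np.sin(2 * np.pi * x) + 2 * x  # HYPOTHETICAL mean function


def noise_sd(x):
    return 0.1 + x  # HYPOTHETICAL noise SD


def basis(x, knots=()):
    """Cubic polynomial columns plus one truncated cubic (x - k)_+^3 per knot."""
    cols = [np.ones_like(x), x, x**2, x**3]
    cols += [np.maximum(x - k, 0.0) ** 3 for k in knots]
    return np.column_stack(cols)


def weighted_fit(X, y, w):
    """Return fitted values and the weighted residual sum of squares."""
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    fitted = X @ beta
    return fitted, float(np.sum(w * (y - fitted) ** 2))


rng = np.random.default_rng(3)
x = np.sort(rng.uniform(0.0, 1.0, 400))
y = true_f(x) + noise_sd(x) * rng.standard_normal(x.size)
w = 1.0 / noise_sd(x) ** 2  # ASSUMPTION: inverse-variance weights

fit_poly, rss_poly = weighted_fit(basis(x), y, w)       # weighted cubic polynomial
fit_spl, rss_spl = weighted_fit(basis(x, KNOTS), y, w)  # weighted cubic spline, two knots

err_poly = np.sqrt(np.mean(w * (fit_poly - true_f(x)) ** 2))
err_spl = np.sqrt(np.mean(w * (fit_spl - true_f(x)) ** 2))
print(f"weighted RSS: polynomial {rss_poly:.1f}, spline {rss_spl:.1f}")
print(f"weighted RMS error vs f: polynomial {err_poly:.3f}, spline {err_spl:.3f}")
```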

Figure 8.

Plots of (a) weighted cubic polynomial and (b) curve .

Based on the illustration above and Figures 4 to 8, if is an estimator for model (43), that is, if is the penalized weighted least squares (PWLS) solution, then , from Equation (44), is also an estimator for model (43), such that , as stated in Theorem 1.
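For orientation, the block below shows the textbook single-response form of a penalized weighted least squares criterion. It is not the paper's multiresponse PWLS criterion, which couples the responses through a symmetric weight matrix and is defined earlier in the paper. In this reference form:

- $w_i>0$ are observation weights.
- $\lambda>0$ is the smoothing parameter.
- $W_2^m[a,b]$ is the Sobolev space of functions on $[a,b]$ with a square-integrable $m$-th derivative.

```latex
\hat f_\lambda=\operatorname*{arg\,min}_{f\in W_2^m[a,b]}
\left\{\frac{1}{n}\sum_{i=1}^{n} w_i\bigl(y_i-f(x_i)\bigr)^2
+\lambda\int_a^b\bigl(f^{(m)}(t)\bigr)^2\,dt\right\}
```

For $m=2$, the minimizer is a natural cubic spline with knots at the distinct design points. A small $\lambda$ favours goodness of fit, and a large $\lambda$ favours smoothness.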

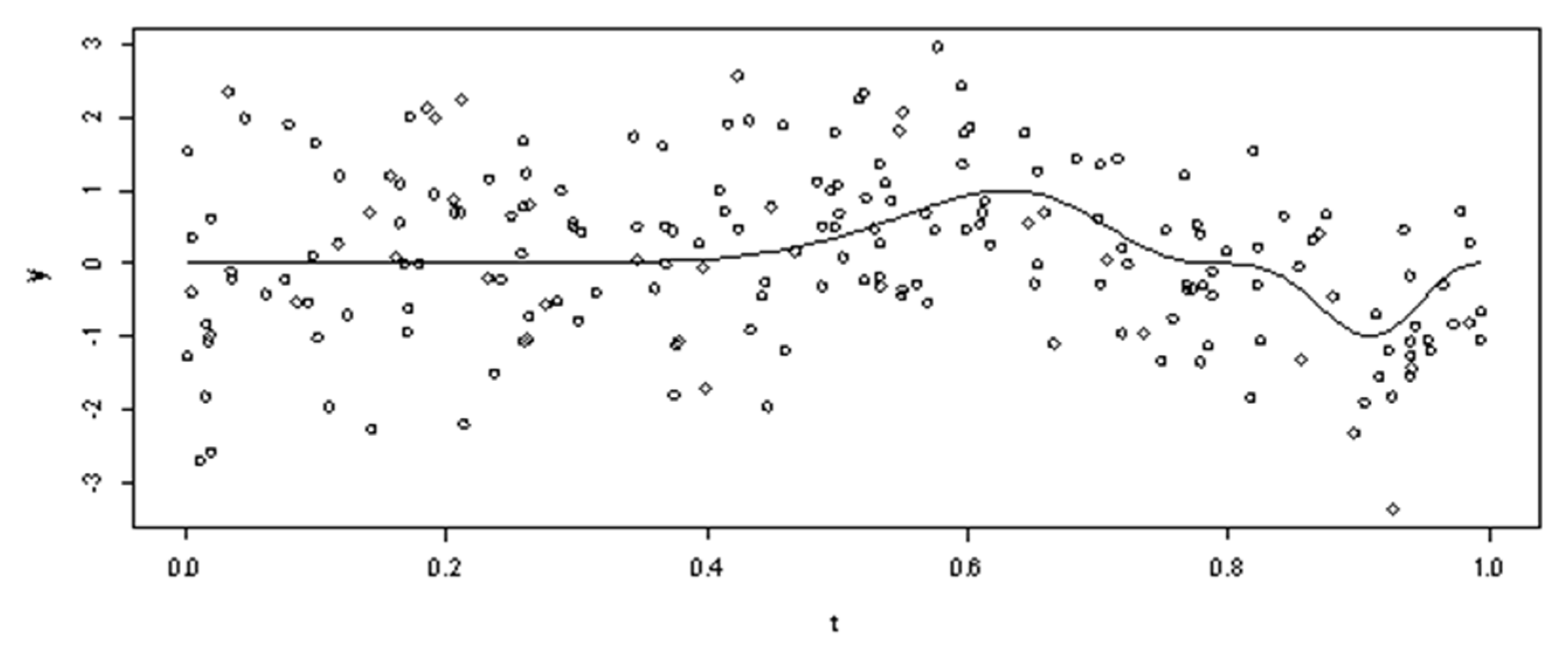

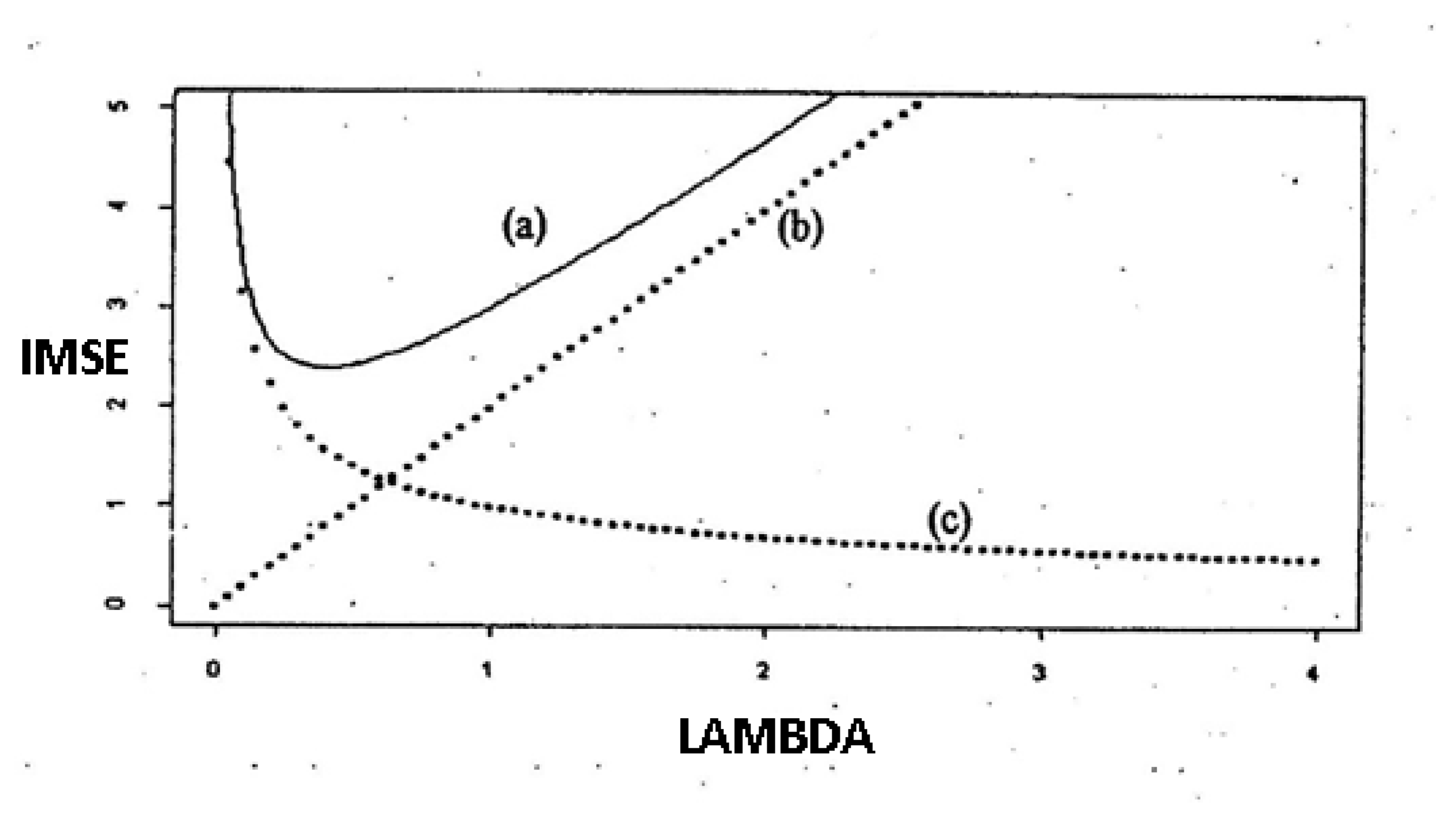

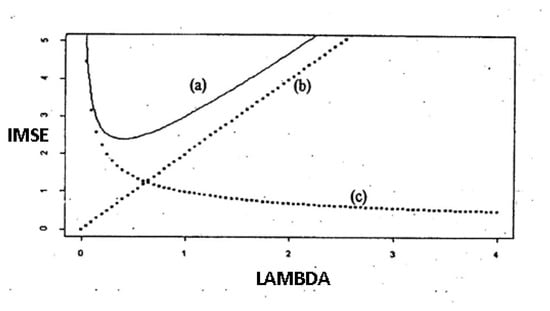

The plots of the asymptotic curves of , , and , where , are shown in Figure 9 [53].

Figure 9.

Plots of asymptotic curves of (a) , (b) , and (c) .

Figure 9 shows that the integrated mean square error (IMSE(λ)) curve, shown as curve (a), is the sum of the squared bias () curve, curve (b), and the variance () curve, curve (c). Figure 9b shows that , as , that is, [14,50,54]. This means that remains bounded as , as stated in Theorem 2. Similarly, Figure 9c shows that , that is, [14,50,54]. This means that remains bounded as , as stated in Theorem 3. Finally, Figure 9 shows that . According to [14,50,54], this means that . In other words, if remains bounded as . According to [14,50,54], for any small positive number we have ; hence, is consistent.
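The decomposition in Figure 9 can be reproduced numerically for a linear smoother. This is a minimal sketch, and it swaps in a penalized regression spline for the paper's RKHS smoothing spline. Its assumptions are:

- The basis is the truncated power basis with the two knots from the text.
- A ridge penalty is placed on the knot coefficients only.
- The design is fixed and equally spaced.
- The mean function and noise SD are hypothetical, and the weights are inverse variances.

Because the fit is linear, $\hat f=H(\lambda)y$, so the bias² and variance are computed exactly rather than by Monte Carlo. IMSE is approximated by a weighted average over the design points.

```python
import numpy as np

KNOTS = (0.5, 0.785)  # knot points stated in the text


def true_f(x):
    return np.sin(2 * np.pi * x) + 2 * x  # HYPOTHETICAL mean function


def noise_sd(x):
    return 0.1 + x  # HYPOTHETICAL noise SD, increasing in x


def basis(x, knots=KNOTS):
    """Truncated power basis: 1, x, x^2, x^3, (x - k)_+^3 for each knot k."""
    cols = [np.ones_like(x), x, x**2, x**3]
    cols += [np.maximum(x - k, 0.0) ** 3 for k in knots]
    return np.column_stack(cols)


def smoother_matrix(X, w, P, lam):
    """H(lam) = X (X'WX + lam P)^{-1} X'W, so fitted values are H(lam) @ y."""
    A = X.T @ (w[:, None] * X) + lam * P
    return X @ np.linalg.solve(A, X.T * w)


x = np.linspace(0.0, 1.0, 200)  # ASSUMPTION: fixed, equally spaced design
f = true_f(x)
w = 1.0 / noise_sd(x) ** 2      # ASSUMPTION: w_i = 1 / Var(y_i)
X = basis(x)
P = np.diag([0.0] * 4 + [1.0] * len(KNOTS))  # ridge penalty on knot coefficients only

lams = np.logspace(-4, 4, 9)
bias2, var = [], []
for lam in lams:
    H = smoother_matrix(X, w, P, lam)
    bias = H @ f - f  # E[f_hat] - f, since E[y] = f
    bias2.append(np.mean(w * bias**2))
    # Cov(f_hat) = H diag(1/w) H'; take the w-weighted average of its diagonal
    var.append(np.sum(w[:, None] * H**2 / w[None, :]) / x.size)
bias2, var = np.array(bias2), np.array(var)
imse = bias2 + var  # discrete, weighted stand-in for IMSE(lambda)

for lam, b, v, m in zip(lams, bias2, var, imse):
    print(f"lambda={lam:8.0e}  bias^2={b:.5f}  var={v:.5f}  IMSE={m:.5f}")
```

In this sketch, increasing λ pushes the fit toward the weighted cubic polynomial, which has larger bias and smaller variance. Decreasing λ recovers the unpenalized weighted cubic spline.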

4. Conclusions

The smoothing spline estimator with the RKHS approach performs well in estimating the MNR model, a nonparametric regression model in which the responses are correlated with each other. The smoothing parameter controls both the goodness of fit and the smoothness of the estimated curve, which makes the estimator well suited for prediction. Therefore, selecting the optimal smoothing parameter value cannot be omitted. It is crucial for a good fit of the MNR regression function when the smoothing spline estimator with the RKHS approach is used. The smoothing spline estimator of the regression function of the MNR model that we obtained is linear with respect to the observations, as shown in Equation (22), and is consistent under the integrated mean square error (IMSE) criterion. The main contribution of this study lies in the easier estimation of a multiresponse nonparametric regression model with correlated responses, using the RKHS approach based on a smoothing spline estimator. This approach is simpler, faster, and more efficient, because estimation is carried out simultaneously for all responses rather than response by response. In addition, the theory generated in this study can be used to estimate the nonparametric component of the multiresponse semiparametric regression model used to model Indonesian toddlers’ standard growth charts. In the future, the results of this study can be further developed within the scope of statistical inference, especially for testing hypotheses involving multiresponse nonparametric regression models and multiresponse semiparametric regression models.
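The conclusion stresses two properties: the estimator is linear in the observations, and the smoothing parameter must be chosen well. This is a single-response, minimal sketch of both. Its assumptions are:

- A penalized regression spline stands in for the paper's RKHS smoothing spline. It uses the truncated power basis with knots 0.5 and 0.785 and a ridge penalty on the knot coefficients.
- λ is selected by weighted generalized cross-validation (GCV). This is one common criterion and not necessarily the one used in the paper.
- The mean and noise functions are hypothetical.

```python
import numpy as np

KNOTS = (0.5, 0.785)  # knot points stated in the text


def true_f(x):
    return np.sin(2 * np.pi * x) + 2 * x  # HYPOTHETICAL mean function


def noise_sd(x):
    return 0.1 + x  # HYPOTHETICAL noise SD


def basis(x, knots=KNOTS):
    cols = [np.ones_like(x), x, x**2, x**3]
    cols += [np.maximum(x - k, 0.0) ** 3 for k in knots]
    return np.column_stack(cols)


def smoother_matrix(X, w, P, lam):
    """Linear smoother: fitted values are H(lam) @ y for any response vector y."""
    A = X.T @ (w[:, None] * X) + lam * P
    return X @ np.linalg.solve(A, X.T * w)


def gcv(H, y, w):
    """Weighted GCV(lam) = [(1/n) sum_i w_i r_i^2] / (1 - tr(H)/n)^2."""
    n = y.size
    r = y - H @ y
    return (np.sum(w * r**2) / n) / (1.0 - np.trace(H) / n) ** 2


rng = np.random.default_rng(4)
x = np.sort(rng.uniform(0.0, 1.0, 300))
y = true_f(x) + noise_sd(x) * rng.standard_normal(x.size)
w = 1.0 / noise_sd(x) ** 2  # ASSUMPTION: inverse-variance weights
X = basis(x)
P = np.diag([0.0] * 4 + [1.0] * len(KNOTS))  # penalise knot coefficients only

lams = np.logspace(-6, 2, 17)
hats = [smoother_matrix(X, w, P, lam) for lam in lams]
scores = np.array([gcv(H, y, w) for H in hats])
traces = np.array([np.trace(H) for H in hats])  # effective degrees of freedom
best = int(np.argmin(scores))
print(f"GCV-selected lambda: {lams[best]:.1e} (effective df = {traces[best]:.2f})")
```

The trace of H(λ) falls from about 6 (unpenalized spline) toward 4 (cubic polynomial) as λ grows. GCV trades this effective number of parameters against the weighted residual sum of squares.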

Author Contributions

All authors have contributed to this research article. Conceptualization, B.L. and N.C.; methodology, B.L. and N.C.; software, B.L., N.C., D.A. and E.Y.; validation, B.L., N.C., D.A. and E.Y.; formal analysis, B.L., N.C. and D.A.; investigation, resources and data curation, B.L., N.C., D.A. and E.Y.; writing—original draft preparation, B.L. and N.C.; writing—review and editing, B.L., N.C. and D.A.; visualization, B.L. and N.C.; supervision, B.L., N.C. and D.A.; project administration, N.C. and B.L.; funding acquisition, N.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Directorate of Research, Technology, and Community Service (Direktorat Riset, Teknologi, dan Pengabdian kepada Masyarakat–DRTPM), the Ministry of Education, Culture, Research, and Technology, the Republic of Indonesia through the Featured Basic Research of Higher Education Grant (Hibah Penelitian Dasar Unggulan Perguruan Tinggi–PDUPT, Tahun Ketiga dari Tiga Tahun) with master contract number 010/E5/PG.02.00.PT/2022 and derivative contract number 781/UN3.15/PT/2022.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The authors confirm that there are no available data.

Acknowledgments

The authors thank Airlangga University for technical support and DRTPM of the Ministry of Education, Culture, Research, and Technology, the Republic of Indonesia for financial support. The authors are grateful to the editors and anonymous peer reviewers of the Symmetry journal, who provided comments, corrections, criticisms, and suggestions that were useful for improving the quality of this article.

Conflicts of Interest

The authors declare no conflict of interest. In addition, the funders had no role in the design of the study, in the collection, analysis, or interpretation of data, in the writing of the article, or in the decision to publish the results.

References

- Wahba, G. Spline Models for Observational Data; SIAM: Philadelphia, PA, USA, 1990.

- Kimeldorf, G.; Wahba, G. Some results on Tchebycheffian spline functions. J. Math. Anal. Appl. 1971, 33, 82–95.

- Cox, D.D. Asymptotics for M-type smoothing spline. Ann. Stat. 1983, 11, 530–551.

- Oehlert, G.W. Relaxed boundary smoothing spline. Ann. Stat. 1992, 20, 146–160.

- Ana, E.; Chamidah, N.; Andriani, P.; Lestari, B. Modeling of hypertension risk factors using local linear of additive nonparametric logistic regression. J. Phys. Conf. Ser. 2019, 1397, 012067.

- Chamidah, N.; Yonani, Y.S.; Ana, E.; Lestari, B. Identification the number of mycobacterium tuberculosis based on sputum image using local linear estimator. Bullet. Elect. Eng. Inform. 2020, 9, 2109–2116.

- Cheruiyot, L.R. Local linear regression estimator on the boundary correction in nonparametric regression estimation. J. Stat. Theory Appl. 2020, 19, 460–471.

- Cheng, M.-Y.; Huang, T.; Liu, P.; Peng, H. Bias reduction for nonparametric and semiparametric regression models. Stat. Sin. 2018, 28, 2749–2770.

- Chamidah, N.; Zaman, B.; Muniroh, L.; Lestari, B. Designing local standard growth charts of children in East Java province using a local linear estimator. Int. J. Innov. Creat. Chang. 2020, 13, 45–67.

- Delaigle, A.; Fan, J.; Carroll, R.J. A design-adaptive local polynomial estimator for the errors-in-variables problem. J. Amer. Stat. Assoc. 2009, 104, 348–359.

- Francisco-Fernandez, M.; Vilar-Fernandez, J.M. Local polynomial regression estimation with correlated errors. Comm. Statist. Theory Methods 2001, 30, 1271–1293.

- Benhenni, K.; Degras, D. Local polynomial estimation of the mean function and its derivatives based on functional data and regular designs. ESAIM Probab. Stat. 2014, 18, 881–899.

- Kikechi, C.B. On local polynomial regression estimators in finite populations. Int. J. Stat. Appl. Math. 2020, 5, 58–63.

- Wand, M.P.; Jones, M.C. Kernel Smoothing; Chapman & Hall: London, UK, 1995.

- Cui, W.; Wei, M. Strong consistency of kernel regression estimate. Open J. Stats. 2013, 3, 179–182.

- De Brabanter, K.; De Brabanter, J.; Suykens, J.A.K.; De Moor, B. Kernel regression in the presence of correlated errors. J. Mach. Learn. Res. 2011, 12, 1955–1976.

- Chamidah, N.; Lestari, B. Estimating of covariance matrix using multi-response local polynomial estimator for designing children growth charts: A theoretically discussion. J. Phy. Conf. Ser. 2019, 1397, 012072.

- Aydin, D.; Güneri, Ö.I.; Fit, A. Choice of bandwidth for nonparametric regression models using kernel smoothing: A simulation study. Int. J. Sci. Basic Appl. Res. 2016, 26, 47–61.

- Eubank, R.L. Nonparametric Regression and Spline Smoothing, 2nd ed.; Marcel Dekker: New York, NY, USA, 1999.

- Wang, Y. Smoothing Splines: Methods and Applications; Taylor & Francis Group: Boca Raton, FL, USA, 2011.

- Liu, A.; Qin, L.; Staudenmayer, J. M-type smoothing spline ANOVA for correlated data. J. Multivar. Anal. 2010, 101, 2282–2296.

- Gao, J.; Shi, P. M-Type smoothing splines in nonparametric and semiparametric regression models. Stat. Sin. 1997, 7, 1155–1169.

- Chamidah, N.; Lestari, B.; Massaid, A.; Saifudin, T. Estimating mean arterial pressure affected by stress scores using spline nonparametric regression model approach. Commun. Math. Biol. Neurosci. 2020, 2020, 1–12.

- Fatmawati, I.N.; Budiantara, B.L. Comparison of smoothing and truncated spline estimators in estimating blood pressures models. Int. J. Innov. Creat. Change 2019, 5, 1177–1199.

- Chamidah, N.; Lestari, B.; Budiantara, I.N.; Saifudin, T.; Rulaningtyas, R.; Aryati, A.; Wardani, P.; Aydin, D. Consistency and asymptotic normality of estimator for parameters in multiresponse multipredictor semiparametric regression model. Symmetry 2022, 14, 336.

- Eilers, P.H.C.; Marx, B.D. Flexible smoothing with B-splines and penalties. Statist. Sci. 1996, 11, 86–121.

- Lu, M.; Liu, Y.; Li, C.-S. Efficient estimation of a linear transformation model for current status data via penalized splines. Stat. Meth. Medic. Res. 2020, 29, 3–14.

- Wang, Y.; Guo, W.; Brown, M.B. Spline smoothing for bivariate data with applications to association between hormones. Stat. Sin. 2000, 10, 377–397.

- Yilmaz, E.; Ahmed, S.E.; Aydin, D. A-Spline regression for fitting a nonparametric regression function with censored data. Stats 2020, 3, 11.

- Aydin, D. A comparison of the nonparametric regression models using smoothing spline and kernel regression. World Acad. Sci. Eng. Technol. 2007, 36, 253–257.

- Lestari, B.; Budiantara, I.N.; Chamidah, N. Smoothing parameter selection method for multiresponse nonparametric regression model using spline and kernel estimators approaches. J. Phy. Conf. Ser. 2019, 1397, 012064.

- Lestari, B.; Budiantara, I.N.; Chamidah, N. Estimation of regression function in multiresponse nonparametric regression model using smoothing spline and kernel estimators. J. Phy. Conf. Ser. 2018, 1097, 012091.

- Osmani, F.; Hajizadeh, E.; Mansouri, P. Kernel and regression spline smoothing techniques to estimate coefficient in rates model and its application in psoriasis. Med. J. Islam. Repub. Iran 2019, 33, 1–5.

- Lestari, B.; Budiantara, I.N. Spline estimator and its asymptotic properties in multiresponse nonparametric regression model. Songklanakarin J. Sci. Technol. 2020, 42, 533–548.

- Mariati, M.P.A.M.; Budiantara, I.N.; Ratnasari, V. The application of mixed smoothing spline and Fourier series model in nonparametric regression. Symmetry 2021, 13, 2094.

- Wang, Y.; Ke, C. Smoothing spline semiparametric nonlinear regression models. J. Comp. Graphical Stats. 2009, 18, 165–183.

- Lestari, B.; Chamidah, N. Estimating regression function of multiresponse semiparametric regression model using smoothing spline. J. Southwest Jiaotong Univ. 2020, 55, 1–9.

- Gu, C. Smoothing Spline ANOVA Models; Springer: New York, NY, USA, 2002.

- Aronszajn, N. Theory of reproducing kernels. Transact. Amer. Math. Soc. 1950, 68, 337–404.

- Berlinet, A.; Thomas-Agnan, C. Reproducing Kernel Hilbert Spaces in Probability and Statistics; Kluwer Academic: Norwell, MA, USA, 2004.

- Wahba, G. Support vector machines, reproducing kernel Hilbert spaces and the randomized GACV. Adv. Kernel Methods-Support Vector Learn. 1999, 6, 69–87.

- Scholkopf, B.; Smola, A.J. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond; MIT Press: Cambridge, MA, USA, 2002.

- Zeng, X.; Xia, Y. Asymptotic distribution for regression in a symmetric periodic Gaussian kernel Hilbert space. Stat. Sin. 2019, 29, 1007–1024.

- Hofmann, T.; Scholkopf, B.; Smola, A.J. Kernel methods in machine learning. Ann. Stat. 2008, 36, 1171–1220.

- Johnson, R.A.; Wichern, D.W. Applied Multivariate Statistical Analysis; Prentice Hall: New York, NY, USA, 1982.

- Li, J.; Zhang, R. Penalized spline varying-coefficient single-index model. Commun. Stat.-Simul. Comp. 2010, 39, 221–239.

- Ruppert, D.; Carroll, R. Penalized Regression Splines, Working Paper; School of Operation Research and Industrial Engineering, Cornell University: New York, NY, USA, 1997.

- Tunç, C.; Tunç, O. On the stability, integrability and boundedness analyses of systems of integro-differential equations with time-delay retardation. RACSAM 2021, 115, 115.

- Aydin, A.; Korkmaz, E. Introduce Gâteaux and Frêchet derivatives in Riesz spaces. Appl. Appl. Math. Int. J. 2020, 15, 16.

- Sen, P.K.; Singer, J.M. Large Sample Methods in Statistics: An Introduction with Applications; Chapman & Hall: London, UK, 1993.

- Eubank, R.L. Spline Smoothing and Nonparametric Regression; Marcel Dekker, Inc.: New York, NY, USA, 1988.

- Speckman, P. Spline smoothing and optimal rate of convergence in nonparametric regression models. Ann. Stat. 1985, 13, 970–983.

- Budiantara, I.N. Estimator Spline dalam Regresi Nonparametrik dan Semiparametrik. Ph.D. Dissertation, Universitas Gadjah Mada, Yogyakarta, Indonesia, 2000.

- Serfling, R.J. Approximation Theorems of Mathematical Statistics; John Wiley: New York, NY, USA, 1980.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).