Video Processing from a Virtual Unmanned Aerial Vehicle: Comparing Two Approaches to Using OpenCV in Unity

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

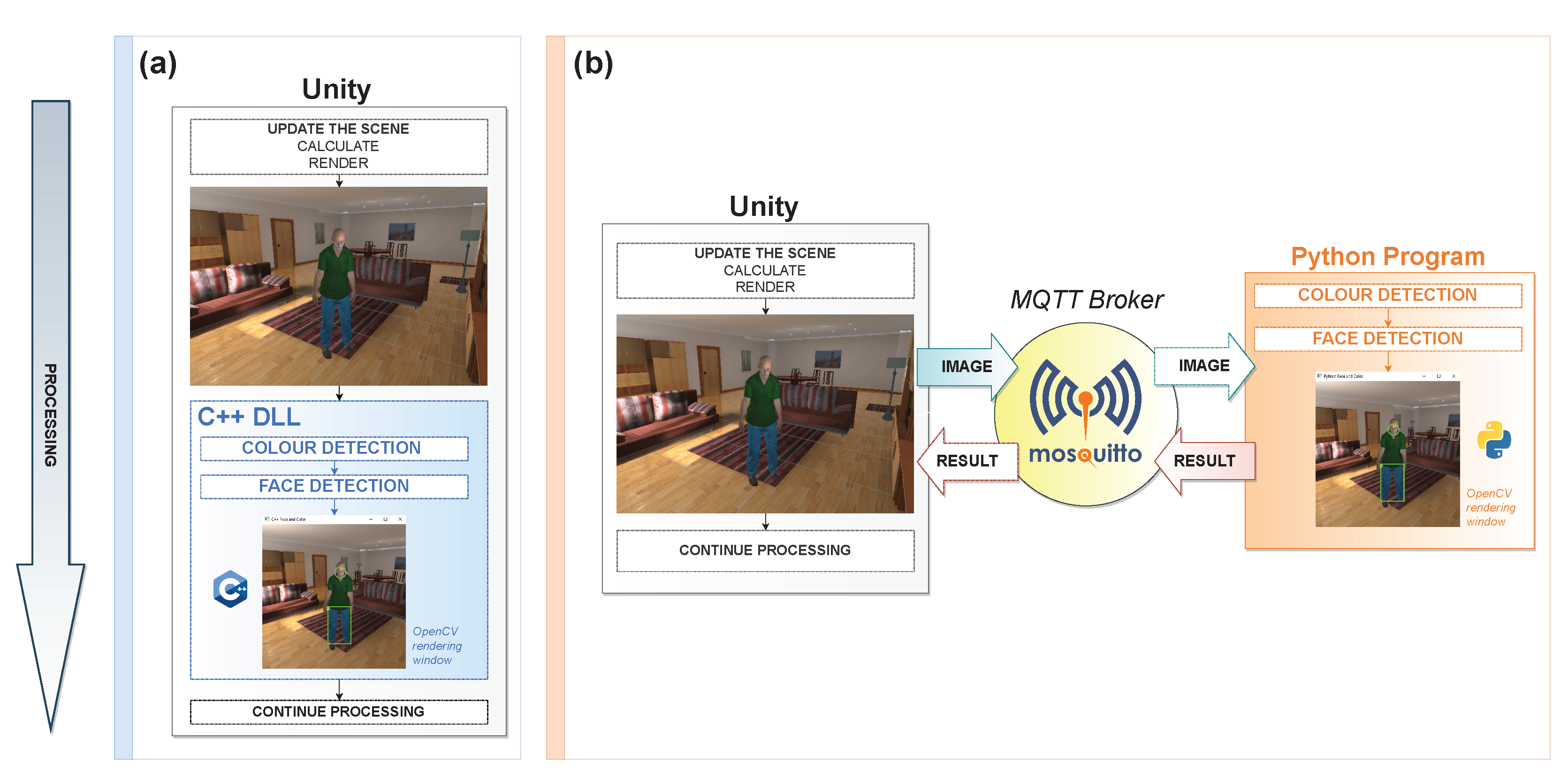

3.1. General Description of the Study

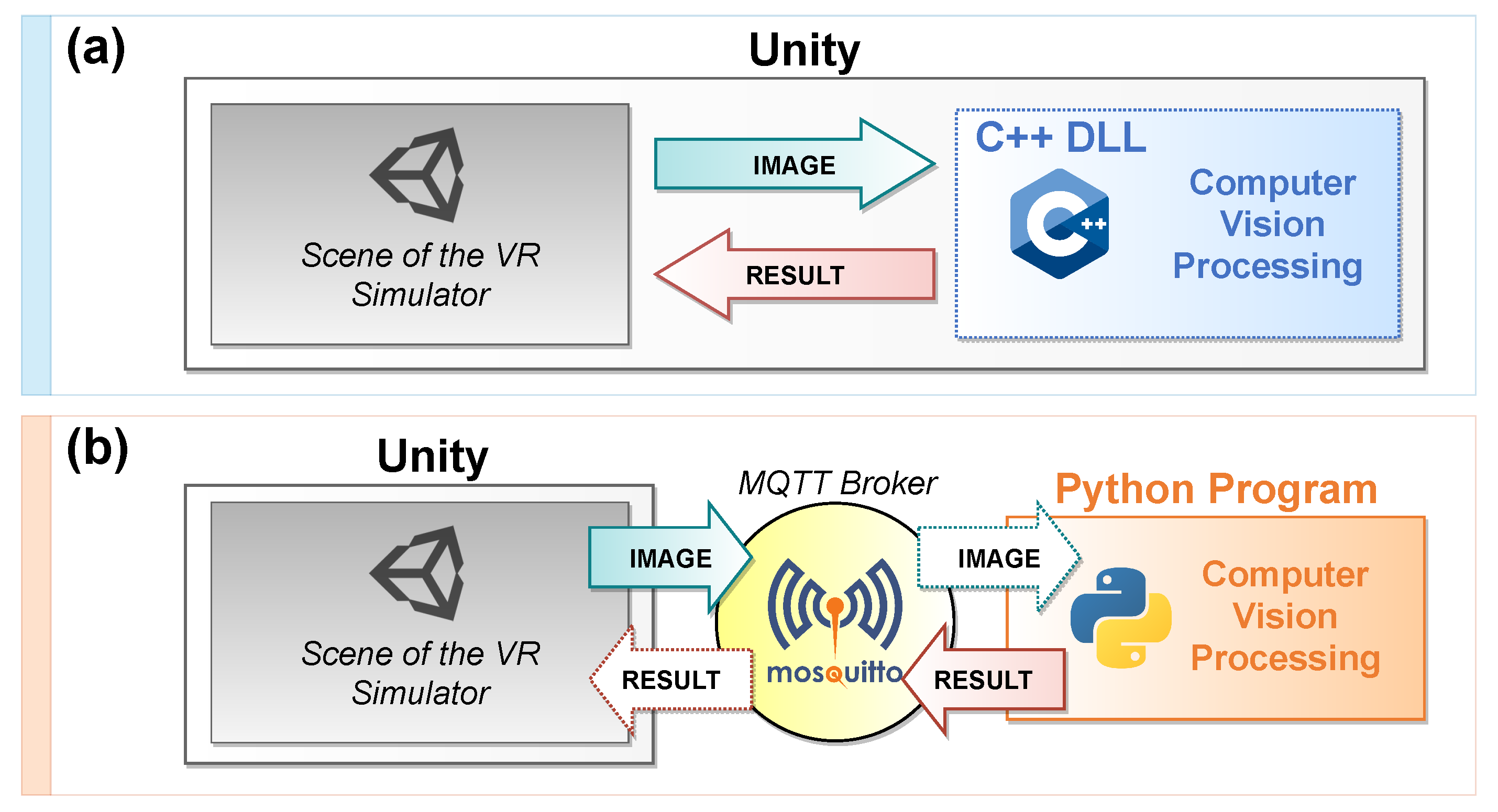

3.2. Assessment Approaches

3.3. Development

3.3.1. Development of the Native C++ Plugin

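Algorithm 1: C++ external function and pixel colour structure.

    // Same layout as Unity's Color32: four 8-bit channels in RGBA order.
    // uchar is the unsigned char typedef provided by OpenCV's headers.
    struct Color32 {
        uchar r;
        uchar g;
        uchar b;
        uchar a;
    };

    // The struct must be declared before the prototype that uses it.
    // C linkage keeps the exported name unmangled so that Unity can call it
    // from C# (assuming a Windows DLL built with MSVC).
    extern "C" __declspec(dllexport) int process(Color32* raw, int width, int height);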
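To make the interop contract concrete, the following Python sketch mirrors the Color32 structure with ctypes and checks the memory layout the plugin relies on: four unsigned bytes per pixel with no padding, so a width × height frame occupies width × height × 4 bytes. The Python class and the helper `pack_pixels` are illustrative assumptions, not part of the authors' code. Loading the compiled DLL and calling `process` is not shown, because that library is not available here.

```python
import ctypes


class Color32(ctypes.Structure):
    """Mirror of the plugin's Color32: four unsigned 8-bit channels in RGBA order."""
    _fields_ = [
        ("r", ctypes.c_ubyte),
        ("g", ctypes.c_ubyte),
        ("b", ctypes.c_ubyte),
        ("a", ctypes.c_ubyte),
    ]


def pack_pixels(pixels):
    """Build a contiguous C array of Color32 from (r, g, b, a) tuples.

    Passed to a C function, such an array is received as a Color32*,
    which is how the plugin receives the frame.
    """
    array_type = Color32 * len(pixels)
    return array_type(*(Color32(*p) for p in pixels))


width, height = 4, 3
frame = pack_pixels([(255, 0, 0, 255)] * (width * height))  # a solid red frame

# No padding: each pixel is exactly 4 bytes, so the buffer is width * height * 4 bytes.
print(ctypes.sizeof(Color32))  # 4
print(ctypes.sizeof(frame))    # 48
print(bytes(frame)[:4])        # b'\xff\x00\x00\xff': R, G, B, A of the first pixel
```

Because the layout has no padding, the plugin can index the buffer directly as `raw[row * width + col]`, or wrap it as a four-channel 8-bit image without copying.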

3.3.2. Development of the Python Program

4. Results

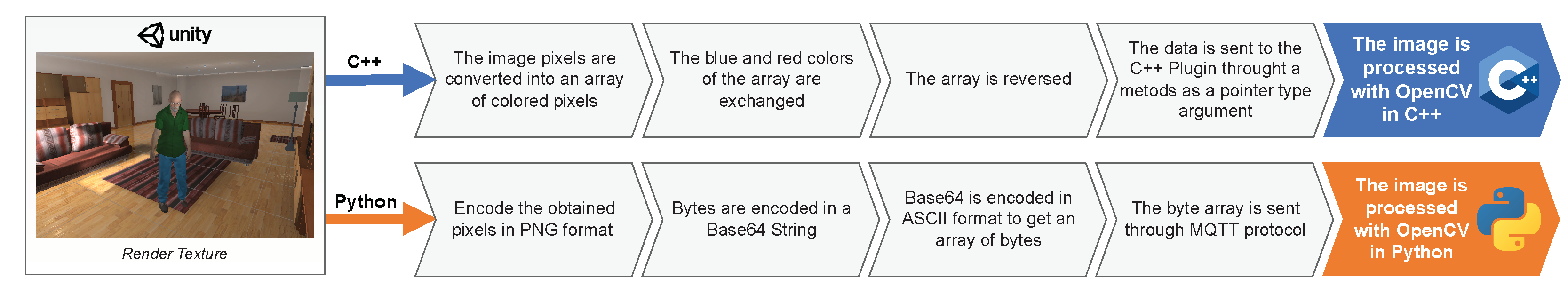

4.1. Processing Prior to Transmission

4.1.1. Data Conversion in C++

- The image is read from the active RenderTexture and stored in a Texture2D.

      RenderTexture.active = cameraTexture;
      tex.ReadPixels(new Rect(0, 0, cameraTexture.width, cameraTexture.height), 0, 0);

- The pixel array is extracted from the Texture2D object.

      Color32[] rawImg = tex.GetPixels32();

- The red and blue channels are swapped and the pixel order is reversed so that the colours and the vertical orientation of the image match what is seen in the simulator. GetPixels32() returns an array of Unity's own Color32 structure, with channels in RGBA order and rows stored from the bottom of the image upwards, whereas OpenCV works in BGR order with rows stored from the top down. If the array were passed to C++ unchanged, the plugin would therefore receive the image with red and blue swapped and upside down. Because Array.Reverse reverses the whole array rather than only the order of the rows, it also mirrors the image horizontally.

      for (int i = 0; i < rawImg.Length; i++) {
          byte r = rawImg[i].r;
          byte b = rawImg[i].b;
          rawImg[i].r = b;    // swap the red and blue channels
          rawImg[i].b = r;
          rawImg[i].a = 255;  // force full opacity
      }
      Array.Reverse(rawImg);

- The array is passed to the plugin as a pointer, together with the image width and height.

      int result = process(rawImg, width, height);
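The effect of the two transformations can be checked on a toy frame. The Python sketch below models a Unity pixel buffer as a flat list laid out row by row from the bottom row upwards, as GetPixels32() does, and compares reversing the whole array (what Array.Reverse does) with reversing only the order of the rows. The helper names are hypothetical and the 3 × 2 frame is synthetic.

```python
def swap_red_blue(pixels):
    """Return a copy with R and B exchanged and alpha forced to 255 (RGBA -> BGRA)."""
    return [(b, g, r, 255) for (r, g, b, _a) in pixels]


def as_rows(pixels, width):
    """Split a flat pixel list into rows of `width` pixels."""
    return [pixels[i:i + width] for i in range(0, len(pixels), width)]


def reverse_whole(pixels):
    """Same effect as C# Array.Reverse on the flat buffer: a 180-degree rotation."""
    return pixels[::-1]


def reverse_rows(pixels, width):
    """Reverse only the order of the rows: a pure vertical flip."""
    return [p for row in reversed(as_rows(pixels, width)) for p in row]


# Each "pixel" is labelled by where it appears on screen. Unity's buffer starts
# with the bottom row, so element 0 is the bottom-left pixel.
width = 3
unity_buffer = ["BL", "BM", "BR",   # bottom row, left to right
                "TL", "TM", "TR"]   # top row, left to right

print(as_rows(reverse_whole(unity_buffer), width))
# [['TR', 'TM', 'TL'], ['BR', 'BM', 'BL']]: upright, but mirrored left to right
print(as_rows(reverse_rows(unity_buffer, width), width))
# [['TL', 'TM', 'TR'], ['BL', 'BM', 'BR']]: upright and not mirrored
print(swap_red_blue([(10, 20, 30, 0)]))
# [(30, 20, 10, 255)]
```

An equivalent correction could instead be applied inside the plugin after wrapping the buffer in a cv::Mat, for example with cv::flip and flipCode 0 (a vertical flip). Colour blobs are found either way, but anything that depends on left and right in the image is affected by the mirror.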

4.1.2. Data Conversion in Python

- The image is read from the active RenderTexture and stored in a Texture2D.

- It is encoded in PNG format and converted to base64 text.

      byte[] bytes = tex.EncodeToPNG();
      string base64String = Convert.ToBase64String(bytes, 0, bytes.Length);

- The base64 text is converted to a byte array.

      bytes = Encoding.ASCII.GetBytes(base64String);

- The byte array is published to an MQTT topic.

      client.Publish(topic, bytes);
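On the receiving side, the Python program has to undo the base64 step before OpenCV can decode the PNG. The standard-library sketch below shows that round trip together with the size cost of base64, which encodes every 3 bytes as 4 ASCII characters (roughly a third more data on the wire). The function names are hypothetical. In a real receiver the payload would come from the MQTT client (for example, the `payload` attribute of the message passed to a paho-mqtt `on_message` callback, if that library is used), and the decoded bytes would typically go to `cv2.imdecode` after wrapping them in a NumPy `uint8` array; both steps are omitted so that the sketch runs without third-party packages.

```python
import base64
import math

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # first 8 bytes of every PNG file


def encode_frame(png_bytes: bytes) -> bytes:
    """Mirror of the C# side: PNG bytes -> base64 text -> ASCII bytes."""
    return base64.b64encode(png_bytes)


def decode_payload(payload: bytes) -> bytes:
    """Undo the base64 step on an MQTT payload and check that a PNG came out."""
    png_bytes = base64.b64decode(payload, validate=True)
    if not png_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("payload is not a base64-encoded PNG")
    return png_bytes


def base64_size(n_bytes: int) -> int:
    """Length of the padded base64 encoding of n_bytes bytes."""
    return 4 * math.ceil(n_bytes / 3)


# A stand-in for tex.EncodeToPNG(): the PNG signature followed by dummy bytes.
fake_png = PNG_SIGNATURE + bytes(range(100))
payload = encode_frame(fake_png)

assert decode_payload(payload) == fake_png
print(len(fake_png), len(payload), base64_size(len(fake_png)))  # 108 144 144
```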

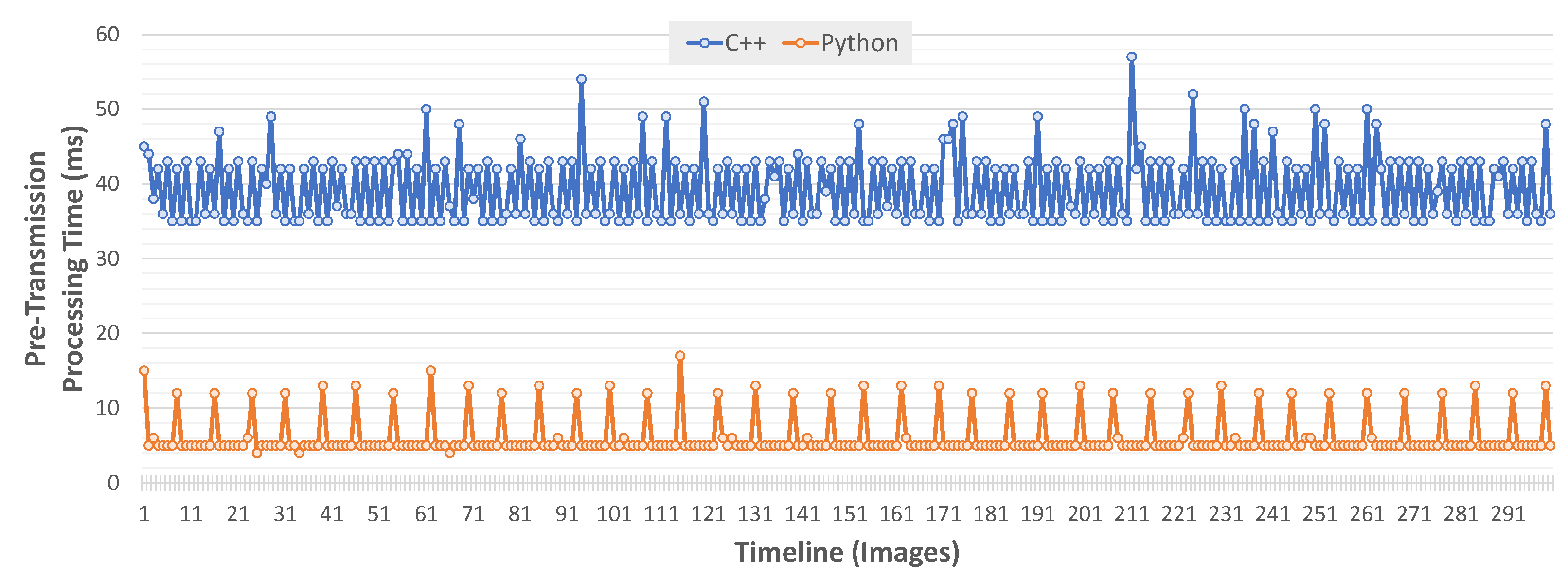

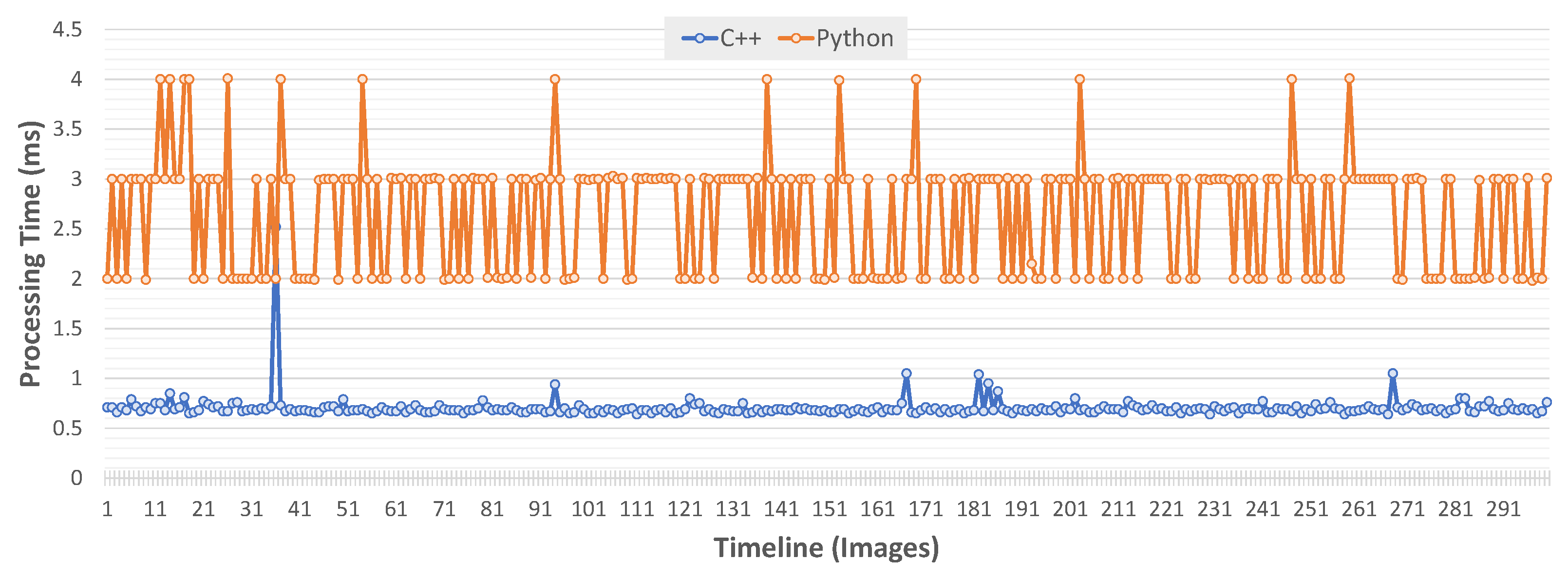

4.1.3. Performance Analysis of Processing Prior to Transmission

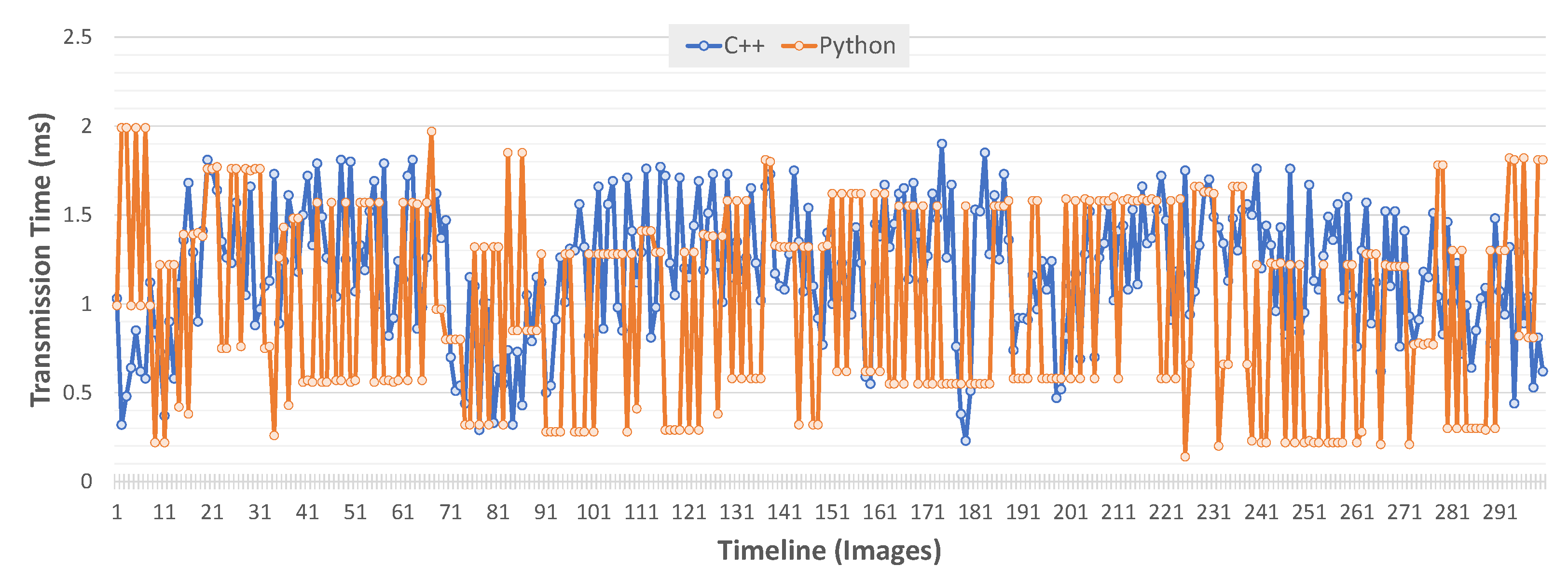

4.2. Data Transmission

4.3. Image Processing with OpenCV

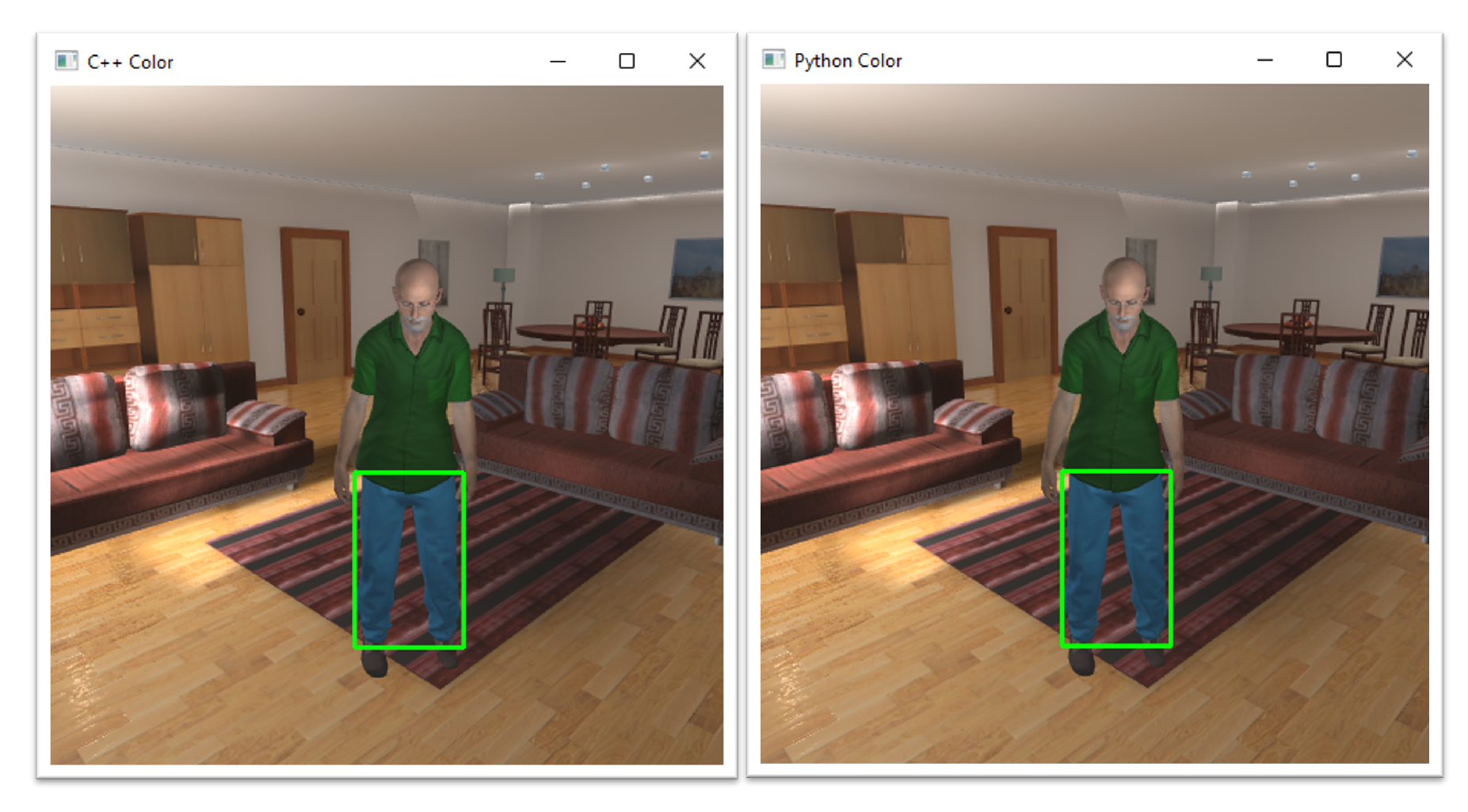

4.3.1. Colour Detection Algorithm

Algorithm 2: Colour detection algorithm in C++.

    // Assumes `using namespace cv; using namespace std;`.
    // redOnly: binary mask of the target colour; frame: the BGR image being annotated.
    // contours (vector<vector<Point>>) and hierarchy (vector<Vec4i>) are declared beforehand.
    findContours(redOnly, contours, hierarchy,
                 cv::RetrievalModes::RETR_TREE,
                 cv::ContourApproximationModes::CHAIN_APPROX_SIMPLE);
    vector<Rect> boundRect(contours.size());
    int x0 = 0, y0 = 0, w0 = 0, h0 = 0;
    for (size_t i = 0; i < contours.size(); i++) {
        double area = contourArea(contours[i]);
        if (area > 300) {  // ignore small blobs (area in square pixels)
            boundRect[i] = boundingRect(contours[i]);
            x0 = boundRect[i].x;
            y0 = boundRect[i].y;
            w0 = boundRect[i].width;
            h0 = boundRect[i].height;
            // Green rectangle (BGR order), 2 px thick, 8-connected line.
            rectangle(frame, Point(x0, y0),
                      Point(x0 + w0, y0 + h0),
                      Scalar(0, 255, 0), 2, 8);
        }
    }

Algorithm 3: Colour detection algorithm in Python.

    import cv2

    # blue_mask: binary mask of the target colour; img: the BGR frame being annotated.
    # OpenCV 4.x returns (contours, hierarchy); OpenCV 3.x returns three values.
    contours, hierarchy = cv2.findContours(blue_mask,
                                           cv2.RETR_TREE,
                                           cv2.CHAIN_APPROX_SIMPLE)
    for contour in contours:
        area = cv2.contourArea(contour)
        if area > 300:  # ignore small blobs (area in square pixels)
            x, y, w, h = cv2.boundingRect(contour)
            img = cv2.rectangle(img, (x, y), (x + w, y + h),
                                (0, 255, 0), 2, 8)
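Both listings apply the same filter: a contour is kept only if its enclosed area exceeds 300 square pixels, and it is then reduced to an axis-aligned bounding box. The pure-Python sketch below reproduces that logic for polygons given as lists of (x, y) vertices, so it can be checked without OpenCV. It uses the shoelace formula, which gives the polygon area that contourArea reports for a closed contour (OpenCV documents contourArea as using Green's formula), and an inclusive pixel bounding box, which is assumed here to match boundingRect's convention for integer points. The function names are hypothetical.

```python
def shoelace_area(contour):
    """Absolute area of a closed polygon given as [(x, y), ...] vertices."""
    twice_area = 0
    n = len(contour)
    for i in range(n):
        x0, y0 = contour[i]
        x1, y1 = contour[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x0 * y1 - x1 * y0
    return abs(twice_area) / 2.0


def bounding_box(contour):
    """Inclusive pixel bounding box (x, y, w, h) of integer vertices."""
    xs = [x for x, _ in contour]
    ys = [y for _, y in contour]
    return min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1


def filter_blobs(contours, min_area=300):
    """Keep contours larger than min_area and return their bounding boxes."""
    return [bounding_box(c) for c in contours if shoelace_area(c) > min_area]


small = [(0, 0), (9, 0), (9, 9), (0, 9)]          # area 81: discarded
large = [(50, 40), (79, 40), (79, 59), (50, 59)]  # area 29 * 19 = 551: kept
print(filter_blobs([small, large]))  # [(50, 40, 30, 20)]
```

Because the area is measured on the polygon through the contour's vertices, it is smaller than the pixel count of the blob (81 versus 100 for the 10 × 10 square above), which is worth keeping in mind when choosing the threshold.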

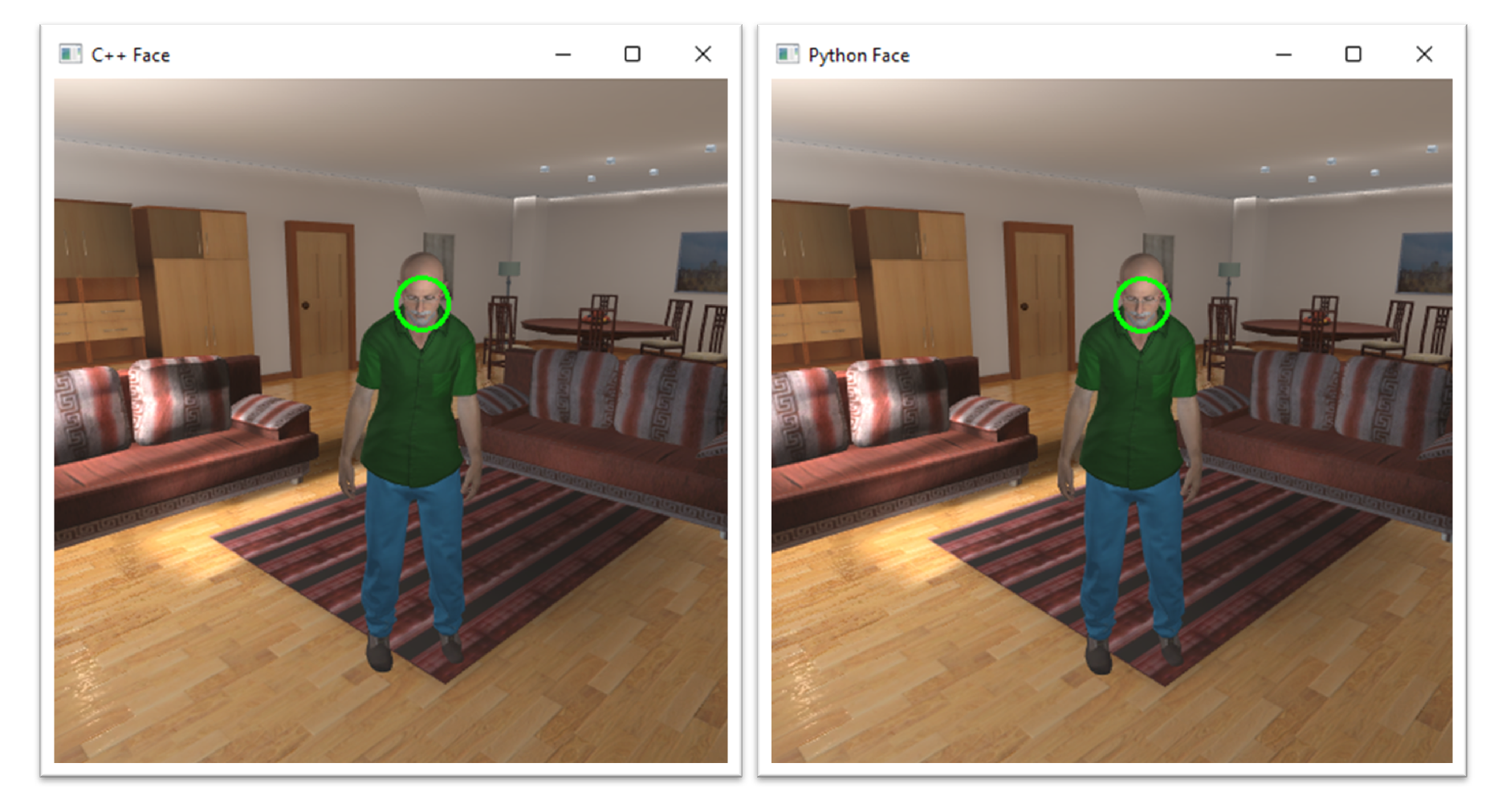

4.3.2. Face Detection Algorithm

Algorithm 4: Face detection algorithm in C++.

    // img: BGR frame; gray: Mat; faces: vector<Rect>; cascade: a loaded CascadeClassifier.
    const double scale = 1.0;  // assumed 1, as in the Python version (no resizing)
    cvtColor(img, gray, COLOR_BGR2GRAY);
    // scaleFactor 1.2 (the Python version uses 1.1), minNeighbors 3, min size 30x30 px.
    cascade.detectMultiScale(gray, faces, 1.2, 3, 0 | CASCADE_SCALE_IMAGE,
                             Size(30, 30));
    int detected = 0;
    for (size_t i = 0; i < faces.size(); i++) {
        Rect r = faces[i];
        Point center;
        Scalar colour = Scalar(0, 255, 0);
        int radius;
        // Keep only roughly square detections.
        double aspect_ratio = (double)r.width / r.height;
        if (0.75 < aspect_ratio && aspect_ratio < 1.3) {
            center.x = cvRound((r.x + r.width * 0.5) * scale);
            center.y = cvRound((r.y + r.height * 0.5) * scale);
            radius = cvRound((r.width + r.height) * 0.25 * scale);
            circle(img, center, radius, colour, 3, 8, 0);
            detected = 1;
        }
    }

Algorithm 5: Face detection algorithm in Python.

    import cv2

    # img: BGR frame; faceCascade: a loaded cv2.CascadeClassifier.
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    faces = faceCascade.detectMultiScale(gray, scaleFactor=1.1,
                                         minNeighbors=3,
                                         minSize=(30, 30))
    x1 = 0
    y1 = 0
    for (x, y, w, h) in faces:
        aspect_ratio = w / h
        # Keep only roughly square detections.
        if 0.75 < aspect_ratio < 1.3:
            # Scale factor 1: coordinates are already in frame pixels.
            # int() because cv2.circle requires integer coordinates.
            x1 = int(round((x + w * 0.5) * 1))
            y1 = int(round((y + h * 0.5) * 1))
            radius = int(round((w + h) * 0.25 * 1))
            cv2.circle(img, (x1, y1), radius, (0, 255, 0), 3, 8, 0)
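The post-processing shared by Algorithms 4 and 5 (discard detections that are far from square, then draw a circle centred on the box) is easy to isolate and test. The sketch below applies it to hypothetical (x, y, w, h) detections; `scale` plays the same role as in the C++ listing and equals 1 in the Python listing. The centre's y coordinate uses the box height, as in Algorithm 5. The function name and the sample boxes are illustrative.

```python
def face_circles(detections, scale=1.0, min_ratio=0.75, max_ratio=1.3):
    """Turn (x, y, w, h) boxes into (cx, cy, radius) circles for near-square boxes.

    A box is kept when min_ratio < w / h < max_ratio (both bounds exclusive).
    The radius is the mean of the half-width and half-height: (w + h) / 4.
    """
    circles = []
    for (x, y, w, h) in detections:
        aspect_ratio = w / h
        if min_ratio < aspect_ratio < max_ratio:
            cx = int(round((x + w * 0.5) * scale))
            cy = int(round((y + h * 0.5) * scale))
            radius = int(round((w + h) * 0.25 * scale))
            circles.append((cx, cy, radius))
    return circles


detections = [
    (100, 80, 60, 64),   # ratio 0.94: kept
    (300, 50, 120, 40),  # ratio 3.0: rejected as too wide
]
print(face_circles(detections))  # [(130, 112, 31)]
```

With `scale=2.0`, the same boxes would be mapped onto a frame twice the size of the one passed to the detector, which is how the scale factor is used when detection runs on a downscaled image.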

4.3.3. Simultaneous Run of Both Algorithms

Both Algorithms Running in the Same Program

Both Algorithms Running in Different Programs (MQTT Only)

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DLL | Dynamic-Link Library |
| FPS | Frames Per Second |
| MQTT | Message Queuing Telemetry Transport |
| UAV | Unmanned Aerial Vehicle |
| VR | Virtual Reality |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bustamante, A.; Belmonte, L.M.; Morales, R.; Pereira, A.; Fernández-Caballero, A. Video Processing from a Virtual Unmanned Aerial Vehicle: Comparing Two Approaches to Using OpenCV in Unity. Appl. Sci. 2022, 12, 5958. https://doi.org/10.3390/app12125958
