1. Introduction

Simultaneous Localization and Mapping (SLAM) systems are fundamental technologies of intelligent vehicles. Though the basic function of SLAM [

1] is to estimate trajectory accurately in unknown environment, high-quality dense mapping results not only benefit the localization performance, but also are necessary for high-level human-given tasks [

2]. Thus, in recent years, 3D dense mapping has drawn much research attention. In this paper, we focus on real-time 3D dense mapping, which means that we have to rely on odometry results to aggregate map points and generate an accurate mesh model efficiently.

In terms of odometry, there are still inherent errors that are less studied in the existing LiDAR–inertial odometry methods. In recently years, LiDAR–inertial odometry has demonstrated its superior performance to odometry based on other sensor configurations. FastLIO2 [

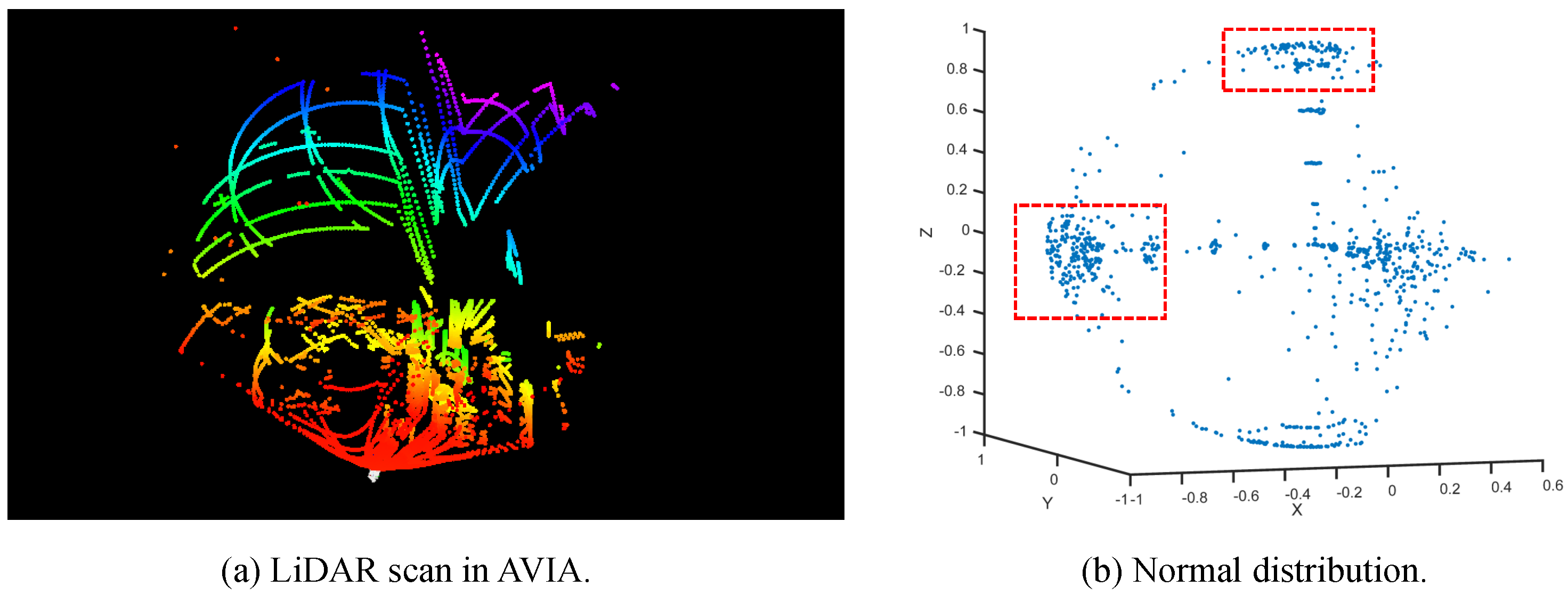

3] is the most typical and widely used LiDAR–inertial odometry method. Many novel odometry methods have been proposed based on the FastLIO2 framework. However, there remain two issues. Firstly, during the LiDAR scanning process, the inertial measurement units (IMUs) are incrementally integrated and then provide prior pose estimation for later LiDAR scan alignment. Essentially, the IMU is used to avoid LiDAR scan matching degeneration, especially in extreme environments. Odometry precision is mostly determined by the LiDAR scan-matching part. IMU-based prior constraints, scan-to-map matching, and point-to-plane registration are the three key factors for the success of FastLIO2. However, to further promote odometry precision, it is expected that the LiDAR measurements can provide rigid and enough pose estimation optimization, which requires that the LiDAR points’ corresponding residuals are spatially distributed evenly. However, such a requirement cannot be well satisfied. In most environments, the LiDAR measuremens are generally redundant in some directions, such as the ground, and are sparse in the other directions. Once the redundant observations play dominant roles during the optimization process, the optimal state estimation tends to overfit the redundant observations and thus depart from the real true state, which means that odometry precision is degraded.

Odometry is an essentially nonlinear optimization problem. Its cost function is highly nonlinear and the linearization error is unavoidable. The Kalman Filter (KF) and its variants are widely used in LiDAR–inertial odometry. However, they are constructed based on the Gaussian distribution hypothesis and aim to maximize the fused distribution of prior and posterior Gaussian distributions. Once the Gaussian distribution hypothesis is not well satisfied, the filter performance is thus degraded. The Gauss–Newton (GN) algorithm is another effective alternative for solving the nonlinear optimization problem. Though linearization errors are also unavoidable in GN, it computes the optimal iterative state based on second-order approximation, which is more robust to the Gaussian distribution approximation error. Thus, in this paper, we stress the importance of the GN algorithm in LiDAR–inertial odometry.

In terms of dense mapping, a common solution is to construct a TSDF-based implicit surface representation model [

4] and then use the marching cube algorithm [

5] to represent the surface explicitly by a set of triangle faces. For each voxel, its SDF value is defined as its nearest distance from its center points to its surface along LiDAR rays. These faces are targeted to approximate the zero-SDF-value surface. Marching cube is a well-developed algorithm; thus, the bottlenecks of dense mapping are determined by the TSDF voxel maintaining efficiency and the SDF values computing accuracy. In the existing TSDF-based mapping methods [

6,

7,

8,

9], the full 3D space is voxelized and the scale of the TSDF voxels is significantly increased with the environmental size. Since the marching cube algorithm is required to traverse all the voxels, the computational efficiency is limited in a large-scale environment. Furthermore, the SDF values are generally computed based on ray-casting mechanism, which is achieved by averaging the voxel-to-LiDAR-beam-end distances. However, given the 3D LiDAR measurements are noisy and sparse, such a mechanism is prone to errors and the surface normal information is not involved. Though a gradient field is then introduced in VoxField [

10] and significantly benefits the mapping accuracy, the computational burden is also enlarged due to gradient field maintenance and fails to meet the online requirement. Also, its gradient field is also sensitive to LiDAR points’ sparsity.

An effective way to alleviate the efficiency issue of dense mapping is to avoid processing all the voxels and focus on the voxels that are occupied. Recently, SLAMesh [

11] was proposed and it can achieve real-time dense mapping, which is most related to ours. In each occupied voxel, the authors project the points to the three coordinate directions, respectively, and approximate the surface by estimating the surface function using a Gaussian Process [

12,

13,

14]. Since they firstly sample vertices evenly on the estimated surface, triangle faces can be directly generated by connecting neighbor vertices. Then, the global mesh model is obtained by accumulating the faces. Though they significantly improve the mapping efficiency, a densely performing Gaussian Process still increases the computational cost. One important feature of SLAMesh is that the faces are generated separately in each voxel. This means that faces between neighboring voxels tend to fail to meet the smoothness requirement. Furthermore, their Gaussian Process-based surface approximation is implemented in the full voxel space. However, such a configuration tends to generate redundant surface estimation when the voxel is not fully occupied.

The marching cube algorithm has the advantage of generating a smooth, accurate, and less redundant dense surface. It is also efficient due to the application of a look-up table. Thus, in this paper, we propose a novel dense mapping system that fuses the advantages of TSDF-based mapping and the SLAMesh-like mapping paradigm. Specifically, firstly, we only perform voxelization in the occupied space and implement a voxel insert, retrieval, and update mechanism based on in C++. Then, for each voxel, we compute and update the SDF value of its center point by IMLS surface representation only using the contained map points, which can avoid estimating explicit surface function and can directly use the LiDAR points and normals to compute the voxel-to-surface distance accurately. Thirdly, we traverse all the updated voxels, and for each voxel, we formulate a cube according to its neighboring voxels. The marching cube algorithm is then performed on the cube to generate vertices and faces.

The main contributions of this paper are summarized as follows.

A residual-density-driven Gauss–Newton algorithm is proposed and applied in LiDAR–inertial odometry, which can promote pose estimation accuracy.

A novel TSDF-free dense mapping paradigm is proposed, which is more flexible and scalable than the traditional TSDF mapping paradigm. IMLS surface representation is introduced to compute SDF values accurately and efficiently, which is superior to the ray-casting-based mechanism.

We will release our code to benefit the robotics community.

The rest of this paper is organized as follows.

Section 2 presents the related work.

Section 3 gives the notations and

Section 4 presents the details of the proposed method. The experimental evaluations are shown in

Section 5.

Section 6 concludes the paper.

2. Related Work

LiDAR–inertial odometry: The first kinds of methods achieving LiDAR–inertial odometry are based on factor graphs. Such methods include LIOSAM [

15], LiLi-OM [

16], and FF-LINS [

17]. These methods incorporate feature-based LiDAR scan constraints and IMU pre-integration constraints into local and global pose graphs. Feature extraction, keyframe selection, sliding windows, and various marginalization mechanisms are then proposed in these methods to achieve better performance. Though factor graph-based methods are flexible and powerful, they are also known efficiency issues, especially when the factor scale grows. The second kinds of methods are based on filters. Such methods include FastLIO2 [

3], FasterLIO [

18], iG-LIO [

19], LOG-LIO [

20], PointLIO [

21], and Direct-LIO [

22]. FastLIO2 proposed IESKF-based scan-to-map registration and an efficient map data structure, i-kdtree. FastLIO2 has drawn much research attention due to its accuracy and efficiency performance. FasterLIO further promotes efficiency by using incremental voxels (iVox) as a point cloud spatial data structure. iVox is a novel 3D voxel data structure supporting incremental insertion and parallel approximated k-NN queries. Instead of implementing raw point-to-map registration, iG-LIO introduces the GICP [

23] registration algorithm into LiDAR–inertial odometry to achieve more robust and accurate LiDAR constraint computation. Among these methods, one common module is LiDAR scan undistortion using IMU measurements. Considering that such a process exhibits errors, PointLIO and Direct-LIO proposed a novel point-by-point LIO framework, which updates the state at each LiDAR point measurement. This framework allows an extremely high-frequency odometry output and fundamentally removes the artificial in-frame motion distortion [

21]. Furthermore, Wang et al. proposed a hierarchical LIO, which is composed of low-level LiDAR–inertial odometry based on the IEKF and high-level factor graph optimization [

24]. The third kind of method is incorporating LiDAR–inertial odometry into the mapping process. In SLICT [

25], the mapping thread maintains an octree-based global map of multi-scale surfels that can be updated incrementally. The odometry thread performs point-to-surfel registration. In LIO-GVM [

26], the authors argue that directly registering the source points to the nearest target points would generate mismatches and generating a map by directly aggregating LiDAR points is not elegant. They extend and apply VGICP [

27] in LiDAR–inertial odometry and probabilistic voxel-based map maintenance. From these methods, we can see that developing a more adaptable map data structure instead of a simple point set and incorporating novel representation into LiDAR–inertial odometry can effectively promote the system performance. Among these methods, researchers focus on alleviating the odometry degeneration by developing the odometry itself. However, there exists inherent degeneration in some environments due to the LiDAR scanning mechanism. The LiDAR sensors tend to hit more points on the ground and fewer points are hit on informative areas. Redundant and less-informative points tend to degrade any LiDAR odometry.

Dense mapping methods: KinectFusion [

28] is the first method that achieves visual-based real-time 3D reconstruction using TSDF-based environmental representation. Since it was proposed in KinectFusion, the basic TSDF-based mapping framework has been widely confirmed in the literature, which consists of TSDF-based representation, ray-casting-based TSDF updating, and marching cube-based mesh model generation. The main challenge turns out to be how to maintain the TSDF map. Then, lots of variations of KinectFusion have been proposed to alleviate the problem. BundleFusion [

29] pays more attention to global camera pose alignment and optimization. The authors directly integrate the observations into the TSDF-based map. To maintain the integration accuracy, they reintegrate the observations after the global poses are updated. Notice that the drift error is hard to tackle during the TSDF mapping process, since the map should be immediately updated once the poses are corrected; C-blox [

7] is proposed and it formulates the global map as a set of submaps and maintains the relative pose between submaps. TSDF mapping efficiency is also another important issue. In Vox-blox [

6], because the ray-casting operation in a 3D environment is time-cosuming, the TSDF updating performance is effectively prompted by the proposed grouped ray casting mechanism. To further improve the mapping efficiency, VDB-Fusion [

9] introduces an OpenVDB data structure [

30] to build a TSDF map. With the help of OpenVDB, the TSDF voxels are more efficient when extended, updated, and retrieved. Notice that the ray-casting-based TSDF value computing accuracy is limited. Lius et al. [

31] propose a novel surface normal estimation based on Gaussian confidence. Their SDF values are accurately computed by point-to-surface distance. However, their method cannot be applied in an incremental strategy. To alleviate such a problem, Vox-Field [

10] constructs and incrementally updates gradient voxels. Each TSDF value is updated considering the normal cues provided by the corresponding gradient voxels.

Recently, a novel dense mapping paradigm was proposed by the creators of SLAMesh [

11]. Instead of approximating the surface by TSDF voxels, they apply a Gaussian Process to explicitly model the surface in each occupied voxel and evenly generate vertices on the estimated surface. The mesh model can be directly obtained by connecting the vertices in an orderly manner. Though their method can be implemented in real time, there exist two critical issues compared with the TSDF-based mapping paradigm. Firstly, the Gaussian Process-based local surface model is not sensitive to the surface boundary and tends to generate redundant surfaces in free space. Secondly, in their method, vertices between voxels are not connected, which degrades the surface smoothness. In the TSDF mapping process, smoothness is achieved by neighboring cubes sharing the same TSDF voxels.

5. Experiments

We firstly evaluate our LiDAR–inertial odometry in terms of pose tracking accuracy and efficiency. Then, we evaluate the proposed dense mapping module on public datasets in terms of mapping accuracy and efficiency. For the odometry evaluation, the used public datasets include NCLT, UTBM, LIOSAM, and AVIA. We use evo (

https://github.com/MichaelGrupp/evo, accessed on 18 June 2024) tools for trajectory error evaluation and Absolute Pose Error (APE) is used. For the dense mapping evaluation, more LiDAR datesets are used, including KITTI [

33], Stevens-VLP16 [

34], and MaiCity [

35]. Some details of these datasets are presented as follows.

5.1. Datasets

The LiDAR–inertial datasets used for odometry evaluation are given as follows.

NCLT dataset (

http://robots.engin.umich.edu/nclt/, accessed on 18 June 2024): The dataset is collected using a Segway robot equipped with multiple sensors, including a Velodyne HDL-32E 3D LiDAR and an inertial measurement unit. Challenging elements including moving obstacles and long-term structural changes are captured. Since many sequences collected in different times are provided, we select several representative sequences for odometry evaluation. Ground truth poses of the dataset are also provided. For the long sequences, we only use the data in 1500 s.

UTBM dataset (

https://epan-utbm.github.io/utbm_robocar_dataset/, accessed on 19 June 2024): The dataset is collected by a robocar in the downtown (for long-term data) and suburban (for roundabout data) areas of Montbéliard in France. Two Velodyne HDL-32E 3D LiDAR sensors are equipped. Translational ground truth poses are provided using RTK. The vehicle is moving faster than that in NCLT dataset. Several representative sequences are selected for odometry evaluation. In the experiments, the left LiDAR sensor’s data are used.

LIOSAM dataset (

https://drive.google.com/drive/folders/1gJHwfdHCRdjP7vuT556pv8atqrCJPbUq, accessed on 19 June 2024): The dataset is collected by VLP-16 LiDAR. Two kinds of platforms are used. The first is a custom-built handheld device on the MIT campus. The other is collected in a park covered by vegetation, using an unmanned ground vehicle (UGV)—the Clearpath Jackal. No ground truth is provided.

AVIA dataset (

https://github.com/ziv-lin/r3live_dataset, accessed on 20 June 2024): The dataset is collected by a Livox LiDAR sensor in HKUST campus environment. It provides several end-to-end sequences without ground truth.

We perform trajectory estimation error evaluation on NCLT and UTBM datasets using the evo tool. We perform end-to-end error evaluation on the LIOSAM and AVIA datasets. End-to-end error evaluation is an effective odometry evaluation mechanism when ground truth poses are unavailable. It is used on the sequence whose start point is identical to the end point. Thus, the end-to-end errors are the odometry drift errors.

The LiDAR datasets are given as follows.

KITTI odometry dataset (

https://www.cvlibs.net/datasets/kitti/eval_odometry.php, accessed on 21 June 2024): The KITTI dataset is collected by a Velodyne HDL-64 LiDAR sensor mounted on a vehicle and widely used in the SLAM community. Ground truth poses are provided and the environments include typical outdoor elements.

Stevens-VLP16 dataset (

https://github.com/TixiaoShan/Stevens-VLP16-Dataset, accessed on 21 June 2024): This dataset is captured using a Velodyne VLP-16, which is mounted on a UGV—the Clearpath Jackal—on the Stevens Institute of Technology campus. This dataset is used for validating the robustness of the mapping methods to the LiDAR points’ sparsity.

MaiCity dataset (

https://www.ipb.uni-bonn.de/data/mai-city-dataset/index.html, accessed on 21 June 2024): A synthetic dataset generated from a 3D CAD model in urban-like scenarios. The data are collected by placing virtual sensors on the 3D cad model and, through ray casting techniques, a LiDAR scan is obtained by sampling the original mesh. Different to other datasets, the provided ground truth poses exhibit fewer errors.

The LiDAR datasets are only used for dense mapping evaluation.

5.2. Baselines

For the LiDAR–inertial odometry, the baselines are presented as follows.

FastLIO2 (

https://github.com/hku-mars/FAST_LIO, accessed on 16 June 2024): FastLIO2 is the most typical LiDAR–inertial odometry. The difference between FastLIO2 and our odometry is that we replace its IESKF with our proposed normal-density-driven Gauss–Newton algorithm to perform pose optimization. Thus, the comparison with FastLio2 can effectively demonstrate the performance of our odometry.

FasterLIO (

https://github.com/gaoxiang12/faster-lio, accessed on 16 June 2024): FasterLIO uses iVox as a spatial data structure. Pose tracking efficiency is effectively promoted with little accuracy loss. In this method, the LiDAR–inertial matching module remains unchanged compared with FastLIO2.

iG-LIO (

https://github.com/zijiechenrobotics/ig_lio, accessed on 16 June 2024): iG-LIO integrates the GICP constraints and inertial constraints into a unified estimation framework. Its efficiency is superior to FasterLIO and its accuracy is also competitive compared with the SOTA methods.

For dense mapping, though there are many existing methods; the two most representative methods are selected for comparison. Though Poisson surface reconstruction is widely used, it fails to meet the real-time and incremental update requirements. Thus, it is not included for comparison.

SLAMesh (

https://github.com/RuanJY/SLAMesh, accessed on 16 June 2024): SLAMesh is the most related work to ours and follows a common rule, which is only focusing on occupied voxels to accelerate the dense mapping efficiency. There are two important differences between SLAMesh and our method. Firstly, we use point-to-plane instead of point-to-mesh constraints during registration process. Secondly, SLAMesh uses a Gaussian Process to model the surface and generate triangle faces. In our method, we use IMLS to represent the surface and apply marching cube to generate faces.

VDBFusion (

https://github.com/PRBonn/vdbfusion_ros, accessed on 16 June 2024): VDBFusion performs TSDF-based dense mapping using the OpenVDB library, which can significantly accelerate the TSDF mapping process. However, the TSDF mapping mechanism remains traditional. We regard VDBFusion as a representative TSDF-based baseline, whose efficiency and mapping precision are superior to the traditional TSDF-based mapping methods, including VoxBlox.

5.3. LiDAR–Inertial Odometry Evaluation

5.3.1. Experimental Settings

For the baselines, we use their provided parameter files. If not provided, we formulate the parameter files and ensure that their odometry can be well initialized. In our method, for the velodyne LiDAR sensor datasets, the radius

r determining the neighbor set

in Equation (

8) is set to 0.1 and the variance

is set to 10. For the Livox sensor dataset,

r is set to 0.01. The rest of the parameters are identical to FastLIO2.

5.3.2. Comparison Study

We perform trajectory estimation error evaluation on the NCLT and UTBM datasets. The results are shown in

Table 2. We can tell that our method is superior to the baselines. In the NCLT dataset, the LiDAR is mounted on the top of the robot; thus, the ground points are redundant. In the UTBM dataset, the LiDAR is mounted on the top side of the car; thus, most points are occluded. Thus, our method achieves better accuracy improvements on NCLT compared with UTBM.

End-to-end error evaluations are also presented in

Table 3. We can tell that FastLIO2 is more stable than Faster-LIO and iG-LIO, which fail to track the pose on some LIOSAM sequences. These sequences are recorded with a customized handheld device, which has poor stability and requires the robustness of the algorithm. On LIOSAM sequences, our method can effectively reduce the drift errors. On the AVIA dataset, iG-LIO achieves the best odometry precision. This demonstrates that our method is less adaptive to the Livox sensor, since its scanning mechanism is different to Velodyne LiDARs and redundant points are not dominant. Our method is constructed based on FastLIO2, and the odometry evaluation results sufficiently demonstrate the effectiveness of our proposed density-driven GN algorithm.

5.3.3. Ablation Study

We also performed an ablation study of our method and the results are shown in

Table 4. FastLIO-GN denotes that we only replace the IESKF with the traditional GN algorithm. Prior-GN denotes that a pose prior constraint is applied without a density-driven weighting mechanism. Weighted-GN denotes that a density-driven weighting mechanism is applied while the prior constraint is not applied. In our method, both mechanisms are applied.

From the table, the following conclusions can be drawn. Firstly, directly replacing the IESKF with GN in FastLIO2 does not always obtain pose tracking accuracy improvement. The two optimizers are comparable. Secondly, applying an IMU-based prior constraint in GN can effectively promote the accuracy in most cases. Thirdly, the proposed density-driven weighting mechanism is also effective when it is applied in the traditional GN algorithm. Fourthly, the combination of a prior constraint and density-driven weighting mechanism achieves the best performance improvements.

5.4. Dense Mapping Evaluation

5.4.1. Evaluation Criterion

Three popular metrics are widely calculated with respect to the constructed global point cloud, including the TSDF error, Chamfer distance, and Reconstruction Coverage. However, a common problem of these criteria is that the global point cloud is used for both dense mapping construction and evaluation, which degrades the persuasiveness of these criteria. Thus, in this paper, we propose a novel criterion as follows.

Given a sequence of a LiDAR scan, we select one scan every two scans, and aggregate them into the global frames as the testing point cloud map

. The rest of the LiDAR scans are used for dense mapping

. The output of dense mapping methods can be regarded as a set of triangle meshes. Thus, for each point

in

, its projection distance to

is computed as

where

is the center point and

is the normal of the nearest triangle face. Then, the dense map quality is defined as the mean value and the standard variance of the projection distances of

to

.

5.4.2. Experimental Settings

The hyperparameters used in our system are presented as follows. In our paper, we use different voxel sizes for registration and dense mapping, though they can be simultaneously implemented by one common voxel set. Registration prefers to both efficiency and accuracy, and its precision is not sensitive to voxel size. Thus, we set the voxel size for registration as 1.2 m. For dense mapping, mapping precision is sensitive to voxel size; thus, we construct another

and the voxel size is set to 0.4 m.

h in Equation (

17) is set to 0.5 m. During the dense mapping process, voxels that are observed fewer than three times or contain fewer than three points are defined as unstable voxels, which will not be involved in the marching cube process. For the baselines, we use their default setting in their opened source code. In SLAMesh, the equivalent voxel size for face generation is 0.2 m. In VDB-Fusion, the TSDF voxel size is set to 0.1 m.

5.4.3. Qualitatively Comparison

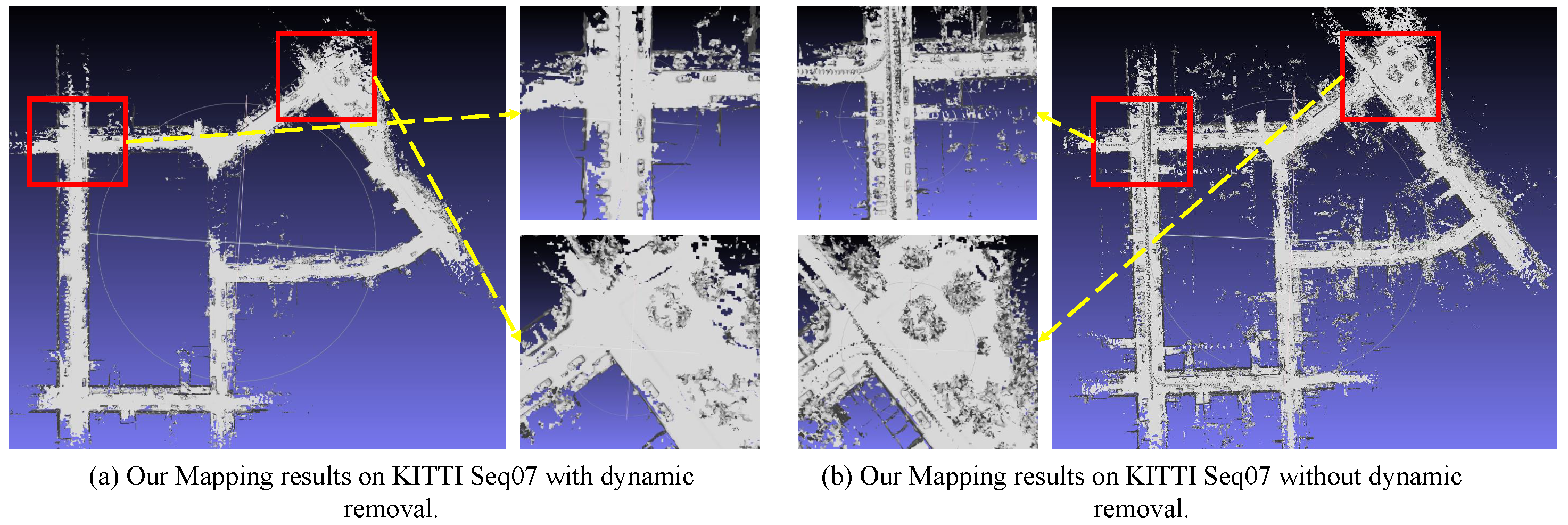

We firstly perform dense mapping on the KITTI dataset. Seq 07 is used for evaluation. The mapping results of our method with and without dynamic removal (unstable voxel removal) are shown in

Figure 4. The results demonstrate that the ghost area generated by dynamic objects can be effectively removed by filtering unstable voxels in our method. However, part of static areas, which are not sufficiently observed, are also misclassified as unstable and excluded in the final dense model. The mapping results of the baselines are shown in

Figure 5. We can tell that our dense model is superior to them in terms of mapping quality. There are lots of holes in their models. One critical issue is that we take one LiDAR scan from every two scans for dense mapping. The other scan is used for later testing. Such a mechanism means that their systems do not obtain enough LiDAR points to estimate the surface model smoothly. Both their key mechanisms, including the Gaussian Process-based surface model and TSDF computation, require a large number of points to obtain accurate surface parameters estimation. Our method, which is based on point marching cube, is more robust to the scale of LiDAR points and can generate a more accurate map model.

Secondly, we perform dense mapping on the MaiCity dataset. Seq 01 is used for evaluation. The mapping results are shown in

Figure 6. From the mapping results of SLAMesh, we can tell that there exists discontinuity between local mesh faces. The reason is that SLAMesh only performs surface estimation and face generation inside each occupied voxel. Surface estimation across voxels is neglected. In our method, face vertices are generated by interpolation on each edge according to the SDF values of cube vertices.

Thirdly, we perform dense mapping on the Stevens-VLP16 dataset. The mapping results are shown in

Figure 7. LiDAR points are sparser in this dataset and thus bring more challenges for dense mapping. Our method is still superior to the baselines.

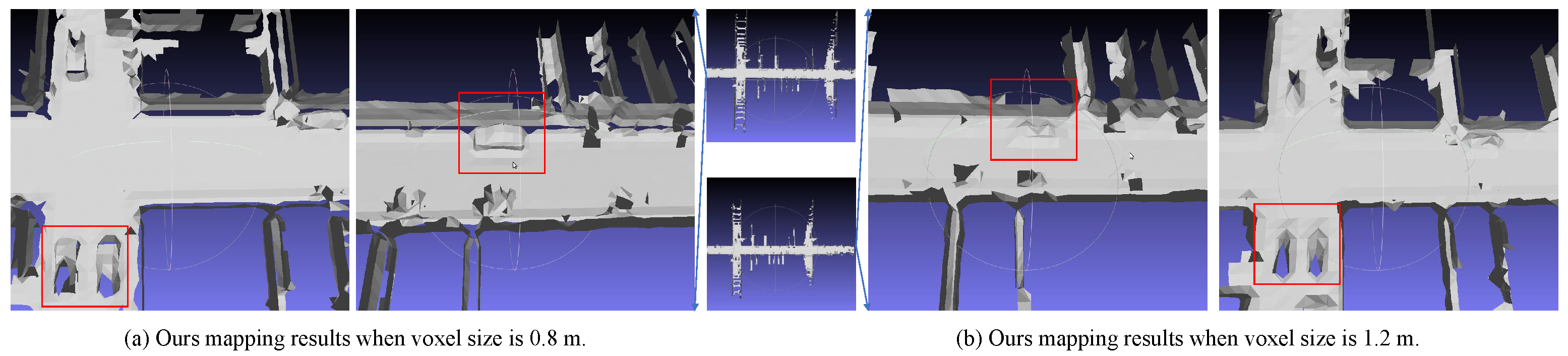

We also perform an ablation study of the mapping performance with regard to voxel size. The results are shown in

Figure 8. We can tell that the dense model is blurred when a large voxel size is set. When a large voxel is used, the generated faces within a cube tend to approximate the surface with large errors. Thus, we should set an appropriate voxel size to balance the mapping efficiency and precision.

5.4.4. Quantitatively Comparison

The quantitative evaluation results are shown in

Table 5. Our method is more precise than the baselines. The test point cloud map is constructed by aggregating the LiDAR scans collected from different viewpoints. Small errors indicate that the test point cloud map can well match the dense model. The reasons leading to a large matching error are discussed as follows. Firstly, in unstructured areas, the point cloud is unorganized. Surface approximation based on triangle face set still has errors. Secondly, due to the LiDAR points’ sparsity and noise, surface parameter estimation also still has errors, leading to wrong estimation of the zero-value surface. Thirdly, some voxels are not sufficiently activated, which leads to holes in the dense model.

Standard variance is another important index indicating the dense map quality. A large standard variance indicates that there exist a large number of testing points with large matching errors. From

Table 5, we can tell that our method is superior to the baselines in terms of the proposed evaluation criterion. Notice that this is achieved under the condition that our voxel size is larger than that in the baselines.

5.4.5. Efficiency Analysis

The computational time cost of each method on KITTI Seq 07 is shown in

Table 6. The definitions are presented as follows.

denotes the time to compute the normal of LiDAR points, which is only required in the IMLS-based algorithm in our method.

denotes the time cost to compute the overlapping voxels between the current scan and the global map.

denotes the time cost to obtain point correspondences.

denotes the Gaussian Process time cost in SLAMesh and

denotes the IMSL-based voxel SDF maintenance.

denotes the registration time cost.

denotes the time cost of updating the global map. These modules are implemented every frame.

denotes the time cost to generate the global mesh model, which is implemented every 20 frames. It is also highly related to the scale of the environment.

In terms of the , our method is more efficient. Firstly, instead of using point-to-mesh-based registration, we use distribution-based registration. Thus, we only need to compute the voxel correspondence between the current scan and global map. Thus, and only take up little time cost in our method, while SLAMesh spends more time on the point matching process.

However, in terms of , our method uses more time to perform pose estimation optimization, which indicates that the voxel-based constraints are less rigid than point-based constraints and thus makes the optimizer take more time to converge to the optimal solution. In SLAMesh, in terms of , Gaussian Process-based surface estimation is time-consuming. In our method, the IMLS-based surface representation is implicit and we only update the SDF values of the voxel center. Thus, in our method is less than . Generating the faces of the global map is still time-consuming given the vertices have been estimated. In our method, we traverse all the occupied voxels, formulate a cube, and implement the marching cube algorithm. In SLMesh, all the occupied voxels are traversed and the vertices formulating faces according to a certain rule in each voxel are directly connected. In VDBFusion, the main process is to integrate the LiDAR points into the global TSDF map and update the TSDF voxels using the ray casting algorithm.

Thus, we only present the final averaged time cost of VDBFusion per frame. We can tell that our method is superior to the baselines in terms of average efficiency.

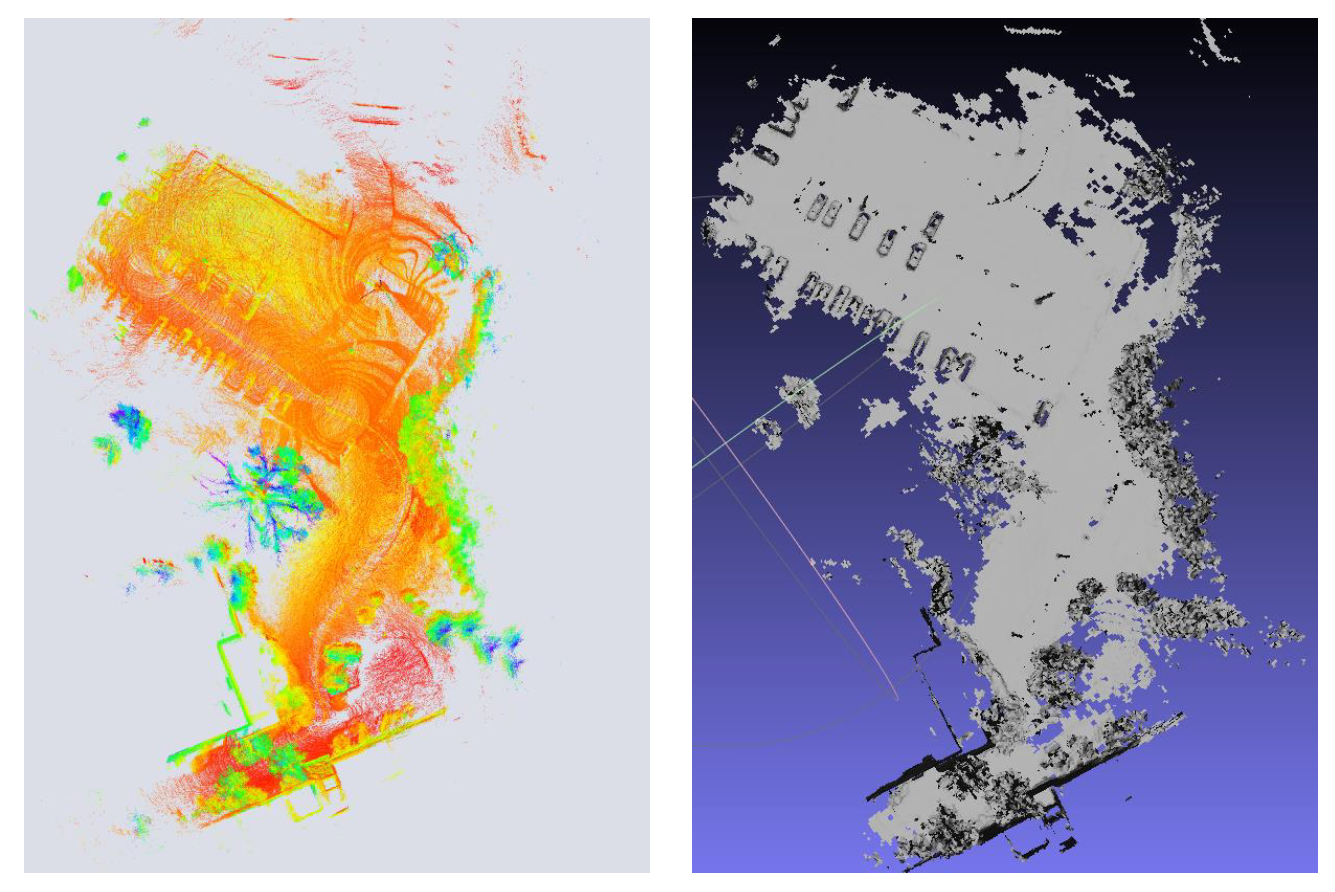

5.5. Full System Evaluation

Since we have validated the LiDAR–inertial odometry and TSDF-free dense mapping module, we incorporate them into a full system. The mapping result on the NCLT-20230110 sequence is shown in

Figure 9. The two modules are running in different threads. LiDAR–inertial odometry advertises undistorted globally registered LiDAR scans and estimated poses. The mapping module takes the undistorted globally registered LiDAR scans and updates the mesh map in real time. Quantitative evaluation of the mapping quality is presented in

Table 7. We can tell that the mapping quality can be effectively promoted by replacing FastLIO2 with our odometry.