Abstract

Large Language Models (LLMs) are becoming increasingly adopted in various industries worldwide. In particular, there is emerging research assessing the reliability of LLMs, such as ChatGPT, in performing triaging decisions in emergent settings. A unique aspect of emergency triaging is the process of trauma triaging. This process requires judicious consideration of mechanism of injury, severity of injury, patient stability, logistics of location and type of transport in order to ensure trauma patients have access to appropriate and timely trauma care. Current issues of overtriage and undertriage highlight the potential for the use of LLMs as a complementary tool to assist in more accurate triaging of the trauma patient. Despite this, there remains a gap in the literature surrounding the utility of LLMs in the trauma triaging process. This narrative review explores the current evidence for the potential for implementation of LLMs in trauma triaging. Overall, the literature highlights multifaceted applications of LLMs, especially in emergency trauma settings, albeit with clear limitations and ethical considerations, such as artificial hallucinations, biased outputs and data privacy issues. There remains room for more rigorous research into refining the consistency and capabilities of LLMs, ensuring their effective integration in real-world trauma triaging to improve patient outcomes and resource utilisation.

1. Introduction

Trauma represents approximately 12% of the global burden of disease, affecting more than one billion people and accounting for over six million deaths per year [1]. Trauma care seeks to triage patients by considering severity and mechanisms of injury, type of accessible transport, time from trauma centre and patient stability. The trauma triaging process therefore allows for early planning, rapid life-saving trauma care and appropriate escalation of trauma patients to reduce morbidity and mortality [2,3]. This involves two aspects: pre-hospital assessment and care, followed by emergency trauma escalation and activation [1,3,4,5,6]. The establishment of trauma centres with standardised criteria for trauma team activation (TTA) are central to facilitating optimised emergency trauma care in this way [4,7,8,9,10]. These triage protocols are set in place to rapidly identify patients that require urgent care based on the severity of their condition to prioritise early medical intervention and transport to the highest appropriate level of care [2,8]. Despite improvements in the systematic approach of assessing trauma patients, high rates of trauma-related morbidity and mortality persist [1,4]. Correct identification of trauma patients with time-critical injuries remains a challenge, with issues related to the undertriaging of cases, which is associated with delays in diagnosis and treatment and higher rates of trauma-related mortality [3]. Furthermore, challenges with overtriage exist and result in resource overuse and unnecessary strain on trauma and emergency services [2,4]. Current studies suggest the accuracy of pre-hospital triaging to be 55% and hospital triage rates between approximately 60% and 80% [2,11,12,13].

The advent of generative artificial intelligence (AI) technologies such as Large Language Models (LLMs) have led to a greater ability to manipulate big data and increase productivity in many industries including education, finance and technology. In particular, commercial open-access LLMs such as ChatGPT and Google Gemini (previously Google Bard) have significantly reduced accessibility and cost barriers to contemporary LLM technology [2,14]. Chat-based LLMs have a wide capacity to perform natural language processing (NLP) tasks and rapidly generate contextually relevant responses [2,15]. The capacity to do so has garnered interest for the use of LLMs within healthcare for assistance with diagnosis, risk prediction, auditing, research and clinical decision-making [2,15,16,17,18]. In the context of trauma, the ability to rapidly deal with unstructured data offers the potential application for LLMs to be a complementary tool to assist with accurate trauma triage. However, the use of these tools for this purpose remains poorly characterised. Interestingly, preliminary research in emergency triage suggests LLMs may allow for more increased efficiency in timely triaging, resource allocation and accuracy [14]. This narrative review evaluates the current landscape of research surrounding the use of LLMs as a tool for assisting trauma triage and explores the potential for its implementation.

2. The Current Landscape of Trauma Triage Protocols

Trauma triage describes the process where patients who have sustained traumatic injuries are stratified into different categories based on the severity of injury, mechanism of injury, expected resources required for management and type of trauma care escalation expected to be required [19]. The primary goal of this process is to prioritise immediate care for patients with life-threatening conditions, while allowing those in less critical states to be managed appropriately within the constraints of available resources. In many countries, a standardised triaging system, dictated by evidence-based guidelines, is used to provide direction and support to clinicians in providing effective and timely allocation of trauma-based resources [20]. This is particularly important due to the time-sensitive and precarious nature of traumatic injuries [10]. In Australia, the escalation of a patient in a trauma centre with potential severe injuries requires either a trauma alert or a trauma code (also known as trauma call), which aims to swiftly mobilise a multidisciplinary team of healthcare professionals to ensure that patients receive immediate and coordinated life-saving trauma care [21,22,23]. Criteria for the type of escalation include consideration of mechanism of injury and vital signs of the patient [21,22,23]. For hospitals that are not trauma centres, particularly those in rural and remote settings, similar trauma-based triaging systems are often adopted. In addition, trauma triaging in this way also allows health services to allocate resources more effectively, such as through dictating whether a trauma team is required and whether other resources such as theatre, blood banks and anaesthetic or intensive care supports are required to be on standby [10,24].

2.1. Current Trauma Triaging Systems

There are various triage systems implemented around the world, with the unified goal to provide effective and timely trauma care whilst optimising resource allocation [25]. In Australia, triaging is performed by a multidisciplinary team. The first responders are typically paramedics who are called to the scene and perform an initial assessment of the severity of the traumatic injuries, condition of the patient, vital signs and mechanism of injury. This information is communicated to the hospital through standardised frameworks such as MIST (mechanism, injuries, signs and symptoms, treatment), where an emergency department (ED) triage nurse, navigator or ED doctor determine whether a TTA is required based on the Australian Triage Scale. The latter notifies the multidisciplinary trauma team that conducts a primary and secondary survey for the concurrent assessment and management of traumatic injuries [21,22,23]. Similar processes occur in the United States of America (USA) with emphasis on mechanisms of injury and vitals, and the additional consideration on the mode of transport. There is great reliance on a tiered approach, from levels I to III based on severity. Level III involves less severe mechanism, single system injuries, and other physiological parameters not meeting the criteria for levels I and II. Level II involves patients suffering moderate injuries such as penetration of distal limbs, less severe burns, with GCS between 9 and 14. Level I indicates the utmost urgency and resource required to respond to patients, with severe penetrating injuries to critical areas, physiological parameters indicating unstable conditions prone to deterioration and special cases such as pregnancy over 20 weeks. Furthermore, there is an additional element of TTA based on the estimated time of arrival compared to Australia [26]. There are well-known disparities in the health outcomes between rural areas and metropolitan centres. This can be attributed to delayed access to definitive trauma care, transportation limitations and resource availability. In rural centres, triaging often involves initial assessment and stabilisation before further clinical decisions regarding further care or transfer. There is a prioritisation of stabilising patients and coordinating with transfer services to urban centres for more advanced capabilities [27]. Furthermore, standardisation of trauma triaging guidelines becomes more ambiguous in rural and remote settings, where access to appropriate trauma resources remains a challenge [27].

The most common approach in the USA used for patients aged 8 years old and above is the Simple Triage and Rapid Treatment (START) triage system, taking into account the patient’s vital signs, bleeding and capability to follow instructions [28]. A common triaging algorithm for children is the JumpSTART triage system, which further accounts for the likelihood of children suffering from respiratory failure and inability to follow verbal commands [29]. Other trauma triage systems include the Emergency Severity Index (ESI), a five-tier system which considers the stability of vital signs, resources available, time to disposition and level of distress, with the first question being a clinical judgement for triage nurses to seek whether a patient requires immediate life-saving interventions based on the vital signs [30]. Furthermore, the SALT triage system (Sort, Assess, Life-saving interventions, and Treatment/Transport) is another method used in emergency situations to stratify patients and is based on the severity of their injuries and the immediacy of the care required. The SALT triage system is similar to the START triage system but includes additional steps and considerations that are more comprehensive and adds life-saving interventions during the triage phase [31]. SALT is mainly utilised to divide patients up into five broad categories: immediate, expectant, delayed, minimal or deceased. This is based on an initial assessment of the patient’s ability to ambulate. Those unable to walk are then assessed for their response to instructions [32,33]. The Australasian Triage Scale is a system used in most ED settings in Australia, featuring a five-level triaging scale incorporating the patient’s appearance, physiological findings and presenting complaints [25,34]. The triaging levels range from category 1 (life-threatening trauma and requires immediate treatment) to category 5 (less urgent with max wait time up to 120 min) [35]. The Canadian Triaging System similarly contains five categories based on symptoms, with one as the most unstable patients needing immediate medical treatment and descending in severity to level five with non-urgent concerns [35]. Essentially, various triage systems are incorporated into emergency situations worldwide with the primary goal of systematic escalations of patients based on clinical severity and immediacy (summarised in Table 1).

Table 1.

Overview of trauma triaging systems.

2.2. Limitations of Current Trauma Triaging Guidelines: Over-Triaging and Under-Triaging

Despite the implementation of structured trauma protocols, challenges in overtriaging or undertriaging of trauma patients persist. Undertriaging is defined as the failure to detect severe injury, which compromises timely diagnosis and medical intervention. This severely increases the risk of adverse outcomes and mortality [36,37,38]. A study by Dinh et al., which included 11,398 severely injured patients, found that 34% of patients were undertriaged, of which 11% died [39]. Additionally, another study conducted by Schellenberg et al. found that 16% of patients in a population of 1423 routine trauma consults were undertriaged, of which 14% of patients were identified as high-risk undertriaged patients resulting in a 44% mortality rate [40]. Overtriaging is defined as the overestimation of less time-critical conditions. A retrospective observational study by Cameron et al., found that rates of overtriaging were 68% in Royal Darwin Hospital. The authors deemed that criteria based on factors including mechanism of injury decreased accuracy and resulted in over half of overtriage cases [24]. Overtriaging results in poor resource allocation and increases overall healthcare costs [41,42]. Newgard et al. conducted a study on 122 hospitals in the USA involving 301,214 patients and found that 34.3% were still transported to major trauma centres despite being low risk, costing the healthcare system 136.7 million dollars annually [42]. In addition, overtriaging can overcrowd trauma centres and therefore secondarily jeopardise patient outcomes, with a review showing a direct correlation between overtriaging and mortality rates in severely critical patients [43].

In North America, the Field Triage Guidelines advocate that rates of undertriaging should ideally be no more than 5% and overtriaging should be between 25% and 35% [44]. However, many studies have shown rates of undertriaging to range from 1.6% to 72% and rates of overtriaging ranged from 9.9% to 87.4% [44]. Specifically in Australia, between 2004 and 2008, the rates of undertriaging and overtriaging have been shown to be 42% and 21% [45]. In 2011–2012, the rates of undertriaging and overtriaging have been shown to be 12% and 77% in a study conducted in a New South Wales trauma centre [39]. Other studies have shown that hospitals worldwide have reported their undertriaging rates to be 30% and overtriaging rates to be as high as 71% [46,47,48,49]. In essence, despite clearly established and standardised guidelines for trauma triage and trauma team activation, there remains significant issues with accurate assessment and implementation of these guidelines in practice.

The importance of correct trauma triaging is crucial, as trauma is the leading cause of death for those aged 46 or younger, and the significant increase in trauma-related mortality in all age groups [50]. In a review conducted by Choi et al. outlining the impact of trauma triaging on patient outcomes, patients with moderate to severe injuries had significantly lower mortality rates when triaged correctly to trauma centres [50]. Furthermore, despite the rates of overtriaging and undertriaging not currently satisfying the benchmark of 5% for undertriaging and 25–30% for overtriaging, the incidence of patients treated in the ED is likely to rise due to the increasing number of patients with chronic and progressive diseases or involved in accidents [36]. A retrospective study by Tomas et al. analysed the trends of traumatic injury through an eight-year period, with 5,630,461 patients included in the classification, and outlined a significant increase in the incidence of global traumatic injury over the past 40 years [51]. Therefore, there is a need to improve the accuracy of trauma triaging to alleviate the burden of trauma on healthcare and reduce the rates of trauma-related disability and mortality [52].

3. Overview of Generative Artificial Intelligence and Large Language Models

Since its inception in 1956, AI technologies have advanced exponentially and now appear routinely in everyday life [53]. They have become widely accessible and integrable into products, workflows, and machinery. Possessing numerous applications, AI can be seen automating processes requiring speech recognition, visual identification, decision-making, and text analysis [54].

3.1. Defining Large Language Models

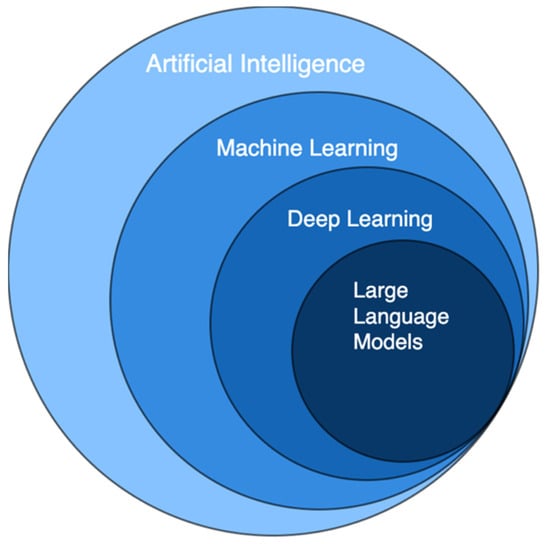

AI is broadly defined as the development of intelligent computer systems that are able to perform tasks in which human intelligence is typically required, imitating human decision-making processes and behaviours [55]. To accomplish this, different approaches and algorithms have been explored for various applications. For example, discriminative AI models utilise algorithms such as logistic regression and decision trees to identify and classify input data, whereas generative AI makes use of neural network models such as Generative Adversarial Networks (GAN) and transformers to generate new data [56]. Machine learning, a subset of AI, aims to develop systems that can learn and provide improved outputs from exposure to additional data over time, without being explicitly programmed to do so [57]. That is, machine learning models make predictions and decisions on new, unseen data, based on previously seen data that the model was trained on. Deep learning is a more advanced form of machine learning, in that it requires less human intervention. Where machine learning techniques usually necessitate manual human labelling of data prior to training, deep learning algorithms are able to extract relevant features from data automatically [58]. LLMs are a specific application of generative AI that exist as a subset of deep learning models [59]. Figure 1 visualises the AI taxonomy, in which machine learning, deep learning and LLMs are subsets of their former [60].

Figure 1.

Overview of artificial intelligence, which broadly defines intelligent computer systems. Large language models are types of deep learning models, which are subsets of machine learning models.

Where generative AI encompasses a wide range of applications, such as music composition and image generation, LLMs are specifically designed for NLP tasks—the analysis and synthesis of human language and speech [61]. LLMs are trained extensively on large corpuses of text data, learning the intricate relationships between words, and the patterns, semantics and grammar of human language [59]. These datasets may be compiled from a myriad of sources, including literary works, online forums, and web pages, and so the sizes of these datasets are vast. Ultimately, LLMs are able to provide coherent and relevant text outputs based on given prompts or inputs.

3.2. Accessible Large Language Models

A number of LLMs have been developed to be publicly available as commercial chat-based interfaces. In particular, the introduction of ChatGPT (OpenAI) in 2022, among similar developments such as Gemini (Google), has enabled the widespread use of large-scale, chat-based generative AI applications in virtually every field. Generative Pre-trained Transformer (GPT) uses LLMs specifically designed for NLP tasks such as translation, summarisation and sentiment analysis [59]. This was pre-trained on a web-scale corpus of publicly available data before being post-trained using reinforcement learning with human feedback [62]. Having over 175 billion input parameters, ChatGPT is able to act as a chatbot with the ability to understand the patterns and syntax of human language, processing complex requests and generating equally complex responses [63,64]. OpenAI provides an application programming interface (API) with which users can pre-train a chatbot similar to ChatGPT using their own specialised data. In addition to this, other models such as Retrieval-Augmented Generation (RAG) may be incorporated with ChatGPT’s base LLMs to introduce external data, producing more knowledgeable and specific responses [65]. When the task becomes specialised and distinct, such as triaging patients in hospital settings, these methods prove to increase the accuracy and consistency of ChatGPT performance [2]. LLMs have become widely accessible tools; their flexible nature assists businesses across multiple fields to improve the quality and timing of specific products and services.

3.3. Artificial Intelligence and Large Language Models in Healthcare

In healthcare, the prevalence of protocols enables AI models to be useful in diagnostics, monitoring and hospital administration among other processes in healthcare service delivery due to AI’s tremendous pattern recognition capability [54]. Radiographs, histology, and medical records can all be analysed by AI models, which act as second opinions in the process of diagnosis and management [66]. For these reasons, there are several examples of AI being applied in medical sciences, outside of and to a greater extent than chat-based LLMs like ChatGPT. While AI in general was not specifically designed for use in medicine, studies reveal promising results and a potential for these tools to be integrated into clinical practice in the near future [67].

3.3.1. The Role of Artificial Intelligence and Large Language Models in Imaging Based Diagnosis and Prognosis

Deep learning models have been trained to perform various imaging-based diagnosis and prognosis tasks with good outcomes. In 2016, researchers at Google trained a deep learning model using 128,175 fundoscopy images for the purpose of diabetic retinopathy diagnosis and prognosis [68]. Their model demonstrated high specificity and sensitivity for detecting referable retinopathies in shorter times, demonstrating its efficiency. Moreover, the model was able to detect diabetic retinopathy in early stages due to its ability to analyse images at pixel level; a task difficult for the human eye [69]. Another application of image-based diagnosis is in skin cancer detection. Mehr and Ameri demonstrated the viability of a deep learning model in skin cancer diagnosis [70]. Winkler et al. explored the benefits of human-machine cooperation and found that their market-approved model alone achieved higher diagnostic accuracy than dermatologists alone [71]. Furthermore, when dermatologists integrated the model to assist with their decision-making, their diagnostic sensitivity and specificity significantly improved. ChatGPT-4.0’s image evaluation feature has been studied in electrocardiography assessment. Günay et al. demonstrated its ability to outperform emergency medicine specialists as well as cardiologists [72]. Thus, with new developments in its technology, ChatGPT has potential to perform outside of solely text-based applications.

3.3.2. The Role of Large Language Models in Enhancing Clinical-Decision Making and Diagnostic Accuracy

ChatGPT and other LLMs have been heavily studied in healthcare settings in their capacity to assist clinicians with decision-making and diagnosis [73]. A study by Barash et al. demonstrated ChatGPT-4.0’s ability to consistently recommend the correct imaging referral type for real-world emergency cases, as well as provide appropriate radiology referrals and protocols [74]. With prompts provided about maxillofacial trauma patients, ChatGPT is able to assist in triaging and recommending appropriate referrals [15]. Delsoz et al. obtained a high diagnostic accuracy using ChatGPT-4.0 to diagnose corneal eye diseases from text-based prompts [75]. LLMs have proven able to provide highly accurate assistance in medical decision-making across multiple subspecialties, including hand surgery, plastics and general practice [76,77,78]. These decisions include synthesising differential diagnoses, generating treatment plans, and making suitable referrals [79]. In clinical practice, Goh et al. showed that physicians were willing to change their initial clinical impressions following LLMs assistance, thus improving their clinical decision-making accuracy [80]. Similarly, GPT-4.0’s high diagnostic accuracy in ED settings underscores its potential to be used as a supplementary tool by emergency physicians [81,82]. The high accuracy of responses in various studies demonstrates the possibility of LLMs to augment clinical decision-making.

3.3.3. Enhancing Patient Communication and Education with Large Language Models

Outside of hospitals and clinics, chat-based AI applications have been proposed to act as doctor–patient interfaces. These studies suggest that these models can provide reliable sources of information, in the absence of real professionals, to patient concerns and queries [83,84]. As generative AI models are designed to produce complex, coherent responses, the challenge lies in the extent of their pre-trained medical knowledge and understanding [85]. A recent cross-sectional study examined ChatGPT’s ability to answer patient questions on the public social media forum Reddit [83]. Evaluators preferred a majority of responses generated by ChatGPT over physician responses, surprisingly with regard to empathy as well. Similarly, it was able to provide appropriate recommendations for perioperative questions and concerns, carpal tunnel syndrome, and subdural haematomas [83,86,87]. Further exploration of this technology is justified, as exemplified benefits include lowering physician burnout, improving patient outcomes, and increasing public health literacy [85,88].

3.3.4. The Impact of Large Language Models on Academia and Research in Healthcare

In academia, the use of LLMs in medical education and research have been highly studied. For example, a study by Breeding et al. demonstrated that medical students prefer articles written by ChatGPT over evidence-based surgical textbooks [89]. These articles were on the pathogenesis, diagnosis and management of five common surgical pathologies; ChatGPT wrote clearer and more organised articles, whereas evidence-based articles were rated as more comprehensive. Additionally, students have shown significantly greater levels of interest in education and self-directed learning when participating in chatbot-based educational programs compared to typical non-synchronised pre-test and post-test programs [90]. In certain studies, ChatGPT-4.0 was demonstrated to achieve higher scores than medical students on medical examinations and so is able to be utilised as a tool pre-test to evaluate the validity of examination questions [91,92,93]. With research, ChatGPT is able to quickly analyse, summarise and interpret large amounts of data [94]. It also provides valid and meaningful feedback when peer reviewing papers, complementing human peer reviews and enhancing efficiency in the editorial process [95,96]. In a comparison of ChatGPT as a statistical analysis tool against traditional biostatistical software on pandemic data, Huang et al. demonstrated its ability to improve efficiency and user-friendliness [97]. Overall, LLMs are a powerful complementary tool in epidemiological research. Furthermore, they excel at text-based tasks, improving engagement with education and streamlining research processes, which make them highly useful in processing triaging information.

4. The Role of Large Language Models in Trauma Triaging

Effective triaging is a high-pressure task that requires swift decision-making and can be complicated by complex, diverse presentations [98]. The rationale for the use of LLMs to assist in trauma triaging is extensive and has garnered interest for its use in this space. Despite this, there remains a paucity of evidence to advocate for the use of these tools in real-world trauma triaging. Nonetheless, through the rapid processing of complex medical histories and inputs, LLMs possess immense potential to create timely preliminary assessments of patients that can be used to affect triage decisions. Furthermore, the innate capacity to recognise patterns and correlations from input data can improve the consistency and accuracy of triaging assessments that may not be immediately recognised by experienced clinicians. For these reasons, implementing LLMs use into triaging may reduce time constraints, improve accuracy, offer rapid and appropriate escalation of deteriorating trauma patients, improve resource allocations and alleviate burdens on emergency trauma systems [10,14,15,99]. Other potential applications of LLMs in improving triaging services include assisting and supplementing decision-making capacity for clinicians through generating triage recommendations and by providing simulated scenarios as a training tool [15]. To date, several studies have explored the potential application of LLMs as a tool for emergency trauma triaging, including ChatGPT-3.5, ChatGPT-4.0 and Google Gemini.

4.1. Current Evidence for the Use of Large Language Models in Trauma Triaging

4.1.1. Accuracy of Triaging

Studies using both generated clinical simulations and real ED patient data have shown potential promise for the use of LLMs in trauma triaging. Paslı et al. conducted a single-centre prospective observational study over a three-day period of patients presenting to the ED (n = 758) to compare the triage performance of ChatGPT-4.0, the emergency triage team and the emergency medicine physician based on the ESI scale. Both the emergency triage team and ChatGPT-4.0 performed with near-perfect concordance with the emergency medical physician (Cohen’s κ = 0.893 and 0.899, respectively; all comparisons at p < 0.001) [10]. Williams et al. conducted simulations on paired retrospective ED presentations (n = 500) to compare the decision-making accuracy of ChatGPT-4.0 with an ED resident physician in determining clinical acuity based on the ESI score [100]. They analysed 10,000 pairs of adult emergency department encounters, each with distinct ESI acuity levels. LLMs were queried to determine which patient in each pair had a higher acuity based on deidentified clinical notes from their initial ED documentation. The authors found comparable accuracy between ChatGPT-4.0 (mean = 0.88, 95% CI: 0.86–0.91) and ED resident physicians (mean = 0.86, 95% CI: 0.83–0.89) [100]. While the study focused on acuity classification, other critical aspects such as dynamic changes in patient status, were not evaluated [100]. In addition, generated simulations of constructed clinical vignettes (n = 45) conducted by Ito et al. evaluated GPT-4’s ability to diagnose and triage health conditions based on 45 clinical vignettes, comparing its performance to three physicians [101]. Results showed comparable performances in appropriate triaging between ChatGPT-4.0 (accuracy = 30/45, 66.7%; 95% CI 51.0–80.0%) and three physicians (accuracy = 30/45, 66.7%; 95% CI 51.0–80.0%; p = 0.99) [101]. Interestingly, this study further highlighted no changes in decision-making accuracy when accounting for ethnic demographic information [101]. In some instances, studies have highlighted superior performances from LLMs compared to medical professionals in trauma triaging. Meral et al. compared the performance of ChatGPT-4.0 and Google Gemini with emergency medicine specialists (n = 10) using case examples from the ESI Implementation Handbook (n = 100) [99]. ChatGPT-4.0 (mean = 70.60 ± 3.74) outperformed both Google Gemini (mean = 59.70 ± 2.49; p = 0.002) and emergency medicine specialists (mean = 57.30 ± 8.71, p < 0.001) in correct triage [99]. Furthermore, Google Gemini (mean = 39.50 ± 1.17) and GPT-4 (mean = 38.30 ± 2.31) both outperformed emergency medicine specialists (mean = 22.20 ± 7.77) when triaging immediate high-acuity patients (ESI 1–2 class) (all comparisons p < 0.001) [99]. Despite this, although it may be worth noting that the emergency medicine specialists participating in the study did not frequently use the ESI triage model in daily practice, which may have skewed their performance [99]. Interestingly, studies using training-enhanced chat-based LLMs have highlighted good accuracy in triaging. Gan et al. used a validated questionnaire consisting of 15 diverse mass casual incident scenarios to evaluate triage accuracy across four categories: “Walking wounded,” “Respiration,” “Perfusion” and “Mental Status” [19]. They found that ChatGPT-3.5 trained with the START protocol (n= 12/15, 80%) had greater accuracy than untrained ChatGPT-3.5 (n = 4/15, 26.7%) using clinical scenarios created by emergency medical services (EMS) teams [19]. Furthermore, Yazaki et al. provided 100 simulated triage scenarios to Retrieval Augmented Generation enhanced (RAG-enhanced GPT) models along with patient vital signs, symptoms, and observations from EMS teams to classify triage levels [2]. The performance of the RAG-enhanced LLMs was compared with that of emergency medical technicians and physicians [2]. Their results showed higher triage accuracy rates for Retrieval Augmented Generation enhanced (RAG-enhanced) ChatGPT-3.5 (70%) compared to two emergency physicians (50% and 47%) and emergency medical technicians (35% and 38%) based on the Japanese triaging system using simulated triaged scenarios (n = 100) [2]. These results highlight exciting potential for the use of LLMs in trauma triaging.

Despite these outcomes supporting the use of LLMs, there is also evidence to suggest more variable findings, highlighting deficits in applying chat-based AI to triage. Gan et al. compared the accuracy of mass casualty incident (MCI) triage using the START protocol between Google Bard, ChatGPT-3.5 and medical students for prompts created by EMS medical directors (n = 15). The accuracy scores of medical students were obtained in a prior study by Sapp et al. [102]. This study found similar accuracy rates between Google Bard (n = 9/15, 60%) and medical students (64.3%, n = 315). ChatGPT-3.5 performed significantly worse in comparison (n = 4/15, 26.67%, p = 0.002) [103]. However, it is important to acknowledge the small sample size of prompts (n = 15) used in this study, which limits the reliability of data. Furthermore, comparisons with the performance of medical students may not appropriately assess the utility of LLMs since triaging may be outside the scope of more junior, untrained professionals. In comparison to performances with medical professions, Masanneck et al. conducted 124 ED case vignettes from a tertiary care hospital in Germany, with the main outcome measured as the agreement between different raters’ triage classifications, quantified via Cohen’s κ statistic [104]. They showed substantial agreement between ChatGPT-4.0 and four untrained doctors (Cohen κ = 0.67 ± 0.037) based on the Manchester Triage System (MTS) [104]. However, it is important to note that the study did not provide LLMs with specific training or context on the MTS, which could have limited their accuracy in triage decision-making [104]. Other models such as ChatGPT-3.5 performed worse compared to ChatGPT-4.0 (Cohen κ 0.54 ± 0.024; p < 0.001) or displayed odd triaging behaviour [104]. Kim et al. compared the accuracy of triage between ChatGPT-3.5, ChatGPT-4.0 and four emergency department healthcare workers (one physician, three paramedics) using virtual patient cases (n = 202) and the Korean Triage and Acuity Scale (KTAS). ChatGPT-4.0 had moderate agreement (Fleiss’ κ = 0.532) with good reliability (ICC = 0.802), whilst ChatGPT-3.5 had fair agreement (Fleiss’ κ = 0.320) with moderate reliability (ICC = 0.52) compared to ED healthcare workers [105].

On the contrary, there have been studies that demonstrate poor triaging performance by LLMs. Franc et al. evaluated the effectiveness of ChatGPT in triaging patients using the Canadian Triage and Acuity Scale (CTAS) [14]. Six unique prompts were created by five emergency physicians and used to query ChatGPT with 61 validated patient vignettes. Each combination of a prompt and vignette was repeated 30 times, resulted in the simulation triages (n = 10,980) with an accuracy rate of 47.5% [14]. In these cases, providing ChatGPT-3.5 with more extensively detailed text was not associated with improved accuracy of triaging [14]. Sarbay et al. compared the accuracy of ChatGPT with emergency medicine specialists (n = 2) using case scenarios generated from the ESI handbook v4 (n = 50) [106]. This study demonstrated fair agreement between ChatGPT and emergency medicine specialists (κ mean = 0.341) [106]. However, it is important to note that the performance of ChatGPT is greater in high-acuity cases (ESI 1-2 class), with a sensitivity of 76.2% (95% CI: 52.8–91.8), specificity of 93.1% (95% CI: 77.2–99.2), positive predictive value (PPV) of 88.9% (95% CI: 65.3–98.6), and negative predictive value (NPV) of 84.4 (95% CI: 67.2–94.7) [106]. Zaboli et al. recreated clinical vignettes from real clinical cases (n = 30) to compare the performances of ChatGPT-3.5 and experienced triage nurses (n = 2) based on MTS guidelines [107]. This study revealed fair agreeance between ChatGPT-3.5 and triage nurses (unweighted Cohen κ = 0.278; 95% CI: 0.231–0.388) [107]. Therefore, despite the preliminary evidence supporting chat-based LLMs in trauma triaging processes, the current field demonstrates outcome heterogeneity.

4.1.2. Rates of Trauma Undertriage and Overtriage with Large Language Models

Of the studies that explored the triage accuracy of LLMs, some studies additionally explored rates of undertriage and overtriage with variable performance demonstrated. In particular, some studies have demonstrated positive outcomes in reducing rates of undertriage, but not overtriage with the use of LLMs. Yazaki et al. demonstrated low rates of undertriage with RAG-enhanced ChatGPT-3.5 (8%), compared to ChatGPT-3.5 (33%) and ChatGPT-4.0 (39%) using simulated triaged scenarios (n = 100) [2]. Masanneck et al. observed tendencies to undertriage by untrained doctors, whilst overtriage rates were greater in ChatGPT models when assessing case vignettes adapted from single-centre ED presentations (n = 124). However, no statistical comparisons were made [104]. Similarly, Meral et al. showed that both ChatGPT-4.0 (mean = 9.10 ± 2.92) and Google Gemini (mean = 5.10 ± 1.66) outperformed emergency medicine specialists (mean = 32.90 ± 11.83) in minimising undertriage rates (all comparisons p < 0.001) using case examples from the ESI Implementation Handbook (n = 100) [99]. However, emergency medicine specialists (mean = 9.80 ± 5.07) had the lowest rates of overtriage compared to ChatGPT-4.0 (mean = 20.30 ± 4.92, p < 0.001) and Google Gemini (mean = 35.20 ± 2.93, p < 0.001) [99]. Gan et al. showed that for undertriaging, Google Bard had zero incidences, ChatGPT-3.5 had one incidence (n = 1/15, 6.67%) using generated clinical scenarios (n = 15) [103]. Comparatively, medical students had an undertriage rate of 12.6%. In terms of overtriage, Google Bard had six incidences (n = 6/15, 40%), ChatGPT-3.5 had 10 incidences (n = 10/15, 66.67%) and medical students had an overtriage rate of 17.82% [103]. However, it is important to acknowledge the limited skillset of medical students in triaging, as well as smaller sample sizes which may overall influence comparisons of LLMs performances. Gan et al. further explored the effects of training on LLMs and found that ChatGPT-3.5 trained with the START protocol had no cases of undertriaging (n = 0/15, 0%) and overtriage rates of 20% (n = 3/15) [19]. However, other studies have concluded high rates of both undertriage and overtriage with LLMs use. Sarbay et al. observed overtriage and undertriage rates of 22% (n = 11/50) and 19% (n = 9/50), respectively, with the use of ChatGPT when assessing case scenarios generated from the ESI handbook v4 (n = 50) [106]. Furthermore, Franc et al. found an undertriage rate of 13.7% and overtriage rate of 38.7% with the use of ChatGPT for generated simulated triages (n = 10,980) [14]. Importantly, repeatability contributed to 21% of overall variation where even when the same prompt was used multiple times, the model’s responses lacked consistency. Furthermore, reproducibility (using different prompts) accounted for 4% of the variation, showing some inconsistencies between different prompt formats [14]. In total, current evidence regarding undertriaging and overtriaging rates with LLMs use is quite variable. In comparison to ideal rates of undertriaging (<5%) and overtriaging (25–35%) as defined by the Field Triage Guidelines, the performance of LLMs shows promise in few studies [44]. Overall, it is difficult to determine the performance of LLMs in reducing undertriage and overtriage rates given the variability of outcomes of current studies, as well as global rate estimations. That is, rates of under triaging ranged from 1.6% to 72% and rates of overtriaging ranged from 9.9% to 87.4% [44].

4.1.3. Evaluation of Large Language Models in Emergency Trauma Triaging: Strengths and Limitations in Current Research

Overall, many studies have demonstrated good outcomes of LLMs in terms of triaging accuracy, highlighting the exciting potential for the use of LLMs in emergency trauma triaging. A strength of these studies includes comparing triage performance of LLMs with trained emergency medical professionals, both with adapted real-world cases and generated clinical vignettes [2,10,19,99,100]. Furthermore, studies that explored the performance of LLMs within the field encompassed a diverse range of trauma triage scales, including ESI, START, the Japanese triaging system, KTAS, CTAS and MTS [2,10,14,19,99,100,104,105]. For studies that highlighted strong performance in concordance with emergency medical specialists, this includes triage systems such as ESI, START, and the Japanese triaging system, which demonstrates the adaptability of LLMs as a multipurpose tool for medical triaging [2,10,19,99,100]. A majority of included studies additionally had large sample sizes, which increases the reliability of rates of accuracy, overtriage and undertriage.

Despite these strengths and positive outcomes, it is important to appreciate the heterogeneity of current studies. With the exception of one prospective observational study, most studies are preliminary in silico simulations using either generated scenarios or retrospective real-world data. This reduces clinical translatability and may not reflect the performance of LLMs in a real-world setting, especially since data collection in the immediate situation may be incomplete [2,101,108]. Furthermore, it is important to appreciate the heterogeneity of current data surrounding accuracy, especially with undertriage and overtriage rates when applying LLMs to emergency trauma triaging. This provides challenges in determining the utility of LLMs as a complementary tool for trauma triaging. In particular, the variability of outcomes limits generalisable comparisons of accuracy, undertriage and overtriage rates studies with current global trends. The variability of outcomes generated from these studies may be attributed to either inherent differences between the performance of different LLMs or from heterogeneity in tested datasets. For the former, Kanthini et al. demonstrated the variability of performance of many LLMs in the clinical setting using the MEDIC framework [109]. This framework grades the clinical competence of LLMs based on five domains: clinical competence, medical reasoning, ethics and bias, data and language understanding, in-context learning, and clinical safety [109]. Furthermore, discrepancies in LLM performance may also be influenced by enhancements with specific training programs such as RAG [65]. To add, a common deficit found in many studies was the susceptibility of LLMs to ‘forget’ previous data after several inputs and required frequent updates. This includes pre-training with scoring systems used to assess the severity of scenarios [10,99]. In addressing the latter point, data variability of different cohort samples may influence the accuracy of LLMs in clinical decision-making, especially since performance of LLMs is dependent on typical learnt scenarios and pattern recognition. Specifically, these include cohort demographics such as rural versus metropolitan patients, ethnicity, country of publication, triaging systems used in studies, sex, age, and financial status [1,100,101,110,111,112]. For example, studies that used prospective or retrospective observational data mainly came from the USA, with one study conducted in Turkey and Italy [10,98,100,107]. Although not explicitly explored, it is important to acknowledge discrepancies in accessibility to immediate trauma care between countries with low or good access to resources. This influences significant aspects of immediate trauma care and triage including pre-hospital transfer, patient transfer and trauma preparedness. Furthermore, accessibility to trauma-specific infrastructure and centralised trauma specialists differs in lower- and higher-income countries, with many low socioeconomic countries not having formal trauma systems and registries [1,110,111,112]. Similarly, important factors determining trauma triage escalation, including mechanism and severity of injury, may differ in incidence in low-, middle- or high-income countries [112]. Specifically, previous studies have deemed the mechanism of injury to account for over half of overtriage cases [24]. All of these factors influence decision-making processes in traumatic cases and overall trauma-related outcomes. Hence, prompts generated from countries with different systems, accessibility to specific care and facilities, and epidemiology of presenting mechanism of injury may influence the accuracy and efficacy of LLMs in providing specific tailored triaging advice.

Additionally, prompt inputs were not necessarily standardised between studies, which may lead to output heterogeneity and explain the outcome variability evident in the literature. Specifically, this includes the completeness and structuring of input datasets, which may lead to inconsistencies between LLM decision-making outcomes within different studies [2,101].

4.2. Ethical Challenges, Bias and Future Directions for the Integration of Large Language Models into Trauma Triage Systems

Despite the preliminary evidence supporting the use of LLMs in trauma triaging, there are ethical considerations that must be accounted for prior to any meaningful evidence-based implementation of these tools in trauma triaging practice. Non-sensical, non-factual or irrelevant outputs created by generative AI without correlation to real-world data, dubbed ‘artificial hallucination’, raises legal and safety concerns of LLMs use in healthcare practice [15,105]. This risk is particularly relevant within healthcare due to increased susceptibility of artificial hallucinations with greater technical complexity of medical information [17]. Furthermore, the quality of outputs may be negatively influenced by inconsistent information, infrequent updates, lack of validation by medical professionals and incorrect data inputs, all of which may affect final triage outputs [98,103]. This increases risks of malpractice or delaying appropriate care and raises issues relating to the regulation of generative AI programs to ensure quality control [112]. Due to this, generation of misinformation with confidence creates safety and legal barriers against the implementation of LLMs into practice, including accountability for potential malpractice events [15,108,112,113,114,115].

Furthermore, the capacity of these tools to recognise patterns from input data may result in the generation of biased outputs that may limit equitable care amongst all patient populations. Particularly, this is a concern for demographic inputs such as sex, ethnicity and age [100,101]. Inappropriate algorithm biases based on these demographic inputs may exacerbate existing social, cultural or historical stereotypes that can lead to discriminatory care with potential significant clinical errors [114]. Although importantly, one study highlighted that ethnicity did not play a role in influencing the accuracy of LLMs [101]. Potential ramifications of biased outputs include underrepresentation of certain demographic groups, overemphasis on specific treatment, or perpetuating outdated practices [112]. Alternatively, the performance of LLMs may suffer in situations where rigorous guidelines are not commonly practiced such as non-trauma centres, or in areas including rural communities and developing countries with under-represented cohorts. For example, LLMs are typically trained with urban data, which may not appropriately represent health conditions and other socioeconomic factors required in holistic decision-making [116,117,118,119]. Deficits in pattern recognition may also influence the accuracy of LLMs in more complex cases. Shikino et al. observed decreases in diagnostic accuracy of ChatGPT-4.0 with increasing atypicality of clinical vignettes [116]. Furthermore, complexity of cases introduced by human judgement, empathy or more specialised knowledge can further influence the accuracy of LLMs [103]. This opens discussions into the need for expertise supervision with LLMs use in the clinical setting and potential use of teams with human physicians and LLMs [105].

Intuitively, issues surrounding privacy and security of confidential patient information and regulation of open-sourced LLMs have also been raised [104,112,120]. This involves informed patient consent to the use of LLMs and the safeguarding of sensitive patient information in cases such as data breaches, re-identification of patients despite proactive steps of deidentification and transparency to the use of data [114,121]. Current laws across the world have been set in place for data protection. In the European Union, LLMs are repeatedly assessed for compliance with the General Data Protection Regulation, which only permits access to health-related data with specific justifications such as informed consent [121]. In the USA, regulation of privacy is maintained under policies such as the Health Insurance Portability and Accountability Act (HIPAA) [114]. Considerations into robust security measures and strong privacy protections by healthcare organisations are required to aid the transition of LLMs in the clinical setting [114].

Overall, future randomised controlled trials and prospective observational studies exploring the accuracy, and rates of overtriage and undertriage of LLMs within the immediate clinical setting will further elucidate the potential utility of LLMs for trauma triaging [15,19]. To increase the equitability of LLMs outputs, demographic data including sex, age and ethnicity should further be explored to prevent underrepresentation and inherent biases [100,101]. Proof of concept studies also comparing the performance of LLMs with the use of multiple triage scores and in different settings including comparisons within metropolitan versus rural settings and within non-trauma centres may also ascertain the multipurpose applicability of LLMs in trauma triaging [2]. Finally, explorations into the robustness of LLMs to more complex or incomplete prompts will improve clinical relevance of future research and aid in translation to the practice [2]. On the other hand, investigations into regulation of LLMs, including safeguarding of patient confidential information, quality control measures, and transparency of LLMs use are required to overall help develop guidelines that will aid the transition of LLMs into clinical practice [112,113,114,115].

5. Conclusions

This review outlines a comprehensive and radical exploration of the potential applications of LLMs and their ability to be integrated into trauma triaging. At present, there is preliminary evidence to suggest that such LLMs have significant potential to augment the efficiency and accuracy of triaging decisions, and in some cases may outperform inexperienced or experienced professionals in specific trauma triaging scenarios. This offers the potential to better streamline trauma activation and triaging, especially in the context of an increase in trauma cases worldwide. However, these findings also occur in the background of conflicting evidence, with some studies suggesting that LLMs performed poorly compared to healthcare professionals for the same triaging indications. Hence, this variability in the outcomes introduces challenges for LLMs to be introduced and applied in real-word settings. While there are inconsistencies in decision-making, over triaging and under triaging, which complicates its application, it is undeniable that LLMs hold immense value in its development potential. Therefore, more rigorous research into the applications of LLMs is required to integrate such technology into applications of trauma triaging to reduce healthcare burden.

Author Contributions

Conceptualization, K.D.R.L.; methodology, K.D.R.L.; software, K.D.R.L.; validation, K.L., J.C., D.M. and K.D.R.L.; formal analysis, K.L., J.C., D.M. and K.D.R.L.; investigation, K.L., J.C., D.M. and K.D.R.L.; resources, K.D.R.L.; data curation, K.L., J.C., D.M. and K.D.R.L.; writing—original draft preparation, K.L., J.C., D.M. and K.D.R.L.; writing—review and editing, K.L., J.C., D.M. and K.D.R.L.; visualization, K.D.R.L.; supervision, K.D.R.L.; project administration, K.D.R.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data was generated or developed in the work related to this manuscript. All referenced data is publicly available on medical databases.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Bedard, A.F.; Mata, L.V.; Dymond, C.; Moreira, F.; Dixon, J.; Schauer, S.G.; Ginde, A.A.; Bebarta, V.; Moore, E.E.; Mould-Millman, N.-K. A scoping review of worldwide studies evaluating the effects of prehospital time on trauma outcomes. Int. J. Emerg. Med. 2020, 13, 64. [Google Scholar] [CrossRef] [PubMed]

- Yazaki, M.; Maki, S.; Furuya, T.; Inoue, K.; Nagai, K.; Nagashima, Y.; Maruyama, J.; Toki, Y.; Kitagawa, K.; Iwata, S.; et al. Emergency Patient Triage Improvement through a Retrieval-Augmented Generation Enhanced Large-Scale Language Model. Prehosp. Emerg. Care 2024, 1–7. [Google Scholar] [CrossRef] [PubMed]

- Chen, X.; Guyette, F.X.; Peitzman, A.B.; Billiar, T.R.; Sperry, J.L.; Brown, J.B. Identifying patients with time-sensitive injuries: Association of mortality with increasing prehospital time. J. Trauma Acute Care Surg. 2019, 86, 1015–1022. [Google Scholar] [CrossRef] [PubMed]

- Morris, R.S.; Karam, B.S.; Murphy, P.B.; Jenkins, P.; Milia, D.J.; Hemmila, M.R.; Haines, K.L.; Puzio, T.J.; De Moya, M.A.; Tignanelli, C.J. Field-triage, hospital-triage and triage-assessment: A literature review of the current phases of adult trauma triage. J. Trauma Acute Care Surg. 2021, 90, e138–e145. [Google Scholar] [CrossRef] [PubMed]

- Voskens, F.J.; van Rein, E.A.; van der Sluijs, R.; Houwert, R.M.; Lichtveld, R.A.; Verleisdonk, E.J.; Segers, M.; van Olden, G.; Dijkgraaf, M.; Leenen, L.P. Accuracy of prehospital triage in selecting severely injured trauma patients. JAMA Surg. 2018, 153, 322–327. [Google Scholar] [CrossRef]

- Teixeira, P.G.; Inaba, K.; Hadjizacharia, P.; Brown, C.; Salim, A.; Rhee, P.; Browder, T.; Noguchi, T.T.; Demetriades, D. Preventable or potentially preventable mortality at a mature trauma center. J. Trauma 2007, 63, 1338–1346, discussion 1346–1347. [Google Scholar] [CrossRef]

- Schellenberg, M.; Docherty, S.; Owattanapanich, N.; Emigh, B.; Lutterman, P.; Karavites, L.; Switzer, E.; Wiepking, M.; Chudnofsky, C.; Inaba, K. Emergency physician and nurse discretion accurately triage high-risk trauma patients. Eur. J. Trauma Emerg. Surg. 2023, 49, 273–279. [Google Scholar] [CrossRef]

- van Rein, E.A.; van der Sluijs, R.; Voskens, F.J.; Lansink, K.W.; Houwert, R.M.; Lichtveld, R.A.; de Jongh, M.A.; Dijkgraaf, M.G.; Champion, H.R.; Beeres, F.J. Development and validation of a prediction model for prehospital triage of trauma patients. JAMA Surg. 2019, 154, 421–429. [Google Scholar] [CrossRef]

- MacKenzie, E.J.; Rivara, F.P.; Jurkovich, G.J.; Nathens, A.B.; Frey, K.P.; Egleston, B.L.; Salkever, D.S.; Scharfstein, D.O. A national evaluation of the effect of trauma-center care on mortality. N. Engl. J. Med. 2006, 354, 366–378. [Google Scholar] [CrossRef]

- Paslı, S.; Şahin, A.S.; Beşer, M.F.; Topçuoğlu, H.; Yadigaroğlu, M.; İmamoğlu, M. Assessing the precision of artificial intelligence in emergency department triage decisions: Insights from a study with ChatGPT. Am. J. Emerg. Med. 2024, 78, 170–175. [Google Scholar] [CrossRef]

- McKee, C.H.; Heffernan, R.W.; Willenbring, B.D.; Schwartz, R.B.; Liu, J.M.; Colella, M.R.; Lerner, E.B. Comparing the Accuracy of Mass Casualty Triage Systems When Used in an Adult Population. Prehosp. Emerg. Care 2020, 24, 515–524. [Google Scholar] [CrossRef] [PubMed]

- Tam, H.L.; Chung, S.F.; Lou, C.K. A review of triage accuracy and future direction. BMC Emerg. Med. 2018, 18, 58. [Google Scholar] [CrossRef] [PubMed]

- Suamchaiyaphum, K.; Jones, A.R.; Markaki, A. Triage accuracy of emergency nurses: An evidence-based review. J. Emerg. Nurs. 2023, 50, 44–54. [Google Scholar] [CrossRef] [PubMed]

- Franc, J.M.; Cheng, L.; Hart, A.; Hata, R.; Hertelendy, A. Repeatability, reproducibility, and diagnostic accuracy of a commercial large language model (ChatGPT) to perform emergency department triage using the Canadian triage and acuity scale. Can. J. Emerg. Med. 2024, 26, 40–46. [Google Scholar] [CrossRef] [PubMed]

- Frosolini, A.; Catarzi, L.; Benedetti, S.; Latini, L.; Chisci, G.; Franz, L.; Gennaro, P.; Gabriele, G. The role of large language models (LLMs) in providing triage for maxillofacial trauma cases: A preliminary study. Diagnostics 2024, 14, 839. [Google Scholar] [CrossRef]

- Merrell, L.A.; Fisher, N.D.; Egol, K.A. Large language models in orthopaedic trauma: A cutting-edge technology to enhance the field. JBJS 2023, 105, 1383–1387. [Google Scholar] [CrossRef]

- Le, K.D.R.; Tay, S.B.P.; Choy, K.T.; Verjans, J.; Sasanelli, N.; Kong, J.C.H. Applications of natural language processing tools in the surgical journey. Front. Surg. 2024, 11, 1403540. [Google Scholar] [CrossRef]

- Sasanelli, F.; Le, K.D.R.; Tay, S.B.P.; Tran, P.; Verjans, J.W. Applications of natural language processing tools in orthopaedic surgery: A scoping review. Appl. Sci. 2023, 13, 11586. [Google Scholar] [CrossRef]

- Gan, R.K.; Uddin, H.; Gan, A.Z.; Yew, Y.Y.; González, P.A. ChatGPT’s performance before and after teaching in mass casualty incident triage. Sci. Rep. 2023, 13, 20350. [Google Scholar] [CrossRef]

- Peta, D.; Day, A.; Lugari, W.S.; Gorman, V.; Pajo, V.M.T. Triage: A global perspective. J. Emerg. Nurs. 2023, 49, 814–825. [Google Scholar] [CrossRef]

- Trauma Victoria. Major Trauma Guidelines & Education—Victorian State Trauma System. Available online: https://trauma.reach.vic.gov.au/guidelines/early-trauma-care/early-activation (accessed on 19 August 2024).

- ACT Government Canberra Health Services. Trauma Team Activation and Roles & Responsibilities. Available online: https://www.canberrahealthservices.act.gov.au/__data/assets/word_doc/0010/1981693/Trauma-Team-Activation-and-Roles-and-Responsibilities.docx (accessed on 19 August 2024).

- NSW Health. Trauma Team Activation Guidelines—ST George Hospital (SGH). Available online: https://www.seslhd.health.nsw.gov.au/sites/default/files/groups/StGTrauma/Policies/BR372_SGH_Trauma_team_activation_guideline.pdf (accessed on 19 August 2024).

- Cameron, M.; McDermott, K.M.; Campbell, L. The performance of trauma team activation criteria at an Australian regional hospital. Injury 2019, 50, 39–45. [Google Scholar] [CrossRef] [PubMed]

- Yancey, C.C.; O’Rourke, M.C. Emergency Department Triage. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2024. [Google Scholar]

- UAM Medical Centre. Trauma Services Manual. Available online: https://medicine.uams.edu/surgery/wp-content/uploads/sites/5/2016/12/Trauma-Team-Activation-Criteria.pdf (accessed on 19 August 2024).

- McDonell, A.; Veitch, C.; Aitken, P.; Elcock, M. The organisation of trauma services for rural Australia. Australas. J. Paramed. 2009, 7, 1–14. [Google Scholar] [CrossRef]

- Bhalla, M.C.; Frey, J.; Rider, C.; Nord, M.; Hegerhorst, M. Simple Triage Algorithm and Rapid Treatment and Sort, Assess, Lifesaving, Interventions, Treatment, and Transportation mass casualty triage methods for sensitivity, specificity, and predictive values. Am. J. Emerg. Med. 2015, 33, 1687–1691. [Google Scholar] [CrossRef] [PubMed]

- Romig, L.E. Pediatric triage. A system to JumpSTART your triage of young patients at MCIs. JEMS J. Emerg. Med. Serv. 2002, 27, 52–58, 60. [Google Scholar]

- González, J.; Soltero, R. Emergency Severity Index (ESI) triage algorithm: Trends after implementation in the emergency department. Boletín Asoc. Médica Puerto Rico 2009, 101, 7–10. [Google Scholar]

- Clarkson, L.; Williams, M. EMS Mass Casualty Triage. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2024. [Google Scholar]

- Silvestri, S.; Field, A.; Mangalat, N.; Weatherford, T.; Hunter, C.; McGowan, Z.; Stamile, Z.; Mattox, T.; Barfield, T.; Afshari, A. Comparison of START and SALT triage methodologies to reference standard definitions and to a field mass casualty simulation. Am. J. Disaster Med. 2017, 12, 27–33. [Google Scholar] [CrossRef]

- SALT mass casualty triage: Concept endorsed by the American College of Emergency Physicians, American College of Surgeons Committee on Trauma, American Trauma Society, National Association of EMS Physicians, National Disaster Life Support Education Consortium, and State and Territorial Injury Prevention Directors Association. Disaster Med. Public Health Prep. 2008, 2, 245–246. [CrossRef] [PubMed]

- Grouse, A.; Bishop, R.; Bannon, A. The Manchester Triage System provides good reliability in an Australian emergency department. Emerg. Med. J. 2009, 26, 484–486. [Google Scholar] [CrossRef] [PubMed]

- Hodge, A.; Hugman, A.; Varndell, W.; Howes, K. A review of the quality assurance processes for the Australasian Triage Scale (ATS) and implications for future practice. Australas. Emerg. Nurs. J. 2013, 16, 21–29. [Google Scholar] [CrossRef]

- Huabbangyang, T.; Rojsaengroeng, R.; Tiyawat, G.; Silakoon, A.; Vanichkulbodee, A.; Sri-On, J.; Buathong, S. Associated factors of under and over-triage based on the emergency severity index; a retrospective cross-sectional study. Arch. Acad. Emerg. Med. 2023, 11, e57. [Google Scholar]

- Peng, J.; Xiang, H. Trauma undertriage and overtriage rates: Are we using the wrong formulas? Am. J. Emerg. Med. 2016, 34, 2191. [Google Scholar] [CrossRef] [PubMed]

- Yoder, A.; Bradburn, E.H.; Morgan, M.E.; Vernon, T.M.; Bresz, K.E.; Gross, B.W.; Cook, A.D.; Rogers, F.B. An analysis of overtriage and undertriage by advanced life support transport in a mature trauma system. J. Trauma Acute Care Surg. 2020, 88, 704–709. [Google Scholar] [CrossRef] [PubMed]

- Dinh, M.M.; Oliver, M.; Bein, K.J.; Roncal, S.; Byrne, C.M. Performance of the New South Wales Ambulance Service major trauma transport protocol (T1) at an inner city trauma centre. Emerg. Med. Australas. 2012, 24, 401–407. [Google Scholar] [CrossRef] [PubMed]

- Schellenberg, M.; Benjamin, E.; Bardes, J.M.; Inaba, K.; Demetriades, D. Undertriaged trauma patients: Who are we missing? J. Trauma Acute Care Surg. 2019, 87, 865–869. [Google Scholar] [CrossRef] [PubMed]

- Oh, B.Y.; Kim, K. Factors associated with the undertriage of patients with abdominal pain in an emergency room. Int. Emerg. Nurs. 2021, 54, 100933. [Google Scholar] [CrossRef]

- Newgard, C.D.; Staudenmayer, K.; Hsia, R.Y.; Mann, N.C.; Bulger, E.M.; Holmes, J.F.; Fleischman, R.; Gorman, K.; Haukoos, J.; McConnell, K.J. The cost of overtriage: More than one-third of low-risk injured patients were taken to major trauma centers. Health Aff. 2013, 32, 1591–1599. [Google Scholar] [CrossRef]

- Frykberg, E.R. Medical management of disasters and mass casualties from terrorist bombings: How can we cope? J. Trauma Acute Care Surg. 2002, 53, 201–212. [Google Scholar] [CrossRef]

- Lupton, J.R.; Davis-O’Reilly, C.; Jungbauer, R.M.; Newgard, C.D.; Fallat, M.E.; Brown, J.B.; Mann, N.C.; Jurkovich, G.J.; Bulger, E.; Gestring, M.L. Under-triage and over-triage using the field triage guidelines for injured patients: A systematic review. Prehosp. Emerg. Care 2023, 27, 38–45. [Google Scholar] [CrossRef]

- Curtis, K.; Olivier, J.; Mitchell, R.; Cook, A.; Rankin, T.; Rana, A.; Watson, W.L.; Nau, T. Evaluation of a tiered trauma call system in a level 1 trauma centre. Injury 2011, 42, 57–62. [Google Scholar] [CrossRef]

- Xiang, H.; Wheeler, K.K.; Groner, J.I.; Shi, J.; Haley, K.J. Undertriage of major trauma patients in the US emergency departments. Am. J. Emerg. Med. 2014, 32, 997–1004. [Google Scholar] [CrossRef]

- Dehli, T.; Fredriksen, K.; Osbakk, S.A.; Bartnes, K. Evaluation of a university hospital trauma team activation protocol. Scand. J. Trauma Resusc. Emerg. Med. 2011, 19, 18. [Google Scholar] [CrossRef] [PubMed]

- Staudenmayer, K.; Lin, F.; Mackersie, R.; Spain, D.; Hsia, R. Variability in California triage from 2005 to 2009: A population-based longitudinal study of severely injured patients. J. Trauma Acute Care Surg. 2014, 76, 1041–1047. [Google Scholar] [CrossRef] [PubMed]

- Rainer, T.H.; Cheung, N.; Yeung, J.H.; Graham, C.A. Do trauma teams make a difference?: A single centre registry study. Resuscitation 2007, 73, 374–381. [Google Scholar] [CrossRef] [PubMed]

- Choi, J.; Carlos, G.; Nassar, A.K.; Knowlton, L.M.; Spain, D.A. The impact of trauma systems on patient outcomes. Curr. Probl. Surg. 2021, 58, 100849. [Google Scholar] [CrossRef]

- Tomas, C.; Kallies, K.; Cronn, S.; Kostelac, C.; deRoon-Cassini, T.; Cassidy, L. Mechanisms of traumatic injury by demographic characteristics: An 8-year review of temporal trends from the National Trauma Data Bank. Inj. Prev. 2023, 29, 347–354. [Google Scholar] [CrossRef]

- af Ugglas, B.; Lindmarker, P.; Ekelund, U.; Djärv, T.; Holzmann, M.J. Emergency department crowding and mortality in 14 Swedish emergency departments, a cohort study leveraging the Swedish Emergency Registry (SVAR). PLoS ONE 2021, 16, e0247881. [Google Scholar] [CrossRef]

- Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metabolism 2017, 69, S36–S40. [Google Scholar] [CrossRef]

- Basu, K.; Sinha, R.; Ong, A.; Basu, T. Artificial intelligence: How is it changing medical sciences and its future? Indian J. Dermatol. 2020, 65, 365–370. [Google Scholar] [CrossRef]

- Xu, Y.; Liu, X.; Cao, X.; Huang, C.; Liu, E.; Qian, S.; Liu, X.; Wu, Y.; Dong, F.; Qiu, C.-W. Artificial intelligence: A powerful paradigm for scientific research. Innovation 2021, 2, 100179. [Google Scholar] [CrossRef]

- Tortora, L. Beyond Discrimination: Generative AI Applications and Ethical Challenges in Forensic Psychiatry. Front. Psychiatry 2024, 15, 1346059. [Google Scholar] [CrossRef]

- Nichols, J.A.; Herbert Chan, H.W.; Baker, M.A. Machine learning: Applications of artificial intelligence to imaging and diagnosis. Biophys. Rev. 2019, 11, 111–118. [Google Scholar] [CrossRef] [PubMed]

- Sarker, I.H. Deep learning: A comprehensive overview on techniques, taxonomy, applications and research directions. SN Comput. Sci. 2021, 2, 420. [Google Scholar] [CrossRef] [PubMed]

- Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Akhtar, N.; Barnes, N.; Mian, A. A comprehensive overview of large language models. arXiv 2023, arXiv:2307.06435. [Google Scholar]

- Shahab, O.; El Kurdi, B.; Shaukat, A.; Nadkarni, G.; Soroush, A. Large language models: A primer and gastroenterology applications. Ther. Adv. Gastroenterol. 2024, 17, 17562848241227031. [Google Scholar] [CrossRef] [PubMed]

- Khurana, D.; Koli, A.; Khatter, K.; Singh, S. Natural language processing: State of the art, current trends and challenges. Multimed. Tools Appl. 2023, 82, 3713–3744. [Google Scholar] [CrossRef]

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 2022, 35, 27730–27744. [Google Scholar]

- Christiano, P.F.; Leike, J.; Brown, T.; Martic, M.; Legg, S.; Amodei, D. Deep reinforcement learning from human preferences. Adv. Neural Inf. Process. Syst. 2017, 30. [Google Scholar] [CrossRef]

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://hayate-lab.com/wp-content/uploads/2023/05/43372bfa750340059ad87ac8e538c53b.pdf (accessed on 23 August 2024).

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, H. Retrieval-augmented generation for large language models: A survey. arXiv 2023, arXiv:2312.10997. [Google Scholar]

- Mintz, Y.; Brodie, R. Introduction to artificial intelligence in medicine. Minim. Invasive Ther. Allied Technol. 2019, 28, 73–81. [Google Scholar] [CrossRef]

- Cascella, M.; Semeraro, F.; Montomoli, J.; Bellini, V.; Piazza, O.; Bignami, E. The breakthrough of large language models release for medical applications: 1-year timeline and perspectives. J. Med. Syst. 2024, 48, 22. [Google Scholar] [CrossRef]

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016, 316, 2402–2410. [Google Scholar] [CrossRef] [PubMed]

- Oh, K.; Kang, H.M.; Leem, D.; Lee, H.; Seo, K.Y.; Yoon, S. Early detection of diabetic retinopathy based on deep learning and ultra-wide-field fundus images. Sci. Rep. 2021, 11, 1897. [Google Scholar] [CrossRef] [PubMed]

- Ahmadi Mehr, R.; Ameri, A. Skin Cancer Detection Based on Deep Learning. J. Biomed. Phys. Eng. 2022, 12, 559–568. [Google Scholar] [PubMed]

- Winkler, J.K.; Blum, A.; Kommoss, K.; Enk, A.; Toberer, F.; Rosenberger, A.; Haenssle, H.A. Assessment of diagnostic performance of dermatologists cooperating with a convolutional neural network in a prospective clinical study: Human with machine. JAMA Dermatol. 2023, 159, 621–627. [Google Scholar] [CrossRef]

- Günay, S.; Öztürk, A.; Özerol, H.; Yiğit, Y.; Erenler, A.K. Comparison of emergency medicine specialist, cardiologist, and chat-GPT in electrocardiography assessment. Am. J. Emerg. Med. 2024, 80, 51–60. [Google Scholar] [CrossRef]

- Dave, T.; Athaluri, S.A.; Singh, S. ChatGPT in medicine: An overview of its applications, advantages, limitations, future prospects, and ethical considerations. Front. Artif. Intell. 2023, 6, 1169595. [Google Scholar] [CrossRef]

- Barash, Y.; Klang, E.; Konen, E.; Sorin, V. ChatGPT-4 assistance in optimizing emergency department radiology referrals and imaging selection. J. Am. Coll. Radiol. 2023, 20, 998–1003. [Google Scholar] [CrossRef]

- Delsoz, M.; Madadi, Y.; Raja, H.; Munir, W.M.; Tamm, B.; Mehravaran, S.; Soleimani, M.; Djalilian, A.; Yousefi, S. Performance of ChatGPT in diagnosis of corneal eye diseases. Cornea 2024, 43, 664–670. [Google Scholar] [CrossRef]

- Pressman, S.M.; Borna, S.; Gomez-Cabello, C.A.; Haider, S.A.; Forte, A.J. AI in Hand Surgery: Assessing Large Language Models in the Classification and Management of Hand Injuries. J. Clin. Med. 2024, 13, 2832. [Google Scholar] [CrossRef]

- Borna, S.; Gomez-Cabello, C.A.; Pressman, S.M.; Haider, S.A.; Forte, A.J. Comparative Analysis of Large Language Models in Emergency Plastic Surgery Decision-Making: The Role of Physical Exam Data. J. Pers. Med. 2024, 14, 612. [Google Scholar] [CrossRef]

- Ayoub, M.; Ballout, A.A.; Zayek, R.A.; Ayoub, N.F. Mind+ Machine: ChatGPT as a Basic Clinical Decisions Support Tool. Cureus 2023, 15, e43690. [Google Scholar] [CrossRef] [PubMed]

- Lahat, A.; Sharif, K.; Zoabi, N.; Shneor Patt, Y.; Sharif, Y.; Fisher, L.; Shani, U.; Arow, M.; Levin, R.; Klang, E. Assessing Generative Pretrained Transformers (GPT) in Clinical Decision-Making: Comparative Analysis of GPT-3.5 and GPT-4. J. Med. Internet Res. 2024, 26, e54571. [Google Scholar] [CrossRef] [PubMed]

- Goh, E.; Gallo, R.; Hom, J.; Strong, E.; Weng, Y.; Kerman, H.; Cool, J.; Kanjee, Z.; Parsons, A.S.; Ahuja, N. Influence of a Large Language Model on Diagnostic Reasoning: A Randomized Clinical Vignette Study. medRxiv 2024. [Google Scholar] [CrossRef]

- Hoppe, J.M.; Auer, M.K.; Strüven, A.; Massberg, S.; Stremmel, C. ChatGPT with GPT-4 Outperforms Emergency Department Physicians in Diagnostic Accuracy: Retrospective Analysis. J. Med. Internet Res. 2024, 26, e56110. [Google Scholar] [CrossRef] [PubMed]

- Haim, G.B.; Braun, A.; Eden, H.; Burshtein, L.; Barash, Y.; Irony, A.; Klang, E. AI in the ED: Assessing the efficacy of GPT models vs. physicians in medical score calculation. Am. J. Emerg. Med. 2024, 79, 161–166. [Google Scholar] [CrossRef]

- Ayers, J.W.; Poliak, A.; Dredze, M.; Leas, E.C.; Zhu, Z.; Kelley, J.B.; Faix, D.J.; Goodman, A.M.; Longhurst, C.A.; Hogarth, M. Comparing physician and artificial intelligence chatbot responses to patient questions posted to a public social media forum. JAMA Intern. Med. 2023, 183, 589–596. [Google Scholar] [CrossRef]

- Boyd, C.J.; Hemal, K.; Sorenson, T.J.; Patel, P.A.; Bekisz, J.M.; Choi, M.; Karp, N.S. Artificial Intelligence as a Triage Tool during the Perioperative Period: Pilot Study of Accuracy and Accessibility for Clinical Application. Plast. Reconstr. Surg. Glob. Open 2024, 12, e5580. [Google Scholar] [CrossRef]

- Reynolds, K.; Tejasvi, T. Potential use of ChatGPT in responding to patient questions and creating patient resources. JMIR Dermatol. 2024, 7, e48451. [Google Scholar] [CrossRef]

- Seth, I.; Xie, Y.; Rodwell, A.; Gracias, D.; Bulloch, G.; Hunter-Smith, D.J.; Rozen, W.M. Exploring the role of a large language model on carpal tunnel syndrome management: An observation study of ChatGPT. J. Hand Surg. 2023, 48, 1025–1033. [Google Scholar] [CrossRef]

- Gül, Ş.; Erdemir, İ.; Hanci, V.; Aydoğmuş, E.; Erkoç, Y.S. How artificial intelligence can provide information about subdural hematoma: Assessment of readability, reliability, and quality of ChatGPT, BARD, and perplexity responses. Medicine 2024, 103, e38009. [Google Scholar] [CrossRef]

- Mokmin, N.A.M.; Ibrahim, N.A. The evaluation of chatbot as a tool for health literacy education among undergraduate students. Educ. Inf. Technol. 2021, 26, 6033–6049. [Google Scholar] [CrossRef] [PubMed]

- Breeding, T.; Martinez, B.; Patel, H.; Nasef, H.; Arif, H.; Nakayama, D.; Elkbuli, A. The utilization of ChatGPT in reshaping future medical education and learning perspectives: A curse or a blessing? Am. Surg. 2024, 90, 560–566. [Google Scholar] [CrossRef] [PubMed]

- Han, J.-W.; Park, J.; Lee, H. Analysis of the effect of an artificial intelligence chatbot educational program on non-face-to-face classes: A quasi-experimental study. BMC Med. Educ. 2022, 22, 830. [Google Scholar] [CrossRef] [PubMed]

- Roos, J.; Kasapovic, A.; Jansen, T.; Kaczmarczyk, R. Artificial intelligence in medical education: Comparative analysis of ChatGPT, Bing, and medical students in Germany. JMIR Med. Educ. 2023, 9, e46482. [Google Scholar] [CrossRef]

- Friederichs, H.; Friederichs, W.J.; März, M. ChatGPT in medical school: How successful is AI in progress testing? Med. Educ. Online 2023, 28, 2220920. [Google Scholar] [CrossRef]

- Riedel, M.; Kaefinger, K.; Stuehrenberg, A.; Ritter, V.; Amann, N.; Graf, A.; Recker, F.; Klein, E.; Kiechle, M.; Riedel, F. ChatGPT’s performance in German OB/GYN exams–paving the way for AI-enhanced medical education and clinical practice. Front. Med. 2023, 10, 1296615. [Google Scholar] [CrossRef]

- Rudan, D.; Marčinko, D.; Degmečić, D.; Jakšić, N. Scarcity of research on psychological or psychiatric states using validated questionnaires in low-and middle-income countries: A ChatGPT-assisted bibliometric analysis and national case study on some psychometric properties. J. Glob. Health 2023, 13, 04102. [Google Scholar] [CrossRef]

- Biswas, S.; Dobaria, D.; Cohen, H.L. Focus: Big data: ChatGPT and the future of journal reviews: A feasibility study. Yale J. Biol. Med. 2023, 96, 415. [Google Scholar] [CrossRef]

- Saad, A.; Jenko, N.; Ariyaratne, S.; Birch, N.; Iyengar, K.P.; Davies, A.M.; Vaishya, R.; Botchu, R. Exploring the potential of ChatGPT in the peer review process: An observational study. Diabetes Metab. Syndr. Clin. Res. Rev. 2024, 18, 102946. [Google Scholar] [CrossRef]

- Huang, Y.; Wu, R.; He, J.; Xiang, Y. Evaluating ChatGPT-4.0’s data analytic proficiency in epidemiological studies: A comparative analysis with SAS, SPSS, and R. J. Glob. Health 2024, 14, 04070. [Google Scholar] [CrossRef]

- Gebrael, G.; Sahu, K.K.; Chigarira, B.; Tripathi, N.; Mathew Thomas, V.; Sayegh, N.; Maughan, B.L.; Agarwal, N.; Swami, U.; Li, H. Enhancing triage efficiency and accuracy in emergency rooms for patients with metastatic prostate cancer: A retrospective analysis of artificial intelligence-assisted triage using ChatGPT 4.0. Cancers 2023, 15, 3717. [Google Scholar] [CrossRef] [PubMed]

- Meral, G.; Ateş, S.; Günay, S.; Öztürk, A.; Kuşdoğan, M. Comparative analysis of ChatGPT, Gemini and emergency medicine specialist in ESI triage assessment. Am. J. Emerg. Med. 2024, 81, 146–150. [Google Scholar] [CrossRef] [PubMed]

- Williams, C.Y.; Zack, T.; Miao, B.Y.; Sushil, M.; Wang, M.; Kornblith, A.E.; Butte, A.J. Use of a large language model to assess clinical acuity of adults in the emergency department. JAMA Netw. Open 2024, 7, e248895. [Google Scholar] [CrossRef] [PubMed]

- Ito, N.; Kadomatsu, S.; Fujisawa, M.; Fukaguchi, K.; Ishizawa, R.; Kanda, N.; Kasugai, D.; Nakajima, M.; Goto, T.; Tsugawa, Y. The accuracy and potential racial and ethnic biases of GPT-4 in the diagnosis and triage of health conditions: Evaluation study. JMIR Med. Educ. 2023, 9, e47532. [Google Scholar] [CrossRef] [PubMed]

- Sapp, R.F.; Brice, J.H.; Myers, J.B.; Hinchey, P. Triage performance of first-year medical students using a multiple-casualty scenario, paper exercise. Prehosp. Disaster Med. 2010, 25, 239–245. [Google Scholar] [CrossRef]

- Gan, R.K.; Ogbodo, J.C.; Wee, Y.Z.; Gan, A.Z.; González, P.A. Performance of Google bard and ChatGPT in mass casualty incidents triage. Am. J. Emerg. Med. 2024, 75, 72–78. [Google Scholar] [CrossRef]

- Masanneck, L.; Schmidt, L.; Seifert, A.; Kölsche, T.; Huntemann, N.; Jansen, R.; Mehsin, M.; Bernhard, M.; Meuth, S.G.; Böhm, L. Triage Performance Across Large Language Models, ChatGPT, and Untrained Doctors in Emergency Medicine: Comparative Study. J. Med. Internet Res. 2024, 26, e53297. [Google Scholar] [CrossRef]

- Kim, J.H.; Kim, S.K.; Choi, J.; Lee, Y. Reliability of ChatGPT for performing triage task in the emergency department using the Korean Triage and Acuity Scale. Digit. Health 2024, 10, 20552076241227132. [Google Scholar] [CrossRef]

- Sarbay, İ.; Berikol, G.B.; Özturan, İ.U. Performance of emergency triage prediction of an open access natural language processing based chatbot application (ChatGPT): A preliminary, scenario-based cross-sectional study. Turk. J. Emerg. Med. 2023, 23, 156–161. [Google Scholar] [CrossRef]