1. Introduction

Let $\{N(t), t \ge 0\}$ be a counting process, where $N(t)$ denotes the number of events that occur by time $t$. Let $X_n$ denote the time between the $(n-1)$th and $n$th events of the process, $n \ge 1$. Let $S_{N(t)}$ denote the time of the last arrival before a truncated time $t$, and let $S_{N(t)+1}$ be the time of the first arrival after time $t$. If $\{X_n, n \ge 1\}$ is a sequence of independent and identically distributed (iid) interarrival times, then the process $\{N(t), t \ge 0\}$ is called a renewal process. One of the measures associated with a renewal process is the expected time of the next arrival after a specified time $t$, $E[S_{N(t)+1}]$. Note that $S_{N(t)+1} = \sum_{i=1}^{N(t)+1} X_i$. Ref. [1] provides the well-known result $E[S_{N(t)+1}] = \mu\,[m(t)+1]$, where $\mu = E[X_1]$ is the mean of the interarrival distribution and $m(t) = E[N(t)]$ is the renewal function. The method proposed in this article can be applied to any renewal process as long as $\mu$ and $m(t)$ can be expressed in closed form as functions of the parameter(s) of the renewal process. The estimation of $E[S_{N(t)+1}]$ has been considered in the literature. For instance, [2] uses numerical methods based on an MCMC algorithm to estimate $E[S_{N(t)+1}]$ when interarrival times follow a Pareto distribution.
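As a quick sanity check of the identity $E[S_{N(t)+1}] = \mu\,[m(t)+1]$, the following Python sketch estimates both sides by simulation for a renewal process that is not memoryless. The interarrival distribution (gamma with shape 2 and scale 1, so $\mu = 2$), the truncation time $t = 5$, and the helper name `simulate_renewal` are illustrative assumptions, not part of the proposed method.

```python
import random

def simulate_renewal(t, draw, n_rep=20000, seed=1):
    """Monte Carlo estimates of E[S_{N(t)+1}] and m(t) = E[N(t)].

    draw(rng) returns one interarrival time; t is the truncation time.
    """
    rng = random.Random(seed)
    total_s, total_n = 0.0, 0
    for _ in range(n_rep):
        s, count = 0.0, 0
        while True:
            s += draw(rng)
            if s > t:              # first arrival after t: s is S_{N(t)+1}
                break
            count += 1             # this arrival occurred by time t
        total_s += s
        total_n += count           # count is N(t) for this path
    return total_s / n_rep, total_n / n_rep

mu, t = 2.0, 5.0                   # Gamma(shape=2, scale=1) interarrivals have mean 2
lhs, m_t = simulate_renewal(t, lambda rng: rng.gammavariate(2.0, 1.0))
rhs = mu * (m_t + 1)
print(f"E[S_(N(t)+1)] ~ {lhs:.3f}   mu * (m(t) + 1) ~ {rhs:.3f}")
```

Both sides are estimated from the same simulated paths, so they should agree up to Monte Carlo error.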

The homogeneous Poisson process (HPP) is widely used in various fields of probability and applied statistics. Ref. [3] applied an HPP to model the interarrival times of earthquakes. Ref. [4] provided a Bayesian approach to estimating the Poisson intensity function based on the Haar wavelet transform. The nonhomogeneous Poisson process (NHPP) is used in practice when the intensity function is not constant, unlike in the HPP. The NHPP model has numerous real-world applications. In the insurance industry, the occurrence of insurance claims can be effectively modeled using an NHPP; for example, the rate of car accident claims may increase during peak travel seasons or in bad weather. In financial market modeling, the arrival rate of transactions often varies over time, and an NHPP can be used to model these transactions. In software development, the NHPP is a possible model for the occurrence of software defects during testing: as testing progresses, the rate at which new defects are uncovered may decrease. Many authors have considered the power law (PL) intensity function for the NHPP. Ref. [5] assumed that the failures of a system follow an NHPP with the PL intensity function and noted that the NHPP is commonly used to describe a system under minimal repair. Under minimal repair, the system is assumed to be restored to its state immediately before the failure, so the repair does not improve the system's inherent reliability. Ref. [6] considered NHPPs with linear and PL intensity functions to analyze COVID-19 cases. For an NHPP, the rate of events (e.g., failures of a system) is not constant over time; it can increase or decrease, reflecting reliability deterioration or growth, respectively. Therefore, the occurrence rate is time-dependent. Under the PL intensity function, the failure intensity, or rate of failures per unit time, is modeled as a power function of time. The PL intensity is useful for systems undergoing continuous improvement or deterioration, where reliability is not static. The PL mean-value function $\Lambda(t)$ and its intensity function $\lambda(t)$ are defined, respectively, as

$$\Lambda(t) = \left(\frac{t}{\alpha}\right)^{\beta}, \qquad \lambda(t) = \frac{d\Lambda(t)}{dt} = \frac{\beta}{\alpha}\left(\frac{t}{\alpha}\right)^{\beta-1}, \qquad t > 0,$$

where $\alpha > 0$ and $\beta > 0$ are the scale and shape parameters, respectively.

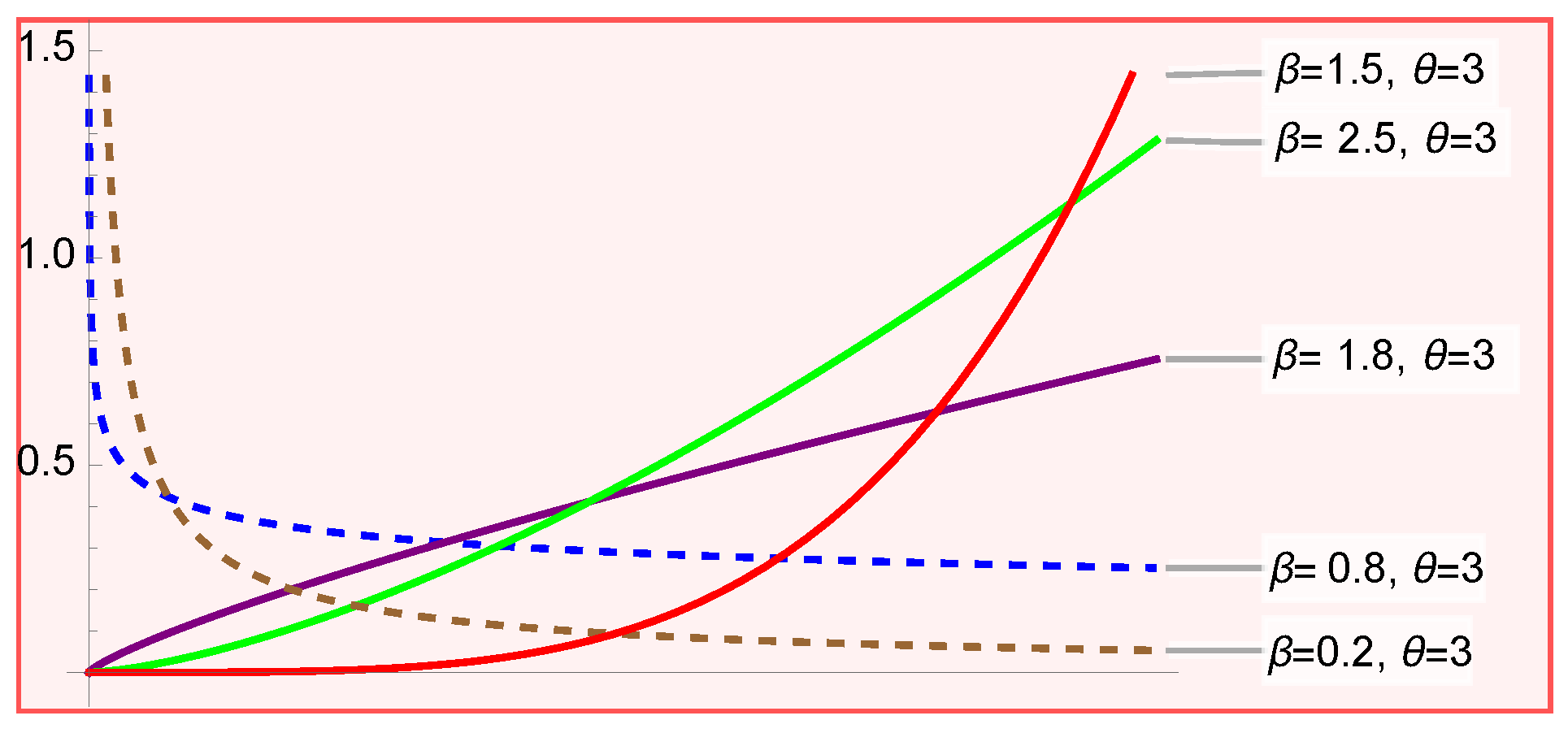

Figure 1 reveals that for $\beta < 1$, the intensity function decreases, indicating that the occurrence rate of events diminishes. In the context of a repairable system, this suggests that minimal repairs are sufficient. Conversely, for $\beta > 1$, the intensity function increases, indicating that failure rates rise.
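The monotonicity pattern in Figure 1 can be checked numerically. This minimal sketch assumes the parametrization $\Lambda(t) = (t/\alpha)^{\beta}$ with $\lambda(t) = \Lambda'(t)$; the function names are hypothetical, and $\beta = 1$ is included because it reduces the intensity to the constant $1/\alpha$ of an HPP.

```python
def pl_mean_value(t, alpha, beta):
    """Power-law mean-value function, assumed form Lambda(t) = (t / alpha) ** beta."""
    return (t / alpha) ** beta

def pl_intensity(t, alpha, beta):
    """Intensity lambda(t) = dLambda/dt = (beta / alpha) * (t / alpha) ** (beta - 1)."""
    return (beta / alpha) * (t / alpha) ** (beta - 1)

times = [0.5, 1.0, 2.0, 4.0, 8.0]
rates = {beta: [pl_intensity(t, 1.0, beta) for t in times] for beta in (0.5, 1.0, 2.0)}
for beta, r in rates.items():
    print(beta, [round(v, 3) for v in r])   # decreasing, constant, increasing in t
```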

The LINEX loss function has several advantages over other commonly used loss functions, such as squared error, absolute error, and binary cross-entropy, to name a few. The LINEX loss function assigns different penalties depending on whether the error is an overestimation or an underestimation, and the degree and direction of the asymmetry can be controlled through its parameter (a positive value makes overestimation more serious, and a negative value makes underestimation more costly). Because the LINEX loss is continuous and differentiable, the optimal Bayes estimate can be found by mathematical optimization, and the loss approaches a (scaled) quadratic loss as its parameter approaches zero. Ref. [7] used the loss function to predict lower bounds for the number of points in regression models; in that setting, the loss penalizes underestimation at an exponential rate and overestimation only linearly. Ref. [8] used the loss function to determine optimum process parameters for product quality. Ref. [9] used the loss function with a variable parameter and considered several distributions for the parameter to find Bayes risks. Ref. [10] applied the loss function to several resampling methods.

The main goal of this article is to provide novel Bayes estimators of the expected time of the first arrival past a truncated time, $E[S_{N(t)+1}]$, using the LINEX loss function for two cases: (1) $N(t)$ is assumed to be an HPP with rate $\lambda$; (2) $N(t)$ is assumed to be an NHPP with intensity function $\lambda(t)$. For the HPP case, Laplace's method for a twice-differentiable function is applied to obtain the Bayes estimator under gamma and non-informative priors. For the NHPP case, gamma priors for the parameters of the PL intensity, as well as the Jeffreys prior, are used to obtain Bayes estimates. Additionally, the article provides closed formulas for the posterior risk under informative and non-informative priors for both the HPP and the NHPP, and it proposes a criterion based on a minimax-type method to determine the optimal sample size that achieves a specified percentage decrease in posterior risk.

The remainder of this article is organized as follows:

Section 2 consists of three subsections. It provides two Bayes estimators, based on gamma and Jeffreys priors, for the expected time of the first arrival past a truncated time, assuming an HPP for the arrival times of events.

Section 3 has three subsections. In this section, the NHPP with the PL intensity function is considered, the computation of the Bayes estimates via gamma priors, as well as a non-informative prior, is discussed, and simulation results are presented.

Section 4 has two subsections. In this section, a minimax-type algorithm is presented to compute the optimal sample size that meets a specified percentage-decrease threshold.

In Section 5, a numerical example is presented to illustrate the computations involved in assessing the goodness-of-fit of data to an NHPP and in finding the optimal sample size.

2. Homogeneous Poisson Process

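For the HPP with rate $\lambda$, it is a well-known fact that the interarrival times satisfy $X_i \sim$ Exponential$(\lambda)$, $i = 1, 2, \ldots$, with probability density function (pdf) $f(x) = \lambda e^{-\lambda x}$, $x > 0$. It can be shown that $\mu = 1/\lambda$ and $m(t) = \lambda t$; as a result,

$$E[S_{N(t)+1}] = \frac{1}{\lambda}(\lambda t + 1) = t + \frac{1}{\lambda}.$$

Of course, this result can also be argued via the memoryless property of the Exponential distribution. Since the truncated time $t$ is given in advance, we concentrate on deriving the Bayes estimator of $1/\lambda$, the reciprocal of the rate of occurrence $\lambda$, based on a random sample $\mathbf{x} = (x_1, \ldots, x_n)$. Let $\Delta = \hat\theta - \theta$ denote the error of estimating a parameter $\theta$ with an estimator $\hat\theta$. The LINEX loss function is defined as

$$L(\Delta) = q\left[e^{w\Delta} - w\Delta - 1\right], \qquad w \neq 0,\ q > 0,$$

where $w$ and $q$ are the shape and scale parameters, respectively, of the loss function. Without loss of generality, we let $q = 1$. The shape parameter $w$ can take negative and positive values.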
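For the HPP, the renewal identity specializes to $E[S_{N(t)+1}] = t + 1/\lambda$. A short simulation sketch (the rate $\lambda = 0.5$, the time $t = 3$, and the function name are illustrative assumptions) shows the memoryless property at work: the waiting time from $t$ to the next arrival has mean $1/\lambda$ regardless of $t$.

```python
import random

def mean_first_arrival_after(t, lam, n_rep=50000, seed=7):
    """Monte Carlo estimate of E[S_{N(t)+1}] for an HPP with rate lam."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_rep):
        s = 0.0
        while s <= t:                  # add Exponential(lam) gaps until passing t
            s += rng.expovariate(lam)
        total += s                     # s is the first arrival time after t
    return total / n_rep

lam, t = 0.5, 3.0
est = mean_first_arrival_after(t, lam)
print(est, t + 1 / lam)                # simulation vs. closed form t + 1/lambda
```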

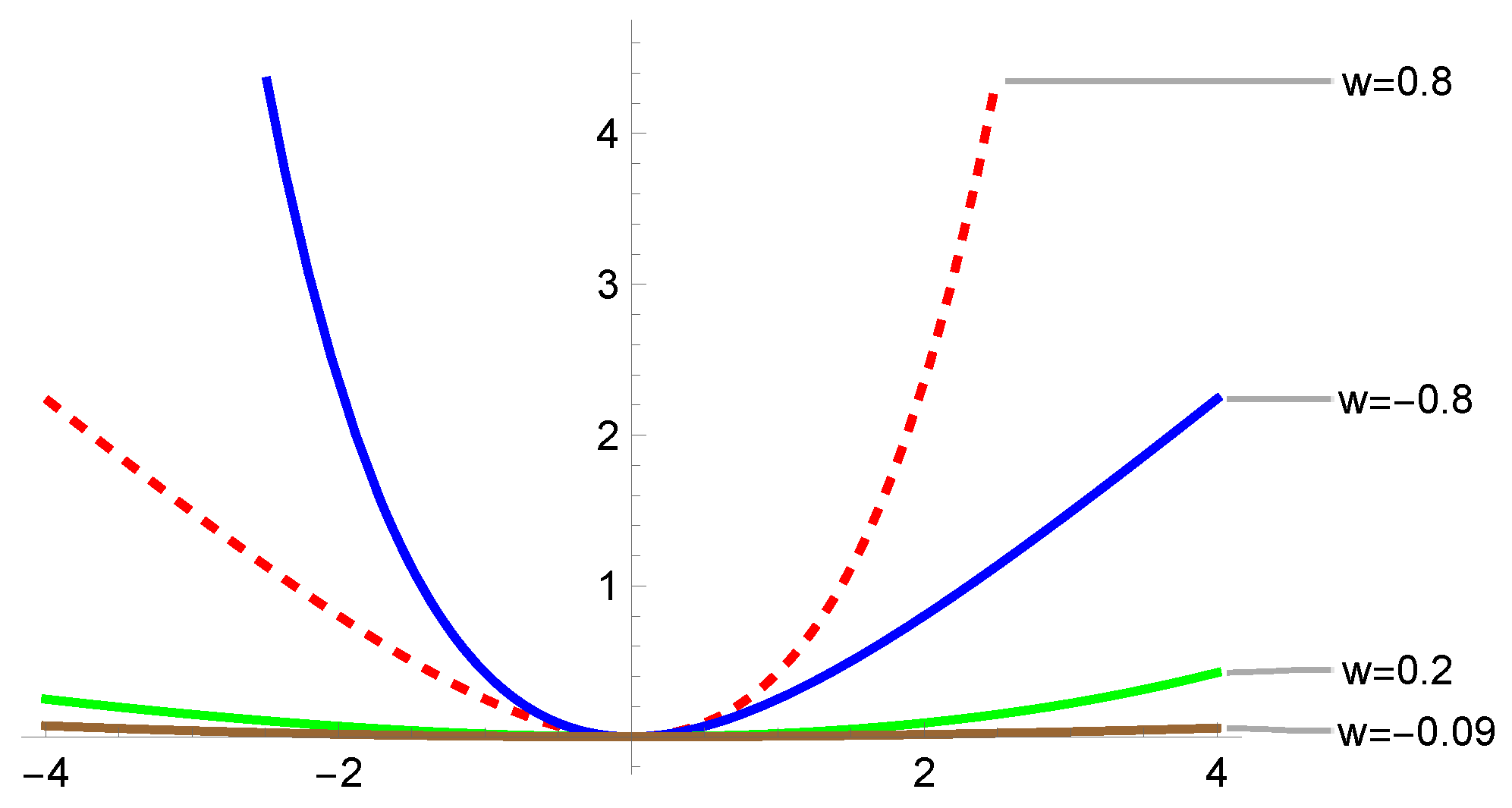

Figure 2 shows the graph of the LINEX loss function defined above.

Figure 2 reveals that for both $w = 0.8$ and $w = -0.8$, the loss function is asymmetric. For $w = 0.8$, overestimation ($\Delta > 0$) is more severe than underestimation ($\Delta < 0$): for $\Delta > 0$ the loss increases exponentially, whereas for $\Delta < 0$ it increases approximately linearly. For example, with $w = 0.8$, $L(2) = e^{1.6} - 2.6 \approx 2.35$, while $L(-2) = e^{-1.6} + 0.6 \approx 0.80$. On the other hand, when $w = -0.8$, the loss function is almost linear for $\Delta > 0$ and almost exponential for $\Delta < 0$. Additionally, when the shape parameter $w$ is small in absolute value (e.g., $w = 0.2$ or $w = -0.09$), the loss function is nearly symmetric and resembles the squared-error loss function. It is worth mentioning that the squared-error loss treats overestimation and underestimation equally, penalizing both by the square of the error, whereas the LINEX loss introduces asymmetry, allowing different penalties for overestimation and underestimation that are controlled by the parameter $w$.
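The asymmetry described above can be checked with a few lines of Python. This sketch (the function name `linex` is hypothetical) evaluates $L(\Delta) = q[e^{w\Delta} - w\Delta - 1]$ for the shape values of Figure 2 and checks numerically that for small $|w|$ the loss is close to the scaled squared error $w^2\Delta^2/2$.

```python
import math

def linex(delta, w, q=1.0):
    """LINEX loss q * (exp(w * delta) - w * delta - 1) for the error delta = estimate - true value."""
    if w == 0:
        raise ValueError("the shape parameter w must be nonzero")
    return q * (math.exp(w * delta) - w * delta - 1.0)

# w = 0.8: an overestimate by 2 costs more than an underestimate by 2.
print(linex(2.0, 0.8), linex(-2.0, 0.8))
# w = -0.8: the roles are reversed.
print(linex(2.0, -0.8), linex(-2.0, -0.8))
# Small |w|: LINEX is close to the scaled squared error (w**2 / 2) * delta**2.
w_small, delta = 0.01, 1.5
print(linex(delta, w_small) / (0.5 * w_small**2 * delta**2))
```

The last ratio is close to 1 because $e^{w\Delta} - w\Delta - 1 = w^2\Delta^2/2 + O(w^3\Delta^3)$.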

The following Mathematica code plots the LINEX loss function for the values of the shape parameter $w$ shown in Figure 2.

```mathematica
(* shape parameters used in Figure 2; v plays the role of the error Delta *)
w1 = 0.8; w2 = -0.8; w3 = 0.2; w4 = -0.09;
Plot[{Exp[v w1] - v w1 - 1, Exp[v w2] - v w2 - 1, Exp[v w3] - v w3 - 1, Exp[v w4] - v w4 - 1},
 {v, -4, 4},
 PlotStyle -> {{Red, Dashed}, Blue, Green, Brown},
 PlotLabels -> {"w = 0.8", "w = -0.8", "w = 0.2", "w = -0.09"}]
```

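Ref. [11] provides the general expression for the optimal Bayes estimator of $\theta$ with respect to the LINEX loss function as

$$\hat\theta_{BL} = -\frac{1}{w}\ln E_{\theta\mid\mathbf{x}}\left[e^{-w\theta}\right] = -\frac{1}{w}\ln M_{\theta\mid\mathbf{x}}(-w), \tag{1}$$

where $M_{\theta\mid\mathbf{x}}(\cdot)$ is the moment generating function of the posterior distribution of $\theta$, and $E_{\theta\mid\mathbf{x}}[\cdot]$ denotes the expected value with respect to the posterior distribution $\pi(\theta\mid\mathbf{x})$.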
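Equation (1) can be evaluated directly from posterior draws when the posterior mgf has no convenient form. The sketch below assumes, purely for illustration, a normal posterior $N(m, s^2)$, for which $M(-w) = e^{-wm + w^2 s^2/2}$ and hence $\hat\theta_{BL} = m - w s^2/2$; a log-sum-exp step keeps the Monte Carlo average numerically stable. The helper name is hypothetical.

```python
import math
import random

def linex_bayes_estimate(draws, w):
    """Equation (1) from posterior draws: -(1/w) * ln(mean of exp(-w * theta))."""
    xs = [-w * th for th in draws]
    top = max(xs)                              # log-sum-exp shift for numerical stability
    log_mgf = top + math.log(sum(math.exp(v - top) for v in xs) / len(xs))
    return -log_mgf / w

rng = random.Random(3)
m, sd, w = 2.0, 0.5, 0.8                       # hypothetical normal posterior N(m, sd^2)
draws = [rng.gauss(m, sd) for _ in range(100000)]
est = linex_bayes_estimate(draws, w)
print(est, m - w * sd**2 / 2, sum(draws) / len(draws))   # MC estimate, closed form, posterior mean
```

For $w > 0$ the LINEX estimate sits below the posterior mean, which is the expected direction when overestimation is penalized more heavily.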

2.1. Bayes Estimator Based on a Gamma Prior

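The gamma distribution, as a prior distribution in Bayesian analysis, has many advantages. It is a natural choice for modeling positive parameters such as variances, rates, and scale parameters. It serves as a conjugate prior for several important likelihood functions, and by adjusting its parameters, the gamma distribution can take a variety of shapes, allowing a flexible representation of prior beliefs. In this article, the gamma distribution is used as the prior for the parameters of the HPP and NHPP. According to Laplace's method, for a twice-differentiable function $g(x)$ that has a unique global maximum at an interior point $x_0$, and for a large number $n$,

$$\int e^{n g(x)}\,dx \approx e^{n g(x_0)}\sqrt{\frac{2\pi}{n\,|g''(x_0)|}}. \tag{2}$$

Assume $\theta$ is a parameter with prior distribution $\pi(\theta)$, and let $L(\theta\mid\mathbf{x})$ denote the likelihood function. The moment generating function (mgf) of the posterior distribution of $\theta$ is

$$M_{\theta\mid\mathbf{x}}(t) = \frac{\int e^{t\theta}L(\theta\mid\mathbf{x})\,\pi(\theta)\,d\theta}{\int L(\theta\mid\mathbf{x})\,\pi(\theta)\,d\theta},$$

where $\mathbf{x} = (x_1, \ldots, x_n)$ is a random sample of interarrival times from the Exponential distribution. From (1), the LINEX Bayes estimator of $\theta$ is

$$\hat\theta_{BL} = -\frac{1}{w}\ln\left[\frac{\int e^{-w\theta}L(\theta\mid\mathbf{x})\,\pi(\theta)\,d\theta}{\int L(\theta\mid\mathbf{x})\,\pi(\theta)\,d\theta}\right].$$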

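Based on a sample $\mathbf{x}$ of interarrival times from an HPP, the likelihood function is $L(\lambda\mid\mathbf{x}) = \lambda^{n}e^{-\lambda s}$, where $s = \sum_{i=1}^{n}x_i$. First, we consider a gamma prior for $\lambda$:

$$\pi(\lambda) = \frac{b^{a}}{\Gamma(a)}\lambda^{a-1}e^{-b\lambda}, \qquad \lambda > 0,\ a > 0,\ b > 0.$$

It can be shown that the posterior pdf of $\lambda$ can be written as

$$\pi(\lambda\mid\mathbf{x}) = \frac{(b+s)^{n+a}}{\Gamma(n+a)}\lambda^{n+a-1}e^{-(b+s)\lambda}, \qquad \lambda > 0,$$

which is the pdf of gamma$(n+a,\ b+s)$. Therefore, the posterior distribution of $\theta = 1/\lambda$ (the main parameter of interest) is inverse-gamma with pdf

$$\pi(\theta\mid\mathbf{x}) = \frac{(b+s)^{n+a}}{\Gamma(n+a)}\theta^{-(n+a)-1}e^{-(b+s)/\theta}, \qquad \theta > 0. \tag{5}$$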

Bayesian analysis assumes that some information about the hyperparameters $a$ and $b$ is available from the behavior of $\lambda$. Most of the time, however, such information is unavailable, and one can use a data-driven approach. This approach was implemented in ref. [2] and in many other articles by the author. In the present article, the MLE $\hat\lambda = n/\sum_{i=1}^{n}x_i$ is used to choose the hyperparameter values. Simulation studies in Section 2.3 indicate that for smaller sample sizes, the Bayes estimator of $1/\lambda$ outperforms the MLE $1/\hat\lambda$ in accuracy, as measured by the square root of the average squared error (SASE). For large samples, however, the accuracy of the MLE is comparable to that of the Bayes estimator. The numerical computations summarized in Table 1, Table 2 and Table 3 confirm these findings. Since the prior mean is $E(\lambda) = a/b$, equating it to the MLE gives

$$a = b\,\hat\lambda.$$

In practice, where only one sample is available, the idea is to choose a large value of $b$ to make the prior variance $a/b^2 = \hat\lambda/b$ small, and then solve for $a$. For the simulation studies, however, using selected "true" values of $\lambda$ and $b$, we let $a = b\lambda$. The idea is to use the MLE and to ensure that the relation between the hyperparameters is consistent with the information contained in the MLE. Simulations confirm that, with this approach, the gamma-based Bayes estimator outperforms both the ML-based and Jeffreys-based estimators in accuracy.
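The conjugate update and the data-driven choice $a = b\hat\lambda$ can be sketched as follows. The helper names and the simulated data are illustrative assumptions; the gamma prior is taken in the shape-rate form, so the prior variance is $a/b^2 = \hat\lambda/b$ and shrinks as $b$ grows.

```python
import random

def data_driven_a(x, b):
    """Choose a so that the gamma prior mean a / b equals the MLE n / s (b is user-chosen)."""
    return b * len(x) / sum(x)

def posterior_params(x, a, b):
    """Gamma(a, rate b) prior + Exponential data -> Gamma(n + a, b + s) for lambda,
    i.e. Inverse-Gamma(n + a, b + s) for 1 / lambda."""
    return len(x) + a, b + sum(x)

rng = random.Random(11)
x = [rng.expovariate(0.5) for _ in range(10)]   # illustrative interarrival times
for b in (1.0, 10.0, 100.0):
    a = data_driven_a(x, b)
    shape, scale = posterior_params(x, a, b)
    prior_var = a / b**2                          # equals MLE / b
    post_mean = scale / (shape - 1)               # posterior mean of 1 / lambda
    print(b, round(a, 3), round(prior_var, 5), round(post_mean, 4))
```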

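The closed form of the mgf of the posterior (5) of $\theta = 1/\lambda$ can be written as

$$M_{\theta\mid\mathbf{x}}(t) = \frac{2\left[-(b+s)t\right]^{(n+a)/2}}{\Gamma(n+a)}K_{n+a}\left(2\sqrt{-(b+s)t}\right), \qquad t < 0,$$

where $K_\nu(\cdot)$ is the modified Bessel function of the second kind (BesselK). The mgf can also be approximated by a closed-form expression that does not involve the BesselK function. From (5), we get

$$M_{\theta\mid\mathbf{x}}(-w) = \frac{(b+s)^{n+a}}{\Gamma(n+a)}\int_0^\infty e^{n g(\theta)}\,d\theta,$$

where $g(\theta) = -\frac{1}{n}\left[w\theta + (n+a+1)\ln\theta + \frac{b+s}{\theta}\right]$. It can be shown that $g$ has its global maximum at

$$\theta_0 = \frac{-(n+a+1) + \sqrt{(n+a+1)^2 + 4w(b+s)}}{2w}.$$

From (2), after some algebra, an approximate expression for the posterior mgf under the gamma prior for $\lambda$ can be written as

$$M_{\theta\mid\mathbf{x}}(-w) \approx \frac{(b+s)^{n+a}}{\Gamma(n+a)}\,e^{n g(\theta_0)}\sqrt{\frac{2\pi}{n\,|g''(\theta_0)|}}, \qquad n\,|g''(\theta_0)| = \frac{n+a+1}{\theta_0^2} + \frac{2w}{\theta_0}.$$

As a result, the Bayes estimator is $\hat\theta_{BL} \approx -\frac{1}{w}\ln M_{\theta\mid\mathbf{x}}(-w)$, with $M_{\theta\mid\mathbf{x}}(-w)$ given by the approximation above.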
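The Laplace approximation of the posterior mgf can be compared with a direct numerical evaluation. The sketch below computes $\ln M_{\theta\mid\mathbf{x}}(-w)$ for an inverse-gamma$(n+a, b+s)$ posterior in two ways: by the trapezoid rule on $u = \ln\theta$ (standing in for the BesselK formula; with SciPy available, `scipy.special.kv` could be used instead) and by Laplace's method around $\theta_0$. The helper names and the inputs $n = 200$, $s = 400$, $b = 10$, $w = 0.5$ are assumptions chosen for illustration.

```python
import math

def log_mgf_exact(w, shape, scale, half_width=12.0, m=20001):
    """ln E[exp(-w*theta)] for theta ~ Inverse-Gamma(shape, scale), by the trapezoid rule in u = ln(theta)."""
    k = shape + 1
    theta0 = (-k + math.sqrt(k * k + 4 * w * scale)) / (2 * w)   # mode of the integrand
    lo = math.log(theta0) - half_width
    du = 2 * half_width / (m - 1)
    # log-integrand after substituting theta = e^u (the Jacobian e^u is included)
    logs = []
    for i in range(m):
        u = lo + i * du
        logs.append(-w * math.exp(u) - shape * u - scale * math.exp(-u))
    top = max(logs)
    log_integral = top + math.log(du * sum(math.exp(v - top) for v in logs))
    return shape * math.log(scale) - math.lgamma(shape) + log_integral

def log_mgf_laplace(w, shape, scale):
    """Laplace approximation (2) of the same quantity, expanding around theta0."""
    k = shape + 1
    theta0 = (-k + math.sqrt(k * k + 4 * w * scale)) / (2 * w)
    G = -w * theta0 - k * math.log(theta0) - scale / theta0          # n * g(theta0)
    G2 = k / theta0**2 + 2 * w / theta0                               # n * |g''(theta0)|
    return shape * math.log(scale) - math.lgamma(shape) + G + 0.5 * math.log(2 * math.pi / G2)

n, s, b, w = 200, 400.0, 10.0, 0.5
a = b * n / s                      # data-driven hyperparameter (prior mean = MLE)
shape, scale = n + a, b + s
lx, ll = log_mgf_exact(w, shape, scale), log_mgf_laplace(w, shape, scale)
print("Bayes estimate (quadrature):", -lx / w, " (Laplace):", -ll / w)
```

The two estimates agree closely for this sample size; for small $n + a$ the Laplace error grows, consistent with the large-$n$ requirement in (2).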

2.2. Bayes Estimator Using Jeffreys Prior

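Often, the true values of the hyperparameters of a prior distribution are unknown to practitioners, or they are difficult to guess from the behavior of the parameter of interest; sometimes, even the form of the prior distribution cannot be guessed. In this section, the Jeffreys prior is used to obtain a closed expression for the Bayes estimator. Using a sample $\mathbf{x}$ from an Exponential($\lambda$) distribution, the likelihood function is $L(\lambda\mid\mathbf{x}) = \lambda^{n}e^{-\lambda s}$, with $s = \sum_{i=1}^{n}x_i$, and the Jeffreys prior is

$$\pi_J(\lambda) \propto \sqrt{I(\lambda)} = \frac{1}{\lambda}, \qquad \lambda > 0,$$

where $I(\lambda) = 1/\lambda^2$ is the Fisher information. The posterior pdf of $\lambda$ is

$$\pi_J(\lambda\mid\mathbf{x}) = \frac{s^{n}}{\Gamma(n)}\lambda^{n-1}e^{-s\lambda}, \qquad \lambda > 0,$$

which is the pdf of gamma$(n, s)$. From (7), the posterior pdf of $\theta = 1/\lambda$ (the main parameter of interest) reduces to

$$\pi_J(\theta\mid\mathbf{x}) = \frac{s^{n}}{\Gamma(n)}\theta^{-n-1}e^{-s/\theta}, \qquad \theta > 0.$$

As a result, the mgf of $\theta$ in this case is

$$M_{\theta\mid\mathbf{x}}(t) = \frac{2(-st)^{n/2}}{\Gamma(n)}K_{n}\left(2\sqrt{-st}\right), \qquad t < 0,$$

and can be approximated by writing

$$M_{\theta\mid\mathbf{x}}(-w) = \frac{s^{n}}{\Gamma(n)}\int_0^\infty e^{n h(\theta)}\,d\theta, \qquad h(\theta) = -\frac{1}{n}\left[w\theta + (n+1)\ln\theta + \frac{s}{\theta}\right],$$

where $h$ has its global maximum at $\theta_1 = \frac{-(n+1) + \sqrt{(n+1)^2 + 4ws}}{2w}$. From (2) and (8), the mgf simplifies to

$$M_{\theta\mid\mathbf{x}}(-w) \approx \frac{s^{n}}{\Gamma(n)}\,e^{n h(\theta_1)}\sqrt{\frac{2\pi}{n\,|h''(\theta_1)|}}, \qquad n\,|h''(\theta_1)| = \frac{n+1}{\theta_1^2} + \frac{2w}{\theta_1},$$

and then we compute $\hat\theta_{BL} \approx -\frac{1}{w}\ln M_{\theta\mid\mathbf{x}}(-w)$.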
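Under the Jeffreys prior, the posterior of $\theta = 1/\lambda$ is inverse-gamma$(n, s)$, so the same Laplace step applies with shape $n$ and scale $s$. The sketch below (hypothetical helper name; illustrative $n$, $s$, $w$) checks the Laplace-based estimate against a plain Monte Carlo evaluation of $-\frac{1}{w}\ln E[e^{-w\theta}\mid\mathbf{x}]$, drawing $\lambda$ from gamma$(n, s)$ and inverting.

```python
import math
import random

def linex_estimate_laplace(w, shape, scale):
    """-(1/w) ln M(-w) for theta ~ Inverse-Gamma(shape, scale), with M(-w) from Laplace's method."""
    k = shape + 1
    theta0 = (-k + math.sqrt(k * k + 4 * w * scale)) / (2 * w)
    G = -w * theta0 - k * math.log(theta0) - scale / theta0
    G2 = k / theta0**2 + 2 * w / theta0
    log_m = shape * math.log(scale) - math.lgamma(shape) + G + 0.5 * math.log(2 * math.pi / G2)
    return -log_m / w

n, s, w = 200, 390.0, 0.5                      # illustrative sample size and total time
est_laplace = linex_estimate_laplace(w, n, s)  # Jeffreys posterior: Inverse-Gamma(n, s)

# Monte Carlo check: lambda ~ Gamma(n, rate s); random.gammavariate takes a scale, so pass 1/s.
rng = random.Random(5)
draws = [1.0 / rng.gammavariate(n, 1.0 / s) for _ in range(100000)]
est_mc = -math.log(sum(math.exp(-w * th) for th in draws) / len(draws)) / w
print(est_laplace, est_mc, s / n)              # last value: the MLE of 1/lambda
```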

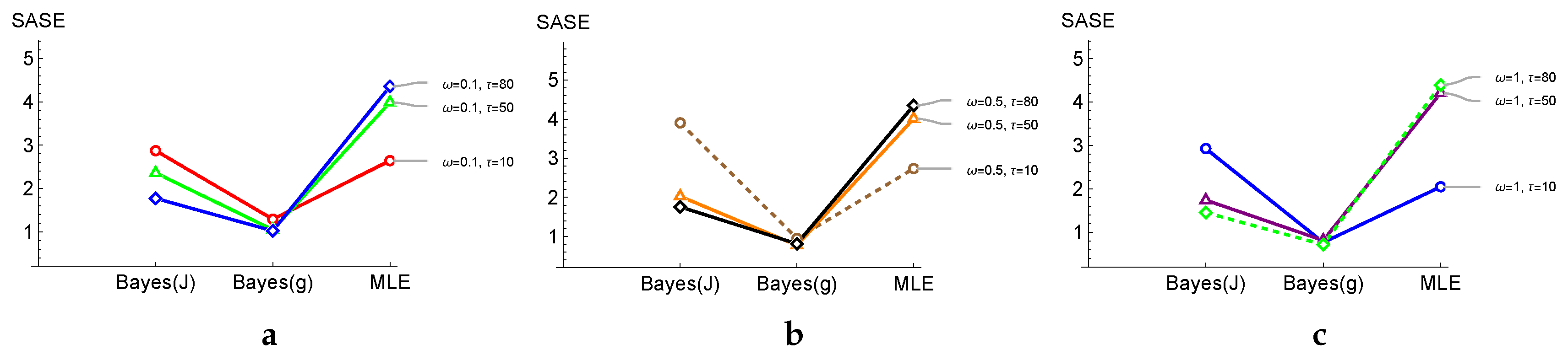

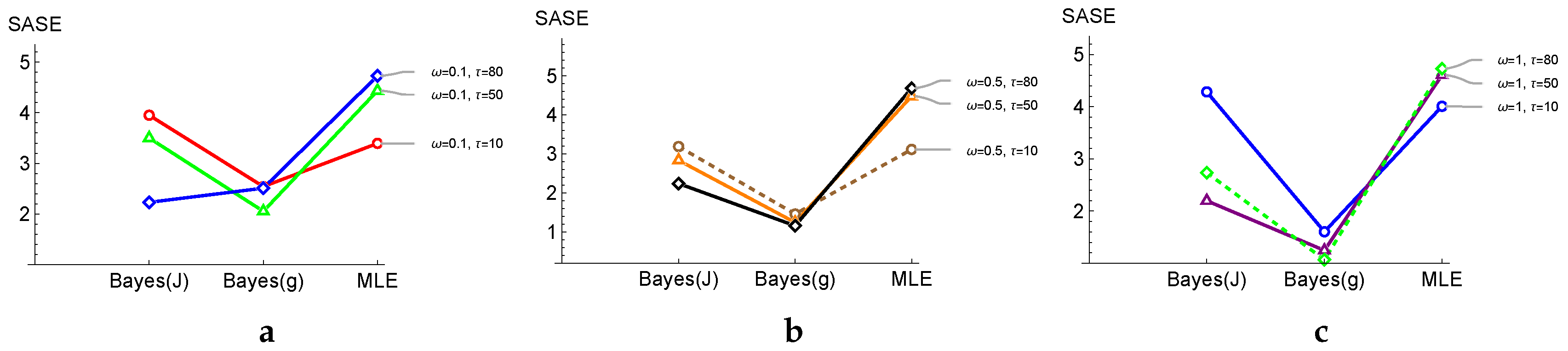

2.3. Simulation Results for HPP

This section summarizes the simulation results used to assess the accuracy of the estimators obtained in Section 2.1 and Section 2.2. It is worth mentioning that the mgf $M(t)$ of the inverse-gamma distribution is not defined for $t > 0$; it is defined only for $t \le 0$. Because the Bayes estimator (1) requires $M(-w)$, this is the main reason that only a few selected positive values of $w$ are considered in Table 1, Table 2 and Table 3. With this limitation, we consider the cases in which the loss function is asymmetric and overestimation is more severe than underestimation. For example, in the design of a safety-critical system, overestimating might result in unnecessary maintenance. For selected values of the input variables $\lambda$, $n$, $w$, and $b$ (see the discussion on choosing the hyperparameter values in Section 2.1), the simulation code generates $N = 2000$ samples from the Exponential distribution with parameter $\lambda$. For every sample of size $n$, the approximate Bayes estimates of $1/\lambda$ given by (6) and (9) are computed. The code also computes the ML estimate $1/\hat\lambda = \sum_{i=1}^{n}x_i/n$. For each set of $N$ simulated samples, the average of the estimates and the SASE,

$$\mathrm{SASE} = \sqrt{\frac{1}{N}\sum_{j=1}^{N}\left(\hat\theta_j - \frac{1}{\lambda}\right)^2},$$

are computed, where $\hat\theta_j$ is the estimate from the $j$th sample. The reason for using SASE rather than the average squared error, $\mathrm{ASE} = \frac{1}{N}\sum_{j=1}^{N}(\hat\theta_j - 1/\lambda)^2$, is that SASE is on the same scale as the estimates, like the standard deviation, which is commonly used to compare variability. Since $\mathrm{SASE} = \sqrt{\mathrm{ASE}}$, either can be obtained from the other.

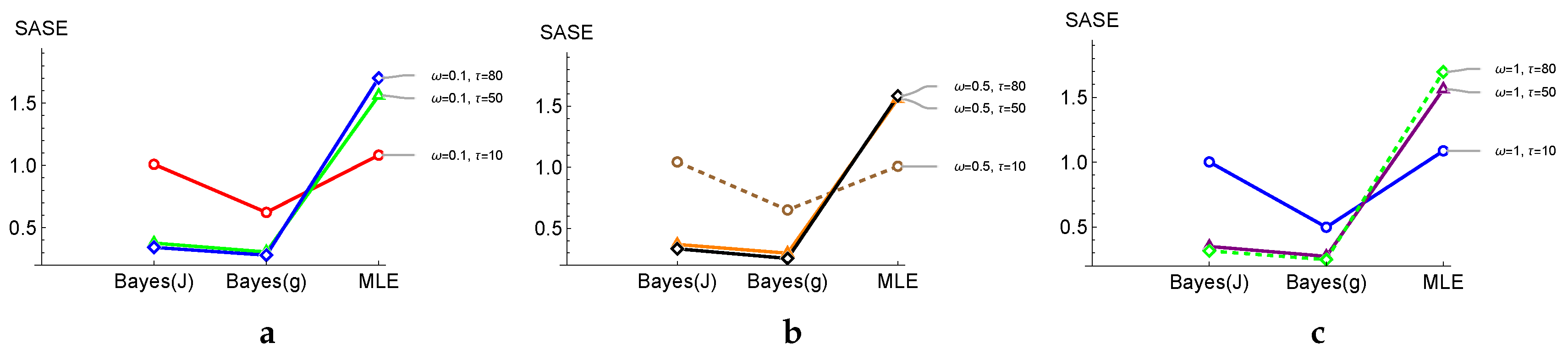

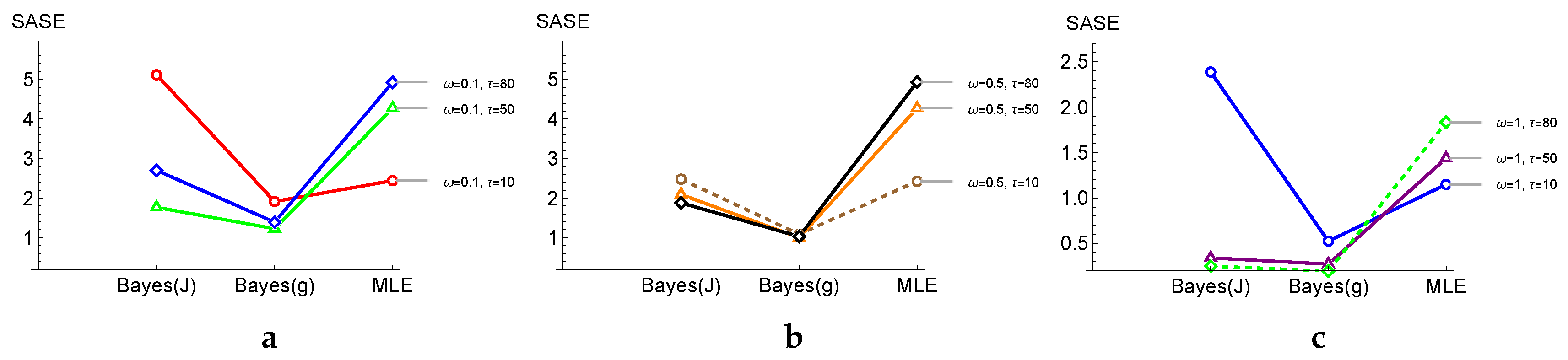

Table 1 lists the averages of the estimates, with the SASE values in parentheses; $\hat\theta_G$ and $\hat\theta_J$ denote the Bayes estimates under the gamma and Jeffreys priors, respectively. Table 1 reveals that as $n$ increases, SASE decreases for all three estimators, regardless of the selected values of $\lambda$, $w$, and $b$. $\hat\theta_G$ outperforms $\hat\theta_J$ in accuracy, as its SASE (in boldface) is smaller. Also, $\hat\theta_G$ outperforms the MLE $1/\hat\lambda$, where $\hat\lambda = n/\sum_{i=1}^{n}x_i$.

It is worth mentioning that $\lambda$ is the rate of occurrence of events under the HPP, and that the estimator of $1/\lambda$, not of $\lambda$, is of primary interest. Note that the MLEs of $\lambda$ and $1/\lambda$ are functionally related: by the invariance property, the MLE of $1/\lambda$ is $1/\hat\lambda$. Although selected values of $\lambda$ are shown in the first row of Table 1, Table 2 and Table 3, the means and SASEs of the gamma-based, Jeffreys-based, and ML estimators of $1/\lambda$ are provided in the main body of the tables. The SASE values in boldface in Table 1, Table 2 and Table 3 are the smallest SASE values for the selected values of the LINEX parameter $w$, $n$, $\lambda$, and $b$.

Table 3 lists four different parameter selections. For each selection, two values of the hyperparameter $a$ are listed. For instance, in the first row of the first selection, the value of $a$ is not derived using the relation $a = b\lambda$. In contrast, in the second row, $a$ follows the relation described in Section 2.1. We observe that with the second option, the gamma-based estimator produces the smallest SASE in all four cases. However, when the hyperparameters are selected arbitrarily, as in the first row of each case, the Jeffreys-based estimator has a slightly smaller or the same SASE value as the MLE, and the gamma-based estimator is no longer the best estimator. This confirms that the proposed selection of the hyperparameter value is important.

4. Optimal Sample Size: A Minimax-Type Approach

In this section, an algorithm is proposed for determining the optimal value of $n$, given that an HPP or NHPP is to be observed for a specified time $T$. There are many optimal designs for finding $T$ and $n$ in the Bayesian framework. For instance, [13] provides optimal designs for the Bayesian estimation of an HPP rate $\lambda$ under a set of loss functions; however, the LINEX loss was not considered. That article presents two sequential methods and a non-sequential method to find conditions for the optimal stopping time $T$ and $n$, and the process involves a substantial number of computations. Ref. [14] considered an algorithm to determine the optimal stopping time of an HPP when the cost of observation grows linearly with $T$.

In this article, we approach the problem computationally and propose a minimax-type method. Suppose the process is intended to be observed for time T. We are interested in finding the sufficient/optimal number of events n before time T that meets the specified risk level. This would help avoid waiting for all events to occur by time T.

The steps for computing the optimal sample size are similar for the HPP and NHPP cases. Code B (see the Supplementary Materials) computes the optimal sample size based on gamma priors for the NHPP case with the PL intensity function. The following steps are implemented in Code B:

1. Select a specified percentage-decrease threshold $\epsilon$ for the risk (in Code B, we used $\epsilon = 0.05$). Select the LINEX parameter $w$ and the maximum intended time $T$ for observing the events. Choose values for the hyperparameters $b$ and $d$. $T$ must be large enough that at least the initial arrival times do not exceed $T$; otherwise, the value of the optimal $n$ would not converge.

2. Using the current arrival times $t_1, \ldots, t_n$, compute the MLEs $\hat\alpha$ and $\hat\beta$ of the parameters of the PL intensity function. These estimates, along with the hyperparameters $a$ and $c$, must be updated (see Section 3.3) as more data become available.

3. Compute the risks $\rho_n$ and $\rho_{n+1}$, based on the first $n$ and $n+1$ arrival times, using (17) in Section 4.2. Note that to find the latter risk, the MLEs and the hyperparameters ($a$, $c$) must be updated within the while loop.

4. If $(\rho_n - \rho_{n+1})/\rho_n < \epsilon$, the current value of $n$ is the optimal value for the given specifications; otherwise, increase $n$ by one within the while loop and repeat Steps 2–4. The code stops when the optimal value of $n$ is identified.
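The stopping rule in Steps 1–4 can be sketched independently of the risk formula. In the Python sketch below, `risk` is any callable returning the posterior risk for sample size $n$ (in Code B it would be recomputed with updated MLEs and hyperparameters); the toy risk $1/n$ and the function names are hypothetical.

```python
def optimal_n(risk, n_start=2, n_max=10_000, eps=0.05):
    """Smallest n >= n_start with (risk(n) - risk(n + 1)) / risk(n) < eps.

    Returns None if the criterion is not met before n reaches n_max
    (for example, when the observation window T is exhausted).
    """
    n = n_start
    while n < n_max:
        r_now, r_next = risk(n), risk(n + 1)
        if (r_now - r_next) / r_now < eps:   # percentage decrease below threshold
            return n
        n += 1
    return None

def toy_risk(n):
    """Hypothetical risk that decays like 1/n."""
    return 1.0 / n

print(optimal_n(toy_risk, eps=0.045))
```

For the toy risk, the relative decrease from $n$ to $n+1$ is $1/(n+1)$, so the loop stops at the first $n$ with $1/(n+1) < \epsilon$.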

4.1. The Case of HPP

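Since $N(t)$ is an HPP with rate $\lambda$, $X_i \sim$ Exponential$(\lambda)$, and as a result, using (7), the posterior distribution of $\theta = 1/\lambda$ is given by

$$\pi(\theta\mid\mathbf{x}) = \frac{(b+s_n)^{n+a}}{\Gamma(n+a)}\theta^{-(n+a)-1}e^{-(b+s_n)/\theta}, \qquad \theta > 0,$$

which is the pdf of the inverse-gamma$(n+a,\ b+s_n)$ distribution, with $s_n = \sum_{i=1}^{n}x_i$.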

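For an estimator $\hat\theta$ of a parameter $\theta$ based on data $\mathbf{x}$, the LINEX loss is

$$L(\hat\theta, \theta) = e^{w(\hat\theta-\theta)} - w(\hat\theta-\theta) - 1.$$

The posterior risk of the estimator with respect to a posterior pdf $\pi(\theta\mid\mathbf{x})$ is defined as

$$\rho(\hat\theta) = \int L(\hat\theta, \theta)\,\pi(\theta\mid\mathbf{x})\,d\theta = e^{w\hat\theta}M_{\theta\mid\mathbf{x}}(-w) - w\left(\hat\theta - E[\theta\mid\mathbf{x}]\right) - 1.$$

Using the above definition, it can be shown that the risk of the Bayes rule $\hat\theta_{BL} = -\frac{1}{w}\ln M_{\theta\mid\mathbf{x}}(-w)$ simplifies to

$$\rho_n = \rho(\hat\theta_{BL}) = w\left(E[\theta\mid\mathbf{x}] - \hat\theta_{BL}\right) = \frac{w(b+s_n)}{n+a-1} + \ln M_{\theta\mid\mathbf{x}}(-w), \qquad n + a > 1, \tag{14}$$

where $s_n = \sum_{i=1}^{n}x_i$,

$$M_{\theta\mid\mathbf{x}}(-w) = \frac{2\left[w(b+s_n)\right]^{(n+a)/2}}{\Gamma(n+a)}K_{n+a}\left(2\sqrt{w(b+s_n)}\right),$$

and $K_\nu(\cdot)$ is the modified Bessel function of the second kind.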

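For a given value of $T$, the risk $\rho_n$ of the Bayes rule based on $n$ observations is decreasing in $n$, with the limit approaching zero as $n$ increases. Since the longest time that the process is to be observed is $T$, the optimal value of $n$ is proposed to be the smallest $n$ for which the percentage decrease in the risk is less than a specified threshold $\epsilon$. That is,

$$\frac{\rho_n - \rho_{n+1}}{\rho_n} < \epsilon, \tag{15}$$

where $\epsilon$ is a small number such as 0.05. This criterion ensures that increasing $n$ to $n+1$ does not significantly reduce the risk. Computationally, it is easy to find the optimal value of $n$ once $w$, $b$, $T$, and $\epsilon$ are given. It can also be shown that for the Jeffreys prior, the risk of the Bayes rule can be expressed as

$$\rho_n^{J} = \frac{w\,s_n}{n-1} + \ln M^{J}_{\theta\mid\mathbf{x}}(-w), \qquad n > 1,$$

where $s_n = \sum_{i=1}^{n}x_i$ and

$$M^{J}_{\theta\mid\mathbf{x}}(-w) = \frac{2\,(w s_n)^{n/2}}{\Gamma(n)}K_{n}\left(2\sqrt{w s_n}\right).$$

For this case, the optimal value of $n$ can also be obtained using the same criterion as in (15).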
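The simplification of the posterior risk of the LINEX Bayes rule to $w\,(E[\theta\mid\mathbf{x}] - \hat\theta_{BL})$ holds for any posterior, including an empirical one built from draws, which makes it easy to check numerically. In this sketch, the inverse-gamma parameters and the function name are illustrative assumptions; the risk from the shortcut is compared with the average LINEX loss computed directly over the same draws.

```python
import math
import random

def bayes_rule_and_risk(draws, w):
    """LINEX Bayes estimate and its posterior risk w * (E[theta|x] - estimate) from posterior draws."""
    mgf = sum(math.exp(-w * th) for th in draws) / len(draws)   # Monte Carlo M(-w)
    est = -math.log(mgf) / w
    mean = sum(draws) / len(draws)
    return est, w * (mean - est)

rng = random.Random(2024)
shape, scale, w = 25.0, 48.0, 0.5          # illustrative Inverse-Gamma(n + a, b + s_n)
draws = [1.0 / rng.gammavariate(shape, 1.0 / scale) for _ in range(50000)]
est, rho = bayes_rule_and_risk(draws, w)

# Direct evaluation of the average LINEX loss of `est` over the same draws.
direct = sum(math.exp(w * (est - th)) - w * (est - th) - 1 for th in draws) / len(draws)
print(est, rho, direct)
```

The exponential term averages to exactly $e^{w\hat\theta_{BL}}M(-w) = 1$ at the Bayes rule, which is why only the linear terms survive.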

Given a data set $\mathbf{x} = (x_1, \ldots, x_n)$ from Exponential$(\lambda)$, the Mathematica code (A), based on the gamma prior, uses a while loop to find the optimal value of $n$. We provide the code only for the gamma prior case, as the gamma-based Bayes estimator outperforms the Jeffreys-based estimator in accuracy (see Table 1 and Table 2). Note that the values of $w$ and $b$ should be selected by the user, and that $T$ must be large enough that $s_j = \sum_{i=1}^{j}x_i \le T$ for every value of $j$ in the loop while condition (15) is being checked. The computations of the risks in (15) are sequential; that is, the MLE $\hat\lambda$ is updated as $x_{j+1}$ is added to the sample $x_1, \ldots, x_j$ in the while loop. As a result, the risks in (15) are updated in the loop.
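The following Python sketch mirrors the sequential logic described for Code A under explicit assumptions: the risk is (14) evaluated at the observed $s_n$ with $a = b\hat\lambda_n$ recomputed at each step, the posterior mgf is computed by the trapezoid rule rather than BesselK, and the names, seed, and inputs are hypothetical. It is a sketch, not a transcription of the Mathematica code.

```python
import math
import random

def log_mgf_inv_gamma(w, shape, scale, half_width=12.0, m=4001):
    """ln E[exp(-w*theta)], theta ~ Inverse-Gamma(shape, scale), by the trapezoid rule in u = ln(theta)."""
    k = shape + 1
    center = math.log((-k + math.sqrt(k * k + 4 * w * scale)) / (2 * w))
    du = 2 * half_width / (m - 1)
    logs = [-w * math.exp(u) - shape * u - scale * math.exp(-u)
            for u in (center - half_width + i * du for i in range(m))]
    top = max(logs)
    return (shape * math.log(scale) - math.lgamma(shape)
            + top + math.log(du * sum(math.exp(v - top) for v in logs)))

def hpp_risk(x, b, w):
    """Risk (14) of the LINEX Bayes rule for 1/lambda, with a = b * MLE recomputed from x."""
    n, s = len(x), sum(x)
    a = b * n / s
    shape, scale = n + a, b + s
    return w * scale / (shape - 1) + log_mgf_inv_gamma(w, shape, scale)

def optimal_n_hpp(x, b, w, T, eps=0.05, n_start=3):
    """Sequential search for the smallest n meeting the relative-decrease criterion (15)."""
    n = n_start
    while n + 1 <= len(x):
        if sum(x[:n + 1]) > T:
            raise ValueError("T is too small for the arrivals used in the loop")
        r_now, r_next = hpp_risk(x[:n], b, w), hpp_risk(x[:n + 1], b, w)
        if (r_now - r_next) / r_now < eps:
            return n
        n += 1
    return None          # data exhausted before the criterion was met

rng = random.Random(42)
x = [rng.expovariate(0.5) for _ in range(200)]      # simulated interarrival times
print(optimal_n_hpp(x, b=10.0, w=0.5, T=1000.0))
```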

Table 7 provides simulation results for the average values of the optimal $n$ for selected parameter values and thresholds $\epsilon$ for the gamma prior case. Table 7 reveals that, for the selected parameter values, a smaller $\epsilon$ leads to a larger $n$, as expected. The optimal sample size is smaller for a larger value of the hyperparameter $b$ (which provides a more accurate Bayes estimate; see Section 2.3). Also, for any selected values of the other inputs, the three values considered for the remaining parameter yield almost the same optimal sample sizes.

4.2. The Case of NHPP

Recall that the gamma-based and non-informative-based Bayes estimators are computed numerically using (12) and (13). As in Section 4.1, the risk of the Bayes rule under LINEX loss can be written as

The expected values in (16), for both gamma and non-informative prior cases, are based on the corresponding posterior distributions (10) and (11). Therefore, the risks based on gamma and non-informative priors, respectively, can be computed via

Given a data set $\mathbf{t} = (t_1, \ldots, t_n)$ of arrival times from the NHPP with the power-law intensity, the Mathematica code (B) uses a while loop to find the optimal value of $n$. We provide the code only for the gamma prior case, as the gamma-based estimator outperforms the non-informative-based estimator in accuracy (see Table 4 and Table 5). As in Section 4.1, with $\rho_n$ denoting the risk based on the first $n$ arrival times, we are interested in the smallest value of $n$ for which

$$\frac{\rho_n - \rho_{n+1}}{\rho_n} < \epsilon. \tag{19}$$

A user should select the values of the hyperparameters $b$ and $d$, and $T$ must be large enough that the sum of the interarrival times satisfies $t_j = \sum_{i=1}^{j}x_i \le T$ for every value of $j$ in the loop while condition (19) is being checked. The computations of the risks in (19) are sequential; that is, the MLEs $\hat\alpha$ and $\hat\beta$ from Section 3.1 are updated as $t_{j+1}$ is added to the sample $t_1, \ldots, t_j$ in the while loop. As a result, the risks in (19) are updated in the loop. As mentioned in Section 3.3, to obtain a more accurate gamma-based Bayes estimate with the data-driven approach, a large value of the hyperparameter $b$ and a small value of $d$ (to ensure that $D$ is not a negative number) are selected, and then the hyperparameters $a$ and $c$ are found using the MLEs.

It is noted that the second double integral on the far right of (17) could not be evaluated with Mathematica's numerical integration routines. Therefore, for each iteration based on the sample size $j$ in the loop, we use the Monte Carlo method, simulating independent samples from the posterior distributions of $\alpha$ and $\beta$, to approximate the second integral.
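A generic version of this Monte Carlo step is sketched below, assuming independent gamma posteriors for $\alpha$ and $\beta$. The actual integrand of (17) is not reproduced here; the test function $e^{-w(\alpha+\beta)}$, the gamma parameters, and the helper name are hypothetical stand-ins, chosen because the exact answer $\left(\frac{r_1}{r_1+w}\right)^{k_1}\left(\frac{r_2}{r_2+w}\right)^{k_2}$ is available for comparison.

```python
import math
import random

def mc_expectation(f, shape1, rate1, shape2, rate2, n_draws=100_000, seed=0):
    """Approximate E[f(alpha, beta)] for independent alpha ~ Gamma(shape1, rate1), beta ~ Gamma(shape2, rate2)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_draws):
        alpha = rng.gammavariate(shape1, 1.0 / rate1)   # gammavariate expects a scale, not a rate
        beta = rng.gammavariate(shape2, 1.0 / rate2)
        total += f(alpha, beta)
    return total / n_draws

w = 0.3
approx = mc_expectation(lambda al, be: math.exp(-w * (al + be)), 4.0, 2.0, 6.0, 3.0)
exact = (2.0 / (2.0 + w)) ** 4 * (3.0 / (3.0 + w)) ** 6   # product of two gamma mgfs at -w
print(approx, exact)
```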

Table 8 provides simulation results for the average values of the optimal $n$ for selected parameter values and thresholds $\epsilon$, based on (19).

From a practical perspective, when performing data analysis, one must select a value of $w$ that matches the specific application. Several options are available, some of which are discussed in the literature but are not covered in this article.

For this article, as previously noted, negative values of $w$ make it impossible to compute Bayes estimates of the expected arrival time past a truncated time for the HPP and NHPP cases (for the HPP, the required posterior mgf $M(-w)$ does not exist when $w < 0$). We considered only selected positive values of $w$ in all tables. Due to this limitation, the proposed methods are applicable when overestimating the expected arrival time beyond a truncated time is more critical than underestimating it. We suggest selecting several candidate values $w_1, \ldots, w_k$, where the choices can be based on previous similar applications, if available. For each $w_j$, compute the risks (14) and (17) for the HPP and NHPP cases, respectively, using the informative priors (which, as shown by the simulations, are more accurate than non-informative priors), and then choose the $w_j$ that yields the smallest risk value.

5. Numerical Example

The following data represent the arrival times of 31 events generated from an NHPP. The purpose of this example is to illustrate the computational steps for assessing the goodness-of-fit of the data to an NHPP, using the MLEs of the parameters of the NHPP under the PL intensity to determine appropriate values (see Section 4.2) for the hyperparameters $a$ and $c$, and finding the optimal sample size.

t = {0.10, 0.30, 1.05, 1.40, 1.94, 4.55, 5.60, 6.39, 16.87, 18.52, 23.74, 23.92, 27.27, 33.24, 34.17, 42.67, 43.49, 43.94, 44.44, 49.19, 63.13, 71.07, 78.60, 84.15, 85.02, 87.32, 89.98, 90.86, 102.44, 106.18, 117.6}

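where $T = 200$. Ref. [15] considered the Laplace statistic (LS)

$$LS = \frac{\sum_{i=1}^{n}\Lambda(t_i)/\Lambda(T) - n/2}{\sqrt{n/12}}$$

to assess the goodness-of-fit of an NHPP for several intensity functions, including the power-law intensity, where $\Lambda(t)$ denotes the mean-value function evaluated at time $t$. The test rejects the NHPP at significance level $\gamma$ if $|LS| > z_{\gamma/2}$, where $z_{\gamma/2}$ is the upper $\gamma/2$ quantile of the standard normal distribution. Based on the above data, the MLEs $\hat\alpha$ and $\hat\beta$ of the PL parameters are computed and substituted into $\Lambda$. Note that the estimated value of the scale parameter $\alpha$ is not involved in the test statistic, since $\Lambda(t_i)/\Lambda(T) = (t_i/T)^{\beta}$. Since $|LS| < z_{0.025} \approx 1.96$, the null hypothesis that $\mathbf{t}$ comes from an NHPP with PL intensity is not rejected at the 0.05 significance level. Using selected values of $b$ and $d$ for the hyperparameters (see Section 4.2 for the selection of the hyperparameters $a$ and $c$) and of $w$, the optimal sample sizes for two values of the threshold $\epsilon$ are obtained via the Mathematica Code B.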
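The goodness-of-fit computation can be reproduced approximately with the Python sketch below. It assumes that the process is observed on $(0, T]$ with $T = 200$ (time truncation) and uses the PL form $\Lambda(t) = (t/\alpha)^\beta$, for which the MLEs are $\hat\beta = n/\sum_{i}\ln(T/t_i)$ and $\hat\alpha = T/n^{1/\hat\beta}$; if Section 3.1 uses a different estimator, the numbers will differ. `statistics.NormalDist` supplies the normal quantile.

```python
import math
from statistics import NormalDist

t = [0.10, 0.30, 1.05, 1.40, 1.94, 4.55, 5.60, 6.39, 16.87, 18.52, 23.74, 23.92,
     27.27, 33.24, 34.17, 42.67, 43.49, 43.94, 44.44, 49.19, 63.13, 71.07, 78.60,
     84.15, 85.02, 87.32, 89.98, 90.86, 102.44, 106.18, 117.6]
T = 200.0
n = len(t)

# Time-truncated MLEs for Lambda(t) = (t / alpha) ** beta (assumed parametrization).
beta_hat = n / sum(math.log(T / ti) for ti in t)
alpha_hat = T / n ** (1.0 / beta_hat)

# Laplace statistic: Lambda(t_i) / Lambda(T) = (t_i / T) ** beta, so alpha cancels.
u = [(ti / T) ** beta_hat for ti in t]
ls = (sum(u) - n / 2) / math.sqrt(n / 12)
z = NormalDist().inv_cdf(0.975)            # about 1.96 for the 0.05 level
print(f"beta_hat={beta_hat:.4f} alpha_hat={alpha_hat:.4f} LS={ls:.4f} reject={abs(ls) > z}")
```

By construction, $\hat\Lambda(T) = (T/\hat\alpha)^{\hat\beta} = n$, which is a convenient check on the MLE computation.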