Developing an Interactive VR CAVE for Immersive Shared Gaming Experiences

Abstract

1. Introduction

2. Related Work

2.1. Factors Affecting User Experience in Virtual Environments

2.2. Public VR Experiences

2.3. Motion Capture in VR

- Full-body tracking, which involves tracking the position and orientation of the user’s head, hands, feet, and other body parts to control the character’s movements within the game [51].

- Hand tracking, which involves tracking the movement of a user’s hands to control in-game actions [52]. This can include grabbing and manipulating objects, throwing projectiles, or performing hand gestures to trigger special abilities.

- Body posture and gesture recognition [53]. Some games use skeleton tracking to recognize specific body postures or gestures, such as a punch or a kick, to trigger in-game actions. This can provide a more intuitive and immersive gameplay experience, allowing the user to feel more connected to their character.

- Inertial measurement units (IMUs) [54]: IMUs are sensors that measure acceleration, rotation, and magnetic fields. They can be attached to different parts of the body, such as the hands, feet, and torso, to track movement.

- Depth-sensing cameras [55]: Cameras such as the Microsoft Kinect or Intel RealSense use depth-sensing technology to track the movement of a user’s body.

- Optical motion capture [56]: This technology uses markers placed on a person’s body and cameras to track their movement. It is commonly used in film and video game production.

Once the user’s movements have been tracked, the data can be used to animate an avatar in the virtual environment. This allows the user to see their movements reflected in the VR world, creating a more immersive experience.
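
The gesture-recognition idea described above (for example, triggering an in-game action on a punch) can be illustrated with a minimal sketch. It assumes the tracker delivers timestamped 3D positions in metres for one shoulder and one hand; the `Frame` type, the `detect_punch` function, and both thresholds are hypothetical names and values chosen for illustration, not part of any tracking SDK or of the system described in this paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Frame:
    """One tracked sample (hypothetical layout): time in seconds, positions in metres."""
    t: float
    shoulder: tuple[float, float, float]
    hand: tuple[float, float, float]


def detect_punch(frames, min_speed=2.0, min_extension=0.5):
    """Return timestamps of frames where the hand moves fast with the arm extended.

    min_speed (m/s) and min_extension (m) are illustrative thresholds that
    would need tuning per sensor and per user. A single punch spanning
    several frames is reported once per qualifying frame.
    """
    hits = []
    for prev, cur in zip(frames, frames[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            continue  # skip duplicated or out-of-order samples
        speed = math.dist(cur.hand, prev.hand) / dt
        extension = math.dist(cur.hand, cur.shoulder)
        if speed >= min_speed and extension >= min_extension:
            hits.append(cur.t)
    return hits


if __name__ == "__main__":
    shoulder = (0.0, 0.0, 0.0)
    frames = [
        Frame(0.0, shoulder, (0.0, 0.0, 0.2)),
        Frame(0.1, shoulder, (0.0, 0.0, 0.3)),   # slow movement
        Frame(0.2, shoulder, (0.0, 0.0, 0.6)),   # fast, arm extended
        Frame(0.3, shoulder, (0.0, 0.0, 0.62)),  # almost still
    ]
    print(detect_punch(frames))
```

A threshold rule like this is only the simplest option; the cited surveys cover more robust recognition techniques.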

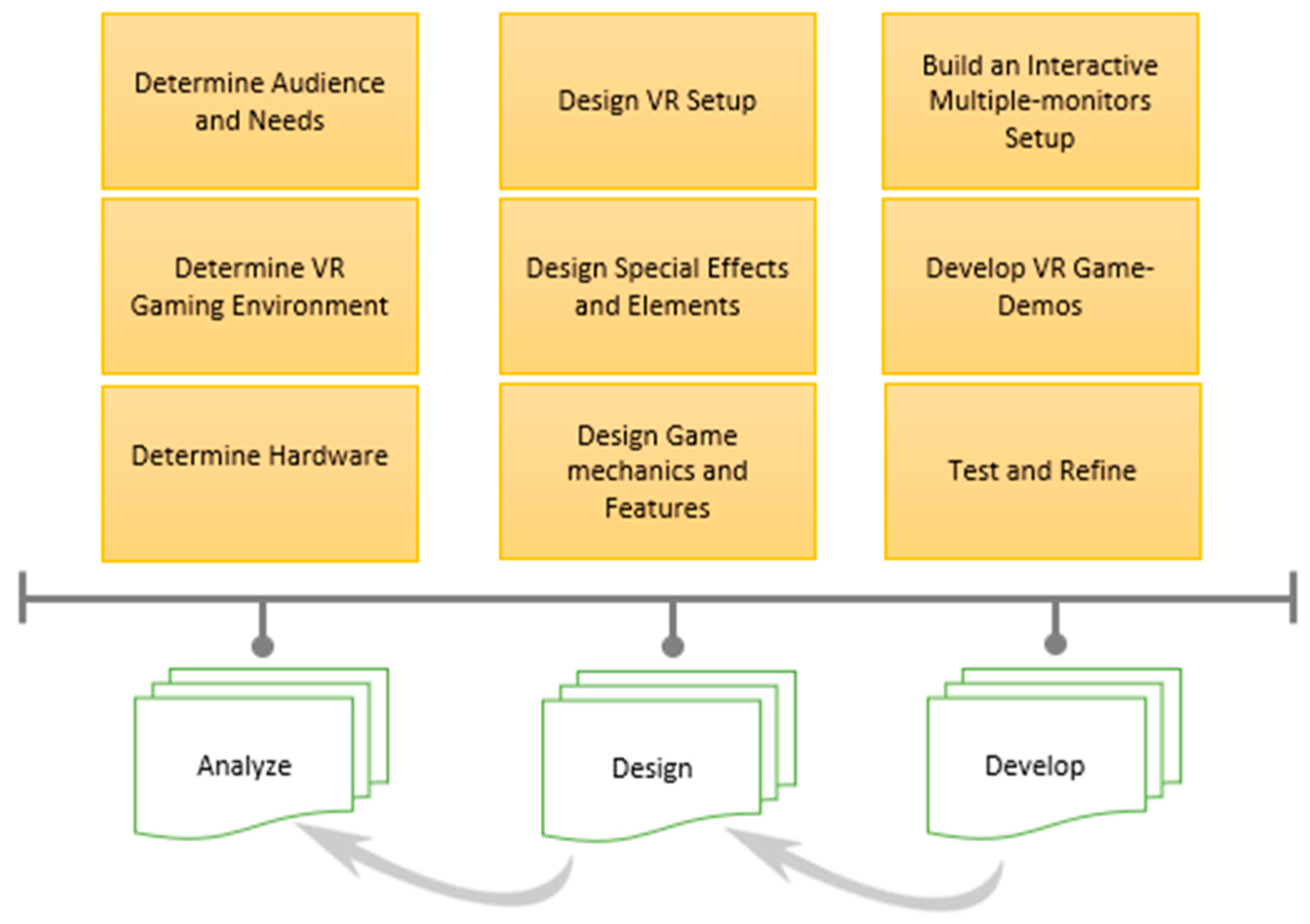

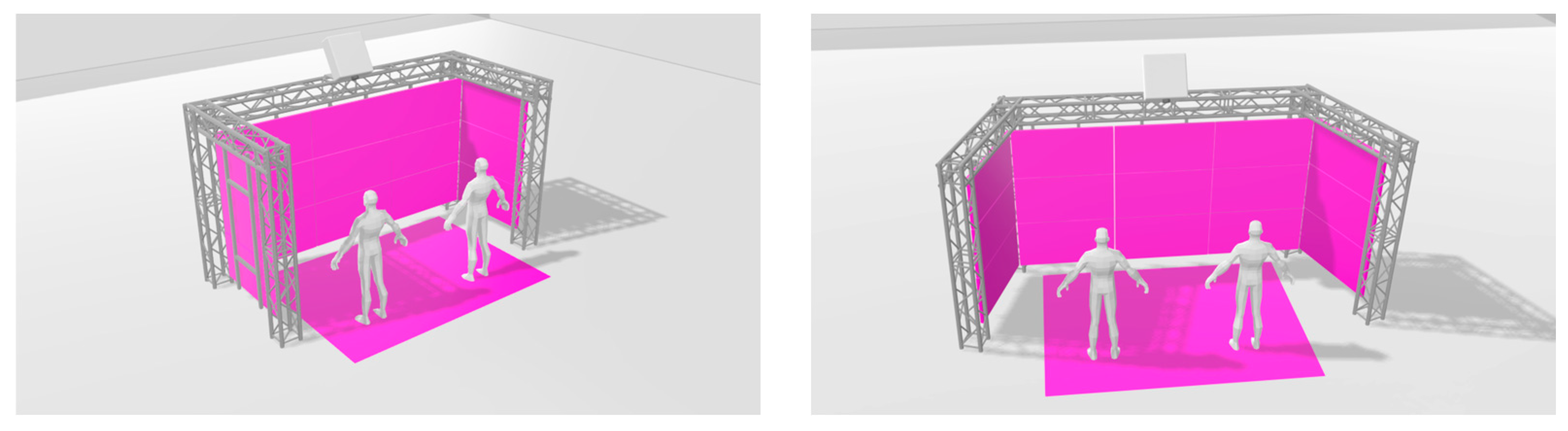

3. Implementing MobiCave

- Scalability, so it can fit a room-sized space.

- Usability, in normal room conditions, e.g., lighting.

- Multiplayer, to allow collaboration.

- Sharable, so that bystanders can view the scene fully and in this way be part of the experience.

- Interconnected, easily transferable setup and rapid installation/de-installation for field and traveling exhibit use.

- Easy access for maintenance, to reduce expenses.

- Power-efficient, to reduce cost and cooling, with a low thermal signature to minimize the need for ventilation.

- Low noise signature, so that the users and the bystanders can talk, and generated audio can be heard.

- Safety, in terms of construction and electricity, to prevent accidents, injuries, or damage to equipment.

4. User Study

4.1. Participants

4.2. Procedure

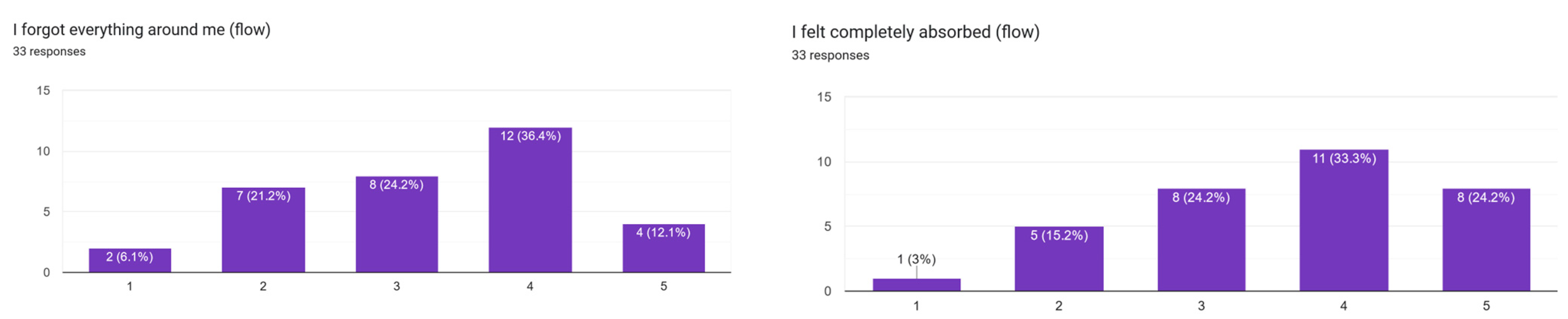

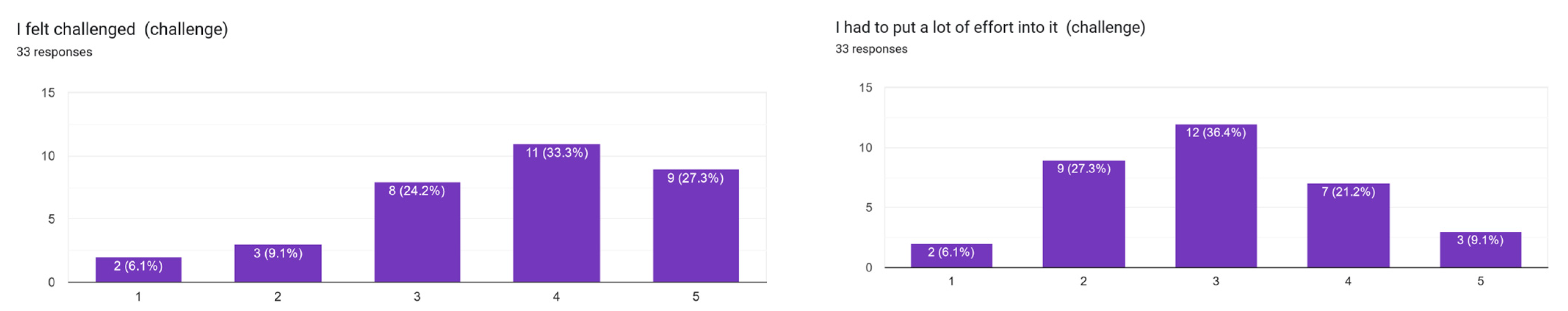

4.3. Metrics

4.4. Hypotheses—Analysis

4.5. Results

5. Discussion—Challenges and Opportunities

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ishii, A.; Tsuruta, M.; Suzuki, I.; Nakamae, S.; Suzuki, J.; Ochiai, Y. Let your world open: Cave-based visualization methods of public virtual reality towards a shareable vr experience. In Proceedings of the 10th Augmented Human International Conference, Reims, France, 11–12 March 2019; pp. 1–8.

- Mäkelä, V.; Radiah, R.; Alsherif, S.; Khamis, M.; Xiao, C.; Borchert, L.; Schmidt, A.; Alt, F. Virtual field studies: Conducting studies on public displays in virtual reality. In Proceedings of the 2020 Chi Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–15.

- Scavarelli, A.; Arya, A.; Teather, R.J. Virtual reality and augmented reality in social learning spaces: A literature review. Virtual Real. 2021, 25, 257–277.

- Theodoropoulos, A.; Roinioti, E.; Dejonai, M.; Aggelakos, Y.; Lepouras, G. Turtle Heroes: Designing a Serious Game for a VR Interactive Tunnel. In Proceedings of the Games and Learning Alliance: 11th International Conference, GALA 2022, Tampere, Finland, 30 November–2 December 2022; pp. 3–10.

- Muhanna, M.A. Virtual reality and the CAVE: Taxonomy, interaction challenges and research directions. J. King Saud Univ-Comput. Inf. Sci. 2015, 27, 344–361.

- Batista, A.F.; Thiry, M.; Gonçalves, R.Q.; Fernandes, A. Using technologies as virtual environments for computer teaching: A systematic review. Inform. Educ. 2020, 19, 201.

- Liszio, S.; Emmerich, K.; Masuch, M. The influence of social entities in virtual reality games on player experience and immersion. In Proceedings of the 12th International Conference on the Foundations of Digital Games, Cape Cod, MA, USA, 14–17 August 2017; pp. 1–10.

- Kim, J.-H.; Kim, M.; Park, M.; Yoo, J. How interactivity and vividness influence consumer virtual reality shopping experience: The mediating role of telepresence. J. Res. Interact. Mark. 2021, 15, 502–525.

- Weech, S.; Kenny, S.; Barnett-Cowan, M. Presence and cybersickness in virtual reality are negatively related: A review. Front. Psychol. 2019, 10, 158.

- Deleuze, J.; Maurage, P.; Schimmenti, A.; Nuyens, F.; Melzer, A.; Billieux, J. Escaping reality through videogames is linked to an implicit preference for virtual over real-life stimuli. J. Affect. Disord. 2019, 245, 1024–1031.

- Menin, A.; Torchelsen, R.; Nedel, L. An analysis of VR technology used in immersive simulations with a serious game perspective. IEEE Comput. Graph. Appl. 2018, 38, 57–73.

- Evans, L.; Rzeszewski, M. Hermeneutic relations in VR: Immersion, embodiment, presence and HCI in VR gaming. In Proceedings of the HCI in Games: Second International Conference, HCI-Games 2020, Held as Part of the 22nd HCI International Conference, HCII 2020, Copenhagen, Denmark, 19–24 July 2020; pp. 23–38.

- Feng, Y.; Duives, D.C.; Hoogendoorn, S.P. Wayfinding behaviour in a multi-level building: A comparative study of HMD VR and Desktop VR. Adv. Eng. Inform. 2022, 51, 101475.

- Azarby, S.; Rice, A. Scale Estimation for Design Decisions in Virtual Environments: Understanding the Impact of User Characteristics on Spatial Perception in Immersive Virtual Reality Systems. Buildings 2022, 12, 1461.

- Steuer, J.; Biocca, F.; Levy, M.R. Defining virtual reality: Dimensions determining telepresence. Commun. Age Virtual Real. 1995, 33, 37–39.

- Todd, C.; Mallya, S.; Majeed, S.; Rojas, J.; Naylor, K. VirtuNav: A Virtual Reality indoor navigation simulator with haptic and audio feedback for the visually impaired. In Proceedings of the 2014 IEEE Symposium on Computational Intelligence in Robotic Rehabilitation and Assistive Technologies (CIR2AT), Orlando, FL, USA, 9–12 December 2014; pp. 1–8.

- Vilar, E.; Rebelo, F.; Noriega, P. Indoor human wayfinding performance using vertical and horizontal signage in virtual reality. Hum. Factors Ergon. Manuf. Serv. Ind. 2014, 24, 601–615.

- Zhang, Y.; Liu, H.; Kang, S.-C.; Al-Hussein, M. Virtual reality applications for the built environment: Research trends and opportunities. Autom. Constr. 2020, 118, 103311.

- Leyrer, M.; Linkenauger, S.A.; Bülthoff, H.H.; Mohler, B.J. The importance of postural cues for determining eye height in immersive virtual reality. PLoS ONE 2015, 10, e0127000.

- Taub, M.; Sawyer, R.; Smith, A.; Rowe, J.; Azevedo, R.; Lester, J. The agency effect: The impact of student agency on learning, emotions, and problem-solving behaviors in a game-based learning environment. Comput. Educ. 2020, 147, 103781.

- Dobre, G.C.; Gillies, M.; Pan, X. Immersive machine learning for social attitude detection in virtual reality narrative games. Virtual Real. 2022, 26, 1519–1538.

- Allman, S.A.; Cordy, J.; Hall, J.P.; Kleanthous, V.; Lander, E.R. Exploring the perception of additional information content in 360 3D VR video for teaching and learning. Virtual Worlds 2022, 1, 1–17.

- Miller, H.L.; Bugnariu, N.L. Level of immersion in virtual environments impacts the ability to assess and teach social skills in autism spectrum disorder. Cyberpsychol. Behav. Soc. Netw. 2016, 19, 246–256.

- Pollard, K.A.; Oiknine, A.H.; Files, B.T.; Sinatra, A.M.; Patton, D.; Ericson, M.; Thomas, J.; Khooshabeh, P. Level of immersion affects spatial learning in virtual environments: Results of a three-condition within-subjects study with long intersession intervals. Virtual Real. 2020, 24, 783–796.

- Jacho, L.; Sobota, B.; Korečko, Š.; Hrozek, F. Semi-immersive virtual reality system with support for educational and pedagogical activities. In Proceedings of the 2014 IEEE 12th IEEE International Conference on Emerging eLearning Technologies and Applications (ICETA), Stary Smokovec, Slovakia, 4–5 December 2014; pp. 199–204.

- Steinicke, F.; Bruder, G. A self-experimentation report about long-term use of fully-immersive technology. In Proceedings of the 2nd ACM symposium on Spatial User Interaction, Honolulu, HI, USA, 4–5 October 2014; pp. 66–69.

- Lorenz, M.; Brade, J.; Diamond, L.; Sjölie, D.; Busch, M.; Tscheligi, M.; Klimant, P.; Heyde, C.-E.; Hammer, N. Presence and user experience in a virtual environment under the influence of ethanol: An explorative study. Sci. Rep. 2018, 8, 6407.

- Regenbrecht, H.; Schubert, T. Real and illusory interactions enhance presence in virtual environments. Presence Teleoper. Virtual Environ. 2002, 11, 425–434.

- Witmer, B.G.; Singer, M.J. Measuring presence in virtual environments: A presence questionnaire. Presence 1998, 7, 225–240.

- Lok, B.; Naik, S.; Whitton, M.; Brooks, F.P. Effects of handling real objects and self-avatar fidelity on cognitive task performance and sense of presence in virtual environments. Presence 2003, 12, 615–628.

- Azarby, S.; Rice, A. Spatial Perception Imperatives in Virtual Environments: Understanding the Impacts of View Usage Patterns on Spatial Design Decisions in Virtual Reality Systems. Buildings 2023, 13, 160.

- Valvoda, J.T.; Kuhlen, T.; Bischof, C.H. Influence of exocentric avatars on the sensation of presence in room-mounted virtual environments. In Proceedings of the PRESENCE 2007: Proceedings of the 10th Annual International Workshop on Presence, Barcelona, Spain, 25–27 October 2007; pp. 59–69.

- Bangay, S.; Preston, L. An investigation into factors influencing immersion in interactive virtual reality environments. Stud. Health Technol. Inform. 1998, 58, 43–51.

- Lorenz, M.; Brade, J.; Klimant, P.; Heyde, C.-E.; Hammer, N. Age and gender effects on presence, user experience and usability in virtual environments–first insights. PLoS ONE 2023, 18, e0283565.

- Dilanchian, A.T.; Andringa, R.; Boot, W.R. A pilot study exploring age differences in presence, workload, and cybersickness in the experience of immersive virtual reality environments. Front. Virtual Real. 2021, 2, 736793.

- Bürger, D.; Pastel, S.; Chen, C.-H.; Petri, K.; Schmitz, M.; Wischerath, L.; Witte, K. Suitability test of virtual reality applications for older people considering the spatial orientation ability. Virtual Real. 2023, in press.

- Cruz-Neira, C.; Sandin, D.J.; DeFanti, T.A.; Kenyon, R.V.; Hart, J.C. The CAVE: Audio visual experience automatic virtual environment. Commun. ACM 1992, 35, 64–73.

- DeFanti, T.; Acevedo, D.; Ainsworth, R.; Brown, M.; Cutchin, S.; Dawe, G.; Doerr, K.-U.; Johnson, A.; Knox, C.; Kooima, R. The future of the CAVE. Open Eng. 2011, 1, 16–37.

- DeFanti, T.A.; Dawe, G.; Sandin, D.J.; Schulze, J.P.; Otto, P.; Girado, J.; Kuester, F.; Smarr, L.; Rao, R. The StarCAVE, a third-generation CAVE and virtual reality OptIPortal. Future Gener. Comput. Syst. 2009, 25, 169–178.

- Febretti, A.; Nishimoto, A.; Thigpen, T.; Talandis, J.; Long, L.; Pirtle, J.; Peterka, T.; Verlo, A.; Brown, M.; Plepys, D. CAVE2: A hybrid reality environment for immersive simulation and information analysis. In Proceedings of the Engineering Reality of Virtual Reality, Burlingame, CA, USA, 4–5 February 2013; Volume 8649, pp. 9–20.

- Gonçalves, A.; Bermúdez, S. KAVE: Building Kinect based CAVE automatic virtual environments, methods for surround-screen projection management, motion parallax and full-body interaction support. Proc. ACM Hum-Comput. Interact. 2018, 2, 1–15.

- Eghbali, P.; Väänänen, K.; Jokela, T. Social acceptability of virtual reality in public spaces: Experiential factors and design recommendations. In Proceedings of the 18th International Conference on Mobile and Ubiquitous Multimedia, Pisa, Italy, 26–29 November 2019; pp. 1–11.

- Marrinan, T.; Nishimoto, A.; Insley, J.A.; Rizzi, S.; Johnson, A.; Papka, M.E. Interactive multi-modal display spaces for visual analysis. In Proceedings of the 2016 ACM International Conference on Interactive Surfaces and Spaces, Niagara Falls, ON, Canada, 6–9 November 2016; pp. 421–426.

- De Back, T.T.; Tinga, A.M.; Nguyen, P.; Louwerse, M.M. Benefits of immersive collaborative learning in CAVE-based virtual reality. Int. J. Educ. Technol. High. Educ. 2020, 17, 51.

- Lee, B.; Hu, X.; Cordeil, M.; Prouzeau, A.; Jenny, B.; Dwyer, T. Shared surfaces and spaces: Collaborative data visualisation in a co-located immersive environment. IEEE Trans. Vis. Comput. Graph. 2020, 27, 1171–1181.

- Van Leeuwen, J.; Hermans, K.; Quanjer, A.J.; Jylhä, A.; Nijman, H. Using virtual reality to increase civic participation in designing public spaces. In Proceedings of the 16th International Conference on World Wide Web (ECDG’18), Lyon, France, 16 April 2018; pp. 230–239.

- Gonçalves, A.; Borrego, A.; Latorre, J.; Llorens, R.; i Badia, S.B. Evaluation of a low-cost virtual reality surround-screen projection system. IEEE Trans. Vis. Comput. Graph. 2021, 28, 4452–4461.

- Kalantari, S.; Neo, J.R.J. Virtual environments for design research: Lessons learned from use of fully immersive virtual reality in interior design research. J. Inter. Des. 2020, 45, 27–42.

- Hagler, J.; Lankes, M.; Aschauer, A. The virtual house of medusa: Guiding museum visitors through a co-located mixed reality installation. In Proceedings of the Serious Games: 4th Joint International Conference, JCSG 2018, Darmstadt, Germany, 7–8 November 2018; pp. 182–188.

- Gall, J.; Stoll, C.; De Aguiar, E.; Theobalt, C.; Rosenhahn, B.; Seidel, H.-P. Motion capture using joint skeleton tracking and surface estimation. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1746–1753.

- Sra, M.; Schmandt, C. Metaspace: Full-body tracking for immersive multiperson virtual reality. In Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, Charlotte, NC, USA, 11–15 November 2015; pp. 47–48.

- Cameron, C.R.; DiValentin, L.W.; Manaktala, R.; McElhaney, A.C.; Nostrand, C.H.; Quinlan, O.J.; Sharpe, L.N.; Slagle, A.C.; Wood, C.D.; Zheng, Y.Y. Hand tracking and visualization in a virtual reality simulation. In Proceedings of the 2011 IEEE Systems and Information Engineering Design Symposium, Charlottesville, VA, USA, 29 April 2011; pp. 127–132.

- Sagayam, K.M.; Hemanth, D.J. Hand posture and gesture recognition techniques for virtual reality applications: A survey. Virtual Real. 2017, 21, 91–107.

- Jambrošic, K.; Krhen, M.; Horvat, M.; Oberman, T. The use of inertial measurement units in virtual reality systems for auralization applications. In Proceedings of the International Congress on Acoustics, Aachen, Germany, 9–13 September 2019; pp. 2611–2618.

- Alaee, G.; Deasi, A.P.; Pena-Castillo, L.; Brown, E.; Meruvia-Pastor, O. A user study on augmented virtuality using depth sensing cameras for near-range awareness in immersive VR. In Proceedings of the IEEE VR’s 4th Workshop on Everyday Virtual Reality (WEVR 2018), Reutlingen, Germany, 18–22 March 2018; Volume 10, p. 3.

- Welch, G.F. History: The use of the kalman filter for human motion tracking in virtual reality. Presence 2009, 18, 72–91.

- Vincze, A.; Jurchiș, R. Quiet Eye as a mechanism for table tennis performance under fatigue and complexity. J. Mot. Behav. 2022, 54, 657–668.

- Games, B. Beat Saber; Game Oculus VR Beat Games: Prague, Czech Republic, 2019.

- Moeslund, T.B.; Granum, E. A survey of computer vision-based human motion capture. Comput. Vis. Image Underst. 2001, 81, 231–268.

- Beddiar, D.R.; Nini, B.; Sabokrou, M.; Hadid, A. Vision-based human activity recognition: A survey. Multimed. Tools Appl. 2020, 79, 30509–30555.

- Caserman, P.; Garcia-Agundez, A.; Konrad, R.; Göbel, S.; Steinmetz, R. Real-time body tracking in virtual reality using a Vive tracker. Virtual Real. 2019, 23, 155–168.

- Franzò, M.; Pica, A.; Pascucci, S.; Marinozzi, F.; Bini, F. Hybrid System Mixed Reality and Marker-Less Motion Tracking for Sports Rehabilitation of Martial Arts Athletes. Appl. Sci. 2023, 13, 2587.

- Zone, R. Deep image: 3D in art and science. In Proceedings of the Stereoscopic Displays and Virtual Reality Systems III, San Jose, CA, USA, 30 January–2 February 1996; Volume 2653, pp. 4–8.

- Boletsis, C. A user experience questionnaire for VR locomotion: Formulation and preliminary evaluation. In Proceedings of the Augmented Reality, Virtual Reality, and Computer Graphics: 7th International Conference, AVR 2020, Lecce, Italy, 7–10 September 2020; pp. 157–167.

- Calvillo-Gámez, E.H.; Cairns, P.; Cox, A.L. Assessing the core elements of the gaming experience. In Evaluating User Experience in Games; Human-Computer Interaction Series; Springer: London, UK, 2015; pp. 37–62.

- Abeele, V.V.; Spiel, K.; Nacke, L.; Johnson, D.; Gerling, K. Development and validation of the player experience inventory: A scale to measure player experiences at the level of functional and psychosocial consequences. Int. J. Hum-Comput. Stud. 2020, 135, 102370.

- Baños, R.M.; Botella, C.; Garcia-Palacios, A.; Villa, H.; Perpiñá, C.; Alcaniz, M. Presence and reality judgment in virtual environments: A unitary construct? Cyberpsychol. Behav. 2000, 3, 327–335.

- Sweetser, P.; Wyeth, P. GameFlow: A model for evaluating player enjoyment in games. Comput. Entertain. CIE 2005, 3, 3.

- Brockmyer, J.H.; Fox, C.M.; Curtiss, K.A.; McBroom, E.; Burkhart, K.M.; Pidruzny, J.N. The development of the Game Engagement Questionnaire: A measure of engagement in video game-playing. J. Exp. Soc. Psychol. 2009, 45, 624–634.

- Kennedy, R.S.; Lane, N.E.; Berbaum, K.S.; Lilienthal, M.G. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 1993, 3, 203–220.

- Phan, M.H.; Keebler, J.R.; Chaparro, B.S. The development and validation of the game user experience satisfaction scale (GUESS). Hum. Factors 2016, 58, 1217–1247.

- Rigby, J.M.; Brumby, D.P.; Gould, S.J.; Cox, A.L. Development of a questionnaire to measure immersion in video media: The Film IEQ. In Proceedings of the 2019 ACM International Conference on Interactive Experiences for TV and Online Video, Salford, UK, 5–7 June 2019; pp. 35–46.

- Keller, J.M. Development of Two Measures of Learner Motivation; Florida State University: Tallahassee, FL, USA, 2006.

- Holly, M.; Pirker, J.; Resch, S.; Brettschuh, S.; Gütl, C. Designing VR experiences–expectations for teaching and learning in VR. Educ. Technol. Soc. 2021, 24, 107–119.

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Applying the user experience questionnaire (UEQ) in different evaluation scenarios. In Proceedings of the Design, User Experience, and Usability. Theories, Methods, and Tools for Designing the User Experience: Third International Conference, DUXU 2014, Held as Part of HCI International 2014, Heraklion, Crete, Greece, 22–27 June 2014; pp. 383–392.

| VR System | Screen Resolution | Number of Projectors/Panels | Field of View | Users |
|---|---|---|---|---|
| Immersive HMD (e.g., Oculus Quest 2) | 1832 × 1920 per eye | N/A | ~110° | 1 |
| CAVE2 | 4096 × 3072 per screen (×72 screens) | 72 LCD panels | 320° horizontal, 135° vertical | Multiple |
| CAVE1 | 1280 × 1024 per screen (×3 screens) | 3 projectors | 180° horizontal, 90° vertical | Multiple |
| Powerwall | 4096 × 2160 | 1–4 | 180° horizontal, 60° vertical | Multiple |
| Tiled display wall | 1920 × 1080 per tile (×multiple tiles) | Multiple | Dependent on configuration | Multiple |

| Construct | Item Description in the Survey | Factor | Source |
|---|---|---|---|
| Immersion–Presence–Flow | I was interested in the task | IMM1 | [64,65] |
| | I found it impressive | IMM2 | [64,65] |
| | Do you think this setup is immersive? | IMM3 | [66] |
| | I felt like being there, into the scene | PRE | [67,68] |
| | I forgot everything around me | FLO1 | [66,67,69] |
| | I felt completely absorbed | FLO2 | [66,67,69] |
| Perceived Usability | I thought the VR navigation technique was easy to use | PER_USA1 | [64,65] |
| | I found the VR navigation technique unnecessarily complex | PER_USA2 | [64,65] |
| | I think that I would like to use this VR navigation technique frequently | PER_USA3 | [64] |
| | I think that I would need the support of a technical person to be able to use this VR navigation technique | PER_USA4 | [64] |
| | I felt very confident using the VR navigation technique | PER_USA5 | [64] |
| | - | PER_USA6 | [64] |
| Comfort – Positive and Negative aspects | I felt challenged (Positive Challenge) | CHA1 | [64,66,68] |
| | I had to put a lot of effort into it (Negative Challenge) | CHA2 | [64,65] |
| | I felt good (Positive Effect) | POS1 | [64,65,69] |
| | I felt skillful (Positive Effect, Competence) | POS2 | [64,65,68] |
| | I felt bored (Negative Effect) | NEG1 | [64,65,69] |
| | I found it tiresome (Negative Effect) | NEG2 | [64,65,69] |
| | Did you experience any simulation sickness? | SIC | [70] |
| Possible Usage | Do you think this setup can be good for playing games? (Enjoyment) | ENJ | [71,72] |
| | Do you think this setup can have learning impact e.g., for students visiting the university? (Learning) | LEA | [73,74] |
| | Do you think this setup can be good for the bystanders to be part of the experience? (Motivation-Engagement) | MOT | [71,75] |

| Construct | Item | Mean | SD |
|---|---|---|---|
| Immersion–Presence–Flow | IMM1 | 4.36 | 0.783 |
| | IMM2 | 4.39 | 0.747 |
| | IMM3 | 4.21 | 0.600 |
| | PRE | 3.70 | 0.984 |
| | FLO1 | 3.27 | 1.126 |
| | FLO2 | 3.61 | 1.116 |
| Perceived Usability | PER_USA1 | 3.48 | 1.093 |
| | PER_USA2 | 1.97 | 1.075 |
| | PER_USA3 | 3.82 | 0.808 |
| | PER_USA4 | 2.15 | 1.253 |
| | PER_USA5 | 3.70 | 1.045 |
| | PER_USA6 | 4.03 | 0.984 |
| Comfort – Positive and Negative aspects | CHA1 | 3.67 | 1.164 |
| | CHA2 | 3.00 | 1.061 |
| | POS1 | 4.61 | 0.556 |
| | POS2 | 3.79 | 1.193 |
| | NEG1 | 1.21 | 0.485 |
| | NEG2 | 2.00 | 1.173 |
| | SIC | 1.45 | 1.003 |
| Possible Usage | ENJ | 4.61 | 0.556 |
| | LEA | 4.52 | 0.834 |
| | MOT | 4.15 | 0.939 |
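
Per-item means and standard deviations of this kind can be computed with Python's standard library. The sketch below uses made-up responses on an assumed 5-point scale (the reported means suggest such a scale, but the raw data are not part of this section), and it assumes the sample standard deviation; `responses` and `describe` are hypothetical names for illustration.

```python
from statistics import mean, stdev

# Hypothetical responses (1-5) from five participants; NOT the study data.
responses = {
    "IMM1": [5, 4, 4, 5, 3],
    "NEG1": [1, 1, 2, 1, 1],
}


def describe(scores):
    """Return (mean, sample SD), rounded in the style of the table above."""
    return round(mean(scores), 2), round(stdev(scores), 3)


if __name__ == "__main__":
    for item, scores in responses.items():
        m, sd = describe(scores)
        print(f"{item}: mean={m:.2f}, SD={sd:.3f}")
```

If the population standard deviation were intended instead, `statistics.pstdev` would replace `stdev`.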

| | | IMM1 | IMM2 | IMM3 | PRE | FLO1 | FLO2 |
|---|---|---|---|---|---|---|---|
| IMM1 | Correlation Coefficient | 1.000 | 0.337 | 0.563 ** | 0.533 ** | 0.215 | 0.131 |
| | Sig. (2-tailed) | . | 0.055 | <0.001 | 0.001 | 0.230 | 0.467 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |
| IMM2 | Correlation Coefficient | 0.337 | 1.000 | 0.472 ** | 0.260 | 0.220 | 0.518 ** |
| | Sig. (2-tailed) | 0.055 | . | 0.006 | 0.144 | 0.219 | 0.002 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |
| IMM3 | Correlation Coefficient | 0.563 ** | 0.472 ** | 1.000 | 0.338 | 0.363 * | 0.418 * |
| | Sig. (2-tailed) | <0.001 | 0.006 | . | 0.055 | 0.038 | 0.015 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |
| PRE | Correlation Coefficient | 0.533 ** | 0.260 | 0.338 | 1.000 | 0.278 | 0.265 |
| | Sig. (2-tailed) | 0.001 | 0.144 | 0.055 | . | 0.117 | 0.136 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |
| FLO1 | Correlation Coefficient | 0.215 | 0.220 | 0.363 * | 0.278 | 1.000 | 0.751 ** |
| | Sig. (2-tailed) | 0.230 | 0.219 | 0.038 | 0.117 | . | <0.001 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |
| FLO2 | Correlation Coefficient | 0.131 | 0.518 ** | 0.418 * | 0.265 | 0.751 ** | 1.000 |
| | Sig. (2-tailed) | 0.467 | 0.002 | 0.015 | 0.136 | <0.001 | . |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |
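
A correlation matrix like the one above can be reproduced from raw item scores. The sketch below assumes a rank-based coefficient (Spearman's rho), which is a common choice for ordinal questionnaire items; this section does not state which coefficient was used, so that choice is an assumption. The function names are hypothetical and the code uses only the standard library.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    # Hypothetical scores for two items; NOT the study data.
    item_a = [5, 4, 4, 5, 3, 4]
    item_b = [4, 4, 3, 5, 2, 5]
    print(round(spearman(item_a, item_b), 3))
```

Average ranks matter here because Likert-type items produce many ties.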

| | | PER_USA1 | PER_USA2 | PER_USA3 | PER_USA4 | PER_USA5 | PER_USA6 |
|---|---|---|---|---|---|---|---|
| PER_USA1 | Correlation Coefficient | 1.000 | 0.210 | 0.381 * | 0.089 | 0.219 | 0.487 ** |
| | Sig. (2-tailed) | . | 0.240 | 0.029 | 0.621 | 0.222 | 0.004 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |
| PER_USA2 | Correlation Coefficient | 0.210 | 1.000 | 0.130 | 0.204 | −0.034 | −0.108 |
| | Sig. (2-tailed) | 0.240 | . | 0.469 | 0.254 | 0.852 | 0.551 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |
| PER_USA3 | Correlation Coefficient | 0.381 * | 0.130 | 1.000 | 0.172 | 0.208 | 0.207 |
| | Sig. (2-tailed) | 0.029 | 0.469 | . | 0.339 | 0.246 | 0.247 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |
| PER_USA4 | Correlation Coefficient | 0.089 | 0.204 | 0.172 | 1.000 | −0.209 | −0.305 |
| | Sig. (2-tailed) | 0.621 | 0.254 | 0.339 | . | 0.243 | 0.085 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |
| PER_USA5 | Correlation Coefficient | 0.219 | −0.034 | 0.208 | −0.209 | 1.000 | 0.462 ** |
| | Sig. (2-tailed) | 0.222 | 0.852 | 0.246 | 0.243 | . | 0.007 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |
| PER_USA6 | Correlation Coefficient | 0.487 ** | −0.108 | 0.207 | −0.305 | 0.462 ** | 1.000 |
| | Sig. (2-tailed) | 0.004 | 0.551 | 0.247 | 0.085 | 0.007 | . |
| | N | 33 | 33 | 33 | 33 | 33 | 33 |

| | | CHA1 | CHA2 | POS1 | POS2 | NEG1 | NEG2 | SIC |
|---|---|---|---|---|---|---|---|---|
| CHA1 | Correlation Coefficient | 1.000 | 0.324 | 0.218 | 0.355 * | 0.065 | −0.111 | 0.080 |
| | Sig. (2-tailed) | . | 0.066 | 0.223 | 0.043 | 0.718 | 0.539 | 0.657 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 | 33 |
| CHA2 | Correlation Coefficient | 0.324 | 1.000 | −0.054 | −0.049 | 0.439 * | 0.110 | 0.288 |
| | Sig. (2-tailed) | 0.066 | . | 0.766 | 0.786 | 0.011 | 0.541 | 0.104 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 | 33 |
| POS1 | Correlation Coefficient | 0.218 | −0.054 | 1.000 | 0.558 ** | −0.160 | −0.507 ** | 0.022 |
| | Sig. (2-tailed) | 0.223 | 0.766 | . | <0.001 | 0.373 | 0.003 | 0.902 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 | 33 |
| POS2 | Correlation Coefficient | 0.355 * | −0.049 | 0.558 ** | 1.000 | −0.326 | −0.095 | 0.008 |
| | Sig. (2-tailed) | 0.043 | 0.786 | <0.001 | . | 0.064 | 0.599 | 0.966 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 | 33 |
| NEG1 | Correlation Coefficient | 0.065 | 0.439 * | −0.160 | −0.326 | 1.000 | 0.331 | −0.024 |
| | Sig. (2-tailed) | 0.718 | 0.011 | 0.373 | 0.064 | . | 0.060 | 0.894 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 | 33 |
| NEG2 | Correlation Coefficient | −0.111 | 0.110 | −0.507 ** | −0.095 | 0.331 | 1.000 | 0.019 |
| | Sig. (2-tailed) | 0.539 | 0.541 | 0.003 | 0.599 | 0.060 | . | 0.917 |
| | N | 33 | 33 | 33 | 33 | 33 | 33 | 33 |
| SIC | Correlation Coefficient | 0.080 | 0.288 | 0.022 | 0.008 | −0.024 | 0.019 | 1.000 |
| | Sig. (2-tailed) | 0.657 | 0.104 | 0.902 | 0.966 | 0.894 | 0.917 | . |
| | N | 33 | 33 | 33 | 33 | 33 | 33 | 33 |

| | | ENJ | LEA | MOT |
|---|---|---|---|---|
| ENJ | Correlation Coefficient | 1.000 | 0.257 | 0.305 |
| | Sig. (2-tailed) | . | 0.148 | 0.084 |
| | N | 33 | 33 | 33 |
| LEA | Correlation Coefficient | 0.257 | 1.000 | 0.369 * |
| | Sig. (2-tailed) | 0.148 | . | 0.035 |
| | N | 33 | 33 | 33 |
| MOT | Correlation Coefficient | 0.305 | 0.369 * | 1.000 |
| | Sig. (2-tailed) | 0.084 | 0.035 | . |
| | N | 33 | 33 | 33 |
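
The significance flags in these correlation tables can be sanity-checked by hand. The worked example below assumes the common t-approximation for testing a correlation coefficient and the usual convention that a single asterisk marks p < 0.05; neither is stated in this section. It uses the LEA–MOT coefficient (r = 0.369, N = 33):

```latex
t = r\sqrt{\frac{N-2}{1-r^{2}}},
\qquad
t_{\mathrm{LEA,MOT}} = 0.369\sqrt{\frac{33-2}{1-0.369^{2}}}
\approx 0.369 \times 5.99 \approx 2.21
```

With N − 2 = 31 degrees of freedom, the two-tailed critical value at the 0.05 level is about 2.04, so t ≈ 2.21 is consistent with the single asterisk and the reported significance of 0.035.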

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Theodoropoulos, A.; Stavropoulou, D.; Papadopoulos, P.; Platis, N.; Lepouras, G. Developing an Interactive VR CAVE for Immersive Shared Gaming Experiences. Virtual Worlds 2023, 2, 162-181. https://doi.org/10.3390/virtualworlds2020010
