Efficient Representations of Spatially Variant Point Spread Functions with Butterfly Transforms in Bayesian Imaging Algorithms

Abstract

1. Introduction

2. Methods

2.1. Fast Fourier Transformation

2.2. Butterfly Transform and Convolution

3. Information Field Theory

4. Parallel and Serial Likelihoods

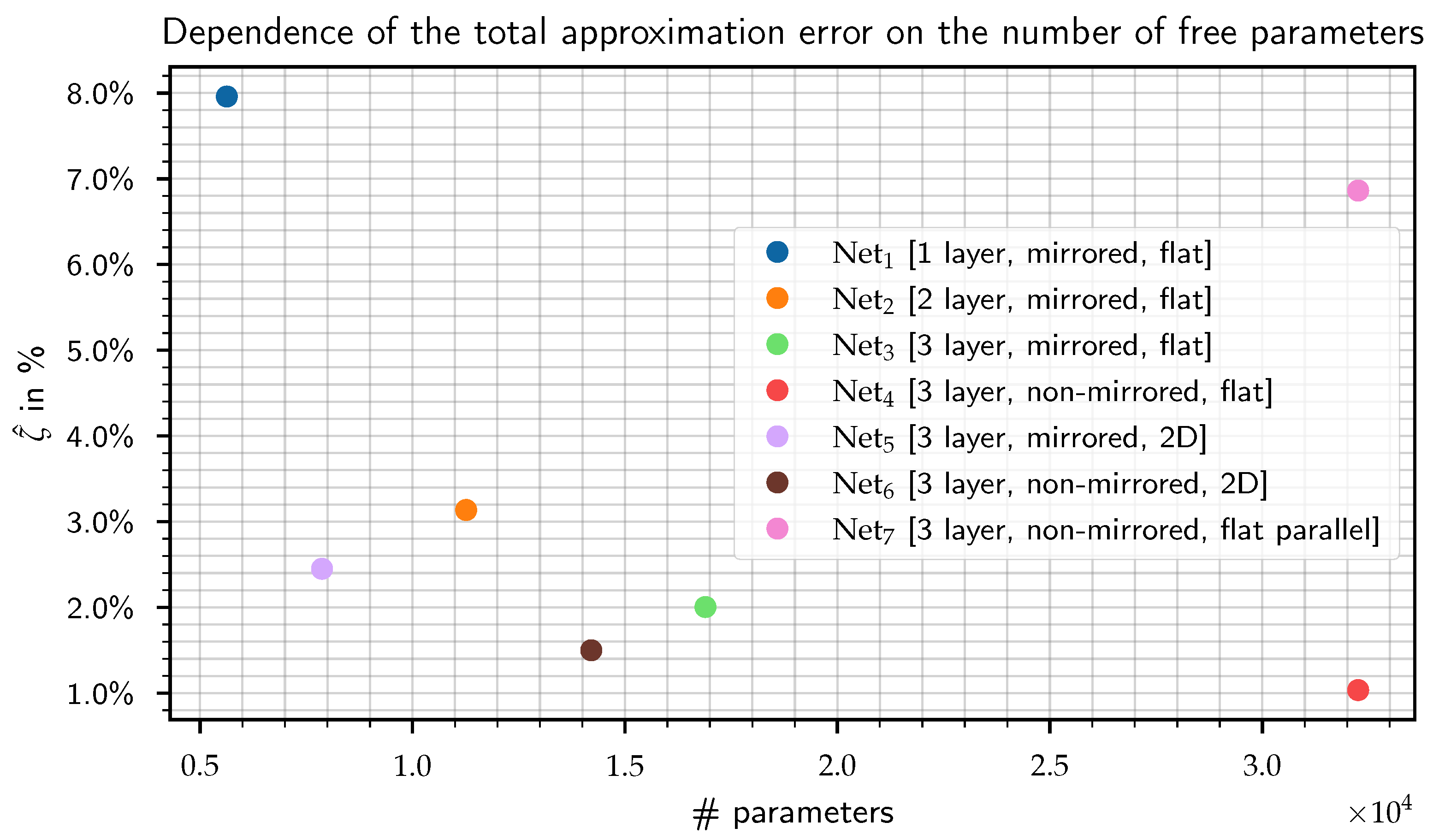

5. Evaluation of the Response Approximation

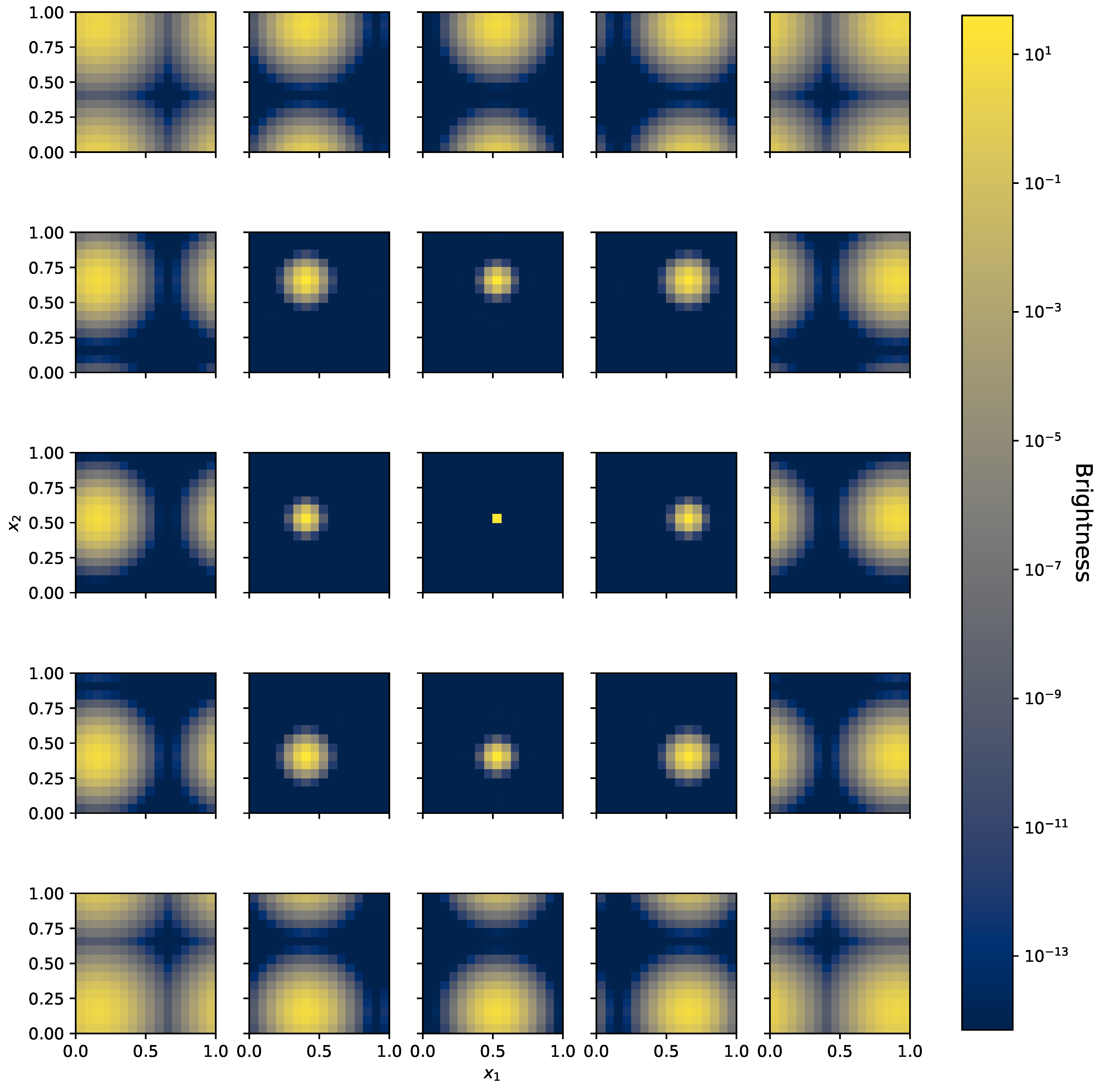

6. Synthetic Response

7. Results

8. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Network Name | | | | | | | |
|---|---|---|---|---|---|---|---|
| # BCOs | 1 | 2 | 3 | 3 | 3 | 3 | 3 |
| architecture | mr | mr | mr | nmr | mr | nmr | nmr |
| design | flat | flat | flat | flat | 2D | 2D | flat |
| likelihood | serial | serial | serial | serial | serial | serial | parallel |
| in % | | | | | | | |
| # parameters | 5632 | 7872 | | | | | |
| Density in % | | | | | | | |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Eberle, V.; Frank, P.; Stadler, J.; Streit, S.; Enßlin, T. Efficient Representations of Spatially Variant Point Spread Functions with Butterfly Transforms in Bayesian Imaging Algorithms. Phys. Sci. Forum 2022, 5, 33. https://doi.org/10.3390/psf2022005033