Abstract

Cosmic Rays (CRs) are powerful tools for the investigation of the structure of the magnetic fields in the galactic halo and the properties of the Inter-Stellar Medium. Two parameters of CR propagation models, the galactic halo (half-)thickness, $H$, and the diffusion coefficient, $D$, are loosely constrained by current CR flux measurements; in particular, a large degeneracy exists, as only $H/D$ is well measured. The $^{10}$Be/$^{9}$Be isotopic flux ratio (thanks to the 2 My lifetime of $^{10}$Be) can be used as a radioactive clock that provides the measurement of the residence time of CRs in the galaxy. This is an important tool for solving the $H/D$ degeneracy. Past measurements of the $^{10}$Be/$^{9}$Be isotopic flux ratio in CRs are scarce, limited to low energy, and affected by large uncertainties. Here, a new technique for measuring the $^{10}$Be/$^{9}$Be isotopic flux ratio in magnetic spectrometers with a data-driven approach is presented. As an example, by applying the method to beryllium events that were published by the PAMELA experiment, it is possible to obtain the important $^{10}$Be/$^{9}$Be measurement while avoiding the prohibitive uncertainties coming from Monte Carlo simulations. It is shown how the accuracy of the PAMELA data permits one to infer a value of the halo thickness with a precision of up to 25%.

1. Introduction

Cosmic rays (CRs) are a powerful tool for the investigation of exotic physics/astrophysics: The compositions of high-energy CRs provide information on the mysterious galactic PeVatrons and the small anti-matter components in CRs could identify the annihilation of dark matter in our galaxy.

In addition, the structure of the magnetic fields in the galactic halo and the properties of the Inter-Stellar Medium can also be probed with detailed CR flux measurements. In particular, the ratio of secondary CRs (such as Li, Be, or B) with respect to the primary CRs (such as He, C, and O) is able to provide the “grammage”, that is, the amount of material crossed by CRs in their journey through the galaxy.

Two parameters of CR propagation models, the galactic halo (half-)thickness, $H$, and the diffusion coefficient, $D$, are loosely constrained by such a grammage measurement; in particular, a large degeneracy exists, as only $H/D$ is well measured [1].

The uncertainties of the $D$ and $H$ parameters (the latter is known to be in the range of 3–8 kpc) also reflect on the accuracy of the determination of secondary anti-proton and positron fluxes, which are the background for the searches for dark matter or exotic (astro-)physics [2,3,4,5].

The abundances of long-living unstable isotopes in CRs can be used as radioactive clocks that provide measurements of the residence time of CRs in the galaxy. This time information is complementary to the crossed grammage; thus, the abundance of radioactive isotopes in CRs is an important tool for solving the existing degeneracy in CR propagation models.

Beryllium Isotopic Measurements in Cosmic Rays

Only a few elements in cosmic rays (Be, Al, Cl, Mn, and Fe) contain long-living radioactive isotopes; among them, beryllium is the lightest, i.e., the most promising for the measurement of isotopic composition in the relativistic kinetic energy range. Three beryllium isotopes are found in cosmic rays:

- $^{7}$Be: Stable as a bare nucleus in CRs. On Earth, it decays through electron capture ($T_{1/2}$ = 53 days).

- $^{9}$Be: Stable.

- $^{10}$Be: $\beta$-radioactive nucleus ($T_{1/2} = 1.39 \times 10^{6}$ years).

The missing $^{8}$Be has a central role in stellar and Big-Bang nucleosynthesis; its extremely short half-life ($8.19 \times 10^{-17}$ s) represents a bottleneck for an efficient synthesis of heavier nuclei in the universe. From the point of view of measurement, this “isotopic hole” in the mass spectrum of beryllium is very useful in determining the large amount of $^{7}$Be and in reducing the contamination in the identification of $^{9}$Be and $^{10}$Be.
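The half-life quoted in the list above and the lifetime quoted in the abstract are related by a factor of ln 2, and the lifetime seen in the galactic frame is stretched by the Lorentz factor. The following is a minimal sketch of this arithmetic; the rounded value of the atomic mass unit and the approximation of the nuclear mass as mass number times one amu are assumptions of the example, not values taken from the text.

```python
import math

T_HALF_BE10_MYR = 1.39  # 10Be half-life in Myr, as quoted in the isotope list
AMU_GEV = 0.9315        # atomic mass unit in GeV (rounded standard value, an assumption here)


def mean_lifetime(t_half):
    """Mean lifetime tau = T_half / ln 2."""
    return t_half / math.log(2.0)


def lorentz_gamma(ekin_per_nucleon_gev):
    """Lorentz factor for a kinetic energy per nucleon, approximating M = A * amu."""
    return 1.0 + ekin_per_nucleon_gev / AMU_GEV


tau_rest = mean_lifetime(T_HALF_BE10_MYR)   # about 2.0 Myr
tau_lab = lorentz_gamma(0.85) * tau_rest    # dilated lifetime at 0.85 GeV/n
print(f"{tau_rest:.2f} Myr at rest, {tau_lab:.2f} Myr at 0.85 GeV/n")
```

The time dilation is the reason why measurements at higher kinetic energy probe longer residence times.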

The identification of beryllium isotopes in magnetic spectrometers requires the simultaneous measurement of particle rigidity, $R = p/Z$, and velocity, $\beta = v/c$. This allows the reconstruction of the particle mass, $M = RZ/(\gamma\beta)$, where $\gamma$ is the Lorentz factor.
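As an illustration of the mass reconstruction from rigidity and velocity, the relation $M = RZ/(\gamma\beta)$ can be sketched as follows. The function names, the natural units (rigidity in GV, mass in GeV/c²), and the approximate nuclear mass of 10 amu are assumptions of this example.

```python
import math

AMU_GEV = 0.9315  # atomic mass unit in GeV (rounded standard value, an assumption here)


def lorentz_gamma(beta):
    """Lorentz factor for a velocity beta = v/c."""
    return 1.0 / math.sqrt(1.0 - beta * beta)


def rigidity_from_mass(mass_gev, beta, charge):
    """Rigidity R = p/Z in GV for a nucleus of given mass and velocity."""
    return mass_gev * lorentz_gamma(beta) * beta / charge


def reconstruct_mass(rigidity_gv, beta, charge):
    """Mass M = R Z / (gamma beta) in GeV/c^2."""
    return rigidity_gv * charge / (lorentz_gamma(beta) * beta)


# Round trip for a nucleus of about 10 amu with Z = 4 at beta = 0.8
true_mass = 10 * AMU_GEV
rigidity = rigidity_from_mass(true_mass, 0.8, 4)
mass_amu = reconstruct_mass(rigidity, 0.8, 4) / AMU_GEV
print(f"R = {rigidity:.3f} GV, reconstructed mass = {mass_amu:.3f} amu")
```

In a real detector both inputs are smeared by the measurement resolution, which is what limits the separation of neighbouring isotopes.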

The typical mass resolution of magnetic spectrometers onboard past or current CR experiments ( amu) does not allow for the event-by-event identification of isotopes; therefore, the “traditional” approach to the measurement of the isotopic abundances of beryllium relies on the comparison of the experimental mass distribution with a Monte Carlo simulation.

This approach requires a very-well-tuned Monte Carlo simulation of the experiment, and the possible small residual discrepancies with the real detector’s response could prevent the measurement of the (interesting) small amounts of $^{10}$Be.

This issue is well described in [6], where the “Monte-Carlo-based” analysis of beryllium events collected by the PAMELA experiment allowed only the measurement of $^{7}$Be/($^{9}$Be+$^{10}$Be).

In the following, a data-driven approach to the measurement of the isotopic abundances of beryllium with magnetic spectrometers is described; this can allow one to avoid the issues related to Monte Carlo simulations. In particular, as an example, the application of this new approach to the beryllium event counts from PAMELA (gathered from Figures 3 and 4 of [6]) is shown, and a preliminary measurement of $^{10}$Be/$^{9}$Be in the 0.2–0.85 GeV/n range is provided.

2. Data-Driven Analysis

With knowledge of the true values of beryllium isotopes’ masses and a physically motivated scaling of the mass resolution for the three beryllium isotopes, the shapes of the isotope mass distributions can be self-consistently retrieved solely from the measured data.

In particular, the expected mass resolution for a magnetic spectrometer is:

$$\frac{\delta M}{M} = \sqrt{\left(\frac{\delta R}{R}\right)^{2} + \gamma^{4}\left(\frac{\delta \beta}{\beta}\right)^{2}} \tag{1}$$

where $R$ is the rigidity, $\beta$ is the velocity, and $\gamma$ is the Lorentz factor.

Typically, the isotopic measurement is pursued in kinetic energy/nucleon bins (i.e., in $\beta$ bins); therefore, the contribution of the velocity to the mass resolution is constant for the different isotopes.

Moreover, in the (low) kinetic energy range that is accessible with current isotopic measurements, the rigidity resolution is dominated by multiple Coulomb scattering, i.e., $\delta R/R$ is practically constant for the different isotopes.

Finally, the masses of the three Be isotopes are within 30%; therefore, for a fixed $\beta$ value, the rigidity values for different Be isotopes are within 30%; for this reason, with a very good approximation, $\delta M/M$ is constant, and we can assume that RMS(M)/<M> is the same for the three unknown mass distributions (hereafter also called templates).
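The constancy of RMS(M)/<M> can be checked with a toy Monte Carlo that smears rigidity and velocity and reconstructs the mass with $M = RZ/(\gamma\beta)$. This is only a sketch: the Gaussian smearing, the resolution values, and the use of mass numbers times one amu as isotope masses are assumptions of the example, and the analytic expression is the standard error propagation of the mass formula.

```python
import math
import random

AMU_GEV = 0.9315  # atomic mass unit in GeV (rounded standard value, an assumption here)
CHARGE = 4        # beryllium


def lorentz_gamma(beta):
    return 1.0 / math.sqrt(1.0 - beta * beta)


def expected_resolution(dr_over_r, dbeta_over_beta, beta):
    """dM/M from error propagation: rigidity term and gamma^2-weighted velocity term in quadrature."""
    return math.hypot(dr_over_r, lorentz_gamma(beta) ** 2 * dbeta_over_beta)


def toy_resolution(mass_number, beta, dr_over_r, dbeta_over_beta, n_events=20000, seed=1):
    """RMS(M)/<M> of the reconstructed mass for Gaussian-smeared rigidity and velocity."""
    rng = random.Random(seed)
    mass = mass_number * AMU_GEV
    rigidity = mass * lorentz_gamma(beta) * beta / CHARGE
    masses = []
    for _ in range(n_events):
        r = rigidity * (1.0 + rng.gauss(0.0, dr_over_r))
        b = beta * (1.0 + rng.gauss(0.0, dbeta_over_beta))
        masses.append(r * CHARGE / (lorentz_gamma(b) * b))
    mean = sum(masses) / n_events
    rms = math.sqrt(sum((m - mean) ** 2 for m in masses) / n_events)
    return rms / mean


# Illustrative (hypothetical) resolutions: 5% in rigidity, 1% in velocity, beta = 0.8
analytic = expected_resolution(0.05, 0.01, 0.8)
toy = {a: toy_resolution(a, 0.8, 0.05, 0.01) for a in (7, 9, 10)}
print(f"analytic dM/M = {analytic:.4f}")
for a, value in toy.items():
    print(f"A = {a}: RMS(M)/<M> = {value:.4f}")
```

The same random seed is reused for the three isotopes, so the three toy values coincide up to rounding; this makes explicit that, at fixed $\beta$ and fixed $\delta R/R$, the template width simply scales with the isotope mass.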

2.1. Template Transformations

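We can define $T_7$, $T_9$, and $T_{10}$ as the unknown normalized templates for $^{7}$Be, $^{9}$Be, and $^{10}$Be, respectively, and $^{7}$Be/Be, $^{9}$Be/Be, and $^{10}$Be/Be as their unknown isotope abundance fractions.

A template $T_a$ can transform into the template $T_b$ by applying the operator $A_{a,b}$, and we can assume that $A_{a,b}$ just transforms the coordinates, $x \to g_{a,b}(x)$; therefore, to ensure template normalization:

$$T_b(x) = A_{a,b}\,T_a(x) = \frac{dg_{a,b}}{dx}\,T_a\big(g_{a,b}(x)\big) \tag{2}$$

In principle, an infinite set of functions $g_{a,b}$ are able to perform a transformation between two specific templates; however, we are typically interested in monotonic functions that preserve quantiles, thereby avoiding template folding. A very simple set of transformations are the linear ones, $L_{a,b}$, defined by translation and scale transformations: $g_{a,b}(x) = kx + q$.

$L_{a,b}$ transforms a normal distribution into a normal distribution.

Defining $\sigma_a$ as the RMS of template $T_a$ and $\tilde{x}_a$ as the median of template $T_a$, the linear transformation $L_{a,b}$ is the function $g_{a,b}(x) = \frac{\sigma_a}{\sigma_b}\,(x - \tilde{x}_b) + \tilde{x}_a$.

The same transformation applied to a different template $T_c$ provides: $\sigma'_c = \sigma_c\,\sigma_b/\sigma_a$ and $\tilde{x}'_c = \tilde{x}_b + \frac{\sigma_b}{\sigma_a}\,(\tilde{x}_c - \tilde{x}_a)$.

The linear transformation that satisfies the assumption ($\sigma/\tilde{x}$ constant) is simply $g_{a,b}(x) = \frac{m_a}{m_b}\,x$, where $m_a$ is the mass of isotope $a$; that is, a pure scaling depending only on known beryllium isotope mass ratios and not on the unknown mass resolution or template shapes.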
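The pure-scaling transformation can be illustrated with a short sketch in which a template for mass $m_a$ is mapped onto mass $m_b$ through $T_b(x) = \frac{m_a}{m_b}\,T_a\!\left(\frac{m_a}{m_b}\,x\right)$. The Gaussian shape and its width are assumptions made only to have something concrete to transform (the method itself does not assume a template shape), and mass numbers are used as nominal masses.

```python
import math


def gaussian(mean, sigma):
    """Return a normalized Gaussian template as a function of the mass x."""
    def pdf(x):
        z = (x - mean) / sigma
        return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))
    return pdf


def scale_template(template_a, mass_a, mass_b):
    """Pure scaling: T_b(x) = k * T_a(k * x) with k = m_a / m_b."""
    k = mass_a / mass_b
    return lambda x: k * template_a(k * x)


def integrate(func, lo, hi, n_steps=4000):
    """Trapezoidal integral, used here only to check the normalization."""
    h = (hi - lo) / n_steps
    inner = sum(func(lo + i * h) for i in range(1, n_steps))
    return h * (0.5 * func(lo) + inner + 0.5 * func(hi))


t7 = gaussian(7.0, 0.5)               # hypothetical 7Be template
t9 = scale_template(t7, 7.0, 9.0)     # derived 9Be template
expected_t9 = gaussian(9.0, 0.5 * 9.0 / 7.0)

print(f"integral of T9 = {integrate(t9, 0.0, 20.0):.6f}")
print(f"T9(9) = {t9(9.0):.6f}, Gaussian with scaled width = {expected_t9(9.0):.6f}")
```

The Jacobian factor $k$ in front of the template is what keeps the transformed template normalized, and the transformed Gaussian has the same RMS/median ratio as the original one.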

2.2. Data-Driven Template Evaluation and Fit

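Defining the known (measured) data distribution $\mathcal{D}(x)$ and assuming the three abundance fractions $f_a = {}^{a}\mathrm{Be}/\mathrm{Be}$ ($a = 7, 9, 10$) as fixed, the following system can be considered:

$$\begin{cases} \mathcal{D}(x) = f_7\,T_7(x) + f_9\,T_9(x) + f_{10}\,T_{10}(x) \\ T_9(x) = A_{7,9}\,T_7(x) \\ T_{10}(x) = A_{7,10}\,T_7(x) \end{cases} \tag{3}$$

where $A_{7,b}$ is the operator of Section 2.1 that transforms the $^{7}$Be template $T_7$ into the template $T_b$.

Therefore, the $^{7}$Be template can be written as:

$$T_7 = \frac{1}{f_7}\,\mathcal{D} - \frac{f_9}{f_7^{2}}\,A_{7,9}\mathcal{D} - \frac{f_{10}}{f_7^{2}}\,A_{7,10}\mathcal{D} + \frac{f_9^{2}}{f_7^{2}}\,G_{9,9} + \frac{f_9 f_{10}}{f_7^{2}}\,G_{9,10} + \frac{f_9 f_{10}}{f_7^{2}}\,G_{10,9} + \frac{f_{10}^{2}}{f_7^{2}}\,G_{10,10} \tag{4}$$

where the last four terms, the ghost templates, are defined by:

$$G_{a,b} = A_{7,a}\,T_b, \qquad a, b \in \{9, 10\} \tag{5}$$

Under the linear approximation, the median of the ghost templates can be evaluated:

$$\tilde{x}_{G_{a,b}} = \frac{m_a\,m_b}{m_7} \tag{6}$$

where $m_a$ is the mass of isotope $a$.

Profiting from the fact that the ghost templates are placed beyond $T_{10}$, the contribution of ghost templates in Equation (4) is small, and can be iteratively evaluated with the measured data by using Equation (5) and the linear approximation.

Once $T_7$ is obtained, the other templates are also straightforwardly obtained by using $A_{7,9}$ and $A_{7,10}$, and a $\chi^2$ value for the fixed $f_7$, $f_9$, and $f_{10}$ configuration is obtained through a comparison with $\mathcal{D}$. The best-fit value of $f_7$, $f_9$, and $f_{10}$ is obtained by minimizing the $\chi^2$ on the allowed configuration space ($f_7 + f_9 + f_{10} = 1$).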
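The template extraction for one fixed configuration of the fractions can be sketched on a toy example. The code below is not the authors' implementation: it uses a plain fixed-point iteration of the relation "data = weighted sum of the three templates" with the pure-scaling transformation, rather than the explicit ghost-template expansion. The Gaussian toy templates, the grid, the linear interpolation, the number of iterations, the fraction values, and the use of mass numbers as nominal masses are all assumptions of the example. Only the extraction step is shown, not the $\chi^2$ scan over configurations.

```python
import math

MASSES = {7: 7.0, 9: 9.0, 10: 10.0}  # nominal masses in amu (mass numbers, an approximation)


def interpolate(values, x, step):
    """Linear interpolation on the uniform grid x_i = i * step; zero outside the grid."""
    pos = x / step
    i = int(pos)
    if pos < 0.0 or i >= len(values) - 1:
        return 0.0
    w = pos - i
    return (1.0 - w) * values[i] + w * values[i + 1]


def scale_from_be7(t7, step, isotope):
    """T_b(x) = k * T_7(k * x) with k = m_7 / m_b (pure-scaling transformation)."""
    k = MASSES[7] / MASSES[isotope]
    return [k * interpolate(t7, k * i * step, step) for i in range(len(t7))]


def extract_be7_template(data, step, f7, f9, f10, n_iter=30):
    """Fixed-point iteration of T_7 = (data - f9 * T_9 - f10 * T_10) / f7."""
    t7 = [d / f7 for d in data]  # starting guess: every event attributed to 7Be
    for _ in range(n_iter):
        t9 = scale_from_be7(t7, step, 9)
        t10 = scale_from_be7(t7, step, 10)
        t7 = [(d - f9 * a - f10 * b) / f7 for d, a, b in zip(data, t9, t10)]
    return t7


def gaussian(x, mean, sigma):
    z = (x - mean) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


# Toy "measured" distribution built from hypothetical fractions and Gaussian templates
STEP, N_POINTS, SIGMA7 = 0.02, 1001, 0.5
TRUE_F = {7: 0.6, 9: 0.3, 10: 0.1}
grid = [i * STEP for i in range(N_POINTS)]
data = [sum(f * gaussian(x, MASSES[a], SIGMA7 * MASSES[a] / MASSES[7])
            for a, f in TRUE_F.items()) for x in grid]

t7 = extract_be7_template(data, STEP, TRUE_F[7], TRUE_F[9], TRUE_F[10])
max_dev = max(abs(t - gaussian(x, MASSES[7], SIGMA7)) for t, x in zip(t7, grid))
print(f"largest deviation from the true 7Be template: {max_dev:.2e}")
```

Because the scaled templates at a mass $x$ only depend on the $^{7}$Be template at lower masses, each iteration pushes the residual error towards higher masses, which mirrors the remark that the ghost templates sit beyond the $^{10}$Be region.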

This data-driven approach was tested on Monte-Carlo-simulated events, and it is able to correctly retrieve the injected isotopic ratios within statistical fluctuations. In the following, the example of the application to the beryllium events published by the PAMELA experiment [6] is shown.

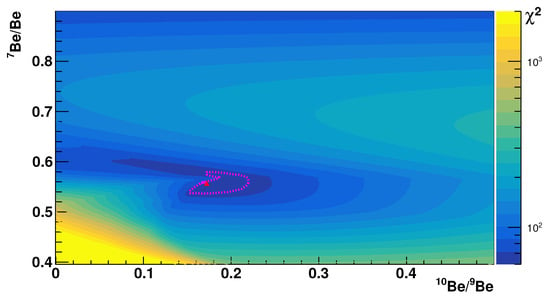

In Figure 1, a $\chi^2$ map over the isotopic-fraction parameter space is shown for the example of the PAMELA–ToF events in the 0.65–0.85 GeV/n range (the last bin of our analysis). The best fit is marked by a red triangle, and the 68% confidence interval is surrounded by a red contour.

Figure 1.

Unbiased $\chi^2$ map of the isotopic-fraction configurations for PAMELA–ToF in the 0.65–0.85 GeV/n region.

It is important to note that the three naive solutions of the data-driven analysis are obviously $^{7}$Be/Be = 1, $^{9}$Be/Be = 1, and $^{10}$Be/Be = 1, and these obvious solutions are characterized by $\chi^2 = 0$. When statistics are scarce, the bias induced by these naive solutions has a non-negligible impact on the physical best-fit position and on the evaluation of the correct confidence interval. To remove this bias, a bootstrap method was used, i.e., we simulated 100 pseudo-experiments in which we randomly extracted the data distribution from the measured value according to the known Poisson statistics. Then, the templates coming from the data-driven analysis were used to extract the $\chi^2$ map from each pseudo-experiment, and Figure 1 shows the unbiased $\chi^2$ map obtained with the average of the maps of the 100 pseudo-experiments. In this way, the unbiased $\chi^2$ obtained with the three naive solutions is non-zero and is similar to the one obtained with the unbiased physical solution.
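The effect of the bootstrap on a naive solution can be sketched as follows. The bin contents are hypothetical, the $\chi^2$ is assumed to be of the Pearson form, and the Poisson sampler is Knuth's multiplication method, which is adequate only for the modest bin contents assumed here; none of these choices is taken from the text.

```python
import math
import random


def poisson_sample(mean, rng):
    """Knuth's multiplication method for a Poisson variate (fine for small means)."""
    limit = math.exp(-mean)
    k, product = 0, rng.random()
    while product > limit:
        k += 1
        product *= rng.random()
    return k


def pearson_chi2(counts, model):
    """Pearson chi-square between observed counts and a model expectation."""
    return sum((n - mu) ** 2 / mu for n, mu in zip(counts, model) if mu > 0)


def bootstrap_average_chi2(counts, model, n_pseudo=100, seed=7):
    """Average chi-square of a fixed model over Poisson pseudo-experiments."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_pseudo):
        pseudo = [poisson_sample(n, rng) for n in counts]
        total += pearson_chi2(pseudo, model)
    return total / n_pseudo


counts = [3, 12, 40, 85, 60, 30, 22, 15, 8, 2]  # hypothetical mass histogram
naive_model = [float(n) for n in counts]        # naive solution: one template equal to the data

biased = pearson_chi2(counts, naive_model)
unbiased = bootstrap_average_chi2(counts, naive_model)
print(f"chi2 on the measured data: {biased:.1f}")
print(f"bootstrap-averaged chi2:   {unbiased:.1f} for {len(counts)} bins")
```

On the measured data the naive model reproduces the histogram exactly, while the bootstrap average is of the order of the number of bins, so that naive and physical configurations are compared on the same footing.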

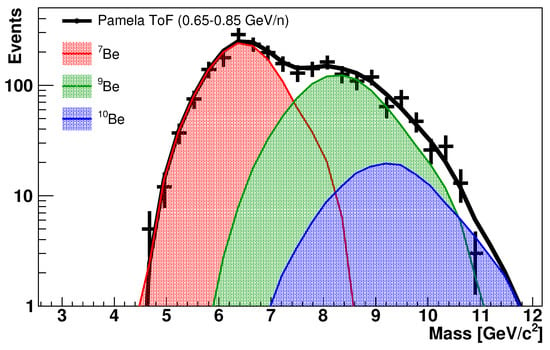

In Figure 2, the best fit for the example of PAMELA–ToF in the 0.65–0.85 GeV/n region is shown. The templates were obtained with this data-driven approach.

Figure 2.

Example of the measurement of beryllium isotopes with the data-driven analysis of the PAMELA–ToF data collected in the 0.65–0.85 GeV/n range.

Finally, it is important to note that the results of this data-driven approach are, by construction, unchanged by an arbitrary overall scaling of the reconstructed mass value. For this reason, the results obtained through the data-driven analysis are robust against possible mis-calibrations of the rigidity/velocity scale, which could hamper the traditional MC-based analysis, as shown in [6]. As a practical example, we can also apply the data-driven analysis to events measured by the PAMELA calorimeter (Figure 4 of Reference [6]), even without a tuned Monte Carlo model/calibration for the measurement.

3. Results and Discussion

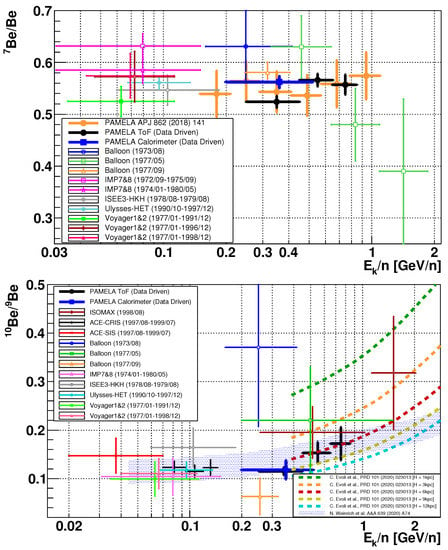

The results of the data-driven analysis of the $^{10}$Be/$^{9}$Be ratio and the $^{7}$Be/Be fraction using the PAMELA data [6] are shown in Figure 3 and are compared with previous measurements [7,8,9,10,11,12,13,14,15,16,17,18].

Figure 3.

Comparison of the results of the data-driven analysis for $^{10}$Be/$^{9}$Be and the $^{7}$Be fraction with those of previous experiments and a Monte-Carlo-based analysis. Statistical error bars are only drawn for the data-driven analysis results.

The measurements obtained by PAMELA–ToF (black dots) are in reasonable agreement with those obtained with the PAMELA calorimeter (blue square); for both results, only the statistical error bars are shown. The systematic uncertainties of these results are dominated by the possible differences in the selection acceptance for $^{7}$Be, $^{9}$Be, and $^{10}$Be. These cannot be estimated without a Monte Carlo simulation of the detector; however, the contribution is expected to be small (few %) in comparison with the wide statistical error bars. In particular, regarding $^{7}$Be/Be, the results of the data-driven analysis are in agreement with the ones published in [6] based on the fit of the Monte Carlo template of the PAMELA data (orange dots), suggesting non-dominant systematics.

The new information provided by this data-driven analysis when applied to PAMELA data is a relatively precise estimation of the $^{10}$Be/$^{9}$Be ratio in the range of 0.2–0.85 GeV/n, where existing measurements are scarce and affected by large uncertainty. In particular, it is interesting to note that this measurement is in good agreement with the models of [1,2,4,5], which provided recent predictions of $^{10}$Be/$^{9}$Be tuned with the up-to-date AMS-02 fluxes (and previous low-energy $^{10}$Be/$^{9}$Be measurements).

The precision of the PAMELA data improves the knowledge of $^{10}$Be/$^{9}$Be at “large” energy, with a sizable impact on the measurement of the galactic halo (half-)thickness parameter, which is currently known to be in a wide range (3–8 kpc) [4].

To quantify the sensitivity of the PAMELA measurements to the halo thickness parameter, the model of [1] is plotted in Figure 3 for different values of H in the range of 1–12 kpc. A simple fit for the model [1] in the sub-range of 0.45–0.85 GeV/n provides kpc.

In conclusion, the data-driven analysis of the measurement of beryllium isotopes with magnetic spectrometers is useful for reducing the systematics related to Monte-Carlo-based analyses. A determination of the halo thickness parameter with an error of the order of 25% can be achieved, entering the era of precise measurements expected from the forthcoming AMS-02 and HELIX results.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

References

1. Evoli, C.; Morlino, G.; Blasi, P.; Aloisio, R. AMS-02 beryllium data and its implication for cosmic ray transport. Phys. Rev. D 2020, 101, 023013.

2. Feng, J.; Tomassetti, N.; Oliva, A. Bayesian analysis of spatial-dependent cosmic-ray propagation: Astrophysical background of antiprotons and positrons. Phys. Rev. D 2016, 94, 123007.

3. Korsmeier, M.; Cuoco, A. Galactic cosmic-ray propagation in the light of AMS-02: Analysis of protons, helium, and antiprotons. Phys. Rev. D 2016, 94, 123019.

4. Weinrich, N.; Boudaud, M.; Derome, L.; Génolini, Y.; Lavalle, J.; Maurin, D.; Salati, P.; Serpico, P.; Weymann-Despres, G. Galactic halo size in the light of recent AMS-02 data. A&A 2020, 639, A74.

5. Luque, P.D.L.T.; Mazziotta, M.N.; Loparco, F.; Gargano, F.; Serini, D. Implications of current nuclear cross sections on secondary cosmic rays with the upcoming DRAGON2 code. arXiv 2021, arXiv:2101.01547.

6. Menn, W.; Bogomolov, E.A.; Simon, M.; Vasilyev, G.; Adriani, O.; Barbarino, G.C.; Bazilevskaya, G.A.; Bellotti, R.; Boezio, M.; Bongi, M.; et al. Lithium and Beryllium Isotopes with the PAMELA Experiment. ApJ 2018, 862, 141.

7. Yanasak, N.E.; Wiedenbeck, M.E.; Mewaldt, R.A.; Davis, A.J.; Cummings, A.C.; George, J.S.; Leske, R.A.; Stone, E.C.; Christian, E.R.; Von Rosenvinge, T.T.; et al. Measurement of the Secondary Radionuclides 10Be, 26Al, 36Cl, 54Mn, and 14C and Implications for the Galactic Cosmic-Ray Age. ApJ 2001, 563, 768.

8. Hagen, F.A.; Fisher, A.J.; Ormes, J.F. Be-10 abundance and the age of cosmic rays - A balloon measurement. ApJ 1977, 212, 262.

9. Buffington, A.; Orth, C.D.; Mast, T.S. A measurement of cosmic-ray beryllium isotopes from 200 to 1500 MeV per nucleon. ApJ 1979, 226, 355.

10. Webber, W.R.; Kish, J. Further Studies of the Isotopic Composition of Cosmic Ray Li, Be and B Nuclei-Implications for the Cosmic Ray Age. Int. Cosm. Ray Conf. 1979, 1, 389.

11. Garcia-Munoz, M.; Mason, G.M.; Simpson, J.A. The age of the galactic cosmic rays derived from the abundance of Be-10. ApJ 1977, 217, 859.

12. Garcia-Munoz, M.; Simpson, J.A.; Wefel, J.P. The propagation life-time of galactic cosmic rays determined from the measurement of the Beryllium isotopes. Int. Cosm. Ray Conf. 1981, 2, 75.

13. Wiedenbeck, M.E.; Greiner, D.E. A cosmic-ray age based on the abundance of Be-10. ApJ Lett. 1980, 239, L139.

14. Hams, T.; Barbier, L.M.; Bremerich, M.; Christian, E.R.; de Nolfo, G.A.; Geier, S.; Göbel, H.; Gupta, S.K.; Hof, M.; Menn, W.; et al. Measurement of the Abundance of Radioactive 10Be and Other Light Isotopes in Cosmic Radiation up to 2 GeV Nucleon-1 with the Balloon-borne Instrument ISOMAX. ApJ 2004, 611, 892.

15. Connell, J.J. Galactic Cosmic-Ray Confinement Time: ULYSSES High Energy Telescope Measurements of the Secondary Radionuclide 10Be. ApJ Lett. 1998, 501, L59.

16. Lukasiak, A.; Ferrando, P.; McDonald, F.B.; Webber, W.R. The isotopic composition of cosmic-ray beryllium and its implication for the cosmic ray’s age. ApJ 1994, 423, 426.

17. Lukasiak, A.; McDonald, F.B.; Webber, W.R. Voyager Measurements of the Isotopic Composition of Li, Be and B Nuclei. Int. Cosm. Ray Conf. 1997, 3, 389.

18. Lukasiak, A. Voyager Measurements of the Charge and Isotopic Composition of Cosmic Ray Li, Be and B Nuclei and Implications for Their Production in the Galaxy. Int. Cosm. Ray Conf. 1999, 3, 41.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).