Abstract

As the demand for renewable energy sources continues to increase, solar energy is becoming an increasingly popular option. Therefore, effective training in solar energy systems design and operation is crucial to ensure the successful implementation of solar energy technology. To make this training accessible to a wide range of people from different backgrounds, it is important to develop effective and engaging training methods. Immersive virtual reality (VR) has emerged as a promising tool for enhancing solar energy training and education. In this paper, a unique method is presented to evaluate the effectiveness of an immersive VR experience for solar energy systems design using a multi-modal approach that includes a detailed analysis of user engagement. To gain a detailed analysis of user engagement, the VR experience was segmented into multiple scenes. Moreover, an eye-tracker and wireless wearable sensors were used to accurately measure user engagement and performance in each scene. The results demonstrate that the immersive VR experience was effective in improving users’ understanding of solar energy systems design and their ability to perform complex tasks. Moreover, by using sensors to measure user engagement, specific areas that required improvement were identified and insights for enhancing the design of future VR training experiences for solar energy systems design were provided. This research not only advances VR applications in solar energy education but also offers valuable insights for designing effective and engaging training modules using multi-modal sensory input and real-time user engagement analytics.

1. Introduction

Addressing some of the world’s most pressing environmental challenges demands a shift in the way we design and develop renewable energy systems. Conventional methods rely on experts creating designs on 2D flat screens using outdated CAD models, often at large distances from the actual site or community [1]. This separation between the physical and digital environments often leads to challenges for designers who must adapt real-world site images to the limitations of 2D flat screens. However, research suggests that enabling users to virtually experience the site as if they were physically present can enhance design effectiveness and user understanding [2]. In fact, 3D visualisation tools, with their interactive and representational capabilities, can further facilitate higher levels of user engagement, ultimately enhancing learning and understanding [3]. Moreover, teaching people about climate change is important because it helps them understand and solve such problems in their own communities [4,5].

Virtual reality (VR) is one of the most promising 3D visualisation tools in education, training and instructional design, offering users an innovative immersive experience. Several studies have demonstrated the benefits of VR, which can replicate complex scenarios and environments, offering practical learning experience [6]. It has even been called the “learning aid of the 21st century” [7], since it lets students see and interact with abstract ideas in virtual worlds, making them easier to understand [8]. Risky scenarios can also be simulated in virtual reality, providing a safe environment for students to learn or practise. Examples include neurosurgical procedure training [6], operating a turning lathe [9] and aerial firefighting training [10]. VR technology also enables remote collaboration, where users can work together and interact with virtual objects in real time, regardless of their physical locations [11].

According to Al Farsi et al., despite its overwhelming promise, VR in education faces three main limitations [12]:

- High cost and uncertain effectiveness: VR technology can be expensive and its effectiveness in education is not always guaranteed.

- Reliance on powerful technology: VR experiences require powerful computers, complex software and sophisticated supporting technologies to function properly.

- Instructors’ lack of confidence: Educators may not feel confident using VR due to a lack of training or experience with the technology.

Furthermore, some VR systems are bulky and uncomfortable to wear, which can hinder the immersive experience [13]. Some users may also experience nausea, dizziness, or other symptoms of cyber-sickness when using VR [14,15]. Nevertheless, despite these limitations, research continues to explore the potential of VR in education and training. Numerous VR studies investigate the relationship between user performance and their sense of presence within the virtual environment, particularly in educational and training contexts [16,17]. It is argued that this immersion and sense of presence leads to a deeper understanding of climate change issues [18,19].

The working principle of a VR experience for solar energy systems involves developing immersive VR environments that mimic actual solar energy generation and system design. These VR environments integrate virtual objects representing solar energy system components, such as solar panels, inverters, batteries and charge controllers, that closely resemble their real-world counterparts. These features provide users with an immersive and visually authentic experience. Users can navigate the virtual environment and interact with the 3D objects that make up the solar energy system. Many factors should be considered to provide real-time data for the solar energy system, such as solar irradiance, panel orientation and shading effects.

In the literature, several studies have explored using VR for solar energy systems education [20]. For example, VR has been used to create realistic simulations of solar energy systems where users can explore different system configurations [21], see the effect of orientation and shadowing on solar panel performance and display energy generation in real-time [22]. Moreover, Lopez et al. in [23] presented a VR study designed to foster students’ self-directed learning in photovoltaic power plant installation. Similarly, Abichandani et al. in [21] introduced a VR educational system that taught students the fundamentals of photovoltaic (PV) cells, solar modules and various PV array configurations. Furthermore, Alqallaf et al. [14] proposed a VR game-based approach for teaching basic solar energy system design concepts to higher education students. A comprehensive literature review of PV systems education has also been published [24]. However, in all these situations, the evaluation of training has been carried out using subjective self-reported data and questionnaires.

Researchers are increasingly exploring and evaluating this immersive environment to enhance user experience, comprehension of content, decision-making and problem-solving [25]. Maintaining positive psychological states such as motivation and engagement is crucial to prevent boredom and loss of focus after repeated exposure to VR [26]. The latest VR headsets, including HTC Vive and Oculus Rift, deliver high levels of immersion to users [27,28]. This immersion influences the level of presence, which is the sensation of being in the virtual world [29]. Jennett et al. in [30] discussed the three core concepts frequently used to characterise engagement experiences: flow, cognitive absorption and presence.

In recent human-computer interaction studies, measuring user experience (UX) typically relies on self-reported data, questionnaires, and user performance. However, questionnaires as self-assessment methods face two main challenges: the potential for misinterpretation and misunderstanding of the items’ meanings, and the risk of eliciting stereotypical responses [31]. Current research advocates for the integration of physiological measures into immersive virtual reality applications and experiments, as they can significantly complement self-report data when estimating users’ emotions and stress levels [32]. Furthermore, combining both objective and subjective methods leads to more reliable results [31].

Many contemporary theories of emotion view the autonomic nervous system’s (ANS) activity as a significant contributor to emotional responses [33]. Bio-signals, such as electrocardiography, electroencephalography and blood pressure monitoring can provide objective data. Engagement is associated with physiological changes, including increased heart rate, sweating, tensed muscles and rapid breathing [34]. The degree of engagement affects the autonomic nervous system, which in turn influences physiological changes in the body [34]. McNeal et al. in [35] presented a study using galvanic skin response (GSR) to measure students’ engagement levels in an Introductory Environmental Geology Course. Similarly, Lee et al. in [36] used electrodermal activity (EDA) measurements to gauge cognitive engagement in Maker learning activities. Darnell and Krieg used heart rate measurements via wristwatch monitors to assess cognitive engagement among medical school students [37].

Since engagement levels influence the autonomic nervous system and are linked to physiological changes in the body, these responses can be measured through specific biosignals, including those reflecting learner engagement in class. By combining physiological parameters like heart rate, breathing rate, skin conductance and other sensor data, we can gain a comprehensive understanding of users’ emotional state. Therefore, this research aims to demonstrate the effectiveness of combining these evaluation methods to gain a deeper understanding of user behaviour while interacting with our 3D solar energy design tool. By shifting data capture from post-experiment to during the experiment, we aim to gain richer data on physiological and behavioral cues, allowing for a more detailed mapping of emotional states and cognitive load.

2. Eye Tracking in VR

Eye trackers are tools that measure what users are unconsciously focusing on, giving valuable insights for educators [38]. Tiny sensors mounted on a headset track eye movements and where users fix their gaze [39]. Researchers use this data to understand what grabs user attention and how long they focus on certain areas, which helps them design more user-friendly and engaging digital experiences.

Studying eye tracking in VR environments offers new ways to understand user attention and thinking, especially with head-mounted displays (HMDs) that have built-in eye tracking [40,41,42]. Companies such as FOVE and Tobii are developing advanced eye-tracking systems specifically for VR [43,44]. In fact, eye tracking is a well-established method for assessing usability and user experience in various settings [45]. A review by Li et al. confirmed that eye tracking is useful for measuring mental workload, immersion level and user experience in VR [46].

Analysing eye movements is important for evaluating cognitive function since they can reveal a person’s mental state more accurately than other biological signals [47]. Eye tracking measures fixations (where the eye stays still) and saccades (quick eye movements) [48,49]. By studying this data, researchers can gauge user engagement by looking at how many times and for how long users fixate on something [50,51].

Another indicator of user attention is pupil dilation, which is controlled by the autonomic nervous system [52,53,54]. Research has shown connections between pupil dilation, how engaged someone is with a task, and how difficult the task is [55,56,57].

Eye blinking rates are also influenced by how someone is thinking, how engaged they are with a task and how tired they are [58]. Studies have shown a link between blinking rate and task difficulty or engagement [59,60,61].

A theory called the Cognitive Load Theory (CLT) suggests that learning is best when our working memory (the part of our brain that holds onto information for short periods) is not overloaded [62,63,64]. Researchers use eye-tracking data to measure cognitive load and ensure VR experiences are not overwhelming learners [65]. By combining eye tracking with other data, researchers can gain valuable insights into how people learn in VR environments. This information can then be used to improve VR learning experiences and ultimately help people learn more effectively.

3. Approach and Hypotheses

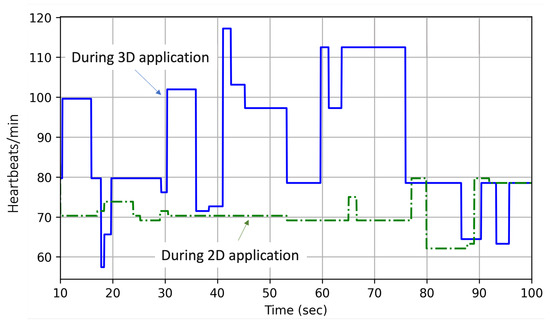

A previous study [66] compared learner engagement levels in a 2D application and a 3D immersive virtual reality application for designing solar energy systems. Learner vital signs were measured using a non-invasive radar sensor and the data were validated with self-reported questionnaires. The study confirmed the hypothesis that a 3D virtual reality application leads to higher engagement levels than a 2D application. Figure 1 illustrates the heart rate of a participant who designed a solar energy system using both the 2D and 3D applications. According to the figure, the participant had a higher heart rate in the 3D experiment, which can be interpreted as increased engagement.

Figure 1.

Comparison between a participant’s heart rate measured using a radar sensor during interaction with a 2D application and a 3D immersive virtual reality environment.

In the present study, our goal was to measure learner engagement while designing a solar energy system in a 3D immersive environment, analysing biofeedback and eye-tracking data. We compared biofeedback reflecting engagement levels within the VR experience across different scenes. Identifying areas of low learner engagement can guide modifications to the application’s design, thereby increasing learner attention and focus. Moreover, our approach to collecting data offers the key advantages of being wireless, wearable and unobtrusive compared to other methods of measuring vital signs and eye-tracking data. By integrating physiological and behavioural data such as heart rate and eye tracking, with an immersive virtual reality environment, we aimed to gain deeper insights into user interaction and perspectives. This integration allowed us to conduct in-depth analyses that reflected users’ cognitive state and engagement with the virtual experience.

To further explore learner engagement and experience within the 3D immersive virtual reality environment, we will focus on specific scenes within the application and analyse eye-tracking and biofeedback data. With this focus, we propose the following three research questions (RQs) and hypotheses (Hs):

- RQ1: How does user engagement differ across various scenes within the 3D immersive virtual reality environment when designing solar energy systems?

- H1: Users’ engagement levels vary across different scenes in the 3D immersive virtual reality environment, with certain scenes eliciting higher engagement levels than others during the solar energy system design process.

- RQ2: How do eye-tracking data and biofeedback correlate with user experience (UX) and engagement levels in the 3D immersive virtual reality environment?

- H2: Eye-tracking data and biofeedback effectively reflect users’ UX and engagement levels, with an increased number of fixations and lower blink rates indicating higher engagement in the 3D immersive virtual reality environment.

- RQ3: To what extent do modifications in the 3D immersive virtual reality environment, informed by biofeedback and eye-tracking data, improve user engagement and focus in designing solar energy systems?

- H3: Modifications to the 3D immersive virtual reality environment, based on biofeedback and eye-tracking data, lead to significant improvements in user engagement and focus in designing solar energy systems.

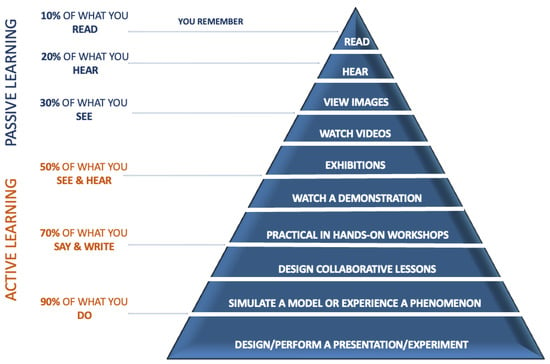

In accordance with Dale’s cone theory [67], which structures learning experiences, we chose to focus on active and passive learning through hands-on experiences, since these are situated at the base of the cone, as shown in Figure 2. This decision stems from the fact that teaching sustainable energy subjects typically relies on a theoretical approach, which may not provide the most effective learning experience [68].

Figure 2.

Developed by Edgar Dale, the cone of experience categorises learning experiences and suggests that learning by doing leads to better information retention compared to simply hearing, seeing or observing.

4. Methodology

As mentioned earlier, traditional evaluation methods such as quizzes, multiple-choice questions and self-reported questionnaires may not always provide the most accurate assessment of VR experiences. Instead, researchers should consider using operational, protocol and behavioural measurements that are combined with neurocognitive methods to evaluate user experience for a more comprehensive evaluation [69,70,71,72]. Operational measurements often assess a learner’s ability to correctly operate equipment or machinery, while protocol measurements evaluate whether the learner adheres to a prescribed process for a specific job task. In contrast, behavioural measurements examine whether the learner exhibits the desired behaviour in a given situation. Given that solar energy systems design involves a set of procedures and best practices (a protocol) that designers must follow, we developed a methodology to evaluate the effectiveness of our VR experience.

To gain a deeper understanding of learner engagement, we segmented the VR experience into three scenes. Our objective was to observe participants as they interacted with the VR experience, identifying the aspects that captured their interest, the elements they grasped quickly, the parts they wanted to explore further and the areas they found challenging. We also aimed to pinpoint unclear rules and mechanics that were not yet fully developed within our VR experience. By analysing participants’ interactions, we sought to determine which mechanics were enjoyable and which ones needed fine-tuning to balance the experience and guide users toward the intended learning objectives at an appropriate pace. Our motivation behind this approach was to gain insights into the design of the VR experience and refine it accordingly. To collect real-time data during this process, we used physiological sensors as an additional evaluation tool.

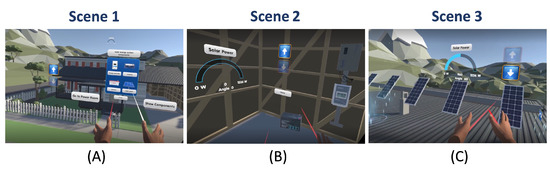

As previously mentioned, we divided the VR experience into three main scenes, each corresponding to a crucial task in a typical solar energy system design project. These tasks are vital for ensuring the efficiency, functionality and optimal performance of the solar energy system. Here is a summary of the tasks associated with each scene:

Scene 1: Site Selection—Users begin by choosing a location for installing the solar energy system. Accurate site selection is crucial for maximising solar energy production, as it accounts for factors such as available sunlight, local weather conditions and physical constraints.

Scene 2: Power Room—In this scene, users explore the power room, where they can interact with the essential system components such as batteries, inverters and charge controllers. Users can use the VR controllers to grab and install the components on a stand. Familiarising themselves with these components and understanding their roles is essential for designing a functional solar energy system that meets energy production and storage requirements.

Scene 3: Solar Panel Installation—The third scene takes users to the house’s roof, where they can experiment with the arrangement of solar panels on a stand. Users can add, remove and adjust the tilt of the panels, observing the effects of these changes on the solar power output and electricity generation, as displayed by a gauge. This task is critical in the design process, as optimising the solar panel arrangement can significantly impact the system’s overall efficiency and energy production.

Users can move around the VR environment using a teleportation system and can also walk a few steps within the virtual environment. We chose this system to minimise motion sickness while moving around the environment.

By incorporating these essential tasks into the VR experience, we wanted to give users hands-on experience and develop a comprehensive understanding of the solar energy systems design process.

In the following sections, we will provide a detailed account of the hardware and software used for developing and evaluating the effectiveness of our VR experience.

4.1. Hardware

Psychophysiological signals were collected using the Shimmer Sensing Kit [73], which featured a sampling rate of 204 Hz for producing an electrocardiogram (ECG). The ECG electrodes were placed on users as shown in Figure 3: Right Arm (RA), Right Leg (RL), Left Arm (LA), Left Leg (LL), and Chest (V1).

Figure 3.

Hardware setup and electrode placement: a wireless Shimmer3 ECG system using Bluetooth and WiFi for heart rate data streaming. The Shimmer kit collected data using ConsensysPRO software version 1.6.0. The HP Reverb G2 Omnicept headset has built-in eye tracking and other sensors, which were used to gather participants’ heart rate, cognitive load and eye-tracking data.

The virtual environment was displayed through the HP Reverb G2 Omnicept [74], equipped with an integrated eye-tracking system powered by Tobii. This Head Mounted Display (HMD) offers a field of view of 114 degrees, presenting the scene with a resolution of 2160 × 2160 pixels per eye and a combined resolution of 4320 × 2160 pixels. The headset also features a refresh rate of 90 Hz. Furthermore, the integrated sensors in the headset provide heart rate, cognitive load, and eye-tracking data, enabling the tracking of user engagement and the evaluation of user responses in real time. These data also facilitate a deeper understanding of user performance and inform decision-making regarding the application’s design.

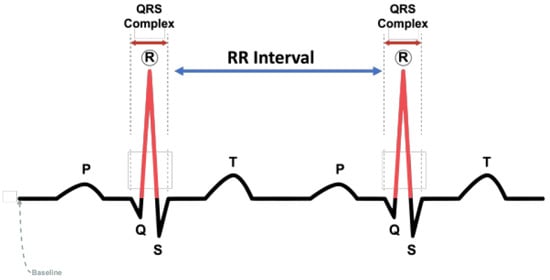

4.2. ECG and Eye-Tracking Data

Each heartbeat in an ECG trace is composed of five main waves: P, Q, R, S and T [75]. The R waves in the electrocardiogram can be used to determine the heart rate in beats per minute (BPM). The R wave is part of the QRS complex, the main spike in the ECG signal, which represents ventricular depolarisation. Figure 4 shows the ECG waveform and the QRS complex, which can be used to calculate the heart rate from the ECG signals.

Figure 4.

ECG signals that show QRS wave for calculating heartbeats.

The Shimmer software, known as ConsensysPRO, uses an ECG-to-HR algorithm to provide users with access to heart rate data collected from the ECG sensor. ECG signals were collected simultaneously from participants using five disposable electrodes attached to their skin. These data were streamed via Bluetooth to ConsensysPRO and saved as individual CSV files for each participant.
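
The relationship between R waves and heart rate can be illustrated with a short sketch. This is not the ECG-to-HR algorithm used by ConsensysPRO, which is not described here; it only shows the general idea of converting the intervals between consecutive R peaks into beats per minute. The function name and the example R-peak times are hypothetical.

```python
from statistics import mean


def heart_rate_bpm(r_peak_times_s):
    """Estimate the mean heart rate (BPM) from R-peak timestamps in seconds."""
    if len(r_peak_times_s) < 2:
        raise ValueError("need at least two R peaks")
    # R-R intervals: time elapsed between consecutive R peaks
    rr_intervals = [b - a for a, b in zip(r_peak_times_s, r_peak_times_s[1:])]
    if any(rr <= 0 for rr in rr_intervals):
        raise ValueError("R-peak times must be strictly increasing")
    # 60 seconds divided by the mean beat-to-beat interval gives beats per minute
    return 60.0 / mean(rr_intervals)


# Hypothetical R-peak times: one beat every 0.8 s
peaks = [0.0, 0.8, 1.6, 2.4, 3.2]
print(round(heart_rate_bpm(peaks), 1))
```

In practice the R-peak times would first have to be detected in the filtered ECG signal; that detection step is outside the scope of this sketch.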

For eye-tracking, cognitive load and heart rate data capture, three custom scripts were developed. These scripts extended the HP Omnicept headset’s Software Development Kit (SDK) version 1.14.0 using the C# programming language. The scripts subscribed to the relevant information messages provided by the SDK.

These scripts were linked with their corresponding scenes in the VR application and activated upon scene start. Each script recorded eye-tracking data associated with each frame in real-time. This data was stored in separate files for each scene and each participant.

The header information captured from the HP Omnicept VR headset comprises the following components:

- T: OmniceptTimeMicroSeconds.

- Gaze vectors for the left, right and combined eyes, each given by its coordinate components.

- Pupil position vectors for the left and right eyes, each given by its coordinate components.

- Pupil dilation and its confidence for the left and right eyes.

- Eye openness and its confidence for the left and right eyes.

- Heart rate in BPM, together with the heart rate variability metrics.

- Cognitive load, its standard deviation and its state.

At this stage, a low-pass filter was applied to the ECG signals to preserve crucial low-frequency components while attenuating high-frequency noise.

Similarly, eye-tracking data were cleaned and pre-processed using Python (3.9). The eye-tracking data from the VR headset were filtered to retain only samples with a confidence level of 1, so that only the most certain and reliable measurements were analysed.
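
A confidence filter of this kind can be sketched in a few lines of Python. The field names below (`left_pupil_conf`, `right_pupil_conf` and so on) and the sample values are hypothetical stand-ins, not the actual column names written by the recording scripts.

```python
def keep_confident(samples,
                   fields=("left_pupil_conf", "right_pupil_conf"),
                   threshold=1.0):
    """Keep only samples whose confidence fields all reach the threshold."""
    return [
        s for s in samples
        if all(float(s[f]) >= threshold for f in fields)
    ]


# Hypothetical per-frame records (field names are assumptions)
samples = [
    {"t": 0, "left_pupil_mm": 3.1, "left_pupil_conf": 1.0,
     "right_pupil_mm": 3.0, "right_pupil_conf": 1.0},
    {"t": 1, "left_pupil_mm": 0.0, "left_pupil_conf": 0.0,
     "right_pupil_mm": 3.0, "right_pupil_conf": 1.0},
    {"t": 2, "left_pupil_mm": 3.2, "left_pupil_conf": 1.0,
     "right_pupil_mm": 3.1, "right_pupil_conf": 1.0},
]

clean = keep_confident(samples)
print([s["t"] for s in clean])
```

Requiring every listed confidence field to reach the threshold is a design choice of this sketch; a per-eye filter would retain more samples at the cost of missing values for one eye.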

As the three scenes in the VR application are task-based and have varying durations, blinking and the number of fixations were normalised using the MinMaxScaler function. This transformation rescaled the values to a range between 0 and 1, facilitating comparison across scenes while reducing the effects of varying scene durations and individual characteristics.
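
The rescaling performed by a min-max scaler can be written out explicitly. The sketch below is a plain-Python equivalent of the formula, assuming the default output range of 0 to 1; it is not a call to the MinMaxScaler function itself, and the fixation counts are hypothetical. The text does not state which values are scaled together (for example, per participant across scenes), so the grouping shown is an assumption.

```python
def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Choice made in this sketch: a constant input maps to 0.0
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical fixation counts for one participant in scenes 1, 2 and 3
fixations_per_scene = [12, 30, 21]
print(min_max_scale(fixations_per_scene))
```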

4.3. Virtual Reality Setup

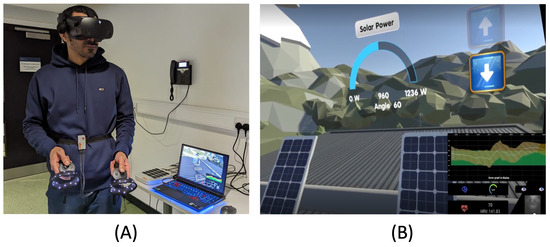

The experiment was conducted in a VR lab, with participants taking part voluntarily using a consent form that had received ethical approval from our university. Initially, participants were briefed on the instructions and the purpose of the experiment. Then, they were asked for permission to place the ECG electrodes at the specified positions. Figure 5A shows a participant wearing the ECG electrodes and the VR headset. Omnicept Overlay was used to visualise heart rate and cognitive load data concurrently. OBS Studio (Open Broadcaster Software) version 28.1.2 was used to record and live-stream the VR application, including the overlay screen, as shown in Figure 5B. Prior to starting the VR application, calibration was carried out for each participant to ensure optimal accuracy when performing the eye-tracking measurements, as depicted in Figure 6.

Figure 5.

The experiment setup. (A) The user wears the ECG sensor and the HP headset while using the VR application, which was simultaneously live-streamed and recorded on a laptop. The picture was taken in a VR lab at the University of Glasgow. (B) The OBS Studio screen, where the overlay app appeared transparently to display the heart rate and cognitive load data from the HP headset.

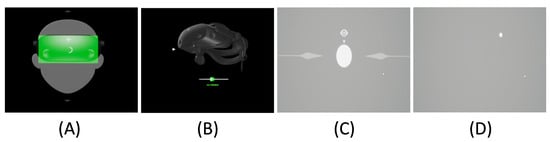

Figure 6.

Before starting the VR application, participants performed a calibration for the eye-tracking to ensure optimal data accuracy. The procedure involved (A) adjusting the head position, followed by (B) setting the interpupillary distance (IPD) using the slider. Subsequently, (C) participants focused on the centre of the screen, and finally, (D) they were instructed to follow the dot.

Moreover, the 3D application was developed using the Unity3D game engine [76], version 2020.3.25f1. All the scripts were written in C# using Visual Studio. The OpenXR Plugin package was used to implement all VR-specific features, such as grabbing and locomotion. The 3D objects for the VR application were taken from the Unity Asset Store, and Blender version 2.93.4 was used to model the solar panels’ stand and its handle. As previously mentioned, our application was divided into the three main scenes shown in Figure 7. The main obstacle in developing the VR application was simulating PV system behaviour in real time. This step required several scripts that check that all the system components (the battery, inverter and charge controller) are installed in the power room, and that calculate the amount of electricity generated from the number of solar panels and the angle of the stand. To display the electricity generated by the system, we added a sprite image whose fill amount property reflects the amount of electricity generated.
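
The mapping from panel count and stand angle to a gauge fill value can be illustrated with a simplified sketch. The application's actual calculation is not specified in the text; the cosine misalignment model, the rated power per panel, the optimal tilt and all function names below are assumptions made purely for illustration. The result is clamped to the range 0 to 1, which is the range a fill amount property accepts.

```python
import math


def panel_power_w(n_panels, tilt_deg, optimal_tilt_deg=35.0, rated_w=300.0):
    """Illustrative array output: rated power scaled by tilt misalignment.

    Assumed model: output falls off with the cosine of the difference
    between the stand angle and a hypothetical optimal tilt.
    """
    misalignment = math.radians(tilt_deg - optimal_tilt_deg)
    return n_panels * rated_w * max(0.0, math.cos(misalignment))


def gauge_fill(power_w, max_power_w):
    """Map a power value to a gauge fill fraction between 0 and 1."""
    if max_power_w <= 0:
        raise ValueError("max_power_w must be positive")
    return min(1.0, max(0.0, power_w / max_power_w))


# Four hypothetical 300 W panels; the gauge is full at 8 panels, optimal tilt
max_power = panel_power_w(8, 35.0)
print(gauge_fill(panel_power_w(4, 35.0), max_power))
```

Raising or lowering the stand away from the assumed optimal tilt, or removing panels, lowers the fill fraction, which mirrors the behaviour users observe on the gauge.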

Figure 7.

The three main scenes in the VR application: (A) Site Selection, where users are positioned in front of the house, learning about system components before installing the solar energy system. (B) The Power Room, where users can grab and place the system components on the designated stand. (C) Solar Power Installation, where users can add, remove, and adjust the angle of the stand, observing changes in the power generated from the system via the gauge chart.

Furthermore, we implemented the VR application on a Lenovo laptop running 64-bit Windows 11, with an Intel Core i7 processor and an NVIDIA GeForce RTX 3070 graphics card.

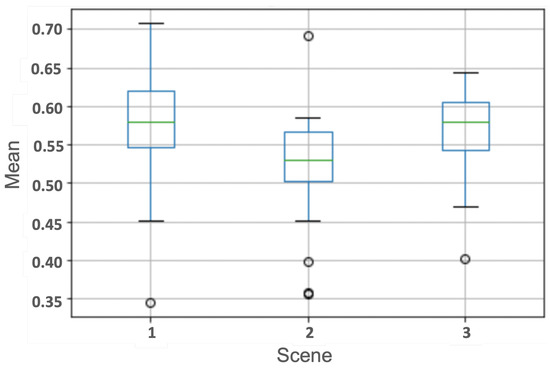

4.4. Finding the Average Brightness for the VR Scenes

Numerous studies have reported that eye-tracking data, particularly pupil dilation, can be influenced by the luminance of the environment. We therefore analysed the brightness levels of the three scenes in the VR application. We recorded a 30 s video of each scene and used Python code with the OpenCV video processing library to extract frames from the videos and convert them to grayscale. We then calculated the lightness value of each frame as the mean pixel value of the grayscale frame, that is, the sum of all pixel values divided by the total pixel count. Finally, we computed the average lightness of the entire video by summing the lightness values of all frames and dividing by the total number of frames.
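
The two averaging steps can be sketched as follows. This is a minimal illustration rather than the script used in the study: the function names are hypothetical, the averaging is written in plain Python so the arithmetic is explicit, and the OpenCV-based frame reader is an assumed way of obtaining grayscale frames from a recorded video.

```python
def frame_lightness(gray_frame):
    """Mean pixel value of one grayscale frame (rows of 0-255 intensities)."""
    total = 0
    count = 0
    for row in gray_frame:
        total += sum(int(p) for p in row)  # int() avoids 8-bit overflow
        count += len(row)
    if count == 0:
        raise ValueError("empty frame")
    return total / count


def video_lightness(gray_frames):
    """Average of the per-frame lightness values over a whole video."""
    values = [frame_lightness(f) for f in gray_frames]
    if not values:
        raise ValueError("no frames")
    return sum(values) / len(values)


def gray_frames_from_video(path):
    """Yield grayscale frames from a video file (requires OpenCV)."""
    import cv2  # imported lazily so the averaging code runs without OpenCV
    cap = cv2.VideoCapture(path)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            yield cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    finally:
        cap.release()


# Two tiny synthetic "frames" standing in for real video frames
frames = [[[0, 100], [100, 200]], [[50, 50], [50, 50]]]
print(video_lightness(frames))
```

With a real recording, the same result would be obtained by calling `video_lightness(gray_frames_from_video("scene1.mp4"))`, where the file name is a placeholder.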

4.5. Self-Report Questionnaire Design

After experiencing the virtual reality application, and once the vital data had been collected using the headset and the ECG sensor, participants completed an anonymous and confidential online self-report questionnaire. Participation was voluntary and based on ethically approved consent, and participants were informed that they could withdraw from the project at any time. The questionnaire consisted of fourteen questions designed to measure the participants’ engagement and immersion levels, both in general and for each scene. The first question asked whether the participants had previous experience with virtual reality. The second question asked participants to rate how easy the application was to use from 1 to 5, where 1 means ‘Extremely Difficult’ and 5 means ‘Extremely Easy’. The third question asked whether the participants felt engaged in the virtual environment. The fourth question asked participants to select the scene in which they felt most engaged: in front of the house, the power room or the roof. In questions 5, 6 and 7, participants rated their engagement level in each scene from 1 to 5, where 5 is the highest engagement level. Questions 8 to 14 were taken from the engagement and immersion sub-scales of the unified questionnaire on user experience (UX) in immersive virtual environments (IVEQ) proposed in [31].

4.6. Participants

A total of 27 students volunteered to take part in our experiment. These participants included 17 males and 10 females, ranging from 25 to 42 years old. All participants were healthy and did not take medication for heart problems or mental diseases.

5. Results

5.1. Self-Reported Questionnaire Results

Participants completed self-report questionnaires that assessed their sense of immersion and engagement in each scene of the application. Out of the 27 participants, 12 (44%) had previous experience with VR. On a scale of 1 to 5, with 1 being extremely difficult and 5 being extremely easy, 23 participants (85%) rated the application as easy to use, selecting scores of 4 or 5. Meanwhile, 4 participants (15%) chose scores of 3 or below.

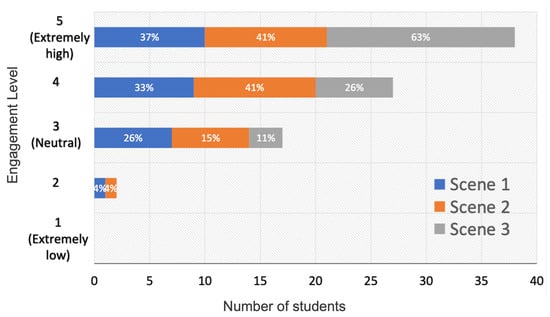

Regarding engagement in VR, 48% of participants strongly agreed that they felt engaged, 44% agreed, 4% were neutral, and 4% strongly disagreed. When asked which scene they felt most engaged in, 4% chose the front of the house scene, 30% chose the power room scene, and 67% chose the house roof scene. Figure 8 illustrates participants’ responses when rating their engagement level during the three scenes.

Figure 8.

Participants’ self-rated engagement levels during the three scenes, with 5 representing extremely high engagement and 1 representing extremely low engagement.

When asked if the visual aspects of the virtual environment engaged them, 44% of participants rated the engagement as extremely high (5), 41% chose 4, and 15% chose 3. In terms of feeling compelled or motivated to move around inside the virtual environment and complete the application, 41% of participants rated this aspect as extremely high (5). Furthermore, 59% of participants rated their involvement in the virtual environment as extremely high (5).

Regarding stimulation from the virtual environment, 52% of participants selected an extremely high rating (5), while only one participant (4%) chose a neutral rating (3). As for becoming so involved in the virtual environment that they were unaware of things happening around them, 33% rated this aspect as extremely high (5) and 37% rated it as 4. When asked if they felt physically present in the virtual environment, 30% rated this aspect as 5 and 52% rated it as 4. Finally, 33% rated their involvement in the virtual environment as extremely high (5) when it came to losing track of time, while 37% rated it as 4.

5.2. Vital Signs and Eye Tracking Data

Based on the literature, we analysed the data representing users’ engagement level, such as heart rate, cognitive load, blinking rate, pupil dilation and the number of fixations. Headset data was subsequently analysed to provide real-time insights.

5.2.1. Heart Rate

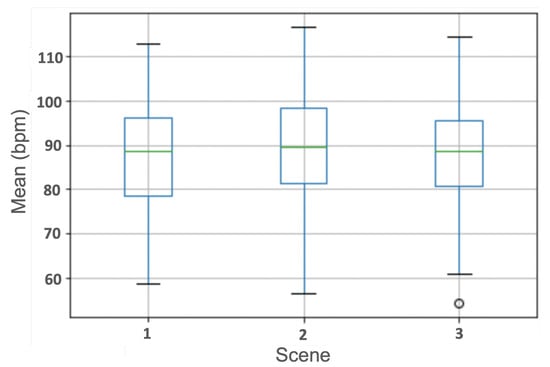

After analysing the heart rate data from the ECG sensor and the sensor from the HP headset, we found that the accuracy for the HP headset was 86.72% compared with the ECG sensor. Therefore, we decided to rely on the ECG data for analysing the heartbeat signals. Interestingly, the mean heart rate across the three scenes was nearly identical (see Figure 9). This suggests that heart rate data may not be a significant factor in measuring engagement in a VR environment.

Figure 9.

The mean heart rate values during the three scenes. There is no significant difference in the ECG signals across the three scenes, which suggests that heart rate data did not discriminate between levels of engagement in this virtual reality environment. The circle in the diagram represents an outlier.
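The text does not state how the 86.72% accuracy of the headset sensor relative to the ECG sensor was derived. One plausible reading, shown here purely as an assumption, treats it as 100 minus the mean absolute percentage error of the headset readings against time-aligned ECG readings. The function name `agreement_percent` and the sample values are hypothetical.

```python
from statistics import mean


def agreement_percent(reference, candidate):
    """Return 100 * (1 - mean absolute percentage error) of candidate vs. reference.

    Assumed metric for illustration only; the source does not define its accuracy measure.
    """
    if len(reference) != len(candidate) or not reference:
        raise ValueError("need two equally long, non-empty series")
    relative_errors = [abs(c - r) / r for r, c in zip(reference, candidate)]
    return 100 * (1 - mean(relative_errors))


# Hypothetical, time-aligned heart-rate samples in beats per minute.
ecg_bpm = [80.0, 100.0, 50.0, 100.0]
headset_bpm = [72.0, 110.0, 55.0, 100.0]

score = agreement_percent(ecg_bpm, headset_bpm)
print(f"Headset agreement with ECG: {score:.2f}%")
```

A metric of this kind needs the two signals to be resampled onto a common time base first, which this sketch takes for granted.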

5.2.2. Cognitive Load

As anticipated, our results show that the highest cognitive load was observed in Scene 1 due to the amount of text included in this scene. Users in the first scene had to read and comprehend the role of each component in the solar energy system. This may indicate that the cognitive demands of processing textual information, particularly when learning new concepts, are higher compared to the other tasks.

Scene 2 exhibited the lowest cognitive load values, as the task involved grabbing and placing 3D objects, the system components, on a stand. This suggests that the task in Scene 2 was more intuitive and relied primarily on users’ motor skills, thus requiring less cognitive effort.

Scene 3 was intermediate, as it combined interactions with 3D objects and observation of the results generated by the system. This could be interpreted as an indication that users were engaged in both cognitive processing and motor skills, balancing the overall cognitive load.

Figure 10 displays the box plot of cognitive load data across the three scenes. These findings can inform future refinements of the VR experience by optimising the amount and presentation of information in each scene, balancing cognitive load and ensuring that users remain engaged throughout the experience.

Figure 10.

The cognitive load of the users during the three scenes in the virtual reality application. The results showed that Scene 1 had the highest cognitive load while Scene 2 had the lowest. The circles represent outlier data.
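The circles in the box plots mark outliers, but the rule used to flag them is not stated. The sketch below assumes the common Tukey convention, in which a point is an outlier when it lies more than 1.5 times the interquartile range beyond the first or third quartile. The function name `tukey_outliers` and the sample values are hypothetical.

```python
from statistics import quantiles


def tukey_outliers(values, k=1.5):
    """Return the values lying outside the Tukey fences [Q1 - k*IQR, Q3 + k*IQR]."""
    # method="inclusive" interpolates linearly between the sorted data points.
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical per-participant mean heart rates (beats per minute) for one scene.
mean_hr = [68, 70, 71, 72, 73, 74, 75, 76, 110]

print(tukey_outliers(mean_hr))
```

With these illustrative values the quartiles are 71 and 75, so the fences sit at 65 and 81 and only the reading of 110 is flagged.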

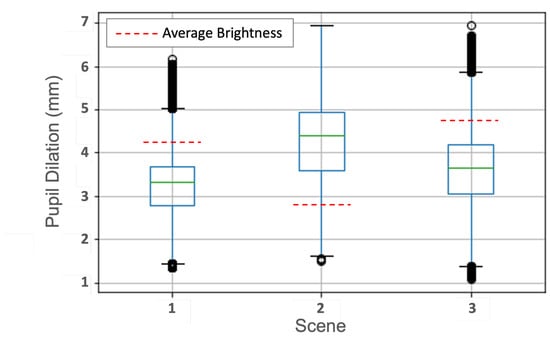

5.2.3. Pupil Dilation

We examined the changes in pupil dilation among participants across the three scenes. Our findings show the greatest pupil dilation for participants in Scene 2, the power room. As previously mentioned, pupil size tends to decrease as the brightness of the visual environment increases. Figure 11 illustrates the noticeable difference in average brightness in Scene 2 compared to the other scenes, which led to increased pupil size for participants.

Figure 11.

The pupil dilation for the participants within the three scenes. Our results showed that the largest pupil dilation occurred in scene 2 while the smallest dilation appeared in scene 1. The red line represents the average brightness level for each scene in 30 s. Scene 2 had the lowest brightness level, while scenes 1 and 3 had close brightness levels. The circles in the diagram represent outlier data.

The brightness level for Scenes 1 and 3 is nearly the same, but the pupil size for participants in Scene 3 is larger than in Scene 1. This discrepancy may be attributed to the difference in the tasks’ difficulty and the nature of the environment, as pupil size typically increases with a rise in mental activity and engagement level.

These results suggest that the tasks in Scene 2 and Scene 3 could have been more cognitively engaging or demanding for participants, while Scene 1, despite its textual information, may not have induced the same level of mental effort. Additionally, the variations in brightness between scenes may have influenced pupil dilation, further affecting the interpretation of cognitive load or engagement. Future iterations of the VR experience may benefit from taking these factors into account to optimise the balance between engagement, cognitive load, and visual design.

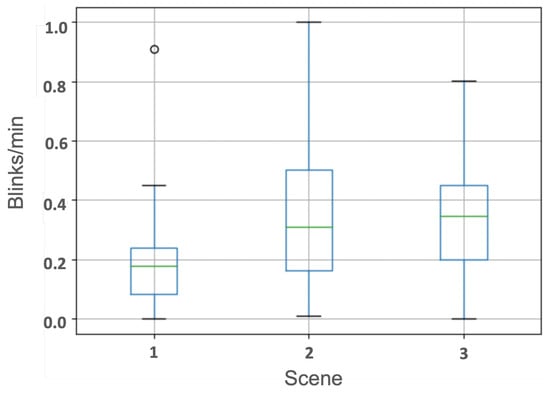

5.2.4. Blinking Rate

Figure 12 illustrates the normalised blinking rate for participants across the three scenes. We normalised the data for the blinking rate in each scene to account for the differences in duration between them. The highest number of blinks occurred in Scene 2, followed by Scene 3 and Scene 1, respectively.

Figure 12.

The difference in blinking among the three scenes. The results showed that Scene 2 had the highest blinking rate, while Scene 1 had the lowest. The circle shown in the diagram represents outlier data.
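The normalisation mentioned above is not spelled out in the text. A minimal sketch, assuming it amounts to dividing each participant's blink count by the time spent in the scene, is given below. The function name `blinks_per_minute`, the counts and the durations are all hypothetical.

```python
def blinks_per_minute(blink_count: int, duration_s: float) -> float:
    """Convert a raw blink count into a rate so that scenes of different length are comparable."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return blink_count * 60 / duration_s


# Hypothetical (blink count, scene duration in seconds) for one participant.
scene_data = {
    "Scene 1": (30, 120.0),
    "Scene 2": (45, 90.0),
    "Scene 3": (40, 100.0),
}

rates = {
    scene: blinks_per_minute(count, duration)
    for scene, (count, duration) in scene_data.items()
}

for scene, rate in rates.items():
    print(f"{scene}: {rate:.1f} blinks/min")
```

Expressing blinks as a rate prevents a longer scene from appearing to have more blinks simply because participants spent more time in it.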

The differences in blinking rates among the scenes could suggest varying levels of cognitive load, attention, or visual engagement for the participants. A higher blinking rate in Scene 2 might indicate increased cognitive effort, possibly due to the interaction with 3D objects or the lower brightness level in that scene. Meanwhile, the lower blinking rates in Scenes 1 and 3 might be indicative of reduced cognitive load or increased focus on the tasks at hand. It is essential to consider these factors when evaluating user engagement and cognitive load in the VR experience. Further analysis of the relationship between blinking rate and other physiological or behavioural data might offer additional insights into the effectiveness of each scene in promoting learning and engagement.

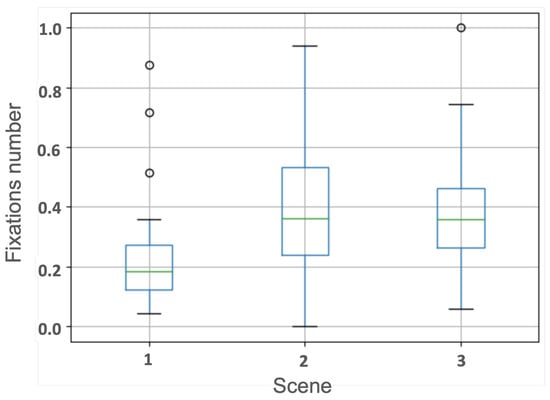

5.2.5. Number of Fixations

Figure 13 presents the number of fixations for participants during the three scenes. Our findings reveal a significant difference in the number of fixations across the scenes, with Scene 2 having the highest number of fixations.

Figure 13.

The number of fixations during the three scenes. Scene 2 had the highest number of fixations, while Scene 1 had the lowest. The circles represent outlier data.
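The text reports a significant difference in fixation counts across scenes without naming the statistical test. Because every participant experienced all three scenes, one candidate is the Friedman test for repeated measures, sketched here as an assumption rather than as the authors' method. The function name `friedman_three_conditions` and the data are hypothetical, and the sketch assumes no tied values within a participant.

```python
import math


def friedman_three_conditions(rows):
    """Friedman chi-square statistic and p-value for three repeated conditions.

    rows holds one (scene1, scene2, scene3) tuple per participant.
    """
    k = 3
    n = len(rows)
    if n == 0:
        raise ValueError("need at least one participant")
    rank_sums = [0.0] * k
    for row in rows:
        if len(row) != k or len(set(row)) != k:
            raise ValueError("each row needs three distinct values")
        # Rank the three scenes within this participant (1 = smallest value).
        order = sorted(range(k), key=lambda j: row[j])
        for rank, j in enumerate(order, start=1):
            rank_sums[j] += rank
    q = 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    # With k = 3 the reference chi-square distribution has 2 degrees of freedom,
    # whose upper-tail probability is exp(-q / 2).
    p_value = math.exp(-q / 2)
    return q, p_value


# Hypothetical fixation counts per participant for Scenes 1, 2 and 3.
fixation_counts = [(40, 95, 70), (35, 88, 60), (50, 99, 81), (42, 90, 66)]

statistic, p_value = friedman_three_conditions(fixation_counts)
print(f"Q = {statistic:.2f}, p = {p_value:.4f}")
```

The chi-square approximation is rough for very small samples, so the four hypothetical participants here only illustrate the mechanics.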

The higher number of fixations in Scene 2 could be indicative of increased visual attention or cognitive effort, as participants may have been more focused on manipulating and placing the 3D objects in the power room. This could also suggest that Scene 2 was more engaging or required more intricate interactions, drawing the participants’ gaze more frequently to various elements within the scene.

Conversely, the lower number of fixations in Scenes 1 and 3 might imply that participants found these scenes less visually demanding or cognitively challenging. However, it is essential to consider the context of the tasks and the nature of the interactions within each scene when interpreting these findings. A more detailed analysis of the spatial distribution and duration of fixations, alongside other physiological or behavioural data, could offer a deeper understanding of the participants’ engagement, learning, and cognitive load during each scene in the VR experience.

Finally, Table 1 provides a summary of the mean and standard deviation values for all parameters across the three scenes in the VR application.

Table 1.

Summary of the Collected Data Analysis.
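A per-scene summary of this kind can be produced with a few lines of Python. The sketch below is illustrative only: the function name `summarise` and the fixation counts are hypothetical, and it assumes the sample (n - 1) standard deviation, which the text does not specify.

```python
from statistics import mean, stdev


def summarise(per_scene):
    """Return {scene: (mean, sample standard deviation)} for one measured parameter."""
    return {scene: (mean(values), stdev(values)) for scene, values in per_scene.items()}


# Hypothetical per-participant fixation counts for each scene.
fixations = {
    "Scene 1": [10, 12, 14],
    "Scene 2": [20, 22, 27],
    "Scene 3": [15, 18, 21],
}

summary = summarise(fixations)

for scene, (avg, sd) in summary.items():
    print(f"{scene}: mean = {avg:.2f}, SD = {sd:.2f}")
```

The same function can be applied to each parameter in turn (heart rate, cognitive load, pupil dilation, blinking rate and fixations) to fill the rows of such a table.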

6. Discussion

Human–computer interaction innovations depend heavily on understanding users’ mental states. This study investigated user experience during a 3D immersive virtual environment using an HP Omnicept headset and the ECG sensor on a solar energy systems design task. We investigated whether eye tracking, heart rate and cognitive load data would be associated with increasing engagement and cognitive level in each scene of the VR application. We also formulated three RQs and hypotheses at the start of the experiment around these areas and we will now discuss our findings related to these hypotheses.

We hypothesised that diverse emotional responses within the three scenes would produce various attention patterns. Results from our study showed that user experience and engagement level could be estimated by analysing eye-tracking data, as the eyes can reveal much more about a person’s emotions than most people realise and are a slightly more enigmatic indicator of their emotions.

Despite the survey showing greater engagement in a specific scene, there is no difference in the heart rate data. The participants in the three scenes had almost equal heart rate levels, and there was no significant impact from the ECG signals related to the engagement level. The VR game was likely immersive and engaging for all participants during the three scenes. Our finding with the ECG sensor contradicts what was shown by the study of Murphy and Higgins in [77]. They used ECG and EEG sensors to assess user engagement in an immersive virtual reality environment. Their findings showed that the heart rate is a good indicator for measuring the users’ engagement level in virtual reality.

It was hypothesised that using an approach that increased cognitive load when teaching a topic to students would have an adverse effect on their performance [78]. A task becomes more challenging and places a heavier intrinsic load on working memory when it has a greater number of interconnected informational components. The cognitive load in scene 1 is higher than in scenes 2 and 3. This was to be expected because participants needed to read and understand the role of the solar energy system components, as there is a lot of text in this scene.

Psychologists have long been curious about how changes in pupil size and mental activity relate to one another [79]. Mental activity is also closely connected with problem difficulty, which affects pupil response [56,80]. We predicted that pupil dilation would be affected by the users’ engagement and performance in the different scenes of the VR application. Pupil diameter is a complicated parameter in eye tracking because it is affected by both the brightness of the visual stimulation [81] and cognitive load [82]. Our results showed increased pupil dilation in Scene 2, which had a lower average brightness level than Scenes 1 and 3. This is consistent with the literature, which reports an inverse relationship between pupil size and the brightness of the visual environment [83]. This finding underlines the importance of accounting for the effect of visual brightness on pupil size in order to measure the user experience in virtual reality applications reliably. Moreover, there is no significant difference in the average brightness level between Scenes 1 and 3, but the pupil dilation in Scene 3 is larger than in Scene 1. This points to the relationship between pupil dilation and task difficulty [84], level of interest and arousal [85], and users’ attention [86].

As mentioned earlier, Scenes 1 and 3 involve different levels of physical effort. In Scene 1, users read and understand the role of the solar energy system components by clicking on buttons that display the information. In Scene 3, users interact more with the 3D objects as they grab and place the solar panels and try different scenarios for the system. This physical activity has an impact on pupil dilation. Our results revealed that pupil size increased in response to physical exertion, consistent with a previous study indicating that pupil size increases during physical effort [87].

Higher blink rates are frequently observed in insight problem-solving situations and creativity performance [88,89]. However, a previous study examined the relationship between the blinking rate and visual attention [59]. The results of that study indicated that the blinking rate increases when visual attention is engaging and vice versa. Our findings indicated a relationship between the blinking rate and task difficulty. The blinking rate in Scene 1 was lower than in Scenes 2 and 3, as the task in Scene 1 was very easy to perform. This aligns with the finding from the study by Tanaka and Yamaoka [61], which shows that the blinking rate was higher for the difficult task than for the easy task. In addition, the blinking rate is affected by the nature of the task. Users experienced different types of tasks during the three scenes, which produced different blinking rates. Also, endogenous blinks are reduced when a task demands more concentration [90], which was evident in the blinking rate in Scene 1. The amount of text in Scene 1 reduced the blinking rate of the participants, as they had to focus on comprehending the presented information. This finding matches the literature indicating that the blinking rate is reduced during reading [60].

The relationship between blink rate and cognitive load is often found to be inverse. The fundamental hypothesis from earlier studies is that when cognitive load is at its lowest, we blink more frequently because we believe we can blink without missing anything. Moreover, blinking inhibition may be an adaptive strategy that shields delicate cognitive processes from disruption when a mental load is raised [91].

The number of fixations in eye-tracking data can provide insights into several aspects of visual processing, attention, and cognitive engagement. The number of fixations might indicate which parts of a visual environment appeal to the user. A scene with a higher number of fixations may show that the user finds that area more visually attractive or interesting. The number of fixations may also be a good indicator of the difficulty of a task or stimulus, as a user moves their eyes to absorb information and make sense of the visual input. Scene 2 had the highest number of fixations, which suggests a high level of user interest in this scene. The high number of fixations in Scene 3 may suggest that users spent more time processing and integrating information from various scene areas. We assumed that participants would look more at the solar power output of the system they had already built while simultaneously trying a new design. This process attracted users’ attention and motivated them to try different design scenarios.

A high number of fixations, which occur when a user revisits the same place or object repeatedly, may indicate a high level of interest. That might, however, also be a sign of understanding issues [92]. It is crucial to know that fixation number may not always give a full understanding of visual processing. Other eye-tracking elements, such as fixation duration, saccades or sensory data, should be considered to interpret the data precisely. It is important to note that eye-tracking research is a complicated field, and analysing data should be conducted in conjunction with other relevant measures.

Our findings indicated the importance of capturing several types of physiological data for monitoring the user experience. Data from eye tracking can be highly helpful in testing the usability and user experience of any VR game. The capacity of eye tracking to detect variations in involvement during particular tasks enables the researcher to link particular contexts to particular outcomes and demonstrate that engagement was the mediating factor [84]. By immersing research participants in a virtual reality world, researchers can also learn more about the motivations behind users’ responses and behaviours as they engage with photorealistic items, environments, and almost any other stimuli.

Numerous studies in the literature on solar energy system design have evaluated the effectiveness, usability, and user experience of their VR applications via common assessment techniques. These techniques include gathering quantitative and qualitative data from users about their experience via surveys [23,93,94], pre- and post-tests [95,96] and interviews [97]. Despite the advantages of these methods in assessing VR experiences and their proven validity in measuring UX [31], they also have certain limitations. As mentioned earlier, these methods rely on self-reported data and subjective interpretations of users’ behaviours and attitudes. This can lead to biases and inaccurate feedback, as many users prefer to provide answers that are socially acceptable or have difficulty expressing how they feel.

Our study provides valuable contributions to the learning technology field and highlights the educational benefits of using virtual reality in learning. Analysing eye-tracking and electrocardiography data offers insights into how instructional design can be enhanced and into what keeps learners engaged, so that educational content can be developed more effectively. Also, by monitoring engagement and bio-feedback alongside post-experience assessments, researchers will be able to assess the effectiveness of VR in conveying knowledge and skills. Researchers can detect the most effective elements of the VR application that motivate and engage learners. This information can be utilised to develop better strategies to enhance involvement in educational materials. In addition, our findings can inspire further research that combines engagement, multi-modal physiological data and learning outcomes for assessing VR applications, which would help to explain the mechanisms of immersive learning.

7. Summary

We summarise our findings and illustrate how our results are aligned with our original research hypotheses (H).

H1: We hypothesised that diverse emotional responses within the three scenes would produce various attention patterns. Results from our study showed that user experience and engagement could be estimated by analysing eye-tracking data, as the eyes can reveal much more about a person’s emotions than most people realise and are a slightly more enigmatic indicator of their emotions. The number of fixations might indicate which parts of a visual environment appeal to the user. Scene 2 had the highest number of fixations, which suggests a high level of user interest in this scene. The high number of fixations in Scene 3 may suggest that users spent more time processing and integrating information from various scene areas.

H2: We predicted that pupil dilation would be affected by user engagement and performance in the VR application in different scenes. Our results showed increased pupil dilation in Scene 2, which had a lower average brightness level than in Scenes 1 and 3. Our findings align with existing literature, which suggests an inverse relationship between pupil size and the brightness of the visual environment. This finding highlights the importance of considering the impact of visual brightness on pupil size when reliably measuring user experience in virtual reality applications. Moreover, when comparing Scenes 1 and 3, there is no significant difference in average brightness between them, but the pupil dilation in Scene 3 is larger than in Scene 1. This indicated the relationship between pupil dilation and task difficulty, potentially leading to heightened user engagement and attention.

H3: We hypothesised that using an approach that increased cognitive load during instruction would negatively impact student performance. Our results confirmed this hypothesis, showing that cognitive load was highest in Scene 1. This was unsurprising, as Scene 1 presented a complex topic with extensive text requiring participants to read and understand various solar energy system components. Furthermore, our findings aligned with previous research by Tanaka and Yamaoka. Blink rate in Scene 1, which involved a simpler task, was lower compared to Scenes 2 and 3. This supports the notion that blink rate increases with task difficulty.

In summary, our VR application fosters higher engagement and intuitive interaction with 3D objects. By incorporating physiological sensors and eye-tracking, it provides valuable insights into user engagement and cognitive load, allowing future optimisation of the learning experience. The VR setup’s ability to simulate real-world conditions and provide immediate feedback on design choices accelerates learning and improves accuracy compared to traditional 2D design methods. Furthermore, the application’s user-friendly interface and positive user reception suggest that our VR approach can significantly enhance learning outcomes in solar energy system design education. However, while integrating physiological measures offers a more comprehensive understanding of user engagement, it comes with the added complexity of data analysis and interpretation, requiring specialised tools and expertise. Moreover, although the impact of scene brightness on pupil dilation was explored, further work is clearly required on careful visual design to optimise user engagement and learning outcomes without causing visual strain or misinterpreting cognitive load.

8. Conclusions

This paper highlights how combining multimodal data channels, which include a variety of objective and subjective metrics, can offer insights into a more comprehensive understanding of learner engagement and evaluate user experience. We compared the captured data in different scenes of a VR solar energy systems design task to investigate learner engagement levels. Our findings showed that analysing eye-tracking and vital signs data allows a comprehensive analysis of user engagement and cognitive load measurements for designers and developers to develop applications that meet user expectations. For example, the majority of our participants found the VR application easy to use and felt highly engaged. Most users rated their engagement as extremely high, indicating the application’s effectiveness in capturing user interest and attention. Moreover, cognitive load varied across different scenes, with the highest cognitive load observed in the text-heavy first scene and the lowest in the more interactive second scene. This demonstrates the importance of balancing informational content and interactive elements to maintain user engagement. Moreover, pupil dilation varied significantly across scenes, with the greatest dilation in Scene 2. This finding suggests a correlation between pupil dilation and the level of cognitive engagement, offering a valuable metric for assessing user attention in VR environments. The blinking rate and number of fixations varied among scenes, providing insights into the varying cognitive demands and user focus in different parts of the VR experience.

The impact of these findings is multifaceted, as they contribute to understanding user engagement and experience in immersive virtual environments. By highlighting the importance of multimodal data channels and revealing the limitations of heart rate data in representing user engagement, our paper offers valuable insights for developers, designers and educators. Future research should explore the optimisation of content and interaction balance in VR applications, aiming to maximise user engagement and learning outcomes. Further investigation into the relationship between physiological responses and user experience can provide deeper insights into effective VR design strategies.

Author Contributions

N.A. devised the experiments for investigation and wrote the original manuscript. N.A. and A.A. performed the experiments, collected data, and created the visualisations. R.G. supervised the project, conceptualised the research, provided necessary resources, and reviewed and edited the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was ethically approved by Glasgow University’s College of Science and Engineering Ethics Committee (application number: 300230160).

Informed Consent Statement

Informed consent was obtained from all participants involved in this study.

Data Availability Statement

Data are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zisos, A.; Sakki, G.K.; Efstratiadis, A. Mixing Renewable Energy with Pumped Hydropower Storage: Design Optimization under Uncertainty and Other Challenges. Sustainability 2023, 15, 13313.

- Gerhard, D.; Norton, W.J. Virtual Reality Usability Design; CRC Press: Boca Raton, FL, USA, 2022.

- Rivera, D. Visualizing Machine Learning in 3D. In Proceedings of the 28th ACM Symposium on Virtual Reality Software and Technology (VRST ’22), Tsukuba, Japan, 29 November–1 December 2022; ACM: New York, NY, USA, 2022.

- Lehtonen, A.; Salonen, A.O.; Cantell, H. Climate Change Education: A New Approach for a World of Wicked Problems. In Sustainability, Human Well-Being, and the Future of Education; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 339–374.

- Cambridge International. Empowering Learners through Climate Change Education. Introduction Paper. 2023. Available online: https://www.cambridgeinternational.org/climatechangeeducation (accessed on 25 February 2024).

- Sabbagh, A.J.; Bajunaid, K.M.; Alarifi, N.; Winkler-Schwartz, A.; Alsideiri, G.; Al-Zhrani, G.; Alotaibi, F.E.; Bugdadi, A.; Laroche, D.; Del Maestro, R.F. Roadmap for developing complex virtual reality simulation scenarios: Subpial neurosurgical tumor resection model. World Neurosurg. 2020, 139, e220–e229.

- Rogers, S. Virtual reality: The learning aid of the 21st century. Forbes 2019.

- Sala, N. Applications of virtual reality technologies in architecture and in engineering. Int. J. Space Technol. Manag. Innov. 2013, 3, 78–88.

- Antonietti, A.; Imperio, E.; Rasi, C.; Sacco, M. Virtual reality and hypermedia in learning to use a turning lathe. J. Comput. Assist. Learn. 2001, 17, 142–155.

- Clifford, R.M.; Jung, S.; Hoermann, S.; Billinghurst, M.; Lindeman, R.W. Creating a stressful decision making environment for aerial firefighter training in virtual reality. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 181–189.

- Pouliquen-Lardy, L.; Milleville-Pennel, I.; Guillaume, F.; Mars, F. Remote collaboration in virtual reality: Asymmetrical effects of task distribution on spatial processing and mental workload. Virtual Real. 2016, 20, 213–220.

- Al Farsi, G.; Yusof, A.B.M.; Fauzi, W.J.B.; Rusli, M.E.B.; Malik, S.I.; Tawafak, R.M.; Mathew, R.; Jabbar, J. The practicality of virtual reality applications in education: Limitations and recommendations. J. Hunan Univ. Nat. Sci. 2021, 48, 142–155.

- Ray, A.B.; Deb, S. Smartphone based virtual reality systems in classroom teaching—A study on the effects of learning outcome. In Proceedings of the 2016 IEEE Eighth International Conference on Technology for Education (T4E), Mumbai, India, 2–4 December 2016; pp. 68–71.

- AlQallaf, N.; Chen, X.; Ge, Y.; Khan, A.; Zoha, A.; Hussain, S.; Ghannam, R. Teaching solar energy systems design using game-based virtual reality. In Proceedings of the 2022 IEEE Global Engineering Education Conference (EDUCON), Tunis, Tunisia, 28–31 March 2022; pp. 956–960.

- Weech, S.; Kenny, S.; Barnett-Cowan, M. Presence and cybersickness in virtual reality are negatively related: A review. Front. Psychol. 2019, 10, 158.

- Carruth, D.W. Virtual reality for education and workforce training. In Proceedings of the 2017 15th International Conference on Emerging eLearning Technologies and Applications (ICETA), Stary Smokovec, Slovakia, 26–27 October 2017; pp. 1–6.

- Vora, J.; Nair, S.; Gramopadhye, A.K.; Duchowski, A.T.; Melloy, B.J.; Kanki, B. Using virtual reality technology for aircraft visual inspection training: Presence and comparison studies. Appl. Ergon. 2002, 33, 559–570.

- Markowitz, D.M.; Bailenson, J.N. Virtual reality and the psychology of climate change. Curr. Opin. Psychol. 2021, 42, 60–65.

- Queiroz, A.C.M.; Fauville, G.; Abeles, A.T.; Levett, A.; Bailenson, J.N. The Efficacy of Virtual Reality in Climate Change Education Increases with Amount of Body Movement and Message Specificity. Sustainability 2023, 15, 5814.

- Pinthong, J.; Kaewmanee, W. Virtual reality of solar farm for the solar energy system training. In Proceedings of the 2020 5th International STEM Education Conference (iSTEM-Ed), Hua Hin, Thailand, 4–6 November 2020; pp. 2–4.

- Abichandani, P.; Mcintyre, W.; Fligor, W.; Lobo, D. Solar energy education through a cloud-based desktop virtual reality system. IEEE Access 2019, 7, 147081–147093.

- Chiou, R.; Nguyen, H.V.; Husanu, I.N.C.; Tseng, T.L.B. Developing VR-Based Solar Cell Lab Module in Green Manufacturing Education. In Proceedings of the 2021 ASEE Virtual Annual Conference Content Access, Virtual Online, 26–29 July 2021.

- Gonzalez Lopez, J.M.; Jimenez Betancourt, R.O.; Ramirez Arredondo, J.M.; Villalvazo Laureano, E.; Rodriguez Haro, F. Incorporating virtual reality into the teaching and training of Grid-Tie photovoltaic power plants design. Appl. Sci. 2019, 9, 4480.

- Alqallaf, N.; Ghannam, R. Immersive Learning in Photovoltaic Energy Education: A Comprehensive Review of Virtual Reality Applications. Solar 2024, 4, 136–161.

- Burns, M. Immersive Learning for Teacher Professional Development. eLearn 2012, 2012, 1.

- Caldas, O.I.; Aviles, O.F.; Rodriguez-Guerrero, C. Effects of presence and challenge variations on emotional engagement in immersive virtual environments. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1109–1116.

- Casey, P.; Baggili, I.; Yarramreddy, A. Immersive virtual reality attacks and the human joystick. IEEE Trans. Dependable Secur. Comput. 2019, 18, 550–562.

- Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Comput. Educ. 2020, 147, 103778.

- Borrego, A.; Latorre, J.; Alcañiz, M.; Llorens, R. Comparison of Oculus Rift and HTC Vive: Feasibility for virtual reality-based exploration, navigation, exergaming, and rehabilitation. Games Health J. 2018, 7, 151–156.

- Jennett, C.; Cox, A.L.; Cairns, P.; Dhoparee, S.; Epps, A.; Tijs, T.; Walton, A. Measuring and defining the experience of immersion in games. Int. J. Hum.-Comput. Stud. 2008, 66, 641–661.

- Tcha-Tokey, K.; Christmann, O.; Loup-Escande, E.; Richir, S. Proposition and Validation of a Questionnaire to Measure the User Experience in Immersive Virtual Environments. Int. J. Virtual Real. 2016, 16, 33–48.

- Yao, L.; Liu, Y.; Li, W.; Zhou, L.; Ge, Y.; Chai, J.; Sun, X. Using physiological measures to evaluate user experience of mobile applications. In Proceedings of the International Conference on Engineering Psychology and Cognitive Ergonomics, Heraklion, Greece, 22–27 June 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 301–310.

- Kreibig, S.D. Autonomic nervous system activity in emotion: A review. Biol. Psychol. 2010, 84, 394–421.

- Kim, P.W. Real-time bio-signal-processing of students based on an intelligent algorithm for Internet of Things to assess engagement levels in a classroom. Future Gener. Comput. Syst. 2018, 86, 716–722.

- McNeal, K.S.; Spry, J.M.; Mitra, R.; Tipton, J.L. Measuring student engagement, knowledge, and perceptions of climate change in an introductory environmental geology course. J. Geosci. Educ. 2014, 62, 655–667.

- Lee, V.R.; Fischback, L.; Cain, R. A wearables-based approach to detect and identify momentary engagement in afterschool Makerspace programs. Contemp. Educ. Psychol. 2019, 59, 101789.

- Darnell, D.K.; Krieg, P.A. Student engagement, assessed using heart rate, shows no reset following active learning sessions in lectures. PLoS ONE 2019, 14, e0225709.

- Llanes-Jurado, J.; Marín-Morales, J.; Guixeres, J.; Alcañiz, M. Development and calibration of an eye-tracking fixation identification algorithm for immersive virtual reality. Sensors 2020, 20, 4956.

- Sharma, C.; Dubey, S.K. Analysis of eye tracking techniques in usability and HCI perspective. In Proceedings of the 2014 International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 5–7 March 2014; pp. 607–612. [Google Scholar]

- Rosa, P.J.; Gamito, P.; Oliveira, J.; Morais, D.; Pavlovic, M.; Smyth, O. Show me your eyes! The combined use of eye tracking and virtual reality applications for cognitive assessment. In Proceedings of the 3rd 2015 Workshop on ICTs for Improving Patients Rehabilitation Research Techniques, Lisbon, Portugal, 1–2 October 2015; pp. 135–138. [Google Scholar]

- Hayhoe, M.M. Advances in relating eye movements and cognition. Infancy 2004, 6, 267–274. [Google Scholar] [CrossRef]

- Lutz, O.H.M.; Burmeister, C.; dos Santos, L.F.; Morkisch, N.; Dohle, C.; Krüger, J. Application of head-mounted devices with eye-tracking in virtual reality therapy. Curr. Dir. Biomed. Eng. 2017, 3, 53–56. [Google Scholar] [CrossRef]

- Global Leader in Eye Tracking for over 20 Years. Available online: https://www.tobii.com/ (accessed on 7 February 2024).

- FOVE Co., Ltd. Available online: https://fove-inc.com/ (accessed on 7 February 2024).

- Jacob, R.J.; Karn, K.S. Eye tracking in human-computer interaction and usability research: Ready to deliver the promises. In The Mind’s Eye; Elsevier: Amsterdam, The Netherlands, 2003; pp. 573–605. [Google Scholar]

- Li, F.; Lee, C.H.; Feng, S.; Trappey, A.; Gilani, F. Prospective on eye-tracking-based studies in immersive virtual reality. In Proceedings of the 2021 IEEE 24th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Dalian, China, 5–7 May 2021; pp. 861–866. [Google Scholar]

- Sun, X.; Sun, X.; Wang, Q.; Wang, X.; Feng, L.; Yang, Y.; Jing, Y.; Yang, C.; Zhang, S. Biosensors toward behaviour detection in diagnosis of Alzheimer’s disease. Front. Bioeng. Biotechnol. 2022, 10, 1031833. [Google Scholar] [CrossRef]

- Xia, Y.; Khamis, M.; Fernandez, F.A.; Heidari, H.; Butt, H.; Ahmed, Z.; Wilkinson, T.; Ghannam, R. State-of-the-Art in Smart Contact Lenses for Human–Machine Interaction. IEEE Trans. Hum.-Mach. Syst. 2023, 53, 187–200. [Google Scholar] [CrossRef]

- Azevedo, R.; Aleven, V. International Handbook of Metacognition and Learning Technologies; Springer: Berlin/Heidelberg, Germany, 2013; Volume 26. [Google Scholar]

- Ales, F.; Giromini, L.; Zennaro, A. Complexity and cognitive engagement in the Rorschach task: An eye-tracking study. J. Personal. Assess. 2020, 102, 538–550. [Google Scholar] [CrossRef] [PubMed]

- Bergstrom, J.R.; Schall, A. Eye Tracking in User Experience Design; Elsevier: Amsterdam, The Netherlands, 2014. [Google Scholar]

- Partala, T.; Surakka, V. Pupil size variation as an indication of affective processing. Int. J. Hum.-Comput. Stud. 2003, 59, 185–198. [Google Scholar] [CrossRef]

- Hopstaken, J.F.; Van Der Linden, D.; Bakker, A.B.; Kompier, M.A. A multifaceted investigation of the link between mental fatigue and task disengagement. Psychophysiology 2015, 52, 305–315. [Google Scholar] [CrossRef] [PubMed]

- Bradley, M.M.; Miccoli, L.; Escrig, M.A.; Lang, P.J. The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology 2008, 45, 602–607. [Google Scholar] [CrossRef] [PubMed]

- Gilzenrat, M.S.; Nieuwenhuis, S.; Jepma, M.; Cohen, J.D. Pupil diameter tracks changes in control state predicted by the adaptive gain theory of locus coeruleus function. Cogn. Affect. Behav. Neurosci. 2010, 10, 252–269. [Google Scholar] [CrossRef] [PubMed]

- Hess, E.H.; Polt, J.M. Pupil Size in Relation to Mental Activity during Simple Problem-Solving. Science 1964, 143, 1190–1192. [Google Scholar] [CrossRef]

- Zekveld, A.A.; Kramer, S.E.; Festen, J.M. Pupil response as an indication of effortful listening: The influence of sentence intelligibility. Ear Hear. 2010, 31, 480–490. [Google Scholar] [CrossRef]

- Wascher, E.; Heppner, H.; Möckel, T.; Kobald, S.O.; Getzmann, S. Eye-blinks in choice response tasks uncover hidden aspects of information processing. EXCLI J. 2015, 14, 1207. [Google Scholar]

- Sakai, T.; Tamaki, H.; Ota, Y.; Egusa, R.; Imagaki, S.; Kusunoki, F.; Sugimoto, M.; Mizoguchi, H. EDA-based estimation of visual attention by observation of eye blink frequency. Int. J. Smart Sens. Intell. Syst. 2017, 10, 1–12. [Google Scholar] [CrossRef]

- Bentivoglio, A.R.; Bressman, S.B.; Cassetta, E.; Carretta, D.; Tonali, P.; Albanese, A. Analysis of blink rate patterns in normal subjects. Mov. Disord. 1997, 12, 1028–1034. [Google Scholar] [CrossRef] [PubMed]

- Tanaka, Y.; Yamaoka, K. Blink activity and task difficulty. Percept. Mot. Ski. 1993, 77, 55–66. [Google Scholar] [CrossRef] [PubMed]

- Paas, F.; Renkl, A.; Sweller, J. Cognitive load theory and instructional design: Recent developments. Educ. Psychol. 2003, 38, 1–4. [Google Scholar] [CrossRef]

- Sweller, J.; Van Merrienboer, J.J.; Paas, F.G. Cognitive architecture and instructional design. Educ. Psychol. Rev. 1998, 10, 251–296. [Google Scholar] [CrossRef]

- Kirschner, P.A. Cognitive load theory: Implications of cognitive load theory on the design of learning. Learn. Instr. 2002, 12, 1–10. [Google Scholar] [CrossRef]

- Leppink, J.; van den Heuvel, A. The evolution of cognitive load theory and its application to medical education. Perspect. Med. Educ. 2015, 4, 119–127. [Google Scholar] [CrossRef] [PubMed]

- AlQallaf, N.; Ayaz, F.; Bhatti, S.; Hussain, S.; Zoha, A.; Ghannam, R. Solar Energy Systems Design in 2D and 3D: A Comparison of User Vital Signs. In Proceedings of the 2022 29th IEEE International Conference on Electronics, Circuits and Systems (ICECS), Glasgow, UK, 24–26 October 2022; pp. 1–4. [Google Scholar] [CrossRef]

- Dale, E. Audiovisual Methods in Teaching; Dryden Press: New York, NY, USA, 1969. [Google Scholar]

- Dale, A.; Newman, L. Sustainable development, education and literacy. Int. J. Sustain. High. Educ. 2005, 6, 351–362. [Google Scholar] [CrossRef]

- Alsuradi, H.; Eid, M. EEG-based Machine Learning Models to Evaluate Haptic Delay: Should We Label Data Based on Self-Reporting or Physical Stimulation? IEEE Trans. Haptics 2023, 16, 524–529. [Google Scholar] [CrossRef] [PubMed]

- De Lorenzis, F.; Pratticò, F.G.; Repetto, M.; Pons, E.; Lamberti, F. Immersive Virtual Reality for procedural training: Comparing traditional and learning by teaching approaches. Comput. Ind. 2023, 144, 103785. [Google Scholar] [CrossRef]

- Pontonnier, C.; Dumont, G.; Samani, A.; Madeleine, P.; Badawi, M. Designing and evaluating a workstation in real and virtual environment: Toward virtual reality based ergonomic design sessions. J. Multimodal User Interfaces 2014, 8, 199–208. [Google Scholar] [CrossRef]

- Lin, Y.; Lan, Y.; Wang, S. A method for evaluating the learning concentration in head-mounted virtual reality interaction. Virtual Real. 2022, 27, 863–885. [Google Scholar] [CrossRef]

- Shimmersensing. Shimmer3 ECG Unit. In Shimmer Wearable Sensor Technology; Shimmersensing: Dublin, Ireland, 2022. [Google Scholar]

- HP.com. HP Reverb G2 Omnicept Edition. In HP Reverb G2 Omnicept Edition; HP Inc.: Palo Alto, CA, USA, 2022. [Google Scholar]

- Drew, B. Standardization of electrode placement for continuous patient monitoring: Introduction of an assessment tool to compare proposed electrocardiogram lead configurations. J. Electrocardiol. 2011, 44, 115–118. [Google Scholar] [CrossRef] [PubMed]

- Unity Technologies. Unity Real-Time Development Platform | 3D, 2D VR & AR Engine. Available online: https://unity.com/ (accessed on 9 January 2024).

- Murphy, D.; Higgins, C. Secondary inputs for measuring user engagement in immersive VR education environments. arXiv 2019, arXiv:1910.01586. [Google Scholar]

- Sweller, J.; Ayres, P.; Kalyuga, S. Measuring cognitive load. In Cognitive Load Theory; Cambridge University Press: Cambridge, UK, 2011; pp. 71–85. [Google Scholar]

- Goldwater, B.C. Psychological significance of pupillary movements. Psychol. Bull. 1972, 77, 340. [Google Scholar] [CrossRef] [PubMed]

- Chen, S.; Epps, J. Automatic classification of eye activity for cognitive load measurement with emotion interference. Comput. Methods Programs Biomed. 2013, 110, 111–124. [Google Scholar] [CrossRef] [PubMed]

- Laeng, B.; Sulutvedt, U. The eye pupil adjusts to imaginary light. Psychol. Sci. 2014, 25, 188–197. [Google Scholar] [CrossRef] [PubMed]

- Piquado, T.; Isaacowitz, D.; Wingfield, A. Pupillometry as a measure of cognitive effort in younger and older adults. Psychophysiology 2010, 47, 560–569. [Google Scholar] [CrossRef]

- Mathôt, S.; Grainger, J.; Strijkers, K. Pupillary responses to words that convey a sense of brightness or darkness. Psychol. Sci. 2017, 28, 1116–1124. [Google Scholar] [CrossRef] [PubMed]

- Miller, B.W. Using reading times and eye-movements to measure cognitive engagement. Educ. Psychol. 2015, 50, 31–42. [Google Scholar] [CrossRef]

- Albert, W.; Tullis, T. Measuring the User Experience; Elsevier: Amsterdam, The Netherlands, 2010. [Google Scholar]

- Wierda, S.M.; van Rijn, H.; Taatgen, N.A.; Martens, S. Pupil dilation deconvolution reveals the dynamics of attention at high temporal resolution. Proc. Natl. Acad. Sci. USA 2012, 109, 8456–8460. [Google Scholar] [CrossRef]

- Zénon, A.; Sidibé, M.; Olivier, E. Pupil size variations correlate with physical effort perception. Front. Behav. Neurosci. 2014, 8, 286. [Google Scholar] [PubMed]

- Ueda, Y.; Tominaga, A.; Kajimura, S.; Nomura, M. Spontaneous eye blinks during creative task correlate with divergent processing. Psychol. Res. 2016, 80, 652–659. [Google Scholar] [CrossRef] [PubMed]

- Salvi, C.; Bricolo, E.; Franconeri, S.L.; Kounios, J.; Beeman, M. Sudden insight is associated with shutting out visual inputs. Psychon. Bull. Rev. 2015, 22, 1814–1819. [Google Scholar] [CrossRef] [PubMed]

- Ledger, H. The effect cognitive load has on eye blinking. Plymouth Stud. Sci. 2013, 6, 206–223. [Google Scholar]

- Holland, M.K.; Tarlow, G. Blinking and mental load. Psychol. Rep. 1972, 31, 119–127. [Google Scholar] [CrossRef]

- Tobii.com. Metrics. In Tobii XR Devzone; Tobii: Stockholm, Sweden, 2022. [Google Scholar]

- Ritter, K.A.; Chambers, T.L. PV-VR: A Virtual Reality Training Application Using Guided Virtual Tours of the Photovoltaic Applied Research and Testing (PART) Lab. In Proceedings of the 2019 ASEE Annual Conference & Exposition, Tampa, FL, USA, 16–19 June 2019. [Google Scholar]

- Hatzilygeroudis, I.; Kovas, K.; Grivokostopoulou, F.; Palkova, Z. A hybrid educational platform based on virtual world for teaching solar energy. In Proceedings of the EDULEARN14 Proceedings, Barcelona, Spain, 7–9 July 2014; IATED: Seville, Spain, 2014; pp. 522–530. [Google Scholar]

- Grivokostopoulou, F.; Perikos, I.; Kovas, K.; Hatzilygeroudis, I. Learning approaches in a 3D virtual environment for learning energy generation from renewable sources. In Proceedings of the Twenty-Ninth International Flairs Conference, Key Largo, FL, USA, 16–18 May 2016. [Google Scholar]

- Ritter, K., III; Chambers, T.L. Three-dimensional modeled environments versus 360 degree panoramas for mobile virtual reality training. Virtual Real. 2022, 26, 571–581. [Google Scholar] [CrossRef]

- Arntz, A.; Eimler, S.C.; Keßler, D.; Thomas, J.; Helgert, A.; Rehm, M.; Graf, E.; Wientzek, S.; Budur, B. Walking on the Bright Sight: Evaluating a Photovoltaics Virtual Reality Education Application. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), Taichung, Taiwan, 15–17 November 2021; pp. 295–301. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).