Abstract

This paper presents the convergence analysis of a newly proposed algorithm for approximating solutions to split equality variational inequality and fixed point problems in real Hilbert spaces. We establish that, under mild conditions, namely that the variational inequality mappings are quasimonotone and uniformly continuous and the fixed point mappings are quasi-nonexpansive, the sequences generated by the algorithm converge strongly to a solution of the problem. Furthermore, we provide several numerical experiments that demonstrate the practical effectiveness of the proposed method and compare its performance with that of existing algorithms.

1. Introduction

Variational inequality problems (VIPs) and fixed point problems (FPPs) play a central role in optimization and equilibrium theory, with wide-ranging applications in engineering, economics, and operations research. Put simply, an FPP asks for points that remain unchanged under a given transformation, an idea developed by Banach, Brouwer, Schauder, and others [1]. VIPs, on the other hand, model systems in which certain inequality conditions must hold, such as physical or economic equilibria. They were formalized in the 1960s by Stampacchia [2] and Fichera [3].

As problems in real-world systems grew more complex, so did the mathematical models needed to handle them. In many cases, constraints and objectives are distributed across different spaces or subsystems, which traditional VIPs and FPPs cannot fully capture. This gave rise to extended models like the split equality variational inequality problem (SEVIP) and the split equality fixed point problem (SEFPP). These models aim to find solutions that not only satisfy variational inequalities or fixed point conditions but also meet additional coupling constraints that link variables from separate domains.

The idea of splitting constraints across domains originates in the split feasibility problem (SFP), introduced by Censor and Elfving [21] and later generalized by Moudafi into the more flexible split equality feasibility problem (SEFP) [4]. These problems are especially relevant in applications such as medical image reconstruction, signal processing, and decentralized decision-making systems [5,6]. Extending these ideas further, the split equality variational inequality and fixed point problem (SEVIFPP) combines VIP and FPP conditions, making it a powerful framework for tackling hybrid equilibrium problems.

Solving these problems efficiently requires robust iterative algorithms. Early approaches relied on projection methods and the well-known extragradient technique introduced by Korpelevich [7]. Later improvements, such as the subgradient extragradient method [8] and Tseng's modified forward-backward method [9], addressed various limitations, including high computational cost and limited applicability to non-monotone settings. Other researchers introduced inertial and viscosity terms to increase the convergence speed and stability of these algorithms [10,11,12].

More recent contributions by Zhao [13], Kwelegano et al. [14], and others have further advanced the field by designing iterative schemes tailored to the structure of SEVIP and SEVIFPP. These methods are particularly effective when dealing with quasimonotone, pseudomonotone, or quasi-nonexpansive mappings, each of which poses unique mathematical challenges [15,16,17,18,19]. Building on these developments, Mekuriaw et al. [20] recently introduced new inertial-like algorithms that show promising convergence behavior.

Inspired by this evolving body of work, the current study proposes new iterative algorithms to solve SEVIFPP more efficiently. The aim is to develop techniques that not only guarantee convergence under more general conditions but also improve computation time and practical applicability.

Let $\Omega$ be a real Hilbert space and let B be a non-empty, closed, convex subset of $\Omega$. Given an operator $M: B \to B$, the fixed point problem (FPP) seeks an element $x^* \in B$ such that $Mx^* = x^*$; its solution set is denoted by $F(M)$. Fixed point theory has a rich history, originating from foundational results of Poincaré, Brouwer, Kakutani, Schauder, and Banach, in particular the Banach contraction principle [1].
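As a concrete illustration of the Banach contraction principle, Picard iteration $x_{n+1} = Mx_n$ converges to the unique fixed point of a contraction. The following Python sketch assumes the illustrative choice $M(x) = \cos x$ on $[0,1]$, which maps $[0,1]$ into itself and is a contraction there because $|\sin x| \le \sin 1 < 1$; the helper name `picard` is ours and not from the paper.

```python
import math

def picard(M, x0, tol=1e-12, max_iter=1000):
    """Iterate x_{n+1} = M(x_n) until successive iterates differ by less than tol."""
    x = x0
    for n in range(1, max_iter + 1):
        x_next = M(x)
        if abs(x_next - x) < tol:
            return x_next, n
        x = x_next
    raise RuntimeError("no convergence within max_iter iterations")

# cos maps [0, 1] into itself and is a contraction there, since |sin x| <= sin 1 < 1.
fixed_point, iterations = picard(math.cos, 0.5)
print(fixed_point, iterations)
print(abs(math.cos(fixed_point) - fixed_point))  # fixed point residual
```

The stopping rule uses the distance between successive iterates; for a contraction with constant $c$, this also bounds the distance to the fixed point by a factor $c/(1-c)$.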

Variational inequality problems (VIPs) provide another important framework in non-linear analysis and optimization. For a mapping $Z: \Omega \to \Omega$, the classical VIP seeks $x^* \in B$ such that

$$\langle Zx^*, y - x^* \rangle \ge 0 \quad \text{for all } y \in B,$$

with solution set denoted by $VI(B,Z)$. First studied by Stampacchia [2] and Fichera [3], VIPs are crucial for modeling equilibrium problems under constraints, especially in systems with non-linearity or interacting subsystems. A related concept is the Minty variational inequality problem (MVIP), which seeks $x^* \in B$ such that

$$\langle Zy, y - x^* \rangle \ge 0 \quad \text{for all } y \in B,$$

with solution set denoted by $MVI(B,Z)$. If Z is continuous and B is convex, then $MVI(B,Z) \subseteq VI(B,Z)$ [15]; if Z is pseudomonotone and continuous, the two sets coincide [17]; however, if Z is only quasi-monotone, the reverse inclusion $VI(B,Z) \subseteq MVI(B,Z)$ may fail [16].
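The failure of the inclusion $VI(B,Z) \subseteq MVI(B,Z)$ for quasi-monotone operators can be seen on a one-dimensional example. The sketch below assumes the illustrative operator $Z(x) = x^2$ on $B = [-1,1]$ (our choice, not from the paper), which is quasi-monotone but not pseudomonotone. Analytically, the Stampacchia solution set is $\{-1, 0\}$, while the Minty solution set (written $MVI(B,Z)$ here) is $\{-1\}$; a grid check reproduces this.

```python
def Z(x):
    # Quasi-monotone on R but not pseudomonotone (take x = 0 and any y < 0).
    return x * x

# Grid on B = [-1, 1] built from integers so that -1.0 and 0.0 are represented exactly.
B = [i / 1000 for i in range(-1000, 1001)]
TOL = 1e-12

def solves_vi(x):
    """Stampacchia VIP: <Z(x), y - x> >= 0 for all y in B (checked on the grid)."""
    return all(Z(x) * (y - x) >= -TOL for y in B)

def solves_mvi(x):
    """Minty VIP: <Z(y), y - x> >= 0 for all y in B (checked on the grid)."""
    return all(Z(y) * (y - x) >= -TOL for y in B)

vi_points = [x for x in B if solves_vi(x)]
mvi_points = [x for x in B if solves_mvi(x)]
print("VI :", vi_points)   # [-1.0, 0.0]
print("MVI:", mvi_points)  # [-1.0]
```

The point $x = 0$ solves the VIP only because $Z(0) = 0$; the Minty inequality fails there for every $y < 0$.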

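The split equality feasibility problem (SEFP), introduced by Moudafi [4], extends these ideas to multiple domains. Given real Hilbert spaces $\Omega_1$, $\Omega_2$, $\Omega_3$, non-empty closed convex subsets $B \subseteq \Omega_1$ and $E \subseteq \Omega_2$, and bounded linear operators $X: \Omega_1 \to \Omega_3$ and $Y: \Omega_2 \to \Omega_3$, the SEFP seeks $(x^*, y^*)$ such that

$$x^* \in B, \quad y^* \in E, \quad Xx^* = Yy^*.$$

This formulation generalizes the split feasibility problem (SFP) introduced by Censor and Elfving [21] (take $\Omega_2 = \Omega_3$ and $Y = I$) and has applications in image reconstruction, signal processing, and optimization [5,6].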

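By replacing the sets B and E in the SEFP with solution sets of VIPs, we obtain the split equality variational inequality problem (SEVIP):

$$\text{find } x^* \in VI(B,Z) \text{ and } y^* \in VI(E,J) \text{ such that } Xx^* = Yy^*,$$

where Z and J are operators on the Hilbert spaces containing B and E, respectively.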

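Likewise, the split equality fixed point problem (SEFPP) is defined as:

$$\text{find } x^* \in F(M) \text{ and } y^* \in F(N) \text{ such that } Xx^* = Yy^*,$$

where $F(M)$ and $F(N)$ are the fixed point sets of the mappings M and N, respectively.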

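A further extension, the split equality variational inequality and fixed point problem (SEVIFPP), combines both structures. It involves finding

$$x^* \in VI(B,Z) \cap F(M) \text{ and } y^* \in VI(E,J) \cap F(N) \text{ such that } Xx^* = Yy^*,$$

where Z, J, M, and N are non-linear mappings.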

Various projection-type iterative techniques have been developed to solve the VIP under different conditions. The most basic is the projection gradient method:

$$x_{n+1} = P_B(x_n - \lambda Zx_n), \tag{1}$$

where $\lambda > 0$ is a constant. It is well known that if Z is strongly monotone and Lipschitz continuous and λ is sufficiently small, iteration (1) converges to the unique solution of $VI(B,Z)$; if Z is only inverse strongly monotone, the iterates converge weakly to a solution.
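A minimal sketch of the projection gradient iteration $x_{n+1} = P_B(x_n - \lambda Zx_n)$, assuming a box constraint $B = [0,1]^3$ (so that $P_B$ is coordinatewise clipping) and the illustrative operator $Zx = x - c$, which is 1-strongly monotone and 1-Lipschitz. By the variational characterization of the projection, the unique solution of $VI(B,Z)$ is then $P_Bc$. The names `clip` and `projected_gradient` and the data `c` are ours.

```python
def clip(v, lo=0.0, hi=1.0):
    """Metric projection onto the box [lo, hi]^d (coordinatewise clipping)."""
    return [min(max(t, lo), hi) for t in v]

c = [2.0, -0.5, 0.3]

def Z(x):
    """Z(x) = x - c: 1-strongly monotone and 1-Lipschitz."""
    return [xi - ci for xi, ci in zip(x, c)]

def projected_gradient(Z, x0, lam, n_iter=200):
    """x_{n+1} = P_B(x_n - lam * Z(x_n)) with B = [0, 1]^d."""
    x = list(x0)
    for _ in range(n_iter):
        x = clip([xi - lam * zi for xi, zi in zip(x, Z(x))])
    return x

x_star = projected_gradient(Z, [0.0, 0.0, 0.0], lam=0.5)
print(x_star)    # approximately [1.0, 0.0, 0.3]
print(clip(c))   # P_B(c) = [1.0, 0.0, 0.3]
```

With $\lambda = 0.5$ the map $x \mapsto P_B(x - \lambda Zx)$ is a contraction with constant $0.5$ here, so the error halves at every step.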

To relax the strong monotonicity requirement to plain monotonicity, Korpelevich [7] proposed the extragradient method for solving the VIP:

$$y_n = P_B(x_n - \lambda Zx_n), \qquad x_{n+1} = P_B(x_n - \lambda Zy_n), \tag{2}$$

where Z is a monotone, L-Lipschitz continuous mapping from a non-empty, closed, convex subset B of a real Hilbert space into the space itself, and λ is a positive constant. Weak convergence of this method was established for $\lambda \in (0, 1/L)$. Its main drawback is that every iteration requires two metric projections onto B; if B is not simple, the extragradient method becomes complicated and expensive to implement. This motivated several authors to propose modified extragradient techniques.
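The following sketch contrasts the plain projection gradient iteration with the extragradient steps $y_n = P_B(x_n - \lambda Zx_n)$, $x_{n+1} = P_B(x_n - \lambda Zy_n)$ on an illustrative problem of our choosing: the rotation $Z(x_1, x_2) = (x_2, -x_1)$ on $B = [-1,1]^2$, which is monotone and 1-Lipschitz but not strongly monotone, and whose only solution of $VI(B,Z)$ is the origin. With $\lambda = 0.5 \in (0, 1/L)$, the projection gradient iterates keep norm at least 1 (each unprojected step multiplies the norm by $\sqrt{1+\lambda^2}$, and a clipped point has a coordinate equal to $\pm 1$), whereas the extragradient iterates approach the origin.

```python
import math

def proj_box(v):
    """Metric projection onto B = [-1, 1]^2 (coordinatewise clipping)."""
    return [min(max(t, -1.0), 1.0) for t in v]

def Z(x):
    """Rotation: monotone (skew-symmetric), 1-Lipschitz, not strongly monotone."""
    return [x[1], -x[0]]

def step(x, d, lam):
    """Return x - lam * d."""
    return [xi - lam * di for xi, di in zip(x, d)]

def projection_gradient(x, lam, n_iter):
    for _ in range(n_iter):
        x = proj_box(step(x, Z(x), lam))
    return x

def extragradient(x, lam, n_iter):
    for _ in range(n_iter):
        y = proj_box(step(x, Z(x), lam))   # predictor: first projection onto B
        x = proj_box(step(x, Z(y), lam))   # corrector: second projection onto B
    return x

x0, lam = [1.0, 1.0], 0.5   # L = 1, so lam lies in (0, 1/L)
print(math.hypot(*projection_gradient(x0, lam, 500)))  # stays >= 1
print(math.hypot(*extragradient(x0, lam, 500)))        # close to 0
```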

Censor et al. [8] developed the subgradient extragradient method, which addresses this problem by replacing the second projection onto B with a projection onto a simple half-space $T_n$:

$$y_n = P_B(x_n - \lambda Zx_n), \quad T_n = \{w \in \Omega : \langle x_n - \lambda Zx_n - y_n, w - y_n \rangle \le 0\}, \quad x_{n+1} = P_{T_n}(x_n - \lambda Zy_n). \tag{3}$$

Here, $\lambda \in (0, 1/L)$, where L is the Lipschitz constant of Z. They proved that (3) converges weakly to a point of $VI(B,Z)$ if Z is monotone and Lipschitz continuous. Several authors have since studied the convergence of the subgradient extragradient method (3) when Z is monotone or pseudomonotone (see, for instance, [22,23] for some recent findings).
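The half-space projection in the subgradient extragradient method has a closed form: for $a_n = x_n - \lambda Zx_n - y_n \ne 0$ and $T_n = \{w : \langle a_n, w - y_n\rangle \le 0\}$, one has $P_{T_n}(u) = u - \frac{\max\{0, \langle a_n, u - y_n\rangle\}}{\|a_n\|^2}a_n$, while $T_n$ is the whole space when $a_n = 0$. The sketch below uses this formula on an illustrative problem of our choosing, the rotation $Z(x_1,x_2) = (x_2, -x_1)$ on $B = [-1,1]^2$, whose only solution is the origin; only one projection onto B is computed per iteration.

```python
import math

def proj_box(v):
    """Metric projection onto B = [-1, 1]^2."""
    return [min(max(t, -1.0), 1.0) for t in v]

def proj_halfspace(u, a, y):
    """Projection of u onto T = {w : <a, w - y> <= 0}; T is the whole space if a = 0."""
    aa = sum(ai * ai for ai in a)
    if aa == 0.0:
        return list(u)
    viol = sum(ai * (ui - yi) for ai, ui, yi in zip(a, u, y))
    if viol <= 0.0:
        return list(u)                       # u already lies in T
    return [ui - (viol / aa) * ai for ui, ai in zip(u, a)]

def Z(x):
    return [x[1], -x[0]]                     # monotone, 1-Lipschitz

def subgradient_extragradient(x, lam, n_iter):
    for _ in range(n_iter):
        zx = Z(x)
        u = [xi - lam * zi for xi, zi in zip(x, zx)]
        y = proj_box(u)                              # the only projection onto B
        a = [ui - yi for ui, yi in zip(u, y)]        # normal vector of T_n
        zy = Z(y)
        v = [xi - lam * zi for xi, zi in zip(x, zy)]
        x = proj_halfspace(v, a, y)                  # explicit half-space projection
    return x

x = subgradient_extragradient([1.0, 1.0], lam=0.5, n_iter=500)
print(math.hypot(*x))  # close to 0
```

By (P2), B is contained in $T_n$, which is why projecting onto the cheaper set $T_n$ is admissible.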

It was later observed that each iteration of the method of Censor et al. [8] still requires two projections and two evaluations of Z. This makes the subgradient extragradient method (3) computationally costly in applications where Z has a complex structure and is expensive to evaluate.

Tseng [9] introduced the following extragradient-type method in 2000:

$$y_n = P_B(x_n - \lambda Zx_n), \qquad x_{n+1} = y_n - \lambda(Zy_n - Zx_n), \tag{4}$$

where $\lambda \in (0, 1/L)$ and L is the Lipschitz constant of Z. He proved that the sequence $\{x_n\}$ converges weakly to a point of $VI(B,Z)$. Compared with the method of Censor et al., Tseng's method needs only one projection onto the feasible set per iteration. Numerous extensions of Tseng's algorithm have been reported in the literature (see, for example, [24,25,26]).
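Tseng's scheme replaces the second projection by an explicit forward correction, $y_n = P_B(x_n - \lambda Zx_n)$, $x_{n+1} = y_n - \lambda(Zy_n - Zx_n)$. The sketch below assumes an illustrative monotone affine operator $Z(x_1,x_2) = (x_2 - 0.5,\ -x_1 + 0.25)$ on $B = [-1,1]^2$ (Lipschitz constant 1, so $\lambda = 0.5$ is admissible); its zero $(0.25, 0.5)$ lies inside B and is the unique solution of $VI(B,Z)$.

```python
import math

def proj_box(v):
    """Metric projection onto B = [-1, 1]^2."""
    return [min(max(t, -1.0), 1.0) for t in v]

def Z(x):
    """Monotone affine operator (skew part plus shift); its zero (0.25, 0.5) lies in B."""
    return [x[1] - 0.5, -x[0] + 0.25]

def tseng(x, lam, n_iter):
    for _ in range(n_iter):
        zx = Z(x)
        y = proj_box([xi - lam * zi for xi, zi in zip(x, zx)])   # the only projection
        zy = Z(y)
        x = [yi - lam * (a - b) for yi, a, b in zip(y, zy, zx)]  # forward correction step
    return x

x = tseng([1.0, -1.0], lam=0.5, n_iter=500)
print(x)  # approximately [0.25, 0.5]
```

Note that the corrected iterate $x_{n+1}$ need not lie in B; only $y_n$ is guaranteed to be feasible.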

In 2022, Kwelegano et al. [14] proposed the following iterative method for solving the SEVIP in Hilbert spaces.

Let . Choose initial points arbitrarily.

For , compute simultaneously

where

and and and are the smallest non-negative integers t and v, respectively, such that

and the sequences . In addition, the mappings and are pseudomonotone, uniformly continuous, and sequentially weakly continuous on bounded subsets of the non-empty, closed, convex subsets B and E of and , respectively. They showed that, under appropriate assumptions, the sequence generated by (5) converges strongly to a solution of the SEVIP. Several authors have used such line search strategies to remove the requirement that the underlying mappings Z and J be Lipschitz continuous.
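The exact line search rules of the method above are not reproduced here. As a hedged illustration of the general idea only, the sketch below uses a generic Armijo-type backtracking rule of the kind used in Tseng-type methods, not the rule of [14]: $\lambda_n = \gamma\ell^{t}$ with t the smallest non-negative integer such that $\lambda_n|Zx_n - Zy_n| \le \mu|x_n - y_n|$, where $y_n = P_B(x_n - \lambda_n Zx_n)$. The operator $Z(x) = \operatorname{sign}(x)\sqrt{|x|}$ on $B = [-1,1]$ is our illustrative choice: it is monotone and uniformly continuous but not Lipschitz near 0, so no fixed step $\lambda < 1/L$ is available. The parameter names `gamma`, `ell`, and `mu` are ours.

```python
import math

def Z(x):
    """Monotone and uniformly continuous on R, but not Lipschitz at 0."""
    return math.copysign(math.sqrt(abs(x)), x)

def proj(x, lo=-1.0, hi=1.0):
    """Metric projection onto B = [lo, hi]."""
    return min(max(x, lo), hi)

def armijo_step(x, gamma=1.0, ell=0.5, mu=0.9, max_t=100):
    """Return (lam, y, t) with lam = gamma * ell**t and t the smallest integer such that
    lam * |Z(x) - Z(y)| <= mu * |x - y|, where y = P_B(x - lam * Z(x))."""
    zx = Z(x)
    for t in range(max_t):
        lam = gamma * ell ** t
        y = proj(x - lam * zx)
        if lam * abs(zx - Z(y)) <= mu * abs(x - y):
            return lam, y, t
    raise RuntimeError("line search did not terminate within max_t trials")

def tseng_linesearch(x, n_iter=200):
    for _ in range(n_iter):
        lam, y, _ = armijo_step(x)
        x = y - lam * (Z(y) - Z(x))   # Tseng-type correction with the accepted step
    return x

print(armijo_step(0.8))        # accepted after one halving (t = 1)
print(tseng_linesearch(0.8))   # tends to the solution 0 of VI([-1, 1], Z)
```

The accepted step shrinks as the iterates approach 0, which is exactly how the line search compensates for the missing Lipschitz constant; continuity of Z is what guarantees that the backtracking loop terminates.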

In 2015, Zhao [13] presented the following iterative procedure for quasi-nonexpansive mappings Z and J with non-empty fixed point sets F(Z) and F(J):

Zhao showed that, under certain assumptions, procedure (6) converges weakly to a solution of the split equality fixed point problem, without requiring prior knowledge of the norms of X and Y.
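The following sketch illustrates how a split equality problem can be solved without knowing $\|X\|$ or $\|Y\|$. It is not Zhao's scheme (6), whose formulas are not reproduced here: we assume projections $P_B$ and $P_E$ as the quasi-nonexpansive mappings and a self-adaptive step $\gamma_n = \rho\,\|Xx_n - Yy_n\|^2 / (\|X^*(Xx_n - Yy_n)\|^2 + \|Y^*(Xx_n - Yy_n)\|^2)$ with $\rho \in (0,2)$, which depends only on the current residual. The data $X = I$, $Y = \operatorname{diag}(2,1)$, and $B = E = [0,1]^2$ are illustrative assumptions.

```python
import math

def matvec(A, v):
    return [sum(a * b for a, b in zip(row, v)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def proj_box(v, lo=0.0, hi=1.0):
    return [min(max(t, lo), hi) for t in v]

X = [[1.0, 0.0], [0.0, 1.0]]
Y = [[2.0, 0.0], [0.0, 1.0]]
Xt, Yt = transpose(X), transpose(Y)   # adjoints of real matrices are transposes

def sefp(x, y, rho=1.0, n_iter=200, tol=1e-14):
    for _ in range(n_iter):
        r = [a - b for a, b in zip(matvec(X, x), matvec(Y, y))]   # residual X x - Y y
        gx, gy = matvec(Xt, r), matvec(Yt, r)                      # X* r and Y* r
        rr = sum(t * t for t in r)
        denom = sum(t * t for t in gx) + sum(t * t for t in gy)
        if math.sqrt(rr) < tol or denom == 0.0:
            break
        gamma = rho * rr / denom                                   # no operator norms needed
        x = proj_box([a - gamma * b for a, b in zip(x, gx)])
        y = proj_box([a + gamma * b for a, b in zip(y, gy)])
    return x, y

x, y = sefp([1.0, 0.0], [1.0, 1.0])
residual = [a - b for a, b in zip(matvec(X, x), matvec(Y, y))]
print(x, y, residual)
```

For any solution $(p, q)$, this step decreases $\|x_n - p\|^2 + \|y_n - q\|^2$ by at least $\rho(2-\rho)\|r_n\|^4 / (\|X^*r_n\|^2 + \|Y^*r_n\|^2)$, which is why no bound on the operator norms is needed.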

In 2022, Tan et al. [11] proposed the following method for finding a common solution of the fixed point problem of a demicontractive mapping J and the variational inequality problem of a monotone, Lipschitz continuous mapping Z.

Using inertial and viscosity techniques, method (7) converges strongly to a point of $VI(B,Z) \cap F(J)$.

The works of Tan and Cho [10], Kwelegano et al. [14], Thong and Vuong [27], Zhao [13], and Polyak [28] motivate the study of split equality variational inequality and fixed point problems in Hilbert spaces involving uniformly continuous quasi-monotone mappings (for the MVIP) and demiclosed quasi-nonexpansive mappings (for the fixed point problem). Motivated by these works, Mekuriaw, Zegeye, Takele, and Tufa [20] proposed an inertial-like subgradient extragradient algorithm and an inertial-like Tseng subgradient extragradient method. In this direction, we extend the recent results of Mekuriaw, Zegeye, Takele, and Tufa [20] and propose a new algorithm for solving the SEVIFPP.

2. Preliminaries

This section reviews the core definitions and known results needed to prove our main results. Throughout, let Ω be a real Hilbert space with norm $\|\cdot\|$ and inner product $\langle \cdot, \cdot \rangle$. We write $r_n \rightharpoonup r$ to indicate that the sequence $\{r_n\}$ converges weakly to r, and $r_n \to r$ to indicate that $\{r_n\}$ converges strongly to r. Recall that the metric (nearest point) projection of Ω onto B, denoted by $P_B$, is defined as follows: for each $x \in \Omega$ there is a unique nearest point in B, denoted by $P_Bx$, such that

$$\|x - P_Bx\| = \inf_{y \in B} \|x - y\|.$$

Here, B is a non-empty, closed, convex subset of the Hilbert space Ω.

Definition 1

([29]). Let Ω be a real Hilbert space. A non-linear operator $Z: \Omega \to \Omega$ is called L-Lipschitz continuous if there is a constant $L > 0$ such that $\|Zx - Zy\| \le L\|x - y\|$ for all $x, y \in \Omega$.

Definition 2

([29]). Let Ω be a real Hilbert space. A non-linear operator $Z: \Omega \to \Omega$ is called a contraction if there is a constant $c \in [0, 1)$ such that $\|Zx - Zy\| \le c\|x - y\|$ for all $x, y \in \Omega$.

Definition 3

([29]). Let Ω be a Hilbert space. An operator $Z: \Omega \to \Omega$ is called nonexpansive if $\|Zx - Zy\| \le \|x - y\|$ for all $x, y \in \Omega$.

Definition 4

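([29]). Let Ω be a Hilbert space and let $Z: \Omega \to \Omega$ be a non-linear operator. Then Z is called quasi-nonexpansive if $F(Z)$ is non-empty and

$$\|Zx - p\| \le \|x - p\| \quad \text{for all } x \in \Omega,\ p \in F(Z).$$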

Definition 5

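([29]). A non-linear operator $Z: \Omega \to \Omega$ is called firmly nonexpansive if $2Z - I$ is nonexpansive, or equivalently,

$$\langle Zx - Zy, x - y \rangle \ge \|Zx - Zy\|^2 \quad \text{for all } x, y \in \Omega,$$

or, equivalently, Z is firmly nonexpansive if and only if it can be written as

$$Z = \tfrac{1}{2}(I + T),$$

where T is nonexpansive. Projections are firmly nonexpansive.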

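The following properties of $P_B$ are well known.

- (P1) $\langle P_Bx - P_By, x - y \rangle \ge \|P_Bx - P_By\|^2$ for all $x, y \in \Omega$. Thus, $P_B$ is firmly nonexpansive; in particular, $P_B$ is nonexpansive from Ω onto B.

- (P2) For $x \in \Omega$ and $z \in B$, we have $z = P_Bx$ if and only if $\langle x - z, y - z \rangle \le 0$ for all $y \in B$.

- (P3) Consequently, for each $x \in \Omega$ and $y \in B$, we obtain $\|y - P_Bx\|^2 + \|x - P_Bx\|^2 \le \|x - y\|^2$.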
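The three projection inequalities can be sanity-checked numerically for a set with a closed-form projection. The sketch below assumes B is the closed unit ball in $\mathbb{R}^3$, for which $P_Bx = x/\max\{1,\|x\|\}$, and tests at random points that $\langle P_Bx - P_By, x - y\rangle \ge \|P_Bx - P_By\|^2$, that $\langle x - P_Bx, z - P_Bx\rangle \le 0$ for $z \in B$, and that $\|z - P_Bx\|^2 + \|x - P_Bx\|^2 \le \|x - z\|^2$ for $z \in B$. It is an illustration, not a proof.

```python
import math
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def proj_ball(x):
    """Projection onto the closed unit ball: x / max(1, ||x||)."""
    n = math.sqrt(dot(x, x))
    return list(x) if n <= 1.0 else [t / n for t in x]

def random_point(scale=3.0):
    return [random.uniform(-scale, scale) for _ in range(3)]

random.seed(0)
TOL = 1e-12
for _ in range(10_000):
    x, y = random_point(), random_point()
    px, py = proj_ball(x), proj_ball(y)
    d = sub(px, py)
    assert dot(d, sub(x, y)) >= dot(d, d) - TOL               # firm nonexpansiveness (P1)
    z = proj_ball(random_point())                             # an arbitrary point of B
    assert dot(sub(x, px), sub(z, px)) <= TOL                 # variational characterization (P2)
    lhs = dot(sub(z, px), sub(z, px)) + dot(sub(x, px), sub(x, px))
    assert lhs <= dot(sub(x, z), sub(x, z)) + TOL             # (P3)
print("P1-P3 hold at all sampled points")
```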

Definition 6

([30]). Let Ω be a real Hilbert space. A non-linear operator $Z: \Omega \to \Omega$ is called monotone if it satisfies

$$\langle Zx - Zy, x - y \rangle \ge 0 \quad \text{for all } x, y \in \Omega.$$

Definition 7

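([31]). Let Ω be a real Hilbert space. An operator $Z: \Omega \to \Omega$ is called pseudomonotone if

$$\langle Zx, y - x \rangle \ge 0 \implies \langle Zy, y - x \rangle \ge 0 \quad \text{for all } x, y \in \Omega.$$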

Definition 8

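([31]). A non-linear operator $Z: \Omega \to \Omega$ is called quasi-monotone if

$$\langle Zx, y - x \rangle > 0 \implies \langle Zy, y - x \rangle \ge 0 \quad \text{for all } x, y \in \Omega.$$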
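Monotone operators are pseudomonotone, and pseudomonotone operators are quasi-monotone, but not conversely. A minimal grid check of the three defining implications, assuming two illustrative operators on $\mathbb{R}$ (with $\langle x, y\rangle = xy$) that are not from the paper: $Z_1(x) = x$, which is monotone, and $Z_2(x) = x^2$, which is quasi-monotone but neither monotone nor pseudomonotone (the pair $x = 0$, $y = -0.5$ violates pseudomonotonicity). The function name `classify` is ours.

```python
def classify(Z, points):
    """Test the monotone / pseudomonotone / quasi-monotone implications on all grid pairs.
    A finite check can refute a property but cannot prove it."""
    monotone = pseudo = quasi = True
    for x in points:
        for y in points:
            if (Z(x) - Z(y)) * (x - y) < 0:
                monotone = False
            if Z(x) * (y - x) >= 0 and Z(y) * (y - x) < 0:
                pseudo = False
            if Z(x) * (y - x) > 0 and Z(y) * (y - x) < 0:
                quasi = False
    return {"monotone": monotone, "pseudomonotone": pseudo, "quasimonotone": quasi}

grid = [i / 10 for i in range(-20, 21)]
print(classify(lambda x: x, grid))
# {'monotone': True, 'pseudomonotone': True, 'quasimonotone': True}
print(classify(lambda x: x * x, grid))
# {'monotone': False, 'pseudomonotone': False, 'quasimonotone': True}
```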

Definition 9

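([31]). Let $Z: \Omega \to \Omega$ be a non-linear operator with $F(Z) \ne \emptyset$. The operator $I - Z$, where I is the identity operator on Ω, is said to be demiclosed at zero if, for any sequence $\{x_n\} \subset \Omega$, the following implication holds:

$$x_n \rightharpoonup x \ \text{and} \ \|x_n - Zx_n\| \to 0 \implies x \in F(Z).$$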

Lemma 1

([32]). Let Ω be a real Hilbert space. Then the following results hold:

(1) .

(2) .

(3) , where .

Lemma 2

([33]). If $Z: B \to B$ is a continuous quasi-nonexpansive operator, where B is a closed convex subset of a Hilbert space Ω, then $F(Z)$ is closed, convex, and non-empty.

Lemma 3

([34]). Suppose that , where and are real Hilbert spaces. If and , where C is a non-empty, closed, convex subset of Ω, then we have

Lemma 4

([35]). Let be an operator and let B be a non-empty, closed, convex subset of a real Hilbert space Ω. Then the following inequality holds:

Lemma 5

([16]). Let $Z: \Omega \to \Omega$ be an operator, where Ω is a Hilbert space, and let B be a non-empty, closed, convex subset of Ω. If one of the following conditions holds:

(i) Z is pseudomonotone on B and $VI(B,Z) \ne \emptyset$;

(ii) Z is quasi-monotone on B, $Z \ne 0$ on B, and B is bounded;

(iii) Z is quasi-monotone on B, and there exists such that

then the solution set $MVI(B,Z)$ of the MVIP is non-empty.

Lemma 6

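([36]). Let $\{\Gamma_n\}$ be a sequence of non-negative real numbers and let $\{\Gamma_{n_i}\}$ be a subsequence of $\{\Gamma_n\}$ satisfying $\Gamma_{n_i} < \Gamma_{n_i+1}$ for all $i \in \mathbb{N}$. Then there exists a non-decreasing sequence $\{m_k\} \subset \mathbb{N}$ such that $m_k \to \infty$ and, for all sufficiently large k,

$$\max\{\Gamma_{m_k}, \Gamma_k\} \le \Gamma_{m_k+1}.$$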
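The non-decreasing index sequence in this lemma can be constructed explicitly. In the standard construction (due to Maingé), one takes $\tau(n) = \max\{k \le n : \Gamma_k < \Gamma_{k+1}\}$ for all n beyond the first increase; then $\tau$ is non-decreasing, $\tau(n) \to \infty$, and $\max\{\Gamma_{\tau(n)}, \Gamma_n\} \le \Gamma_{\tau(n)+1}$. The sketch below computes $\tau$ for a finite, illustrative sequence of our choosing and checks these properties; the function name `tau_sequence` is ours.

```python
def tau_sequence(gamma):
    """tau(n) = max{k <= n : gamma[k] < gamma[k+1]} for each n where the set is non-empty."""
    tau, last = {}, None
    for n in range(len(gamma) - 1):
        if gamma[n] < gamma[n + 1]:
            last = n
        if last is not None:
            tau[n] = last
    return tau

# Illustrative non-negative sequence that is not eventually non-increasing.
gamma = [1.0 if n % 4 == 0 else 1.0 / (n + 1) for n in range(40)]
tau = tau_sequence(gamma)
ns = sorted(tau)
assert all(tau[a] <= tau[b] for a, b in zip(ns, ns[1:]))                  # non-decreasing
assert all(max(gamma[tau[n]], gamma[n]) <= gamma[tau[n] + 1] for n in ns)  # key inequality
print(ns[0], [tau[n] for n in ns[:10]])
```

Between two consecutive increases the sequence is non-increasing, which is exactly why $\Gamma_n \le \Gamma_{\tau(n)+1}$ holds.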

Lemma 7

([37]). Assume that is a sequence of real numbers and is a sequence of non-negative real numbers such that fulfilling

If , then .

Lemma 8

([20]). Assume that conditions (C1)–(C6) described in Section 3 hold. Suppose and . Let and be sequences generated by the proposed algorithm. Let be a subsequence of such that and . Then .

3. Refined Algorithm for Split Equality Variational Inequality and Fixed Point Problems

This section presents the convergence analysis of the refined inertial-like subgradient extragradient algorithm. Throughout, we make the following assumptions.

- (C1) Let B and E be non-empty, closed, convex subsets of the real Hilbert spaces $\Omega_1$ and $\Omega_2$, respectively.

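- (C2) Let $Z: \Omega_1 \to \Omega_1$ and $J: \Omega_2 \to \Omega_2$ be quasi-monotone and uniformly continuous, and suppose that $\|Zx\| \le \liminf_{n\to\infty} \|Zx_n\|$ and $\|Jy\| \le \liminf_{n\to\infty} \|Jy_n\|$ whenever $\{x_n\}$ and $\{y_n\}$ are sequences in B and E, respectively, such that $x_n \rightharpoonup x$ and $y_n \rightharpoonup y$.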

- (C3) Let $M: \Omega_1 \to \Omega_1$ and $N: \Omega_2 \to \Omega_2$ be quasi-nonexpansive mappings such that $I - M$ and $I - N$ are demiclosed at zero.

- (C4) Let $X: \Omega_1 \to \Omega_3$ and $Y: \Omega_2 \to \Omega_3$ be bounded linear mappings, and let $X^*$ and $Y^*$ be the adjoints of X and Y, respectively, where $\Omega_3$ is another real Hilbert space.

- (C5) Let .

- (C6) Let and be the sequences satisfying and , where with and , for some .

Proposed Inertial-like Subgradient Extragradient Algorithm

Initialization: Let . Set . Iterative steps:

Step 1. Given the iterates and in , choose such that , where

Step 2. Set

Step 3. Compute

where

and the smallest non-negative integer i satisfying

and and the smallest non-negative integer v satisfying

Step 4. Compute

where with

for , otherwise , for some . Set and go to Step 1.
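Steps 1 and 2 follow the usual inertial pattern, but the exact bound on the inertial parameter is not reproduced above. The sketch below assumes the rule most commonly used in inertial extragradient papers, $\bar\theta_n = \min\{\theta, \epsilon_n/\|x_n - x_{n-1}\|\}$ if $x_n \ne x_{n-1}$ and $\bar\theta_n = \theta$ otherwise, followed by the extrapolation $w_n = x_n + \theta_n(x_n - x_{n-1})$. The names `theta` and `eps_n`, the choice $\theta_n = \bar\theta_n$, and the sample sequence $\epsilon_n = 1/(n+1)^2$ are our assumptions, not the paper's.

```python
import math

def inertial_point(x_curr, x_prev, theta, eps_n):
    """Return (theta_n, w_n) with theta_n = min(theta, eps_n / ||x_n - x_{n-1}||)
    (theta itself if the iterates coincide) and w_n = x_n + theta_n * (x_n - x_{n-1})."""
    diff = [a - b for a, b in zip(x_curr, x_prev)]
    norm = math.sqrt(sum(d * d for d in diff))
    theta_n = theta if norm == 0.0 else min(theta, eps_n / norm)
    w = [a + theta_n * d for a, d in zip(x_curr, diff)]
    return theta_n, w

# By construction theta_n * ||x_n - x_{n-1}|| <= eps_n, so the inertial term is controlled.
n = 9
theta_n, w = inertial_point([1.0, 2.0], [0.0, 0.0], theta=0.5, eps_n=1.0 / (n + 1) ** 2)
print(theta_n, w)
```

Capping $\theta_n$ in this way keeps the extrapolation $\theta_n\|x_n - x_{n-1}\|$ no larger than $\epsilon_n$, which is the kind of control convergence proofs of inertial methods typically rely on.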

Remark 1.

Similarly, we get and .

Remark 2.

Proof.

If we consider the case when , then . Thus, we have and hence (11) is satisfied for . Let and assume on the contrary that

That is,

Since is continuous, we have

which implies by the uniform continuity of Z on B that

Now, put . Then, by the projection property (P2) we have

which implies that

Taking the limit as of (21) and making use of (17) and (19), we obtain that , which is a contradiction to our assumption that . Thus, there exists a non-negative integer t which satisfies (11). Thus, (11) is well-defined. The proof concerning line search rule (12) is similar and hence the proof is complete. □

Theorem 1.

Suppose conditions (C1)–(C6) are satisfied. Then the sequences and generated by the refined algorithm are bounded.

Proof.

Suppose and let

Then, we obtain

and

Moreover, we have from the quasi-nonexpansive property of M that

Similarly, we obtain that

Substituting (25) into (23), we get

Similarly, we get

From (13), (22) and Lemma 1, we get

Similarly, we have

Combining (29) and (30), we get

Substituting (27) and (28) into (31), we obtain

Since , using the definition of in (10), property (P1) of metric projection and Lemma 1, we have

Thus, the inequality in (33) implies that

Since , we have . Taking and rearranging, we get .

Hence,

Using (34) and (35) and Lemma 1,

Since by definition of and by (P2), we obtain 0. This, together with the definition of , the Cauchy-Schwarz inequality, and the fact that for any two real numbers x and y, gives

Using (37) in (36), we obtain

Similarly, we get

Adding (38) and (39), we get

Now, using the definition of in (13) and property (P3) of the metric projection , we have

Similarly,

Adding (41) and (42) and using (14) for the case , we have

For the case in (14), we can easily show that (43) holds. Using the definitions of and given in (8) and applying Lemma 1, we have

and

which imply that

Substituting (40) and (43) into (32), we obtain

Hence,

Substituting (44) into (46) and taking the properties of and into account, we obtain

which can be written as

where

Theorem 2.

Suppose conditions (C1)–(C6) hold. Let and . Then, the sequence produced by the refined algorithm strongly converges to a point such that .

Proof.

Let . From the definitions of and , (22), (27), (28) and Lemma 1 we have

this implies

But (40) and (43) imply that and , respectively. Thus, by putting these two inequalities into (51), we get

From Theorem 1, the sequences and are bounded. Thus, from the boundedness of and , we obtain that

for some . Similarly, we have

for some .

Case 1. Let .

Since it is bounded, exists. Then, taking the limit on both sides of (58) as and taking the conditions on the parameters into account, we obtain

In the same way, we also get

Furthermore, we have,

and

By the definition of in (13) and property (P3) of metric projection, we have

Thus, combining (61) and (63), we get

By the nonexpansiveness of and (62), we have

By the boundedness of and Theorem 1, there is a subsequence of with and

Hence, from (59) and (60), we get

Now, from (8), we get that and as and hence and . Using (67) and Lemma 8, we obtain . From (64), we obtain

Thus, we have from (68) that , which together with (62) and the demiclosedness of gives that . Similarly, we obtain that . Hence, we obtain

Then, using Lemma 1 (2), we get

Since X is a bounded linear mapping, we get , and similarly we have .

Thus, taking the limsup on both sides of (69) and using (61), we obtain . Thus, we obtain and hence . In addition, since , Equation (66) and Lemma 3 give

From (8) and (13),

From (64) and (65), we have that

Using the assumptions on and , and since is bounded, Remark 1, (59), and (72) imply that the right-hand side of the last inequality in (71) tends to zero.

Hence, combining the assumption on , Remark 1 and (75), we obtain 0. Therefore, from (56) and Lemma 7, we get which implies that and as .

Case 2. Assume that there is a subsequence of such that . In this case, by Lemma 6, there exists a non-decreasing sequence of such that and the following inequality holds for :

From (56) we have

Combining (76) and (77) we obtain

This implies that

Reasoning as in Case 1, we conclude that 0, which implies that , that is, as . □

4. Numerical Examples

In this section, we provide numerical examples illustrating the behavior of the proposed algorithm.

Example 1.

Let be Hilbert spaces with norm and inner product for . Let and , which are non-empty, closed, convex subsets of . We define Z and J on by:

if

Since the denominator in is positive because , it follows that . Since , this holds only if . Now, we check the implication required for quasi-monotonicity:

as and if then

This shows that Z is a quasi-monotone operator. In the same way, we can show that J is quasi-monotone.

The distance between the points is

The distance between their function values

By the definition of uniform continuity, we want to find δ such that which implies that . Notice that the function is continuous because it transitions smoothly between 0 and 1 as moves from , with no jumps or discontinuities. Also, the function is bounded, since its values lie between 0 and 1. This function is uniformly continuous on the real line because its derivative is bounded: by the mean value theorem, a bounded derivative yields a global Lipschitz constant. Specifically:

as , as and as . So the derivative function lies between 0 and . Thus, the derivative function is bounded, i.e.,

Since the maximum of is which gives Lipschitz constant and implies that

if we want

we can use the Lipschitz condition, which implies that . So for a given ϵ, this implies that . Hence, Z is uniformly continuous.
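The argument above (bounded derivative, hence Lipschitz, hence uniformly continuous with $\delta = \epsilon/L$) can be checked numerically. Since the specific operator of Example 1 is not reproduced here, the sketch assumes a hypothetical function of the same type, $f(x) = x^2/(1+x^2)$, whose values lie in $[0,1)$ and whose derivative $f'(x) = 2x/(1+x^2)^2$ attains its maximum absolute value $3\sqrt3/8$ at $x = \pm 1/\sqrt3$. The helper `delta_for` is ours.

```python
import math
import random

def f(x):
    """Hypothetical stand-in for the operator of Example 1 (values in [0, 1))."""
    return x * x / (1.0 + x * x)

L = 3.0 * math.sqrt(3.0) / 8.0   # sup |f'(x)|, attained at x = +-1/sqrt(3)

def delta_for(eps):
    """The Lipschitz bound |f(x) - f(y)| <= L |x - y| gives delta = eps / L."""
    return eps / L

random.seed(1)
eps = 1e-3
delta = delta_for(eps)
worst = 0.0
for _ in range(100_000):
    x = random.uniform(-50.0, 50.0)
    y = x + random.uniform(-delta, delta)   # |x - y| <= delta
    worst = max(worst, abs(f(x) - f(y)))
print(delta, worst, worst < eps)
```

The choice of δ does not depend on the location of x, which is precisely what distinguishes uniform continuity from pointwise continuity.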

Similarly, it can be shown that J is a quasi-monotone and uniformly continuous mapping on .

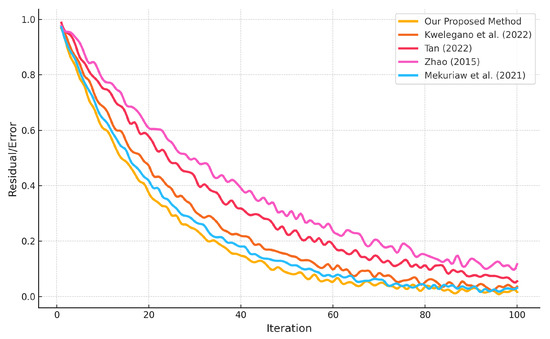

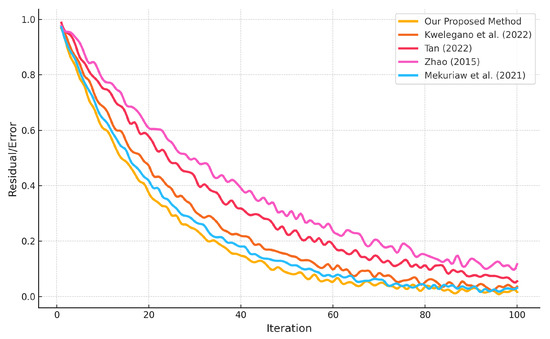

If we also define and by and , respectively, then X and Y are both bounded linear mappings with adjoint mappings and given by and , respectively. Let be given by and , so that both are quasi-nonexpansive mappings. Take and with given point and initial points and . Then the conditions are satisfied. Using MATLAB R2020a, we obtain Figure 1, which shows that the sequences generated by the previously introduced inertial-like subgradient extragradient method and by our proposed inertial-like subgradient extragradient algorithm (see the subsection Proposed Inertial-like Subgradient Extragradient Algorithm in Section 3) converge strongly to the solution.

Figure 1.

Convergence of with tolerance [10,13,14,20].

Example 2.

Let with norm for all and inner product for all . Consider and . Then, B and E are closed and convex subsets of Ω. Let be defined by

and

Both operators are defined piecewise, so we consider two cases for each. We begin with Z.

Case (i): if

which implies

It is clear that and, since , this holds only if . Now, we check the implication required for quasi-monotonicity:

as therefore

This establishes quasi-monotonicity in case (i).

Case (ii): if

since for all and , this holds only if . Now, we check the implication:

as and ; thus,

Hence, Z is a quasi-monotone operator. Similarly, J is also quasi-monotone.

We now check the uniform continuity of Z.

Case (i): , here

The expression inside the absolute value is affine in its argument, and affine functions are uniformly continuous. Taking the absolute value preserves uniform continuity, because $||a| - |b|| \le |a - b|$ for all real a and b; hence is uniformly continuous.

Case (ii): , here

Since is bounded on this domain and its derivative is bounded on B, it is uniformly continuous there. Hence, for all the difference between can be made arbitrarily small by controlling the difference and . Therefore, Z is uniformly continuous on each part of its piecewise definition. Similarly, J is uniformly continuous.

Now, we check which values of satisfy the MVI. Let us try . Since if , only one case of Z arises here: if , i.e., if , then .

Check for

Therefore, . Similarly, we can see that . Define the mappings by

and

If , , thus and if in this case also, . Therefore, likewise and thus and

Moreover, we have

thus, M is quasi-nonexpansive. Similarly, one can show that N is quasi-nonexpansive. Also note that

By Definition 9, assume and . If , this implies , and if , this implies ; by the convergence property, both cases imply that .

If then

and if then

Both cases imply that is demiclosed at zero. Similarly, N is demiclosed at zero.

Define by and . Then, X and Y are bounded linear mappings and and . Moreover, we have that and hence . Taking and , the conditions are satisfied.

5. Conclusions

In this study, we introduce a novel inertial-like subgradient extragradient algorithm designed to solve split equality variational inequality and fixed point problems in real Hilbert spaces. The proposed method generates strongly convergent sequences under the assumption that the involved mappings are quasi-monotone and uniformly continuous, an assumption that generalizes the more restrictive Lipschitz continuity and pseudomonotonicity commonly found in the existing literature. To enhance the performance of the algorithm, we incorporated both inertial and subgradient-based strategies. Additionally, we explored potential applications of our approach to various related problems. Finally, we provided numerical examples to illustrate the effectiveness and practical utility of the proposed methods.

Author Contributions

Conceptualization, K.A. (Khushdil Ahmad) and K.S.; methodology, K.S.; software, K.A. (Khushdil Ahmad); validation, K.A. (Khadija Ahsan) and K.A. (Khushdil Ahmad); formal analysis, K.A. (Khadija Ahsan); investigation, K.A. (Khadija Ahsan); resources, K.A. (Khushdil Ahmad); data curation, K.A. (Khushdil Ahmad); writing—original draft preparation, K.A. (Khadija Ahsan); writing—review and editing, K.A. (Khushdil Ahmad); visualization, K.S.; supervision, K.S.; project administration, K.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The second author expresses their thanks to the Office of Research Innovation and Commercialization (ORIC), Government College University, Lahore, Pakistan, for its generous support and for facilitating this research work under project #0421/ORIC/24.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Banach, S. Sur les operations dans les ensembles abstraits et leur application aux equations integrales. Fundam. Math. 1922, 3, 133–181.

- Stampacchia, G. Formes bilineaires coercitives sur les ensembles convexes. Comptes Rendus Hebdomadaires Des Seances De L Academie Des Sciences 1964, 258, 4413.

- Fichera, G. Problemi Elastostatici con Vincoli Unilaterali: Il Problema di Signorini con Ambigue Condizioni al Contorno; Accademia nazionale dei Lincei: Roma, Italy, 1964.

- Moudafi, A. Alternating CQ-algorithm for convex feasibility and split fixed-point problems. J. Nonlinear Convex. Anal. 2014, 15, 809–818.

- Byrne, C. A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 2003, 20, 103.

- Combettes, P.L. The convex feasibility problem in image recovery. In Advances in Imaging and Electron Physics; Elsevier: Amsterdam, The Netherlands, 1996; Volume 95, pp. 155–270.

- Korpelevich, G.M. The extragradient method for finding saddle points and other problems. Matecon 1976, 12, 747–756.

- Censor, Y.; Gibali, A.; Reich, S. Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Methods Softw. 2011, 26, 827–845.

- Tseng, P. A modified forward-backward splitting method for maximal monotone mappings. SIAM J. Control Optim. 2000, 38, 431–446.

- Tan, B.; Cho, S.Y. Inertial extragradient algorithms with non-monotone stepsizes for pseudomonotone variational inequalities and applications. Comput. Appl. Math. 2022, 41, 121.

- Tan, B.; Zhou, Z.; Li, S. Viscosity-type inertial extragradient algorithms for solving variational inequality problems and fixed point problems. J. Appl. Math. Comput. 2022, 68, 1387–1411.

- Sunthrayuth, P.; Adamu, A.; Muangchoo, K.; Ekvittayaniphon, S. Strongly convergent two-step inertial subgradient extragradient methods for solving quasi-monotone variational inequalities with applications. Commun. Nonlinear Sci. Numer. Simul. 2025, 150, 108959.

- Zhao, J. Solving split equality fixed-point problem of quasi-nonexpansive mappings without prior knowledge of operators norms. Optimization 2015, 64, 2619–2630.

- Kwelegano, K.M.; Zegeye, H.; Boikanyo, O.A. An Iterative method for split equality variational inequality problems for non-Lipschitz pseudomonotone mappings. Rendiconti del Circolo Matematico di Palermo Series 2 2022, 71, 325–348.

- Zheng, L. A double projection algorithm for quasimonotone variational inequalities in Banach spaces. J. Inequalities Appl. 2018, 2018, 256.

- Ye, M.; He, Y. A double projection method for solving variational inequalities without monotonicity. Comput. Optim. Appl. 2015, 60, 141–150.

- Cottle, R.W.; Yao, J.C. Pseudo-monotone complementarity problems in Hilbert space. J. Optim. Theory Appl. 1992, 75, 281–295.

- Rahman, L.U.; Arshad, M.; Thabet, S.T.M.; Kedim, I. Iterative construction of fixed points for functional equations and fractional differential equations. J. Math. 2023, 2023, 6677650.

- Ullah, K.; Thabet, S.T.M.; Kamal, A.; Ahmad, J.; Ahmad, F. Convergence analysis of an iteration process for a class of generalized nonexpansive mappings with application to fractional differential equations. Discret. Dyn. Nat. Soc. 2023, 2023, 8432560.

- Mekuriaw, G.; Zegeye, H.; Takele, M.H.; Tufa, A.R. Algorithms for split equality variational inequality and fixed problems. Appl. Anal. 2024, 103, 3267–3294.

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 1994, 8, 221–239.

- Censor, Y.; Gibali, A.; Reich, S. The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 2011, 148, 318–335.

- Kraikaew, R.; Saejung, S. Strong convergence of the Halpern subgradient extragradient method for solving variational inequalities in Hilbert spaces. J. Optim. Theory Appl. 2014, 163, 399–412.

- Shehu, Y. Single projection algorithm for variational inequalities in Banach spaces with application to contact problem. Acta Math. Sci. 2020, 40, 1045–1063.

- Zegeye, H.; Shahzad, N. Extragradient method for solutions of variational inequality problems in Banach spaces. Abstr. Appl. Anal. 2013, 2013, 832548.

- Zhu, L.J.; Liou, Y.C. A Tseng-Type algorithm with self-adaptive techniques for solving the split problem of fixed points and pseudomonotone variational inequalities in Hilbert spaces. Axioms 2021, 10, 152.

- Thong, D.V.; Vuong, P.T. Modified Tseng’s extragradient methods for solving pseudo-monotone variational inequalities. Optimization 2019, 68, 2207–2226.

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. Ussr Comput. Math. Math. Phys. 1964, 4, 1–17.

- Kirk, W.A. Contraction Mappings and Extensions. In Handbook of Metric Fixed Point Theory; Kirk, W.A., Sims, B., Eds.; Springer: Dordrecht, The Netherlands, 2001.

- Kesornprom, S.; Cholamjiak, P. A modified inertial proximal gradient method for minimization problems and applications. AIMS Math. 2022, 7, 8147–8161.

- Thong, D.V.; Cholamjiak, P.; Michael, T.; Cho, Y.J. Strong convergence of inertial subgradient extragradient algorithm for solving pseudomonotone equilibrium problems. Optim. Lett. 2022, 16, 545–573.

- Zegeye, H.; Shahzad, N. Convergence of Mann type iteration method for generalized asymptotically nonexpansive mappings. Comput. Math. Appl. 2011, 62, 4007–4014.

- Dotson, W., Jr. Fixed points of quasi-nonexpansive mappings. J. Aust. Math. Soc. 1972, 13, 167–170.

- Boikanyo, O.A.; Zegeye, H. Split equality variational inequality problems for pseudomonotone mappings in Banach spaces. Stud. Univ. Babes-Bolyai Math. 2021, 66, 139–158.

- Denisov, S.; Semenov, V.; Chabak, L. Convergence of the modified extragradient method for variational inequalities with non-Lipschitz operators. Cybern. Syst. Anal. 2015, 51, 757–765.

- Xu, H.K. Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 2002, 66, 240–256.

- Maingé, P.E. A hybrid extragradient-viscosity method for monotone operators and fixed point problems. SIAM J. Control Optim. 2008, 47, 1499–1515.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).