Building Geometry Generation Example Applying GPT Models

Abstract

1. Introduction

Architecture Examples Applying AI

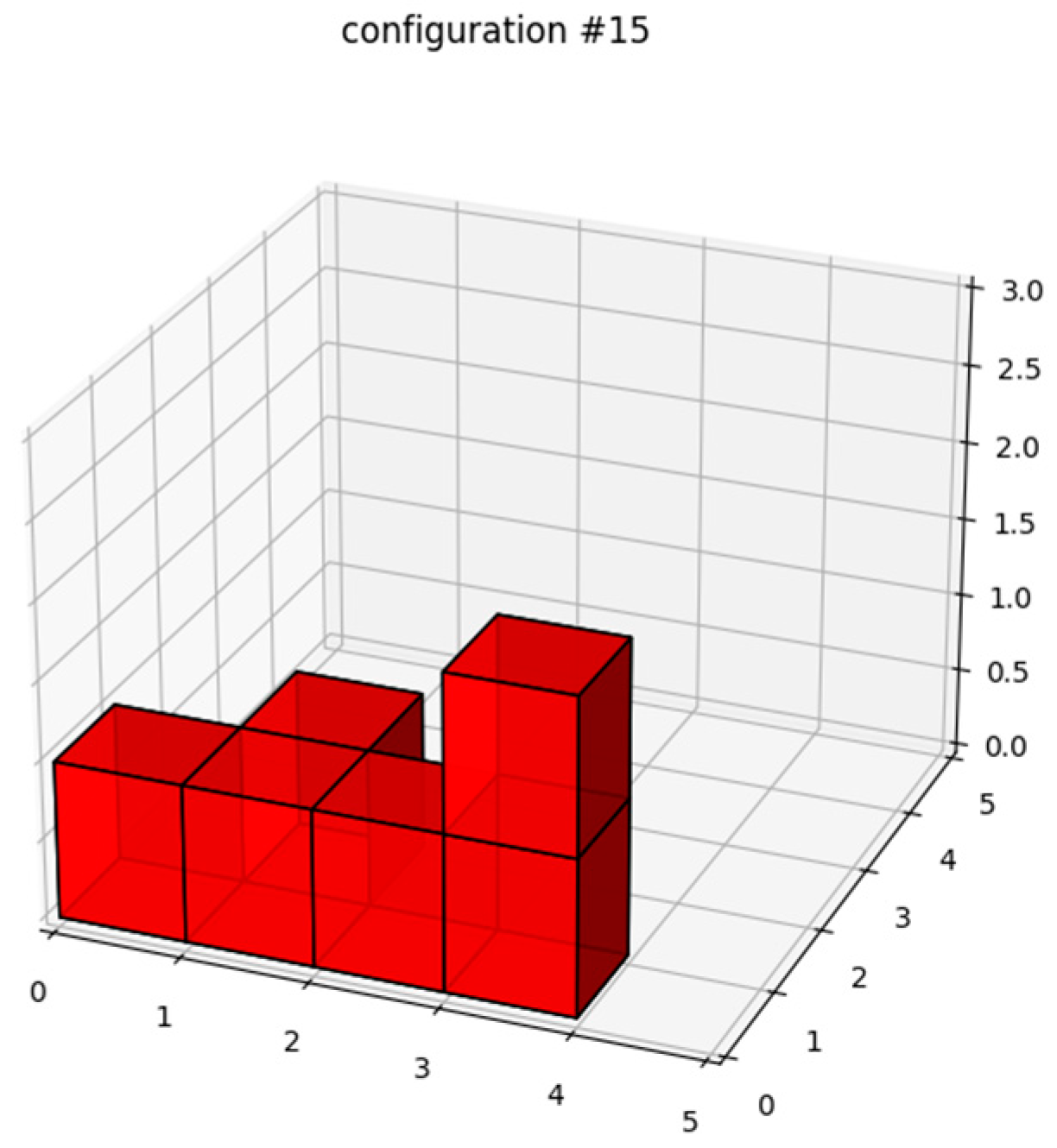

2. Problem Definition: Modular Building Geometry Generation

3. Theoretical Background of Potential Solution Methods

3.1. Combinatorial Problems

3.2. Artificial Intelligence Supporting Combinatorial Problems

3.3. Large Language Models

3.4. ChatGPTs

- Interpreting and reformulating tasks: the model helps to present the problem in a more understandable form.

- Solution planning: it can draft solution plans that follow different approaches.

- Analogy recognition: once it has seen a solution to a problem, it can handle similar problems independently.

- Recursive thinking: it can reason step by step in a rule-based manner (e.g., computing Fibonacci numbers or developing combinatorial sequences).

- Generative abilities: on request, it can, for example, list the permutations of a 4-element set or all minimal covering sets of a given graph.
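Because such listings are generated rather than computed, they are worth checking mechanically. The following minimal Python sketch compares a claimed listing with the exact set produced by `itertools.permutations`. The helper name `check_permutation_listing` and the sample answer are illustrative assumptions, not real model output.

```python
from itertools import permutations


def check_permutation_listing(elements, claimed):
    """Compare a claimed list of permutations with the exact set.

    `claimed` is a list of sequences, e.g. parsed from a model's answer.
    Returns the missing permutations, the entries that are not valid
    permutations of `elements`, and the number of duplicate entries.
    """
    expected = set(permutations(elements))
    claimed_tuples = [tuple(item) for item in claimed]
    claimed_set = set(claimed_tuples)
    return {
        "missing": expected - claimed_set,
        "invalid": claimed_set - expected,
        "duplicates": len(claimed_tuples) - len(claimed_set),
    }


# Hypothetical answer: complete except that one permutation is listed twice
# and another one is left out.
elements = ["A", "B", "C", "D"]
answer = list(permutations(elements))
answer[-1] = answer[0]

report = check_permutation_listing(elements, answer)
print(len(set(permutations(elements))))  # 24 permutations of a 4-element set
print(report["missing"])                 # {('D', 'C', 'B', 'A')}
print(report["duplicates"])              # 1
```

A check of this kind costs almost nothing for small sets and turns a plausible-looking listing into a verified one.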

3.5. Limitations and Challenges of LLMs

- Lack of precise numerical calculations: The model does not “calculate” but guesses the answer, often based on previous similar examples. This is especially dangerous with large numbers or exponentially growing structures, in which even a small deviation can result in an order-of-magnitude error.

- Following misleading patterns: The model may reuse templates it has seen for similar problems even when they do not apply to the specific task.

- Lack of algorithmic thinking: Combinatorics often requires not only the application of formulas but also structured thinking, condition testing, and recursion.

- Overgeneralization and false certainty (hallucination): An answer based on linguistic statistical patterns can sound convincingly accurate when it is in fact incorrect or unverified.

- Scalability limits: As discussed previously, combinatorial problems often have exponential search spaces. Language models cannot traverse such a search space, so they are essentially just guessing.

- Statistical errors: As mentioned above, output tokens are generated sequentially; the model does not first work out what it wants to say and only then choose how to say it. As a result, a poor statistical decision made early on can have increasingly serious consequences later.
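The first and fifth points can be made concrete with exact integer arithmetic. The sketch below is only an illustration: the set sizes are arbitrary assumptions, and `orders_of_magnitude_off` is a hypothetical helper. It shows how quickly such counts grow and how far off a guess becomes when the problem size is misjudged slightly.

```python
from math import factorial, log10


def orders_of_magnitude_off(exact, guess):
    """Base-10 orders of magnitude separating a guess from the exact value."""
    return abs(log10(guess) - log10(exact))


# Exact search-space sizes computed with integer arithmetic.
subsets_of_20 = 2 ** 20            # all subsets of a 20-element set
orderings_of_10 = factorial(10)    # all orderings of 10 elements
orderings_of_12 = factorial(12)    # the same count with two more elements

print(subsets_of_20)     # 1048576
print(orderings_of_10)   # 3628800
print(orderings_of_12)   # 479001600

# Misjudging the problem size by only two elements changes the count by a
# factor of 11 * 12 = 132, i.e., more than two orders of magnitude.
print(orders_of_magnitude_off(orderings_of_10, orderings_of_12) > 2)  # True
```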

3.6. Prompt Engineering

3.7. Hybrid Systems

3.8. Main Components of Hybrid Systems

- The deterministic algorithmic core is the central component of a hybrid system; it uses classical algorithms, such as backtracking, dynamic programming, or graph algorithms. Its primary role is to ensure that solutions are fully valid and that all rules applicable to the problem are followed, thus guaranteeing reliable results.

- The AI or learning module is the learning-capable component of the hybrid system, which can include various machine learning models, such as decision trees, neural networks, language models, or graph neural networks (GNNs). Its task is to provide intelligent support to the problem-solving process, such as learning heuristics; ranking decision points; and estimating costs, probabilities, or priorities. It can thus significantly reduce the size of the search space or improve the efficiency of the search by optimizing the control logic, especially for complex or unstructured problems.

- The knowledge base or rule system is the element of the hybrid system that contains the explicitly declared rules, relations, and constraints, thereby ensuring the formal structure and interpretability of the problem space. This component allows the AI module to understand the context; interpret situations; and make coherent, rule-based decisions. The knowledge base significantly increases the explainability and transparency of the system, since there is explicit logical or set-theoretic knowledge behind the AI operation that can be verified by humans.

- The memory or experience database is a component of hybrid systems that records the results, solution patterns, and errors of previous runs, thus allowing the system to learn from past experiences. This adaptive behavior supports dynamic development and adaptation to new situations and provides the opportunity to apply transfer learning, in which the system can use a previously learned structure for other, similar problems.

- The search controller or decision engine is the central control unit of the hybrid system; it dynamically coordinates the solution process, relying on classical algorithms or on AI as the situation requires. This component decides when it is appropriate to use deterministic search and when it is worth entrusting the decision to the AI module, for example, based on heuristic ranking or learned priority. The decision engine can be rule-driven, such that predefined logic determines the steps, or it can apply reinforcement learning, in which the system improves its own control strategy based on experience of how effective its solutions were.

- The interface is the communication layer of the hybrid system towards the user or other systems, which allows the understandable and accessible presentation of results, suggestions, and processes. This module provides explainability, feedback, and visualization, which is especially important in complex combinatorial problems, in which the justification of decisions is essential. If the system also includes language models, the interface can even provide natural language responses, facilitating user interaction and integration into other systems or services.

- The LangChain ecosystem is an open-source development framework that enables LLMs, such as those in the GPT series, to not only generate text but also function as a part of complex, interactive applications [30]. With LangChain, LLMs can call external tools (e.g., Python interpreter, database query, web search); manage memory during conversations or sessions; extract information from documents; and make autonomous logical decisions, for example, acting as AI agents to solve tasks in multiple steps.
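How these components can interact is sketched below in plain Python. This is a toy illustration under stated assumptions, not the system used in the paper: the rule functions play the role of the knowledge base, `prefer_low_cubes` is a fixed heuristic standing in for a learned AI module, `memory` records dead ends, and `hybrid_search` acts as a rule-driven search controller around a backtracking core. All names, the toy grid, and the reading of "contact" as a shared face are hypothetical.

```python
from itertools import product


def touches(a, b):
    """True if two unit cubes share a face (assumed meaning of 'contact')."""
    return sum(abs(p - q) for p, q in zip(a, b)) == 1


# Knowledge base: explicit rules, each checking a (partial) placement.
def first_cube_on_ground(placement):
    return not placement or placement[0][2] == 0


def each_cube_touches_an_earlier_one(placement):
    return all(
        any(touches(cube, earlier) for earlier in placement[:i])
        for i, cube in enumerate(placement) if i > 0
    )


def prefer_low_cubes(placement, options):
    """Stand-in for the AI module: a fixed heuristic that ranks candidates."""
    return sorted(options, key=lambda cube: cube[2])


def hybrid_search(candidates, size, rules, ranker, memory=None):
    """Search controller: rule-checked backtracking guided by a ranker."""
    memory = set() if memory is None else memory  # known dead ends

    def solve(placement):
        if placement in memory:
            return None
        if not all(rule(placement) for rule in rules):
            memory.add(placement)
            return None
        if len(placement) == size:
            return placement
        options = [c for c in candidates if c not in placement]
        for cube in ranker(placement, options):
            result = solve(placement + (cube,))
            if result is not None:
                return result
        memory.add(placement)  # every extension failed
        return None

    return solve(())


cells = list(product(range(3), range(1), range(2)))  # toy 3 x 1 x 2 grid
rules = [first_cube_on_ground, each_cube_touches_an_earlier_one]
solution = hybrid_search(cells, 3, rules, prefer_low_cubes)
print(solution)  # ((0, 0, 0), (1, 0, 0), (2, 0, 0))
```

The ranker only influences the order in which candidates are tried; validity is decided solely by the explicit rules, so a poor ranking can slow the search down but cannot produce an invalid result.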

4. Combinatorial Approach to the Solution of the Family House Building Geometry Generation Architecture Problem

5. GPT Approach to the Solution of the Family House Building Geometry Generation Architecture Problem

5.1. ChatGPT 3.5 Model Solution

5.2. ChatGPT 4o Model Solution

1. Primary search attempts (brute force and combinations). Steps:

   - Generation of all possible positions: (x, y, z), where x, y ∈ {0, 1, 2, 3, 4} and z ∈ {0, 1, 2}. This means a total of 5 × 5 × 3 = 75 positions.

   - Selection of all possible 6-element combinations from these options.

   - Checking all combinations according to all the given rules.

2. Backtracking. Steps:

   - Creation of a recursive algorithm that places new cubes in space step by step.

   - Checking at each step, whether the partial placement complies with the rules so far.

   - Backtracking, if a given configuration is not valid.

3. Rule check one by one. Steps:

   - Disabling rules one by one to see which has the biggest limiting factor.

   - Relaxing the rules, checking which rule results in unfeasible placements.

4. Random sampling and configuration generation. Steps:

   - Generating random configurations:

     - Placing the first cube on the bottom level (z = 0) to satisfy rule 3.

     - Placing additional cubes only so that they are in contact with at least one other cube (rule 2).

     - Checking whether the entire configuration satisfies all the rules.

   - Testing thousands of random configurations and counting how many satisfy all the criteria.

   - Estimating the number of valid configurations by extrapolation.
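The brute-force sizing, the backtracking idea, and the sampling estimate can be sketched in Python as follows. The full rule set is not reproduced in this section, so the sketch assumes only two rules: at least one cube lies on the bottom level, and all cubes form a single face-connected body. These are one possible reading of rules 3 and 2; every function name is hypothetical. The sampling step draws uniformly random selections instead of growing configurations cube by cube, a substitution made here because it keeps the extrapolation simple.

```python
import random
from itertools import product
from math import comb


def grid_positions(nx=5, ny=5, nz=3):
    """All integer cube positions (x, y, z) in an nx x ny x nz grid."""
    return list(product(range(nx), range(ny), range(nz)))


def face_neighbours(cube):
    x, y, z = cube
    return [(x + 1, y, z), (x - 1, y, z), (x, y + 1, z),
            (x, y - 1, z), (x, y, z + 1), (x, y, z - 1)]


def is_valid(cubes):
    """Assumed rules: a ground-level cube exists and all cubes are connected."""
    cubes = set(cubes)
    ground = [c for c in cubes if c[2] == 0]
    if not ground:
        return False
    reached, frontier = {ground[0]}, [ground[0]]
    while frontier:  # flood fill over face contacts
        for n in face_neighbours(frontier.pop()):
            if n in cubes and n not in reached:
                reached.add(n)
                frontier.append(n)
    return reached == cubes


def count_by_backtracking(k, nx=5, ny=5, nz=3):
    """Grow placements cube by cube; only compliant partial placements are extended."""
    grid = set(grid_positions(nx, ny, nz))
    visited, complete = set(), set()

    def extend(cubes):
        if cubes in visited:      # already explored via another placement order
            return
        visited.add(cubes)
        if len(cubes) == k:
            complete.add(cubes)
            return
        for cube in cubes:
            for n in face_neighbours(cube):
                if n in grid and n not in cubes:
                    extend(cubes | {n})   # returning from here is the backtrack step

    for start in grid:
        if start[2] == 0:         # first cube on the bottom level
            extend(frozenset([start]))
    return len(complete)


def estimate_by_sampling(k, samples, nx=5, ny=5, nz=3, seed=0):
    """Extrapolate the valid count from uniformly sampled k-cube selections."""
    rng = random.Random(seed)
    positions = grid_positions(nx, ny, nz)
    hits = sum(is_valid(rng.sample(positions, k)) for _ in range(samples))
    return comb(len(positions), k) * hits / samples


print(len(grid_positions()))               # 75
print(comb(75, 6))                         # 201359550 selections for brute force
print(count_by_backtracking(2, 2, 2, 2))   # 8 on a toy 2 x 2 x 2 grid
```

The 201,359,550 six-cube selections explain why exhaustive checking is costly, whereas the backtracking variant never builds a placement that already violates the assumed rules. With further rules the counts would differ, so the numbers above describe only this simplified setting.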

5.3. Hybrid Expert Model Solution

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Onatayo, D.; Onososen, A.; Oyediran, A.O.; Oyediran, H.; Arowoiya, V.; Onatayo, E. Generative AI Applications in Architecture, Engineering, and Construction: Trends, Implications for Practice, Education & Imperatives for Upskilling—A Review. Architecture 2024, 4, 877–902.

2. Koehler, D. Large Scale Architectures: A Review of the Architecture of Generative AI. Technol. Archit. Des. 2024, 8, 394–399.

3. Chae, H.J.; Ko, S.H. Experiments and Evaluation of Architectural Form Generation through ChatGPT. J. Archit. Inst. Korea 2024, 40, 131–140.

4. Salingaros, N.A. How Architecture Builds Intelligence: Lessons from AI. Multimodal Technol. Interact. 2024, 9, 2.

5. Salingaros, N.A. Façade Psychology Is Hardwired: AI Selects Windows Supporting Health. Buildings 2025, 15, 1645.

6. You, H.; Ye, Y.; Zhou, T.; Zhu, Q.; Du, J. Robot-Enabled Construction Assembly with Automated Sequence Planning Based on ChatGPT: RoboGPT. Buildings 2023, 13, 1772.

7. Ayman, A.; Mansour, Y.; Eldaly, H. Applying machine learning algorithms to architectural parameters for form generation. Autom. Constr. 2024, 166, 105624.

8. Storcz, T.; Ercsey, Z.; Horváth, K.R.; Kovács, Z.; Dávid, B.; Kistelegdi, I. Energy Design Synthesis: Algorithmic Generation of Building Shape Configurations. Energies 2023, 16, 2254.

9. Yeretzian, A.; Partamian, H.; Dabaghi, M.; Jabr, R. Integrating building shape optimization into the architectural design process. Archit. Sci. Rev. 2020, 63, 63–73.

10. Fang, Y.; Cho, S. Design optimization of building geometry and fenestration for daylighting and energy performance. Solar Energy 2019, 191, 7–18.

11. Lu, J.; Luo, X.; Cao, X. Research on geometry optimization of park office buildings with the goal of zero energy. Energy 2024, 306, 132179.

12. Hooper, A.; Nicol, C. The design and planning of residential development: Standard house types in the speculative housebuilding industry. Environ. Plan. B Plan. Des. 1999, 26, 793–805.

13. Burkard, R.E. Efficiently solvable special cases of hard combinatorial optimization problems. Math. Program. Ser. B 1997, 79, 55–69.

14. Kalauz, K.; Frits, M.; Bertok, B. Algorithmic model generation for multi-site multi-period planning of clean processes by P-graphs. J. Clean. Prod. 2024, 434, 140192.

15. Papadimitriou, C.H.; Steiglitz, K. Combinatorial Optimization: Algorithms and Complexity; Courier Corporation: North Chelmsford, MA, USA, 1998; ISBN 0-486-40258-4.

16. Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach, 3rd ed.; Pearson Education Inc.: London, UK, 1995.

17. Mitchell, T.M. Machine Learning; McGraw-Hill Science/Engineering/Math: Columbus, OH, USA, 1997; ISBN 0070428077.

18. Caruana, R.; Niculescu-Mizil, A. An empirical comparison of supervised learning algorithms. In Proceedings of the 23rd International Conference on Machine Learning-ICML ’06, Pittsburgh, PA, USA, 25–29 June 2006; ACM Press: New York, NY, USA, 2006; pp. 161–168.

19. Alloghani, M.; Al-Jumeily, D.; Mustafina, J.; Hussain, A.; Aljaaf, A.J. A Systematic Review on Supervised and Unsupervised Machine Learning Algorithms for Data Science. In Supervised and Unsupervised Learning for Data Science; Springer: Cham, Switzerland, 2020; pp. 3–21.

20. Morales, E.F.; Escalante, H.J. A brief introduction to supervised, unsupervised, and reinforcement learning. In Biosignal Processing and Classification Using Computational Learning and Intelligence; Elsevier: Amsterdam, The Netherlands, 2022; pp. 111–129.

21. Agüera y Arcas, B. Do Large Language Models Understand Us? Daedalus 2022, 151, 183–197.

22. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. In Proceedings of the 34th International Conference on Neural Information Processing Systems (NIPS ’20), Vancouver, BC, Canada, 6–12 December 2020; Curran Associates Inc.: Red Hook, NY, USA, 2020; pp. 1877–1901.

23. Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Akhtar, N.; Barnes, N.; Mian, A. A Comprehensive Overview of Large Language Models. arXiv 2024, arXiv:2307.06435.

24. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 5485–5551. Available online: https://dl.acm.org/doi/abs/10.5555/3455716.3455856 (accessed on 24 June 2025).

25. Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 24 June 2025).

26. OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Leoni Aleman, F.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2024, arXiv:2303.08774.

27. Sahoo, P.; Singh, A.K.; Saha, S.; Jain, V.; Mondal, S.; Chadha, A. A Systematic Survey of Prompt Engineering in Large Language Models: Techniques and Applications. arXiv 2025, arXiv:2402.07927.

28. Gao, C.; Lan, X.; Li, N.; Yuan, Y.; Ding, J.; Zhou, Z.; Xu, F.; Li, Y. Large language models empowered agent-based modeling and simulation: A survey and perspectives. Humanit. Soc. Sci. Commun. 2024, 11, 1259.

29. Abraham, A.; Hong, T.-P.; Kotecha, K.; Ma, K.; Manghirmalani Mishra, P.; Gandhi, N. (Eds.) Hybrid Intelligent Systems; Springer Nature: Cham, Switzerland, 2023; Volume 647.

30. Auffarth, B. Generative AI with LangChain; Packt Publishing: Birmingham, UK, 2023.

| Model Base | Result | Reason | Usability |
|---|---|---|---|
| ChatGPT 3.5 | No valid result | Linguistic statistical approach | Generate summaries; hints on solution directions |
| ChatGPT 4o | No valid result; valuable partial results | Linguistic statistical approach; agent support | Support solution generation |
| ChatGPT 4o-based hybrid | Valid result | Generative AI model support; IT expert intervention | Generate solutions |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ercsey, Z.; Storcz, T. Building Geometry Generation Example Applying GPT Models. Architecture 2025, 5, 79. https://doi.org/10.3390/architecture5030079
