Abstract

Performance evaluation is crucial for environmental design and sustainable development, especially in architecture and landscape architecture. However, such evaluations remain rare in practice. Concerns over potential negative evaluations and a lack of funding are commonly cited as the two main barriers to undertaking them. This research investigated how these two barriers were overcome in practice by studying 41 evaluation cases in the New Zealand landscape architecture field, together with several international and architectural case studies for comparison. It identified a range of enablers for performance evaluation practice, including funding sources and models not documented in the existing literature, as well as two strategies for handling the risks of negative evaluation. All of the identified enablers share the same underlying logic: the benefits and costs of an evaluation should be regulated by appropriate mechanisms so that, for every party involved, the benefits are greater than, or at least balanced with, the costs.

1. Introduction

Sustainable development is an enduring objective of the contemporary world. Green growth, as a practical tool for sustainable development, is expected to help address or mitigate the key challenges facing humanity, such as climate change, water scarcity, biodiversity loss and air pollution [1,2,3]. Consuming 35% of energy and 16% of water, and contributing 38% of carbon emissions globally, the built environment sector is considered one of the least environmentally friendly industries [4,5]. A more sustainable environmental design industry is, therefore, considered to have a key role to play in green growth [4,6,7]. For example, just before the 2021 United Nations Climate Change Conference (COP26), the international community of environmental design professionals jointly published a communique committing to act to their full capacity to reduce carbon emissions, in order to improve the probability of meeting the Paris Agreement’s 1.5 °C Goal [8,9]. In the context of sustainable development, an increasing number of sustainable features have been integrated into built environment projects in recent years. Measuring the actual performance of these efforts, and their contribution toward a more sustainable future, is therefore considered vital [10].

Performance evaluation assesses the actual performance of implemented environmental designs or interventions. Such evaluations are carried out in a range of forms, including Post-Occupancy Evaluation, Building Performance Evaluation, Landscape Performance Evaluation, building appraisal, building evaluation, and building diagnosis [11,12,13,14,15,16,17,18,19,20,21,22,23,24].

The significance of performance evaluations is emphasized by numerous environmental design scholars and practitioners, mainly in four respects. Firstly, evaluating completed projects generates verified knowledge and helps to expand the profession’s body of knowledge [25,26]. Learning from the actual performance of implemented designs by using “a kind of planful science-based approach” is a fundamental way of growing that body of knowledge [25] (p. 46). Secondly, evaluating completed projects can help design professionals to better understand how their designs perform and function for users [27,28,29], and can therefore inform future design practice and public policy and contribute to sustainability by making future designs more user-friendly [15,30,31,32,33]. Performance evaluations are the essential link between identifying what users like and dislike and feeding these lessons back into future designs [15,33]. Thirdly, evaluating a completed project also creates opportunities to improve the performance of that project itself. It is suggested that evaluations of occupied developments can collect feedback from occupants and facility managers [14,34]. Based on this feedback, designers can help site managers to manage the space better and, in collaboration with facility managers and owners, fine-tune the development to mitigate underperforming aspects or enhance its expected performance [14,34]. In extreme cases, such an evaluation can even “indicate a complete redesign of a site” [27] (p. 128). Finally, evaluating completed projects rigorously can help the profession to communicate the value of its work more effectively, making the profession more accessible to mainstream science, the public and decision-makers [12,32,35].

Despite the importance recognized by numerous scholars and practitioners, performance evaluations remain rare in practice [27,33,36,37,38,39,40]. The literature frequently documents two barriers preventing the performance evaluation of built environment projects.

The first frequently noted barrier is a lack of funding and motivation, which many consider a fundamental reason for the underuse of evaluation [28,30,33,41,42,43,44,45,46]. The literature discusses this barrier primarily from two perspectives: those of the client and the designer. It is argued that clients, who normally see limited benefits from evaluating their projects, are often reluctant to pay for an evaluation [33,42]. In other cases, clients believe that the cost of evaluation has already been covered by their investment in the development process and are therefore reluctant to pay anything more after the project is completed [47]. Similarly, designers have little incentive to pay for an evaluation, since they are reportedly seldom asked to prove the functional success or performance of their past designs, either by their clients or by the judges of many professional awards [30]. The literature suggests that the root cause of the funding shortfall is that neither the client nor the designer expects substantial benefits from an evaluation, and so neither is motivated to fund one.

The second barrier is the potential risk posed by negative evaluations, which is also considered one of the main factors preventing evaluation practice [28,41,42,44,47,48,49,50]. As with the funding and motivation barrier, the literature discusses these risks mostly from the perspectives of designers and clients, both of whom are considered vulnerable or sensitive to negative evaluation outcomes. For designers, a negative evaluation may damage their reputation and, in extreme cases, result in litigation [36,41,47]. “A prevailing culture of litigation and blame” has been identified as a major inhibitor of evaluation [28] (p. 2088). The unclear allocation of responsibility for any failures an evaluation identifies is considered a catalyst: it places designers in a no-win situation and makes them more reluctant to undertake evaluations that examine the performance of their design decisions [47,48]. Clients, too, may be reluctant to conduct evaluations, being concerned with “the potential for bad publicity if problems are uncovered so soon after a large expenditure of funds” [44].

While the main barriers preventing evaluations are well-identified in the literature, little is known about how these barriers can be overcome. Although there have been some innovative and beneficial attempts, few have been well-documented and disseminated. This indicates a need to conduct a comprehensive review to investigate how existing performance evaluations overcome the identified barriers, and to summarize the key enablers for performance evaluations. This study aims to bridge this research gap.

This research studied a wide range of performance evaluation cases carried out in the New Zealand landscape architecture field. By investigating and analyzing how these cases were funded and conducted, this paper presents a range of enablers, illustrating how the various cases overcame the barriers.

With a 50-year history of education and practice, the New Zealand landscape architecture discipline is well established and recognized worldwide, so its ‘terrain’ of performance evaluation can be considered representative. The study investigates and analyzes the New Zealand landscape architecture cases formally, using the sequential case study and data saturation approach [51,52,53]. In addition, an informal, complementary component of the research draws in useful examples from other countries and allied disciplines. Although this research focuses on landscape-related developments, the enablers identified are potentially applicable to the broader built environment. Just as landscape architecture can draw on the experience of architecture in Building Performance Evaluation (BPE), so too can the reverse be true.

2. Methods

2.1. Overall Research Strategy

The main focus of this research was to investigate the breadth, rather than the depth, of the ‘terrain’ of evaluation practice, so the emphasis was on collecting as wide a range of examples as possible. However, collecting every existing evaluation case was not feasible within a finite research timeframe; it was more realistic to study a manageable number of cases. To investigate the overall ‘terrain’ through a finite number of cases, this research adopted what Yin calls the ‘sequential’ case study approach [51,52]. In such studies, the overall ‘terrain’ is built up gradually by collecting and investigating cases one by one. Investigating each case yields findings and, at the same time, raises questions about the rest of the ‘terrain’ [51]; these questions, in turn, help the researchers to navigate the study of subsequent cases [51]. An overall understanding is gained when data saturation is achieved, that is, when the last cases studied yield very few new or surprising findings [51]. The following subsections explain how the ‘sequential’ case study method was applied in this research and how data saturation was achieved.

2.2. Case Collecting

The first step of this ‘sequential’ study is to collect materials describing relevant information about existing performance evaluation cases. Collected materials include academic journal articles; reports by professional institutes, academic institutes and the government; articles published on professional and academic websites and professional magazines; books; newspapers; evaluation data; photos; and maps.

Since evaluation happens in both professional and academic fields, relevant text materials were collected through both professional and academic channels, including an academic database (Google Scholar), a search engine (Google Search) and the reference lists of collected materials.

2.3. Case Coding and Investigating

The collected case materials were then open coded to draw out key themes, such as the types of funders and evaluators and their motivations for financing or conducting an evaluation. Open coding is a common first step in content analysis [54]. In this step, every text segment in the collected materials that signaled an idea relevant to the research aims was assigned one or more codes capturing that idea. As the name implies, open coding is intended to keep the researchers open to all theoretical possibilities and free of preconceived notions, minimizing the bias the coding process might otherwise introduce [55,56]. Therefore, no predetermined codes were used. Instead, all codes were created during coding according to the meaning expressed by the texts, with particular focus on content related to the barriers and enablers of evaluation. The open coding process produced 152 initial codes. (As this research is part of a larger project, themes other than the barriers and enablers of performance evaluation practice were also coded and are included in these 152 initial codes. This paper focuses only on the barriers and enablers; other outputs of the larger project, including the ‘terrain’ of evaluation models and methods, will be addressed in a separate article.)

Because the initial codes were deliberately created without considering their interrelationships or hierarchy, some of them were expected to be different expressions of very similar meanings (e.g., ‘government-funded’ and ‘financially supported by the government’). In other cases, comparing two initial codes revealed a subordinate relationship between them (e.g., ‘evaluated by designers’ falls under ‘evaluated by stakeholders’). It was therefore necessary to examine the initial codes comprehensively, identify the relationships between them and build a hierarchical structure for them [56,57,58]. In qualitative content analysis, this process is called axial coding; it is normally the second coding step, conducted after open coding [56]. In this step, the initial codes are grouped, edited and structured into a series of more abstract codes that better reflect the characteristics, trends and divergences underlying the texts [56,57,58,59,60]. Here, the 152 initial codes were grouped, edited, merged and structured into 109 final codes. (As explained previously, these 109 final codes also include codes unrelated to the barriers and enablers.) The final codes related to the background information of the cases and to the barriers and enablers are shown in Table A1 in Appendix A.
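The merge-then-nest logic of axial coding can be sketched in a few lines of Python. This is a minimal illustration, assuming codes are plain strings; the synonym and parent tables are hypothetical, seeded with the two examples from the text plus one invented sibling code (‘evaluated by clients’), and are not the study’s actual codebook.

```python
# Hypothetical tables for illustration only; not the study's codebook.

# Synonymous initial codes collapse onto one canonical code.
SYNONYMS = {
    "financially supported by the government": "government-funded",
}

# A canonical code may sit under a broader parent code.
PARENT = {
    "evaluated by designers": "evaluated by stakeholders",
    "evaluated by clients": "evaluated by stakeholders",  # invented sibling
}


def axial_code(initial_codes):
    """Merge synonymous codes, then group the survivors under their parents."""
    canonical = {SYNONYMS.get(code, code) for code in initial_codes}
    hierarchy = {}
    for code in sorted(canonical):
        parent = PARENT.get(code)
        if parent is None:
            # Top-level code: keep an entry even if it has no children.
            hierarchy.setdefault(code, [])
        else:
            hierarchy.setdefault(parent, []).append(code)
    return hierarchy


initial = [
    "government-funded",
    "financially supported by the government",
    "evaluated by designers",
    "evaluated by clients",
]
final = axial_code(initial)
print(final)
# {'evaluated by stakeholders': ['evaluated by clients', 'evaluated by designers'], 'government-funded': []}
```

Four initial codes become two top-level entries: the two synonyms merge into one, and the two evaluator codes nest under a shared parent, mirroring in miniature the reduction from 152 initial to 109 final codes.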

For most of the cases, the relevant information about their evaluations was spread across more than one document. Therefore, comparing the initially coded cases (whose codes were largely derived from a single document) during axial coding revealed gaps in information. These gaps became the targets of further case investigation, which used a range of channels to collect more targeted information on the coded cases: an academic database (Google Scholar), a search engine (Google Search), physical libraries, email communication with relevant organizations or individuals, and phone interviews.

2.4. Achieving Saturation

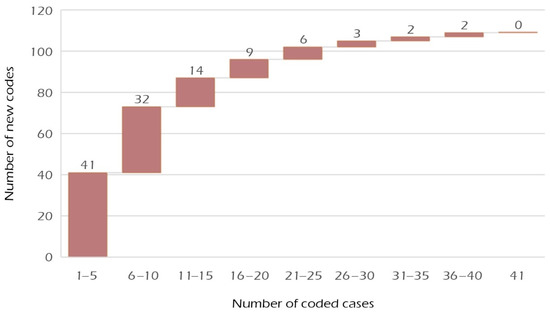

In the early stage of open coding, a large number of new codes were quickly developed (Figure 1). As coding proceeded, however, an increasing number of codes repeated, and the number of newly created codes gradually declined (Figure 1). According to Fusch and Ness [53], there is no “one size fits all” pattern for determining whether data saturation has been reached, since the pattern varies widely and depends heavily on the research method. A practical approach is to decide explicitly, when designing a study, how data saturation will be achieved, and to report clearly when, how and to what extent it was achieved when communicating the results [51,53]. Accordingly, this research set its saturation benchmark at the design stage: cases were collected and coded until the final 10% (or more) of the collected cases were coded without any new axial codes. In practice, the last five of the 41 collected cases were coded entirely with existing codes (Figure 1). This proportion, 12.2% (5/41), exceeds the 10% benchmark, so data saturation was considered achieved.
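The saturation benchmark can be expressed as a short check. The Python sketch below assumes the input is the number of new axial codes each case contributed, in coding order. The per-case counts plotted in Figure 1 are not reproduced here, so the example uses placeholder values for the first 36 cases (any positive number would do), followed by the five cases coded entirely with existing codes.

```python
def trailing_run_without_new_codes(new_codes_per_case):
    """Count consecutive cases, from the last one backwards, that added no new codes."""
    run = 0
    for n_new in reversed(new_codes_per_case):
        if n_new > 0:
            break
        run += 1
    return run


def saturation_reached(new_codes_per_case, threshold=0.10):
    """Return (reached, share), where share = trailing no-new-code run / all cases."""
    total = len(new_codes_per_case)
    if total == 0:
        return False, 0.0
    share = trailing_run_without_new_codes(new_codes_per_case) / total
    return share >= threshold, share


# Placeholder sequence: 36 cases that each added at least one new code
# (illustrative values), then five cases coded only with existing codes.
sequence = [1] * 36 + [0] * 5
reached, share = saturation_reached(sequence)
print(reached, f"{share:.1%}")  # True 12.2%
```

With 41 cases, a trailing run of four would give 4/41 ≈ 9.8%, just below the benchmark, so five is the smallest run that satisfies the 10% rule.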

Figure 1.

The number of newly created codes (counted as the equivalent number of axial codes) reduced as the coding proceeded, and data saturation was reached after studying 41 cases.

3. Results

3.1. The Raw Data

Overall, 41 cases were collected. As shown in Table A1 in Appendix A, the collected cases vary widely in location (across New Zealand), project type (including public parks, neighborhoods, campuses, retail and commercial developments, landfills, traffic facilities and urban planning projects), time of project completion (from 1969 to ongoing) and time of evaluation (from 1991 to 2019). Because data saturation was reached, these cases are taken to reflect the ‘terrain’ of New Zealand landscape performance evaluation practice comprehensively. The main characteristics related to the enablers of practice are funding sources, motivations, types of evaluators and the interrelationships among these factors. The following subsections present these characteristics.

3.2. Funding Sources

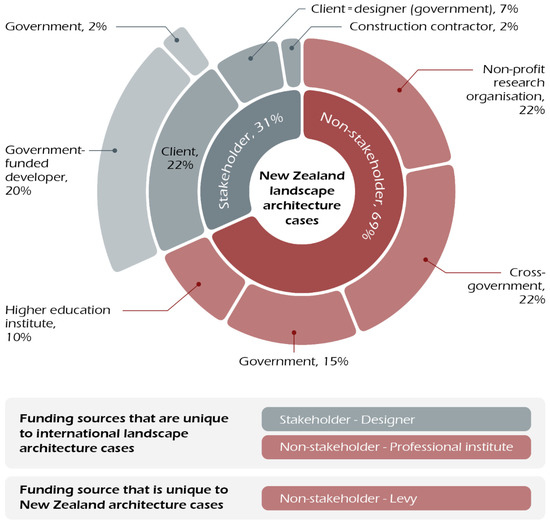

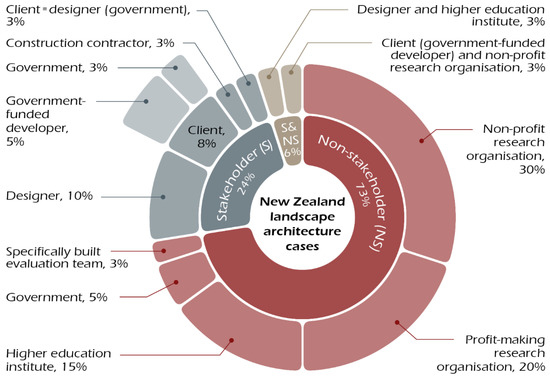

A range of funding sources supporting current evaluation practice were identified. The types of funding sources and their percentages are illustrated in Figure 2. (Unique funding sources identified from examples beyond the New Zealand landscape architecture field were not counted in the percentage calculation, as these examples are not part of the comprehensive investigation of the ‘terrain’ of New Zealand landscape architectural practice. Instead, as explained in the introduction, these examples were studied for characteristics not found in the New Zealand landscape architecture cases, to provide comparisons. The percentages presented in Figure 2, as well as in Figure 3, Figure 4, Figure 5 and Figure 6 in later subsections, were calculated to represent the ‘terrain’ of New Zealand landscape architectural practice, which was studied comprehensively as the main focus of this research.)

Figure 2.

Funding sources of collected performance evaluation cases (n = 41).

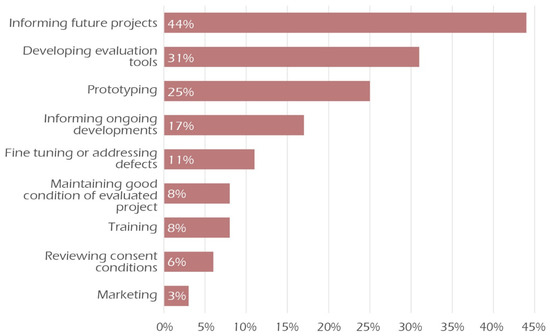

Figure 3.

Purposes of evaluation (n = 36; the motivations of five cases are unknown).

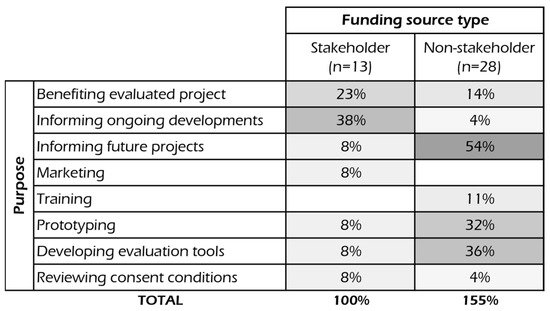

Figure 4.

The distribution of the cases funded by the two funding source categories, by purpose (as some cases were conducted for more than one purpose, the sum of the proportions in the non-stakeholder group is greater than 100%).

Figure 5.

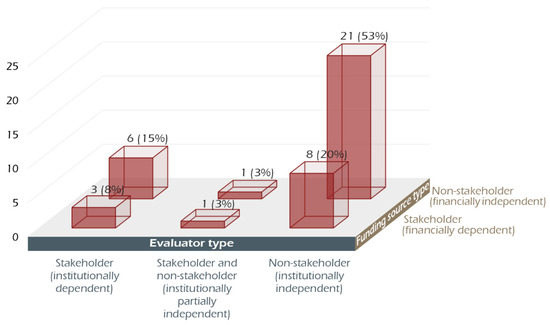

Case distribution by evaluator type (n = 40; the evaluator type of one collected case is unknown, so only 40 cases were included in this analysis).

Figure 6.

Number and percentage of cases by funding source category and evaluator category (n = 40; the evaluator type of one collected case is unknown, so only 40 cases were included in this analysis).

As shown in Figure 2, the funding sources of the collected New Zealand landscape architectural evaluations fall into two categories: stakeholder (shown in grey) and non-stakeholder (shown in red). Stakeholders are the parties directly related to the evaluated projects, such as the client, the designer and the construction contractor. (Roles within a project are described from the architectural professional’s perspective in this paper; the word “client” therefore refers to the party who commissions architects or other designers to design a project.) One-third of the collected projects were funded by stakeholders. Within the stakeholder category (shown in dark grey), the client sub-category (shown in medium grey) makes up the largest share, accounting for 22% of the 41 collected cases. This sub-category divides further into two sub-sub-categories (shown in light grey): government-funded developers, which funded 20% of all cases, and the government acting as the client and commissioning the designers, which funded only 2%. From another perspective, the government and government-funded developers also represent end users, who are normally a key consideration and driving force in landscape performance evaluations. For ease of discussion, this paper refers to these representative (i.e., executive) roles, rather than to the individual users they represent, when discussing funding sources and, in later sections, evaluators. Besides clients, funders playing a dual role as both client and designer in the evaluated projects (for example, a government design team) financed 7% of the evaluations, and construction contractors, another type of stakeholder, funded a further 2%.

The second category—non-stakeholder (as shown in Figure 2, in red)—refers to the parties that are not directly related to a project (or not directly involved in the development process of a project). This includes cross-governmental committees, non-profit research organizations, government agencies and higher education institutes, which account for 22%, 22%, 15% and 10% of the funding sources of the collected cases, respectively.
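Because every case is a whole number out of 41, the rounded percentages in Figure 2 can be checked for internal consistency. The Python sketch below is a back-of-the-envelope check, not the authors’ procedure. It finds the whole-case count behind each reported leaf percentage and confirms that the stakeholder and non-stakeholder counts add up to 41. Rounding half up is an assumption about how the figure was rounded, the category labels paraphrase the text, and the resulting counts are inferred rather than taken from Table A1.

```python
N_CASES = 41

# Leaf-level percentages as reported in the text for Figure 2.
REPORTED = {
    "stakeholder": {
        "government-funded developer (client)": 20,
        "government (client)": 2,
        "client and designer (dual role)": 7,
        "construction contractor": 2,
    },
    "non-stakeholder": {
        "cross-governmental committee": 22,
        "non-profit research organization": 22,
        "government agency": 15,
        "higher education institute": 10,
    },
}


def implied_counts(reported_pct, n):
    """Return every whole-case count k whose share k/n rounds (half up) to reported_pct."""
    return [k for k in range(n + 1) if int(100 * k / n + 0.5) == reported_pct]


totals = {}
for category, leaves in REPORTED.items():
    counts = {}
    for label, pct in leaves.items():
        candidates = implied_counts(pct, N_CASES)
        # One case is ~2.4% of 41, so at most one count can round to a given
        # whole percentage; require exactly one so a mismatch is caught.
        assert len(candidates) == 1, (label, candidates)
        counts[label] = candidates[0]
    totals[category] = sum(counts.values())

print(totals)  # {'stakeholder': 13, 'non-stakeholder': 28}
print(sum(totals.values()) == N_CASES)  # True
```

The inferred split of 13 stakeholder-funded (about 32%) and 28 non-stakeholder-funded (about 68%) cases agrees with the “one-third” used above and the “more than two-thirds” used in the discussion.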

While the data in this study focus largely on the geographic context of New Zealand and the professional context of landscape architecture, it is also helpful to point to some other examples. Exploring evaluation cases abroad, as well as cases in the architecture field, revealed several unique funding sources. Firstly, a number of evaluation cases in the United States landscape field were funded by the landscape architecture firms themselves. Secondly, the Landscape Architecture Foundation (LAF) in the United States, a professional and academic institute, has financially supported more than 160 evaluation projects since 2010. Thirdly, the Building Research Levy, a special funding scheme supporting hundreds of research programs (including a number of evaluation cases), was identified in the New Zealand architecture field. None of these three types of funding source were found in the New Zealand landscape architecture field.

Combining all the cases within and beyond the New Zealand landscape architecture field, a more comprehensive terrain of the funding sources was revealed, which not only suggests new possibilities for New Zealand landscape architecture practices, but more generally for environmental design practices in all countries that have a similar landscape industry context.

3.3. Motivations

Understanding what motivates funding parties to finance an evaluation is key to helping performance evaluation practice overcome the funding barrier. As shown in Figure 3, about half of the New Zealand landscape architectural evaluation cases were conducted to inform future projects, or at least included this among their purposes. At 31% and 25%, respectively, testing and developing evaluation tools and examining design prototypes are the second and third most common purposes. Next, 17% of the evaluation cases indicated that a key purpose was to inform the later stages of the evaluated (ongoing) project. Approximately a further one-fifth of evaluations were conducted to benefit the evaluated project and its users, either by addressing defects (11%) or by maintaining the condition of the project (8%). Other purposes identified include training in evaluation skills (8%), reviewing the degree to which consent conditions are fulfilled (6%) and marketing (3%). (‘Consent conditions’ refers to conditions attached to the resource consents or building consents required to carry out construction in New Zealand. Some consents are granted subject to such conditions, and these evaluations reviewed the degree to which they were fulfilled.)

The purposes outlined above are explained in detail in the next subsection, along with the funding sources of the evaluated projects.

3.4. The Correlation between the Funding Source and the Motivation

The purpose of an evaluation often reflects the funder’s motivation for financing it. Correlating the purpose of each evaluation with its funding source showed that different funder groups finance evaluations for different purposes. As illustrated in Figure 4, within the stakeholder-funded cases, 38% of evaluations aimed to inform later stages of the evaluated (ongoing) project, while another 23% aimed to benefit the evaluated project by fine-tuning it, addressing identified defects or informing its future maintenance and management. One purpose was unique to stakeholders: using the data generated by an evaluation for marketing (8%).

The cases funded by non-stakeholders show a different distribution (see Figure 4). Compared with stakeholder-funded cases, a much lower proportion were conducted to inform ongoing developments (4% for non-stakeholders vs. 38% for stakeholders). By contrast, a considerably higher percentage of non-stakeholder-funded evaluations were conducted to inform future projects (35% vs. 8%), develop evaluation tools (23% vs. 8%) and inform design prototyping (21% vs. 8%).
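Because a single evaluation can serve several purposes, the shares in Figures 3 and 4 are multi-label tallies, which is why a group’s percentages can sum to more than 100%. The Python sketch below shows such a tally. It assumes each case is recorded with one funder category and a list of purposes, and that each share is taken over the cases in that funder group; the four records are hypothetical, not cases from the study.

```python
from collections import Counter, defaultdict


def purpose_shares(cases):
    """Share of cases in each funder category that list each purpose.

    A case may list several purposes, so the shares within one category
    can sum to more than 1.0 (i.e., more than 100%).
    """
    group_sizes = Counter(case["funder"] for case in cases)
    tallies = defaultdict(Counter)
    for case in cases:
        # set() stops a purpose listed twice for one case from counting twice.
        for purpose in set(case["purposes"]):
            tallies[case["funder"]][purpose] += 1
    return {
        funder: {p: count / group_sizes[funder] for p, count in tally.items()}
        for funder, tally in tallies.items()
    }


# Hypothetical records for illustration; not the study's data.
cases = [
    {"funder": "stakeholder", "purposes": ["inform ongoing project"]},
    {"funder": "stakeholder", "purposes": ["inform ongoing project", "marketing"]},
    {"funder": "non-stakeholder",
     "purposes": ["inform future projects", "develop evaluation tools"]},
    {"funder": "non-stakeholder", "purposes": ["inform future projects"]},
]
shares = purpose_shares(cases)
print(sum(shares["stakeholder"].values()))  # 1.5
```

Cases whose purpose is unknown should be dropped before tallying, as the study did when it reduced n from 41 to 36 for Figure 3.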

These patterns reveal a clearly discernible correlation: stakeholders tend to fund evaluations for short-term benefits, while non-stakeholders tend to fund them for long-term benefits. This difference plausibly reflects the nature of the two categories of funders. As most stakeholders are independent, profit-making entities, it is not surprising that their main motivation for funding an evaluation is to obtain foreseeable returns. Such returns take the form of benefiting the evaluated project, informing ongoing development and supporting marketing; together, these three types of purposes account for more than two-thirds of the stakeholder-funded evaluations. In contrast, most non-stakeholders did not expect short-term returns from the evaluations they funded. The motivations for 96% of these evaluations relate to future benefits for the sponsoring entities (e.g., informing future projects) or for the industry as a whole (e.g., prototyping and developing evaluation tools). Evaluations aimed at informing future projects, perfecting evaluation tools, prototyping and training can be expected to yield few tangible benefits in the short term.

3.5. Evaluator

Apart from the funder, another important role in an evaluation is the evaluator. Analysis of the collected cases identified a wide range of evaluators. The types of evaluators and the distribution of cases by evaluator type are shown in Figure 5. Overall, approximately three-quarters of the collected evaluation cases were conducted by non-stakeholders of the evaluated project (shown in red), while around a quarter were conducted by stakeholders (shown in grey).

Within the non-stakeholder-evaluated category, two-thirds of the cases were evaluated by non-profit and profit-making research organizations, which account for 30% and 20% of all collected New Zealand landscape architecture cases, respectively. Scholars and students in higher education institutes, also non-stakeholders, conducted a range of evaluations accounting for 15% of the collected cases. A further 5% and 3% of cases were evaluated by the government and by purpose-built evaluation teams, respectively; both are also considered non-stakeholders of the evaluated project. Within the stakeholder category, landscape architecture firms evaluated more than one-third of the cases, accounting for 10% of all collected cases. Government-funded developers and territorial authorities, acting as clients, also carried out several evaluations, accounting for 5% and 3% of the collected cases, respectively. Other stakeholder evaluators identified include construction contractors and government design teams that play a dual role as both client and designer of the evaluated projects. Apart from the cases evaluated solely by stakeholders or non-stakeholders, a small proportion of projects were evaluated jointly (shown in brown) by stakeholders (e.g., the designer and client) and non-stakeholders (e.g., a higher education institute and a non-profit research organization).

3.6. Cooperative Relationships between the Funder and Evaluator

The funder and evaluator are not isolated factors driving an evaluation. Overlaying the funder and evaluator information reveals a clear pattern in how these two roles work together to deliver an evaluation. As illustrated in Figure 6, more than half (53%) of the collected evaluation cases were both funded and evaluated by non-stakeholders. One-fifth were evaluated by non-stakeholders under the commission and with the financial support of stakeholders in the evaluated projects. Conversely, six cases (15%) were evaluated by stakeholders under the commission of non-stakeholders. A small proportion of cases were both funded and evaluated by stakeholders of the evaluated project. Finally, two cases were evaluated jointly by stakeholders and non-stakeholders; one was funded by a stakeholder and the other by a non-stakeholder.
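The figures reported for Figure 6 can be assembled into a small cross-tabulation to check that they add up. This Python sketch is an illustration, not the study’s data table: the counts of 21 and 8 are inferred from the reported 53% and one-fifth of 40 cases, the six cases and the two joint cases (one per funder category) are stated directly, and the stakeholder/stakeholder cell is simply the remainder.

```python
from collections import Counter

N = 40  # cases with a known evaluator type

# (funder category, evaluator category) -> number of cases.
# 21 and 8 are inferred from the reported percentages; 6 and the two
# joint cases are stated in the text.
crosstab = {
    ("non-stakeholder", "non-stakeholder"): 21,
    ("stakeholder", "non-stakeholder"): 8,
    ("non-stakeholder", "stakeholder"): 6,
    ("stakeholder", "joint"): 1,
    ("non-stakeholder", "joint"): 1,
}
# The remaining cases are those both funded and evaluated by stakeholders.
crosstab[("stakeholder", "stakeholder")] = N - sum(crosstab.values())

# Marginal totals by funder and by evaluator.
by_funder = Counter()
by_evaluator = Counter()
for (funder, evaluator), count in crosstab.items():
    by_funder[funder] += count
    by_evaluator[evaluator] += count

print(crosstab[("stakeholder", "stakeholder")])  # 3
print({k: round(100 * v / N, 1) for k, v in by_evaluator.items()})
# {'non-stakeholder': 72.5, 'stakeholder': 22.5, 'joint': 5.0}
```

The resulting evaluator shares (72.5% non-stakeholder, 22.5% stakeholder, 5% joint) match the “approximately three-quarters” and “around a quarter” reported for Figure 5, which lends some support to the inferred remainder of three cases.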

4. Discussion

As explained in the Introduction, the two main barriers preventing performance evaluations are a lack of funding and the potential risks of negative evaluations. Mapping the characteristics of New Zealand landscape architecture performance evaluation practice and comparing them with examples from the wider context identified several useful strategies and supporting mechanisms, which are discussed in the following subsections.

4.1. Possible Funding Sources

The results on funding sources are consistent with the observation by a range of scholars [28,30,33,42,43,47] that, in most cases, both clients and designers are reluctant to pay for an evaluation. However, many existing studies framed the funding issue as an either-or question: should the design firm pay for the evaluation, or should the client? Consequently, when evidence showed that both design firms and clients are reluctant to pay, the studies reviewed concluded that funding is a major barrier to evaluation practice. By reframing it as an open-ended question (in what ways can evaluations be funded, and how were existing evaluations funded?), this research found that, besides the client-pays and firm-pays models, there are many other ways of funding an evaluation.

As explained in Section 3.2, stakeholders such as clients and designers funded no more than one-third of the collected cases, while more than two-thirds of evaluations were financed by non-stakeholders of the evaluated project. These non-stakeholder funders included non-profit research organizations, cross-government committees (e.g., the New Zealand Urban Design Protocol led by the Ministry for the Environment), territorial authorities (e.g., city councils), and higher education institutes.

Section 3.6, which examined the cooperative relationships between funders and evaluators, also showed that a considerable number of cases were funded by territorial authorities and cross-government committees but evaluated by other parties, such as clients, designers, government design teams, higher education institutes and profit-making research organizations. This suggests a useful strategy for parties who would like to conduct an evaluation but are prevented by insufficient funding: seek financial support from territorial authorities and central government. The rationale for government support of performance evaluation is discussed in Section 4.3.

Distinctive funding mechanisms observed outside the New Zealand landscape architecture field were also examined for comparison. The Case Study Investigation (CSI) programme is a leading research programme in the field of Landscape Performance Evaluation (LPE). Funded by the Landscape Architecture Foundation (LAF), a United States-based foundation serving the profession and academia, the CSI programme has provided around 56,000 USD for about ten research teams each year since 2010 to measure and document the actual performance of exemplary built landscape projects. Over the past ten years, more than 160 cases have been studied through the CSI programme. In addition to these standardized performance evaluations, the CSI programme has funded research on evaluation frameworks and techniques. Together, these efforts have contributed substantially to the rapid growth of LPE over the past decade.

The building research levy is another funding mechanism identified as contributing successfully to the increasing implementation of performance evaluations. The Building Research Association of New Zealand (BRANZ) is an independent, industry-governed organization set up in 1969 by agreement among industry participants. In the same year, Parliament passed the Building Research Levy Act, which, at the industry’s own request, imposed a levy on the industry so that it could continually build its body of knowledge and support its own development. Today, every consented construction project over 20,000 NZD must pay a levy of 0.1% of the project cost to BRANZ before building consent is granted. BRANZ uses this levy to support studies that benefit the New Zealand building industry, and performance evaluations make up a considerable proportion of these levy-funded studies. According to BRANZ annual reports, the building research levy has had a strongly positive impact on the industry as a whole [61,62].
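The levy rule described above is simple arithmetic, and a short sketch shows how small individual contributions pool into a shared research fund. This is an illustration under stated assumptions, not BRANZ's official calculation: the helper `building_research_levy` and the project costs are hypothetical, we take the rule exactly as summarised in the text (0.1% of project cost for projects over 20,000 NZD), and we assume a project costing exactly 20,000 NZD is not levied.

```python
LEVY_THRESHOLD_NZD = 20_000   # from the text: projects "over 20,000 NZD"
LEVY_PER_1000_NZD = 1         # 0.1% of project cost = 1 NZD per 1,000 NZD


def building_research_levy(project_cost_nzd: float) -> float:
    """Hypothetical helper: levy owed on one consented project (illustrative only)."""
    if project_cost_nzd <= LEVY_THRESHOLD_NZD:
        # Assumption: a project costing exactly 20,000 NZD is not levied.
        return 0.0
    return project_cost_nzd * LEVY_PER_1000_NZD / 1000


# Pooled effect: many small contributions fund research that benefits everyone.
hypothetical_projects = [15_000, 250_000, 500_000, 2_000_000]
for cost in hypothetical_projects:
    print(f"{cost:>9,} NZD project -> {building_research_levy(cost):>8,.2f} NZD levy")
print(f"pooled: {sum(building_research_levy(c) for c in hypothetical_projects):,.2f} NZD")
```

Each contributor pays only a small fraction of its own project cost, which is what makes the mechanism broadly acceptable to the industry.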

4.2. Countermeasures for the Risks of Negative Evaluations

The results of this study also echo the observations of a range of scholars [28,41,44,47] who identified the possible risks of negative evaluations as a major obstacle to evaluation practice. However, most discussion of this issue was again confined to the two-party system: possible negative evaluations are risky for both clients and designers, so the only two parties in that system are both reluctant to undertake evaluations. As explained in the Results section, however, more than half of the performance evaluations were jointly delivered by non-stakeholder (i.e., neither client nor designer) funders and evaluators, while only 8% of cases were delivered by stakeholder funders and evaluators. More than 90% of the evaluations involved parties other than the client or the designer of the evaluated project. These non-stakeholder parties include government, higher education institutes, and profit-making and non-profit research organizations. This finding suggests that, rather than relying entirely on designers and clients, who are sensitive and vulnerable to negative evaluations, a more viable strategy is to seek greater contributions from independent non-stakeholders, referred to here as the ‘stand-alone’ strategy.

Apart from the ‘stand-alone’ strategy discussed above, this study also identified a successful ‘benefits-oriented’ evaluation strategy developed by the CSI programme. In the CSI programme, academics and students from universities collaborate with the designers of the evaluated projects to assess their actual performance, so every CSI evaluation involves contributions from the project designer. According to the working documents of the CSI programme, the main focus of evaluations carried out under the CSI framework is to quantify and document the benefits achieved by selected high-performing landscape projects. In addition, as participants in the 2021 intake of the CSI programme, we received a copy of the handbook that guides the process, and we noted that words such as ‘encourage’ and ‘hope’ were used to foster a positive framing of evaluation outcomes. Although the LAF officially suggests that researchers remain objective about the results and include negative findings in the final evaluation publications, there are no “hard” rules on this. The LAF also advises researchers, as a courtesy, to inform the firms of any intention to publish negative findings before deciding to include these results, and the firms are given opportunities to interpret negative outcomes from their own perspective. These measures can, to a large extent, protect the firms from potential harm arising from negative evaluation results. As a result, almost all of the evaluation outcomes produced under the CSI framework are positive. Although this places relatively less emphasis on the “lessons” than on the “benefits”, the strategy adopted by the LAF has considerably increased the likelihood of designers being involved and supportive, which is often very important for achieving a deeper understanding of a project’s performance.
In addition, the main outcome of the CSI evaluations, the quantified benefits, can help the landscape profession to better communicate the value of landscape architects’ work. Overall, this research identified two strategies for overcoming the barriers posed by the risks of negative evaluations: the ‘stand-alone’ strategy and the ‘benefits-oriented’ strategy.

4.3. The Underlying Logic of Enabling an Evaluation

Externality refers to the benefits or costs resulting from an activity for a party who cannot influence the decision-making related to that activity [63]. Performance evaluation is a practice with high externality: conducting more evaluations can benefit the whole industry, but it also imposes costs on certain parties. These costs include the financial inputs and the impacts of negative evaluation results. This high externality is the fundamental reason why most environmental design scholars and practitioners believe it is beneficial to conduct more evaluations, yet such evaluations remain rare in practice. Many in the industry would like to see it grow and contribute more to the built environment, but few are willing to pay for the growth of the whole industry while others do not, and to take on considerable risks at the same time. This raises the questions of who should pay for such growth and who should bear the risks.

In contrast, all the funding mechanisms identified as successful in this study handled the externality judiciously: in effect, they let the beneficiaries pay. The results of this study agree with the observation of Hadjri and Crozier [43] that most performance evaluations have no single, clear beneficiary. Instead, the beneficiaries of performance evaluation are the industry as a whole and the public, who are the end-users of most evaluated and future landscape developments. As the literature shows, conducting more performance evaluations can (1) help the profession’s body of knowledge to grow [25,30]; (2) help the profession to better communicate the value of its work [12,32]; (3) inform future design practices and public policies and so contribute better to sustainability [15,27,28,30,31,33]; and (4) help the evaluated project to achieve better performance [14,27,34]. Territorial authorities and the government, an important funding source identified in this study, represent the public, the ultimate beneficiary of evaluation practices. The other two funding mechanisms identified as successful, the CSI programme funded by the LAF and the building research levy managed by BRANZ, were developed by the industry itself and act on behalf of the industry as a whole. Within these three funding mechanisms, the externality (one pays for all while others benefit for free) is minimized, because beneficiaries pay for beneficial evaluations through channels that include rates (through territorial authorities), taxes (through governments), levies (through industry organizations), and membership fees and sponsorship (through professional institutes). (‘Rates’ are a type of tax collected, managed, and allocated by local governments in New Zealand to run their jurisdictions.)
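The externality argument can be illustrated with a deliberately simple toy model of our own; it is not a model used in this study. Suppose an evaluation costs C in total and delivers a benefit b to each of n parties. If one party pays alone, its net gain is b − C, which is negative whenever C > b, even though the total benefit nb may far exceed C, while every other party gains b for free. If the beneficiaries pool the cost through rates, taxes or levies, each nets b − C/n. The class and function names and the numbers (C = 50,000, b = 2,000, n = 100) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Evaluation:
    """Toy model of one evaluation; all numbers are hypothetical."""
    cost: float               # total cost C of the evaluation
    benefit_per_party: float  # benefit b received by each beneficiary
    parties: int              # number n of beneficiaries (industry and public)


def net_for_single_payer(e: Evaluation) -> float:
    """One party funds the whole evaluation but receives only its own share b."""
    return e.benefit_per_party - e.cost


def net_for_free_rider(e: Evaluation) -> float:
    """A party that pays nothing still receives b: the externality."""
    return e.benefit_per_party


def net_per_party_pooled(e: Evaluation) -> float:
    """Cost split evenly via rates, taxes, or a levy: each pays C / n."""
    return e.benefit_per_party - e.cost / e.parties


def balanced_for_all(net_values: list[float]) -> bool:
    """Enabling condition: benefits at least balance costs for every party."""
    return all(v >= 0 for v in net_values)


example = Evaluation(cost=50_000.0, benefit_per_party=2_000.0, parties=100)

single = [net_for_single_payer(example)] + [net_for_free_rider(example)] * (example.parties - 1)
pooled = [net_per_party_pooled(example)] * example.parties

print(net_for_single_payer(example), net_per_party_pooled(example))  # -48000.0 1500.0
print(balanced_for_all(single), balanced_for_all(pooled))           # False True
```

The same condition, that no party's costs exceed its benefits, is the underlying logic the Conclusions attribute to all of the identified enablers.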

Similarly, the two countermeasures identified for the risks of negative evaluations also manage the externality of evaluations well. The ‘stand-alone’ strategy avoids placing all the burdens of an evaluation (i.e., financial inputs, labor, and the potential risks of negative impacts) on the stakeholders (clients and designers), who are often financially and reputationally sensitive. The ‘benefits-oriented’ strategy, on the other hand, makes evaluations less risky and more rewarding for stakeholders through the measures described in Section 4.2.

Overcoming concerns over potential negative evaluations is crucial for the health of the built environment design professions. It is a situation where the greater good needs to prevail over an individual firm’s risk aversion. Evaluations are part of the wider suite of approaches to design criticism, and as Bernadette Blanchon explains, “criticism is fully part of the creative process itself” [64] (p. 67). Constructively receiving criticism—whether negative or positive—is a trait that comes with maturity. While landscape architecture is relatively young as a design profession, having only been formalized as a profession in the mid-nineteenth century, it has now gained a critical mass and body of knowledge that supports it in standing alongside other built environment design professions such as architecture. The maturity to accept criticism and participate in evaluation is integral in the creation of a healthy and robust professional discourse, and in the long-term improvement of the built environment.

5. Conclusions

In conclusion, although a range of commentators have emphasized the need for performance evaluations, such evaluations remain limited in practice in New Zealand landscape architecture. This reflects concerns over potential negative evaluations and a lack of funding, barriers shared across the wider environmental design field. This research investigated how these barriers were overcome in successfully implemented evaluations and identified a range of enablers for performance evaluation practice, including funding sources beyond the two-party system of designer and client, the CSI and BRANZ funding models, and the ‘stand-alone’ and ‘benefits-oriented’ strategies for handling the risks of negative evaluation. The identified enablers all share the same underlying logic: the benefits and costs of an evaluation should be regulated by appropriate mechanisms (such as those presented in this paper) so that, for every party involved, the benefits of an evaluation are greater than, or at least balanced with, the costs.

Although the enablers and experience were largely drawn from the landscape architecture field, they are also relevant to other environmental design professions. Because landscape architecture projects tend to rely more on public funding, the lessons drawn from this study apply especially to practice within the public realm, where stakeholder-related issues parallel those commonly experienced in landscape architecture.

Author Contributions

Conceptualization, G.C., J.B. and S.D.; methodology, G.C., J.B. and S.D.; formal analysis, G.C.; investigation, G.C.; writing—original draft preparation, G.C.; writing—review and editing, J.B. and S.D.; visualization, G.C.; supervision, J.B. and S.D. All authors have read and agreed to the published version of the manuscript.

Funding

This publication has been partially financed by the Lincoln University Open Access Fund.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Some data presented in this study are available from the corresponding author, upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Axial codes developed for the 41 collected cases.

| No. | Project | Place | Year Completed | Year of Evaluation | Funding Source Type | Evaluator Type | Purpose |
|---|---|---|---|---|---|---|---|
| 1 | Waitangi Park | Wellington | 2006 | 2007 | Non-stakeholder—Government | Non-stakeholder—Government | Benefiting evaluated project—Maintaining good condition of evaluated project; Reviewing consent conditions |
| 2 | Waitangi Park | Wellington | 2006 | 2007 | Stakeholder—Client—Government-funded developer (wholly-owned and non wholly-owned) | Non-stakeholder—Profit-making research organisation | Benefiting evaluated project—Maintaining good condition of evaluated project |
| 3 | Waitangi Park | Wellington | 2006 | 2007 | Non-stakeholder—Higher education institute | Non-stakeholder—Higher education institute | Benefiting evaluated project—Maintaining good condition of evaluated project; Training |
| 4 | Stonefields | Auckland | 2010 | 2011 | Non-stakeholder—Government | Non-stakeholder—Profit-making research organisation | Informing future projects |
| 5 | The Altair | Wellington | 2006 | 2011 | Non-stakeholder—Government | Non-stakeholder—Profit-making research organisation | Informing future projects |
| 6 | Chester Courts | Christchurch | 1995 | 2011 | Non-stakeholder—Government | Non-stakeholder—Profit-making research organisation | Informing future projects |
| 7 | Buckley Precinct in Hobsonville Point | Auckland | Ongoing | 2013 | Stakeholder—Client—Government-funded developer (wholly-owned and non wholly-owned) | Stakeholder and non-stakeholder—Client (government-funded developer) and non-profit research organisation | Benefiting evaluated project—Fine-tuning or addressing defects; Informing ongoing developments; Developing evaluation tools |
| 8 | Hobsonville Point | Auckland | Ongoing | 2016 | Stakeholder—Client—Government-funded developer (wholly-owned and non wholly-owned) | Non-stakeholder—Non-profit research organisation | Informing ongoing developments |
| 9 | Hobsonville Point | Auckland | Ongoing | 2018 | Stakeholder—Client—Government-funded developer (wholly-owned and non wholly-owned) | Non-stakeholder—Non-profit research organisation | Informing ongoing developments |
| 10 | Hobsonville Point | Auckland | Ongoing | 2020 | Stakeholder—Client—Government-funded developer (wholly-owned and non wholly-owned) | Non-stakeholder—Non-profit research organisation | Informing ongoing developments |
| 11 | Hobsonville Point | Auckland | Ongoing | 2019 | Non-stakeholder—Government | Non-stakeholder—Higher education institute | Informing future projects; Developing evaluation tools |
| 12 | Hobsonville Point | Auckland | Ongoing | 2018 | Stakeholder—Client—Government-funded developer (wholly-owned and non wholly-owned) | Stakeholder—Client—Government-funded developer (wholly-owned and non wholly-owned) | Benefiting evaluated project—Fine-tuning or addressing defects; Informing ongoing developments; Marketing |
| 13 | Auckland University of Technology | Auckland | 2006 | 2005 | Non-stakeholder—Cross-Government | Stakeholder—Designer | Informing future projects |
| 14 | Beaumont Quarter | Auckland | 2001—ongoing | 2005 | Non-stakeholder—Cross-Government | Non-stakeholder—Government | Informing future projects |
| 15 | Botany Downs | Auckland | 2004 | 2005 | Non-stakeholder—Cross-Government | Non-stakeholder—Higher education institute | Informing future projects |
| 16 | Chancery | Auckland | 2000 | 2005 | Non-stakeholder—Cross-Government | Stakeholder—Designer | Informing future projects |
| 17 | New Lynn Town Centre | Auckland | Ongoing | 2005 | Non-stakeholder—Cross-Government | Stakeholder—Client—Government | Informing future projects |
| 18 | New Plymouth Foreshore | New Plymouth | 2003 | 2005 | Non-stakeholder—Cross-Government | Stakeholder—Designer | Informing future projects |
| 19 | Northwood Residential Area | Christchurch | 2004 | 2005 | Non-stakeholder—Cross-Government | Stakeholder—Designer | Informing future projects |
| 20 | West Quay | Napier | 2000—ongoing | 2005 | Non-stakeholder—Cross-Government | Stakeholder—Client = designer (government) | Informing future projects |
| 21 | Harbour View | Auckland | 1996—present | 2005 | Non-stakeholder—Cross-Government | Stakeholder and non-stakeholder—Designer and higher education institute | Informing future projects |
| 22 | Harbour View | Auckland | 1997—present | 2008 | Non-stakeholder—Non-profit research organisation | Non-stakeholder—Non-profit research organisation | Prototyping; Developing evaluation tools |
| 23 | Petone | Hutt City | Unknown | Unknown | Non-stakeholder—Non-profit research organisation | Non-stakeholder—Non-profit research organisation | Prototyping; Developing evaluation tools |
| 24 | Blake St, Ponsonby | Auckland | Unknown | Unknown | Non-stakeholder—Non-profit research organisation | Non-stakeholder—Non-profit research organisation | Prototyping; Developing evaluation tools |
| 25 | East Inner City | Christchurch | Unknown | Unknown | Non-stakeholder—Non-profit research organisation | Non-stakeholder—Non-profit research organisation | Prototyping; Developing evaluation tools |
| 26 | Aranui | Christchurch | Unknown | Unknown | Non-stakeholder—Non-profit research organisation | Non-stakeholder—Non-profit research organisation | Prototyping; Developing evaluation tools |
| 27 | Dannemora | Auckland | Unknown | Unknown | Non-stakeholder—Non-profit research organisation | Non-stakeholder—Non-profit research organisation | Prototyping; Developing evaluation tools |
| 28 | Waimanu Bay | Auckland | Unknown | Unknown | Non-stakeholder—Non-profit research organisation | Non-stakeholder—Non-profit research organisation | Prototyping; Developing evaluation tools |
| 29 | West Harbour | Auckland | Unknown | Unknown | Non-stakeholder—Non-profit research organisation | Non-stakeholder—Non-profit research organisation | Prototyping; Developing evaluation tools |
| 30 | Addison | Auckland | Unknown | Unknown | Non-stakeholder—Non-profit research organisation | Non-stakeholder—Non-profit research organisation | Prototyping; Developing evaluation tools |
| 31 | Manukau Square | Auckland | 2005 | Unknown | Stakeholder—Construction contractor | Stakeholder—Construction contractor | Unknown |
| 32 | Victoria Square | Christchurch | Unknown | 1991 | Non-stakeholder—Higher education institute | Non-stakeholder—Higher education institute | Informing ongoing developments; Informing future projects |
| 33 | Burwood Landfill | Christchurch | 2002 | 2000 | Non-stakeholder—Government | Non-stakeholder—Profit-making research organisation | Informing future projects |
| 34 | Kate Valley | Christchurch | 2005 | 2019 | Stakeholder—Client—Government-funded developer (wholly-owned and non wholly-owned) | Stakeholder—Client—Government-funded developer (wholly-owned and non wholly-owned) | Unknown |
| 35 | Kate Valley | Christchurch | 2005 | 2019 | Stakeholder—Client—Government-funded developer (wholly-owned and non wholly-owned) | Non-stakeholder—Purposely built evaluation team | Reviewing consent conditions |
| 36 | Tennyson Street cycle facilities | Christchurch | 2001 | 2004 | Stakeholder—Client = designer (government) | Non-stakeholder—Profit-making research organisation | Unknown |
| 37 | Tennyson Street cycle facilities | Christchurch | 2001 | 2004 | Stakeholder—Client = designer (government) | Unknown | Unknown |
| 38 | Tennyson Street cycle facilities | Christchurch | 2001 | 2004 | Stakeholder—Client = designer (government) | Non-stakeholder—Profit-making research organisation | Unknown |
| 39 | Tennyson Street cycle facilities | Christchurch | 2001 | 2008 | Stakeholder—Client = designer (government) | Non-stakeholder—Profit-making research organisation | Informing future projects; Prototyping |
| 40 | Beckenham (including Tennyson Street) | Christchurch | Unknown | 2017 | Non-stakeholder—Higher education institute | Non-stakeholder—Higher education institute | Benefiting evaluated project—Fine-tuning or addressing defects; Training |
| 41 | Tennyson Street | Christchurch | Unknown | 2018 | Non-stakeholder—Higher education institute | Non-stakeholder—Higher education institute | Benefiting evaluated project—Fine-tuning or addressing defects; Training |

References

1. Organisation for Economic Co-Operation and Development. Interim Report of the Green Growth Strategy: Implementing Our Commitment for a Sustainable Future; OECD Publishing: Paris, France, 2010.

2. United Nations Environment Programme. A Guidance Manual for Green Economy Policy Assessment; United Nations: New York, NY, USA, 2014.

3. Söderholm, P. The green economy transition: The challenges of technological change for sustainability. Sustain. Earth 2020, 3, 6.

4. United Nations Environment Programme. 2020 Global Status Report for Buildings and Construction: Towards a Zero-Emission, Efficient and Resilient Buildings and Construction Sector; United Nations Environment Programme: Nairobi, Kenya, 2020.

5. Ametepey, S.O.; Ansah, S.K. Impacts of construction activities on the environment: The case of Ghana. J. Constr. Proj. Manag. Innov. 2014, 4, 934–948.

6. Chidimma, N.-O.R.; Ogochukwu, O.F.; Chinwe, S.-A. The 2030 agenda for sustainable development in Nigeria: The role of the architect. Sci. Technol. Public Policy 2020, 4, 15.

7. de Medina, M.G.A.; Hayter, J.; Dennis, J.; Duncan, C.; Riveros, R.; Takano, F.; Helms, K.; Pallares, M.; Samaha, S.; Mercer-Clarke, C. A Landscape Architectural Guide to the United Nations 17 Sustainable Development Goals; International Federation of Landscape Architecture Europe: Brussels, Belgium, 2021.

8. Architecture 2030. Building Industry Leaders to World Governments: It’s Time to Lead on Climate. Available online: https://cop26communique.org/media/ (accessed on 30 October 2021).

9. International Federation of Landscape Architects. IFLA Climate Action Commitment. Available online: https://www.iflaworld.com/ifla-climate-action-commitment-statement (accessed on 30 October 2021).

10. Rosenbaum, E. Green Growth—Magic Bullet or Damp Squib? Sustainability 2017, 9, 1092.

11. Duffy, F. Building appraisal: A personal view. J. Build. Apprais. 2009, 4, 149–156.

12. Deming, M.E.; Swaffield, S. Landscape Architecture Research: Inquiry, Strategy, Design; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2011.

13. Preiser, W.F.E. Building performance assessment—from POE to BPE, a personal perspective. Archit. Sci. Rev. 2005, 48, 201–204.

14. Preiser, W.F.E.; Vischer, J.C. Assessing Building Performance; Routledge: Abingdon, Oxon, UK, 2005.

15. Preiser, W.F.E.; Rabinowitz, H.Z.; White, E.T. Post-Occupancy Evaluation; Routledge: Abingdon, Oxon, UK, 2015.

16. Preiser, W.F.E.; Davis, A.T.; Salama, A.M.; Hardy, A. Architecture Beyond Criticism: Expert Judgment and Performance Evaluation; Routledge: Abingdon, Oxon, UK, 2014.

17. Preiser, W.F.E. Building Evaluation; Plenum Press: New York, NY, USA, 1989.

18. Preiser, W.F.E.; Hardy, A.E.; Schramm, U. Building Performance Evaluation: From Delivery Process to Life Cycle Phases; Springer International Publishing: Cham, Switzerland, 2017.

19. Preiser, W.F.E.; Nasar, J.L. Assessing building performance: Its evolution from post-occupancy evaluation. Int. J. Archit. Res. 2008, 2, 84–99.

20. Yang, B.; Blackmore, P.; Binder, C. Assessing residential landscape performance: Visual and bioclimatic analyses through in-situ data. Landsc. Archit. 2015, 1, 87–98.

21. Yang, B. Landscape performance evaluation in socio-ecological practice: Current status and prospects. Socio-Ecol. Pract. Res. 2020, 2, 91–104.

22. Wang, Z.; Yang, B.; Li, S.; Binder, C. Economic benefits: Metrics and methods for landscape performance assessment. Sustainability 2016, 8, 424.

23. Yang, B.; Li, S.; Binder, C. A research frontier in landscape architecture: Landscape performance and assessment of social benefits. Landsc. Res. 2016, 41, 314–329.

24. Canfield, J.; Yang, B.; Whitlow, H. Evaluating Landscape Performance—A Guidebook for Metrics and Methods Selection; Landscape Architecture Foundation: Washington, DC, USA, 2018.

25. Nassauer, J.I. Landscape as medium. Landsc. Archit. Front. 2017, 5, 42–47.

26. Landscape Architecture Foundation. About Landscape Performance. Available online: https://www.landscapeperformance.org/about-landscape-performance (accessed on 19 October 2021).

27. Bowring, J. Landscape Architecture Criticism; Routledge: London, UK, 2020.

28. Roberts, C.J.; Edwards, D.J.; Hosseini, M.R.; Mateo-Garcia, M.; Owusu-Manu, D.G. Post-occupancy evaluation: A review of literature. Eng. Constr. Archit. Manag. 2019, 26, 2084–2106.

29. Landscape Architecture Foundation. Keeping Promises: Exploring the Role of Post-Occupancy Evaluation in Landscape Architecture. Available online: https://www.landscapeperformance.org/blog/2014/11/role-of-poe (accessed on 23 September 2021).

30. Marcus, C.C.; Tacha, A.; Drum, R.; Artuso, S.; Dockham, K. Why don’t landscape architects perform more POEs? Landsc. Archit. 2008, 98, 16–21.

31. Ozdil, T.R. Economic Value of Urban Design; VDM Publishing: Munich, Germany, 2008.

32. Ozdil, T.R. Social value of urban landscapes: Performance study lessons from two iconic Texas projects. Landsc. Archit. Front. 2016, 4, 12–30.

33. Arnold, P. Best of both worlds with POE. Build 2011, 2, 38–39.

34. National Research Council. Learning from Our Buildings: A State-of-the-Practice Summary of Post-Occupancy Evaluation; The National Academies Press: Washington, DC, USA, 2001; Volume 145, p. 137.

35. Barnes, M. Evaluating Landscape Performance. Available online: https://www.landfx.com/videos/webinars/item/5492-evaluating-landscape-performance.html (accessed on 19 October 2021).

36. Doidge, C. Post-occupancy evaluation. In Proceedings of the Architectural Education Exchange 2001 Architectural Educators: Responding to Change, Cardiff, UK, 11–12 September 2001; p. 32.

37. Hiromoto, J. Architect & Design Sustainable Design Leaders: Post Occupancy Evaluation Survey Report; SOM: New York, NY, USA, 2015.

38. Carmona, M.; Sieh, L. Performance Measurement in Planning—Towards a Holistic View. Environ. Plan. C: Gov. Policy 2008, 26, 428–454.

39. Carmona, M.; Sieh, L. Performance Measurement Innovation in English Planning Authorities. Plan. Theory Pract. 2005, 6, 303–333.

40. Laurian, L.; Crawford, J.; Day, M.; Kouwenhoven, P.; Mason, G.; Ericksen, N.; Beattie, L. Evaluating the Outcomes of Plans: Theory, Practice, and Methodology. Environ. Plan. B: Plan. Des. 2010, 37, 740–757.

41. Bordass, B.; Leaman, A.; Ruyssevelt, P. Assessing building performance in use 5: Conclusions and implications. Build. Res. Inf. 2001, 29, 144–157.

42. Cooper, I. Post-occupancy evaluation-where are you? Build. Res. Inf. 2001, 29, 158–163.

43. Hadjri, K.; Crozier, C. Post-occupancy evaluation: Purpose, benefits and barriers. Facilities 2009, 27, 21–33.

44. Lackney, J.A. The State of Post-Occupancy Evaluation in the Practice of Educational Design; ERIC: Online, 2001.

45. Vischer, J. Post-occupancy evaluation: A multifaceted tool for building improvement. In Learning from Our Buildings: A State-of-the-Practice Summary of Post-Occupancy Evaluation; National Academies Press: Washington, DC, USA, 2002; pp. 23–34.

46. Zimmerman, A.; Martin, M. Post-occupancy evaluation: Benefits and barriers. Build. Res. Inf. 2001, 29, 168–174.

47. Riley, M.; Kokkarinen, N.; Pitt, M. Assessing post occupancy evaluation in higher education facilities. J. Facil. Manag. 2010, 8, 202–213.

48. Jiao, Y.; Wang, Y.; Zhang, S.; Li, Y.; Yang, B.; Yuan, L. A cloud approach to unified lifecycle data management in architecture, engineering, construction and facilities management: Integrating BIMs and SNS. Adv. Eng. Inform. 2013, 27, 173–188.

49. Jaunzens, D.; Grigg, P.; Cohen, R.; Watson, M.; Picton, E. Building performance feedback: Getting started; BRE Electronic Publications: Bracknell, UK, 2003.

50. Cohen, R.; Standeven, M.; Bordass, B.; Leaman, A. Assessing building performance in use 1: The Probe process. Build. Res. Inf. 2001, 29, 85–102.

51. Yin, R.K. Case Study Research: Design and Methods, 5th ed.; Sage: Thousand Oaks, CA, USA, 2014; p. 282.

52. Small, M.L. ‘How many cases do I need?’: On science and the logic of case selection in field-based research. Ethnography 2009, 10, 5–38.

53. Fusch, P.I.; Ness, L.R. Are we there yet? Data saturation in qualitative research. Qual. Rep. 2015, 20, 1408.

54. Vollstedt, M.; Rezat, S. An introduction to grounded theory with a special focus on axial coding and the coding paradigm. Compend. Early Career Res. Math. Educ. 2019, 13, 81–100.

55. Glaser, B.G.; Holton, J. Remodeling Grounded Theory. Forum Qual. Sozialforschung/Forum: Qual. Soc. Res. 2004, 5.

56. Strauss, A.; Corbin, J. Basics of Qualitative Research: Grounded Theory Procedures and Techniques; Sage Publications: Thousand Oaks, CA, USA, 1990.

57. Charmaz, K. Constructing Grounded Theory: A Practical Guide through Qualitative Analysis; Sage: Thousand Oaks, CA, USA, 2006.

58. Krippendorff, K. Content Analysis: An Introduction to Its Methodology; Sage Publications: Thousand Oaks, CA, USA, 2018.

59. Elo, S.; Kyngäs, H. The qualitative content analysis process. J. Adv. Nurs. 2008, 62, 107–115.

60. Schreier, M. Qualitative Content Analysis in Practice; Sage Publications: Thousand Oaks, CA, USA, 2012.

61. Building Research Association New Zealand. BRANZ Annual Review 2021; BRANZ Incorporated: Porirua City, New Zealand, 2021.

62. Building Research Association New Zealand. Levy in Action 2021; BRANZ Incorporated: Porirua City, New Zealand, 2021.

63. Khemani, R.S. Glossary of Industrial Organisation Economics and Competition Law; Organisation for Economic Co-Operation and Development (OECD): Washington, DC, USA, 1993.

64. Blanchon, B. Criticism: The potential of the scholarly reading of constructed landscapes. Or, the difficult art of interpretation. J. Landsc. Archit. 2016, 11, 66–71.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).