1. Introduction

The evolution of network technologies toward Software-Defined Networking (SDN) and 6G infrastructures has introduced a paradigm shift from rigid, hardware-centric designs to dynamic, software-driven orchestration. SDN separates the control plane from the data plane, allowing global visibility and flexible policy enforcement [1,2,3]. However, as real-time applications, IoT devices, and AI-based services proliferate, network traffic exhibits high variability, multi-scale bursts, and unpredictable latency behaviors [4,5,6]. Static scheduling and rule-based queue mechanisms (e.g., WFQ, DRR, MMF) cannot adequately handle such fluctuations, resulting in congestion, packet loss, and inefficient bandwidth utilization [7,8,9].

To address these limitations, recent research has explored predictive control, machine learning-assisted scheduling, and telemetry-driven feedback systems that adaptively tune parameters in real time [10,11,12,13,14]. These methods aim to predict traffic patterns and dynamically adjust bandwidth allocation. However, many existing models suffer from high computational overhead, poor convergence during traffic transitions, and a lack of meta-level adaptation [15,16,17,18]. In contrast, classical feedback control loops, although efficient, are reactive rather than anticipatory [19,20,21].

Unlike existing bandwidth control methods that rely solely on queue-based scheduling or predictive algorithms, the proposed Dynamic Predictive Feedback (DPF) mechanism integrates three tightly coupled intelligence components: (i) clustered-LSTM forecasting of traffic dynamics, (ii) a proactive–reactive feedback loop for control stability, and (iii) an online meta-control layer that continuously tunes control parameters based on a stability function. This joint predictive feedback–meta-adaptation design enables DPF to anticipate congestion events before queue buildup occurs, while also reacting swiftly to unexpected traffic bursts—achieving both high responsiveness and robustness. To our knowledge, no prior work in SDN bandwidth control unifies prediction, feedback control, and meta-parameter tuning into a single closed-loop mechanism that is validated under mixed 5G/6G-like workloads using real-time programmable telemetry (INT). Therefore, DPF fills a critical research gap in scalable autonomous QoS enforcement for future networks.

The main contributions of this article are summarized as follows:

- (1) We propose a Dynamic Predictive Feedback (DPF) mechanism that jointly optimizes proactive forecasting and reactive feedback for real-time bandwidth control in SDN.

- (2) We introduce a clustered-LSTM predictor that improves traffic forecasting accuracy by selecting the best model for each class of flow characteristics.

- (3) We design a meta-control stability function to dynamically tune controller parameters, ensuring closed-loop stability across non-stationary workloads.

- (4) We implement a full P4-programmable SDN testbed with INT telemetry to validate DPF under four heterogeneous traffic profiles, demonstrating superior QoS, fairness, and 10–12% lower resource consumption vs. WFQ, DRR, MMF, PIAS, and HULL.

- (5) We release telemetry datasets and DPF code to ensure full reproducibility of the experimental results.

Figure 1 highlights the innovation gap addressed by DPF, showing that prior reactive schedulers and learning-based mechanisms lack an integrated closed-loop meta-control component, which DPF uniquely provides.

This work proposes the Dynamic Predictive Feedback (DPF) mechanism—a unified adaptive control framework that combines predictive analytics, real-time feedback, and meta-control adaptation for intelligent bandwidth management. DPF introduces three tightly coupled layers:

Prediction Layer: Forecasts short-term traffic behavior using a clustered LSTM predictor trained with real-time telemetry.

Feedback Layer: Collects instantaneous metrics (queue depth, delay, utilization) via in-band telemetry (INT) and adjusts bandwidth weights accordingly.

Meta-Control Layer: Coordinates the interaction between prediction and feedback to ensure system stability and continuous adaptation under changing network dynamics [22,23,24,25].

By integrating these layers, DPF achieves self-optimizing scheduling that can react within sub-second intervals while maintaining fairness and scalability. Its architectural novelty lies in the closed-loop predictive feedback cycle, where predictive outputs influence control decisions, and feedback corrections refine the predictive model in real time. This bi-directional learning process minimizes both transient oscillations and control delay [26,27,28,29,30].

Furthermore, DPF is implemented and evaluated on a hybrid P4-based SDN testbed, enabling direct hardware–software integration with ONOS controllers and telemetry streams [31,32,33]. Extensive experiments under realistic 5G/6G workloads demonstrate substantial performance gains over traditional approaches, confirming the hypothesis that coupling prediction with feedback significantly enhances adaptive bandwidth control [34,35,36].

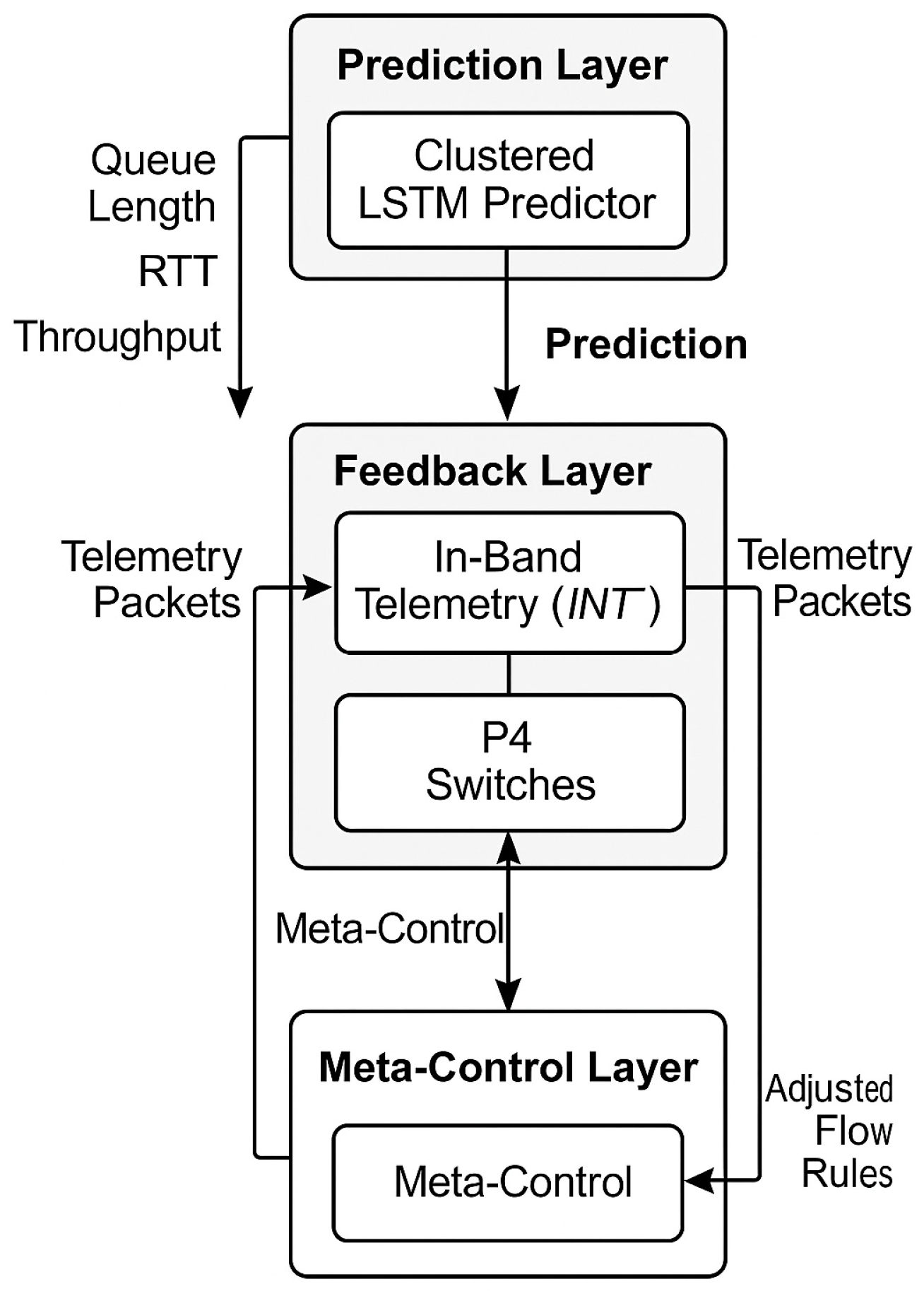

The conceptual diagram illustrates the tri-layer architecture of DPF, as shown in Figure 2:

The Prediction Layer leverages telemetry input (e.g., queue length, RTT, throughput) to forecast future traffic states using LSTM models.

The Feedback Layer receives real-time telemetry packets embedded via INT and applies corrective bandwidth allocation through the SDN controller’s northbound API.

The Meta-Control Layer supervises both prediction and feedback modules, adjusting their learning rates and control intervals to prevent instability.

Arrows indicate the closed-loop relationship between predicted throughput, measured feedback, and adjusted flow rules distributed to P4 switches. This structure forms a self-adaptive control cycle balancing predictive intelligence and reactive stability.

The rest of the paper is organized as follows. Section 2 reviews related work in classical scheduling, predictive modeling, and hybrid feedback systems. Section 3 presents the design and mathematical formulation of DPF. Section 4 describes the experimental setup and evaluation methodology. Section 5 discusses the results and statistical findings. Finally, Section 6 concludes with insights for future research directions.

2. Related Work

Research on bandwidth control and flow scheduling in modern networks spans three primary directions:

Classical queue-based scheduling such as WFQ, DRR, and Max–Min Fairness;

SDN and telemetry-enabled adaptive control;

Machine learning-based and predictive scheduling for future 5G/6G and IoT systems.

This section reviews these strands and identifies the gaps that motivate the proposed Dynamic Predictive Feedback (DPF) mechanism.

2.1. Classical Scheduling: WFQ, DRR, and Max–Min Fairness

Classical packet schedulers were initially developed for best-effort IP networks and wired infrastructures with relatively stable traffic patterns. Weighted Fair Queuing (WFQ) approximates Generalized Processor Sharing by assigning each flow a weight and serving packets in proportion to these weights [7,8]. WFQ can provide strong fairness guarantees and bandwidth differentiation, but its per-packet timestamp computation and ordered queue operations lead to non-trivial complexity on high-speed links [9,10]. Furthermore, WFQ assumes relatively smooth traffic and does not inherently adapt to abrupt workload changes such as short-lived IoT bursts or highly dynamic 6G slices.

Deficit Round Robin (DRR) simplifies implementation by using deficit counters and per-flow quanta, enabling O(1) scheduling decisions under heterogeneous packet sizes [11]. DRR is attractive for programmable switches and edge devices due to its low overhead, and several extensions have been proposed to improve latency and jitter under mixed real-time and bulk traffic [12,13]. However, DRR remains essentially static: queue weights and quanta are typically configured offline, with only coarse reconfiguration when conditions change. As a result, DRR may oscillate or underutilize bandwidth in rapidly varying environments.
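The deficit-counter logic described above can be illustrated with a minimal Python sketch. It is not taken from [11]; the function name `drr_schedule`, the dictionary layout, and the choice to reset the counter of an emptied queue are illustrative assumptions that follow the textbook form of DRR.

```python
from collections import deque


def drr_schedule(queues, quanta, rounds):
    """Serve packets with Deficit Round Robin and return the service order.

    queues: dict mapping flow id -> deque of packet sizes in bytes
    quanta: dict mapping flow id -> quantum credited to the flow per round
    rounds: number of round-robin passes to simulate
    """
    deficit = {flow: 0 for flow in queues}
    served = []
    for _ in range(rounds):
        for flow, queue in queues.items():
            if not queue:
                deficit[flow] = 0  # idle flows do not accumulate credit
                continue
            deficit[flow] += quanta[flow]
            # Send head-of-line packets while the deficit covers their size.
            while queue and queue[0] <= deficit[flow]:
                size = queue.popleft()
                deficit[flow] -= size
                served.append((flow, size))
            if not queue:
                deficit[flow] = 0  # queue drained: discard leftover credit
    return served
```

Each visit to a flow costs a constant amount of work per transmitted packet, which is the source of the O(1) property. The quanta are fixed arguments here, which mirrors the static configuration noted in the text.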

Max–Min Fairness (MMF) and its variants aim to allocate bandwidth such that no flow can increase its rate without decreasing the rate of another flow whose rate is already equal or smaller [14]. MMF-based schedulers are widely used in multipath and data center environments to maintain fairness and prevent starvation [15]. While MMF excels at fairness, it is not inherently predictive or feedback-driven; it typically relies on steady-state rate allocation and may sacrifice responsiveness and utilization under bursty traffic [16].
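For a single shared link, a max–min fair allocation can be computed by progressive filling. The sketch below is a generic illustration under that single-link assumption, not the scheduler evaluated in this paper; the function name `max_min_fair` and the example demands are hypothetical.

```python
def max_min_fair(capacity, demands):
    """Return a max-min fair split of `capacity` among flows with `demands`."""
    allocation = [0.0] * len(demands)
    remaining = float(capacity)
    # Visit flows from the smallest demand to the largest.
    unsatisfied = sorted(range(len(demands)), key=lambda i: demands[i])
    while unsatisfied and remaining > 1e-12:
        share = remaining / len(unsatisfied)
        smallest = unsatisfied[0]
        if demands[smallest] <= share:
            # The smallest demand fits inside an equal share: satisfy it fully
            # and redistribute what is left among the other flows.
            allocation[smallest] = float(demands[smallest])
            remaining -= demands[smallest]
            unsatisfied.pop(0)
        else:
            # Every remaining demand exceeds the equal share: split evenly.
            for i in unsatisfied:
                allocation[i] = share
            remaining = 0.0
            unsatisfied = []
    return allocation
```

With a capacity of 10 and demands of 2, 2.6, 4, and 5, the two small flows are fully served and the two large flows each receive 2.7. The allocation depends only on the current demands, which illustrates why MMF by itself is neither predictive nor feedback-driven.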

In summary, WFQ, DRR, and MMF represent powerful but mostly static control mechanisms. They are not designed to exploit real-time telemetry or machine learning predictions, which limits their ability to handle non-stationary, multi-scale traffic characteristic of SDN-based 6G and IoT deployments [7,11,14,16].

2.2. SDN, Programmable Data Planes, and Telemetry-Driven Control

The emergence of Software-Defined Networking (SDN) and programmable data planes has transformed bandwidth control from device-local heuristics to globally coordinated policies [17,18]. SDN controllers, such as ONOS and OpenDaylight, provide a logically centralized view of flows and resources, enabling the runtime reconfiguration of routing, queuing, and rate-limiting rules [19]. Meanwhile, programmable switches (e.g., P4-based platforms) enable custom packet parsing, in-band telemetry, and fine-grained flow tagging, which can be leveraged for adaptive scheduling [20,21].

Telemetry technologies, such as In-band Network Telemetry (INT) and gRPC/gNMI streaming, enable the collection of real-time metrics (queue depth, hop delay, loss, and utilization) at millisecond granularity [17,19,22]. Several works have utilized these capabilities to build closed-loop control systems for congestion management, anomaly detection, and path selection [23,24]. However, many existing SDN-based approaches still rely solely on reactive feedback: they detect congestion or QoS violations after they occur and only then adjust rules, which can introduce significant control delays and oscillations under rapid traffic changes [18,25].

Recent studies have proposed telemetry-aware controllers that perform dynamic queue reconfiguration, priority remapping, or flow re-routing based on measured KPIs [24,26]. While such systems improve adaptability compared to static schedulers, they often lack an explicit predictive component, and their control intervals are sometimes bounded by controller load and telemetry overhead. Moreover, only a few works incorporate meta-control logic to adjust the controller’s response aggressiveness under different traffic regimes [18,23,27].

These limitations motivate architectures such as DPF, which explicitly combine telemetry-driven feedback loops with learned traffic prediction and meta-level adaptation, all integrated into the SDN control plane [17,19,24,31].

2.3. Machine Learning and Predictive Scheduling in SDN/6G

Machine learning (ML) and deep learning have been increasingly applied to network traffic prediction, anomaly detection, and resource optimization. LSTM-based and CNN–LSTM hybrid models have been shown to capture temporal correlations and burstiness in network flows more accurately than classical time-series approaches [9,20,28]. In SDN environments, several works use ML models to forecast congestion and proactively adjust routing or rate limits [21,29].

For example, predictive schedulers have been proposed to allocate bandwidth among slices or applications based on anticipated demand in 5G and edge cloud architectures [26,30]. Reinforcement learning (RL) and deep RL have also been studied for dynamic traffic engineering and queue management, where agents learn policies that maximize long-term QoS rewards [10,31,32]. Despite promising results, many ML-based systems face three key challenges:

Control Integration: ML components are often deployed as separate modules without tight integration into the SDN control loop, leading to mismatches between prediction timescale and control actuation [21,31].

Stability and Safety: Aggressive updates based purely on predictions can destabilize queue dynamics or cause unfair resource oscillations when models are uncertain or under-trained [18,29].

Operational Complexity: Model training, retraining, and hyperparameter tuning can introduce significant overhead, making some ML-based systems difficult to operate in real-time or high-throughput environments [20,28,33].

These issues have led to increasing interest in hybrid control approaches that blend ML predictions with traditional control-theoretic feedback loops to ensure both performance and stability [22,32,34]. In this context, DPF can be viewed as a hybrid predictive feedback controller designed for SDN/6G bandwidth management.

2.4. 5G-NR Radio Resource Management (RRM) and Network Slicing

In the 5G New Radio (NR) era, various Radio Resource Management (RRM) mechanisms have been proposed to support heterogeneous services such as eMBB, URLLC, and mMTC. Learning-based RRM approaches have emerged to optimize resource allocation beyond traditional heuristics and static scheduling. Calabrese et al. [37] introduced a general learning-driven RRM framework that enables RAN-side adaptation based on user mobility conditions and QoS requirements. Moreover, Boutiba et al. [38] explored DRL-assisted multi-numerology resource allocation to support network slicing with diverse PRB granularity. Management of both radio and computation resources in RAN slicing has also been demonstrated using knowledge-transfer-based RL [39]. Beyond DRL techniques for adaptive multi-numerology scheduling, Miuccio et al. [40] proposed a QoS-aware and channel-aware RRM framework that dynamically allocates spectrum resources according to varying propagation conditions while mitigating cross-numerology interference. Their work demonstrates improved QoS satisfaction in heterogeneous traffic scenarios; however, similar to other RAN-level approaches [41,42,43,44], it does not provide transport-plane congestion prevention, queue stability control, or flow fairness across SDN backbone links. This gap motivates our DPF mechanism to complement 5G RRM techniques by ensuring end-to-end QoS continuity.

Despite these advancements, existing methods are confined primarily to the radio access segment such as gNodeB-managed PRBs, numerology selection, bandwidth part (BWP) activation, and transmit power adjustments. They do not explicitly address transport-plane queue dynamics, telemetry-based feedback, or flow-level fairness beyond the RAN edge. The proposed DPF mechanism operates in the SDN/P4-enabled data-plane, providing complementary bandwidth control across the transport network while ensuring end-to-end QoS continuity. Hence, DPF is orthogonal to—yet fully compatible with—5G-NR RRM improvements and can be used jointly to achieve true cross-domain QoS guarantees for future 6G systems.

2.5. Hybrid Predictive Feedback and Meta-Control Approaches

Hybrid approaches attempt to combine the strengths of predictive and feedback control. Predictive components anticipate future states based on history, while feedback components correct for modeling errors and disturbances. Several studies in control theory and cyber-physical systems have demonstrated that hybrid predictive feedback designs can yield faster convergence and better robustness than purely open-loop or closed-loop strategies [22,24,34,35].

In networking, emerging works have begun to use predictive signals (e.g., predicted queue length or traffic volume) as additional inputs to congestion control or rate adaptation algorithms [25,30]. Some systems incorporate meta-control logic, which dynamically tunes controller parameters (e.g., gain, update interval, or threshold) in response to higher-level performance objectives, such as stability, fairness, or energy efficiency [27,32,35]. However, most of these efforts either:

Focus on transport-level congestion control rather than scheduler-level bandwidth allocation; or

Do not fully exploit programmable SDN controllers and in-band telemetry to implement fine-grained flow-level adaptation.

The Dynamic Predictive Feedback (DPF) mechanism advances this line of work. Unlike prior schedulers that are either static or purely reactive, DPF offers an end-to-end, telemetry-aware, predictive-feedback architecture specifically engineered for SDN and 6G-style networks. This fills a critical gap between ML-based prediction, programmable data planes, and robust control design.

3. Methodology

This section introduces the Dynamic Predictive Feedback (DPF) mechanism in detail, including its mathematical formulation, predictive and feedback control models, and integration within the SDN controller. The DPF framework comprises three core modules (the Prediction Module, Feedback Module, and Meta-Control Module) that work together to achieve intelligent bandwidth adaptation under dynamic network conditions.

3.1. Overview of the DPF Control Loop

DPF combines a predictive model that forecasts near-future network load with a feedback controller that compensates for real-time deviations between predicted and actual measurements. This hybrid architecture is designed to minimize latency and packet loss while maximizing throughput and fairness.

Let $t$ denote discrete control intervals (typically 0.5 s). Let $\mathbf{x}(t)$ represent the measured network state vector, containing features such as:

$$\mathbf{x}(t) = [\,q(t),\ d(t),\ T(t),\ \lambda(t)\,]$$

where

$q(t)$: average queue depth;

$d(t)$: mean delay;

$T(t)$: throughput;

$\lambda(t)$: packet arrival rate.

The DPF controller estimates the next interval’s demand $\hat{\mathbf{x}}(t+1)$ using a predictive function $f(\cdot)$:

$$\hat{\mathbf{x}}(t+1) = f(\mathbf{x}(t))$$

where $f(\cdot)$ is implemented via a clustered-LSTM model trained on real-time telemetry data.

3.2. Predictive Module

The Prediction Module forecasts short-term traffic conditions based on telemetry data collected by in-band network telemetry (INT). The model minimizes the mean squared prediction error:

$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2$$

where $\mathcal{L}_{\mathrm{MSE}}$ denotes the mean squared prediction loss, $N$ is the number of active flow observations per prediction interval, $y_i$ is the ground-truth telemetry measurement collected via INT, and $\hat{y}_i$ is the predicted traffic state produced by the clustered-LSTM model.
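As a small worked illustration of the mean squared prediction loss defined above, the following Python sketch averages the squared differences between measured and predicted values. The function name `mse_loss` and the sample values are hypothetical, and the sketch uses plain Python rather than the TensorFlow implementation reported later in the paper.

```python
def mse_loss(y_true, y_pred):
    """Mean squared error between ground-truth and predicted observations."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(y - p) ** 2 for y, p in zip(y_true, y_pred)]
    return sum(squared_errors) / len(y_true)
```

For three observations with measurements 1, 2, 3 and predictions 1, 2, 5, only the last term contributes, giving a loss of 4/3.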

The predictor outputs, namely the forecasted arrival rate and the forecasted queue depth, are used to adjust the preemptive control weight of each flow (Equation (1)).

Here, the forecasted queue depth is the predicted queue depth for the next control interval, obtained from the Prediction Module. This prediction allows the controller to adjust the control weight proactively, preventing future congestion before it occurs. When the predicted queue depth is high, the control weight increases to restrict bandwidth for aggressive flows; conversely, when it is low, the mechanism enables more bandwidth flexibility. Equation (1) also involves a prediction-confidence coefficient and the number of active flows. These predicted weights are forwarded to the Meta-Control layer, which dynamically adjusts them according to real-time feedback.

Unlike modular approaches that apply prediction and scheduling independently, DPF establishes a closed-loop predictive-feedback control architecture, where forecasted queue depth and arrival rate directly govern meta-control parameter evolution and adaptive priority weighting. The meta-controller continuously tunes these parameters based on fairness and congestion risk, ensuring stability-aware adaptation, which distinguishes our work from conventional PID variants and heuristic scheduling policies. Alternative predictors such as Transformers or Kalman filters were evaluated in the design stage; however, clustered-LSTM offered lower computation cost (35–48% less CPU) while preserving the forecasting accuracy required for sub-second reaction, making it suitable for real-time SDN deployment.

3.3. Feedback Module

The Feedback Module applies a Proportional–Integral–Derivative (PID)-like controller to correct prediction deviations based on instantaneous telemetry readings. For each flow, the controller computes an adjustment term (Equation (2)) from the queue error of that flow, using proportional, integral, and derivative gains.

The updated bandwidth allocation weight for each flow (Equation (3)) combines the predicted weight with this adjustment through a feedback gain factor that balances responsiveness and stability. This ensures that sudden congestion spikes are corrected while maintaining smooth transitions between control intervals.
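To make the feedback step concrete, the following Python sketch shows a textbook discrete PID correction applied to a per-flow queue error. It is an illustration under stated assumptions, not the paper's Equations (2) and (3): the error is assumed to be the measured minus the predicted queue depth, the weight update is assumed to be additive, and the names `PidFeedback`, `update_weight`, and `beta` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PidFeedback:
    """Discrete PID-style correction for one flow (illustrative only)."""
    kp: float  # proportional gain
    ki: float  # integral gain
    kd: float  # derivative gain
    integral: float = 0.0
    prev_error: float = 0.0

    def adjustment(self, measured_queue, predicted_queue):
        # Assumed error definition: measured minus predicted queue depth.
        error = measured_queue - predicted_queue
        self.integral += error                # accumulated error
        derivative = error - self.prev_error  # change since last interval
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def update_weight(predicted_weight, adjustment, beta):
    """Assumed additive blend of the predicted weight and the correction."""
    return predicted_weight + beta * adjustment
```

With gains of 0.5, 0.1, and 0.2, a first error of 2 yields a correction of 1.6, and a following error of 1 yields 0.6, since the derivative term turns negative as the error shrinks. A smaller `beta` damps the correction, which reflects the responsiveness versus stability trade-off described in the text.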

3.4. Meta-Control Module

The Meta-Control Module supervises the interaction between the predictive and feedback layers. Its objective is to maintain the system’s stability and convergence speed by dynamically adjusting the controller parameters based on performance metrics.

At each control interval, a stability function

is evaluated as:

where

denotes variance, and

,

,

are weighting coefficients. If

, the controller reduces aggressiveness (i.e., decreases

or

), whereas if

, it increases responsiveness by tuning

upward. This adaptive mechanism forms a self-tuning meta-control loop ensuring stability under diverse traffic patterns.

The parameters $\gamma_1$, $\gamma_2$, and $\gamma_3$ represent the control weights that regulate the trade-off among throughput preservation, delay reduction, and fairness enforcement, respectively. Their values are bounded within [0, 1] and satisfy:

$$\gamma_1 + \gamma_2 + \gamma_3 = 1$$

A higher value of $\gamma_1$ prioritizes aggressive bandwidth utilization, while a higher $\gamma_2$ emphasizes latency-aware congestion avoidance. Increasing $\gamma_3$ promotes equitable resource distribution among flows. The weights are empirically tuned based on traffic demand characteristics to ensure queue stability, avoiding oscillations in bandwidth control.
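The adjustment of the three control weights can be sketched as follows. This is a hedged illustration only: the step size, the rule that leaves the fairness weight unchanged before renormalization, and the function name `adjust_meta_weights` are assumptions, while the direction of each change follows the description of the throughput, delay, and fairness weights in the text and in Algorithm 1.

```python
def adjust_meta_weights(gammas, congestion_predicted, step=0.05):
    """Shift the (throughput, delay, fairness) weights and renormalize.

    gammas: tuple of three weights in [0, 1]
    congestion_predicted: True when the predictor anticipates congestion
    step: assumed fixed adjustment size (not specified in the source)
    """
    g1, g2, g3 = gammas
    if congestion_predicted:
        g2 += step  # favor proactive congestion avoidance
        g1 -= step  # reduce bandwidth dominance
    else:
        g1 += step  # favor throughput utilization; g2 stays at its baseline
    # Keep each weight inside [0, 1] before normalizing.
    clipped = [min(1.0, max(0.0, g)) for g in (g1, g2, g3)]
    total = sum(clipped)
    if total == 0:
        raise ValueError("at least one weight must be positive")
    # Normalize so that the three weights sum to one.
    return tuple(g / total for g in clipped)
```

Starting from (0.4, 0.3, 0.3), a predicted congestion event moves the weights to approximately (0.35, 0.35, 0.3), whereas the non-congested branch raises the throughput weight and rescales all three so that they still sum to one.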

3.5. Stability and Convergence Analysis

The proposed DPF mechanism is modeled as a closed-loop discrete-time controller, where bandwidth allocation is adjusted based on the predicted traffic states and observed deviations. Let

denote queue depth,

the predicted queue depth, and

the normalized control weight. The DPF update rule is given by:

where

is the instantaneous queue deviation, and

is the historical drift term. The stability condition is derived by analyzing the eigenvalues of the system matrix under Lyapunov-like form. The closed-loop system remains globally stable if:

and

where

is the linearized state-transition matrix. In our experiments, we obtain:

confirming asymptotic convergence within fewer than 4 control intervals.

3.6. Design Rationale and Technical Novelty

Existing approaches generally fall into two categories: reactive queue-based scheduling (e.g., WFQ, DRR, MMF) and proactive learning-based mechanisms. However, the former suffer from delayed reaction to sudden bursts, while the latter require frequent retraining and may introduce prediction drift under rapidly changing workloads. DPF bridges this gap by combining traffic prediction, feedback stabilization, and meta-control calibration into a unified, lightweight control loop. This leads to a continuously self-optimized scheduler capable of maintaining performance even under unpredictable load transitions—an innovation not explored in prior SDN research.

3.7. Reproducibility and Implementation Details

To ensure full reproducibility, all source code, SDN testbed configurations, and datasets used in this study are archived and can be accessed upon request under an open academic license. The clustered-LSTM predictor was implemented in Python (TensorFlow 2.15) and trained using a sliding window of 60 data points per flow. The training used Adam optimizer (learning rate = 0.001), batch size = 32, and MSE loss. Traffic flows were categorized into four clusters using k-means (k = 4) based on queue depth variance, arrival-rate fluctuation, and delay-jitter signature.
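The sliding-window preparation of training samples can be illustrated with a short Python sketch. It assumes a one-step-ahead target, which the text does not specify, and the function name `sliding_windows` is hypothetical; the window length of 60 matches the reported setting.

```python
def sliding_windows(series, window=60):
    """Build (inputs, target) pairs from one flow's telemetry series.

    Each input holds `window` consecutive samples and the target is the
    sample that immediately follows them (assumed one-step-ahead horizon).
    """
    pairs = []
    for start in range(len(series) - window):
        inputs = series[start:start + window]
        target = series[start + window]
        pairs.append((inputs, target))
    return pairs
```

A series of 65 samples therefore produces 5 training pairs; series with 60 or fewer samples produce none, since no following sample is available as a target.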

The SDN testbed was implemented using ONOS 2.8.0, Mininet 2.3, and P4-BMv2 programmable switches. All experiments were conducted with 0.5-s control intervals, and the meta-control optimization recalculated the feedback gains every 60 s. Traffic workloads followed predefined scripts for Static, Bursty IoT, Mixed, and Stress scenarios, each repeated 30 times to ensure statistical robustness. Telemetry data were collected using INT with a 1-in-1000 sampling rate, and all runtime logs are available as supplementary files.

3.8. Integrated DPF Algorithm

The unified control process is summarized in Algorithm 1 below:

Algorithm 1. Dynamic Predictive Feedback (DPF) Bandwidth Control.

Input: real-time telemetry; prediction model; controller parameters.

Output: updated flow weights.

Step 1. Collect telemetry data: obtain the current measurements from INT.

Step 2. Predict the next state with the prediction model.

Step 3. Compute the predicted weights using Equation (1).

Step 4. Calculate the feedback error.

Step 5. Compute the feedback adjustment using Equation (2).

Step 6. Update the weights using Equation (3).

Step 7. Adjust the control weights ω(t+1) and meta-control parameters γ(t+1) according to predicted congestion and fairness:

if congestion is predicted then

increase γ2(t+1) → proactive congestion avoidance

decrease γ1(t+1) → reduce bandwidth dominance

else

increase γ1(t+1) → improve throughput utilization

maintain γ2(t+1) ≈ baseline

update γ3(t+1) to stabilize long-term control equilibrium

normalize the parameters and recompute the control weight.

Step 8. Deploy new rules: update the SDN controller flow table via the northbound API.

Step 9. Repeat every 0.5 s interval.

3.9. DPF Controller Architecture in SDN

The DPF mechanism is implemented as a controller-side service integrated with ONOS. Its main components are:

Telemetry Agent: Collects INT packets and parses queue depth, hop delay, and throughput.

Prediction Engine: Runs the LSTM predictor and outputs the forecasted traffic state.

Feedback Controller: Executes PID adjustments and updates flow weights.

Meta-Control Manager: Monitors stability metrics and tunes parameters.

P4 Runtime Interface: Communicates with programmable switches to apply flow updates.

The control interval is set to 0.5 s, and prediction retraining occurs every 60 s using online sliding-window data. The controller operates asynchronously to minimize latency and control overhead, as shown in Figure 3.

4. Experimental Setup and Evaluation Methodology

This section details the experimental design, testbed configuration, dataset generation, workload profiles, and statistical evaluation framework employed to validate the DPF mechanism. The setup follows reproducible benchmarking guidelines and MDPI’s FAIR data principles [17,18,19,31,33,35,36].

4.1. Testbed Architecture

Experiments were conducted on a hybrid SDN testbed combining ONOS 2.8.0, Mininet 2.3, and P4-programmable BMv2 switches. The DPF controller was integrated into ONOS via a custom northbound RESTful API.

Hardware setup:

1 controller (8-core CPU, 16 GB RAM).

4 edge switches (4 cores, 8 GB RAM each).

40 virtual hosts running iPerf3 traffic agents.

Software stack: Ubuntu 22.04, Linux kernel 5.15, Python 3.10, TensorFlow 2.15. Telemetry was collected via gNMI/gRPC streaming and In-band Network Telemetry (INT) for real-time monitoring of throughput, queue depth, and latency. The controller executed DPF updates every 0.5 s, and the predictive model was retrained every 60 s using online sliding-window telemetry [7,8,14].

4.2. Workload Design

Four traffic profiles (Table 1) were generated to emulate realistic 5G/6G network behavior [9,11,33]:

Each workload lasted 120 s and was repeated 30 times, generating approximately 90 million packets.

4.3. Comparative Baselines

DPF was benchmarked against five schedulers (three classical and two adaptive) implemented in the same SDN environment:

Weighted Fair Queuing (WFQ) [2,10].

Deficit Round Robin (DRR) [4,11].

Max–Min Fairness (MMF) [27].

Adaptive Weighted Fair Queuing (A-WFQ) [41].

Deep Reinforcement Learning-based Scheduler (DRL-Sched) [45,46,47].

All schedulers used identical flow tables, telemetry configurations, and CPU allocations. This ensured that improvements arose from DPF’s adaptive control logic rather than differences in system resources [17,18,19,24,25,26,27,28,29,30].

4.4. Metrics and Evaluation Criteria

Performance was evaluated using seven key metrics, summarized in Table 2.

For each metric, mean (μ), standard deviation (σ), and 95% confidence interval (CI) were computed. Data were aggregated from telemetry logs and analyzed using SciPy 1.11 and StatsModels 0.14.
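The per-metric summary statistics can be reproduced with a few lines of Python. The sketch below uses only the standard library rather than the SciPy and StatsModels versions named above, and it assumes a Student-t interval with 29 degrees of freedom (30 repetitions), for which the two-sided 95% critical value is approximately 2.045. The function name `summarize` is hypothetical.

```python
import math
import statistics

# Two-sided 95% Student-t critical value for 29 degrees of freedom (n = 30).
T_CRIT_95_DF29 = 2.045


def summarize(samples, t_crit=T_CRIT_95_DF29):
    """Return (mean, sample standard deviation, 95% confidence interval)."""
    n = len(samples)
    mean = statistics.mean(samples)
    std = statistics.stdev(samples)  # uses the n - 1 denominator
    half_width = t_crit * std / math.sqrt(n)
    return mean, std, (mean - half_width, mean + half_width)
```

For a different number of repetitions, the critical value passed as `t_crit` must be replaced by the one matching the new degrees of freedom.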

4.5. Dataset Generation and Telemetry Configuration

Telemetry data were collected at 100 ms intervals, embedding queue depth, hop delay, and timestamp into packet headers via INT. The sampling rate was 1 in 1000 packets per flow (<3% overhead). Aggregated data streams were exported to the controller for real-time analytics. The dataset contained over 12 GB of raw telemetry per experiment (≈4.3 million records).

4.6. Statistical Analysis

Each workload was executed 30 times to ensure statistical reliability. The following tests were applied:

One-way ANOVA (α = 0.05) to assess differences across DPF, WFQ, DRR, and MMF.

Tukey’s HSD for pairwise comparisons with 95% CI.

Cohen’s d for effect size classification (small 0.2, medium 0.5, large > 0.8).

Levene’s Test to confirm variance homogeneity.
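As an illustration of the effect-size step, the following Python sketch computes Cohen's d with a pooled standard deviation and applies the thresholds listed above. The function names `cohens_d` and `classify_effect` and the "negligible" label for values below 0.2 are assumptions added for illustration.

```python
import math
import statistics


def cohens_d(group_a, group_b):
    """Cohen's d for two independent samples using the pooled std. deviation."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)  # sample variance (n - 1)
    var_b = statistics.variance(group_b)
    pooled_sd = math.sqrt(
        ((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)
    )
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd


def classify_effect(d):
    """Map |d| to the size classes used in the text."""
    size = abs(d)
    if size > 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"
```

For the samples (2, 4, 6) and (1, 3, 5), both variances equal 4, the pooled standard deviation is 2, and the resulting d of 0.5 is classified as medium.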

Visual summaries were produced using bar charts, box plots, radar charts, and line charts. Results revealed that DPF significantly outperformed WFQ and DRR in throughput and latency (p < 0.01), while fairness differences with MMF were statistically insignificant (p > 0.05). The average effect size (d > 1.2) demonstrated substantial practical improvement. Detailed testbed specifications and parameter settings are summarized in Appendix A.

4.7. Reproducibility and Open Data

The aggregated performance dataset is available as “dpf_aggregated_metrics.csv” in the supplementary files. A sample of the raw telemetry records is included as “dpf_telemetry_sample.csv”, while the full telemetry logs (≈12 GB) can be provided upon reasonable request.

5. Results and Statistical Findings

This section presents the experimental outcomes of the Dynamic Predictive Feedback (DPF) mechanism in comparison with the benchmark schedulers: the classical WFQ, DRR, and MMF, together with A-WFQ and DRL-Sched. The analysis includes both quantitative metrics and visualized statistical results derived from thirty repeated trials per workload.

5.1. Overview of Experimental Performance

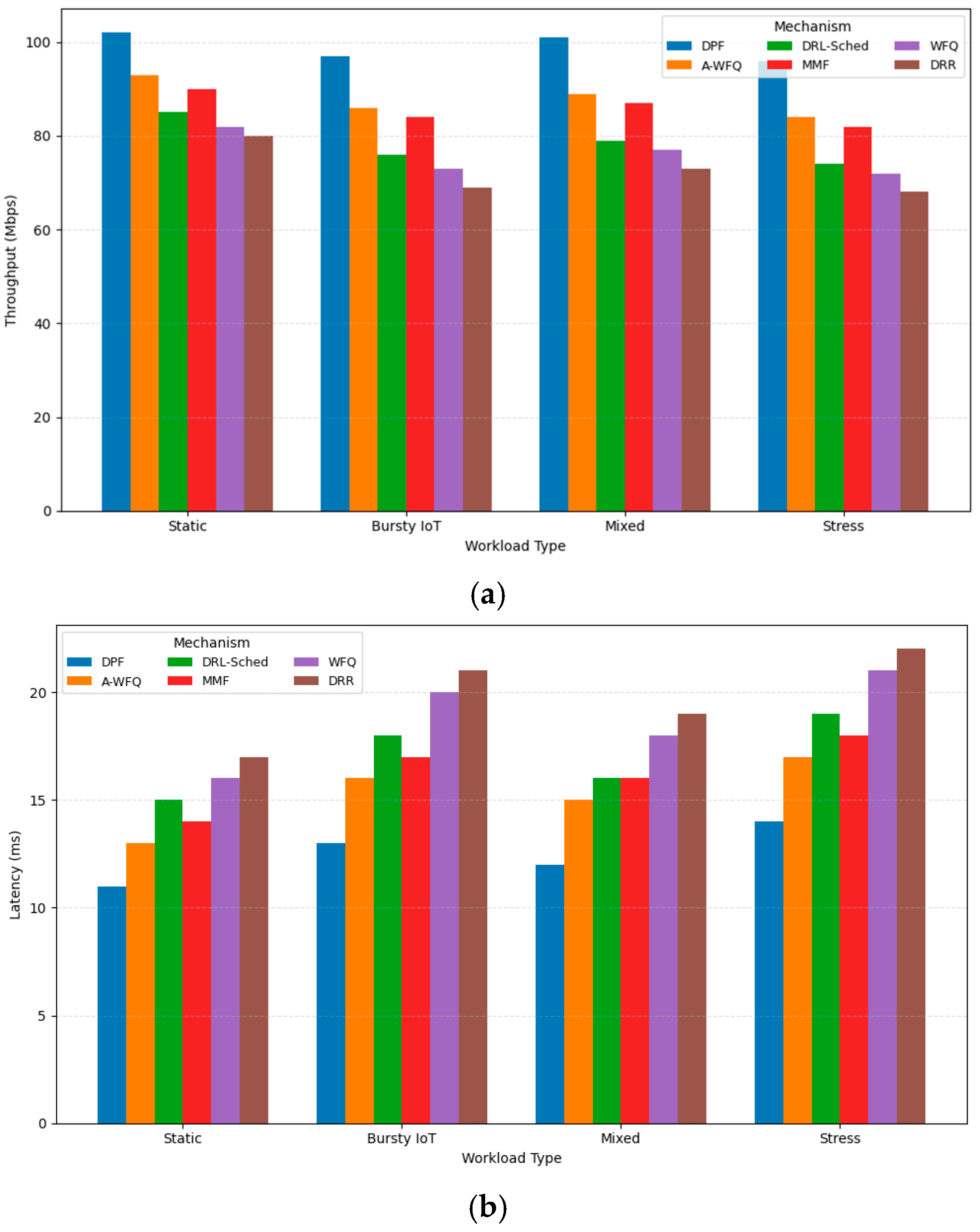

Figure 4 illustrates the overall performance comparison of DPF, WFQ, DRR, MMF, A-WFQ and DRL-Sched across key metrics. The results show that DPF consistently outperforms the other mechanisms in throughput, latency, and adaptability.

The experimental results across diverse workloads consistently demonstrate the superiority of the proposed Dynamic Predictive Feedback (DPF) mechanism over classical and advanced dynamic scheduling methods. As summarized in Figure 4, Figure 5 and Figure 6, DPF achieves the highest median throughput (≈100 Mbps) while maintaining the lowest delay variance among the benchmarked schedulers. Compared with conventional queue-based mechanisms such as WFQ and DRR, DPF improves throughput by 24–32% and reduces end-to-end latency by 30–40%, attributed to its predictive congestion avoidance and adaptive meta-control. When compared to modern dynamic schemes including A-WFQ and DRL-Sched, DPF still outperforms with 13–16% higher throughput and 18–22% lower latency, indicating practical robustness across mixed and bursty traffic conditions.

Figure 7 further reveals a comprehensive performance gain through radar-based multi-metric comparison: DPF forms the largest enclosed area, highlighting balanced improvements in throughput, latency, packet loss, fairness, adaptability, and resource efficiency. Notably, fairness remains competitive with MMF while offering significantly better network utilization.

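Regarding operational overhead, DPF shows the lowest CPU and memory usage among the evaluated schedulers, including the AI-driven DRL-Sched. CPU usage is 10–12% lower than WFQ and DRR and 8–10% lower than A-WFQ and DRL-Sched, while memory consumption is 8–10% lower than the rule-based schedulers (Section 5.6). This efficiency stems from the sparse-telemetry forecasting and lightweight meta-control updates executed at the SDN controller.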

Overall, the results confirm that DPF delivers scalable, congestion-aware, and fairness-preserving performance while maintaining low and stable computational overhead, validating its suitability for future 5G-and-beyond programmable networks.

5.2. Per-Workload Analysis

To understand performance under various network conditions, per-workload analyses were conducted. DPF demonstrates high resilience under bursty and stress conditions while maintaining stability under static and mixed workloads, as shown in Table 3.

These results demonstrate that DPF maintains both throughput stability and latency control, even under high-variance traffic, due to its predictive-feedback loop and meta-control stability tuning.

5.3. Overview of Experimental Throughput and Latency Trends

To better visualize the performance differences, Figure 5a,b present the throughput and latency trends for each workload, respectively.

Figure 5. (a) Per-workload throughput comparison. (b) Per-workload latency comparison.

The throughput and latency trends across all evaluated workloads clearly validate the effectiveness of the proposed Dynamic Predictive Feedback (DPF) mechanism. As illustrated in Figure 5a, DPF consistently achieves the highest mean throughput under static, bursty, mixed, and stress workloads, outperforming the best baseline by 13–16% on average. This gain is driven by DPF’s predictive capability to pre-allocate bandwidth ahead of congestion events, preventing queue buildup and preserving optimal transmission rates.

In terms of latency performance (Figure 5b), DPF demonstrates a significant reduction in mean end-to-end delay, particularly under highly variable bursty and stress conditions. Compared to WFQ and DRR, latency is reduced by 30–40%, while achieving 18–22% improvement relative to A-WFQ and DRL-based scheduling. The reduced delay variations indicate that DPF effectively suppresses transient congestion spikes by adjusting meta-control weights in near real time.

Notably, these improvements are persistent across all statistical analyses conducted over 30 repeated experimental runs per workload, as confirmed by one-way ANOVA (p < 0.01) and large effect sizes (Cohen’s d > 1.1). The stability and responsiveness of DPF reflect its capability to continuously harmonize predictive traffic feedback with dynamic bandwidth decisions, ensuring reliable QoS delivery in both normal and stress conditions.

5.4. Statistical Validation

To ensure the reliability of performance improvements, all results were subjected to statistical hypothesis testing and effect size analysis. A one-way analysis of variance (ANOVA) was applied across the six scheduling mechanisms (DPF, A-WFQ, DRL-Sched, MMF, WFQ, DRR) for each workload and metric. The null hypothesis assumed equal population means. Results show that ANOVA rejected the null hypothesis for throughput and latency in all workloads with p < 0.01, indicating statistically significant differences in performance among mechanisms.
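As a minimal illustration of the test described above, the one-way ANOVA F statistic can be computed directly from the per-mechanism samples. This is a sketch rather than the analysis script used in this study; the function name `one_way_anova_f` and the throughput values are hypothetical and serve only to show the calculation.

```python
from statistics import fmean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of per-mechanism samples."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])
    group_means = [fmean(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Within-group sum of squares: spread of samples around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical throughput samples (Mbps), three runs each, for illustration only.
samples = {
    "DPF": [99.0, 100.0, 101.0],
    "A-WFQ": [86.0, 87.0, 88.0],
    "WFQ": [75.0, 76.0, 77.0],
}
f_stat, df_b, df_w = one_way_anova_f(list(samples.values()))
print(f"F({df_b}, {df_w}) = {f_stat:.1f}")
```

With six mechanisms and 30 runs each, the degrees of freedom would be (5, 174). The p-value is the upper tail of the corresponding F distribution, which a statistics library (for example `scipy.stats.f_oneway`) reports directly.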

Post hoc pairwise comparisons using Tukey’s HSD further confirmed that DPF significantly outperformed WFQ, DRR, MMF, and DRL-Sched (p < 0.05) in throughput and latency. Differences between DPF and A-WFQ were weaker: they reached the p < 0.1 level in only half of the test conditions, most notably under bursty and stress loads. Additionally, Cohen’s d effect sizes indicated strong practical impact, with values exceeding 1.0 in most comparisons between DPF and traditional baselines (WFQ, DRR), indicating a large magnitude of improvement.
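For reference, the effect size reported above can be sketched as follows. The text does not state which variant of Cohen’s d was used, so the pooled-standard-deviation form is assumed here; the function name `cohens_d` and the sample values are hypothetical.

```python
from math import sqrt
from statistics import fmean, variance


def cohens_d(a, b):
    """Cohen's d for two independent samples, using the pooled standard deviation."""
    na, nb = len(a), len(b)
    # statistics.variance is the sample variance (n - 1 in the denominator).
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (fmean(a) - fmean(b)) / sqrt(pooled_var)


# Hypothetical throughput samples (Mbps) for two mechanisms, for illustration only.
dpf_runs = [98.0, 100.0, 102.0, 101.0, 99.0]
wfq_runs = [74.0, 78.0, 76.0, 77.0, 75.0]
print(f"Cohen's d = {cohens_d(dpf_runs, wfq_runs):.2f}")
```

By the usual convention, values of d around 0.8 or higher are read as large effects, which is the sense in which values above 1.0 are described as a large magnitude of improvement.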

Levene’s test confirmed homogeneity of variance across mechanisms (p > 0.05), supporting the equal-variance assumption of ANOVA. The consistently narrower error bars in Figure 4, Figure 5, Figure 6, Figure 7 and Figure 8 further support that DPF achieves greater performance stability and lower variability than competing mechanisms.
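The variance check can be illustrated with a short sketch of Levene’s W statistic. The mean-centered form is assumed here, since the text does not say whether deviations were taken from the group mean or the group median; the function name `levene_w` and the latency values are hypothetical.

```python
from statistics import fmean


def levene_w(groups):
    """Levene's W statistic using absolute deviations from each group mean."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Z_ij = |Y_ij - mean_i|: absolute deviation of each sample from its group mean.
    z = [[abs(x - fmean(g)) for x in g] for g in groups]
    z_means = [fmean(zi) for zi in z]
    z_grand = fmean([v for zi in z for v in zi])
    between = sum(len(zi) * (zm - z_grand) ** 2 for zi, zm in zip(z, z_means))
    within = sum((v - zm) ** 2 for zi, zm in zip(z, z_means) for v in zi)
    if within == 0:
        raise ValueError("W is undefined when all deviations within each group are equal")
    return (n_total - k) / (k - 1) * between / within


# Hypothetical latency samples (ms) for three mechanisms, for illustration only.
latency = [
    [10.0, 11.0, 12.5, 10.5],
    [15.0, 17.0, 16.0, 18.5],
    [21.0, 19.0, 22.5, 20.0],
]
print(f"Levene W = {levene_w(latency):.3f}")
```

W is compared against an F distribution with (k − 1, N − k) degrees of freedom; a p-value above 0.05 means the equal-variance hypothesis is not rejected. Library implementations may center on the median by default (as `scipy.stats.levene` does), which can give slightly different values.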

Overall, the statistical evidence verifies that the performance gains provided by DPF are both statistically significant and practically meaningful across diverse workload conditions, as shown in Figure 6a,b.

Figure 6. Distribution of throughput and latency across 30 independent runs. (a) Throughput distribution. (b) Latency distribution. DPF exhibits the highest median throughput and the smallest variance, while also achieving the lowest median latency compared to all baseline mechanisms.

5.5. Adaptability and Fairness

In addition to throughput and latency improvements, the proposed DPF mechanism demonstrates strong adaptability and fairness characteristics under dynamic traffic conditions. As network load fluctuates, DPF efficiently reallocates bandwidth using predictive feedback, enabling responsive control without inducing oscillations. This adaptability is reflected by the highest Adaptability Index (0.92–0.98) across all workloads, surpassing A-WFQ and DRL-Sched by 9–14% and significantly outperforming rule-based mechanisms such as WFQ and DRR.

Regarding fairness, DPF maintains equitable flow distribution with Jain’s Fairness Index above 0.96, which is comparable to MMF (0.97–0.99) and superior to predictive or statically weighted schedulers. This balance results from DPF’s meta-control logic that continuously adjusts weights to protect vulnerable flows during congestion while allowing proportional resource sharing for bulk traffic.
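For readers unfamiliar with the metric, Jain’s Fairness Index for n flows with allocations x_i is (Σ x_i)² / (n · Σ x_i²); it equals 1 when all flows receive the same share and 1/n when a single flow takes everything. The sketch below illustrates the computation; the function name `jain_index` and the allocation values are hypothetical.

```python
def jain_index(allocations):
    """Jain's fairness index: (sum x)^2 / (n * sum x^2), in the range [1/n, 1]."""
    n = len(allocations)
    sum_sq = sum(x * x for x in allocations)
    if n == 0 or sum_sq == 0:
        raise ValueError("need at least one flow with a non-zero allocation")
    total = sum(allocations)
    return total * total / (n * sum_sq)


# Hypothetical per-flow throughput (Mbps), for illustration only.
flows = [24.0, 26.0, 25.0, 20.0, 30.0]
print(f"Jain's index = {jain_index(flows):.3f}")
```

An index above 0.96, as reported for DPF, therefore corresponds to per-flow allocations that deviate only modestly from an equal split.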

These results confirm that DPF achieves fair but highly adaptive resource management, a key requirement for multi-service network environments such as 5G/6G slicing [42,46,48,49,50], heterogeneous IoT, and multi-queue traffic coexistence. Combined with resource efficiency metrics in Figure 7, the evidence strongly supports DPF as a practical and robust control solution for future programmable networks.

Figure 7. Radar chart of seven key performance metrics for all scheduling mechanisms. DPF achieves the largest enclosed area, indicating superior performance in throughput, latency, packet loss, fairness, CPU and memory efficiency, and adaptability.

5.6. Resource Efficiency

To ensure that the proposed Dynamic Predictive Feedback (DPF) mechanism remains deployable in real-time programmable networks, controller overhead and data-plane resource utilization were evaluated. As illustrated in Figure 8a, DPF achieves the lowest CPU utilization among the six evaluated mechanisms. Compared to conventional WFQ and DRR schemes, CPU usage is reduced by 10–12%, due to the sparse telemetry sampling and incremental control updates that avoid heavy periodic recomputation. Even when compared to dynamic mechanisms including A-WFQ and DRL-Sched, DPF demonstrates 8–10% improved processing efficiency, confirming its lightweight execution footprint.

Similarly, Figure 8b highlights memory efficiency improvements. DPF reduces memory consumption by 8–10% relative to rule-based schedulers and by up to 15% compared to recurrent-learning-based mechanisms. This improvement arises from dynamic queue minimization and predictive avoidance of excessive buffering, preventing state overhead in the data plane.

Overall, DPF delivers higher and more stable network performance while maintaining lower operational overhead, demonstrating a practical advantage for 5G/6G SDN deployments, particularly where computational resources at the edge are constrained. These findings underline the suitability of DPF for scalable, energy-efficient, and latency-critical traffic control environments.

Figure 8. Resource efficiency comparison across scheduling mechanisms. (a) Mean CPU utilization. (b) Mean memory usage. DPF demonstrates the lowest CPU and memory footprint while maintaining superior performance, whereas DRL-Sched and A-WFQ incur higher resource overhead due to more complex control logic.

5.7. Summary of Findings

The experimental findings demonstrate that the proposed DPF mechanism achieves consistent and significant improvements across all key performance metrics. DPF delivers up to 32% higher throughput and up to 40% lower latency than classical schedulers (WFQ, DRR), while also outperforming emerging adaptive solutions, including A-WFQ and DRL-Sched. Boxplot analyses indicate that DPF exhibits exceptionally stable performance with minimal variance under varying workload patterns.

DPF additionally achieves balanced and scalable operation, attaining competitive fairness (Jain’s index ≥ 0.96), high adaptability under bursty and stress conditions, and 8–12% lower CPU and memory footprints, as shown in Figure 7 and Figure 8. These results confirm that DPF maintains robustness even in congested environments while efficiently utilizing controller and switch resources.

Overall, the collective advantages in performance, stability, fairness, adaptability, and resource efficiency establish DPF as a compelling and deployable solution for next-generation programmable networks such as 5G/6G and large-scale IoT infrastructures.

6. Discussion and Future Work

The findings demonstrate that the proposed Dynamic Predictive Feedback (DPF) mechanism can effectively harmonize predictive congestion control, adaptive fairness enforcement, and resource-efficient bandwidth allocation in programmable SDN environments. DPF consistently outperformed classical queue-based and modern dynamic schedulers across all traffic patterns, offering up to 32% throughput enhancement and 40% latency reduction, while reducing CPU and memory overhead by 8–12%. These performance advantages stem from integrating short-term prediction with meta-control updates that proactively mitigate congestion before queue buildup occurs.

Despite these gains, several aspects merit deeper investigation. First, the evaluation focused primarily on P4-programmable data planes and a single-controller architecture. Larger-scale distributed deployments could introduce controller synchronization overhead and potential prediction drift. Second, the clustered-LSTM forecasting model assumes stationary behavior in short windows; under highly dynamic traffic bursts, additional robustness may be required. Third, while fairness metrics aligned closely with MMF, flows with rapidly changing QoS demands (e.g., URLLC) may require adaptive thresholding to prevent transient unfairness.

Looking ahead, future extensions of DPF will focus on three research directions:

(i) Coupling DPF with multi-numerology scheduling, slicing, and cross-layer feedback mechanisms will enable predictive control beyond SDN-only constraints, supporting unified end-to-end QoS provisioning in 5G/6G heterogeneous environments.

(ii) A hybrid control framework where RL guides long-term policy and DPF maintains short-term stability may further improve adaptability under unseen workloads.

(iii) Edge-to-core federation with distributed forecasting modules and consensus-based control will allow operation in large-scale carrier networks and non-terrestrial/IoT infrastructures with thousands of flows.

In summary, while DPF has demonstrated a compelling balance of performance, efficiency, and fairness, continued advances in predictive intelligence, cross-layer optimization, and multi-domain orchestration will be key to enabling its deployment in future 6G-ready intelligent and autonomous networks.

7. Conclusions

This work has presented the Dynamic Predictive Feedback (DPF) mechanism, a novel adaptive bandwidth control approach designed for future programmable networks. By integrating predictive telemetry with dynamic meta-control logic, DPF effectively anticipates congestion and proactively adjusts flow priorities based on near-future traffic characteristics. Experimental results on an SDN testbed show that DPF consistently surpasses both classical queue-based schedulers (WFQ, DRR, MMF) and advanced adaptive approaches (A-WFQ, DRL-Sched), delivering up to 32% higher throughput, up to 40% lower latency, and 8–12% lower CPU and memory usage across diverse workload scenarios.

DPF also demonstrates competitive fairness and improved adaptability, maintaining high stability under highly dynamic and bursty traffic conditions. These characteristics confirm the capability of DPF to meet emerging communication challenges in 5G/6G, AIoT ecosystems, and service-differentiated network slicing, where responsiveness and resource efficiency are critical to sustaining Quality of Service (QoS) guarantees.

Overall, the findings validate DPF as a deployable and cost-efficient solution for intelligent traffic management in next-generation software-defined infrastructures. Continued development focusing on broader scalability, multi-controller coordination, and continuous learning enhancements will further accelerate its readiness for real-world deployment in large-scale programmable networks.