Bijective Network-to-Image Encoding for Interpretable CNN-Based Intrusion Detection System

Abstract

1. Introduction

1.1. Contribution

- An improved NeT2I and I2NeT implementation that encodes network traffic into RGB images and decodes the images back to network traffic in a bijective manner.

- Development and evaluation of a novel Plug-and-Play detection algorithm, CiNeT, a CNN-based IDS tailored for resource-constrained edge devices in 5G and beyond.

- A comparative study of CiNeT implemented in TensorFlow and PyTorch across varying architectural depths, covering performance trade-offs, resource usage, scalability, and training and testing speed.

- Validation of results across the UNSW-NB15, InSDN, and ToN-IoT datasets.

1.2. Structure of the Paper

2. Related Work

3. Proposed Algorithm

3.1. Encoding and Decoding Network Traffic (NeT2I-I2NeT Pipeline)

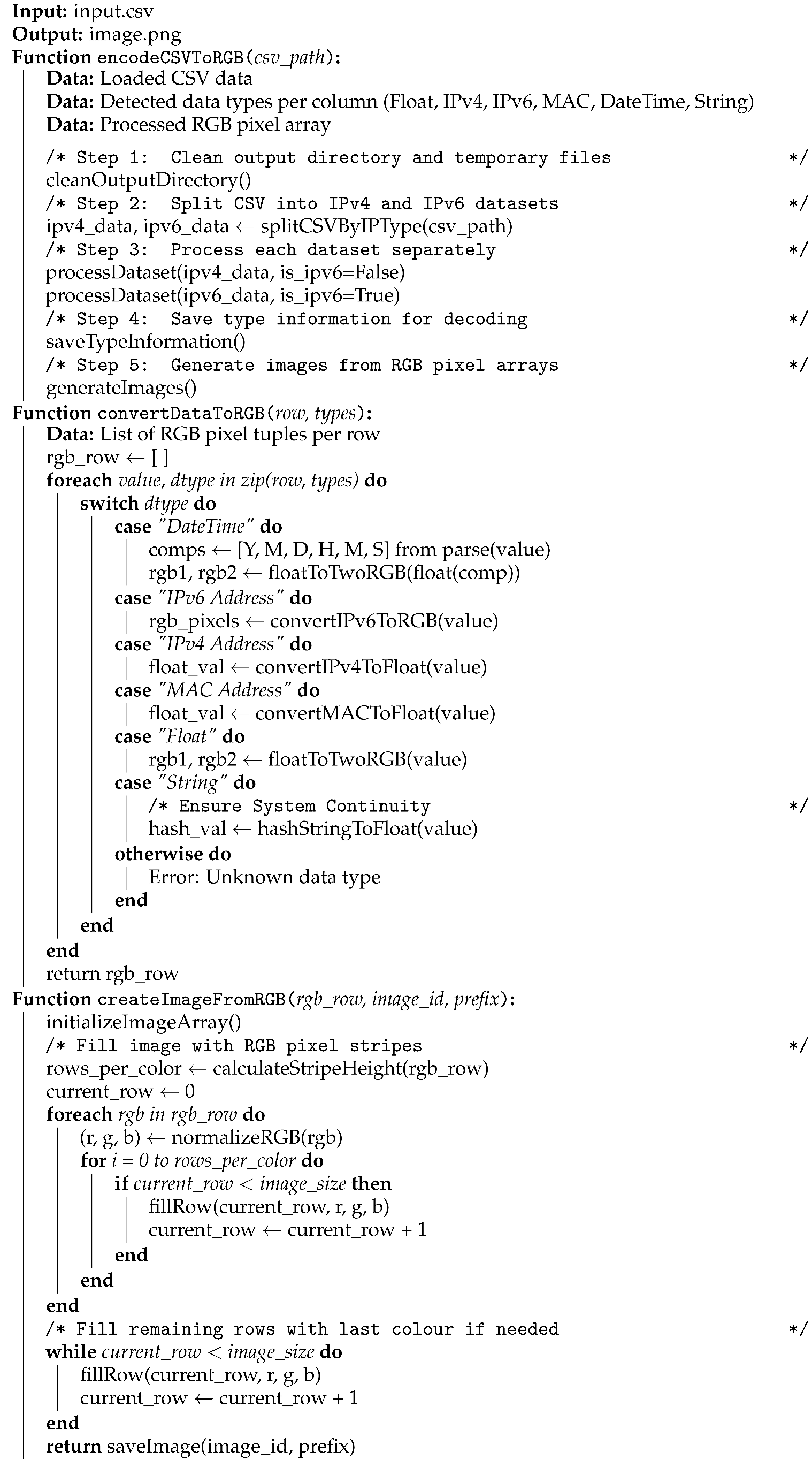

Algorithm 1: Encoding Algorithm: NeT2I

Algorithm 2: Decoding Algorithm: I2NeT
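A minimal sketch of the bijective round trip that Algorithms 1 and 2 describe, under stated assumptions: each numeric feature is packed as a big-endian IEEE 754 single-precision float (matching the struct.pack('!f', value) steps shown in Section 5.1), the bytes are grouped into consecutive RGB triples, and the tail is zero-padded to a whole pixel. encode_row and decode_row are hypothetical names, not the API of the NeT2I or I2NeT packages, and the image layout (stripe order, image size) is omitted.

```python
import struct
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def encode_row(values: List[float]) -> List[Pixel]:
    """Pack each value as a big-endian float32 and group the bytes into RGB pixels."""
    raw = b"".join(struct.pack("!f", v) for v in values)
    # Zero-pad so the byte count is a multiple of 3 (one byte per colour channel).
    raw += b"\x00" * (-len(raw) % 3)
    return [(raw[i], raw[i + 1], raw[i + 2]) for i in range(0, len(raw), 3)]


def decode_row(pixels: List[Pixel], n_features: int) -> List[float]:
    """Invert encode_row: flatten the channels, drop padding, unpack float32 values."""
    raw = bytes(channel for pixel in pixels for channel in pixel)
    raw = raw[: 4 * n_features]  # the feature count must be known, e.g. from metadata
    return list(struct.unpack(f"!{n_features}f", raw))


if __name__ == "__main__":
    row = [192.0, 168.0, 1.0, 100.0]
    pixels = encode_row(row)
    print(pixels)
    assert decode_row(pixels, len(row)) == row
```

The round trip is exact only for values that float32 represents exactly, such as integers up to 2^24; any other value comes back as its nearest float32, so bijectivity in practice depends on the encoding chosen for each column.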

3.2. Detection Algorithm (CiNeT)

3.2.1. TensorFlow

Algorithm 3: CiNeT-TF Algorithm (3-Layer)

3.2.2. PyTorch

Algorithm 4: CiNeT-PT Algorithm (4-Layer)
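To illustrate how architectural depth changes model size, the sketch below traces feature-map shapes and parameter counts for 1 to 5 convolutional blocks. Only the 3 × 3 kernel and 2 × 2 pooling come from the implementation details; the 'same' padding, the filter count doubling from 32, the single dense output layer and the 32 × 32 input are hypothetical choices, not the CiNeT architecture.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def cnn_summary(height: int, width: int, depth: int, n_classes: int,
                base_filters: int = 32) -> Tuple[List[Shape], int]:
    """Trace output shapes and count parameters for `depth` conv blocks.

    Hypothetical block: 3x3 convolution with 'same' padding and a bias,
    then 2x2 max pooling with stride 2; filters double at each block.
    A single dense layer maps the flattened features to n_classes outputs.
    """
    channels, h, w = 3, height, width  # RGB input
    shapes: List[Shape] = []
    params = 0
    for i in range(depth):
        filters = base_filters * 2 ** i
        params += (3 * 3 * channels + 1) * filters  # kernel weights + one bias per filter
        h, w = h // 2, w // 2  # 'same' conv keeps h, w; pooling halves them (floor)
        if h == 0 or w == 0:
            raise ValueError("input too small for this depth")
        shapes.append((h, w, filters))
        channels = filters
    params += (h * w * channels + 1) * n_classes  # flatten + dense output layer
    return shapes, params


if __name__ == "__main__":
    for depth in range(1, 6):
        shapes, params = cnn_summary(32, 32, depth, n_classes=2)
        print(depth, shapes[-1], params)
```

This accounting relates the 1 to 5 layer variants to model size only; it does not predict measured training times, which also depend on the framework and hardware.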

4. Evaluation Metrics for the Algorithms

4.1. Workflow and Datasets

4.2. Theoretical and Empirical Performance Analysis

4.3. Theoretical Analysis

4.4. Reproducibility and Implementation Details

- RandomRotation (±40°)

- RandomHorizontalFlip (0.5)

- RandomAffine (translate = (0.2, 0.2), scale = (0.8, 1.2), shear = 0.2)

- ColorJitter (brightness = 0.2, contrast = 0.2, saturation = 0.2, hue = 0.1)

- total_images < 100, candidates: {4, 8, 16}

- 100 ≤ total_images < 500, candidates: {8, 16, 32}

- 500 ≤ total_images < 2000, candidates: {16, 32, 64}

- 2000 ≤ total_images < 5000, candidates: {32, 64, 128}

- total_images ≥ 5000, candidates: {64, 128, 256}
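The dataset-size tiers above can be expressed as a simple lookup. This is a sketch: the final tier is taken to be total_images ≥ 5000 (inferred from the preceding thresholds), the function name is hypothetical, and how one value is then chosen from the three candidates is not specified here.

```python
from typing import Tuple


def batch_size_candidates(total_images: int) -> Tuple[int, int, int]:
    """Map the number of images to the three candidate batch sizes of its tier."""
    if total_images < 100:
        return (4, 8, 16)
    if total_images < 500:
        return (8, 16, 32)
    if total_images < 2000:
        return (16, 32, 64)
    if total_images < 5000:
        return (32, 64, 128)
    return (64, 128, 256)  # total_images >= 5000
```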

- Binary: binary cross-entropy (TensorFlow)/BCEWithLogitsLoss (PyTorch)

- Multi-class: categorical cross-entropy (TensorFlow)/CrossEntropyLoss (PyTorch)
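The TensorFlow and PyTorch loss names differ partly because of what they expect as input. PyTorch's BCEWithLogitsLoss and CrossEntropyLoss take raw logits and apply the sigmoid or softmax internally, whereas Keras' binary and categorical cross-entropy expect probabilities by default (from_logits=False), and categorical cross-entropy also expects one-hot targets. The plain-Python sketch below writes both forms for a single sample; the function names are illustrative and are not either framework's API.

```python
import math
from typing import Sequence


def bce_with_logits(logit: float, target: float) -> float:
    """Binary cross-entropy on a raw logit, in the numerically stable form
    max(x, 0) - x*y + log(1 + exp(-|x|))."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def bce_on_probability(prob: float, target: float) -> float:
    """Binary cross-entropy on a probability, as a sigmoid-output model would supply."""
    return -(target * math.log(prob) + (1 - target) * math.log(1 - prob))


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one sample with an integer class label (log-sum-exp trick)."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]
```

Both binary forms give the same value when the probability is the sigmoid of the logit; the logit form simply avoids overflow and log(0) for large-magnitude outputs.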

4.5. Empirical Computational Complexity

4.5.1. Execution Time

4.5.2. CPU Usage

4.5.3. Memory Utilisation

4.5.4. GPU Utilisation

5. Results and Analysis

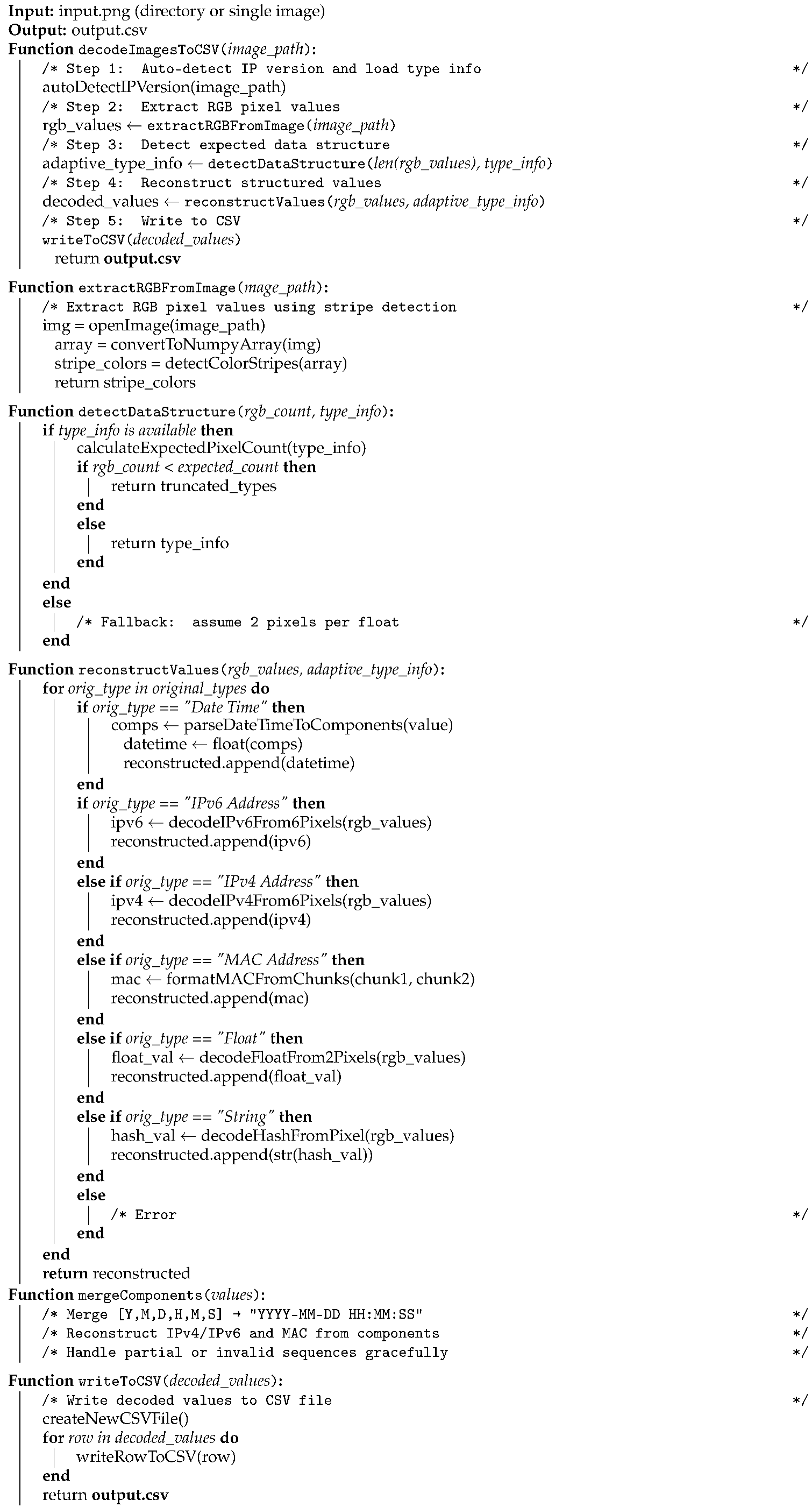

5.1. Encoded Images

- struct.pack('!f', 192.0) → IEEE 754 bytes → RGB

- struct.pack('!f', 168.0) → IEEE 754 bytes → RGB

- struct.pack('!f', 1.0) → IEEE 754 bytes → RGB

- struct.pack('!f', 100.0) → IEEE 754 bytes → RGB

- 00:1A:2B:3C:4D:5E → 001A2B and 3C4D5E

- 001A2B → 6699

- 3C4D5E → 3951966

- struct.pack('!f', 6699.0) → IEEE 754 bytes → RGB

- struct.pack('!f', 3951966.0) → IEEE 754 bytes → RGB

- ipaddress.IPv6Address('2001:0db8:85a3:0000:0000:8a2e:0370:7334').packed → 16 bytes

- Appending []→[]→RGB
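The field-level steps listed above can be checked with the Python standard library. This sketch assumes the IPv4 example is the address 192.168.1.100 (the four octets packed above) and that each MAC half is read as a 24-bit hexadecimal integer, which reproduces the 3951966 value for 3C:4D:5E; under the same reading the first half, 00:1A:2B, evaluates to 6699. How the bytes are then laid out as RGB stripes is not reproduced here, and the helper names are hypothetical.

```python
import ipaddress
import struct
from typing import List, Tuple


def ipv4_octet_bytes(address: str) -> List[List[int]]:
    """Pack each IPv4 octet as a big-endian IEEE 754 float32, e.g. struct.pack('!f', 192.0)."""
    return [list(struct.pack("!f", float(octet))) for octet in address.split(".")]


def mac_halves(mac: str) -> Tuple[int, int]:
    """Split a MAC address into two 3-byte halves, each read as a hexadecimal integer."""
    digits = mac.replace(":", "")
    return int(digits[:6], 16), int(digits[6:], 16)


def ipv6_bytes(address: str) -> List[int]:
    """Return the 16 network-order bytes of an IPv6 address."""
    return list(ipaddress.IPv6Address(address).packed)


if __name__ == "__main__":
    print(ipv4_octet_bytes("192.168.1.100"))
    print(mac_halves("00:1A:2B:3C:4D:5E"))
    print(ipv6_bytes("2001:0db8:85a3:0000:0000:8a2e:0370:7334"))
```

Both MAC halves stay below 2^24, so each is exactly representable as a float32 before packing.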

5.2. Computational Complexity

5.2.1. Execution Time

5.2.2. CPU Usage

5.2.3. Memory Utilisation

5.2.4. GPU Utilisation

5.3. Theoretical Analysis (Big-O Notation)

5.3.1. Theoretical Complexity for NeT2I

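- Reading the CSV file is $O(n \cdot d)$, where n is the number of rows and d the number of features, as each row must be scanned and parsed across its columns.

- The nested loop, which iterates over each row and feature, results in $n \times d$ iterations.

- Within the loop, each data entry is encoded in constant time, $O(1)$, since it is packed into a fixed-size IEEE 754 representation.

- Therefore, processing one row is $O(d)$, and for n rows it is $O(n \cdot d)$.

- Image generation of p by p pixels, where p is the number of RGB stripes, results in $O(p^2)$ per image.

- As the pixels are of a fixed size and do not scale with the number of rows or features, this cost is constant with respect to n and d.

- The input space, as per the above, is $O(n \cdot d)$.

- The output images, as per the above, require $O(n \cdot p^2)$ space.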
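Collecting these terms gives a compact summary for NeT2I. It assumes one image is produced per CSV row, with n rows, d features per row, and an image side length p that does not grow with n or d:

$$
\begin{aligned}
T_{\mathrm{NeT2I}}(n,d,p) &= \underbrace{O(nd)}_{\text{read CSV}} + \underbrace{n\,O(d)}_{\text{encode rows}} + \underbrace{n\,O(p^{2})}_{\text{render images}} = O\big(n(d+p^{2})\big),\\
S_{\mathrm{NeT2I}}(n,d,p) &= \underbrace{O(nd)}_{\text{input}} + \underbrace{O(np^{2})}_{\text{output images}}.
\end{aligned}
$$

With p fixed, both expressions reduce to $O(nd)$, i.e. linear in the size of the input table.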

5.3.2. Theoretical Complexity for I2NeT

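- Discovery of the m images and sorting the m file names is $O(m \log m)$.

- Loading the JSON metadata file is $O(1)$, as its size does not depend on the number of images.

- Image decoding and RGB extraction iterate over the p rows of each p by p image, resulting in $O(p^2)$ per image.

- Using the JSON file, the pixel count is calculated from d, the number of features, which is constant, resulting in $O(1)$.

- Value reconstruction, similar to value encoding, is $O(d)$ per image.

- For one image the cost is therefore $O(p^2 + d)$, and for m images it is $O(m(p^2 + d))$. With the $O(d)$ cost being the smaller term, the total time complexity can be stated as $O(m \log m + m p^2)$.

- The list of images requires $O(m)$ space.

- Extracting the RGB pixel data and reconstructing a row requires $O(p^2 + d)$ working space.

- Hence, the final CSV requires $O(m \cdot d)$ space.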
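The I2NeT terms can be combined in the same way. This sketch assumes m images of p × p pixels, d features per row, and a JSON metadata file whose size does not depend on m; since every feature occupies at least one colour channel, $d \le 3p^{2}$, so $d = O(p^{2})$:

$$
\begin{aligned}
T_{\mathrm{I2NeT}}(m,p,d) &= \underbrace{O(m\log m)}_{\text{discover and sort}} + \underbrace{O(1)}_{\text{load JSON}} + m\big(\underbrace{O(p^{2})}_{\text{RGB extraction}} + \underbrace{O(d)}_{\text{reconstruction}}\big) = O\big(m\log m + m\,p^{2}\big),\\
S_{\mathrm{I2NeT}}(m,p,d) &= O(m) + O(p^{2}+d) + O(md) = O(md + p^{2}).
\end{aligned}
$$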

5.3.3. Theoretical Complexity for CiNeT

- For each sample, the model performs a forward pass through the convolutional and fully connected layers. As the number of operations is determined by the model architecture and is constant per sample, the forward pass is $O(1)$ with respect to the dataset size.

- Similarly, backpropagation can be construed as approximately proportional to the forward pass, hence it is also $O(1)$.

- The optimizer updates the weights in $O(P)$, where P is the number of parameters.

- The above steps are repeated for each sample, i.e. N times per epoch, where N is the number of training samples, and the loop is repeated for E epochs, giving $O(E \cdot N \cdot P)$ overall.
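A worked form of the CiNeT training cost, assuming N training samples, E epochs, per-sample forward and backward costs $C_{\mathrm{fwd}}$ and $C_{\mathrm{bwd}}$ fixed by the architecture, and P trainable parameters. The second line covers the common case in which the weights are updated once per mini-batch of size B rather than once per sample:

$$
\begin{aligned}
T_{\text{per-sample}} &= E\,N\,\big(C_{\mathrm{fwd}} + C_{\mathrm{bwd}} + O(P)\big) = O(E\,N\,P),\\
T_{\text{mini-batch}} &= E\,N\,\big(C_{\mathrm{fwd}} + C_{\mathrm{bwd}}\big) + E\left\lceil N/B \right\rceil O(P).
\end{aligned}
$$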

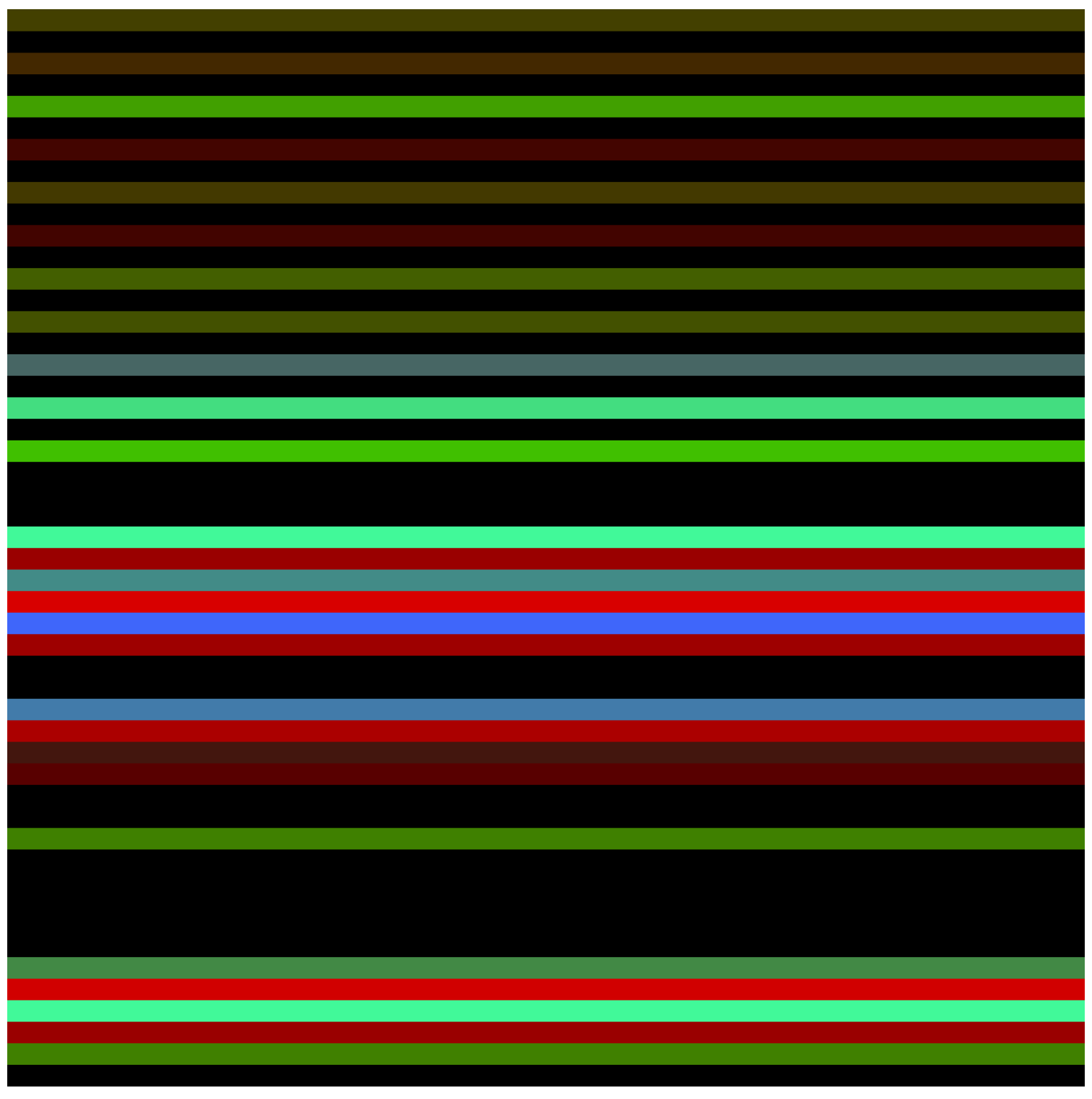

5.4. Training and Validation

5.5. Evaluation of Detection

6. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset | Selected Features |
|---|---|
| InSDN | f2, f3, f4, f5, f6, f8, f9, f10, f11, f12, f13, f15, f19, f21, f22, f23, f24, f27, f31, f68, f78 |
| UNSW-NB15 | f1, f2, f4, f5, f6, f7, f10, f11, f16, f17, f18, f19, f21, f24, f25, f26, f27, f28, f38, f40, f45 |
| ToN-IoT | f1, f2, f3, f4, f5, f6, f7, f8, f9, f10, f11, f12, f13, f14, f15, f16, f17, f18, f19, f20, f21 |

| Aspect | Details |
|---|---|
| Deep Learning Framework | PyTorch 2.1.0, TensorFlow 2.15.0 |
| CUDA/cuDNN | CUDA 12.2, cuDNN 8.9 |
| Python Version | 3.10.12 |
| Random Seeds | 42 for all experiments |
| Learning Rate | 1 × (RMSProp) |
| Batch Size | Selected dynamically based on dataset size |
| Epochs | 100 |
| Data Augmentation | RandomRotation, RandomHorizontalFlip, RandomAffine, ColorJitter |
| Training/Validation/Test | 70%, 15%, 15% |
| Kernel Size | 3 × 3 |
| Pooling Kernel | 2 × 2 |
| Loss Function (TF) | Binary cross-entropy/categorical cross-entropy |
| Loss Function (PT) | BCEWithLogitsLoss/CrossEntropyLoss |
| Class Imbalance Handling | Class-weighted loss (inverse frequency weighting) |
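The inverse frequency weighting listed above can be computed as below, assuming the common normalisation w_c = N / (K · n_c), where N is the number of samples, K the number of classes and n_c the count of class c (the same formula scikit-learn uses for class_weight='balanced'). The exact normalisation used in CiNeT is not stated here, and the function name is hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def inverse_frequency_weights(labels: Sequence[int]) -> Dict[int, float]:
    """Weight each class by N / (K * n_c): rarer classes get larger weights,
    and a perfectly balanced label set gets a weight of 1.0 for every class."""
    counts = Counter(labels)
    total, n_classes = len(labels), len(counts)
    return {c: total / (n_classes * n) for c, n in sorted(counts.items())}
```

The resulting dictionary is in the form Keras accepts for the class_weight argument of Model.fit; for PyTorch it would be converted to a tensor ordered by class index and passed as the weight argument of CrossEntropyLoss.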

| Algorithm | Dataset | Number of Images | Total Execution Time | Execution Time per Image | CPU Usage | Memory Utilisation |
|---|---|---|---|---|---|---|
| NeT2I | InSDN | 215,000 | 99 s | 0.00046 s | 100% | 18% |
| | UNSW-NB15 | 215,000 | 100 s | 0.000465 s | 100% | 19% |
| | ToN-IoT | 215,000 | 100 s | 0.000465 s | 100% | 19% |
| I2NeT | InSDN | 215,000 | 103 s | 0.00047 s | 100% | 20% |
| | UNSW-NB15 | 215,000 | 102 s | 0.000474 s | 100% | 20% |
| | ToN-IoT | 215,000 | 101 s | 0.000469 s | 100% | 20% |

| Algorithm | Layers | Training Time | Validation Time | Testing Time | GPU Usage | Memory Utilisation |
|---|---|---|---|---|---|---|
| CiNeT-TF | 1 Layer | 12.35 h | 1.49 h | 25 s | 98.2% | 26.9% |
| | 2 Layers | 12.11 h | 2.01 h | 43 s | 99.9% | 27.5% |
| | 3 Layers | 13.25 h | 2.11 h | 1.24 min | 99.9% | 27.7% |
| | 4 Layers | 15.1 h | 2.35 h | 2.15 min | 99.9% | 29.5% |
| | 5 Layers | 17.45 h | 3.05 h | 3.30 min | 99.9% | 30% |
| CiNeT-PT | 1 Layer | 5.1 h | 3.19 h | 2.01 min | 5.1% | 8.4% |
| | 2 Layers | 5.24 h | 3.29 h | 2.09 min | 8.9% | 9.4% |
| | 3 Layers | 5.38 h | 3.31 h | 2.11 min | 13.2% | 10.6% |
| | 4 Layers | 6.01 h | 3.42 h | 2.13 min | 14.8% | 11% |
| | 5 Layers | 6.22 h | 3.45 h | 2.20 min | 15.8% | 12.1% |

| Dataset | CiNeT-TF 1L (%) | CiNeT-TF 2L (%) | CiNeT-TF 3L (%) | CiNeT-TF 4L (%) | CiNeT-TF 5L (%) | CiNeT-PT 1L (%) | CiNeT-PT 2L (%) | CiNeT-PT 3L (%) | CiNeT-PT 4L (%) | CiNeT-PT 5L (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| InSDN | 94.3 | 95.8 | 97.1 | 96.5 | 95.9 | 96.7 | 97.8 | 98.4 | 99.1 | 98.6 |
| UNSW-NB15 | 93.6 | 95.0 | 96.8 | 96.0 | 95.3 | 95.9 | 97.1 | 98.0 | 98.9 | 98.3 |
| ToN-IoT | 94.8 | 96.0 | 97.2 | 96.7 | 96.1 | 97.0 | 98.0 | 98.7 | 99.2 | 98.8 |

| Traffic Class | InSDN CiNeT-TF (3L) | InSDN CiNeT-PT (4L) | UNSW-NB15 CiNeT-TF (3L) | UNSW-NB15 CiNeT-PT (4L) | ToN-IoT CiNeT-TF (3L) | ToN-IoT CiNeT-PT (4L) |
|---|---|---|---|---|---|---|
| Normal | 98.5 | 99.0 | 96.9 | 97.5 | 99.1 | 99.4 |
| DDoS | 98.7 | 99.3 | 97.2 | 98.1 | 99.0 | 99.5 |
| DoS | 97.5 | 98.4 | 95.8 | 96.9 | 98.1 | 98.8 |
| Reconnaissance | 96.3 | 97.5 | 94.6 | 95.7 | 97.0 | 97.8 |
| Exploits | 95.1 | 96.4 | 93.2 | 94.5 | 95.9 | 96.7 |
| Backdoor | 94.0 | 95.2 | 91.8 | 93.0 | 94.8 | 95.6 |

| Traffic Class | InSDN Acc | InSDN Prec | InSDN Rec | InSDN F1 | UNSW-NB15 Acc | UNSW-NB15 Prec | UNSW-NB15 Rec | UNSW-NB15 F1 | ToN-IoT Acc | ToN-IoT Prec | ToN-IoT Rec | ToN-IoT F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Normal | 99.0 | 98.7 | 99.2 | 98.9 | 97.5 | 97.1 | 97.8 | 97.4 | 99.4 | 99.2 | 99.5 | 99.3 |
| DDoS | 99.3 | 99.1 | 99.4 | 99.2 | 98.1 | 97.8 | 98.3 | 98.0 | 99.5 | 99.3 | 99.6 | 99.4 |
| DoS | 98.4 | 98.0 | 98.7 | 98.3 | 96.9 | 96.5 | 97.2 | 96.8 | 98.8 | 98.5 | 99.0 | 98.7 |
| Reconnaissance | 97.5 | 97.0 | 97.9 | 97.4 | 95.7 | 95.2 | 96.0 | 95.6 | 97.8 | 97.4 | 98.1 | 97.7 |
| Exploits | 96.4 | 95.9 | 96.8 | 96.3 | 94.5 | 94.0 | 94.9 | 94.4 | 96.7 | 96.3 | 97.0 | 96.6 |
| Backdoor | 95.2 | 94.7 | 95.6 | 95.1 | 93.0 | 92.5 | 93.4 | 92.9 | 95.6 | 95.2 | 95.9 | 95.5 |

| Metric | CiNeT-TF (3 Layer) Mean ± STD | CiNeT-PT (4 Layer) Mean ± STD |
|---|---|---|
| Training Time (h) | 13.25 ± 0.32 | 6.01 ± 0.15 |
| Accuracy (%) | 97.2 ± 0.4 | 99.2 ± 0.1 |
| GPU Usage (%) | 99.9 ± 0.1 | 14.8 ± 1.2 |
| Memory Utilisation (%) | 27.7 ± 2.5 | 11 ± 0.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fernando, O.A.; Spring, J.; Xiao, H. Bijective Network-to-Image Encoding for Interpretable CNN-Based Intrusion Detection System. Network 2025, 5, 42. https://doi.org/10.3390/network5040042
