Abstract

The successful integration of Terrestrial and Non-Terrestrial Networks (T/NTNs) in 6G is poised to revolutionize demanding domains like Earth Observation (EO) and Intelligent Transportation Systems (ITSs). Still, it requires Distributed Machine Learning (DML) frameworks that are scalable, private, and efficient. Existing methods, such as Federated Learning (FL) and Split Learning (SL), face critical limitations in terms of client computation burden and latency. To address these challenges, this paper proposes a novel hierarchical DML paradigm. We first introduce Federated Split Transfer Learning (FSTL), a foundational framework that synergizes FL, SL, and Transfer Learning (TL) to enable efficient, privacy-preserving learning within a single client group. We then extend this concept to the Generalized FSTL (GFSTL) framework, a scalable, multi-group architecture designed for complex and large-scale networks. GFSTL orchestrates parallel training across multiple client groups managed by intermediate servers (RSUs/HAPs) and aggregates them at a higher-level central server, significantly enhancing performance. We apply this framework to a unified T/NTN architecture that seamlessly integrates vehicular, aerial, and satellite assets, enabling advanced applications in 6G ITS and EO. Comprehensive simulations using the YOLOv5 model on the Cityscapes dataset validate our approach. The results show that GFSTL not only achieves faster convergence and higher detection accuracy but also substantially reduces communication overhead compared to baseline FL, and critically, both detection accuracy and end-to-end latency remain essentially invariant as the number of participating users grows, making GFSTL especially well suited for large-scale heterogeneous 6G ITS deployments. We also provide a formal latency decomposition and analysis that explains this scaling behavior. This work establishes GFSTL as a robust and practical solution for enabling the intelligent, connected, and resilient ecosystems required for next-generation transportation and environmental monitoring.

1. Introduction

With the 6G vision set to shape society in the 2030s into a more advanced, digitized, fully connected, and intelligent world, transportation networks are undergoing a significant transformation, converging into Intelligent Transportation Systems (ITSs) [1]. The field of ITS is rapidly expanding, with the aim of improving the safety, efficiency and sustainability of traditional transportation systems by using technologies such as Machine Learning (ML), the Internet of Things (IoT) and advanced communication modes [2]. The presence of IoT subsystems facilitates the generation of large amounts of data from various components of the ITS infrastructure over time [3]. These data can be used to provide intelligent solutions, optimize traffic management, improve user experiences, and improve environmental outcomes. However, significant challenges arise in the acquisition and analysis of data from various sources, including vehicles, sensors, and traffic cameras [4].

Recent advances in Distributed Machine Learning (DML) techniques offer promising solutions to these challenges. Among them, Federated Learning (FL) has emerged as a particularly effective paradigm for training ML models in a distributed manner, enabling collaboration while maintaining data privacy [5]. FL facilitates shared model training without requiring the exchange of individual data among multiple devices. Each device trains a local model on its data and then updates a central server with its progress. The server aggregates the updates and applies them to a global shared model. However, a significant limitation of FL lies in the necessity for each client to independently train the entire ML model. This process demands substantial computational resources and becomes particularly challenging for clients with constrained resources, notably within ITS contexts, where the use of complex models such as Deep Neural Networks (DNNs) is common [6]. Furthermore, iterative transmission of local and global model parameters has raised new privacy concerns, including the risks of data poisoning and model inversions, highlighting the need for improved security mechanisms in FL frameworks [7].

To mitigate the limitations of FL, Split Learning (SL) presents an alternative approach. SL allows complex ML models to be trained by dividing them into two parts, with each part trained on a client or a server using local data from distributed clients [8]. This method significantly reduces the computational burden on resource-limited devices, as only a portion of the model is trained locally and communication is limited to the activation of the cut layer. Consequently, SL enhances model privacy by preventing direct access to the entire model on either side. Recent research has demonstrated the potential of SL-inspired frameworks to enhance the efficiency and scalability of FL approaches, allowing the training of more sophisticated DNNs while maintaining privacy and reducing costs [9].

In addition to these methods, Transfer Learning (TL) from the meta-learning family has gained attention for its ability to improve training efficiency by facilitating knowledge transfer from previous tasks to new related tasks [10]. TL can accelerate convergence rates, reduce reliance on labeled data, and enhance the robustness of ML models in various vehicular scenarios. The integration of TL with FL, particularly in resource-constrained ITS environments, presents a valuable opportunity to leverage previous knowledge and improve model performance [11]. However, existing works have yet to comprehensively address how these techniques can be optimally combined to maximize their benefits in dynamic and heterogeneous environments.

Earth Observation (EO) has emerged as a complementary technology that can significantly enhance the capabilities of ITS. EO involves the collection and analysis of satellite and aerial data, providing critical information on traffic conditions, weather patterns, and environmental changes [12]. This integration allows ITS to benefit from real-time data streams, facilitating improved traffic management, hazard detection, and overall situational awareness [13]. However, integrating EO with ITS presents its own set of challenges, including the need for efficient data fusion techniques from heterogeneous sources, real-time processing capabilities, and advanced ML methodologies to analyze vast amounts of EO data effectively [14].

In the context of the 6G vision, Non-Terrestrial Networks (NTNs) play a pivotal role in providing global coverage and capacity enhancements for traditional terrestrial networks. Various NTN platforms, including High-Altitude Platforms (HAPs), offer significant advantages to vehicular users (VUs), such as reduced transmission distances, improved coverage, and flexible deployment options [15]. These characteristics make NTNs particularly well-suited for supporting intelligent solutions in ITS by facilitating efficient DML methods and enhancing connectivity in remote or underserved areas [16].

In the space of ITS-oriented DML, several hybrid frameworks have been explored, each contributing valuable insights, yet leaving important gaps. For example, ref. [17] introduces a hybrid of FL and SL tailored to ITS, enabling personalized model training across vehicles while preserving data privacy; however, it lacks TL integration and does not consider the latency and resource constraints of multilayer network deployments. Another relevant study, ref. [18], evaluates the security and effectiveness of FL, SL, and TL in vehicular networks; while it clarifies which techniques are feasible for privacy preservation, it remains a feasibility study and does not propose a unified framework that combines these paradigms with scalability in multilayer NTN settings. A third work [19] develops a variant of Federated Split Learning (FSL) that addresses the challenge of vehicles lacking labeled data by splitting the model between onboard units and RSUs, preserving both data and label privacy; however, it does not address dynamic grouping, hierarchical aggregation, or real-time adaptation in 6G NTN-enhanced ITS.

1.1. Gaps in the Literature and Motivations

Table 1 summarizes representative prior works and highlights the main differences relative to our approach. Although existing studies have advanced FL, SL, TL and hybrid schemes for edge and vehicular settings, several important gaps remain that limit their applicability to multilayer 6G ITS and NTN-enabled Earth Observation. The most salient gaps are as follows:

- 1.

- Under-utilization of multilayer NTN architectures: Many prior frameworks assume single-tier or purely terrestrial deployments and therefore do not exploit the potential benefits of coordinated processing across RSUs, HAPs, and satellites. This omission reduces opportunities for latency-aware placement, load balancing across tiers, and resilience in coverage-challenged regions—all critical for large-scale ITS.

- 2.

- Limited onboard and in-space data processing: Existing works rarely address efficient processing of EO data within non-terrestrial platforms or the trade-offs introduced by performing model tasks in space (e.g., at HAPs/LEO nodes). Without concrete strategies for in-space inference/aggregation, systems face increased communication cost or delayed decision-making for time-sensitive ITS applications.

- 3.

- Insufficient integration of DML paradigms for resource-constrained, heterogeneous clients: While FL, SL, and TL have been studied individually (and some combinations proposed), there is a lack of comprehensive frameworks that jointly leverage model partitioning, transfer initialization, and hierarchical aggregation to simultaneously address privacy, compute constraints, and converge quickly in highly heterogeneous ITS fleets.

The emerging 6G paradigm, characterized by ubiquitous and seamless T/NT connectivity, extreme low-latency links, pervasive edge intelligence, and AI-native network management, directly addresses the limitations noted above. Native NTN integration in 6G enables hierarchical, multilayer orchestration (e.g., RSU/UAV/HAP/satellite) that supports latency-aware placement and multi-tier aggregation. Likewise, 6G’s stronger edge computing and deterministic service capabilities make practical the notion of in-space or at-edge model processing for EO and real-time ITS tasks, reducing the need for bulky raw-data transfers. Finally, the AI-native control plane and advanced resource-slicing features foreseen for 6G facilitate dynamic model partitioning and adaptive use of FL/SL/TL mechanisms across heterogeneous clients. These 6G capabilities therefore motivate and make feasible the design objectives of this paper: a multilayer joint T/NT, latency-aware DML framework that unifies Federated, Split, and Transfer Learning and maps model components to the most appropriate network tier, improving scalability, privacy, and real-time performance for ITS and NTN-based EO scenarios.

Table 1.

Comparison of the most related works and gaps/differences relative to our work.

Table 1.

Comparison of the most related works and gaps/differences relative to our work.

| Ref. | Contributions | Differences With Our Work |

|---|---|---|

| [6] | Multitask FL with hybrid client selection/aggregation in edge networks. | Pure FL (no SL/TL); no multilayer hierarchy or NTN integration; not tailored to ITS. |

| [8] | Evaluates and optimizes DML techniques for IoT; benchmarking focus. | Not ITS-centric; not hierarchical FL/SL over T/NTNs nor real-time ITS latency study. |

| [9] | Wireless Distributed Learning: hybrid split+federated approach. | No T/NTN tiering, no EO/ITS integration, and no multilayer ITS latency/accuracy study. |

| [11] | Federated Transfer Learning for cross-domain sensing with resource efficiency. | No ITS; no SL or multilayer NTN; narrower modality than our EO/vehicular perception. |

| [15] | 6G-enabled advanced transportation systems; network capabilities and use cases. | No DML pipeline or a multilayer T/NTN latency/accuracy quantification. |

| [16] | Explores the potential of NTNs in next-gen ITS; positioning paper. | Lacks concrete DML training/aggregation across layers and empirical ITS evaluation. |

| [17] | Personalized FSL for ITS-distributed training: single-layer training. | No hierarchy, no EO integration, and no unified FL/SL/TL orchestration across T/NTNs. |

| [18] | Feasibility of SL/TL/FL for security in ITS; SL outperforms FL/TL baselines; security focus. | No ITS; no hierarchical T/NTN training or EO-ITS fusion; no multi-tier aggregation. |

| [19] | FSL with data/label privacy in vehicular networks. | No multilayer architecture and no joint FL+SL+TL orchestration. |

To address these gaps, we propose two comprehensive methodologies: Federated Split Transfer Learning (FSTL) and its generalized version, Generalized Federated Split Transfer Learning (GFSTL). Both methods are designed for deployment in T/NTNs (or joint air–ground networks) and will be applied to three specific applications: vehicular scenarios, EO, and then their combination as an ITS scenario. The FSTL framework integrates the advantages of FL, SL, and TL, providing a scalable solution to train ML models in resource-constrained environments [20]. Meanwhile, GFSTL introduces a flexible approach that allows the independent use of FL and SL servers, catering to diverse user requirements. This novel architecture not only reduces latency and enhances the number of participants in the federated training process but also improves overall model accuracy and privacy protection. We will evaluate the effectiveness of the proposed methodologies through simulations in typical 6G ITS scenarios using advanced architectures like ResNet and You Only Look Once (YOLO) [21], demonstrating their superiority over traditional FL methods in terms of convergence rates, training accuracy, and overall latency.

1.2. Key Contributions

This paper introduces GFSTL and FSTL, two novel DML frameworks designed for multilayer 6G ITS and NTN-enabled EO. The main contributions are summarized as follows:

- 1.

- New hybrid learning frameworks (FSTL and GFSTL): We propose FSTL and its generalized version (GFSTL). FSTL integrates FL, SL, and TL into a single pipeline tailored for resource-constrained edge nodes. GFSTL extends FSTL by supporting multiple independent FL/SL server placements and hierarchical aggregation across Road-Side Units (RSUs) and HAPs, enabling flexible deployment across Terrestrial and Non-Terrestrial Network layers (see Section 3 and Section 4).

- 2.

- Exploitation of multilayer T/NTN architectures for scalable DML: We explicitly design the frameworks to exploit the multilayer structure of NTNs (RSUs, UAVs, HAPs, Low/Medium Earth Orbit (LEO/MEO) satellites) so that computation and aggregation are performed at the most appropriate network tier (see the multilayer architectures in Section 5). This hierarchical design reduces wall-clock training time, improves scalability, and increases the number of feasible participants in federated training (see Section 6 and the latency analysis in Section 5.4).

- 3.

- Efficient Data Processing for Real-Time EO/ITS Applications: The paper proposes novel strategies for real-time data processing in space to optimize the utilization of EO/ITS data. By leveraging GFSTL’s flexible architecture, we address the inefficiencies in current EO/ITS systems, enabling more effective data transmission and model updates, even in highly dynamic environments with limited resources (Section 5).

- 4.

- Application to Diverse Use Cases and Comprehensive Evaluation: The proposed methodologies, system architecture, training process, added benefits, and challenges are introduced and evaluated across three distinct use cases: vehicular scenarios (Section 5.1), EO (Section 5.2), and their integration into a unified ITS scenario (Section 5.3). This work also provides a comprehensive latency analysis for a multilayer 6G ITS environment (Section 5.4), showcasing the practical benefits of our approach in large-scale, real-world deployments.

- 5.

- Enhanced Latency, Accuracy, and Privacy in DML: Our proposed methods significantly reduce latency and enhance training accuracy compared to traditional FL-based frameworks. The introduction of flexible server configurations in GFSTL further improves the number of participants in the FL process while ensuring robust privacy protection. These advances are demonstrated through simulations in typical 6G ITS scenarios, where the proposed methodologies outperform traditional FL techniques in terms of convergence rates, model accuracy (Section 6.2 and Section 6.3), and overall system latency (Section 6.4).

- 6.

- Practical split/transfer parameterization for ITS and EO tasks: We provide a concrete parameterization (model cut-points, smashed-representation sizing, and YOLO/ResNet choices) that maps model components to node capabilities (RSU/HAP/edge) (Section 6.1), balancing accuracy, computation, and communication. This parameterization is validated empirically in Section 6 and supports real-time ITS perception tasks using compact intermediate representations.

- 7.

- Comprehensive performance and latency study under unified experimental settings: We evaluate FL, SL, FSL, FSTL, and GFSTL on a representative ITS scenario using the same hardware/network assumptions to ensure a fair comparison. This study quantifies trade-offs in accuracy, convergence speed, per-round latency, and communication volume and demonstrates our proposed frameworks’ improvements in these dimensions (results and discussion in Section 6 and Section 7).

The above contributions jointly advance the state of the art by presenting scalable, privacy-preserving, and latency-aware DML solutions that are directly applicable to multilayer 6G ITSs and NTN-based EO systems.

1.3. Organization of the Paper

This paper is organized as follows: Section 2 provides an overview of existing DML frameworks, including FL, SL, FSL, and TL, discussing their advantages and limitations. Section 3 details the proposed FSTL framework, outlining its architecture and training process and showcasing its application in ITSs. Building upon this, Section 4 introduces the GFSTL framework, presenting its architecture and training algorithm for scalable, multi-group network environments. Section 5 demonstrates the versatility of GFSTL through two distinct use cases: a vehicular Aerial–Ground Integrated Network (AGIN) and an NTN-based EO system, followed by a comprehensive latency analysis for a unified multi-layer 6G ITS scenario. Section 6 presents the simulation setup and parameters, evaluation metrics, and the results validating the proposed methodologies. Finally, Section 7 provides a discussion of the findings, limitations, and future directions, with Section 8 concluding the paper.

2. Distributed Machine Learning Frameworks

DML has become an essential approach to enable collaborative model training across multiple devices without requiring centralized data storage. This method is particularly valuable in scenarios like ITS, NTNs, and EO, where large volumes of data are distributed among many devices, and privacy concerns or bandwidth limitations make data centralization impractical. By allowing each device to process its data locally and share only model updates with a central server, DML frameworks ensure that privacy is maintained while enabling efficient large-scale model training. Some of the most widely adopted DML techniques include FL and SL, each offering unique benefits to address the challenges of distributed data environments [22].

2.1. Federated Learning

FL is one of the foundational methods for DML, allowing multiple clients (e.g., VUs in an ITS) to collaboratively train a shared ML model without sharing their raw data. FL ensures privacy and reduces the bandwidth needed for communication, making it well-suited for environments where data privacy is critical, such as ITSs [23]. Each client performs model training on its local data and only transmits model updates (such as gradients) to a central server for aggregation.

Consider a system with N distributed users defined through the set , where each user i has a local dataset containing labeled data samples. The feature vector is the k-th sample, while is its corresponding label. The task is to train a global model by minimizing a global loss function between all clients without sharing the raw data.

During each round of communication, users update their local models by minimizing their local loss functions , where represents the global model in round t. The updated local model parameters of each user are sent to the server, which aggregates them using a weighted averaging scheme, such as FedAvg [24]:

The process continues until the model converges or a stopping criterion is met, such as a target loss value. The advantages of FL are as follows:

- Data Privacy: FL allows users to keep their raw data local, making it ideal for privacy-sensitive scenarios.

- Reduced Communication Overhead: Only the model parameters are exchanged, not the raw data, saving bandwidth.

- Adaptability: FL can continuously improve models with real-time data from users, making it responsive to dynamic environments.

The disadvantages are the following:

- Communication Latency: Multiple rounds of communication are required, increasing the overall latency, especially in bandwidth-constrained networks.

- Convergence Speed: Achieving convergence may require many iterations, resulting in high computational and communication costs for resource-constrained users.

While FL addresses privacy and communication challenges, its iterative nature introduces certain limitations, particularly in environments where latency or computational resources are limited [25]. To overcome these challenges, more advanced frameworks such as SL have been introduced. The interested reader could refer to [26] for a comprehensive analysis of FL algorithms.

2.2. Split Learning

Although FL provides a solid foundation for DML, it can place significant computational and communication burdens on clients, especially when training deep models [27]. To mitigate these issues, SL divides the learning process between clients and servers. In SL, each client processes only the lower layers of the model, while the server handles the remaining computations. This reduces the computational load on clients and improves privacy by keeping raw data within the client device [28].

In the context of an ITS scenario, consider a model that is divided into two components: (i) the client-side model , which each user or client maintains locally, and (ii) the server-side model , which is responsible for completing the forward and backward passes on the server. This split architecture allows resource-constrained devices, such as VUs, to perform only the initial stages of the computation, reducing their computational and storage requirements.

The SL process involves the following steps for a single forward–backward round:

- 1.

- Each user processes its local data using the client-side model , which generates intermediate activations:These intermediate activations represent compressed feature representations and are transmitted to the server.

- 2.

- Upon receiving , the server performs the forward pass using its merge model to produce predictions :This completes the forward pass for the entire model using both client- and server-side components.

- 3.

- Next, the server begins the backward pass on the server-side model to compute the gradients of its model parameters based on the loss function, typically using a method such as stochastic gradient descent. The server then calculates the gradient of the intermediate activations , denoted as , and transmits this gradient back to the user.

- 4.

- Finally, the user completes the backward pass locally on its split model using . This enables each client to update their local model parameters for based on its unique data, thus maintaining data privacy and security.

This split process enables each user to perform forward and backward propagation collaboratively with the server, forming a full round of training over the model . By structuring the model in this manner, SL allows resource-constrained client devices to participate in training without handling the full computational load. The server’s ability to process intermediate activations reduces client–server communication overhead while preserving data privacy by ensuring that raw data remains local to each client.

The advantages of SL are as follows:

- Reduced Client-Side Load: Clients only need to compute part of the model, reducing their computational overhead.

- Privacy Preservation: Only smashed data (intermediate activations) is transmitted, protecting the privacy of raw data.

- Lower Communication Cost: Fewer data need to be exchanged between clients and the server, as only intermediate activations are shared.

The disadvantages are the following:

- Sequential Processing: The split model introduces latency because clients and the server must alternate between steps.

- Reduced Model Expressiveness: Splitting the model might reduce its capacity, potentially leading to performance degradation.

Although SL reduces computational load and improves privacy, it can still suffer latency issues due to its sequential nature [29]. The interested reader could refer to [30] for a comprehensive analysis of SL algorithms.

2.3. Federated Split Learning

To overcome earlier limitations, a novel framework named FSL merges the benefits of FL and SL. FSL brings together the privacy safeguards characteristic of FL with the computational efficiency found in SL. This framework allows clients to execute a segment of the model processing locally, as per SL, followed by employing the federated averaging technique from FL to consolidate intermediate client outputs. Consequently, this synthesis taps into the strengths of both systems while mitigating their weaknesses [31].

In FSL, each user computes intermediate activations locally, which are sent to the server for aggregation. The server merges these activations using a federated averaging scheme:

The merged activations are then used to update the global model, and the updated parameters are distributed back to the users. By combining the FL distributed training process with the SL efficient model split approach, FSL can achieve better scalability and improved privacy. The advantages of FSL are as follows:

- Hybrid Approach: FSL benefits from both FL and SL, enabling DML with lower communication costs and enhanced privacy.

- Scalability: The federated averaging mechanism allows the framework to scale effectively across many clients without increasing the communication burden.

- Reduced Client-Side Load: Like SL, FSL reduces the computational burden on clients by only requiring them to compute the lower layers of the model.

The disadvantages are the following:

- Slow Convergence: The nature of SL can result in slower convergence, particularly in large-scale networks.

- High Local Training Requirements: Clients still need sufficient local computational resources to handle their portion of the model, which may not always be feasible in resource-constrained environments.

Although FSL improves the benefits offered by both FL and SL, its implementation can suffer from delayed convergence rates, particularly in extensive deployment scenarios [32]. To further optimize the efficiency of the learning procedure, TL can be integrated with FSL, giving rise to the FSTL methodology proposed later. Before analyzing FSTL, we introduce TL, focusing on its advantages.

2.4. Transfer Learning

TL is a powerful technique that improves model performance by leveraging knowledge gained from previous tasks, enabling efficient training on new but related tasks. TL is especially useful in scenarios with limited data or heterogeneous data distributions among clients, as it reduces the training required for convergence and enhances overall model accuracy [33]. By initializing clients with pre-trained models developed on large datasets, TL allows each client to start training from a more informed state, rather than from random initialization [34].

Formally, let represent the source domain with samples and associated labels . The objective is to learn a model that minimizes the loss function in this domain, i.e.,

where is a chosen loss function (e.g., cross-entropy) and is the model prediction for input .

Once trained on the source domain, the model is fine-tuned on the target domain with samples to minimize the loss function :

TL offers the following advantages:

- Accelerated Training: TL reduces the training time required for model convergence, which is particularly advantageous in real-time or resource-constrained applications.

- Improved Model Accuracy: Leveraging learned knowledge from a related domain improves model performance, especially when training data is limited or heterogeneous.

- Enhanced Generalization: Pre-trained models offer better adaptability across diverse client datasets, as they can transfer features to new tasks effectively.

3. Federated Split Transfer Learning

In the realm of FSL, TL offers significant advantages. The allocation of a pre-trained model to each client expedites the learning process and enhances the model’s capacity to generalize across the diverse datasets of clients. This synergistic approach is especially beneficial when there are disparities in client data distributions, as the pre-trained features and patterns provide a solid foundation for adaptation. As FSL progresses to FSTL, TL further strengthens the learning architecture. The fusion of TL with FSL optimizes both efficiency and scalability while maintaining the privacy and computational benefits inherent to FSL. This collaboration facilitates quicker and more adaptable model training in distributed settings, which is particularly advantageous for a range of applications that feature distinct data properties.

In FSTL, the goal is to enhance DML capabilities by combining the benefits of FL, SL, and TL. The FSTL framework is particularly advantageous in scenarios where resource-constrained devices, such as VUs or unmanned aerial vehicles (UAVs), participate in collaborative learning while maintaining data privacy and minimizing communication overhead.

3.1. FSTL Architecture

The architecture of FSTL begins with a pre-trained neural network model , which consists of L layers. This model is divided into a specific cut layer k (where ) with two parts:

- 1.

- is the portion up to layer k, which is deployed on each user for local processing, and

- 2.

- represents the remaining layers, which are managed by the server in the SL paradigm.

During training, the generic i-th VU processes its local dataset using the client-side split model , generating intermediate representations . These representations are then transmitted to the SL server-side model , which processes the intermediate data and updates the global model parameters. The split structure allows users to handle computationally lighter tasks while offloading the most demanding parts to the server, thereby reducing local resource consumption.

By integrating TL into the FSL paradigm, the FSTL framework leverages the advantages of pre-trained models on the client side. The pre-trained layers up to the cut layer k capture generalizable features and patterns that are useful across different tasks. Meanwhile, the remaining layers on the server allow further refinement and collaborative learning among distributed users. As a result, FSTL benefits from more efficient knowledge transfer, improved model performance, and faster convergence compared to traditional SL or FL methods.

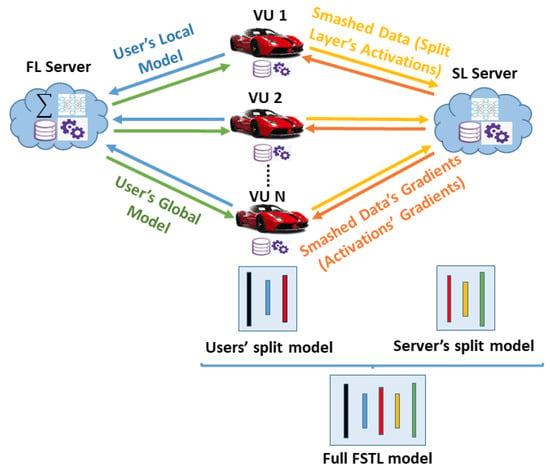

A key improvement in the FSTL framework over traditional SL is its capacity to enable parallel processing by allowing all clients to communicate simultaneously with both the SL and FL servers. This architecture, as illustrated in Figure 1, offers several distinct advantages over sequential methods:

- Higher Convergence Rate: By enabling parallel, simultaneous updates from all clients, the model can learn from a diverse set of data within each training round. This avoids the slow, one-by-one sequential processing inherent in standard SL, leading to a more efficient path to model convergence.

- Reduced Communication Bottlenecks: In a sequential learning setup, the server can only communicate with one client at a time, creating a significant bottleneck that scales with the number of users. The FSTL structure removes this limitation by allowing the central server to handle communications with all participating clients in parallel, thereby improving network efficiency.

- Balanced Computational Loads: The framework design inherently distributes the workload. While each client handles the initial, less intensive part of the model, the server manages the more computationally demanding tasks and the aggregation of updates from all clients. This parallel structure ensures a more balanced and efficient use of computational resources throughout the network.

Figure 1.

Overall structure of FSTL.

3.2. FSTL Training Process

The FSTL training procedure is an iterative, distributed process that allows multiple users to collaboratively train a model while managing data privacy and distributing computational load. The model is divided into two components: (i) the client-side model, which is hosted locally by each user, and (ii) the server-side model, which is hosted centrally.

To formalize the training process, let denote the parameters of the client-side model and denote the parameters of the server-side model. The procedure follows the following steps:

- 1.

- Initialization:

- (a)

- The global model parameters are initialized using pre-trained weights from a TL model.

- (b)

- A specific layer index k is chosen, determining the split between the client and server computations. The layers before k are hosted on the client side, while the remaining layers are hosted on the server.

- 2.

- Iterative Training: For each training iteration , the following steps occur:

- (a)

- The initial global model parameters are distributed to all participating users.

- (b)

- Each user i processes its local data through its client-side split model to generate intermediate representations:

- (c)

- The user sends these intermediate representations to the central server.

- (d)

- The central server aggregates the intermediate representations from all users by applying the following merge function :

- (e)

- Using , the server performs a forward–backward pass to compute an updated version of the global model parameters:Here, L denotes the loss function, while is the learning rate. This forward–backward process, which leverages federated averaging, is conducted in a way that preserves privacy, since only aggregated updates are calculated.

- (f)

- The central server updates by setting

- (g)

- Each user i then receives the updated global model parameters from the server and uses them to update their own server-side model parameters as follows:

- (h)

- Finally, each user i updates its client-side model parameters as follows:

This iterative process repeats until the model reaches a stopping criterion, such as convergence of the loss function or a predetermined number of training rounds. In each iteration, users independently process their local data through the client-side model to produce intermediate representations that they then share with the central server. The server merges these representations and performs federated updates to enhance the global model, subsequently sending the updated parameters back to users. This cycle of local data processing, secure sharing, and federated aggregation enables DML while preserving data privacy and distributing computational demands across the network.

3.3. FSTL Advantages

FSTL provides a comprehensive and powerful framework for DML, integrating the advantages of FL, SL, and TL. This approach allows individuals to handle data locally while taking advantage of the global collaborative capabilities offered by TL-enhanced learning. This integration significantly improves model performance, accelerates convergence rates, and mitigates resource limitations, making it particularly suitable for dynamic and privacy-sensitive environments such as ITSs [35]. The benefits of FSTL can be elaborated upon as follows:

- 1.

- Data Privacy and Security: With the retention of raw data on the user’s side, FSTL guarantees that sensitive personal information is not exposed or communicated outward. This addresses the fundamental privacy issues associated with collaborative learning frameworks.

- 2.

- Efficient Communication: The transmission involves only the intermediate data representations between clients and the central server, thus significantly reducing communication overhead in the process.

- 3.

- Accelerated Convergence: By employing models that have been pre-trained, convergence is more rapidly achieved since these models utilize existing knowledge instead of starting the learning process from the beginning.

- 4.

- Improved Performance: The server’s collaborative learning approach fosters improvements in the global model across all users, facilitating the adaptability of the framework to ever-changing environments and diverse ITS scenarios.

3.4. FSTL in ITS Scenarios

In the context of a 6G-enabled ITS, achieving accurate and adaptive model learning requires various sources of data input to reflect the real-world conditions vehicles face on the roads. In ITS scenarios, FSTL is expanded to encompass not only VUs but also UAVs and strategically located cameras on streets and intersections. This variety of data sources provides more comprehensive environmental information, which is crucial for creating high-efficiency and responsive ITS applications.

VUs provide ground-based data, including real-time information on vehicle location, speed, and road conditions, factors crucial for direct traffic management and road safety. UAVs, on the other hand, contribute an aerial perspective, capturing broad-area observations that can help monitor larger traffic patterns, assess road congestion, and even support emergency responses through quick area scans. Cameras placed on streets and intersections provide fixed high-frequency snapshots of specific intersections or traffic points, enabling an accurate assessment of local traffic density, pedestrian movements, and potential hazards. Together, these users form a comprehensive multiperspective network that aligns with the 6G ITS goals by connecting each component of the ITS infrastructure.

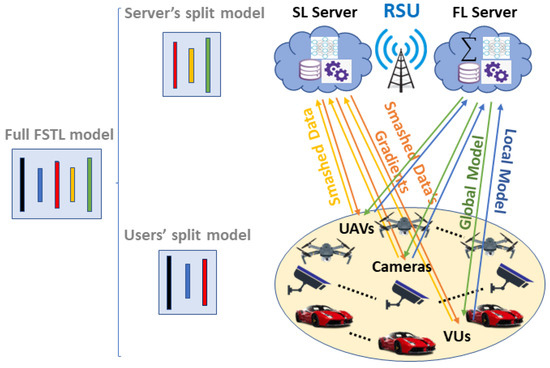

In this ITS FSTL scenario, as illustrated in Figure 2, the Road-Side Unit (RSU) serves as the FSL server. Here, each type of user (i.e., VUs, UAVs, and cameras) retains its raw data locally, thus preserving privacy, and sends only intermediate representations, known as smashed data, to the RSU for further processing and model training. This approach significantly reduces communication overhead by limiting exchanged data to model updates, supporting low-latency interaction, and reducing bandwidth demands, actions important for real-time ITS applications.

Figure 2.

FSTL structure for ITSs with the RSU as the FSL Server.

3.5. Benefits of FSTL in ITSs

The RSU serves as the main FSL server, which allows collaborative training between VUs, UAVs, and cameras by integrating data from multiple perspectives, promoting a model that can adapt to varying road conditions. Positioned near traffic points, RSUs are strategically located to provide reliable connection points for each type of user device. They facilitate low-latency communication and rapid data processing, allowing the ITS framework to respond promptly to traffic changes, weather impacts, and other dynamic factors. By aggregating insights from these diverse data sources, the FSTL framework in the ITS supports real-time adaptability and robust decision-making, which are critical for modern transportation systems.

3.6. FSTL Training Process

Each user, in coordination with the RSU, participates in iterative model updates that allow the server to refine the model collaboratively. The following iterative algorithm (Algorithm 1) outlines this training process. The algorithm begins by initializing the central server’s (RSU) model parameters, denoted as , with an initial state (Line 1). Simultaneously, each of the N individual users initializes their local model parameters, , with their respective initial states (Lines 2–3). This sets up the initial state for both the global and local models before training commences. Each user then independently performs a forward propagation step on their local model using their private local data (Line 4). Instead of sending their raw data or full model, each user computes and transmits an intermediate representation () to the RSU (Line 5). This likely encapsulates features or activations derived from the local data, without revealing the data itself, thus contributing to data privacy. Upon receiving the intermediate representations () from all active users, the RSU performs a forward propagation step on its own server model with these aggregated representations (Line 6). Subsequently, the RSU computes the gradients with respect to its server model parameters, , by backpropagating through its model (Line 7). These computed server gradients are then transmitted back to the corresponding users (Line 8). This signifies a collaborative gradient computation where the server contributes to the overall gradient direction. Once users receive the server’s gradients (), they backpropagate these gradients through their local models (Line 9). This allows them to compute their own local gradients (). Finally, each user updates their local model parameters () using an optimizer, such as Stochastic Gradient Descent (SGD), with a learning rate (Line 10). The update rule indicates a standard gradient descent step to minimize a local loss function. After all users have performed their local updates, the RSU aggregates the gradients received from all users. Line 12 describes this aggregation as federated averaging, where a weighted average of gradients () is computed. It is important to note that the algorithm describes the server receiving back from users in Line 8, and then aggregating gradients from all users in Line 12. This implies that either refers to that was sent to users and now is being considered as a contribution or users are sending back their local gradients for aggregation. Assuming here refers to some form of local gradient or update contribution from each user. The RSU then updates its own model parameters () using this aggregated gradient, again using a learning rate (Line 13: ). This step is crucial for integrating the collective learning from all users into the global model. In the final step of each iteration, the RSU sends its updated server model parameters () back to all users (Line 14). Each user then synchronizes their local model () with the updated server model using a weighted average, where . Here, is a hyperparameter that controls the influence of the global server model on the local model, while likely refers to the user’s previously updated local model or perhaps an average of their own previous model and their received . This synchronization step ensures that the local models align with the collective knowledge gained by the server, enabling a more robust and generalized model across the distributed system.

| Algorithm 1 FSTL iterative algorithm for ITSs |

| Input: |

| Output: Updated local and server model parameters and |

|

This FSTL setup enables real-time, collaborative model improvement across VUs, UAVs, and cameras, strengthening the ITSs’ adaptability and performance. The DML approach, anchored by the RSU, balances data privacy and computational efficiency, ensuring that data remain local while intermediate updates support a cohesive, up-to-date model. Federated averaging at the RSU uses user-specific weights to adjust each client’s contributions to the global model, enhancing the generalization of the model for varying traffic patterns, weather conditions, and environmental factors unique to each type of user. Using the combined data perspectives of VUs, UAVs, and cameras, FSTL in ITSs addresses real-world challenges such as traffic flow management, safety monitoring, and emergency response optimization, ensuring robust, privacy-preserving DML that meets the demands of 6G ITS environments.

4. Generalized Federated Split Transfer Learning

The GFSTL framework extends the FSTL methodology, facilitating scalability across numerous client groups, with each group comprising several users. In contrast to the conventional FSTL configuration, which involves training a singular global model for the entire user base, GFSTL segregates the users into discrete groups. This segmentation allows for more adaptable and parallelized model training. This architecture is uniquely suited for complex environments such as NTNs, where users are scattered in various geographical regions and often encounter varying connectivity conditions [36]. In this section, we describe the architecture of GFSTL and the benefits it brings to DML over NTNs, particularly in scenarios such as vehicular networks or EO systems.

4.1. GFSTL Architecture

The GFSTL architecture begins by partitioning users into m distinct groups. Each group operates independently with its own subset of users, but all groups work toward training a single global model. Initially, each client (user) is assigned an identical local model, while the server initializes the global model. The server maintains and orchestrates model updates across the groups in parallel, ensuring that the computational load is evenly distributed.

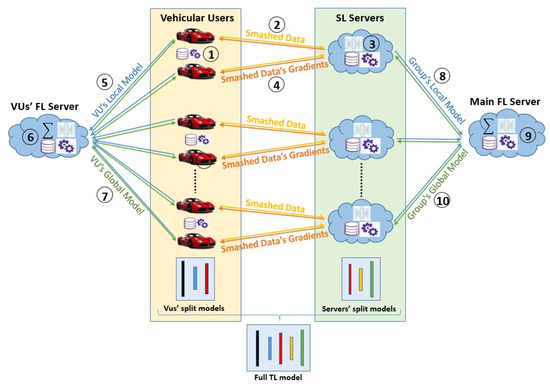

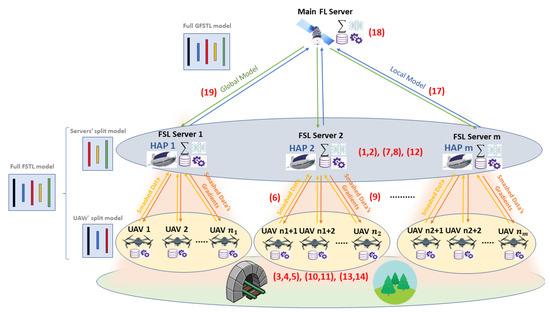

In Figure 3, the architecture of the GFSTL approach is depicted, where each rounded number identifies the corresponding phase of the process.At the beginning of the training process ①, all clients receive a copy of the same initial model. These users perform forward propagation on their local datasets in parallel, generating smashed data, which is the intermediate output from the split neural network layers. These smashed data are sent to the SL server associated with each group ②. The server randomly assigns smashed data from each client to one of the predefined groups ③. In each group, forward and backward propagation are performed sequentially within the group, while the groups themselves operate in parallel ④, ensuring efficient use of computational resources. Each SL server (group) produces an updated submodel, which is returned to the corresponding clients for further local backpropagation. This procedure ensures privacy and efficient use of resources. Privacy is maintained by transmitting only smashed data, which contains no raw information about the local datasets, while computational efficiency is achieved through parallelization at the group level. Gradients from the SL server are applied to the local models, allowing each client to update its parameters based on the feedback received from the server. Once each group completes its round of FSTL, client-side FL servers aggregate submodels at the group level (⑤–⑦). Subsequently, the main FL server, which could be a central server at a higher-level node, aggregates all the submodels from different groups into a unified global model (⑧–⑩), completing the learning round.

Figure 3.

GFSTL architecture.

This architecture can accommodate various data configurations, including scenarios with non-iid (non-independent and identically distributed) data, vertically partitioned data, or extended splits across layers. Moreover, the architecture supports different privacy-preserving techniques, as the main server never accesses raw data from the clients, ensuring data confidentiality.

4.2. GFSTL Training Process

The GFSTL framework, as outlined in Algorithm 2, leverages SL within FL groups. This architecture involves multiple SL servers, each managing a distinct group of users (clients), with a main FL server coordinating across these SL servers for hierarchical aggregation and global model updates. This design aims to enhance privacy, scalability, and efficiency by distributing computational burdens and localizing data. The training process begins with the specified input parameters, namely N (total number of clients), initial client model parameters , initial server model parameters , (synchronization weight), and (learning rate), and ultimately produces the output of the updated client and server model parameters and . The training proceeds in an iterative fashion, encompassing nested loops. The outer loop iterates through each of the m SL server groups and is indexed by j (Line 1). Within this loop, each SL server j is initialized with its server-side model parameters, , set to their initial state (Line 2). Following this, an inner loop commences for each client i belonging to group j, where is the number of clients in group j (Line 3). Each client i initializes its local model parameters, , with pre-trained values (Line 4). The client then performs a forward propagation step on its local model using its private local data (Line 5). Instead of transmitting raw data, the client computes and sends intermediate smashed data () to its corresponding SL server j (Line 6). Upon receiving from its clients, the SL server j performs forward propagation on its server-side model using the received smashed data (Line 7). Subsequently, the SL server computes and performs backpropagation to determine the gradients with respect to its server model parameters, (Line 8). These computed server gradients, , are then sent back from the SL server to the respective client i (Line 9). Upon receiving , the client i completes the backpropagation on its own local model using these received gradients (Line 10). After completing backpropagation, the client updates its local model parameters, , using an optimizer, typically Stochastic Gradient Descent (SGD), according to the rule , where is the learning rate and represents the local gradients (Line 11). After all clients in a group have performed their local updates (Line 15 marks the end of the inner loop), the SL server j aggregates the gradients (or updates) from all clients in its group using federated averaging (Line 12). This produces an aggregated gradient that is calculated as , where n is the number of participating clients in the current aggregation round and are weights. The SL server then updates its own model parameters, , using an optimizer (e.g., SGD) with the learning rate and the aggregated gradient , with (Line 13). Following the SL server model update, each client’s local model parameters, , are synchronized with the updated server model using a weighted average, with , where controls the influence of the global server model and likely refers to the client’s previous local model (Line 14). This concludes the operations within each group’s inner client loop (Line 15) and the outer group loop (Line 16). After all SL servers have completed their group-level training iterations, they send their updated model parameters to the main FL server (Line 17). The main FL server then aggregates these parameters from all SL servers to form a single, unified global model (Line 18). Finally, this newly formed global model is sent back to all the groups (SL servers and subsequently clients) for synchronization or subsequent training rounds (Line 19). The algorithm then returns the updated local client model parameters for all users and the updated server model parameters for the RSU/main FL server (Line 20).

| Algorithm 2 GFSTL iterative algorithm |

| Input: |

| Output: |

|

4.3. GFSTL Advantages

The GFSTL architecture provides several key advantages in DML systems, especially when applied to NTNs:

- 1.

- Scalability: By distributing clients into multiple groups, the architecture significantly reduces the computational load on any single server. This group-based structure enables scalable training across large numbers of users, each contributing to the global model.

- 2.

- Privacy Preservation: GFSTL ensures that raw data never leave the local client devices. Only smashed data, which contain no identifiable information, are transmitted to the SL server, thus preserving the privacy of sensitive user data.

- 3.

- Efficient Resource Utilization: The parallelization of groups allows efficient use of computational resources. The training process within each group is sequential, but the groups themselves operate in parallel, which reduces the overall training time and improves performance in large-scale systems.

- 4.

- Improved Model Accuracy: By enabling the use of TL with pre-trained models, GFSTL accelerates the convergence of the global model. Moreover, the aggregation of submodels across groups ensures that the global model is more robust, with improved accuracy due to contributions from diverse groups of users.

- 5.

- Low Communication Overhead: Since only model parameters are exchanged between users and SL servers, the communication overhead is minimal. This is particularly advantageous in NTNs, where communication links can be intermittent or costly.

Taking advantage of these benefits, GFSTL emerges as a highly efficient, scalable, and privacy-enhancing approach suitable for executing DML within intricate settings like NTNs.

4.4. Summary of Advantages and Disadvantages of DML Approaches

This section synthesizes the strengths and limitations of the principal DML paradigms considered in this work, i.e., FL, SL, FSL, and TL, and contrasts them with our proposed FSTL and its generalized, multilayer form (GFSTL). Section 2, Section 3 and Section 4 present the detailed mechanics of each approach and motivate the design choices behind FSTL/GFSTL; here we summarize the practical trade-offs most relevant to ITS deployments. In brief, FL scales well and preserves local data but incurs large per-round communication and high client compute costs [37]; SL reduces client computation and communication volume yet suffers from serial processing and server-side bottlenecks [28]; FSL attempts to combine the two but still inherits limitations in single-tier deployments [38]; and TL accelerates convergence and reduces training effort at the cost of possible domain mismatch [39]. FSTL unifies these techniques to reduce client load and communication while leveraging pre-trained models for faster convergence, and GFSTL further adds hierarchical, multi-server orchestration to bound aggregation latency and improve robustness in multilayer T/NTN settings (see Section 3 and Section 4 and the latency analysis in Section 5.4). Table 2 collects these trade-offs in a compact form to facilitate a direct comparison and to clarify the specific performance dimensions addressed by our proposals.

Table 2.

Comparison of Distributed Machine Learning techniques: advantages and disadvantages.

5. Application of GFSTL in 6G

Having established the theoretical foundations of the FSTL and GFSTL frameworks, this section transitions to their practical application within the complex, multilayered 6G ecosystem. The GFSTL framework is designed to leverage the advanced capabilities of 6G networks, which integrate various layers of T/NTNs for enhanced scalability and robustness. Specifically, the use of HAPs as central FL servers, alongside ground-based RSUs as local FSL servers, allows for efficient model aggregation and training across diverse geographical regions. The architecture supports seamless communication across satellite, aerial, and terrestrial domains, ensuring high coverage and minimal latency in dynamic ITS environments. This multi-tier architecture facilitates real-time data processing and decision-making, which are crucial for applications like vehicular networks and EO.

The true potential of a scalable, hierarchical framework like GFSTL is realized when it is deployed across integrated T/NTNs to solve real-world problems. To demonstrate this versatility, we will first explore two distinct, high-impact use cases: a GFSTL-based architecture for vehicular AGIN and a configuration for NTN-based EO. We will then present a unified architecture that synthesizes these scenarios, providing a comprehensive solution for the next-generation ITS, and conclude with a formal latency analysis of this integrated system.

5.1. Use Case 1: GFSTL in Vehicular Aerial–Ground Integrated Network

Aerial networks have gained significant attention as a viable solution to extend connectivity and support advanced applications in complex and challenging environments. These networks are particularly beneficial for vehicular scenarios, which are characterized by high mobility, inconsistent connectivity, and dynamic data distribution, factors that pose substantial challenges to traditional ML methods [40]. In our previous work [20], the concept of FSTL was introduced to address these challenges in vehicular scenarios. Based on this, the current study expands the applicability of FSTL by integrating it with aerial networks to establish a unified AGIN for vehicular scenarios. This framework allows for full exploitation of the GFSTL paradigm.

Using HAPs and the existing capabilities of FSTL, we introduce GFSTL to harness the advantages of aerial networks, enabling efficient, scalable, and secure training and inference processes for VUs. The proposed AGIN architecture is designed to overcome the limitations of ground-based networks by integrating aerial nodes, enhancing connectivity, reducing latency, and maintaining data privacy.

5.1.1. System Architecture and Methodology

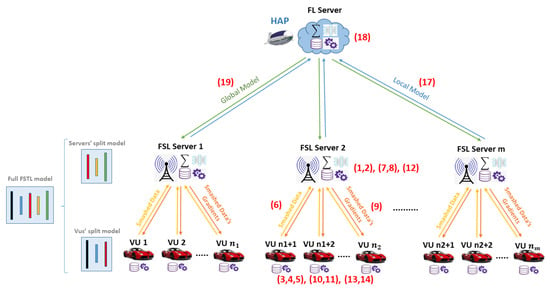

In the proposed scenario shown in Figure 4, the RSUs operate as both SL and FL servers for VUs, facilitating the execution of FSTL. Meanwhile, HAPs serve as the main FL servers, overseeing the aggregation of models across multiple RSUs. This architecture enables SL to operate locally on the VUs, ensuring that raw data remain on the devices, thus safeguarding privacy. At the same time, more computationally intensive tasks, such as model training, are offloaded to HAPs. This distributed approach significantly reduces communication overhead, as only model updates, rather than raw data, are transmitted between VUs and HAPs.

Figure 4.

GFSTL structure for vehicular AGIN.

The FL paradigm in this setup enables collaborative training across multiple VUs, promoting knowledge sharing and allowing the models to adapt to diverse vehicular environments. Each RSU is responsible for aggregating the updates of its local group of VUs, while the HAP performs higher-level aggregation, merging model updates from all RSUs into a unified global model.

5.1.2. Advantages of Integrating GFSTL with Aerial Networks

The integration of FSTL with HAPs unlocks several new possibilities for vehicular scenarios. First, high-altitude placement of HAPs ensures extensive coverage, reduces the likelihood of interference, and maintains seamless connectivity for VUs, even in remote or underserved areas where terrestrial infrastructure may be limited or unavailable. Second, the FL architecture improves collective intelligence across VUs by enabling them to collaboratively train models that are better adapted to dynamic conditions and the varying vehicular scenarios encountered on the ground. Through the AGIN framework, the combination of RSUs and HAPs offers enhanced connectivity, greater data privacy, and improved decision-making capabilities. Using both ground and aerial elements, our proposed approach overcomes the connectivity limitations inherent in traditional vehicular networks, providing reliable and scalable solutions to support intelligent vehicular networks.

5.1.3. GFSTL Workflow in Vehicular Scenarios

In the vehicular AGIN scenario, GFSTL is employed to enable collaborative learning across multiple vehicles while ensuring data privacy throughout the process. Each RSU acts as the FSL server for its designated group of VUs, handling model updates and exchanging gradients with the VUs. Once each group completes the FSTL training process, the aggregated FSTL models are sent to the HAP, which serves as the main FL server, to perform a final aggregation across all groups.

Specifically, the workflow can be described as follows:

- 1.

- The RSUs first act as local FSTL servers, managing the training and model aggregation for their respective groups of VUs.

- 2.

- Each VU performs a local computation and forwards its model updates to its corresponding RSU, which aggregates these updates.

- 3.

- Once the FSTL process is completed for all groups of VUs managed by the RSUs, the fully trained FSTL models are transmitted from the RSUs to the HAP.

- 4.

- The HAP, which functions as the main FL server, aggregates the complete set of model parameters from all RSUs, thereby finalizing the global model.

This architecture not only optimizes the training process by distributing the computation and aggregation tasks between RSUs and HAPs but also preserves privacy by ensuring that raw data never leaves the local VUs. Figure 5 illustrates the structure of the proposed GFSTL setup on an aerial network, and the numbers within the figure correspond to the steps outlined in Algorithm 2.

Figure 5.

GFSTL structure for Earth Observation using Non-Terrestrial layers.

5.2. Use Case 2: GFSTL in NTN-Based EO

In this section, we introduce the proposed GFSTL process for EO applications, along with its key advantages and the challenges it seeks to address. This generalized architecture, illustrated in Figure 5, is suited for multilayer EO applications. This framework integrates NTNs, including low-altitude platforms (LAPs), HAPs, and Low Earth Orbit (LEO) satellites, into the GFSTL architecture, leveraging their distinct advantages to enhance EO data collection, processing, and analysis.

5.2.1. Motivation and Proposed Framework

The growing need for accurate and high-resolution EO data in applications such as environmental monitoring, climate change analysis, and disaster response presents several challenges, particularly in terms of data collection, processing, and transmission. Traditional EO systems, which are highly dependent on satellite networks, often suffer from latency, data security concerns, and limited accuracy in capturing information in dense or remote environments (e.g., forests and urban areas) [41]. Our proposed framework addresses these limitations by optimally combining the precision and task-specific capabilities of UAVs with the extended coverage and computational power of HAPs. UAVs are ideal for collecting high-precision data in specific areas, especially in challenging environments, while HAPs provide broader coverage and handle computationally intensive tasks. By integrating these NTNs into the GFSTL architecture, our framework ensures efficient and secure DML across multiple EO platforms. This framework addresses the first major gap in the literature by enabling optimal integration of UAVs, HAPs, and LEO satellites to enhance accuracy, coverage, and resource efficiency in EO applications. Furthermore, our framework introduces an efficient DML method through GFSTL, which combines the strengths of FL for secure model training, TL to expedite the training process, and SL to ensure that resource-constrained UAVs can participate effectively in the learning process.

5.2.2. Framework Components and Advantages

In the proposed framework, HAPs function as FSL servers, which integrate both the SL and FL server functionalities. This allows UAVs to retain raw, high-quality data locally while offloading computationally intensive tasks to HAPs. This setup ensures privacy preservation and reduces the bandwidth required for transmitting large volumes of data since only intermediate outputs (smashed data) are transmitted. LEO satellites serve as the central main FL servers, orchestrating collaborative model training across multiple HAP-UAV groups. This architecture fosters collective intelligence capable of adapting to various EO scenarios. Using the hierarchical structure of NTNs, the framework optimizes resource utilization while ensuring scalability and adaptability to different EO tasks, such as image analysis, clustering, and object recognition.

The proposed framework brings numerous advantages:

- 1.

- Increased Accuracy: UAVs can focus on capturing precise data in specific regions, especially in areas where satellite imagery might be less effective (e.g., dense forests). This localized data collection ensures higher accuracy and relevance of the EO data.

- 2.

- Resource Efficiency: HAPs serve as intermediaries between UAVs and LEO satellites, efficiently distributing computational tasks and enabling seamless data processing across layers.

- 3.

- Privacy and Security: The FSL approach ensures that raw data remains on local devices (UAVs), preserving privacy while transmitting only intermediate data (smashed data) to HAP servers. This minimizes the risk of data breaches and ensures compliance with privacy regulations.

- 4.

- Reduced Latency: By using TL to initialize the training process with pre-trained models, the framework reduces the total training time, allowing faster convergence of the global model.

5.2.3. GFSTL Training Process for EO over NTNs

The GFSTL process over NTNs for EO applications follows the process outlined in Algorithm 2, where the role of the FL server is taken by the LEO satellite, the role of the FSL server is taken by the HAPs, and the role of clients is taken by the UAVs. The process begins by initializing the UAV models and server models (parameters and ), which are pre-trained on a DNN (such as ResNet). Each UAV performs forward propagation using its local data, sending the intermediate output (i.e., smashed data) to the HAP server for further processing. The HAP server computes gradients for the server model parameters and sends them back to the UAVs, allowing backpropagation and local model updates. For a better understanding, the number in Figure 5 refers to the step individualized by the lines in Algorithm 2.

5.2.4. Added Benefits and Challenges

The GFSTL framework brings significant improvements to EO applications by addressing key gaps in data collection, processing, and learning efficiency. The use of UAVs for precision data capture improves the quality of the images and data collected, especially in challenging terrains. The integration of HAPs and TL accelerates the training process, optimizing computational resources and improving model convergence.

However, several challenges need to be addressed for the successful deployment of GFSTL in EO systems. One major challenge is task compatibility; choosing a pre-trained model that aligns well with the target domain is critical to avoid domain shift and model drift. Additionally, scalability remains a concern, especially as more UAV-HAP groups are added. These challenges must be carefully considered to ensure optimal performance.

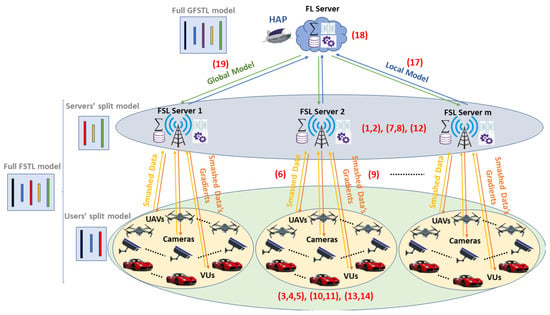

5.3. Unified Multilayer 6G ITS Architecture

The 6G era envisions a fully connected world where all elements of the ITS infrastructure are seamlessly integrated, from vehicles to aerial systems and ground-based sensors. This hyper-connectivity provides the foundation for real-time data processing and intelligent decision-making, which are essential to modern transportation systems. A hierarchical multilayer architecture, such as AGIN or NTNs, is ideally suited to support the demands of 6G ITSs, where the massive scale, low latency, and need for privacy-preserving DML are critical factors. This section introduces the concept of multilayer GFSTL in the context of 6G ITSs, providing a flexible, scalable, and efficient solution to the challenges posed by high data volumes and complex model training scenarios.

5.3.1. Architecture and Components

In the 6G ITS ecosystem, all components of the ITS infrastructure—including VUs, UAVs, and ground-based cameras (strategically placed at intersections, cross-roads, and high-traffic areas)—are interconnected to improve safety, efficiency, and overall traffic management. This requires a hierarchical multilayer system where computational tasks are distributed across different network layers, improving both resource management and real-time decision making. The architecture consists of three main components:

- 1.

- Users (VUs, UAVs, and Cameras): VUs are vehicles equipped with various sensors and communication modules that gather real-time traffic and environmental data. UAVs provide additional data, particularly in areas that are difficult to monitor or reach from the ground, providing support in vehicle navigation, surveillance, and emergency responses. Street cameras positioned at critical points such as intersections and crossroads monitor traffic flow, detect accidents, and feed real-time data into the network.

- 2.

- FSL Servers (RSUs and Base Stations (BSs)): RSUs and BSs act as FSL servers. They aggregate and process data received from nearby VUs, UAVs, and cameras, performing preliminary computations.

- 3.

- Main FL Server (HAP): The HAP serves as the main FL server. It receives and aggregates the models trained by the FSL servers (RSUs/BSs), conducting the final stage of training, which is aggregation to a global model that integrates data from all layers of the ITS.

The hierarchical structure, as depicted in Figure 6, enables efficient model training through GFSTL, where the learning tasks are split across multiple layers: ground-based (VUs, RSUs, and BS) and aerial (UAVs and HAPs) layers. This ensures both real-time data analysis and long-term predictive modeling, addressing the dynamic requirements of 6G ITSs.

Figure 6.

Multilayer GFSTL architecture for 6G ITSs.

5.3.2. Training Process and Iterative Algorithm

In the multilayer GFSTL framework for 6G ITS, the training is carried out iteratively as defined in Algorithm 2. Here, the HAP functions as the FL server; RSUs serve as FSL servers; and VUs and cameras operate as clients. Each layer aggregates its knowledge into the global model using a blend of SL, FL, and TL methodologies. The process is as follows:

- 1.

- Initialization: Each client (VUs, UAVs, and cameras) is initialized with a pre-trained model that is specific to its task, with parameters denoted as for the client model and for the server model.

- 2.

- Local Model Update: Clients perform forward propagation on their local models using the collected data, producing intermediate results (smashed data) that are sent to the nearest FSL server (RSU or BS).

- 3.

- FSL Server Aggregation: RSUs and BSs aggregate the intermediate results, perform forward and backward propagation on their server models, and send the corresponding gradients back to the clients to update their local models.

- 4.

- Main FL Server Aggregation: Once all FSL servers complete their local training, they send the updated models to the main FL server (HAP), aggregating these models into a unified global model.

- 5.

- Global Model Update: The global model is redistributed to the clients through the FSL servers for the next training iteration.

The iterative process described above ensures that all 6G ITS infrastructure components collaborate to build a unified, intelligent model. This model adapts to dynamic traffic conditions and learns from various data sources.

5.3.3. Added Benefits and Challenges

In the following, we delve into the core aspects of the proposed GFSTL framework, outlining its significant advantages and the inherent complexities that need to be addressed. The unique architecture of GFSTL offers a robust solution for ITSs while also presenting several considerations for its optimal implementation.

Benefits: The GFSTL framework is designed to deliver a multitude of advantages that enhance the efficiency, privacy, and reliability of ITSs. These benefits stem from its innovative, multilayered, and distributed approach that addresses key limitations of traditional centralized systems.

- Scalability: The multilayer GFSTL is inherently scalable, allowing it to accommodate the increasing number of VUs, UAVs, and cameras as the ITS infrastructure grows. Its distributed nature ensures that the computational load is balanced throughout the system.

- Privacy Preservation: Since raw data remain on the client side (VUs, UAVs, and cameras), the model leverages FSL to ensure privacy-preserving model training, which is crucial for sensitive traffic data and user privacy.

- Reduced Latency: By distributing computational tasks across FSL servers (RSUs/BSs) and the main FL server (HAP), GFSTL minimizes communication overhead and reduces the time needed to update the global model.

- Resilience: The integration of multiple hierarchical layers ensures redundancy, making the system more resilient to failures in individual components, such as RSUs or UAVs.

Challenges: Despite its promising benefits, the deployment of a sophisticated system like GFSTL in a 6G ITS environment comes with its own set of challenges. These complexities arise from the distributed nature of the system, the diversity of data sources, and the need for careful resource orchestration to ensure optimal performance and model accuracy.

- Resource Management: Efficiently managing network and computational resources across VUs, UAVs, cameras, RSUs, and the HAP is critical. Bandwidth allocation, processing power, and memory management are areas that need careful optimization.

- Data Heterogeneity: Data collected from various components (VUs, UAVs, and cameras) may differ in format and quality. Ensuring that the global model can generalize across heterogeneous data sources is a challenging task.

- Model Convergence: Although TL and SL help to accelerate the training process, achieving convergence in a system as complex as the 6G ITS requires fine-tuning of hyperparameters, especially with regard to the learning rate and aggregation intervals.

5.4. Latency Analysis for a Multilayer 6G ITS Scenario

Here we present a simplified analysis of latency across various DML methodologies used in distributed 6G ITSs, specifically FL, SL, FSL, FSTL, and GFSTL. In distributed frameworks, the overall latency is influenced by both computation and communication times. For our analysis, we assume that all users, comprising VUs, UAVs, and street cameras, have uniform data distributions. Including all these client types creates a more realistic ITS scenario, aligning with the 6G vision of interconnected ITS infrastructure.

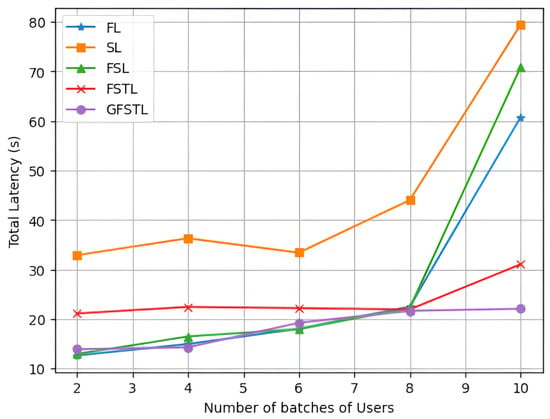

In the ITS scenario under consideration, latency is analyzed by defining several key parameters. Let d denote the total data size that is processed, while h represents the size of the intermediate (smashed) layer’s output during SL or FSL. The data transmission rate, R, is a variable that depends on the communication medium. For training times, T signifies the time required to train a complete DNN from scratch, while and represent training times in FSTL and GFSTL, respectively, using a pre-trained TL model. Additional factors further impact latency in these frameworks. For example, denotes the time required for performing the full aggregation of the model in FL, while represents the merging time of the smashed parameters in SL and FSL. Aggregation times are also split into for handling aggregation on each user group side and for managing aggregation on the main FL server side. Furthermore, the complete model contains q parameters, with r as the ratio of the size of the submodel on the user side to the size of the complete model, calculated as . total latency is the sum of both the computation and communication times. Communication latency is particularly significant in DML due to bidirectional transmission requirements. For example, in expressions such as and , factor 2 reflects the need to upload model updates from users to the server and to download updated parameters back from the server to clients.

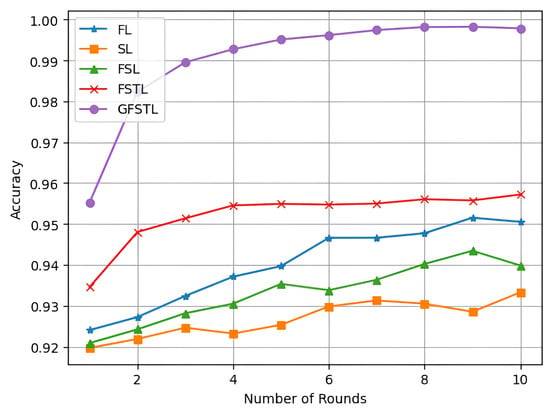

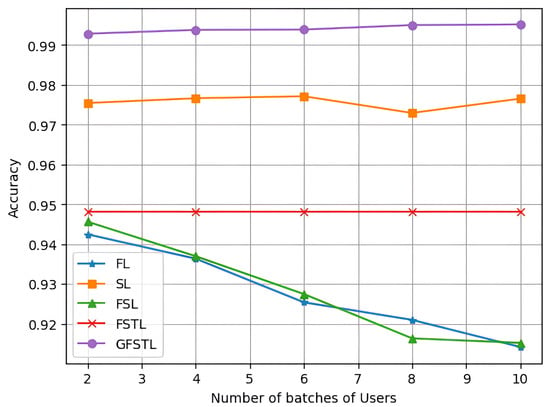

Table 3 and the numerical results in Section 6 demonstrate how latency scales with the number of users n across these methods. In particular, as n increases, SL becomes less efficient because its training time is directly proportional to n due to the serial process. In contrast, FL, FSL, and FSTL methods benefit from parallel training between clients, thus reducing total time even with a larger user base. In terms of latency ordering,

Table 3.

Latency analysis of five DML methods per round.

This ordering of latency reflects specific advantages within each framework. FSTL, for instance, achieves lower latency than FSL by integrating TL, which leverages pre-trained models to expedite convergence. This allows FSTL to start from a more informed state, represented by , and ultimately accelerates the training process. FSL also demonstrates greater efficiency over FL because it requires aggregation of fewer parameters, which makes it less computationally demanding; here, indicates that merging the smaller model segments in FSL is faster than aggregation of the entire model in FL. In contrast, SL exhibits the highest latency of these methods due to its reliance on serial processing steps, which prolongs training time and makes it comparatively slower in performance.

In GFSTL, latency is influenced by both the number of users and the distribution between various groups. Let denote the number of users in the j-th group. GFSTL latency depends on the slowest group in the system, where communication time is dictated by the group with the greatest delay. To account for this, we employ the operator, which represents the overall latency in GFSTL as

Table 3 presents the results of the combined latency analysis for all methods, FL, SL, FSL, FSTL, and GFSTL, illustrating the influence of client numbers n, data size d, and communication rate R on total latency.