1. Introduction

Artificial Intelligence (AI) is an area that is still growing but is already making a significant contribution today [1]. In recent years, it has played a key role in several areas, from medicine to education, security, and even the automotive industry. The field of AI still has huge growth potential, and we are still far from even imagining how much it will change our lives and influence society as we know it today.

The automotive industry is the main topic covered in this work. This industry has kept pace with the great technological evolution that has taken place over the years. The cars produced today are much more powerful than those used in the past, not only in terms of speed but also in terms of the technology built into them. With all this progress, it is inevitable to talk about risk. Driving is an activity that requires attention and concentration, but many people take high risks in their driving. According to the Público newspaper, Portugal recorded a 1.5% increase in the number of fatalities in road accidents in 2023 compared to the previous year [2]. Meanwhile, according to the European Commission, road fatalities in the EU decreased by 3% in 2024 compared to 2023, marking a positive trend but still far from the ambitious goal of halving road deaths by 2030. In Portugal, the reduction was only 1%, indicating that progress remains slow [3]. These data underscore the urgent need for advanced technologies that can further enhance road safety. Artificial Intelligence, through real-time driver behavior assessment, emerges as a key tool to address this challenge by identifying and mitigating aggressive driving patterns.

The approach to this project began with data collection using sensors such as accelerometers and gyroscopes to capture detailed information about the vehicle’s movements in different driving conditions. The data was then preprocessed, an essential stage for classifying driving behaviors reliably, as raw sensor data can be inconsistent. Among the preprocessing techniques applied, data normalization ensures that sensor values are on a comparable scale (between 0 and 1), making it easier to detect meaningful patterns in driver behavior. Subsequently, the normalized data were organized into sliding windows to capture essential temporal dependencies. To model these temporal patterns, a neural network architecture of the LSTM (Long Short-Term Memory) type was used, which is well suited to temporal sequences due to its ability to retain both long and short-term information. Additionally, a Conv1D layer was incorporated to capture local patterns within each driving maneuver, effectively acting as an internal sliding window and helping the model learn more compact and representative features. The model was then trained and validated using accuracy as an evaluation metric, given the task of distinguishing between aggressive and non-aggressive driving behaviors. Finally, the model was tested on real-world driving data to assess its performance under diverse conditions, highlighting the solution’s potential for improving road safety through driver behavior monitoring. This hybrid architecture proved effective in learning temporal dynamics while maintaining robustness against variability in the input data.

The main objective of this research is to develop an AI-based system for assessing driver behavior, focusing on distinguishing aggressive and non-aggressive driving patterns using LSTM-based models. A key aim is to identify the most effective LSTM model capable of maximizing classification accuracy. To this end, various configurations were systematically explored to determine the optimal combination of hyperparameters and architectural choices that best capture the temporal dynamics of driving behavior. The experimental phase focused on evaluating different model configurations by tuning critical parameters such as the number of LSTM units, the size of the internal convolutional kernel, and the application of regularization techniques like Dropout. These procedures were conducted using pre-segmented driving maneuvers, which allowed for clearer pattern recognition and more robust model evaluation. The goal was to identify the configurations that delivered the highest accuracy and reliability in detecting aggressive and non-aggressive behaviors.

Unlike other studies that often aggregate driving data without distinguishing between specific maneuvers, this research introduces a structured segmentation approach that categorizes driving events individually, allowing the LSTM network to learn distinct behavior patterns. Additionally, this study validates predictions using real-world GPS trajectories in Google My Maps, ensuring the model’s applicability beyond pre-existing datasets. Another key contribution is the comparative evaluation of two different data processing methodologies, demonstrating that organizing data by maneuver type significantly enhances classification accuracy. Furthermore, this study establishes a framework for future expansion into a multiclass classification system, where specific driving maneuvers, such as accelerations, braking, and turns, can be distinguished. Lastly, this work places a strong emphasis on preprocessing techniques, including data normalization and augmentation, to ensure the robustness and generalizability of the AI model.

This document is organized as follows:

Section 2 presents the literature review, which discusses some of the work related to the topic of this study;

Section 3 describes the methodologies and approaches adopted;

Section 4 presents the results obtained;

Section 5 discusses the study’s limitations and directions for future research; and finally,

Section 6 concludes the work.

2. Literature Review

This section provides a review of key published papers on the subject, highlighting major findings and trends identified in recent research.

The work D3: Abnormal Driving Behaviors Detection and Identification Using Smartphone Sensors by Chen et al. [4] proposes an innovative system called D³, which uses smartphone sensors, such as accelerometers and orientation sensors, to monitor abnormal driving behaviors in real time. The system identifies six main types of dangerous behavior: weaving, swerving, sideslipping, fast U-turn, turning with a wide radius, and sudden braking. The study analyzes data collected from 20 drivers over 6 months and uses 16 features extracted from these measurements to train an SVM (Support Vector Machine) model, which classifies the behaviors. The system achieved an accuracy of 95.36%, demonstrating its effectiveness for improving road safety.

The paper Mobile Phone-Based Drunk Driving Detection by Dai et al. [5] proposes an efficient system for detecting drunk driving behaviors using only smartphone sensors, such as accelerometers and orientation sensors. The main goal is to identify dangerous maneuvers associated with drunkenness, such as lane deviations and sudden changes in speed and direction. Implemented on Android devices, the system monitors lateral and longitudinal acceleration, triggering alerts when it detects abnormal deviations. Tests have shown high accuracy, with a low rate of false positives, even in different driving environments and phone positions within the vehicle.

The research Driver Behavior Analysis for Safe Driving: A Survey by Kaplan et al. [6] offers a comprehensive review of driver behavior analysis methods, with a focus on driver-oriented applications. The study classifies these applications into three main subcategories: accident prevention, evaluation of driving styles, and prediction of driver intentions. To do this, quantitative factors such as speed and acceleration are considered, as well as qualitative factors such as distraction and personality characteristics. Among the most used methods are image processing techniques, Hidden Markov Models (HMM), and SVM. The paper suggests that hybrid systems combining visual and non-visual methods can improve accuracy in detecting dangerous behavior and points to the implementation of vehicular ad hoc networks (VANETs) as a solution for disseminating distracted driver data to prevent large-scale accidents.

The study Investigating Car Users’ Driving Behavior Through Speed Analysis, conducted by Eboli et al. [7], examines driver behavior with a focus on speed as a crucial factor for road safety. The research uses instantaneous speed data collected by smartphones with GPS installed in vehicles that traveled a stretch of rural road in Italy. During the study, 27 drivers repeated the route several times, allowing detailed speed data to be collected. Based on this data, the drivers were classified into three categories of behavior: safe, unsafe, and safe but potentially dangerous. The study concludes that speeds significantly above or below average increase the risk of accidents, suggesting that awareness policies and adjustments to speed limits could improve safety on rural and two-lane roads.

Driver Behavior Classification System Analysis Using Machine Learning Methods is a work carried out by Ghandour et al. [8] that presents a comparative analysis of different driver behavior classification methods using machine learning techniques. The study analyzes behaviors such as distraction, aggression, drowsiness, and normal driving, based on a dataset collected in real time from six drivers. Four main algorithms are compared: Logistic Regression, Artificial Neural Networks (ANN), Gradient Boosting, and Random Forest. The results showed that Gradient Boosting performed best in terms of accuracy and robustness, outperforming the other models, especially in classifying aggressive and drowsy drivers. The paper concludes that methods based on Gradient Boosting are promising for the classification of driving behaviors in real time, suggesting that the inclusion of additional variables, such as road conditions and the driver’s mental state, can further improve the accuracy of the models.

Brahim et al. [9] proposed a solution based on smartphone sensors and machine learning algorithms for classifying driver behavior in real time. Using sensors such as accelerometers, gyroscopes, and GPS, driving data is collected and processed to detect behaviors such as normal, intermediate, aggressive, and dangerous driving. The CARLA simulation environment was used to generate varied driving scenarios, including different weather conditions and speed limits, to test the algorithms in realistic situations. The data is then analyzed using variants of Gradient Boosting Decision Trees (GBDT) and LSTM. Among the algorithms tested, LightGBM was the most efficient, achieving an accuracy of 88%. The study concludes that the use of smartphone sensors, combined with advanced algorithms, offers an effective and low-cost solution for classifying driving behavior in real-time monitoring systems.

In the area of financial time-series forecasting, neural network models such as LSTM and ConvLSTM have consistently demonstrated superior performance compared to traditional approaches. For example, when applied to orange juice futures, these models outperformed ARIMA and Support Vector Regression (SVR), effectively capturing long-term and short-term temporal dependencies and delivering lower prediction errors [10]. This serves as further evidence of the ability of LSTM architectures to handle complex sequential patterns, reinforcing their suitability for driver behavior modeling.

Beyond driving behavior analysis, LSTM-based architectures have demonstrated high performance in various other domains involving sequential data. In the context of building occupancy prediction, a hybrid Graph Neural Network (GNN) and LSTM model was shown to outperform traditional Recurrent Neural Networks (RNNs) and Gated Recurrent Units (GRUs), achieving closer alignment with real occupancy profiles [11]. This reinforces the effectiveness of LSTM models in capturing complex temporal dependencies, supporting their application in driver behavior classification.

LSTM and other recurrent neural networks have also proven highly effective in electromyographic (EMG) signal classification. A recent study showed that by optimizing RNN architecture using the Grey Wolf Optimization algorithm, it was possible to achieve approximately 98% classification accuracy for upper limb movements, while also outperforming GRUs and bidirectional networks in processing speed [12]. These findings further highlight the capacity of LSTM-based models to capture complex temporal patterns in biological time-series data, reinforcing their applicability in driver behavior analysis.

In industrial control contexts, LSTM-based architectures have also been leveraged to enhance system responsiveness and safety. Specifically, a recent study demonstrated that a symmetry-enhanced LSTM recurrent neural network significantly reduced oscillations in overhead crane systems during material transport [13]. These results underscore the versatility of LSTM models for managing dynamic behaviors in mechanical systems, further supporting their suitability for modeling and classifying driving behavior sequences.

Similarly, in the domain of fuel consumption prediction, incorporating “jerk” (rate of change in acceleration) into neural network models has been shown to enhance accuracy significantly. One study demonstrated that LSTM-based models, when including “jerk” as an input variable, achieved the greatest performance improvements under high-speed or expressway driving conditions [14]. This finding highlights the importance of nuanced temporal features in sequential modeling tasks, particularly those involving vehicle dynamics.

These findings confirm the suitability of LSTM networks for temporal modeling tasks that require both predictive accuracy and adaptability to domain-specific dynamics.

3. Methodology

This section presents the overall framework of the methodologies adopted. It describes the purpose of the project and what it was designed to achieve; the LSTM neural network and its combination with Convolutional Neural Networks; the processes of data collection, processing, and classification; the separation of the data into training and testing sets; and, finally, the creation and compilation of the model.

3.1. Project Purpose

The purpose of this project is to develop a machine learning-based system that can assess driver behavior by identifying both aggressive and non-aggressive driving patterns. By leveraging data from sensors such as accelerometers and gyroscopes, the system aims to detect behavioral trends across individual driving maneuvers. The project explores LSTM networks and convolutional layers to model the temporal and local dependencies of sensor data.

The end goal is to build a competent and reliable LSTM-based model capable of playing an important role in ensuring safer road environments by providing a tool that can support real-time driver monitoring.

The implementation process was entirely developed in Python (version 3.11.4), selected for its versatility and rich ecosystem of libraries suited to machine learning tasks. All development activities, from data preparation to model evaluation, were performed using Visual Studio Code (version 1.100.1), which offered a flexible and scalable environment to support the project’s technical requirements.

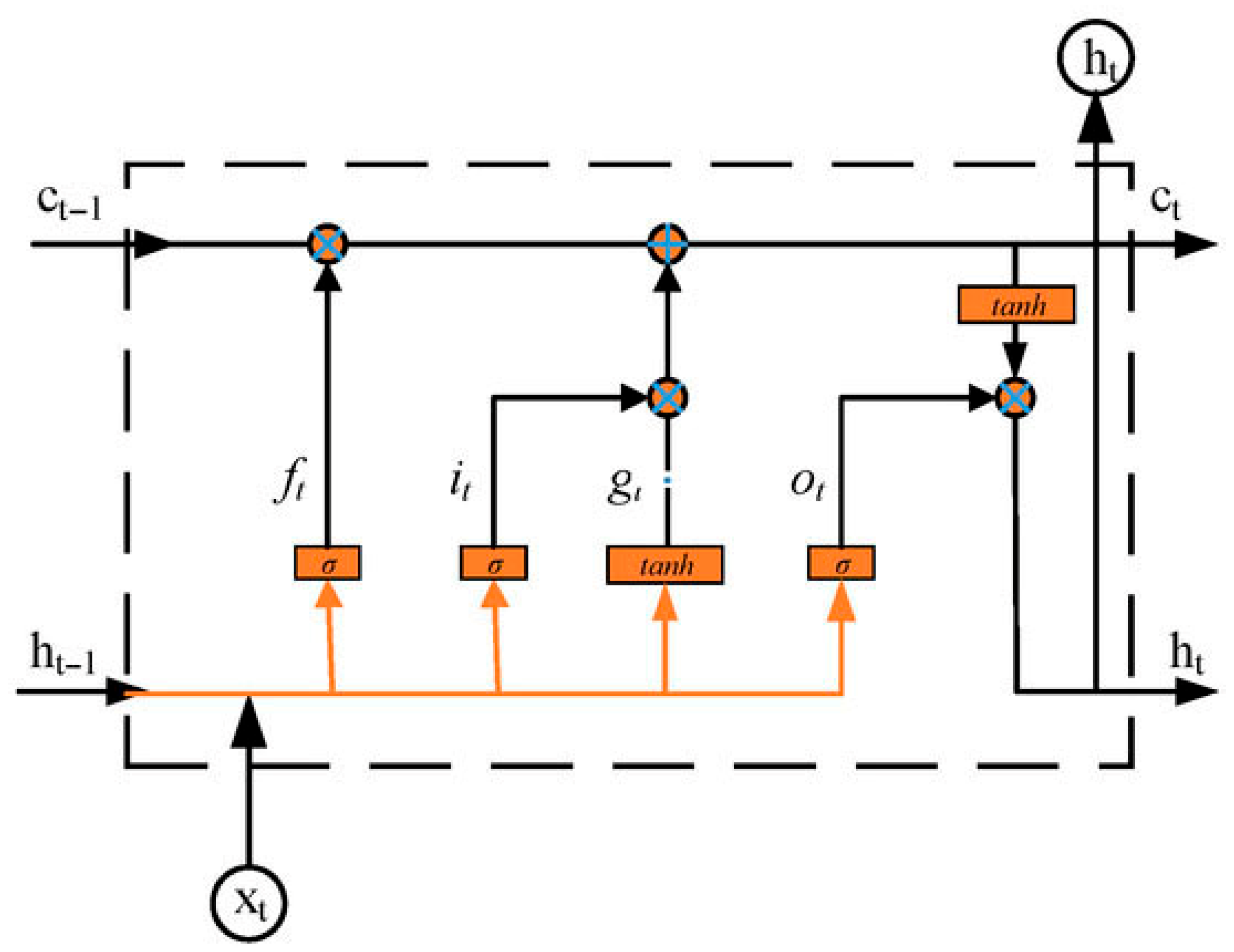

3.2. Long Short-Term Memory Neural Networks

LSTM networks are a type of RNN specially designed to process sequential data and capture temporal dependencies. Developed by Hochreiter and Schmidhuber, LSTMs overcome the limitations of traditional RNNs, such as the vanishing gradient problem, which makes it difficult to learn long-term dependencies in long data sequences [15]. The LSTM has memory cells that hold information over time intervals and three main gates (input, forget, and output) that regulate the flow of information, allowing the network to “remember” or “forget” relevant information in a controlled manner [16]. This unique structure makes LSTMs ideal for tasks such as time series forecasting, natural language processing, and, in this case, classifying driving behavior patterns.

Figure 1 illustrates an LSTM cell [17].

As mentioned, an LSTM network is composed of multiple memory cells designed to manage and preserve relevant information across time steps. Each cell contains three critical components known as gates, which control the flow of information: the Forget Gate, the Input Gate, and the Output Gate [18].

The Forget Gate ($f_t$) evaluates which parts of the previous cell state $C_{t-1}$ should be discarded, based on the current input $X_t$ and the previous hidden state $h_{t-1}$ (see Equation (1)):

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, X_t] + b_f\right) \tag{1}$$

In this expression, $W_f$ and $b_f$ represent the weights and biases associated with the forget gate, respectively, and $[h_{t-1}, X_t]$ denotes the concatenation of the previous hidden state and the current input. The activation function $\sigma$ is a sigmoid function, which outputs values between 0 and 1, effectively functioning as a filter that scales the influence of each part of the previous memory.

Next, the Input Gate ($i_t$) regulates how much new information is added to the memory cell. Its activation is calculated using Equation (2), where $W_i$ and $b_i$ are the weights and biases of the input gate:

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, X_t] + b_i\right) \tag{2}$$

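In parallel, the candidate (temporary) cell state $\hat{c}_t$ proposes an update to the memory cell. It is calculated using the $\tanh$ activation function (see Equation (3)), where $W_c$ and $b_c$ are the corresponding weights and biases:

$$\hat{c}_t = \tanh\left(W_c \cdot [h_{t-1}, X_t] + b_c\right) \tag{3}$$

This candidate state contains new potential information to be merged with the previous cell state.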

The actual update of the cell state $C_t$ combines both the previous state (scaled by the forget gate) and the new candidate values filtered by the input gate (see Equation (4)):

$$C_t = f_t \odot C_{t-1} + i_t \odot \hat{c}_t \tag{4}$$

Here, $C_{t-1}$ represents the previous cell state and $\odot$ represents element-wise multiplication. This equation allows the LSTM to selectively retain relevant past information and incorporate new data as needed.

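The Output Gate ($o_t$) determines which part of the cell state will influence the next hidden state. Like the other gates, it is calculated using the previous hidden state and the current input (see Equation (5)), where $W_o$ and $b_o$ are the weights and biases of the output gate:

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, X_t] + b_o\right) \tag{5}$$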

Once the output gate has been computed, the hidden state $h_t$ is updated by applying the $\tanh$ function to the new cell state and modulating it through the output gate (see Equation (6)):

$$h_t = o_t \odot \tanh(C_t) \tag{6}$$

This hidden state $h_t$ is then passed forward in the sequence, carrying forward the filtered representation of the cell’s memory.
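As a hedged illustration of how the forget, input, and output gates described above interact, the following minimal sketch performs one LSTM time step in plain Python. It is not the implementation used in this work: the helper names (`sigmoid`, `affine`, `lstm_step`), the parameter dictionary layout, and the choice to concatenate the previous hidden state with the current input are assumptions made for illustration only.

```python
import math


def sigmoid(z):
    """Logistic function used by the three gates (outputs lie in (0, 1))."""
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, v, b):
    """Compute W @ v + b for a matrix stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step; `p` holds hypothetical W_* and b_* parameters."""
    z = h_prev + x_t  # list concatenation: [h_{t-1}, X_t]
    f_t = [sigmoid(a) for a in affine(p["W_f"], z, p["b_f"])]      # forget gate
    i_t = [sigmoid(a) for a in affine(p["W_i"], z, p["b_i"])]      # input gate
    c_hat = [math.tanh(a) for a in affine(p["W_c"], z, p["b_c"])]  # candidate state
    # New cell state: keep part of the old memory, add part of the candidate.
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_hat)]
    o_t = [sigmoid(a) for a in affine(p["W_o"], z, p["b_o"])]      # output gate
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]             # hidden state
    return h_t, c_t


# Toy usage (illustrative values): 12 input features, 2 hidden units, zero weights.
n_in, n_hid = 12, 2
params = {}
for gate in ("f", "i", "c", "o"):
    params["W_" + gate] = [[0.0] * (n_hid + n_in) for _ in range(n_hid)]
    params["b_" + gate] = [0.0] * n_hid

# With all-zero parameters every gate equals 0.5 and the candidate is 0,
# so the new cell state is simply half of the previous one.
h_t, c_t = lstm_step([0.1] * n_in, [0.0] * n_hid, [1.0, -2.0], params)
```

In a trained network the weights are learned, so the gates take input-dependent values rather than the constant 0.5 seen in this toy call.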

In this project, LSTM was chosen due to its ability to learn patterns from time-series data, which is fundamental in detecting driver behavior based on vehicle sensor readings and GPS data. Driving behavior consists of continuous actions over time, such as acceleration, braking, and maintaining speed. Since these actions form sequential dependencies, a model capable of understanding such temporal relationships is crucial.

In this context, it is important to note that each driving maneuver in the dataset is composed of a sequence of consecutive sensor readings—on average, 15 records per maneuver. Since each reading follows directly from the previous one, there is a clear temporal dependency between them. This temporal structure reinforces the use of LSTM, as it is designed to capture such sequential relationships within time-series data.

The use of LSTM is directly related to the term sliding windows because instead of analyzing single data points, the LSTM receives a sequence of consecutive readings (e.g., acceleration, speed, and braking values over the last 5 timestamps, if the sliding window value is 5). This allows the model to observe trends and behaviors instead of relying only on instantaneous values. As the LSTM processes each window, it updates its memory cells based on the relevance of previous actions. For example, if a driver suddenly accelerates before a roundabout, the LSTM can recognize this aggressive behavior pattern by considering past acceleration trends. The training algorithm updates the weights of the LSTM gates by minimizing the error between the predicted and actual driving behavior labels (aggressive or non-aggressive). Over multiple training epochs, LSTM learns to associate specific driving patterns with their corresponding labels, so the model improves in distinguishing between non-aggressive driving and aggressive behaviors, generalizing patterns from different drivers and conditions.

Therefore, LSTM was chosen for its ability to store relevant information over time, helping the model understand past behaviors and predict future actions.

3.3. LSTM with Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are widely used in various domains due to their ability to automatically extract spatial or local features from input data [19]. In the context of time-series or sequential data, 1D CNNs (Conv1D) have shown effectiveness by acting as localized pattern detectors across the temporal axis. This architecture is particularly useful for learning relevant features such as sudden changes in acceleration or direction during driving maneuvers, which may indicate aggressive behavior. Conv1D layers also help reduce input dimensionality and enhance generalization by filtering out noise and redundant information, contributing to greater model robustness against variability in sequential data and improving computational efficiency [19].

One of the major advantages of Conv1D is its versatility in being integrated with other architectures, such as LSTM networks, which makes it ideal for hybrid designs. In this solution, Conv1D layers are integrated before LSTM layers to combine local pattern extraction with long-term temporal modeling, creating a hybrid architecture well-suited for behavior classification in vehicular environments.
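To make the idea of a localized pattern detector concrete, the following minimal sketch slides a single filter along the time axis of a sequence. It is an illustration only, not the model used in this work: the function name `conv1d_valid`, the hand-set difference kernel, and the toy single-feature signal are assumptions, whereas in a trained Conv1D layer the kernel weights are learned from data.

```python
def conv1d_valid(sequence, kernel, bias=0.0):
    """Apply one filter along the time axis of a (timesteps, features) sequence.

    `kernel` has shape (kernel_size, features). With stride 1 and no padding
    the output has timesteps - kernel_size + 1 values; ReLU is applied to each.
    """
    k = len(kernel)
    outputs = []
    for start in range(len(sequence) - k + 1):
        window = sequence[start:start + k]
        total = bias
        for row, weights in zip(window, kernel):
            total += sum(x * w for x, w in zip(row, weights))
        outputs.append(max(0.0, total))  # ReLU activation
    return outputs


# Toy signal: 15 timesteps of one feature with an abrupt rise at index 8,
# loosely mimicking a sudden acceleration (values are illustrative).
signal = [[0.1]] * 8 + [[0.9]] * 7

# Hand-set kernel that responds to an increase across a 3-step window.
rise_kernel = [[-1.0], [0.0], [1.0]]

response = conv1d_valid(signal, rise_kernel)
active = [i for i, r in enumerate(response) if r > 0.5]
```

The filter stays silent on the flat parts of the signal and activates only for the windows that straddle the jump, which is the kind of compact local feature that can then be passed to an LSTM layer.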

3.4. Dataset Description and Data Processing for Classification

The dataset used in this project was collected on controlled driving circuits, with a vehicle operated under different conditions to capture distinct maneuvers such as acceleration, braking, and others.

Figure 2 illustrates examples of maneuvers considered, including sudden acceleration and deceleration, right and left turns, steep uphill and downhill segments, and vehicle inclination in a roundabout.

Data acquisition was performed with a dedicated Android application, and the collected data served both for training the model and for evaluating its performance. The data was obtained from the phone’s sensors, such as the accelerometer, gyroscope, and GPS, and could be exported in JSON and CSV formats. During recording, smartphones were placed horizontally with the screen facing upward and the accelerometer’s Y-axis aligned with the vehicle’s forward direction, ensuring consistency in sensor orientation and minimizing variability that could affect model performance.

Figure 3 illustrates the mobile application.

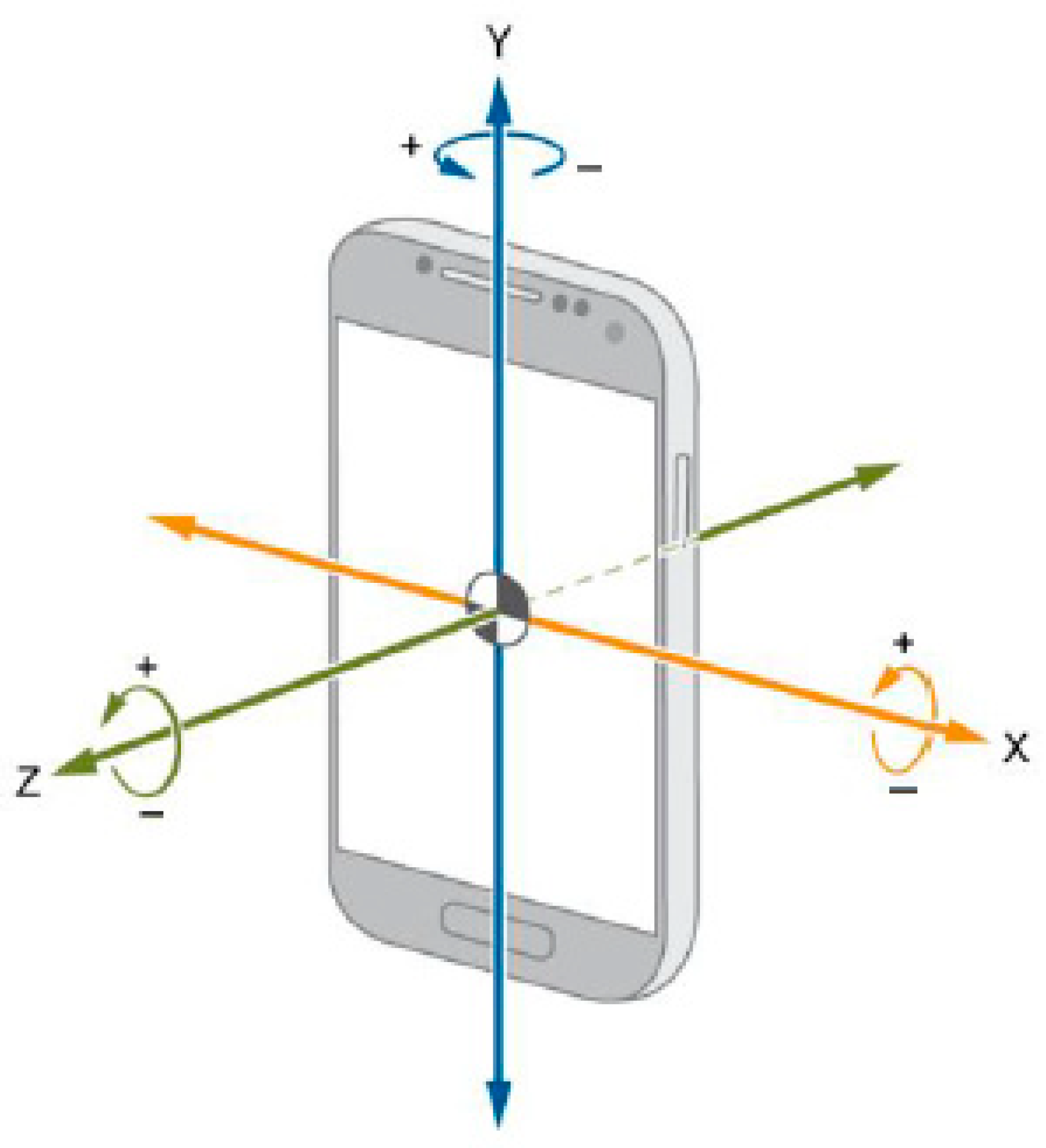

Modern smartphones are equipped with a wide variety of sensors that allow them to capture a large amount of data. Each of these sensors has its own function in detecting movements while driving. The recorded data includes readings along three axes for the accelerometer and the gyroscope (x, y, z). These axes provide crucial information about the movement and applied forces in different directions, allowing the identification of specific driving maneuvers.

The accelerometer is responsible for detecting changes in the speed of the mobile device along the three axes (x, y, z), making it possible to detect linear movements such as accelerations and hard braking (see Figure 4).

The gyroscope measures the mobile device’s rotation rate around the three axes, detecting changes in direction and rotations while driving, particularly when cornering. Figure 5 shows the axes of a gyroscope.

Finally, the GPS provides the geographical location of the device, as well as speed and direction information. This makes it possible to obtain the vehicle’s position in real time, as well as its entire route.

To improve data structure and classification effectiveness, this dataset was organized by individual driving maneuvers. Instead of aggregating continuous sequences of driving data into a single dataset, each maneuver (e.g., acceleration, braking, turning) was recorded and stored as a separate block. This approach ensures that each entry corresponds to a single type of behavior, allowing the model to learn more accurate and consistent temporal patterns associated with aggressive or non-aggressive driving. By treating each maneuver as an isolated sequence, it becomes possible to minimize noise caused by mixed behaviors and enhance the quality of the training and evaluation process.

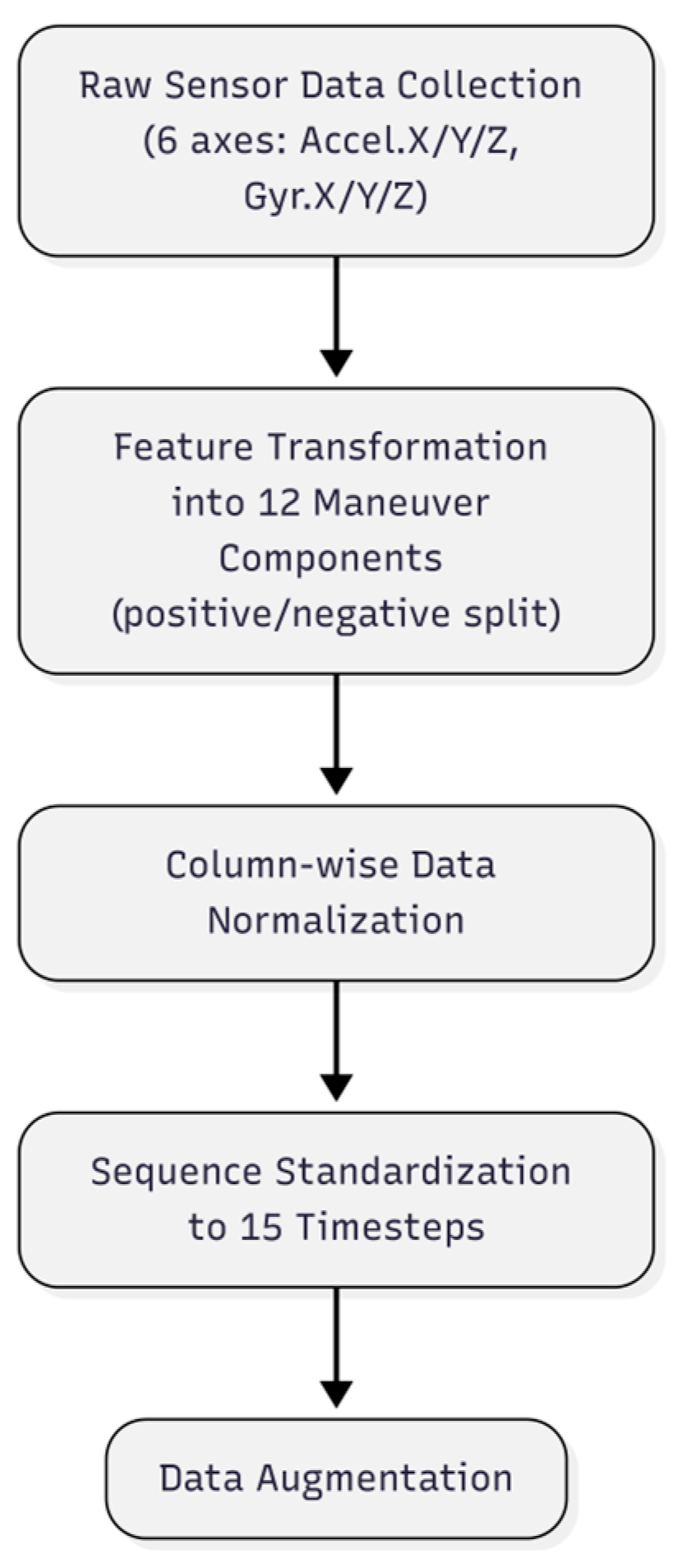

Figure 6 presents a flowchart summarizing the preprocessing workflow, from raw data acquisition through transformation, normalization, and augmentation. It provides a visual guide to the sequential steps involved in preparing the data for classification.

Once collected, data needs to be treated and processed. Each row of the dataset corresponds to a simultaneous reading from the accelerometer and gyroscope across the three spatial axes (x, y, z). These sensor readings are essential for identifying driving patterns.

Table 1 illustrates an example of the raw data extracted from the dataset.

The collected data was transformed, before normalization, to highlight directional changes more effectively. Each original sensor measurement was converted into two columns, one capturing only positive values and the other capturing only negative values, so that the six sensor axes yield 12 features per timestep. Concretely, positive values remain unchanged, while negative values are placed in separate columns and converted to their absolute magnitude. This separation ensured that the model was not affected by negative numbers and enabled it to treat variations in each direction independently, improving the detection of specific driving behaviors.

Therefore, each row of a reading corresponds to the values recorded by the accelerometer and gyroscope at a specific instant for a specific vehicle maneuver.

Table 2 illustrates the dataset structure after transformation, showing the naming convention used to ensure consistency with the physical actions represented in the original sensor readings.

By separating these components, the model gains a clearer distinction between increasing and decreasing forces, improving its ability to distinguish aggressive from non-aggressive driving behaviors.
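The positive/negative split described above can be sketched as follows. This is a minimal illustration under stated assumptions: the column names (`acc_x_pos`, `acc_x_neg`, and so on) are hypothetical and do not reproduce the naming convention of Table 2, and filling the non-applicable column with 0.0 is an assumption, since the text does not state which value is used there.

```python
# Hypothetical names for the six raw sensor axes.
AXES = ("acc_x", "acc_y", "acc_z", "gyr_x", "gyr_y", "gyr_z")


def split_signed(reading):
    """Map one 6-axis reading to 12 non-negative features.

    Positive values are kept in the *_pos column; negative values are stored
    as absolute magnitudes in the *_neg column. The other column of each pair
    is set to 0.0 (an assumption of this sketch).
    """
    features = {}
    for axis in AXES:
        value = reading[axis]
        features[axis + "_pos"] = value if value > 0 else 0.0
        features[axis + "_neg"] = -value if value < 0 else 0.0
    return features


# Illustrative reading (values invented for the example).
raw = {"acc_x": 0.5, "acc_y": -1.2, "acc_z": 9.8,
       "gyr_x": 0.0, "gyr_y": -0.03, "gyr_z": 0.2}
row = split_signed(raw)
```

Here a braking-like negative `acc_y` ends up as a positive magnitude in `acc_y_neg`, so opposite directions of the same axis are presented to the model as separate features.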

After splitting features into positive and negative components, normalization was applied using the maximum absolute value of each column as a reference. By calculating these maximums, we establish the necessary upper bounds that will be used to normalize all data values. Data normalization rescales features to a common range, reducing amplitude differences and improving training stability, speed, and accuracy.

In this approach, a column-wise normalization was applied, with each of the 12 feature columns divided by its maximum value to rescale data to [0, 1] and avoid distortions in training.

Table 3 illustrates the result of the normalization process, where values were scaled to [0, 1] by dividing each column by its maximum, ensuring consistent feature representation in model training.
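A minimal sketch of the column-wise normalization is given below, assuming the input already contains only non-negative values (as after the positive/negative split). The function name `normalize_columns` and the guard that leaves all-zero columns at zero are assumptions of this sketch, not details taken from the original implementation.

```python
def normalize_columns(rows):
    """Scale every column to [0, 1] by dividing by that column's maximum.

    Assumes non-negative inputs. Columns whose maximum is 0 are left at 0.0
    to avoid division by zero (an assumption of this sketch).
    """
    n_cols = len(rows[0])
    maxima = [max(row[j] for row in rows) for j in range(n_cols)]
    scaled = [
        [(value / m if m > 0 else 0.0) for value, m in zip(row, maxima)]
        for row in rows
    ]
    return scaled, maxima


# Illustrative data: two readings with three feature columns.
data = [[2.0, 0.0, 5.0],
        [4.0, 0.0, 10.0]]
scaled, maxima = normalize_columns(data)
```

Returning the per-column maxima alongside the scaled data makes it possible to apply the same scale to readings recorded later, which is one reasonable design choice among several.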

After normalization, all maneuver samples were standardized to 15 records, reflecting the average temporal duration of a typical driving maneuver (e.g., acceleration, braking, or turning), which usually spans between 15 and 20 sensor records. By fixing the length to 15, each sample captures a complete maneuver while maintaining uniformity across the dataset. Maintaining a uniform input length is essential for training deep learning models such as LSTM and Conv1D, which require consistent input shapes.

Each maneuver was thus represented as a sequence of 15 timesteps with 12 features (six sensor axes split into positive and negative components). Sequences shorter than 15 were filled with zero-valued rows at the end to reach the required length, while longer ones were split into overlapping sliding windows of size 15 using a stride of 1 (e.g., records 0–14, 1–15, 2–16, etc.), ensuring all inputs had shape (15, 12).
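The padding and windowing rule above can be sketched as follows. The function name `to_fixed_windows` and its default arguments are hypothetical; the logic follows the description in the text (zero rows appended to short maneuvers, overlapping windows of size 15 with stride 1 for long ones).

```python
def to_fixed_windows(maneuver, length=15, n_features=12):
    """Return a list of (length, n_features) windows for one maneuver.

    Maneuvers with at most `length` records yield a single window, padded at
    the end with zero-valued rows. Longer maneuvers yield overlapping windows
    with stride 1 (records 0-14, 1-15, 2-16, ...).
    """
    if len(maneuver) <= length:
        padding = [[0.0] * n_features for _ in range(length - len(maneuver))]
        return [maneuver + padding]
    return [maneuver[s:s + length] for s in range(len(maneuver) - length + 1)]


# Illustrative maneuvers: one with 10 records, one with 17 records.
short_maneuver = [[1.0] * 12 for _ in range(10)]
long_maneuver = [[float(i)] * 12 for i in range(17)]

short_windows = to_fixed_windows(short_maneuver)  # one padded window
long_windows = to_fixed_windows(long_maneuver)    # three overlapping windows
```

A maneuver of 17 records therefore contributes three samples, each of shape (15, 12), while a maneuver of 10 records contributes one sample whose last five rows are zeros.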

To enhance generalization, data augmentation was applied through a random perturbation algorithm that introduced small variations (±1%) to each individual sensor value (accelerometer and gyroscope across all axes). Each maneuver was treated as an individual 15-record block, and the perturbation ensured that the overall temporal structure of the maneuver was preserved while introducing realistic noise patterns, simulating natural variations that could occur in real-world driving conditions.

This procedure was applied exclusively to aggressive driving samples, as they were significantly underrepresented in the dataset compared to non-aggressive ones. An initial augmentation factor of ×10 was found to be insufficient, as the dataset remained imbalanced and the model struggled to consistently detect aggressive behaviors. To address these limitations, the augmentation factor was increased to ×20, which produced a more balanced dataset and improved the model’s ability to generalize across different driving styles. As a result, the model was exposed to a more balanced representation of both classes during training, enhancing its ability to detect aggressive behaviors despite the natural imbalance in real-world data.
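The augmentation step can be sketched as below, under two stated assumptions: the ±1% perturbation is modeled as an independent multiplicative factor drawn uniformly from [0.99, 1.01] for each value, and the ×20 factor is interpreted as generating 20 perturbed copies of each aggressive maneuver. The function name `augment` and the fixed seed are illustrative, and the exact noise distribution used in the original pipeline is not specified in the text.

```python
import random


def augment(maneuver, copies=20, noise=0.01, seed=0):
    """Create perturbed copies of one maneuver (a list of feature rows).

    Each value is scaled by (1 + u) with u drawn uniformly from
    [-noise, +noise], so the temporal shape of the maneuver is preserved
    and zero entries (including padding) remain exactly zero.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    return [
        [[value * (1.0 + rng.uniform(-noise, noise)) for value in row]
         for row in maneuver]
        for _ in range(copies)
    ]


# Illustrative aggressive maneuver: 15 records with 12 features each.
aggressive = [[0.0, 0.4, 0.8] * 4 for _ in range(15)]
augmented = augment(aggressive)
```

Because the perturbation is relative to each value, large readings receive proportionally larger noise, which keeps the overall profile of the maneuver intact.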

In this work, the classification task is formulated as a binary problem, distinguishing aggressive (label = 1) from non-aggressive (label = 0) maneuvers.

Unlike threshold-based approaches, where specific acceleration or braking limits are used to define aggressive behavior, this study deliberately avoided rigid quantitative thresholds. Such rules can lead to bias and poor generalization, as vehicles with different weights, braking capacity, or engine power may produce very different sensor readings for the same maneuver. Instead, we adopted a pattern-based, data-driven classification, allowing the model to learn directly from temporal sequences. This choice is supported by Kaplan et al. [6], who highlight the limitations of threshold-driven methods in heterogeneous driving contexts and emphasize the potential of hybrid and learning-based systems. Ghandour et al. [8] further demonstrate that machine learning models consistently outperform rule-based detection in classifying behaviors such as aggression or drowsiness, while Ben Brahim et al. [9] reinforce that smartphone-sensor data combined with algorithms such as LSTM or Gradient Boosting provides a more robust alternative to predefined thresholds, especially under variable road and weather conditions.

Each sample consisted of 15 time-ordered sensor records, with class labels assigned after preprocessing and augmentation according to the nature of the maneuver. This labeling step was fundamental to preparing the dataset for supervised learning, as the labeled instances provided the ground truth for model training and evaluation. While future work aims to expand this classification into a multiclass model that distinguishes between specific maneuvers, this research focuses on binary classification because the primary goal is to analyze the effect of training models on isolated driving maneuvers, an approach that is notably underexplored in the literature.

After labeling, data and labels were aligned into arrays (X_data, Y_data), providing the structure needed for effective learning and generalization. This binary setup simplifies the task while laying the foundation for future multiclass extensions to identify specific aggressive maneuvers, such as sudden acceleration, harsh braking, or sharp turns.

Table 4 summarizes examples of aggressive and non-aggressive maneuvers, defined during controlled circuits to ensure consistent dataset labeling.

3.5. Split Data into Training and Test Sets

To ensure a fair and reliable evaluation of model performance, the dataset was split into two sets: 80% of the samples were used for training, and the remaining 20% were reserved for testing. This partition balances two needs: providing sufficient data for model training (particularly for deep architectures such as LSTM and Conv1D) while keeping a substantial independent subset to objectively assess the model’s ability to generalize to unseen driving behavior. The choice of this ratio is well established in the literature. Joseph [20] identifies 80/20 as a standard split in many machine learning tasks, and Sivakumar et al. [21] highlight its frequent use alongside alternatives such as 70/30 or 90/10 depending on dataset size and model complexity. Recent studies in the specific domain of driver behavior classification have also adopted this convention. For instance, a 2024 study by Gheni et al. [22] on deep learning for driver behavior assessment explicitly applied an 80/20 partition when working with sensor-based data, supporting the appropriateness of this ratio for tasks closely aligned with the present work.

This split was implemented using randomized shuffling (shuffle = True) so that both aggressive and non-aggressive classes are represented in each subset rather than grouped by recording order. Such randomness reduces the risk of the model fitting order-specific patterns and encourages it to learn features that generalize across different maneuvers and driving contexts.
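The mechanics of a shuffled 80/20 partition can be sketched in a few lines. The version below uses NumPy only so that each step is visible; it is a stand-in for a library routine such as scikit-learn's `train_test_split`, not the study's actual code. The function name `shuffled_split`, the seed, and the synthetic data are assumptions.

```python
import numpy as np

def shuffled_split(X, y, test_fraction=0.2, seed=42):
    """Shuffle sample indices once, then cut them into train and test parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    n_test = int(round(len(X) * test_fraction))
    test_idx, train_idx = order[:n_test], order[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]

# Synthetic stand-in data: 100 samples, 15 timesteps, 12 assumed features.
X = np.zeros((100, 15, 12), dtype=np.float32)
y = np.array([0, 1] * 50)
X_train, X_test, y_train, y_test = shuffled_split(X, y)

print(X_train.shape, X_test.shape)
print("aggressive share (train, test):", y_train.mean(), y_test.mean())
```

Plain shuffling makes a balanced class mix likely but does not enforce it, so printing the class share of each subset, as done in the last line, is a cheap sanity check.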

The selected split ratio reflects a balance between providing sufficient data for effective training and retaining enough diversity for a robust evaluation phase. By following this methodology, it is possible to monitor the model’s learning process and validate its performance under real-world-like testing conditions.

3.6. Model Architecture

The deep learning model developed for this study follows a hybrid architecture combining 1D Convolutional Neural Networks (Conv1D) with Long Short-Term Memory (LSTM) layers. This design leverages the strengths of both architectures: Conv1D for capturing localized patterns within driving maneuvers and LSTM for modeling long-term temporal dependencies across sequences of sensor readings.

To effectively model temporal relationships in driving data, a sliding window technique was adopted. This approach involves transforming raw sequential sensor readings into structured input segments of fixed size, allowing the neural network to analyze not just isolated values but patterns over time. This small conceptual step is essential for preparing the input sequences and understanding the architecture of the LSTM-based model.

Several tests and approaches were carried out in search of the combination of factors that would deliver the best performance. In one set of tests, these sliding windows were created manually, in code, after data normalization. In this case, the full dataset was treated as a continuous sequence, where a fixed-size window (e.g., 5, 7, 10, or 15 timesteps) slid across the data sample by sample with a stride of 1. This generated overlapping sequences that preserved the temporal structure of the driving signals. Each resulting window was treated as an independent training sample, allowing the LSTM to analyze how behaviors evolved over short, continuous intervals.
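A minimal sketch of such a manual windowing step is given below, assuming the normalized data is held as a 2D array of shape (timesteps, features). The function name `sliding_windows` and the toy sequence are illustrative assumptions.

```python
import numpy as np

def sliding_windows(sequence, window_size, stride=1):
    """Return overlapping windows of shape (n_windows, window_size, n_features)."""
    sequence = np.asarray(sequence)
    n_steps = sequence.shape[0]
    if window_size > n_steps:
        raise ValueError("window_size exceeds sequence length")
    starts = range(0, n_steps - window_size + 1, stride)
    return np.stack([sequence[s:s + window_size] for s in starts])

# Toy sequence: 8 timesteps, 2 features, values chosen so rows are traceable.
sequence = np.arange(16).reshape(8, 2)
windows = sliding_windows(sequence, window_size=5)
print(windows.shape)      # (4, 5, 2): 8 - 5 + 1 = 4 windows
print(windows[1][:, 0])   # first feature of the second window
```

With a stride of 1, a sequence of T timesteps yields T - w + 1 windows of size w, and consecutive windows share w - 1 timesteps.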

Figure 7 illustrates how the sliding window mechanism operates using a window size of 5 as an example. The first highlighted block corresponds to the first moment of the sliding window, while the second block illustrates the second moment.

As mentioned, several tests were carried out, some of which used the manual sliding-window mechanism described above to draw conclusions about its performance. In the other experiments, this operation was replaced by the mechanism integrated into the Conv1D layer, which inherently performs the sliding-window operation.

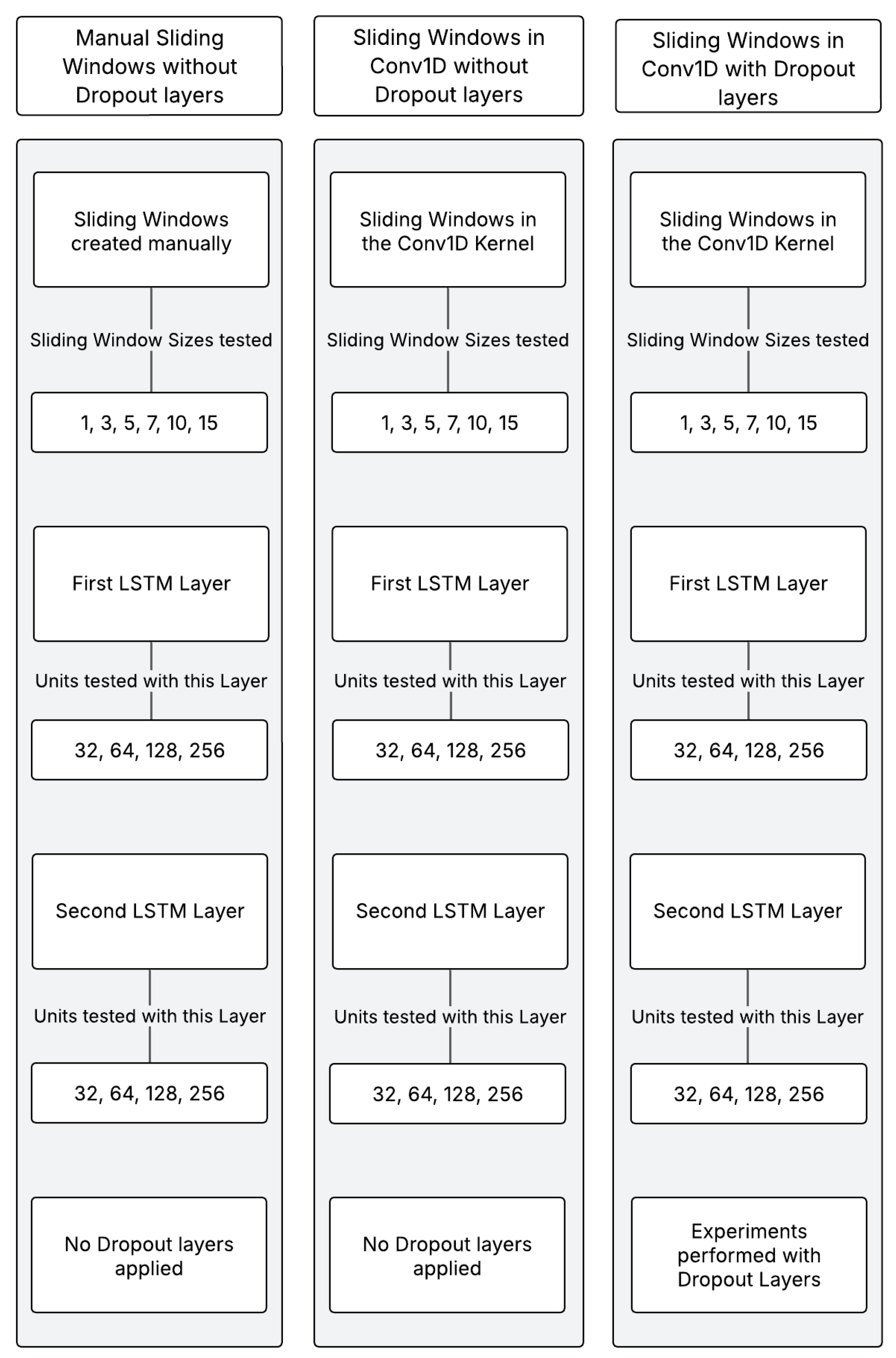

In this alternative configuration, the Conv1D layer was employed to automatically extract temporal features from the preprocessed data. The kernel size parameter of Conv1D defines the number of consecutive timesteps the filter considers at once, which effectively acts as an internal sliding window. For example, a kernel size of 5 allows the model to analyze patterns spanning 5 timesteps within each maneuver. This integration eliminates the need for manual sequence generation, as the convolutional layer inherently slides across the input sequence, learning local patterns.

To evaluate the impact of different architectural choices, a comprehensive series of experiments was conducted by systematically varying key model parameters. The primary elements investigated included the kernel_size parameter of the Conv1D layer, the number of units in each LSTM layer, and the inclusion or absence of Dropout layers. Each of these components plays a vital role in the model’s learning capacity, generalization ability, and resistance to overfitting.

The kernel size of the Conv1D layer was tested with multiple values, including 1, 3, 5, 7, 10, and 15, to explore how different temporal resolutions affect the detection of driving behavior patterns. Smaller kernel sizes focus on finer-grained changes over short intervals, while larger kernel sizes allow the model to capture broader temporal contexts within each maneuver.
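To make the link between kernel size and the internal sliding window concrete, the sketch below implements a single convolutional filter in NumPy and counts the window positions for each kernel size tested. It assumes a stride of 1 and no padding, which this section does not specify, and a feature count of 12, which is also an assumption. The function name `conv1d_valid` is illustrative.

```python
import numpy as np

def conv1d_valid(x, kernel, bias=0.0):
    """Single-filter 1D convolution (cross-correlation, as in deep learning
    libraries) with stride 1 and no padding.

    x:      (timesteps, n_features)
    kernel: (kernel_size, n_features)
    """
    k = kernel.shape[0]
    n_out = x.shape[0] - k + 1
    # Each output value is the weighted sum over one window of k timesteps.
    return np.array([np.sum(x[t:t + k] * kernel) + bias for t in range(n_out)])

rng = np.random.default_rng(0)
x = rng.random((15, 12))   # one 15-step sample; 12 features is an assumption

for k in (1, 3, 5, 7, 10, 15):
    kernel = rng.random((k, 12))
    out = conv1d_valid(x, kernel)
    print(f"kernel_size={k:2d} -> {len(out):2d} window positions")
```

Under these assumptions, a kernel of size 15 applied to a 15-step sample leaves a single window position, so each filter sees the whole maneuver at once, whereas a kernel of size 1 inspects every timestep in isolation.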

In terms of LSTM configuration, the architecture includes two stacked LSTM layers, allowing the model to learn both low-level and higher-order temporal dependencies. To find the most effective setup, several combinations of hidden units were tested in each LSTM layer: 32, 64, 128, and 256 units. In the first LSTM layer, the memory was explicitly reset after each batch, meaning it did not retain information from previous sequences. This ensures that each sequence is treated independently during training, avoiding unwanted dependencies between batches.

Dropout layers were another critical focus in the model design. In early tests, Dropout was omitted to observe the baseline learning capacity of the model. However, Dropout layers were subsequently introduced after the Conv1D layer and between the LSTM layers. These layers randomly deactivate a portion of neurons during training, improving the model’s ability to generalize and reducing the likelihood of memorizing training samples. The Dropout rates were set empirically, with the first Dropout layer often using a higher value (e.g., 0.5) compared to the subsequent ones (e.g., 0.2), aligning with best practices in deep learning regularization.

Additionally, Batch Normalization was applied after the Conv1D layer in all model configurations. This technique normalizes the outputs of the previous layer, accelerating training and improving model stability by reducing internal covariate shift.

All model configurations concluded with a Dense output layer containing a single neuron with a sigmoid activation function, producing a probability score indicating whether the driving behavior was aggressive or non-aggressive. This structure is suitable for the binary classification problem defined in this study.

Figure 8 illustrates a diagram of the different approaches tested in this project.

The architecture presented in Table 5 features a Conv1D layer with 64 filters, responsible for extracting local temporal features, followed by Batch Normalization and a Dropout layer to enhance training stability and prevent overfitting. The sequence then passes through two LSTM layers, with 32 and 64 units, respectively, each followed by an additional Dropout layer. A Dense output layer performs the final binary classification.

Table 6 summarizes the parameter distribution of this configuration, totaling 49,153 parameters (49,025 trainable and 128 non-trainable), making it a lightweight model suitable for efficient behavior recognition. It is a representative example of the hybrid Conv1D-LSTM model evaluated throughout the experiments and only one of the many configurations tested during the process.
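The reported totals can be checked with the standard per-layer parameter formulas. The sketch below uses the kernel size of 15 given for this configuration and assumes 12 input features per timestep. That feature count is not stated in this section; it is the value for which the arithmetic reproduces the reported totals, so it should be read as an inference rather than a documented setting.

```python
def conv1d_params(kernel_size, in_features, filters):
    return kernel_size * in_features * filters + filters  # weights + biases

def batchnorm_params(channels):
    # gamma and beta are trainable; moving mean and variance are not.
    return 2 * channels, 2 * channels

def lstm_params(input_dim, units):
    # Four gates, each with input weights, recurrent weights, and a bias.
    return 4 * (units * (input_dim + units) + units)

def dense_params(input_dim, units):
    return input_dim * units + units

N_FEATURES = 12  # assumption: inferred so the totals match, not stated in the text

conv = conv1d_params(kernel_size=15, in_features=N_FEATURES, filters=64)
bn_trainable, bn_frozen = batchnorm_params(64)
lstm1 = lstm_params(input_dim=64, units=32)
lstm2 = lstm_params(input_dim=32, units=64)
dense = dense_params(input_dim=64, units=1)

trainable = conv + bn_trainable + lstm1 + lstm2 + dense
total = trainable + bn_frozen
print(conv, lstm1, lstm2, dense)     # 11584 12416 24832 65
print(trainable, bn_frozen, total)   # 49025 128 49153
```

The 128 non-trainable parameters are the moving mean and variance of the Batch Normalization layer (two values for each of the 64 channels). The second LSTM layer accounts for roughly half of all weights.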

The Conv1D-LSTM model architecture begins with a 1D Convolutional (Conv1D) layer designed to extract local features from time-series input data. This layer uses 64 filters with a kernel size of 15 and applies the ReLU activation function. The input shape to this layer corresponds to the number of time steps and features per time step.

Following the convolutional layer, a Batch Normalization layer is applied. This step helps stabilize and accelerate training by normalizing the outputs of the previous layer, reducing internal covariate shift.

To improve generalization and prevent overfitting, a Dropout layer is added immediately after, with a dropout rate of 0.5. This layer randomly deactivates 50% of the neurons during each training iteration.

The next stage is the primary LSTM layer, configured with 32 memory units. This layer is set to return sequences so that the subsequent LSTM layer receives the full output sequence. The memory is reset at the end of each batch, which avoids learning dependencies across unrelated sequences.

Another Dropout layer follows, this time with a rate of 0.2, again promoting regularization and preventing the model from overfitting.

Next, a second LSTM layer is introduced with 64 units. This second LSTM layer condenses the processed sequence into a fixed-size vector representing the entire input window.

A final Dropout layer, also with a rate of 0.2, is placed before the output layer. This contributes additional regularization before the final prediction step.

The architecture concludes with a Dense output layer containing a single neuron and a sigmoid activation function. This configuration produces a probability value between 0 and 1, indicating whether the input maneuver corresponds to aggressive (1) or non-aggressive (0) driving behavior.

The model was compiled using the Adam optimizer, Binary Cross-Entropy as the loss function, and Accuracy as the primary evaluation metric. These settings are suitable for binary classification tasks and help ensure efficient weight updates during training.

Additionally, two callbacks were used: EarlyStopping, to prevent overfitting by halting training when validation loss stops improving, and ReduceLROnPlateau, to dynamically adjust the learning rate and enhance convergence when performance plateaus.
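The layer stack and training settings described above can be sketched in Keras as follows. This is an illustrative reconstruction, not the study's source code. The feature count of 12 and the default (no) padding are assumptions. The reset of LSTM memory after each batch is interpreted here as Keras's default non-stateful mode. The callback patience values and the halving factor are those reported for the training process.

```python
import tensorflow as tf
from tensorflow.keras import layers

TIMESTEPS = 15    # sensor records per sample
N_FEATURES = 12   # assumption: not stated in this section

def build_model(kernel_size=15, lstm_units=(32, 64), dropout=(0.5, 0.2, 0.2)):
    """Hybrid Conv1D-LSTM binary classifier following the description above."""
    model = tf.keras.Sequential([
        layers.Input(shape=(TIMESTEPS, N_FEATURES)),
        layers.Conv1D(64, kernel_size=kernel_size, activation="relu"),
        layers.BatchNormalization(),
        layers.Dropout(dropout[0]),
        # stateful=False (the Keras default): states are not carried
        # over from one batch to the next.
        layers.LSTM(lstm_units[0], return_sequences=True, stateful=False),
        layers.Dropout(dropout[1]),
        layers.LSTM(lstm_units[1]),   # returns only the final output vector
        layers.Dropout(dropout[2]),
        layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(optimizer="adam", loss="binary_crossentropy",
                  metrics=["accuracy"])
    return model

callbacks = [
    tf.keras.callbacks.EarlyStopping(
        monitor="val_loss", patience=10, restore_best_weights=True),
    tf.keras.callbacks.ReduceLROnPlateau(
        monitor="val_loss", factor=0.5, patience=5),
]

model = build_model()
model.summary()
```

Exposing `kernel_size`, `lstm_units`, and `dropout` as arguments mirrors the way these three factors were varied across experiments. Under the stated assumptions, the default arguments give a model whose parameter count can be compared against the reported total.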

3.7. Training Process

The training process was designed to optimize the model’s ability to distinguish between aggressive and non-aggressive driving patterns. The model was trained using 80% of the dataset, while the remaining 20% was reserved for testing. From the training portion, a validation split of 20% was applied to monitor performance during training.

Each model was trained over a maximum of 100 epochs with a batch size of 32. During training, performance was monitored using validation loss and accuracy. The EarlyStopping callback ensured that training stopped automatically if no improvement was observed in validation loss for 10 consecutive epochs, restoring the best model weights. Simultaneously, the learning rate was adjusted dynamically using the ReduceLROnPlateau callback, halving it when the model stopped improving for 5 epochs.
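The interaction between the two patience settings can be traced with a simplified simulation. The code below is a plain-Python approximation of the behavior described above, not the Keras implementation, which has additional options such as a minimum delta and a cooldown period. The validation losses are hypothetical and chosen only to show the mechanism.

```python
def simulate_schedule(val_losses, lr=1e-3, stop_patience=10,
                      lr_patience=5, factor=0.5):
    """Trace a simplified version of the two patience counters.

    Returns (stop_epoch, best_epoch, final_lr); epochs are 1-based and
    stop_epoch is None if the losses run out before stopping triggers.
    """
    best, best_epoch = float("inf"), None
    stop_wait = lr_wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, best_epoch = loss, epoch
            stop_wait = lr_wait = 0
            continue
        stop_wait += 1
        lr_wait += 1
        if lr_wait >= lr_patience:
            lr *= factor          # halve the learning rate on a plateau
            lr_wait = 0
        if stop_wait >= stop_patience:
            # Weights from best_epoch would be restored at this point.
            return epoch, best_epoch, lr
    return None, best_epoch, lr

# Hypothetical validation losses: improving for 27 epochs, then flat.
losses = [1.0 / e for e in range(1, 28)] + [0.05] * 20
print(simulate_schedule(losses))
```

In this hypothetical trace, the learning rate is halved twice during the plateau before training stops. More generally, with a patience of 10, the restored weights come from the epoch 10 steps before the stopping epoch.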

This configuration ensured that models converged efficiently, adjusted their learning rates according to training dynamics, and maintained strong generalization, with Accuracy serving as the primary metric for performance evaluation.

The implementation of the models was carried out using Python (version 3.11.4) in a local environment with Visual Studio Code (version 1.101.0) as the development platform. This project employed Pandas (version 2.2.1) and NumPy (version 1.26.4) for data manipulation, Matplotlib (version 3.8.3) for result visualization, Scikit-learn (version 1.5.2) for data processing and evaluation, and TensorFlow/Keras (version 2.15.0) for building and training the deep learning models. These tools collectively supported all stages of the workflow, from data processing to model development and performance analysis.

4. Results

All experiments were conducted on a local machine running Windows 10 Pro, equipped with an Intel Core i7-10750H CPU at 2.60 GHz, 16 GB of RAM, and an NVIDIA GeForce GTX 1650 GPU. This hardware configuration ensured sufficient computational resources for training and evaluating the deep learning models developed in this study.

During the early stages of this project, preliminary experiments were conducted using a dataset where all driving maneuvers were collected and aggregated into a single sequence. In this initial setup, aggressive and non-aggressive behaviors were merged within the same dataset, without separating them into distinct driving events. Although this approach simplified data management, it presented several challenges. Specifically, the presence of mixed behaviors within the same sliding window sequences often led to inconsistent or ambiguous training samples, which may have hindered the model’s ability to learn clearly distinguishable patterns. As a result, the performance of the model in these early tests was noticeably lower compared to more recent configurations.

To address this limitation, a refined data organization strategy was adopted. In the current approach, each driving maneuver such as acceleration, braking, or turning is stored and processed individually. Each maneuver is treated as an isolated time-series block, which allows for more accurate pattern extraction and better representation of temporal dependencies. This separation ensures that each sequence passed to the model corresponds to a single, coherent behavior, significantly improving the clarity and reliability of the training data.

Although this paper focuses on the current strategy involving pre-segmented driving maneuvers, it is important to acknowledge that earlier experiments provided valuable insights that guided design choices in later stages. These include not only the change in dataset organization but also the systematic exploration of parameters such as the Conv1D kernel size, the LSTM layer dimensions, and the integration of dropout regularization. These elements played a critical role in shaping the final architecture presented in this research.

Table 7 presents the best results obtained from the experimental evaluation of the proposed model, showcasing the top-performing combinations of hyperparameters in terms of classification accuracy and validation loss. The configurations shown reflect the most effective setups tested during the different experimental approaches, varying the Conv1D kernel size, LSTM layer dimensions, and the application of Dropout regularization. These combinations yielded the highest generalization performance on the validation set and served as a benchmark for assessing model robustness and consistency.

A complete list of all experiments conducted, including detailed results for each configuration tested, is available online [23], ensuring transparency and reproducibility of the results. On this webpage, a preliminary table is first presented, summarizing early test outcomes. At the end of the page, a refined table highlights the top-performing configurations selected from all tests, based on their accuracy and loss performance.

An overall analysis of the top-performing configurations reveals several important patterns. Firstly, a window or kernel size of 10 stands out as the most consistent parameter among the best results, appearing repeatedly across different approaches. This supports the hypothesis raised during earlier discussions: 10 timesteps seem to encapsulate an entire driving maneuver effectively, allowing the model to detect meaningful patterns without including unrelated or noisy data. This balance avoids underrepresentation (as might occur with kernel size 1) and prevents the inclusion of multiple behaviors (as could happen with excessively large kernels like 15). Notably, even among models using manually generated sliding windows, configurations with a size of 10 showed excellent performance, highlighting that this length is effective regardless of whether the segmentation is performed manually or by convolution. This may also align with the fact that a typical driving maneuver in the dataset spans approximately 10 sensor readings. In contrast, larger window sizes such as 15 may include transitions between maneuvers, potentially blurring the distinct behavioral patterns the model aims to capture.

It is important to emphasize that Table 7 summarizes only the top-performing configurations obtained in our experiments. However, a complete record of all test approaches, including intermediate configurations, error rates, and the evolution of accuracy and loss across epochs, is publicly available in the online repository [23]. These detailed results provide a richer picture of the training dynamics, supporting the transparency and reproducibility of the study. Moreover, the curves presented in the online repository confirm that the reported high accuracies are not isolated outcomes but consistent patterns across different training runs.

When comparing manual sliding windows with automated kernel-based pattern extraction via Conv1D, the results show that kernel-based approaches generally yielded slightly better outcomes in terms of loss values, even though all top configurations reached 100% accuracy. This suggests that integrating the segmentation process directly into the network architecture (via Conv1D) not only simplifies the pipeline but may also improve training efficiency and consistency in capturing local patterns. The convolutional approach appears to offer more stable generalization, likely due to its ability to learn optimal feature extraction filters directly from the data.

Regarding regularization, although Dropout layers were included in some tests, the lowest loss values are predominantly found in configurations without Dropout. This may indicate that, given the relatively balanced and augmented dataset, Dropout was not strictly necessary, and its removal allowed the model to preserve more learning capacity. However, several configurations that included Dropout layers still produced highly competitive results, showing that Dropout did not hinder learning, especially in slightly more complex architectures.

Another important insight relates to the architecture of the LSTM layers. The most successful models generally used a larger number of units (128 or 256) in the first LSTM layer, followed by a smaller second LSTM layer (32 or 64 units). This design allows the network to capture complex temporal dynamics early in the sequence processing while condensing the learned representation for final classification. This hierarchical structure appears to strike a good balance between model capacity and overfitting prevention.

An additional noteworthy observation concerns the configuration 32–32 (referring to the number of units in the first and second LSTM layers, respectively) using a sliding window size of 10. Although other configurations also achieved 100% accuracy, this setup is considered the best overall. The simplicity of this model translates into fewer trainable parameters, making it significantly lighter and faster, which is an essential advantage for real-time applications. When applied in a real-world environment to process incoming data in real time, a configuration such as 32–32 would be preferable to more complex setups like 32–64, as it requires fewer weights to be processed and can deliver results more rapidly. The fact that such a minimal configuration yielded outstanding results suggests that it not only performs effectively but also ensures computational efficiency, offering an ideal balance between accuracy and responsiveness.

An analysis of the training dynamics across the best-performing configurations revealed that, on average, the training process concluded around epoch 37. This value corresponds to the point where the EarlyStopping mechanism interrupted training due to stagnation in validation loss improvement. Although the maximum number of epochs was set to 100, the early convergence around this value indicates that the model was able to learn relevant patterns efficiently within roughly the first third of the maximum number of epochs. This also suggests that the architecture and preprocessing strategies adopted enabled rapid convergence without overfitting. The EarlyStopping strategy, therefore, proved effective in optimizing training time while preserving performance, and the average stopping point around epoch 37 may serve as a useful guideline for similar future implementations.

Figure 9 presents the training and validation curves for one of the top-performing configurations, featuring a Conv1D kernel size of 10, LSTM layers with 32 and 64 units, respectively, and the inclusion of Dropout layers (0.5, 0.2, 0.2). The training and validation accuracy curves converge rapidly and stabilize around epoch 20, both reaching 100% accuracy, demonstrating strong learning and generalization. The corresponding loss curves show a sharp decline in the initial epochs, followed by stabilization with near-zero loss values. The close alignment between training and validation metrics confirms that the model avoids overfitting and maintains excellent performance across unseen data. These results validate the effectiveness of combining Conv1D for local pattern extraction and LSTM layers for temporal modeling.

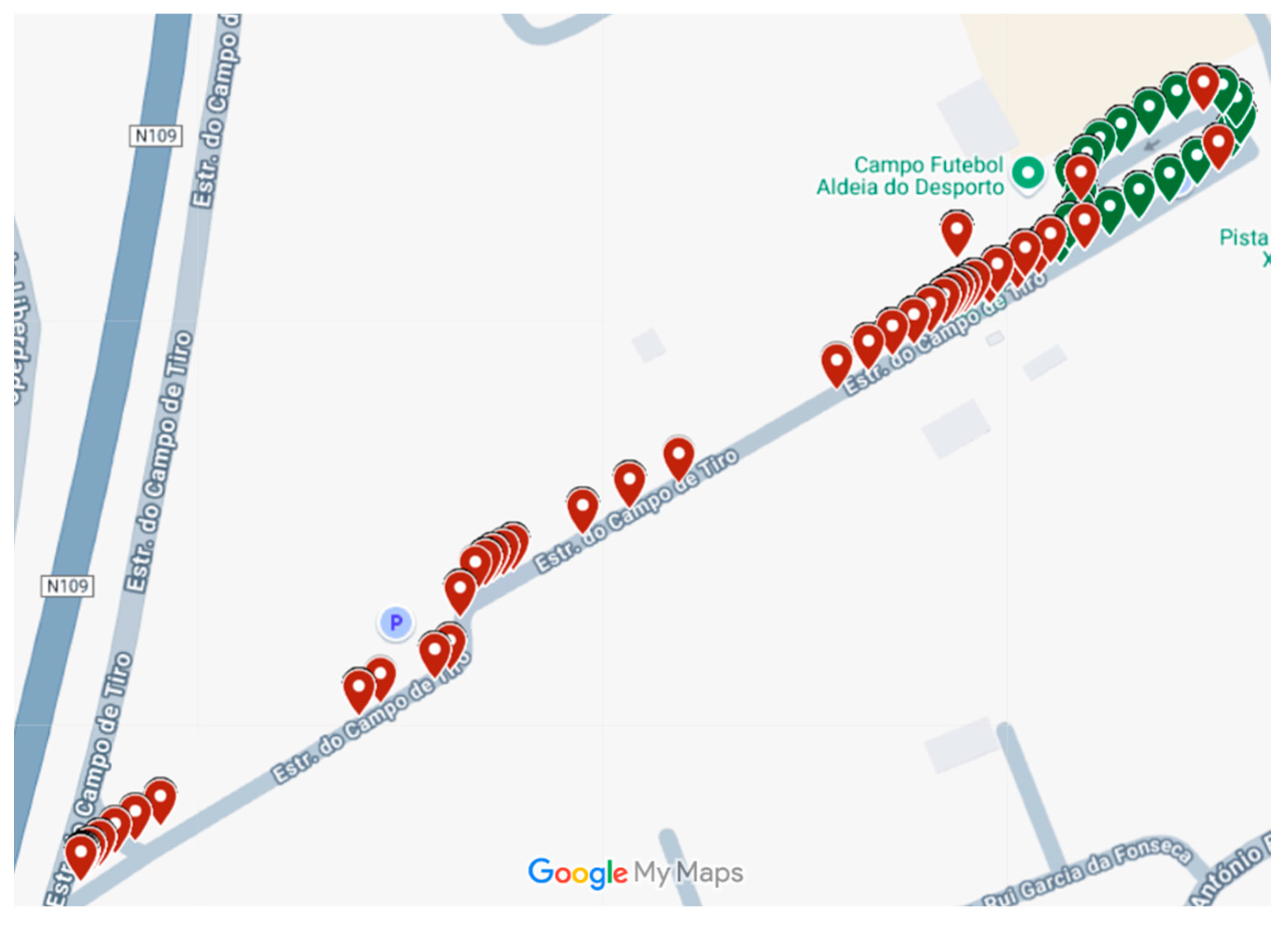

To further validate the effectiveness of the research, one of the best-performing configurations using manually generated sliding windows and an LSTM architecture with 32 units in both layers was tested on real-world driving sessions. These tests aimed to verify whether the model, beyond achieving excellent metrics in controlled training environments, could also generalize to unseen data collected during actual driving. The chosen architecture, due to its lower computational complexity and high accuracy, was particularly suitable for this practical evaluation.

For this purpose, two separate recordings were conducted on the same road circuit, which was chosen for its low traffic to ensure safety while performing various driving maneuvers. In Figure 10 and Figure 11, the red markers represent segments classified as aggressive behavior, while green markers indicate non-aggressive behavior.

In the first recording (Figure 10), the driving style was deliberately safe and steady throughout the entire route, serving as a reference for non-aggressive behavior. This test aimed to confirm that the model would not falsely classify smooth and consistent driving as aggressive. Although a few isolated predictions of aggressive behavior were registered (Figure 10), these were expected and likely triggered by minor steering adjustments or natural variations in sensor readings, which do not reflect actual aggressive intent.

In the second recording (Figure 11), aggressive behavior was intentionally introduced. The session began with a full lap around a parking area, executed cautiously, followed by a strong braking maneuver upon exiting. Then, a sudden and intense acceleration was performed, leading to a sharp braking point before a gentle curve in the middle of the route. From that point on, the driving continued at a steady and safe pace, ending with another strong deceleration at the end of the circuit. This sequence was designed to simulate a variety of aggressive behaviors and assess whether the model could accurately detect them in a continuous stream of real-world sensor data.

The model’s predictions on both circuits were consistent with the expected outcomes, correctly distinguishing between the non-aggressive and aggressive sessions. This practical test reinforces the model’s applicability in real scenarios and supports its reliability for integration into driver behavior monitoring systems.

This project takes a different approach from the rest of the literature through its use of distinct driving patterns. These driving patterns refer to specific behaviors captured by the accelerometer and gyroscope sensors, making it possible to identify distinct driving style characteristics. They include sudden acceleration, sudden braking, and turns. Recognizing these patterns makes it possible to classify the driving style according to its aggressiveness, offering an in-depth understanding of the driver’s behavior. Analyzing these driving patterns is essential for creating monitoring and safety systems, as it makes it possible to identify risky maneuvers, such as rapid acceleration, which may indicate more aggressive driving.

5. Limitations and Future Work

While this research demonstrates the potential of LSTM-based models for classifying aggressive and non-aggressive driving behaviors, some limitations should be acknowledged.

This study did not explicitly account for external traffic conditions such as vehicle density, surrounding traffic flow, or weather factors. The experiments were conducted on controlled circuits, focusing on isolated maneuvers to ensure consistency in data collection and to minimize external noise. However, in real-world driving, surrounding traffic can strongly influence behaviors that might otherwise be considered non-aggressive, for instance when a sharp braking event is caused by an unexpected external hazard rather than by aggressive intent. Therefore, future research should incorporate contextual variables such as traffic density, weather, and road conditions to enhance robustness and ensure greater applicability in real driving environments.

Another limitation lies in the binary classification setup adopted, which distinguished only between aggressive and non-aggressive behaviors. Although effective, this approach does not differentiate between specific aggressive maneuvers, such as sudden acceleration, harsh braking, or sharp turns. Expanding the system into a multiclass classification framework would provide greater granularity and improve its practical usefulness.

In addition, although an 80/20 train–test split with early stopping and dropout was applied, the results presented in Table 7 showed unusually high accuracy values across multiple configurations. This outcome can be explained by the binary nature of the task, the pre-segmentation of maneuvers, and the use of data augmentation, but it does not fully capture the variability of the model’s performance. Future work should therefore incorporate cross-validation strategies (e.g., five-fold) to report average accuracy and standard deviation across multiple runs, providing a more statistically robust measure of model stability and generalization.
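One way such a five-fold evaluation could be organized is sketched below. The fold generator and aggregation are generic. The `majority_baseline` scorer is only a placeholder for training and scoring the Conv1D–LSTM model on each fold, and the data are synthetic, so the printed numbers say nothing about the study's model.

```python
import numpy as np

def kfold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for k shuffled, disjoint folds."""
    order = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(order, k)
    for i in range(k):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train_idx, folds[i]

def cross_validate(X, y, train_and_score, k=5):
    """Run train_and_score on every fold; return mean and std of the scores."""
    scores = [
        train_and_score(X[tr], y[tr], X[te], y[te])
        for tr, te in kfold_indices(len(X), k)
    ]
    return float(np.mean(scores)), float(np.std(scores))

def majority_baseline(X_tr, y_tr, X_te, y_te):
    """Placeholder scorer: predicts the most frequent training label."""
    majority = int(np.mean(y_tr) >= 0.5)
    return float(np.mean(y_te == majority))

# Synthetic stand-in data (not from the study).
X = np.zeros((50, 15, 12), dtype=np.float32)
y = np.array([0, 1] * 25)
mean_acc, std_acc = cross_validate(X, y, majority_baseline)
print(f"accuracy: {mean_acc:.3f} +/- {std_acc:.3f}")
```

Because every sample appears in exactly one test fold, the mean and standard deviation summarize performance over the whole dataset rather than over a single 20% subset.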

Furthermore, while this study focused primarily on the sensitivity analysis of the hybrid Conv1D–LSTM architecture, it did not include direct comparisons with classical baseline models such as Logistic Regression, Support Vector Machine, Random Forest, or a standard LSTM without convolutional layers. Although the Literature Review already discussed performance levels reported in related studies using these approaches, a systematic benchmarking under a common evaluation framework would further demonstrate the effectiveness of the proposed model. We identify this as an important direction for future work, to be addressed with larger and more diverse datasets in multiclass classification.

Future work could also explore fine-tuning the individual dropout rates per layer. While this study adopted standard dropout values based on prior experience, optimizing these rates could potentially yield even greater robustness, especially in deeper or more complex architectures.

The study also relied exclusively on smartphone sensors, such as the accelerometer, gyroscope, and GPS, for data collection. While these devices are widely accessible and low-cost, their performance is influenced by placement variability inside the vehicle and by inherent sensor precision limits, which may affect the quality of the collected data.

Finally, despite the application of data augmentation, the dataset remains relatively limited in scope. Larger datasets collected from multiple drivers, vehicles, and environments would enhance the generalizability of the model.

It should also be noted that a comprehensive set of experimental results, including training and validation accuracy/loss curves and error evolution across epochs, has been made publicly available through the online repository [23]. This ensures transparency and reproducibility of the findings, complementing the summarized results presented in the paper.

6. Conclusions

In conclusion, this research successfully demonstrates the potential of combining LSTM networks with Conv1D layers to detect aggressive and non-aggressive driving behaviors using mobile sensor data. Unlike other studies, this work focused on a pattern-based approach, treating each maneuver as an independent time-series segment, which allowed for more precise behavior classification.

Through a series of systematic experiments, different model configurations were tested, varying kernel sizes, LSTM units, and the use of Dropout layers. The results showed that a kernel size or sliding window size of 10 offered the best trade-off between capturing complete maneuvers and avoiding overlapping behaviors. Additionally, although all top models achieved 100% accuracy, the best-performing ones typically excluded Dropout, suggesting that in well-augmented and balanced datasets, Dropout may not be essential.

The study further demonstrated that integrating the segmentation process into the network using Conv1D generally yields more consistent outcomes than manually generating sliding windows, streamlining the pipeline and improving training efficiency. These insights provide practical guidance for designing real-time behavior recognition systems and contribute new findings to the literature by emphasizing the effect of training on isolated maneuvers, an area not thoroughly explored in previous work.

Moreover, the application of robust preprocessing techniques, including data normalization, sample labeling, and structured feature representation, proved essential for achieving reliable performance, ensuring that the model could accurately distinguish between different aggressive and non-aggressive driving behaviors.

Overall, this paper reinforces the viability of AI-driven behavioral analysis in the context of road safety, offering a scalable and effective methodology for driver monitoring systems.