Quality of Emerging Data in Transportation Systems: A Showcase of On-Street Parking

Abstract

1. Introduction

2. Literature Review

2.1. Parking Data: Typologies, Use Cases, Quality Issues

2.2. Quality Frameworks and Definitions in Information Systems, ITS and the Parking Sector

3. Materials and Methods

4. Results

4.1. Exemplary Quality Implications

4.1.1. The Correctness Issue

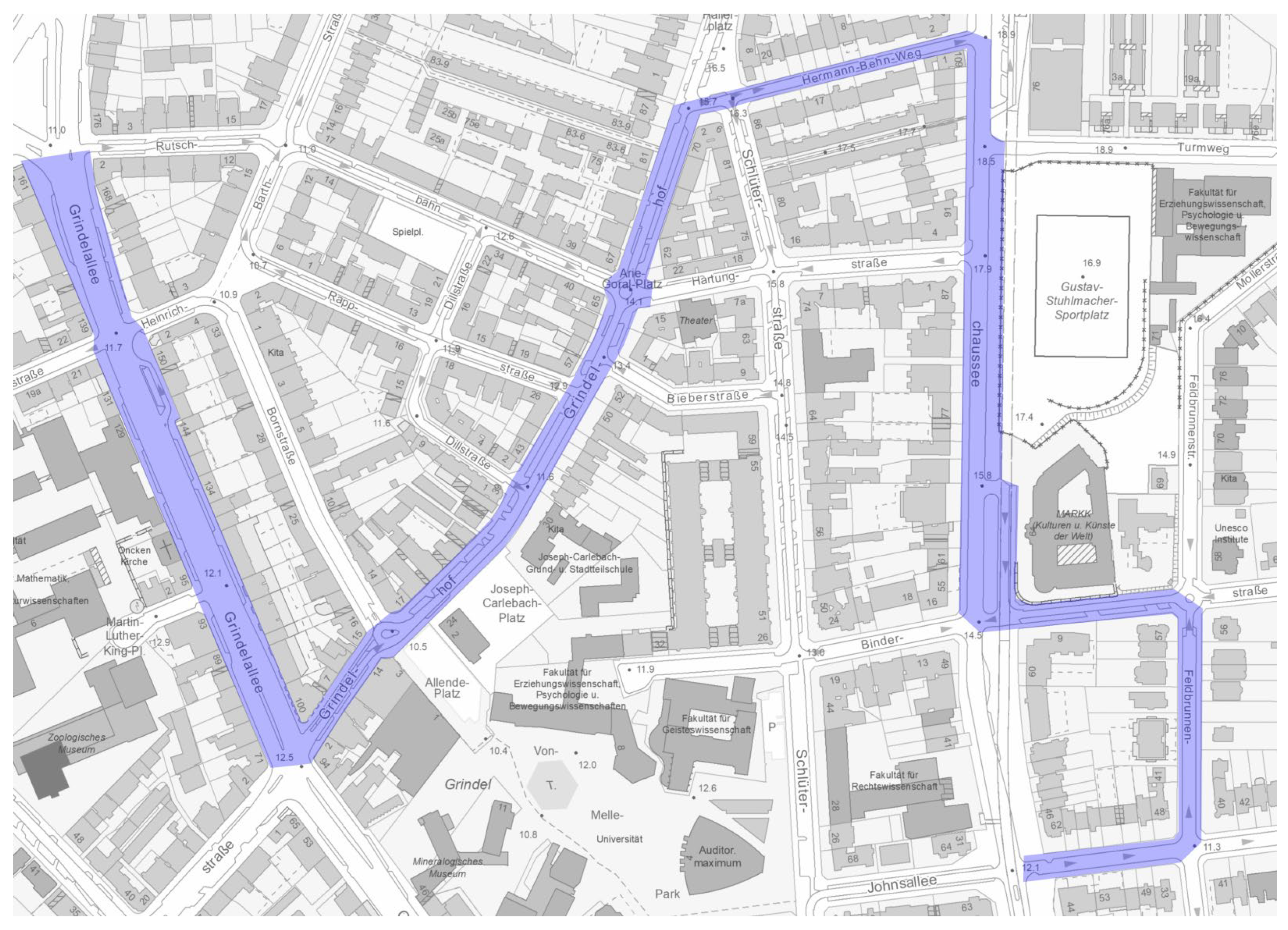

4.1.2. The Coverage Issue

4.2. Definition of Quality Criteria and Metrics

4.2.1. Approach

4.2.2. Coverage and Completeness

4.2.3. Correctness

4.2.4. Timeliness

4.2.5. Exploitability

4.2.6. Validation

5. Discussion

6. Conclusions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| API | Application Programming Interface |
| CECR | Content Element Coverage Rate |
| DPER | Data Portal Evaluation Rubric |
| DRCR | Data Record Completeness Rate |
| FCD | Floating Car Data |
| GIS | Geographic Information System |
| ITS | Intelligent Transportation System |
| LBV | Landesbetrieb Verkehr |
| MAE | Mean Absolute Error |
| RMSE | Root Mean Squared Error |
| SPS | Smart Parking System |
| TOCR | Temporal Occurrence Coverage Rate |

References

1. Ogás, M.G.D.; Fabregat, R.; Aciar, S. Survey of Smart Parking Systems. Appl. Sci. 2020, 10, 3872.
2. Zhu, L.; Yu, F.R.; Wang, Y.; Ning, B.; Tang, T. Big Data Analytics in Intelligent Transportation Systems: A Survey. IEEE Trans. Intell. Transp. Syst. 2019, 20, 383–398.
3. Bonsall, P.W. The Changing Face of Parking-Related Data Collection and Analysis: The Role of New Technologies. Transportation 1991, 18, 83–106.
4. Arnd, M.; Cré, I. Local Opportunities for Digital Parking; POLIS Parking Working Group: Brussels, Belgium, 2018; Available online: https://www.polisnetwork.eu/wp-content/uploads/2019/06/parking-paper-2018-web-2.pdf (accessed on 20 May 2025).
5. Lubrich, P. Analysis of Parking Traffic in Cologne, Germany, Based on an Extended Macroscopic Transport Model and Parking API Data. Case Stud. Transp. Policy 2023, 11, 100940.
6. Feiqe, B.; Margiotta, C.; Margiotta, R.; Turner, S. Traffic Data Quality Measurement; Federal Highway Administration: Washington, DC, USA, 2004.
7. Sadiq, S.; Indulska, M. Open Data: Quality over Quantity. Int. J. Inf. Manag. 2017, 37, 150–154.
8. Lubrich, P. Smart Parking Systems: A Data-Oriented Taxonomy and a Metadata Model. Transp. Res. Rec. J. Transp. Res. Board 2021, 2675, 1015–1029.
9. Dey, S.S.; Darst, M.; Dock, S.; Pochowski, A.; Sanchez, E.C. Asset-Lite Parking: Big Data Analytics in Development of Sustainable Smart Parking Solutions in Washington, D.C. Transp. Res. Rec. J. Transp. Res. Board 2016, 2559, 35–45.
10. Hagen, T.; Schäfer, P.; Scheel-Kopeinig, S.; Saki, S.; Nguyen, T.; Wenz, K.-P.; Bellina, L. Ganglinien als Grundlage für eine Nachhaltige Parkraumplanung; Frankfurt University of Applied Sciences: Frankfurt a.M., Germany, 2020.
11. Yang, S.; Qian, Z.S. Turning Meter Transactions Data into Occupancy and Payment Behavioral Information for On-Street Parking. Transp. Res. Part C Emerg. Technol. 2017, 78, 165–182.
12. Schäfer, P.K.; Lux, K.; Wolf, M.; Hagen, T.; Celebi, K. Entwicklung von Übertragbaren Erhebungsmethoden Unter Berücksichtigung Innovativer Technologien Zur Parkraumdatengenerierung Und Digitalisierung Des Parkraums—ParkenDigital; Frankfurt University of Applied Sciences: Frankfurt a.M., Germany, 2019.
13. Hagen, T.; Saki, S.; Scheel-Kopeinig, S. Start2park—Determining, Explaining and Predicting Cruising for Parking; Frankfurt University of Applied Sciences: Frankfurt a.M., Germany, 2021; Available online: https://www.frankfurt-university.de/fileadmin/standard/Hochschule/Fachbereich_3/Forschung_und_Transfer/Publikationen/Working_Paper/Fb3_WP_20_2021_-_Hagen_et_al_final_start2park.pdf (accessed on 20 May 2025).
14. Nawaz, S.; Efstratiou, C.; Mascolo, C. Parksense: A Smartphone Based Sensing System for on-Street Parking. In Proceedings of the Annual International Conference on Mobile Computing and Networking, MOBICOM, Miami, FL, USA, 30 September–4 October 2013.
15. Margreiter, M.; Orfanou, F.; Mayer, P. Determination of the Parking Place Availability Using Manual Data Collection Enriched by Crowdsourced In-Vehicle Data. Transp. Res. Procedia 2017, 25, 497–510.
16. Mathur, S.; Jin, T.; Kasturirangan, N.; Chandrashekharan, J.; Xue, W.; Gruteser, M.; Trappe, W. ParkNet: Drive-by Sensing of Road-Side Parking Statistics. In Proceedings of the MobiSys’10-Proceedings of the 8th International Conference on Mobile Systems, Applications, and Services, San Francisco, CA, USA, 15–18 June 2010.
17. Hellekes, J.; Kehlbacher, A.; Díaz, M.L.; Merkle, N.; Henry, C.; Kurz, F.; Heinrichs, M. Parking Space Inventory from above: Detection on Aerial Images and Estimation for Unobserved Regions. IET Intell. Transp. Syst. 2023, 17, 1009–1021.
18. Louen, C.; Föhrenbach, L.; Seitz, I.; ter Smitten, V.; Pielen, M. ACUP—Analyse Der Merkmale Des Städtischen Parkens; Institut für Stadtbauwesen und Stadtverkehr (ISB): Aachen, Germany, 2022; Available online: https://edocs.tib.eu/files/e01fb23/1844755576.pdf (accessed on 20 May 2025).
19. Piovesan, N.; Turi, L.; Toigo, E.; Martinez, B.; Rossi, M. Data Analytics for Smart Parking Applications. Sensors 2016, 16, 1575.
20. Gomari, S.; Domakuntla, R.; Knoth, C.; Antoniou, C. Development of a Data-Driven On-Street Parking Information System Using Enhanced Parking Features. IEEE Open J. Intell. Transp. Syst. 2023, 4, 30–47.
21. Neumann, T.; Dalaff, C.; Niebel, W. Was Ist Eigentlich Qualitaet?-Versuch Einer Begrifflichen Konsolidierung Und Systematik Im Verkehrsmanagement/What Is Quality?—Terms and Systematization in Context of Traffic Management. Straßenverkehrstechnik 2014, 9, 601–606.
22. Weigel, N. Datenqualitätsmanagement — Steigerung der Datenqualität mit Methode. In Daten-und Informationsqualität; Hildebrand, K., Gebauer, M., Hinrichs, H., Mielke, M., Eds.; Vieweg+Teubner: Wiesbaden, Germany, 2008; pp. 68–87.
23. Jayawardene, V.; Sadiq, S.; Indulska, M. The Curse of Dimensionality in Data Quality. In Proceedings of the 24th Australasian Conference on Information Systems, Melbourne, Australia, 4 December 2013.
24. Heinrich, B.; Klier, M. Datenqualitätsmetriken für ein ökonomisch orientiertes Qualitätsmanagement. In Daten-und Informationsqualität; Hildebrand, K., Gebauer, M., Hinrichs, H., Mielke, M., Eds.; Vieweg+Teubner: Wiesbaden, Germany, 2008; pp. 49–67.
25. Carvalho, A.M.; Soares, S.; Montenegro, J.; Conceição, L. Data Quality: Revisiting Dimensions towards New Framework Development. Procedia Comput. Sci. 2025, 253, 247–256.
26. Niculescu, M.; Jansen, M.; Barr, J.; Lubrich, P. Multimodal Travel Information Services (MMTIS)-Quality Package, Deliverable by EU EIP Sub-Activity 4.1; EU EIP Consortium: Utrecht, Amsterdam, 2019.
27. ISO/TR 21707:2008; International Organization for Standardization Intelligent Transport Systems—Integrated Transport Information, Management and Control—Data Quality in ITS Systems. ISO: Geneva, Switzerland, 2008.
28. ISO 19157-1:2023; International Organization for Standardization Geographic Information, Data Quality, Part 1: General Requirements. ISO: Geneva, Switzerland, 2023.
29. Venkatachalapathy, A.; Sharma, A.; Knickerbocker, S.; Hawkins, N. A Rubric-Driven Evaluation of Open Data Portals and Their Data in Transportation. J. Big Data Anal. Transp. 2020, 2, 181–198.
30. Jessberger, S.; Southgate, H. Innovative Individual Vehicle Record Traffic Data Quality Analysis Methods. Transp. Res. Rec. J. Transp. Res. Board 2023, 2677, 70–82.
31. Hoseinzadeh, N.; Liu, Y.; Han, L.D.; Brakewood, C.; Mohammadnazar, A. Quality of Location-Based Crowdsourced Speed Data on Surface Streets: A Case Study of Waze and Bluetooth Speed Data in Sevierville, TN. Comput. Environ. Urban Syst. 2020, 83, 101518.
32. SBD Automotive Germany GmbH. Global Parking Lot Data Accuracy Assessment: ParkMe vs. Parkopedia; SBD Automotive Germany GmbH: Düsseldorf, Germany, 2016.
33. Lenormand, M.; Picornell, M.; Cantú-Ros, O.G.; Tugores, A.; Louail, T.; Herranz, R.; Barthelemy, M.; Frías-Martínez, E.; Ramasco, J.J.; Moreno, Y. Cross-Checking Different Sources of Mobility Information. PLoS ONE 2014, 9, e105184.
34. Berrebi, S.J.; Joshi, S.; Watkins, K.E. Cross-Checking Automated Passenger Counts for Ridership Analysis. J. Public Trans. 2021, 23, 100008.
35. Nickerson, R.C.; Varshney, U.; Muntermann, J. A Method for Taxonomy Development and Its Application in Information Systems. Eur. J. Inf. Syst. 2013, 22, 336–359.
36. Forschungsgesellschaft für Straßen- und Verkehrswesen e.V. Empfehlungen Für Anlagen Des Ruhenden Verkehrs (Recommendations for Parking Facilities, EAR 05); FGSV: Köln, Germany, 2005.
37. Mylonas, C.; Stavara, M.; Mitsakis, E. Systematic Evaluation of Floating Car Data Quality. In Proceedings of the 16th ITS European Congress, Seville, Spain, 19–21 May 2025.
38. Heinrich, B.; Kaiser, M.; Klier, M. Metrics for Measuring Data Quality Foundations for an Economic Data Quality Management. In Proceedings of the ICSOFT 2007-2nd International Conference on Software and Data Technologies, Proceedings, Barcelona, Spain, 22–25 July 2007; Volume 2.
39. Lubrich, P.; Founta, A.; Daems, F.; T’Siobbel, S.; Bureš, P.; Mylonas, C.; Stavara, M.; Montenegro, J.; Soares, S.; Conceição, L. NAPCORE Quality Online Repository. Available online: https://napcore.eu/quality/ (accessed on 28 July 2025).
40. Albertoni, R.; Isaac, A.; Hyvonen, E. Introducing the Data Quality Vocabulary (DQV). Semant. Web 2020, 12, 81–97.
41. Implementing Data Governance at Transportation Agencies; National Academies Press: Washington, DC, USA, 2024; ISBN 978-0-309-73271-0.
42. Paterson, J.; Massoud, M.; Kadri, Z.; Aristizabal, J.; Adlam, L.; Pogmore, A.; Spear, J.; Woolley, A. Road Related Data and How to Use It; World Road Association (PIARC): La Défense, France, 2020; Available online: https://www.piarc.org/ressources/publications/12/abcb609-34608-2020SP02EN-Road-Related-Data-and-How-to-Use-it-PIARC-19012021.pdf (accessed on 20 May 2025).

| Data Source | Use Case | Reference |
|---|---|---|
| Transaction data (parking meters) | Describe local parking demand including its temporal dynamics | [9,10,11] |
| Parking violation records | Derive parking supply data (location and type of on-street parking spaces) | [12] |
| Smartphone-based data | Record parking events and parking search traffic | [13,14] |
| Vehicle-based data via ultrasonic sensors | Locate on-street parking spaces and their occupancy | [15,16] |
| GPS trip data | Determine parking demand and its temporal dynamics | [10] |
| Satellite imagery and land use data | Map public and private parking supply | [17] |
| Stationary, on-street sensors | Determine occupancy situation, with statistical methods to identify data anomalies and clusters of sensors with comparable demand patterns | [18,19] |
| Parking event messages from connected vehicles | Predict occupancy of on-street parking spaces | [20] |

| The Curse of Dimensionality in Data Quality [23] | Data Quality Metrics for Economically Oriented Quality Management [24] | FHWA Traffic Data Quality Measurement [6] | EU EIP Quality Package [26] | ISO/TR 21707:2008 (International Organization for Standardization, 2008) [27] | ISO 19157-1:2023 [28] | Data Portal Evaluation Rubric (DPER) [29] |
|---|---|---|---|---|---|---|
| Completeness; Availability and accessibility; Currency; Accuracy; Validity; Reliability and credibility; Consistency; Usability and interpretability | Completeness; Freedom from errors; Consistency; Up-to-datedness | Accuracy; Completeness; Validity; Timeliness; Coverage; Accessibility | Geographical coverage; Availability; Timeliness; Reporting period; Latency; Location accuracy; Classification correctness; Error rate; Event coverage; Report coverage | Service completeness; Service availability; Service grade; Veracity; Precision; Timeliness; Location measurement; Measurement source; Ownership | Completeness; Logical consistency; Positional accuracy; Temporal quality; Thematic quality | Content relevance; Ease of usage; Accessibility; Visualization; Statistical tools application; Developer tools; Number of data sets; Feedback tool; Number of data formats; Data description; Data characteristics; Data performance; Legal provisions |

| | Content Element | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Data Source | Information on Parking Regulations | Parking Fees | Amount of Parking Spaces | Geometry of Parking Spaces | Parking Space Layout | Marked Parking Space | Surface Type | Loading Zones | Spaces Reserved for Disabled | Spaces Reserved for Taxi | Spaces Reserved for Car Sharing |
| Manual survey | T 1 | N/A | N | N/A | T | N/A | N/A | N | N | N | N |
| Parking API | Indirectly from fee information | N | N | G (street segments) | N/A | N/A | N/A | N/A | N/A | N/A | N |
| Geoportal | T | N/A | Indirectly from geometry | G (individual spaces) | T | B | T | G | G | N/A | G |

| Values of $e_i$ | Definition |
|---|---|
| 1 | Content element can be used directly without conversions and interpretations. |
| 0.7 | Content element must be converted (e.g., from a string to a float value). |
| 0.3 | Content element must be interpreted (e.g., the “number of parking spaces” must be derived from a geometric value). |
| 0 | Content element is not available. |

| Feature | Definition |
|---|---|
| $f_{U1}$ | Metadata is listed on an online portal. |
| $f_{U2}$ | Metadata can be searched via an online portal. |
| $f_{U3}$ | Content data can be searched via an online portal. |
| $f_{U4}$ | Content data can be filtered via an online portal. |
| $f_{U5}$ | There is a preview of the content data via an online portal. |
| $f_{U6}$ | There are statistical functions for analyzing content data via an online portal. |

| Feature | Definition |
|---|---|
| $f_{O1}$ | Standard licenses are used (e.g., Creative Commons licenses) or the data can be used without any legal restrictions. |
| $f_{O2}$ | No contracts need to be concluded between the data provider and the data consumer. |

| Feature | Definition |
|---|---|
| $f_{T1}$ | A web-based access mechanism (e.g., a programming interface/API) is available. |
| $f_{T2}$ | A web-based access mechanism (e.g., a programming interface/API) is documented. |
| $f_{T3}$ | Standard data formats are used. |
| $f_{T4}$ | Data access is unlimited in terms of data volume and the number of data retrievals. |
| $f_{T5}$ | Transparent information is provided on calculation methods and original data sources. |
| $f_{T6}$ | Technical support is available. |

| | Per Content Element | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Data Source | Location ($w_1 = 1$) | Access Conditions ($w_2 = 1$) | Payment Methods ($w_3 = 1$) | Capacity ($w_4 = 1$) | Resident-Only Restrictions ($w_5 = 0.5$) | Loading Bays ($w_6 = 0.5$) | Disabled Parking ($w_7 = 0.5$) | Taxi Stands ($w_8 = 0.5$) | Car Sharing Stands ($w_9 = 0.5$) | |
| Manual survey | 0.5 | 0.5 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 4.08 |
| Parking API | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 1 | 5.08 |
| Geoportal | 1 | 0.3 | 0.5 | 0.3 | 0 | 0.5 | 0.5 | 0 | 0.5 | 2.64 |

| Data Source | | | |
|---|---|---|---|
| Parking API | 11.17 | 15.84 | 14.58 |
| Geoportal | 5.19 | 6.18 | 6.25 |

| | Location | Access Conditions | Payment Methods | Capacity | Resident-Only Restrictions | Loading Bays | Disabled Parking | Taxi Stands | Car Sharing Stands |
|---|---|---|---|---|---|---|---|---|---|
| (years) | 7 | 7 | 7 | 7 | 7 | 7 | 7 | 7 | 7 |
| (1/year) | 0 | 0.5 | 0.3 | 2 | 0.3 | 0.5 | 0.5 | 0.5 | 0.5 |
| | 1.000 | 0.030 | 0.122 | 0.001 | 0.122 | 0.030 | 0.030 | 0.030 | 0.030 |
| | 0.155 | | | | | | | | |
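The tabulated values are consistent with an exponential-decay timeliness metric of the kind proposed by Heinrich et al. [38], in which the timeliness of a content element equals exp(-decline rate × age). The Python sketch below reproduces the table under that assumption. The function and variable names are illustrative and not taken from the article, and reading the final value (0.155) as the unweighted mean over all nine content elements is likewise an inference from the numbers.

```python
import math

# Decline rates (1/year) per content element, copied from the table above.
DECLINE_RATES = {
    "Location": 0.0,
    "Access Conditions": 0.5,
    "Payment Methods": 0.3,
    "Capacity": 2.0,
    "Resident-Only Restrictions": 0.3,
    "Loading Bays": 0.5,
    "Disabled Parking": 0.5,
    "Taxi Stands": 0.5,
    "Car Sharing Stands": 0.5,
}

# Age of the data in years, identical for every content element in the table.
AGE_YEARS = 7.0


def timeliness(decline_rate: float, age_years: float) -> float:
    """Assumed metric: exponential decay of timeliness with data age."""
    return math.exp(-decline_rate * age_years)


# Timeliness per content element.
per_element = {
    name: timeliness(rate, AGE_YEARS) for name, rate in DECLINE_RATES.items()
}

# Assumed aggregation: unweighted mean over all content elements.
overall = sum(per_element.values()) / len(per_element)

for name, value in per_element.items():
    print(f"{name}: {value:.3f}")
print(f"Overall: {overall:.3f}")
```

Under these assumptions the sketch matches the tabulated per-element values and the overall value. The one exception is Capacity, where the exponential gives a value below 0.001 while the table shows 0.001.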

| Data Source | $f_{U1}$ | $f_{U2}$ | $f_{U3}$ | $f_{U4}$ | $f_{U5}$ | $f_{U6}$ | Sum $f_U$ | $f_{O1}$ | $f_{O2}$ | Sum $f_O$ | $f_{T1}$ | $f_{T2}$ | $f_{T3}$ | $f_{T4}$ | $f_{T5}$ | $f_{T6}$ | Sum $f_T$ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Transaction data (parking meters) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 2 | 0 | 0 | 0 | 1 | 0 | 0 | 1 |
| Transaction data (mobile phone applications) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 2 | 0 | 0 | 0 | 1 | 0 | 0 | 1 |
| Sensor data | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 2 |
| Parking API | 1 | 0 | 1 | 1 | 1 | 0 | 4 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 3 |
| Geoportal | 1 | 1 | 1 | 1 | 1 | 0 | 5 | 1 | 1 | 2 | 1 | 1 | 0 | 1 | 0 | 1 | 3 |
| Manual survey | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 2 | 0 | 0 | 0 | 1 | 1 | 0 | 2 |
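The exploitability table above appears to score each data source by counting the fulfilled binary features within each of the three feature groups (fU, fO, fT) defined earlier. The Python sketch below illustrates that reading for the Parking API row. The grouping of the 17 value columns into six, two, and six features plus one total each is an assumption inferred from the feature definitions, and all names in the code are illustrative.

```python
# Binary feature values for the "Parking API" row, grouped by feature family.
# Assumption: columns are ordered fU1..fU6, total, fO1..fO2, total, fT1..fT6, total.
PARKING_API_FEATURES = {
    "fU": [1, 0, 1, 1, 1, 0],  # portal-related features fU1..fU6
    "fO": [0, 0],              # licensing and contract features fO1..fO2
    "fT": [1, 1, 0, 0, 0, 1],  # technical access features fT1..fT6
}


def group_scores(features: dict[str, list[int]]) -> dict[str, int]:
    """Count the fulfilled (value 1) features in each group."""
    return {group: sum(values) for group, values in features.items()}


scores = group_scores(PARKING_API_FEATURES)
print(scores)
```

For the Parking API row this yields 4, 0, and 3, which agrees with the three totals listed for that source.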

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lubrich, P. Quality of Emerging Data in Transportation Systems: A Showcase of On-Street Parking. Future Transp. 2025, 5, 110. https://doi.org/10.3390/futuretransp5030110
