Supervised Machine Learning for PICU Outcome Prediction: A Comparative Analysis Using the TOPICC Study Dataset

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design and Dataset

2.2. Patient Population

2.3. Model Variables

2.4. Data Preprocessing and Feature Engineering

2.5. ML Model Building

2.6. ML Model’s Predictive Performance Evaluation and Feature Importance Analysis

2.7. Statistical Analysis

3. Results

3.1. Patient Characteristics

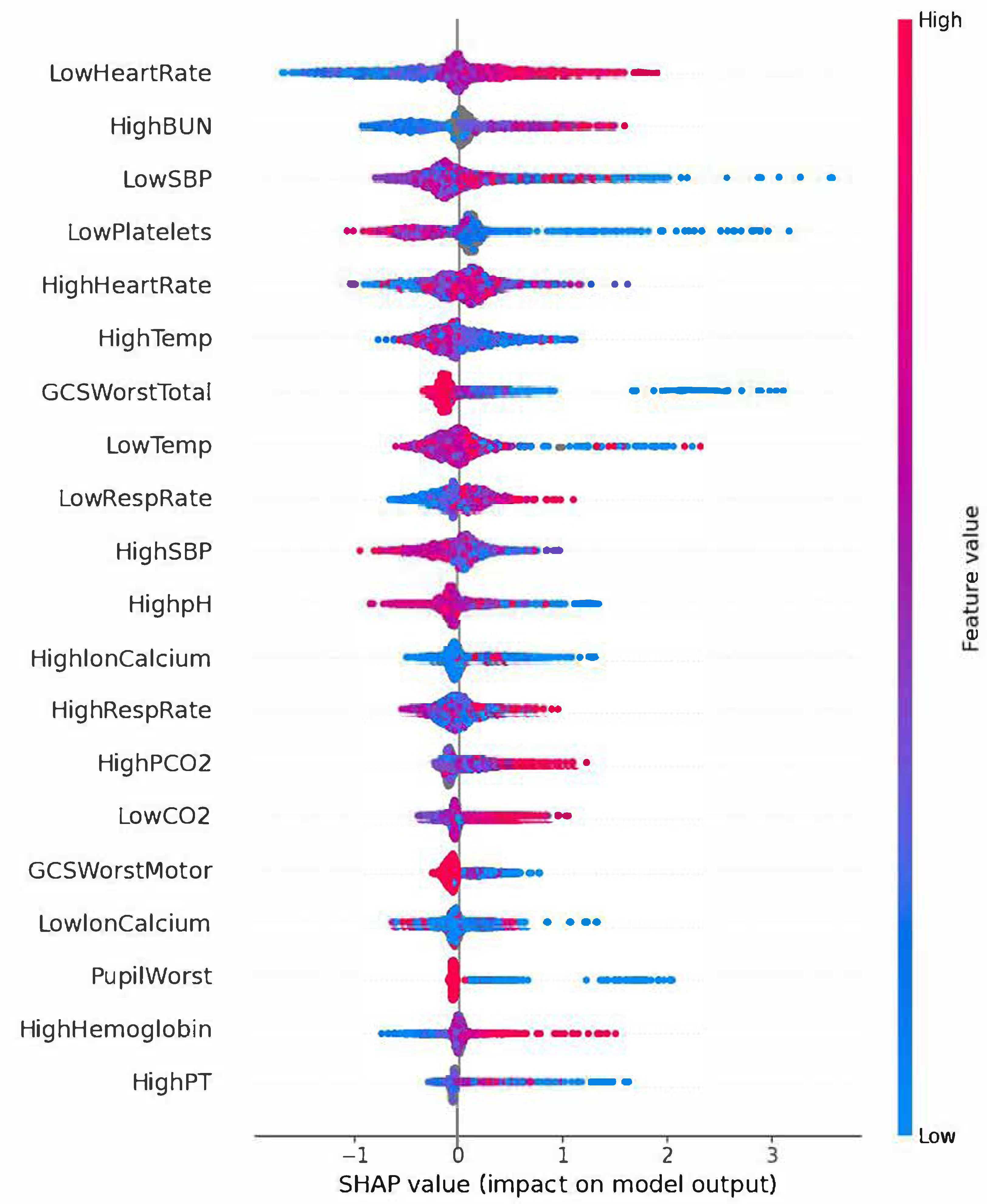

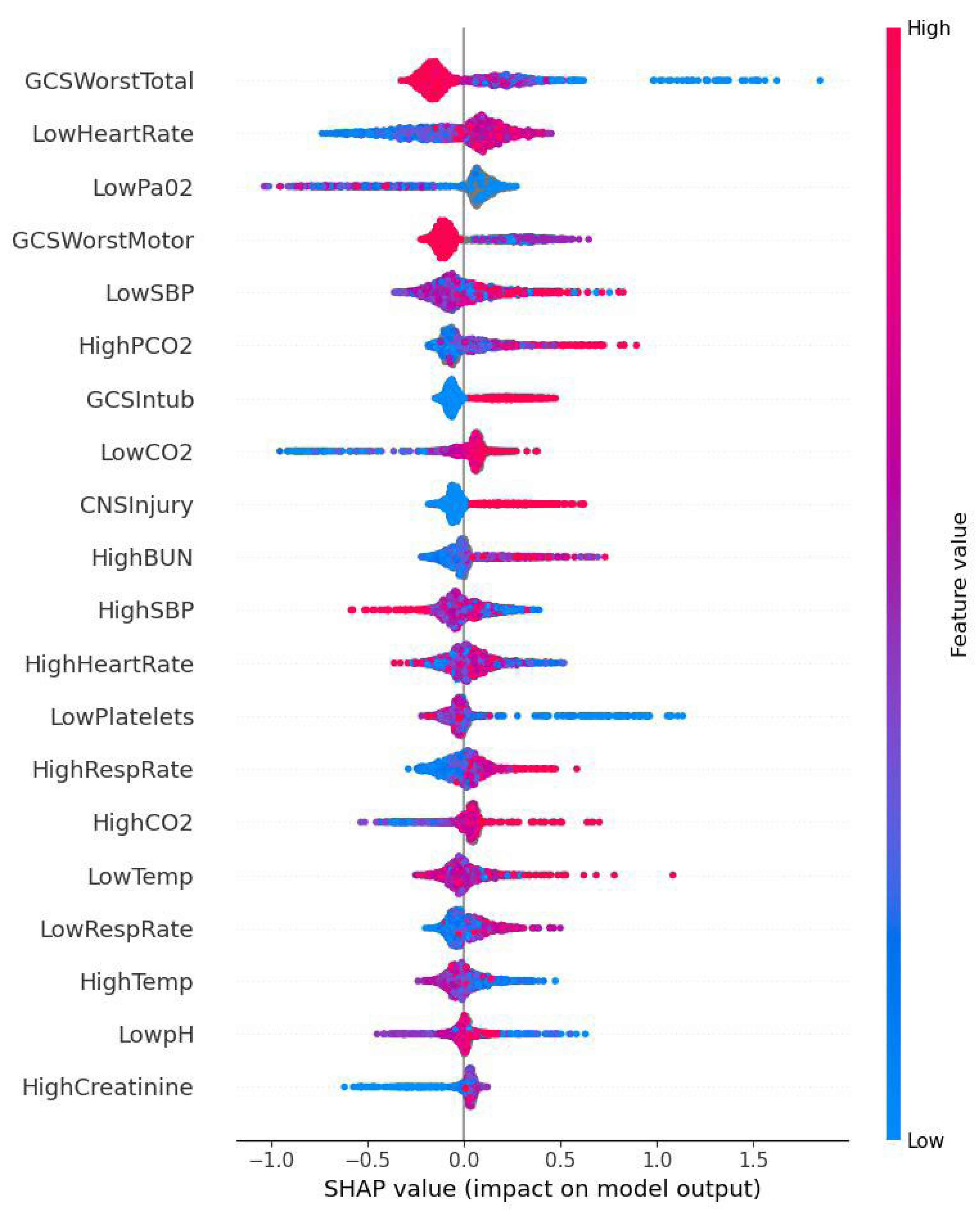

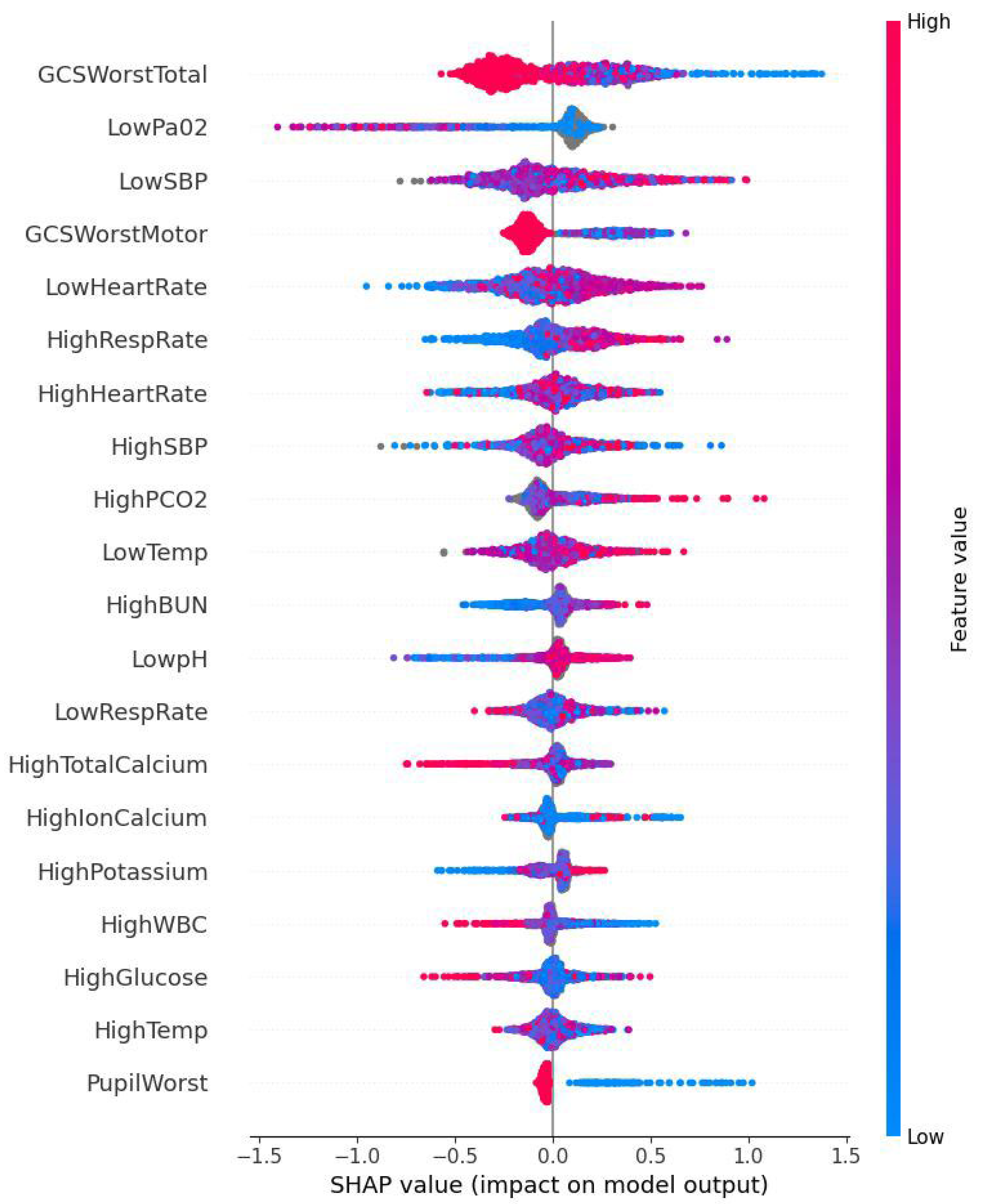

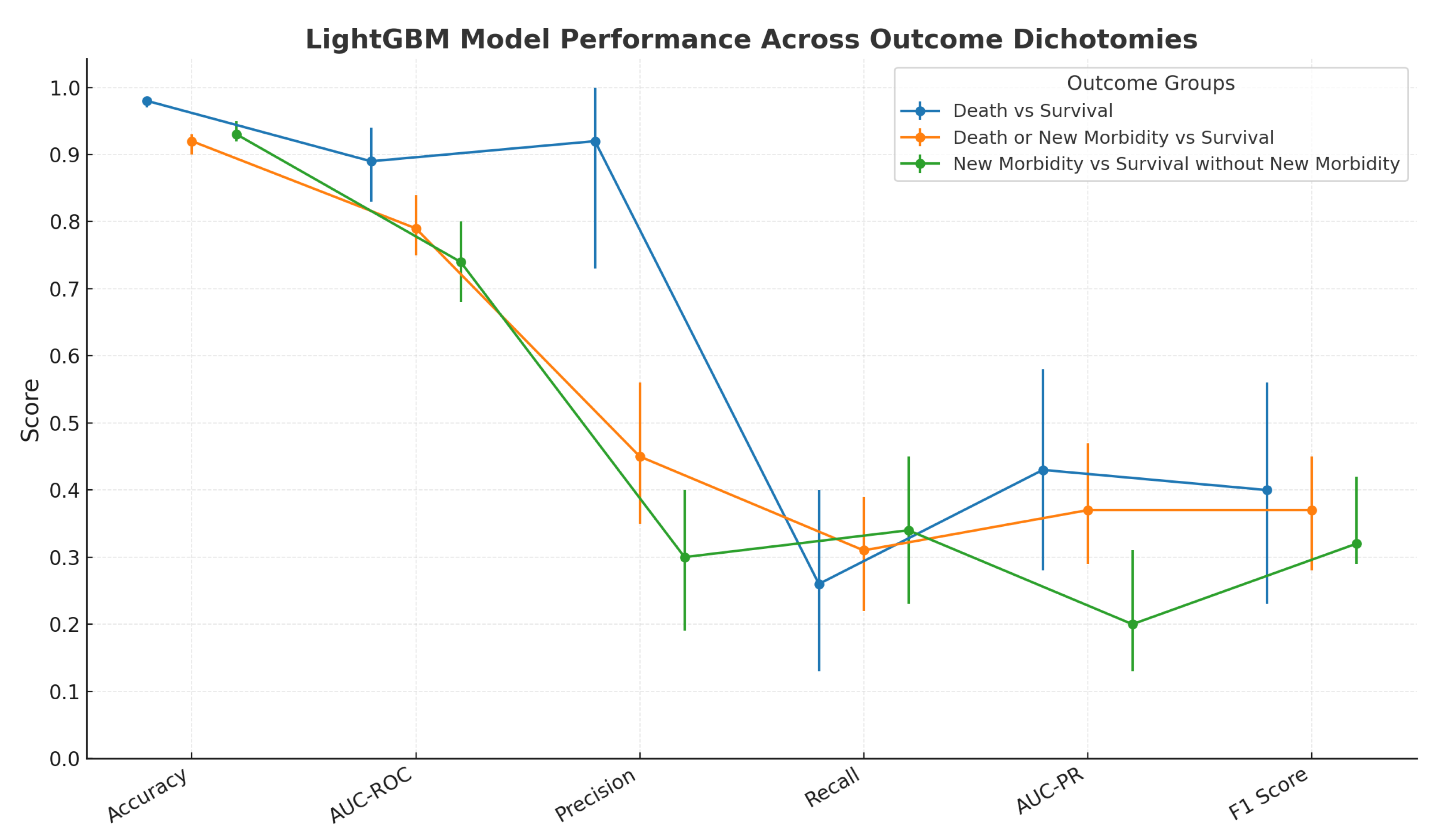

3.2. ML Model’s Predictive Performance and SHAP Analysis

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Logistic Regression vs. LightGBM

| Outcome | TOPICC Study AUC-ROC (± SD) | LightGBM model AUC-ROC (95% CI) |
|---|---|---|
| Survival vs. Death | 0.89 ± 0.020 | 0.89 (0.83, 0.94) |
| Death or New Morbidity vs. Survival without New Morbidity | 0.80 ± 0.018 | 0.79 (0.75, 0.84) |
| New Morbidity vs. Survival without New Morbidity | 0.74 ± 0.024 | 0.74 (0.68, 0.80) |
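
The table above reports AUC-ROC values with 95% confidence intervals for the LightGBM model. The text available here does not state how those intervals were obtained, so the following is only a minimal sketch of one common approach, a percentile bootstrap over the test set. The function names (`auc_roc`, `bootstrap_auc_ci`), the resampling settings, and the toy labels and scores are illustrative assumptions, not the authors' code or data.

```python
import random


def auc_roc(labels, scores):
    """AUC-ROC as the probability that a random positive case is scored
    above a random negative case (ties count as 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC-ROC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def bootstrap_auc_ci(labels, scores, n_boot=1000, alpha=0.05, seed=0):
    """Point estimate plus a percentile bootstrap confidence interval.

    Assumption: cases are resampled with replacement, and resamples that
    contain only one class are discarded and redrawn.
    """
    point = auc_roc(labels, scores)  # also validates that both classes exist
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in idx]
        if len(set(ys)) < 2:
            continue
        stats.append(auc_roc(ys, [scores[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return point, lower, upper


# Hypothetical toy data: 1 = event (e.g., death), 0 = no event.
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
toy_point, toy_lower, toy_upper = bootstrap_auc_ci(toy_labels, toy_scores, n_boot=200)
```

On the toy data the point estimate is 0.75 (three of the four positive/negative pairs are ranked correctly). The pairwise computation is quadratic in the number of cases, which is acceptable for a sketch; a rank-based formula or a library routine would be preferable on a full cohort.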

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ali, A.M.; Baloglu, O. Supervised Machine Learning for PICU Outcome Prediction: A Comparative Analysis Using the TOPICC Study Dataset. BioMedInformatics 2025, 5, 52. https://doi.org/10.3390/biomedinformatics5030052
