Identifying Themes in Social Media Discussions of Eating Disorders: A Quantitative Analysis of How Meaningful Guidance and Examples Improve LLM Classification

Abstract

1. Introduction

- Category Divergence Index (CDI) measures the degree of disagreement between zero-shot and few-shot approaches when classifying the same text content into thematic categories.

- Top Category Confidence is the estimated probability of the most prominent category for each post, indicating how certain the model is about its primary classification choice.

- Focus Score is an entropy-based measure that captures how concentrated or scattered the confidence scores are across all assigned categories. Values closer to zero indicate more concentrated confidence in fewer categories.

- Dominance Ratio compares the strength of the primary category against all secondary categories, calculated as the ratio between the top category’s confidence and the combined confidence of remaining categories.

2. Materials and Methods

2.1. Reddit Data Collection

- Markdown formatting symbols (e.g., asterisks, hashtags, and backticks);

- HTML entities and escape sequences;

- Special characters and encoding inconsistencies;

- Extraneous whitespace and line break patterns.

2.2. Methods

2.2.1. Overview of Methods

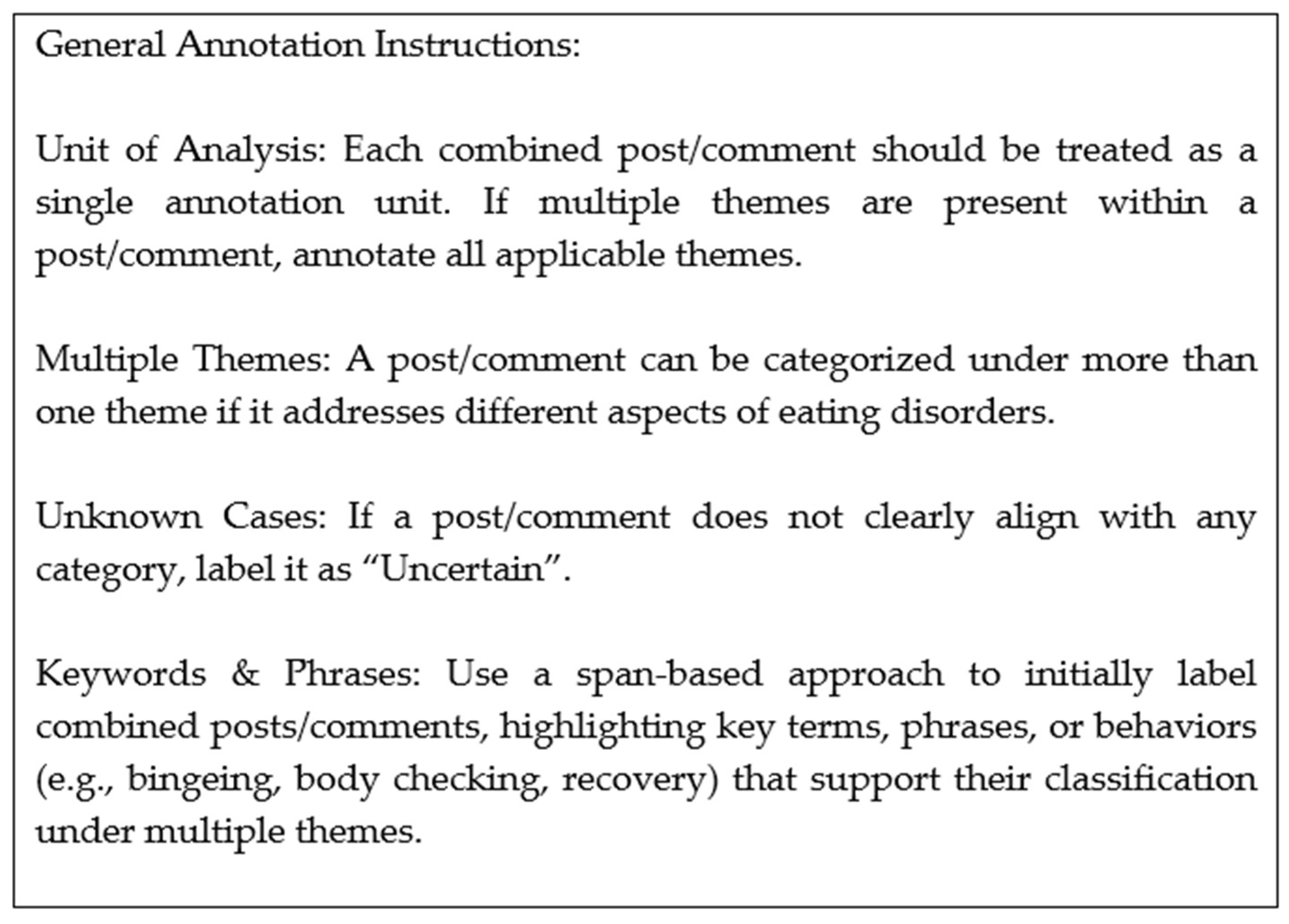

2.2.2. Developing Annotation Guidelines of Eating Disorder Themes

2.2.3. Zero-Shot and Few-Shot Prompt

- Role Definition: A brief statement identifying the model as “an expert in classifying posts about eating disorders according to specific themes”.

- Theme List: The 14 predefined theme categories presented as a numbered list.

- Target Post: The post to be classified.

- Output Instructions: Simple formatting requirements specifying score assignment (0.0–1.0).

- Role Definition: Similar expert framing as zero-shot.

- Annotation Guidelines: Adds full theme definitions with keywords and contextual information (~2000 words).

- Additional Disambiguation Notes: Detailed guidance on distinguishing between overlapping themes (~2000 words).

- Theme List: The same 14 categories with emphasis on exact meaning (as in zero-shot).

- Example Demonstration: Adds up to 100 randomly selected annotated examples showing post-classification pairs.

- Target Post: The post to be classified as zero-shot.

- Enhanced Instructions: Original instructions with additional explicit rules against inferring implicit themes and encouraging emphasis on textual evidence.

2.2.4. Analytical Methods

- Category Divergence Index (CDI)

- Top Category Concordance Analysis

- Confidence Distribution Metrics

2.2.5. Statistical Analysis

3. Results

3.1. Classification Concordence

3.2. Confidence and Distributional Properties Analysis

3.3. High-Confidence Error Detection

- High Confidence + High Divergence: 27 cases (43%).

- High Confidence + High Focus + Disagreement: 9 cases (14%).

- High Confidence + High Dominance: 17 cases (27%).

- High Confidence + Low Theme Count: 10 cases (16%).

4. Discussion

- Layered Communication: Online posts about eating disorders often contain multiple layers of meaning. For example, a statement like “I feel disgusting after gaining 5 lbs. I need to start restricting again” simultaneously addresses weight concerns, body image disturbance, negative emotions, and disordered eating behaviors. The multi-thematic approach allows for the coding of all relevant dimensions: [Weight, Eating Disorder Symptoms, Negative Emotions].

- Contextual Interdependence: Themes in eating disorder discourse are often contextually dependent. The system accounts for how the same phrase can indicate different themes based on context. For instance, “scared” might indicate Eating Disorder Symptoms when linked to food consumption (“too scared to eat”) but Negative Emotions when expressing general anxiety about recovery.

- Temporal Complexity: Eating disorder discussions frequently involve past experiences, present struggles, and future concerns within the same post. The multi-thematic system can capture this temporal complexity, such as when individuals discuss past treatment while planning recovery strategies: [Treatment, Recovery, Advice/Reflection/Planning].

- Hierarchical Theme Structure: Each primary theme contains specific keywords and behavioral indicators that guide coding decisions. This hierarchical structure allows for both broad thematic categorization and granular content analysis. For example, the “Eating Disorder Symptoms and Behaviors” theme encompasses

- Behavioral manifestations (binge eating, purging, restricting);

- Physical symptoms (dizziness, fatigue, nausea);

- Emotional precursors and consequences.

- Boundary Definition Guidelines: The additional notes provide explicit guidance for distinguishing between overlapping themes, addressing common ambiguities in coding. These guidelines establish decision rules for boundary cases, such as

- Distinguishing emotional distress directly linked to food consumption (Eating Disorder Symptoms and Behaviors) from general negative emotions.

- Differentiating between personal recovery experiences and advice-giving behaviors.

- Separate relationship dynamics from broader negative social reactions.

- Inclusive Coding Principles: The system adopts an inclusive rather than exclusive approach to thematic assignment. Coders are instructed to apply all relevant themes rather than forcing content into the single “best” category. This principle ensures that the full complexity of eating disorder discourse is captured, reflecting the multifaceted nature of these conditions.

4.1. Limitations and Future Research Directions

- Validation Requirements: While our confidence, focus, and dominance metrics provide compelling evidence for improved classification quality, they represent model self-assessments rather than objective accuracy measures. Future research should examine the relationship between these metrics and ground truth accuracy using expert-annotated datasets. The correlation between confidence scores and actual classification accuracy is particularly important for calibrating automated decision thresholds.

- Generalizability and Robustness: The current study examines a single model (Llama 3.1:8b) and domain (eating disorder content). Replication across different language models and mental health domains is essential to establish the generalizability of these findings. Additionally, robustness testing with adversarial examples and edge cases should evaluate whether the observed improvements persist under challenging conditions.The selection and quality of few-shot examples likely influence performance substantially, but systematic investigation of example curation strategies remains an important research direction. Understanding how example diversity, complexity, and domain specificity affect classification quality could inform best practices for few-shot prompt design.

- Ethical and Safety Considerations: The improved classificatory capabilities must be balanced against potential risks of increased automation in mental health contexts. While higher confidence and dominance scores suggest more reliable classification, they do not eliminate the possibility of systematic biases or misclassifications. Automated systems should maintain appropriate human oversight, particularly high-risk content categories.The concentration of improvements in moderate confidence ranges suggests appropriate calibration, but monitoring for over-confidence bias in production deployments remains essential. Regular auditing and bias testing should be implemented to ensure that enhanced classificatory confidence does not mask problematic systematic errors.

4.2. Broader Implications for AI in Mental Health

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Dane, A.; Bhatia, K. The social media diet: A scoping review to investigate the association between social media, body image and eating disorders amongst young people. PLOS Glob. Public Health 2023, 3, e0001091. [Google Scholar] [CrossRef] [PubMed]

- Zhou, S.; Zhao, Y.; Bian, J.; Haynos, A.F.; Zhang, R. Exploring eating disorder topics on Twitter: Machine learning approach. JMIR Med. Inform. 2020, 8, e18273. [Google Scholar] [CrossRef] [PubMed]

- Guo, Y.; Ovadje, A.; Al-Garadi, M.A.; Sarker, A. Evaluating large language models for health-related text classification tasks with public social media data. J. Am. Med. Inform. Assoc. 2024, 31, 2181–2189. [Google Scholar] [CrossRef] [PubMed]

- Erriu, M.; Cimino, S.; Cerniglia, L. The role of family relationships in eating disorders in adolescents: A narrative review. Behav. Sci. 2020, 10, 71. [Google Scholar] [CrossRef] [PubMed]

- Hewlings, S.J. Eating disorders and dietary supplements: A review of the science. Nutrients 2023, 15, 2076. [Google Scholar] [CrossRef] [PubMed]

- LaMarre, A.; Rice, C. Recovering uncertainty: Exploring eating disorder recovery in context. Cult. Med. Psychiatry 2021, 45, 706–726. [Google Scholar] [CrossRef] [PubMed]

- Lee, Y.; Son, K.; Kim, T.S.; Kim, J.; Chung, J.J.Y.; Adar, E.; Kim, J. One vs. many: Comprehending accurate information from multiple erroneous and inconsistent AI generations. In Proceedings of the Seventh Annual ACM Conference on Fairness, Accountability, and Transparency, Rio de Janeiro, Brazil, 3–6 June 2024; pp. 2518–2531. [Google Scholar]

- Lundblade, K.M. Sorting Things Out: Critically Assessing the Impact of Reddit’s Post Sorting Algorithms on Qualitative Analysis Methods. In Proceedings of the SIGDOC ’23: The 41st ACM International Conference on Design of Communication, Orlando, FL, USA, 9–11 October 2023. [Google Scholar]

| Component | Content | Purpose |

|---|---|---|

| Role Definition | “You are an expert in classifying posts about eating disorders according to specific themes.” | Establishes domain expertise and task context. |

| Theme Constraints | Numbered list of 14 predefined themes (Eating Disorder Symptoms and Behaviors, Weight, Body Image, etc.) | Constrains model vocabulary to valid themes. |

| Target Input | “Classify this post: [POST_TEXT]” | Presents classification target. |

| Output Format | Score assignment (0.0–1.0) with structured formatting requirements | Ensures parseable, consistent responses. |

| Component | Description | Content Example | Word Count |

|---|---|---|---|

| Role Definition | Similar expert framing as zero-shot with enhanced emphasis | “You are an expert in classifying posts about eating disorders according to specific themes. Your task is to ONLY identify themes that are EXPLICITLY mentioned in the text, no implied or inferred themes.” | ~30 words |

| Annotation Guidelines | Full theme definitions with keywords and contextual information | “ANNOTATION GUIDELINES: [Full guidelines content loaded from annotation_guidelines.txt]” | ~2000 words |

| Additional Disambiguation Notes | Detailed guidance on distinguishing between overlapping themes | “ADDITIONAL NOTES ON CONFLICTING THEMES: [Additional notes content loaded from annotation_additional_notes.txt]” | ~2000 words |

| Theme List | Same 14 themes with emphasis on exact matching | “IMPORTANT: Only use EXACTLY these theme labels: 1. Eating Disorder Symptoms and Behaviors 2. Weight 3. Body Image […continues for all 14 themes] | ~60 words |

| Annotated Examples | Up to 100 randomly selected annotated examples | “Here are [N] examples of how to classify posts with EXPLICIT themes only: Example 1: Post: ‘[EXAMPLE_POST_1]’ Classification: Theme_A: 0.6 Theme_B: 0.4 [..continues for up to 100 examples]” | ~20,000 words |

| Target Post | The post to be classified | “Now, please classify this new post based ONLY on what is explicitly stated: ‘[POST_TEXT]’ | Variable |

| Enhanced Instructions | Original instructions with additional anti-hallucination rules | “CRITICAL RULES: 1. Do NOT include themes that are merely implied 2. Only include themes with direct textual evidence 3. If uncertain about a theme, DO NOT include it Only assign scores to themes genuinely and explicitly present in the text.” | ~250 words |

| Aspect | Zero-Shot | Few-Shot |

|---|---|---|

| Total Context Usage | ~300–500 words | ~20,000–25,000 words |

| Guidance Provided | Basic role + theme list | Comprehensive guidelines + disambiguation + annotated examples |

| Anti-Hallucination Measures | Simple instructions | Enhanced rules with explicit textual evidence emphasis |

| Examples for Context | None | Up to 100 annotated demonstrations |

| Analysis | Value |

|---|---|

| Total Posts Analyzed | 4458 |

| Concordant Classifications | 1372 |

| Discordant Classifications | 3086 |

| Concordance Rate (%) | 30.78% |

| Discordance Rate (%) | 69.22% |

| Metric | Zero-Shot | Few-Shot | Difference | % Change |

|---|---|---|---|---|

| Mean | 0.266 | 0.424 | +0.158 | +59.4% |

| Median | 0.235 | 0.410 | +0.175 | +74.5% |

| Standard Deviation | 0.147 | 0.153 | +0.006 | +4.1% |

| Comparative Outcomes | ||||

| Few-Shot > Zero-Shot | _ | _ | 87.26% | _ |

| Few-Shot = Zero-Shot | _ | _ | 2.09% | _ |

| Few-Shot < Zero-Shot | _ | _ | 10.66% | _ |

| Confidence Category | Zero-Shot | Few-Shot | Change | Interpretation |

|---|---|---|---|---|

| Low Confidence (0.0–0.3) | 71.4% | 18.5% | −52.9% | Substantial reduction in uncertain predictions |

| Medium Confidence (0.3–0.6) | 24.8% | 69.0% | +44.2% | Major increase in moderate certainty |

| High Confidence (0.6–0.1) | 3.9% | 12.5% | +8.6% | Notable increase in high certainty predictions |

| Metric | Zero-Shot | Few-Shot | Difference | % Change |

|---|---|---|---|---|

| Mean | −1.715 | −1.053 | +0.662 | +38.6% |

| Median | −1.782 | −1.071 | +0.711 | +39.9% |

| Standard Deviation | 0.563 | 0.379 | −0.184 | −32.7% |

| Comparative Outcomes | ||||

| Few-Shot > Zero-Shot | _ | _ | 89.61% | _ |

| Few-Shot = Zero-Shot | _ | _ | 1.05% | _ |

| Few-Shot < Zero-Shot | _ | _ | 9.33% | _ |

| Focus Category | Range | Interpretation | Zero-Shot | Few-Shot | Change | Effect |

|---|---|---|---|---|---|---|

| Very Diffuse | −3.00 to −2.10 | High uncertainty across many themes | 29.4% | 0.8% | −28.6% | Dramatic Reduction |

| Moderately Diffuse | −2.10 to −1.50 | Moderate spread across themes | 43.3% | 11.6% | −31.7% | Substantial Reduction |

| Moderately Focused | −1.50 to −0.90 | Emerging clarity on primary themes | 19.3% | 57.5% | +38.2% | Major Increase |

| Highly Focused | −0.90 to 0.00 | Clear confidence in specific themes | 8.0% | 30.2% | +22.2% | Substantial Increase |

| Metric | Zero-Shot | Few-Shot | Difference | % Change |

|---|---|---|---|---|

| Mean | 0.46 | 0.81 | +0.35 | +76.1% |

| Median | 0.30 | 0.67 | +0.37 | +123.33% |

| Standard Deviation | 0.52 | 0.68 | +0.16 | +30.8% |

| Comparative Outcomes | ||||

| Few-Shot > Zero-Shot | _ | _ | 86.3% | _ |

| Few-Shot = Zero-Shot | _ | _ | 4.1% | _ |

| Few-Shot < Zero-Shot | _ | _ | 9.6% | _ |

| Dominance Category | Range | Threshold Meaning | Zero-Shot | Few-Shot | Change | Strategic Implication |

|---|---|---|---|---|---|---|

| Weak Primary Theme | 0.0–0.5 | Top < all others combined | 78.5% | 30.9% | −47.6% | Reduced ambiguous classifications |

| Emerging Primary | 0.5–1.0 | Top ~ half of others | 12.8% | 38.0% | +25.2% | Increased moderate clarity |

| Clear Primary | 1.0–1.5 | Top > all others combined | 5.0% | 20.0% | +15.0% | Enhanced decisive classification |

| Strong Dominance | 1.5+ | Top >> others | 3.7% | 11.1% | +7.4% | Improved high-confidence decisions |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Prasad, A.; Shalmani, S.A.; He, L.; Wang, Y.; McRoy, S. Identifying Themes in Social Media Discussions of Eating Disorders: A Quantitative Analysis of How Meaningful Guidance and Examples Improve LLM Classification. BioMedInformatics 2025, 5, 40. https://doi.org/10.3390/biomedinformatics5030040

Prasad A, Shalmani SA, He L, Wang Y, McRoy S. Identifying Themes in Social Media Discussions of Eating Disorders: A Quantitative Analysis of How Meaningful Guidance and Examples Improve LLM Classification. BioMedInformatics. 2025; 5(3):40. https://doi.org/10.3390/biomedinformatics5030040

Chicago/Turabian StylePrasad, Apoorv, Setayesh Abiazi Shalmani, Lu He, Yang Wang, and Susan McRoy. 2025. "Identifying Themes in Social Media Discussions of Eating Disorders: A Quantitative Analysis of How Meaningful Guidance and Examples Improve LLM Classification" BioMedInformatics 5, no. 3: 40. https://doi.org/10.3390/biomedinformatics5030040

APA StylePrasad, A., Shalmani, S. A., He, L., Wang, Y., & McRoy, S. (2025). Identifying Themes in Social Media Discussions of Eating Disorders: A Quantitative Analysis of How Meaningful Guidance and Examples Improve LLM Classification. BioMedInformatics, 5(3), 40. https://doi.org/10.3390/biomedinformatics5030040