Generative Artificial Intelligence in Healthcare: Applications, Implementation Challenges, and Future Directions

Abstract

1. Introduction

2. Methodology

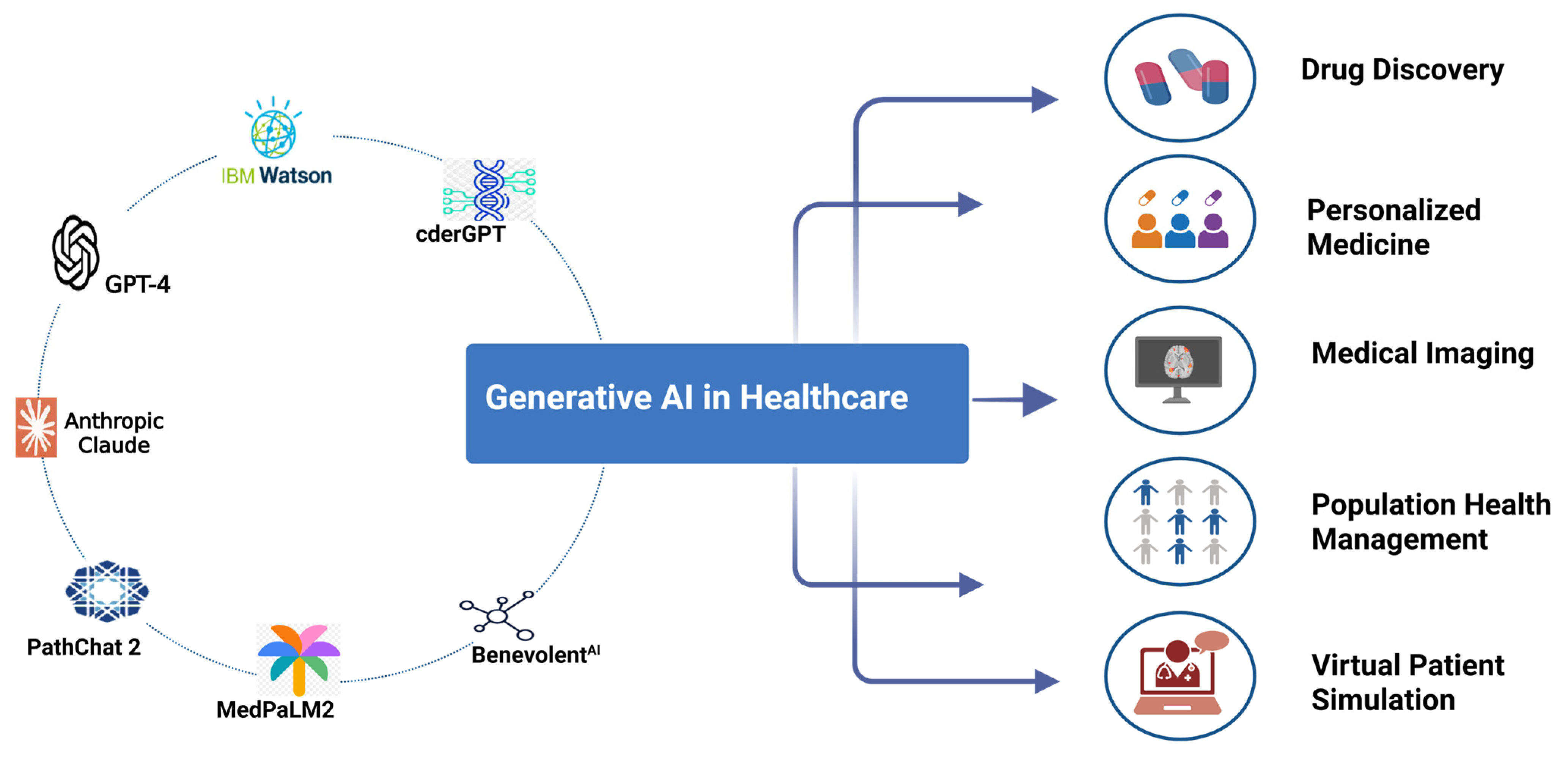

3. Different Generative AI Tools

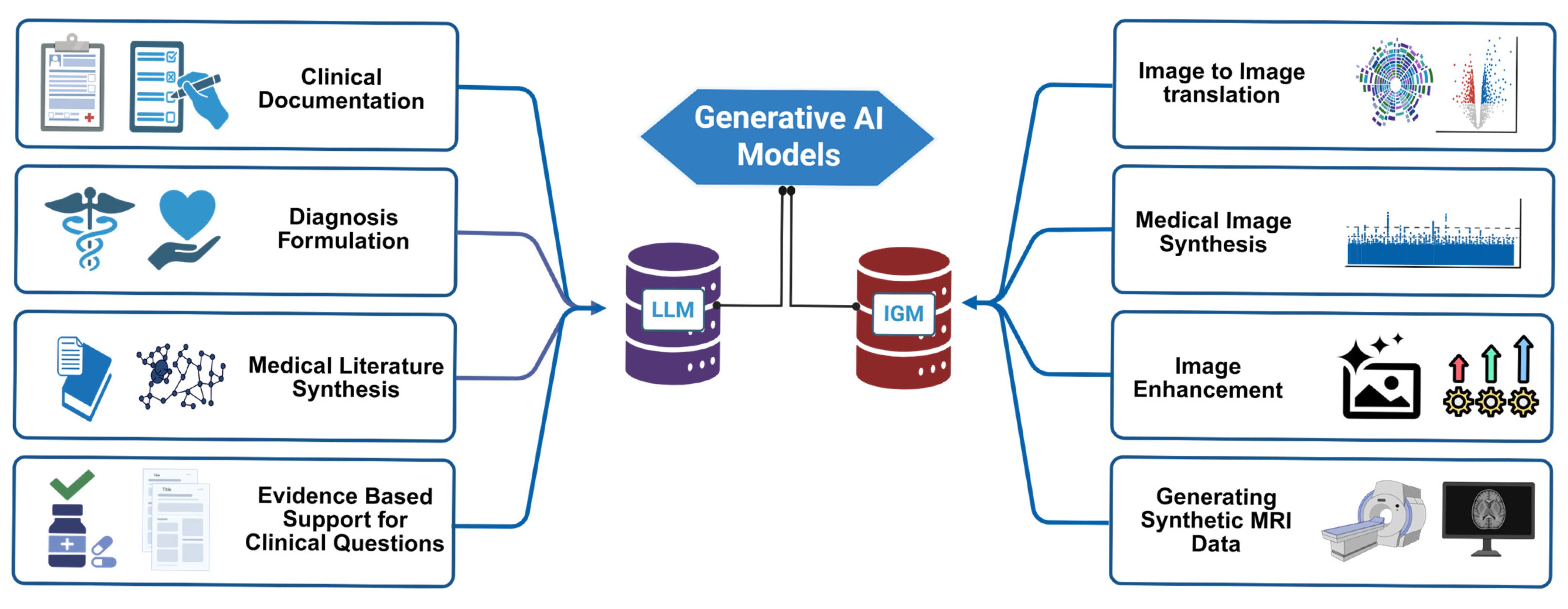

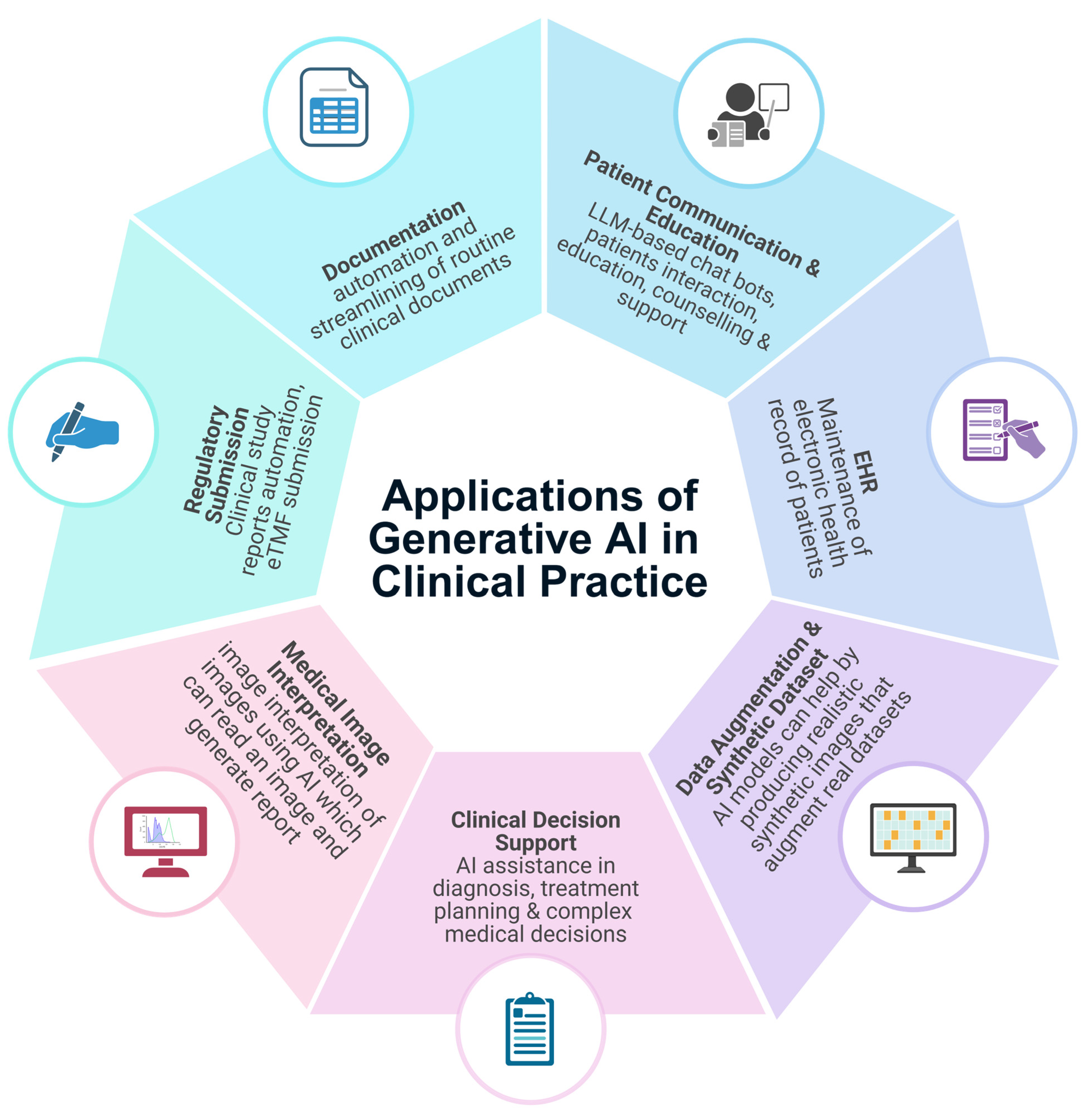

4. Applications in Clinical Practice and Research

4.1. Clinical Documentation and Administrative Workflow

4.2. Patient Communication and Education

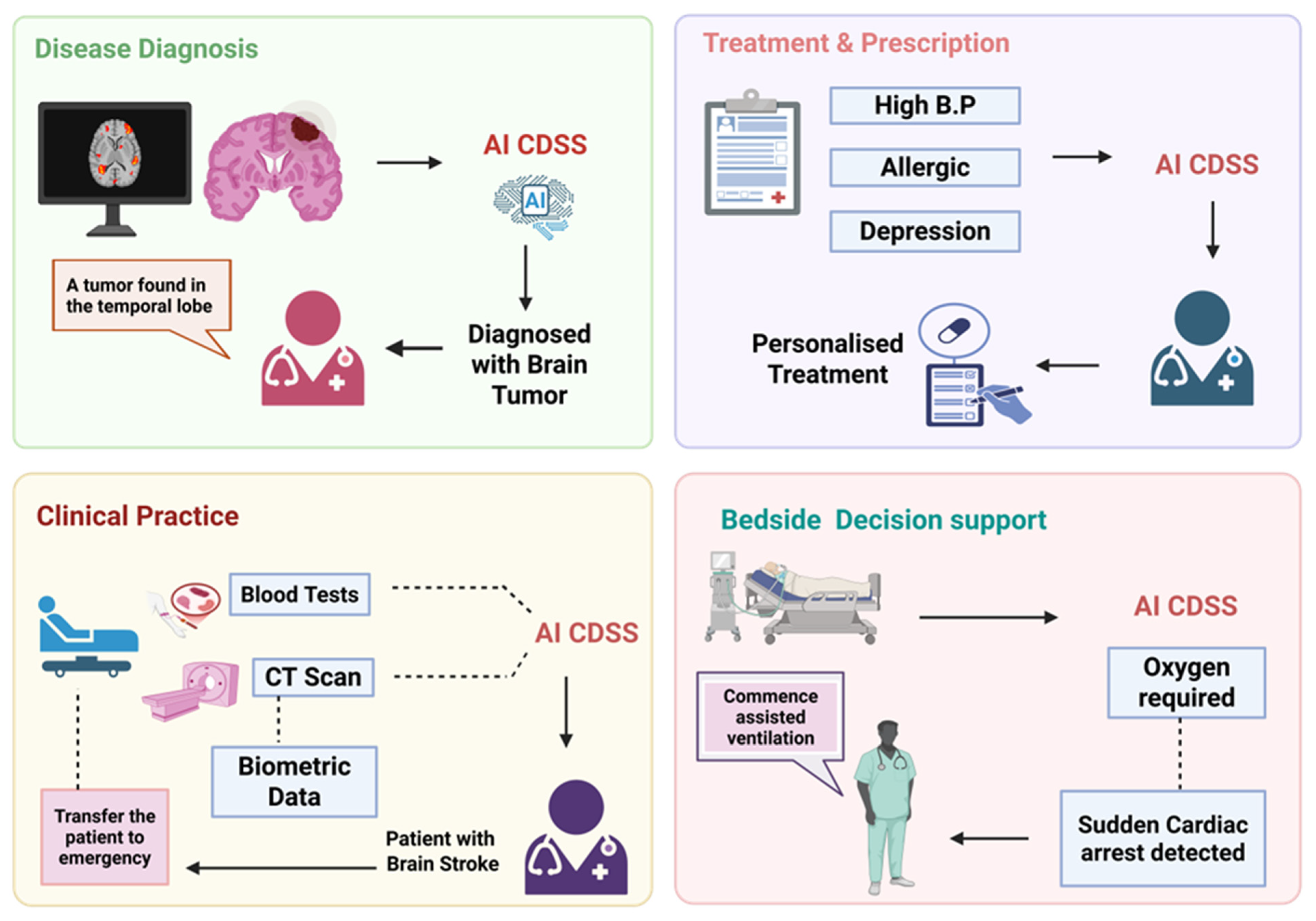

4.3. Clinical Decision Support and Diagnostics

4.4. Medical Imaging Interpretation

| Application | Description | Benefits | References |
|---|---|---|---|
| Synthetic Image Generation | Use of GANs and diffusion models to generate synthetic MRI, CT, X-ray, ultrasound, and pathology images. | Augments datasets; preserves patient privacy; improves model generalization. | [8,67] |
| Image Reconstruction | Generative models such as GANs are used to enhance image quality and reconstruct missing parts of images. | Improves image clarity; supports better diagnostics. | [69,70] |
| Data Augmentation | GAN-generated synthetic data and images are used to augment training datasets, balance class distributions, and improve model performance and robustness. | Increases diagnostic model accuracy; addresses domain shift; reduces overfitting. | [8,71,72] |
| Image Quality Enhancement | Denoising of low-dose CT/MRI images and super-resolution MRI using generative models. | Reduces scan time and radiation exposure; improves image clarity. | [73] |
| Modality-to-Modality Translation | Generating synthetic CT images from MRIs, or virtual histochemical staining in pathology, using GANs. | Reduces the need for multiple scans; enhances surgical and radiation planning. | [68,74] |
| Disease Detection | AI models trained on generatively augmented data for more accurate detection of diseases in scans. | Earlier detection; better patient outcomes. | [75,76] |
| Image Segmentation | Segmentation models using generative techniques for precise delineation of structures in medical images. | Enhanced surgical planning; faster analysis. | [77,78] |
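
The class-balancing use of synthetic images listed in the Data Augmentation row can be made concrete with a small calculation. The sketch below is illustrative only and is not taken from any cited study: the function name `synthetic_samples_needed` and the label counts are hypothetical, and it assumes that "balancing" means raising every class to the size of the largest class.

```python
from collections import Counter


def synthetic_samples_needed(labels):
    """Return, per class, how many synthetic samples would bring it up to
    the size of the largest class (an assumed definition of 'balanced')."""
    counts = Counter(labels)
    if not counts:
        return {}
    target = max(counts.values())
    return {cls: target - n for cls, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical labels for an imbalanced imaging dataset.
    labels = ["normal"] * 900 + ["lesion"] * 100
    print(synthetic_samples_needed(labels))
```

In practice the target size is a design choice; full balancing with generated images is only one option, and the quality of the synthetic samples matters as much as their number.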

4.5. Clinical Decision Support Systems (CDSSs)

4.6. Emergency and Triage

4.7. Medical Imaging and Pathology

4.7.1. Data Augmentation and Synthetic Datasets

4.7.2. Image Quality Enhancement

4.7.3. Image-to-Image Translation

4.8. Drug Discovery and Biomedical Research

4.8.1. De Novo Molecule Generation

4.8.2. Drug Optimization and ADMET

4.8.3. Clinical Trial Design and Data Augmentation

4.8.4. Genomics and Precision Medicine

4.8.5. Biomedical Literature and Knowledge Synthesis

4.9. Patient Monitoring and Telehealth Integration

4.10. Medical Education and Training

4.10.1. Education for Trainees

4.10.2. Simulation and Case-Based Learning

4.10.3. Continuing Education and Knowledge Update

4.10.4. Assessment and Feedback

4.11. Explainability in Machine Learning in Contrast to Traditional Statistical Methods

4.12. Comparative Insights of Generative AI with Traditional Methods or Human Experts

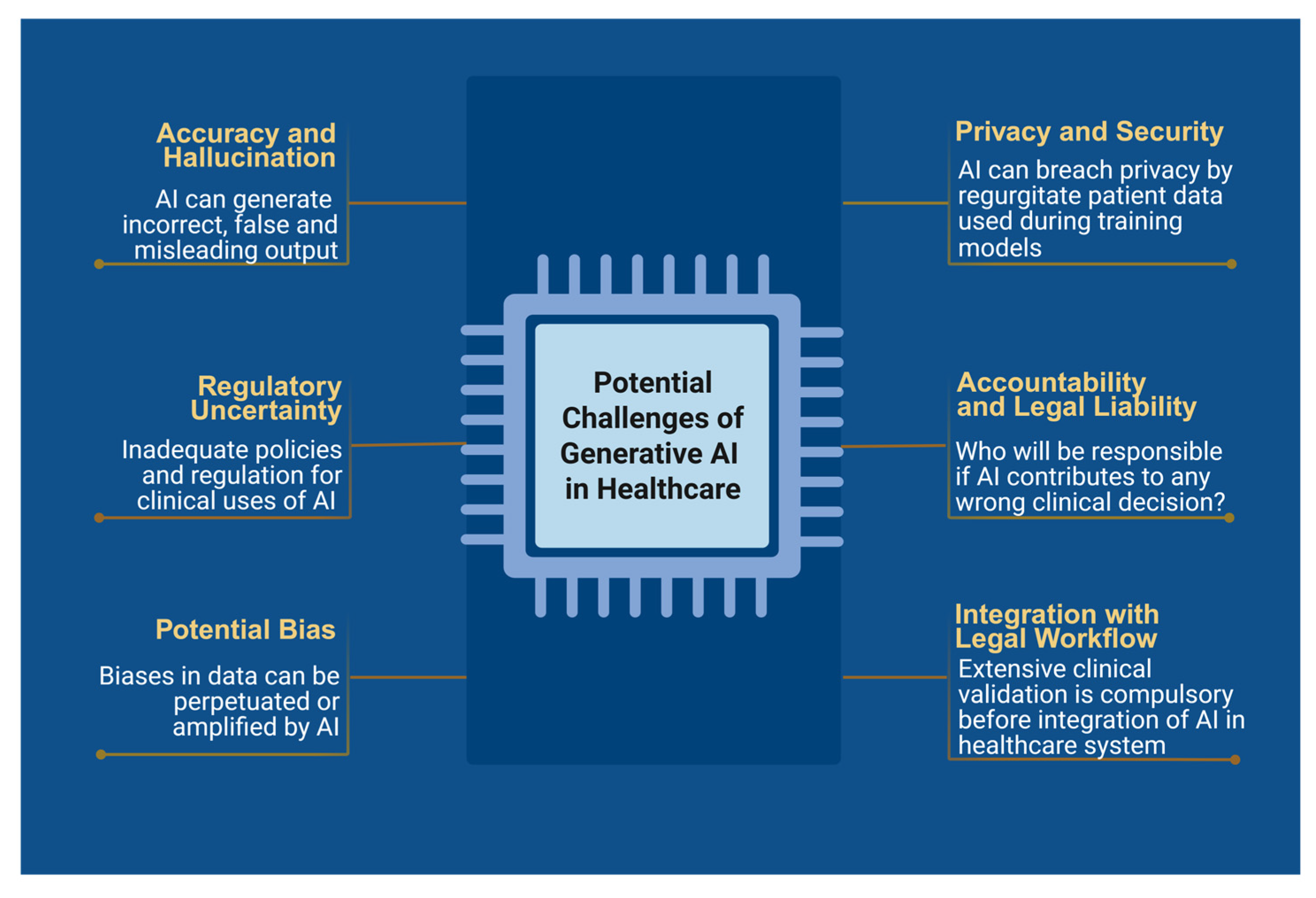

5. Challenges and Ethical Considerations

5.1. Accuracy and Hallucinations

5.2. Bias and Health Equity

5.3. Privacy and Security

5.4. Accountability and Legal Liability

5.5. Implementation Challenges

5.6. Sustainable Development Goals (SDGs): Climate Action

5.7. Cost-Effectiveness and Scalability Considerations

6. Future Directions and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhang, K.; Meng, X.; Yan, X.; Ji, J.; Liu, J.; Xu, H.; Zhang, H.; Liu, D.; Wang, J.; Wang, X.; et al. Revolutionizing Health Care: The Transformative Impact of Large Language Models in Medicine. J. Med. Internet Res. 2025, 27, e59069.

- Hacking, S. ChatGPT and Medicine: Together We Embrace the AI Renaissance. JMIR Bioinform. Biotechnol. 2024, 5, e52700.

- Kung, T.H.; Cheatham, M.; Medenilla, A.; Sillos, C.; De Leon, L.; Elepaño, C.; Madriaga, M.; Aggabao, R.; Diaz-Candido, G.; Maningo, J.; et al. Performance of ChatGPT on USMLE: Potential for AI-Assisted Medical Education Using Large Language Models. PLoS Digit. Health 2023, 2, e0000198.

- Allaway, R.J. Science & Tech Spotlight: Generative AI in Health Care. 2024. Available online: https://www.gao.gov/products/gao-24-107634 (accessed on 30 April 2025).

- Singhal, K.; Tu, T.; Gottweis, J.; Sayres, R.; Wulczyn, E.; Amin, M.; Hou, L.; Clark, K.; Pfohl, S.R.; Cole-Lewis, H.; et al. Toward Expert-Level Medical Question Answering with Large Language Models. Nat. Med. 2025, 31, 943–950.

- Torous, J.; Blease, C. Generative Artificial Intelligence in Mental Health Care: Potential Benefits and Current Challenges. World Psychiatry 2024, 23, 1–2.

- Ng, C.K.C. Generative Adversarial Network (Generative Artificial Intelligence) in Pediatric Radiology: A Systematic Review. Children 2023, 10, 1372.

- Brugnara, G.; Jayachandran Preetha, C.; Deike, K.; Haase, R.; Pinetz, T.; Foltyn-Dumitru, M.; Mahmutoglu, M.A.; Wildemann, B.; Diem, R.; Wick, W.; et al. Addressing the Generalizability of AI in Radiology Using a Novel Data Augmentation Framework with Synthetic Patient Image Data: Proof-of-Concept and External Validation for Classification Tasks in Multiple Sclerosis. Radiol. Artif. Intell. 2024, 6, e230514.

- Liu, T.-L.; Hetherington, T.C.; Stephens, C.; McWilliams, A.; Dharod, A.; Carroll, T.; Cleveland, J.A. AI-Powered Clinical Documentation and Clinicians’ Electronic Health Record Experience: A Nonrandomized Clinical Trial. JAMA Netw. Open 2024, 7, e2432460.

- Ali, S.R.; Dobbs, T.D.; Hutchings, H.A.; Whitaker, I.S. Using ChatGPT to Write Patient Clinic Letters. Lancet Digit. Health 2023, 5, e179–e181.

- Zaretsky, J.; Kim, J.M.; Baskharoun, S.; Zhao, Y.; Austrian, J.; Aphinyanaphongs, Y.; Gupta, R.; Blecker, S.B.; Feldman, J. Generative Artificial Intelligence to Transform Inpatient Discharge Summaries to Patient-Friendly Language and Format. JAMA Netw. Open 2024, 7, e240357.

- Garcia, P.; Ma, S.P.; Shah, S.; Smith, M.; Jeong, Y.; Devon-Sand, A.; Tai-Seale, M.; Takazawa, K.; Clutter, D.; Vogt, K.; et al. Artificial Intelligence–Generated Draft Replies to Patient Inbox Messages. JAMA Netw. Open 2024, 7, e243201.

- Hartman, V.; Zhang, X.; Poddar, R.; McCarty, M.; Fortenko, A.; Sholle, E.; Sharma, R.; Campion, T.; Steel, P.A.D. Developing and Evaluating Large Language Model–Generated Emergency Medicine Handoff Notes. JAMA Netw. Open 2024, 7, e2448723.

- Duggan, M.J.; Gervase, J.; Schoenbaum, A.; Hanson, W.; Howell, J.T.; Sheinberg, M.; Johnson, K.B. Clinician Experiences With Ambient Scribe Technology to Assist With Documentation Burden and Efficiency. JAMA Netw. Open 2025, 8, e2460637.

- Ayers, J.W.; Poliak, A.; Dredze, M.; Leas, E.C.; Zhu, Z.; Kelley, J.B.; Faix, D.J.; Goodman, A.M.; Longhurst, C.A.; Hogarth, M.; et al. Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum. JAMA Intern. Med. 2023, 183, 589–596.

- Anderson, B.J.; Zia Ul Haq, M.; Zhu, Y.; Hornback, A.; Cowan, A.D.; Mott, M.; Gallaher, B.; Harzand, A. Development and Evaluation of a Model to Manage Patient Portal Messages. NEJM AI 2025, 2, AIoa2400354.

- Ren, Y.; Loftus, T.J.; Datta, S.; Ruppert, M.M.; Guan, Z.; Miao, S.; Shickel, B.; Feng, Z.; Giordano, C.; Upchurch, G.R.; et al. Performance of a Machine Learning Algorithm Using Electronic Health Record Data to Predict Postoperative Complications and Report on a Mobile Platform. JAMA Netw. Open 2022, 5, e2211973.

- Small, W.R.; Wiesenfeld, B.; Brandfield-Harvey, B.; Jonassen, Z.; Mandal, S.; Stevens, E.R.; Major, V.J.; Lostraglio, E.; Szerencsy, A.; Jones, S.; et al. Large Language Model–Based Responses to Patients’ In-Basket Messages. JAMA Netw. Open 2024, 7, e2422399.

- Yalamanchili, A.; Sengupta, B.; Song, J.; Lim, S.; Thomas, T.O.; Mittal, B.B.; Abazeed, M.E.; Teo, P.T. Quality of Large Language Model Responses to Radiation Oncology Patient Care Questions. JAMA Netw. Open 2024, 7, e244630.

- Liu, T.-L.; Hetherington, T.C.; Dharod, A.; Carroll, T.; Bundy, R.; Nguyen, H.; Bundy, H.E.; Isreal, M.; McWilliams, A.; Cleveland, J.A. Does AI-Powered Clinical Documentation Enhance Clinician Efficiency? A Longitudinal Study. NEJM AI 2024, 1, AIoa2400659.

- Zakka, C.; Shad, R.; Chaurasia, A.; Dalal, A.R.; Kim, J.L.; Moor, M.; Fong, R.; Phillips, C.; Alexander, K.; Ashley, E.; et al. Almanac—Retrieval-Augmented Language Models for Clinical Medicine. NEJM AI 2024, 1, AIoa2300068.

- Nayak, A.; Vakili, S.; Nayak, K.; Nikolov, M.; Chiu, M.; Sosseinheimer, P.; Talamantes, S.; Testa, S.; Palanisamy, S.; Giri, V.; et al. Use of Voice-Based Conversational Artificial Intelligence for Basal Insulin Prescription Management Among Patients With Type 2 Diabetes: A Randomized Clinical Trial. JAMA Netw. Open 2023, 6, e2340232.

- Bernstein, I.A.; Zhang, Y.; Govil, D.; Majid, I.; Chang, R.T.; Sun, Y.; Shue, A.; Chou, J.C.; Schehlein, E.; Christopher, K.L.; et al. Comparison of Ophthalmologist and Large Language Model Chatbot Responses to Online Patient Eye Care Questions. JAMA Netw. Open 2023, 6, e2330320.

- Chen, D.; Huang, R.S.; Jomy, J.; Wong, P.; Yan, M.; Croke, J.; Tong, D.; Hope, A.; Eng, L.; Raman, S. Performance of Multimodal Artificial Intelligence Chatbots Evaluated on Clinical Oncology Cases. JAMA Netw. Open 2024, 7, e2437711.

- Dolan, E. Generative AI Chatbots like ChatGPT Can Act as an Emotional Sanctuary for Mental Health. 2025. Available online: https://www.psypost.org/generative-ai-chatbots-like-chatgpt-can-act-as-an-emotional-sanctuary-for-mental-health/ (accessed on 30 April 2025).

- Patel, T.A.; Heintz, J.; Chen, J.; LaPergola, M.; Bilker, W.B.; Patel, M.S.; Arya, L.A.; Patel, M.I.; Bekelman, J.E.; Manz, C.R.; et al. Spending Analysis of Machine Learning–Based Communication Nudges in Oncology. NEJM AI 2024, 1, AIoa2300228.

- Krohmer, K.; Naumann, E.; Tuschen-Caffier, B.; Svaldi, J. Mirror Exposure in Binge-Eating Disorder: Changes in Eating Pathology and Attentional Biases. J. Consult. Clin. Psychol. 2022, 90, 613–625.

- Nong, P.; Platt, J. Patients’ Trust in Health Systems to Use Artificial Intelligence. JAMA Netw. Open 2025, 8, e2460628.

- Shea, Y.-F.; Lee, C.M.Y.; Ip, W.C.T.; Luk, D.W.A.; Wong, S.S.W. Use of GPT-4 to Analyze Medical Records of Patients With Extensive Investigations and Delayed Diagnosis. JAMA Netw. Open 2023, 6, e2325000.

- Lin, C.; Liu, W.-T.; Chang, C.-H.; Lee, C.-C.; Hsing, S.-C.; Fang, W.-H.; Tsai, D.-J.; Chen, K.-C.; Lee, C.-H.; Cheng, C.-C.; et al. Artificial Intelligence–Powered Rapid Identification of ST-Elevation Myocardial Infarction via Electrocardiogram (ARISE)—A Pragmatic Randomized Controlled Trial. NEJM AI 2024, 1, AIoa2400190.

- Goh, E.; Gallo, R.; Hom, J.; Strong, E.; Weng, Y.; Kerman, H.; Cool, J.A.; Kanjee, Z.; Parsons, A.S.; Ahuja, N.; et al. Large Language Model Influence on Diagnostic Reasoning: A Randomized Clinical Trial. JAMA Netw. Open 2024, 7, e2440969.

- Konz, N.; Buda, M.; Gu, H.; Saha, A.; Yang, J.; Chłędowski, J.; Park, J.; Witowski, J.; Geras, K.J.; Shoshan, Y.; et al. A Competition, Benchmark, Code, and Data for Using Artificial Intelligence to Detect Lesions in Digital Breast Tomosynthesis. JAMA Netw. Open 2023, 6, e230524.

- Tsai, C.-M.; Lin, C.-H.R.; Kuo, H.-C.; Cheng, F.-J.; Yu, H.-R.; Hung, T.-C.; Hung, C.-S.; Huang, C.-M.; Chu, Y.-C.; Huang, Y.-H. Use of Machine Learning to Differentiate Children with Kawasaki Disease from Other Febrile Children in a Pediatric Emergency Department. JAMA Netw. Open 2023, 6, e237489.

- Tong, W.-J.; Wu, S.-H.; Cheng, M.-Q.; Huang, H.; Liang, J.-Y.; Li, C.-Q.; Guo, H.-L.; He, D.-N.; Liu, Y.-H.; Xiao, H.; et al. Integration of Artificial Intelligence Decision Aids to Reduce Workload and Enhance Efficiency in Thyroid Nodule Management. JAMA Netw. Open 2023, 6, e2313674.

- Hansen, L.; Bernstorff, M.; Enevoldsen, K.; Kolding, S.; Damgaard, J.G.; Perfalk, E.; Nielbo, K.L.; Danielsen, A.A.; Østergaard, S.D. Predicting Diagnostic Progression to Schizophrenia or Bipolar Disorder via Machine Learning. JAMA Psychiatry 2025, 82, 459.

- Ngeow, A.J.H.; Moosa, A.S.; Tan, M.G.; Zou, L.; Goh, M.M.R.; Lim, G.H.; Tagamolila, V.; Ereno, I.; Durnford, J.R.; Cheung, S.K.H.; et al. Development and Validation of a Smartphone Application for Neonatal Jaundice Screening. JAMA Netw. Open 2024, 7, e2450260.

- Barami, T.; Manelis-Baram, L.; Kaiser, H.; Ilan, M.; Slobodkin, A.; Hadashi, O.; Hadad, D.; Waissengreen, D.; Nitzan, T.; Menashe, I.; et al. Automated Analysis of Stereotypical Movements in Videos of Children With Autism Spectrum Disorder. JAMA Netw. Open 2024, 7, e2432851.

- Gjesvik, J.; Moshina, N.; Lee, C.I.; Miglioretti, D.L.; Hofvind, S. Artificial Intelligence Algorithm for Subclinical Breast Cancer Detection. JAMA Netw. Open 2024, 7, e2437402.

- Wong, E.F.; Saini, A.K.; Accortt, E.E.; Wong, M.S.; Moore, J.H.; Bright, T.J. Evaluating Bias-Mitigated Predictive Models of Perinatal Mood and Anxiety Disorders. JAMA Netw. Open 2024, 7, e2438152.

- Hillis, J.M.; Bizzo, B.C.; Mercaldo, S.; Chin, J.K.; Newbury-Chaet, I.; Digumarthy, S.R.; Gilman, M.D.; Muse, V.V.; Bottrell, G.; Seah, J.C.Y.; et al. Evaluation of an Artificial Intelligence Model for Detection of Pneumothorax and Tension Pneumothorax in Chest Radiographs. JAMA Netw. Open 2022, 5, e2247172.

- L’Imperio, V.; Wulczyn, E.; Plass, M.; Müller, H.; Tamini, N.; Gianotti, L.; Zucchini, N.; Reihs, R.; Corrado, G.S.; Webster, D.R.; et al. Pathologist Validation of a Machine Learning–Derived Feature for Colon Cancer Risk Stratification. JAMA Netw. Open 2023, 6, e2254891.

- Benary, M.; Wang, X.D.; Schmidt, M.; Soll, D.; Hilfenhaus, G.; Nassir, M.; Sigler, C.; Knödler, M.; Keller, U.; Beule, D.; et al. Leveraging Large Language Models for Decision Support in Personalized Oncology. JAMA Netw. Open 2023, 6, e2343689.

- Zhong, Y.; Brooks, M.M.; Kennedy, E.H.; Bodnar, L.M.; Naimi, A.I. Use of Machine Learning to Estimate the Per-Protocol Effect of Low-Dose Aspirin on Pregnancy Outcomes: A Secondary Analysis of a Randomized Clinical Trial. JAMA Netw. Open 2022, 5, e2143414.

- Ramírez-Baraldes, E.; García-Gutiérrez, D.; García-Salido, C. Artificial Intelligence in Nursing: New Opportunities and Challenges. Eur. J. Educ. 2025, 60, e70033.

- Huang, J.; Neill, L.; Wittbrodt, M.; Melnick, D.; Klug, M.; Thompson, M.; Bailitz, J.; Loftus, T.; Malik, S.; Phull, A.; et al. Generative Artificial Intelligence for Chest Radiograph Interpretation in the Emergency Department. JAMA Netw. Open 2023, 6, e2336100.

- Chen, X. Generative Models in Protein Engineering: A Comprehensive Survey. 2024. Available online: https://openreview.net/pdf?id=Xc7l84S0Ao (accessed on 30 April 2025).

- Khalifa, M.; Albadawy, M.; Iqbal, U. Advancing Clinical Decision Support: The Role of Artificial Intelligence across Six Domains. Comput. Methods Programs Biomed. Update 2024, 5, 100142.

- Wang, D.; Huang, X. Transforming Education through Artificial Intelligence and Immersive Technologies: Enhancing Learning Experiences. Interact. Learn. Environ. 2025, 1–20.

- Haque, A.; Akther, N.; Khan, I.; Agarwal, K.; Uddin, N. Artificial Intelligence in Retail Marketing: Research Agenda Based on Bibliometric Reflection and Content Analysis (2000–2023). Informatics 2024, 11, 74.

- Blease, C.R.; Locher, C.; Gaab, J.; Hägglund, M.; Mandl, K.D. Generative Artificial Intelligence in Primary Care: An Online Survey of UK General Practitioners. BMJ Health Care Inform. 2024, 31, e101102.

- Steimetz, E.; Minkowitz, J.; Gabutan, E.C.; Ngichabe, J.; Attia, H.; Hershkop, M.; Ozay, F.; Hanna, M.G.; Gupta, R. Use of Artificial Intelligence Chatbots in Interpretation of Pathology Reports. JAMA Netw. Open 2024, 7, e2412767.

- Ziegelmayer, S.; Reischl, S.; Havrda, H.; Gawlitza, J.; Graf, M.; Lenhart, N.; Nehls, N.; Lemke, T.; Wilhelm, D.; Lohöfer, F.; et al. Development and Validation of a Deep Learning Algorithm to Differentiate Colon Carcinoma From Acute Diverticulitis in Computed Tomography Images. JAMA Netw. Open 2023, 6, e2253370.

- Gunturkun, F.; Bakir-Batu, B.; Siddiqui, A.; Lakin, K.; Hoehn, M.E.; Vestal, R.; Davis, R.L.; Shafi, N.I. Development of a Deep Learning Model for Retinal Hemorrhage Detection on Head Computed Tomography in Young Children. JAMA Netw. Open 2023, 6, e2319420.

- Sun, D.; Nguyen, T.M.; Allaway, R.J.; Wang, J.; Chung, V.; Yu, T.V.; Mason, M.; Dimitrovsky, I.; Ericson, L.; Li, H.; et al. A Crowdsourcing Approach to Develop Machine Learning Models to Quantify Radiographic Joint Damage in Rheumatoid Arthritis. JAMA Netw. Open 2022, 5, e2227423.

- Torres-Lopez, V.M.; Rovenolt, G.E.; Olcese, A.J.; Garcia, G.E.; Chacko, S.M.; Robinson, A.; Gaiser, E.; Acosta, J.; Herman, A.L.; Kuohn, L.R.; et al. Development and Validation of a Model to Identify Critical Brain Injuries Using Natural Language Processing of Text Computed Tomography Reports. JAMA Netw. Open 2022, 5, e2227109.

- Zhang, L.; Dong, D.; Sun, Y.; Hu, C.; Sun, C.; Wu, Q.; Tian, J. Development and Validation of a Deep Learning Model to Screen for Trisomy 21 During the First Trimester From Nuchal Ultrasonographic Images. JAMA Netw. Open 2022, 5, e2217854.

- Homayounieh, F.; Digumarthy, S.; Ebrahimian, S.; Rueckel, J.; Hoppe, B.F.; Sabel, B.O.; Conjeti, S.; Ridder, K.; Sistermanns, M.; Wang, L.; et al. An Artificial Intelligence–Based Chest X-Ray Model on Human Nodule Detection Accuracy From a Multicenter Study. JAMA Netw. Open 2021, 4, e2141096.

- Lee, C.; Willis, A.; Chen, C.; Sieniek, M.; Watters, A.; Stetson, B.; Uddin, A.; Wong, J.; Pilgrim, R.; Chou, K.; et al. Development of a Machine Learning Model for Sonographic Assessment of Gestational Age. JAMA Netw. Open 2023, 6, e2248685.

- Sahashi, Y.; Vukadinovic, M.; Amrollahi, F.; Trivedi, H.; Rhee, J.; Chen, J.; Cheng, S.; Ouyang, D.; Kwan, A.C. Opportunistic Screening of Chronic Liver Disease with Deep-Learning–Enhanced Echocardiography. NEJM AI 2025, 2, AIoa2400948.

- Dippel, J.; Prenißl, N.; Hense, J.; Liznerski, P.; Winterhoff, T.; Schallenberg, S.; Kloft, M.; Buchstab, O.; Horst, D.; Alber, M.; et al. AI-Based Anomaly Detection for Clinical-Grade Histopathological Diagnostics. NEJM AI 2024, 1, AIoa2400468.

- Kazemzadeh, S.; Kiraly, A.P.; Nabulsi, Z.; Sanjase, N.; Maimbolwa, M.; Shuma, B.; Jamshy, S.; Chen, C.; Agharwal, A.; Lau, C.T.; et al. Prospective Multi-Site Validation of AI to Detect Tuberculosis and Chest X-Ray Abnormalities. NEJM AI 2024, 1, AIoa2400018.

- Shu, Q.; Pang, J.; Liu, Z.; Liang, X.; Chen, M.; Tao, Z.; Liu, Q.; Guo, Y.; Yang, X.; Ding, J.; et al. Artificial Intelligence for Early Detection of Pediatric Eye Diseases Using Mobile Photos. JAMA Netw. Open 2024, 7, e2425124.

- Cui, H.; Zhao, Y.; Xiong, S.; Feng, Y.; Li, P.; Lv, Y.; Chen, Q.; Wang, R.; Xie, P.; Luo, Z.; et al. Diagnosing Solid Lesions in the Pancreas With Multimodal Artificial Intelligence: A Randomized Crossover Trial. JAMA Netw. Open 2024, 7, e2422454.

- Aklilu, J.G.; Sun, M.W.; Goel, S.; Bartoletti, S.; Rau, A.; Olsen, G.; Hung, K.S.; Mintz, S.L.; Luong, V.; Milstein, A.; et al. Artificial Intelligence Identifies Factors Associated with Blood Loss and Surgical Experience in Cholecystectomy. NEJM AI 2024, 1, AIoa2300088.

- Ahn, J.S.; Ebrahimian, S.; McDermott, S.; Lee, S.; Naccarato, L.; Di Capua, J.F.; Wu, M.Y.; Zhang, E.W.; Muse, V.; Miller, B.; et al. Association of Artificial Intelligence–Aided Chest Radiograph Interpretation With Reader Performance and Efficiency. JAMA Netw. Open 2022, 5, e2229289.

- Sima, D.M.; Phan, T.V.; Van Eyndhoven, S.; Vercruyssen, S.; Magalhães, R.; Liseune, A.; Brys, A.; Frenyo, P.; Terzopoulos, V.; Maes, C.; et al. Artificial Intelligence Assistive Software Tool for Automated Detection and Quantification of Amyloid-Related Imaging Abnormalities. JAMA Netw. Open 2024, 7, e2355800.

- Carmody, S.; John, D. On Generating Synthetic Histopathology Images Using Generative Adversarial Networks. In Proceedings of the 2023 34th Irish Signals and Systems Conference (ISSC), Dublin, Ireland, 13–14 June 2023; pp. 1–5.

- Van Booven, D.J.; Chen, C.-B.; Malpani, S.; Mirzabeigi, Y.; Mohammadi, M.; Wang, Y.; Kryvenko, O.N.; Punnen, S.; Arora, H. Synthetic Genitourinary Image Synthesis via Generative Adversarial Networks: Enhancing Artificial Intelligence Diagnostic Precision. J. Pers. Med. 2024, 14, 703.

- Pinaya, W.H.L.; Graham, M.S.; Kerfoot, E.; Tudosiu, P.-D.; Dafflon, J.; Fernandez, V.; Sanchez, P.; Wolleb, J.; da Costa, P.F.; Patel, A.; et al. Generative AI for Medical Imaging: Extending the MONAI Framework. 2023. Available online: https://arxiv.org/abs/2307.15208 (accessed on 30 April 2025).

- Shende, P. A Brief Review on: MRI Images Reconstruction Using GAN; IEEE: Piscataway, NJ, USA, 2019.

- Wang, Z.; Lim, G.; Ng, W.Y.; Tan, T.-E.; Lim, J.; Lim, S.H.; Foo, V.; Lim, J.; Sinisterra, L.G.; Zheng, F.; et al. Synthetic Artificial Intelligence Using Generative Adversarial Network for Retinal Imaging in Detection of Age-Related Macular Degeneration. Front. Med. 2023, 10, 1184892.

- Kebaili, A.; Lapuyade-Lahorgue, J.; Ruan, S. Deep Learning Approaches for Data Augmentation in Medical Imaging: A Review. J. Imaging 2023, 9, 81.

- Waddle, S.; Ganji, S.; Wang, D.; Chao, T.C.; Browne, J.; Leiner, T. Feasibility of AI-Denoising and AI-Super-Resolution to Accelerate Cardiac Cine Imaging: Qualitative and Quantitative Analysis. J. Cardiovasc. Magn. Reson. 2024, 26, 100989.

- Li, Y.; Xu, S.; Lu, Y.; Qi, Z. CT Synthesis from MRI with an Improved Multi-Scale Learning Network. Front. Phys. 2023, 11, 1088899.

- Sakthivel, B.; Vanathi, P.; Sri, M.R.; Subashini, S.; Sonthi, V.K.; Sathish, C. Generative AI Models and Capabilities in Cancer Medical Imaging and Applications. In Proceedings of the 2024 3rd International Conference on Sentiment Analysis and Deep Learning (ICSADL), Bhimdatta, Nepal, 13 March 2024; pp. 349–355.

- Abbasi, N.; Fnu, N.; Zeb, S.; Md Fardous. Generative AI in Healthcare: Revolutionizing Disease Diagnosis, Expanding Treatment Options, and Enhancing Patient Care. J. Knowl. Learn. Sci. Technol. 2024, 3, 127–138.

- Iqbal, A.; Sharif, M.; Yasmin, M.; Raza, M.; Aftab, S. Generative Adversarial Networks and Its Applications in the Biomedical Image Segmentation: A Comprehensive Survey. Int. J. Multimed. Inf. Retr. 2022, 11, 333–368.

- Ali, M.; Ali, M.; Hussain, M.; Koundal, D. Generative Adversarial Networks (GANs) for Medical Image Processing: Recent Advancements. Arch. Comput. Methods Eng. 2025, 32, 1185–1198.

- Khosravi, M.; Zare, Z.; Mojtabaeian, S.M.; Izadi, R. Artificial Intelligence and Decision-Making in Healthcare: A Thematic Analysis of a Systematic Review of Reviews. Health Serv. Res. Manag. Epidemiol. 2024, 11, 23333928241234863.

- Rydzewski, N.R.; Dinakaran, D.; Zhao, S.G.; Ruppin, E.; Turkbey, B.; Citrin, D.E.; Patel, K.R. Comparative Evaluation of LLMs in Clinical Oncology. NEJM AI 2024, 1, AIoa2300151.

- Kamran, F.; Tjandra, D.; Heiler, A.; Virzi, J.; Singh, K.; King, J.E.; Valley, T.S.; Wiens, J. Evaluation of Sepsis Prediction Models before Onset of Treatment. NEJM AI 2024, 1, AIoa2300032.

- Dagan, N.; Magen, O.; Leshchinsky, M.; Makov-Assif, M.; Lipsitch, M.; Reis, B.Y.; Yaron, S.; Netzer, D.; Balicer, R.D. Prospective Evaluation of Machine Learning for Public Health Screening: Identifying Unknown Hepatitis C Carriers. NEJM AI 2024, 1, AIoa2300012.

- Lee, S.M.; Lee, G.; Kim, T.K.; Le, T.; Hao, J.; Jung, Y.M.; Park, C.-W.; Park, J.S.; Jun, J.K.; Lee, H.-C.; et al. Development and Validation of a Prediction Model for Need for Massive Transfusion During Surgery Using Intraoperative Hemodynamic Monitoring Data. JAMA Netw. Open 2022, 5, e2246637.

- Horvat, C.M.; Barda, A.J.; Perez Claudio, E.; Au, A.K.; Bauman, A.; Li, Q.; Li, R.; Munjal, N.; Wainwright, M.S.; Boonchalermvichien, T.; et al. Interoperable Models for Identifying Critically Ill Children at Risk of Neurologic Morbidity. JAMA Netw. Open 2025, 8, e2457469.

- Taylor, R.A.; Chmura, C.; Hinson, J.; Steinhart, B.; Sangal, R.; Venkatesh, A.K.; Xu, H.; Cohen, I.; Faustino, I.V.; Levin, S. Impact of Artificial Intelligence–Based Triage Decision Support on Emergency Department Care. NEJM AI 2025, 2, AIoa2400296.

- Barnett, A.J.; Guo, Z.; Jing, J.; Ge, W.; Kaplan, P.W.; Kong, W.Y.; Karakis, I.; Herlopian, A.; Jayagopal, L.A.; Taraschenko, O.; et al. Improving Clinician Performance in Classifying EEG Patterns on the Ictal–Interictal Injury Continuum Using Interpretable Machine Learning. NEJM AI 2024, 1, AIoa2300331.

- Chiu, I.-M.; Lin, C.-H.R.; Yau, F.-F.F.; Cheng, F.-J.; Pan, H.-Y.; Lin, X.-H.; Cheng, C.-Y. Use of a Deep-Learning Algorithm to Guide Novices in Performing Focused Assessment With Sonography in Trauma. JAMA Netw. Open 2023, 6, e235102.

- Waikel, R.L.; Othman, A.A.; Patel, T.; Ledgister Hanchard, S.; Hu, P.; Tekendo-Ngongang, C.; Duong, D.; Solomon, B.D. Recognition of Genetic Conditions After Learning With Images Created Using Generative Artificial Intelligence. JAMA Netw. Open 2024, 7, e242609.

- Dolezal, J.M.; Wolk, R.; Hieromnimon, H.M.; Howard, F.M.; Srisuwananukorn, A.; Karpeyev, D.; Ramesh, S.; Kochanny, S.; Kwon, J.W.; Agni, M.; et al. Deep Learning Generates Synthetic Cancer Histology for Explainability and Education. npj Precis. Oncol. 2023, 7, 49.

- Liu, H.; Ding, N.; Li, X.; Chen, Y.; Sun, H.; Huang, Y.; Liu, C.; Ye, P.; Jin, Z.; Bao, H.; et al. Artificial Intelligence and Radiologist Burnout. JAMA Netw. Open 2024, 7, e2448714.

- Fajtl, J.; Welikala, R.A.; Barman, S.; Chambers, R.; Bolter, L.; Anderson, J.; Olvera-Barrios, A.; Shakespeare, R.; Egan, C.; Owen, C.G.; et al. Trustworthy Evaluation of Clinical AI for Analysis of Medical Images in Diverse Populations. NEJM AI 2024, 1, AIoa2400353.

- He, Y.; Guo, Y.; Lyu, J.; Ma, L.; Tan, H.; Zhang, W.; Ding, G.; Liang, H.; He, J.; Lou, X.; et al. Disorder-Free Data Are All You Need—Inverse Supervised Learning for Broad-Spectrum Head Disorder Detection. NEJM AI 2024, 1, AIoa2300137.

- Lehmann, V.; Zueger, T.; Maritsch, M.; Notter, M.; Schallmoser, S.; Bérubé, C.; Albrecht, C.; Kraus, M.; Feuerriegel, S.; Fleisch, E.; et al. Machine Learning to Infer a Health State Using Biomedical Signals—Detection of Hypoglycemia in People with Diabetes While Driving Real Cars. NEJM AI 2024, 1, AIoa2300013.

- Natesan, D.; Eisenstein, E.L.; Thomas, S.M.; Eclov, N.C.W.; Dalal, N.H.; Stephens, S.J.; Malicki, M.; Shields, S.; Cobb, A.; Mowery, Y.M.; et al. Health Care Cost Reductions with Machine Learning-Directed Evaluations during Radiation Therapy—An Economic Analysis of a Randomized Controlled Study. NEJM AI 2024, 1, AIoa2300118.

- Wu, K.; Wu, E.; Theodorou, B.; Liang, W.; Mack, C.; Glass, L.; Sun, J.; Zou, J. Characterizing the Clinical Adoption of Medical AI Devices through U.S. Insurance Claims. NEJM AI 2024, 1, AIoa2300030.

- Mazzucato, M.; Li, H.L. Rebalancing the research agenda: From discovery to societal impact. Nature 2023, 615, 415–418.

- National Science Foundation. NSF Report on Equity and Research Prioritization. 2023. Available online: https://www.nsf.gov (accessed on 30 April 2025).

- First Drug Discovered and Designed with Generative AI Enters Phase 2 Clinical Trials; EurekAlert! 2023; Available online: https://www.eurekalert.org/news-releases/993844 (accessed on 30 April 2025).

- Brazil. How AI Is Transforming Drug Discovery. 27 June 2023. Available online: https://pharmaceutical-journal.com/article/feature/how-ai-is-transforming-drug-discovery (accessed on 30 April 2025).

- AI Drug Discovery 2023 Trends. 2023. Available online: https://www.drugdiscoverytrends.com/ai-drug-discovery-2023-trends/ (accessed on 30 April 2025).

- Unlu, O.; Shin, J.; Mailly, C.J.; Oates, M.F.; Tucci, M.R.; Varugheese, M.; Wagholikar, K.; Wang, F.; Scirica, B.M.; Blood, A.J.; et al. Retrieval-Augmented Generation–Enabled GPT-4 for Clinical Trial Screening. NEJM AI 2024, 1, AIoa2400181.

- Mao, D.; Liu, C.; Wang, L.; Al-Ouran, R.; Deisseroth, C.; Pasupuleti, S.; Kim, S.Y.; Li, L.; Rosenfeld, J.A.; Meng, L.; et al. AI-MARRVEL—A Knowledge-Driven AI System for Diagnosing Mendelian Disorders. NEJM AI 2024, 1, AIoa2300009.

- Tang, X.; Dai, H.; Knight, E.; Wu, F.; Li, Y.; Li, T.; Gerstein, M. A Survey of Generative AI for de Novo Drug Design: New Frontiers in Molecule and Protein Generation. Brief. Bioinform. 2024, 25, bbae338.

- Helm, J.M.; Swiergosz, A.M.; Haeberle, H.S.; Karnuta, J.M.; Schaffer, J.L.; Krebs, V.E.; Spitzer, A.I.; Ramkumar, P.N. Machine Learning and Artificial Intelligence: Definitions, Applications, and Future Directions. Curr. Rev. Musculoskelet. Med. 2020, 13, 69–76.

- Wang, W.-H.; Hsu, W.-S. Integrating Artificial Intelligence and Wearable IoT System in Long-Term Care Environments. Sensors 2023, 23, 5913.

- Amjad, A.; Kordel, P.; Fernandes, G. A Review on Innovation in Healthcare Sector (Telehealth) through Artificial Intelligence. Sustainability 2023, 15, 6655.

- Ahmed, A.; Aziz, S.; Khalifa, M.; Shah, U.; Hassan, A.; Abd-Alrazaq, A.; Househ, M. Thematic Analysis on User Reviews for Depression and Anxiety Chatbot Apps: Machine Learning Approach. JMIR Form. Res. 2022, 6, e27654.

- Alkaissi, H.; McFarlane, S.I. Artificial Hallucinations in ChatGPT: Implications in Scientific Writing; Harvard Business School: Boston, MA, USA, 2022.

- Tonekaboni, S.; Joshi, S.; McCradden, M.D.; Goldenberg, A. What clinicians want: Contextualizing explainable machine learning for clinical end use. Proc. Mach. Learn. Res. 2019, 106, 359–380.

- Currie, G.M.; Hawk, K.E.; Rohren, E.M. Generative Artificial Intelligence Biases, Limitations and Risks in Nuclear Medicine: An Argument for Appropriate Use Framework and Recommendations. Semin. Nucl. Med. 2025, 55, 423–436.

- Farhud, D.D.; Zokaei, S. Ethical Issues of Artificial Intelligence in Medicine and Healthcare. Iran. J. Public Health 2021, 50, i–v.

- Chen, Y.; Esmaeilzadeh, P. Generative AI in Medical Practice: In-Depth Exploration of Privacy and Security Challenges. J. Med. Internet Res. 2024, 26, e53008.

- Habli, I.; Lawton, T.; Porter, Z. Artificial Intelligence in Health Care: Accountability and Safety. Bull. World Health Organ. 2020, 98, 251–256.

- Strubell, E.; Ganesh, A.; McCallum, A. Energy and Policy Considerations for Deep Learning in NLP. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July 2019.

- Patterson, D.; Gonzalez, J.; Le, Q.; Liang, C.; Munguia, L.-M.; Rothchild, D.; So, D.; Texier, M.; Dean, J. Carbon Emissions and Large Neural Network Training. arXiv 2021, arXiv:2104.10350.

- Reddy, S. Generative AI in Healthcare: An Implementation Science Informed Translational Path on Application, Integration and Governance. Implement. Sci. 2024, 19, 27.

- Hanna, M.G.; Pantanowitz, L.; Dash, R.; Harrison, J.H.; Deebajah, M.; Pantanowitz, J.; Rashidi, H.H. Future of Artificial Intelligence—Machine Learning Trends in Pathology and Medicine. Mod. Pathol. 2025, 38, 100705.

- Maleki Varnosfaderani, S.; Forouzanfar, M. The Role of AI in Hospitals and Clinics: Transforming Healthcare in the 21st Century. Bioengineering 2024, 11, 337.

- Nundy, S.; Montgomery, T.; Wachter, R.M. Promoting trust between patients and physicians in the era of artificial intelligence. JAMA 2022, 327, 521–522.

- Lu, Z.; Peng, Y.; Cohen, T.; Ghassemi, M.; Weng, C.; Tian, S. Large Language Models in Biomedicine and Health: Current Research Landscape and Future Directions. J. Am. Med. Inform. Assoc. 2024, 31, 1801–1811.

- Bhuyan, S.S.; Sateesh, V.; Mukul, N.; Galvankar, A.; Mahmood, A.; Nauman, M.; Rai, A.; Bordoloi, K.; Basu, U.; Samuel, J. Generative Artificial Intelligence Use in Healthcare: Opportunities for Clinical Excellence and Administrative Efficiency. J. Med. Syst. 2025, 49, 10.

| Domain | Application | Outcomes | References |

|---|---|---|---|

| Clinical Documentation | Drafting clinical notes, discharge summaries, and patient letters | Improved clinician efficiency; reduced burnout | [10,44] |

| Patient Communication | Drafting responses to patient messages and health education | AI responses rated higher in empathy; improved satisfaction and understanding | [15,19,21] |

| Clinical Decision Support | Assisting in diagnosis and management suggestions | Comparable or improved accuracy in diagnostic reasoning compared to physicians | [29,31,32] |

| Medical Imaging Interpretation | AI that can “read” an image and generate a report | Generating reports from radiology images | [45] |

| Drug Discovery and Biomedical Research | Assisting in drug discovery and development | Generating novel molecules, optimizing drug candidates, and designing clinical trials | [46] |

| Patient Monitoring and Telehealth Integration | Transforming patient care, especially for chronic conditions | Remote patient monitoring systems; AI-powered telehealth | [47] |

| Medical Education and Training | Enhancing medical education | AI as an adjunct for learning | [48] |

| Mental Health Support | Chatbots offering conversational support or behavioral coaching | Early evidence of utility as a supportive tool; still requires human oversight | [49,50] |

| Author | Clinical Study | Primary Outcomes | Secondary Outcomes | Inference | Reference |

|---|---|---|---|---|---|

| Aklilu et al. | Artificial Intelligence Identifies Factors Associated with Blood Loss and Surgical Experience in Cholecystectomy | The study’s primary objective was to identify specific surgical maneuvers associated with positive indicators of surgical performance and high surgical skill, with a particular focus on factors contributing to blood loss during cholecystectomy. | The secondary objective was to examine additional elements influencing surgical outcomes. | The AI model demonstrated the capability to identify factors, such as surgical experience and technique, associated with intraoperative outcomes, particularly blood loss during cholecystectomy. | [64] |

| Barnett et al. | Improving Clinician Performance in Classifying EEG Patterns on the Ictal-Interictal Injury Continuum Using Interpretable Machine Learning | The study developed an interpretable deep learning system that accurately classifies six patterns of potentially harmful EEG activity: seizures, lateralized periodic discharges (LPDs), generalized periodic discharges (GPDs), lateralized rhythmic delta activity (LRDA), generalized rhythmic delta activity (GRDA), and other patterns. The system also provides faithful case-based explanations of its predictions. | Identification and characterization of strategies to bolster confidence in model-generated responses. | Users demonstrated significant improvements in pattern classification accuracy with the assistance of this interpretable deep learning model. The interpretable design facilitates effective human–AI collaboration; this system may improve diagnosis and patient care in clinical settings. The model may also provide a better understanding of how EEG patterns relate to each other along the ictal–interictal injury continuum. | [86] |

| Fajtl et al. | Methodology for independent evaluation of algorithms for automated analysis of medical images for trustworthy and equitable deployment of clinical AI in diverse population screening programmes | The study outlines a transferable methodology for the independent evaluation of algorithms, using a routine, high-volume, multiethnic national diabetic eye-screening program as an exemplar. | The secondary objective was to evaluate the practical aspects of implementing these AI systems in real-world screening programs. This included assessing the time required for algorithm installation, image-processing durations, and the overall scalability of deploying such systems in large-scale, routine screening settings. | The methodology was shown to be transferable for algorithm evaluation in large-scale, multiethnic screening programs. | [91] |

| He et al. | Disorder-Free Data are All You Need: Inverse Supervised Learning for Broad-Spectrum Head Disorder Detection | The study’s primary objective was to develop and evaluate an AI-based system capable of accurately detecting a wide range of head disorders without requiring any disorder-containing data for training. This was achieved by introducing a novel learning algorithm called Inverse Supervised Learning (ISL), which learns exclusively from disorder-free head CT scans. | The secondary objective was to assess the adaptability of the ISL-based system to other medical imaging modalities. Specifically, the study evaluated the system’s performance on pulmonary CT and retinal optical coherence tomography (OCT) images, achieving AUC values of 0.893 and 0.895, respectively. | Inverse supervised learning can be effective for broad-spectrum head disorder detection. | [92] |

| Hiesinger et al. | Almanac: Retrieval-Augmented Language Models for Clinical Medicine | The study develops Almanac, a large language model framework augmented with retrieval capabilities to provide medical guideline and treatment recommendations. The primary outcome was to demonstrate significant improvements in factuality across all specialties. | Secondary outcomes include improvements in the completeness and safety of the recommendations. Performance was evaluated on a novel dataset of clinical scenarios (n = 130). | Performance on a novel dataset of clinical scenarios demonstrates that large language models can be effective tools in the clinical decision-making process, showing significant increases in factuality (mean of 18%, p < 0.05) across all specialties, along with improvements in completeness and safety, while underscoring the need for careful testing and deployment. | [21] |

| Kamran et al. | Evaluation of Sepsis Prediction Models before Onset of Treatment | The primary outcome is typically specified before the study begins and is the basis for calculating the sample size needed for adequate statistical power. | The accuracy of AI sepsis predictions varies depending on the timing of the prediction relative to treatment initiation. | | [81] |

| Kazemzadeh et al. | Prospective Multi-Site Validation of AI to Detect Tuberculosis and Chest X-Ray Abnormalities | Noninferiority of AI detection to radiologist performance. | AI detection compared with WHO targets. The abnormality AI was noninferior to the high-sensitivity benchmark. | The CXR TB AI was noninferior to radiologists for active pulmonary TB triaging in a population with a high TB and HIV burden. Neither the TB AI nor the radiologists met WHO recommendations for sensitivity in the study population. AI can also be used to detect other CXR abnormalities in the same population. | [61] |

| Lehmann et al. | Machine learning to infer a health state using biomedical signals—detection of hypoglycemia in people with diabetes while driving real cars | The primary outcome was the detection of hypoglycemia using a machine learning approach. | The secondary outcome was the diagnostic accuracy of this approach, quantified by the area under the receiver operating characteristic curve (AUROC). | Machine learning can effectively and noninvasively detect hypoglycemia in people with diabetes during real-world driving scenarios, using driving behavior and gaze/head motion data. | [93] |

| Lin et al. | Artificial Intelligence-Powered Rapid Identification of ST-Elevation Myocardial Infarction via Electrocardiogram (ARISE): A Pragmatic Randomized Controlled Trial | To evaluate the potential of AI-ECG-assisted detection of STEMI to reduce treatment delays for patients with STEMI. | The secondary objective was to evaluate the sensitivity and specificity of the AI algorithm in accurately identifying STEMI from 12-lead ECGs. | In patients with STEMI, AI-ECG-assisted triage decreased the door-to-balloon time for patients presenting to the emergency department and decreased the ECG-to-balloon time for patients in the emergency room and inpatients. | [30] |

| Natesan et al. | Health Care Cost Reductions with Machine Learning Directed Evaluations during Radiation Therapy—An Economic Analysis of a Randomized Controlled Study | Healthcare cost reduction. | Acute care visit costs, inpatient costs. | Machine learning-directed evaluations during radiation therapy can lead to significant healthcare cost reductions. | [94] |

| Patel et al. | Spending Analysis of Machine Learning Based Communication Nudges in Oncology | Total medical costs. | Acute care visit costs. | Machine learning-based communication nudges may lead to cost reductions in oncology care. | [26] |

| Rydzewski et al. | Comparative Evaluation of LLMs in Clinical Oncology | To conduct comprehensive evaluations of LLMs in the field of oncology. To identify and characterize strategies to bolster confidence in a model’s response. | The secondary objective was to evaluate LLM performance on a novel validation set of 50 oncology questions. | LLMs, particularly GPT-4 Turbo and Gemini 1.0 Ultra, demonstrated high accuracy in answering clinical oncology questions, with GPT-4 achieving the highest performance among those tested; however, all models exhibited clinically significant error rates. | [80] |

| Wu et al. | Characterizing the Clinical Adoption of Medical AI Devices through U.S. Insurance Claims | The primary objective was to quantify the adoption and usage of medical AI devices in the United States. | The secondary objective was to analyze the prevalence of medical AI devices based on submitted claims. | Medical AI device adoption is still nascent, with most usage driven by a handful of leading devices. Zip codes with higher income levels, metropolitan areas, and academic medical centers are more likely to have medical AI usage. | [95] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rabbani, S.A.; El-Tanani, M.; Sharma, S.; Rabbani, S.S.; El-Tanani, Y.; Kumar, R.; Saini, M. Generative Artificial Intelligence in Healthcare: Applications, Implementation Challenges, and Future Directions. BioMedInformatics 2025, 5, 37. https://doi.org/10.3390/biomedinformatics5030037
