Abstract

As artificial intelligence (AI) becomes integrated into the intersecting domains of healthcare and computational biology, developing interpretable models tailored to medical contexts faces significant challenges. Explainable AI (XAI) is vital for fostering trust and enabling the effective use of AI in healthcare, particularly in image-based specialties such as pathology and radiology, where adjunctive AI solutions for diagnostic image analysis are increasingly used. Overcoming these challenges requires interdisciplinary collaboration, which is essential for advancing XAI to enhance patient care. This commentary underscores the critical role of interdisciplinary conferences in promoting the cross-disciplinary exchange needed for XAI innovation. A literature review was conducted to identify key challenges, best practices, and case studies related to interdisciplinary collaboration for XAI in healthcare. The distinctive contributions of specialized conferences in fostering dialogue, driving innovation, and influencing research directions were examined. Best practices and recommendations for fostering collaboration, organizing conferences, and achieving targeted XAI solutions were adapted from the literature. By creating the collaborative junctures that drive XAI progress, interdisciplinary conferences integrate diverse insights to produce new ideas, identify knowledge gaps, crystallize solutions, and spur long-term partnerships that generate high-impact research. Thoughtful structuring of these events, such as including sessions focused on theoretical foundations, real-world applications, and standardized evaluation, along with ample networking opportunities, is key to directing varied expertise toward overcoming core challenges. Successful collaborations depend on mutual understanding and respect, clear communication, defined roles, and a shared commitment to the ethical development of robust, interpretable models.
Specialized conferences are essential to shape the future of explainable AI and computational biology, contributing to improved patient outcomes and healthcare innovations. Recognizing the catalytic power of this collaborative model is key to accelerating the innovation and implementation of interpretable AI in medicine.

1. Introduction

As emerging technologies push toward the full realization of personalized medicine, computational biology has become an integral practice for deciphering the complexity of biomedical interactions [1,2]. Large collections of patient data offer genomic, proteomic, metabolomic, and histopathological insights. When these data are analyzed in concert, they can reveal previously unrecognized cellular interactions that point to novel treatment targets and disease mechanisms. Alongside this focus on data-driven insights, a growing recognition of the need for transparency and interpretability in artificial intelligence (AI) systems has spurred collaboration among clinicians, data scientists, and multidisciplinary teams of software engineers, quality experts, and domain specialists [3].

As machine learning (ML) models have become more complex and harder to interpret, the need for “explainable AI” (XAI), particularly in healthcare, has grown [4,5]. Although model outputs may align with clinical guidelines, the algorithmic ‘reasoning’ behind ML predictions is often opaque [6]. To fit the evidence-based framework of healthcare, the decisions of computer-aided diagnosis (CAD) tools must be readily interrogable by the clinicians who use them in practice [7,8].

XAI aims to overcome the indecipherable ‘black-box’ nature of ML models to reveal how ML predictions are made [7,8,9]. In healthcare, accountability and explainability are inextricably linked. As AI systems used in healthcare must adhere to the same legal, ethical, and Hippocratic principles as practitioners, explainability is a fundamental prerequisite [8].

Recent years have seen a rise in the development of domain-specific XAI techniques tailored to healthcare and computational biology applications [5,10]. These techniques aim to address the need for accurate and interpretable models, the integration of data from multiple sources, and the management and analysis of large datasets [5].

In the healthcare setting, the interpretability of XAI tools is crucial for clinicians to trust and effectively use these systems for clinical decision support. However, the degree to which doctors can understand the reasoning provided by XAI tools varies. Influencing factors include the complexity of the explanation, the clinician’s familiarity with AI and ML concepts, and the way the explanation is presented. Because doctors are typically not experts in XAI design, the effectiveness of these tools in clinical settings will depend on how well the tools convey complex AI decision-making processes in a form that clinicians can understand and trust. Therefore, while doctors may not be the primary designers of XAI tools, their input is invaluable for creating clinically relevant explanations, and involving clinicians in the design process may greatly enhance the usability and effectiveness of these tools in clinical practice.

Interdisciplinary organizations such as the International Society for Computational Biology (ISCB) and specialized conferences such as the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI) play a crucial role in bringing together diverse expertise for the exchange of research, ideas, and collaborative partnerships [11,12,13]. Through such collaborative ventures, the transparency and interpretability of AI systems in healthcare and computational biology may improve [11,12,13,14].

2. Explainable AI in Healthcare: Applications and Challenges

XAI provides tools to understand how AI models arrive at specific decisions for individual patients. For instance, in the context of COVID-19, XAI methods may help identify patterns and factors that contribute to disease spread and severity, informing the development of targeted interventions and treatments [15]. For chronic kidney disease, XAI methods may provide a granular understanding of disease progression and of the impact of different treatment options, leading to more personalized and effective patient care [16]. Applied to electronic medical records (EMRs), XAI can uncover valuable insights into patient health trends, treatment outcomes, and potential risk factors, facilitating earlier intervention and preventive care [17]. In fungal or bloodstream infections, interpretability methods can assist in the rapid and accurate identification of pathogens, enabling timely and appropriate treatment decisions [18]. Fundamental capabilities of XAI include pattern recognition (e.g., risk factors for COVID-19, indicators of kidney disease progression), natural language processing (NLP) (e.g., insights from EMR data), and image understanding (e.g., pathogen identification, kidney disease diagnosis). Used alongside broader pattern recognition, NLP and image understanding enhance the interpretation of complex healthcare data. Multimodal XAI is therefore needed for a fuller understanding.

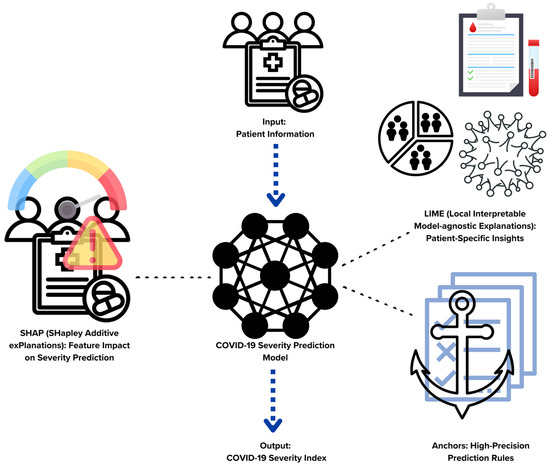

Techniques such as LIME (local interpretable model-agnostic explanations) provide simplified breakdowns of a single case, or ‘local approximations’: LIME fits a simple surrogate model around one prediction and assigns ‘feature importance values’ to each input feature, identifying the components of the patient data that most influenced the output. SHAP (Shapley additive explanations) and ‘Anchors’ are other XAI techniques that have been applied in real-world healthcare settings, for example to explain models that predict cancer survival rates, identify disease risk factors, reduce hospital readmissions, and support personalized treatment plans. SHAP attributes a prediction to the input features using Shapley values from cooperative game theory, and these attributions can be aggregated across patients to show which features matter most overall. Anchors formulate easy-to-follow decision logic as ‘high-precision rules’, IF-THEN conditions under which the model’s prediction almost always stays the same, mimicking how the model makes its predictions and facilitating physician comprehension of its reasoning.

Consider a COVID-19 severity prediction model that uses data points such as patient demographics, symptoms, and laboratory results to predict the likelihood that an individual patient will develop severe complications. In this instance, LIME may reveal that the model’s assignment of high severity risk was driven primarily by the patient’s age and underlying health conditions, information that helps physicians prioritize monitoring and interventions. To understand how different factors drive model predictions overall, SHAP analysis may indicate that age was consistently the most important feature in determining risk across many patients, followed closely by pre-existing conditions such as diabetes and chronic respiratory conditions such as chronic obstructive pulmonary disease (COPD). Finally, Anchors may generate clear rules that explain the model’s reasoning, for example: ‘IF patient is over 65 and has COPD, THEN predict high risk of severe COVID-19 complications’. These simplified rules offer additional clarity to healthcare providers, enabling them to better understand and trust the model’s predictions (Figure 1).

Figure 1.

Interpreting a hypothetical COVID-19 severity prediction model with select XAI techniques.
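To make two of these ideas concrete, the following Python sketch computes exact Shapley values (the quantity that SHAP estimates) for one patient and the precision and coverage of an Anchors-style rule, using a tiny, entirely hypothetical risk model. The function `risk_model`, its coefficients, the three binary features, the all-zero baseline, and the 0.5 high-risk threshold are illustrative assumptions, not a clinical model. Exhaustive enumeration of feature coalitions is only feasible for a handful of features, which is why practical tools rely on approximations or model-specific algorithms.

```python
from itertools import combinations, product
from math import factorial

# Hypothetical toy severity model: illustrative only, NOT a validated clinical model.
# Each input is a binary indicator (0 or 1); the output is a risk score in [0, 1].
FEATURES = ["age_over_65", "copd", "diabetes"]


def risk_model(x):
    score = 0.1
    score += 0.4 * x["age_over_65"]
    score += 0.2 * x["copd"]
    score += 0.1 * x["diabetes"]
    # Interaction term: older patients with COPD receive additional risk.
    score += 0.15 * x["age_over_65"] * x["copd"]
    return min(score, 1.0)


def shapley_values(model, patient, baseline):
    """Exact Shapley values; features outside a coalition keep their baseline value."""
    n = len(FEATURES)

    def value(coalition):
        x = {f: patient[f] if f in coalition else baseline[f] for f in FEATURES}
        return model(x)

    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        total = 0.0
        for size in range(len(others) + 1):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


def anchor_precision_coverage(model, rule, threshold=0.5):
    """Precision and coverage of an IF-THEN rule, enumerating every binary profile
    (a simplified stand-in for the perturbation sampling that Anchors uses)."""
    profiles = [dict(zip(FEATURES, bits)) for bits in product([0, 1], repeat=len(FEATURES))]
    covered = [p for p in profiles if rule(p)]
    predicted_high = [p for p in covered if model(p) >= threshold]
    return len(predicted_high) / len(covered), len(covered) / len(profiles)


def over_65_with_copd(p):
    # Candidate anchor: IF age over 65 AND COPD THEN high risk.
    return p["age_over_65"] == 1 and p["copd"] == 1


patient = {"age_over_65": 1, "copd": 1, "diabetes": 0}
baseline = {"age_over_65": 0, "copd": 0, "diabetes": 0}

phi = shapley_values(risk_model, patient, baseline)
print({f: round(v, 3) for f, v in phi.items()})
# → {'age_over_65': 0.475, 'copd': 0.275, 'diabetes': 0.0}
precision, coverage = anchor_precision_coverage(risk_model, over_65_with_copd)
print(precision, coverage)  # → 1.0 0.25
```

Because the toy model contains an age-by-COPD interaction, the Shapley values split that interaction term equally between the two features, and the attributions sum to the difference between the patient's score and the baseline score. The rule holds for a quarter of all profiles and, within them, the model always predicts high risk.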

Pattern recognition is a core ability of AI models, enabling them to identify regularities and correlations within complex data. In healthcare image analysis, pattern recognition entails the computational identification of visual patterns that can be associated with diagnoses or other outcomes. While all XAI techniques are designed to uncover important patterns within data, some rely primarily on pattern recognition, whereas others extend to a broader scope (Table 1).

Table 1.

XAI techniques that rely heavily on pattern recognition versus techniques with a broader scope.

Image-based healthcare specialties including radiology, dermatology, and ophthalmology are rapidly adopting AI for visual pattern recognition, creating space for unique applications within each field.

Pathology, with its rich datasets of whole slide images (WSIs) and potential for high-impact diagnoses, is a leading area for AI integration in medicine. WSIs contain an immense amount of high-resolution visual information that lends itself to AI-powered pattern recognition tasks such as tumor classification, grading, and biomarker identification, making them primary targets for XAI exploration [20,21]. XAI techniques for WSI analysis include gradient-based class activation mapping methods such as Grad-CAM and Grad-CAM++, which highlight the image areas that contribute most to the model’s prediction. ‘Guided backpropagation’, another gradient-based method, provides a more fine-grained, pixel-level visualization of important image regions. WSIs are usually analyzed as ‘patches’, small image tiles cut from the slide; LIME can explain an individual patch by fitting a simplified surrogate model to perturbed versions of it, providing a local explanation of how its regions influenced the prediction. Shapley values can be adapted to image data to quantify the contribution of each patch to the model’s decision, offering a more global picture of feature importance. Counterfactual explanations generate slightly modified versions of the WSI to show the minimal changes that would alter the model’s prediction, helping pathologists identify the regions and features critical to the model’s decision. Combining techniques such as Grad-CAM with patch-based approaches or concept-based explanations can offer richer, multi-level insights. However, rigorous validation of XAI methods is crucial before full integration into clinical workflows.
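The core Grad-CAM computation can be sketched in a few lines once a deep-learning framework has supplied the feature maps of a convolutional layer and the gradients of the target class score with respect to them: each feature map is weighted by its spatially averaged gradient, the weighted maps are summed, and negative values are clipped by a ReLU. The sketch below uses plain Python lists and hypothetical 2x2 toy values in place of real patch activations; in practice the heatmap would also be upsampled to the patch's resolution before being overlaid on the WSI.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from one convolutional layer.

    activations: K feature maps A^k, each an H x W list of lists.
    gradients:   dy_c/dA^k for the target class c, same shape as activations
                 (in practice obtained by backpropagation in a DL framework).
    Returns an H x W heatmap scaled to [0, 1].
    """
    k_maps = len(activations)
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weights alpha_k: global average pooling of each gradient map.
    alphas = [sum(sum(row) for row in g) / (height * width) for g in gradients]
    # Weighted sum of feature maps followed by ReLU (keep only positive evidence).
    cam = [
        [max(0.0, sum(alphas[k] * activations[k][i][j] for k in range(k_maps)))
         for j in range(width)]
        for i in range(height)
    ]
    # Scale to [0, 1] for display; an all-zero map is returned unchanged.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Hypothetical toy values for two 2x2 feature maps of a single WSI patch.
activations = [
    [[1.0, 0.0], [0.0, 0.0]],  # map 0 fires in the top-left region
    [[0.0, 0.0], [0.0, 2.0]],  # map 1 fires in the bottom-right region
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],          # map 0 increases the class score
    [[-0.25, -0.25], [-0.25, -0.25]],  # map 1 decreases it
]
heatmap = grad_cam(activations, gradients)
print(heatmap)  # → [[1.0, 0.0], [0.0, 0.0]]
```

Only the top-left region survives: map 1 is active in the bottom-right but carries a negative weight, so the ReLU removes it from the explanation.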

As AI is increasingly used in patient care as a co-pilot for physician-centric diagnostic, prognostic, and therapeutic applications, medical, legal, ethical, and societal questions are growing [4,5,6,22]. Most current interpretable ML methods are domain-agnostic. Because they evolved from fields such as computer vision, automated reasoning, and statistics, applying them directly to bioinformatics problems is challenging without customization and domain adaptation [8]. Moreover, AI models that lack transparency are often developed by non-medical professionals, which can leave end users, such as healthcare providers and patients, with little control over how model results are derived [23].

AI systems require large amounts of data, and the quality and availability of these data are crucial for system performance. However, datasets used to develop AI systems often contain unforeseen gaps, despite intensive efforts to clean and analyze the data. Regulatory restrictions and incompatibilities across institutions also limit the amount of data that can be used to develop efficient algorithms [24].

The implementation of AI in clinical settings is also hindered by a lack of empirical evidence from planned clinical trials validating the effectiveness of AI-based interventions. Most research on AI applications has been conducted in non-clinical settings, making it difficult to generalize results [24] and raising questions about safety and efficacy [25]. Some argue that because many AI systems are opaque, physicians and patients cannot and should not rely on their results. Others, in contrast, dispute that explainability should play such a central role in AI [26,27,28].

Logistical difficulties in implementing AI systems in healthcare include barriers to adoption and the need for sociocultural or care-pathway changes [29]. All stakeholders, including healthcare professionals, computational biologists, and policymakers, are increasingly compelled to understand each other’s domains in order to identify solutions [30,31,32,33,34,35].

2.1. Legal Implications

Currently, there are few well-defined regulations that specifically address issues that may arise from the use of AI in healthcare settings [36]. These issues include safety and effectiveness, liability, data protection and privacy, cybersecurity, and intellectual property law [37]. For instance, it is not clear how responsibility and accountability should be shared when implementing an AI-based recommendation causes clinical problems [38].

2.2. Ethical Implications

Ethical dilemmas in the application of AI in healthcare encompass a broad range of issues. Privacy and data protection are paramount because of the sensitive nature of personal health information. Informed consent is also critical, as patients must understand and agree to the use of AI in their care, recognizing the implications and possible outcomes of these technologies. Social gaps, characterized by disparities and inequalities within healthcare systems and broader society, may be intensified by the introduction of AI. For example, disparities in healthcare access can result in unequal distribution of medical AI across socioeconomic populations and healthcare systems, potentially widening existing health inequities.

Furthermore, the incorporation of AI in healthcare raises questions about the preservation of the medical consultation, which traditionally involves nuanced human interaction and shared decision-making between a patient and a healthcare provider. Empathy and sympathy, both foundational to patient care, may not be fully replicable by AI systems. While AI can enhance diagnostic and treatment processes, it is crucial to preserve the human elements of understanding and compassion that are integral to healing, and to ensure that AI supports, rather than replaces, the human-centric aspects of medical consultations, so that care continues to address individual patient needs and concerns [39]. There are also concerns about intrinsic biases in the data used to develop and test AI systems, which can lead to poor or harmful outcomes [40]. The principles of medical ethics, namely autonomy, beneficence, nonmaleficence, and justice, should be emphasized before AI is integrated into healthcare systems [22,39].

2.3. Societal Implications

AI can lead to healthcare inequities through biased data collection, biased algorithm development, and a lack of diversity in training data [41]. Once automated, such biases can produce discrimination and inequity at great scale [42]. Furthermore, the rapid commercial development of AI could challenge existing methods, protocols, standards, and regulatory measures that govern the development, deployment, and management of technology in healthcare settings. This could necessitate new national and international regulations to ensure that AI is developed and used ethically, safely, and equitably in healthcare [22]. Ensuring the ethical and legal implementation of AI, with consideration of its societal implications, requires continuous attention and thoughtful policy [43].

To ensure that AI-powered decisions uphold the principles of patient-centered care, AI systems must be developed with a focus on patient engagement and autonomy. Patient-centered care treats individuals as active participants in their health management, whose preferences and values guide clinical decisions. Trust in AI systems therefore hinges on their ability to operate transparently and to provide explanations that patients can understand. Only with such clarity can patients confidently and independently choose whether to accept the recommendations provided by AI [6].

2.4. Bridging the Gap with Computational Biology

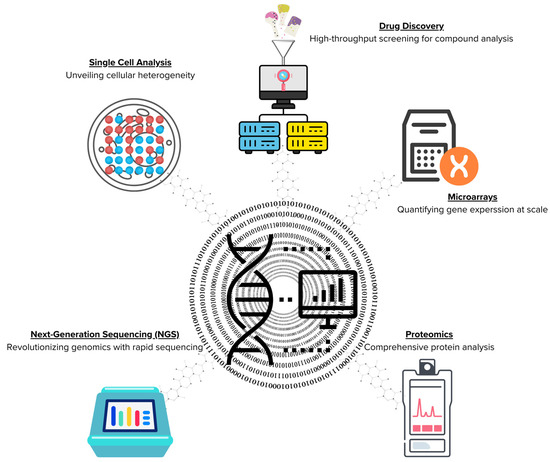

With the emergence of high-throughput technologies which generate massive amounts of data requiring advanced data analysis techniques, the lines between bioinformatics and data science have become increasingly indistinct. Both fields now share common methodologies and tools to manage, analyze, and interpret large datasets (Figure 2).

Figure 2.

Convergence of bioinformatics and data science in high-throughput technologies.

As the field of life sciences shifts towards a more data-centric, integrative, and computational approach, biomedical researchers must cultivate bioinformatics proficiency to keep pace with this evolution [44].

Skill gaps in this proficiency may impede modern research and fuel a global need for bioinformatics education and training. Bridging this gap is critical to the advancement of healthcare research including pharmaceutical and biopharmaceutical arenas [44].

Policy makers and research funders should acknowledge the existing gap between the ‘two cultures’ of clinical informatics and data science. The full social and economic benefits of digital health and data science can only be realized by accepting the interdisciplinary nature of biomedical informatics and by supporting a significant expansion of clinical informatics capacity and capability [45].

3. The Importance of Interdisciplinary Collaboration

Interdisciplinary collaboration is crucial for developing and implementing robust and effective AI solutions in healthcare. Diverse expertise, drawn from physicians, researchers, technologists, and policymakers, is needed to refine AI algorithms, validate their clinical utility, and address the ethical and regulatory challenges of implementation [46]. While progress has been made, substantial challenges persist. These include the need for (1) algorithmic and theoretical clarity, e.g., deeper theoretical foundations for interpretability, including definitions of what constitutes explainability in various contexts, and (2) trust and responsibility, e.g., aligning AI decision-making with ethical guidelines to ensure fairness, accountability, and transparency. The following case studies illustrate the complex challenges inherent in integrating AI into healthcare settings. To navigate these complexities and ensure ethical implementation, trust, and accountability in AI solutions deployed in patient care, collaborative interdisciplinary approaches that foster robust, ethical XAI frameworks are essential.

3.1. Case Studies and Examples

3.1.1. Human-in-the-Loop (HITL) Approach

Sezgin E. emphasizes the importance of a human-in-the-loop (HITL) approach in healthcare AI, in which AI systems are guided, communicated, and supervised by human expertise. This approach helps ensure safety and quality in healthcare services. Multidisciplinary teams are essential for exploring cost-effective and impactful AI solutions within this framework and for establishing robust HITL protocols [47]. These teams have successfully designed AI systems that significantly reduce diagnostic errors, enhance patient engagement through personalized care plans, and improve operational efficiency in healthcare facilities. To fully realize the potential of the HITL approach, solutions should be evaluated by quantifying their improvements in patient outcomes and operational efficiency, while ensuring ethical use through ongoing feedback and human oversight.

- Algorithmic/theoretical tie-in: defining interpretability is key, as it shapes how HITL systems communicate with physicians. Confidence scores, visual highlights of influential image areas, and information about alternative diagnoses the AI considered can all help physicians calibrate their trust in AI recommendations.

- Trust/responsibility tie-in: HITL protocols should include logging instances where physicians override the AI, along with their justifications. These data are valuable for quality assurance, refinement of AI models, and for detecting potential biases in the training data. While diverse datasets are crucial, HITL provides real-world bias detection—if physicians of a certain specialty consistently disagree with the AI, it could point to issues in the underlying data.
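A HITL override log of the kind described above could be as simple as the following Python sketch, which records each physician review of an AI recommendation, computes the override rate per specialty, and flags specialties whose rate is high enough to warrant a data or bias audit. The `ReviewEvent` fields, the example cases, the 20% threshold, and the minimum case count are hypothetical choices for illustration; a real protocol would define them together with clinicians and quality staff.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ReviewEvent:
    """One physician review of an AI recommendation (hypothetical schema)."""
    case_id: str
    specialty: str
    ai_label: str
    physician_label: str
    justification: str = ""  # free-text reason, recorded when the AI is overridden

    @property
    def overridden(self) -> bool:
        return self.ai_label != self.physician_label


def override_summary(events):
    """Return {specialty: (number_of_cases, override_rate)}."""
    cases = defaultdict(int)
    overrides = defaultdict(int)
    for e in events:
        cases[e.specialty] += 1
        overrides[e.specialty] += int(e.overridden)
    return {s: (cases[s], overrides[s] / cases[s]) for s in cases}


def flag_for_review(events, max_rate=0.2, min_cases=3):
    """Specialties whose override rate exceeds max_rate, given enough cases."""
    summary = override_summary(events)
    return sorted(s for s, (n, rate) in summary.items() if n >= min_cases and rate > max_rate)


log = [
    ReviewEvent("c1", "dermatology", "benign", "malignant", "atypical border"),
    ReviewEvent("c2", "dermatology", "benign", "benign"),
    ReviewEvent("c3", "dermatology", "benign", "malignant", "irregular pigment network"),
    ReviewEvent("c4", "dermatology", "malignant", "malignant"),
    ReviewEvent("r1", "radiology", "nodule", "nodule"),
    ReviewEvent("r2", "radiology", "no finding", "no finding"),
    ReviewEvent("r3", "radiology", "nodule", "nodule"),
]
print(override_summary(log))  # → {'dermatology': (4, 0.5), 'radiology': (3, 0.0)}
print(flag_for_review(log))   # → ['dermatology']
```

The minimum case count keeps a single disagreement in a rarely involved specialty from triggering an audit; the stored justifications give reviewers a starting point when a specialty is flagged.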

3.1.2. Interdisciplinary Research in Digital Health

Krause-Jüttler G. et al. provide a valuable case study on interdisciplinary research in digital health, involving collaboration among 20 researchers from medicine and engineering [48]. Their study emphasizes that success in these projects depends not only on individual expertise and adaptability, but also on effective team dynamics and organizational support. Factors like mutual respect, shared goals, and structures facilitating interaction are crucial for overcoming the challenges of interdisciplinary work.

- Algorithmic/theoretical tie-in: this case indirectly highlights the need for shared theoretical foundations. Medical researchers and computer scientists may approach AI in healthcare from different theoretical angles—one focusing on disease mechanisms, the other on computational efficiency. Aligning these perspectives is key to ensuring the AI tools developed truly address relevant medical needs.

- Trust/responsibility tie-in: interdisciplinary teams are better equipped to tackle ethical considerations from the outset of digital health solution design. Diverse perspectives help identify potential biases or unintended consequences early on, allowing for proactive measures. Such teams can also establish ethical oversight mechanisms, potentially incorporating student feedback as in the case of an intelligent tutoring system, to ensure values alignment throughout the development process.

3.1.3. Intelligent Tutoring System for Medical Students

Bilgic E. and Harley JM. demonstrate the power of interdisciplinary collaboration in developing innovative educational tools [49]. By combining expertise in AI, educational psychology, and medicine, they created an intelligent tutoring system that simulates complex patient scenarios. The system improved diagnostic accuracy and enhanced decision-making skills by providing immediate, personalized feedback and learning pathways adapted to student needs. Integrating these insights led to realistic patient interactions and adaptive difficulty levels, making the learning experience more engaging and effective.

- Algorithmic/theoretical tie-in: explaining AI decisions to students poses unique challenges; for example, students may need different levels of explanation than medical experts do. This case highlights the need for XAI methods that adapt to the user’s knowledge level, making AI reasoning understandable to learners.

- Trust/responsibility tie-in: in an educational context, it is especially important to ensure that the AI system itself is not a source of flawed reasoning or biases. Rigorous ethical oversight, potentially involving educational experts, is needed to analyze the system’s logic and the data it uses. This helps prevent the unintentional teaching of incorrect clinical assumptions or harmful stereotypes.
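One simple way an explanation could adapt to its audience is to render the same feature attributions at different levels of detail. In the hypothetical sketch below, `render_explanation` gives a learner a plain-language summary of the strongest non-zero factors and gives an expert the full list of signed attributions. The audience labels, the cutoff of two factors, the display labels, and the attribution values are all assumptions for illustration.

```python
def render_explanation(attributions, audience, max_items=2, labels=None):
    """Render feature attributions for a given audience (hypothetical levels)."""
    labels = labels or {}
    # Rank features by the magnitude of their contribution.
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if audience == "student":
        # Plain language: only the strongest non-zero factors, no numbers.
        top = [(name, v) for name, v in ranked if v != 0][:max_items]
        reasons = [
            f"{labels.get(name, name.replace('_', ' '))} "
            f"{'raised' if v > 0 else 'lowered'} the predicted risk"
            for name, v in top
        ]
        return "Main reasons: " + "; ".join(reasons) + "."
    if audience == "expert":
        # Every feature with its signed contribution.
        return ", ".join(f"{name}={v:+.3f}" for name, v in ranked)
    raise ValueError(f"unknown audience: {audience!r}")


attributions = {"age_over_65": 0.475, "copd": 0.275, "diabetes": 0.0}
print(render_explanation(attributions, "student", labels={"copd": "COPD"}))
# → Main reasons: age over 65 raised the predicted risk; COPD raised the predicted risk.
print(render_explanation(attributions, "expert"))
# → age_over_65=+0.475, copd=+0.275, diabetes=+0.000
```

Keeping a single set of attributions and varying only the presentation means the learner and the expert see explanations that cannot contradict each other.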

3.1.4. Quality Management Systems (QMS) in Healthcare AI

Integrating quality management system (QMS) principles into the development and deployment of healthcare AI is crucial for bridging the gap between research and real-world clinical use. A well-designed QMS establishes rigorous standards for safety, efficacy, and ethical compliance throughout the AI lifecycle [50,51]. Implementing a QMS requires adaptability to specific healthcare settings and close interdisciplinary collaboration. It fosters awareness, education, and organizational change by aligning AI development with clinical needs and ensuring a user-centered approach. The result is increased system reliability, improved patient outcomes, and streamlined workflows.

- Algorithmic/theoretical tie-in: for a QMS to meaningfully ensure the quality of AI systems, robust theoretical definitions of concepts such as interpretability are needed. Metrics for interpretability, together with benchmarks that define when an AI system is sufficiently safe for clinical use, are essential for establishing clear quality criteria within the QMS framework.

- Trust/responsibility tie-in: clear ethical guidelines and regulatory frameworks are fundamental for responsible AI in healthcare. Interdisciplinary teams, including ethicists, healthcare practitioners, and AI experts, are best positioned to develop comprehensive QMS protocols. These protocols should encompass ethical considerations at all stages, ensuring that patient safety, fairness, and transparency are embedded in AI systems.
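As an illustration of how such definitions might translate into quality metrics, the following Python sketch implements a hypothetical QMS release gate that checks predictive accuracy, the fidelity of an explanation surrogate (how often a simplified explanatory model agrees with the deployed model), and the accuracy gap between patient subgroups. The three metrics, all thresholds, and the validation data are assumptions; an actual QMS would set them with clinical, regulatory, and ethics input.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReleaseCriteria:
    # Hypothetical thresholds; a real QMS would set these with clinical and regulatory input.
    min_accuracy: float = 0.85
    min_fidelity: float = 0.90
    max_subgroup_gap: float = 0.05


def accuracy(preds, labels):
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def fidelity(model_preds, surrogate_preds):
    """Share of cases where the explanatory surrogate agrees with the deployed model."""
    return sum(m == s for m, s in zip(model_preds, surrogate_preds)) / len(model_preds)


def subgroup_gap(preds, labels, groups):
    """Largest difference in accuracy between any two patient subgroups."""
    accs = []
    for g in set(groups):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        accs.append(accuracy([preds[i] for i in idx], [labels[i] for i in idx]))
    return max(accs) - min(accs)


def release_check(preds, labels, surrogate_preds, groups, criteria=ReleaseCriteria()):
    report = {
        "accuracy": accuracy(preds, labels),
        "fidelity": fidelity(preds, surrogate_preds),
        "subgroup_gap": subgroup_gap(preds, labels, groups),
    }
    passed = (
        report["accuracy"] >= criteria.min_accuracy
        and report["fidelity"] >= criteria.min_fidelity
        and report["subgroup_gap"] <= criteria.max_subgroup_gap
    )
    return passed, report


# Hypothetical validation set: 10 cases in two subgroups, A and B.
labels = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
preds = [1, 0, 1, 0, 0, 0, 1, 0, 1, 0]      # one error, in subgroup A
surrogate = [0, 0, 1, 0, 0, 0, 1, 0, 1, 0]  # disagrees with the model once
groups = ["A"] * 5 + ["B"] * 5

passed, report = release_check(preds, labels, surrogate, groups)
print(passed, report)
```

Here the model meets the accuracy and fidelity thresholds (0.9 each) but fails the gate, because its accuracy in subgroup A trails subgroup B by about 0.2. This is the kind of fairness check that aggregate accuracy alone would hide.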

Cross-disciplinary collaboration can be highly rewarding yet challenging. We propose a series of best practices for successful cross-disciplinary collaboration based on research findings (Figure 3).

Figure 3.

Best practices for successful cross-disciplinary collaboration.

Successful cross-disciplinary collaboration in bioinformatics requires a firm foundation built on mutual understanding, respect, and open-mindedness to diverse perspectives [52,53]. Fostering routine dialogue through joint workshops and academic initiatives encourages building this foundation, as does providing mentorship to early-career researchers navigating this complex terrain [54,55].

Additionally, implementing structures and strategies that promote accountability and effective communication is crucial. Clarifying team member roles, developing comprehensive data stewardship plans for accessibility and reproducibility, and articulating a unifying vision can bridge disciplinary gaps [56,57,58,59,60]. Understanding and accommodating varying research paces across disciplines is also key to maintaining momentum in a collaborative research collective [14,61]. Cultivating an egalitarian and cohesive research collective depends on valuing all inputs equitably, regularly sharing insights and resources, and refining approaches through continuous feedback [62,63,64,65,66]. Transparency and proactive conflict resolution further help teams navigate the inherent challenges of collaboration. By following these best practices, cross-disciplinary teams can reap the benefits of a diverse range of expertise through the structured exchange of ideas, data, and people for bioinformatics advancement [67,68,69].

3.2. The Role of Academia, Industry, and Healthcare Professionals

Academia, industry, and healthcare professionals all play essential roles in fostering interdisciplinary collaboration in healthcare AI.

Academia:

- Provides education and training in AI-related fields, advancing theoretical understanding and practical applications in healthcare.

- Fosters collaboration through joint programs, courses, and research projects uniting disciplines like medicine, computer science, engineering, and ethics [70,71,72]. By promoting interdisciplinary education and research, academia bridges the gap between data science and clinical contexts, ensuring AI solutions address real-world healthcare needs.

Industry:

- Drives development, implementation, and evaluation of AI solutions in healthcare settings [73].

- Collaborates with academia and healthcare providers to translate research into practice, addressing real-world problems and providing resources and expertise [73,74].

- Participates in conferences and workshops for knowledge sharing and idea generation, further amplifying cross-disciplinary interaction [74].

Healthcare professionals:

- Offer essential clinical insights to identify priority areas for AI implementation and guide the development and evaluation of solutions [75].

- Collaborate on interdisciplinary research projects, share domain expertise, and advocate for the effective and ethical integration of AI within healthcare practice [75,76].

4. The Role of Conferences in Fostering Collaboration and Innovation

Conferences are vital for interdisciplinary knowledge exchange, fostering global connections and forming professional networks [77,78,79]. Special sessions within these conferences can create an even richer environment for progress in explainable AI (XAI). These focused sessions bring together a diverse group of researchers, physicians, scientists, and computational biologists to delve deeper into specific XAI challenges and opportunities. Opportunities for in-depth discussion and hands-on experience further enhance collaboration and innovative potential among participants [80].

The benefits of interdisciplinary collaboration for XAI advancement may be maximized by thoughtfully structuring a special session, as in the format of [78] (Table 2).

Table 2.

Example of a special session conference format.

While interdisciplinary conferences are not the sole driver of XAI progress, they play a crucial and distinct role within the broader innovation ecosystem. Conferences bring together professionals from diverse fields such as psychology, computer science, ethics, and healthcare, fostering a holistic understanding of AI systems and emphasizing the importance of user-centric explanations and ethical considerations in XAI development. Computer scientists and statisticians can collaborate to create new methods for generating interpretable models, while domain experts provide essential feedback and validation. Innovative XAI solutions also often emerge from the serendipitous integration of insights from disparate methodologies. For instance, collaborations between data scientists and legal experts can ensure compliance with legal frameworks while maintaining technical feasibility, and partnerships between social scientists and computer scientists can yield user-friendly, intuitive XAI tools.

Global collaboration and networking at conferences are essential for disseminating and scaling XAI initiatives. Rapid feedback on early-stage concepts helps refine ideas and avoid dead ends. For example, a medical ethicist raising concerns about potential bias in a system could prompt its redesign, preventing harm. Direct interactions with end users like clinicians provide valuable feedback, driving user-oriented improvements in XAI tools.

Specialized conferences are particularly influential. For instance, the Association for Computing Machinery Conference on Fairness, Accountability, and Transparency (ACM FAccT) has united perspectives from multiple disciplines to shape core XAI frameworks, models, and evaluation methods, and presentations on algorithmic bias have informed techniques to improve model interpretability and auditability [19,81,82,83]. The AI for Good Global Summit, organized by the International Telecommunication Union (ITU), promotes the use of AI to advance global development priorities in health, climate, and sustainable infrastructure. These and other events facilitate long-term research partnerships that have led to high-impact publications on many aspects of XAI, including visualization tools, user-centric evaluations, and algorithmic fairness [84,85,86,87,88].

Standardization is essential for XAI reproducibility and reliability. Conferences can spearhead the establishment of standardized frameworks and best practices, including evaluation criteria for XAI methods and guidelines for responsible AI development [89,90,91].

Targeted conferences such as the International Conference on Learning Representations (ICLR) provide in-depth knowledge sharing on representation learning. ICLR 2022 workshops featuring AI developers, social scientists, and medical professionals helped crystallize priorities for patient-facing XAI in healthcare, covering subjects that ranged from machine learning for social good and practical machine learning for developing countries to the accountable and ethical use of AI technologies in high-stakes applications. Such multidisciplinary discussions can rapidly identify gaps and point toward solutions.

Gaps identified at interdisciplinary conferences can accelerate innovation as they are taken up in wider multidisciplinary discussion. Initiatives such as the “Practical ML for Developing Countries Workshop” at ICLR 2022 brought together representatives from academia, industry, and government agencies to reflect on the design and implementation of AI solutions in resource-constrained environments. Such focused collaboration not only accelerates innovation cycles in XAI research and application but can also be expanded upon by interdisciplinary cohorts who converge at other specialized venues. For example, the Economics of Artificial Intelligence Conference hosted by the National Bureau of Economic Research (NBER) features interdisciplinary collaborations among researchers from various institutions exploring the economic implications of AI, including how to build trust in AI systems and ensure compliance with regulations such as the European Union General Data Protection Regulation (GDPR). Although the GDPR is an EU regulation, its influence is global: many countries have adopted similar data privacy frameworks, and organizations worldwide must be aware of its principles when handling the personal data of individuals in the EU. Conferences disseminate knowledge about such regulatory requirements and promote the adoption of responsible AI practices.

Virtual conferences increase accessibility and inclusivity, extending reach and promoting diverse participation [78]. The adaptability of ISCB’s GLBIO conference series to virtual formats illustrates how this shift broadens representation and enriches discussions with diverse research and viewpoints [77,80,92,93,94]. GLBIO has also promoted diversity and inclusivity by offering fellowship awards and by giving priority to first-time attendees, individuals from underrepresented groups, and students [94].

Conferences foster collaboration and networking among diverse researchers, improving access to resources and enhancing social trust within the research community [79]. GLBIO has incorporated novel approaches, such as “matchmaking” sessions, to actively encourage communication and collaboration [95,96].

Speakers play a key role in audience engagement and innovation, as they can adapt content to encourage interactivity [97]. More broadly, academic conferences serve as platforms for situated learning, research sharing, and agenda setting. Organizations promoting XAI discourse in healthcare must navigate the many complexities of organizing major conferences in order to support innovation in the field [80].

Advancing XAI through Goal-Oriented Collaboration

To effectively tackle the challenges of interpretable AI, conferences must strive to direct, rather than simply gather, diverse perspectives toward specific XAI obstacles. Foundational gaps deserve emphasis, with dedicated tracks and sessions on the theoretical limitations of current AI models and the need for breakthroughs in interpretability by design. Sessions should cover the theoretical foundations, current research, and practical applications of making algorithms more understandable and interpretable. As a complement, keynote speakers may highlight the core theoretical hurdles related to explainability, setting a clear agenda for the conference.

Targeted interdisciplinary projects may be encouraged through seed funding, offering small grants to teams formed during the conference to pursue collaborative projects that directly address fundamental challenges in interpretable AI. ‘Challenge workshops’ or cross-disciplinary ‘hackathons’ provide a focused space in which interdisciplinary teams developing new interpretability approaches can work on specific, well-defined theoretical or algorithmic problems.

To bridge theory and practice, sessions discussing application-driven challenges are essential. Stakeholders and end users, e.g., policymakers, ethicists, physicians, legal scholars, industry representatives, affected communities, and domain experts, can outline real-world cases where explainability is essential but current AI falls short. Joint presentations encourage researchers across disciplines to co-present their work, demonstrating how theoretical advances translate into practical tools. Such integration of theoretical and applied research is crucial for turning theoretical insights into actionable strategies for building interpretable AI systems.

Theoretical and practical knowledge may be advanced by inviting keynote speakers from disciplines such as psychology, cognitive science, and domain-specific areas to provide insights into human interpretability and its integration into AI models. Tutorials on the theoretical foundations of AI may be offered specifically for non-computer scientists, fostering a shared language for collaboration. Explainability ‘clinics’ may be established in which practitioners describe the problems they face in implementing XAI to theoreticians who can offer insights and potential solutions. Submissions focused on developing AI models with built-in interpretability should be encouraged.

Evaluation and reproducibility may be emphasized through standardization workshops, i.e., dedicated spaces for developing standardized interpretability metrics or benchmarks for comparing XAI methods. Sessions on sharing code, models, and datasets can encourage open-source practices that support reproducibility and further research. Sessions on replicating foundational XAI results may help address reproducibility challenges. Publishing detailed conference proceedings makes findings and discussions accessible to a wider audience, fostering further collaboration. Additional support for interdisciplinary collaboration, e.g., networking, research initiatives, and funding, helps extend engagement to the broader AI community, raising awareness and encouraging collaboration beyond the conference.

Encouraging ethical and responsible AI development is integral to XAI advancement. Ethics workshops can facilitate discussion of the ethical and societal implications of interpretable AI, emphasizing transparency, fairness, accountability, and alignment with societal values. Social scientists and ethicists should be included in this discourse to guide ethical AI development and address its social impact.

5. Looking Forward: Future Directions and Innovations

Explainable AI (XAI) and computational biology are two rapidly evolving fields that are expected to bring significant advancements in the future.

5.1. Future Trends in Explainable AI

XAI aims to make the decision-making process of AI models transparent and understandable. This is particularly important in healthcare, where the decisions made by AI can have significant impacts on patient outcomes [6,22,26,98].

As we enter a period of unparalleled data accumulation and analysis, computational biology will continue to advance our understanding of molecular systems [1]. The success and wide acceptance of open data projects will shape how patients, healthcare practitioners, and the general public view computational biology as a field [1]. XAI methods that demystify complex biological models will help medical professionals understand and trust AI-based clinical decision support systems [26]. By understanding how a model works, researchers can identify potential biases or shortcomings and refine the model to improve its accuracy. Furthermore, XAI may reveal unexpected patterns or relationships within biological data, e.g., gene interactions and protein structures, leading to new research questions and potential breakthroughs.

The future of XAI in healthcare is expected to focus on addressing the interdisciplinary nature of explainability, which involves medical, legal, ethical, and societal considerations [6]. This will require fostering multidisciplinary collaboration and sensitizing developers, healthcare professionals, and legislators to the challenges and limitations of opaque algorithms in medical AI [6]. By translating complex biological findings into insights that doctors and researchers can readily understand and use, XAI can bridge the gap between data and clinical action. This includes personalized treatment approaches derived from interpretable models of how individual factors influence biological processes.

5.2. Healthcare 5.0 and Explainable AI

Healthcare 5.0 is a vision for the future of healthcare that focuses on real-time patient monitoring, ambient control and wellness, and privacy compliance through assisted technologies [99]. XAI has emerged as a critical component in the evolution of healthcare, particularly in the context of Healthcare 5.0, where it plays a pivotal role in unlocking opportunities and addressing complex challenges [100]. XAI aims to produce a human-interpretable justification for each model output, increasing confidence if the results appear plausible and match clinicians’ expectations [99].

In the context of Healthcare 5.0, XAI development involves identifying suitable libraries that support visual explainability and interpretability of AI model outputs [99]. For instance, in medical imaging applications, end-to-end explainability can be provided by combining AI with federated transfer learning [99].

5.3. Balancing Explainability and Accuracy/Performance in Future AI Models

More complex systems are capable of modeling intricate relationships in the data, leading to higher accuracy, but their complexity often makes them less interpretable [101]. This trade-off between accuracy and explainability is a significant concern, especially for complex deep learning techniques [102].

Research has shown that while professionals and the public value the explainability of AI systems, they may value it less in healthcare when it is weighed against system accuracy [101]. Van der Veer et al. report that 88% of responding physicians preferred explainable over non-explainable AI, although respondents were not asked to trade off explainability against accuracy [101].

However, the absence of a plausible explanation does not imply an inaccurate model [99]. Therefore, instead of setting categorical rules around AI explainability, policymakers should consider the context and the specific needs of the application [101].

The future of healthcare and research is poised to be significantly influenced by advancements in AI, computational biology, and their integration into clinical practice and research. These advancements are expected to revolutionize drug discovery, disease diagnosis, treatment recommendations, and patient engagement [10,103,104,105,106,107].

One of the most promising areas of exploration is the application of AI and computational biology to drug discovery. By modeling complex relationships between input and output variables in high-dimensional data, e.g., between candidate chemical compounds and the range of properties or biological activities under consideration, AI can transform large amounts of aggregated data into usable knowledge and thereby expedite drug discovery and optimization [10,104,107,108]. For instance, the reinforcement learning for structural evolution (ReLeaSE) system, developed at the University of North Carolina, demonstrates the ability to design new, patentable chemical entities with specific biological activities and optimal safety profiles, potentially shortening the time required to bring a new drug candidate to clinical trials [108].

Technological advancements in AI and data science are expected to continue at a rapid pace, and the AI-associated healthcare market is projected to grow significantly [109,110]. These advancements are transforming not only healthcare delivery but also the practice of medicine itself [75,103,111,112].

Future research topics and areas of exploration are likely to focus on the ethical, legal, and societal challenges posed by the rapid advancements in AI. Addressing these challenges will require a multidisciplinary approach and the development of more rigorous AI techniques and models [103,113,114].

Conferences and special issues of journals will play a crucial role in shaping these future directions. Examples include special issues of the IEEE Journal of Biomedical and Health Informatics on topics such as “Ethical AI for Biomedical and Health Informatics in the Generative Era” and “Advancing Personalized Healthcare: Integrating AI and Health Informatics” [114]. These platforms provide opportunities for interdisciplinary collaboration among AI experts, computer scientists, healthcare professionals, and informatics specialists, which is vital for developing robust AI systems and ethical guidelines [103,114].

6. Conclusions

While interdisciplinary collaboration, bringing together expertise from computer science, cognitive science, psychology, and domain-specific fields, is invaluable in addressing the challenges of interpretable AI, it is not a panacea. The obstacles are deeply rooted in the fundamental nature of the algorithms themselves, requiring advances in both the theoretical underpinnings of AI and the development of new, inherently interpretable models. Nonetheless, collaborations across fields can foster innovative approaches to interpretability, such as leveraging human-centric design principles or drawing on theories of human cognition to inform model development. AI applications in healthcare demand transparency and explainability to address legal, ethical, and societal concerns, especially in clinical decision-making and patient care [72,73,74,75].

The integration of AI and computational biology into healthcare and research holds immense potential for accelerating discoveries, improving diagnostics, and enhancing patient care. However, it also poses significant challenges that must be addressed through continued research, innovation, and interdisciplinary collaboration [103,113,114]. Computational biology is pivotal to linking data science to clinical applications, necessitating interdisciplinary collaboration and training [34]. Despite ongoing efforts, the field of interpretable AI is still in its early stages, partly because model complexity continues to increase as AI research progresses, often outpacing the development of interpretability techniques. Additionally, there is not yet a consensus on best practices for AI interpretability, nor a standardized framework for evaluating the effectiveness of different interpretability methods.

The future of explainable AI and computational biology is nonetheless promising, with significant advancements expected in the development of transparent AI models and new computational methods for biological research. In the context of Healthcare 5.0, this future will likely involve a careful balance between explainability and accuracy, with the ultimate goal of enhancing patient outcomes while upholding the compassion, empathy, and ethical considerations that define the core of healthcare [100]. These advancements will likely have a profound impact on healthcare and biological research, leading to a deeper understanding of biological systems [72,73,74,75,76].

Conferences are key to collaboration and innovation, uniting experts across fields, enabling knowledge exchange, and stimulating the sharing of ideas [73,74,75,103]. They are vital for academic progress, research refinement, and real-world impact. Academia, industry, and healthcare professionals all play a crucial role in fostering the interdisciplinary collaboration needed for effective AI implementation in healthcare.

Author Contributions

Conceptualization, A.U.P., Q.G., R.E., D.M. and N.M.; methodology, A.U.P., Q.G., D.M. and N.M.; A.U.P., Q.G. and R.E.; writing—original draft preparation, A.U.P., Q.G., R.E., D.M. and N.M.; writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

More information on integrated, interdisciplinary bioinformatics efforts for healthcare advancement may be found at: www.biodataxai.com (accessed on 16 December 2023).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Fogg, C.N.; Kovats, D.E. Computational Biology: Moving into the Future One Click at a Time. PLoS Comput. Biol. 2015, 11, e1004323.

- Mac Gabhann, F.; Pitzer, V.E.; Papin, J.A. The blossoming of methods and software in computational biology. PLoS Comput. Biol. 2023, 19, e1011390.

- Journal of Proteomics & Bioinformatics. Innovations On Computational Biology. Available online: https://www.longdom.org/peer-reviewed-journals/innovations-on-computational-biology-12981.html (accessed on 15 December 2023).

- Confalonieri, R.; Coba, L.; Wagner, B.; Besold, T.R. A historical perspective of explainable Artificial Intelligence. WIREs Data Min. Knowl. Discov. 2021, 11, e1391.

- Xu, F.; Uszkoreit, H.; Du, Y.; Fan, W.; Zhao, D.; Zhu, J. Explainable AI: A Brief Survey on History, Research Areas, Approaches and Challenges. In Natural Language Processing and Chinese Computing; Tang, J., Kan, M.-Y., Zhao, D., Li, S., Zan, H., Eds.; Springer: Cham, Switzerland, 2019; Volume 11839, pp. 563–574.

- Amann, J.; Blasimme, A.; Vayena, E.; Frey, D.; Madai, V.I. Explainability for artificial intelligence in healthcare: A multidisciplinary perspective. BMC Med. Inform. Decis. Mak. 2020, 20, 310.

- Chaddad, A.; Peng, J.; Xu, J.; Bouridane, A. Survey of Explainable AI Techniques in Healthcare. Sensors 2023, 23, 634.

- Karim, M.R.; Islam, T.; Shajalal, M.; Beyan, O.; Lange, C.; Cochez, M.; Rebholz-Schuhmann, D.; Decker, S. Explainable AI for Bioinformatics: Methods, Tools and Applications. Brief. Bioinform. 2023, 24, bbad236.

- Kurdziolek, M. Explaining the Hard to Explain: An Overview of Explainable AI (XAI) for UX. 2022. Available online: https://www.youtube.com/watch?v=JzK_SBhakUQ (accessed on 16 December 2023).

- Zhang, Y.; Luo, M.; Wu, P.; Wu, S.; Lee, T.-Y.; Bai, C. Application of Computational Biology and Artificial Intelligence in Drug Design. Int. J. Mol. Sci. 2022, 23, 13568.

- Ghosh, R. Guest Post—The Paradox of Hyperspecialization and Interdisciplinary Research. Available online: https://scholarlykitchen.sspnet.org/2023/08/29/guest-post-the-paradox-of-hyperspecialization-and-interdisciplinary-research/ (accessed on 15 December 2023).

- Oester, S.; Cigliano, J.A.; Hind-Ozan, E.J.; Parsons, E.C.M. Why Conferences Matter—An Illustration from the International Marine Conservation Congress. Front. Mar. Sci. 2017, 4, 257.

- Sarabipour, S.; Khan, A.; Seah, Y.F.S.; Mwakilili, A.D.; Mumoki, F.N.; Sáez, P.J.; Schwessinger, B.; Debat, H.J.; Mestrovic, T. Changing scientific meetings for the better. Nat. Hum. Behav. 2021, 5, 296–300.

- Daniel, K.L.; McConnell, M.; Schuchardt, A.; Peffer, M.E. Challenges facing interdisciplinary researchers: Findings from a professional development workshop. PLoS ONE 2022, 17, e0267234.

- Adamidi, E.S.; Mitsis, K.; Nikita, K.S. Artificial intelligence in clinical care amidst COVID-19 pandemic: A systematic review. Comput. Struct. Biotechnol. J. 2021, 19, 2833–2850.

- Garcia Sanchez, J.J.; Thompson, J.; Scott, D.A.; Evans, R.; Rao, N.; Sörstadius, E.; James, G.; Nolan, S.; Wittbrodt, E.T.; Abdul Sultan, A.; et al. Treatments for Chronic Kidney Disease: A Systematic Literature Review of Randomized Controlled Trials. Adv. Ther. 2022, 39, 193–220.

- Payrovnaziri, S.N.; Chen, Z.; Rengifo-Moreno, P.; Miller, T.; Bian, J.; Chen, J.H.; Liu, X.; He, Z. Explainable artificial intelligence models using real-world electronic health record data: A systematic scoping review. J. Am. Med. Inform. Assoc. 2020, 27, 1173–1185.

- Zhang, X.R.; Ma, T.; Wang, Y.C.; Hu, S.; Yang, Y. Development of a Novel Method for the Clinical Visualization and Rapid Identification of Multidrug-Resistant Candida auris. Microbiol. Spectr. 2023, 11, e0491222.

- Dolezal, J.M.; Wolk, R.; Hieromnimon, H.M.; Howard, F.M.; Srisuwananukorn, A.; Karpeyev, D.; Ramesh, S.; Kochanny, S.; Kwon, J.W.; Agni, M.; et al. Deep learning generates synthetic cancer histology for explainability and education. Npj Precis. Oncol. 2023, 7, 49.

- Computational Biology—Focus Areas. Available online: https://www.mayo.edu/research/departments-divisions/computational-biology/focus-areas (accessed on 16 December 2023).

- Patel, A.; Balis, U.G.J.; Cheng, J.; Li, Z.; Lujan, G.; McClintock, D.S.; Pantanowitz, L.; Parwani, A. Contemporary Whole Slide Imaging Devices and Their Applications within the Modern Pathology Department: A Selected Hardware Review. J. Pathol. Inform. 2021, 12, 50.

- Al Kuwaiti, A.; Nazer, K.; Al-Reedy, A.; Al-Shehri, S.; Al-Muhanna, A.; Subbarayalu, A.V.; Al Muhanna, D.; Al-Muhanna, F.A. A Review of the Role of Artificial Intelligence in Healthcare. J. Pers. Med. 2023, 13, 951.

- Mudgal, S.K.; Agarwal, R.; Chaturvedi, J.; Gaur, R.; Ranjan, N. Real-world application, challenges and implication of artificial intelligence in healthcare: An essay. Pan Afr. Med. J. 2022, 43, 3.

- Khan, B.; Fatima, H.; Qureshi, A.; Kumar, S.; Hanan, A.; Hussain, J.; Abdullah, S. Drawbacks of Artificial Intelligence and Their Potential Solutions in the Healthcare Sector. Biomed. Mater. Devices 2023, 1, 731–738.

- Sunarti, S.; Fadzlul Rahman, F.; Naufal, M.; Risky, M.; Febriyanto, K.; Masnina, R. Artificial intelligence in healthcare: Opportunities and risk for future. Gac. Sanit. 2021, 35 (Suppl. 1), S67–S70.

- He, Z.; Zhang, R.; Diallo, G.; Huang, Z.; Glicksberg, B.S. Editorial: Explainable artificial intelligence for critical healthcare applications. Front. Artif. Intell. 2023, 6, 1282800.

- Pierce, R.L.; van Biesen, W.; van Cauwenberge, D.; Decruyenaere, J.; Sterckx, S. Explainability in medicine in an era of AI-based clinical decision support systems. Front. Genet. 2022, 13, 903600.

- Patel, A.U.; Williams, C.L.; Hart, S.N.; Garcia, C.A.; Durant, T.J.S.; Cornish, T.C.; McClintock, D.S. Cybersecurity and Information Assurance for the Clinical Laboratory. J. Appl. Lab. Med. 2023, 8, 145–161.

- Kelly, C.J.; Karthikesalingam, A.; Suleyman, M.; Corrado, G.; King, D. Key challenges for delivering clinical impact with artificial intelligence. BMC Med. 2019, 17, 195.

- National Research Council (US) Committee on Frontiers at the Interface of Computing and Biology. Challenge Problems in Bioinformatics and Computational Biology from Other Reports. In Catalyzing Inquiry at the Interface of Computing and Biology; Wooley, J.C., Lin, H.S., Eds.; National Academies Press: Washington, DC, USA, 2005.

- Nussinov, R. Advancements and challenges in computational biology. PLoS Comput. Biol. 2015, 11, e1004053.

- Samuriwo, R. Interprofessional Collaboration-Time for a New Theory of Action? Front. Med. 2022, 9, 876715.

- Warren, J.L.; Warren, J.S. The Case for Understanding Interdisciplinary Relationships in Health Care. Ochsner J. 2023, 23, 94–97.

- Williams, P. Challenges and opportunities in computational biology and systems biology. Int. J. Swarm Evol. Comput. 2023, 12, 304.

- Zajac, S.; Woods, A.; Tannenbaum, S.; Salas, E.; Holladay, C.L. Overcoming Challenges to Teamwork in Healthcare: A Team Effectiveness Framework and Evidence-Based Guidance. Front. Commun. 2021, 6, 606445.

- Naik, N.; Hameed, B.M.Z.; Shetty, D.K.; Swain, D.; Shah, M.; Paul, R.; Aggarwal, K.; Ibrahim, S.; Patil, V.; Smriti, K.; et al. Legal and Ethical Consideration in Artificial Intelligence in Healthcare: Who Takes Responsibility? Front. Surg. 2022, 9, 862322.

- Gerke, S.; Minssen, T.; Cohen, G. Ethical and legal challenges of artificial intelligence-driven healthcare. In Artificial Intelligence in Healthcare; Bohr, A., Memarzadeh, K., Eds.; Academic Press imprint of Elsevier: London, UK; San Diego, CA, USA, 2020; pp. 295–336.

- Ganapathy, K. Artificial Intelligence and Healthcare Regulatory and Legal Concerns. Telehealth Med. Today 2021, 6.

- Farhud, D.D.; Zokaei, S. Ethical Issues of Artificial Intelligence in Medicine and Healthcare. Iran. J. Public Health 2021, 50, i–v.

- Karimian, G.; Petelos, E.; Evers, S.M.A.A. The ethical issues of the application of artificial intelligence in healthcare: A systematic scoping review. AI Ethics 2022, 2, 539–551.

- Jeyaraman, M.; Balaji, S.; Jeyaraman, N.; Yadav, S. Unraveling the Ethical Enigma: Artificial Intelligence in Healthcare. Cureus 2023, 15, e43262.

- Redrup Hill, E.; Mitchell, C.; Brigden, T.; Hall, A. Ethical and legal considerations influencing human involvement in the implementation of artificial intelligence in a clinical pathway: A multi-stakeholder perspective. Front. Digit. Health 2023, 5, 1139210.

- Davenport, T.; Kalakota, R. The potential for artificial intelligence in healthcare. Future Healthc. J. 2019, 6, 94–98.

- Attwood, T.K.; Blackford, S.; Brazas, M.D.; Davies, A.; Schneider, M.V. A global perspective on evolving bioinformatics and data science training needs. Brief. Bioinform. 2019, 20, 398–404.

- Scott, P.; Dunscombe, R.; Evans, D.; Mukherjee, M.; Wyatt, J. Learning health systems need to bridge the ‘two cultures’ of clinical informatics and data science. J. Innov. Health Inform. 2018, 25, 126–131.

- Showalter, T. Unlocking the power of health care AI tools through clinical validation. Medical Economics 2023. Available online: https://www.medicaleconomics.com/view/unlocking-the-power-of-health-care-ai-tools-through-clinical-validation (accessed on 16 December 2023).

- Sezgin, E. Artificial intelligence in healthcare: Complementing, not replacing, doctors and healthcare providers. Digit. Health 2023, 9, 20552076231186520. [Google Scholar] [CrossRef]

- Krause-Jüttler, G.; Weitz, J.; Bork, U. Interdisciplinary Collaborations in Digital Health Research: Mixed Methods Case Study. JMIR Hum. Factors 2022, 9, e36579. [Google Scholar] [CrossRef]

- Bilgic, E.; Harley, J.M. Analysis: Using AI in Health Sciences Education Requires Interdisciplinary Collaboration and Risk Assessment. Available online: https://brighterworld.mcmaster.ca/articles/analysis-using-ai-in-health-sciences-education-requires-interdisciplinary-collaboration-and-risk-assessment/ (accessed on 15 December 2023).

- Overgaard, S.M.; Graham, M.G.; Brereton, T.; Pencina, M.J.; Halamka, J.D.; Vidal, D.E.; Economou-Zavlanos, N.J. Implementing quality management systems to close the AI translation gap and facilitate safe, ethical, and effective health AI solutions. NPJ Digit. Med. 2023, 6, 218. [Google Scholar] [CrossRef] [PubMed]

- Patel, A.U.; Shaker, N.; Erck, S.; Kellough, D.A.; Palermini, E.; Li, Z.; Lujan, G.; Satturwar, S.; Parwani, A.V. Types and frequency of whole slide imaging scan failures in a clinical high throughput digital pathology scanning laboratory. J. Pathol. Inform. 2022, 13, 100112. [Google Scholar] [CrossRef] [PubMed]

- Turner, R.; Cotton, D.; Morrison, D.; Kneale, P. Embedding interdisciplinary learning into the first-year undergraduate curriculum: Drivers and barriers in a cross-institutional enhancement project. Teach. High. Educ. 2024, 29, 1092–1108. [Google Scholar] [CrossRef]

- Rutherford, M. Standardized Nursing Language: What Does It Mean for Nursing Practice? OJIN Online J. Issues Nurs. 2008, 13, 1–9. [Google Scholar] [CrossRef]

- Grandien, C.; Johansson, C. Institutionalization of communication management. Corp. Commun. Int. J. 2012, 17, 209–227. [Google Scholar] [CrossRef]

- In support of early career researchers. Nat. Commun. 2021, 12, 2896. [CrossRef] [PubMed]

- Bolduc, S.; Knox, J.; Ristroph, E.B. Evaluating team dynamics in interdisciplinary science teams. High. Educ. Eval. Dev. 2023, 17, 70–81. [Google Scholar] [CrossRef]

- Nancarrow, S.A.; Booth, A.; Ariss, S.; Smith, T.; Enderby, P.; Roots, A. Ten principles of good interdisciplinary team work. Hum. Resour. Health 2013, 11, 19.

- Kilpatrick, K.; Paquette, L.; Jabbour, M.; Tchouaket, E.; Fernandez, N.; Al Hakim, G.; Landry, V.; Gauthier, N.; Beaulieu, M.D.; Dubois, C.A. Systematic review of the characteristics of brief team interventions to clarify roles and improve functioning in healthcare teams. PLoS ONE 2020, 15, e0234416.

- Wruck, W.; Peuker, M.; Regenbrecht, C.R.A. Data management strategies for multinational large-scale systems biology projects. Brief. Bioinform. 2014, 15, 65–78.

- Ng, D. Embracing interdisciplinary connections in academia. Nat. Microbiol. 2022, 7, 470.

- Nyström, M.E.; Karltun, J.; Keller, C.; Andersson Gäre, B. Collaborative and partnership research for improvement of health and social services: Researcher’s experiences from 20 projects. Health Res. Policy Syst. 2018, 16, 46.

- Charles, C.C. Building better bonds: Facilitating interdisciplinary knowledge exchange within the study of the human–animal bond. Anim. Front. 2014, 4, 37–42.

- Yeboah, A. Knowledge sharing in organization: A systematic review. Cogent Bus. Manag. 2023, 10, 2195027.

- Fazey, I.; Bunse, L.; Msika, J.; Pinke, M.; Preedy, K.; Evely, A.C.; Lambert, E.; Hastings, E.; Morris, S.; Reed, M.S. Evaluating knowledge exchange in interdisciplinary and multi-stakeholder research. Glob. Environ. Change 2014, 25, 204–220.

- Liu, F.; Lu, Y.; Wang, P. Why Knowledge Sharing in Scientific Research Teams Is Difficult to Sustain: An Interpretation From the Interactive Perspective of Knowledge Hiding Behavior. Front. Psychol. 2020, 11, 537833.

- Chouvarda, I.; Mountford, N.; Trajkovik, V.; Loncar-Turukalo, T.; Cusack, T. Leveraging Interdisciplinary Education Toward Securing the Future of Connected Health Research in Europe: Qualitative Study. J. Med. Internet Res. 2019, 21, e14020.

- Ding, Y.; Pulford, J.; Crossman, S.; Bates, I. Practical Actions for Fostering Cross-Disciplinary Research. Available online: https://i2insights.org/2020/09/08/fostering-cross-disciplinary-research/ (accessed on 15 December 2023).

- Knapp, B.; Bardenet, R.; Bernabeu, M.O.; Bordas, R.; Bruna, M.; Calderhead, B.; Cooper, J.; Fletcher, A.G.; Groen, D.; Kuijper, B.; et al. Ten simple rules for a successful cross-disciplinary collaboration. PLoS Comput. Biol. 2015, 11, e1004214.

- Stawarczyk, B.; Roos, M. Establishing effective cross-disciplinary collaboration: Combining simple rules for reproducible computational research, a good data management plan, and good research practice. PLoS Comput. Biol. 2023, 19, e1011052.

- Ng, F.Y.C.; Thirunavukarasu, A.J.; Cheng, H.; Tan, T.F.; Gutierrez, L.; Lan, Y.; Ong, J.C.L.; Chong, Y.S.; Ngiam, K.Y.; Ho, D.; et al. Artificial intelligence education: An evidence-based medicine approach for consumers, translators, and developers. Cell Rep. Med. 2023, 4, 101230.

- Kennedy, S. Achieving Clinical AI Fairness Requires Multidisciplinary Collaboration. Available online: https://healthitanalytics.com/news/achieving-clinical-ai-fairness-requires-multidisciplinary-collaboration (accessed on 15 December 2023).

- Lomis, K.; Jeffries, P.; Palatta, A.; Sage, M.; Sheikh, J.; Sheperis, C.; Whelan, A. Artificial Intelligence for Health Professions Educators. NAM Perspect. 2021.

- Pantanowitz, L.; Bui, M.M.; Chauhan, C.; ElGabry, E.; Hassell, L.; Li, Z.; Parwani, A.V.; Salama, M.E.; Sebastian, M.M.; Tulman, D.; et al. Rules of engagement: Promoting academic-industry partnership in the era of digital pathology and artificial intelligence. Acad. Pathol. 2022, 9, 100026.

- Manata, B.; Bozeman, J.; Boynton, K.; Neal, Z. Interdisciplinary Collaborations in Academia: Modeling the Roles of Perceived Contextual Norms and Motivation to Collaborate. Commun. Stud. 2023, 75, 40–58.

- Bajwa, J.; Munir, U.; Nori, A.; Williams, B. Artificial intelligence in healthcare: Transforming the practice of medicine. Future Healthc. J. 2021, 8, e188–e194.

- Connolly, C.; Hernon, O.; Carr, P.; Worlikar, H.; McCabe, I.; Doran, J.; Walsh, J.C.; Simpkin, A.J.; O’Keeffe, D.T. Artificial Intelligence in Interprofessional Healthcare Practice Education—Insights from the Home Health Project, an Exemplar for Change. Comput. Sch. 2023, 40, 412–429.

- Revisiting the Future of Conferences from an Early Career Scholar Perspective. Available online: https://labs.jstor.org/blog/revisiting-the-future-of-conferences-from-an-early-career-scholar-perspective/ (accessed on 16 December 2023).

- Is Conference Paper Valuable? Available online: https://globalconference.ca/is-conference-paper-valuable/ (accessed on 16 December 2023).

- Hauss, K. What are the social and scientific benefits of participating at academic conferences? Insights from a survey among doctoral students and postdocs in Germany. Res. Eval. 2021, 30, 1–12.

- ICCA Congress 2023: Unveiling Innovation & Collaboration. Available online: https://boardroom.global/icca-congress-2023-unveiling-innovation-collaboration/ (accessed on 16 December 2023).

- Zhou, J.; Joachims, T. How to Explain and Justify Almost Any Decision: Potential Pitfalls for Accountability in AI Decision-Making. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023; pp. 12–21.

- Narayanan, D. Welfarist Moral Grounding for Transparent AI. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023; pp. 64–76.

- Kim, S.S.Y.; Watkins, E.A.; Russakovsky, O.; Fong, R.; Monroy-Hernández, A. Humans, AI, and Context: Understanding End-Users’ Trust in a Real-World Computer Vision Application. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023; pp. 77–88.

- Starke, C.; Baleis, J.; Keller, B.; Marcinkowski, F. Fairness perceptions of algorithmic decision-making: A systematic review of the empirical literature. Big Data Soc. 2022, 9, 20539517221115189.

- Karran, A.J.; Demazure, T.; Hudon, A.; Senecal, S.; Léger, P.-M. Designing for Confidence: The Impact of Visualizing Artificial Intelligence Decisions. Front. Neurosci. 2022, 16, 883385.

- Haque, A.K.M.B.; Islam, A.K.M.N.; Mikalef, P. Explainable Artificial Intelligence (XAI) from a user perspective: A synthesis of prior literature and problematizing avenues for future research. Technol. Forecast. Soc. Chang. 2023, 186, 122120.

- Longo, L.; Brcic, M.; Cabitza, F.; Choi, J.; Confalonieri, R.; Ser, J.D.; Guidotti, R.; Hayashi, Y.; Herrera, F.; Holzinger, A.; et al. Explainable Artificial Intelligence (XAI) 2.0: A manifesto of open challenges and interdisciplinary research directions. Inf. Fusion 2024, 106, 102301.

- Saeed, W.; Omlin, C. Explainable AI (XAI): A systematic meta-survey of current challenges and future opportunities. Knowl.-Based Syst. 2023, 263, 110273.

- Wells, L.; Bednarz, T. Explainable AI and Reinforcement Learning—A Systematic Review of Current Approaches and Trends. Front. Artif. Intell. 2021, 4, 550030.

- Lopes, P.; Silva, E.; Braga, C.; Oliveira, T.; Rosado, L. XAI Systems Evaluation: A Review of Human and Computer-Centred Methods. Appl. Sci. 2022, 12, 9423.

- Ghallab, M. Responsible AI: Requirements and challenges. AI Perspect. 2019, 1, 3.

- Great Lakes Bioinformatics (GLBIO) Conference. Available online: https://environment.umn.edu/event/great-lakes-bioinformatics-glbio-conference/ (accessed on 16 December 2023).

- GLBIO 2021. Available online: https://www.iscb.org/glbio2021 (accessed on 16 December 2023).

- GLBIO 2023. Available online: https://www.iscb.org/511-glbio2023 (accessed on 16 December 2023).

- Mathé, E.; Busby, B.; Piontkivska, H. Matchmaking in Bioinformatics. F1000Research 2018, 7, 171.

- de Vries, B.; Pieters, J. Knowledge sharing at conferences. Educ. Res. Eval. 2007, 13, 237–247.

- Speaker Agency. Elevating Events: The Role of Conference Speakers in Engaging Audiences. Available online: https://www.speakeragency.co.uk/blog/elevating-events-the-role-of-conference-speakers-in-engaging-audiences (accessed on 16 December 2023).

- Ali, S.; Akhlaq, F.; Imran, A.S.; Kastrati, Z.; Daudpota, S.M.; Moosa, M. The enlightening role of explainable artificial intelligence in medical & healthcare domains: A systematic literature review. Comput. Biol. Med. 2023, 166, 107555.

- Saraswat, D.; Bhattacharya, P.; Verma, A.; Prasad, V.K.; Tanwar, S.; Sharma, G.; Bokoro, P.N.; Sharma, R. Explainable AI for Healthcare 5.0: Opportunities and Challenges. IEEE Access 2022, 10, 84486–84517.

- Date, S.Y.; Thalor, M. AI in Healthcare 5.0: Opportunities and Challenges. Int. J. Educ. Psychol. Sci. 2023, 1, 189–191.

- van der Veer, S.N.; Riste, L.; Cheraghi-Sohi, S.; Phipps, D.L.; Tully, M.P.; Bozentko, K.; Atwood, S.; Hubbard, A.; Wiper, C.; Oswald, M.; et al. Trading off accuracy and explainability in AI decision-making: Findings from 2 citizens’ juries. J. Am. Med. Inform. Assoc. JAMIA 2021, 28, 2128–2138.

- Sengupta, P.; Zhang, Y.; Maharjan, S.; Eliassen, F. Balancing Explainability-Accuracy of Complex Models. arXiv 2023, arXiv:2305.14098.

- Alowais, S.A.; Alghamdi, S.S.; Alsuhebany, N.; Alqahtani, T.; Alshaya, A.I.; Almohareb, S.N.; Aldairem, A.; Alrashed, M.; Bin Saleh, K.; Badreldin, H.A.; et al. Revolutionizing healthcare: The role of artificial intelligence in clinical practice. BMC Med. Educ. 2023, 23, 689.

- Ferrini, A. AI and Computational Biology. Available online: https://www.the-yuan.com/70/AI-and-Computational-Biology.html (accessed on 16 December 2023).

- Yoon, S.; Amadiegwu, A. AI Can Make Healthcare More Accurate, Accessible, and Sustainable. Available online: https://www.weforum.org/agenda/2023/06/emerging-tech-like-ai-are-poised-to-make-healthcare-more-accurate-accessible-and-sustainable/ (accessed on 16 December 2023).

- AI for Healthcare and Life Sciences. Available online: https://www.eecs.mit.edu/research/explore-all-research-areas/ml-and-healthcare/ (accessed on 16 December 2023).

- Unlocking the Potential of AI in Drug Discovery: Current Status, Barriers and Future Opportunities. Available online: https://cms.wellcome.org/sites/default/files/2023-06/unlocking-the-potential-of-AI-in-drug-discovery_report.pdf (accessed on 16 December 2023).

- Nagarajan, N.; Yapp, E.K.Y.; Le, N.Q.K.; Kamaraj, B.; Al-Subaie, A.M.; Yeh, H.-Y. Application of Computational Biology and Artificial Intelligence Technologies in Cancer Precision Drug Discovery. BioMed Res. Int. 2019, 2019, 8427042.

- Bohr, A.; Memarzadeh, K. The rise of artificial intelligence in healthcare applications. In Artificial Intelligence in Healthcare; Bohr, A., Memarzadeh, K., Eds.; Academic Press Imprint of Elsevier: London, UK; San Diego, CA, USA, 2020; pp. 25–60.

- Patel, A.U.; Shaker, N.; Mohanty, S.; Sharma, S.; Gangal, S.; Eloy, C.; Parwani, A.V. Cultivating Clinical Clarity through Computer Vision: A Current Perspective on Whole Slide Imaging and Artificial Intelligence. Diagnostics 2022, 12, 1778.

- Patel, A.U.; Mohanty, S.K.; Parwani, A.V. Applications of Digital and Computational Pathology and Artificial Intelligence in Genitourinary Pathology Diagnostics. Surg. Pathol. Clin. 2022, 15, 759–785.

- Parwani, A.V.; Patel, A.; Zhou, M.; Cheville, J.C.; Tizhoosh, H.; Humphrey, P.; Reuter, V.E.; True, L.D. An update on computational pathology tools for genitourinary pathology practice: A review paper from the Genitourinary Pathology Society (GUPS). J. Pathol. Inform. 2023, 14, 100177.

- How is AI Reshaping Health Research? Available online: https://wellcome.org/news/how-ai-reshaping-health-research (accessed on 16 December 2023).

- Special Issues. Available online: https://www.embs.org/jbhi/special-issues/ (accessed on 16 December 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).