1. Introduction

Modern transportation systems and smart cities make extensive use of electric vehicles (EVs), which rely on lithium-ion (Li-ion) batteries (LIBs) as their primary energy storage solution. LIBs have become an essential component in the transition from fossil fuels to sustainable transportation because of their long cycle life, low self-discharge, high energy and power density, and environmental advantages [1,2]. Global EV sales reached 1.7 million units in March 2025 alone, a 29% increase over March 2024 and a 40% rise over February 2025, bringing total sales for the first quarter of 2025 to 4.1 million units [3].

Thermal runaway (TR), a self-accelerating reaction that causes a rapid temperature rise, fire, or explosion, has become one of the most critical safety issues in LIBs [4,5,6]. It is a chain-reaction phenomenon that can be initiated by multiple factors. Under abusive conditions, including overcharging, elevated temperatures, and mechanical damage, LIBs may rupture and emit highly flammable gases, which pose a direct risk because they ignite once they reach their ignition threshold. TR ultimately leads to combustion and explosion, presenting significant risks to life, property, and health [7]. The threat of TR thus extends to user safety, infrastructure, and public confidence in clean energy technologies. The National Transportation Safety Board (NTSB) reports that more than 65 fire incidents involving EVs were recorded globally in 2023, many of them linked to TR events [8,9]. Dealing with TR is, therefore, not only a technical challenge but also a strategic imperative for the safe deployment of Li-ion technologies in the energy and transportation sectors [7,10].

Contemporary technologies can help identify the potential for TR in LIBs. TR can be triggered by overheating, overcharging, mechanical abuse, or internal faults. Once activated, it continues spontaneously because increasing temperature accelerates chemical reactions that produce even more heat. Early detection and prevention are therefore critical during battery design and operation. Machine learning (ML) is a domain of computer science that enables computers to learn and adapt without being explicitly programmed, using algorithms and statistical models to identify patterns in data and draw conclusions from them [11]. Deep learning (DL) is a type of ML that uses artificial neural networks with many processing layers to extract increasingly sophisticated features from data. DL and ML have recently been applied to the detection of TR in LIBs [12]. The authors in [13] compared five artificial intelligence algorithms (Multiple Linear Regression, k-Nearest Neighbors, Decision Tree, Random Forest, and Artificial Neural Networks) for predicting TR onset in LIBs under external heating and compression. In [14], the authors presented a new method for early fault detection and TR prediction in LIBs for electric vehicles. They proposed a multimodal unsupervised learning approach that requires neither labeled data nor significant pre-training, which makes it viable for real-world use. The diagnosis begins by extracting fault-related features from three domains: time, frequency, and internal resistance. These features are Time-Scale Metrics, which highlight weak temporal fault patterns; charging resistance, which reveals internal short circuits; and spectrograms, which visually represent frequency-domain anomalies. Features are extracted from the spectrograms by Convolutional Neural Networks and then reduced in dimension using Kernel Principal Component Analysis. The system then combines the numerical and graphical features into a single diagnostic model to identify faults.

Generative AI (GenAI) refers to a set of artificial intelligence techniques that can generate new data samples, such as text, images, audio, or other synthetic features, that resemble a given input dataset. It includes tools such as Generative Adversarial Networks (GANs) [15] and Variational Autoencoders (VAEs) [16], which help ML and DL models cope with imbalanced datasets by generating additional examples of the minority classes, and which reduce overfitting by diversifying the training data.

In this paper, DL techniques integrated with GenAI tools will be used to detect and classify the TR status of LIBs based on experimental observations from open-source battery failure data [17]. Various evaluation metrics, including prediction accuracy, confusion matrices, receiver operating characteristic (ROC) curves, and feature importance in the machine learning models, will be examined.

This paper addresses gaps in existing TR detection systems by presenting an enhanced ML method to detect TR and classify its main trigger in LIBs. Despite developments in ML and DL applications, existing models still struggle with imbalanced classes, lack robust preprocessing, and offer limited interpretability. This work combines DL/ML with generative artificial intelligence to handle class imbalance efficiently and improve prediction reliability. Conventional DL models often operate as black boxes that lack transparency; in contrast, a tabular DL model is proposed to improve both performance and interpretability on LIB datasets. The proposed approach thus improves TR prediction by combining balanced training data with interpretable models. Hyperparameters are fine-tuned with the Optuna optimization framework, and three-fold cross-validation provides robust model validation and helps guard against overfitting. Finally, explainable AI (XAI) techniques are integrated to improve model transparency, making the method better suited to safety-critical battery monitoring systems.

This paper is organized into five main sections. Section 2 reviews existing models for detecting TR in LIBs. Section 3 describes the proposed approach. Section 4 presents, discusses, and compares the results of applying the proposed approach, and Section 5 concludes the paper.

2. Literature Review

Lithium-ion batteries (LIBs) have become the foundation of modern EV technology due to their high energy density, long service life, and low maintenance needs. However, the increasing use of LIBs in high-capacity applications raises vital safety concerns, particularly the risk of TR, an uncontrollable rise in temperature that can lead to fire or explosion. Traditional battery management systems rely predominantly on threshold-based monitoring, which tends to overlook subtle faults in their early stages or to provide late warnings of potential TR events. To overcome these shortcomings, researchers have increasingly turned to ML and data-driven diagnostic approaches to improve the accuracy and timeliness of fault detection and TR prediction. Using diverse battery signals such as voltage, temperature, current, and internal resistance, ML methods aim to identify anomalies, classify fault types, and predict TR events in real-world applications. This section reviews the most significant developments in this field, focusing on the best-known supervised and unsupervised learning methods recently proposed to improve battery health monitoring. To improve coherence and traceability, the review is organized by modeling approach. Within each category, the evolution of the methodologies is examined and significant advances over the years are highlighted, in order to show the technological progress in EV battery forecasting and thermal runaway prevention.

The first category comprises foundational reviews and traditional approaches. The authors in [18] offer a comprehensive review of the causes, behaviors, and mitigation strategies of fires in EVs using LIBs, and report TR as the most common cause of battery fires. They analyzed multiple real-world fire incidents involving electric vehicles, including private vehicles, electric buses, and hybrid vehicles, to illustrate how fires can occur both during charging and during normal vehicle operation. These case studies reveal the unpredictability of battery response under certain failure modes and the need for systematic recording and root cause analysis. The paper also described the testing methodologies used to assess fire safety in EV batteries, highlighting the limitations of existing standards and the need for more comprehensive tests that simulate real accident scenarios. It further discussed the challenges of fire suppression, noting that traditional extinguishing agents such as water or foam are generally ineffective or impractical to apply to battery modules. While the study provides a valuable taxonomy of battery fire accidents and describes the mechanisms of thermal runaway in electric vehicles, it offers no predictive modeling or quantitative framework for assessing or preventing such incidents.

The second category concerns early machine learning approaches, which represent an initial transition toward predictive modeling for battery health monitoring and risk assessment. In [17], the authors addressed the TR safety concerns of high-voltage LIBs in EVs. They proposed integrating Thermal Energy Storage (TES) systems, such as phase change materials and sensible/latent heat storage, with ML tools to improve fire protection during Vehicle-to-Grid and Grid-to-Vehicle operations. A customized Simulink model simulates realistic scenarios at various battery voltages (300 V, 400 V, 600 V, and 800 V) and under varying environmental conditions to determine how efficiently TES regulates battery temperature. The simulations produced a rich dataset that was used to train ML models to predict thermal spikes. However, this study does not address real-time prediction.

In [19], a multilayer perceptron (MLP) neural network model was built to estimate a thermal-related health factor of LIBs in battery electric vehicles. Using real driving data from an MG ZS EV with a liquid cooling system, the researchers collected over 104,000 data records covering variables such as state of charge (SoC), maximum charge (MaxCh), battery temperature (BT), vehicle speed, ambient humidity, payload weight, and cooling system data. The study is limited to a specific driving dataset and to selected performance factors.

The third category involves deep learning models for thermal runaway prediction. A system for TR prediction in EV batteries that uses deep learning and considers temperature and voltage simultaneously was proposed in [20]. A k-means clustering model was employed to refine the forecast by identifying diverse transition behaviors, and a 7 min time window was used to satisfy regulatory requirements while maintaining the reliability of the forecast. While the model enhances feature sensitivity through attention mechanisms, its reliance on specific voltage and temperature inputs may reduce its generalizability across battery types and operating conditions. The authors in [21] applied DL models to improve the battery thermal management (BTM) of EVs and new energy vehicles. The study combines predictive and control functionalities to enhance system efficiency. However, the lack of real-world testing may limit the generalizability and practical deployment of the proposed model.

The fourth category concerns hybrid and multi-physics modeling frameworks. The study in [22] introduced a method that combines multiphysics modeling and machine learning to provide useful information for predicting TR in real time, as well as a way to apply data-driven techniques to various areas of electrochemistry. The authors investigated how the solid electrolyte interphase (SEI) on the negative electrode forms and decomposes, which can affect TR. The framework effectively integrates multi-physics modeling with deep learning to enhance prediction accuracy, but it is limited by high computational demands and the need for extensive domain-specific parameter calibration. In [23], the authors proposed a methodology that integrates computational fluid dynamics and deep learning for the early detection of battery thermal runaway. A hybrid model combining a convolutional neural network and a long short-term memory network was used to forecast probable fire hazards in the battery pack by detecting abnormal heat generation. The proposed model is constrained by the complexity and computational cost of integrating high-fidelity simulations into real-time applications. In [24], the authors introduced a robust thermal runaway prediction methodology for LIBs based on a generative adversarial network. The model used battery charging curves as training features to mitigate the unpredictability of sparse data and improve robustness. A resampling approach was introduced to standardize the charging curves while preserving the complete charging data. The method used a one-class generative adversarial network to build a detection model that establishes a reference normal curve for the original battery charging operation and identifies probable irregularities. Real accident data from electric vehicles that underwent thermal runaway and subsequent fires were used to validate the model’s performance. The authors in [24] classified LIB accidents as “latent” and “sudden” failures, presenting a new feature indicator and a convolutional neural network for automated classification. The method examined data attributes associated with various accident causes and their relationship with thermal runaway mechanisms, and provided a data-driven methodology to identify causes and establish liability, facilitating on-site investigations. The proposed method is limited by increased training complexity and potential challenges in generalizing across diverse battery chemistries and operating conditions.

2.1. Research Gaps and Objectives

Despite the significant advancements in the application of ML and DL techniques for detecting thermal runaway in lithium-ion batteries, numerous research gaps remain:

Model performance is heavily restricted by imbalanced class distributions, in which some classes are under-represented, resulting in biased predictions and lower reliability in safety-critical scenarios;

Reliance on generic architectures, such as MLPs and convolutional neural networks, while neglecting tabular models such as TabNet, which can improve interpretability and performance on structured data;

Limited interpretability, because the widely used DL techniques operate as black boxes;

Insufficient attention to dataset balance, missing values, and resampling techniques, all of which are critical to ensuring real-world reliability.

2.2. Main Contributions and Novelty of the Proposed Approach

To address the gaps and limitations of existing TR detection methods for lithium-ion batteries, this study presents a comprehensive and innovative approach that combines DL techniques with GenAI tools to handle class imbalance. In addition, explainable AI (XAI) tools are employed to better understand the impact of each factor on TR. The main contributions of this paper are summarized as follows:

Develop a hybrid pipeline integrating deep learning and generative AI methodologies to enhance fault prediction and ensure balanced training data;

Evaluate the effectiveness of tabular deep learning models (TabNet) and their interpretability in structured battery datasets;

Use the Optuna framework for automated hyperparameter optimization so that the models are tuned with minimal manual intervention;

Compare the efficacy and performance of tabular DL with traditional ML and DL techniques;

Examine the dataset imbalance, implement a GenAI tool as a resampling method, and evaluate its effects on classification metrics;

Employ explainable AI (XAI) methods to ensure model transparency and adherence to safety regulations.

3. Proposed Approach

Battery TR is a primary safety challenge for energy storage systems, especially those based on LIBs, where it can cause catastrophic failures and hazardous conditions. Effective and timely detection of TR events is therefore essential for battery safety and reliability in power and EV systems. Building such a detection system is challenging because LIBs have complex characteristics and because the available data contain far more normal operating conditions than failure cases. In this paper, an optimized ML-based model is proposed that uses GenAI techniques to handle class imbalance and Optuna for hyperparameter tuning. Four advanced classification algorithms, XGBoost, TabNet, MLP, and Support Vector Machine (SVM), were tested to assess their ability to accurately predict battery TR events even when the data are unevenly distributed. SVM can handle high-dimensional feature spaces and tends to perform well with a limited number of samples, which matters because TR events are relatively rare and datasets are often imbalanced. Furthermore, SVM has been widely used for classification and anomaly detection in battery health monitoring systems, as mentioned in [19]. XGBoost, on the other hand, often outperforms traditional ML models in classification tasks owing to its efficient gradient boosting framework. TR prediction requires modeling nonlinear relationships among variables such as heater power, temperature gradients, and environmental factors, and XGBoost can capture these interactions effectively. In addition, feature importance scores and SHAP values can easily be derived from XGBoost to identify the variables that contribute most to TR, in line with the root cause analysis goal of this work. Unlike other neural network models, TabNet provides built-in interpretability by learning which features to attend to at each decision step, which is critical in safety applications such as TR prediction. Its attention masks and sparse feature selection can also make it more robust to noisy or imbalanced input. Recent studies have considered hybrid deep models such as CNN-LSTM and GAN-based systems for battery safety [23,24]; TabNet fits naturally within this landscape as a lightweight and interpretable deep learning option for real-time deployment.

Explainable AI (XAI) methods such as SHAP (SHapley Additive exPlanations) are used to make prediction results clearer and more trustworthy, helping to understand which features are important and how the model works.

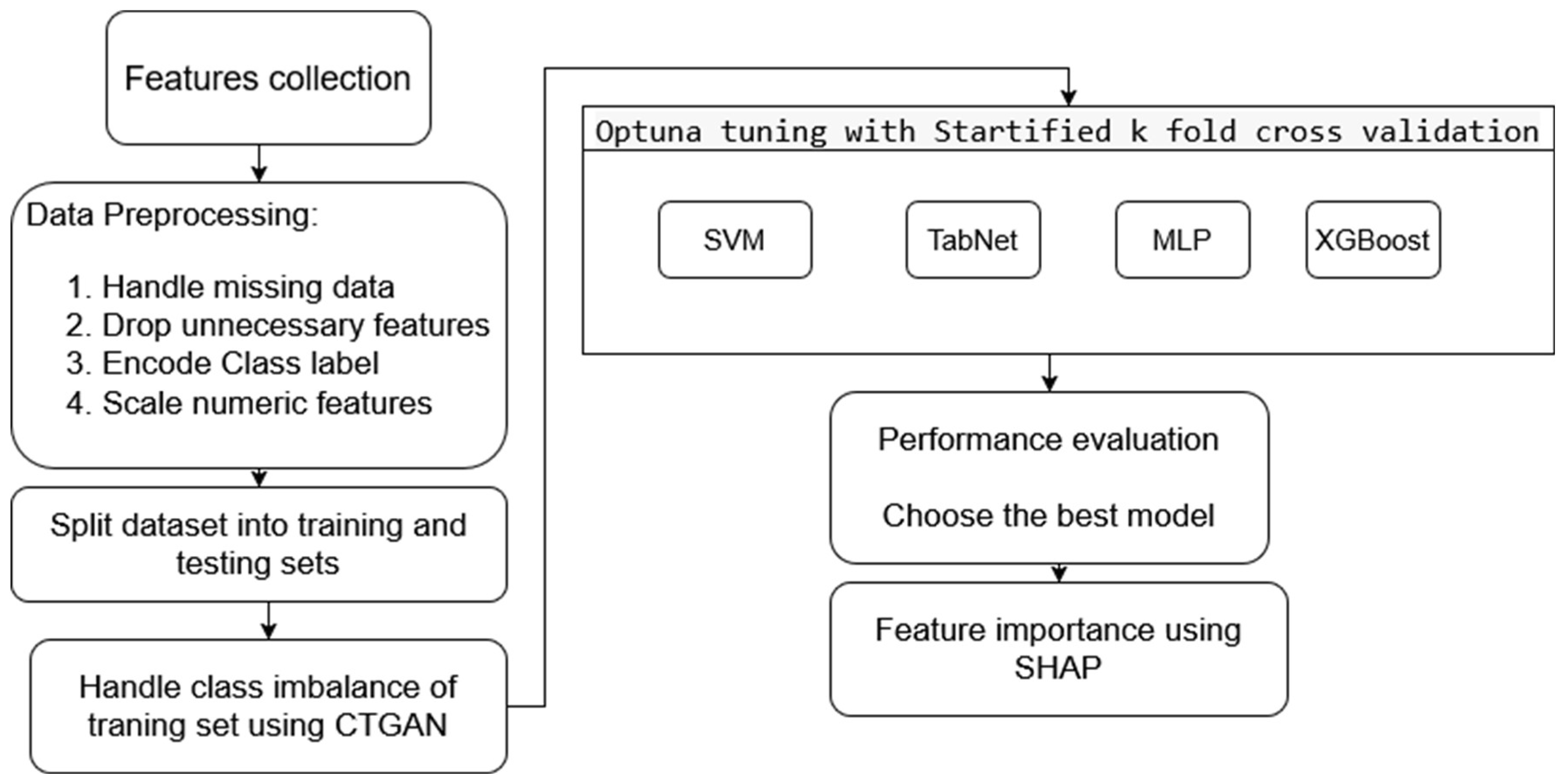

Figure 1 shows the main steps of the proposed model.

3.1. Preprocessing and Splitting Steps

In this step, the dataset collected from several batteries is loaded, and irrelevant features, such as IDs and description texts, are removed. Rows with missing values are then removed so that the encoding step operates on complete records. The target variable, the battery failure cause, is extracted from the Trigger-Mechanism column and has three possible classes:

Internal short-circuit (ISC);

Heat;

Nail: the nail penetration test, a standard method used to simulate internal short circuits and assess battery safety under mechanical abuse.

Categorical features are then one-hot encoded so that all features are in a model-friendly numeric format. Finally, the dataset is split into a training set (80%) and a testing set (20%).
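The preprocessing steps above can be sketched in plain Python as follows. This is a minimal illustration, not the authors’ code: apart from Trigger-Mechanism, the column names (Cell-ID, Description, Cell-Format, Mass-g) are hypothetical, `None` is assumed to mark a missing value, and stratifying the 80/20 split by class is an assumption, since the text only specifies the proportions.

```python
import random

TARGET = "Trigger-Mechanism"
# Hypothetical identifier/free-text columns to drop; real names depend on the dataset.
DROP_COLUMNS = {"Cell-ID", "Description"}


def preprocess(rows, categorical):
    """Drop irrelevant columns and incomplete rows, then one-hot encode categoricals."""
    kept = []
    for row in rows:
        row = {k: v for k, v in row.items() if k not in DROP_COLUMNS}
        if any(v is None for v in row.values()):
            continue  # remove rows with missing values (None assumed to mean missing)
        kept.append(row)
    # Category levels observed for each categorical column, in a fixed order.
    levels = {c: sorted({r[c] for r in kept}) for c in categorical}
    X, y = [], []
    for r in kept:
        features = []
        for col in sorted(k for k in r if k != TARGET):
            if col in levels:
                features.extend(1.0 if r[col] == lvl else 0.0 for lvl in levels[col])
            else:
                features.append(float(r[col]))
        X.append(features)
        y.append(r[TARGET])
    return X, y


def split_indices_by_class(y, test_frac=0.2, seed=0):
    """Return (train, test) index lists with roughly test_frac of each class in test."""
    rng = random.Random(seed)
    by_class = {}
    for i, label in enumerate(y):
        by_class.setdefault(label, []).append(i)
    train, test = [], []
    for label in sorted(by_class):
        idx = by_class[label]
        rng.shuffle(idx)
        n_test = round(len(idx) * test_frac)
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return train, test
```

In practice, pandas (`DataFrame.dropna`, `get_dummies`) and scikit-learn’s `train_test_split(..., stratify=y)` cover the same steps with less code.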

3.2. Handle Class Imbalance

Class imbalance affects many real-world datasets; here, some failure mechanisms appear less frequently than others. This imbalance can seriously bias ML models. Resampling and generative techniques such as the Synthetic Minority Over-sampling Technique (SMOTE), the Adaptive Synthetic Sampling Approach for Imbalanced Learning (ADASYN), and the Conditional Tabular Generative Adversarial Network (CTGAN) address this issue by producing a more balanced class distribution, thereby improving the model’s predictive ability. In this study, CTGAN is used to generate additional samples and improve classification performance for the under-represented LIB test conditions among the ISC, heat, and nail classes. CTGAN is an advanced DL technique with two main components: a generator and a discriminator. The generator creates new data that resemble the actual dataset, while the discriminator learns to distinguish real samples from generated ones [25]. CTGAN offers several benefits over traditional oversampling techniques such as SMOTE and ADASYN, which rely on interpolation between existing minority class samples. In contrast, CTGAN uses a deep generative model to learn the joint distribution of the tabular data, which allows it to create more realistic and diverse synthetic samples. Moreover, CTGAN conditions the generation process on class labels, allowing more targeted class balancing. It can also reduce the risk of overfitting by avoiding synthetic points that are too similar to the original samples [26,27].
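To make the contrast with interpolation-based oversampling concrete, the sketch below generates SMOTE-style points by interpolating between a minority sample and one of its nearest minority neighbours. It illustrates the baseline that CTGAN is compared against, not the CTGAN method used in this study; the function name and the choice k = 3 are illustrative.

```python
import math
import random


def smote_like_samples(minority, n_new, k=3, seed=0):
    """Generate n_new synthetic points by SMOTE-style linear interpolation."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(minority)
        # k nearest minority neighbours of x (x itself excluded).
        neighbours = sorted(
            (p for p in minority if p is not x), key=lambda p: math.dist(x, p)
        )[:k]
        nn = rng.choice(neighbours)
        lam = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([xi + lam * (ni - xi) for xi, ni in zip(x, nn)])
    return synthetic
```

Every synthetic point lies on a segment between two existing minority samples, so this kind of oversampling cannot leave the region spanned by the minority class; CTGAN instead samples from a learned distribution.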

3.3. Optuna Tuning with Stratified K-Fold Cross-Validation

As shown in Figure 1, this study implements, optimizes, and compares ML, ensemble learning (EL), and DL techniques. The optimization step is performed using Optuna, which frames hyperparameter tuning as an optimization problem and searches for the best-performing parameters. In contrast to conventional approaches such as GridSearchCV, which evaluate a fixed grid, Optuna uses the results of previous trials to decide which configurations to evaluate next, improving both efficiency and model performance [28]. This study focuses on optimizing four classifiers: SVM, XGBoost, MLP, and TabNet. The hyperparameters of each classifier, outlined in the respective subsections, were fine-tuned through Optuna’s Bayesian optimization process. Each model underwent the following steps:

Specify the hyperparameter search space;

Train the model utilizing several hyperparameter configurations;

Assess the model’s efficacy through the ROC AUC score;

Choose the optimal configuration according to the optimization procedure.

The optimal hyperparameters for each classifier are specified in the corresponding sections. To ensure a fair assessment and reduce the risk of overfitting, stratified 3-fold cross-validation is employed for model validation. This choice balances computational efficiency and robustness: initial experiments indicated that increasing the number of folds (e.g., to 5 or 10) did not produce substantial performance improvements, which supports the choice of 3-fold validation.
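A stratified 3-fold split can be sketched as follows: each class’s samples are dealt round-robin across the folds, so every fold keeps roughly the original class proportions. This is an illustrative implementation without shuffling; in practice, scikit-learn’s `StratifiedKFold` provides the same behaviour with optional shuffling.

```python
from collections import defaultdict


def stratified_folds(labels, k=3):
    """Assign sample indices to k folds, dealing each class round-robin."""
    per_class = defaultdict(list)
    for i, label in enumerate(labels):
        per_class[label].append(i)
    folds = [[] for _ in range(k)]
    for label in sorted(per_class):
        for j, idx in enumerate(per_class[label]):
            folds[j % k].append(idx)
    return folds


def cv_splits(labels, k=3):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    folds = stratified_folds(labels, k)
    for f in range(k):
        val = set(folds[f])
        train = [i for i in range(len(labels)) if i not in val]
        yield train, sorted(val)
```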

A. Support Vector Machine (SVM)

SVM identifies the optimal (maximum-margin) hyperplane in the feature space to separate distinct classes. The primary hyperparameters for SVM are as follows [29]:

C: A regularization parameter that governs the balance between reducing classification error and decreasing the weight vector’s norm.

Gamma: The kernel coefficient for ‘rbf’, ‘poly’, and ‘sigmoid’ kernels, which dictates the degree of influence exerted by an individual training example.
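For the RBF kernel, the role of gamma is visible directly in the kernel formula K(x, z) = exp(-γ‖x - z‖²): a larger gamma makes the similarity between two points decay faster with distance, so each training example influences a smaller neighbourhood. The short sketch below evaluates this formula; it is illustrative and independent of any SVM library.

```python
import math


def rbf_kernel(x, z, gamma):
    """RBF kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((xi - zi) ** 2 for xi, zi in zip(x, z))
    return math.exp(-gamma * sq_dist)


# Similarity of two points at unit distance for increasing gamma.
for gamma in (0.1, 1.0, 10.0):
    print(f"gamma={gamma}: K={rbf_kernel([0.0, 0.0], [1.0, 0.0], gamma):.4f}")
```

For two points at unit distance, the similarity drops from about 0.905 at gamma = 0.1 to about 0.368 at gamma = 1 and is close to zero at gamma = 10.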

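The optimization objective function used with Optuna is given in Equation (1):

$$\theta^{*} = \arg\max_{\theta \in \Theta} \frac{1}{K} \sum_{k=1}^{K} \mathrm{Acc}_{k}(\theta) \qquad (1)$$

where $\Theta$ is the search space defined by Optuna, $\theta$ is the set of hyperparameters, $K = 3$ is the number of cross-validation folds, and $\mathrm{Acc}_{k}(\theta)$ is the validation accuracy obtained on fold $k$ with hyperparameters $\theta$.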
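Equation (1) amounts to the loop below: sample a configuration θ from the search space, average its validation score over the folds, and keep the best. As a simplifying assumption, plain random search stands in for Optuna’s sampler, the C and gamma ranges are illustrative, and `toy_fold_score` is a hypothetical placeholder for training and scoring an SVM on one fold.

```python
import math
import random


def mean_cv_score(params, fold_score, k=3):
    """Inner term of Equation (1): the score averaged over k folds."""
    return sum(fold_score(params, fold) for fold in range(k)) / k


def random_search(space, fold_score, n_trials=50, seed=0):
    """Approximate the arg max in Equation (1) by log-uniform random sampling."""
    rng = random.Random(seed)
    best_params, best_score = None, -math.inf
    for _ in range(n_trials):
        params = {
            name: math.exp(rng.uniform(math.log(lo), math.log(hi)))
            for name, (lo, hi) in space.items()
        }
        score = mean_cv_score(params, fold_score)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical search space (assumed ranges) and a toy score peaking at C=1, gamma=0.01.
space = {"C": (1e-3, 1e3), "gamma": (1e-4, 1e1)}


def toy_fold_score(params, fold):
    return -(math.log10(params["C"]) ** 2) - (math.log10(params["gamma"]) + 2) ** 2


best, score = random_search(space, toy_fold_score)
```

With Optuna itself, the objective would sample values through calls such as `trial.suggest_float("C", 1e-3, 1e3, log=True)`, return the mean fold score, and be passed to `study.optimize` on a study created with `optuna.create_study(direction="maximize")`.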

B. Extreme Gradient Boosting (XGBoost)

XGBoost is considered one of the most powerful EL techniques. It is a tree-based model that improves performance through gradient boosting and is highly effective on structured/tabular data, especially for classification and regression problems. The learning capability of XGBoost depends strongly on various hyperparameters, such as the following [30]:

n_estimators is the number of trees built during the boosting process;

max_depth controls the maximum depth of each tree. This parameter has a significant impact on model complexity and potential overfitting;

learning_rate sets the step size at each boosting iteration. It affects the model’s convergence speed and stability;

subsample is the fraction of training samples used to grow each tree, which helps prevent overfitting;

colsample_bytree is the fraction of features sampled for each tree. It helps reduce variance;

gamma is a regularization parameter that specifies the minimum loss reduction required to make a further partition on a leaf node;

reg_alpha and reg_lambda are the L1 and L2 regularization parameters that help control model complexity.
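The roles of gamma and reg_lambda can be made concrete with the split-gain formula from the original XGBoost paper, Gain = 1/2 [G_L²/(H_L + λ) + G_R²/(H_R + λ) - (G_L + G_R)²/(H_L + H_R + λ)] - γ, where G and H are the sums of first- and second-order loss gradients in the left (L) and right (R) child. A split is worth making only when the gain is positive, so a larger gamma prunes more splits and a larger reg_lambda shrinks every term. The helper below is a minimal sketch of this formula, not XGBoost’s internal code.

```python
def split_gain(g_left, h_left, g_right, h_right, reg_lambda=1.0, gamma=0.0):
    """Gain of splitting a leaf into left/right children (XGBoost structure score).

    g_* and h_* are the sums of first- and second-order gradients of the loss
    over the samples that fall into each child.
    """
    def score(g, h):
        return g * g / (h + reg_lambda)

    parent = score(g_left + g_right, h_left + h_right)
    return 0.5 * (score(g_left, h_left) + score(g_right, h_right) - parent) - gamma


# Same candidate split under increasing regularization.
print(split_gain(-4.0, 4.0, 4.0, 4.0, reg_lambda=0.0))
print(split_gain(-4.0, 4.0, 4.0, 4.0, reg_lambda=1.0))
print(split_gain(-4.0, 4.0, 4.0, 4.0, reg_lambda=1.0, gamma=3.5))
```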

The objective function is set to “multi:softprob”, which is used for multi-class classification problems and outputs a probability for each class. Optuna’s goal is to maximize the average accuracy across folds, as given in Equation (1).
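With “multi:softprob”, the model produces one raw score per class for each sample and converts the scores into class probabilities with a softmax. The sketch below shows this conversion for the three trigger classes; the margin values are hypothetical, and the predicted class is simply the one with the highest probability.

```python
import math


def softprob(margins):
    """Convert per-class raw scores into probabilities with a numerically stable softmax."""
    m = max(margins)
    exps = [math.exp(s - m) for s in margins]
    total = sum(exps)
    return [e / total for e in exps]


classes = ["ISC", "Heat", "Nail"]
probs = softprob([0.2, 1.5, -0.3])  # hypothetical margins for one cell
predicted = classes[probs.index(max(probs))]
```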

C. Tabular Network (TabNet)

TabNet is a DL model designed specifically for tabular data. It uses sequential decision steps to identify the most significant features at each step [31]. Unlike traditional DL models, TabNet performs sparse feature selection through its attentive transformers, which provides interpretability and makes it appropriate for situations that require both high accuracy and explainability. In this paper, Optuna is used to fine-tune the TabNet model to enhance its classification capabilities [32]. The main hyperparameters tuned are as follows:

n_d is the width of the decision prediction layer, which sets the dimensionality of the decision output at each step;

n_a is the width of the attention embedding;

n_steps is the number of sequential decision steps, each of which can attend to different parts of the input;

gamma is the relaxation factor that controls the balance between feature reuse and exploration across decision steps;

n_independent and n_shared are the numbers of independent and shared Gated Linear Unit blocks, which affect the model’s ability to capture feature dependencies;

optimizer_fn and optimizer_params are the optimizer and its parameters, such as the learning rate, which influence the convergence and stability of training;

mask_type selects the sparse feature selection function (e.g., sparsemax or entmax).
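Two of these hyperparameters can be illustrated in a few lines. Sparsemax (one possible mask_type) projects attention scores onto the probability simplex and, unlike softmax, can assign exactly zero weight to features. Gamma enters through TabNet’s prior scale, which is multiplied by (gamma - M) after each step, so with gamma = 1 a feature fully used at one step cannot be used again. The functions below are a stand-alone sketch of these two formulas, not the pytorch-tabnet implementation.

```python
def sparsemax(z):
    """Euclidean projection of scores z onto the probability simplex."""
    z_sorted = sorted(z, reverse=True)
    cumsum, k_max, tau_sum = 0.0, 0, 0.0
    for k, zk in enumerate(z_sorted, start=1):
        cumsum += zk
        if 1 + k * zk > cumsum:  # zk is still inside the support
            k_max, tau_sum = k, cumsum
    tau = (tau_sum - 1) / k_max
    return [max(zi - tau, 0.0) for zi in z]


def update_prior(prior, mask, gamma):
    """TabNet prior scale update P <- P * (gamma - M)."""
    return [p * (gamma - m) for p, m in zip(prior, mask)]


mask = sparsemax([2.0, 1.0, 0.1])                   # all weight on the first feature
prior = update_prior([1.0, 1.0, 1.0], mask, 1.0)    # first feature blocked next step
```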

The goal of Optuna is to increase the average accuracy across different folds. By repeatedly examining different hyperparameter combinations, Optuna can identify the parameters that yield the best performance using Equation (1).

D. Multi-Layer Perceptron (MLP)

MLP belongs to the family of artificial neural network models. It consists of multiple layers of neurons and uses nonlinear activation functions, which allow it to learn nonlinear patterns [33]. In this study, the MLP was configured with two hidden layers, each including batch normalization, a Gaussian Error Linear Unit (GELU) activation, and dropout to reduce overfitting. Tuning the MLP hyperparameters effectively is crucial for achieving good performance, as the model is highly sensitive to the number of neurons and layers. Optuna is used in this paper to search for the best configuration using the following parameters [34]:

hidden1 and hidden2 are the numbers of neurons in the first (from 64 to 512 in steps of 64) and second (from 64 to 256 in steps of 64) hidden layers, respectively. They control the model’s feature extraction capacity.

dropout1 and dropout2 are the dropout rates applied after the first and second hidden layers, respectively, and range from 0.1 to 0.5. They help prevent overfitting by randomly deactivating neurons during training.

learning rate (lr) controls the step size of the parameter updates.

batch_size takes the values 32, 64, and 128, balancing gradient stability and training speed.
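The search space above can be sketched as a sampling function. The learning-rate range (1e-4 to 1e-2, log-uniform) is an assumption, since the text does not state it; the comments name the corresponding Optuna trial methods, and `random.Random` stands in for Optuna’s sampler.

```python
import math
import random


def sample_mlp_config(rng):
    """Draw one MLP configuration from the search space described in the text."""
    return {
        # Optuna: trial.suggest_int("hidden1", 64, 512, step=64)
        "hidden1": rng.randrange(64, 512 + 1, 64),
        # Optuna: trial.suggest_int("hidden2", 64, 256, step=64)
        "hidden2": rng.randrange(64, 256 + 1, 64),
        # Optuna: trial.suggest_float("dropout1", 0.1, 0.5)
        "dropout1": rng.uniform(0.1, 0.5),
        "dropout2": rng.uniform(0.1, 0.5),
        # Assumed range; Optuna: trial.suggest_float("lr", 1e-4, 1e-2, log=True)
        "lr": math.exp(rng.uniform(math.log(1e-4), math.log(1e-2))),
        # Optuna: trial.suggest_categorical("batch_size", [32, 64, 128])
        "batch_size": rng.choice([32, 64, 128]),
    }


config = sample_mlp_config(random.Random(0))
```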

The MLP is adjusted with Optuna to enhance the mean accuracy across various folds. Through iterative analysis of various hyperparameter combinations, Optuna can ascertain the parameters that yield optimal performance as delineated in Equation (1).

3.4. Performance Evaluation and Selection of the Best Model

In this study, the confusion matrix (CM), precision, recall, accuracy, F1-score, ROC curves, and the Area Under the Curve (AUC) score are used to evaluate the performance of the different models. For a three-class classification problem, the CM is a 3 × 3 matrix in which each row represents the actual class and each column represents the predicted class (see Table 1). Each diagonal element is the number of correct predictions for the corresponding class (TP), while off-diagonal elements represent misclassifications (False Positives (FP) and False Negatives (FN) for each class):

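True Positives ($TP_i$): the number of instances correctly predicted as class $i$: $TP_i = C_{ii}$, where $C_{ij}$ is the CM entry in row $i$ (actual class) and column $j$ (predicted class).

False Positives ($FP_i$): the number of instances that do not belong to class $i$ but are incorrectly predicted as class $i$: $FP_i = \sum_{j \neq i} C_{ji}$.

False Negatives ($FN_i$): the number of instances that belong to class $i$ but are incorrectly predicted as another class: $FN_i = \sum_{j \neq i} C_{ij}$.

True Negatives ($TN_i$): all remaining instances, which neither belong to nor are predicted as class $i$: $TN_i = \sum_{j \neq i} \sum_{k \neq i} C_{jk}$.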

Table 2 presents the performance metrics derived from the CM [35]. As the problem is a three-class classification, the performance metrics are evaluated for each class $i$ ($i = 1, \dots, N$), where

$\mathrm{Accuracy}_i$: represents the accuracy of the model in detecting and classifying class $i$.

$\mathrm{Precision}_i$: the proportion of positive predictions for class $i$ that are correct.

$\mathrm{Recall}_i$: the proportion of actual instances of class $i$ that are correctly detected.

$\mathrm{F1}_i$: the harmonic mean of $\mathrm{Precision}_i$ and $\mathrm{Recall}_i$.

Macro avg: computes a performance metric such as precision or recall for each class and then takes the arithmetic mean across all classes. The macro average assigns equal weight to each class, irrespective of the number of instances.

Micro avg: derived from the pooled $TP_i$, $FP_i$, $FN_i$, and $TN_i$ of all classes. It aggregates the contributions of all classes before computing the metric, assigning equal weight to each sample.

$N$: number of classes in the target variable.
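The per-class counts and the macro and micro averages can be computed directly from a confusion matrix, as in the sketch below. The 3 × 3 matrix is a hypothetical example (rows: actual ISC, heat, nail; columns: predicted), not a result of this study. For single-label multi-class data, the micro-averaged F1 equals overall accuracy, because the pooled FP and FN counts are both the total number of off-diagonal entries.

```python
def per_class_counts(cm):
    """(TP, FP, FN, TN) for each class; rows = actual, columns = predicted."""
    n = len(cm)
    total = sum(map(sum, cm))
    counts = []
    for i in range(n):
        tp = cm[i][i]
        fp = sum(cm[j][i] for j in range(n)) - tp  # column i minus the diagonal
        fn = sum(cm[i]) - tp                       # row i minus the diagonal
        tn = total - tp - fp - fn
        counts.append((tp, fp, fn, tn))
    return counts


def safe_div(a, b):
    return a / b if b else 0.0


def macro_micro_f1(cm):
    counts = per_class_counts(cm)
    f1s = []
    for tp, fp, fn, _ in counts:
        p, r = safe_div(tp, tp + fp), safe_div(tp, tp + fn)
        f1s.append(safe_div(2 * p * r, p + r))
    macro = sum(f1s) / len(f1s)  # every class weighs the same
    tp, fp, fn = [sum(c[k] for c in counts) for k in range(3)]  # pooled counts
    micro_p, micro_r = safe_div(tp, tp + fp), safe_div(tp, tp + fn)
    micro = safe_div(2 * micro_p * micro_r, micro_p + micro_r)
    return macro, micro


cm = [[8, 1, 1],   # actual ISC
      [0, 10, 0],  # actual Heat
      [2, 0, 8]]   # actual Nail
macro, micro = macro_micro_f1(cm)
```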

The receiver operating characteristic (ROC) curve graphically illustrates model performance across different decision thresholds. It is obtained by computing the true positive rate (TPR) and false positive rate (FPR) at each candidate threshold, typically at predetermined intervals, and plotting the TPR against the FPR. The area under the ROC curve (AUC) is the probability that the model ranks a randomly chosen positive instance higher than a randomly chosen negative one. An AUC of 1 means that the model always ranks a randomly chosen positive instance above a randomly chosen negative instance, whereas an AUC of 0.5 corresponds to random ranking. Because it is based on ranking, the AUC does not depend on any particular threshold.
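The ranking interpretation of the AUC can be computed directly by comparing every positive score with every negative score (ties count one half), which gives the same value as the area under the empirical ROC curve. For the three-class problem, one common option, assumed here for illustration, is to apply it one-vs-rest: class i is treated as positive and the other two classes as negative. The scores and labels below are hypothetical.

```python
def auc_by_ranking(scores, is_positive):
    """AUC = P(random positive scores higher than random negative); ties count 1/2."""
    pos = [s for s, p in zip(scores, is_positive) if p]
    neg = [s for s, p in zip(scores, is_positive) if not p]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# One-vs-rest for class "ISC": hypothetical predicted P(ISC) and true labels.
p_isc = [0.9, 0.5, 0.4, 0.6, 0.2]
true = ["ISC", "ISC", "Heat", "Nail", "Heat"]
auc_isc = auc_by_ranking(p_isc, [t == "ISC" for t in true])
```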

The evaluation and comparison of the best model will be based on the recall for each class, the macro F1-Score, and the ROC-AUC metrics. Furthermore, the accuracy in identifying each category will be taken into account.

3.5. Feature Importance Using SHAP

SHAP (SHapley Additive exPlanations) is an effective method for explaining machine learning models by quantifying the contribution of each feature to the model's predictions. It is founded on cooperative game theory: each feature is treated as a player, and its Shapley value is its weighted average marginal contribution to the prediction over all possible subsets (coalitions) of the other features. The resulting attribution distributes the prediction fairly among all features. Consequently, SHAP offers a more rigorous evaluation of feature importance than conventional techniques such as tree-based rankings, and it enables the identification of the critical features driving the model's predictions [36].
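The game-theoretic idea can be illustrated with an exact computation on a toy model. In the minimal sketch below, which is not the SHAP library's algorithm, the value of a coalition S is the model output when the features in S take the explained instance's values and all other features are fixed at a single baseline. This baseline choice is an assumption for simplicity; SHAP implementations typically average over a background dataset instead. The model, instance, and baseline are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def coalition_value(model: Model, x: Sequence[float], baseline: Sequence[float],
                    coalition: Sequence[int]) -> float:
    """Model output with features in `coalition` taken from x, others from baseline."""
    z = [x[j] if j in coalition else baseline[j] for j in range(len(x))]
    return model(z)


def shapley_values(model: Model, x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values by enumerating every coalition (O(2^M) model calls)."""
    m = len(x)
    phi = [0.0] * m
    for i in range(m):
        others = [j for j in range(m) if j != i]
        for size in range(m):
            # Shapley weight |S|! (M - |S| - 1)! / M!
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                with_i = coalition_value(model, x, baseline, subset + (i,))
                without_i = coalition_value(model, x, baseline, subset)
                phi[i] += weight * (with_i - without_i)
    return phi


if __name__ == "__main__":
    # Hypothetical model: an additive term plus an interaction between z1 and z2.
    def toy_model(z: Sequence[float]) -> float:
        return 2 * z[0] + z[1] * z[2]

    print(shapley_values(toy_model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]))
```

For this toy model the additive feature receives its full contribution (2), the interaction term (6) is split equally between the two interacting features (3 each), and the attributions sum to the difference between the explained prediction and the baseline prediction (8), which is the additivity property that gives SHAP its name.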

4. Results

This section presents the results obtained with the proposed approach. The experiments were performed on a workstation equipped with an Intel Core i7 CPU (3.6 GHz), 32 GB of RAM, and an NVIDIA RTX 3060 GPU. Hyperparameter tuning was performed with Optuna (50 trials per model). Owing to differences in model complexity, training took on average 40 min for XGBoost and 2.5 h for TabNet. Once trained, inference on a single sample takes approximately 6 ms for TabNet (on the GPU) and 0.3 ms for XGBoost (on the CPU). Both models are therefore suitable for real-world applications in which low-latency prediction is crucial. The dataset is described first, and the results are then presented and compared.
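Per-sample inference times of the kind reported above can be measured with a small timing harness. The sketch below is an assumption about a reasonable protocol (a few warm-up calls, then the median over repeated single-sample predictions), not the authors' documented procedure; `predict_one` is a hypothetical callable standing in for a trained model.

```python
import statistics
import time
from typing import Callable, Sequence


def per_sample_latency_ms(predict_one: Callable[[Sequence[float]], object],
                          sample: Sequence[float], warmup: int = 10,
                          repeats: int = 200) -> float:
    """Median wall-clock latency, in milliseconds, of single-sample predictions."""
    for _ in range(warmup):  # trigger lazy initialisation and warm caches before timing
        predict_one(sample)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        predict_one(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


if __name__ == "__main__":
    # Hypothetical stand-in for a trained classifier: a fixed linear decision rule.
    weights = [0.4, -0.2, 0.7]

    def dummy_predict(x: Sequence[float]) -> bool:
        return sum(w * v for w, v in zip(weights, x)) > 0

    print(f"{per_sample_latency_ms(dummy_predict, [1.0, 0.5, -0.3]):.4f} ms")
```

The median is used rather than the mean so that occasional operating-system interruptions do not dominate the estimate. For GPU inference, the timed call should block until the result is available (for example, by copying the prediction back to the CPU); otherwise asynchronous kernel launches make the measured latency look shorter than it is.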

4.1. Dataset

The goal of the fractional thermal runaway calorimeter (FTRC) is to measure the mass and heat released by a battery cell during thermal runaway [37]. In [38], the authors carried out comprehensive experiments and established a substantial open-source database of battery failure cases. This dataset covers several commercial cell types and their respective failure causes. This extensive collection of experimental data, spanning different cell manufacturers and abuse types, serves as a valuable benchmark. The thermal runaway of each cell was initiated using three distinct abuse methods: internal short circuit (ISC), external heating (heat), and nail penetration (nail).

Table 3 summarizes the quantified characteristics and the sources of the open-source battery failure data.

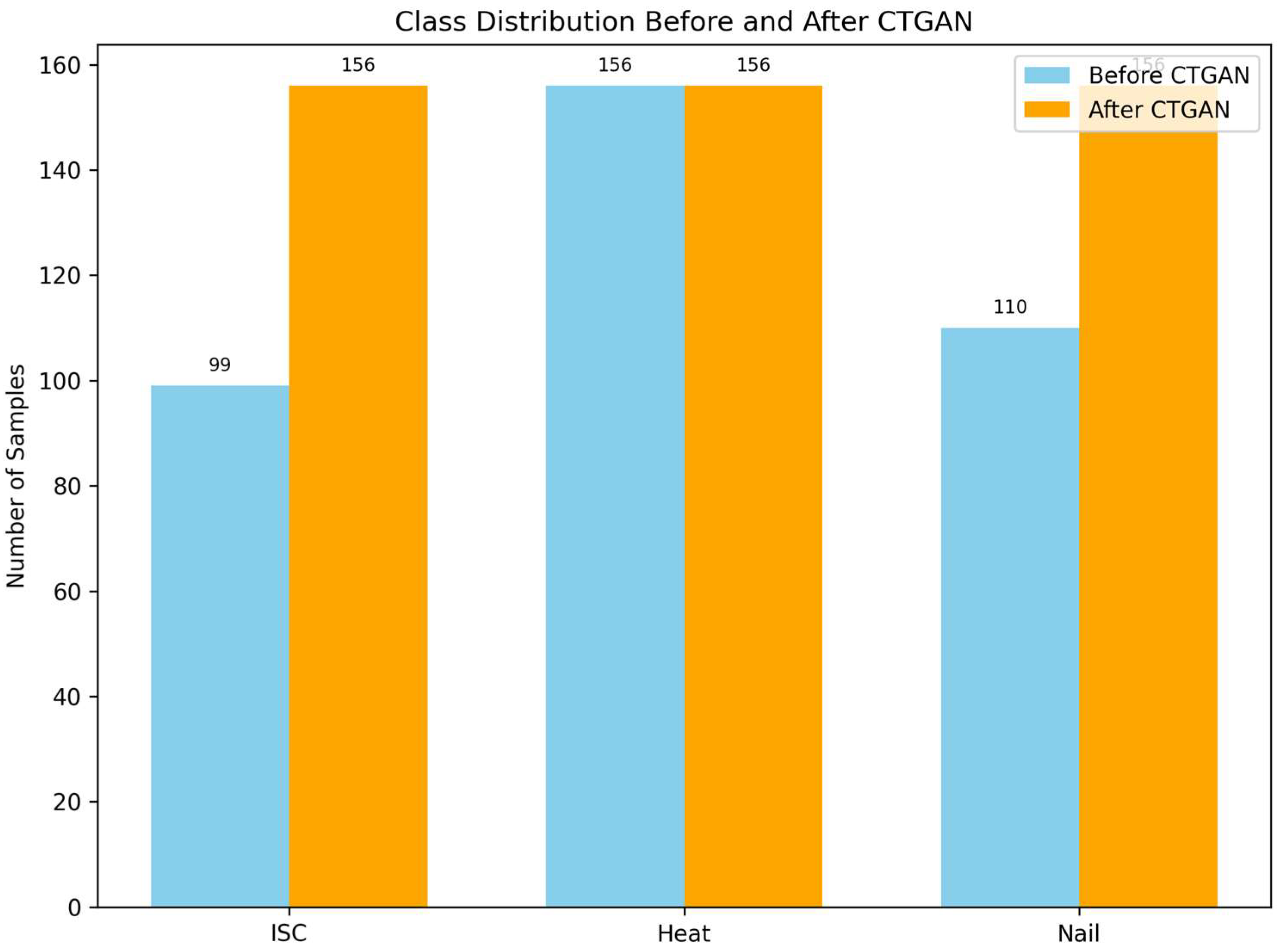

Figure 2 shows the class distribution in the dataset before and after applying CTGAN. As illustrated in this figure, the class distribution before CTGAN was uneven, with 99 samples for ISC (27.12%), 156 for heat (42.74%), and 110 for nail (30.14%). To handle this class imbalance, CTGAN, conditioned on the class label, was used to generate synthetic samples for the minority classes, ISC and nail. After resampling, the dataset is balanced, with 156 samples per class, so every class receives equal representation. A balanced class distribution is expected to make the trained classifier more robust and fair and to improve its ability to generalize.
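The class shares and the balancing targets follow from simple counting. The sketch below reproduces the percentages reported above and computes how many synthetic rows each class needs, assuming that all original samples are kept and that synthetic rows are added until every class matches the majority class. The generation step itself (conditional sampling from a CTGAN model fitted on the data) is not shown, and the helper names are hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, Iterable


def class_shares(labels: Iterable[Hashable]) -> Dict[Hashable, float]:
    """Percentage of samples belonging to each class."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {c: 100.0 * n / total for c, n in counts.items()}


def synthetic_quota(labels: Iterable[Hashable]) -> Dict[Hashable, int]:
    """Synthetic samples each class needs to reach the majority-class count."""
    counts = Counter(labels)
    target = max(counts.values())
    return {c: target - n for c, n in counts.items()}


if __name__ == "__main__":
    labels = ["ISC"] * 99 + ["heat"] * 156 + ["nail"] * 110
    print({c: round(p, 2) for c, p in class_shares(labels).items()})
    print(synthetic_quota(labels))
```

Under this assumption, 57 synthetic ISC samples and 46 synthetic nail samples are needed to bring both minority classes to 156, while the heat class is left unchanged.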

4.2. Results and Discussion

The performance metrics of the four models are reported in Table 4, which compares the models before and after applying CTGAN and Optuna in terms of per-class recall, macro-average F1-score, and overall accuracy. Before CTGAN and Optuna were applied, all models showed clear performance limitations, especially on the minority classes, ISC and nail: none of them reliably identified these less frequent events (recall between 0.1 and 0.66). Overall accuracy (between 68% and 82%) and the macro-average metrics were also lower, reflecting the negative influence of class imbalance and suboptimal hyperparameters on model performance. These results show that both data balancing and hyperparameter optimization are needed to improve the reliability and fairness of the classification.

The XGBoost model outperforms all other models on every evaluation criterion, achieving a recall of 1.00 for all three target classes; that is, it detects every ISC, heat, and nail instance. Its macro F1-score is also 1.00, showing that its classification is both balanced and precise. This high performance, including 100% accuracy, is reached after CTGAN-based class balancing and Optuna-based hyperparameter tuning, which improve the training conditions. However, similar results may not be obtained on larger or more complex datasets.

The TabNet model achieves a recall of 1.00 for the heat and nail classes. However, its detection of the ISC class is weaker, with a recall of 0.75. It achieves a macro F1-score and an overall accuracy of 0.93. These results indicate that TabNet is a competitive DL model that balances recall and precision well, particularly for imbalanced datasets.

The MLP model performs less well. It achieves a recall of 1.00 for the nail class, a high recall of 0.91 for the heat class, and a moderate recall of 0.71 for the ISC class, with a macro F1-score of 0.87 and an accuracy of 0.87. These results suggest that the MLP may misidentify ISC cases while successfully classifying the nail and heat classes; such misclassification poses a high risk in safety applications. The SVM model shows the worst performance, especially for the ISC class, for which both precision and recall are 0.00, meaning that the SVM fails completely to detect ISC events. Its macro F1-score is 0.59 and its accuracy is 0.73, indicating poor generalization across the three classes. This failure suggests that SVM is not appropriate for this application.

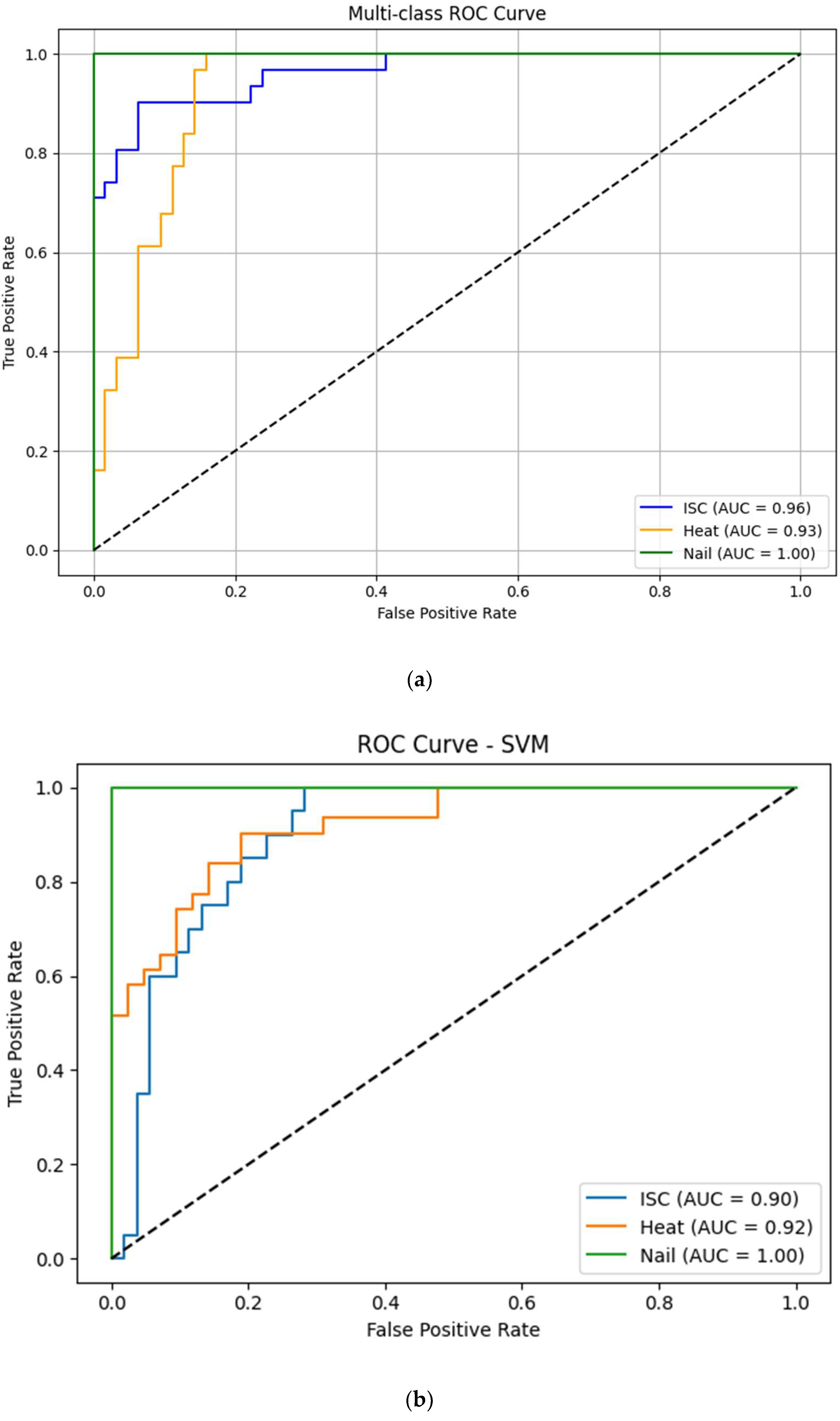

Figure 3 shows the ROC curves and AUC values of the four models. XGBoost and TabNet outperform the other models, with AUC values of 1. These results indicate that both models separate each failure type from the others perfectly and cope well with the originally imbalanced data. The MLP model also performs well, with AUC values of 0.96 for ISC, 0.97 for heat, and 1 for nail, demonstrating a strong ability to identify the different failure types, especially nail. Its lower performance on ISC and heat suggests that the MLP may be sensitive to the feature representation or the class distribution. The SVM model performs worst, with AUC values of 0.9 for ISC, 0.92 for heat, and 1 for nail, indicating difficulty in distinguishing the ISC and heat classes. One possible explanation is that the SVM kernel does not capture the high-dimensional, nonlinear structure of the TR data. Overall, XGBoost and TabNet outperform MLP and SVM in detecting and classifying the TR causes of LIBs. These findings support the use of EL and advanced tabular DL methods for critical classification tasks in battery diagnostics.

Although the proposed approach performs well, the dataset used in this study has an important limitation. While it reflects real TR situations, it is limited in size and may not encompass all the variability and complexity of real lithium-ion battery systems. To confirm the efficiency and reliability of the proposed approach, future work should therefore focus on collecting and combining larger, more complex datasets from various sources and operating settings. This would not only improve the model's generalizability but also support its use in real-world battery monitoring applications, where safety is critical.

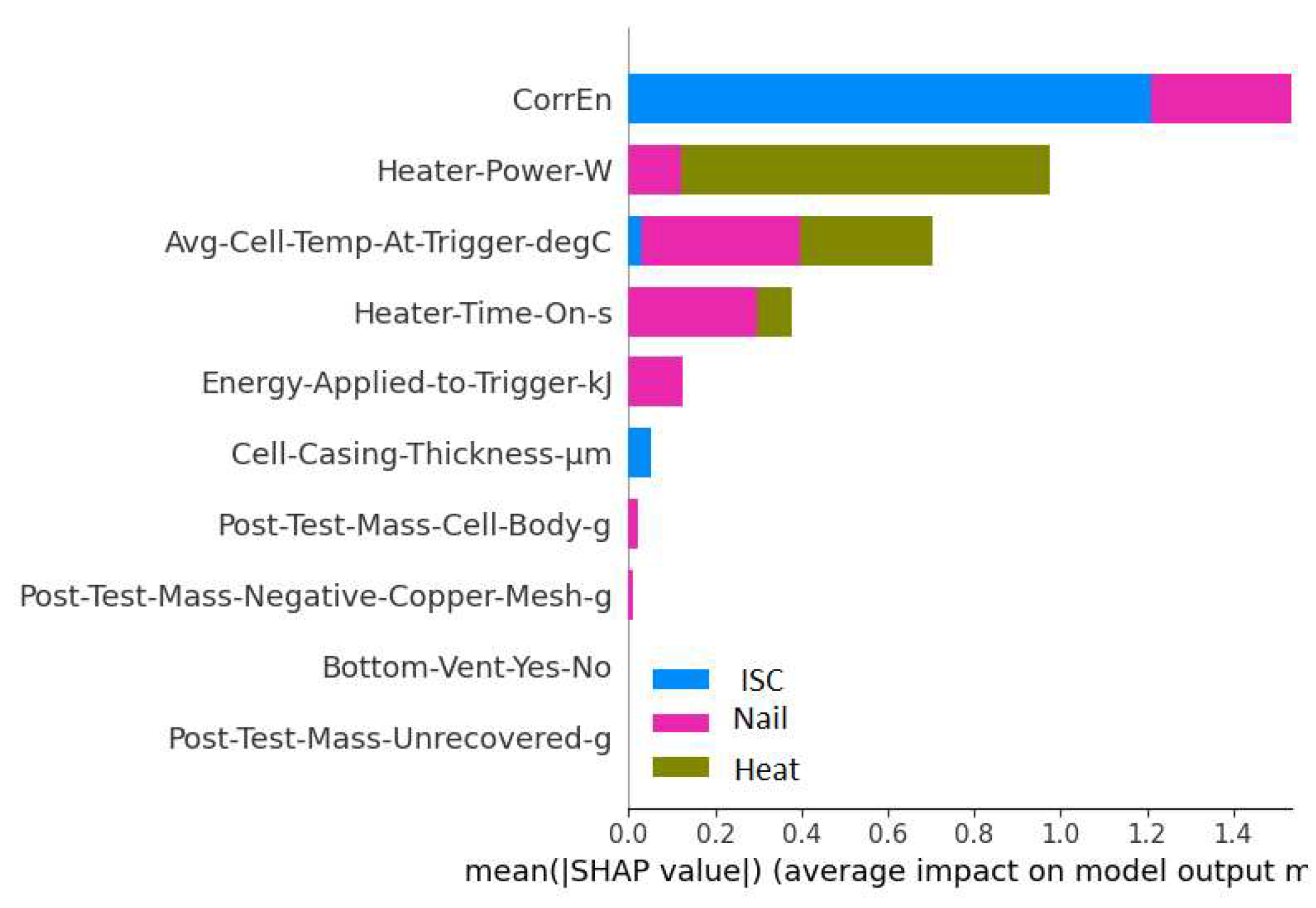

4.3. SHAP Plots

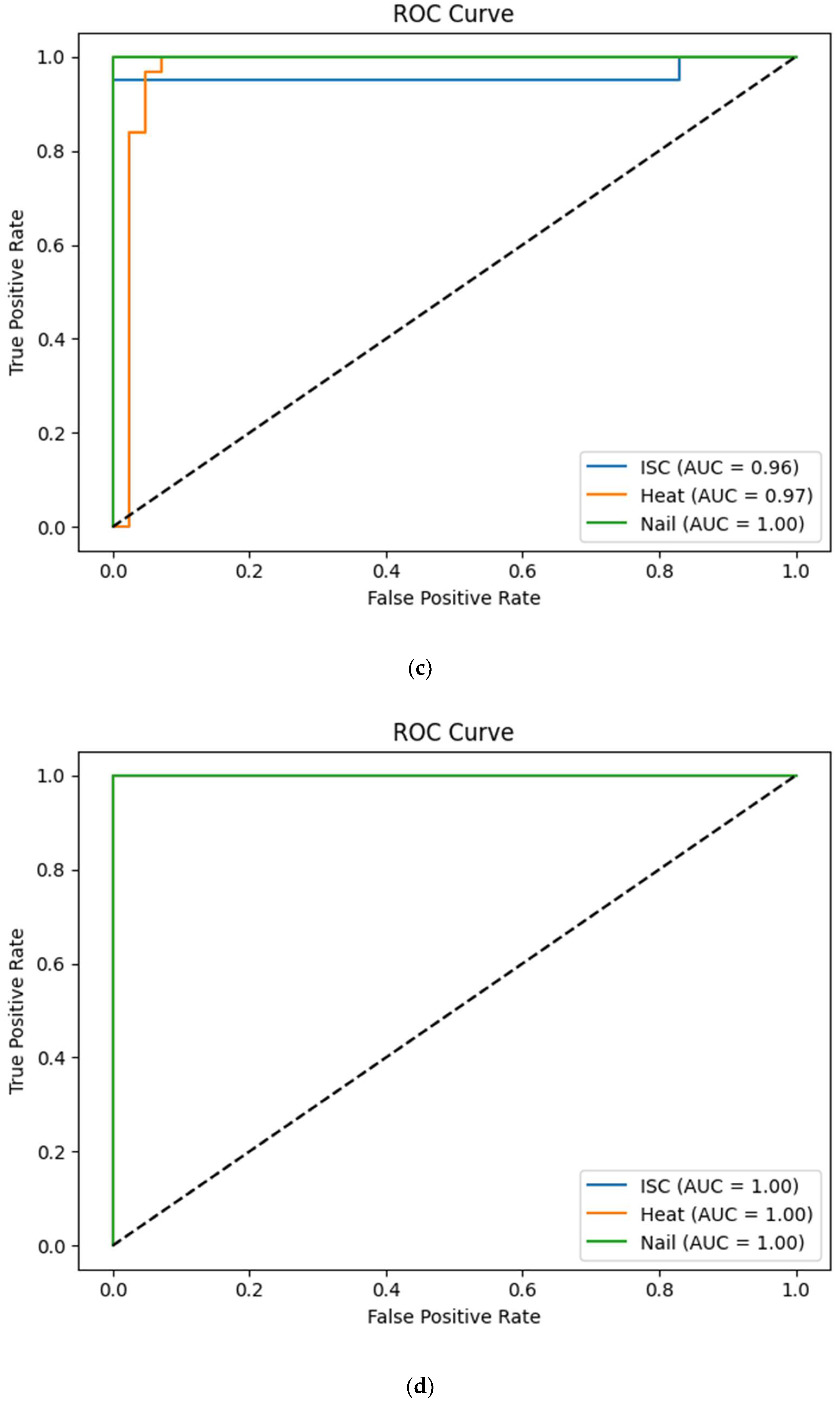

Figure 4 shows the SHAP summary plot, which presents the ten most important features affecting the identification of the TR causes: internal short circuit (ISC), nail penetration, and external heat. As illustrated in this figure, the most significant feature overall is CorrEn, the energy corrected for heat and mass loss. This variable captures the effective thermal output after accounting for heat losses and unrecovered mass [39]. CorrEn therefore serves as the primary physical indicator of both the severity and the nature of the failure event. However, its influence is concentrated on two failure types, ISC and nail. Because CorrEn reflects the effective energy released during TR, corrected for heat and mass losses, it serves as a key indicator of the severity associated with ISC- and nail-induced failures, and it shows a strong association with these events. Although CorrEn is not a causal factor, its high SHAP importance suggests that it acts as a reliable indicator of the severity typically associated with these abuse conditions.

The other important features are heater power (W), average cell temperature at trigger (°C), and heater time on (s). These features are strongly influenced by external thermal input, which is significant for heat-triggered events. Nevertheless, the “Heater Time On (s)” feature should not directly influence nail penetration events, since no external heating is applied during this type of test. Its apparent influence on nail-triggered cases is likely due to correlations in the data or to the samples generated by CTGAN. For heat-induced events, “Heater Time On” is directly related to the duration of external thermal exposure during testing. Prolonged heating can accelerate electrochemical reactions, raise internal pressure, and compromise separator integrity, and can thus lead to TR. Other features, such as the post-test mass of the negative copper mesh and the status of the bottom vent, have a moderate impact. They reflect the mechanical and structural effects of the failure, and their smaller but consistent contribution helps the model distinguish failures caused by nail penetration from those caused by ISC.
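Summary plots of this kind order features by the magnitude of their SHAP values. The minimal sketch below assumes a multi-class model for which per-class SHAP matrices (samples × features) are already available, for instance from a SHAP explainer, and ranks features by the sum over classes of their mean absolute SHAP values, which is how stacked multi-class bar summaries are typically ordered. The feature names echo those discussed above, but the SHAP values are invented for illustration.

```python
from typing import Dict, List, Sequence, Tuple

ShapRows = Sequence[Sequence[float]]  # n_samples x n_features SHAP values for one class


def mean_abs_shap(rows: ShapRows) -> List[float]:
    """Mean absolute SHAP value of each feature over the samples."""
    n = len(rows)
    return [sum(abs(r[j]) for r in rows) / n for j in range(len(rows[0]))]


def rank_features(shap_by_class: Dict[str, ShapRows], names: Sequence[str],
                  top_k: int = 10) -> List[Tuple[str, float, Dict[str, float]]]:
    """Rank features by the sum over classes of their mean |SHAP| values.

    Returns (feature, total, per-class contribution) tuples, most important first.
    """
    per_class = {c: mean_abs_shap(rows) for c, rows in shap_by_class.items()}
    ranking = []
    for j, name in enumerate(names):
        parts = {c: vals[j] for c, vals in per_class.items()}
        ranking.append((name, sum(parts.values()), parts))
    ranking.sort(key=lambda item: item[1], reverse=True)
    return ranking[:top_k]


if __name__ == "__main__":
    names = ["CorrEn", "Heater Power (W)", "Heater Time On (s)"]
    # Invented SHAP values: two samples per class, three features.
    shap_by_class = {
        "ISC": [[0.8, -0.1, 0.0], [-0.6, 0.1, 0.1]],
        "heat": [[0.1, 0.5, 0.4], [-0.1, -0.3, 0.2]],
        "nail": [[0.7, 0.0, -0.2], [0.5, 0.1, 0.0]],
    }
    for name, total, parts in rank_features(shap_by_class, names):
        print(name, round(total, 2), {c: round(v, 2) for c, v in parts.items()})
```

Keeping the per-class breakdown alongside the total shows whether a top-ranked feature is important for all classes or, as in these invented numbers, mainly for a subset of them.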

4.4. Comparison

Table 5 compares the AUC and accuracy values of existing models with those of the proposed approach. The models proposed by Choi, Y., and Park, P. [40] employ different ML, EL, and DL algorithms, such as SVM, Naive Bayes (NB), Decision Tree (DTE), and MLP. Among these models, DTE achieved the best performance, with an overall AUC of 0.9, showing high efficacy in identifying the three failure causes. It performs particularly well in ISC and nail classification, with AUC values of 0.9296 and 0.9922, respectively. The proposed approach achieves high performance with the TabNet and XGBoost models. The TabNet model reaches AUCs of 0.97, 0.96, and 1.0 for heat, ISC, and nail, respectively, and an overall AUC of 0.93. XGBoost outperforms all other models, with AUC and accuracy values of 1. This improvement can be attributed to the ability of TabNet and XGBoost to capture complex relationships and dependencies between features. In addition, using a data augmentation technique such as CTGAN to handle imbalanced data can improve model accuracy and detection efficacy. CTGAN can improve model robustness by generating realistic, varied synthetic samples, conditioned on the class label, that resemble the existing dataset. More data improves overall detection and classification accuracy by allowing models to learn more generalizable features, particularly for less frequent failure types such as ISC.

Furthermore, a state-of-the-art hyperparameter optimization tool such as Optuna enables automatic parameter tuning. Systematically searching the hyperparameter space with Optuna further improves the performance of models such as XGBoost, TabNet, and MLP. This helps ensure that the models are trained with the best parameter configuration found during the search, which improves generalization and speeds up convergence. As a result, the models can reach near-perfect predictive accuracy. In failure prediction, Optuna's ability to fine-tune complex models is valuable because even small improvements in accuracy can have significant real-world consequences.

4.5. Theoretical Basis and Reliability of the Proposed Method

To achieve precise and effective classification, the proposed approach combines a tabular DL model, Optuna for automated hyperparameter tuning, CTGAN to manage class imbalance, and XAI tools. CTGAN can generate diverse, high-quality synthetic samples conditioned on class labels. By producing more data for the minority classes, it addresses the class imbalance problem and mitigates the resulting classifier bias and degraded performance. A classifier trained on more general patterns yields more reliable predictions. Optuna efficiently explores different hyperparameter configurations using the Tree-structured Parzen Estimator (TPE), a Bayesian optimization algorithm. This helps reduce overfitting and improves performance and generalization. Stratified k-fold cross-validation, in which each fold preserves the class distribution of the full dataset, increases the robustness of the results and makes the evaluation less prone to bias toward any particular class. It therefore gives more reliable estimates of generalization to new data and of performance metrics such as accuracy, precision, and recall. Explainable AI (XAI) methods are employed to improve model transparency and foster trust. The outputs of these techniques, such as feature importance and insights into the decision-making process, allow stakeholders to understand, validate, and troubleshoot model predictions using domain knowledge.
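Stratification can be sketched without any library: the indices of each class are shuffled and dealt round-robin into the folds, so every test fold receives approximately the same class proportions as the full dataset. This is a simplified illustration with a hypothetical helper name; scikit-learn's `StratifiedKFold` is the usual practical choice and may allocate samples somewhat differently. The example uses the pre-augmentation class counts from Section 4.1 and 3 folds.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_k_fold(labels: Sequence[Hashable], k: int = 3,
                      seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return (train_idx, test_idx) pairs whose test folds preserve class ratios."""
    rng = random.Random(seed)
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds: List[List[int]] = [[] for _ in range(k)]
    position = 0  # carried across classes so that fold sizes stay balanced
    for label in sorted(by_class, key=str):
        indices = by_class[label]
        rng.shuffle(indices)
        for idx in indices:
            folds[position % k].append(idx)
            position += 1

    splits = []
    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        splits.append((train, test))
    return splits


if __name__ == "__main__":
    labels = ["ISC"] * 99 + ["heat"] * 156 + ["nail"] * 110
    for fold, (train, test) in enumerate(stratified_k_fold(labels, k=3)):
        counts = {c: sum(1 for i in test if labels[i] == c) for c in ("ISC", "heat", "nail")}
        print(fold, len(train), len(test), counts)
```

Because each class is dealt out evenly, every test fold contains each class in a count within one sample of its overall share divided by k, so no fold is left without minority-class examples.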

5. Conclusions

Electric vehicles rely on lithium-ion batteries for energy storage. However, their safety remains a concern because of the risk of thermal runaway. Abusive conditions such as overcharging, overdischarging, or physical damage can trigger this chain reaction, potentially leading to fires or explosions that may seriously endanger health, property, and life. Over the past five years, there have been at least sixty significant fire incidents involving lithium-ion batteries. To address this issue, machine learning and deep learning have recently been employed to detect potential thermal runaway in lithium-ion batteries. By enabling the early detection and prevention of thermal runaway, these technologies can help ensure the safety of electric vehicles and energy storage applications. This study proposes an optimized machine learning approach to identify and classify the principal TR causes. CTGAN, a generative AI model, was used to address class imbalance by generating additional synthetic samples, improving classification accuracy across the LIB abuse test scenarios (ISC, heat, and nail tests). Optuna was used to tune the hyperparameters of four models: SVM, MLP, TabNet, and XGBoost. Optuna uses the results of previous trials to guide the sampling of new hyperparameter configurations, improving both search efficiency and model performance. To ensure a fair evaluation and limit overfitting, 3-fold cross-validation was used for model validation. The proposed approach was tested and compared using an open-source database of battery failure cases covering several commercial cell types and their respective failure causes. The results indicate that XGBoost and TabNet outperformed the other ML and DL techniques. In particular, the proposed approach with the XGBoost model achieved accuracy and recall values of 1.00 for the three considered failure causes.

This result is attributed to the use of CTGAN-based data augmentation to address class imbalance, which improves model accuracy and detection efficacy. CTGAN improves model robustness by producing realistic and diverse synthetic samples, conditioned on the class label, that mirror the existing dataset. More data helps the models learn more general features, which improves detection and classification, especially for less frequent failure types such as ISC. Moreover, Optuna automates hyperparameter tuning, improving the performance of models such as XGBoost and TabNet. Compared with existing methods, the proposed approach may also contribute to better energy efficiency and lower emissions: earlier and more precise identification of TR causes enables proactive battery management, which can reduce the likelihood of harmful emissions, avoid unnecessary battery degradation, and make energy use more efficient. Additionally, the approach supports robust validation and transparency by using Optuna for hyperparameter tuning and integrating XAI techniques. Explainable AI techniques were used to identify the features with the greatest influence on the classification of TR causes. The results showed that the energy corrected for heat and mass loss (CorrEn) is the most important attribute for ISC and may also influence nail failures. Other essential features include heater power (W), average cell temperature at trigger (°C), and heater time on (s). These features are strongly affected by external thermal input, which drives heat-triggered events. It is important to note that these features do not necessarily cause TR but are indicative of conditions under which TR is more likely to occur. The SHAP analysis highlights their predictive importance through statistical correlation, not physical causality, and further investigation is needed to establish the underlying mechanisms driving these associations.

The dataset used covers the three considered TR causes, but it is limited in size and may not capture the complex scenarios encountered in real lithium-ion battery systems. It is therefore essential to test the proposed approach on a larger and more complex dataset that includes a wider range of factors and usage conditions. Building a comprehensive, multi-source dataset would allow the model to be better adapted to various industrial applications.