Abstract

Given the climate change in recent decades and the ever-increasing energy consumption in the building sector, research is widely focused on the green revolution and ecological transition of buildings. In this regard, artificial intelligence can be a precious tool to simulate and optimize building energy performance, as shown by a plethora of recent studies. Accordingly, this paper provides a review of more than 70 articles from recent years, i.e., mostly from 2018 to 2023, about the applications of machine/deep learning (ML/DL) in forecasting the energy performance of buildings and their simulation/control/optimization. This review was conducted using the SCOPUS database with the keywords “buildings”, “energy”, “machine learning” and “deep learning” and by selecting recent papers addressing the following applications: energy design/retrofit optimization, prediction, control/management of heating/cooling systems and of renewable source systems, and/or fault detection. Notably, this paper discusses the main differences between ML and DL techniques, showing examples of their use in building energy simulation/control/optimization. The main aim is to group the most frequent ML/DL techniques used in the field of building energy performance, highlighting the potentiality and limitations of each one, both fundamental aspects for future studies. The ML approaches considered are decision trees/random forest, naive Bayes, support vector machines, the Kriging method and artificial neural networks. The DL techniques investigated are convolutional and recursive neural networks, long short-term memory and gated recurrent units. Firstly, various ML/DL techniques are explained and divided based on their methodology. Secondly, grouping by the aforementioned applications occurs. It emerges that ML is mostly used in energy efficiency issues while DL in the management of renewable source systems.

1. Introduction

1.1. Background

The energy issue is important today and will be even more so in the years to come, given the ongoing climate change and the increased energy consumption worldwide. It is estimated that the building sector is responsible for consuming approximately 40% of primary energy in Europe [1] and 32% in the world [2]. More specifically, this energy consumption becomes even more massive considering the thermal energy requirement for heating in locations with temperate and cold climates [3]. Furthermore, in recent decades, climate change and global warming have been modifying buildings’ energy performance, especially in large urban centres [4], with the consequence of an increasing need for thermal energy. Thus, since the construction sector is responsible for more than one third of energy consumption and climate-changing emissions, at the national, community and global levels, it is necessary to intervene, such as researching innovative technologies and methods that are climate-change-resilient.

Also from a community and regulatory point of view, the awareness of the need to minimize consumption and environmental impact emerges. The “2030 Framework for Climate and Energy Policies” of 2013 [5] highlighted the importance of the issues of energy independence and efficiency for Europe, thus implementing a large-scale process, currently ongoing. The community purpose is to achieve a reduction of at least 40% in the emissions for each Member State compared to the 1990 levels and the generation of at least 27% more renewable energy. In this way, the European Union (EU) directives favour an ecological transition at the macroscopic levels, considering economic incentives too. Furthermore, the “Roadmap for moving to a competitive low-carbon economy in 2050” (EU COM112/2011) envisages an even more massive reduction in climate-changing emissions, i.e., 80–95% by 2050, compared to the levels recorded in 1990 [6]. Moreover, the EU prescribes the target of nearly zero-energy buildings (nZEBs) for all new public buildings from 2019 and for all new ones from 2021 [7,8].

In Italy, the Minimum Requirements Decree [9] imposes several limits for minimum energy requirements and thermal transmittance in the design of high-performance buildings depending on the climate zone. Moreover, the recent economic incentive of the 110% Superbonus tried to speed up the energy renovation of the national building stock.

1.1.1. Building Energy Optimization

Minimizing the heating/cooling demand of buildings is possible with optimization methods. The study by Cho et al. [10], for example, refers to an energy-saving potential by balancing heating systems in buildings while guaranteeing the occupants’ thermal comfort. This can be extended to space conditioning in general, considering both heating and cooling systems. Optimization techniques turn out to be the key to the problem of minimizing energy consumption and emissions. Optimization enables the rationalization of decision-making processes, that is, finding the best solution while taking into account the chosen criteria and the imposed constraints. The optimal value obtained can be—for instance—the best result in terms of energy consumption or the lowest cost for obtaining good energy performance. Sometimes, the solution is not optimal but sub-optimal, especially in energy simulation problems where the domain can be huge [11]. Optimization can also consist of the implementation of brute-force or smart search algorithms, where a dynamic energy simulation tool (e.g., EnergyPlus v.8) works with an “optimizer” engine (e.g., MATLAB® R2021b) to select energy efficiency measures [12].

The green revolution must start from the energy transition of buildings through methodologies and technologies that can promote efficient retrofit strategies in relation to two different perspectives: the public perspective, which aims to minimize energy consumption and climate-changing emissions to promote sustainable development and energy independence; the private perspective, which is mainly interested in minimizing global costs while maintaining indoor comfort [13,14]. Generally, the decision maker has to find the optimal solution, taking into account multiple and sometimes opposite aims from public (e.g., public administration) and private (e.g., citizens and private promoters) actors. Thus, the best solution comes from a multi-objective optimization, exploring in this way different solutions and evaluating them with multiple criteria. Multi-objective optimization may concern the following, for example:

- Minimization of CO2-eq emissions [15];

- Achievement of thermal comfort [16];

- Minimization of energy requirements [17,18];

- Minimization of global costs [19].

The most used dynamic building simulation tools are EnergyPlus [20], TRNSYS [21], ESP-r [22] and IDA ICE [23], with good reliability regarding the simulation of energy retrofit measures and their performance but not totally user-friendly. Moreover, these tools sometimes risk high computational burden, from several hours to several days. Therefore, it is necessary to resort to faster methods, which using optimization algorithms, can manage a greater amount of data. In the energy efficiency sector, the most common algorithms are the simulation-based ones, where the simulations continue until a settled tolerance parameter is achieved. Generally, commercial programs find this parameter in a semi-random way, running several simulations in order to theoretically reach an acceptable value of tolerance. There are different types of simulation-based algorithms for optimization, such as the following:

- Differential evolution algorithms within multi-objective optimization models—for example, Wang et al. [24] used this method to determine optimal solutions for lifecycle costs of building retrofit planning;

- Meta-heuristic optimization algorithms—for example, Suh et al. [25] used the heuristic and meta-heuristic approach for the energy optimization of a post office building;

- Genetic algorithms—for example, Ascione et al. [26] proposed a new methodology for the multi-objective optimization of building energy performance and thermal comfort using a genetic algorithm to identify the best retrofit measures, and Hamdy et al. [27] developed a comprehensive multi-step methodology to find the cost-optimal combination of energy efficiency measures to achieve the standard of a nearly zero-energy building (nZEB);

- Particle swarm optimization algorithm—for example, Ferrara et al. [28] used this method to find the cost-optimal solution for building energy retrofit.

Innovative methodologies are therefore revolutionizing the world of energy efficiency, with dynamic simulation tools to model the building–plant system and through machine/deep learning techniques, mainly physically informed, and multi-objective numerical optimization methods to address building energy design.

The brute-force method (also known as exhaustive search) is an algorithm for solving a given optimization problem by investigating all of the theoretically possible solutions to find the optimal one.

However, when the solution domain is wide, such an algorithm becomes unfeasible from the computational viewpoint; thus, more efficient optimization methods are required [29]. In this regard, genetic algorithms have been frequently used for building energy optimization. They allow for even very complex optimization problems to be solved and are based on various phases reminiscent of Darwin’s evolution theory, simulating the evolution of a population of individuals (solutions) through the process of generation, selection, mutation, crossover and survival. For instance, a genetic algorithm application can be found in [30], where various multi-objective techniques were evaluated for the energy optimization of a hospital building in various possible scenarios. The objective in this case was to reduce overall costs and greenhouse gas emissions.

Another algorithm frequently used for building energy optimization is the particle swarm optimization, which reproduces dynamic collective systems of agents, such as swarms, used from an optimization perspective. It draws inspiration from some models that simulate the social behaviour of animals, such as flocks of birds that move in sync when searching for food. From this perspective, in particle swarm optimization, movement rules are assigned to individual agents who lead them to explore the spatial domain in search of critical points, e.g., the ones that minimize a certain objective function. An application of particle swarm optimization can be found in [31], where a multi-objective optimization of building energy performance was carried out. The particle swarm optimization method was used in conjunction with EnergyPlus to identify valid energy efficiency solutions.

In the multi-objective approach, the cost-optimal approach is largely used in order to reduce both lifecycle costs and energy consumption [27,32]. Multi-objective methods are often enriched to take into account other issues, e.g., discomfort and greenhouse gas emissions. An application of the multi-objective approach is the study of Wang et al. [24], where an optimization model for the lifecycle cost analysis and retrofitting planning of buildings was presented, considering different possible retrofit options in order to best use the available budget. A similar approach was used by Ascione et al. [26,33] for cost-optimal analyses by means of the multi-objective optimization of building energy performance. The cost optimal method can be found in the legislation for reference buildings too, which simplifies the approach and reduces the computational time for dynamic analyses. In this way, however, the best retrofit is designed only for the reference buildings [34], while with innovative techniques, e.g., neural networks, it is possible to analyse numerous case studies more efficiently. Due to its versatility, the cost-optimal method has been widely used in various scientific studies, both for residential buildings [35] and those belonging to historical heritage [36]. In this last study, the cost-optimal approach was applied by adopting a mainly macroeconomic method in order to define reference buildings for historical structures too, so as to quickly identify the best retrofit measures.

1.1.2. Surrogate Models: Physically Informed and Data-Driven

Machine/deep learning techniques are often used to create surrogate models, also called meta-models or “models of the model”, to simplify and speed up tools for building performance simulation and optimization, since in traditional approaches, the computational time can be excessive or unfeasible. The starting model can be replaced by a surrogate model, which reduces the complexity of the problem, thereby facilitating the search for the best solution. The surrogate models created via machine/deep learning can be based on the results of simulated data from physical models, i.e., physically informed models. In other cases, such models could be obtained from real data, such as those from monitoring and data acquisition, i.e., statistical data-driven models. Physically informed models (1) and statistical data-driven models (2) are better explained below:

- Physically informed models can solve supervised learning tasks while respecting the different physics laws described, e.g., using non-linear partial differential equations; thus, the model can be trained to respect both the differential equations and the given boundary conditions. Physics-informed machine learning extracts physically relevant solutions from complex modelling problems, even partially understood and without sufficient quantity of data, through learning models informed by physically relevant predetermined information [37].

- Data-driven models are computational models that work with historical data previously collected, e.g., by monitoring, and can link inputs and outputs by identifying correlations between them. In other words, data-driven approaches include raw data from real experience and observations. These procedures have the advantage of identifying correlations between variables and can lead to the discovery of new scientific laws or forecasting without the availability of predetermined laws [38]. Statistical data-driven models include statistical assumptions concerning the generation of sample data and are the basis of data-driven models’ functioning. Therefore, they have a set of statistical assumptions with a certain property, that is the assumption that allows for the probability of any event to be calculated.

Physically informed models find application, for example, in energy performance forecasting for building or stock/categories of buildings [33]. Statistical models are largely used in many sectors, e.g., the prediction of indoor air quality [39].

In the field of energy engineering, Swan et al. [40] identified two main techniques for estimating building stock energy consumption: top-down and bottom-up models. The first kind uses historical aggregate data to regress the energy consumption of the stock as a function of input variables such as macroeconomic indicators, e.g., energy price, and climate. In other words, top-down approaches can obtain outcomes while neglecting the complex building dynamic behaviour. On the other hand, bottom-up approaches operate at a disaggregated level because they use a representative set of buildings to extrapolate energy consumption as the outcome. This characterization has been amplified over time. Langevin et al. [41] identified methods as being white-box or black-box approaches too. The first ones assume knowledge of the building thermal balance and the resolution of physical equations, while the second ones are based on accumulated building data and implementations of forecast models developed by machine learning techniques. This new approach also permits other variables, e.g., occupants’ energy-related behaviours, environmental boundary conditions and uncertainties, to be taken into account. The idea is to bridge the gap between data-driven and physically informed procedures and tools towards an integrated and unified approach that can overcome the limitations of each technique by coupling them so that the advantages of one method can counteract the drawbacks of the other. In this way, the modelling strategy combines white-box and black-box approaches, resulting in a simplified physical approach, named grey-box, which is extremely useful when a problem cannot be completely solved by applying only one of the previously described methods.

1.2. Scope and Objectives

The present review paper tries to accurately synthesize the large amount of existing knowledge in order to discuss machine/deep learning applications in the building energy field. It focuses on recent studies, mostly from 2018 to 2023. This paper makes an accurate distinction between machine/deep learning techniques based on the used approach in order to highlight which approaches are mostly used in the field of building simulation, optimization and management. The investigated techniques are divided into four application fields:

- Energy design/retrofit optimization and prediction of energy consumption;

- Control and management of heating/cooling systems;

- Control and management of renewable energy source systems;

- Fault detection.

The main aim of this study is to guide current and future researchers and professionals in their decision to use one artificial intelligence method over another depending on the purpose of their research/task and their desired level of accuracy.

1.3. Significance and Relevance

Nowadays, the energy issue is of increasing importance, given global warming and the need to make buildings more energy-efficient. The most common reasons for pushing to resolve this issue are the need to consume and pollute less, and to improve thermal comfort at reduced overall costs. The ability of modern computers to store big amounts of data and to investigate them using queries means that cutting-edge artificial intelligence techniques can be exploited in the energy sector to improve the performance of buildings based on data monitoring and forecasting. In this way, occupants’ thermal comfort can be improved, greenhouse gas emissions and costs can be reduced, and energy can be saved without waste. The use of machine/deep learning therefore allows for the optimized design of a building–plant system and the consequent reduction in environmental impact.

This paper highlights which machine/deep learning approaches are best suited to the specific problem to be addressed, e.g., consumption forecasting and systems’ management, reporting results of previous studies as applications.

The main aspects that emerge from this study are as follows:

- Different machine or deep learning methods can be used to pursue the same goal, e.g., decision trees/random forest and neural networks are both frequently used for consumption prediction and systems’ control;

- More than one approach can be applied to obtain a more reliable result, e.g., using both machine and deep learning methods;

- Until now, most of the studies conducted are based on the energy performance optimization of individual buildings, while building stocks are still scarcely investigated. This aspect should be addressed to provide a large-scale ecological transition;

- Furthermore, numerous studies investigated the residential sector, which covers a significant part of the world’s building stock. However, studies concerning other energy-intensive building sectors are still few and, in any case, fewer in number than those concerning the residential sector. Also, this aspect needs to be addressed.

1.4. Previous Reviews on the Topic

Previous reviews on the subject have underlined the increasingly frequent use in recent times of artificial intelligence techniques to forecast building performance or to optimize its design and/or operation.

Predicting the energy performance of a building–plant system and the possibilities for improving indoor comfort are topics in which the use of artificial intelligence and techniques such as machine/deep learning are most frequent. The integration of occupant behaviour within the energy simulation of buildings is necessary for accurate and realistic modelling, as highlighted by Bordeau et al. [42]. In that review paper, particular attention was paid to data-driven methods. Given the ever-increasing progress of data transmission and exchange that allows for a connection between users, objects and networks, the need to store historical data in order to analyse them at a later time or to exploit them to predict certain behaviours thus emerged. This is possible with data-driven methods, as examined in the work by Mousavi et al. [43]. In that review, machine/deep learning techniques that find the most frequent application in energy prediction and management problems were analysed. Moreover, the focus was on green energy supply, occupants’ comfort and the use of the internet of things for energy issues. Particular attention was paid to data-driven studies, which have found increasingly widespread applications since 2019. Studies in which artificial intelligence was applied to energy-related engineering issues appeared to be more frequent in China and in the United States. That review also paid particular attention to the control and management of energy resources and the maintenance of indoor thermal comfort through machine learning methods. Specifically, in order to optimize occupants’ thermal comfort, data-driven methods were used to monitor air quality with sensors.

While most scientific studies focus on the energy performance of individual buildings, the review by Fathi et al. [44] researched machine/deep learning applications on an urban scale in order to predict energy consumption and to achieve better future management of energy resources. That paper put together such applications of building energy performance forecasting based on the learning method, building type, energy type, input data and time scale. Until now, the research highlights have shown how most attention has been paid to commercial, residential and educational buildings at both the individual and urban levels. More specifically, at the individual level, the most commonly investigated seemed to be commercial buildings while at the large-scale urban level, residential buildings. Furthermore, techniques such as artificial neural networks and support vector regression were mostly used in energy problems referring to a single building. These methods were also very popular in urban-scale approaches, where the random forest/decision trees methods are widely used.

The common objectives of numerous scientific studies on the subject therefore appear to be the minimization of energy consumption, the control and optimization of ventilation and air conditioning (HVAC) systems, the monitoring of indoor air quality to achieve thermal comfort and the reduction in environmental impact. Artificial intelligence, in particular, machine/deep learning techniques, allows for optimal solutions to be found in the cases mentioned above, with the possibility of adopting a holistic approach, therefore satisfying multiple needs. As reported in the review by Tien et al. [45], the search for the best method to solve a specific type of energy issue can be quite complex. That review analysed the most frequent machine/deep learning methods for building energy efficiency issues. In particular, deep learning techniques such as convolutional neural networks could be used as internal sensing to detect occupants’ presence and indoor air quality; this approach was more widely used after the COVID-19 pandemic.

The review by Pan et al. [46] referred to machine learning techniques too, in which the possibility of linking machine learning algorithms and optimization methods was investigated, using building information modelling (BIM) too. Thus, the user could work on the digital twin created in a BIM environment in which all data derived from the sensors are integrated to obtain more reliable and realistic results to reduce energy consumption.

1.5. Rationale for this Review

This paper aims to enhance the mentioned review papers by reporting recent examples of machine and deep learning applications in various fields of building energy simulation, optimization and management. The most widespread artificial intelligence techniques in this sector are outlined in order to direct future studies/research in this regard. In this way, it is possible to draw inspiration from this review by considering both the pros and cons of each described method in order to understand its applicability and limitations. Furthermore, knowledge gaps to overcome for future studies are identified in Section 1.3.

1.6. Outline of this Review

This paper provides a review of more than 70 articles from recent years, i.e., mostly from 2018 to 2023, about the applications of machine/deep learning to forecast the energy performance of buildings and their simulation/control/optimization. The SCOPUS database was used with the keywords “buildings”, “energy”, “machine learning” and “deep learning”. Recent papers were selected from these application fields: energy design/retrofit optimization, prediction, control/management of heating/cooling systems and of renewable source systems, and fault detection.

The remainder of this paper is organized as follows: in Section 2, the most frequent machine and deep learning methods in the simulation/optimization/control of building energy performance are examined; in Section 3, examples of these methods are reported, divided per application (as aforementioned); and finally, in Section 4, the main conclusions are drawn.

2. Machine and Deep Learning Methods

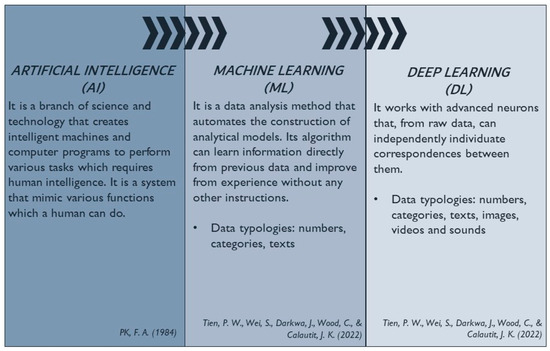

Artificial intelligence (AI) is a branch of science and technology that creates intelligent machines and computer programs to perform various tasks that would require human intelligence. It includes systems that mimic various functions that a human can perform [47]. The most common artificial intelligence (AI) techniques are machine and deep learning. They are both largely used in the energy sector.

Machine learning (ML) is a branch of AI and is a data analysis method that automates the construction of analytical models. In ML methods, data consist of texts, categories or numbers. The algorithm is a computational method capable of learning information directly from previous data, improving from experience without any other instructions [45]. In this way, ML methods can autonomously identify patterns and make decisions with minimal human intervention. As detailed in Section 2.1, they can be classified into the following:

- Physically informed or data-driven (statistical);

- Supervised, unsupervised or semi-supervised.

Many ML methods are based on artificial neural networks (ANNs), inspired by the functioning of the human brain, better explained in Section 2.1.3. They are computing systems made up of interconnected units (neurons) that process information by responding to external inputs using synapses, thus transmitting/handling/combining the relevant information between different units. ANNs can be used to replace a more complex model: when substitution with the surrogate model is advantageous since it is easier to manage, or when the starting model is completely non-existent.

A preliminary sensitivity analysis (SA) can optimize ANN generation and detect the parameters that affect the model’s outputs the most. SA is one of the most commonly applied parametric screening methods, used to reduce the dominant parameters influencing the outputs. SA finds application in various types of problems and allows for a reduction in computational burden. Generally, SA and parametric identification are conducted with reference to data disturbed by noise and allows for the effectiveness and robustness of the proposed procedure to be appreciated. SA is an expression of feature engineering, which refers to the process of selecting and transforming variables/features in a dataset while building a predictive model using machine learning methods. Feature engineering can extract useful properties from data using domain knowledge or established transformation methods. In this way, it is possible to prepare the correct input dataset, compatible with the requirements of the machine learning algorithm, and to improve model performance. Common feature engineering problems include cleaning data, handling missing data and transforming data. The processes of handling and transforming data generally involves scaling, clustering to group data and encoding data to create categories. As underlined by Boeschoten et al. [48], missing data can be a problem in datasets with many variables and a low frequency of entries. Missing values in a dataset can cause errors and poor performance with some machine learning algorithms. However, there are various possible approaches to solving the problem of missing data:

- Variable deletion involves deleting variables with missing values. Dropping certain data can be useful when there are many missing values in a variable and the variable is of relatively minor importance;

- Average or median imputation is commonly used with non-missing observations and can be applied to a feature that contains numeric data;

- The most common value method replaces missing values with the highest value tested in a function and can be a good option for managing categorical functions.

ML operations start from data acquisition and processing, in which, through statistical techniques, it is possible to filter only some data and to discard other data based on predetermined parameters depending on the issue to be managed. The time frame to be investigated must be carefully predetermined. The data derived from this phase are subsequently pre-processed and processed to obtain raw data that can be easily used in the algorithm, reducing its complexity. Finally, the model is tested for its prediction capacity, and the results are characterized by precise parameters that define its goodness of fit.

ML is useful for modelling and predicting outcomes. Examples of ML applications in the energy field include the design and optimization of a building–plant system in order to achieve, for instance, reductions in energy consumption, costs, greenhouse gas emissions, discomfort, etc.

Deep learning (DL) can be regarded as a subsection of ML, as showed in Figure 1. While in ML, the algorithm is able to learn from input data, in DL methods, it is possible to identify correlations between data and their characteristics too, using neural networks capable of managing a great amount of data, even of different natures and of high dimensions. DL is based on ANNs too but can also work with advanced neurons and raw data. The types of input data are different between ML and DL techniques, since in DL images, videos and sounds can be handled too. Similarly to ML, supervised DL makes forecasting processes and unsupervised DL identifies the correlations among the data [45].

Figure 1.

Differences between machine and deep learning. PK, F.A. (1984) [47]. Tien, P. W., Wei, S., Darkwa, J., Wood, C., & Calautit, J. K. (2022) [45].

DL is frequently used in energy consumption prediction, thermal comfort forecasting, occupancy and activity recognition, and fault detection. The only negative aspect of DL is the time needed for testing and training models, since in DL, there are many hidden layers between the input and output layers in order to achieve more reliable results.

In Section 2.1, there is first a focus on ML functioning and on its most commonly recurring methods in the building energy field, with some examples of applications; then, the same approach for DL methods is used in Section 2.2.

2.1. Machine Learning Methods

As anticipated in Section 2, ML is a set of techniques that can automatically distinguish patterns in data, and then predict future data or perform other kinds of decision making under uncertainty [44].

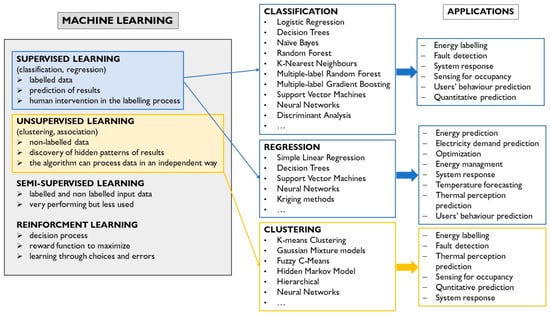

The three main types of machine learning are supervised learning, unsupervised learning and reinforcement learning, as summarized in Figure 2. These approaches have different ways of training models and thus have different processes of making the model learn from the data. Naturally, each approach can solve a precise type of problem or task depending on the different “strengths” of the approach itself and on the various categories of data to be handled. The main ML typologies are classification, regression analysis, clustering and data dimensionality reduction [44].

Figure 2.

Machine learning methods.

Supervised learning enables the building of a model starting from labelled training data, i.e., data about the feature, that can make predictions on unavailable or future data. Supervision here means that in the dataset, the desired output signals are already known because they have been previously labelled. Thus, in supervised learning, it is possible to obtain a map of inputs–outputs, both already labelled in the training phase, that aims to forecast the correct output when a different input is entered into the model in the test phase. This approach is useful for classifying unseen datasets and predicting outcomes once the model has learned the relationships between the inputs and outputs. Supervised learning requires human intervention in the labelling process, which is why it is called “supervised”. This ML method finds application, for instance, in prediction problems (e.g., forecasting future trends) and in the identification of precise categories (e.g., classifying images, texts and words), since it is able to classify objects and features.

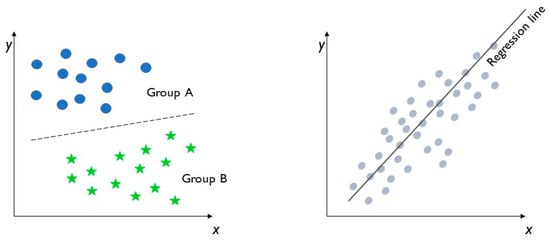

Supervised learning manages two types of problems, classification and regression, which are discussed below in (1) and (2). Their schemes are reported in Figure 3.

Figure 3.

Supervised learning: classification (left) and regression (right).

- (1)

- In classification problems, the goal, based on the analysis of previously labelled training datasets, is to predict the labelling of future data classes. Labels are discrete and unordered values that can be considered to belong to a known group or a class. Thus, in this case, the output is a category. Through a supervised machine learning algorithm, it is possible to separate two classes and to associate the data, based on their values, to different categories, as in Figure 3, on the left. The inputs and outputs are both labelled, so the model can understand which features can classify an object or data.Depending on the number of class labels, it is possible to identify three types of classification: binary classification, multiple-class classification and multiple-label classification. In binary classification, the model can apply only two class labels, such as in logistic regression, decision trees and naive Bayes. In multiple-class classification, the model can apply more than two class labels, such as in random forest and naive Bayes. In multiple-label classification, the model can apply more than one class label to the same object or data, such as in multiple-label random forest and gradient boosting.

- (2)

- In regression analysis, the approach is similar to classification but the output variables are continuous values or quantities. It is generally used to predict outcomes after the identification of connections between the input- and output-labelled data. The most used regression analysis supervised learning methods are simple linear regression and decision tree regression, as in Figure 3, on the right. In simple linear regression, the model firstly identifies the relationships between the inputs and outputs; then, it can forecast a target output from an input variable. In decision tree regression models, the algorithm structure is similar to a tree, with many branches. They are frequently used both in classification and regression problems. The dataset is divided into various sub-groups to identify correlations between the independent variables. Thus, when new input data are integrated in the model, correct outcomes can be predicted with the regression analysis on the previous data.

In unsupervised learning, unlike supervised learning, there are unlabelled data or unstructured data. This method is often used to make groups of data with similar features or to identify patterns and trends in a raw dataset. This is a more practical approach in comparison to supervised learning because human intervention is only involved in the selection of parameters such as the number of cluster points, while the algorithm processes data in an independent way, finding unseen correlations among them. Moreover, unsupervised learning can manage unlabelled and raw data, which are quite common.

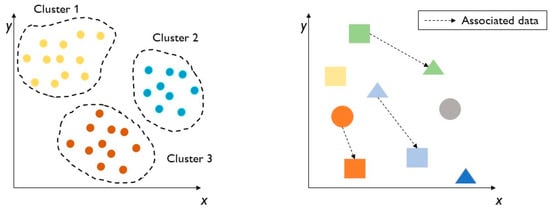

There are two main techniques for unsupervised learning, clustering (3) and association (4), as reported in Figure 4.

Figure 4.

Unsupervised learning: clustering (left) and association (right).

- (3)

- Clustering is an exploratory technique that allows raw data to be aggregated within groups called clusters, of which there is no previous knowledge of group membership. Clustering is an excellent technique that allows the structure to be found in a collection of unlabelled data, as in Figure 4, on the left. The main aim is to put an object in a precise cluster depending on its characteristics and to ensure that the cluster to which it belongs is dissimilar to other clusters [44]. The total number of clusters has been previously defined by data scientists, and it is the only human intervention in the clustering process. Irregularities can be found in the data located outside of the clusters, i.e., such data do not belong to the main clusters. The most commonly used clustering unsupervised identification methods are k-means clustering and Gaussian mixture models. In k-means clustering, k is the number of clusters, which are defined by the distance from the centre of each group. It is a useful method to identify external data, overlapping parts in two or more clusters, and data that belong only to one cluster. Gaussian mixture models are based on the probability that a precise datum or object belongs to a cluster.

- (4)

- Association is the process of understanding how certain data features connect with other features. This association between variables can be mapped, as happens in e-commerce, where some products are recommended because they are similar to others that the customer wants to buy. An association scheme is reported in Figure 4, on the right.

Sometimes, a dimension reduction is useful in reducing the number of random variables through feature selection or feature extraction, as reported by Fathi et al. [44]. This reduction can cause lower predictive performance, but it can also make the dimensional space more compact to keep only the most important information.

Semi-supervised learning can also be regarded as a type of machine learning, besides supervised, unsupervised learning and reinforcement learning, discussed later. Unlike unsupervised learning, among the data in the training set, only a few of them have been previously labelled while the remaining are unlabelled. When there is a need to manage labelled and unlabelled data together, this method can be really useful.

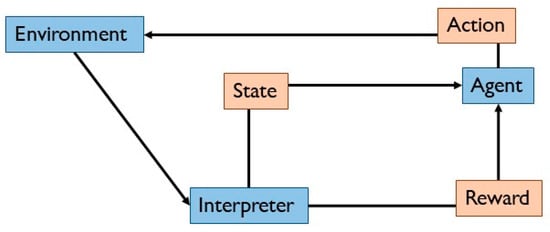

Another type of machine learning is reinforcement learning, whose scheme is reported in Figure 5. The main aim is to build a system that improves performance through interactions with the environment. It has an agent that maps the situation to maximize its numerical cumulative reward signal via a trial-and-error process [45]. The reward signal is an immediate benefit of the precise state, while the cumulative signal is the one that the agent wants to maximize through precise behaviour. The agent learns how to perturb the environment using its actions to derive the maximum reward. In order to improve the functionality of the system, reinforcements are introduced. This reinforcement is a measurement of the quality of the actions undertaken by the system. That is why it cannot be assimilated into supervised learning methods.

Figure 5.

Reinforcement learning.

The most common methods of ML are the following ones [44,49]; they will be detailed in the following sections:

- Decision trees and random forest;

- Naive Bayes;

- Support vector machines (SVMs);

- Kriging method;

- Artificial neural networks (ANNs).

Many of these use the mathematical modelling of training data when the nature of the data is not completely known at the beginning of the process. Depending on the data to manage, multiple methods are often used to make groups of data or to forecast future data as outcomes [50].

Some examples of their use in the scientific literature on building energy simulation and optimization are reported in Section 3.

2.1.1. Decision Trees and Random Forest

Decision trees are a particular type of machine learning technique that fits predictive models and/or control models well. As reported by Somvanshi et al. [51], the decision trees approach is one of the most useful and powerful algorithms in data mining because it is able to manage many, heterogeneous and eventually damaged data.

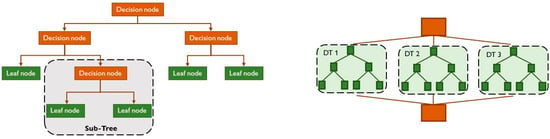

In the decision tree structure, there are two node types: decision nodes and leaf nodes. The first kind is the basic nodes; they take decisions, and from them, various branches start and represent the whole dataset. The second kind can be seen as final outputs without incoming branches. Leaf nodes are the response values until the final leaf, which represents a possible solution [52]. Other internal nodes have one incoming branch and different outcoming ones. Sometimes, the leaf may have a probability vector that indicates the probability of the target attribute assuming a certain value [49]. The problem to be solved is based on predetermined conditions to be respected. It is called a “tree” because the functioning scheme resembles a simplified natural tree, with a root node at the base and various branches that expand from it to form a tree structure, as can be seen in Figure 6 on the left.

Figure 6.

Decision trees (DTs) (left) and random forest (right).

This approach is useful both in classification and regression problems. When the target variable can take a discrete set of values, the models are called classification trees. In these tree structures, leaves represent class labels and branches represent conjunctions of features that lead to those class labels. When the target variable can take continuous values, e.g., real numbers, the models are called regression trees.

The CART algorithm (classification and regression tree algorithm) can create a tree. Its functioning is based on a simple categorical architecture of “yes” and “no”; thus, it works as a binary tree [51]. A decision tree can mimic the human thinking process when a decision is made; therefore, it is simple to apply. Moreover, the algorithm can be divided in different sub-trees to further simplify its structure through the splitting process, which divides the decision node into sub-nodes according to boundary conditions. A splitting tree can also be called a sub-tree. Sometimes, it is useful to prune the tree, removing any unwanted branches from the tree, i.e., from the decision algorithm. Similarly to genetic algorithms, the root node is the “parent node” and the other nodes are called “child nodes”.

To predict the class of the given dataset, the root nodes are the starting points of the algorithm; the inserted attribute value and the recorded value are compared; and then, the information goes further to another node, i.e., a sub-node, where, again, another comparison is performed between the attribute and recorded value. The process continues until the leaf node is reached. Then, how is the best attribute for each node selected? It is selected by using the attribute selection measure (ASM). There are two types of ASM: information gain and the Gini-index. The information gain measures the entropy change after the subdivision of an attribute dataset, with the aim to maximize the value of information gain. The Gini-index measures the impurity or purity used while creating a decision tree in the CART algorithm, with the purpose of maximizing the Gini-index. In Equation (1) [53], the Gini impurity expression is reported, where Pi is the probability of an object being classified as a particular class and c is the number of classes.

Naturally, any small changes in the input data can change the overall look of the decision tree [51].

In cases of overfitting, too many layers or too big decision trees can be handled with the random forest algorithm, useful for more complex problems. In the same way as decision trees, random forest algorithms find applications both in classification and regression. As reported in [54], the random forest approach only needs two parameters to create a prediction model, i.e., the number of regression trees and the number of evidential features that are used in each node to make regression trees grow. Thus, random forest algorithms combine multiple classifiers to solve complex problems and to improve the performance of the model, as reported in the scheme in Figure 6, on the right. The more decision trees there are, the greater the accuracy of the results. One decision tree may not lead to the correct output but different decision trees working together can.

In the following, various applications of decision trees and random forest methods in the building energy sector are presented.

Chou et al. [55] used classification and regression tree models for the prediction of heating and cooling loads in comparison with SVMs (support vector machines) and ANNs (artificial neural networks) to obtain an energy-efficient building design. Almost 800 case studies were considered to observe that all the aforementioned methods were extremely valid in energy consumption forecasting, but the best ones were SVMs and ANNs working together because of their accuracy and reliability.

Better results were obtained for decision trees in the study by Sapnken et al. [56], in which energy consumption was estimated for many buildings using nine ML approaches. Among them, decision trees showed the highest computational efficiency and the best learning speed.

The decision tree method was also applied in a recent study by Cai et al. [57], in which a greenhouse’s internal temperature was predicted and controlled via microclimate modelling. Controlling a greenhouse to improve its energy efficiency is fundamental to increasing crop yield. That study was based on a gradient boost decision tree, a particular type of decision tree, and the dataset was obtained following five years of monitoring. The results showed a particular fitting ability and a notable predictive accuracy.

Chen et al. [58] focused on energy consumption predictions using a level-based random forest classifier. In particular, to forecast the energy load and to compare its value with historical data, a regression model was applied, organized on multiple levels. Excellent performances have been discovered for this method. The only negative aspect is that, when the number of levels of the random forest algorithm increased, its accuracy seemed to reduce.

2.1.2. Naive Bayes

Naive Bayes is a classifier algorithm created in the 1960s, based on Bayes’ theorem. It is a family of statistical classifiers used in ML. It is called “naive” because the starting hypotheses are very simplified. In particular, the various characteristics of the model are considered independent of each other. To use the Bayesian classifier, it is necessary to previously know or estimate the probabilities of the problem [49]. Therefore, it is a probabilistic algorithm: it calculates the probability of each label for a given object by looking at its characteristics. Then, it chooses the label with the highest probability. To calculate the probability of labels, the Bayes’ theorem in Equation (2) [49] is used.

In Equation (2), P(A|E) is a conditional probability of event A considering the information on event E, and it is also called the posterior probability of event A because it depends on the value of E. Similarly, P(E|A) is the conditional probability of event E considering the information about event A, and it is also called the posterior probability of event E because it depends on the value of A. P(A) is the a priori probability of A, i.e., the probability of event A without considering event E, and it is also called the marginal probability of A. In the same way, P(E) is the a priori probability of E, i.e., the probability of event E without considering event A, and it is also called the marginal probability of E. As just illustrated, this method is entirely based on conditional probability, i.e., the probability of an event when some information is already given. The Naive Bayes algorithm represents a family of algorithms. The most recurrent ones are as follows:

- Naive Bayes categorical classification, where data have a discrete distribution;

- Naive Bayes binary classification, where data assume values of 0 or 1;

- Naive Bayes integer and float classification, where a naive Gaussian classifier is used [59].

The Naive Bayes algorithm is quite simple to use. Despite that, it still solves some classification problems very well today with reasonable efficiency. Moreover, it is able to handle incomplete datasets thanks to its ability to identify the relationships between the input data [60].

However, its application is limited to a few specific cases. The problem is that this algorithm requires knowledge of all the data for the problem, especially simple and conditional probabilities. This information is often difficult to obtain a priori. The algorithm provides a simple approximation of the problem because it does not consider the correlation between the characteristics of instances.

In the following, various applications of the naive Bayes method in the building energy sector are presented.

In [60], the Bayesian approach was used to forecast electricity demand in residential buildings’ smart grids, with prediction time lapses of 15 min and 1 h. The dataset for the Bayesian network was made up of real measurements from sensors. In particular, this method was successfully applied to find the dependencies between different contributing factors in the prediction of energy demand, since the model to be assessed was complex and had many variables.

Hosamo et al. [61] used the Bayesian method for improving building occupant comfort for two non-residential Norwegian buildings. In this work, the building information model was enriched both by historical data and real-time data obtained from sensors that registered occupants’ feedback. In this way, more accurate management and control of the plant system could be achieved with less energy consumption. The results showed significant energy-savings.

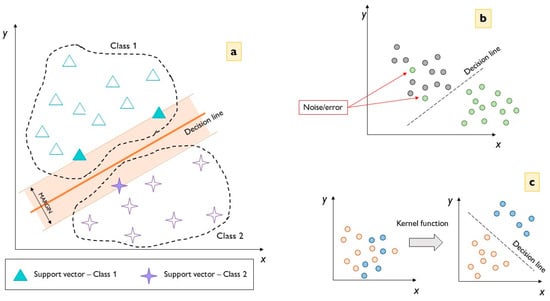

2.1.3. Support Vector Machines (SVMs)

Support vector machines (SVMs) are another typology of ML and can be used for both regression and classification tasks [55], but they are widely used in the latter problems. SVMs were first introduced by Vapnik et al. [62] only for linear classification and are still used nowadays for more complex problems too [54].

The application of SVMs requires positioning the dataset within a hyperplane consisting of multiple planes where each one represents a characteristic of the problem. The objective of SVMs is to find the class separation line that maximizes the margin, i.e., the distance between data points of both classes. This approach is necessary because there are various possible hyperplanes that can be chosen to separate two classes of data and the maximum margin approach enables more reliability in the classification of future data [51].

Once the hyperplane is known, the algorithm calculates its distance from the sides of the given dataset. Only when this distance is maximized for both sides is the decision boundary identified. The process of calculating distance is repeated for each possible hyperplane in order to find the best decision boundary with the maximum distance from the dataset.

The hyperplane is a line in a R2 feature space (as in Figure 7a) and a plane in R3 if there are three input features. Naturally, the feature space RM with M-features can have more than three dimensions too but becomes more complex. Thanks to a hyperplane, data points can be classified more easily since it represents a decision boundary that determines the position of points in the space.

Figure 7.

SVM hyperplane: (a) feature space scheme; (b) noise example; (c) Kernel function.

In order to identify a hyperplane, only a minimum amount of training data, the so-called support vectors, is used. Hence, the name of the model family. Support vectors are the points belonging to the dataset with minimum distance from the hyperplane, and sometimes, this distance is assumed to be zero. In this latter case, the support vectors reside on the edge of the margin. Through this process, the maximum margin of the classifier is identified. Changing the position of the support vector consequently changes the position of the hyperplane too, while changing the position of other data will not move the hyperplane.

Sometimes, it is not possible to identify a line as a hyperplane but a curve to divide data. Curve decision boundaries can still be used, but a linear boundary can simplify the approach. In this way, noise is eliminated but is then necessary to neglect some data points that could create noise problems, as in Figure 7b. Anyway, with non-linear data, the Kernel spatial function has to be used in a multi-dimensional feature space (Figure 7c) [51].

The main difference between the SVMs and traditional classification algorithms is that, while a logistic regression learns to classify by taking the most representative examples of a class as a reference, SVMs look for the most difficult examples, those that tend to be closer to another class, i.e., the support vectors. Naturally, if the margin is greater, the distance between the classes will be greater, with less possibility of confusion and more reliability.

In the following, various applications of SVMs in the building energy sector are presented.

Dong et al. [63] used SVMs to predict building energy consumption in a tropical region, considering four case studies in Singapore without neglecting the dynamic parameters such as external temperature and humidity. Similarly, Ahmad et al. [64] applied SVMs to predict data derived from a solar thermal collector.

Greater attention to the industrial sector was provided by Kapp et al. [65], who predicted industrial buildings’ energy consumption using ML models informed by physical system parameters. Their research focused on the connection between energy consumption and weather features. That research seems to be one of the few about industries, since this sector has various and difficult-to-manage characteristics, first of all the lack of a precise dataset. In these conditions, SVMs performed very well in overcoming numerous obstacles.

An example of SVM application in the residential sector is the study of Kim et al. [66], where a small-scale urban energy simulation method was used, integrating building information modelling and SVMs to predict energy consumption. In this work, attention was mainly paid to the surrounding context, i.e., neighbouring building volumes, heights and spaces between buildings, that could influence the building’s energy behaviour.

2.1.4. Kriging Method

The Kriging method is a family of geostatistical procedures useful for solving regression problems, which assumes the presence of a spatial correlation between measured values. Geostatistics, better known as special statistics, is the branch of statistics that deals with the analysis of geographical data. The Kriging method is largely used for interpolation and prediction issues. This technique takes its name from a South African mining engineer, D. Krige (1951), who developed empirical methods to predict the distribution of mineral deposits underground [67]. Other further studies on the Kriging method can be found in research articles and books by G. Matheron [68], J.D. Martin [69] and N. Cressie [70].

This method provides not only the interpolated values but also an estimation of the amount of potential error in the output. However, it requires a lot of interaction with the operator to be correctly used. Once the values of a certain monitored quantity are known in only some points, the Kriging method enables the values of the quantity to be deduced, even in points where there have been no previous measurements, with good approximation. This is based on the assumption that the quantity varies continuously in space. In other words, Kriging models are global rather than local, since they are based on measurements of large experimental areas [71]. As reported by Eguia et al. [72], a Kriging method is a weighted interpolation method comprising a family of generalized least-squares regression algorithms that can determine spatial information well. It fits non-linear problems well, especially in practical applications, e.g., temperature or rain quantity estimations. It offers a prediction and forecasting of the error. Thus, Kriging outcomes can quantify the reliability of the prediction too.

In this approach, a variable is considered, where is a trend function and is the error; represents the position of a precise location. As in Equation (3) [72], depends on explanatory variables . The coefficients that has to be estimated are with .

In linear regression problems, does not depend on the position, while in non-linear regression cases, it depends on the position and has to be determined through generalized least squares [72]. Kriging will give an estimation of the error .

There are various applications of Kriging methods:

- Ordinary Kriging, to be applied where the average of the residuals is constant, i.e., the trend is constant, throughout the studied domain;

- Simple Kriging, to be applied in the case in which the average of residuals is constant and known;

- Universal Kriging, to be applied when the average of residuals is not constant and the law of autocorrelation presents a trend, and is useful in forecasting random variables with spatially correlated errors;

- Co-Kriging, to be applied when the estimate of the main variable is not based only on values of the examined variable but also considers other auxiliary variables, correlated with the target variable.

In the following, various applications of the Kriging method in the building energy sector are presented.

Hopfe et al. [73] performed an evolutionary multi-objective optimization algorithm (SMS EMOA) with Kriging meta-models in order to minimize energy consumption and discomfort hours. Tresidder et al. [74] used a Kriging surrogate model to minimize annual CO2 emissions of an analysed building and its global cost. Eguìa et al. [72] generated weather datasets using Kriging techniques to calibrate building thermal simulations in a TRNSYS environment.

Almutairi et al. [75] focused on solar irradiance and the efficient use of energy in the territory of the Sultanate of Oman. First, climate maps were drafted to determine potential points for the construction of a zero-energy building. A Kriging method was used, where wind speed and sunny hours were taken from real measurements. Then, in these potential geographical points, the thickness of the thermal insulation and the influence of the building’s orientation on the gain/loss of thermal energy were evaluated. For these buildings, energy and electricity consumption and the optimal solar panel position to cover them were assessed.

Another recent study that used Kriging methods is that by Kucuktopcu et al. [76], where a spatial analysis was carried out to identify the optimal insulation thickness and its spatial distribution for a cold storage facility. In particular, ordinary Kriging was applied to find the optimal insulation thickness, considering an economic analysis and the geostatistics patterns.

2.1.5. Artificial Neural Networks (ANNs)

In general, artificial neural networks (ANNs) are a machine learning method that can manage classification/regression problems through artificial intelligence [51]. They find application mainly in pattern recognition and in prediction problems. ANNs are called “neural” because their functioning is similar to human neurons.

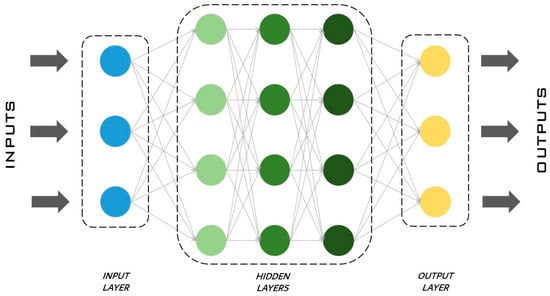

The ANN process identifies the relationship between inputs and outputs by studying recorded data from the original model. ANNs contain artificial neurons called units that are organized into various layers and together constitute the whole ANN. In the information transferred from one layer to another, the neural network learns more and more about the data.

The neural network is composed of a series of layers [77], an input layer, hidden layers and an output layer, as shown in Figure 8. The input layer is the first layer: it receives data, learns from them and transfers information to the hidden layers. In the hidden layers, each neuron receives inputs from the previous layer neurons, computes them and passes the most relevant information to the output layer, reducing computational time when redundant information is discharged. As underlined by Uzair et al. [78], there can be just one or more hidden or intermediate layers depending on the type of problem to be solved. The activation of each neuron in a hidden layer is computed, e.g., as in Equation (4) [55]. The output layer is the last layer and returns the results. In the activation function, is the activation of the neuron number k; j is the number of neurons in the considered layer, and is the connection between k and j; is the output of neuron j; and is the transfer function.

Figure 8.

Scheme of an artificial neural network.

Naturally, depending on the case study, ANNs can have more or less hidden layers, thus influencing the output parameters differently. When the network is trained, it becomes able to figure out how to respond to certain inputs by providing certain outputs, and therefore, it replaces the model.

As already mentioned, an ANN scheme is similar to that of a natural brain: in fact, they notably share some elements in common [55]. Similarly to a biological neuron, an input layer neuron of an artificial network receives inputs that are completed and analysed by hidden layer neurons; finally, output layer neurons return the results. Synapses are the links between human neurons that enable the transmission of impulses. In the same way, artificial synapses link different layers. Finally, the outputs are the result (generally) of backpropagation learning in which the outcome of each step is corrected depending on the error or the differences between the actual outcomes and the predicted ones.

The whole sample is divided into some sets, which are the training, validation and testing sets, to make sure that the ANNs are trained for other external data and not only for the ones it has been previously trained for.

There are different types of ANNs:

- Feedforward neural networks, where data travel in a unique direction, from the input to output layers;

- Convolutional neural networks, where the connection between units has different weights that influence the final outcome in different ways, where the results of each convolutional layer are obtained as the output to the next layer, and where in the convolutional layer, features are extracted from the input dataset. Convolutional neural networks are explained in detail in Section 2.2.1;

- Modular neural networks, where different neural networks work separately, thus without interaction, in order to achieve a unique final result;

- Radial basis function neural network, a real-valued function whose value depends exclusively on the distance from the argument of the function and a fixed point of the domain;

- Recurrent neural networks, where the output of a single layer comes back to the input layer in order to improve itself and then is transmitted to the output layer. Recurrent neural networks are explained in detail in Section 2.2.2.

ANNs are suitable for many non-linear and complex problems because they can correlate inputs and outputs, extract unknown characteristics from the dataset and predict future trends. Moreover, they can generalize previous learning for future data too.

All the methods previously described in Section 2 are reliable and bring accurate results for building energy simulation, optimization and management. Anyway, ANNs are the most commonly used and versatile types of ML surrogate models in this field [79,80].

In the following, various applications of ANNs in the building energy sector are presented.

Magnier et al. [18] proposed a novel approach using ANNs to predict building energy behaviour and a multi-objective genetic algorithm called NGSA-II for the optimization of a residential building. The result was that, by integrating ANNs into the optimization process, the simulation time was considerably reduced compared to a classical optimization approach. In that study, the aims were to minimize thermal energy demand and to reduce the absolute value of the predicted mean vote (PMV) from the Fanger theory [81] about the microclimatic condition of a confined environment.

Similar research was conducted by Asadi et al. [82], where genetic algorithms and ANNs were applied to choose the best retrofit solution of a case study, minimizing energy consumption, retrofit cost and thermal discomfort.

High cooling demands in warm climate were the focus of Melo et al. [83], considering a Brazilian building stock. The purpose was to investigate the versatility of ANNs in hot climatic zones for building shell energy labelling. Building energy dynamic simulations were carried out via EnergyPlus, and a sensitivity analysis was implemented to assess the accuracy of the ANN model in different cases, showing once again the capability of neural networks in handling a lot of input data with realistic outputs.

In the study by Ascione et al. [33], a cost-optimal analysis with multi-objective optimization was applied through ANNs in order to achieve a cost-optimal solution feasible for any building. The novel approach proposed the coupling of EnergyPlus and MATLAB® to find the best group of retrofit measures, meanwhile reducing thermal discomfort and energy consumption. The methodologies used were the simulation-based large-scale uncertainty/sensitivity analysis of building energy performance (SLABE) [26], ANNs for building categories [80] and cost-optimal analysis via the multi-objective optimization of energy performance (CAMO) [26]. The ANNs ensure reduced computational time and good reliability. The proposed novel approach was denominated CASA (from the previous methods applied, CAMO + SLABE + ANNs).

Another study by Ascione et al. [80] used artificial neural networks to predict energy performance and to retrofit scenarios for any member of a building category. Here, two groups of ANNs were considered, one for an existing building stock and one for a stock with retrofit measures, both modelled with the SLABE method. Using machine learning, once optimization was launched, MATLAB® was no longer coupled with EnergyPlus (in this case, the computational burden would be too high) but with ANNs, providing a drastic reduction in computational times. Thus, ANNs can replace standard building performance simulation tools and can be an effective instrument for simulating entire building stocks.

Differently from other previous studies, the review by Perez-Gomariz et al. [84] focused on the application of ANNs to refrigerator systems for industries in order to reduce production costs and polluting emissions. In particular, attention was paid to failures in cooling systems because they notably reduce the efficiency of chillers and increase CO2 emissions and energy consumption, since damage to the system also creates economical damage. That is why a previous diagnosis of the “health” of an industrial system represents a fundamental step. In this work, ANNs were used to predict system faults monitoring real-time cooling capacity and chilled water output temperature and comparing their values with historical ones. When damage occurred, alarms were activated. Thus, the ANNs were trained via past data and could successfully manage errors and singularities in the future data.

Chen et al. [85] used an optimization algorithm based on ANNs in order to reduce the energy cost and consumption of an office building in Scotland. For the ANNs’ training, internal temperature, weather data and occupation were used as the input data. Optimization was achieved using a day-ahead model predictive control strategy. The minimization of the energy consumption was achieved using a chaotic satin bowerbird optimization algorithm coupled with artificial neural networks. In this way, the energy savings were notable, i.e., about 30%.

Similarly, Zhang et al. [86] investigated the forecasting of the building energy demand for a residential structure in Canada using ANNs for the building energy modelling process and a genetic algorithm to define the optimal hyperparameters of the ANNs. In particular, the artificial networks were applied to find various retrofit scenario packages. Finally, the economic and environmental aspects were considered to reduce global cost and polluting emissions.

2.2. Deep Learning Methods

Deep learning (DL) methods allow for the automation of tasks that typically require human intelligence, such as describing images or transcribing an audio file to text. It is also a key component of emerging technologies like self-driving cars, virtual reality, and more. DL models are frequently used to analyse data and to make predictions in various applications.

DL approaches with multiple processing layers are able to learn data representations with multiple levels of abstraction, imitating human brain functioning. They work with advanced neurons that can discover and improve connections starting from a raw dataset. Generally, these advanced typologies of functions are not provided by ANNs or other ML methods [45]. As already anticipated, DL can work with a dataset made up of images, videos and sounds too. Moreover, DL can manage a large quantity of data, even high-dimension ones. DL algorithms provide better and more accurate results when trained on large amounts of high-quality data, rather than ML algorithms. Outliers or errors in the input dataset can significantly impact the deep learning process: to avoid such inaccuracies, large amounts of data must be cleaned and processed before data training. Anyway, pre-processing input data requires large amounts of storage capacity and longer times than ML applications [45].

DL neural networks are quite similar to ML neural networks’ structures. Similarly to ML, there are three layer types, input, hidden and output layers, but in DL networks, there are more hidden layers. The number of hidden layers defines the depth of the architecture [87]. A layer’s complexity is defined by the number of hyperparameters, also called weights, used to represent itself.

DL methods generally manage non-linear data. The activation function in the hidden layer identifies the non-linear correlations between the inputs and outputs. The nature of the function can be varied [87]:

- The rectified linear activation function, also called “ReLU”, is the most commonly used activation function in DL methods for its simplicity. It returns 0 if it receives a negative input, while for a positive value, it returns that value back (see Equation (5)). In various cases, this function performs very well when taking into account non-linearities in the dataset:

- The sigmoid activation function, also known for its peculiar S-curve, is a real, monotonic, differentiable and bounded function in which the independent variable is always a real number. Its constraints are two horizontal asymptotes for the x variable tending to infinity. It has a non-negative derivate at every point and only one inflection point. An example of the sigmoid function is the arctangent function in Equation (6):

- The hyperbolic tangent activation function, like the sigmoid function, has an S-shape and assumes real values as inputs. Its output varies between −1 and +1. When using this function for hidden layers, it is good practice to scale the input data to the range from −1 to +1 before training. Its expression is reported in Equation (7).

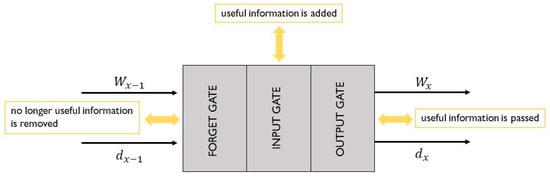

Some examples of deep learning methods are convolutional neural networks (CNNs) and recursive neural networks (RNNs). CNNs are generally used for classification and computer vision tasks. RNNs are commonly applied in natural language processing and speech recognition. The most commonly used RNN methods are long short-term memory (LSTM) and gated recurrent units (GRUs). All these methods are described in detail in Section 2.2.1 and Section 2.2.2.

Due to the high quantity of data to be managed in building energy predictions, it is sometimes useful to use more advanced data-driven methods, e.g., DL, rather than ML. Thus, it is possible to obtain building energy forecasting models that are more feasible and general, as well as more accurate and reliable. Many are possible applications of DL methods. In the review article by Wang et al. [88], there is confirmation of the large use of various DL methods, especially in renewable energy forecasting, because of their accuracy.

2.2.1. Convolutional Neural Networks (CNNs)

Convolutional neural networks (CNNs) are often used in object/image recognition and classification [45] and seem to be really versatile in the spatial data design process [84].

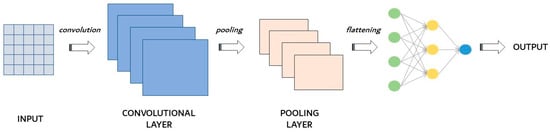

CNNs contain three main layer types, as shown in the scheme in Figure 9: convolutional layers (also called filters), pooling layers and fully connected layers. Of course, at each level, the complexity of CNNs increases. For example, in the case of image recognition, in the convolutional level, the colour and contour are identified; in the subsequent levels, the details increase; and finally, at the fully connected level, the image is completely defined.

Figure 9.

Convolutional neural network scheme.

CNNs are based on the convolution process. In mathematics, convolution is an operation between two real functions that consists of integrating the product between the first and second functions that has been translated to a certain value. Basically, one function slides over the other, providing the product of the two functions as a result. A convolution operation is useful when the input has multidimensional arrays. The generical convolution expression to find feature map a consists of the product between b and c, where b is the input function and c is the weighting function [89].

The convolutional layer is the main building block of CNNs and is where most of the computations take place, i.e., the training process. It uses convolutional filters instead of artificial neurons, as happens for ANNs. It requires just a few components: the input data, a filter, and a feature map. The convolutional layer moves through the input fields and checks for the presence of the function. The initial convolution layer can be followed by another one: when this happens, the structure of CNNs can become hierarchical and the subsequent levels take the form of subcomponents at a macro-level. In the convolutional layer, the filter extracts spatial features from the data to improve the classification and the prediction ability of the network [84].

Pooling layers, also called sub-sampling layers, perform dimensionality reduction by reducing the number of parameters in the inputs. Although the pooling layer involves the loss of a lot of information, it offers a number of advantages to CNNs, as it contributes to a reduction in the network’s complexity and to an improvement in its efficiency. Among the pooling techniques, max-pooling is usually used. This is a method for reducing the size of the input matrix, dividing it into blocks and keeping only the one with the highest value. Therefore, the overfitting problem is reduced, and only the areas with greater activation are maintained [89].

Finally, the fully connected layer performs the classification task based on the features extracted via the previous layers and their different filters. Thus, multidimensional input data are organized into feature maps [84].

In the following, various applications of CNNs in the building energy sector are presented.

Amarasinghe et al. [89] presented a load forecasting methodology based on a DL method: CNNs were applied to forecast energy loads for a single-story building using a historical dataset. The results were compared with other intelligent approaches, i.e., LSTM, SVMs and ANNs. The result was great performance in terms of testing and training errors for the CNN approach, almost comparable to those of ANNs and LSTM, and definitely better than the ones from SVMs.

Khan et al. [90] highlighted the importance of smarter management and planning operations in renewable energy predictions. That study developed an ESNCNN model for accurate renewable energy forecasting. Notably, the echo state network (ESN) and CNN were coupled to form an efficient tool for solar prediction, with reduced runtime. ESN’s work was temporal feature learning from the input energy prediction patterns, and CNN received data from ESN and extracted the spatial information. ESN and CNN were residually linearly connected to avoid the vanishing gradient problem. Thus, a solar power prediction could be achieved. The validation was performed on an electricity consumption dataset. The results showed ESNCNN’s successful performance in power generation and consumption prediction.

Kim et al. [91] studied the application of a combined approach using CNNs and LSTM to predict residential energy consumption. CNNs extracted the most influencing features among variables affecting the energy consumption, while LSTM modelled temporal information. The proposed approach seemed to be useful and accurate, with an almost perfect prediction with minute, hourly, daily and weekly resolutions. The results showed the great influence of occupancy behaviour on the energy consumption.

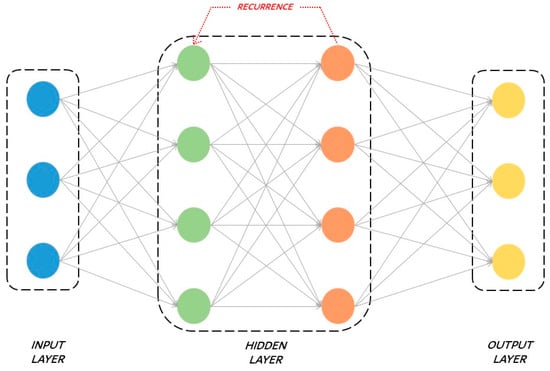

2.2.2. Recursive Neural Networks (RNNs)