Smartphone-Based Gait Analysis with OpenCap: A Narrative Review

Abstract

1. Introduction

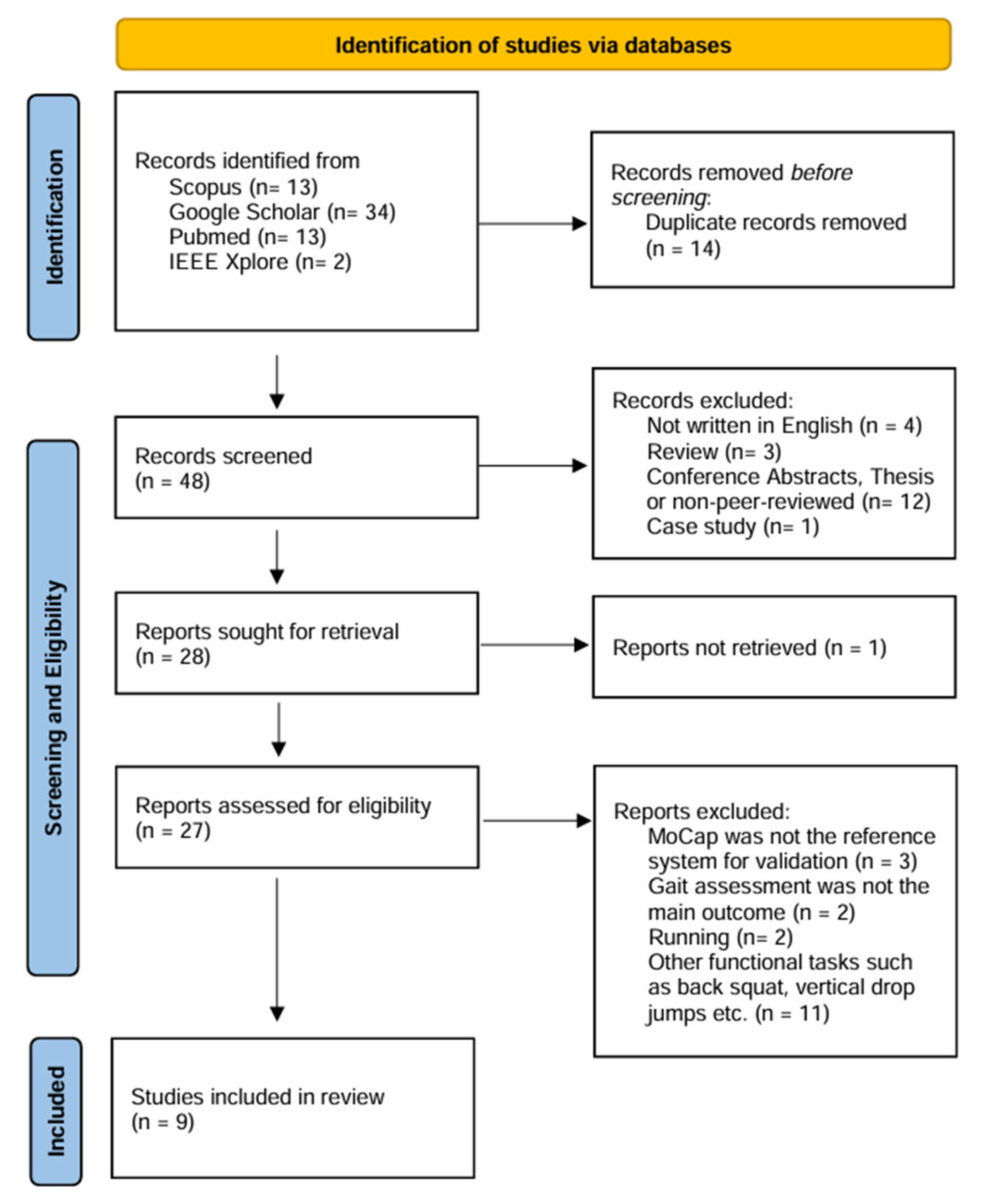

2. Materials and Methods

3. Results

3.1. Study Objectives

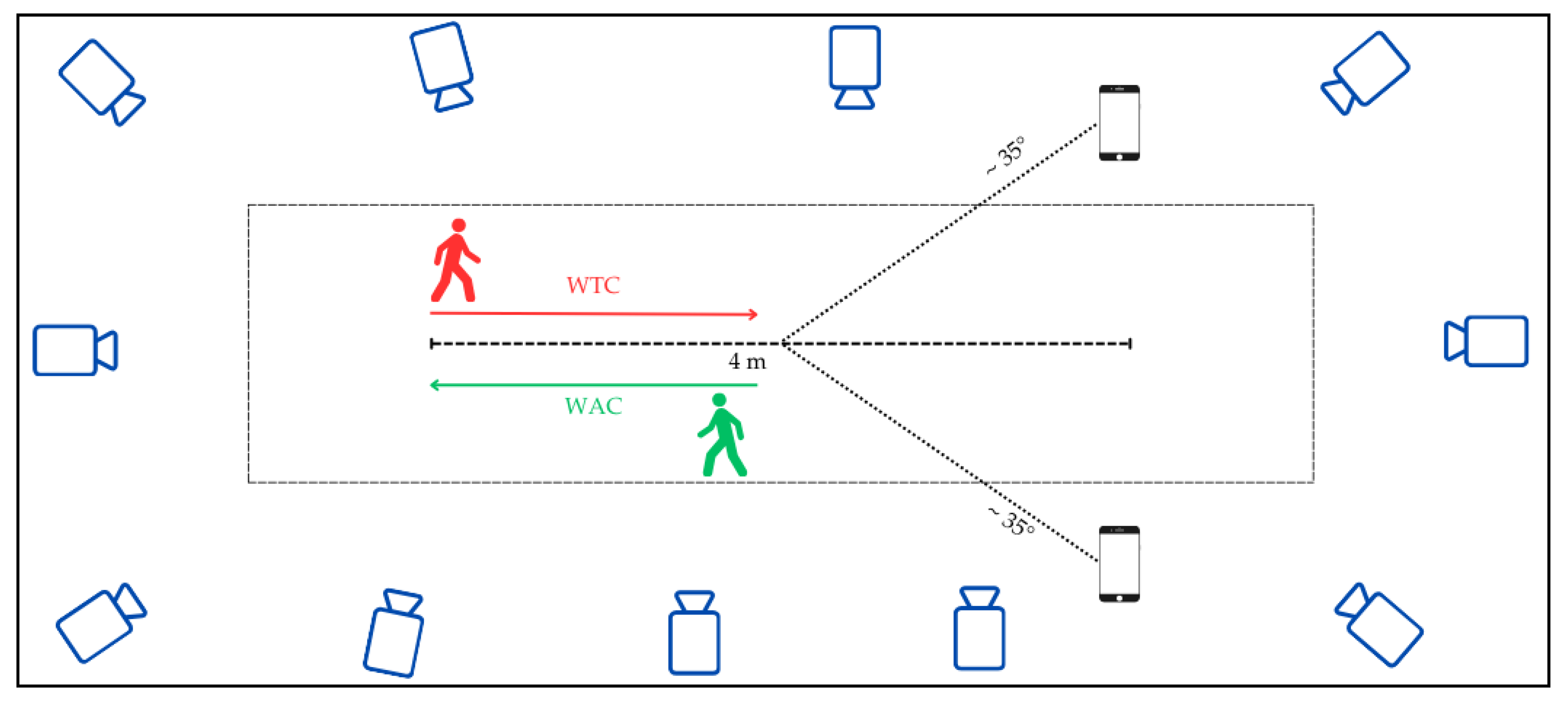

3.2. Set-Up, Data Collection and Processing

3.3. Participants and Experimental Protocol

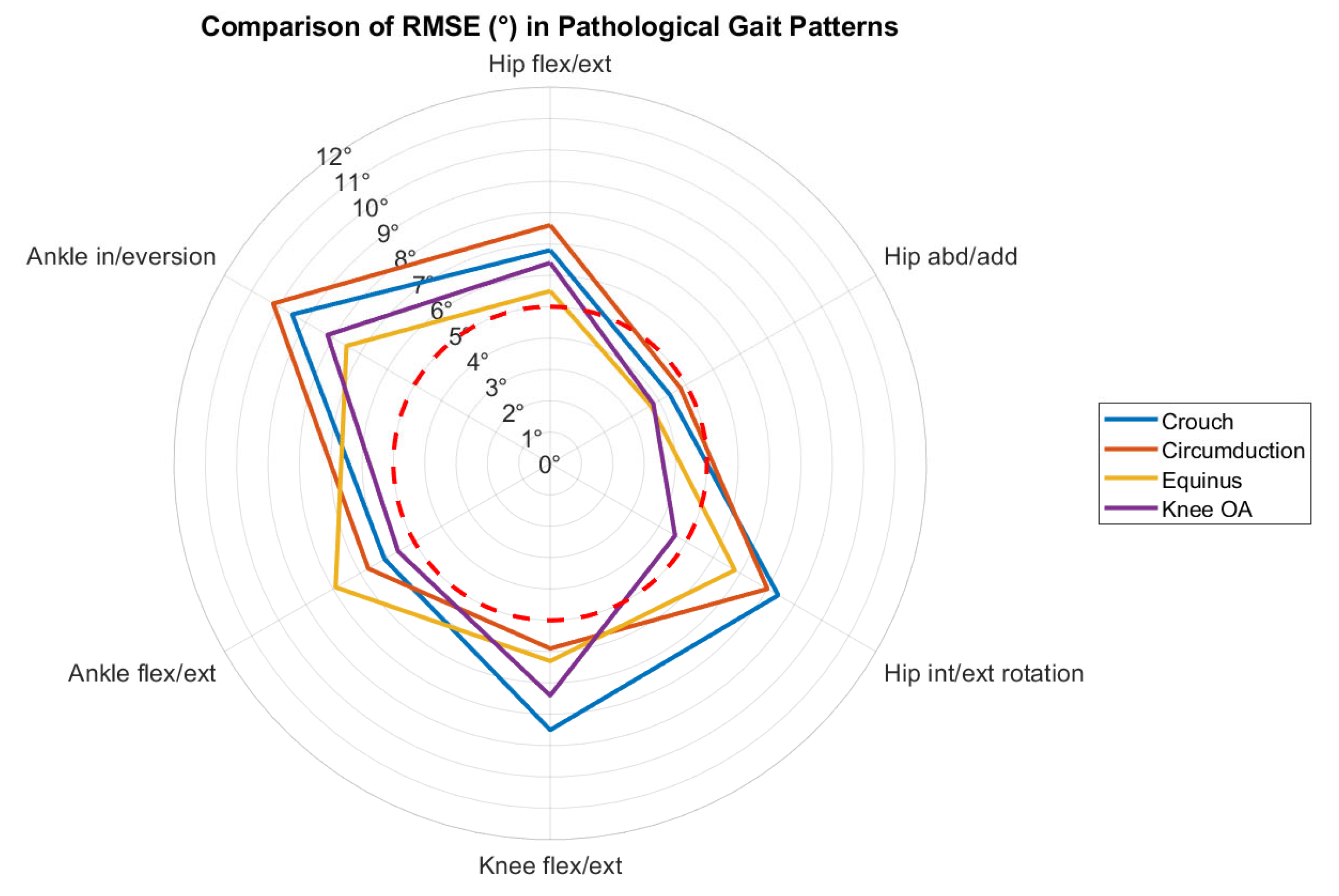

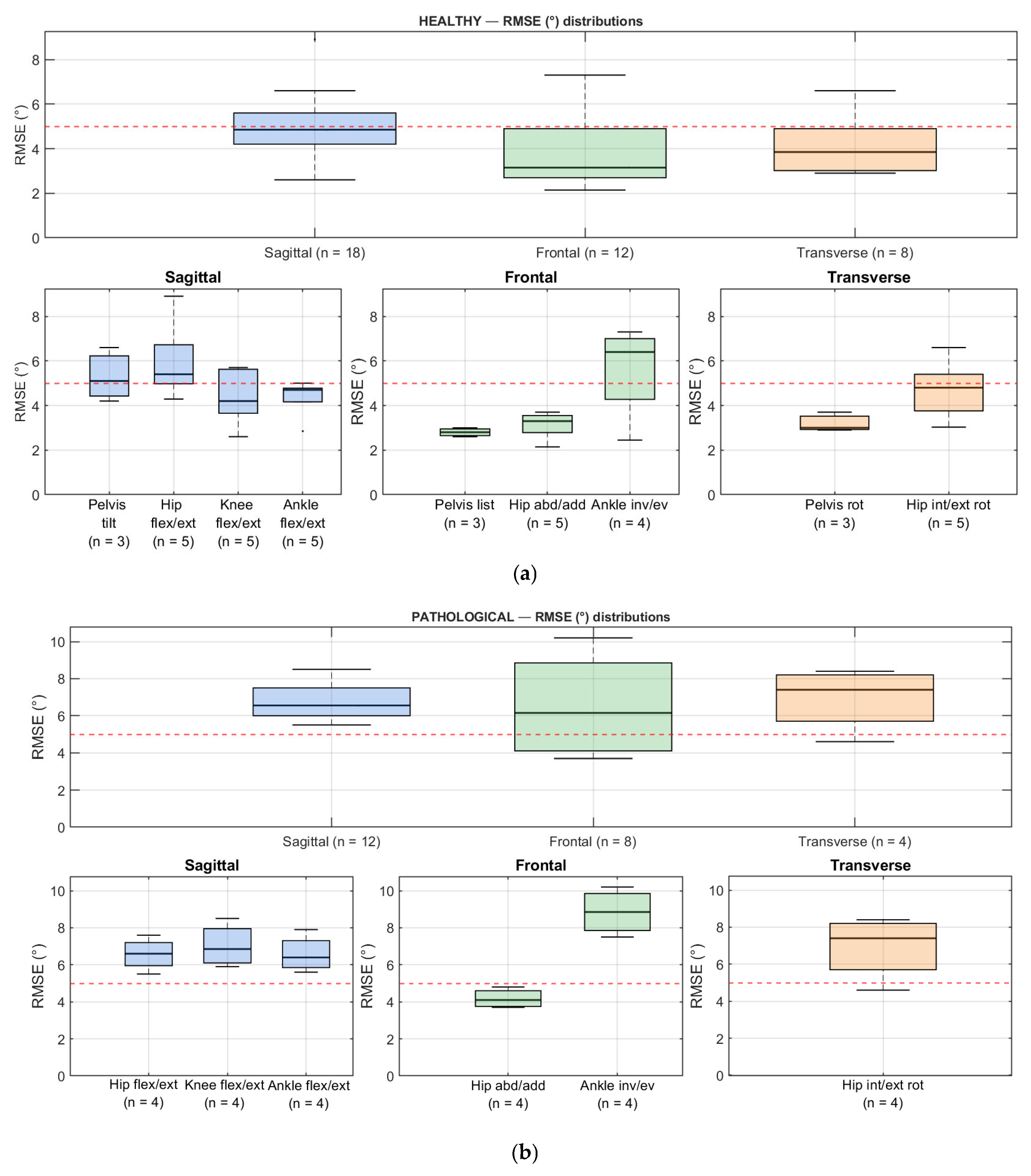

3.4. Estimated Gait Parameters

3.5. Statistical Analysis Methods

3.6. Findings and Data Availability

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MoCap | Motion Capture |
| GRFs | Ground Reaction Forces |
| Flex/ext | Flexion/extension |
| Abd/add | Abduction/adduction |
| Int/ext | Internal/external |
| Rot | Rotation |
| In/ev | Inversion/eversion |

References

- Cimolin, V.; Galli, M. Summary measures for clinical gait analysis: A literature review. Gait Posture 2014, 39, 1005–1010. [Google Scholar] [CrossRef]

- Ungvari, Z.; Fazekas-Pongor, V.; Csiszar, A.; Kunutsor, S.K. The multifaceted benefits of walking for healthy aging: From Blue Zones to molecular mechanisms. GeroScience 2023, 45, 3211–3239. [Google Scholar] [CrossRef] [PubMed]

- Moon, Y.; Sung, J.; An, R.; Hernandez, M.E.; Sosnoff, J.J. Gait variability in people with neurological disorders: A systematic review and meta-analysis. Hum. Mov. Sci. 2016, 47, 197–208. [Google Scholar] [CrossRef] [PubMed]

- Snijders, A.H.; Leunissen, I.; Bakker, M.; Overeem, S.; Helmich, R.C.; Bloem, B.R.; Toni, I. Gait-related cerebral alterations in patients with Parkinson’s disease with freezing of gait. Brain 2011, 134, 59–72. [Google Scholar] [CrossRef] [PubMed]

- Chen, G.; Patten, C.; Kothari, D.H.; Zajac, F.E. Gait differences between individuals with post-stroke hemiparesis and non-disabled controls at matched speeds. Gait Posture 2005, 22, 51–56. [Google Scholar] [CrossRef]

- Rezaei, A.; Bhat, S.G.; Cheng, C.-H.; Pignolo, R.J.; Lu, L.; Kaufman, K.R. Age-related changes in gait, balance, and strength parameters: A cross-sectional study. PLoS ONE 2024, 19, e0310764. [Google Scholar] [CrossRef]

- Cruz-Jimenez, M. Normal Changes in Gait and Mobility Problems in the Elderly. Phys. Med. Rehabil. Clin. N. Am. 2017, 28, 713–725. [Google Scholar] [CrossRef]

- Selves, C.; Stoquart, G.; Lejeune, T. Gait rehabilitation after stroke: Review of the evidence of predictors, clinical outcomes and timing for interventions. Acta Neurol. Belg. 2020, 120, 783–790. [Google Scholar] [CrossRef]

- Khalid, S.; Malik, A.N.; Siddiqi, F.A.; Rathore, F.A. Overview of gait rehabilitation in stroke. J. Pak. Med. Assoc. 2023, 73, 1142–1145. [Google Scholar] [CrossRef]

- Cimolin, V.; Vismara, L.; Galli, M.; Grugni, G.; Cau, N.; Capodaglio, P. Gait strategy in genetically obese patients: A 7-year follow up. Res. Dev. Disabil. 2014, 35, 1501–1506. [Google Scholar] [CrossRef]

- McGinley, J.L.; Baker, R.; Wolfe, R.; Morris, M.E. The reliability of three-dimensional kinematic gait measurements: A systematic review. Gait Posture 2009, 29, 360–369. [Google Scholar] [CrossRef] [PubMed]

- Bonato, P.; Feipel, V.; Corniani, G.; Arin-Bal, G.; Leardini, A. Position paper on how technology for human motion analysis and relevant clinical applications have evolved over the past decades: Striking a balance between accuracy and convenience. Gait Posture 2024, 113, 191–203. [Google Scholar] [CrossRef] [PubMed]

- Topley, M.; Richards, J.G. A comparison of currently available optoelectronic motion capture systems. J. Biomech. 2020, 106, 109820. [Google Scholar] [CrossRef] [PubMed]

- Cappozzo, A.; Catani, F.; Della Croce, U.; Leardini, A. Position and orientation in space of bones during movement: Anatomical frame definition and determination. Clin. Biomech. 1995, 10, 171–178. [Google Scholar] [CrossRef]

- Richards, J.G. The measurement of human motion: A comparison of commercially available systems. Hum. Mov. Sci. 1999, 18, 589–602. [Google Scholar] [CrossRef]

- van der Kruk, E.; Reijne, M.M. Accuracy of human motion capture systems for sport applications; state-of-the-art review. Eur. J. Sport Sci. 2018, 18, 806–819. [Google Scholar] [CrossRef]

- Muro-de-la-Herran, A.; Garcia-Zapirain, B.; Mendez-Zorrilla, A. Gait analysis methods: An overview of wearable and non-wearable systems, highlighting clinical applications. Sensors 2014, 14, 3362–3394. [Google Scholar] [CrossRef]

- Lopes, T.J.A.; Ferrari, D.; Ioannidis, J.; Simic, M.; Mícolis de Azevedo, F.; Pappas, E. Reliability and Validity of Frontal Plane Kinematics of the Trunk and Lower Extremity Measured With 2-Dimensional Cameras During Athletic Tasks: A Systematic Review With Meta-analysis. J. Orthop. Sports Phys. Ther. 2018, 48, 812–822. [Google Scholar] [CrossRef]

- Reinking, M.F.; Dugan, L.; Ripple, N.; Schleper, K.; Scholz, H.; Spadino, J.; Stahl, C.; McPoil, T.G. Reliability of two-dimensional video-based running gait analysis. Int. J. Sports Phys. Ther. 2018, 13, 453–461. [Google Scholar] [CrossRef]

- Milosevic, B.; Leardini, A.; Farella, E. Kinect and wearable inertial sensors for motor rehabilitation programs at home: State of the art and an experimental comparison. Biomed. Eng. Online 2020, 19, 25. [Google Scholar] [CrossRef]

- Colyer, S.L.; Evans, M.; Cosker, D.P.; Salo, A.I.T. A Review of the Evolution of Vision-Based Motion Analysis and the Integration of Advanced Computer Vision Methods Towards Developing a Markerless System. Sport. Med. Open 2018, 4, 24. [Google Scholar] [CrossRef] [PubMed]

- Gu, C.; Lin, W.; He, X.; Zhang, L.; Zhang, M. IMU-based motion capture system for rehabilitation applications: A systematic review. Biomim. Intell. Robot. 2023, 3, 100097. [Google Scholar] [CrossRef]

- Horak, F.; King, L.; Mancini, M. Role of body-worn movement monitor technology for balance and gait rehabilitation. Phys. Ther. 2015, 95, 461–470. [Google Scholar] [CrossRef] [PubMed]

- Komaris, D.-S.; Tarfali, G.; O’Flynn, B.; Tedesco, S. Unsupervised IMU-based evaluation of at-home exercise programmes: A feasibility study. BMC Sports Sci. Med. Rehabil. 2022, 14, 28. [Google Scholar] [CrossRef]

- Motta, F.; Varrecchia, T.; Chini, G.; Ranavolo, A.; Galli, M. The Use of Wearable Systems for Assessing Work-Related Risks Related to the Musculoskeletal System—A Systematic Review. Int. J. Environ. Res. Public Health 2024, 21, 1567. [Google Scholar] [CrossRef]

- Clark, R.A.; Mentiplay, B.F.; Hough, E.; Pua, Y.H. Three-dimensional cameras and skeleton pose tracking for physical function assessment: A review of uses, validity, current developments and Kinect alternatives. Gait Posture 2019, 68, 193–200. [Google Scholar] [CrossRef]

- Mousavi Hondori, H.; Khademi, M. A Review on Technical and Clinical Impact of Microsoft Kinect on Physical Therapy and Rehabilitation. J. Med. Eng. 2014, 2014, 846514. [Google Scholar] [CrossRef]

- Desmarais, Y.; Mottet, D.; Slangen, P.; Montesinos, P. A Review of 3D Human Pose Estimation Algorithms for Markerless Motion Capture; Computer Vision and Image Understanding: San Diego, CA, USA, 2021; Volume 212, ISBN 1077314221. [Google Scholar]

- Lam, W.W.T.; Tang, Y.M.; Fong, K.N.K. A systematic review of the applications of markerless motion capture (MMC) technology for clinical measurement in rehabilitation. J. Neuroeng. Rehabil. 2023, 20, 57. [Google Scholar] [CrossRef]

- Scataglini, S.; Abts, E.; Van Bocxlaer, C.; den Bussche, M.; Meletani, S.; Truijen, S. Accuracy, Validity, and Reliability of Markerless Camera-Based 3D Motion Capture Systems versus Marker-Based 3D Motion Capture Systems in Gait Analysis: A Systematic Review and Meta-Analysis. Sensors 2024, 24, 3686. [Google Scholar] [CrossRef]

- Molteni, L.E.; Andreoni, G. Comparing the Accuracy of Markerless Motion Analysis and Optoelectronic System for Measuring Gait Kinematics of Lower Limb. Bioengineering 2025, 12, 424. [Google Scholar] [CrossRef]

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186. [Google Scholar] [CrossRef]

- Rui, L.; Gao, Y.; Ren, H. EDite-HRNet: Enhanced Dynamic Lightweight High-Resolution Network for Human Pose Estimation. IEEE Access 2023, 11, 95948–95957. [Google Scholar] [CrossRef]

- Neupane, R.B.; Li, K.; Boka, T.F. A survey on deep 3D human pose estimation. Artif. Intell. Rev. 2024, 58, 24. [Google Scholar] [CrossRef]

- Zheng, C.; Wu, W.; Yang, T.; Zhu, S.; Chen, C.; Liu, R.; Shen, J.; Kehtarnavaz, N.; Shah, M. Deep Learning-Based Human Pose Estimation: A Survey. ACM Comput. Surv. 2023, 56, 1–37. [Google Scholar] [CrossRef]

- Mathis, A.; Mamidanna, P.; Cury, K.M.; Abe, T.; Murthy, V.N.; Mathis, M.W.; Bethge, M. DeepLabCut: Markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci. 2018, 21, 1281–1289. [Google Scholar] [CrossRef]

- Toshev, A.; Szegedy, C. DeepPose: Human pose estimation via deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 1653–1660. [Google Scholar] [CrossRef]

- Kanko, R.M.; Laende, E.K.; Strutzenberger, G.; Brown, M.; Selbie, W.S.; DePaul, V.; Scott, S.H.; Deluzio, K.J. Assessment of spatiotemporal gait parameters using a deep learning algorithm-based markerless motion capture system. J. Biomech. 2021, 122, 110414. [Google Scholar] [CrossRef]

- Uhlrich, S.D.; Falisse, A.; Kidziński, Ł.; Muccini, J.; Ko, M.; Chaudhari, A.S.; Hicks, J.L.; Delp, S.L. OpenCap: Human movement dynamics from smartphone videos. PLoS Comput. Biol. 2023, 19, e1011462. [Google Scholar] [CrossRef]

- Gozlan, Y.; Falisse, A.; Uhlrich, S.; Gatti, A.; Black, M.; Hicks, J.; Delp, S.; Chaudhari, A. OpenCapBench: A Benchmark to Bridge Pose Estimation and Biomechanics. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 26 February–6 March 2025. [Google Scholar]

- Falisse, A.; Uhlrich, S.; Delp, S. OpenCap: Movement Biomechanics from Smartphone Videos. In International Conference on NeuroRehabilitation; Springer Nature: Cham, Switzerland, 2024; pp. 519–522. ISBN 978-3-031-77583-3. [Google Scholar]

- Falisse, A.; Uhlrich, S.; Chaudhari, A.; Delp, S. Marker Data Enhancement for Markerless Motion Capture. IEEE Trans. Biomed. Eng. 2025, 72, 2013–2022. [Google Scholar] [CrossRef]

- Delp, S.L.; Anderson, F.C.; Arnold, A.S.; Loan, P.; Habib, A.; John, C.T.; Guendelman, E.; Thelen, D.G. OpenSim: Open-source software to create and analyze dynamic simulations of movement. IEEE Trans. Biomed. Eng. 2007, 54, 1940–1950. [Google Scholar] [CrossRef]

- Rajagopal, A.; Dembia, C.L.; DeMers, M.S.; Delp, D.D.; Hicks, J.L.; Delp, S.L. Full-Body Musculoskeletal Model for Muscle-Driven Simulation of Human Gait. IEEE Trans. Biomed. Eng. 2016, 63, 2068–2079. [Google Scholar] [CrossRef]

- Seth, A.; Sherman, M.; Reinbolt, J.A.; Delp, S.L. OpenSim: A musculoskeletal modeling and simulation framework for in silico investigations and exchange. Procedia IUTAM 2011, 2, 212–232. [Google Scholar] [CrossRef]

- Abdullah, M.; Hulleck, A.A.; Katmah, R.; Khalaf, K.; El-Rich, M. Multibody dynamics-based musculoskeletal modeling for gait analysis: A systematic review. J. Neuroeng. Rehabil. 2024, 21, 178. [Google Scholar] [CrossRef]

- Kakavand, R.; Ahmadi, R.; Parsaei, A.; Brent Edwards, W.; Komeili, A. Comparison of kinematics and kinetics between OpenCap and a marker-based motion capture system in cycling. Comput. Biol. Med. 2025, 192, 110295. [Google Scholar] [CrossRef] [PubMed]

- Min, Y.S.; Jung, T.-D.; Lee, Y.S.; Kwon, Y.; Kim, H.J.; Kim, H.C.; Lee, J.C.; Park, E. Biomechanical Gait Analysis Using a Smartphone-Based Motion Capture System (OpenCap) in Patients with Neurological Disorders. Bioengineering 2024, 11, 911. [Google Scholar] [CrossRef] [PubMed]

- Turner, J.A.; Chaaban, C.R.; Padua, D.A. Validation of OpenCap: A low-cost markerless motion capture system for lower-extremity kinematics during return-to-sport tasks. J. Biomech. 2024, 171, 112200. [Google Scholar] [CrossRef] [PubMed]

- Cronin, N.J.; Walker, J.; Tucker, C.B.; Nicholson, G.; Cooke, M.; Merlino, S.; Bissas, A. Feasibility of OpenPose markerless motion analysis in a real athletics competition. Front. Sport. Act. Living 2023, 5, 1298003. [Google Scholar] [CrossRef]

- Verheul, J.; Robinson, M.A.; Burton, S. Jumping towards field-based ground reaction force estimation and assessment with OpenCap. J. Biomech. 2024, 166, 112044. [Google Scholar] [CrossRef]

- de Borba, E.F.; da Silva, E.S.; de Alves, L.L.; Neto, A.R.D.S.; Inda, A.R.; Ibrahim, B.M.; Ribas, L.R.; Correale, L.; Peyré-Tartaruga, L.A.; Tartaruga, M.P. Fatigue-Related Changes in Running Technique and Mechanical Variables After a Maximal Incremental Test in Recreational Runners. J. Appl. Biomech. 2024, 40, 424–431. [Google Scholar] [CrossRef]

- Bertozzi, F.; Brunetti, C.; Maver, P.; Palombi, M.; Santini, M.; Galli, M.; Tarabini, M. Concurrent validity of IMU and phone-based markerless systems for lower-limb kinematics during cognitively-challenging landing tasks. J. Biomech. 2025, 191, 112883. [Google Scholar] [CrossRef]

- Cheng, X.; Jiao, Y.; Meiring, R.M.; Sheng, B.; Zhang, Y. Reliability and validity of current computer vision based motion capture systems in gait analysis: A systematic review. Gait Posture 2025, 120, 150–160. [Google Scholar] [CrossRef]

- Drazan, J.F.; Phillips, W.T.; Seethapathi, N.; Hullfish, T.J.; Baxter, J.R. Moving outside the lab: Markerless motion capture accurately quantifies sagittal plane kinematics during the vertical jump. J. Biomech. 2021, 125, 110547. [Google Scholar] [CrossRef]

- Van Hooren, B.; Pecasse, N.; Meijer, K.; Essers, J.M.N. The accuracy of markerless motion capture combined with computer vision techniques for measuring running kinematics. Scand. J. Med. Sci. Sports 2023, 33, 966–978. [Google Scholar] [CrossRef]

- Ferrari, R. Writing narrative style literature reviews. Med. Writ. 2015, 24, 230–235. [Google Scholar] [CrossRef]

- Sukhera, J. Narrative Reviews: Flexible, Rigorous, and Practical. J. Grad. Med. Educ. 2022, 14, 414–417. [Google Scholar] [CrossRef] [PubMed]

- Horsak, B.; Eichmann, A.; Lauer, K.; Prock, K.; Krondorfer, P.; Siragy, T.; Dumphart, B. Concurrent validity of smartphone-based markerless motion capturing to quantify lower-limb joint kinematics in healthy and pathological gait. J. Biomech. 2023, 159, 111801. [Google Scholar] [CrossRef] [PubMed]

- Horsak, B.; Prock, K.; Krondorfer, P.; Siragy, T.; Simonlehner, M.; Dumphart, B. Inter-trial variability is higher in 3D markerless compared to marker-based motion capture: Implications for data post-processing and analysis. J. Biomech. 2024, 166, 112049. [Google Scholar] [CrossRef]

- Peng, Y.; Wang, W.; Wang, L.; Zhou, H.; Chen, Z.; Zhang, Q.; Li, G. Smartphone videos-driven musculoskeletal multibody dynamics modelling workflow to estimate the lower limb joint contact forces and ground reaction forces. Med. Biol. Eng. Comput. 2024, 62, 3841–3853. [Google Scholar] [CrossRef]

- Svetek, A.; Morgan, K.; Burland, J.; Glaviano, N.R. Validation of OpenCap on lower extremity kinematics during functional tasks. J. Biomech. 2025, 183, 112602. [Google Scholar] [CrossRef]

- Horsak, B.; Kainz, H.; Dumphart, B. Repeatability and minimal detectable change including clothing effects for smartphone-based 3D markerless motion capture. J. Biomech. 2024, 175, 112281. [Google Scholar] [CrossRef]

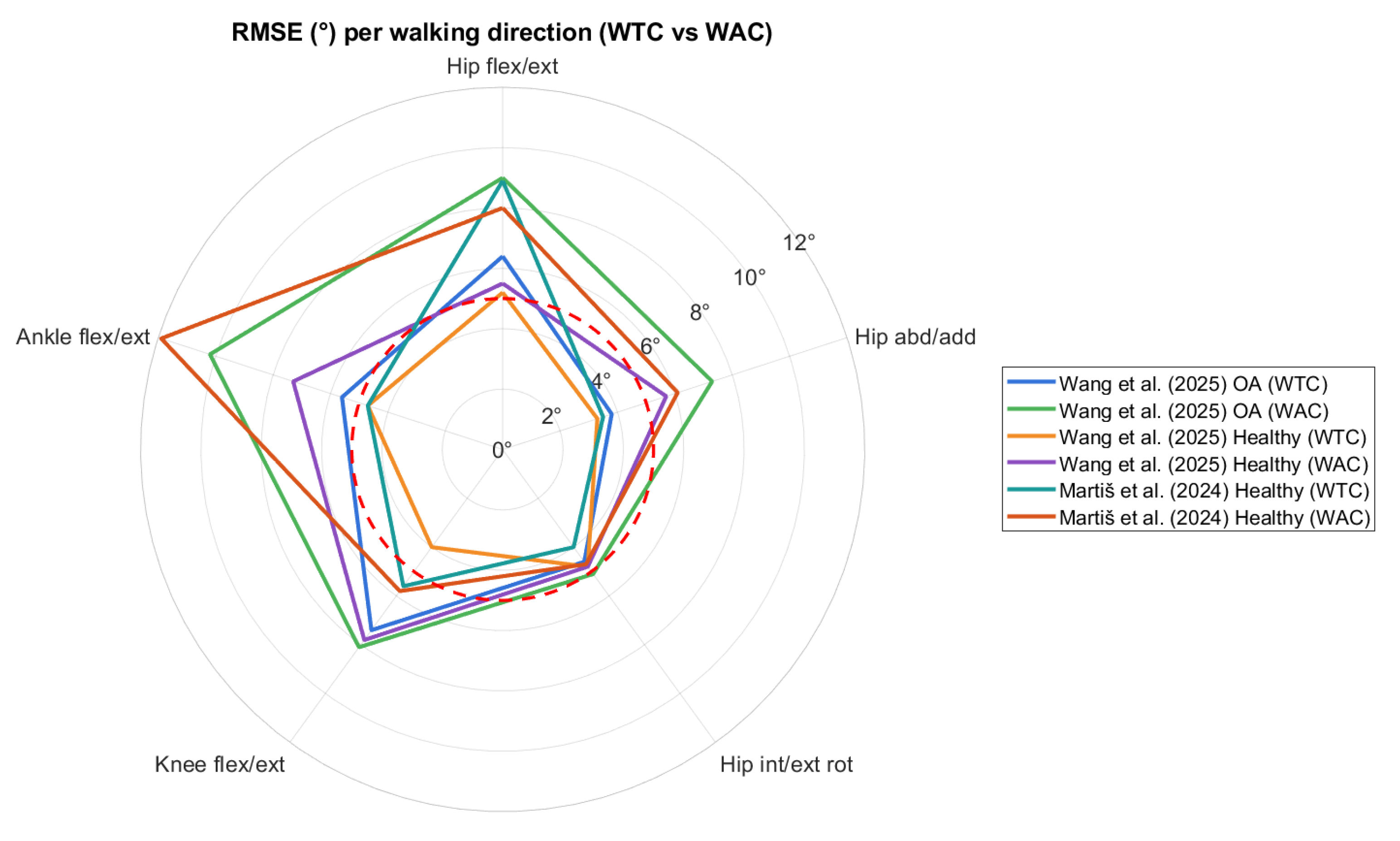

- Martiš, P.; Košutzká, Z.; Kranzl, A. A Step Forward Understanding Directional Limitations in Markerless Smartphone-Based Gait Analysis: A Pilot Study. Sensors 2024, 24, 3091. [Google Scholar] [CrossRef]

- Wang, J.; Xu, W.; Wu, Z.; Zhang, H.; Wang, B.; Zhou, Z.; Wang, C.; Li, K.; Nie, Y. Evaluation of a smartphone-based markerless system to measure lower-limb kinematics in patients with knee osteoarthritis. J. Biomech. 2025, 181, 112529. [Google Scholar] [CrossRef] [PubMed]

- Karimi, M.T.; Tahmasebi, R.; Sharifmoradi, K.; Abarghuei, M.A.F. Investigation of joint contact forces during walking in the subjects with toe in gait due to increasing in femoral head anteversion angle. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2024, 238, 755–763. [Google Scholar] [CrossRef] [PubMed]

- Liew, B.X.W.; Rügamer, D.; Mei, Q.; Altai, Z.; Zhu, X.; Zhai, X.; Cortes, N. Smooth and accurate predictions of joint contact force time-series in gait using over parameterised deep neural networks. Front. Bioeng. Biotechnol. 2023, 11, 1208711. [Google Scholar] [CrossRef] [PubMed]

- Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163. [Google Scholar] [CrossRef]

- Wilken, J.M.; Rodriguez, K.M.; Brawner, M.; Darter, B.J. Reliability and Minimal Detectible Change values for gait kinematics and kinetics in healthy adults. Gait Posture 2012, 35, 301–307. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C. Microsoft COCO: Common Objects in Context. In European Conference on Computer Vision; Springer International: Cham, Switzerland, 2014. [Google Scholar]

- Greene, B.R.; McManus, K.; Redmond, S.J.; Caulfield, B.; Quinn, C.C. Digital assessment of falls risk, frailty, and mobility impairment using wearable sensors. Npj Digit. Med. 2019, 2, 125. [Google Scholar] [CrossRef]

- Mündermann, L.; Corazza, S.; Andriacchi, T.P. The evolution of methods for the capture of human movement leading to markerless motion capture for biomechanical applications. J. Neuroeng. Rehabil. 2006, 3, 6. [Google Scholar] [CrossRef]

- Saibene, F.; Minetti, A.E. Biomechanical and physiological aspects of legged locomotion in humans. Eur. J. Appl. Physiol. 2003, 88, 297–316. [Google Scholar] [CrossRef]

- Tesio, L.; Rota, V. The Motion of Body Center of Mass During Walking: A Review Oriented to Clinical Applications. Front. Neurol. 2019, 10, 999. [Google Scholar] [CrossRef]

- Biswas, N.; Chakrabarti, S.; Jones, L.D.; Ashili, S. Smart wearables addressing gait disorders: A review. Mater. Today Commun. 2023, 35, 106250. [Google Scholar] [CrossRef]

- Wren, T.A.L.; Tucker, C.A.; Rethlefsen, S.A.; Gorton, G.E., 3rd; Õunpuu, S. Clinical efficacy of instrumented gait analysis: Systematic review 2020 update. Gait Posture 2020, 80, 274–279. [Google Scholar] [CrossRef]

- Roggio, F.; Trovato, B.; Sortino, M.; Musumeci, G. A comprehensive analysis of the machine learning pose estimation models used in human movement and posture analyses: A narrative review. Heliyon 2024, 10, e39977. [Google Scholar] [CrossRef]

- Osness, E.; Isley, S.; Bertrand, J.; Dennett, L.; Bates, J.; Van Decker, N.; Stanhope, A.; Omkar, A.; Dolgoy, N.; Ezeugwu, V.E.; et al. Markerless Motion Capture Parameters Associated with Fall Risk or Frailty: A Scoping Review. Sensors 2025, 25, 5741. [Google Scholar] [CrossRef]

| Source, Year and Country | Participants, Age (yrs) and Sex (M/F) | Validation Set-Up: Reference System | Validation Set-Up: Marker Placement | OpenCap Set-Up: Devices | OpenCap Set-Up: Placement | Gait Assessment: Functional Tasks and Patterns | Gait Assessment: Parameters | Study Aim |
|---|---|---|---|---|---|---|---|---|
| Uhlrich et al., 2023, USA [39] | Total: 10 healthy adults, 27.7 ± 3.8 yrs, M: 4/F: 6 | 8-camera MoCap (Motion Analysis Corp., Santa Rosa, CA, USA) at 100 Hz + 3 force plates (Bertec Corp., Columbus, OH, USA) at 2000 Hz | 31 retro-reflective markers, custom placement | 2 iOS smartphones (iPhone 12 Pro) | 45° relative to the walking direction, 3 m from the center of the walking path, 1.5 m above the ground, tripod-mounted | Gait analysis; physiological gait and gait with trunk-sway modification | Spatio-temporal parameters; lower-limb joint kinematics and kinetics (hip, knee, ankle) | Validation |
| Horsak et al., 2023, 2024, Austria [59,60] | Total: 21 healthy individuals, 30.2 ± 8.5 yrs, M: 9/F: 12 | 16-camera MoCap (Vicon, Oxford, UK) at 120 Hz + 3 force plates at 1200 Hz | 57 retro-reflective markers, extended Cleveland Clinic set (with medial/lateral markers) + Plug-in-Gait | 2 iOS smartphones (iPhone 11 and 12 Pro) | 35° off the center of the walking path, 1.5 m above the ground, tripod-mounted, ~5° incline | Gait analysis along a 10 m walkway; physiological gait, simulated crouch, circumduction, and equinus gait | Lower-limb joint kinematics (pelvis, hip, knee, ankle, subtalar) | Validation and Characterization |
| Peng et al., 2024, China [61] | Total: 12 healthy adults, 21.7 ± 1.4 yrs, M: 5/F: 7 | 11-camera MoCap (Vicon, Oxford, UK) at 150 Hz + 2 force plates at 1200 Hz | Custom lower-limb and trunk marker set (Plug-in-Gait + additional foot markers) | 2 iOS smartphones (iPhone 12 Pro) | 45° relative to the walking direction, 2–3 m distance, 1.3 m height, tripod-mounted | Gait analysis along a flat 10 m walkway; physiological walking | Lower-limb joint kinematics (hip, knee, ankle) and kinetics (ground reaction forces, joint contact forces) | Validation |
| Svetek et al., 2024, USA [62] | Total: 20 athletes (ice hockey), 21.35 ± 1.3 yrs, M: 2/F: 18 | 10-camera MoCap (Vicon, Oxford, UK) at 240 Hz | 37 retro-reflective markers, custom placement | 2 iOS devices (iPad Air) | 45° off the center of the walking path, tripod-mounted | Gait analysis on a treadmill; healthy gait and other functional tasks | Peak joint angles in the sagittal and frontal planes (hip, knee) | Validation |
| Min et al., 2024, South Korea [48] | Total: 20 participants (10 neurological patients: stroke, Parkinson’s disease, cerebral palsy; 10 healthy controls), age and sex distribution N/A | N/A | N/A | 2 iOS smartphones (model N/A) | 30–45° relative to the walking direction, tripod-mounted | Gait analysis along a 4 m walkway; physiological gait and gait in neurological impairments (i.e., stroke, Parkinson’s disease, and cerebral palsy) | Joint kinematics and kinetics (pelvis, hip, knee, ankle) | Characterization |
| Horsak et al., 2024, Austria [63] | Total: 19 healthy adults, 35 ± 11 yrs, M: 12/F: 7 | N/A | N/A | 2 iOS devices (12 mini and 13 Pro) | 35° off the center of the walking path, 1.5 m above the ground, tripod-mounted | Gait analysis along an 8 m walkway; physiological gait under different clothing conditions | Lower-limb (hip, knee, ankle), pelvic, and trunk kinematics | Characterization |
| Martiš et al., 2024, Austria [64] | Total: 10 healthy adults, 29.7 ± 8.6 yrs, M: 6/F: 4 | 17-camera MoCap (Vicon, Oxford, UK) at 150 Hz | 49 markers, modified Cleveland Clinic and Plug-in-Gait sets | 2 iOS devices (12 and 14) | 30° off the center of the walking path, 1.5 m above the ground, tripod-mounted | Timed Up and Go (TUG) test; walking along a 3 m path toward and away from the camera | Spatio-temporal parameters and joint kinematics (pelvis, hip, knee, ankle), foot lift-off and landing angles | Validation |
| Wang et al., 2025, China [65] | Total: 83 individuals: 53 patients with knee osteoarthritis (64.5 ± 6.5 yrs, M: 42/F: 11), 30 healthy individuals (55.2 ± 3.3 yrs, M: 25/F: 5) | 10-camera MoCap (Vicon, Oxford, UK) at 150 Hz + 2 force plates at 1200 Hz | 34 retro-reflective markers, custom placement | 2 iOS smartphones (iPhone 12 Pro) | 35° off the center of the walking path, 1.3 m above the ground, tripod-mounted | Gait analysis along a 7 m walkway; pathological and healthy gait toward and away from the camera | Lower-limb joint kinematics (pelvis, hip, knee, ankle) | Validation and Characterization |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cerfoglio, S.; Lopes Storniolo, J.; de Borba, E.F.; Cavallari, P.; Galli, M.; Capodaglio, P.; Cimolin, V. Smartphone-Based Gait Analysis with OpenCap: A Narrative Review. Biomechanics 2025, 5, 88. https://doi.org/10.3390/biomechanics5040088
