Joint Moment Responses to Different Modes of Augmented Visual Feedback of Joint Kinematics during Two-Legged Squat Training

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

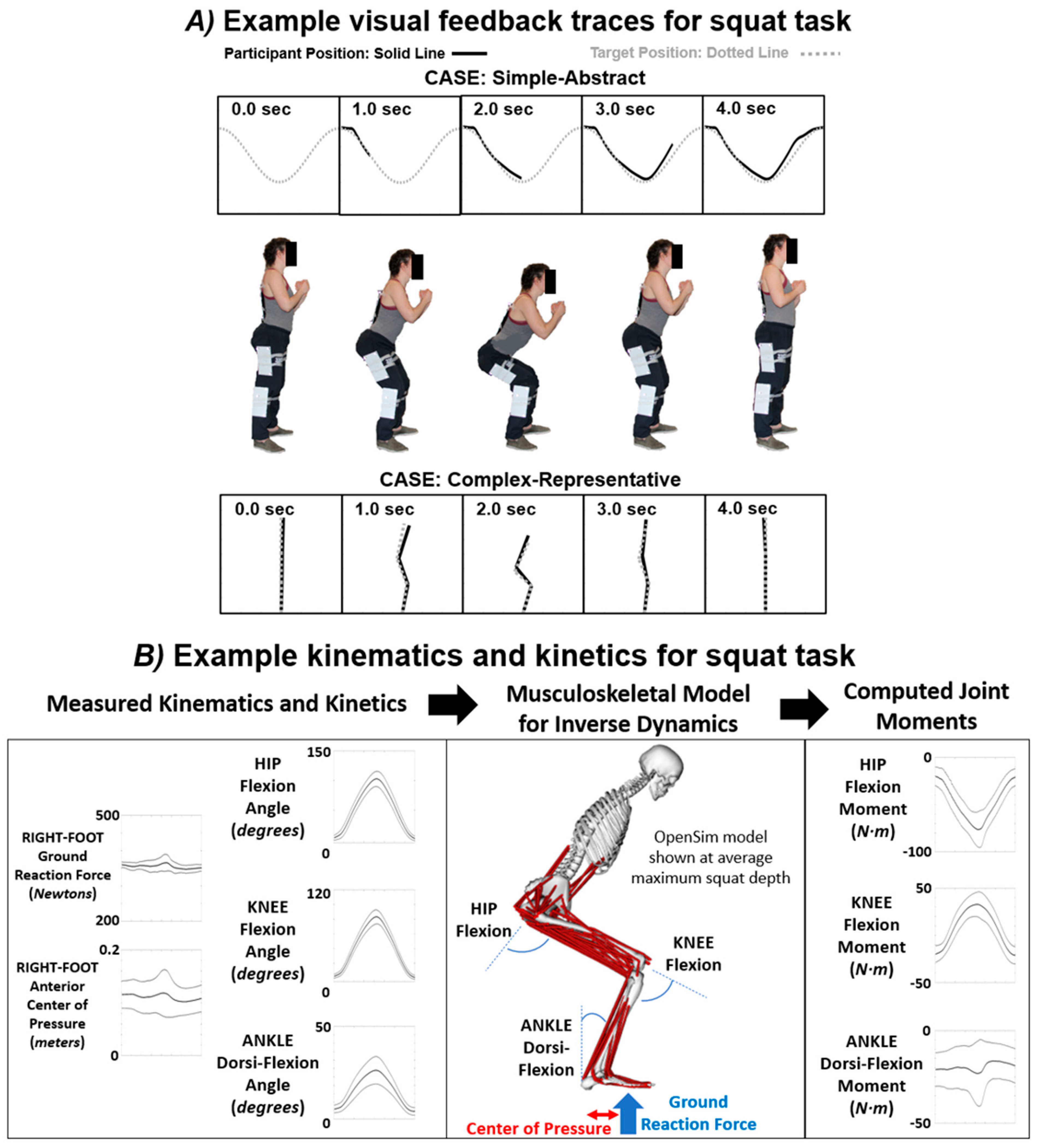

2.2. Experimental Task

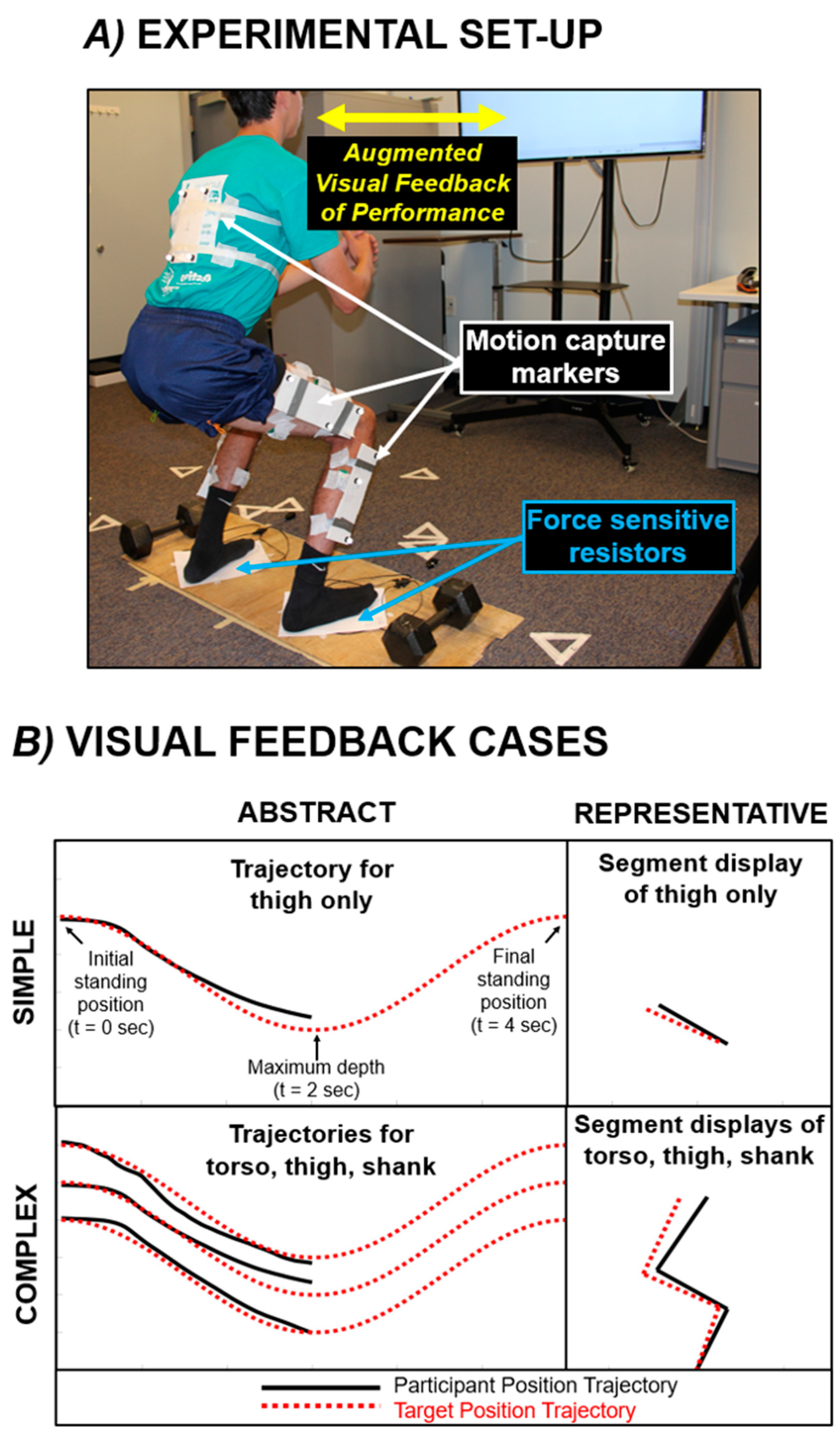

2.3. Experimental Set-Up for Data Collection

2.4. General Testing Procedure and Visual Feedback Modes

2.5. Data Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


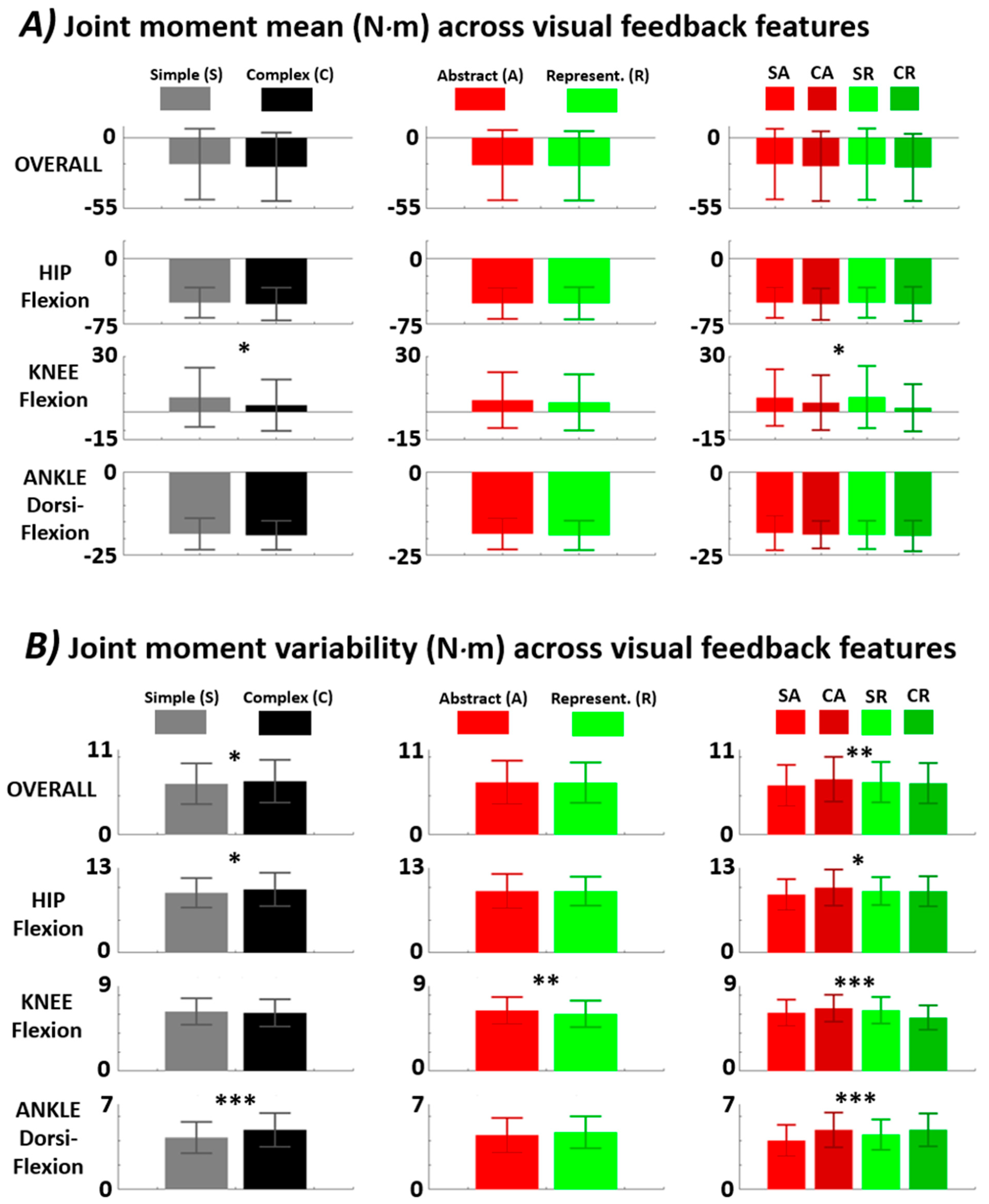

| Joint | p-Val Complexity (F-Stat) | Simple Mean (N·m) | Complex Mean (N·m) | p-Val Representation (F-Stat) | Abstract Mean (N·m) | Representative Mean (N·m) | p-Val Interaction (F-Stat) |
|---|---|---|---|---|---|---|---|
| Joint Moment Mean | | | | | | | |
| Overall | 0.19 (1.7) | −20.4 ± 27.6 | −22.5 ± 26.6 | 0.75 (0.11) | −21.2 ± 27.3 | −21.7 ± 27.0 | 0.78 (0.08) |
| Hip Flexion | 0.39 (0.74) | −50.5 ± 17.2 | −52.1 ± 18.7 | 0.93 (0.007) | −51.4 ± 17.6 | −51.2 ± 18.3 | 0.95 (0.005) |
| Knee Flexion | 5.4 × 10⁻³ (7.8) | 7.8 ± 16.0 | 3.6 ± 13.8 | 0.42 (0.66) | 6.4 ± 15.1 | 5.1 ± 15.1 | 0.31 (1.0) |
| Ankle Dorsi-Flexion | 0.38 (0.77) | −18.6 ± 4.7 | −19.0 ± 4.3 | 0.31 (1.0) | −18.5 ± 4.7 | −19.0 ± 4.4 | 0.83 (0.05) |
| Joint Moment Variability | | | | | | | |
| Overall | 0.04 (4.4) | 6.6 ± 2.6 | 6.9 ± 2.8 | 0.77 (0.09) | 6.8 ± 2.8 | 6.7 ± 2.6 | 3.2 × 10⁻³ (8.8) |
| Hip Flexion | 0.04 (4.4) | 9.2 ± 2.3 | 9.7 ± 2.5 | 0.94 (0.03) | 9.4 ± 2.6 | 9.4 ± 2.2 | 0.03 (5.0) |
| Knee Flexion | 0.30 (1.1) | 6.3 ± 1.4 | 6.1 ± 1.4 | 0.01 (6.73) | 6.4 ± 1.4 | 6.0 ± 1.4 | 1.1 × 10⁻⁵ (19.9) |
| Ankle Dorsi-Flexion | 5.0 × 10⁻⁶ (21.5) | 4.3 ± 1.3 | 4.9 ± 1.4 | 0.075 (3.2) | 4.4 ± 1.4 | 4.7 ± 1.3 | 0.081 (3.1) |
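The table above reports an F-statistic and p-value for two factors (feedback complexity and body representation) and their interaction, i.e. a 2 × 2 factorial design. The sketch below shows how such F-statistics arise, assuming a balanced, between-subjects two-way ANOVA. This section does not describe the study's actual statistical model; if the same participants completed every feedback mode, a repeated-measures or mixed model would be the appropriate choice, so treat this only as an illustration. The function name `two_way_anova_f` and the toy numbers are hypothetical and are not study data.

```python
from itertools import product
from statistics import mean


def two_way_anova_f(cells):
    """F statistics for a balanced, between-subjects two-way ANOVA (illustrative).

    cells maps (factor_a_level, factor_b_level) -> list of observations,
    with the same number of observations in every cell.
    Returns {effect: (F, df_effect, df_error)} for A, B and the A x B interaction.
    """
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))
    if n < 2 or any(len(obs) != n for obs in cells.values()):
        raise ValueError("need a balanced design with at least 2 observations per cell")
    if len(cells) != len(a_levels) * len(b_levels):
        raise ValueError("every combination of factor levels must be present")

    grand = mean(x for obs in cells.values() for x in obs)
    cell_mean = {key: mean(obs) for key, obs in cells.items()}
    # With equal n per cell, each marginal mean is the average of its cell means.
    a_mean = {a: mean(cell_mean[(a, b)] for b in b_levels) for a in a_levels}
    b_mean = {b: mean(cell_mean[(a, b)] for a in a_levels) for b in b_levels}

    p, q = len(a_levels), len(b_levels)
    ss_a = q * n * sum((a_mean[a] - grand) ** 2 for a in a_levels)
    ss_b = p * n * sum((b_mean[b] - grand) ** 2 for b in b_levels)
    ss_ab = n * sum(
        (cell_mean[(a, b)] - a_mean[a] - b_mean[b] + grand) ** 2
        for a, b in product(a_levels, b_levels)
    )
    ss_err = sum((x - cell_mean[key]) ** 2 for key, obs in cells.items() for x in obs)

    df_a, df_b = p - 1, q - 1
    df_ab, df_err = df_a * df_b, p * q * (n - 1)
    ms_err = ss_err / df_err
    return {
        "A": (ss_a / df_a / ms_err, df_a, df_err),
        "B": (ss_b / df_b / ms_err, df_b, df_err),
        "AxB": (ss_ab / df_ab / ms_err, df_ab, df_err),
    }


# Hypothetical toy values (not study data): A = complexity, B = representation.
toy = {
    ("simple", "abstract"): [1.0, 2.0, 3.0],
    ("simple", "representative"): [2.0, 3.0, 4.0],
    ("complex", "abstract"): [3.0, 4.0, 5.0],
    ("complex", "representative"): [6.0, 7.0, 8.0],
}
# F_A = 27.0, F_B = 12.0, F_AxB = 3.0, each with df (1, 8)
print(two_way_anova_f(toy))
```

Each p-value would then be the upper tail of the F distribution with (df_effect, df_error) degrees of freedom, for example `scipy.stats.f.sf(F, df_effect, df_error)` if SciPy is available.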

| Joint | Simple-Abstract (N·m) | Simple-Representative (N·m) | Complex-Abstract (N·m) | Complex-Representative (N·m) | Significant Difference Pairs (p-Val) |
|---|---|---|---|---|---|
| Joint Moment Mean | | | | | |
| Overall | −20.4 ± 27.5 | −22.0 ± 27.2 | −20.4 ± 27.8 | −23.0 ± 26.2 | N/A |
| Hip Flexion | −50.5 ± 17.3 | −52.3 ± 17.9 | −50.5 ± 17.2 | −52.0 ± 19.4 | N/A |
| Knee Flexion | 7.7 ± 15.2 | 5.0 ± 14.8 | 8.0 ± 16.7 | 2.2 ± 12.7 | CA-CR (0.04) |
| Ankle Dorsi-Flexion | −18.3 ± 5.2 | −18.8 ± 4.1 | −18.9 ± 4.2 | −19.2 ± 4.6 | N/A |
| Joint Moment Variability | | | | | |
| Overall | 6.4 ± 2.6 | 7.1 ± 2.9 | 6.8 ± 2.6 | 6.6 ± 2.6 | SA-SR (2 × 10⁻³) |
| Hip Flexion | 8.9 ± 2.4 | 9.9 ± 2.8 | 9.4 ± 2.1 | 9.4 ± 2.3 | SA-SR (0.01) |
| Knee Flexion | 6.2 ± 1.4 | 6.6 ± 1.4 | 6.4 ± 1.4 | 5.6 ± 1.3 | SA-CR (0.05), SR-CR (4 × 10⁻⁴), CA-CR (6 × 10⁻⁴) |
| Ankle Dorsi-Flexion | 4.0 ± 1.3 | 4.9 ± 1.4 | 4.5 ± 1.2 | 4.9 ± 1.3 | SA-SR (4 × 10⁻⁵), SA-CA (3 × 10⁻⁵) |
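The last column lists which of the six possible pairs of the four feedback modes differed; the codes SA, SR, CA and CR appear to abbreviate the four column headings (Simple/Complex × Abstract/Representative). This section does not state which post hoc test or multiple-comparison correction produced those p-values. The sketch below uses a Bonferroni adjustment purely to illustrate why a correction matters when six pairs are tested at once; the function names and the input p-values are hypothetical.

```python
from itertools import combinations

# Condition codes as used in the table: Simple/Complex x Abstract/Representative.
CONDITIONS = ("SA", "SR", "CA", "CR")


def condition_pairs(conditions=CONDITIONS):
    """All unordered pairs of feedback modes, labelled like 'SA-SR'."""
    return [f"{a}-{b}" for a, b in combinations(conditions, 2)]


def bonferroni(raw_p):
    """Bonferroni adjustment: multiply each p-value by the number of tests, capped at 1."""
    m = len(raw_p)
    return {pair: min(1.0, p * m) for pair, p in raw_p.items()}


def significant_pairs(raw_p, alpha=0.05):
    """Pairs whose Bonferroni-adjusted p-value does not exceed alpha."""
    return {pair: p for pair, p in bonferroni(raw_p).items() if p <= alpha}


# Hypothetical raw (unadjusted) p-values for one joint; not study data.
raw = dict.fromkeys(condition_pairs(), 0.5)
raw.update({"SA-SR": 0.001, "SA-CA": 0.02})
print(significant_pairs(raw))
```

With six comparisons, a raw p of 0.02 becomes 0.12 after adjustment and is no longer significant at 0.05, while a raw p of 0.001 survives. Less conservative alternatives (e.g. Holm's step-down procedure or Tukey's HSD) would follow the same pairing logic.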

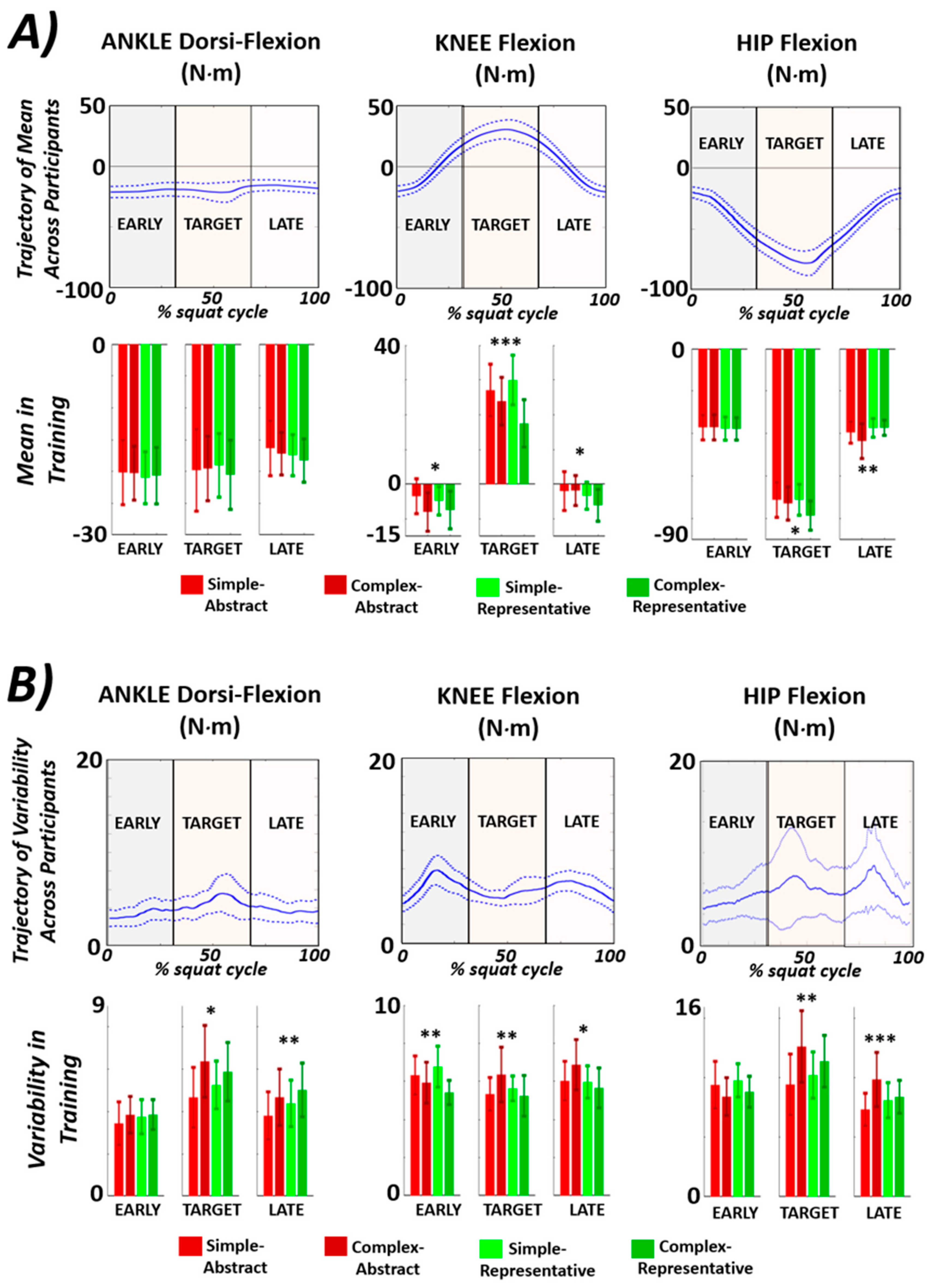

| Joint | Phase | Joint Moment Mean p-Val (F-Stat) | Joint Moment Variability p-Val (F-Stat) |
|---|---|---|---|
| Ankle Dorsi-Flexion | Early | 0.95 (0.12) | 0.48 (0.83) |
| Ankle Dorsi-Flexion | Target | 0.90 (0.20) | 8 × 10⁻³ (4.4) |
| Ankle Dorsi-Flexion | Late | 0.50 (0.79) | 0.04 (2.9) |
| Knee Flexion | Early | 0.04 (2.9) | 2 × 10⁻³ (5.7) |
| Knee Flexion | Target | 5 × 10⁻⁴ (9.15) | 0.02 (3.8) |
| Knee Flexion | Late | 0.04 (2.9) | 0.02 (3.8) |
| Hip Flexion | Early | 0.98 (0.07) | 0.08 (2.3) |
| Hip Flexion | Target | 0.02 (3.5) | 3 × 10⁻³ (5.1) |
| Hip Flexion | Late | 7 × 10⁻³ (4.4) | 7 × 10⁻⁴ (6.4) |

| Measure | Simple-Abstract | Simple-Representative | Complex-Abstract | Complex-Representative |
|---|---|---|---|---|
| Accuracy (mean error, degrees) | 5.1 ± 1.3 | 3.0 ± 0.6 | 4.4 ± 1.4 | 5.2 ± 1.3 |
| Consistency/precision (s.d. of error, degrees) | 3.5 ± 1.2 | 1.9 ± 0.6 | 2.4 ± 0.6 | 3.3 ± 0.7 |
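The two rows above define kinematic accuracy as the mean error and consistency (precision) as the standard deviation of that error, both in degrees. A minimal sketch of those two summaries for a single trial follows. It assumes the error is the absolute difference between measured and target joint angle at matching time samples, which this section does not specify; a signed error, or the sample rather than population standard deviation, would change the numbers. The function name and the sample values are hypothetical.

```python
from statistics import mean, pstdev


def tracking_error_metrics(angles_deg, targets_deg):
    """Return (accuracy, consistency) for one trial of joint-angle tracking.

    accuracy    = mean absolute error between measured and target angle (degrees)
    consistency = standard deviation of that absolute error (degrees)
    """
    if len(angles_deg) != len(targets_deg) or not angles_deg:
        raise ValueError("need equal-length, non-empty angle and target series")
    errors = [abs(a - t) for a, t in zip(angles_deg, targets_deg)]
    # pstdev treats the samples as the whole trial; statistics.stdev would give
    # the sample (n - 1) estimate instead.
    return mean(errors), pstdev(errors)


# Hypothetical knee-angle samples against a constant 90-degree target.
angles = [90.0, 95.0, 100.0, 85.0]
targets = [90.0] * len(angles)
acc, cons = tracking_error_metrics(angles, targets)
print(f"accuracy = {acc:.2f} deg, consistency = {cons:.2f} deg")
# prints: accuracy = 5.00 deg, consistency = 3.54 deg
```

Per-trial values like these could then be summarized as mean ± s.d. across trials or participants, as in the table; how the study aggregated them is not stated here.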

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nataraj, R.; Sanford, S.P.; Liu, M. Joint Moment Responses to Different Modes of Augmented Visual Feedback of Joint Kinematics during Two-Legged Squat Training. Biomechanics 2023, 3, 425-442. https://doi.org/10.3390/biomechanics3030035

Nataraj R, Sanford SP, Liu M. Joint Moment Responses to Different Modes of Augmented Visual Feedback of Joint Kinematics during Two-Legged Squat Training. Biomechanics. 2023; 3(3):425-442. https://doi.org/10.3390/biomechanics3030035

Chicago/Turabian Style: Nataraj, Raviraj, Sean Patrick Sanford, and Mingxiao Liu. 2023. "Joint Moment Responses to Different Modes of Augmented Visual Feedback of Joint Kinematics during Two-Legged Squat Training" Biomechanics 3, no. 3: 425-442. https://doi.org/10.3390/biomechanics3030035

APA Style: Nataraj, R., Sanford, S. P., & Liu, M. (2023). Joint Moment Responses to Different Modes of Augmented Visual Feedback of Joint Kinematics during Two-Legged Squat Training. Biomechanics, 3(3), 425-442. https://doi.org/10.3390/biomechanics3030035