1. Introduction

Predicting and analyzing the stability of underground rock excavations has been a long-standing challenge in the field of geotechnical studies. In particular, for enterprises in the mineral industry that use open stoping methods, an adequate evaluation of stope stability is fundamental to preserve worker safety, reduce the impact of phenomena such as dilution, and ensure the continuity of operations in production galleries.

Studies aiming to evaluate the stability of stopes in mining date back to the 1980s, with the development of the first stability graphs by Mathews et al. [1], later improved by Potvin [2]. Popular in the industry to this day, these early graphs made use of modified values from the rock mass classification system proposed by Barton et al. [3], in correlation with the hydraulic radius of the hangingwall surface to define zones of stability and instability. The premises defined by Mathews and Potvin led to the development of several works associated with stability graphs, such as those produced by Laubscher [4], Villaescusa [5], and Clark [6], the latter introducing the concept of ELOS, with prominent use in the mining industry.

A recurring criticism associated with the graphs, however, is the excessive simplification of the instability phenomenon, where it is reduced to only a few variables when, in practice, it proves to be highly complex. Additionally, there is the implicit subjectivity in the definitions of ‘stable’ and ‘unstable’ within the graphs [7,8]. As such, new lines of study have emerged over the years proposing to investigate stope instability or its consequences, such as dilution, in different ways using various variables. These include multivariate regression [9], computational modeling [10], and logistic regression [11]. More recent research has focused on statistical models and machine learning, such as the work by Qi et al. [12], which used classification through random forests to determine whether a stope is stable or unstable.

In some of the works that use a statistical approach, a noticeable characteristic is the adoption of balanced datasets, that is, datasets where the ratio between the number of ‘stable’ and ‘unstable’ stope observations is less than 1.5 [13]. However, because the mining industry is composed mostly of enterprises aiming for profit, it is expected that mines will tend to plan their stopes to be stable, making the available datasets either imbalanced or highly imbalanced (when the ratio between the number of ‘stable’ and ‘unstable’ stope observations is greater than 9). In the study performed by Clark [6], about 34 stopes of 47 can be considered stable, making it an imbalanced dataset; in Wang [7], over 60% of the stopes can also be assumed to be stable; and even in Potvin’s [2] stability graph, around 70% of the stopes fell into the stability zone, reinforcing the general notion of imbalance when dealing with stope datasets.
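The thresholds quoted above can be turned into a small helper. The sketch below is only illustrative: the function names are hypothetical, and it assumes the ratio is always taken as majority count over minority count.

```python
def imbalance_ratio(n_majority, n_minority):
    """Ratio between majority- and minority-class counts."""
    if n_minority <= 0:
        raise ValueError("the minority class needs at least one observation")
    return n_majority / n_minority


def imbalance_level(ir):
    """Label a dataset using the thresholds quoted in the text (1.5 and 9)."""
    if ir < 1.5:
        return "balanced"
    if ir > 9:
        return "highly imbalanced"
    return "imbalanced"


# Clark's dataset as described above: about 34 stable stopes out of 47.
ir_clark = imbalance_ratio(34, 47 - 34)
print(round(ir_clark, 2), imbalance_level(ir_clark))
```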

Imbalanced datasets tend to adversely affect the performance of statistical and machine learning models by biasing them towards the majority class [14] and amplifying the effects of issues associated with data, such as a lack of representativity, high dimensionality, and unwanted noise [15]. For that reason, having a methodology that also considers imbalanced datasets is fundamental in order to cover a wider range of mining scenarios.

Considering the aforementioned aspects, this project aims to propose a new methodology to predict the stability of underground stopes by building a dataset composed of field data that characterizes mined stopes, rebalancing the data using a rebalancing algorithm, using that data to estimate the parameters of different probabilistic classifiers, comparing the performance of the models across specific metrics, selecting the two most relevant features, and using those features for the estimation of a bi-dimensional decision frontier that separates the stable and unstable zones.

3. Materials and Methods

The observations in the dataset originated from an underground zinc mine that used vertical retreat mining, a variant of sublevel open stoping, as the mining method. Each observation corresponds to a stope mined in the period between 2017 and 2020. From the descriptive features present in the dataset, those selected were the ones whose impact on stope stability has been consolidated in the literature:

Rock Quality Designation (RQD) (%): RQD is defined as the ratio between the sum of the lengths of core fragments greater than 10 cm and the total length of the core run, preferably not exceeding 1.5 m [22,45]. Based on the obtained value, the rock mass will be classified in terms of its quality, ranging from very poor (0–25%) to excellent (90–100%). Various studies in the literature, using RQD or other classification systems, point to the influence of the rock mass quality on the stability of the opening, with higher-quality rock masses exhibiting greater stability [2,7,46,47].

Hydraulic Radius (HR) (m): The hydraulic radius is defined as the ratio between the area of the stope’s hangingwall surface and its perimeter, a variable representing the geometry of the stope, a shape factor of the critical face [47]. The hydraulic radius was widely used in some of the earliest studies [1,2,6] related to stope stability, with a trend of decreasing stability as its value increases [48].

Depth (m): According to Hoek et al. [49], the vertical stress applied to a rock mass can be estimated from the product of the depth of a point within that rock mass by its specific weight. In the mine under consideration, the specific weight of the rock mass is considered approximately constant (about 28 kN/m³), meaning that vertical stress is directly proportional to depth. Different studies [8,50] suggest that the relaxation and redistribution of stresses applied to the rock mass are influential variables affecting the stability of the stope.

Direction (degrees): This refers to the direction of the hangingwall’s surface plane, i.e., its orientation relative to north. The orientation of the stope with respect to the discontinuity sets present in the rock mass is a critical factor for its stability [5,6].

Dip (degrees): Dip is the angle between a plane parallel to the hangingwall and a horizontal plane of reference. Stopes with less steep dips tend to exhibit greater instability, as vertical stresses are directed onto the orebody, resulting in larger displacements within the rock mass [10].

Undercut (m): This variable refers to the situation where the shape of the drift leading to the stope does not follow the ore contact and extends into the hangingwall [51]. Undercutting is an influential factor in stope stability because it degrades the integrity of the rock mass and increases the zones of loosened rock that could potentially fall into the stope [7]. Here, the value represents the undercut actually performed by the mine operations team.

Stability factors: Three correction factors are multiplied by the Q-value from Barton’s Q-system [3] to calculate the stability number ‘N’ used in the Mathews [1] and Potvin [2] stability charts. Factor A represents the effect of stress on the stope surface, Factor B is associated with the effect of discontinuities on the stability of that surface, and Factor C evaluates the effect of gravity on the stope surface.
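Several of the features listed above follow directly from simple definitions. The sketch below restates them as code; the function names and the numerical inputs are hypothetical, and the stability number is written as the plain product described in the text, without any of the adjustments a site may apply.

```python
def rqd_percent(piece_lengths_cm, run_length_cm):
    """RQD: summed length of core pieces longer than 10 cm over the run length."""
    sound = sum(length for length in piece_lengths_cm if length > 10.0)
    return 100.0 * sound / run_length_cm


def hydraulic_radius(area_m2, perimeter_m):
    """Hangingwall surface area divided by its perimeter."""
    return area_m2 / perimeter_m


def vertical_stress_mpa(depth_m, unit_weight_kn_m3=28.0):
    """Depth times specific weight (kPa), converted to MPa."""
    return depth_m * unit_weight_kn_m3 / 1000.0


def stability_number(q_value, factor_a, factor_b, factor_c):
    """Q-value multiplied by the three correction factors."""
    return q_value * factor_a * factor_b * factor_c


# Hypothetical inputs, for illustration only.
rqd = rqd_percent([25, 8, 40, 12, 5, 30], run_length_cm=150)
hr = hydraulic_radius(area_m2=20 * 30, perimeter_m=2 * (20 + 30))
sigma_v = vertical_stress_mpa(depth_m=500)
print(round(rqd, 1), hr, sigma_v)
```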

The response variable is binary, representing the classification of the stope as either unstable or stable. The criterion for defining stope stability followed that used by Clark [6] and by Qi et al. [12] in their studies. Based on the evaluation of ELOS (equivalent linear overbreak slough), measured using a cavity monitoring system (CMS), it was proposed that stopes with an ELOS value greater than 1 m would be considered unstable, while those with an ELOS value less than 1 m would be considered stable.
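The labeling rule can be sketched as a one-line discretization. The text does not say how a stope with an ELOS of exactly 1 m is treated, so the sketch assumes it counts as stable; the function name and the 0/1 encoding used here (1 for unstable) are illustrative choices.

```python
ELOS_THRESHOLD_M = 1.0


def stope_state(elos_m, threshold_m=ELOS_THRESHOLD_M):
    """Return 1 (unstable) when ELOS exceeds the threshold, else 0 (stable).

    Assumption: an ELOS exactly equal to the threshold is labeled stable.
    """
    return 1 if elos_m > threshold_m else 0


# Hypothetical ELOS measurements in meters.
labels = [stope_state(e) for e in (0.2, 0.95, 1.4, 2.1)]
print(labels)
```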

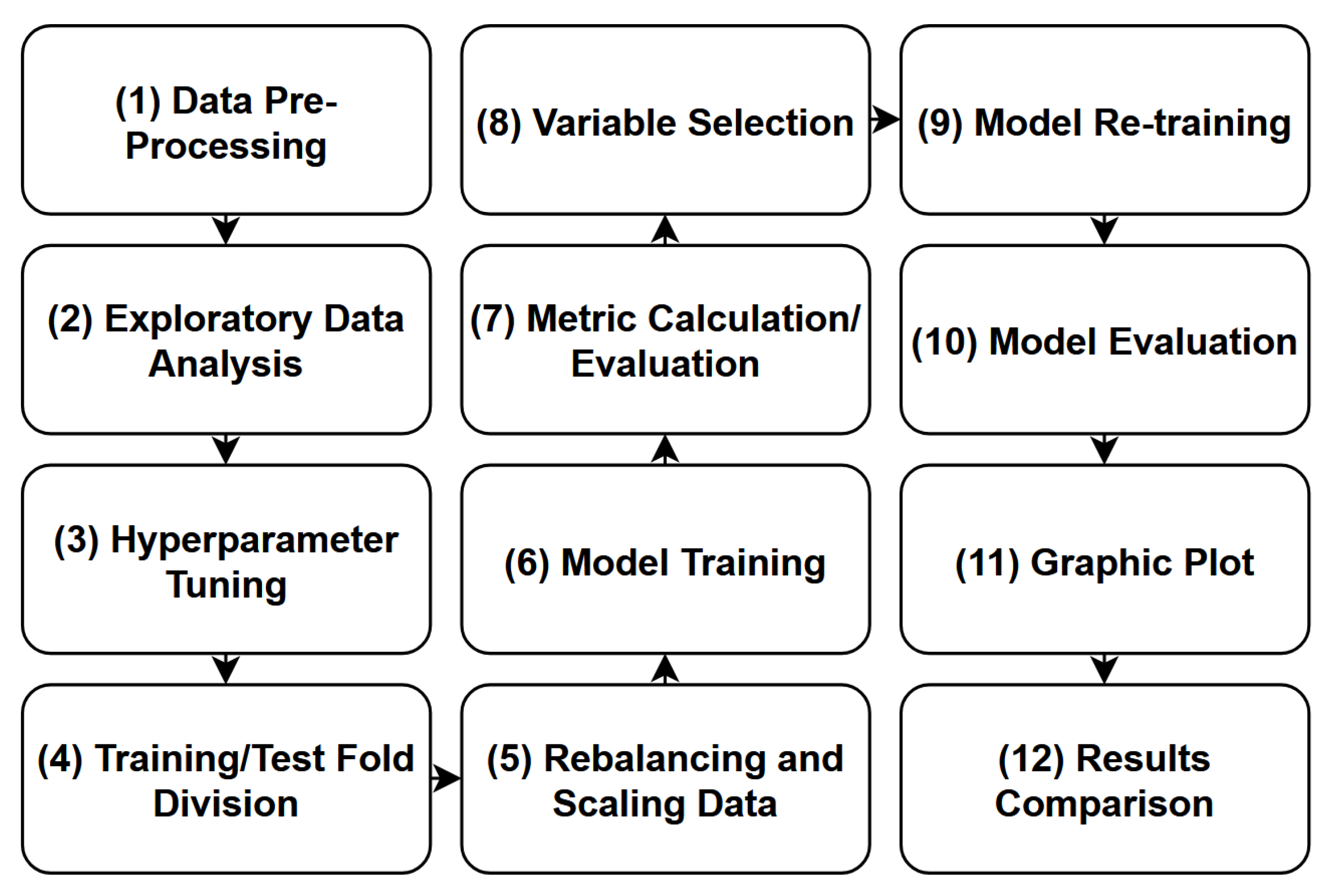

As an analytical tool, the Python 3.7 programming language and its available libraries were used. The adopted process flow is summarized in Figure 3.

The data pre-processing stage involved selecting the variables considered relevant to the process, determining the variable types, and investigating the presence of null and duplicate values. The exploratory analysis included univariate analysis, which involved evaluating descriptive statistics by considering the observations in each class separately, as well as assessing feature distributions. It also included bivariate analysis, which involved investigating correlations between the variables.

The model hyperparameter selection and tuning was performed using the “grid search” method, which conducts an exhaustive search within defined subsets of the possible hyperparameter space [52]. In total, five models were trained and tested. Data rebalancing was performed using the SMOTE algorithm, whereas the scaling was performed using the z-score. To evaluate model performance, four different metrics were used: accuracy, precision, recall, and G-mean, although the G-mean was the standard metric adopted in loss functions and hyperparameter tuning. Variable selection was performed using the permutation feature importance method [53] on the G-mean metric.

For dividing the dataset into training and testing sets, the “k-fold cross-validation” methodology was used. In this approach, the dataset is subdivided into k subsets such that k − 1 are used to train the models and 1 is used for testing. The subsets are rotated through these roles until all observations have been used in both the training and testing processes. A value of k = 5 was adopted in this procedure. The subdivision into subsets is carried out randomly but maintains the class proportions in the different subsets (“stratified k-fold”). The cross-validation procedure is widely adopted as a method for estimating a model’s predictive error [32].
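The stratified splitting described above can be sketched without any external library. This is not the implementation used in the study (scikit-learn offers `StratifiedKFold` for this purpose); the function name, the toy labels, and the round-robin assignment are illustrative assumptions, and five folds are used to match the five train-test folds reported in the results.

```python
import random
from collections import defaultdict


def stratified_k_fold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs that keep class proportions per fold."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal the indices of each class round-robin across the folds.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)

    for i in range(k):
        test_idx = sorted(folds[i])
        train_idx = sorted(
            idx for j, fold in enumerate(folds) if j != i for idx in fold
        )
        yield train_idx, test_idx


# Hypothetical labels: 45 stable (0) and 5 unstable (1) stopes.
labels = [0] * 45 + [1] * 5
splits = list(stratified_k_fold(labels, k=5))
for train_idx, test_idx in splits:
    print(len(train_idx), len(test_idx), sum(labels[i] for i in test_idx))
```

Every observation appears in exactly one test fold, and each fold receives the same share of the minority class, which matters when only a handful of unstable stopes are available.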

The five models evaluated were logistic regression, k-nearest neighbors, random forests, gradient boosting machine, and support vector machine. Models were chosen based on their characteristics: Logistic regression is a simple but highly interpretable model that, when used as a classifier, estimates a linear frontier that separates the classes; k-nearest neighbors is the simplest non-parametric classification algorithm, being highly flexible in the frontier estimation; random forests and gradient boosting machines are, respectively, bagging- and boosting-type models, which allows them to perform well with regard to the bias-variance tradeoff; and support vector machines are also highly interpretable and flexible models that can estimate different frontiers depending on the kernel used.

Logistic regression: The hyperparameters tuned using grid search were the regularization parameter “C” (in increments of a factor of 10, ranging from 0.001 to 1000) and the norm used in the penalty (“l1” or “l2”). The optimal combination found to maximize the G-mean was and norm = “l1”.

k-nearest neighbors: The only adjusted hyperparameter was the number of nearest neighbors “k” (ranging from 1 to 200). The value found that maximized the G-mean was k = 48.

Random forest: The tuned hyperparameters were the maximum depth of the decision trees (an integer ranging from 3 to 10), the number of decision trees in the model (an integer increasing in steps of 50 from 50 to 1000), and the selection criterion (Gini or entropy). The combination that maximized the G-mean was a depth of 5, number of trees = 200, and criterion = “entropy”.

Gradient boosting machine: The weak learners were decision trees and the tuned hyperparameters were the number of estimators (an integer, ranging from 50 to 500, in steps of 50), the learning rate (a float ranging from 0.1 to 1 in steps of 0.1) and the maximum depth (integer, from 0 to 10). The combination that maximized the G-mean was number of estimators = 150, learning rate = 0.8, and maximum depth = 3.

Support vector machine: The tuned hyperparameters were the model’s kernel (“Gaussian RBF”, “sigmoidal”, and “polynomial”), the regularization parameter “C” (multiples of 10, ranging from 0.001 to 1000), and the Gamma parameter (multiples of 10, between 0.001 and 1). The combination that maximized the G-mean was kernel = “polynomial” (with degree = 3), , and gamma = 0.01.

It is worth mentioning that the list is not exhaustive and was constrained by the computational power available to run the grid search algorithm in feasible time. Some of the optimal values were also observed to present slightly different results after the algorithm was executed multiple times due to the probabilistic nature of the process and effects of imbalance in the testing dataset. The ranges for hyperparameters were chosen as recommended by the Scikit-learn Python library user guide [54].
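To give a sense of how quickly the search space grows, the grids described above can be enumerated explicitly. The sketch below is a dependency-free illustration, not the code used in the study: the helper names are hypothetical, the kernel strings follow scikit-learn's naming, and `score_fn` stands in for the cross-validated G-mean.

```python
from itertools import product


def powers_of_ten(low_exp, high_exp):
    """Values 10**low_exp, ..., 10**high_exp."""
    return [10.0 ** e for e in range(low_exp, high_exp + 1)]


logistic_grid = {
    "C": powers_of_ten(-3, 3),       # 0.001 to 1000
    "penalty": ["l1", "l2"],
}
svm_grid = {
    "kernel": ["rbf", "sigmoid", "poly"],
    "C": powers_of_ten(-3, 3),       # 0.001 to 1000
    "gamma": powers_of_ten(-3, 0),   # 0.001 to 1
}


def grid_candidates(grid):
    """Yield every hyperparameter combination in the grid as a dict."""
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        yield dict(zip(names, values))


def grid_search(grid, score_fn):
    """Exhaustively score every combination and return the best one."""
    return max(grid_candidates(grid), key=score_fn)


print(len(list(grid_candidates(logistic_grid))))  # 7 x 2 combinations
print(len(list(grid_candidates(svm_grid))))       # 3 x 7 x 4 combinations
```

Each candidate has to be trained and evaluated on every cross-validation fold, so the number of model fits is the number of candidates multiplied by the number of folds.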

4. Results and Discussion

A preliminary investigation of the database indicated the presence of 341 observations and 22 variables, out of which 10 were selected for the study following the previously presented guidelines. None of these variables showed any null occurrences. A single duplicate observation was identified and promptly removed. All 10 variables were renamed to facilitate interpretation, processing, and recognition by the programming language, as shown in Table 1.

For statistical analysis purposes, all presented variables will be considered continuous numerical variables acting as predictors, with the exception of the ‘state’ variable, which serves as the response variable. The latter will be considered a non-ordinal categorical discrete variable with a cardinality of 2, taking the value ‘0’ when the stope is considered stable and ‘1’ when the stope is considered unstable—note that the study’s purpose is to be able to predict stope instability (hence, the positive class). Once the initial assessment of the database was completed, the exploratory data analysis of the predictors began.

Table 2 and Table 3 present the descriptive statistics of the predictor variables for stable and unstable stopes, respectively.

From the count of observations in the different classes, it can be noted that the database is highly imbalanced, with an IR (imbalance ratio) equal to 15.2. This contrasts with other works in the literature such as Potvin’s [2], with an IR of approximately 2.4; Clark’s [6], with an IR of approximately 2.6; and Wang’s [7], with an IR higher than 1.5. For RQD, unstable stopes have a lower average value than stable ones, which aligns with the literature (although, perhaps, within the expected variability). A similar conclusion can be drawn for the hydraulic radius, where the average for unstable stopes tends to be higher. Comparable values for the mean and quartiles of stope depth in both classes indicate that they have similar distributions across sublevels, potentially suggesting that this variable, on its own, may not have strong discriminative power. Dip and undercut are also in line with findings from the literature, with steeper stopes tending to be more stable and stopes with greater undercut tending toward instability. Factors A and B appear to have similar distributions between the classes, suggesting limited potential in the classification process, while for Factor C, stopes with lower values seem to tend toward instability.

Another important aspect to investigate is the similarity between the distributions in different classes. Since the purpose of a classifier is to estimate a decision boundary that separates observations belonging to different categories, variables whose class distributions are clearly distinct tend to contribute more, individually, to the classification process. One way to investigate this difference is through the Mann–Whitney U test [55], a non-parametric hypothesis test that allows one to determine whether the distribution that generated a particular sample “x” is the same as that which generated a particular sample “y”. The p-values obtained by applying the test to each variable, with the observations split by class, are shown in Table 4.
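For reference, the test statistic can be sketched in a few lines. This is a simplified illustration rather than the routine used in the study: the two-sided p-value relies on the normal approximation without tie or continuity correction, so it is only indicative for small samples, and the sample values are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    sorted_vals = sorted(values)
    rank_of = {}
    for v in set(values):
        first = sorted_vals.index(v)       # 0-based position of first copy
        count = sorted_vals.count(v)
        rank_of[v] = first + (count + 1) / 2.0
    return [rank_of[v] for v in values]


def mann_whitney_u(x, y):
    """Return (U statistic of sample x, approximate two-sided p-value)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2.0
    mean_u = n1 * n2 / 2.0
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)  # no tie correction
    z = (u1 - mean_u) / sd_u
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return u1, p_value


# Hypothetical undercut values (m) for stable and unstable stopes.
stable = [0.1, 0.3, 0.2, 0.5, 0.4, 0.6]
unstable = [0.9, 1.2, 0.8, 1.5]
u_stat, p_val = mann_whitney_u(stable, unstable)
print(u_stat, round(p_val, 4))
```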

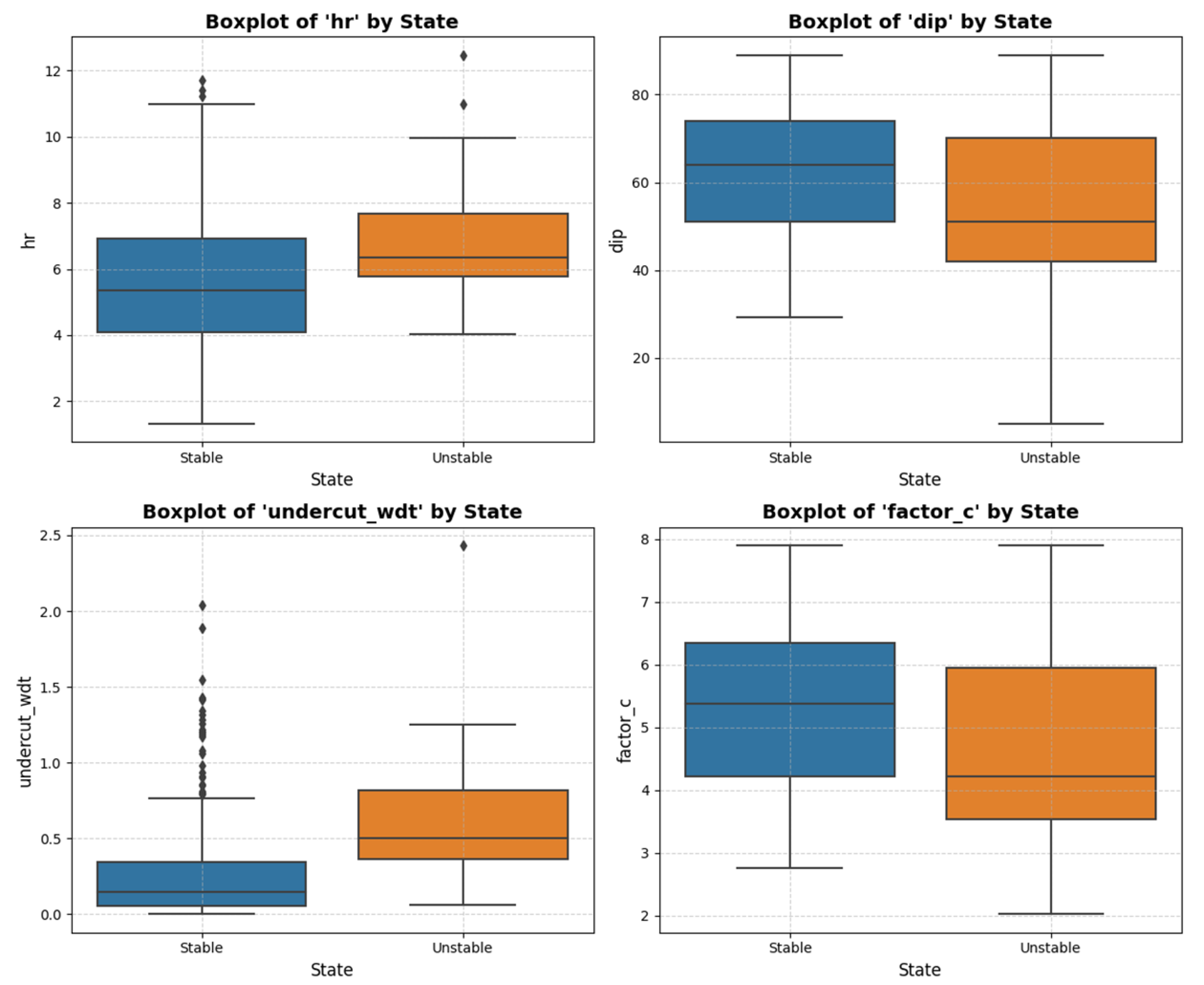

It can be concluded that it is possible to reject the null hypothesis for some of the variables (“hr”, “dip”, “undercut_wdt”, and “factor_c”), indicating that these variables, individually, contribute more significantly to differentiating between the classes. However, the univariate analysis does not allow us to determine whether there are any complex relationships among the remaining variables. Therefore, all nine were retained for the modeling process. The discriminative characteristics of the four aforementioned variables can be more readily visualized with a boxplot, as shown in Figure 4.

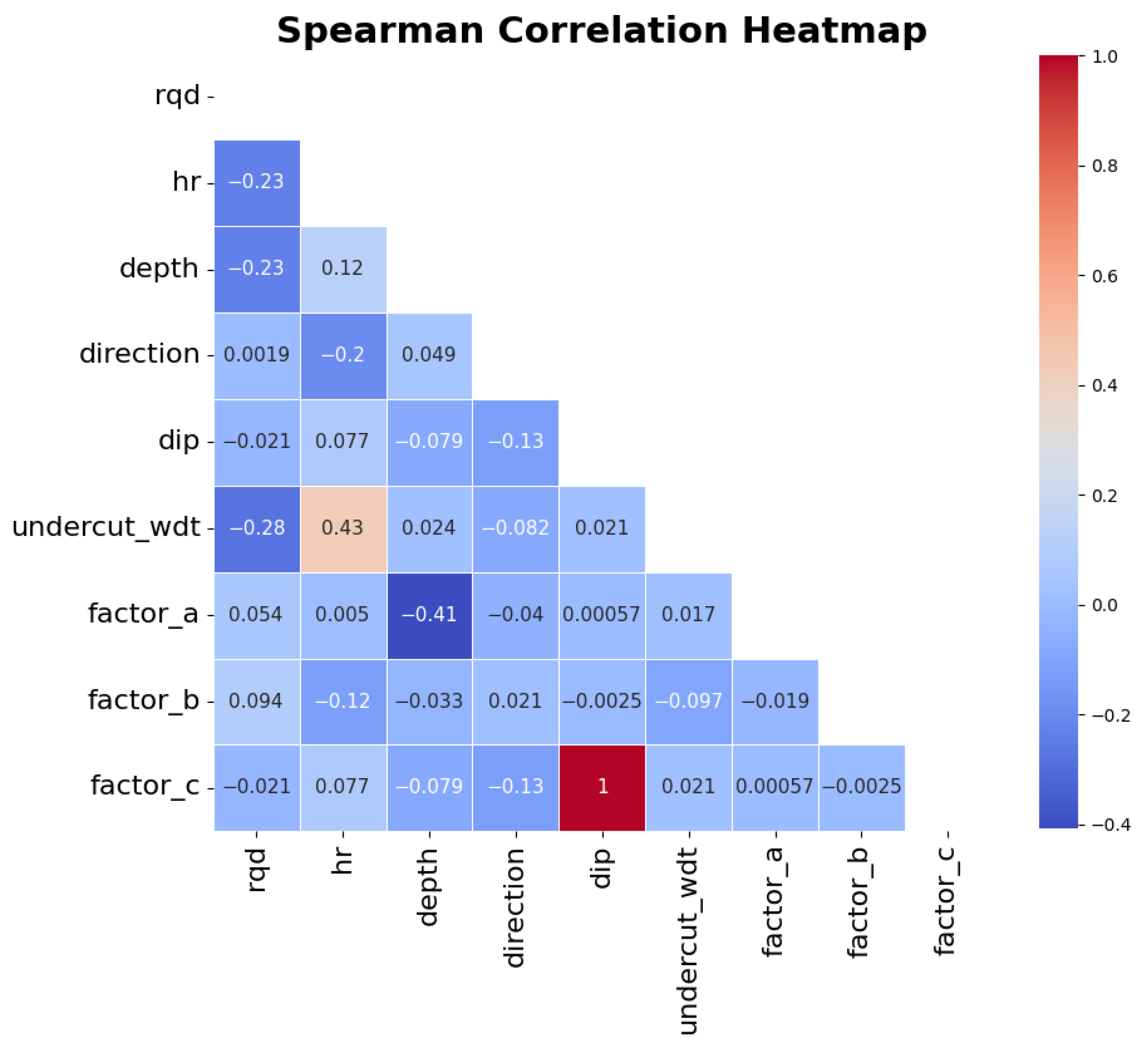

By examining the relative positioning of the boxplots representing the observations in different classes, a distinction between the distributions can be observed, which is particularly notable for the variable ‘undercut_wdt’. To evaluate the correlation between the variables, correlation coefficients can be employed. For this work, the Spearman correlation coefficient was chosen because it can also detect monotonic nonlinear relationships between the variables. The results can be seen in Figure 5.
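The property that motivated the choice of Spearman's coefficient can be checked on a toy example. The sketch below computes it as the Pearson correlation of the ranks; the function names and the data are illustrative only.

```python
def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    sorted_vals = sorted(values)
    rank_of = {}
    for v in set(values):
        first = sorted_vals.index(v)
        count = sorted_vals.count(v)
        rank_of[v] = first + (count + 1) / 2.0
    return [rank_of[v] for v in values]


def pearson(a, b):
    """Pearson linear correlation coefficient."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / (var_a * var_b) ** 0.5


def spearman(x, y):
    """Spearman coefficient: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# A monotonic but nonlinear relationship (hypothetical data).
x = [1, 2, 3, 4, 5]
y = [v ** 3 for v in x]
print(round(pearson(x, y), 3), round(spearman(x, y), 3))
```

On these data the rank-based coefficient reaches 1 while the linear coefficient stays below it, which is the behavior the text refers to.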

Most of the features present weak to no correlation with each other. A noticeable exception is the variable ‘dip’, which shows a strong correlation with the variable ‘factor_c’, which is expected given that ‘factor_c’ uses the value of stope dip in its calculation. Other relevant relationships observed are between ‘factor_a’ and ‘depth’, which present a moderate negative relationship, also in line with the literature given that ‘factor_a’ is inversely proportional to the vertical stresses applied on the rock mass which, in turn, increase with depth; and between ‘undercut_wdt’ and ‘rqd’, which present a moderate positive relationship. This last one, however, is unexpected: It can potentially mean that there is a tendency in the mine to open larger stopes in areas where the undercut is greater or, conversely, that the undercut tends to be higher in areas where larger stopes are planned. The investigation and confirmation of these hypotheses, however, is currently outside the scope of this work. Additionally, although retaining correlated variables can introduce bias in certain models, their impact is negligible when using the models for prediction (which is the purpose of this work) rather than for inference; hence, both correlated variables were kept in the subsequent steps.

With the exploratory analysis completed, the training and testing of the models began.

Table 5 presents the average value of the metrics on the five train-test folds from the cross-validation after the models were trained on the original dataset, without rebalancing, whereas

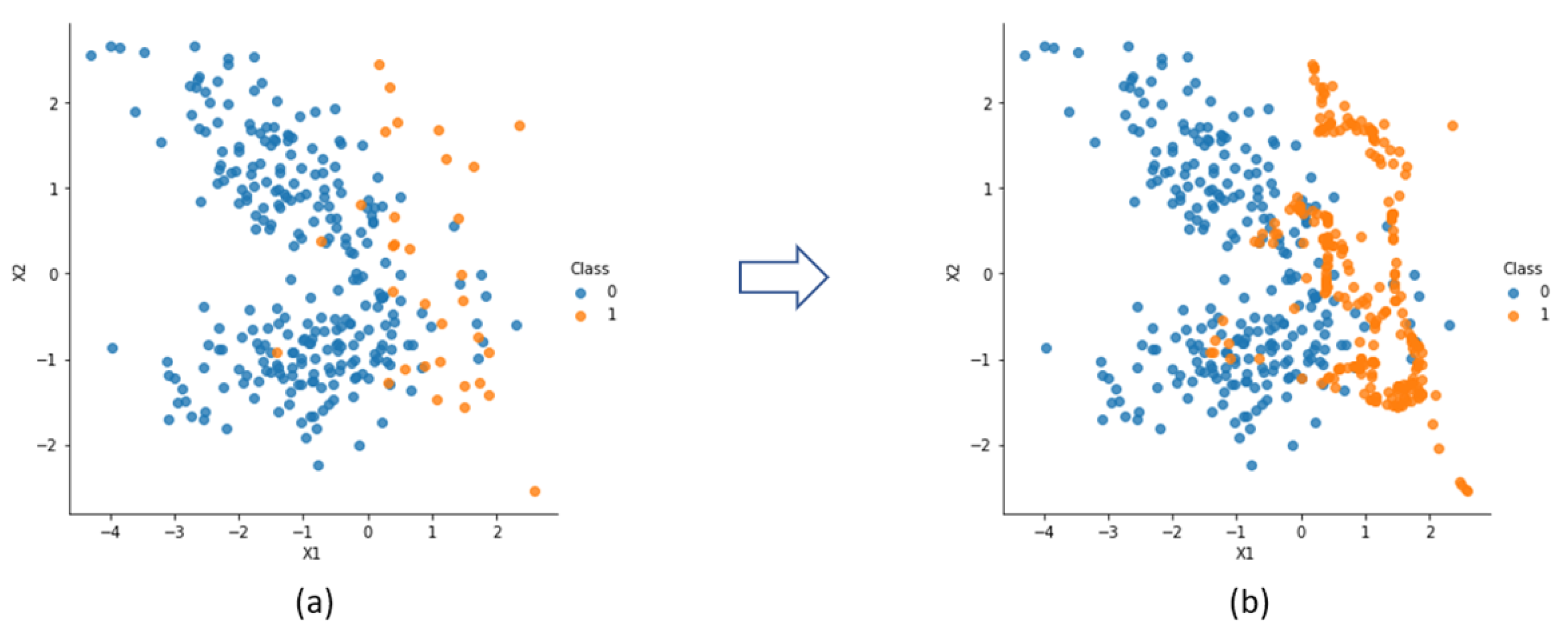

Table 6 presents the same, but after the training dataset was rebalanced with the SMOTE algorithm [

42] (testing sets do not get rebalanced) until an I.R. of 1 was reached (as hyperparameter, the algorithm used

k, the number of neighbors, equal to 3).
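The core idea of SMOTE, creating synthetic minority observations by interpolating between a minority point and one of its nearest minority neighbors, can be sketched as follows. This is a simplified illustration, not the library routine used in the study (imbalanced-learn provides a `SMOTE` class); the function name and the data are hypothetical.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def smote(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points between minority points and neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # The k nearest minority neighbors of the chosen point (itself excluded).
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: squared_distance(base, p))
        neighbor = rng.choice(others[:k])
        gap = rng.random()  # position along the segment base -> neighbor
        synthetic.append(
            tuple(b + gap * (n - b) for b, n in zip(base, neighbor))
        )
    return synthetic


# Hypothetical (hr, undercut_wdt) pairs for the unstable class.
minority = [(6.0, 1.2), (7.5, 0.9), (8.0, 1.6), (6.8, 2.0)]
n_majority = 10
# Generate enough points to reach an imbalance ratio of 1.
synthetic = smote(minority, n_new=n_majority - len(minority), k=3)
print(len(minority) + len(synthetic))
```

As in the study, only the training portion of each fold would be resampled; the test observations stay untouched so that the metrics reflect the original class proportions.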

It is important to clarify the meaning of the metrics in the context of the stability phenomenon in order to better evaluate the results. First, accuracy: Because it is a metric that evaluates the overall correctness of the algorithm, it is expected to be high when the dataset is imbalanced, as the model will simply classify all stopes as stable and, because the number of stable observations is much higher than the number of unstable ones, the mistakes made on the unstable stopes have little effect on the metric value itself. That is the reason why the metric can be misleading, as a model that considers every stope as stable is useless in a stability evaluation context.

Now, for precision and recall: In a model trained on an imbalanced dataset, both will tend to have very low values or zero, which is expected as the numerator in both metrics is the number of unstable stopes correctly classified as unstable. Because classifiers trained on imbalanced datasets are unable to correctly identify unstable stopes, that value will tend to be zero, bringing the whole metric to zero. When the dataset is rebalanced, the scenario changes: The precision will increase, although the results remain low. That is because precision has the false positives in the denominator (see Equation (1)) and, as the dataset is rebalanced, a portion of the estimated decision frontier will invade the region of the stable stopes, making the classifier consider those as unstable. As for recall, the value has a significant boost, which is expected as the number of unstable stopes correctly classified increases with the rebalancing, as the decision frontier becomes clearer for the classifier. The G-mean is the metric with the highest increase: Influenced by the classifier’s capability of identifying both the stable and unstable stopes, the metric tends to increase as the model manages to better identify the unstable ones, even though it will be penalized by mistaking some stable stopes for unstable.
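The behavior of the four metrics can be illustrated with two confusion matrices. The counts below are hypothetical and are not taken from the study; the sketch also assumes the usual definition of the G-mean as the geometric mean of recall and specificity, and it reports precision as zero when no stope is predicted unstable.

```python
import math


def classification_metrics(tp, fp, tn, fn):
    """Metrics with 'unstable' as the positive class."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "g_mean": math.sqrt(recall * specificity),
    }


# Hypothetical test fold with 64 stable and 4 unstable stopes.
# (a) A model that labels every stope as stable.
all_stable = classification_metrics(tp=0, fp=0, tn=64, fn=4)
# (b) A model trained on rebalanced data: more unstable stopes are
#     found, at the cost of some stable stopes flagged as unstable.
rebalanced = classification_metrics(tp=3, fp=12, tn=52, fn=1)

for name, result in (("all stable", all_stable), ("rebalanced", rebalanced)):
    print(name, {key: round(value, 3) for key, value in result.items()})
```

In case (a) the accuracy exceeds 0.9 although no unstable stope is detected, while the G-mean is zero; in case (b) the accuracy drops, the precision stays low because of the false positives, and both recall and G-mean rise.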

In a mining context, the decision regarding which model to use will depend on the business strategy: Mines at high risk of instability will most likely lean towards models with high recall. Other metrics may also be used, such as the F-beta score (a weighted harmonic mean of precision and recall, in which recall is considered ‘beta’ times as important as precision) or balanced accuracy. Metrics can also be improved for a given classifier by changing the threshold of the decision rule. It is also possible to create custom metrics based on different misclassification costs, using a cost function in a cost-sensitive learning process. Those, however, are beyond the scope of this paper.

The next step involved selecting the two most relevant variables for the classification process. Permutation feature importance was chosen for the task, an algorithm that shuffles the observations of each feature individually and measures the average impact on a chosen metric. The metric evaluated was the G-mean and the results are presented in Table 7. Green cells represent the feature with the highest G-mean decrease (and its value) for the given model, whereas the yellow cells represent the feature with the second highest G-mean decrease.

The first aspect to notice is the removal of the feature “dip” from the results: “dip” was identified as being highly correlated with “factor_c”, which is expected as the latter is calculated from the former. While correlated variables have a negligible impact on the predictive capabilities of the models, the same cannot be said for permutation importance: Although the features are shuffled individually, the model would still have access to the information through the corresponding correlated feature, leading to a bias that results in lower importance values for both features. Hence, it was decided to keep “factor_c” instead of “dip”. As for the values themselves, the higher the value, the greater the impact that shuffling the variable has on the model’s performance. Negative values imply that either the variable is detrimental to the model or some slight improvement was observed by chance alone.
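The mechanics of permutation importance can be sketched with a toy model. The code below is an illustration under stated assumptions, not the routine used in the study (scikit-learn provides `permutation_importance` in `sklearn.inspection`): the classifier is a hypothetical rule that looks only at the first feature, the data are synthetic, and the G-mean is taken as the geometric mean of recall and specificity.

```python
import math
import random


def g_mean(y_true, y_pred):
    """Geometric mean of recall and specificity (1 = unstable)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    pos = sum(y_true)
    neg = len(y_true) - pos
    return math.sqrt((tp / pos) * (tn / neg))


def permutation_importance(predict, X, y, metric, n_repeats=10, seed=0):
    """Mean drop in `metric` when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = metric(y, [predict(row) for row in X])
    importances = []
    for col in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in X]
            rng.shuffle(shuffled)
            X_perm = [
                row[:col] + (value,) + row[col + 1:]
                for row, value in zip(X, shuffled)
            ]
            drops.append(baseline - metric(y, [predict(r) for r in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical classifier that only uses the first feature."""
    return 1 if row[0] > 1.0 else 0


# Synthetic data: the first feature drives the label, the second is noise.
X = [(0.2 * i, float(i % 3)) for i in range(20)]
y = [toy_model(row) for row in X]
importances = permutation_importance(toy_model, X, y, g_mean)
print([round(value, 3) for value in importances])
```

Shuffling the feature the model relies on degrades the G-mean, whereas shuffling the ignored feature leaves it unchanged, giving an importance of exactly zero.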

Regarding the results, different models process the features differently. The predominance of “undercut_wdt” as the most impactful feature in four of the five models is noticeable. The relevance of “undercut_wdt” as a discriminant variable had been previously identified during the exploratory data analysis (see Figure 4), with permutation importance further confirming the finding. Similarly, the hydraulic radius (“hr”) was identified as the second most impactful feature in three of the five models, evidencing the effect that the stope’s geometry may have on instability. Considering those findings, those two features will be the ones used in implementing the stability graphs. It is important, though, to highlight that those results are specific to the mine context in which the research was developed: Because stability is a complex phenomenon, different variables may prove impactful in different mines, so a context-specific analysis must be performed instead of over-relying on findings from external environments.

The results obtained also provide useful insight that goes beyond the stability graphs: Because the undercut width was identified as being a very impactful feature, it is possible to conclude that, for that specific mine, the stope stability is strongly related to operational performance when developing the drifts from which the stopes will be drilled, highlighting the importance of increased control in operational procedures. The hydraulic radius, as a representative of the stope geometry, also plays a role for mine planning in determining the maximum size of the stope. Other variables that were identified as relevant, albeit to a lesser degree, such as the dip, may also help in stope development in specific circumstances.

The models were then trained one last time with the two most impactful features identified, “hr” and “undercut_wdt”. Training was performed on 80% of the dataset (after rebalancing), whereas the testing (on which the metrics were calculated) was performed on the remaining 20% (original observations, unbalanced). The results are presented in Table 8.

Generally, an improvement was observed after the removal of certain variables, which may be expected given that some values observed in Table 7 are either zero or negative, with little to no contribution towards the classification process (or even harmful). Logistic regression presents the highest values across the metrics, which may suggest that the Bayes decision frontier is approximately linear. A highlight is the performance of the support vector machine, in which there was a drop in the G-mean, which is also expected considering that the model identified other features as being more impactful.

The hypothesis defined before this study is that, through probabilistic modeling, it is possible to implement a more accurate and site-specific stability graph that outperforms the traditionally used one developed by Mathews and Potvin. Following the principle of Occam’s razor, the logistic regression model was chosen as the basis for comparison.

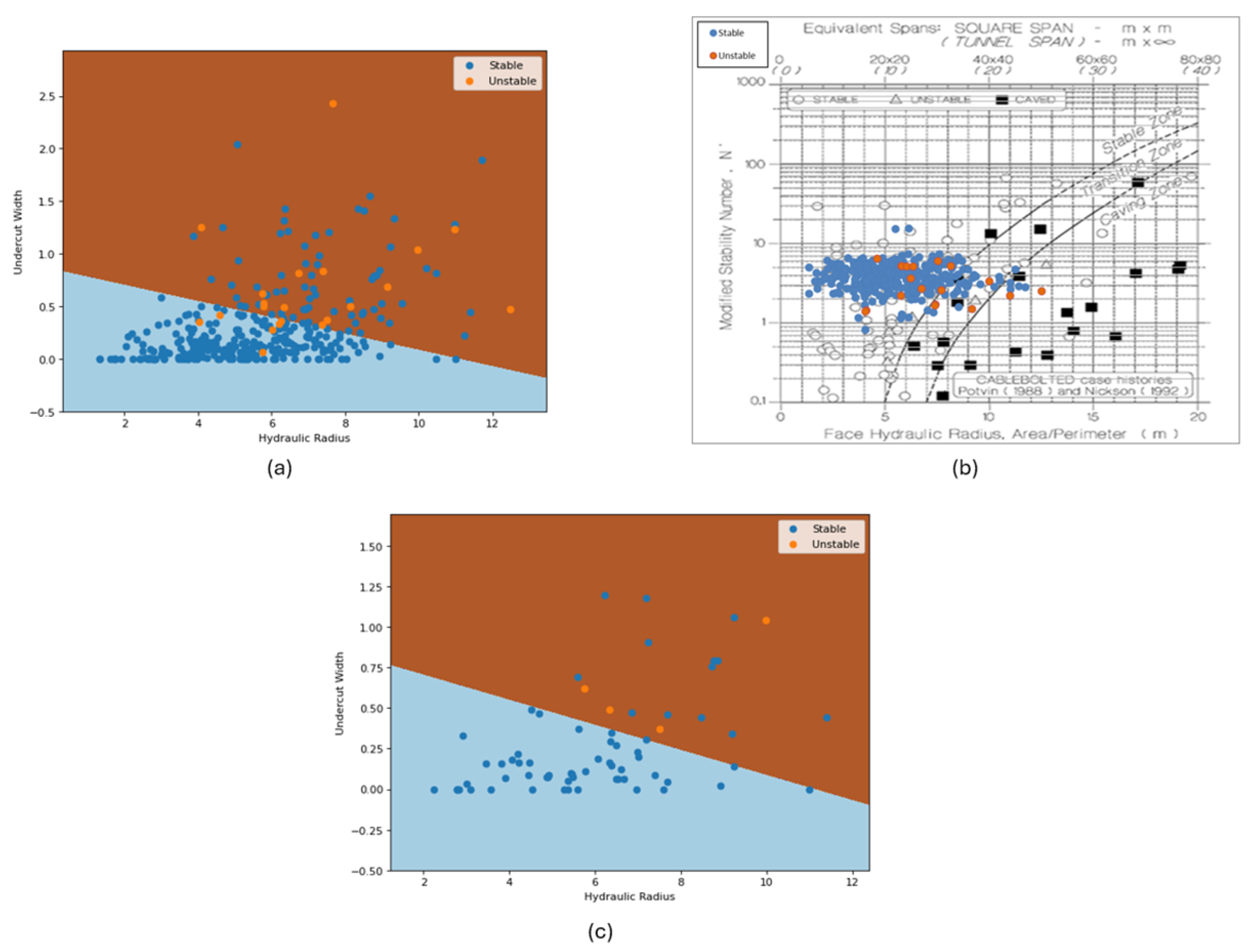

Figure 6 presents three graphs: (a) represents the estimated decision frontier (with the brown area representing the unstable zone and the blue area, the stable one) with the whole dataset plotted (dark blue points represent the stable stopes and orange points represent the unstable ones); (b) represents Mathews and Potvin’s graph with the whole dataset plotted on it (note that the modified stability number, N’, provided by the mine’s geotechnical team was used, instead of the undercut width values); and (c) represents the same decision frontier present in (a), but with only the test dataset plotted.
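For a two-feature logistic regression, the decision frontier is a straight line that can be written in closed form. The sketch below illustrates this with made-up coefficients expressed in raw feature units; they are not the coefficients estimated in the study, where the features were additionally scaled with the z-score, and the function names are hypothetical.

```python
import math

# Hypothetical coefficients: intercept, hydraulic radius, undercut width.
W0, W_HR, W_UC = -4.0, 0.3, 1.5


def unstable_probability(hr, undercut, w0=W0, w_hr=W_HR, w_uc=W_UC):
    """Logistic model: estimated probability that a stope is unstable."""
    z = w0 + w_hr * hr + w_uc * undercut
    return 1.0 / (1.0 + math.exp(-z))


def frontier_undercut(hr, w0=W0, w_hr=W_HR, w_uc=W_UC, threshold=0.5):
    """Undercut at which P(unstable) equals the threshold for a given HR."""
    logit = math.log(threshold / (1.0 - threshold))
    return (logit - w0 - w_hr * hr) / w_uc


# Points of the frontier for a few hydraulic radii (m).
for hr in (4.0, 6.0, 8.0):
    print(hr, round(frontier_undercut(hr), 3))
```

Lowering the threshold moves the line towards the stable zone, which is one way a mine that prioritizes recall could adapt the graph to its own risk tolerance.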

The first element to be observed is the nature of the graphs. While in

Figure 6a, there are only two zones, stable and unstable,

Figure 6b also considers a transition zone. It is worth mentioning that the methodology proposed also allows the inclusion of a transition zone (or as many as the model engineer wishes), but those must be defined using objective criteria. Then, there is the accuracy: It is noticeable that

Figure 6b classifies far more unstable stopes as stable (and a few as transition, which is hard to evaluate in this context), making over-reliance on it risky when used for mine planning in mines that suffer from stability issues or as an argument to reinforce the need for additional support in specific areas of the mine. The plot in

Figure 6c has as the purpose of evaluating the real potential of the developed graph when used to evaluate future observed stopes, as it uses data “unseen” by the algorithm during the training process: For the specific iteration on which the frontier was estimated, the model performed reasonably well on unstable stopes (remembering that only 20% of the dataset was used), managing to correctly classify all the unstable observations, although some stable stopes were misclassified. It is important to highlight the random nature of the process: Due to the high imbalance present in the dataset, different iterations may bring drastically different results, and while such high variance does not invalidate the process, it does reinforce the need for more data. Finally,

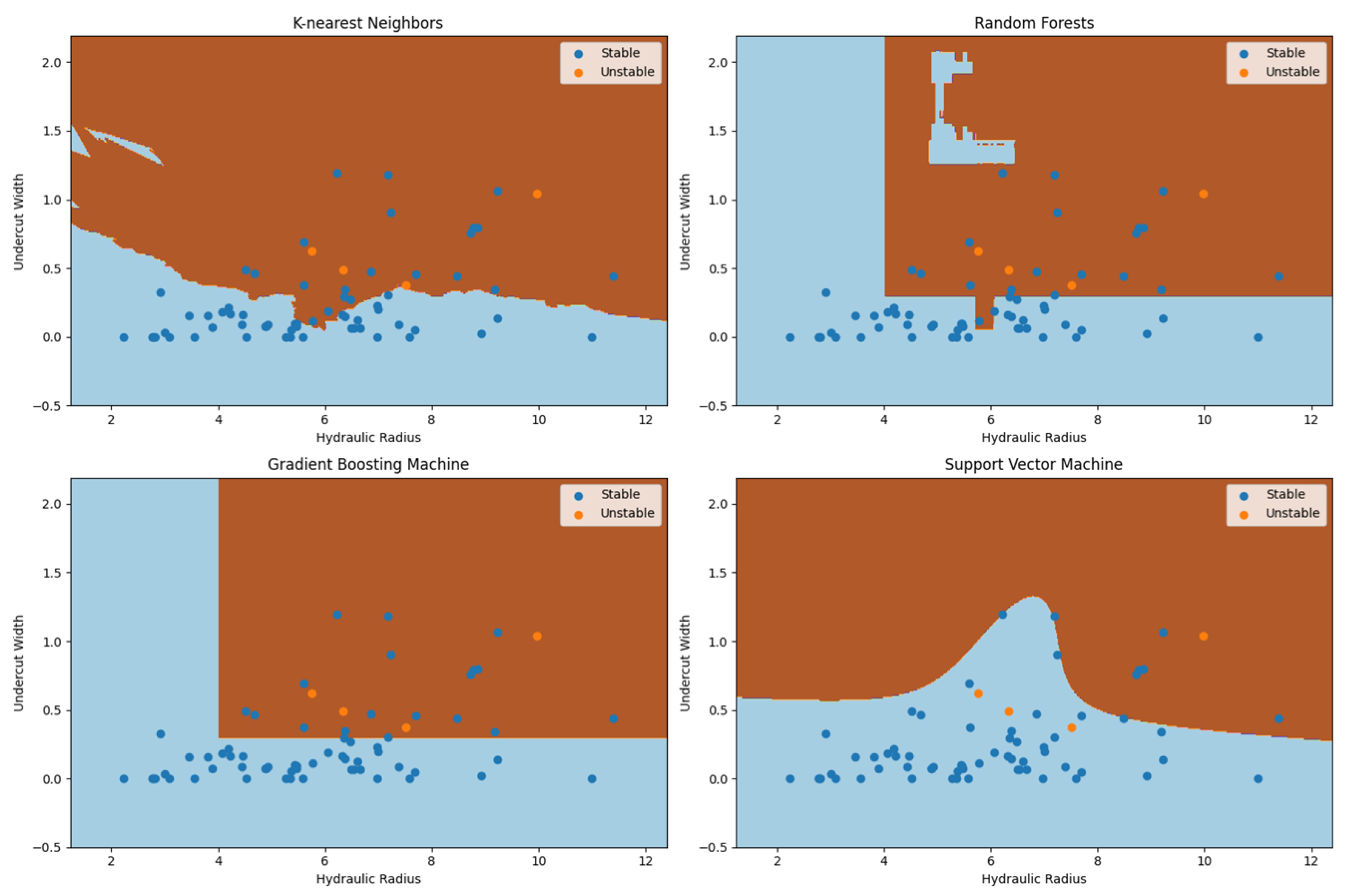

Figure 7 presents the decision frontiers estimated by the other models.

The estimated decision frontiers reflect the nature of the different algorithms used to generate them. The value of the hyperparameter k used in K-nearest neighbors will control the flexibility of the frontier, with lower values generating more flexible (albeit possibly “noisy”) frontiers. The value used (48) can be considered reasonably high in comparison to the size of the training dataset, so that may be the reason the estimated frontier is somewhat “rough” and close to the linear one estimated by the logistic regression model. Random forests and gradient boosting are both models that have decision trees at their core, and classify the observations by partitioning the domain space, the reason why the decision frontiers look like “boxes”. In the RF model, a peculiarity can be observed: the presence of a strangely placed stable zone amidst the unstable one. As it is unlikely that the trend of instability was broken in that specific place, such a peculiarity is most likely due to random variance present in the training dataset (potentially, a few isolated stable stopes are located there). Due to the bagging nature of the random forest model, situations like those may be solved by modifying the model’s hyperparameters. Regarding the support vector machine, the estimated frontier will be dependent on the kernel used: The smooth “curvy” aspect is due to the usage of a third-degree polynomial kernel.

As a final comment, the choice of the “best” stability graph may depend on more than model performance: Interpretability is an important factor, with graphs that provide clearer and understandable zones being preferable if performances are similar. Data availability is also important: Graphs that are built on specific features that may be expensive or hard to measure accurately may penalize the decision-making process in the long-term usage of the graph, with a similar notion valid for the use of features whose impact in the stability phenomenon may be hard to grasp, or that are hard to act upon or control, given that one of the main purposes of the graph is to provide professionals a tool to evaluate the impact of relevant aspects pertinent to stability so that mine planning may be adjusted accordingly and adequate corrective/containment measures may be taken.

5. Conclusions and Future Work

In conclusion, it was possible to validate the original hypothesis that a reasonably accurate, site-specific stability graph can be built through statistical and machine learning modeling. The models, in general, outperformed Mathews and Potvin’s graph, particularly in identifying unstable stopes, even when trained on a highly imbalanced dataset, which makes them even more promising for development on more adequate, balanced ones.

However, it is important to highlight that the methodology is not a silver bullet: It depends on a dataset that adequately represents the phenomenological domain of stability for the specific mine site on which it is based, as evidenced by how imbalance affected the performance of all models (although some models may be more robust to it), and rebalancing algorithms should be used as a last resort, given they may not be able to correctly adjust the dataset to be adequately representative of the domain for the algorithms if the stability phenomenon in the mine is overly complex. Data quality is also a big concern: Some of the variables that may be used in investigating stability (including some not used here, such as Barton’s Q system) may be prone to subjectivity in their measurement, especially when performed by different professionals, something that can significantly impact the results. This is also valid for the response variable or label: In this study, the label was defined by discretizing the ELOS measurement using an objective criterion. Defining which stopes are stable or unstable using subjective analysis (such as “individual experience”) may end up not generating an adequate dataset that can be used for modeling. Finally, it is highly desirable that all the stopes involved belong to the same population, meaning that all the observations come from roughly the same probability distribution: As an example, if the characteristics of the rock mass change drastically as the mine develops, it may be more adequate to perform a new exploratory data analysis, separate the stopes, and update the graph-generating model accordingly, as using data from different populations may have a detrimental impact on the model’s capacity to estimate the decision frontier (again, some models are more robust to those deviations).

As for future work, several different improvements are suggested. Some models (such as random forests and GBM) are highly dependent on hyperparameter tuning. The method chosen, grid search, being exhaustive, ensures that the best combination within the specified subset is found. However, that does not mean that the identified combination is globally optimal, and increasing the size of the subset can be very computationally intensive, so other optimization methods, such as Bayesian optimization, may be tested to better tune the models. There is also the loss of information due to the removal of features: In this study, the variables were limited to two for the sake of interpretability, but depending on the mine site, the instability phenomenon may be highly influenced by more than two features. In this case, using an approach such as PCA (principal component analysis) may be worth evaluating, although a graph built on the vectors estimated by the algorithm may not have the same level of interpretability as using the standalone features. Another aspect is the estimated decision frontier: By default, the criterion chosen in this study was to estimate the one closest to the Bayes decision frontier. However, depending on the business strategy of some mines, different weights may be given to classification mistakes, so a study that proposes some strategy to define the most adequate decision frontier based on specific business rules could be an interesting prospect.
There is also the stability phenomenon itself: During this study, the observations were considered independent (which is an assumption for the models used); however, the stopes can be considered as being spatially correlated (as subsequent openings can also affect previous ones) and time correlated (as the stability status of a given stope may change with time, given the redistribution of the forces), so understanding how those characteristics can be modeled may help improve the graph development process, being particularly relevant for mines that have dynamic data flows, allowing for real-time updating of the stope stability status. Also, investigating how the graph behaves in different mining contexts is a desirable future prospect.