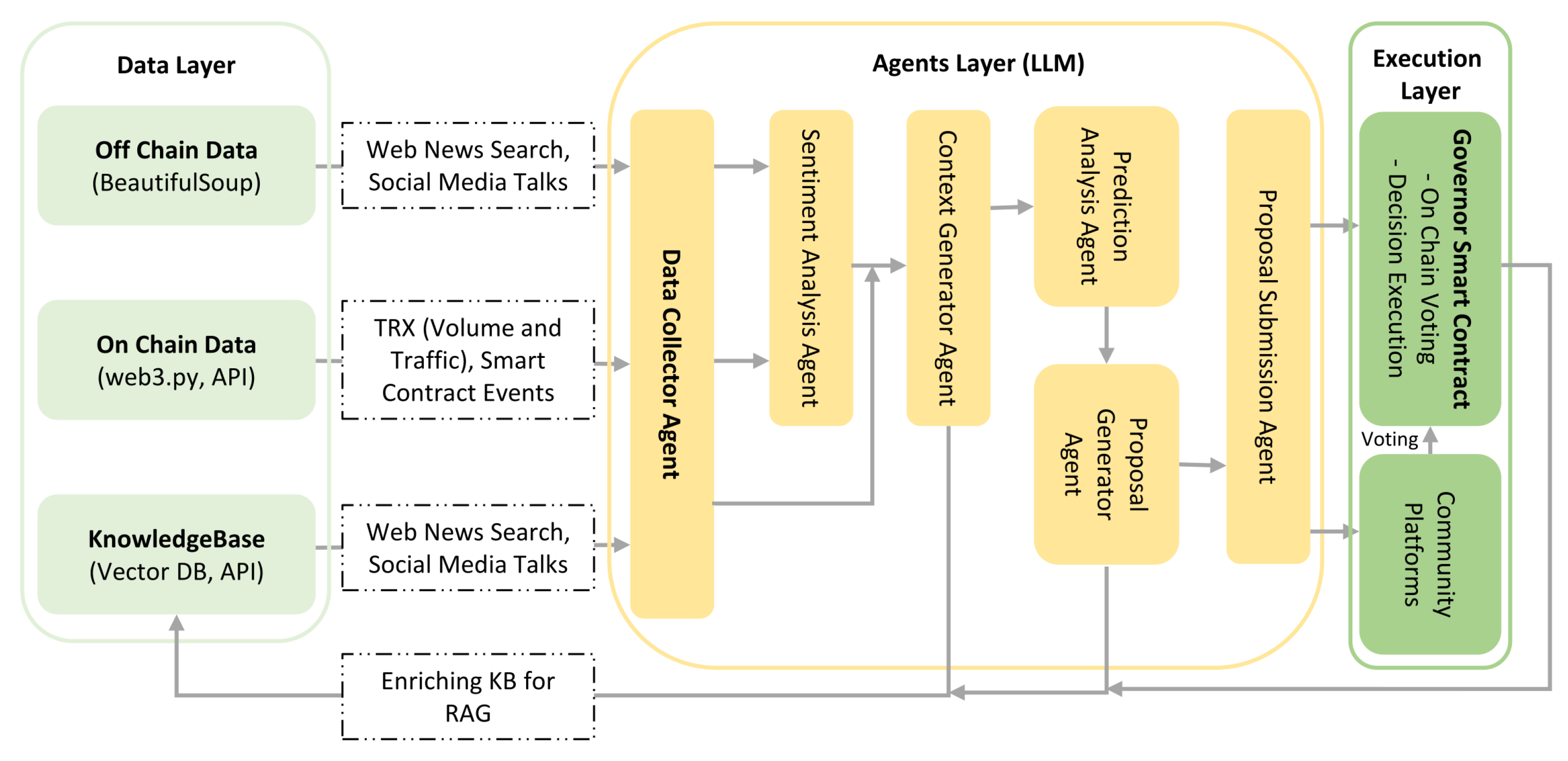

5.1. APOLLO Layers

APOLLO consists of three interconnected layers, as shown in Figure 2: (i) data layer, (ii) agents layer (LLM-based), and (iii) execution layer.

Data Layer: The data layer has three main parts: off-chain data, on-chain data, and the knowledge base. The off-chain pipeline, built on BeautifulSoup, extracts and preprocesses textual data from social media, news websites, and other sources to capture market sentiment and trends. On-chain data, such as transactions, smart contract interactions, voting patterns, and governance operations, is collected with Web3.py and RPC indexer APIs. The knowledge base acts as a central hub, using vector databases for semantic search and integrating data from APIs. It also incorporates stakeholder feedback and is continuously updated with insights from the agents layer to provide accurate, context-driven analytics.

Agents Layer (LLM-Based): The agents layer comprises AI-powered agents for automation and governance prediction. The Data Collector Agent gathers on-chain and off-chain data, and the Sentiment-Analysis Agent assesses stakeholder sentiment. The Context-Generator Agent combines data and sentiment, and the Prediction Analysis Agent predicts governance outcomes. The Proposal-Generator Agent drafts governance proposals, and the Proposal Submission Agent automates their submission.

Execution Layer: Through connections to community platforms and blockchain infrastructure, the Execution Layer makes it possible for APOLLO to automate governance. By automating governance execution, the governor smart contract guarantees that recommendations are implemented in a safe, transparent, and predictable manner. Integrating with apps like Telegram or Discord links community involvement with governance, encouraging openness, confidence, and participation.

In the proposed system, we normalize the voting weight of each token holder. The normalized voting weight $w_i$ for voter $i$ is calculated using the following formula.

$$w_i = \frac{t_i}{\sum_{j=1}^{n} t_j}$$

where $w_i$ is the normalized voting weight of token holder $i$, $t_i$ is the number of tokens held by voter $i$, and $n$ is the total number of voters. This ensures that the voting power of each token holder is proportional to the number of tokens they hold within the system, maintaining a fair distribution of voting rights.
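The normalization can be illustrated with a minimal Python sketch. It assumes the weight is each holder's token balance divided by the sum of all voters' balances; the function name, holder names, and balances are hypothetical.

```python
from typing import Dict


def normalized_voting_weights(balances: Dict[str, float]) -> Dict[str, float]:
    """Return each holder's share of the tokens held by all voters.

    Assumption: weight = holder balance / sum of all balances.
    """
    total = sum(balances.values())
    if total <= 0:
        raise ValueError("total token balance must be positive")
    return {holder: amount / total for holder, amount in balances.items()}


# Hypothetical token balances for three voters.
balances = {"alice": 600, "bob": 300, "carol": 100}
weights = normalized_voting_weights(balances)
```

Because the weights sum to one, they can be compared directly across DAOs with different token supplies.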

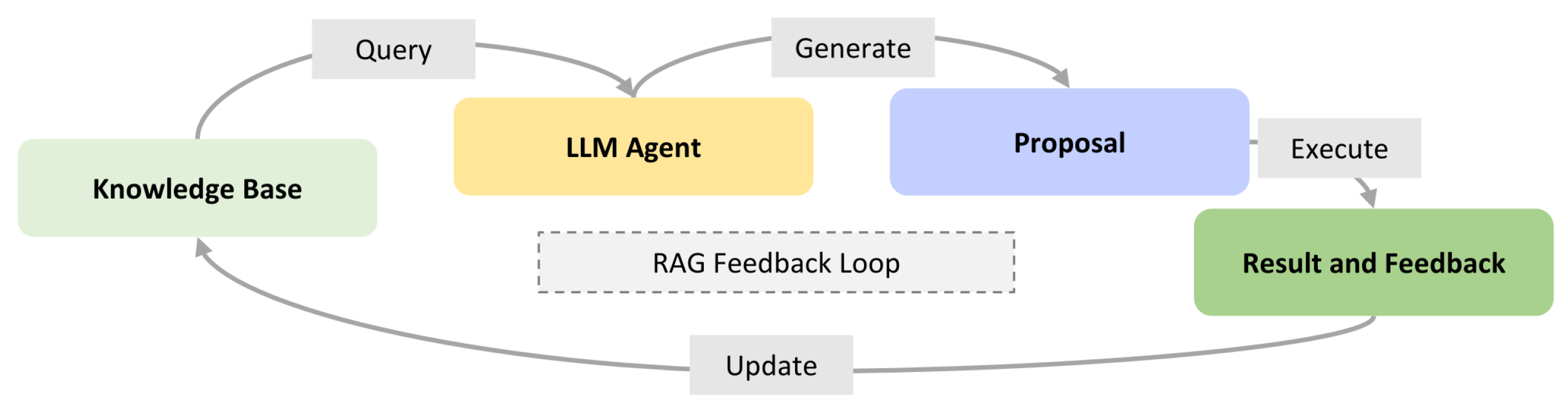

5.2. RAG Loop for Knowledge Base Enrichment

To ensure ongoing improvement and accuracy, APOLLO integrates a feedback loop using RAG, as shown in Figure 3. Contextual insights generated by the LLM-based Context-Generator Agent are continuously fed back into the knowledge base, enhancing context precision. Each submitted proposal, along with metadata and community feedback, enriches the knowledge base, creating a growing historical context of governance decisions and their outcomes. Additionally, post-execution results from governance actions performed by the governor smart contract are systematically stored and analyzed, facilitating continuous learning and iterative improvement of predictive and proposal-generation models.

In the Continuous RAG Loop, we first calculate the cosine similarity for retrieval. The cosine similarity between two vectors $\mathbf{A}$ and $\mathbf{B}$ is given by the following formula.

$$\cos(\mathbf{A}, \mathbf{B}) = \frac{\mathbf{A} \cdot \mathbf{B}}{\|\mathbf{A}\| \, \|\mathbf{B}\|}$$

where $\mathbf{A}$ and $\mathbf{B}$ are the vectors, and $\|\mathbf{A}\|$ and $\|\mathbf{B}\|$ are the magnitudes of the vectors. Next, we apply Reciprocal Rank Fusion (RRF) [29], which combines the results of multiple retrieval systems. The RRF for document $d$ is calculated as follows.

$$\mathrm{RRF}(d) = \sum_{s \in S} \frac{1}{k + r_s(d)}$$

where $r_s(d)$ is the rank of document $d$ in the list returned by retrieval system $s \in S$, and $k$ is a constant. This formula helps in combining ranked lists from multiple sources by applying diminishing returns to the ranks. Finally, we use mean pooling for embeddings. The mean pooling of the embeddings is calculated as the following equation:

$$\mathbf{e} = \frac{1}{n} \sum_{i=1}^{n} \mathbf{h}_i^{(L)}$$

where $\mathbf{h}_i^{(L)}$ represents the hidden state of token $i$ at layer $L$, and $n$ is the total number of tokens. This approach aggregates the token representations into a single vector, representing the overall context.
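The three operations of the loop (cosine similarity, RRF, and mean pooling) can be sketched in plain Python as follows. This is an illustrative sketch, not the system's implementation: the function names are hypothetical, and the default `k = 60` is a commonly used RRF constant assumed here because the text does not state a value.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of a and b divided by the product of their magnitudes."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> Dict[str, float]:
    """Sum 1 / (k + rank) over every ranked list in which a document appears.

    Ranks start at 1; k = 60 is an assumed default.
    """
    scores: Dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return scores


def mean_pool(hidden_states: Sequence[Sequence[float]]) -> List[float]:
    """Average the per-token hidden states into one fragment-level vector."""
    if not hidden_states:
        raise ValueError("at least one token is required")
    n = len(hidden_states)
    dim = len(hidden_states[0])
    return [sum(h[j] for h in hidden_states) / n for j in range(dim)]


# Two hypothetical ranked lists, e.g. one sparse and one dense retriever.
fused = reciprocal_rank_fusion([["a", "b", "c"], ["b", "c", "a"]])
ranking = sorted(fused, key=fused.get, reverse=True)
```

Documents that rank well in several lists accumulate the largest fused score, which is why `b` ends up ahead of `a` and `c` in this toy example.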

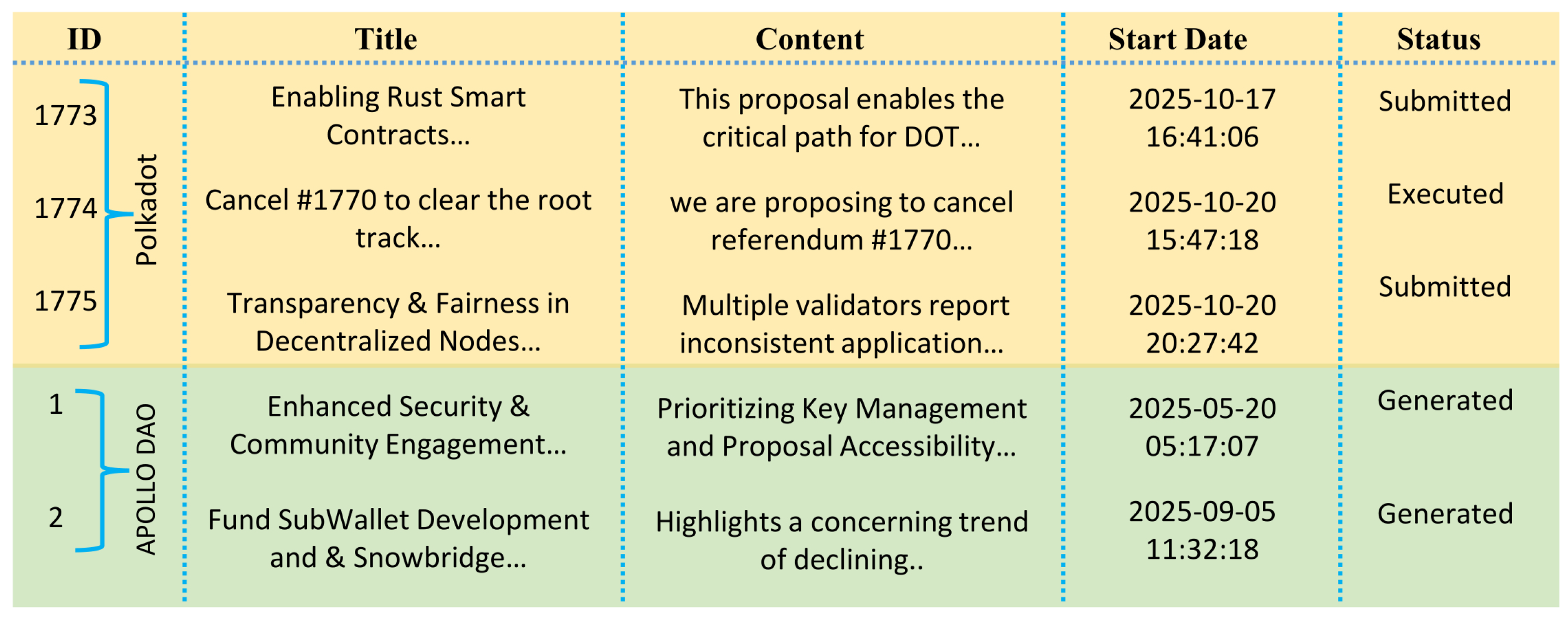

5.3. Proof of Concept (PoC) Implementation

The MVP has been implemented and validated across all layers. The data layer handles data efficiently, and the agents layer generates accurate proposals. The execution layer automates governance with a smart contract, and a continuous RAG loop improves decision-making. In the proposed system, we first normalize the embedding for each text chunk $c_i$, where each chunk consists of a maximum of 512 tokens. The normalized vector $\hat{\mathbf{v}}_i$ is computed using the following formula.

$$\hat{\mathbf{v}}_i = \frac{E(c_i)}{\|E(c_i)\|_2}$$

Here, $c_i$ represents the $i$-th text chunk, which is a sequence of tokens (words or subwords) with a maximum length of 512 tokens. Each chunk is a small portion of the input text, processed to maintain consistency and manageable size. $E(c_i)$ denotes the embedding vector generated by the MiniLM-L6 encoder for the text chunk $c_i$. This encoder transforms the raw text into a dense vector representation in a high-dimensional space. The term $\|E(c_i)\|_2$ represents the L2 norm (Euclidean norm) of the vector $E(c_i)$; dividing by it scales the embedding to unit length, ensuring a consistent scale for comparison. The normalized vector $\hat{\mathbf{v}}_i \in \mathbb{R}^{d}$, where $d$ is the encoder's output dimensionality, captures the meaning and context of the chunk.
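The chunking and unit-length normalization can be sketched as follows. The sketch assumes the text is already tokenized and uses a short toy vector in place of a real encoder output; `chunk_tokens` and `l2_normalize` are hypothetical helper names.

```python
import math
from typing import List, Sequence

MAX_TOKENS = 512  # maximum chunk length stated in the text


def chunk_tokens(tokens: Sequence[str], max_tokens: int = MAX_TOKENS) -> List[List[str]]:
    """Split a token sequence into consecutive chunks of at most max_tokens."""
    return [list(tokens[i:i + max_tokens]) for i in range(0, len(tokens), max_tokens)]


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit Euclidean length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        return [0.0 for _ in vec]
    return [x / norm for x in vec]


# 1100 placeholder tokens yield two full chunks and one shorter chunk.
chunks = chunk_tokens(["tok"] * 1100)
unit = l2_normalize([3.0, 4.0])  # toy stand-in for an encoder output
```

Once every stored vector has unit length, the cosine similarity between two vectors reduces to their dot product, which simplifies retrieval.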

For retrieval, we use cosine similarity in conjunction with IVFFlat (Inverted File with Flat Index) and HNSW (Hierarchical Navigable Small World) acceleration in Pinecone [30,31]. Empirically, recall@10 reaches 0.94 on a 5k-query benchmark, indicating that the top 10 retrieved results capture 94% of the relevant data. For any artifact $a$ (such as a tweet, transaction log, or proposal), we compute and store its embedding $\mathbf{e}_a$ as follows:

$$\mathbf{e}_a = f_\theta(\mathrm{clean}(a))$$

Here, $a$ represents the artifact, which is preprocessed using the function $\mathrm{clean}(\cdot)$. This preprocessing step strips URLs, tags, and hex blobs to clean the text before embedding. The encoder $f_\theta$ refers to the text embedding model, where the parameters $\theta$ are frozen after fine-tuning. The resulting embedding vector $\mathbf{e}_a \in \mathbb{R}^{d}$, where $d$ is the encoder's output dimensionality, represents the artifact in a high-dimensional space and captures rich semantic information.
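A possible shape for the preprocessing step is sketched below with regular expressions. The patterns are assumptions, since the text does not define them: "tags" is read as HTML tags, and a "hex blob" is taken to be a `0x`-prefixed run of at least 16 hexadecimal digits.

```python
import re

# Assumed patterns; the source does not specify the exact rules.
URL_RE = re.compile(r"https?://\S+")
TAG_RE = re.compile(r"<[^>]+>")                 # HTML-style tags
HEX_RE = re.compile(r"\b0x[0-9a-fA-F]{16,}\b")  # long hex strings such as tx hashes


def clean(text: str) -> str:
    """Strip URLs, tags, and hex blobs, then collapse runs of whitespace."""
    text = URL_RE.sub(" ", text)
    text = TAG_RE.sub(" ", text)
    text = HEX_RE.sub(" ", text)
    return " ".join(text.split())


# Hypothetical artifact: a forum post containing markup, a link, and a tx hash.
raw = "Vote <b>now</b> at https://dao.example/p/1 tx 0x" + "ab" * 32
cleaned = clean(raw)
```

Removing these spans before embedding keeps tokens with little semantic content from consuming the encoder's limited input window.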

For retrieval in the RAG process, we use cosine similarity to retrieve the top-$k$ chunks for RAG prompts. Additionally, for any tokenized text fragment $x = (x_1, \dots, x_n)$, where each $x_i$ corresponds to a token, the transformer encoder $f_\theta$ maps each token to a hidden state $\mathbf{h}_i^{(L)}$, which represents the model's learned features for each token. The final embedding $\mathbf{e}$ for the entire fragment is computed by mean-pooling the hidden states over the last layer $L$ of the transformer as follows.

$$\mathbf{e} = \frac{1}{n} \sum_{i=1}^{n} \mathbf{h}_i^{(L)}$$

The final embedding represents the tokenized fragment as a 1768-dimensional vector. For enhanced retrieval, cosine similarity is applied first, followed by Reciprocal Rank Fusion (RRF) to re-rank the top-200 hits. RRF fuses the ranking from sparse BM25, a classical retrieval model, with the ranking from dense embedding scores, improving retrieval quality. In offline tests, this technique improves the Hits@1 score by approximately 4%, so that more relevant and contextually grounded information is retrieved.
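Top-k retrieval by cosine similarity can be sketched as follows. The sketch assumes all stored vectors and the query are already unit length, so the dot product equals the cosine similarity; the in-memory dictionary stands in for the vector database, and the chunk identifiers are hypothetical.

```python
import heapq
from typing import Dict, List, Sequence, Tuple


def top_k(query: Sequence[float], index: Dict[str, Sequence[float]], k: int) -> List[Tuple[str, float]]:
    """Return the k chunk IDs with the highest dot product against the query.

    Assumes unit-length vectors, so the dot product is the cosine similarity.
    """
    scored = (
        (sum(q * v for q, v in zip(query, vec)), chunk_id)
        for chunk_id, vec in index.items()
    )
    best = heapq.nlargest(k, scored)
    return [(chunk_id, score) for score, chunk_id in best]


# Toy index of three unit vectors standing in for chunk embeddings.
index = {"c1": [1.0, 0.0], "c2": [0.0, 1.0], "c3": [0.6, 0.8]}
hits = top_k([1.0, 0.0], index, k=2)
```

An exhaustive scan like this is only practical for small indexes; approximate indexes such as HNSW trade a small loss in recall for much faster lookups.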

Data Collection and Normalization: Algorithm 1 outlines the process of collecting and normalizing on-chain and off-chain data. First, blockchain RPC events are polled to capture on-chain data (RAW_ON), followed by scraping forum and social media HTML, as well as gathering off-chain API feeds (RAW_OFF). The collected data is then combined and normalized to create a canonical governance dataset (NORM_DS). Next, the current block timestamp (t) is captured to record when the data was ingested. A timestamped log of the data sources (SRC_LOG) is stored, which logs the source IDs for both on-chain and off-chain data. Finally, the normalized governance dataset (NORM_DS) is returned, ready for further processing and analysis.

| Algorithm 1 Data ingestion and normalization. |
| --- |
| Inputs: RAW_ON: Blockchain RPC events; RAW_OFF: Forum & social-media HTML, Off-chain API feeds. |
| Outputs: NORM_DS: Canonical dataset (JSON-Lines); SRC_LOG: Timestamped log of data sources, Error/latency metrics. |
| procedure Data_Layer_Ingest |
| Step 1: Poll blockchain RPC events: |
| RAW_ON ← PollRPC() |
| Step 2: Scrape forum and gather off-chain feeds: |
| RAW_OFF ← ScrapeFeeds() |
| Step 3: Normalize on-chain and off-chain data: |
| NORM_DS ← Normalize(RAW_ON ∪ RAW_OFF) |
| Step 4: Capture current block timestamp: |
| t ← block.timestamp |
| Step 5: Store timestamped log of sources: |
| SRC_LOG[t] ← {sources: ids(RAW_ON, RAW_OFF)} |
| Step 6: Return normalized dataset: |
| return NORM_DS |
| end procedure |
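The steps of Algorithm 1 can be sketched in Python as shown below. This is a hedged illustration rather than the system's code: the `poll_rpc` and `scrape_feeds` callables are hypothetical stand-ins for the Web3.py and BeautifulSoup collectors, the record fields (`id`, `text`) are assumed, and a caller-supplied clock replaces `block.timestamp`. Unlike the algorithm, the function also returns SRC_LOG so the example is easy to inspect.

```python
import json
import time
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]


def normalize(record: Record, origin: str) -> Record:
    """Map a raw record to an assumed canonical shape: id, origin, text."""
    return {
        "id": str(record["id"]),
        "origin": origin,
        "text": str(record.get("text", "")).strip(),
    }


def data_layer_ingest(
    poll_rpc: Callable[[], List[Record]],
    scrape_feeds: Callable[[], List[Record]],
    now: Callable[[], float] = time.time,
) -> Tuple[List[str], Dict[float, Dict[str, List[str]]]]:
    raw_on = poll_rpc()        # Step 1: on-chain events
    raw_off = scrape_feeds()   # Step 2: forum, social media, API feeds
    # Step 3: one JSON Lines entry per normalized record
    norm_ds = [json.dumps(normalize(r, "on-chain"), sort_keys=True) for r in raw_on]
    norm_ds += [json.dumps(normalize(r, "off-chain"), sort_keys=True) for r in raw_off]
    t = now()                  # Step 4: ingestion timestamp
    # Step 5: log which sources contributed at time t
    src_log = {t: {"sources": [str(r["id"]) for r in raw_on + raw_off]}}
    return norm_ds, src_log    # Step 6


# Usage with hypothetical stub collectors and a fixed clock.
norm_ds, src_log = data_layer_ingest(
    lambda: [{"id": "tx1", "text": " Transfer "}],
    lambda: [{"id": "post9", "text": "Raise quorum?"}],
    now=lambda: 1700000000,
)
```

Passing the collectors and the clock as parameters keeps the function deterministic under test and lets real RPC or scraping clients be swapped in later.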

Contextual Analysis and Prediction: Algorithm 2 processes raw data into structured context windows, enabling the extraction of insights. It calculates community sentiment and predicts voter behavior for the next 24 h, supporting informed decision-making. It derives sentiment scores from the normalized governance dataset (NORM_DS), builds a context packet (CTX_PKT), and predicts voter turnout (PRED). Using this context, the system generates a draft proposal (DRAFT) via a large language model (LLM), signs it with the agent's wallet (SIG), and returns the signed draft for community discussion and on-chain voting.

| Algorithm 2 Agents layer pipeline for governance proposal generation. |
| --- |
| Inputs: Normalized governance dataset (NORM_DS) |
| Outputs: CTX_PKT (≤ 8 kB), Historical turnout archive, Vector-DB embeddings, Sentiment scores, Forecast (turnout and sentiment-drift) |
| procedure Agents_Layer_Pipeline |
| Step 1: Calculate sentiment scores: |
| sentiment ← SentimentScore(NORM_DS) |
| Step 2: Construct context packet (CTX_PKT) using sentiment: |
| CTX_PKT ← ContextBuild(NORM_DS, sentiment) |
| Step 3: Predict voter turnout: |
| PRED ← PredictTurnout(CTX_PKT) |
| Step 4: Generate draft proposal (DRAFT): |
| DRAFT ← LLM_Draft(CTX_PKT, PRED) |
| Step 5: Sign the draft proposal (DRAFT): |
| SIG ← Wallet.sign(DRAFT.calldata) |
| Step 6: Return draft proposal and signature: |
| return (DRAFT, SIG) |
| end procedure |
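The control flow of Algorithm 2 can be sketched as a small Python pipeline. Everything model-specific is an assumption: the word lexicon is a toy stand-in for the Sentiment-Analysis Agent, the predictor and LLM are injected as hypothetical callables, and a SHA-256 digest is used only as a placeholder for the wallet signature (it is not a cryptographic signature scheme).

```python
import hashlib
import json
from typing import Callable, List, Tuple

MAX_CTX_BYTES = 8 * 1024  # CTX_PKT size cap (<= 8 kB) from Algorithm 2

# Toy lexicon, assumed for illustration only.
POSITIVE = {"support", "agree", "good"}
NEGATIVE = {"oppose", "against", "bad"}


def sentiment_score(texts: List[str]) -> float:
    """Return (positive - negative) / matched words, in the range [-1, 1]."""
    pos = neg = 0
    for text in texts:
        for word in text.lower().split():
            pos += word in POSITIVE
            neg += word in NEGATIVE
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total


def context_build(texts: List[str], sentiment: float) -> bytes:
    """Serialize the context packet, dropping trailing texts until it fits the cap."""
    kept = list(texts)
    while True:
        packet = json.dumps({"sentiment": sentiment, "texts": kept}).encode("utf-8")
        if len(packet) <= MAX_CTX_BYTES or not kept:
            return packet
        kept.pop()


def agents_layer_pipeline(
    norm_ds: List[str],
    predict: Callable[[bytes], float],
    llm_draft: Callable[[bytes, float], str],
    sign: Callable[[bytes], str],
) -> Tuple[str, str]:
    sentiment = sentiment_score(norm_ds)          # Step 1
    ctx_pkt = context_build(norm_ds, sentiment)   # Step 2
    pred = predict(ctx_pkt)                       # Step 3
    draft = llm_draft(ctx_pkt, pred)              # Step 4
    sig = sign(draft.encode("utf-8"))             # Step 5
    return draft, sig                             # Step 6


# Usage with hypothetical stand-ins for the predictor, LLM, and wallet.
texts = ["I support this", "I oppose this", "good idea"]
draft, sig = agents_layer_pipeline(
    texts,
    predict=lambda ctx: 0.5,
    llm_draft=lambda ctx, pred: f"Proposal (expected turnout {pred:.0%})",
    sign=lambda payload: hashlib.sha256(payload).hexdigest(),
)
```

Injecting the predictor, LLM, and signer keeps each agent replaceable without changing the order of the six steps.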

Proposal Submission, Tracking, and Execution Process: Algorithm 3 outlines the process of submitting and executing a proposal in a decentralized governance system. The draft proposal (DRAFT) is first posted for community feedback, then signed (SIG) and sent to the governor for approval. After the voting period ends, the algorithm checks if the vote threshold is met. If successful, the proposal is executed, and an execution receipt (RECEIPT) is generated. If the threshold is not met or the proposal expires, the receipt is marked as “Failed or Expired”. The final status of the proposal is returned through the execution receipt (RECEIPT).

| Algorithm 3 Proposal submission and execution. |
| --- |
| Inputs: Context packet, Prediction report, DAO parameters |
| Outputs: Draft markdown, EIP-712 calldata, Governor propose() transaction hash |
| procedure Execution_Layer_ProposeExecute |
| Step 1: Post proposal draft for discussion: |
| PostForum(DRAFT.markdown) |
| Step 2: Submit signed draft to governor: |
| TX_HASH ← Governor.propose(SIG) |
| Step 3: Retrieve proposal ID: |
| PID ← GetProposalID(TX_HASH) |
| Step 4: Wait until voting deadline: |
| Wait Until(block.timestamp > deadline(PID)) |
| Step 5: Check if proposal meets vote threshold: |
| if forVotes(PID) ≥ threshold then |
| Step 6: Execute proposal: |
| RECEIPT ← Governor.execute(PID) |
| else |
| Step 7: Mark proposal as failed: |
| RECEIPT.status ← “Failed/Expired” |
| end if |
| Step 8: Return execution receipt: |
| return RECEIPT |
| end procedure |
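The propose, wait, and execute logic of Algorithm 3 can be sketched against an in-memory mock. `MockGovernor` is a hypothetical stand-in for the governor smart contract, not its real interface: it skips the transaction hash and returns the proposal ID directly, and it models time as a plain integer instead of `block.timestamp`.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Proposal:
    deadline: int
    for_votes: int = 0
    executed: bool = False


@dataclass
class MockGovernor:
    """In-memory stand-in for the governor contract (assumed interface)."""
    threshold: int
    voting_period: int
    now: int = 0
    proposals: Dict[int, Proposal] = field(default_factory=dict)

    def propose(self, sig: str) -> int:
        pid = len(self.proposals) + 1
        self.proposals[pid] = Proposal(deadline=self.now + self.voting_period)
        return pid

    def vote_for(self, pid: int, weight: int) -> None:
        if self.now > self.proposals[pid].deadline:
            raise RuntimeError("voting period has ended")
        self.proposals[pid].for_votes += weight

    def execute(self, pid: int) -> Dict[str, object]:
        self.proposals[pid].executed = True
        return {"proposal_id": pid, "status": "Executed"}


def finalize(governor: MockGovernor, pid: int) -> Dict[str, object]:
    """Steps 4 to 8: after the deadline, execute if the threshold is met."""
    proposal = governor.proposals[pid]
    if governor.now <= proposal.deadline:
        raise RuntimeError("voting period still open")
    if proposal.for_votes >= governor.threshold:
        return governor.execute(pid)
    return {"proposal_id": pid, "status": "Failed/Expired"}


# Usage: one proposal passes the threshold, a second one does not.
gov = MockGovernor(threshold=100, voting_period=10)
pid_ok = gov.propose("sig-1")
gov.vote_for(pid_ok, 120)
gov.now = 11
receipt_ok = finalize(gov, pid_ok)

pid_fail = gov.propose("sig-2")
gov.vote_for(pid_fail, 40)
gov.now = 22
receipt_fail = finalize(gov, pid_fail)
```

A production agent would poll the chain for the deadline rather than raise while voting is open; the exception here only makes the ordering constraint explicit.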

Knowledge Base Update and Enrichment Process: Algorithm 4 describes how the APOLLO system updates its knowledge base (KB). It combines the context packet (CTX_PKT), draft proposal (DRAFT), and execution receipt (RECEIPT) into a single record (REC), then generates embeddings (EMB) for structured storage. These embeddings are added to the knowledge base (KB), and retrieval metrics are recalculated for accuracy. The updated KB is returned, enriched for future use in governance decisions and predictions.

| Algorithm 4 RAG_Loop_Update (REC, EMB, KB). |
| --- |
| Inputs: Proposal-ID / Tx hash, Execution receipt, Community feedback |
| Outputs: Indexed embeddings, Updated retrieval metrics |
| procedure RAG_Loop_Update |
| Step 1: Combine context packet, proposal, and receipt: |
| REC ← {CTX_PKT, DRAFT, RECEIPT} |
| Step 2: Generate embeddings for the record: |
| EMB ← Embed(REC) |
| Step 3: Append embeddings to the knowledge base: |
| AppendVectorDB(KB, EMB) |
| Step 4: Recalculate retrieval metrics: |
| RecalculateRetrievalMetrics(KB) |
| Step 5: Return updated knowledge base: |
| return KB |
| end procedure |
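Algorithm 4 can be sketched as an append to an in-memory vector store followed by a recall@k check. The dictionary-based knowledge base, the injected `embed` callable, and the choice of recall@k as the recalculated retrieval metric are all assumptions made for illustration.

```python
import json
from typing import Callable, Dict, List, Sequence, Set, Tuple

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def rag_loop_update(
    kb: Dict[str, Vector],
    rec_id: str,
    ctx_pkt: str,
    draft: str,
    receipt: str,
    embed: Callable[[str], Vector],
) -> Dict[str, Vector]:
    # Step 1: combine context packet, draft, and receipt into one record
    rec = json.dumps({"ctx": ctx_pkt, "draft": draft, "receipt": receipt}, sort_keys=True)
    # Steps 2 and 3: embed the record and append it to the knowledge base
    kb[rec_id] = embed(rec)
    return kb  # Step 5 (metrics are recalculated separately below)


def recall_at_k(kb: Dict[str, Vector], queries: List[Tuple[Vector, Set[str]]], k: int) -> float:
    """Step 4: fraction of relevant records found in the top-k results."""
    hits = total = 0
    for query_vec, relevant in queries:
        ranked = sorted(kb, key=lambda doc_id: dot(query_vec, kb[doc_id]), reverse=True)[:k]
        hits += len(relevant & set(ranked))
        total += len(relevant)
    return hits / total if total else 0.0


# Usage with a toy embedding function and one evaluation query.
kb: Dict[str, Vector] = {"p1": [1.0, 0.0]}
queries = [([0.0, 1.0], {"p2"})]
before = recall_at_k(kb, queries, k=1)
rag_loop_update(kb, "p2", "ctx", "draft text", "Executed", embed=lambda text: [0.0, 1.0])
after = recall_at_k(kb, queries, k=1)
```

Recomputing the metric after each append gives a simple signal of whether newly stored records are actually retrievable for the queries that should surface them.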