Integrating AI in Public Governance: A Systematic Review

Abstract

1. Introduction

2. Background and Theoretical Framework

2.1. Theoretical Framework: TAM, DEG, Dynamic Capabilities, and Delphi

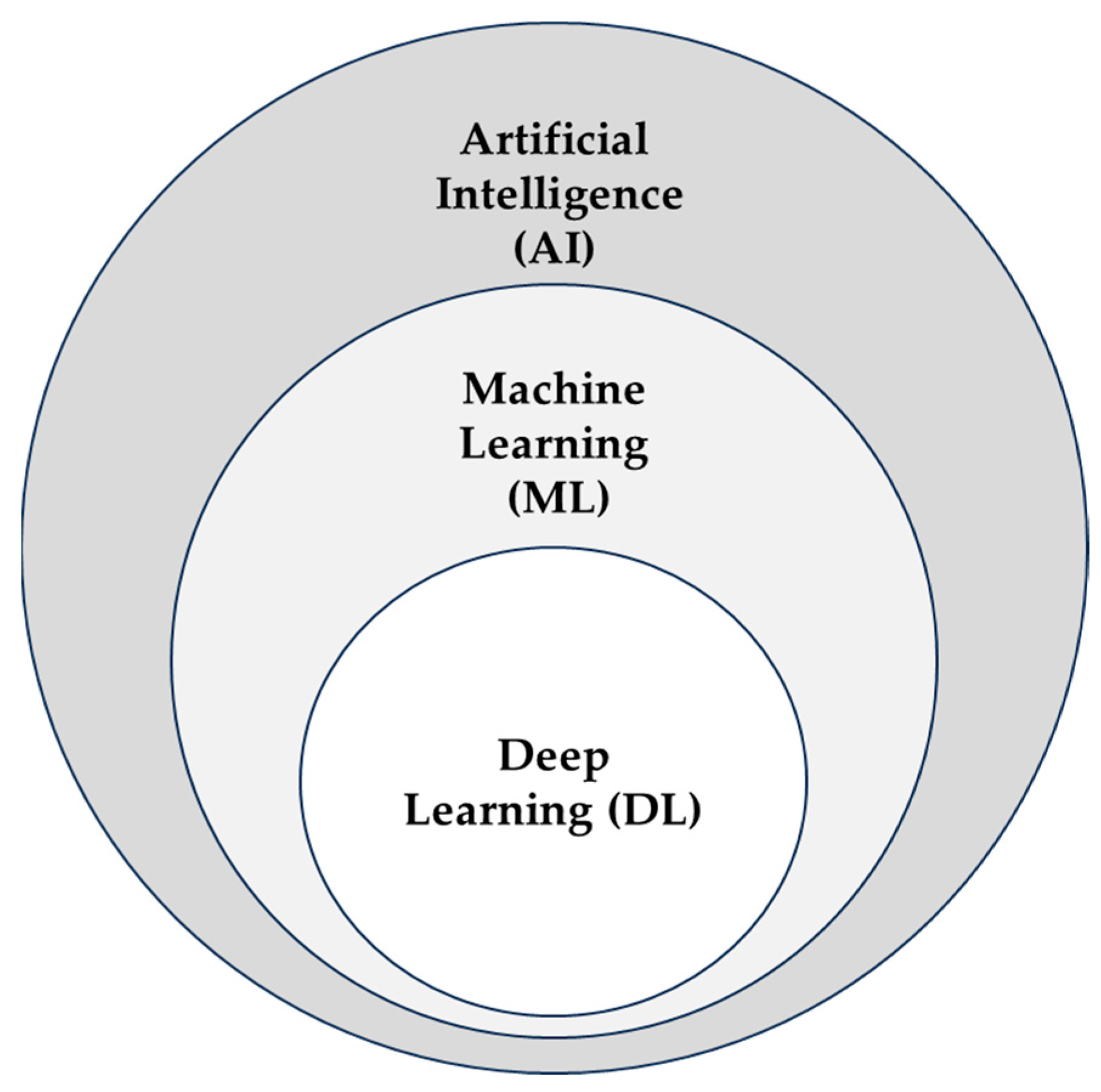

2.2. Core AI Technologies in Public Governance

- Machine learning (ML) enables systems to identify patterns in large datasets and make predictions without being explicitly programmed [18]. In administrative domains, it supports fraud detection, policy forecasting, and automated classification [19], with applications ranging from fraud detection in finance to predictive analytics in governance [20].

- DL, a specialized branch of ML, uses artificial neural networks with multiple layers, loosely inspired by the structure of the human brain [21]. It excels at processing unstructured data such as images and text, which makes it central to applications such as facial recognition, autonomous vehicle navigation, and healthcare diagnostics.

- Generative AI refers to models designed to generate new content by learning the patterns and structures of existing data. These models can create text, images, music, and other media, often indistinguishable from human-created outputs [22].

- Large language models (LLMs) are built on transformer architectures, ranging from encoder models such as BERT to generative models such as GPT, and can process, summarize, and generate language fluently. Their applications span public administration, education, and legal analysis, where they provide advanced tools for communication and document management [23].
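The idea that ML identifies patterns "without being explicitly programmed" can be made concrete with a minimal sketch. The Python example below uses a nearest-centroid classifier, one of the simplest supervised learning methods, to flag hypothetical benefit claims for human review. The features, toy records, and function names are illustrative assumptions and are not drawn from the reviewed studies; no fraud rule is hand-coded, and the decision comes entirely from the labeled examples.

```python
from math import dist


def fit_centroids(X: list[list[float]], y: list[int]) -> dict[int, list[float]]:
    """Learn one mean feature vector (centroid) per class from labeled examples."""
    sums: dict[int, list[float]] = {}
    counts: dict[int, int] = {}
    for features, label in zip(X, y):
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        # Accumulate feature values per class
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids: dict[int, list[float]], features: list[float]) -> int:
    """Return the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


if __name__ == "__main__":
    # Hypothetical toy records: [claim amount scaled to 0-1, claims filed last month].
    # Label 1 = previously confirmed as fraudulent, 0 = legitimate (illustrative only).
    X = [[0.10, 1.0], [0.20, 0.0], [0.15, 1.0], [0.90, 6.0], [0.80, 5.0]]
    y = [0, 0, 0, 1, 1]
    model = fit_centroids(X, y)
    new_claim = [0.85, 4.0]
    print("flag for review" if predict(model, new_claim) == 1 else "no flag")
```

Because the two features are on different scales, the claim count dominates the distance in this toy setting; a realistic system would standardize features, use richer models, and keep a human reviewer in the loop for flagged cases.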

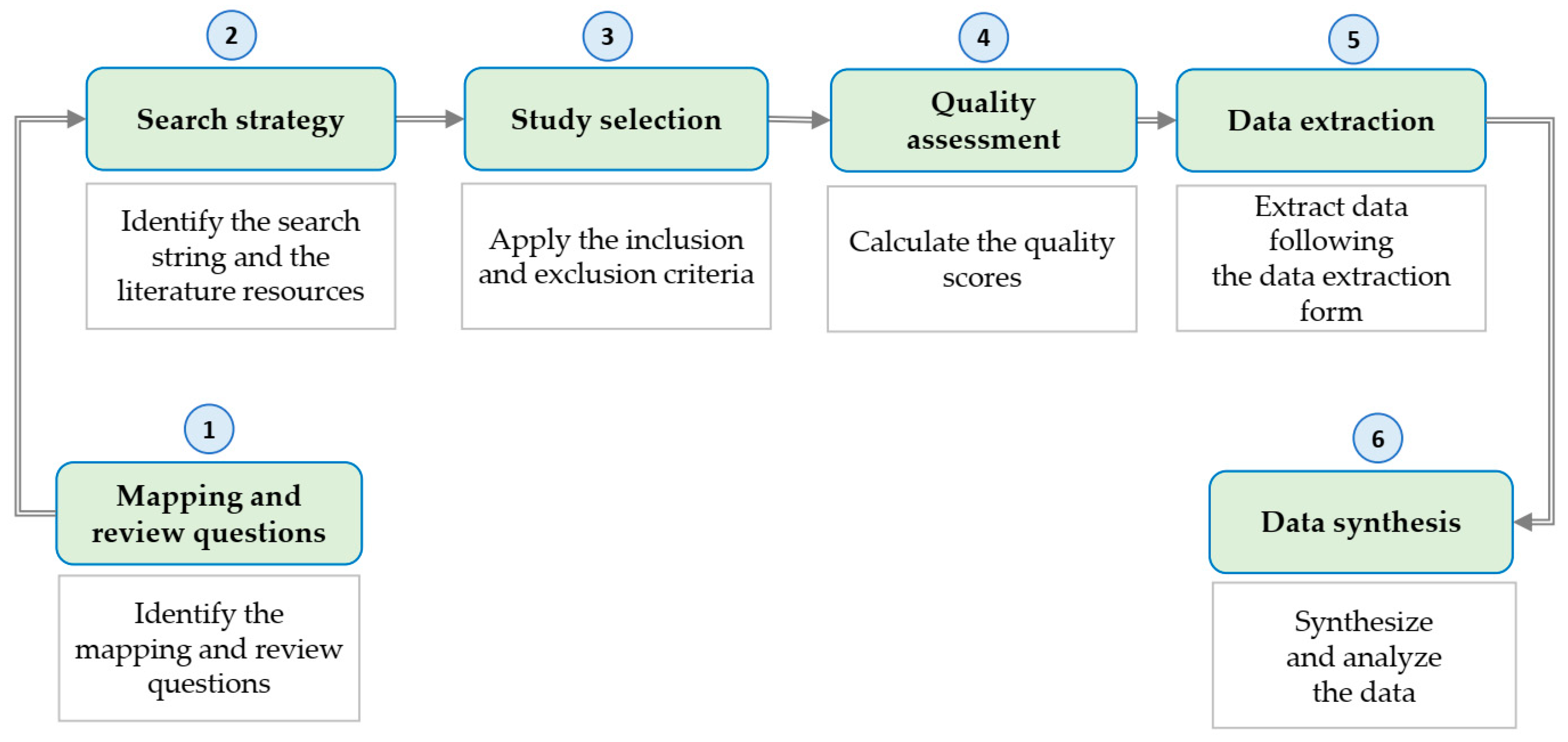

3. Systematic Literature Review Methodology

3.1. Mapping and Research Questions

- MQ1: What are the publication trends, thematic areas, and key sources in AI-related governance research?

- MQ2: What types of contributions are presented in the selected studies?

- MQ3: What research methodologies are used in the studies?

- MQ4: In which governance sectors and institutional settings is AI being studied?

- RQ1: How does AI improve efficiency and automation in governance decision-making?

- RQ2: What are AI integration’s main challenges, risks, and opportunities?

- RQ3: How do institutional stakeholders such as policymakers, administrators, legal experts, and citizens perceive AI’s impact on governance processes?

- RQ4: How does AI reshape institutional autonomy and the balance between automation and human oversight?

- RQ5: What governance models and international best practices exist to support ethical and responsible AI adoption?

3.2. Search Strategy

3.2.1. Search String

3.2.2. Search Process

3.3. Study Selection

3.4. Quality Assessment

3.5. Data Extraction Strategy and Synthesis

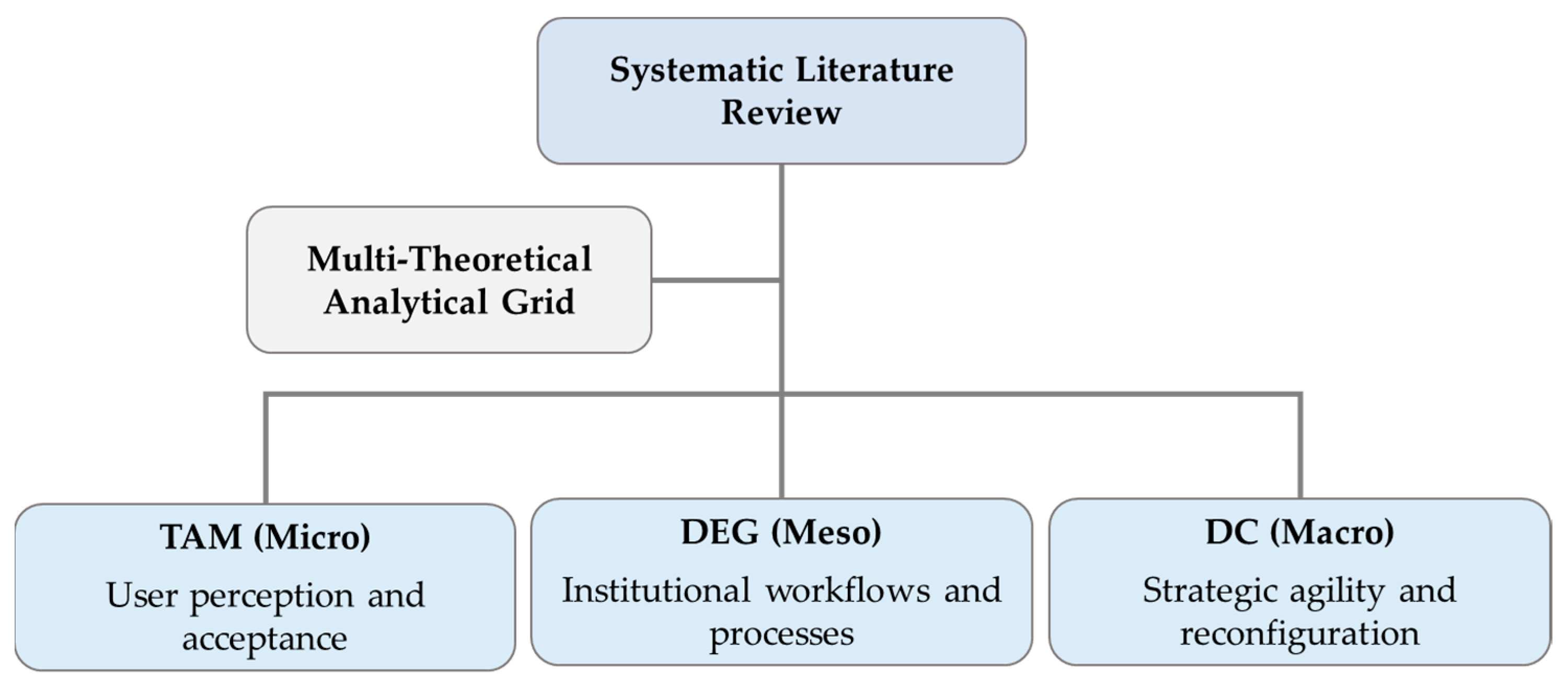

3.6. Theoretical Frameworks

- At the individual level, TAM helps assess how civil servants interact with and accept AI systems, particularly with regard to perceived usefulness and perceived ease of use.

- At the organizational level, DEG captures how public agencies evolve in structure, processes, and service delivery in response to AI technologies.

- At the institutional level, DC theory examines how public institutions develop the capacity to sense digital opportunities, seize them strategically, and reconfigure internal resources in response to change.

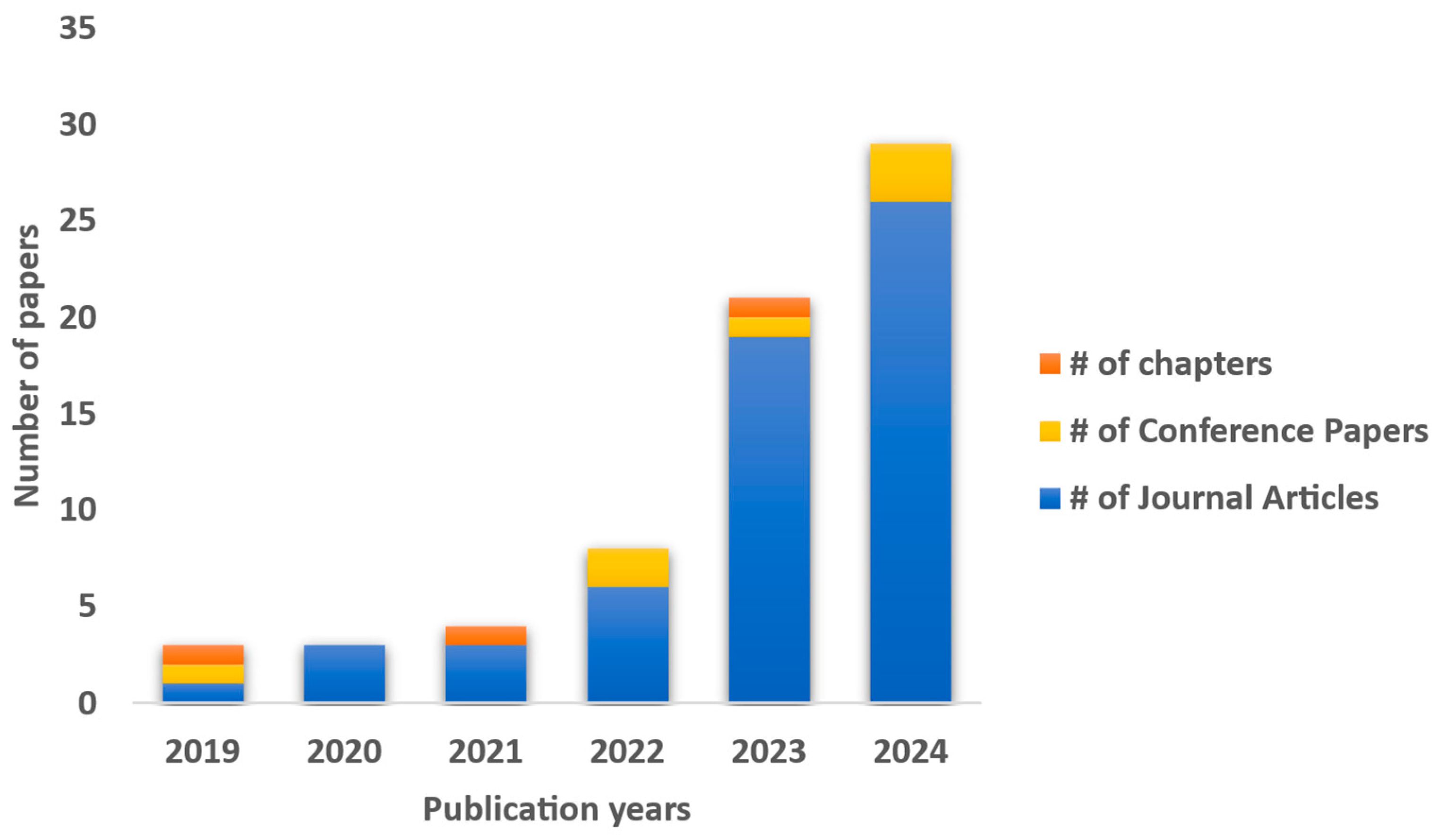

4. Statistical Trends in AI-Driven Governance Research

4.1. Data Sources and Selection

4.2. Statistical Trends

4.3. Contribution Types

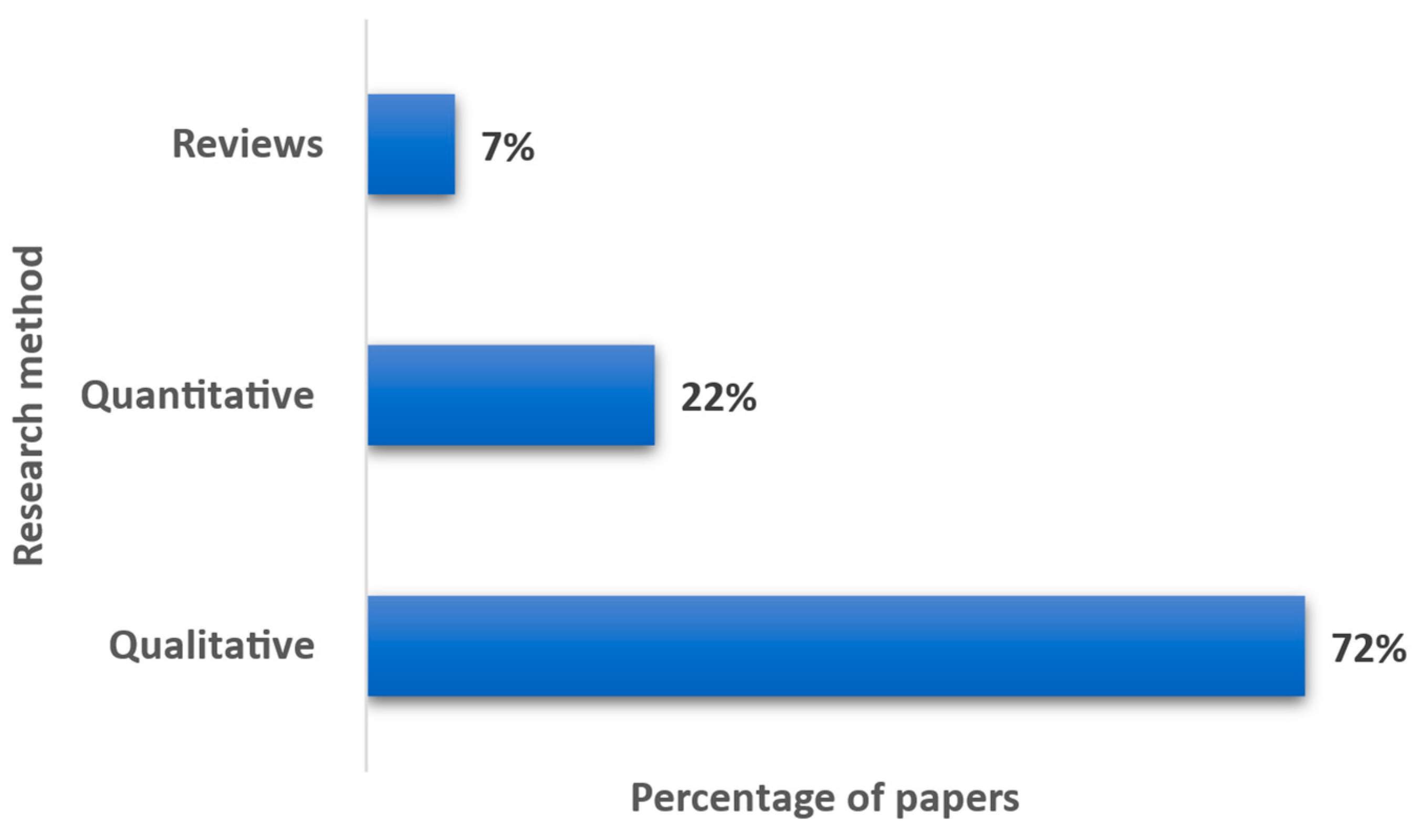

4.4. Research Methods

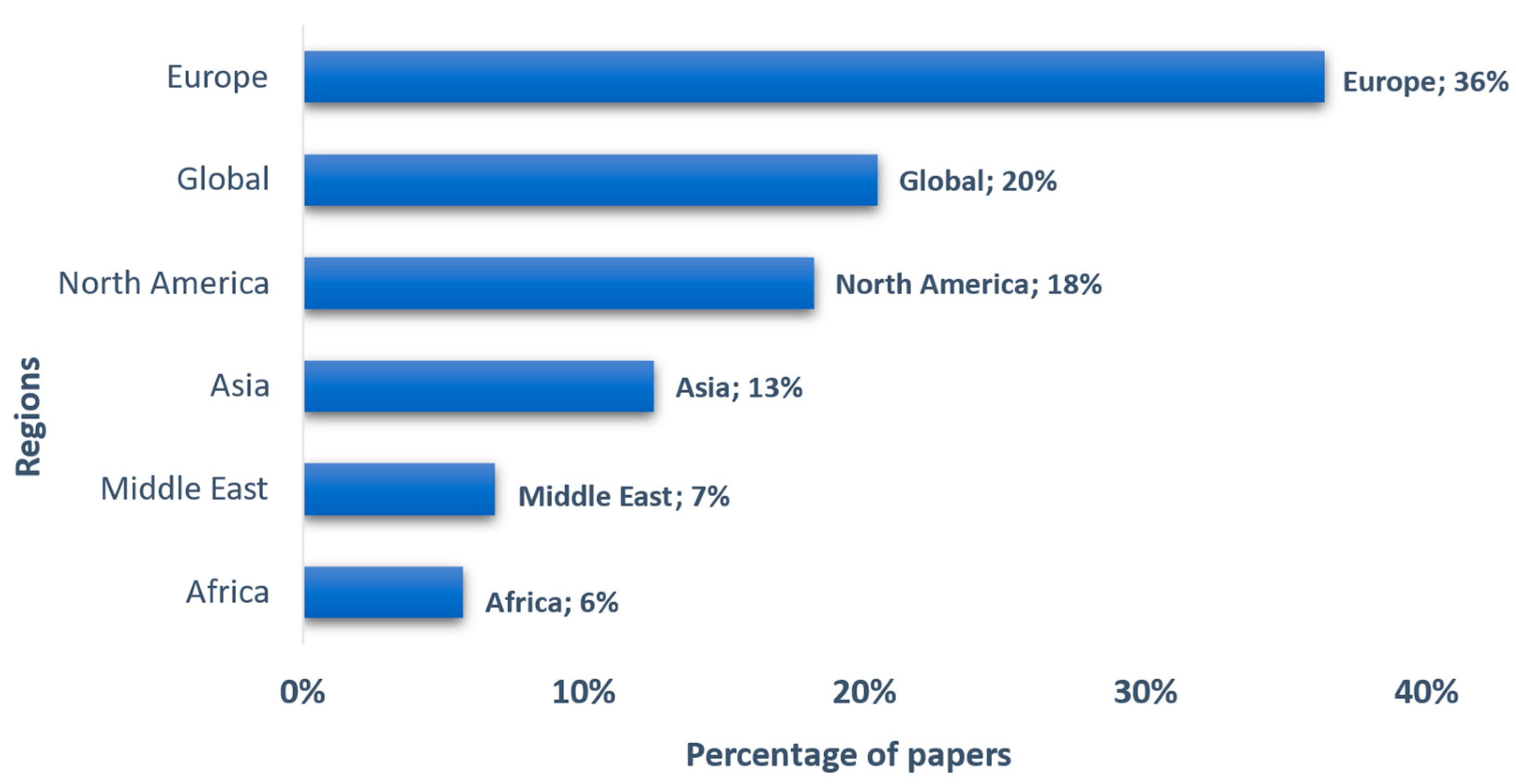

4.5. Geographic Distribution

5. Results and Discussion

5.1. RQ1: How Does AI Improve Efficiency and Automation in Governance Decision-Making?

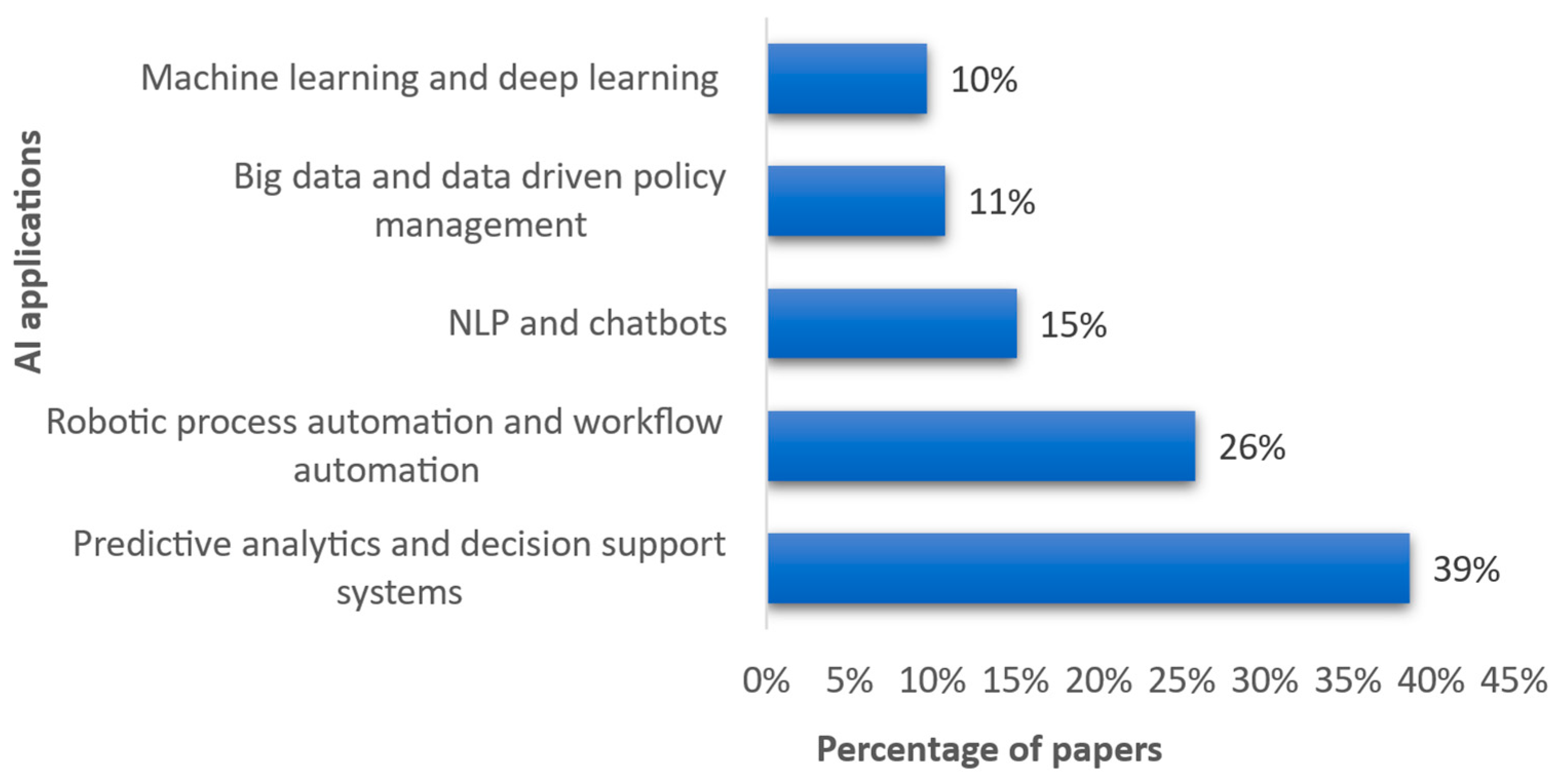

5.1.1. Areas of Application

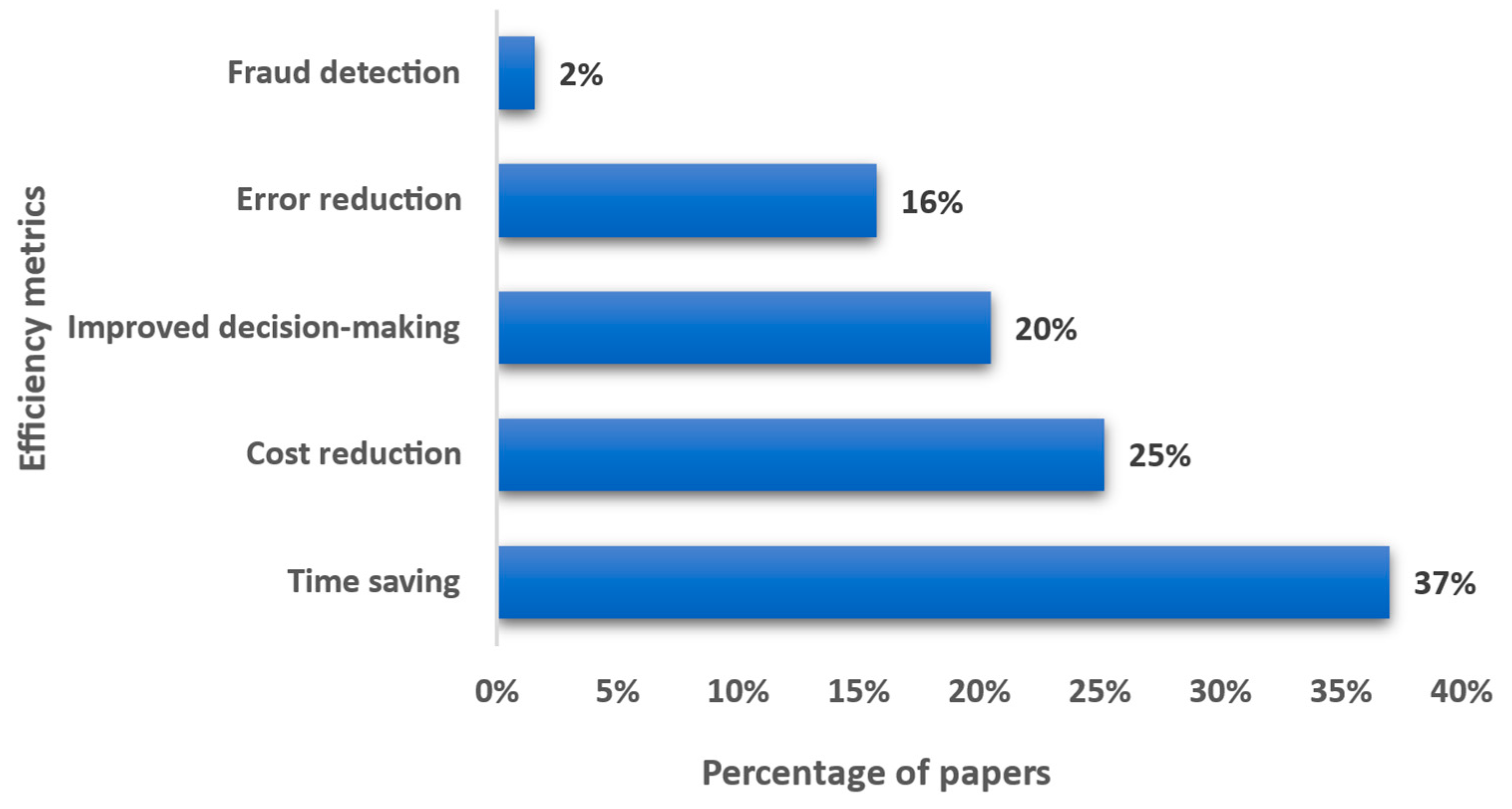

5.1.2. Efficiency Metrics

5.2. RQ2: What Are AI Integration’s Main Challenges, Risks, and Opportunities?

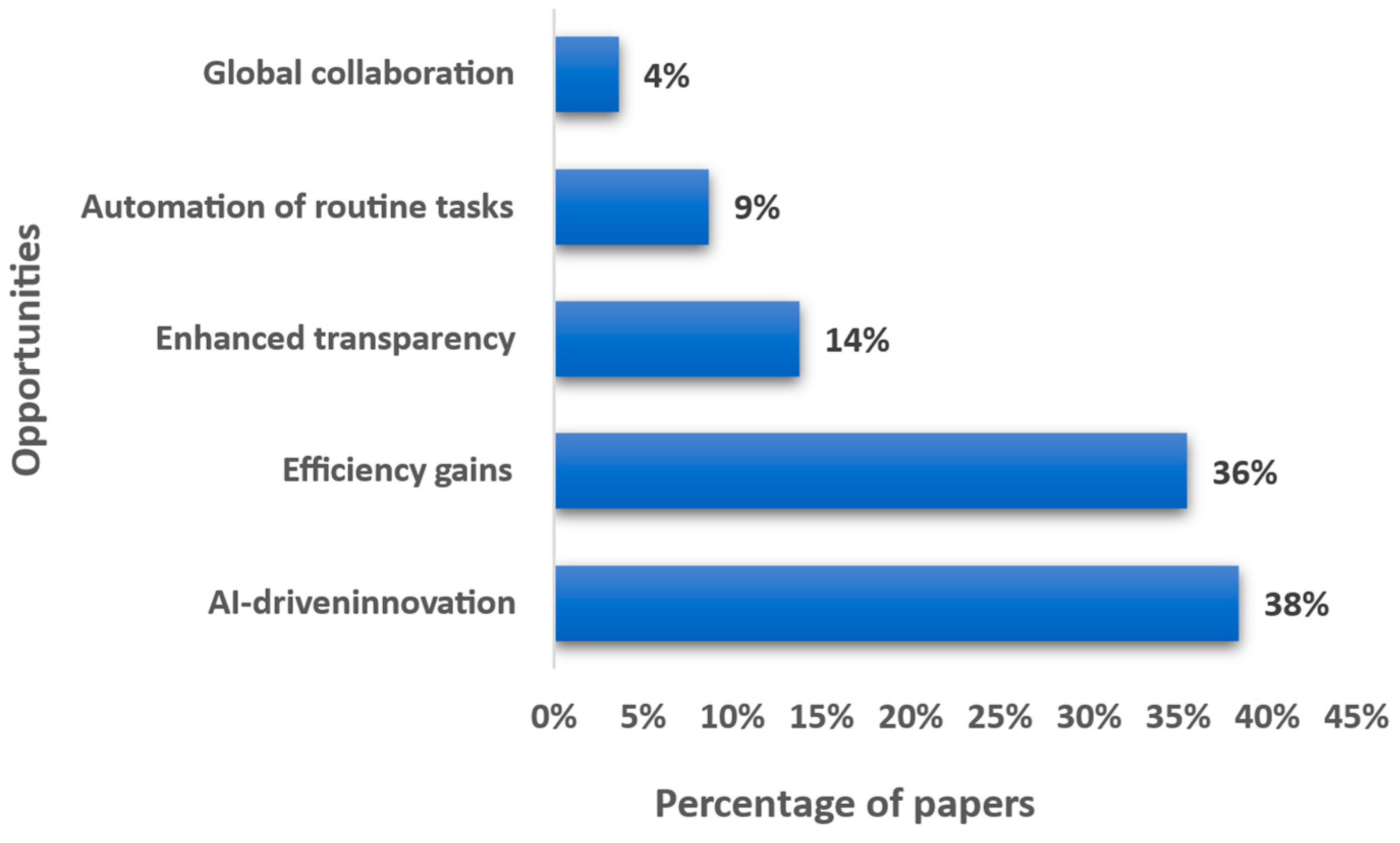

5.2.1. Opportunities for Governance

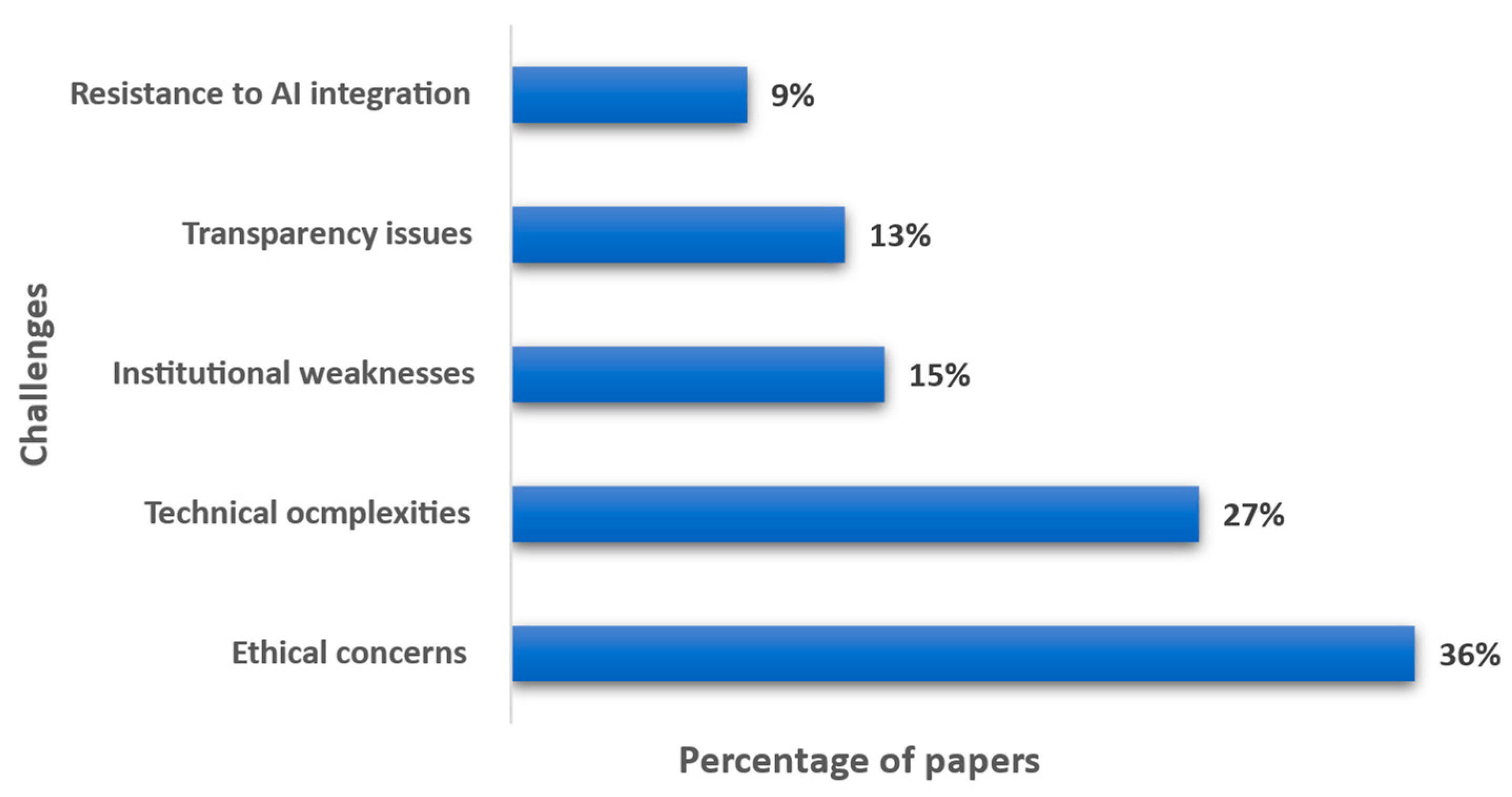

5.2.2. Key Challenges

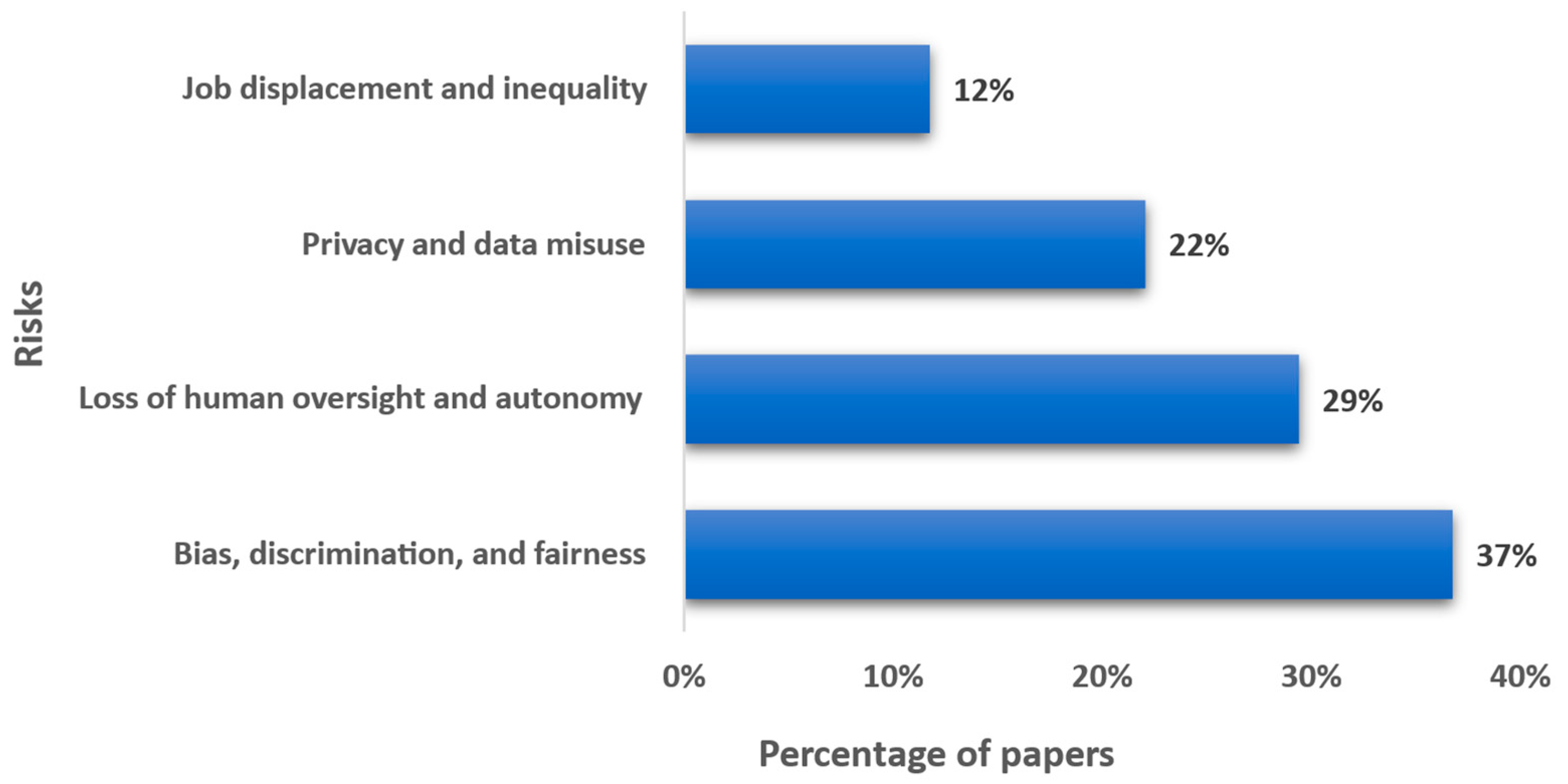

5.2.3. Risks

5.3. RQ3: How Do Institutional Stakeholders Perceive AI’s Impact on Governance Processes?

5.3.1. Key Stakeholders and Roles

5.3.2. Stakeholder Priorities and Concerns

5.4. RQ4: How Does AI Reshape Institutional Autonomy and the Balance Between Automation and Human Oversight?

5.4.1. Risks of Over-Reliance on AI

5.4.2. Balance Between AI and Human Decisions

5.5. RQ5: What Governance Models and International Frameworks Support Responsible AI Decision-Making?

5.5.1. Key Regulatory Frameworks for AI Governance and Ethical Compliance

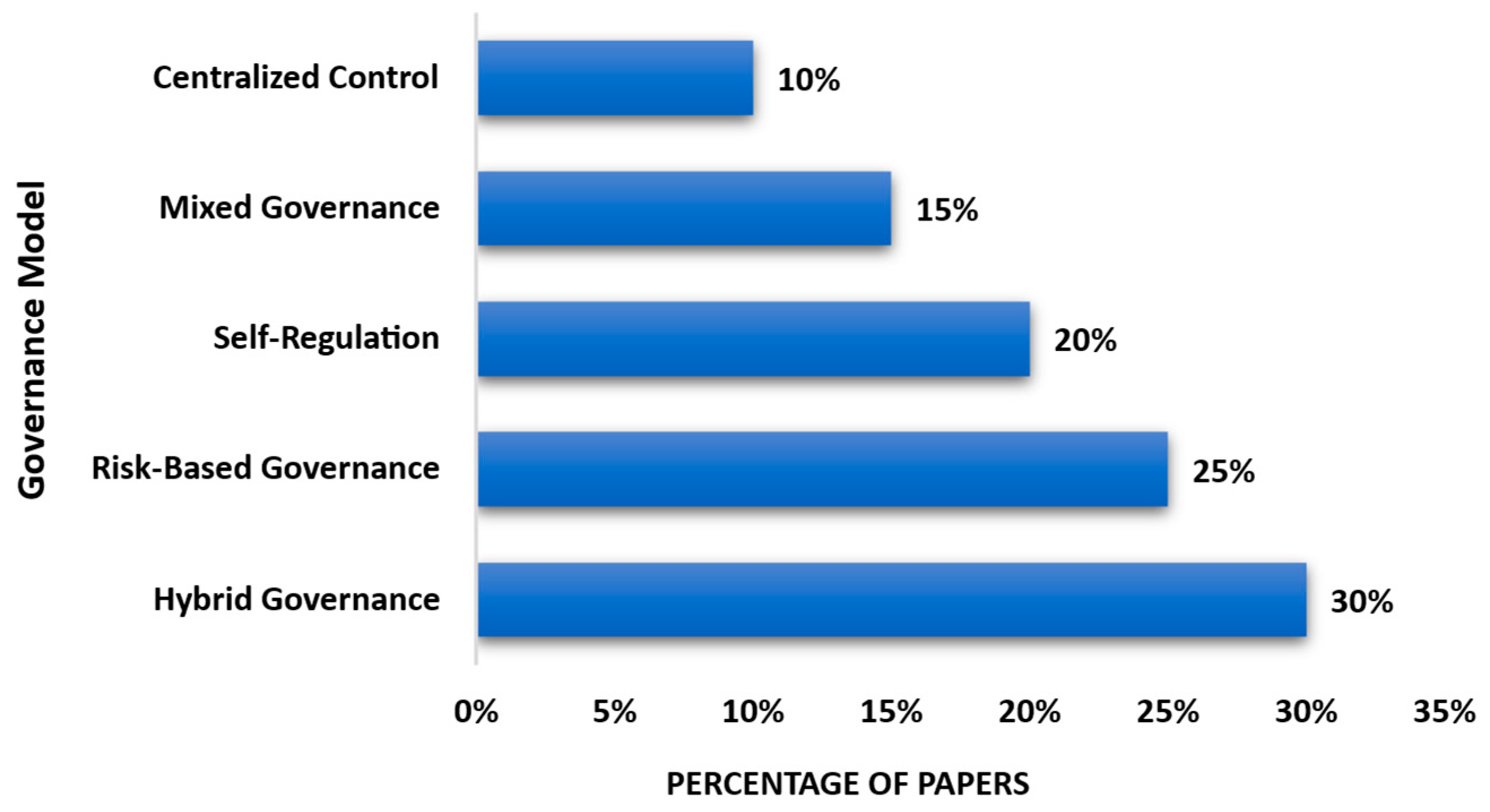

5.5.2. Governance Models for Responsible AI Decision-Making

6. Empirical Findings and Thematic Synthesis

6.1. Administrative Efficiency and Service Delivery

6.2. Decision-Making and Policy Formulation

6.3. Transparency and Accountability

6.4. Discussion and Synthesis

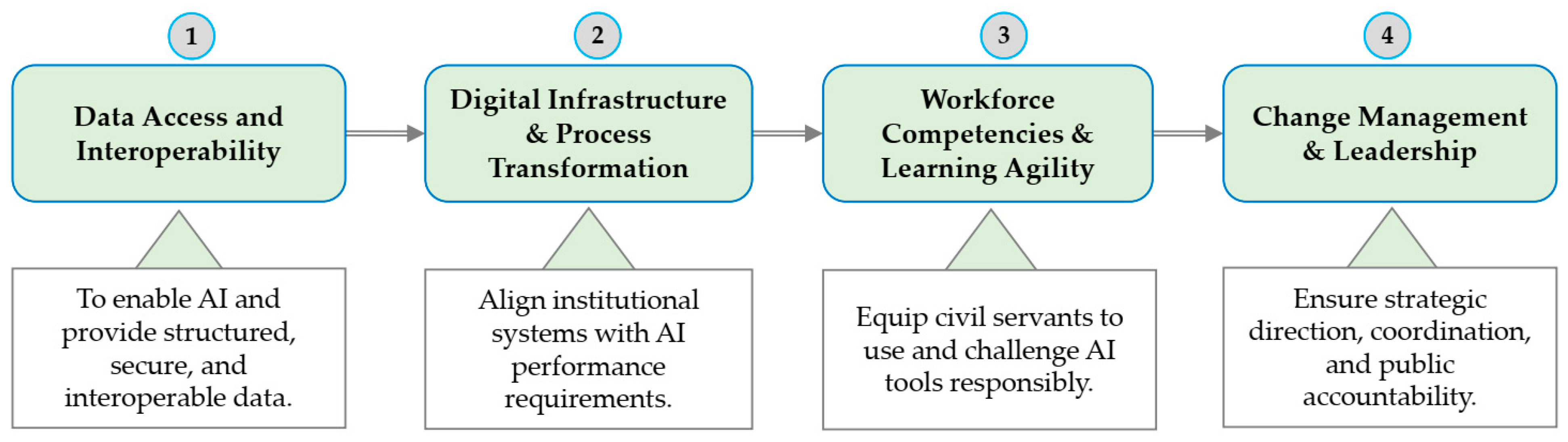

7. AI Integration Capability Model

7.1. SLR Findings

- Pillar 1. Data access and interoperability: Ensures institutions can collect, share, and leverage high-quality, interoperable datasets across agencies and domains.

- Pillar 2. Digital infrastructure and process redesign: Focuses on upgrading legacy systems and reengineering workflows to accommodate AI-driven service delivery.

- Pillar 3. Workforce competencies and learning agility: Emphasizes capacity-building in digital literacy, algorithmic accountability, and continuous learning for public employees.

- Pillar 4. Institutional leadership and change management: Promotes strategic alignment, participatory governance, and adaptive leadership to drive and sustain AI reforms.

7.2. Comparative Positioning with Existing AI Assessment Models

7.3. Limitations

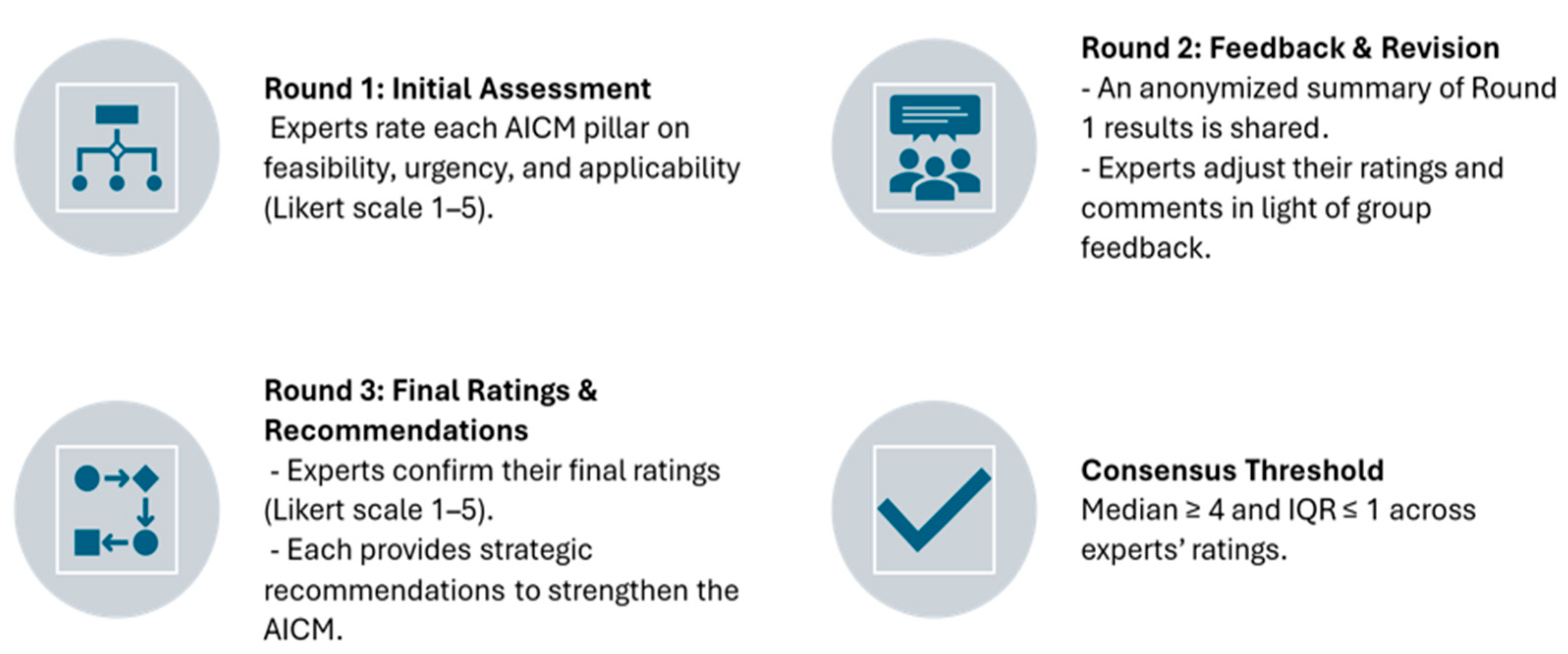

8. Validating the AICM: Delphi Study in the Moroccan Public Sector

8.1. Methodology and Delphi Design

- Round 1: Experts independently rated each pillar (shown in Figure 20) on three evaluative dimensions (feasibility, urgency, and applicability) using a 5-point Likert scale (1 = very low; 5 = very high). Initial variability in scores was most evident for data interoperability and for the feasibility of the leadership pillar.

- Round 2: Participants received anonymized feedback consisting of median scores and interquartile ranges (IQRs). This controlled feedback allowed experts to reconsider their ratings in light of group trends.

- Round 3: Experts submitted their final evaluations together with qualitative feedback. This round also invited targeted strategic recommendations for operationalizing each pillar.
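The Round 2 feedback rests on two summary statistics per pillar. The following is a minimal Python sketch of how the median and IQR might be computed from 5-point Likert ratings. It assumes quartiles from `statistics.quantiles` with the `"inclusive"` method and a consensus rule of IQR ≤ 1, a threshold often used in the Delphi literature for 5-point scales but not one stated in this section; the function names and example ratings are hypothetical.

```python
import statistics
from dataclasses import dataclass


@dataclass
class PillarFeedback:
    """Anonymized group summary returned to experts between rounds."""
    median: float
    iqr: float
    consensus: bool


def summarize_ratings(ratings: list[int], iqr_threshold: float = 1.0) -> PillarFeedback:
    """Summarize one pillar's 1-5 Likert ratings as median, IQR, and a consensus flag."""
    if len(ratings) < 2:
        raise ValueError("at least two ratings are needed to estimate quartiles")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("ratings must lie on the 1-5 Likert scale")
    # The 'inclusive' method interpolates quartiles between observed ratings
    q1, _, q3 = statistics.quantiles(ratings, n=4, method="inclusive")
    iqr = q3 - q1
    return PillarFeedback(
        median=float(statistics.median(ratings)),
        iqr=iqr,
        consensus=iqr <= iqr_threshold,
    )


def round_feedback(ratings_by_pillar: dict[str, list[int]]) -> dict[str, PillarFeedback]:
    """Build the anonymized feedback table: aggregates only, no individual scores."""
    return {pillar: summarize_ratings(r) for pillar, r in ratings_by_pillar.items()}


if __name__ == "__main__":
    # Hypothetical ratings for illustration only; these are not the study's data.
    example = {
        "Pillar 3": [5, 5, 5, 5, 5, 5],
        "Pillar 1": [3, 4, 5, 5, 5, 2],
    }
    for pillar, fb in round_feedback(example).items():
        print(f"{pillar}: median={fb.median}, IQR={fb.iqr}, consensus={fb.consensus}")
```

Quartile conventions differ between statistical tools, so the same ratings can yield slightly different IQR values depending on the method; reporting the method alongside the results keeps the consensus figures reproducible.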

8.2. Consensus Results

- Pillar 1: Data Access and Interoperability (Median = 5.0, IQR = 0.5).

- Pillar 2: Digital Infrastructure and Process Redesign (Median = 5.0, IQR = 0.5).

- Pillar 3: Workforce Competencies and Learning Agility (Median = 5.0, IQR = 0.0).

- Pillar 4: Institutional Leadership and Change Management (Median = 5.0, IQR = 0.0).

8.3. Strategic Recommendations

- Pillar 1: Launch a national digitization programme that converts paper-based archives into interoperable digital records, backed by a national metadata standard and inter-agency data exchange protocols.

- Pillar 2: Integrate disconnected platforms (e.g., Chikaya, Mahakim) through APIs, ensuring compliance with Law 09-08 on data protection [92].

- Pillar 3: Develop a national competency framework in collaboration with universities, including training in AI ethics, algorithmic accountability, and explainability [93].

- Pillar 4: Establish a High Council for AI Governance with cross-sectoral authority, modeled on Finland’s AuroraAI initiative [94].

| AICM Pillar | Strategic Recommendations | Illustrative Examples |
|---|---|---|
| Pillar 1: Data Access and Interoperability | Launch a national digitization programme to convert paper-based archives into interoperable digital records. | Fewer than 20% of Moroccan institutions use interoperable systems; the majority still rely on manual documentation [90]. |
| | Establish a national metadata standard and enforce inter-agency data exchange protocols. | |
| Pillar 2: Digital Infrastructure and Process Redesign | Integrate existing digital platforms (e.g., Chikaya, e-Huissier, Mahakim) using standardized APIs. | The Court of Accounts [92] highlighted duplication and inefficiency caused by disconnected systems. |
| | Ensure all digital services comply with Law 09-08 on personal data protection and follow national cybersecurity norms. | |
| Pillar 3: Workforce Competencies and Learning Agility | Develop a national competency framework for public employees in partnership with universities. | Aligns with TAM3: improving perceived ease of use and building trust through user literacy [93,95]. |
| | Introduce training on AI ethics, algorithmic accountability, and explainability principles. | |
| Pillar 4: Institutional Leadership and Change Management | Create a High Council for AI Governance under the Prime Minister, with cross-sectoral coordination powers. | Inspired by initiatives such as Finland’s AuroraAI, which combine AI strategy with participatory governance [94]. |
| | Mandate sector-specific AI implementation roadmaps, with performance indicators and citizen feedback mechanisms. | |
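A shared metadata standard is, at its core, an agreement on which fields every exchanged record must carry and how they are formatted. The Python sketch below illustrates that idea under stated assumptions: the required field names, the ISO 8601 date rule, and the requirement that records containing personal data state a `legal_basis` are all hypothetical, and the sketch does not represent any existing Moroccan standard or assert compliance with Law 09-08.

```python
import json
from datetime import date

# Hypothetical core fields every shared record must carry (illustrative, not a real standard).
REQUIRED_FIELDS = ("record_id", "issuing_agency", "record_type", "created")


def validate_metadata(meta: dict) -> list[str]:
    """Check one record's metadata against the hypothetical shared standard."""
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not meta.get(name)]
    created = meta.get("created")
    if created:
        try:
            date.fromisoformat(created)  # require ISO 8601 dates (YYYY-MM-DD)
        except (TypeError, ValueError):
            problems.append("created must be an ISO 8601 date")
    # Assumed rule: records with personal data must state why they may be shared.
    if meta.get("contains_personal_data") and not meta.get("legal_basis"):
        problems.append("personal data requires a stated legal_basis")
    return problems


def export_for_exchange(meta: dict) -> str:
    """Serialize a validated record to JSON for inter-agency exchange."""
    problems = validate_metadata(meta)
    if problems:
        raise ValueError("; ".join(problems))
    return json.dumps(meta, sort_keys=True, ensure_ascii=False)


if __name__ == "__main__":
    record = {
        "record_id": "A-001",
        "issuing_agency": "Agency X",  # hypothetical agency name
        "record_type": "complaint",
        "created": "2024-05-01",
        "contains_personal_data": True,
    }
    print(validate_metadata(record))
    record["legal_basis"] = "consent"  # hypothetical value
    print(export_for_exchange(record))
```

Validating at the point of export, rather than at each receiving agency, is one way to keep every platform working from the same definition of a well-formed record.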

9. Managerial and Policy Implications

9.1. Strategic Urgency and Institutional Gap in Morocco

9.2. AICM as an Action-Oriented Readiness Framework

9.3. Anticipating Workforce Transformation in the Public Sector

9.4. Institutional Leadership for Ethical and Accountable AI

9.5. Toward a Coherent National AI Strategy

10. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADD | Digital Development Agency (Morocco) |
| AI | Artificial Intelligence |
| AIA | Algorithmic Impact Assessment |
| AICM | AI Integration Capability Model |
| API | Application Programming Interface |
| BERT | Bidirectional Encoder Representations from Transformers |
| CAF | Canadian Armed Forces |
| CNSS | National Social Security Fund (Morocco) |
| COMPAS | Correctional Offender Management Profiling for Alternative Sanctions |
| CORE | Computing Research and Education Association |
| DC | Dynamic Capabilities |
| DEG | Digital-Era Governance |
| DGRL | Digital Government Reference Library |
| DGWGR | Data Governance Working Group Report |
| DL | Deep Learning |
| ENA | National School of Administration (Morocco) |
| ERP | Enterprise Resource Planning |
| EU | European Union |
| GDPR | General Data Protection Regulation |
| GDS | Government Digital Service (United Kingdom) |
| GPT | Generative Pretrained Transformer |
| HITL | Human-in-the-Loop |
| INDH | National Initiative for Human Development (Initiative Nationale pour le Développement Humain, Morocco) |
| INPT | National Institute of Posts and Telecommunications |
| IoT | Internet of Things |
| LLM | Large Language Model |
| MEAE | Ministry of Economic Affairs and Employment (Finland) |
| ML | Machine Learning |
| NLP | Natural Language Processing |
| OECD | Organisation for Economic Co-operation and Development |
| ROI | Return on Investment |
| SLR | Systematic Literature Review |
| TAM | Technology Acceptance Model |
| UK | United Kingdom |
| UN | United Nations |
| USA | United States of America |
| XAI | Explainable Artificial Intelligence |
| X-Road | Cross-Road Interoperability Platform (Estonia) |

References

- Engel, C.; Linhardt, L.; Schubert, M. Code Is Law: How COMPAS Affects the Way the Judiciary Handles the Risk of Recidivism. Artif. Intell. Law 2025, 33, 383–404. [Google Scholar] [CrossRef]

- Lim, B.; Seth, I.; Rozen, W.M. The Role of Artificial Intelligence Tools on Advancing Scientific Research. Aesthetic Plast. Surg. 2023, 48, 3036–3038. [Google Scholar] [CrossRef] [PubMed]

- Rodrigues, R. Legal and Human Rights Issues of AI: Gaps, Challenges and Vulnerabilities. J. Responsible Technol. 2020, 4, 100005. [Google Scholar] [CrossRef]

- Schüller, M. Artificial Intelligence: New Challenges and Opportunities for Asian Countries. In Exchanges and Mutual Learning Among Asian Civilizations; Springer Nature Singapore: Singapore, 2023; pp. 277–285. ISBN 978-981-19716-4-8. [Google Scholar]

- Ahmed, M.I.; Spooner, B.; Isherwood, J.; Lane, M.; Orrock, E.; Dennison, A. A Systematic Review of the Barriers to the Implementation of Artificial Intelligence in Healthcare. Cureus 2023, 15, e46454. [Google Scholar] [CrossRef]

- Sharma, G.D.; Yadav, A.; Chopra, R. Artificial Intelligence and Effective Governance: A Review, Critique and Research Agenda. Sustain. Futures 2020, 2, 100004. [Google Scholar] [CrossRef]

- Zuiderwijk, A.; Chen, Y.-C.; Salem, F. Implications of the Use of Artificial Intelligence in Public Governance: A Systematic Literature Review and a Research Agenda. Gov. Inf. Q. 2021, 38, 101577. [Google Scholar] [CrossRef]

- Heimberger, H.; Horvat, D.; Schultmann, F. Exploring the Factors Driving AI Adoption in Production: A Systematic Literature Review and Future Research Agenda. Inf. Technol. Manag. 2024, 1–17. [Google Scholar] [CrossRef]

- Davis, F.D. Technology Acceptance Model: TAM. Al-Suqri MN Al-Aufi Inf. Seek. Behav. Technol. Adopt. 1989, 205, 5. [Google Scholar]

- Marangunić, N.; Granić, A. Technology Acceptance Model: A Literature Review from 1986 to 2013. Univers. Access Inf. Soc. 2015, 14, 81–95. [Google Scholar] [CrossRef]

- Dunleavy, P.; Margetts, H.; Bastow, S.; Tinkler, J. New Public Management Is Dead—Long Live Digital-Era Governance. J. Public Adm. Res. Theory 2006, 16, 467–494. [Google Scholar] [CrossRef]

- Teece, D.J. Explicating Dynamic Capabilities: The Nature and Microfoundations of (Sustainable) Enterprise Performance. Strateg. Manag. J. 2007, 28, 1319–1350. [Google Scholar] [CrossRef]

- Hasson, F.; Keeney, S.; McKenna, H. Research Guidelines for the Delphi Survey Technique. J. Adv. Nurs. 2000, 32, 1008–1015. [Google Scholar] [CrossRef] [PubMed]

- Dospinescu, O.; Buraga, S. Integrated ERP Systems—Determinant Factors for Their Adoption in Romanian Organizations. Systems 2025, 13, 667. [Google Scholar] [CrossRef]

- Benitez, J.M.; Castro, J.L.; Requena, I. Are Artificial Neural Networks Black Boxes? IEEE Trans. Neural Netw. 1997, 8, 1156–1164. [Google Scholar] [CrossRef]

- Vaghela, M.C.; Rathi, S.; Shirole, R.L.; Verma, J.; Shaheen; Panigrahi, S.; Singh, S. Leveraging AI and Machine Learning in Six-Sigma Documentation for Pharmaceutical Quality Assurance. Zhongguo Ying Yong Sheng Li Xue Za Zhi 2024, 40, e20240005. [Google Scholar] [CrossRef] [PubMed]

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach, 4th ed.; Prentice Hall Series in Artificial Intelligence; Pearson: Boston, MA, USA, 2022; ISBN 978-1-292-40117-1. [Google Scholar]

- Mohammed, S.; Budach, L.; Feuerpfeil, M.; Ihde, N.; Nathansen, A.; Noack, N.; Patzlaff, H.; Naumann, F.; Harmouch, H. The Effects of Data Quality on Machine Learning Performance on Tabular Data. Inf. Syst. 2025, 132, 102549. [Google Scholar] [CrossRef]

- Soori, M.; Arezoo, B.; Dastres, R. Artificial Intelligence, Machine Learning and Deep Learning in Advanced Robotics, a Review. Cogn. Robot. 2023, 3, 54–70. [Google Scholar] [CrossRef]

- Taye, M.M. Understanding of Machine Learning with Deep Learning: Architectures, Workflow, Applications and Future Directions. Computers 2023, 12, 91. [Google Scholar] [CrossRef]

- Buhmann, A.; Fieseler, C. Deep Learning Meets Deep Democracy: Deliberative Governance and Responsible Innovation in Artificial Intelligence. Bus. Ethics Q. 2023, 33, 146–179. [Google Scholar] [CrossRef]

- Ferrari, F.; Van Dijck, J.; Van Den Bosch, A. Observe, Inspect, Modify: Three Conditions for Generative AI Governance. New Media Soc. 2025, 27, 2788–2806. [Google Scholar] [CrossRef]

- Xu, J. Opening the ‘Black Box’ of Algorithms: Regulation of Algorithms in China. Commun. Res. Pract. 2024, 10, 288–296. [Google Scholar] [CrossRef]

- Jawad, Z.N.; Balázs, V. Machine Learning-Driven Optimization of Enterprise Resource Planning (ERP) Systems: A Comprehensive Review. Beni-Suef Univ. J. Basic Appl. Sci. 2024, 13, 4. [Google Scholar] [CrossRef]

- Wijesinghe, S.; Nanayakkara, I.; Pathirana, R.; Wickramarachchi, R.; Fernando, I. Impact of IoT Integration on Enterprise Resource Planning (ERP) Systems: A Comprehensive Literature Analysis. In Proceedings of the 2024 International Research Conference on Smart Computing and Systems Engineering (SCSE), Colombo, Sri Lanka, 4 April 2024; Volume 7, pp. 1–5. [Google Scholar]

- Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Technical Report; Keele University and Durham University: Staffordshire, UK, 2007. [Google Scholar]

- Dwivedi, R.; Nerur, S.; Balijepally, V. Exploring Artificial Intelligence and Big Data Scholarship in Information Systems: A Citation, Bibliographic Coupling, and Co-Word Analysis. Int. J. Inf. Manag. Data Insights 2023, 3, 100185. [Google Scholar] [CrossRef]

- Higgins, J.P.T.; Thomas, J.; Chandler, J.; Cumpston, M.; Li, T.; Page, M.J.; Welch, V.A. Cochrane Handbook for Systematic Reviews of Interventions, 1st ed.; Wiley: Hoboken, NJ, USA, 2019; ISBN 978-1-119-53662-8. [Google Scholar]

- Wiesmüller, S. Contextualisation of Relational AI Governance in Existing Research. In The Relational Governance of Artificial Intelligence; Springer Nature Switzerland: Cham, Switzerland, 2023; pp. 165–212. ISBN 978-3-031-25022-4. [Google Scholar]

- Haefner, N.; Parida, V.; Gassmann, O.; Wincent, J. Implementing and Scaling Artificial Intelligence: A Review, Framework, and Research Agenda. Technol. Forecast. Soc. Change 2023, 197, 122878. [Google Scholar] [CrossRef]

- Von Essen, L.; Ossewaarde, M. Artificial Intelligence and European Identity: The European Commission’s Struggle for Reconciliation. Eur. Politics Soc. 2024, 25, 375–402. [Google Scholar] [CrossRef]

- Botero Arcila, B. AI Liability in Europe: How Does It Complement Risk Regulation and Deal with the Problem of Human Oversight? Comput. Law Secur. Rev. 2024, 54, 106012. [Google Scholar] [CrossRef]

- Roberts, H.; Hine, E.; Taddeo, M.; Floridi, L. Global AI Governance: Barriers and Pathways Forward. Int. Aff. 2024, 100, 1275–1286. [Google Scholar] [CrossRef]

- Ingrams, A.; Klievink, B. Transparency’s Role in AI Governance. In The Oxford Handbook of AI Governance; Bullock, J.B., Chen, Y.-C., Himmelreich, J., Hudson, V.M., Korinek, A., Young, M.M., Zhang, B., Eds.; Oxford University Press: Oxford, UK, 2022; pp. 479–494. ISBN 978-0-19-757932-9. [Google Scholar]

- Ivic, A.; Milicevic, A.; Krstic, D.; Kozma, N.; Havzi, S. The Challenges and Opportunities in Adopting AI, IoT and Blockchain Technology in E-Government: A Systematic Literature Review. In Proceedings of the 2022 International Conference on Communications, Information, Electronic and Energy Systems (CIEES), Veliko Tarnovo, Bulgaria, 26–28 November 2022; IEEE: New York, NY, USA, 2022; pp. 1–6. [Google Scholar]

- Das, R.; Soylu, M. A Key Review on Graph Data Science: The Power of Graphs in Scientific Studies. Chemom. Intell. Lab. Syst. 2023, 240, 104896. [Google Scholar] [CrossRef]

- Bluemke, E.; Collins, T.; Garfinkel, B.; Trask, A. Exploring the Relevance of Data Privacy-Enhancing Technologies for AI Governance Use Cases. arXiv 2023, arXiv:2303.08956. [Google Scholar] [CrossRef]

- Himanshu, H. Role of Artificial Intelligence in Decision Making. In Decision Strategies and Artificial Intelligence: Navigating the Business Landscape; San International Scientific Publications: Kanyakumari, India, 2023; ISBN 978-81-963849-1-3. [Google Scholar]

- Waja, G.; Patil, G.; Mehta, C.; Patil, S. How AI Can Be Used for Governance of Messaging Services: A Study on Spam Classification Leveraging Multi-Channel Convolutional Neural Network. Int. J. Inf. Manag. Data Insights 2023, 3, 100147. [Google Scholar] [CrossRef]

- Mohammed, A.; Mohammad, M. How AI Algorithms Are Being Used in Applications. In Soft Computing and Signal Processing; Reddy, V.S., Prasad, V.K., Wang, J., Reddy, K.T.V., Eds.; Springer Nature Singapore: Singapore, 2023; Volume 313, pp. 41–53. ISBN 978-981-19866-8-0. [Google Scholar]

- Deng, B.; Qiu, Y. Comment on “Analytical Solutions to One-Dimensional Advection–Diffusion Equation with Variable Coefficients in Semi-Infinite Media” by Kumar, A., Jaiswal, D.K., Kumar, N., J. Hydrol., 2010, 380: 330–337. J. Hydrol. 2012, 424–425, 278–279. [Google Scholar] [CrossRef]

- Jha, J.; Vishwakarma, A.K.; N, C.; Nithin, A.; Sayal, A.; Gupta, A.; Kumar, R. Artificial Intelligence and Applications. In Proceedings of the 2023 1st International Conference on Intelligent Computing and Research Trends (ICRT), Roorkee, India, 3–4 February 2023; IEEE: New York, NY, USA, 2023; pp. 1–4. [Google Scholar]

- Bajwa, J.; Munir, U.; Nori, A.; Williams, B. Artificial Intelligence in Healthcare: Transforming the Practice of Medicine. Future Healthc. J. 2021, 8, e188–e194. [Google Scholar] [CrossRef]

- Yang, J.; Blount, Y.; Amrollahi, A. Artificial Intelligence Adoption in a Professional Service Industry: A Multiple Case Study. Technol. Forecast. Soc. Change 2024, 201, 123251. [Google Scholar] [CrossRef]

- Van Noordt, C.; Misuraca, G. Exploratory Insights on Artificial Intelligence for Government in Europe. Soc. Sci. Comput. Rev. 2022, 40, 426–444. [Google Scholar] [CrossRef]

- Gao, X.; Feng, H. AI-Driven Productivity Gains: Artificial Intelligence and Firm Productivity. Sustainability 2023, 15, 8934. [Google Scholar] [CrossRef]

- Espinosa, V.I.; Pino, A. E-Government as a Development Strategy: The Case of Estonia. Int. J. Public Adm. 2025, 48, 86–99. [Google Scholar] [CrossRef]

- Chen, Y. How Blockchain Adoption Affects Supply Chain Sustainability in the Fashion Industry: A Systematic Review and Case Studies. Int. Trans. Oper. Res. 2024, 31, 3592–3620. [Google Scholar] [CrossRef]

- Toll, D.; Lindgren, I.; Melin, U.; Madsen, C.Ø. Values, Benefits, Considerations and Risks of AI in Government: A Study of AI Policies in Sweden. JeDEM Ejournal Edemocr. Open Gov. 2020, 12, 40–60. [Google Scholar] [CrossRef]

- Ajali-Hernández, N.I.; Travieso-González, C.M. Novel Cost-Effective Method for Forecasting COVID-19 and Hospital Occupancy Using Deep Learning. Sci. Rep. 2024, 14, 25982. [Google Scholar] [CrossRef]

- European Commission. Proposal for a Regulation of the European Parliament and of the Council Laying down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts; COM/2021/206final; European Commission: Brussels, Belgium, 2021; pp. 1–107. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex:52021PC0206 (accessed on 10 September 2025).

- Petrin, M. The Impact of AI and New Technologies on Corporate Governance and Regulation. Sing. J. Leg. Stud. 2024, 90. [Google Scholar] [CrossRef]

- Duberry, J. Chapter 14: AI and Data-Driven Political Communication (Re)Shaping Citizen–Government Interactions. In Research Handbook on Artificial Intelligence and Communication; Nah, S., Ed.; Edward Elgar Publishing: Cheltenham, UK, 2023; pp. 231–245. ISBN 978-1-80392-030-6. [Google Scholar]

- Liebig, L.; Güttel, L.; Jobin, A.; Katzenbach, C. Subnational AI Policy: Shaping AI in a Multi-Level Governance System. AI Soc. 2024, 39, 1477–1490. [Google Scholar] [CrossRef]

- Floridi, L.; Cowls, J. A Unified Framework of Five Principles for AI in Society. Harv. Data Sci. Rev. 2019, 535–545. [Google Scholar] [CrossRef]

- Gstrein, O.J.; Haleem, N.; Zwitter, A. General-Purpose AI Regulation and the European Union AI Act. Internet Policy Rev. 2024, 13, 1–26. [Google Scholar] [CrossRef]

- Hupont, I.; Fernández-Llorca, D.; Baldassarri, S.; Gómez, E. Use Case Cards: A Use Case Reporting Framework Inspired by the European AI Act. Ethics Inf. Technol. 2024, 26, 19. [Google Scholar] [CrossRef]

- Margetts, H.; Dunleavy, P. The Second Wave of Digital-Era Governance: A Quasi-Paradigm for Government on the Web. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2013, 371, 20120382. [Google Scholar] [CrossRef]

- Olsen, H.P.; Hildebrandt, T.T.; Wiesener, C.; Larsen, M.S.; Flügge, A.W.A. The Right to Transparency in Public Governance: Freedom of Information and the Use of Artificial Intelligence by Public Agencies. Digit. Gov. Res. Pract. 2024, 5, 1–15. [Google Scholar] [CrossRef]

- Pislaru, M.; Vlad, C.S.; Ivascu, L.; Mircea, I.I. Citizen-Centric Governance: Enhancing Citizen Engagement through Artificial Intelligence Tools. Sustainability 2024, 16, 2686. [Google Scholar] [CrossRef]

- Alon-Barkat, S.; Busuioc, M. Human–AI Interactions in Public Sector Decision Making: “Automation Bias” and “Selective Adherence” to Algorithmic Advice. J. Public Adm. Res. Theory 2023, 33, 153–169. [Google Scholar] [CrossRef]

- Murdoch, B. Privacy and Artificial Intelligence: Challenges for Protecting Health Information in a New Era. BMC Med. Ethics 2021, 22, 122. [Google Scholar] [CrossRef]

- Greene, K.G. AI Governance Multi-Stakeholder Convening. In The Oxford Handbook of AI Governance; Bullock, J.B., Chen, Y.-C., Himmelreich, J., Hudson, V.M., Korinek, A., Young, M.M., Zhang, B., Eds.; Oxford University Press: Oxford, UK, 2022; pp. 109–126. ISBN 978-0-19-757932-9. [Google Scholar]

- John, T. The Ethical Considerations of Artificial Intelligence in Clinical Decision Support. Proc. Wellingt. Fac. Eng. Ethics Sustain. Symp. 2022. [Google Scholar] [CrossRef]

- Ndrejaj, A.; Ali, M. Artificial Intelligence Governance: A Study on the Ethical and Security Issues That Arise. In Proceedings of the 2022 International Conference on Computing, Electronics & Communications Engineering (iCCECE), Southend, UK, 17–18 August 2022; IEEE: New York, NY, USA, 2022; pp. 104–111. [Google Scholar]

- De Cremer, D.; Narayanan, D. On Educating Ethics in the AI Era: Why Business Schools Need to Move beyond Digital Upskilling, towards Ethical Upskilling. AI Ethics 2023, 3, 1037–1041. [Google Scholar] [CrossRef]

- Iddrisu, A.-M.; Mensah, S.; Boafo, F.; Yeluripati, G.R.; Kudjo, P. A Sentiment Analysis Framework to Classify Instances of Sarcastic Sentiments within the Aviation Sector. Int. J. Inf. Manag. Data Insights 2023, 3, 100180. [Google Scholar] [CrossRef]

- Mavrogiorgos, K.; Kiourtis, A.; Mavrogiorgou, A.; Manias, G.; Kyriazis, D. A Question Answering Software for Assessing AI Policies of OECD Countries. In Proceedings of the 4th European Symposium on Software Engineering, Napoli, Italy, 1–3 December 2023; ACM: New York, NY, USA, 2023; pp. 31–36. [Google Scholar]

- Dang, H.B.; Pham, T.T.Q.; Nguyen, V.P.; Nguyen, V.H. Regulatory Impact of a Governmental Approach for Artificial Intelligence Technology Implementation in Vietnam. J. Infrastruct. Policy Dev. 2024, 8, 6631. [Google Scholar] [CrossRef]

- Thoene, U.; García Alonso, R.; Dávila Benavides, D.E. Ethical Frameworks and Regulatory Governance: An Exploratory Analysis of the Colombian Strategy for Artificial Intelligence. Law State Telecommun. Rev. 2024, 16, 146–171. [Google Scholar] [CrossRef]

- Arora, A.; Gupta, M.; Mehmi, S.; Khanna, T.; Chopra, G.; Kaur, R.; Vats, P. Towards Intelligent Governance: The Role of AI in Policymaking and Decision Support for E-Governance. In Information Systems for Intelligent Systems; So In, C., Londhe, N.D., Bhatt, N., Kitsing, M., Eds.; Springer Nature Singapore: Singapore, 2024; Volume 379, pp. 229–240. ISBN 978-981-9986-11-8. [Google Scholar]

- Palladino, N. A digital constitutionalism framework for AI. Riv. Di Digit. Politics 2023, 3, 521–542. [Google Scholar] [CrossRef]

- CAF. The Department of National Defence and Canadian Armed Forces Artificial Intelligence Strategy; CAF: Ottawa, ON, Canada, 2024; ISBN 978-0-660-45122-0. Available online: https://www.canada.ca/en/department-national-defence/corporate/reports-publications/dnd-caf-artificial-intelligence-strategy.html (accessed on 10 September 2025).

- Wendehorst, C. Data Governance Working Group: A Framework Paper for GPAI’s Work on Data Governance. 2020. Available online: https://ucrisportal.univie.ac.at/en/publications/data-governance-working-group-a-framework-paper-for-gpais-work-on (accessed on 25 July 2025).

- Wang, C.; Teo, T.S.H.; Janssen, M. Public and Private Value Creation Using Artificial Intelligence: An Empirical Study of AI Voice Robot Users in Chinese Public Sector. Int. J. Inf. Manag. 2021, 61, 102401. [Google Scholar] [CrossRef]

- Fjeld, J.; Achten, N.; Hilligoss, H.; Nagy, A.; Srikumar, M. Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI. SSRN Electron. J. 2020. [Google Scholar] [CrossRef]

- Leoni, G.; Bergamaschi, F.; Maione, G. Artificial Intelligence and Local Governments: The Case of Strategic Performance Management Systems and Accountability. In Artificial Intelligence and Its Contexts; Visvizi, A., Bodziany, M., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 145–157. ISBN 978-3-030-88971-5. [Google Scholar]

- Alshahrani, A.; Griva, A.; Dennehy, D.; Mäntymäki, M. Artificial Intelligence and Decision-Making in Government Functions: Opportunities, Challenges and Future Research. Transform. Gov. People Process Policy 2024, 18, 678–698. [Google Scholar] [CrossRef]

- Dreyling, R.; Tammet, T.; Pappel, I.; McBride, K. Navigating the AI Maze: Lessons from Estonia’s Bürokratt on Public Sector AI Digital Transformation. SSRN 2024. Available online: https://ssrn.com/abstract=4850696 (accessed on 10 September 2025).

- Alketbi, M. Assessing Readiness for Transformation from Rule Based to Ai-Based Chatbot in UAE Healthcare: A Case Study of a Rehabilitation Hospital in Abu Dhabi. Master’s Thesis, United Arab Emirates University, Abu Dhabi, United Arab Emirates, 2025. [Google Scholar]

- Wang, S.; Zhang, Y.; Xiao, Y.; Liang, Z. Artificial Intelligence Policy Frameworks in China, the European Union and the United States: An Analysis Based on Structure Topic Model. Technol. Forecast. Soc. Change 2025, 212, 123971. [Google Scholar] [CrossRef]

- Misra, S.K.; Sharma, S.K.; Gupta, S.; Das, S. A Framework to Overcome Challenges to the Adoption of Artificial Intelligence in Indian Government Organizations. Technol. Forecast. Soc. Change 2023, 194, 122721. [Google Scholar] [CrossRef]

- OECD. Framework for the Classification of AI Systems; OECD Publishing: Paris, France, 2022. [Google Scholar]

- Government of Canada. Algorithmic Impact Assessment v0.10.0; Government of Canada: Ottawa, ON, Canada, 2023. Available online: https://open.canada.ca/aia-eia-js_0.10 (accessed on 10 October 2025).

- Qudah, M.A.A.; Muradkhanli, L.; Salameh, A.A.; Rind, M.A.; Muradkhanli, Z. Artificial Intelligence Techniques In Improving the Quality of Services Provided By E-Government To Citizens. In Proceedings of the 2024 IEEE 1st Karachi Section Humanitarian Technology Conference (KHI-HTC), Tandojam, Pakistan, 8–9 January 2024; IEEE: New York, NY, USA, 2024; pp. 1–4. [Google Scholar]

- Iuga, I.C.; Socol, A. Government artificial intelligence readiness and brain drain: Influencing factors and spatial effects in the European union member states. J. Bus. Econ. Manag. 2024, 25, 268–296. [Google Scholar] [CrossRef]

- Mahajan, V. Book Review: The Delphi Method: Techniques and Applications. J. Mark. Res. 1976, 13, 317–318. [Google Scholar] [CrossRef]

- Skulmoski, G.J.; Hartman, F.T.; Krahn, J. The Delphi Method for Graduate Research. J. Inf. Technol. Educ. Res. 2007, 6, 1–21. [Google Scholar] [CrossRef]

- Hsu, C.-C.; Sandford, B.A. The Delphi Technique: Making Sense of Consensus. Pract. Assess. Res. Eval. 2007, 12, 10. [Google Scholar] [CrossRef]

- OECD. Digital Government Review of Morocco: Laying the Foundations for the Digital Transformation of the Public Sector in Morocco, OECD Digital Government Studies; OECD Publishing: Paris, France, 2018. [Google Scholar]

- United Nations Department of Economic and Social Affairs. United Nations E-Government Survey 2022: The Future of Digital Government; United Nations e-Government Survey Series, 1st ed.; United Nations Publications: New York, NY, USA, 2022; ISBN 978-92-1-123213-4. [Google Scholar]

- Cour des Comptes. Évaluation Des Services Publics En Ligne; Cour des Comptes: Rabat, Morocco, 2019; Available online: https://www.courdescomptes.ma/wp-content/uploads/2023/01/Rapport-services-en-ligne-2019.pdf (accessed on 5 April 2025).

- Venkatesh, V.; Bala, H. Technology Acceptance Model 3 and a Research Agenda on Interventions. Decis. Sci. 2008, 39, 273–315.

- MEAE. Finland’s Age of Artificial Intelligence: Turning Finland into a Leading Country in the Application of Artificial Intelligence. Objective and Recommendations for Measures; Publications of the Ministry of Economic Affairs and Employment: Helsinki, Finland, 2017. Available online: https://julkaisut.valtioneuvosto.fi/handle/10024/80849 (accessed on 10 March 2025).

- Nawaz, N.; Arunachalam, H.; Pathi, B.K.; Gajenderan, V. The Adoption of Artificial Intelligence in Human Resources Management Practices. Int. J. Inf. Manag. Data Insights 2024, 4, 100208.

| Study | No. of Papers | Focus Area | Period Covered | Key Findings | Strengths | Weaknesses | Main Contributions |
|---|---|---|---|---|---|---|---|
| [6] | 74 | Theoretical frameworks for AI governance | 1983–2019 | AI improves public governance through automation, transparency, and predictive analytics. | Provides a broad conceptual agenda and identifies critical research gaps. | Limited empirical data; lacks connection to operational contexts. | Established a theoretical foundation and encouraged empirical extensions of AI governance research. |
| [7] | 26 | Public governance and AI ethics | 2010–2020 | Investigates transparency, accountability, and privacy challenges in public-sector AI applications. | Strong interdisciplinary design and robust theoretical grounding. | Limited empirical and quantitative analysis; mostly Western contexts. | Designed a comprehensive research agenda focused on procedural and normative issues. |
| [5] | 59 | AI adoption barriers in healthcare | 2000–2023 | Identifies six key barriers: ethical, technological, legal, workforce-related, social, and safety concerns. | Offers actionable insights into overcoming sector-specific obstacles. | Focused solely on healthcare; limited applicability to broader governance settings. | Synthesized implementation barriers and provided a sectoral framework for healthcare AI integration. |
| [8] | 47 | AI adoption in industrial production | 2010–2024 | Highlights 35 adoption factors across skills, data, ethics, and leadership themes. | Structured categorization of influencing factors and their strategic implications. | Based only on academic literature; lacks validation through real-world case studies. | Proposed an adoption framework and identified gaps in training and institutional preparedness. |

| Analytical Dimension | TAM (Individual) | DEG (Institutional) | DC | Delphi |
|---|---|---|---|---|
| Perceived usefulness | ✔ | | | |
| Trust and fairness | ✔ | ✔ | ✔ | |
| Ease of use | ✔ | | | |
| Institutional efficiency | ✔ | ✔ | ✔ | |
| Integrated service delivery | ✔ | | | |
| Transparency and accountability | ✔ | ✔ | | |
| Adaptive capacity | ✔ | ✔ | | |

| Scope | Terms |
|---|---|
| AI | AI, ML, DL, Neural networks, NLP, LLMs, Computer vision, Algorithmic transparency, AI fairness, Bias mitigation, AI security, Explainable AI, Responsible AI. |
| Governance | Governance, Public administration, Policy, Transparency, Accountability, Ethics, Regulatory compliance, Risk mitigation, Digital governance, Regulation, Fairness, Auditing, Public trust. |
| Decision-making | Decision-making, Predictive analytics, Human oversight, Decision autonomy, Administration, Efficiency metrics, Strategic planning, Risk-based decisions, Public services. |
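
The table lists the terms for each scope but not how they were combined into a database query. The sketch below assumes the common convention of joining synonyms within a scope with OR and joining the three scopes with AND; the helper names `or_group` and `build_query` are hypothetical, and real databases (e.g., Scopus, Web of Science) each add their own field tags and wildcard syntax on top of this.

```python
# Sketch only: assumes terms are OR-ed within each scope and the three
# scopes are AND-ed together; the review does not state its exact syntax.
AI_TERMS = [
    "AI", "ML", "DL", "Neural networks", "NLP", "LLMs", "Computer vision",
    "Algorithmic transparency", "AI fairness", "Bias mitigation",
    "AI security", "Explainable AI", "Responsible AI",
]
GOVERNANCE_TERMS = [
    "Governance", "Public administration", "Policy", "Transparency",
    "Accountability", "Ethics", "Regulatory compliance", "Risk mitigation",
    "Digital governance", "Regulation", "Fairness", "Auditing", "Public trust",
]
DECISION_TERMS = [
    "Decision-making", "Predictive analytics", "Human oversight",
    "Decision autonomy", "Administration", "Efficiency metrics",
    "Strategic planning", "Risk-based decisions", "Public services",
]


def or_group(terms: list[str]) -> str:
    """Quote each term as an exact phrase and join the group with OR."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*groups: list[str]) -> str:
    """AND together one parenthesized OR-group per scope."""
    return " AND ".join(or_group(group) for group in groups)


if __name__ == "__main__":
    print(build_query(AI_TERMS, GOVERNANCE_TERMS, DECISION_TERMS))
```

Quoting every term keeps multi-word phrases such as "Public administration" intact; short acronyms such as "AI" or "ML" will still match broadly, which is one reason screening criteria are applied afterwards.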

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| IC1: Papers analyzing the integration of AI in governance decision-making processes, including case studies, frameworks, or empirical analyses of AI governance models (e.g., risk-based, self-regulation, hybrid). | EC1: Documents not written in English. |
| IC2: Studies comparing AI-driven governance models with traditional decision-making approaches. | EC2: Full text not available. |
| IC3: Research discussing ethical, operational, regulatory, or technical challenges, as well as governance strategies (e.g., AI audits, transparency laws, risk mitigation frameworks). | EC3: Papers focusing solely on AI’s technical aspects (e.g., algorithmic performance, model optimization) without relevance to governance decision-making. |
| IC4: Studies evaluating AI’s impact on decision autonomy, transparency, accountability, and efficiency in governance. | |
| IC5: Only the most recent and comprehensive version of duplicate works is included. | |
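
The criteria above can be read as a two-stage filter: exclusion criteria and the substantive inclusion criteria first, then de-duplication under IC5. The sketch below is illustrative only. The `Record` fields are hypothetical (the review does not publish a screening schema), it assumes a record qualifies if a reviewer judged at least one of IC1–IC4 satisfied, and it approximates "most recent and comprehensive" by publication year alone.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical screening record; field names are illustrative."""
    work_id: str              # shared by all versions of the same work
    year: int                 # used as a proxy for "most recent" (IC5)
    language: str
    full_text_available: bool
    technical_only: bool      # EC3: algorithmic focus, no governance link
    meets_inclusion: bool     # reviewer judged at least one of IC1-IC4 met


def is_eligible(record: Record) -> bool:
    """Apply EC1-EC3 and require the (assumed) IC1-IC4 judgment."""
    return (
        record.language == "English"          # EC1
        and record.full_text_available        # EC2
        and not record.technical_only         # EC3
        and record.meets_inclusion            # IC1-IC4
    )


def screen(records: list[Record]) -> list[Record]:
    """Keep eligible records, then the latest version of each work (IC5)."""
    latest: dict[str, Record] = {}
    for record in filter(is_eligible, records):
        kept = latest.get(record.work_id)
        if kept is None or record.year > kept.year:
            latest[record.work_id] = record
    return list(latest.values())
```

Filtering before de-duplication means that if the newest version of a work lacks a full text, an older eligible version is retained; a stricter reading of IC5 would de-duplicate first.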

| ID | Question | Answer & Score |
|---|---|---|
| QA1 | Does the study primarily focus on AI’s impact on governance decision-making structures (risk-based, centralized, hybrid), processes, or administrative frameworks? | ‘Yes: +1’, ‘No: 0’ |
| QA2 | Does the study assess how AI enhances policymaking and/or operational decision-making in governance institutions? | ‘Yes: +1’, ‘No: 0’ |
| QA3 | Does the study evaluate ethical, legal, or regulatory challenges, AI auditing, or transparency laws in AI-driven governance? | ‘Yes: +1’, ‘No: 0’ |
| QA4 | Does the study propose frameworks, models, or best practices (e.g., EU AI Act, US AI Bill of Rights) for AI integration in governance decision-making? | ‘Yes: +1’, ‘No: 0’ |
| QA5 | Does the study discuss AI risks (e.g., algorithmic bias, decision opacity, accountability concerns) and propose mitigation strategies? | ‘Yes: +1’, ‘Partially: 0.5’, ‘No: 0’ |
| QA6 | Has the study been published in a recognized source (high-impact journal or conference)? | Conferences: A: +1.5, B: +1, C: +0.5, not classified: 0; Journals: Q1: +2, Q2: +1.5, Q3 or Q4: +1, not classified: 0 |
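
Because each study’s quality score is the sum of QA1–QA5 plus the venue score from QA6, the maximum attainable score is 5 + 2 = 7. The sketch below encodes the scoring table as written; the function name `qa_score` and the string labels for answers and venue types are hypothetical conventions, and no inclusion cut-off is applied because none is given in this table.

```python
from typing import Optional

# Scores as listed in the quality-assessment table.
ANSWER_SCORES = {"yes": 1.0, "partially": 0.5, "no": 0.0}
VENUE_SCORES = {
    "conference": {"A": 1.5, "B": 1.0, "C": 0.5},
    "journal": {"Q1": 2.0, "Q2": 1.5, "Q3": 1.0, "Q4": 1.0},
}


def qa_score(answers: list[str], venue_type: str, rank: Optional[str]) -> float:
    """Sum QA1-QA5 answers plus the QA6 venue score (maximum 7.0)."""
    if len(answers) != 5:
        raise ValueError("expected answers for QA1-QA5")
    if "partially" in answers[:4]:
        raise ValueError("'partially' is only defined for QA5")
    total = sum(ANSWER_SCORES[answer] for answer in answers)
    # Unclassified venues (rank None or not in the table) contribute 0.
    total += VENUE_SCORES[venue_type].get(rank, 0.0) if rank else 0.0
    return total


# Example: Q2 journal, "No" on QA3, partial mitigation discussion on QA5.
print(qa_score(["yes", "yes", "no", "yes", "partially"], "journal", "Q2"))  # → 5.0
```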

| Category | Barriers | Strategic Responses | References |
|---|---|---|---|
| Ethical | Algorithmic bias, opaque decisions, and fairness concerns | Ethical audits, explainable AI (XAI), and fairness by design | [37,55,56,57] |
| Technical | Legacy systems, data quality issues, and lack of interoperability | Infrastructure upgrades, robust data governance, and standardized APIs | [18,36] |
| Institutional | Lack of training, siloed departments, and resistance to change | Digital literacy programs, interdepartmental coordination, and agile teams | [52,58] |
| Transparency | Absence of audit trails and hidden algorithms | Algorithm registers, public dashboards, and participatory audit mechanisms | [53,59] |
| Social acceptance | Citizen mistrust, fear of surveillance, or job displacement | Co-design processes, citizen engagement, and clear communication strategies | [54,60] |

| Stakeholder Group | Main Concerns | Theoretical Lens | References |
|---|---|---|---|
| Government officials | Efficiency, strategic alignment, and service delivery performance | DEG | [5,63] |
| Citizens and service users | Trust, fairness, privacy, and transparency | TAM | [34,37,67] |
| Technical experts | System reliability, ease of use, and cybersecurity | TAM | [46,64] |
| Legal and academic experts | Ethical safeguards, the rule of law, and democratic accountability | DEG | [53,65] |
| Private sector actors | Innovation, market viability, and public–private compliance | TAM + DEG | [66,68] |

| Dimension | AI Readiness Index | AI Maturity Models | AICM (Our Study) |
|---|---|---|---|
| Level of analysis | National (macro) | Organizational (mainly private) | Institutional (public sector, multi-level) |
| Approach | Benchmarking/scoring | Stage-based transformation | Capability-building and transformation roadmap |
| Actionability | Low | Moderate | High |
| Public governance orientation | Limited | Generic | Strong (contextualized and governance-specific) |
| Theoretical basis | None | Implicit/variable | TAM, DEG, DC |
| Adaptability to the Global South | Weak | Moderate | Strong |

| AICM Pillar | Median | IQR | Consensus Level |
|---|---|---|---|
| Data access and interoperability | 5.0 | 0.5 | Strong |
| Digital infrastructure and process redesign | 5.0 | 0.5 | Strong |
| Workforce competencies and learning agility | 5.0 | 0.0 | Unanimous |
| Institutional leadership and change management | 5.0 | 0.0 | Unanimous |
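
The consensus levels above are derived from the median and interquartile range (IQR) of the panel’s ratings for each pillar. The sketch below shows one way to compute them. It assumes a 1–5 Likert scale and uses the inclusive quartile method of Python’s `statistics.quantiles`; the review does not state its quartile method, and different methods can yield different IQRs for the same ratings. The cut-offs in `classify_consensus` are assumptions chosen only to reproduce the labels in the table, and the example ratings are illustrative, not the study’s data.

```python
import statistics


def classify_consensus(iqr: float) -> str:
    """Map an IQR to the table's labels; cut-offs are assumed, not sourced."""
    if iqr == 0.0:
        return "Unanimous"
    if iqr <= 0.5:
        return "Strong"
    return "Below strong"


def summarize(ratings: list[int]) -> tuple[float, float, str]:
    """Median, IQR, and consensus label for one pillar's Likert ratings."""
    q1, _, q3 = statistics.quantiles(ratings, n=4, method="inclusive")
    iqr = q3 - q1
    return float(statistics.median(ratings)), iqr, classify_consensus(iqr)


# Illustrative ratings only (not the panel's responses).
print(summarize([4, 4, 5, 5, 5, 5, 5]))  # → (5.0, 0.5, 'Strong')
```

An IQR of zero indicates that the middle half of the panel gave identical ratings; it can occur even when a single panelist dissents, so a stricter unanimity check would test whether all ratings are equal.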

| AICM Pillar | Round 1 (Median/IQR) | Round 2 (Median/IQR) | Round 3 (Median/IQR) |
|---|---|---|---|
| P1 | 4.0/1.0 | 4.5/0.5 | 5.0/0.5 |
| P2 | 4.0/1.0 | 4.5/0.5 | 5.0/0.5 |
| P3 | 4.5/0.5 | 5.0/0.0 | 5.0/0.0 |
| P4 | 4.0/1.0 | 5.0/0.5 | 5.0/0.0 |
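
The round-by-round values can be checked for convergence directly. The sketch below copies the medians and IQRs from the table and treats convergence as an IQR that never widens between rounds; this criterion, and the helper names `iqr_non_increasing` and `last_round_shift`, are assumptions for illustration, since the stopping rule is not stated alongside this table.

```python
# (median, IQR) per round, copied from the three-round table (P1-P4).
ROUNDS: dict[str, list[tuple[float, float]]] = {
    "P1": [(4.0, 1.0), (4.5, 0.5), (5.0, 0.5)],
    "P2": [(4.0, 1.0), (4.5, 0.5), (5.0, 0.5)],
    "P3": [(4.5, 0.5), (5.0, 0.0), (5.0, 0.0)],
    "P4": [(4.0, 1.0), (5.0, 0.5), (5.0, 0.0)],
}


def iqr_non_increasing(history: list[tuple[float, float]]) -> bool:
    """True if dispersion never widened from one round to the next."""
    iqrs = [iqr for _, iqr in history]
    return all(later <= earlier for earlier, later in zip(iqrs, iqrs[1:]))


def last_round_shift(history: list[tuple[float, float]]) -> float:
    """Absolute median change between the final two rounds."""
    return abs(history[-1][0] - history[-2][0])


for pillar, history in ROUNDS.items():
    iqr_drop = history[0][1] - history[-1][1]
    print(pillar, iqr_non_increasing(history), last_round_shift(history), iqr_drop)
```

Applied to the table, all four pillars show non-increasing IQRs, while the medians of P1 and P2 still moved by 0.5 between Rounds 2 and 3; a rule that also required median stability would read those two pillars differently from P3 and P4.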

| AICM Pillar | Objective | Recommended Actions |
|---|---|---|
| Pillar 1. Data Infrastructure and Interoperability | Create a reliable, machine-readable, and standardized data infrastructure | Digitize paper records; create an interoperable national data platform; enforce metadata and data-sharing standards |
| Pillar 2. Digital Infrastructure and Process Redesign | Redesign services to be AI-ready, integrated, and user-focused | Integrate existing systems through APIs; align new projects with privacy and security standards |
| Pillar 3. Workforce Competencies | Train public servants for ethical and effective AI governance | Develop training in AI ethics, auditing, and explainability; deploy competency frameworks through universities |
| Pillar 4. Institutional Leadership | Ensure strategic coordination and political ownership | Create a High Council for AI Governance; mandate sectoral AI roadmaps with evaluation and feedback mechanisms |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Aarab, A.; El Marzouki, A.; Boubker, O.; El Moutaqi, B. Integrating AI in Public Governance: A Systematic Review. Digital 2025, 5, 59. https://doi.org/10.3390/digital5040059

Aarab A, El Marzouki A, Boubker O, El Moutaqi B. Integrating AI in Public Governance: A Systematic Review. Digital. 2025; 5(4):59. https://doi.org/10.3390/digital5040059

Chicago/Turabian Style: Aarab, Amal, Abdenbi El Marzouki, Omar Boubker, and Badreddine El Moutaqi. 2025. "Integrating AI in Public Governance: A Systematic Review" Digital 5, no. 4: 59. https://doi.org/10.3390/digital5040059

APA Style: Aarab, A., El Marzouki, A., Boubker, O., & El Moutaqi, B. (2025). Integrating AI in Public Governance: A Systematic Review. Digital, 5(4), 59. https://doi.org/10.3390/digital5040059