1. Introduction

The need to evaluate museum websites has been highlighted by several researchers [1,2,3,4,5]. This evaluation is important because a museum achieves many of its goals through its online presence. Thus, the correct design of a museum website is important and needs to be evaluated.

However, implementing an evaluation experiment is not an easy task. Many usability evaluation questionnaires have been designed to test the usability of a product and/or software [6,7,8,9,10]. One of the most widely used is the System Usability Scale (SUS) questionnaire. It has only ten questions, which are rated on a five-point scale ranging from strongly disagree to strongly agree, and this structure makes it easy to answer. Moreover, it has been found that reliable results can be achieved even when only a very small number of users answer the questionnaire [11]. In addition, the SUS questionnaire has been widely used at a professional level and referenced in over 2000 scientific publications, which makes it one of the most effective usability assessment tools in terms of the validity and reliability of the produced results. This is the main reason for using the SUS questionnaire.

The evaluation of a website takes several criteria into account. For this purpose, different Multi-Criteria Decision Making (MCDM) theories have been applied in the past to evaluate websites with different content [2,3,4,12,13,14]. Some of these experiments involve the evaluation of museum websites [2,3,4]. In this paper, we focus on museum website evaluation and use the evaluation of natural history museums’ websites as a case study. For this evaluation experiment, MCDM methods are combined and compared.

After defining the criteria for evaluating natural history museums’ websites, we present how the Analytic Hierarchy Process (AHP) [15] is used to calculate the importance weights of these criteria. AHP is then combined, in turn, with Fuzzy TOPSIS (Technique for Order of Preference by Similarity to Ideal Solution) [16] and Fuzzy VIKOR (VIseKriterijumska Optimizacija I Kompromisno Resenje) [17,18]. Fuzzy MCDM theories are used because the evaluation results are expressed in linguistic terms, and fuzzy theories are considered more appropriate for modeling the vagueness of such judgments.

AHP’s rationale is based on pairwise comparisons: both the criteria and the alternatives are compared pairwise. The validity and consistency of the pairwise comparisons can be confirmed through a consistency test [19]. The consistency test is of great importance, since the quality of the results strictly depends on the consistency of the pairwise comparison matrices; without such a test, wrong, poor, or misleading results may be obtained [20]. For this reason, a consistency test is performed after AHP has been applied.

After the consistency of the AHP weights has been checked and confirmed, two other theories are used to rank the alternatives. This is because AHP is based on pairwise comparisons; therefore, if many alternatives are evaluated, the pairwise comparisons become time-consuming and difficult to carry out. As a result, other MCDM theories with different rationales have been exploited; in particular, Fuzzy VIKOR and Fuzzy TOPSIS have been selected. These two theories are compared with each other, and their effectiveness is compared with that of the System Usability Scale (SUS) questionnaire [6].

The focus of this paper is twofold: (1) checking the consistency of AHP for calculating the weights of the criteria and (2) comparing Fuzzy TOPSIS and Fuzzy VIKOR, not only with each other but mainly with a usability evaluation questionnaire. The main aim is to check the efficiency and correctness of the MCDM methods by testing their consistency and comparing them with other methods that are considered successful in website evaluation.

2. Dimensions and Criteria

The criteria used for the evaluation of the websites of natural history museums have been selected from the review of inspection evaluation experiments by Kabassi [2] and the study of Sylaiou et al. [21]. Ref. [2] offers a state-of-the-art review of evaluation experiments on museums’ websites and draws conclusions on the criteria used. Sylaiou et al. [21], on the other hand, focus their work on virtual museums; their study does not address natural history museums’ websites specifically but museums in general. However, since no study focuses specifically on natural history museums’ websites, related studies on museums’ websites have been selected as relevant here. In particular, Ref. [2] contains a detailed list of all the criteria that have been used in studies of museums’ websites in general and are included in one or more of the reviewed papers. Most of the criteria have been taken from [2], and the criteria related to VR interaction have been taken from [21].

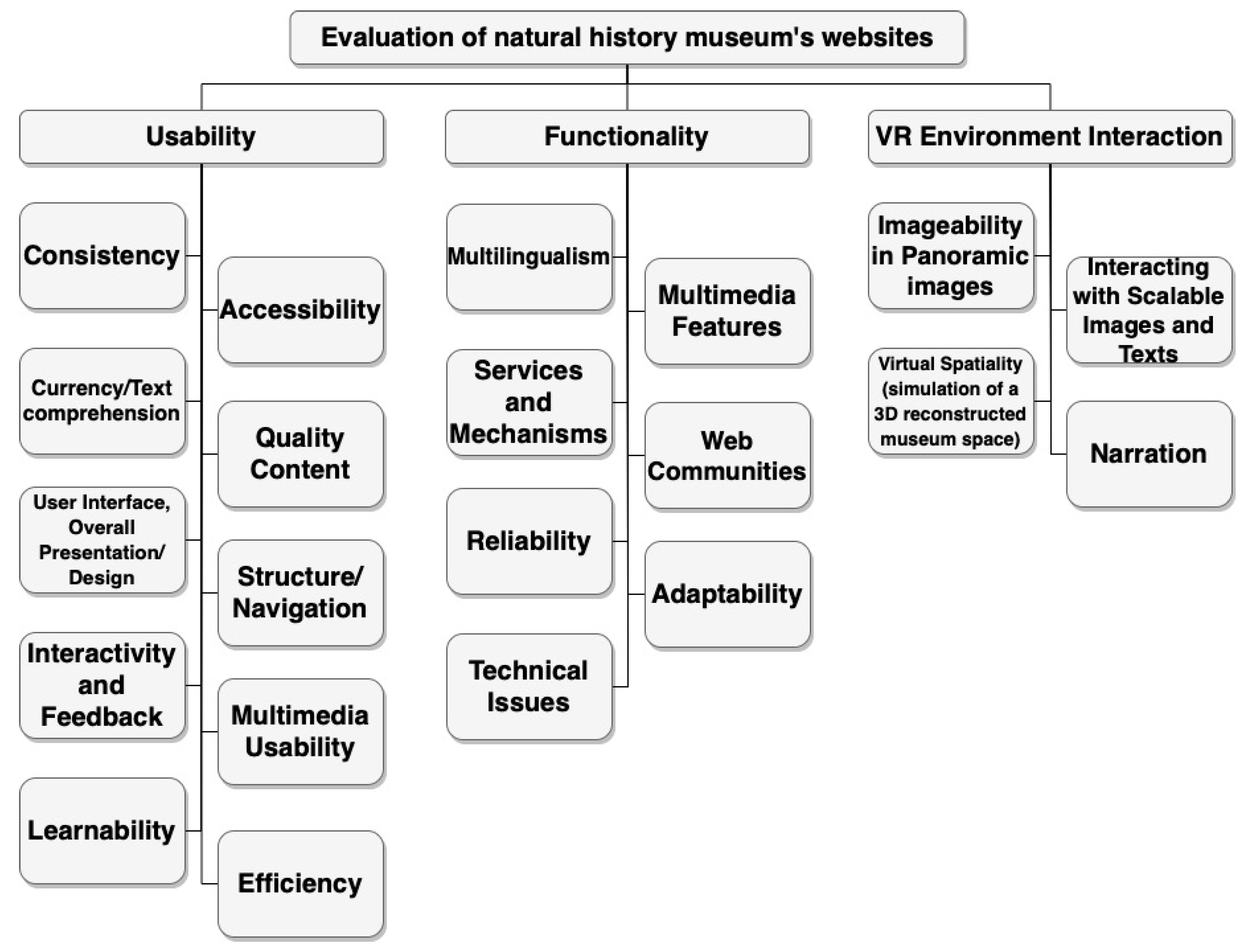

As a result, the final set of criteria has been formed by taking into account the criteria that seem most appropriate for this particular evaluation. The three evaluation dimensions of the proposed framework are:

Usability,

Functionality,

VR interaction.

The first two dimensions have been drawn from the study [19], while the third dimension has been drawn from the study [21]. Each of these dimensions is further differentiated into more specific characteristics (sub-criteria), whose presence or absence helps to determine the quality of a website.

The dimension of “Usability” is further analyzed through the following ten quality characteristics: Consistency—uc1 (checks if the website displays uniformity between its different elements); Accessibility—uc2 (measures how easily the website can be accessed by people with disabilities); Currency/Text comprehension—uc3 (checks if the content of the website is up to date and the text presented on it is easy to understand); Quality content—uc4 (checks if the website contains information about the museum and its exhibits, e.g., images of the exhibits, working hours, ticket prices, physical address, historical information about the museum, the museum’s e-mail, etc.); User interface, overall presentation/design—uc5 (checks whether the design of the website is beautiful and attractive); Structure/navigation—uc6 (checks whether the website has an easily understood structure, menu and navigation system); Interactivity and feedback—uc7 (checks whether the website has synchronous and asynchronous communication services, e.g., links to social networks, chat and video conferencing services, e-mail services, newsletter alerts, online help, etc.); Multimedia usability—uc8 (refers to the correct use of multimedia in the website in a way that enhances the user experience and enriches the user’s knowledge about the exhibits); Learnability—uc9 (refers to how quickly the user can learn to use the website); and Efficiency—uc10 (checks whether actions within the website, such as subscribing through a form or booking and paying for tickets online, can be performed quickly and successfully).

The dimension of “Functionality” depends on seven criteria: Multilingualism—fc1 (checks whether the website can display its content in more than one language); Multimedia features—fc2 (refers to whether the website uses different types of multimedia to convey its information); Services and mechanisms—fc3 (refers to whether the website allows the web user to use other web services and applications, such as online ticket purchasing, an online museum shop, e-lecture services, etc.); Web communities—fc4 (checks if the website enhances the creation and maintenance of online user communities); Reliability—fc5 (checks whether the website provides the online services it claims to offer reliably and accurately); Adaptability—fc6 (refers to the ability of the website to adapt the structure, content or presentation of its information in response to the users’ interaction with it); and Technical issues—fc7 (checks if the website is easily accessible at any time of any day via any operating system and any web browser; it also checks if the website uses unusual file types).

The criteria evaluated within the context of “VR interaction” are: Imageability in panoramic images—VRc1 (checks if the museum website uses panoramic images to simulate the real-world museum space; the images can be manipulated through a set of interaction tools, such as rotate and pan or zoom in and out, allowing users to experience and interactively navigate the simulated museum space); Interacting with scalable images and texts—VRc2 (refers to whether the website provides high-resolution images of the museum artifacts, offering the opportunity to examine them in detail by applying zoom tools over the high-resolution images); Virtual spatiality—VRc3 (checks if the website provides a 3D interactive representation of the museum environment and its exhibits, such that the user, through an avatar, can navigate freely in the simulated museum space and interact with the information associated with the 3D representations of museum exhibits); and Narration—VRc4 (corresponds to whether the website contains embedded narrative videos through which storytelling is inserted into the user’s experience to offer a personal view and story engagement).

3. Alternative Museum Websites

The museums’ websites that have been selected for evaluation and comparison in the current experiment derive from research that was launched in November 2021. The first step of this research was the creation of a list of museums’ websites by searching: (a) the International Committee for Museums and Collections of Natural History (NATHIST), (b) the Natural Heritage Search portal and (c) Wikipedia. This search resulted in more than 400 museums. Therefore, a filtering process had to be applied in order to create a subset of museums.

First of all, the museums with an active link to an official webpage were identified, eliminating the websites that were out of service. In this way, the initial number of museums was reduced to 135. Considering that museums have traditionally been among the most popular tourist attractions, an additional search for the most popular natural history museums was undertaken. For this purpose, the lists of the most popular natural history museums displayed on various travel platforms and guides, such as Tripadvisor, Matador Network, Fodor’s Travel and Triponzy, as well as on the WorldAtlas website, were taken into consideration. Furthermore, the list of the most visited natural history museums on Wikipedia was also taken into account. Support for the English language was the final filtering criterion used to further reduce the dataset of 135 museums. After this additional filtering process, the number of museums was restricted to 33.

In the design stage of the experiment, empirical methods were chosen in order to evaluate the use of the selected museums’ webpages by real users. To avoid any discomfort to the evaluation group that could lead to non-substantial participation, or even to a user’s refusal to participate at all, it was deemed necessary to reduce the dataset further. Taking into account the popularity of the selected museums from a touristic viewpoint, their annual visitor numbers, and their support for the English language, the top three of the 33 museums derived in the previous step of the research were selected. In addition, it was considered that one of the most popular Greek natural history museums should be included in this evaluation set, so as to examine the quality of its website in relation to the three world-renowned ones.

Thus, the final dataset of museum websites selected for this study is presented in Table 1.

4. Calculating the Weights of Criteria

4.1. AHP for Estimating the Weights of the Criteria

The dimensions of evaluation, along with their more specific criteria, are not equally important when evaluating a museum’s website. For this reason, the AHP method is used to calculate the weights of the dimensions and the criteria. Specifically, the first two basic steps of AHP implementation were applied [22], which involve: (1) development of the goal hierarchy and (2) formation of the pairwise comparison matrices of the criteria.

AHP is considered one of the most popular MCDM methods, and it was selected over others because it provides a formal way of quantifying the qualitative evaluation criteria of the examined websites [23]. In addition, its ability to support decisions by comparing pairs of uncertain qualitative and quantitative factors, as well as its ability to model expert opinion [24], are two more reasons that favored its selection. The basic steps for the implementation of AHP in an inspection method are:

Step 1. Develop the goal hierarchy: In this step, AHP is implemented similarly to many other website evaluations in different domains [3,25,26,27].

1.a: Setting the goal: Evaluation of the natural history museums’ websites.

1.b: Forming the set of criteria: The set of criteria has been formed taking into account the evaluation experiments of [2,21], which involved the evaluation of museum websites (Section 2).

1.c: Forming the set of alternatives: The set of alternatives consists of the natural history museum websites presented in Section 3.

1.d: Forming the hierarchical structure: In this step, the hierarchical structure is formed so that the criteria can be compared in pairs (Figure 1).

Step 2. Selecting the evaluators: The group of evaluators consisted of human experts. The proposed expert evaluation group should include experts both from computer science and from the scientific fields covered by natural history museums (Botany, Zoology, Biology, Geology, Paleontology, Ecology, Biotechnology and Biochemistry). This double expert group is chosen in order to mitigate the subjectivity of the results that the inspection evaluation method introduces [23], which should increase the reliability of the results. Thus, a group of evaluators was formed which included 1 software engineer, 2 web designers, 1 biologist, 1 chemical engineer–biochemist, and 1 museum employee; both the biologist and the chemical engineer–biochemist had previous experience in software engineering. Both the software engineer and the web designers had experience in web accessibility.

Step 3. Setting up a pairwise comparison matrix of criteria: In this step, comparison matrices are formed in order to compare pairwise the criteria of the same level in the hierarchical structure created during the application of AHP (Figure 1). More specifically, each of the six experts was first asked to answer four questionnaires, each corresponding to a pairwise comparison of the elements belonging to the same level of the hierarchy. This process resulted in twenty-four pairwise comparison matrices, i.e., six matrices for the pairwise comparison of the evaluation dimensions, six for the sub-criteria of Usability, six for the sub-criteria of Functionality and six for the sub-criteria of VR interaction. Each comparison matrix was completed with values ranging from 1/9 to 9 (P = {1, 2, 3, 4, 5, 6, 7, 8, 9, 1/2, 1/3, 1/4, 1/5, 1/6, 1/7, 1/8, 1/9}), as Saaty [15,28] proposed.

After this process, the final matrices of pairwise comparisons between the evaluation dimensions and their respective sub-criteria were formed. Since the procedure is effectively a form of group decision making, each cell of a final matrix is calculated as the geometric mean of the values in the corresponding cell of the individual experts’ matrices. This procedure resulted in four aggregated comparison matrices (Table 2, Table 3, Table 4 and Table 5).
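The cell-wise aggregation described above can be sketched as follows. This is a minimal illustration rather than the authors’ code: the function name `aggregate_geometric_mean` and the two-expert example matrices are hypothetical. A useful property of the geometric mean is that it preserves reciprocity, so if every expert’s matrix satisfies a_ji = 1/a_ij, the aggregated matrix does too.

```python
import math


def aggregate_geometric_mean(matrices):
    """Combine several experts' n x n pairwise comparison matrices cell by cell.

    Each aggregated cell is the geometric mean of the corresponding cells,
    i.e. (a_ij^(1) * ... * a_ij^(k)) ** (1/k) for k experts.
    """
    k = len(matrices)
    n = len(matrices[0])
    return [
        [math.prod(m[i][j] for m in matrices) ** (1.0 / k) for j in range(n)]
        for i in range(n)
    ]


# Hypothetical judgments of two experts comparing two criteria (Saaty 1-9 scale).
expert_1 = [[1, 2], [1 / 2, 1]]
expert_2 = [[1, 8], [1 / 8, 1]]
aggregated = aggregate_geometric_mean([expert_1, expert_2])
print(aggregated)  # cell (0, 1) is sqrt(2 * 8) = 4 and cell (1, 0) is its reciprocal 1/4
```

In the study, the same operation would be applied to the six experts’ matrices for each of the four levels of the hierarchy.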

After the pairwise comparisons were made, the weights were estimated using the ‘Priority Estimation Tool’ (PriEst), an open-source decision-making software package that implements the AHP method. The results are presented in Figure 2.

4.2. Consistency of the Obtained Degree of Importance for the Dimensions and the Criteria

In order to confirm the consistency of the results, a consistency test is applied to the AHP results.

Let $C = [c_i]_{n \times 1}$ signify an n-dimensional column vector of the sums of the weighted values of the importance degrees of the criteria:

$$C = A\,W, \qquad c_i = \sum_{j=1}^{n} a_{ij}\, w_j, \quad i = 1, \dots, n$$

where $A = [a_{ij}]_{n \times n}$ is the aggregated pairwise comparison matrix and $W = [w_j]_{n \times 1}$ is the vector of the weights of the criteria.

The consistency values for the criteria can be specified by the vector $D = [d_i]_{n \times 1}$, with a representative element $d_i$ computed as follows:

$$d_i = \frac{c_i}{w_i}, \quad i = 1, \dots, n$$

Saaty [28] recommended using the maximal eigenvalue $\lambda_{\max}$ to assess the validity of the measurements, as follows:

$$\lambda_{\max} = \frac{1}{n} \sum_{i=1}^{n} d_i$$

$\lambda_{\max}$ is further used to calculate a consistency index (CI):

$$CI = \frac{\lambda_{\max} - n}{n - 1}$$

The closer the maximal eigenvalue $\lambda_{\max}$ is to n, the more consistent the assessment. Further, a consistency ratio (CR) is calculated to check the conformity:

$$CR = \frac{CI}{RI}$$

RI represents the average random index, whose value is estimated for pairwise comparison matrices of different orders. The values of RI used in our experiment are given in Table 6.

The critical value for CR is 0.1. Higher values may reveal inconsistency of the comparison matrix, in which case the procedure may be repeated.
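The consistency relations above can be sketched as follows. The study used PriEst, and which prioritization method it applied is not stated here, so this sketch assumes Saaty’s eigenvector method, approximated by power iteration. The RI values are Saaty’s commonly cited ones and are an assumption, since Table 6 is not reproduced here; the function names and the example matrix are hypothetical.

```python
# Saaty's commonly cited random index (RI) values for matrix orders 1-10.
# Assumption: Table 6 of the paper may list slightly different values.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def priority_vector(a, max_iter=1000, tol=1e-12):
    """Approximate the principal eigenvector of A by power iteration, normalized to sum to 1."""
    n = len(a)
    w = [1.0 / n] * n
    for _ in range(max_iter):
        v = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        v = [x / total for x in v]
        if max(abs(x - y) for x, y in zip(v, w)) < tol:
            return v
        w = v
    return w


def consistency_ratio(a, w):
    """Return (lambda_max, CI, CR) following C = A W, d_i = c_i / w_i."""
    n = len(a)
    c = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]  # C = A W
    d = [c[i] / w[i] for i in range(n)]  # consistency vector D
    lambda_max = sum(d) / n
    ci = (lambda_max - n) / (n - 1) if n > 1 else 0.0
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0  # 1x1 and 2x2 matrices are always consistent
    return lambda_max, ci, cr


# Hypothetical, perfectly consistent 3 x 3 matrix (a_13 = a_12 * a_23).
a = [[1, 2, 4], [1 / 2, 1, 2], [1 / 4, 1 / 2, 1]]
w = priority_vector(a)
lambda_max, ci, cr = consistency_ratio(a, w)
print([round(x, 4) for x in w], round(lambda_max, 4), round(cr, 4))
```

For a perfectly consistent matrix, $\lambda_{\max} = n$ and CR = 0; a matrix whose judgments contradict one another yields CR above the 0.1 threshold.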

The Consistency Index (CI) and the Consistency Ratio (CR) were calculated for each of the four aggregated pairwise comparison matrices (Table 2, Table 3, Table 4 and Table 5), revealing a CR value below 0.1 for each of them: the matrix of the evaluation dimensions and the matrices of the sub-criteria of usability, functionality and VR interaction. With CR < 0.1 for each of the four pairwise comparison matrices, we assume that the expert group evaluation, as well as the whole AHP hierarchy developed, is consistent, so the calculated importance weights of the criteria may be used in further calculations.

5. Fuzzy VIKOR vs. Fuzzy TOPSIS

In the second stage of the evaluation experiment, we decided to implement empirical evaluation methods. For this purpose, we created a Likert-scale questionnaire in which each question corresponded to one of the sub-criteria of the evaluation dimensions. The questionnaire consisted of two sections. The first section provided general information about the purpose of the questionnaire and instructions for completing it, and presented the twenty-one questions that each user was required to answer for each of the four museum websites evaluated. The second section consisted of six optional demographic questions. The questionnaire was created using Google Forms and distributed to users electronically through e-mail and social media. The data collection process lasted 51 days, during which we collected a sample of 72 answers from real users of different ages, genders and cognitive backgrounds. When the data collection process was completed, we used the fuzzy VIKOR MCDM method to process the data and rank the museums’ websites.

The VIKOR method is a compromise ranking MCDM method introduced by Opricovic [17] to solve MCDM problems with conflicting and non-commensurable criteria [29]. This technique has a simple computation procedure that allows simultaneous consideration of the closeness to the ideal and to the anti-ideal alternatives [30].

To deal with the imprecise or subjective data that natural-language expressions of thoughts and judgments in the evaluation questionnaire may introduce, fuzzy logic theory [31] was adopted. As with most MCDM techniques, the VIKOR method has been extended to accommodate subjectivity and imprecise data in a fuzzy environment [18]. A number of applications from various disciplines have been carried out using the fuzzy VIKOR method [17,24,30,32,33,34,35,36]. To implement the fuzzy VIKOR method in the second stage of our evaluation experiment, the following steps were taken:

Step 1. Form a new group of decision makers: Based on the study of [5], which attempts to establish a taxonomy of museum website users, a new group of evaluators was formed that includes not only expert users but other categories of users as well. More specifically, the new group of evaluators involved users from the general public, undergraduate and postgraduate students, academics/teachers and museum staff.

Step 2. Conversion of the evaluation criteria into linguistic terms: The term linguistic variable refers to a variable whose values are words or sentences in a natural or artificial language. We applied triangular fuzzy numbers to assign values to the criteria that corresponded to the questions of the questionnaire. A triangular fuzzy number is a special class of fuzzy number that is defined by three real numbers, expressed as $\tilde{A} = (a, b, c)$ with $a \le b \le c$. Here, $b$ is the most possible value of the fuzzy number $\tilde{A}$, and $a$ and $c$ are the lower and upper bounds, respectively, which are often used to illustrate the fuzziness of the evaluated data [37]. We used the triangular fuzzy numbers presented in Table 7 [38].

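Step 3. Construction of the aggregated fuzzy decision matrix: The aggregated fuzzy decision matrix is constructed by polling the decision makers’ opinions to obtain an aggregated fuzzy rating of each alternative. Let k be the number of decision makers in the group, and let $\tilde{x}_{ijt} = (a_{ijt}, b_{ijt}, c_{ijt})$ be the rating of alternative i with respect to criterion j given by decision maker t. The aggregated fuzzy rating $\tilde{x}_{ij} = (a_{ij}, b_{ij}, c_{ij})$ of the alternatives with respect to each criterion can be calculated as:

$$a_{ij} = \min_{t}\{a_{ijt}\}, \qquad b_{ij} = \frac{1}{k}\sum_{t=1}^{k} b_{ijt}, \qquad c_{ij} = \max_{t}\{c_{ijt}\}$$

The aggregated ratings are expressed in matrix format as follows:

$$\tilde{D} = \begin{bmatrix} \tilde{x}_{11} & \tilde{x}_{12} & \cdots & \tilde{x}_{1n} \\ \vdots & \vdots & \ddots & \vdots \\ \tilde{x}_{m1} & \tilde{x}_{m2} & \cdots & \tilde{x}_{mn} \end{bmatrix}$$

where i = 1, …, m denotes the alternatives and j = 1, …, n denotes the criteria.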
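A minimal sketch of this aggregation rule follows, assuming each rating is stored as an (a, b, c) tuple and that the lower bound, most possible value and upper bound are aggregated by minimum, mean and maximum, respectively. The function names and the example triangular fuzzy numbers are hypothetical placeholders, not the linguistic scale of Table 7.

```python
from typing import List, Tuple

TFN = Tuple[float, float, float]  # triangular fuzzy number (a, b, c) with a <= b <= c


def aggregate_ratings(ratings: List[TFN]) -> TFN:
    """Aggregate k decision makers' TFN ratings of one alternative on one criterion:
    lower bound = minimum, most possible value = mean, upper bound = maximum."""
    k = len(ratings)
    return (
        min(r[0] for r in ratings),
        sum(r[1] for r in ratings) / k,
        max(r[2] for r in ratings),
    )


def aggregate_matrix(individual: List[List[List[TFN]]]) -> List[List[TFN]]:
    """individual[t][i][j] is decision maker t's rating of alternative i on criterion j."""
    m = len(individual[0])
    n = len(individual[0][0])
    return [
        [aggregate_ratings([dm[i][j] for dm in individual]) for j in range(n)]
        for i in range(m)
    ]


# Hypothetical ratings by three respondents (placeholder TFNs).
print(aggregate_ratings([(1, 3, 5), (3, 5, 7), (5, 7, 9)]))  # (1, 5.0, 9)
```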

Step 4. Defuzzify the aggregated decision matrix: The aggregated fuzzy decision matrix created in Step 3 is defuzzified into crisp values using the Best Non-fuzzy Performance (BNP) value, which is based on the center of area (COA) defuzzification method:

$$f_{ij} = BNP_{ij} = \frac{(c_{ij} - a_{ij}) + (b_{ij} - a_{ij})}{3} + a_{ij}$$

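Step 5. Determine the best crisp value $f_j^{*}$ and the worst crisp value $f_j^{-}$ of the crisp ratings $f_{ij}$ for all criteria (j = 1, 2, …, n), using the following relations (for benefit criteria):

$$f_j^{*} = \max_i f_{ij}, \qquad f_j^{-} = \min_i f_{ij}$$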

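Step 6. Compute the value $S_i$ using the relation:

$$S_i = \sum_{j=1}^{n} w_j \, \frac{f_j^{*} - f_{ij}}{f_j^{*} - f_j^{-}}$$

where $w_j$ is the weight of criterion j obtained through AHP.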

Step 7. Compute the value $R_i$ using the relation:

$$R_i = \max_{j} \left[ w_j \, \frac{f_j^{*} - f_{ij}}{f_j^{*} - f_j^{-}} \right]$$

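Step 8. Compute the values $S^{*}$, $S^{-}$, $R^{*}$ and $R^{-}$ using the relations, respectively:

$$S^{*} = \min_i S_i, \qquad S^{-} = \max_i S_i, \qquad R^{*} = \min_i R_i, \qquad R^{-} = \max_i R_i$$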

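Step 9. Compute the value $Q_i$ using the relation:

$$Q_i = v \, \frac{S_i - S^{*}}{S^{-} - S^{*}} + (1 - v) \, \frac{R_i - R^{*}}{R^{-} - R^{*}}$$

where v is the weight of the strategy of maximum group utility (commonly set to 0.5) and 1 − v is the weight of the individual regret.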

Step 10. Rank the alternatives by sorting their S, R and Q values: the alternatives are sorted by their values of S, R and Q, giving three ranking lists. Since lower values are better in VIKOR, the alternative with the smallest Q value is ranked first. The final ranking of the evaluated museums’ websites is presented in Table 8.
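Steps 4 to 10 can be sketched as a single function. This is a minimal illustration under stated assumptions: all criteria are benefit criteria, the strategy weight v defaults to 0.5 (a common choice; the value used in the study is not stated here), a criterion on which all alternatives score equally contributes zero, and the acceptable-advantage and acceptable-stability conditions that VIKOR uses to confirm a compromise solution are omitted. The names `fuzzy_vikor` and `bnp` and the example matrix are hypothetical.

```python
def bnp(tfn):
    """Best Non-fuzzy Performance (center of area) of a TFN (a, b, c)."""
    a, b, c = tfn
    return ((c - a) + (b - a)) / 3 + a


def fuzzy_vikor(aggregated, weights, v=0.5):
    """Return (S, R, Q, ranking) for an m x n aggregated fuzzy matrix of benefit criteria.

    ranking lists alternative indices from best (smallest Q) to worst.
    """
    f = [[bnp(x) for x in row] for row in aggregated]  # Step 4: defuzzify
    m, n = len(f), len(f[0])
    f_best = [max(f[i][j] for i in range(m)) for j in range(n)]  # Step 5
    f_worst = [min(f[i][j] for i in range(m)) for j in range(n)]

    def weighted_gap(i, j):
        span = f_best[j] - f_worst[j]
        # A criterion on which all alternatives score equally does not discriminate.
        return 0.0 if span == 0 else weights[j] * (f_best[j] - f[i][j]) / span

    s = [sum(weighted_gap(i, j) for j in range(n)) for i in range(m)]  # Step 6
    r = [max(weighted_gap(i, j) for j in range(n)) for i in range(m)]  # Step 7
    s_star, s_minus = min(s), max(s)  # Step 8
    r_star, r_minus = min(r), max(r)

    def scaled(x, lo, hi):
        return 0.0 if hi == lo else (x - lo) / (hi - lo)

    q = [v * scaled(s[i], s_star, s_minus) + (1 - v) * scaled(r[i], r_star, r_minus)
         for i in range(m)]  # Step 9
    ranking = sorted(range(m), key=lambda i: q[i])  # Step 10: lower Q is better
    return s, r, q, ranking


# Hypothetical 2 alternatives x 2 criteria with AHP-style weights.
matrix = [[(5, 5, 5), (3, 3, 3)],
          [(3, 3, 3), (5, 5, 5)]]
s, r, q, ranking = fuzzy_vikor(matrix, weights=[0.6, 0.4])
print(q, ranking)
```

Here the first alternative is worse only on the less important criterion, so it obtains the smaller Q and is ranked first.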

The results of the fuzzy VIKOR method are compared with those of the fuzzy TOPSIS method. The TOPSIS technique was proposed by Hwang and Yoon [16] and extended to fuzzy TOPSIS by Deng et al. [39]. These particular MCDM methods were chosen because both fuzzy VIKOR and fuzzy TOPSIS produce a crisp value for each alternative on which their ranking is based.

The first three steps of the fuzzy TOPSIS implementation in the evaluation experiment are the same as the first three steps of the fuzzy VIKOR implementation. Thus, the implementation of the fuzzy TOPSIS method presupposes that the weights of the criteria have been estimated through AHP, and it includes the following steps:

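Step 1. Compute the normalized aggregated fuzzy decision matrix: The aggregated fuzzy decision matrix $\tilde{D} = [\tilde{x}_{ij}]_{m \times n}$ that was created by polling the decision makers’ opinions is normalized using the linear scale transformation proposed by Chen [40]. The normalized fuzzy number $\tilde{r}_{ij}$ for each cell $\tilde{x}_{ij} = (a_{ij}, b_{ij}, c_{ij})$ of the aggregated fuzzy decision matrix is given by the formula (benefit criteria):

$$\tilde{r}_{ij} = \left( \frac{a_{ij}}{c_j^{*}}, \frac{b_{ij}}{c_j^{*}}, \frac{c_{ij}}{c_j^{*}} \right), \qquad c_j^{*} = \max_i c_{ij}$$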

Step 2. Compute the weighted normalized fuzzy decision matrix: Since the importance of each evaluation criterion is different and is expressed through its weight of importance as calculated through the AHP process, the weighted normalized fuzzy numbers are calculated using the formula:

$$\tilde{v}_{ij} = \tilde{r}_{ij} \otimes w_j$$

where $w_j$ is the AHP weight of criterion j. These values are used to construct the weighted normalized fuzzy decision matrix of the following format:

$$\tilde{V} = [\tilde{v}_{ij}]_{m \times n}, \qquad i = 1, \dots, m, \; j = 1, \dots, n$$

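Step 3. Compute the Fuzzy Positive Ideal Solution (FPIS) and the Fuzzy Negative Ideal Solution (FNIS):

$$A^{*} = (\tilde{v}_1^{*}, \tilde{v}_2^{*}, \dots, \tilde{v}_n^{*}) \quad \text{and} \quad A^{-} = (\tilde{v}_1^{-}, \tilde{v}_2^{-}, \dots, \tilde{v}_n^{-})$$

where $\tilde{v}_j^{*}$ and $\tilde{v}_j^{-}$ are the best and worst reference values for criterion j (in Chen’s original formulation, $\tilde{v}_j^{*} = (1, 1, 1)$ and $\tilde{v}_j^{-} = (0, 0, 0)$).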

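Step 4. Estimation of the distance of each alternative from the FPIS ($d_i^{*}$) and from the FNIS ($d_i^{-}$): The two distances $d_i^{*}$ and $d_i^{-}$ of each alternative i are calculated using the Euclidean (vertex) distance:

$$d_i^{*} = \sum_{j=1}^{n} d(\tilde{v}_{ij}, \tilde{v}_j^{*}), \qquad d_i^{-} = \sum_{j=1}^{n} d(\tilde{v}_{ij}, \tilde{v}_j^{-})$$

where, for two triangular fuzzy numbers $\tilde{x} = (x_1, x_2, x_3)$ and $\tilde{y} = (y_1, y_2, y_3)$,

$$d(\tilde{x}, \tilde{y}) = \sqrt{\tfrac{1}{3}\left[ (x_1 - y_1)^2 + (x_2 - y_2)^2 + (x_3 - y_3)^2 \right]}$$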

Step 5. Estimation of the closeness coefficient of each alternative:

$$CC_i = \frac{d_i^{-}}{d_i^{*} + d_i^{-}}$$

The closeness coefficient is used to determine the ranking order of all the alternatives. The value of the closeness coefficient for each alternative and the final ranking of the websites are presented in Table 9.

Note that in TOPSIS, unlike VIKOR, the larger the value of the closeness coefficient, the better the alternative.
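The five fuzzy TOPSIS steps can be sketched in the same style as the VIKOR sketch. This is a minimal illustration, assuming benefit criteria, crisp AHP weights, and FPIS/FNIS fixed at (1, 1, 1) and (0, 0, 0) as in Chen’s formulation; the variant used in the study may differ. The name `fuzzy_topsis` and the example matrix are hypothetical.

```python
import math


def fuzzy_topsis(aggregated, weights):
    """Return (closeness coefficients, ranking) for an m x n aggregated fuzzy matrix
    of benefit criteria; ranking lists alternative indices from best (largest CC) to worst."""
    m, n = len(aggregated), len(aggregated[0])
    # Step 1: linear scale normalization by the largest upper bound c_j* of each criterion.
    c_star = [max(aggregated[i][j][2] for i in range(m)) for j in range(n)]
    normalized = [[tuple(x / c_star[j] for x in aggregated[i][j]) for j in range(n)]
                  for i in range(m)]
    # Step 2: multiply each normalized TFN by the crisp AHP weight of its criterion.
    weighted = [[tuple(x * weights[j] for x in normalized[i][j]) for j in range(n)]
                for i in range(m)]
    # Step 3: FPIS and FNIS as in Chen's formulation (assumption).
    fpis, fnis = (1.0, 1.0, 1.0), (0.0, 0.0, 0.0)

    def distance(x, y):
        """Vertex (Euclidean) distance between two TFNs."""
        return math.sqrt(sum((xk - yk) ** 2 for xk, yk in zip(x, y)) / 3)

    # Step 4: distances to FPIS and FNIS, summed over the criteria.
    d_plus = [sum(distance(weighted[i][j], fpis) for j in range(n)) for i in range(m)]
    d_minus = [sum(distance(weighted[i][j], fnis) for j in range(n)) for i in range(m)]
    # Step 5: closeness coefficient; larger is better.
    cc = [d_minus[i] / (d_plus[i] + d_minus[i]) for i in range(m)]
    ranking = sorted(range(m), key=lambda i: cc[i], reverse=True)
    return cc, ranking


matrix = [[(5, 5, 5), (3, 3, 3)],
          [(3, 3, 3), (5, 5, 5)]]
cc, ranking = fuzzy_topsis(matrix, weights=[0.6, 0.4])
print([round(x, 3) for x in cc], ranking)  # [0.42, 0.38] [0, 1]
```

On this hypothetical matrix, both sketches rank the alternatives in the same order, with opposite "better" directions for Q and CC.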

6. System Usability Scale Questionnaire

In this section, a comprehensive usability evaluation of the four examined museum websites is carried out using the SUS questionnaire [6]. During the data collection phase of the second stage of the evaluation experiment, the SUS questionnaire was distributed electronically to real museum website users, simultaneously with the questionnaire described above. After 51 days, we had collected 62 answers, and the final SUS score for each of the examined natural history museums’ websites was derived as the mean of the SUS scores of the 62 participants.

A SUS score above 68 is considered above average, and a score below 68 is considered below average. Although the SUS score is not a percentage, its value lies in the range 0–100.
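For readers unfamiliar with how a SUS score is obtained, the standard scoring rule is sketched below: each of the ten items is answered on a 1–5 scale, odd-numbered (positively worded) items contribute the answer minus 1, even-numbered (negatively worded) items contribute 5 minus the answer, and the sum is multiplied by 2.5 to give a value between 0 and 100. The function names and the participants’ answers are hypothetical.

```python
def sus_score(responses):
    """SUS score (0-100) of one participant from ten responses on a 1-5 scale, item 1 first."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    total = 0
    for item, answer in enumerate(responses, start=1):
        # Odd (positively worded) items: answer - 1; even (negatively worded) items: 5 - answer.
        total += (answer - 1) if item % 2 == 1 else (5 - answer)
    return total * 2.5


def mean_sus(all_responses):
    """Mean SUS score of a website over all participants."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)


# Hypothetical answers of two participants for one website.
participants = [[4, 2, 4, 2, 5, 1, 4, 2, 4, 2],
                [3, 3, 3, 3, 3, 3, 3, 3, 3, 3]]
print(mean_sus(participants))  # 65.0 (scores 80.0 and 50.0), below the 68 benchmark
```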

The mean SUS score and the final ranking of each evaluated natural history museum website are presented in Table 10.

7. Comparing MCDM and SUS

In this section, in order to compare the three rankings produced by the two fuzzy MCDM methods (fuzzy VIKOR and fuzzy TOPSIS) and the System Usability Scale questionnaire, we calculated the Pearson correlation coefficient to make a pairwise comparison of the values produced by the three methods, and Spearman’s rank correlation coefficient to make a pairwise comparison of the rankings of the alternative websites. The Pearson correlation coefficient is estimated by the following formula:

$$r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2} \, \sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}$$

where n is the sample size, $x_i$ and $y_i$ are the individual sample points indexed with i, $\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$ is the sample mean, and analogously for $\bar{y}$.

The formula for the calculation of Spearman’s rank correlation coefficient is:

$$\rho = 1 - \frac{6 \sum_{i=1}^{n} d_i^2}{n(n^2 - 1)}$$

where n is the number of data points of the two variables and $d_i$ is the difference in ranks of the i-th element.

Both the Pearson and the Spearman coefficients take values between −1 and +1, where:

A value of +1 means a perfect positive association

A value of 0 means no association of values

A value of −1 means a perfect negative association
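Both coefficients can be computed directly from the formulas above. The sketch below is a minimal illustration: `ranks` assumes there are no tied values (the closed-form Spearman formula is only exact without ties), and the two score lists are hypothetical, not the values of Tables 8 to 10.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equally long samples."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    var_x = sum((xi - mean_x) ** 2 for xi in x)
    var_y = sum((yi - mean_y) ** 2 for yi in y)
    return cov / math.sqrt(var_x * var_y)


def ranks(values):
    """Rank positions 1..n in ascending order of value (assumes no ties)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for position, index in enumerate(order, start=1):
        result[index] = position
    return result


def spearman(x, y):
    """Spearman's rho via 1 - 6 * sum(d_i^2) / (n (n^2 - 1)); valid only without ties."""
    n = len(x)
    d_squared = sum((rx - ry) ** 2 for rx, ry in zip(ranks(x), ranks(y)))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Hypothetical scores of four websites under two methods.
method_a = [0.2, 0.5, 0.9, 0.4]
method_b = [55.0, 62.5, 80.0, 60.0]
print(round(pearson(method_a, method_b), 3), spearman(method_a, method_b))
```

With only four websites, a single swap of two adjacent ranks already lowers Spearman’s rho from 1.0 to 0.8, so the rank correlations in this study should be read with the small sample in mind.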

The values and the ranking order of the natural history museums obtained by applying the above formulas to the results of the three methods (Table 8, Table 9 and Table 10) are presented in Table 11.

Both the Pearson and the Spearman’s rho correlation coefficients revealed a high correlation between fuzzy VIKOR and fuzzy TOPSIS. A high correlation was also found between the SUS questionnaire and the fuzzy VIKOR method. Finally, a perfect positive association of values and ranks was observed between the SUS questionnaire and the fuzzy TOPSIS MCDM method.

This is not the first time that the fuzzy VIKOR method has been compared with fuzzy TOPSIS [41]. However, that earlier study only makes a general remark and does not examine a specific statistical dependence between the results (values or ranks). As far as the comparison of the SUS questionnaire with any MCDM method is concerned, we have not located any comparison study in the literature.

In this section, the Pearson and Spearman correlation coefficients were used to compare the values calculated for each alternative website, and the ranking orders derived from those values, across the three methods (fuzzy VIKOR, fuzzy TOPSIS and SUS). The Pearson correlation coefficient was used to make a pairwise comparison between the values produced by the three methods for each natural history museum website ($Q_i$, $CC_i$ and the mean SUS score). Spearman’s rank correlation coefficient was used to make a pairwise comparison between the final ranking orders of the alternative websites derived from those values.

A correlation coefficient is used in statistics to describe a pattern or relationship between two variables and to measure the strength of that relationship. Given a pair of random variables (x, y), the formula for the Pearson correlation coefficient is (“Pearson Correlation Coefficient r”, 2018):

$$r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2} \, \sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}$$

where n is the sample size, $x_i$ and $y_i$ are the individual sample points indexed with i, $\bar{x}$ is the sample mean, and analogously for $\bar{y}$.

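Spearman’s correlation coefficient between a sample of ranked variables is computed as (Glen, 2022):

$$\rho = 1 - \frac{6 \sum_{i=1}^{n} d_i^2}{n(n^2 - 1)}$$

where $d_i$ is the difference between the ranks of the i-th observation in the two variables.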

Both the Pearson and the Spearman coefficients range from −1.00 to +1.00, where:

A value of +1.00 indicates a perfect positive association: the two variables move in the same direction.

A value of 0.00 means that there is no linear (Pearson) or monotonic (Spearman) relationship between them.

A value of −1.00 means a perfect negative association.

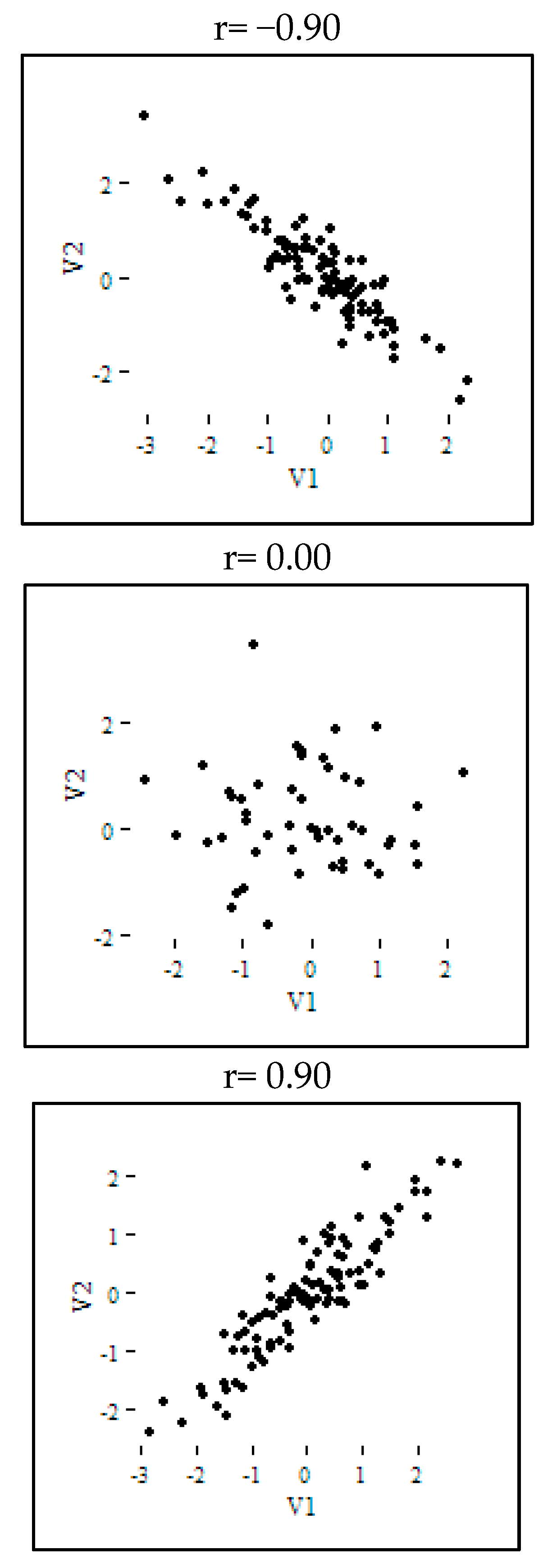

The direction and strength of a correlation are two distinct properties. The scatterplots in Figure 3 show correlations of r = +0.90, r = 0.00 and r = −0.90, respectively. The positive and negative correlations have the same strength and differ only in their direction.

Taking into account the values, as well as the ranking orders, produced by the three applied methods (Table 9, Table 10 and Table 11), the Pearson and Spearman’s rho coefficients were estimated; they are presented in Table 12.

Both the Pearson and the Spearman’s rho correlation coefficients revealed a high correlation between fuzzy VIKOR and fuzzy TOPSIS. A high correlation was also found between the SUS questionnaire and the fuzzy VIKOR method. Finally, a perfect positive association of values and ranks was found between the SUS questionnaire and the fuzzy TOPSIS MCDM method. The negative value of the Pearson correlation coefficient arises because, according to fuzzy VIKOR, the alternative with the smallest value of $Q_i$ is the optimal one, whereas in fuzzy TOPSIS the alternative with the largest closeness coefficient is the best; this creates an inverse relationship between the two sets of values.
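The sign reversal can be illustrated with a short sketch on hypothetical values, not the study’s results. Because VIKOR’s Q is "lower is better" and TOPSIS’s CC is "higher is better", two methods that agree on the order of the websites produce a negative Pearson r, while their best-to-worst orders coincide.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equally long samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


# Hypothetical results for four websites: VIKOR Q (lower is better), TOPSIS CC (higher is better).
q = [0.00, 0.35, 0.80, 1.00]
cc = [0.61, 0.52, 0.40, 0.33]

r_values = pearson(q, cc)  # negative, because the two scales point in opposite directions
rank_by_q = sorted(range(4), key=lambda i: q[i])                  # best first: smallest Q
rank_by_cc = sorted(range(4), key=lambda i: cc[i], reverse=True)  # best first: largest CC
print(round(r_values, 3), rank_by_q == rank_by_cc)
```

Negating one of the two variables flips only the sign of r, not its magnitude, which is why the absolute value is what indicates agreement between VIKOR and TOPSIS.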

8. Conclusions

Generally, the evaluation of a website is a laborious and complex process, as it is based on the examination of several different factors. For this purpose, different methods have been examined by different researchers. Ref. [1] examined direct observation, log analysis, online questionnaires and inspection methods for museum websites. Ref. [42] used design patterns to evaluate museum websites. However, these methods regard the different evaluated dimensions as equally important. This is the main reason why the method proposed in this paper is considered better: it takes into account many different dimensions and criteria during the evaluation process and assigns them different weights of importance.

The evaluation framework presented in this paper was designed specifically for the evaluation of natural history museum websites. Because the evaluation of a museum website is based on the examination of several different factors, the proposed methodological framework combines website evaluation methods with decision-making theories. The proposed evaluation framework was applied for the first time to the evaluation of four natural history museum websites.

The combination of the different methods was carried out through an evaluation experiment that was developed in two stages. Specifically, in the first stage of the experiment, AHP was applied using inspection evaluation methods in order to determine the relative importance of the criteria affecting the quality of natural history museum websites. AHP was chosen for the first stage because it supports decisions through pair-wise comparison of uncertain qualitative and quantitative factors, it can model the opinion of experts, and it is considered one of the most important MCDM methods. The three main quality evaluation factors for the examined museum websites were their usability, their functionality and their VR interaction. Each main evaluation factor was further analyzed into several sub-criteria, thus forming a hierarchical structure. According to the final weights derived from the application of AHP in the first stage of the evaluation experiment (Figure 2), usability was ranked highest among the quality factors of natural history museum websites (Uc = 0.541), followed by functionality (Fc = 0.322) and VR interaction (VRc = 0.137). Among the sub-criteria, user interface/overall presentation was considered the most important regarding usability (Uc5 = 0.201), multilingualism the most important regarding functionality (Fc1 = 0.236), and virtual spatiality the most important regarding VR interaction (VRc = 0.377). Once the relative importance of each criterion had been calculated, a consistency test was carried out to ensure that the expert group evaluation, as well as the whole AHP hierarchy, is consistent, so that the estimated criteria weights can be used in further calculations. Indeed, since the CR value is below the critical threshold of 0.1 for each of the four pair-wise comparison matrices of the criteria, the consistency of the developed hierarchy is confirmed.
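The weighting and consistency check described above can be sketched as follows: the priority vector is the normalized principal eigenvector of the pair-wise comparison matrix (found here by power iteration), λmax is estimated from it, CI = (λmax − n)/(n − 1) and CR = CI/RI, using Saaty's random index values. The 3 × 3 matrix is a hypothetical set of judgements for the three main factors, not the experts' actual matrix, so it does not reproduce the weights reported above.

```python
# Saaty's random consistency index RI for matrix sizes n = 1..10.
RI = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
      6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_weights(matrix, iterations=100):
    """Return (weights, lambda_max, CR) for a positive reciprocal matrix."""
    n = len(matrix)
    w = [1.0 / n] * n
    # Power iteration converges to the principal (Perron) eigenvector.
    for _ in range(iterations):
        aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(aw)
        w = [x / total for x in aw]
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1) if n > 1 else 0.0
    cr = ci / RI[n] if RI[n] > 0 else 0.0
    return w, lambda_max, cr


# Hypothetical judgements: usability (U), functionality (F), VR interaction (VR).
pairwise = [
    [1,     2,     4],
    [1 / 2, 1,     3],
    [1 / 4, 1 / 3, 1],
]
w, lam, cr = ahp_weights(pairwise)
print("weights:", [round(x, 3) for x in w], "lambda_max:", round(lam, 3), "CR:", round(cr, 3))
print("consistent" if cr < 0.1 else "revise the judgements")
```

A perfectly consistent matrix gives λmax = n and CR = 0; the small inconsistency in this example still stays well below the 0.1 threshold.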

In the second stage of the evaluation experiment, two fuzzy MCDM methods were applied using empirical evaluation methods. More precisely, an evaluation questionnaire was created and then distributed electronically to real, potential users of natural history museum websites. The data collected from a sample of 72 users were processed and analyzed by applying the fuzzy VIKOR and fuzzy TOPSIS MCDM methods in order to determine the best alternative. Fuzzy MCDM methods were chosen in order to deal with the vagueness and uncertainty that the human factor introduces through the users' responses to the evaluation questionnaire. Furthermore, two different fuzzy MCDM methods were applied so that their results could be compared, because MCDM models have been criticized in the past for producing different results [4]. Fuzzy VIKOR was chosen for processing the data because it offers a simple computation procedure that highlights the best alternative by simultaneously considering its closeness to the ideal (optimal) alternative and to the worst alternative. Fuzzy TOPSIS was chosen as the second MCDM method because of the similarity between the two methods in determining the best alternative: both produce a ranking of the alternatives that takes into account the distance from an optimal (best) and a worst solution. A major drawback of both fuzzy VIKOR and fuzzy TOPSIS is that, unlike AHP, they do not provide a specific way of calculating the criteria weights. This is one more reason why we chose to implement the AHP method in the first stage of our experiment. To further compare the results of the two fuzzy MCDM methods with a widely adopted evaluation method, an additional evaluation of the four natural history museum websites was carried out using the SUS questionnaire.
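As an illustration of how questionnaire answers can be turned into fuzzy TOPSIS closeness coefficients, the sketch below follows one common variant: each 5-point answer is mapped to a triangular fuzzy number, users' answers are averaged component-wise, ratings are normalized by the largest upper bound per criterion and multiplied by crisp AHP weights, and the vertex distance to the fuzzy positive and negative ideal solutions gives CC_i = d⁻/(d⁺ + d⁻). The linguistic scale, the averaging rule and the responses are all assumptions for illustration; the exact scale and formulas used in the paper may differ.

```python
from math import sqrt

# Hypothetical mapping from a 5-point answer to a triangular fuzzy number (l, m, u).
LIKERT_TFN = {
    1: (0.0, 0.0, 0.25),
    2: (0.0, 0.25, 0.5),
    3: (0.25, 0.5, 0.75),
    4: (0.5, 0.75, 1.0),
    5: (0.75, 1.0, 1.0),
}


def aggregate(answers):
    """Average the TFNs of several users' answers component-wise."""
    tfns = [LIKERT_TFN[a] for a in answers]
    return tuple(sum(t[k] for t in tfns) / len(tfns) for k in range(3))


def vertex_distance(a, b):
    """Vertex distance between two triangular fuzzy numbers."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / 3)


def fuzzy_topsis(responses, weights):
    """Return the closeness coefficient CC_i of each alternative (higher is better).

    responses[i][j] lists the users' answers for alternative i on criterion j;
    all criteria are treated as benefit criteria and weights are crisp (e.g. AHP).
    """
    n_alt, n_crit = len(responses), len(weights)
    fuzzy = [[aggregate(responses[i][j]) for j in range(n_crit)] for i in range(n_alt)]
    # Linear normalization by the largest upper bound per criterion, then weighting.
    u_max = [max(fuzzy[i][j][2] for i in range(n_alt)) for j in range(n_crit)]
    v = [[tuple(weights[j] * x / u_max[j] for x in fuzzy[i][j]) for j in range(n_crit)]
         for i in range(n_alt)]
    # Fuzzy positive / negative ideal solutions, taken per criterion.
    fpis = [(max(v[i][j][2] for i in range(n_alt)),) * 3 for j in range(n_crit)]
    fnis = [(min(v[i][j][0] for i in range(n_alt)),) * 3 for j in range(n_crit)]
    cc = []
    for i in range(n_alt):
        d_plus = sum(vertex_distance(v[i][j], fpis[j]) for j in range(n_crit))
        d_minus = sum(vertex_distance(v[i][j], fnis[j]) for j in range(n_crit))
        cc.append(d_minus / (d_plus + d_minus))
    return cc


weights = [0.541, 0.322, 0.137]  # usability, functionality, VR interaction (AHP)
responses = [                    # hypothetical answers of three users per criterion
    [[5, 4, 5], [4, 4, 5], [3, 4, 3]],  # website A
    [[3, 4, 3], [3, 3, 4], [3, 2, 3]],  # website B
    [[4, 4, 5], [4, 3, 4], [4, 4, 3]],  # website C
    [[2, 3, 2], [3, 2, 3], [2, 2, 1]],  # website D
]
cc = fuzzy_topsis(responses, weights)
ranking = sorted(range(len(cc)), key=lambda i: cc[i], reverse=True)
print([("ABCD"[i], round(cc[i], 3)) for i in ranking])
```

In contrast to VIKOR's Q, the closeness coefficient grows as an alternative moves away from the negative ideal, so the best website has the largest CC_i.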

Each of the methods used for the evaluation of the museum websites has some advantages and some disadvantages. The pros and cons of each method are summarized in Table 12. The results produced by the three different methods (fuzzy VIKOR, fuzzy TOPSIS and the SUS questionnaire) were subjected to a pair-wise comparative analysis by examining the statistical dependence of their values and their rankings. For this reason, the Pearson correlation coefficient between the values of Qi, CCi and the mean SUS score, as well as Spearman's correlation coefficient between the rankings of the three methods, were calculated. The comparative analysis revealed a high correlation between fuzzy VIKOR and fuzzy TOPSIS, a high correlation between fuzzy VIKOR and SUS, and a perfect positive correlation between fuzzy TOPSIS and SUS. In this way, the reliability of our proposed evaluation framework was confirmed.
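The mean SUS score compared above starts from per-respondent scores. The sketch below applies the standard SUS scoring rule (odd-numbered items contribute the answer minus 1, even-numbered items contribute 5 minus the answer, and the sum is multiplied by 2.5 to give a 0 to 100 score) and then averages over respondents. The respondents' answers are hypothetical.

```python
from statistics import mean


def sus_score(answers):
    """Standard SUS score (0-100) for one respondent's ten answers on a 1-5 scale."""
    if len(answers) != 10 or not all(1 <= a <= 5 for a in answers):
        raise ValueError("SUS expects ten answers between 1 and 5")
    total = 0
    for item, a in enumerate(answers, start=1):
        # Odd-numbered items are positively worded, even-numbered ones negatively worded.
        total += (a - 1) if item % 2 == 1 else (5 - a)
    return total * 2.5


def mean_sus(respondents):
    """Mean SUS score of a website over all respondents."""
    return mean(sus_score(r) for r in respondents)


# Hypothetical answers of three respondents for one website.
respondents = [
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
]
print(mean_sus(respondents))
```

Because a higher SUS score means better usability, it moves in the same direction as a TOPSIS closeness coefficient and in the opposite direction to VIKOR's Q.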

The evaluation experiment conducted in this paper was designed specifically for the evaluation of natural history museum websites. However, the implementation steps described above could also be followed by other researchers to evaluate the quality of museum websites in other areas of science or culture, using exactly the same set of dimensions and criteria without any changes. The evaluation framework could also be used for evaluating the quality of websites in other domains, but in that case the set of dimensions and criteria should be reconsidered. The new set of criteria could be formed either by adapting the quality evaluation factors proposed in this study or by choosing a completely new set. Applying the proposed framework to websites of different domains, in order to check its usability, effectiveness and reliability, is therefore among our future plans.