Beyond the Battlefield: A Cross-European Study of Wartime Disinformation

Abstract

1. Introduction

2. Materials and Methods

2.1. Research Objectives

- Research Objective 1: To study the characteristics of disinformation concerning the Russo-Ukrainian war in Spain, Germany, the United Kingdom, and Poland.

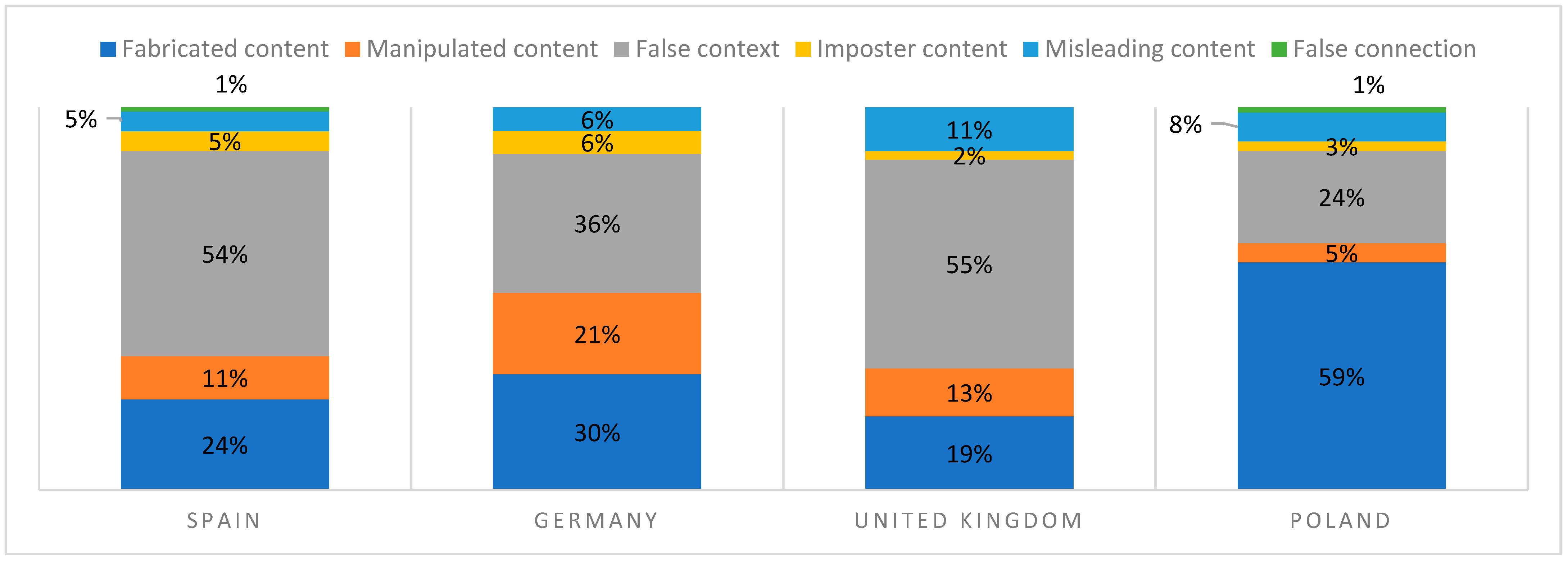

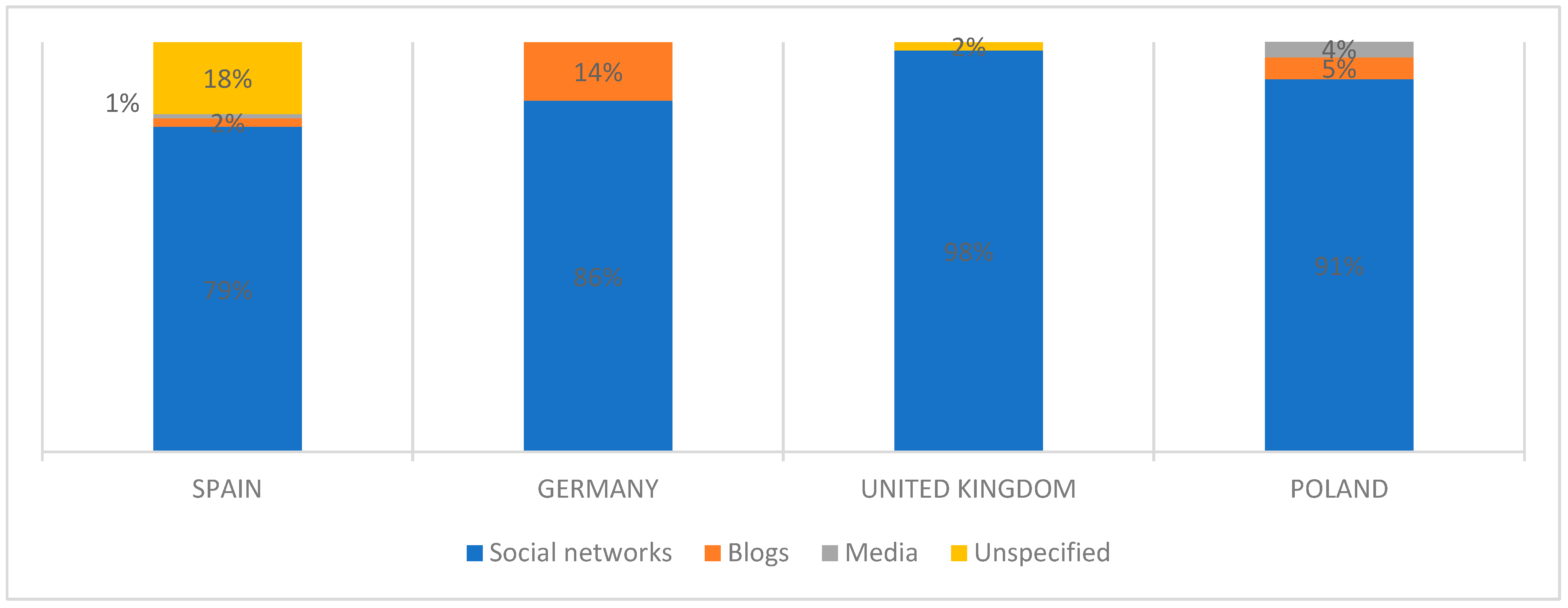

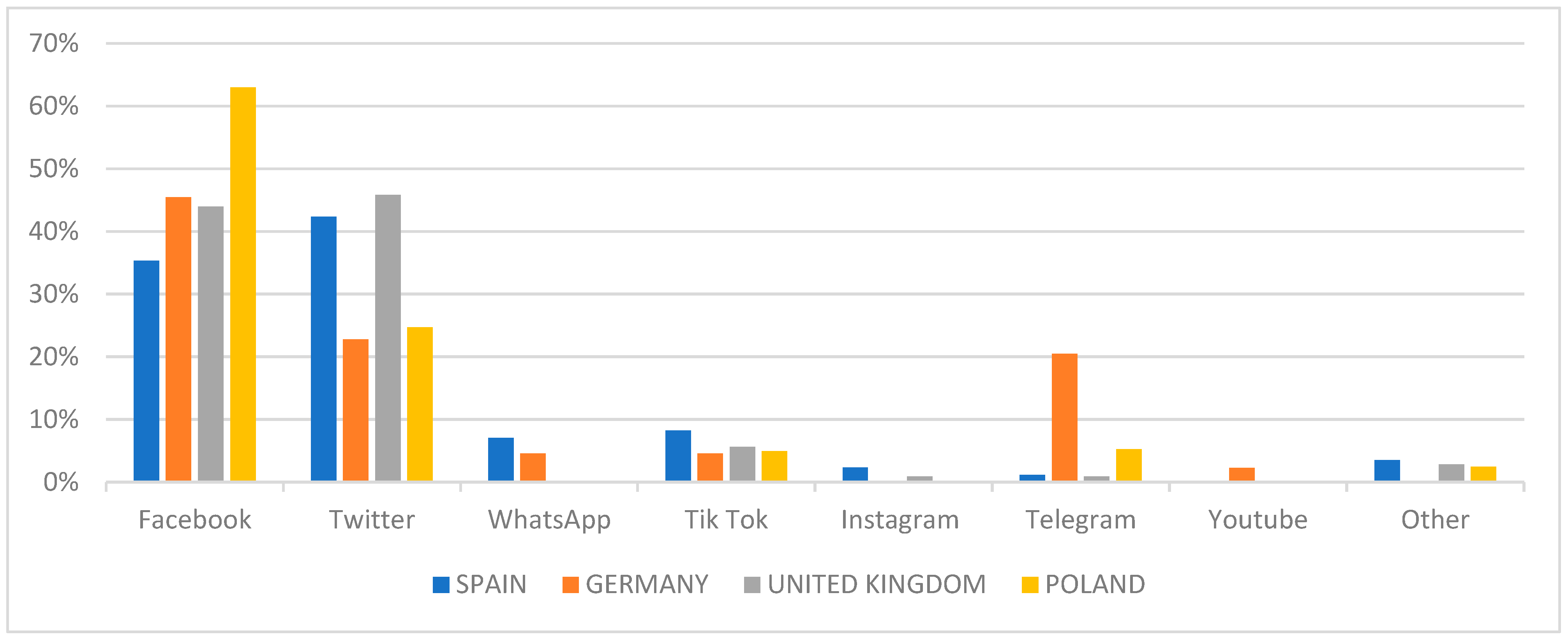

- Specific Objective 1: To examine the format and dissemination platform, as well as the typology and purpose, of the selected falsehoods.

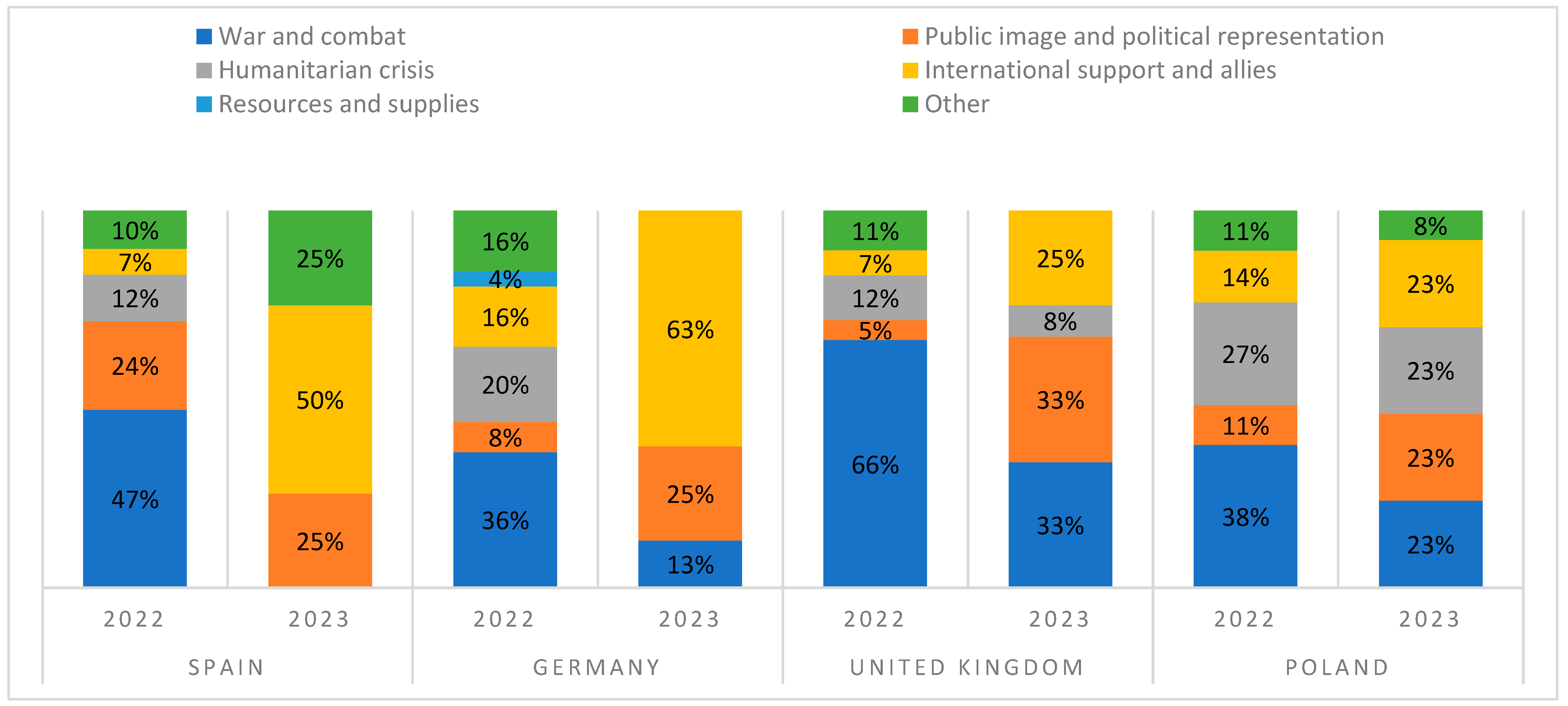

- Specific Objective 2: To analyse the temporal evolution of the frequency of fact-checked falsehoods, as well as their themes, in March 2022 and March 2023.

2.2. Research Questions

- Research Question 1: How has the volume of verified disinformation related to the war evolved between 2022 and 2023?

- Research Question 2: What is the most prominent format in the dissemination of falsehoods about the conflict?

- Research Question 3: What is the predominant typology in the verified disinformation?

- Research Question 4: Which communication channel has been the main medium for the viral spread of hoaxes about the Russo-Ukrainian war?

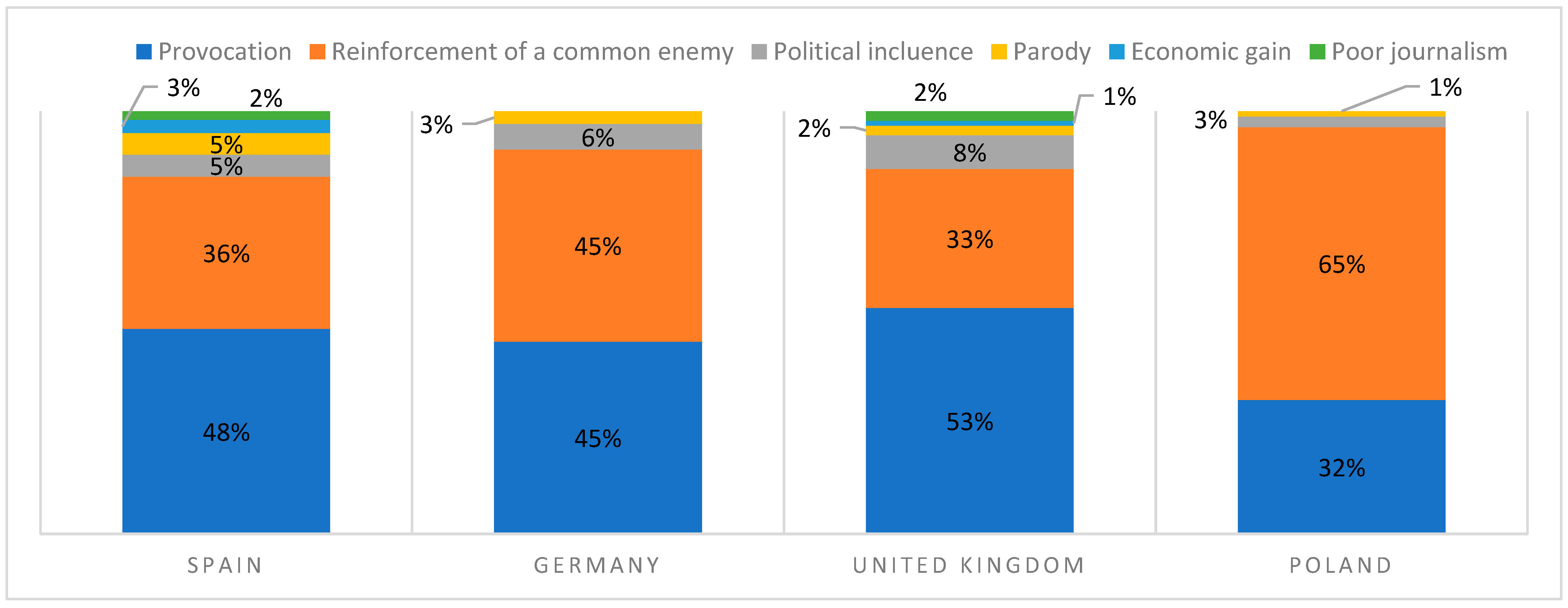

- Research Question 5: What is the predominant purpose of the disinformation disseminated about the armed conflict?

- Research Question 6: In what way has the theme of disinformation about the war evolved between 2022 and 2023?

2.3. Unit of Analysis and Case Studies

2.4. Content Analysis

2.4.1. Sample Selection Criteria

- Dissemination platforms (N = 309 channels): a count of the media or spaces where the falsehood was spread.

- Specific social media networks (N = 317): a detailed breakdown within the category of social media, which may reflect simultaneous circulation across more than one network per falsehood.
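The two totals above can exceed the number of falsehoods because a single falsehood may be coded on more than one network. A minimal sketch of that multi-label tally follows; the records and the field names (`id`, `networks`) are invented for illustration and are not the study's data.

```python
from collections import Counter

# Toy records, invented for illustration only; not the study's data.
falsehoods = [
    {"id": 1, "networks": ["Facebook", "X"]},
    {"id": 2, "networks": ["Telegram"]},
    {"id": 3, "networks": ["Facebook", "TikTok", "X"]},
]


def tally_networks(records):
    """Count each network once per falsehood in which it appears."""
    counts = Counter()
    for record in records:
        # set() guards against the same network being listed twice
        # for a single falsehood.
        counts.update(set(record["networks"]))
    return counts


network_counts = tally_networks(falsehoods)
total_mentions = sum(network_counts.values())

# Three falsehoods yield six network mentions, so the per-network N
# is larger than the number of falsehoods.
print(len(falsehoods), total_mentions)
```

Percentages computed from such a tally are shares of mentions, not shares of falsehoods, which is worth stating whenever both totals are reported side by side.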

2.4.2. Variables and Categories of Analysis

- Variable 1: Frequency of falsehoods verified by fact-checking outlets.

- Variable 2: Format of the falsehoods. Categories: text; image; combined (text and image; text and video; text and audio); video; and audio.

- Variable 4: Platform of the falsehoods. Categories: social media (Facebook; X, formerly Twitter; WhatsApp; TikTok; Instagram; Telegram; YouTube; Other); blogs; news media.

- Variable 6: Theme of the falsehood. Categories: international support and allies; humanitarian crisis; war and combat; public image and political representation; resources and supplies; and other.
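The categorical variables listed above can be sketched as a small coding scheme. This is only an illustrative representation, not the authors' instrument: the class and field names are hypothetical, Variable 1 is a count rather than a category, and only the variables whose categories are listed in this section (2, 4, and 6) are modelled.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Format(Enum):  # Variable 2
    TEXT = "text"
    IMAGE = "image"
    COMBINED = "combined"  # text and image; text and video; text and audio
    VIDEO = "video"
    AUDIO = "audio"


class Platform(Enum):  # Variable 4
    SOCIAL_MEDIA = "social media"
    BLOGS = "blogs"
    NEWS_MEDIA = "news media"


class Theme(Enum):  # Variable 6
    INTERNATIONAL_SUPPORT = "international support and allies"
    HUMANITARIAN_CRISIS = "humanitarian crisis"
    WAR_AND_COMBAT = "war and combat"
    PUBLIC_IMAGE = "public image and political representation"
    RESOURCES = "resources and supplies"
    OTHER = "other"


# Sub-categories of the social media platform, as listed under Variable 4.
SOCIAL_NETWORKS = (
    "Facebook", "X", "WhatsApp", "TikTok",
    "Instagram", "Telegram", "YouTube", "Other",
)


@dataclass(frozen=True)
class CodedFalsehood:
    """One fact-checked falsehood with its coded values (hypothetical layout)."""

    format: Format
    platforms: tuple[Platform, ...]
    networks: tuple[str, ...]
    theme: Theme

    def __post_init__(self):
        unknown = [n for n in self.networks if n not in SOCIAL_NETWORKS]
        if unknown:
            raise ValueError(f"unknown social network(s): {unknown}")
        # Assumption: networks are only coded for social media items.
        if self.networks and Platform.SOCIAL_MEDIA not in self.platforms:
            raise ValueError("networks given without the social media platform")


example = CodedFalsehood(
    format=Format.COMBINED,
    platforms=(Platform.SOCIAL_MEDIA,),
    networks=("Facebook", "Telegram"),
    theme=Theme.WAR_AND_COMBAT,
)
print(example.theme.value)
```

Storing platforms and networks as tuples allows one falsehood to carry several values, which matches the multi-network circulation described in Section 2.4.1.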

2.4.3. Semi-Structured Interviews with Specialist Actors

3. Results

3.1. Frequency

3.2. Format

3.3. Typology

3.4. Platform

3.5. Purpose

3.6. Theme

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Interviewee Code | Professional Field at the Time of the Interview | Date |
|---|---|---|
| Interviewee 1 | Fact-checker | 2023 |
| Interviewee 2 | Fact-checker | 2023 |
| Interviewee 3 | Fact-checker | 2023 |
| Interviewee 4 | University lecturer and researcher specialising in media and disinformation | 2023 |
| Interviewee 5 | University lecturer and researcher specialising in social network analysis | 2023 |
| Interviewee 6 | Researcher specialising in Big Data and artificial intelligence | 2023 |
| Interviewee 7 | Journalist and researcher specialising in disinformation in Europe | 2023 |
| Interviewee 8 | Former member of European institutions | 2023 |
| Interviewee 9 | Researcher at a think tank specialising in disinformation | 2023 |
| Interviewee 10 | University lecturer and researcher specialising in European Affairs | 2023 |

| Country | Language | Fact-Checking Organisation 1 and Number of Fact Checks | Fact-Checking Organisation 2 and Number of Fact Checks | Total | % |
|---|---|---|---|---|---|
| Spain | Spanish | Newtral: 45 | Maldito Bulo: 52 | 97 | 33% |
| Germany | German | CORRECTIV: 29 | BR24.Faktenfuchs: 4 | 33 | 11% |
| United Kingdom | English | FullFact: 24 | Reuters Fact Check: 64 | 88 | 30% |
| Poland | Polish | FakenewsPL: 9 | Demagog: 70 | 79 | 27% |
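
The totals and percentage shares in the table can be re-derived from the per-organisation counts. The following is a minimal check, with hypothetical variable names, assuming each share is the country total divided by the overall sample and rounded to the nearest whole percent.

```python
# Per-organisation fact-check counts, copied from the table above.
fact_checks = {
    "Spain": {"Newtral": 45, "Maldito Bulo": 52},
    "Germany": {"CORRECTIV": 29, "BR24.Faktenfuchs": 4},
    "United Kingdom": {"FullFact": 24, "Reuters Fact Check": 64},
    "Poland": {"FakenewsPL": 9, "Demagog": 70},
}

# Sum the two organisations per country, then across all countries.
country_totals = {
    country: sum(orgs.values()) for country, orgs in fact_checks.items()
}
grand_total = sum(country_totals.values())

# Share of the overall sample, rounded to whole percentages.
shares = {
    country: round(100 * total / grand_total)
    for country, total in country_totals.items()
}

for country, total in country_totals.items():
    print(f"{country}: {total} ({shares[country]}%)")
print(f"Overall: {grand_total}")
```

The rounded shares add up to 101% rather than 100%, which is a rounding effect and not an inconsistency in the counts.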

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sánchez-del-Vas, R.; Tuñón-Navarro, J. Beyond the Battlefield: A Cross-European Study of Wartime Disinformation. Journal. Media 2025, 6, 115. https://doi.org/10.3390/journalmedia6030115
