Combining Low-Cost UAV Imagery with Machine Learning Classifiers for Accurate Land Use/Land Cover Mapping

Abstract

1. Introduction

2. Experimental Setup

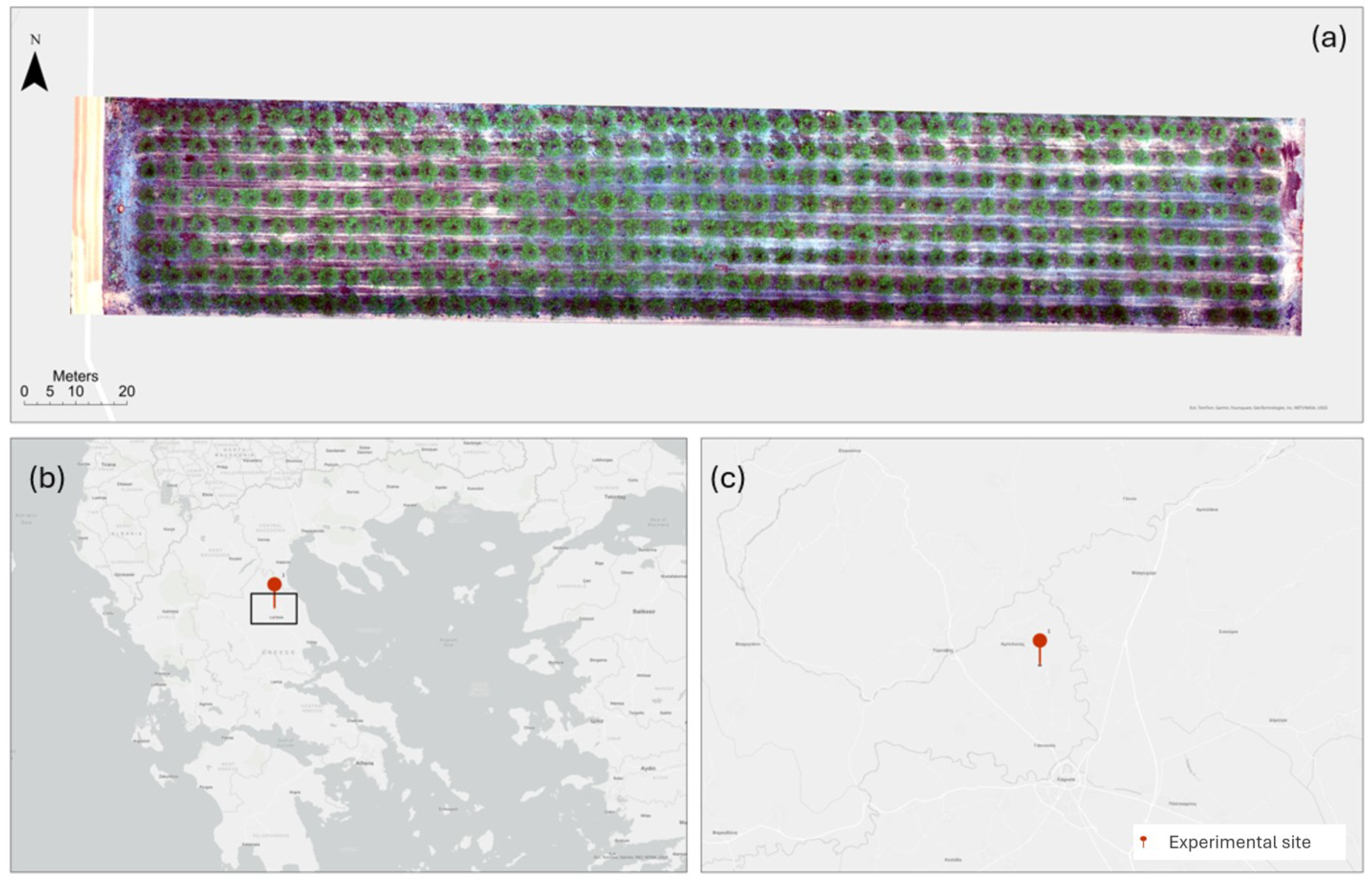

2.1. Study Area

2.2. UAV Data Collection

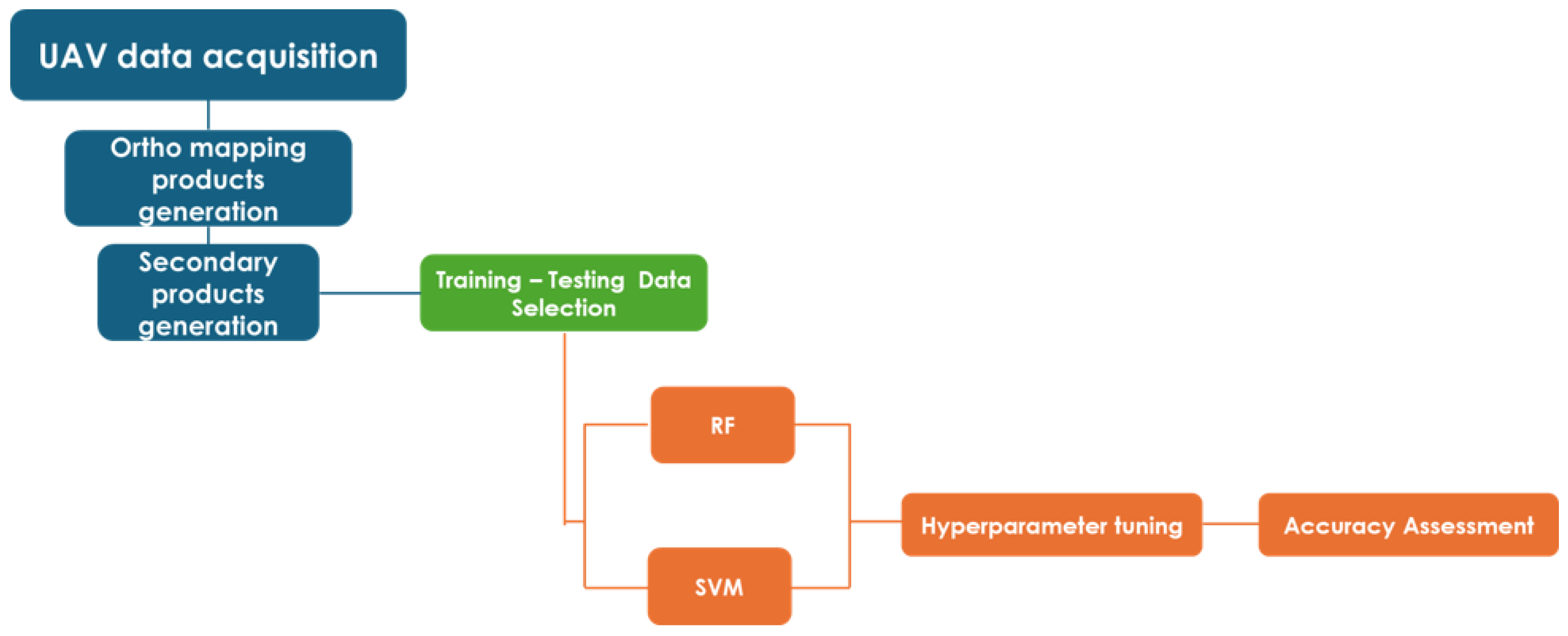

3. Methods

3.1. UAV Data Products Generation

3.2. LULC Mapping Approach

3.2.1. Support Vector Machines

3.2.2. Random Forest

3.2.3. Classifier Implementation

3.3. Validation Approach

4. Results

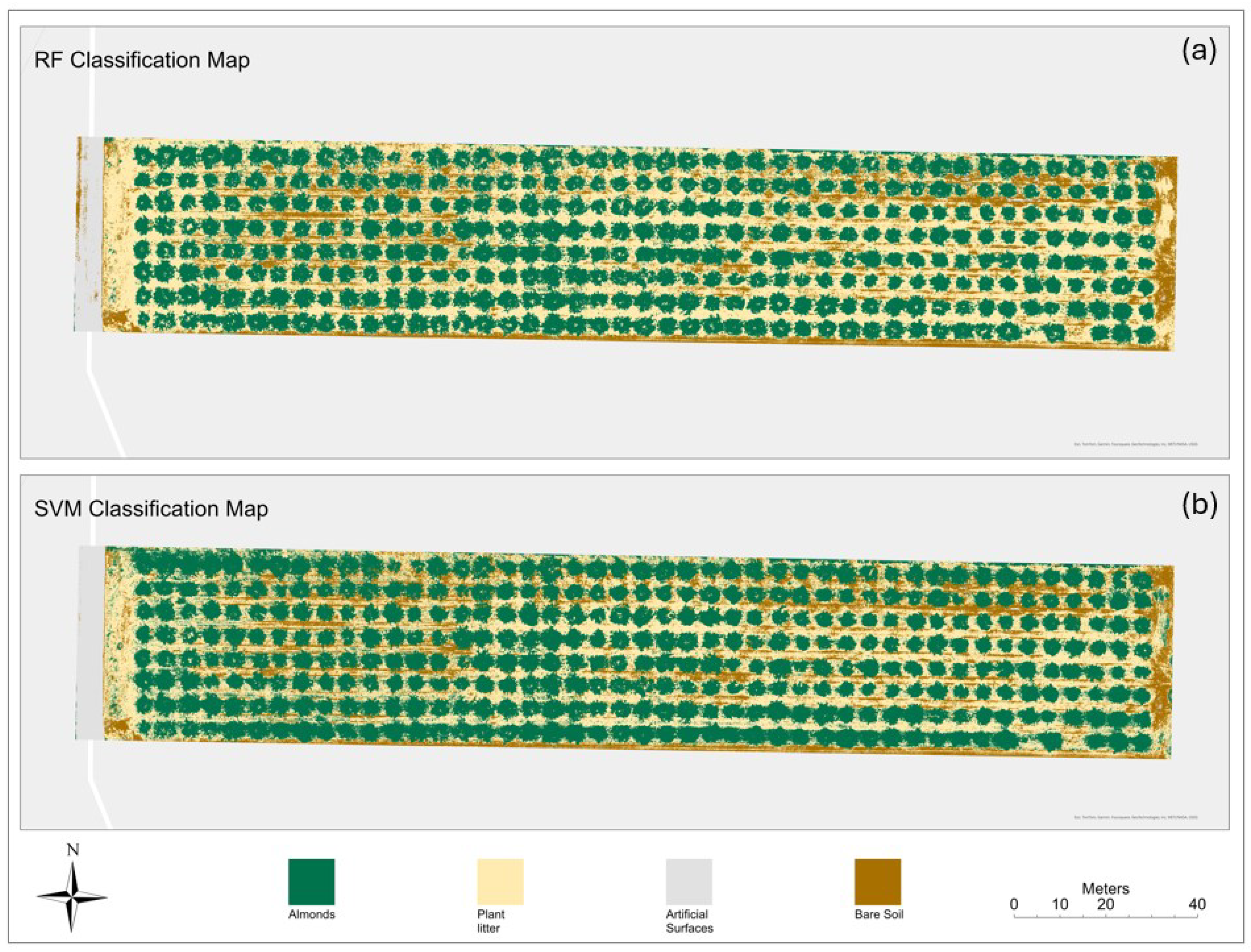

4.1. LULC Classification Maps

4.2. Accuracy Assessment

5. Discussion

6. Concluding Remarks

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References


| LULC class | SVM UA (%) | SVM PA (%) | RF UA (%) | RF PA (%) |
|---|---|---|---|---|
| Almonds | 98.97 | 100 | 98.97 | 100 |
| Bare Soil | 99.48 | 97.47 | 91.57 | 87.87 |
| Artificial Surfaces | 98.01 | 99.00 | 89.32 | 92.00 |
| Plant Litter | 100 | 100 | 100 | 100 |
| OA (%) | 98.71 | | 94.97 | |
| K | 0.973 | | 0.932 | |

UA: user's accuracy; PA: producer's accuracy; OA: overall accuracy; K: kappa coefficient; SVM: support vector machines; RF: random forest.
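UA, PA, OA and K are standard statistics derived from a confusion matrix of classified versus reference validation samples. The sketch below shows one common way to compute them, assuming rows hold the classified (map) labels, columns hold the reference labels, and K is the conventional Cohen's kappa. The function name `accuracy_metrics` and the counts in the example are hypothetical illustrations, not the study's validation data or code.

```python
import math
from typing import Dict, Sequence


def accuracy_metrics(cm: Sequence[Sequence[int]], labels: Sequence[str]) -> Dict[str, object]:
    """Per-class UA/PA, overall accuracy (OA) and Cohen's kappa from a confusion matrix.

    Assumed layout (hypothetical convention for this sketch): cm[i][j] counts
    validation samples mapped to class i whose reference class is j,
    i.e. rows = classified map, columns = reference data.
    """
    k = len(labels)
    if len(cm) != k or any(len(row) != k for row in cm):
        raise ValueError("confusion matrix must be k x k, matching the labels")
    n = sum(sum(row) for row in cm)
    if n == 0:
        raise ValueError("confusion matrix is empty")

    row_totals = [sum(row) for row in cm]                             # samples mapped to each class
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # reference samples per class
    diag = [cm[i][i] for i in range(k)]                               # correctly classified samples

    def pct(num: int, den: int) -> float:
        return 100.0 * num / den if den else math.nan

    # User's accuracy (complement of commission error): correct / total mapped as the class.
    ua = {labels[i]: pct(diag[i], row_totals[i]) for i in range(k)}
    # Producer's accuracy (complement of omission error): correct / total reference samples of the class.
    pa = {labels[i]: pct(diag[i], col_totals[i]) for i in range(k)}

    p_o = sum(diag) / n                                                   # observed agreement
    p_e = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2  # chance agreement
    kappa = (p_o - p_e) / (1 - p_e) if p_e != 1 else math.nan
    return {"UA": ua, "PA": pa, "OA": 100.0 * p_o, "kappa": kappa}


# Hypothetical counts for illustration only (not the study's validation data).
labels = ["Almonds", "Bare Soil", "Artificial Surfaces"]
cm = [
    [48, 2, 0],
    [1, 45, 4],
    [0, 3, 47],
]
result = accuracy_metrics(cm, labels)
print(f"OA = {result['OA']:.2f}%, kappa = {result['kappa']:.3f}")  # prints: OA = 93.33%, kappa = 0.900
```

If a confusion matrix is stored transposed (reference labels in rows), UA and PA simply swap, so the row/column convention should be checked before comparing values against a table like the one above.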

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Detsikas, S.E.; Petropoulos, G.P.; Kalogeropoulos, K.; Faraslis, I. Combining Low-Cost UAV Imagery with Machine Learning Classifiers for Accurate Land Use/Land Cover Mapping. Earth 2024, 5, 244-254. https://doi.org/10.3390/earth5020013
