Optimizing Safety Net Installation on Construction Sites Using YOLO and the Novel Linear Intersection over Union †

Abstract

1. Introduction

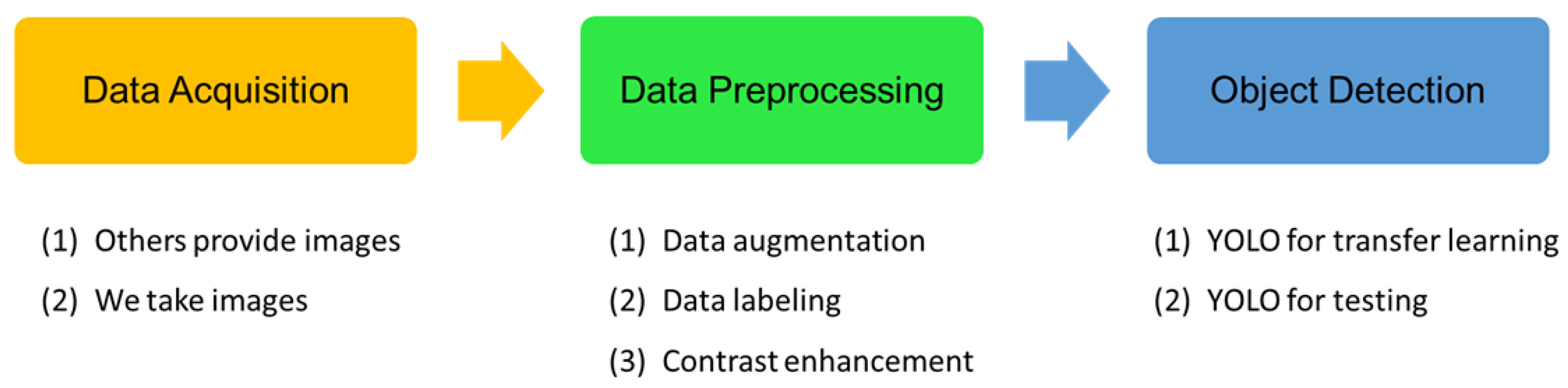

2. Materials and Methods

2.1. Data Acquisition

2.2. Data Preprocessing

2.2.1. Data Augmentation

2.2.2. Data Labeling

2.2.3. Contrast Enhancement

2.3. Object Detection

2.4. Linear Intersection over Union (LIOU)

3. Results

3.1. Data Augmentation Results

3.2. Contrast Enhancement Comparison Experiment Results

3.3. Object Detection Model Comparison

3.4. Evaluation Metrics

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Du, S.; Shehata, M.; Badawy, W. Hard hat detection in video sequences based on face features, motion and color information. In Proceedings of the 2011 3rd International Conference on Computer Research and Development, Shanghai, China, 11–13 March 2011; Volume 4, pp. 25–29. [Google Scholar]

- Weerasinghe, I.P.T.; Ruwanpura, J.Y.; Boyd, J.E.; Habib, A.F. Application of Microsoft Kinect Sensor for Tracking Construction Workers. In Proceedings of the Construction Research Congress, West Lafayette, IN, USA, 21–23 May 2012; American Society of Civil Engineers: Reston, VA, USA, 2012; pp. 858–867. [Google Scholar]

- Xiong, R.; Song, Y.; Li, H.; Wang, Y. Onsite video mining for construction hazards identification with visual relationships. Adv. Eng. Inform. 2019, 42, 100966. [Google Scholar] [CrossRef]

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Nath, N.D.; Behzadan, A.H. Deep Convolutional Networks for Construction Object Detection Under Different Visual Conditions. Front. Built Environ. 2020, 6, 97. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Oksuz, K.; Cam, B.C.; Kalkan, S.; Akbas, E. Imbalance problems in object detection: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3388–3415. [Google Scholar] [CrossRef] [PubMed]

- Im, J.; Jeon, J.; Hayes, M.H.; Paik, J. Single image-based ghost-free high dynamic range imaging using local histogram stretching and spatially-adaptive denoising. IEEE Trans. Consum. Electron. 2011, 57, 1478–1484. [Google Scholar] [CrossRef]

- Lim, J.J.; Salakhutdinov, R.; Torralba, A. Transfer learning by borrowing examples for multiclass object detection. In Proceedings of the International Conference on Neural Information Processing Systems, Granada, Spain, 12–17 December 2011; Curran Associates Inc.: Vancouver, BC, Canada, 2011; pp. 118–126. [Google Scholar]

- Shin, H.C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.; Summers, R.M. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298. [Google Scholar] [CrossRef] [PubMed]

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916. [Google Scholar] [CrossRef] [PubMed]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Jocher, G. YOLOv5 by Ultralytics. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 22 November 2022).

| Data Augmentation | Precision | Recall | F1-Score |

|---|---|---|---|

| Horizontal flipping | 0.56 | 0.32 | 0.4 |

| Horizontal flipping + rotation | 0.60 | 0.59 | 0.6 |

| Contrast Enhancement | Precision | Recall | F1-Score |

|---|---|---|---|

| Original image | 0.60 | 0.59 | 0.60 |

| HE | 0.64 | 0.62 | 0.63 |

| HS | 0.69 | 0.31 | 0.43 |

| CLAHE | 0.80 | 0.54 | 0.64 |

| Model | Precision | Recall | F1-Score |

|---|---|---|---|

| YOLOv3 | 0.76 | 0.53 | 0.63 |

| YOLOv4 | 0.80 | 0.54 | 0.64 |

| YOLOv5 | 0.59 | 0.71 | 0.64 |

| Metric | Precision | Recall | F1-Score |

|---|---|---|---|

| IOU | 0.80 | 0.54 | 0.64 |

| LIOU | 0.99 | 0.90 | 0.94 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tsai, Y.-H.; Tsai, M.-H.; Lai, Y.-H.; Huang, H.-C. Optimizing Safety Net Installation on Construction Sites Using YOLO and the Novel Linear Intersection over Union. Eng. Proc. 2025, 98, 27. https://doi.org/10.3390/engproc2025098027

Tsai Y-H, Tsai M-H, Lai Y-H, Huang H-C. Optimizing Safety Net Installation on Construction Sites Using YOLO and the Novel Linear Intersection over Union. Engineering Proceedings. 2025; 98(1):27. https://doi.org/10.3390/engproc2025098027

Chicago/Turabian StyleTsai, Yu-Hung, Meng-Hsiun Tsai, Yun-Hui Lai, and Hsien-Chung Huang. 2025. "Optimizing Safety Net Installation on Construction Sites Using YOLO and the Novel Linear Intersection over Union" Engineering Proceedings 98, no. 1: 27. https://doi.org/10.3390/engproc2025098027

APA StyleTsai, Y.-H., Tsai, M.-H., Lai, Y.-H., & Huang, H.-C. (2025). Optimizing Safety Net Installation on Construction Sites Using YOLO and the Novel Linear Intersection over Union. Engineering Proceedings, 98(1), 27. https://doi.org/10.3390/engproc2025098027