Abstract

Grass weeds are major agricultural pests because they compete with crops for nutrients, space, and water. Advances in technology now make it possible to identify and remove these pests. Using computer vision techniques, we developed a method for grass weed management and control. Identifying the species of a grass weed enables the correct weed control measure to be selected and reduces herbicide use. The YOLOv5 algorithm was used in this study and was trained on images captured as part of the study, which were then augmented. A Raspberry Pi was adopted to make the system portable. After YOLOv5 was trained on four types of grass weeds, the system achieved an overall accuracy of 95.31% in detecting and identifying the target objects. The developed system detects and identifies these four types of weeds, contributing to improved weed control management.

1. Introduction

Any plant that is unwanted in a particular area is called a weed. At present, administrators pay considerable attention to maintaining the beauty of parks, gardens, city streets, and agricultural fields. However, weeds are difficult to control, attract insects, and cause diseases and infestation. They also compete with crops for nutrients, space, and water and consequently threaten landscape management. To manage and eliminate these invasive weed species, computer vision techniques have been widely used, often alongside robotic arms and spraying mechanisms [1].

Currently, spraying chemical herbicides over an entire agricultural landscape is the main method used for weed control management, which is costly. Apart from the financial costs, severe concerns arise due to the effect of chemical herbicides on biodiversity, ecosystems, humans, and the health of the desired plants. Thus, it is important to remove undesired weed species without the use of chemical herbicides [2].

Manually detecting and classifying weeds is exhausting and repetitive work. In addition, human error makes it likely that the amount of herbicide required will be miscalculated. With the advancement of technology, particularly digital image identification [3], image processing can be used to recognize target objects directly and to reduce the probability of human error. Many studies have adopted object detection algorithms for the recognition of plants, weeds, and plant diseases. Buenconsejo et al. [4] and Bacus et al. [5] developed models that use convolutional neural network (CNN) algorithms to identify different leaf diseases in abaca and Carica papaya. They used machine learning and deep learning to detect all possible variations in the targeted environment. Ignacio et al. [6] and Legaspi et al. [7] implemented detection methods for assessing the maturity of mangoes and tomatoes using a target detection network connected to a Raspberry Pi. Their devices successfully classified and identified the different crops. The images in the datasets were extracted and labeled to generate annotation files and were used to construct a training model based on the YOLOv3 model [8]. However, in images with a large non-target area, composite background content might be mistaken for a fruit, resulting in inaccurate predictions. The advancement of image identification [9,10] and deep learning [11,12] also significantly reduces the human effort required.

However, few studies have examined grass weed identification systems that use YOLOv5. Furthermore, weed datasets that are available on online platforms are needed [13]. Therefore, we developed a system for detecting and identifying grass weeds using a pre-trained model and transfer learning techniques. We used the You Only Look Once version 5 (YOLOv5) detection model to develop a device based on a Raspberry Pi single-board computer and its camera module [14,15,16,17]. We trained the YOLOv5 model for detection and identification, and the accuracy of the system was validated using a confusion matrix. The system identified four grass weed species: Euphorbia maculata, Eleusine indica (L.) Gaertn., Euphorbia hirta L., and Tridax procumbens. The system comprises a Raspberry Pi 4 [15,16,17], a camera module v2, a screen display, and a power supply [18,19,20]. The output was the common English name of the identified grass weed.

Lastly, the Philippines contains rainfed lowlands and is located in an agro-ecological zone. Therefore, it is valuable to implement an effective weed management system to remove invasive grass weed species from this area. By distinguishing between different species of weeds using the developed system, appropriate weed control measures can be formulated.

2. Methodology

2.1. Research Methodology

Grass weeds harm the environment because they decrease the nutrients available for crops and block seedlings from receiving sufficient light. To address this problem, we created a system for detecting and classifying grass weeds in real time, using a Raspberry Pi camera and the YOLOv5 algorithm to identify weeds conveniently. We selected appropriate software and hardware. The performance of the system was evaluated using a confusion matrix, from which its accuracy in identifying weed types was determined.

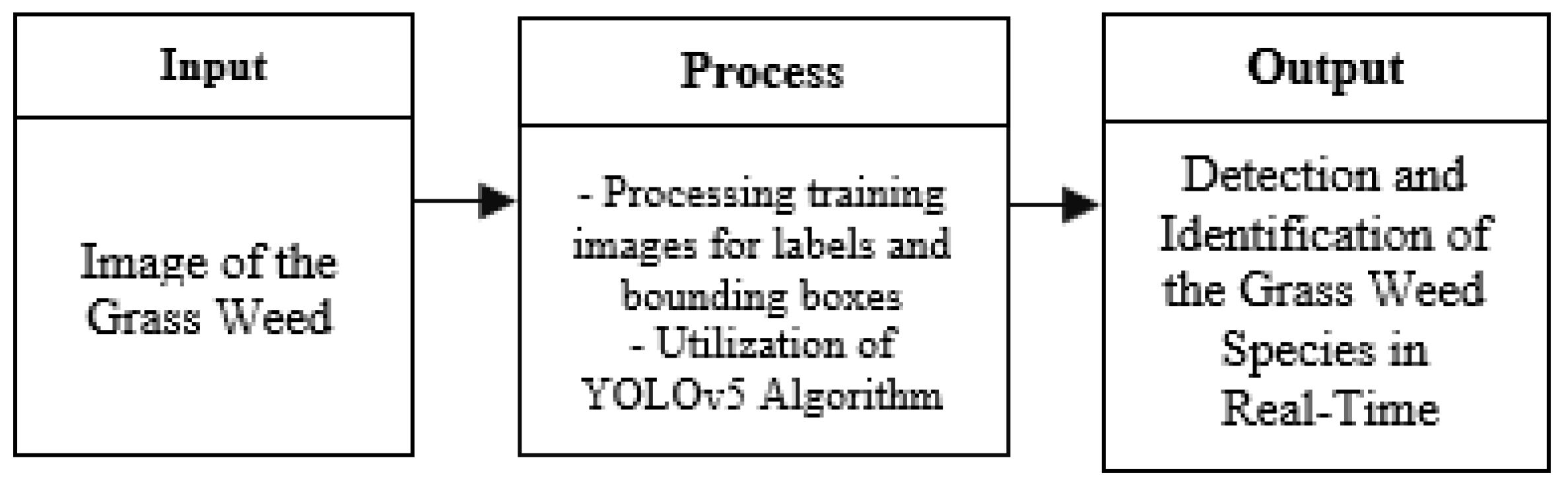

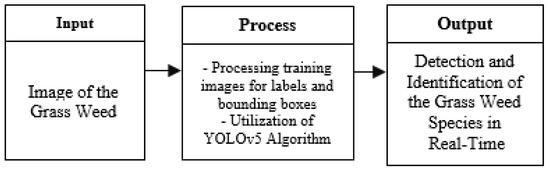

Figure 1 shows the research framework of this study. The captured image was processed by the algorithm and displayed with a bounding box around the detected grass weed for identification. A YOLOv5 model trained on a large object detection dataset was adapted to this task through transfer learning. The dataset used for this adaptation consisted of images of the four types of grass weeds. The system output consisted of the identification labels and bounding boxes of the region of interest (ROI) in the real images captured by the camera.

Figure 1. Research framework.

2.2. Hardware Development

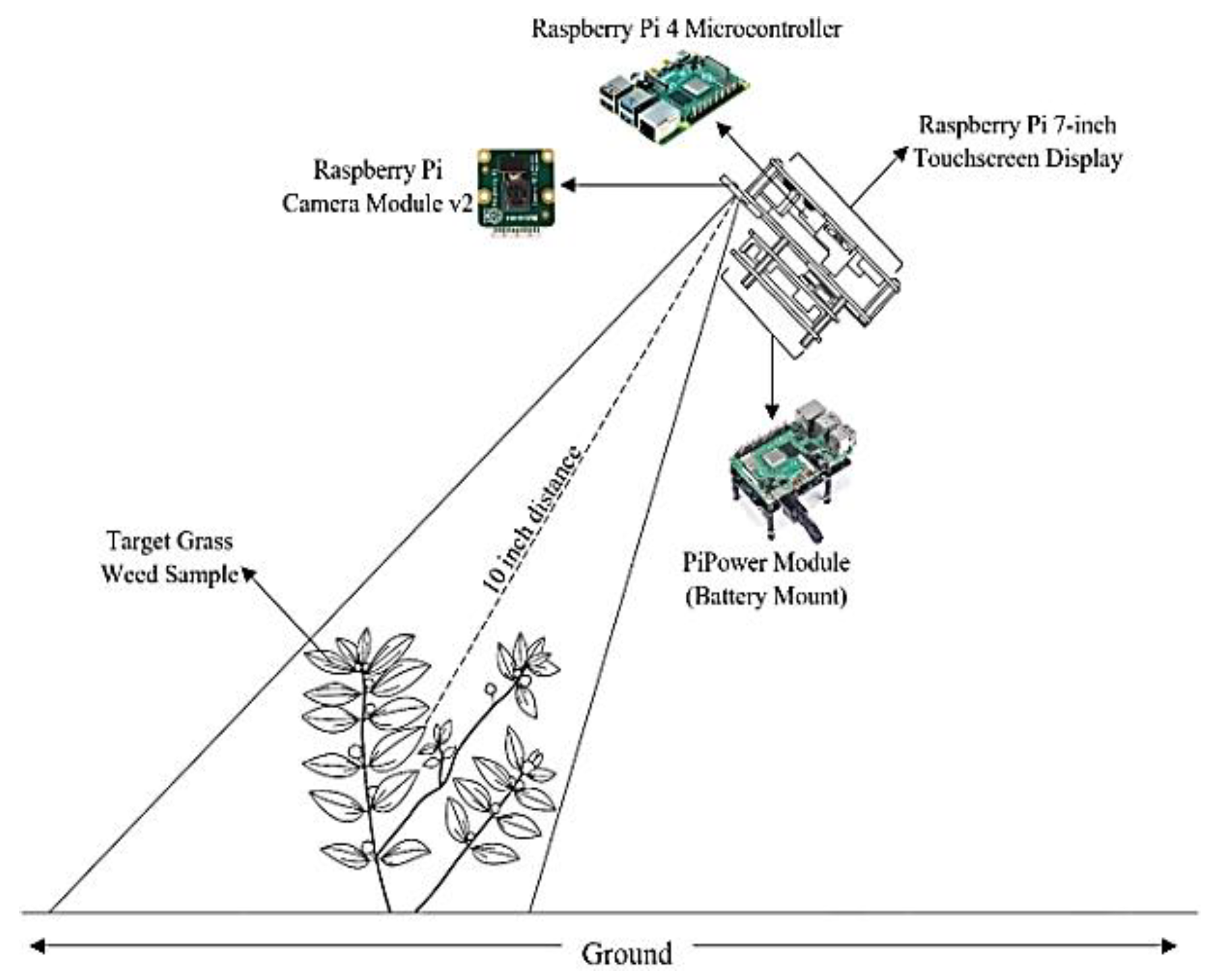

The system consisted of a Raspberry Pi 4 single-board computer, a 7-inch touchscreen, an uninterruptible power supply, a 32 GB Samsung microSD card, and a camera module v2. The system was designed to be portable. The microSD card held the operating system (OS), while the power supply provided a constant power source. The power supply module had a recharging function and an output rated at 5 V and 3 A (15 W), and it was fitted with two 3.7 V 18650 Li-ion rechargeable batteries. The camera module captured real-time images, which were processed by the Raspberry Pi 4. An LCD displayed the system output in real time.

2.3. Software Development

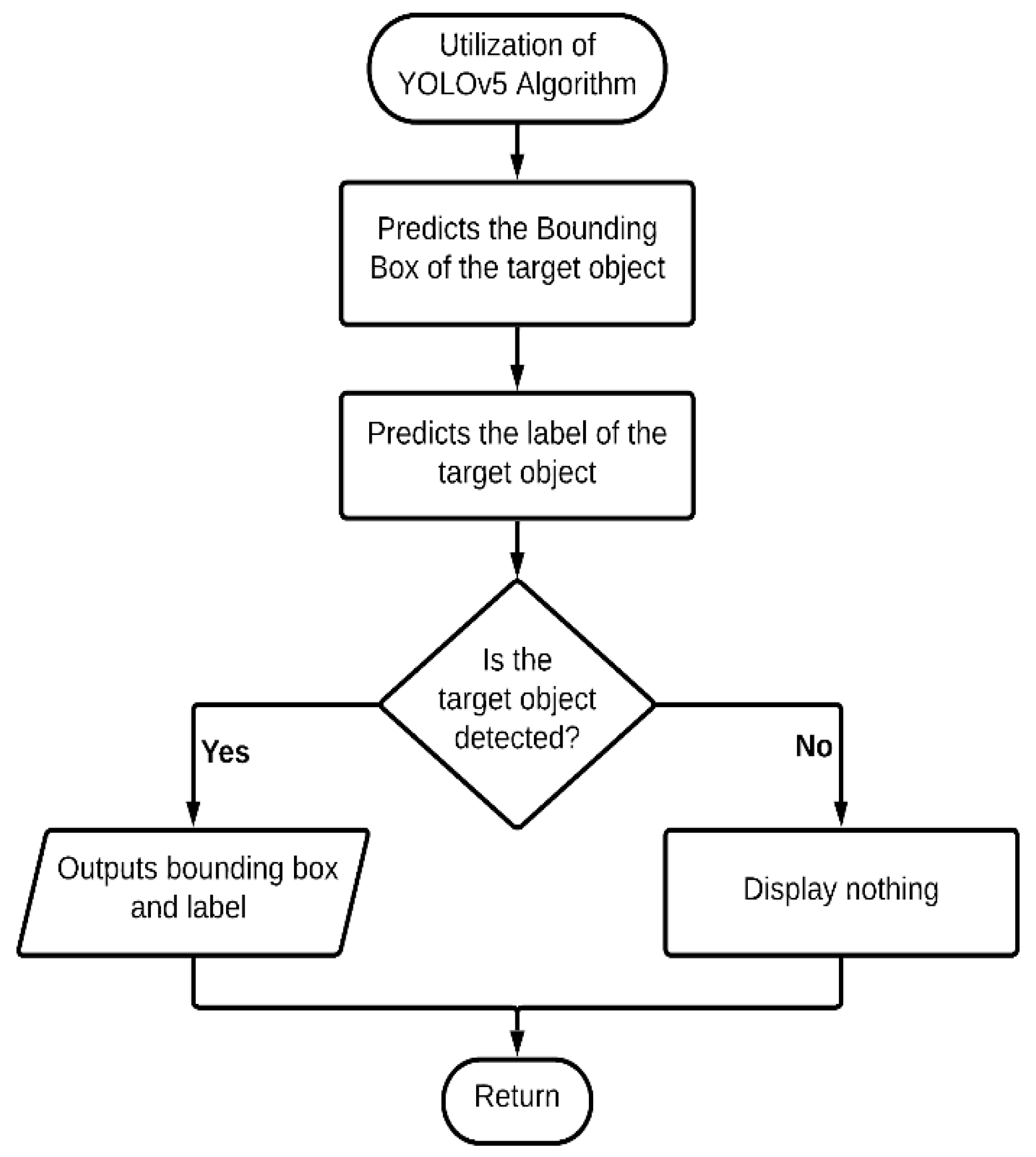

When an image was captured by the camera, the system's algorithm processed it and displayed the weed's bounding box and identification. The YOLOv5 algorithm was used to identify the grass weed species.
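The step from raw detections to the label shown on screen can be sketched as follows. This is a minimal illustration, not the authors' code: the `Detection` type, the `label_detections` helper, the class order, the 0.5 confidence threshold, and every common name other than "Coat Buttons" are assumptions introduced here.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    class_id: int       # index of the predicted class
    confidence: float   # detector score in [0, 1]
    box: tuple          # (x_min, y_min, x_max, y_max) in pixels


# Assumed class order and common names; only "Coat Buttons" appears in the text.
CLASS_NAMES = {
    0: ("Euphorbia maculata", "Spotted Spurge"),
    1: ("Eleusine indica", "Goosegrass"),
    2: ("Euphorbia hirta", "Asthma Plant"),
    3: ("Tridax procumbens", "Coat Buttons"),
}


def label_detections(detections, conf_threshold=0.5):
    """Keep detections at or above the threshold and build display captions."""
    kept = [d for d in detections if d.confidence >= conf_threshold]
    kept.sort(key=lambda d: d.confidence, reverse=True)
    labelled = []
    for det in kept:
        scientific, common = CLASS_NAMES[det.class_id]
        caption = f"{common} ({scientific}) {det.confidence:.2f}"
        labelled.append((caption, det.box))
    return labelled


# Hypothetical detector output for one frame.
example = [
    Detection(3, 0.91, (40, 60, 220, 260)),
    Detection(1, 0.32, (10, 10, 50, 50)),  # below the threshold, so dropped
]
results = label_detections(example)
```

Each caption and box pair in `results` would then be drawn on the camera frame by the GUI.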

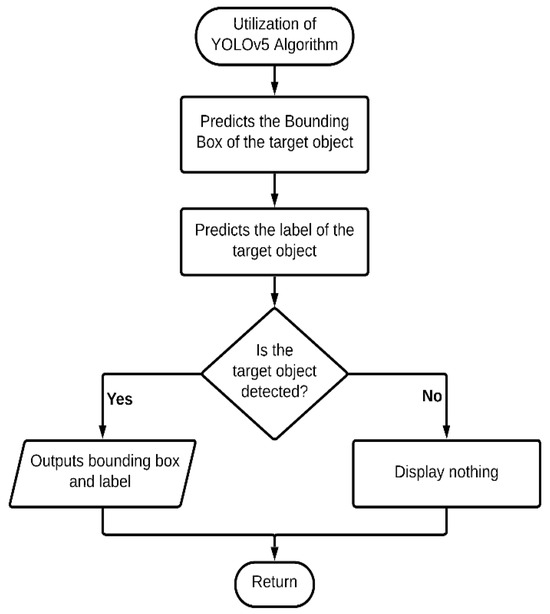

The YOLOv5 algorithm was trained with an image dataset that combined images captured during this study with images collected from an online database, the Global Biodiversity Information Facility (GBIF). All images were augmented to increase the number of images in the training dataset, and each weed category contained 500 training images. The images were annotated with labels and bounding boxes using Roboflow, and the augmentation consisted of rotating, flipping, and zooming in on the images. Afterward, the training dataset was exported in the YOLO format. YOLOv5 was then adapted through transfer learning to detect and classify the four weed categories, and it was trained on the dataset using Google Colab. Once training was finished, the algorithm produced an output that detected and identified the target object (Figure 2).
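When an annotated image is flipped or rotated, its bounding-box label has to be transformed in the same way. The sketch below illustrates this for YOLO-format labels (box center and size, normalized to the image dimensions). It is not the Roboflow implementation: the function names are hypothetical, plain nested lists stand in for image arrays to avoid dependencies, and zooming is omitted.

```python
def to_yolo(box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to YOLO format:
    (x_center, y_center, width, height), each normalized to [0, 1]."""
    x_min, y_min, x_max, y_max = box
    return (
        (x_min + x_max) / 2 / img_w,
        (y_min + y_max) / 2 / img_h,
        (x_max - x_min) / img_w,
        (y_max - y_min) / img_h,
    )


def hflip(image, label):
    """Mirror the image left to right; the box center x becomes 1 - x."""
    xc, yc, w, h = label
    return [row[::-1] for row in image], (1.0 - xc, yc, w, h)


def rot90_ccw(image, label):
    """Rotate 90 degrees counter-clockwise; (x, y) maps to (y, 1 - x)
    and the box width and height swap."""
    xc, yc, w, h = label
    rotated = [list(col) for col in zip(*image)][::-1]
    return rotated, (yc, 1.0 - xc, h, w)


# Toy 4x6 "image" with one marked pixel at row 1, column 2,
# and a box that covers exactly that pixel.
image = [[0] * 6 for _ in range(4)]
image[1][2] = 1
label = to_yolo((2, 1, 3, 2), img_w=6, img_h=4)

flipped, flipped_label = hflip(image, label)
rotated, rotated_label = rot90_ccw(image, label)
```

In both cases the transformed label still centers on the marked pixel, which is a quick sanity check for any augmentation pipeline that moves boxes along with images.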

Figure 2. YOLOv5 algorithm.

3. Experimental Set-Up

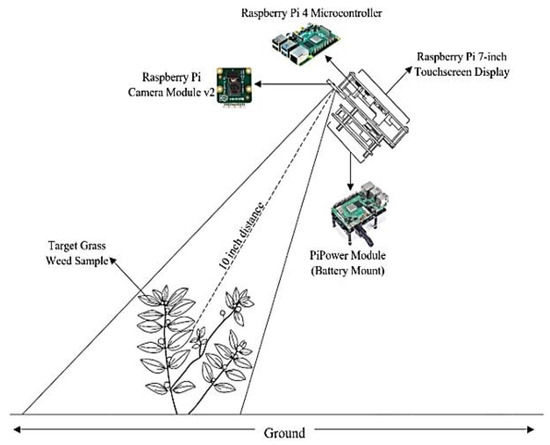

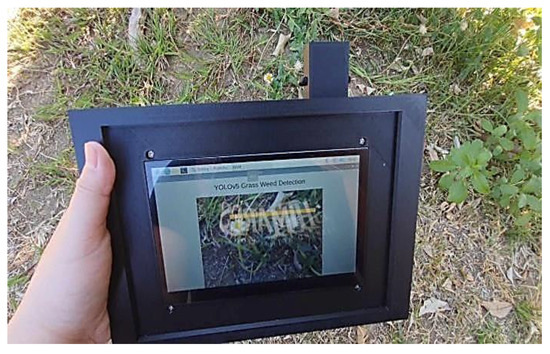

After confirming that the hardware had no defects, we conducted an experiment in real-world settings. The system captured images at a distance of 10 inches (25.4 cm) from the object (Figure 3). Table 1 shows the number of images taken at the testing site.

Figure 3. Device set-up and method used for capturing images.

Table 1. Data collected during prototype testing.

4. Results and Discussion

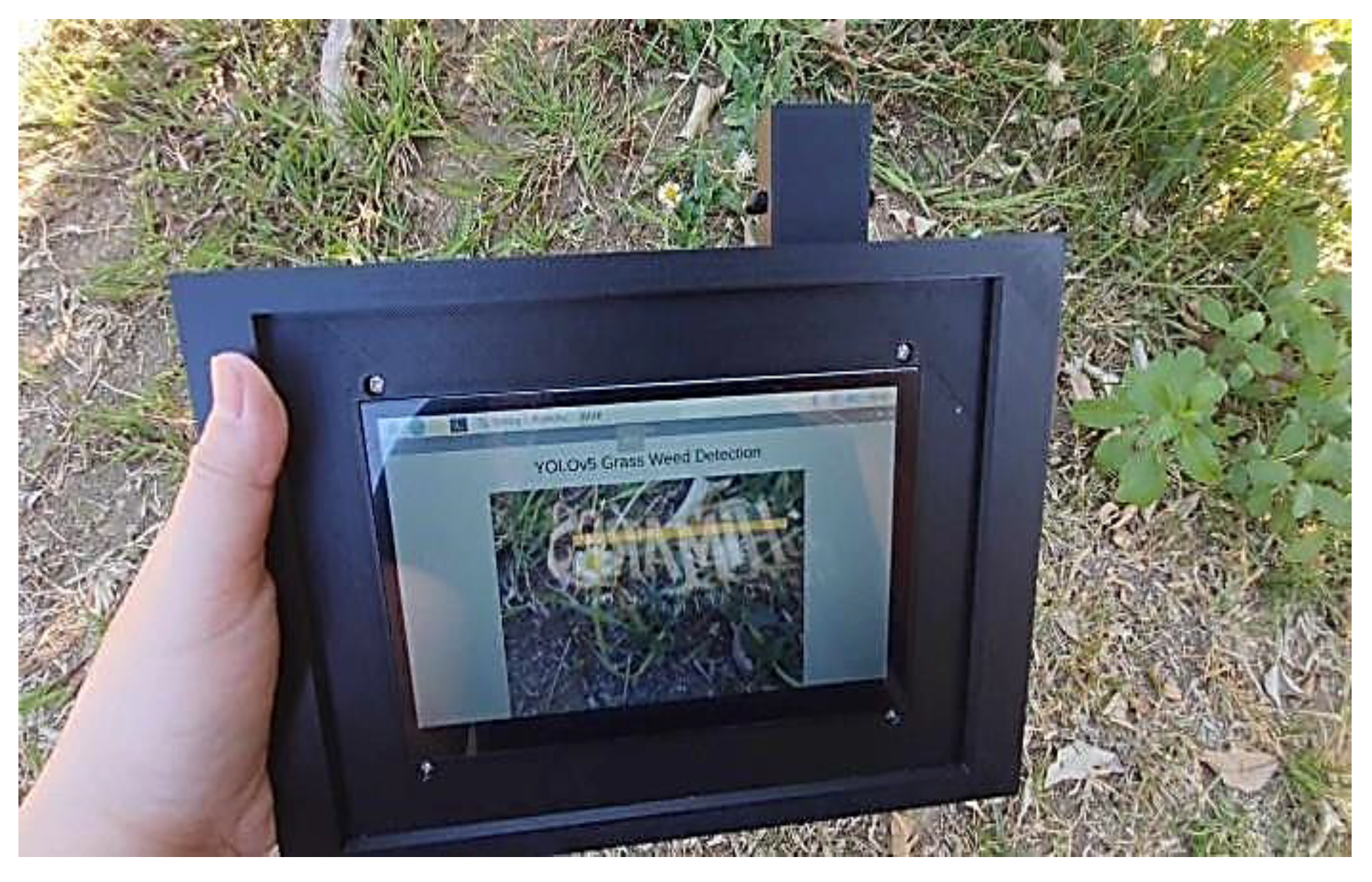

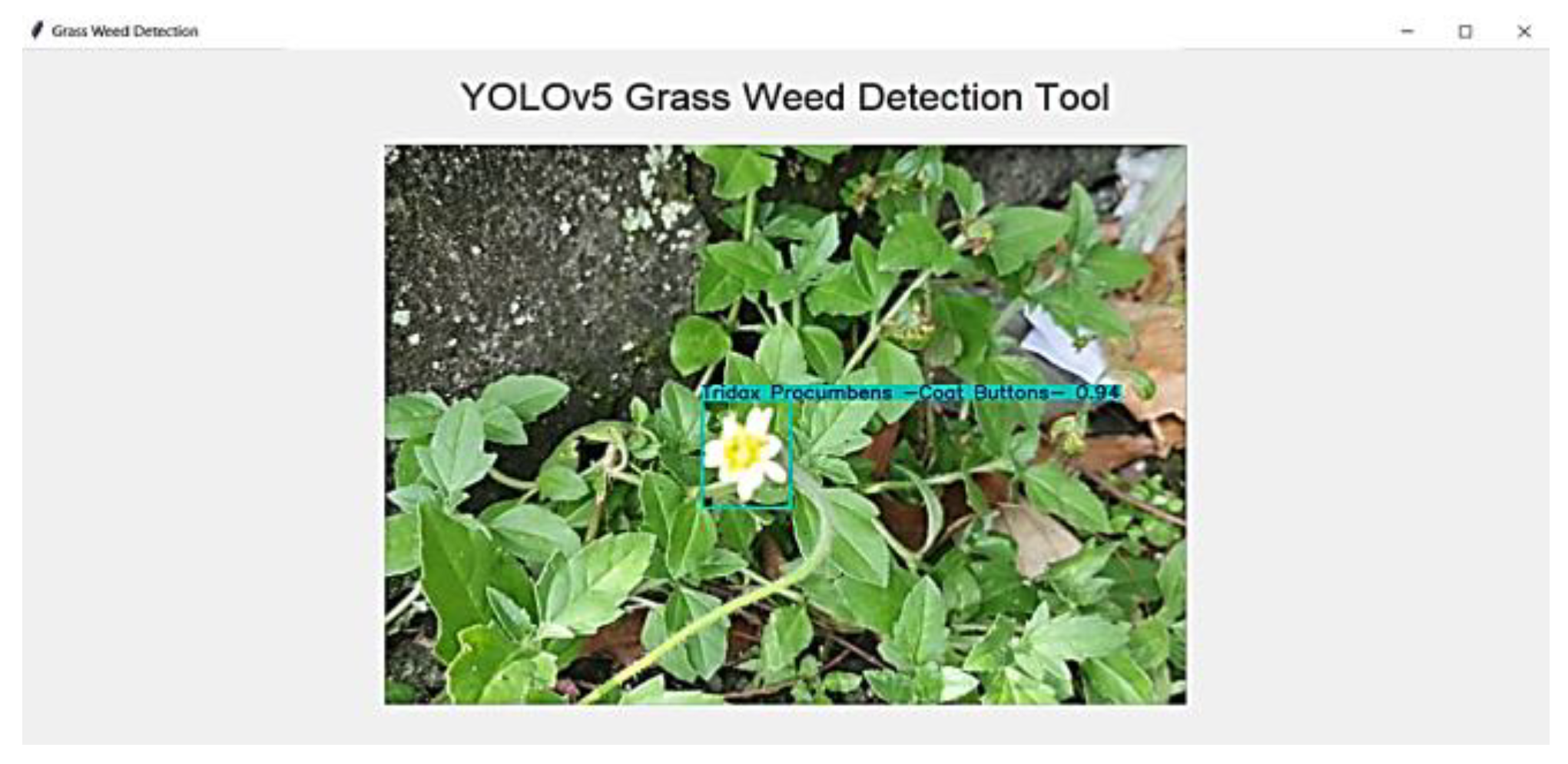

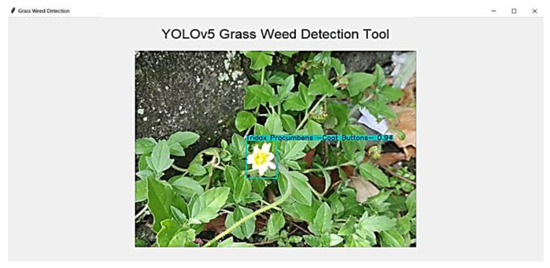

Figure 4 shows the system in use. The algorithm was run in the Thonny IDE on the Raspberry Pi 4, and the graphical user interface (GUI) was displayed on the screen. The grass weed was correctly identified as Tridax procumbens (Coat Buttons).

Figure 4. Testing of developed system.

Figure 5 shows the GUI that displayed the Raspberry Pi camera’s feed when the system was used to identify the target grass weed.

Figure 5. System's GUI.

The confusion matrix (Table 2) shows the identification results generated by the system. Weed D (Tridax procumbens) had the largest number of data points collected, whereas Weed C (Euphorbia hirta) had the smallest. Most identifications made by the algorithm were correct, although several were incorrect. We computed the accuracy of the system using Equation (1).
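Equation (1) is not reproduced in this text. Overall accuracy for a confusion matrix is conventionally defined as below, and this is presumably what (1) denotes; the symbols $C_{ij}$ and $K$ are notation introduced here rather than taken from the original.

$$
\text{Accuracy} = \frac{\sum_{i=1}^{K} C_{ii}}{\sum_{i=1}^{K}\sum_{j=1}^{K} C_{ij}} \times 100\%
$$

Here $C_{ij}$ is the number of samples of true class $i$ that were predicted as class $j$, and $K = 4$ is the number of weed classes, so the numerator counts the correct predictions on the diagonal and the denominator counts all tested samples.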

Table 2. Confusion matrix.

There were 183 correct predictions out of a total of 192 grass weed samples, so the overall accuracy of the YOLOv5 algorithm was 95.31%.
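The reported figure can be checked with a few lines of Python. The helper below is an illustrative sketch: the function name and the 2×2 matrix are hypothetical (the entries of Table 2 are not reproduced here), and only the totals 183 and 192 come from the text.

```python
def overall_accuracy(matrix):
    """Return the fraction of correct predictions in a square confusion matrix.

    matrix[i][j] is the number of samples of true class i predicted as class j,
    so correct predictions lie on the diagonal.
    """
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return correct / total


# Hypothetical 2-class matrix, used only to exercise the function.
toy_matrix = [[5, 1],
              [0, 4]]
toy_accuracy = overall_accuracy(toy_matrix)

# Totals reported in the text: 183 correct predictions out of 192 samples.
reported_accuracy = round(183 / 192 * 100, 2)
print(reported_accuracy)
```

With the reported totals, the remaining 9 of the 192 samples are the misclassified ones.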

5. Conclusions and Recommendations

We developed a system for identifying four types of grass weed using the YOLOv5 algorithm and a Raspberry Pi. The developed system showed an overall accuracy of 95.31%. The YOLOv5 algorithm performed effectively when compared with previous YOLO models. Future research should add more data to the dataset used to train the algorithm in order to increase its accuracy. A different algorithm needs to be developed to identify other grass weed types. A more powerful processing platform than the Raspberry Pi 4 and a higher-resolution camera module would improve the object detection ability of the system.

Author Contributions

Conceptualization, C.G.R. and J.A.G.; methodology, C.G.R.; software, C.G.R.; validation, C.G.R., J.A.G. and N.L.; formal analysis, J.A.G.; investigation, C.G.R.; resources, C.G.R.; data curation, J.A.G.; writing—original draft preparation, J.A.G.; writing—review and editing, C.G.R.; visualization, J.A.G.; supervision, C.G.R. and J.A.G.; project administration, C.G.R.; funding acquisition, C.G.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dhaker, D.L.; Karthik, R.; Maurya, S.; Singh, K. Chemical Weed Management Increasing Herbicide Efficiency. Agric. Environ. E-Newsl. 2021, 2, 31–32.

- Ruzzante, S.; Labarta, R.; Bilton, A. Adoption of agricultural technology in the developing world: A meta-analysis of the empirical literature. World Dev. 2021, 146, 105599.

- Bakhshipour, A.; Jafari, A.; Nassiri, S.M.; Zare, D. Weed segmentation using texture features extracted from wavelet sub-images. Biosyst. Eng. 2017, 157, 1–12.

- Buenconsejo, L.T.; Linsangan, N.B. Detection and Identification of Abaca Diseases using a Convolutional Neural Network CNN. In Proceedings of the IEEE Region 10 Annual International Conference, Auckland, New Zealand, 7–10 December 2021.

- Bacus, J.A.; Linsangan, N.B. Detection and Identification with Analysis of Carica papaya Leaf Using Android. J. Adv. Inf. Technol. 2022, 13, 162–166.

- Ignacio, J.S.; Eisma, K.N.A.; Caya, M.V.C. A YOLOv5-based Deep Learning Model for In-Situ Detection and Maturity Grading of Mango. In Proceedings of the 2022 6th International Conference on Communication and Information Systems, ICCIS 2022, Chongqing, China, 14–16 October 2022.

- Legaspi, J.; Pangilinan, J.R.; Linsangan, N. Tomato Ripeness and Size Classification Using Image Processing. In Proceedings of the 2022 5th International Seminar on Research of Information Technology and Intelligent Systems, ISRITI 2022, Yogyakarta, Indonesia, 8–9 December 2022.

- Dandekar, Y.; Shinde, K.; Gangan, J.; Firdausi, S.; Bharne, S. Weed Plant Detection from Agricultural Field Images using YOLOv3 Algorithm. In Proceedings of the 2022 6th International Conference on Computing, Communication, Control and Automation, ICCUBEA 2022, Pune, India, 26–27 August 2022.

- Johnson, R.; Mohan, T.; Paul, S. Weed Detection and Removal based on Image Processing. Int. J. Recent Technol. Eng. (IJRTE) 2020, 8, 347–352.

- Wang, Y.; Yang, L.; Chen, H.; Hussain, A.; Ma, C.; Al-Gabri, M. Mushroom-YOLO: A deep learning algorithm for mushroom growth recognition based on improved YOLOv5 in agriculture 4.0. In Proceedings of the IEEE International Conference on Industrial Informatics (INDIN), Perth, Australia, 25–28 July 2022.

- Sio, G.; Guantero, D.; Villaverde, J.F. Plastic Waste Detection on Rivers Using YOLOv5 Algorithm. In Proceedings of the 2022 13th International Conference on Computing Communication and Networking Technologies, ICCCNT 2022, Kharagpur, India, 3–5 October 2022.

- Alde, R.B.A.; De Castro, K.D.L.; Caya, M.V.C. Identification of Musaceae Species using YOLO Algorithm. In Proceedings of the 2022 5th International Seminar on Research of Information Technology and Intelligent Systems, ISRITI 2022, Yogyakarta, Indonesia, 8–9 December 2022.

- Rakhmatulin, I.; Kamilaris, A.; Andreasen, C. Deep neural networks to detect weeds from crops in agricultural environments in real-time: A review. Remote Sens. 2021, 13, 4486.

- Hu, L. An Improved YOLOv5 Algorithm of Target Recognition. In Proceedings of the 2023 IEEE 2nd International Conference on Electrical Engineering, Big Data and Algorithms, EEBDA 2023, Changchun, China, 24–26 February 2023.

- Caya, M.V.C.; Maramba, R.G.; Mendoza, J.S.D.; Suman, P.S. Characterization and Classification of Coffee Bean Types using Support Vector Machine. In Proceedings of the 2020 IEEE 12th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management, HNICEM 2020, Manila, Philippines, 3–7 December 2020.

- Calimag, A.D.R.; Padilla, D.A.; Manlises, C.O. Checkout System with Object Detection using NVIDIA Jetson Nano and Raspberry Pi. In Proceedings of the 2023 IEEE 5th Eurasia Conference on IOT, Communication and Engineering, ECICE 2023, Yunlin, Taiwan, 27–29 October 2023; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2023; pp. 168–171.

- Tenorio, A.J.F.; Desiderio, J.M.H.; Manlises, C.O. Classification of Rincon Romaine Lettuce Using convolutional neural networks (CNN). In Proceedings of the 2022 IEEE 14th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management, HNICEM 2022, Boracay Island, Philippines, 1–4 December 2022; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2022.

- Chan, C.J.L.; Reyes, E.J.A.; Linsangan, N.B.; Juanatas, R.A. Real-time Detection of Aquarium Fish Species Using YOLOv4-tiny on Raspberry Pi 4. In Proceedings of the 4th IEEE International Conference on Artificial Intelligence in Engineering and Technology, IICAIET 2022, Kota Kinabalu, Malaysia, 13–15 September 2022.

- Canlas, M.Z.P.; Ilag, C.J.S.; Villaverde, J.F. Implementation of YOLOv3 and YOLOv4 CNN Algorithm in Detecting Struvite Crystals in Canine Urine Sediments. In Proceedings of the 2022 IEEE 14th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management, HNICEM 2022, Boracay Island, Philippines, 1–4 December 2022; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2022.

- Medina, J.K.; Jasper, P.; Tribiana, P.; Villaverde, J.F. Disease Classification of Oranda Goldfish Using YOLO Object Detection Algorithm. In Proceedings of the 2023 15th International Conference on Computer and Automation Engineering, ICCAE 2023, Sydney, Australia, 3–5 March 2023; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2023; pp. 249–254.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).