1. Introduction

Fingerprint recognition is a key biometric technology in the Internet of Things (IoT), offering secure and reliable identity verification using unique fingerprint patterns. As IoT devices become widespread, fingerprint recognition has become integral to applications such as smart homes, wearable devices, and smart locks. However, the small sensors in these devices are susceptible to environmental noise, especially when fingerprints are wet or poorly captured. In such cases, effective denoising becomes critical to improving fingerprint clarity and recognition accuracy.

While existing fingerprint restoration models perform well on larger fingerprints, they struggle with the smaller or partial fingerprints captured by IoT devices, which are commonly only 176 × 36 pixels in size. These small fingerprints lack the detail that traditional feature extraction and matching techniques rely on, leading to higher false rejection rates (FRRs). Recent studies have shown that conventional enhancement methods have difficulty remaining effective on such small-scale fingerprints.

In this study, we evaluated FingerNet, DenseUNet, CVF-SID, Residual M-net, and FPD-M-Net on tiny partial fingerprints. Although these methods improve the structural similarity index (SSIM) and peak signal-to-noise ratio (PSNR), their ability to reduce FRRs in IoT devices remains limited.

Table 1 presents a performance comparison of these methods. CVF-SID and FingerNet show high SSIM and PSNR scores but still exhibit higher FRRs than the proposed method in this study, WFDN. In contrast, WFDN significantly outperforms all other methods, achieving an SSIM of 0.95, a PSNR of 33.47, and a reduced FRR of 8.4%. These results demonstrate the superior performance of WFDN in image quality and recognition accuracy for small, wet fingerprints in IoT environments.

2. Related Works

The quality of wet fingerprints is degraded by moisture, resulting in reduced recognition accuracy. Existing restoration methods adopt traditional image processing and machine learning approaches. Traditional methods, such as median and Gaussian filtering, are commonly used for denoising but struggle to differentiate moisture-induced noise from the actual fingerprint patterns. Wavelet transform and Fourier transform are effective for noise removal, but their performance is limited when dealing with non-linear noise in wet fingerprints due to their dependence on specific basis functions and thresholding techniques.

Machine learning, particularly deep learning such as convolutional neural networks (CNNs), has significantly advanced image restoration. For instance, DenseUNet enhances feature reuse and improves performance in wet fingerprint restoration, while XY-Deblur effectively addresses moisture-induced blur. The cyclic multi-variate function for self-supervised image denoising (CVF-SID) integrates multi-scale feature extraction with deep learning and is well-suited for wet fingerprint restoration. Specialized fingerprint models, such as FingerNet, PFENet, and FPD-M-Net, have shown promising results by leveraging feature extraction and enhancement techniques to improve fingerprint image quality. However, these models require large datasets and computational resources and are less effective for tiny partial fingerprints due to insufficient features.

To overcome this limitation, we developed a model specifically designed for tiny partial fingerprints, built on CVF-SID and enhanced with scale-invariant feature transform (SIFT) feature extraction. CVF-SID handles the cyclic structure of fingerprints, while SIFT improves restoration accuracy by preserving critical fingerprint details. By classifying fingerprints based on quality, the developed model balances computational efficiency and performance.

While significant progress has been made in wet fingerprint restoration, challenges remain. In this article, the developed model is presented with its experimental setup and results. The model improves wet fingerprint restoration and advances fingerprint recognition technology.

3. Proposed Architecture

3.1. WFDN

The WFDN block is the core component that addresses the challenges posed by noise in wet fingerprint recognition. Its architecture systematically isolates noise and restores image clarity, enhancing the quality of wet fingerprint images and overall recognition accuracy.

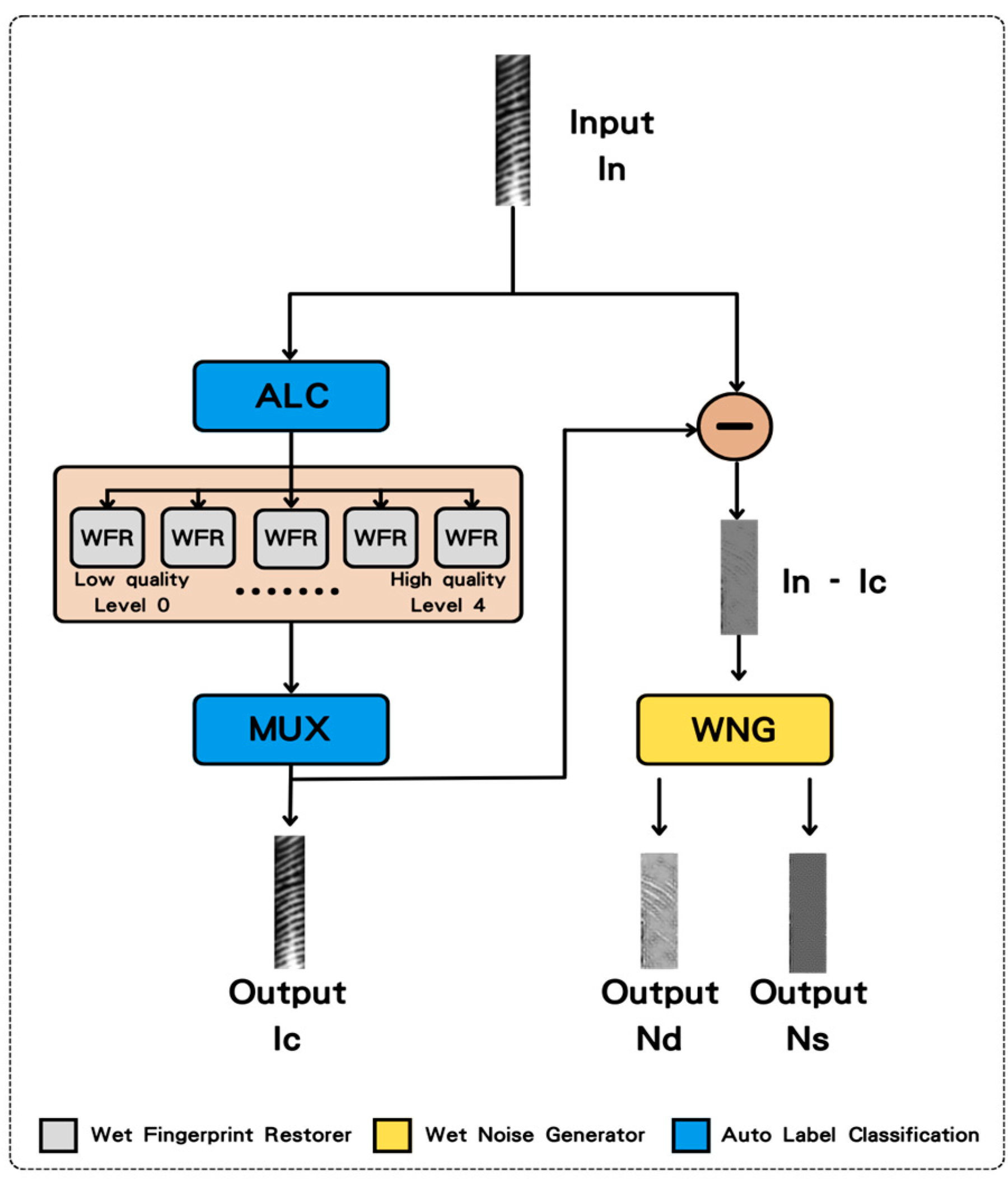

Figure 1 shows the complete architecture of the WFDN block. The WFDN block takes a single wet fingerprint image as input and generates three outputs: the restored fingerprint image, fingerprint-related noise, and fingerprint-unrelated noise. The noise images are derived by subtracting the restored image from the input; the resulting noise is then classified into fingerprint-related and fingerprint-unrelated components. Separating the noise types in this way improves image clarity and recognition accuracy, and the three outputs enable better noise characterization for optimization in later stages.
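The subtraction step described above can be illustrated with a minimal NumPy sketch. It only computes the total residual (input minus restored image); how that residual is split into fingerprint-related and unrelated noise is not shown. The function name `residual_noise` and the synthetic arrays are illustrative assumptions, not part of the model.

```python
import numpy as np

def residual_noise(wet_input: np.ndarray, restored: np.ndarray) -> np.ndarray:
    """Total noise estimate: input image minus restored image (signed float values)."""
    if wet_input.shape != restored.shape:
        raise ValueError("input and restored image must have the same shape")
    return wet_input.astype(np.float32) - restored.astype(np.float32)

# Synthetic stand-ins for a 176 x 36 fingerprint (36 rows, 176 columns).
rng = np.random.default_rng(0)
restored = rng.random((36, 176)).astype(np.float32)
wet = restored + 0.1 * rng.standard_normal((36, 176)).astype(np.float32)

noise = residual_noise(wet, restored)
```

By construction, adding `noise` back to `restored` reproduces the wet input, which is the property the consistency of the decomposition relies on.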

The WFDN block relies on two key components for denoising: the wet fingerprint restorer (WFR) and the wet noise generator (WNG). These components work together to restore the image and separate the noise, significantly boosting the system’s overall performance.

3.1.1. WFR

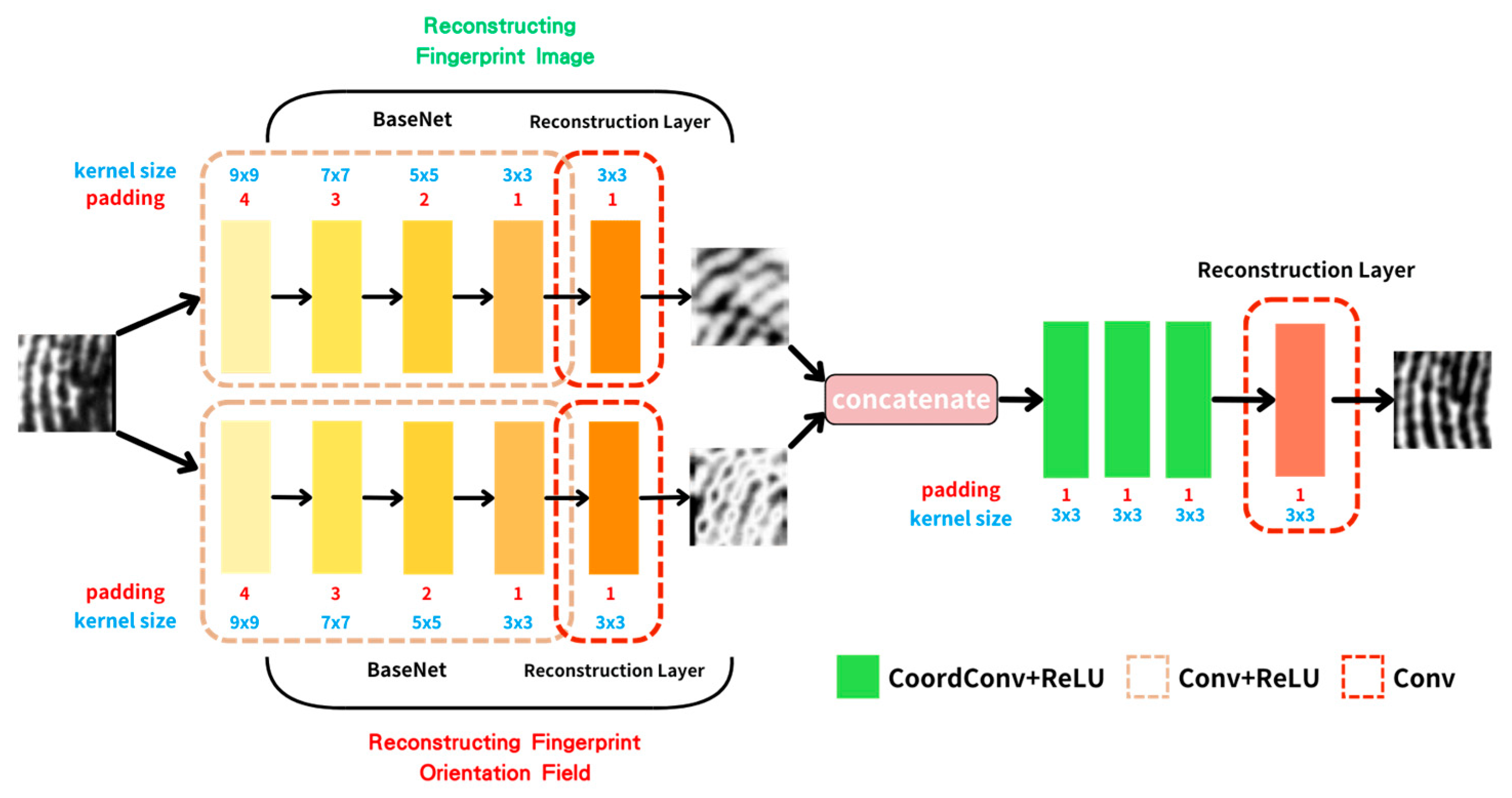

WFR utilizes a multi-branch CNN to address the challenges of noise and distortion in wet fingerprint images [7]. As illustrated in Figure 2, the architecture consists of two independent BaseNets, one for restoring the fingerprint image and one for reconstructing the orientation field, which captures the ridge directions critical for preserving fingerprint detail. After the initial feature extraction from the input fingerprint, the outputs of the BaseNets are combined with a SIFT feature map. This integration enhances the model’s ability to retain key fingerprint details. The combined outputs then pass through three convolutional layers, each of which also receives the SIFT feature map, effectively forming a CoordConv layer. In these layers, the convolution operation is augmented by coordinate information from the SIFT feature map so that the model can better capture spatial relationships and fine details within the fingerprint image.

Each CoordConv layer employs a 3 × 3 filter with ReLU activation to refine the essential features, while continuously incorporating the SIFT feature map. This iterative process of integrating SIFT-enhanced features significantly reduces noise and improves detail preservation in a fingerprint image as the orientation field and SIFT feature map collectively provide critical spatial and structural information. By leveraging the dual BaseNet approach and the CoordConv architecture, the model substantially improves restoration quality and boosts fingerprint recognition accuracy, even in challenging wet fingerprint scenarios.
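As a rough illustration of one such layer, the sketch below concatenates a SIFT feature map to the incoming feature channels and applies a 3 × 3 convolution with ReLU, using plain NumPy. The channel counts, the random weights, and the names `conv3x3_relu` and `sift_augmented_layer` are assumptions made for this example; the actual layer widths and trained weights are not given in the text.

```python
import numpy as np

def conv3x3_relu(x, weight, bias):
    """3 x 3 'same' convolution (cross-correlation, as used in CNNs) followed by ReLU.

    x: (C_in, H, W), weight: (C_out, C_in, 3, 3), bias: (C_out,)
    """
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))  # zero-pad height and width only
    out = np.zeros((weight.shape[0], h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            window = padded[:, dy:dy + h, dx:dx + w]
            # Mix input channels into output channels for this kernel offset.
            out += np.einsum("oc,chw->ohw", weight[:, :, dy, dx], window)
    out += bias[:, None, None]
    return np.maximum(out, 0.0)

def sift_augmented_layer(features, sift_map, weight, bias):
    """Append the SIFT map as one extra channel, then apply conv + ReLU."""
    x = np.concatenate([features, sift_map[None, :, :]], axis=0)
    return conv3x3_relu(x, weight, bias)

rng = np.random.default_rng(0)
features = rng.standard_normal((4, 36, 176))   # hypothetical 4-channel feature maps
sift_map = np.zeros((36, 176))
sift_map[10, 50] = 1.0                         # one matched key point
weight = 0.1 * rng.standard_normal((8, 5, 3, 3))  # 4 feature channels + 1 SIFT channel
bias = np.zeros(8)
out = sift_augmented_layer(features, sift_map, weight, bias)
```

Feeding the SIFT map into every layer, rather than only the first, keeps the key-point locations available at each refinement step.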

3.1.2. WNG

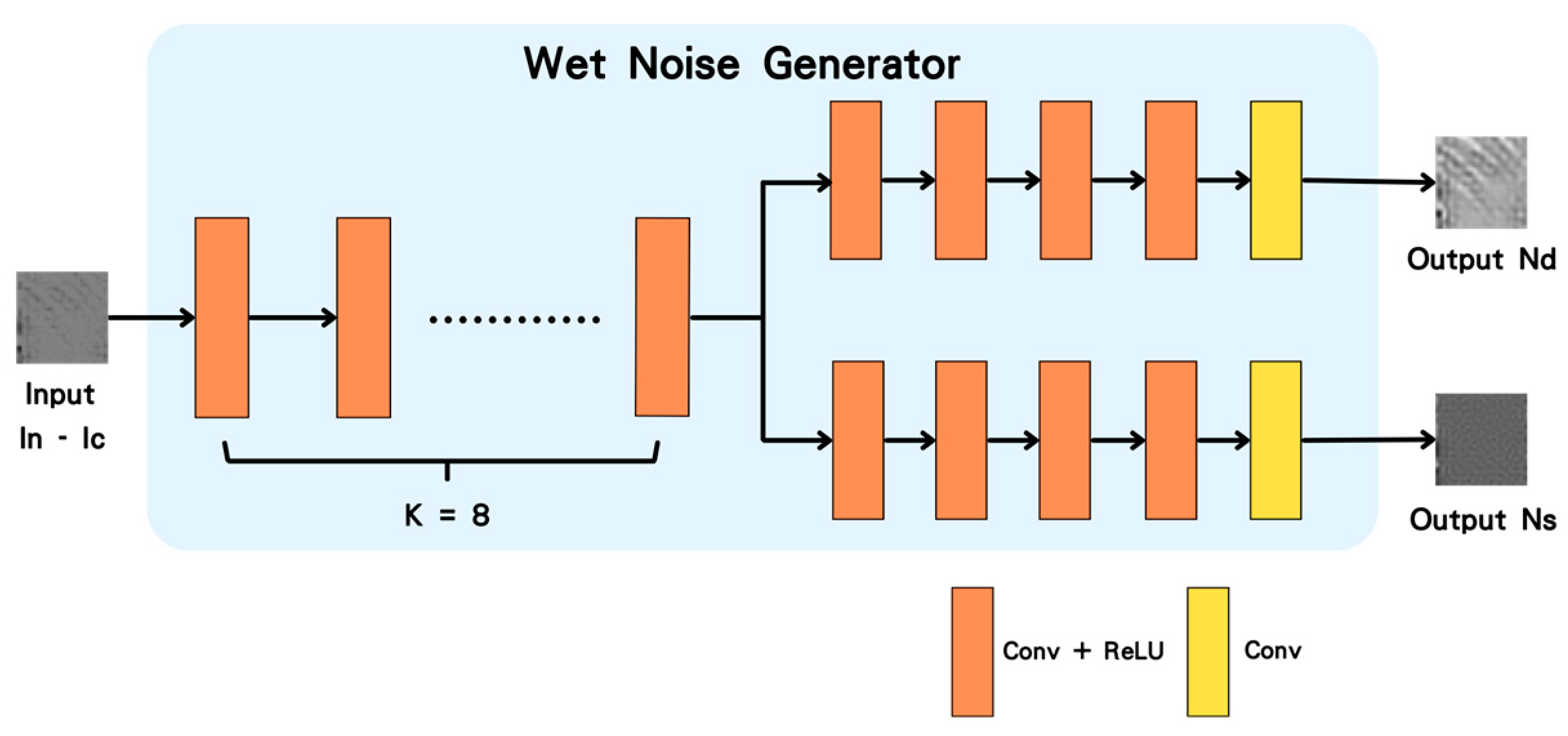

WNG separates and categorizes the noise in wet fingerprint images so that noise can be reduced effectively while important fingerprint features are preserved. WNG distinguishes between fingerprint-related and independent noise, as shown in Figure 3. Fingerprint-related noise affects ridge patterns, while independent noise consists of artifacts unrelated to the fingerprint. Using filtering techniques and a CNN, WNG creates a noise map that separates the two types of noise. Fingerprint-related noise is reduced to maintain ridge integrity, while independent noise is aggressively filtered out. This dual approach ensures precise noise reduction, enhancing fingerprint image clarity and improving recognition accuracy.

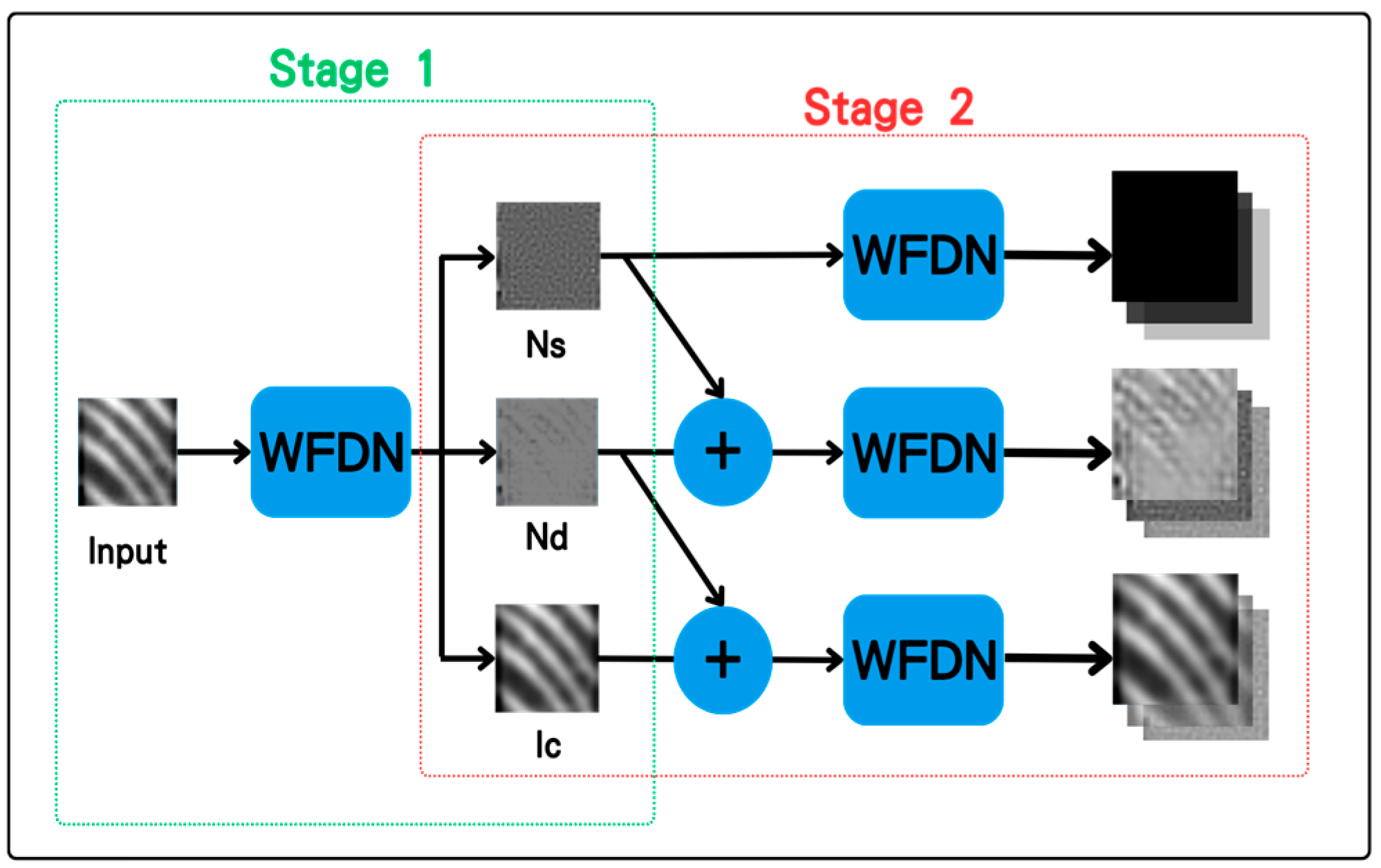

3.2. Cyclic Framework for Progressive Noise Suppression

In the developed model, the cyclic framework plays a critical role in enhancing the denoising effectiveness for wet fingerprints. This design significantly improves the model’s ability to restore fingerprint images under high-noise conditions, as shown in Figure 4.

The cyclic framework employs iterative processing to continuously refine the model’s output. Initially, the input images are processed by the WFDN block, which produces three outputs: the restored fingerprint image, noise related to the fingerprint, and noise unrelated to the fingerprint. These outputs are not used as the final result but are further refined within the cyclic framework through multiple iterations.

In this framework, the restored image is combined with both types of noise using a weighted sum. This process allows the model to learn the residual noise characteristics in the image to reduce noise effectively in subsequent stages. The combined results are then fed back into the next stage of the WFDN block, where the model progressively refines the image and reduces noise in each stage. This multi-stage cyclic training framework ensures that the model continuously optimizes itself, improving the restoration quality of wet fingerprints with each iteration. Specifically, in high-noise environments, this architecture effectively suppresses noise unrelated to the fingerprint while preserving important fingerprint features, ultimately enhancing the system’s recognition accuracy and stability. Therefore, the model precisely balances noise suppression and feature retention and significantly improves the quality of wet fingerprint images, demonstrating its potential for real-world fingerprint recognition applications.
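The control flow of this feedback loop can be sketched as follows. The sketch is a toy under stated assumptions: `toy_wfdn_block` is a hypothetical stand-in for a trained WFDN block (it simply shrinks pixel values toward the image mean), and the number of stages and the weights `w_related` and `w_unrelated` are illustrative values, not the ones used in the model.

```python
import numpy as np

def toy_wfdn_block(image):
    """Hypothetical stand-in for a WFDN block.

    Returns (restored image, fingerprint-related noise, unrelated noise).
    """
    restored = 0.5 * image + 0.5 * image.mean()  # crude smoothing toward the mean
    residual = image - restored
    related = 0.5 * residual                     # arbitrary split for illustration
    unrelated = residual - related
    return restored, related, unrelated

def cyclic_refine(image, block, stages=3, w_related=0.5, w_unrelated=0.1):
    """Run the block repeatedly, feeding a weighted sum of its outputs to the next stage."""
    current = image
    restored = image
    for stage in range(stages):
        restored, related, unrelated = block(current)
        if stage < stages - 1:
            # Weighted sum of the restored image and both noise estimates.
            current = restored + w_related * related + w_unrelated * unrelated
    return restored

rng = np.random.default_rng(0)
wet = rng.random((36, 176))
refined = cyclic_refine(wet, toy_wfdn_block)
```

Giving the unrelated noise a smaller weight than the fingerprint-related noise in the feedback is one way to express the goal of suppressing unrelated noise while keeping ridge information in play; the actual weighting is a design choice not specified here.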

3.3. Auto Label Classification

The model uses a new method for assessing the quality of partial fingerprints. The overall system architecture, depicted in Figure 5, consists of a feature extractor and an auto label classification module. The feature extractor extracts the metrics needed for quality assessment from the raw fingerprint images, and the auto label classification module processes them further. This method evaluates the quality of wet fingerprints and provides crucial information for subsequent fingerprint restoration.

Feature extraction is conducted before applying the auto label classification module to assess fingerprint quality. It is essential to extract effective features from the fingerprint images. We selected the following metrics to capture the key characteristics of the fingerprints:

The fingerprint ridge spectrum (FRS) is used to analyze the spectral characteristics of fingerprint ridges, particularly effective for wet fingerprints. It provides key information on ridge frequency and clarity by enhancing ridge features using a Laplacian of Gaussian (LoG) filter followed by a power spectrum analysis.

The ridge continuity metric (RCM) is used to assess the continuity of fingerprint ridges, where breaks often indicate lower quality. This metric helps identify areas of the fingerprint that may require special attention during processing.

The fingerprint contrast index (FCI) is used to measure the standard deviation of the grayscale values in the fingerprint image, where a higher standard deviation indicates greater image contrast, aiding in the recognition of clearer fingerprint features.

Ridge orientation consistency (ROC) is used to measure the consistency of ridge orientations. Higher certainty levels usually indicate better fingerprint quality, which is crucial for accurate fingerprint matching.

Once these features are extracted and compiled into a feature vector, they are used by the auto label classification module to assess the quality level of the fingerprints. This method is used to accurately classify the quality of wet fingerprints and select the appropriate restoration model based on the quality, enhancing the precision and effectiveness of fingerprint restoration techniques.
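Two of the metrics above can be sketched in a few lines of NumPy. FCI follows the text directly (standard deviation of the grayscale values). For ROC, the exact formula is not given, so the sketch assumes a common choice, the coherence of the gradient structure tensor, which is 1 when all gradients share one orientation and near 0 when orientations are random. FRS and RCM are omitted, and the function names are illustrative.

```python
import numpy as np

def fingerprint_contrast_index(img):
    """FCI: standard deviation of the grayscale values."""
    return float(np.std(img.astype(np.float64)))

def ridge_orientation_consistency(img):
    """ROC, assumed here to be the global gradient structure-tensor coherence in [0, 1]."""
    gy, gx = np.gradient(img.astype(np.float64))  # derivatives along rows, then columns
    gxx, gyy, gxy = (gx * gx).sum(), (gy * gy).sum(), (gx * gy).sum()
    denom = gxx + gyy
    if denom == 0:
        return 0.0  # flat image: no orientation information
    return float(np.sqrt((gxx - gyy) ** 2 + 4.0 * gxy ** 2) / denom)

def quality_features(img):
    """Partial feature vector [FCI, ROC] for a quality classifier."""
    return np.array([fingerprint_contrast_index(img),
                     ridge_orientation_consistency(img)])

# Synthetic test images: clean vertical ridges versus pure noise.
x = np.arange(176)
stripes = np.tile(np.sin(2 * np.pi * x / 8.0), (36, 1))
noise_img = np.random.default_rng(0).random((36, 176))

features = quality_features(stripes)
```

On the synthetic stripes the coherence is 1 because every gradient points across the ridges, while the noise image scores close to 0; a real classifier would combine these values with the remaining metrics.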

3.4. Modified SIFT

In the wet fingerprint restorer, we integrated SIFT to preserve key features in wet fingerprints [

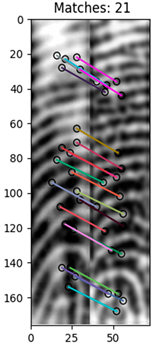

8]. The process begins with feature matching between dry and wet fingerprints using the SIFT algorithm, which identifies and matches key points. These matched key points are converted into a SIFT coordinate map, consisting of 1 and 0, with matched key points marked as 1. This map is added as a new dimension to the model to effectively record and utilize these crucial features.

By embedding the SIFT coordinate map within the clean generator, essential fingerprint features are maintained throughout the denoising process. This approach significantly enhances the model’s ability to match and retain features, leading to improved training and fingerprint recognition accuracy. The inclusion of the SIFT algorithm bolsters the overall performance of the wet fingerprint restoration, ensuring high fidelity in feature retention and noise reduction.
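The conversion from matched key points to a binary coordinate map can be sketched as below. The sketch assumes the matched key points are already available as (x, y) pixel coordinates from some SIFT matcher; the matching step itself is not shown, and the names `sift_coordinate_map` and `add_sift_channel` are hypothetical.

```python
import numpy as np

def sift_coordinate_map(matched_points, shape):
    """Binary map with 1 at each matched key point and 0 elsewhere.

    matched_points: iterable of (x, y) pixel coordinates (assumed input format)
    shape: (height, width) of the fingerprint image
    """
    h, w = shape
    coord_map = np.zeros((h, w), dtype=np.float32)
    for x, y in matched_points:
        col, row = int(round(x)), int(round(y))
        if 0 <= row < h and 0 <= col < w:  # ignore points that fall outside the image
            coord_map[row, col] = 1.0
    return coord_map

def add_sift_channel(image, coord_map):
    """Stack the image and the SIFT map as two channels: (2, H, W)."""
    return np.stack([image.astype(np.float32), coord_map], axis=0)

image = np.zeros((36, 176), dtype=np.float32)
points = [(10.2, 5.7), (175.0, 35.0), (200.0, 3.0)]  # last point lies outside the image
coord_map = sift_coordinate_map(points, image.shape)
model_input = add_sift_channel(image, coord_map)
```

Keeping the map as a separate channel, instead of drawing the points into the image, leaves the grayscale data untouched while still exposing key-point locations to the network.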

3.5. Loss Function

The loss function used in the WFDN model is defined as follows.

3.5.1. Denoising Loss

The denoising loss measures the difference between the original clean fingerprint image and the denoised clean fingerprint image, so that the generated image is as close to the real clean image as possible.

3.5.2. Consistency Loss

The consistency loss measures the difference between the original noisy image and its decomposition into the clean image and noise components. This ensures that the noise decomposition process is accurate.

3.5.3. Identification Loss

The identification loss measures the differences between various generated images and noise components to ensure their consistency in specific aspects. These include the differences between the denoised image and other processed clean images, as well as the differences between various noise components.

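3.5.4. Match Loss

The match loss compares the SIFT value of two pairs: the ground truth with the noisy input, and the ground truth with the restored clean output. A greater SIFT value represents a better result; therefore, if the SIFT value for the ground truth and the restored clean output is greater than that for the ground truth and the noisy input, the match loss becomes smaller.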

3.5.5. Total Loss

The total loss is defined as the weighted sum of the individual loss components. Three weighting coefficients assigned to the loss components are fixed at 0.5, 1, and 0.05. A further weight varies depending on the level and is defined as:

Level 0: 1.75; Level 1: 1.5; Level 2: 1.25; Level 3: 1.0; Level 4: 0.5.
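The weighted sum and the level-dependent weight can be sketched as follows. The coefficient values are the ones listed above, but which loss component each coefficient multiplies is not stated here, so the sketch leaves the pairing to the caller; the loss values in the usage example are placeholders, not measured results.

```python
# Coefficient values as listed in the text; their pairing with loss terms is an assumption.
FIXED_WEIGHTS = (0.5, 1.0, 0.05)
LEVEL_WEIGHTS = {0: 1.75, 1: 1.5, 2: 1.25, 3: 1.0, 4: 0.5}

def level_weight(level: int) -> float:
    """Look up the level-dependent weight (levels 0 to 4)."""
    if level not in LEVEL_WEIGHTS:
        raise ValueError(f"unknown level: {level}")
    return LEVEL_WEIGHTS[level]

def weighted_total(weighted_terms) -> float:
    """Weighted sum over (weight, loss_value) pairs."""
    return sum(weight * value for weight, value in weighted_terms)

# Placeholder loss values; the order of pairing with FIXED_WEIGHTS is hypothetical.
fixed_losses = (0.2, 0.1, 0.4)
level_scaled_loss = 0.3
terms = list(zip(FIXED_WEIGHTS, fixed_losses)) + [(level_weight(0), level_scaled_loss)]
total = weighted_total(terms)
```

Because the level weight decreases from Level 0 to Level 4, whichever term it scales contributes most at Level 0 and least at Level 4.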

4. Results and Discussions

4.1. Dataset

We created the wet–dry fingerprint dataset (WF-Dataset) using a capacitive fingerprint sensor. This dataset included three fingerprint sizes: 176 × 36 (collected from mobile devices), 88 × 88, and 80 × 100 (collected from a laptop). The number of fingerprints for each size is detailed in Table 2. After collecting wet and dry fingerprints, we used a matching tool to pair corresponding fingerprints, which improved the model training by providing aligned data.

To measure recognition accuracy for the small fingerprints used in IoT applications, we evaluated FRRs using 250 tiny partial fingerprints for enrollment and 500 for identification. We used the public dataset FVC2002 DB3 to assess the model’s performance in terms of NFIQ2. Both datasets were collected using capacitive sensors for evaluating fingerprint quality and recognition performance.
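For reference, FRR is the share of genuine attempts that the matcher rejects. The sketch below computes it as a percentage. The usage example assumes that each of the 500 identification fingerprints counts as one genuine attempt, which is an assumption about the protocol; under it, 42 rejections would correspond to an FRR of 8.4%.

```python
def false_rejection_rate(rejected: int, genuine_attempts: int) -> float:
    """FRR in percent: rejected genuine attempts divided by all genuine attempts."""
    if genuine_attempts <= 0:
        raise ValueError("genuine_attempts must be positive")
    if not 0 <= rejected <= genuine_attempts:
        raise ValueError("rejected must lie between 0 and genuine_attempts")
    return 100.0 * rejected / genuine_attempts

# Hypothetical count: 42 of 500 genuine attempts rejected.
frr = false_rejection_rate(42, 500)
```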

4.2. Implementation

We utilized the WF-Dataset, comprising 71,188 wet fingerprint images and 71,188 dry fingerprint images. The model was trained with a batch size of 128 using the Adam optimizer for 50 epochs. The experiments were conducted on an NVIDIA RTX A6000 GPU, supported by an Intel(R) Xeon(R) Silver 4310 CPU @ 2.10GHz, with the entire implementation performed in PyTorch 1.12.1.

4.3. Effect of Training on Recognition

To enhance performance, we incorporated a grading system to classify fingerprint quality, allowing for tailored and effective denoising processes. We designed three different training stages to test the performance of wet fingerprint recognition. These stages represent different depths and complexities of denoising processing. The results of these experiments are shown in Table 3.

In addition to FRR, we scored the NFIQ2 improvement using the FVC2002 DB3 dataset across the same three training stages. The initial NFIQ2 score for this dataset was 27.29. As shown in Table 4, the score exceeded this baseline at every stage: it rose to 30.31 in Stage 1, peaked at 47.41 in Stage 2, and was 45.31 in Stage 3.

The recognition performance improved with each successive training stage, and fingerprint quality improved overall (Table 3 and Table 4). Specifically, the FRR decreased from 30.6% in Stage 1 to 11.8% in Stage 2, and further to 8.4% in Stage 3, while the NFIQ2 score increased substantially in Stage 2. The results indicated that training reduced noise and enhanced the overall fingerprint quality. Multi-stage training significantly improved the model’s effectiveness, particularly in scenarios of wet fingerprint recognition.

4.4. Comparison with State-of-the-Art Models

We conducted a comparative analysis to evaluate the proposed WFDN model against several models: DenseUNet, FPD-M-Net, FingerNet, Residual M-net, XY-Deblur, and CVF-SID. FRR was used as the metric for the small fingerprints commonly found in IoT applications, where noise and reduced feature information pose significant challenges. Only WFDN successfully identified and retained the critical fingerprint features, while the other models failed to extract the necessary details or introduced artifacts (Table 5).

Table 6 demonstrates WFDN’s significant reduction in FRR compared with that of other models, particularly when processing noisy, small-sized fingerprints. Models such as DenseUNet and FingerNet perform well on larger fingerprints but struggle with smaller, noisier inputs, while WFDN consistently delivers superior results. WFDN’s effectiveness stems from its multi-stage restoration process and the integration of SIFT feature maps, which enhance feature retention and noise reduction. The pixel difference map and match points highlighted WFDN’s ability to reduce noise while maintaining ridge patterns, unlike other models that suffer from blurring and loss of important details.

Table 6 compares WFDN with the other state-of-the-art models in terms of FRR. Although the other methods have shown the ability to reduce FRR, an increase in FRR was observed after their restoration of small fingerprints. In contrast, the developed WFDN model significantly reduced FRR for small fingerprints to 8.4%. This demonstrated that WFDN had a considerable advantage in handling small, wet fingerprints by effectively preserving critical features and enhancing recognition accuracy.

5. Conclusions

In this study, we introduced WFDN to address the challenges of wet fingerprint recognition. WFDN integrates two key components: the WFDN block and a multi-layer cyclic architecture. The WFDN block restores fingerprint images while separating noise, and the cyclic architecture refines these outputs through multiple iterations to ensure effective noise reduction. Using the automatic grading system, fingerprints were classified by quality and matched with the appropriate restoration technique. Additionally, the integration of SIFT enhances the retention of key feature points, improving fingerprint detail restoration.

The developed model showed significant improvements, particularly with small, noisy fingerprints. On small fingerprints with a size of 176 × 36 pixels, FRR was reduced from 19.6% to 8.4%. On the FVC2002 DB3 dataset, the NFIQ2 score increased from 27.29 to 47.41 at the optimal training stage. These results confirmed WFDN’s ability to reduce noise and preserve critical fingerprint features, enhancing image quality and recognition accuracy. The multi-stage training suppressed noise and retained features, proving its effectiveness in improving performance across different fingerprint sizes. The combination of SIFT and multi-stage training allowed WFDN to restore and retain accurate feature points, ensuring reliable recognition even in challenging conditions. WFDN provides a robust solution for wet fingerprint restoration and a significant advancement for biometric systems. Its ability to handle diverse fingerprint qualities and sizes makes it an effective tool for fingerprint recognition applications.

Author Contributions

Conceptualization, M.-H.H.; methodology, M.-H.H.; experiment program design, M.-H.H.; data collection, Y.-H.S.; statistical analysis, Y.-H.S.; writing—original draft preparation, Y.-H.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science and Technology Council (NSTC-112/113-2634-F-150-001-MBK; 113-2218-E-150-003).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated and/or analyzed during the current study are not publicly available due to privacy concerns and proprietary reasons but are available from the corresponding author on reasonable request.

Acknowledgments

This research was supported by the National Science and Technology Council.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Adiga, V.S.; Sivaswamy, J. Fpd-m-net: Fingerprint image denoising and inpainting using m-net based convolutional neural networks. In Inpainting and Denoising Challenges; Springer: Cham, Switzerland, 2019; pp. 51–61.

2. Cai, S.; Tian, Y.; Lui, H.; Zeng, H.; Wu, Y.; Chen, G. Dense-unet: A novel multiphoton in vivo cellular image segmentation model based on a convolutional neural network. Quant. Imaging Med. Surg. 2020, 10, 1275.

3. Cunha, N.D.S.; Gomes, H.M.; Batista, L.V. Residual m-net with frequency-domain loss function for latent fingerprint enhancement. In Proceedings of the 2022 35th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Natal, Brazil, 24–27 October 2022; pp. 198–203.

4. Ji, S.W.; Lee, J.; Kim, S.W.; Hong, J.P.; Baek, S.J.; Jung, S.W.; Ko, S.J. Xydeblur: Divide and conquer for single image deblurring. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17421–17430.

5. Li, J.; Feng, J.; Kuo, C.C.J. Deep convolutional neural network for latent fingerprint enhancement. Signal Process. Image Commun. 2018, 60, 52–63.

6. Neshatavar, R.; Yavartanoo, M.; Son, S.; Lee, K.M. Cvf-sid: Cyclic multi-variate function for self-supervised image denoising by disentangling noise from image. arXiv 2022, arXiv:2203.13009.

7. Wong, W.J.; Lai, S.H. Multi-task cnn for restoring corrupted fingerprint images. Pattern Recognit. 2020, 101, 107203.

8. Park, U.; Pankanti, S.; Jain, A.K. Fingerprint verification using sift features. In Proceedings of the Biometric Technology for Human Identification V, Orlando, FL, USA, 17 March 2008; pp. 166–174.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).