1. Introduction

Rain leaves roads and terrain wet, and drivers must adapt to the wet surface to avoid accidents. According to the most recent Metro Manila Accident Reporting and Analysis System (MMARAS) [1], more than 26,000 accidents occurred in the National Capital Region during the wet months of 2020. Over 40% of all accidents took place from June to October. Wet road conditions were the cause of many accidents. Decreased traction on wet roads increases the distance required to stop a vehicle and makes it difficult to maneuver safely. Since braking distances are longer on wet roads, it is necessary to maintain more distance from the vehicle ahead to prevent accidents.

Classifying roads as wet or dry is difficult because current technology performs poorly. In rain, the images captured by a camera are grainy or blurry, resulting in inaccurate results. Furthermore, few datasets are available for evaluating wetness. Therefore, in this study, we developed a device that captures road images and classifies road conditions.

Amidst the continuous progress of technology, different methods have been developed that use artificial intelligence (AI) and machine learning (ML) [2]. Convolutional neural networks (CNNs) are an essential element of computer vision and machine learning applications. In this study, we used the MobileNet algorithm to classify road wetness using CCTV-captured images. Mask region-based CNN (Mask R-CNN) was used to classify road wetness in Refs. [3,4,5,6,7]. In these studies, 12,190 images captured in various weather conditions were collected. A total of 1856 of these images were randomly subsampled and annotated for segmentation model training. However, the segmentation model had two drawbacks: the images had to be annotated manually, and the camera types and models used were inconsistent. In other studies [8,9,10,11,12], road surface friction was estimated using road surface conditions without wetness estimation.

Wet roads are a major concern due to the increasing number of accidents. To address this issue, a system for classifying road wetness is essential. MobileNet and Mask R-CNN were used to determine road surface wetness using water film height measurement. Roads have different characteristics depending on their type, color, and material. Therefore, the wetness of roads can be estimated by processing and classifying these characteristics [13,14,15]. SqueezeNet and ENet can be integrated to accurately classify road wetness, as SqueezeNet detects the features of the asphalt roads, which are then segmented semantically by ENet.

The system developed in this study used a Raspberry Pi 4 to classify road conditions (wet or dry) using SqueezeNet and ENet. The system captured images with Raspberry Pi camera module 3 and processed them using SqueezeNet and ENet to categorize road wetness. The accuracy of the system was estimated with a confusion matrix built from the gathered and created datasets. The system helps drivers avoid accidents on the road and informs them of road conditions. The system can also be used to analyze and classify road conditions for different purposes. The system can be extended to classify the types of materials, skid resistance, or roads. The system and models serve as a basis for research on image processing using AI. The dataset created in this study can also be used in future research with the addition of images containing potholes, cracks, and road markings.

2. Methodology

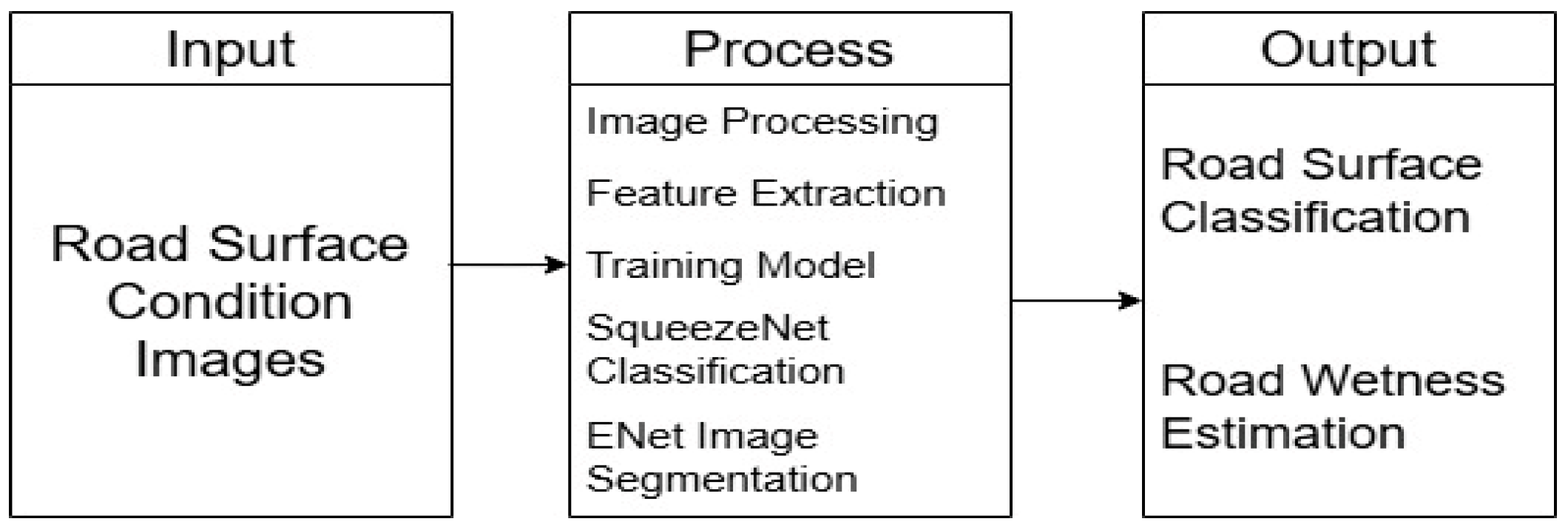

Figure 1 shows the research framework of this study. We gathered 1200 images of the road surface. The captured images were classified by SqueezeNet into wet and dry surfaces. In each fire module of SqueezeNet, the squeeze layer applied 1 × 1 filters to reduce the number of channels. The expand layer then filtered the squeezed output using 1 × 1 and 3 × 3 filters, increasing the channel depth again. The extracted features were fed to ENet for semantic segmentation. ENet segmented images at the pixel level, isolating the road regions from the surrounding areas. PyTorch 2.2.1 processed the data and displayed the outputs. A confusion matrix was created to evaluate the accuracy of the system’s classification. The result of the classification was displayed on an OLED display. The images were collected by the Raspberry Pi camera module. The deep learning models classified road surface and wetness successfully.
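To make the squeeze-and-expand idea concrete, the following minimal sketch counts the parameters of one fire module and compares them with a plain 3 × 3 convolution that produces the same number of output channels. The function names and the channel sizes (96 inputs, 16 squeeze filters, 64 + 64 expand filters) are illustrative assumptions, not values reported for this system.

```python
def fire_module_params(in_channels, squeeze_1x1, expand_1x1, expand_3x3):
    """Count weights and biases in one fire module (squeeze + expand)."""
    # Squeeze layer: 1x1 convolutions that shrink the channel dimension.
    squeeze = in_channels * squeeze_1x1 * 1 * 1 + squeeze_1x1
    # Expand layer: parallel 1x1 and 3x3 convolutions on the squeezed output.
    expand_small = squeeze_1x1 * expand_1x1 * 1 * 1 + expand_1x1
    expand_large = squeeze_1x1 * expand_3x3 * 3 * 3 + expand_3x3
    return squeeze + expand_small + expand_large


def plain_conv3x3_params(in_channels, out_channels):
    """Count weights and biases in a single ordinary 3x3 convolution."""
    return in_channels * out_channels * 3 * 3 + out_channels


# Hypothetical channel sizes chosen only for illustration.
fire = fire_module_params(96, squeeze_1x1=16, expand_1x1=64, expand_3x3=64)
plain = plain_conv3x3_params(96, 64 + 64)
print(f"fire module: {fire} parameters, plain 3x3 conv: {plain} parameters")
```

Under these assumed sizes, the fire module needs roughly a ninth of the parameters of the plain convolution, which is why this design suits a small device such as a Raspberry Pi.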

2.1. Hardware Development

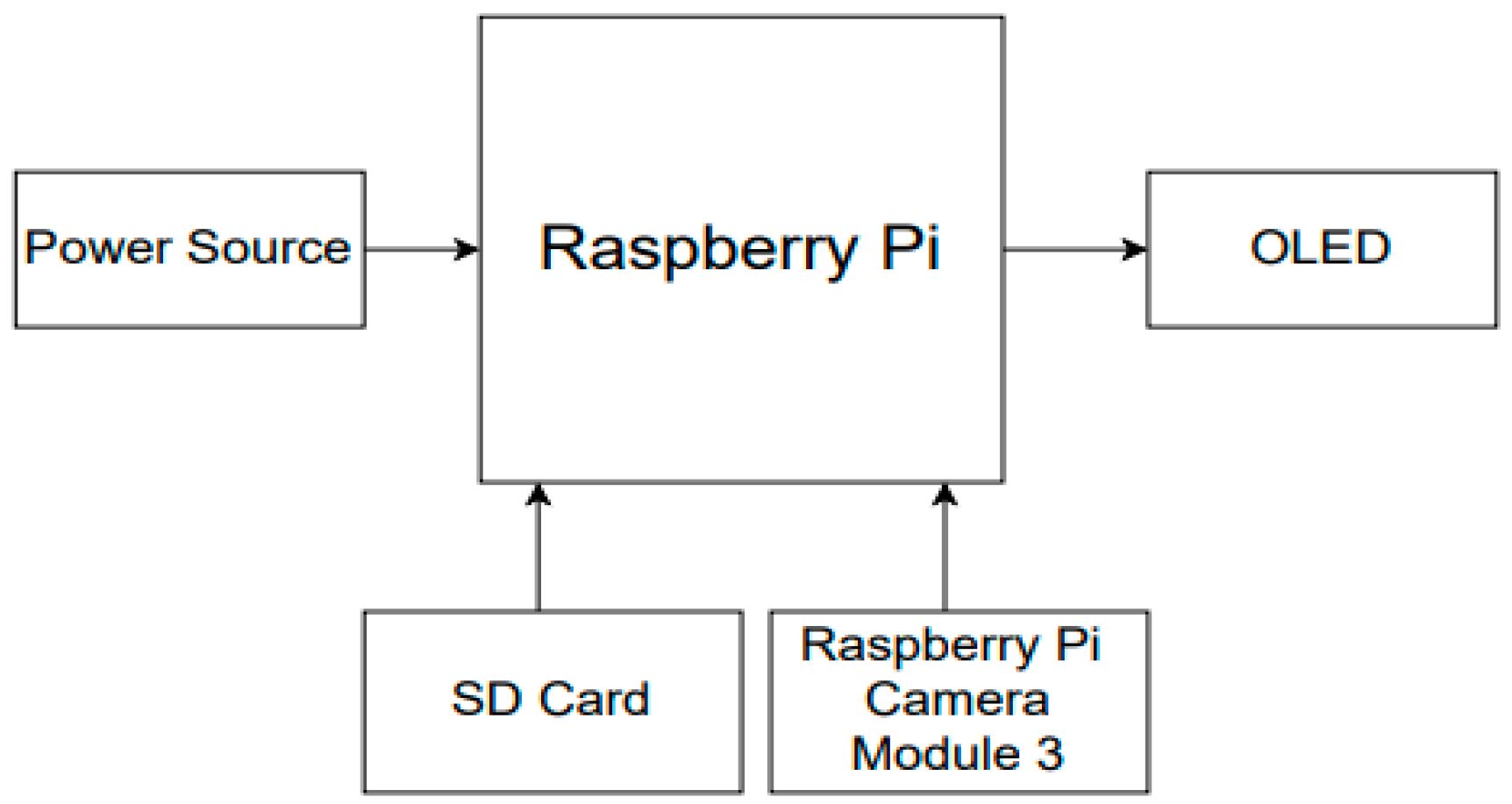

Figure 2 shows the hardware of the developed system. The system consisted of a Raspberry Pi 4, a Raspberry Pi camera module 3, an SD card, an OLED display, and a power source. As the system’s main processing unit, the Raspberry Pi 4 processed the inputs and outputs of the system. The Raspberry Pi camera module 3 was connected to the Raspberry Pi 4 for data collection. The camera module also captured images for classification. The SD card stored the operating algorithms, and the OLED display showed the results of wetness classification and estimation.

2.2. Software

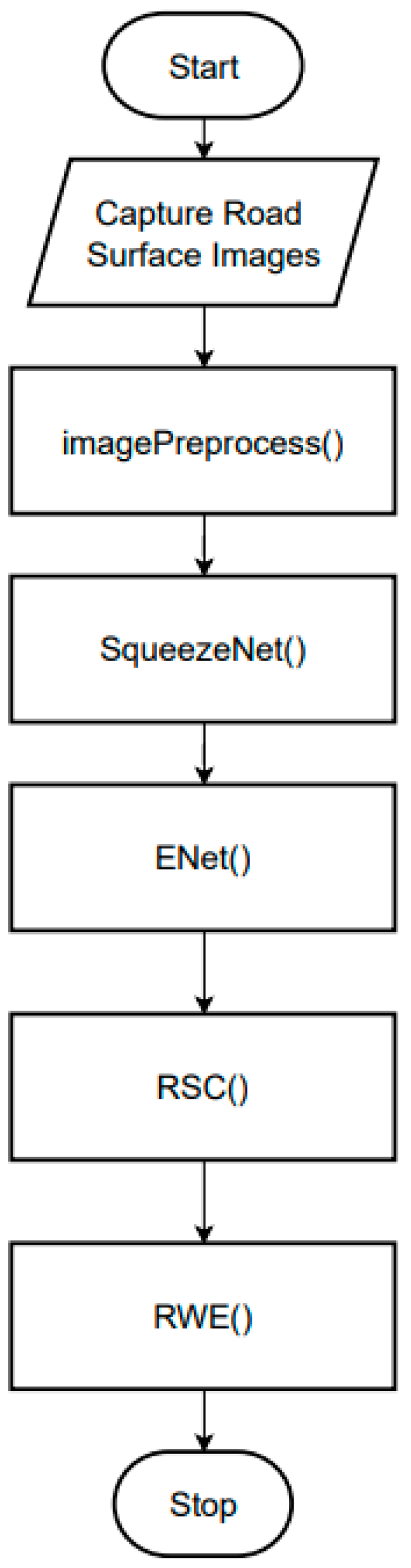

Figure 3 shows the software development process of the system. The process consisted of five modules: imagePreprocess(), SqueezeNet(), ENet(), road surface classification RSC(), and road wetness estimation RWE(). Each module included important features for identifying the type of road surface and determining wetness. The captured images were stored on the SD card to compose the database of the system. The images were resized to a fixed input size. The SqueezeNet model normalized the pixel values of each image to a maximum of 1 to keep the images consistent. The normalized images were classified by the SqueezeNet model to determine wetness. The extracted features were fed into ENet for semantic segmentation. The ENet model generated segmentation maps at the pixel level to effectively distinguish the road from the surrounding background. The deep learning models were trained to classify road surfaces and estimate road wetness. The road surface classification (RSC) and road wetness estimation (RWE) modules then processed the classified images. The images were then categorized.
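The resizing and normalization steps described above can be sketched in a few lines. This is only an illustration under stated assumptions: the helper names `resize_nearest`, `normalize`, and `image_preprocess` are hypothetical, the image is a grayscale grid of 8-bit values, nearest-neighbor sampling stands in for whatever resizing method the system used, and the 4 × 4 target size is a placeholder because the actual input size is not stated.

```python
def resize_nearest(image, out_h, out_w):
    """Resize a 2D grid of pixels with nearest-neighbor sampling."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def normalize(image, max_value=255):
    """Scale pixel values so that the largest possible value becomes 1.0."""
    return [[pixel / max_value for pixel in row] for row in image]


def image_preprocess(image, size=(4, 4)):
    """Hypothetical stand-in for imagePreprocess(): resize, then normalize."""
    out_h, out_w = size
    return normalize(resize_nearest(image, out_h, out_w))


# A tiny 2 x 2 grayscale image with 8-bit pixel values (assumed format).
sample = [[0, 255], [51, 102]]
for row in image_preprocess(sample):
    print(row)
```

Every image leaves this step with the same shape and with values between 0 and 1, so the classifier always receives inputs in a consistent range.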

SqueezeNet detected features related to road surface conditions. Color, texture, and reflectivity were identified and saved for the next function. ENet estimated road wetness. The RSC module output road surface classification results after it detected road surface conditions based on the features. The RWE module managed the road wetness estimation. The wetness in the identified area was indicated.

2.3. Experimental Setup

Figure 4 shows the experimental setup of the system. The system was trained to classify road surface conditions and wetness using datasets. The camera module acquired the images of the road at 30 m. Wetness was estimated in percentage and was classified into dry, wet, damp, or flooded statuses. The dry status showed a wetness of 0%.
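One plausible way to express wetness as a percentage is the share of road pixels that a segmentation map marks as wet. The sketch below assumes this reading; the label ids, the function name `wetness_percentage`, and the toy mask are hypothetical and do not come from the system itself.

```python
# Hypothetical label ids for a pixel-level segmentation map.
ROAD_DRY, ROAD_WET, BACKGROUND = 0, 1, 2


def wetness_percentage(mask):
    """Return the percentage of road pixels labeled wet, or None if no road."""
    road_pixels = sum(
        1 for row in mask for label in row if label in (ROAD_DRY, ROAD_WET)
    )
    wet_pixels = sum(1 for row in mask for label in row if label == ROAD_WET)
    if road_pixels == 0:
        return None  # No road region was found in the image.
    return 100.0 * wet_pixels / road_pixels


# Toy 3 x 4 mask: top row is background, the rest is road.
toy_mask = [
    [2, 2, 2, 2],
    [0, 1, 1, 0],
    [0, 1, 1, 1],
]
print(wetness_percentage(toy_mask))
```

With this definition, a mask whose road pixels are all dry gives 0%, which agrees with the dry status described above. The thresholds separating the other statuses are not given in the text, so they are left out of the sketch.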

3. Results and Discussion

3.1. Data Gathering

After classifying the road surface condition as wet or dry, the system estimated wetness and displayed the result with the category. We gathered 1200 images from the Internet, with 300 images in each of the dry, damp, wet, and very wet categories. The road surface conditions were wet or dry. We constructed a dataset with images of roads from reliable sources (Table 1).

3.2. Statistical Analysis

The confusion matrix was used to estimate the accuracy of the system (Table 2). The SqueezeNet model accurately predicted 9 out of 10 images for the dry category and 9 out of 10 for the wet category. The ENet model accurately predicted 5 out of 7 for the damp category, 7 out of 7 for the wet category, and 7 out of 7 for the very wet category. A total of 21 test data points were used for the ENet model (Table 3).
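As a rough illustration of how such a confusion matrix is tallied, the sketch below rebuilds the two-class SqueezeNet case from the counts quoted above. It assumes that every misclassified image was assigned to the other of the two classes. The function name and the label lists are hypothetical and stand in for the actual test records.

```python
from collections import Counter


def confusion_matrix(actual, predicted, labels):
    """Rows are actual classes, columns are predicted classes."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


labels = ["dry", "wet"]
# 10 dry and 10 wet test images, with 9 of each predicted correctly.
actual = ["dry"] * 10 + ["wet"] * 10
predicted = ["dry"] * 9 + ["wet"] * 1 + ["wet"] * 9 + ["dry"] * 1

matrix = confusion_matrix(actual, predicted, labels)
for label, row in zip(labels, matrix):
    print(label, row)
```

The diagonal entries hold the correct predictions, and the off-diagonal entries hold the errors.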

The true positive values represent the correctly predicted positive outputs. The remaining values are either false positives or false negatives. Upon completion of the confusion matrix, accuracy was determined using (1) and (2).

Accuracy indicates how often the system predicts the outcome correctly. True positive (TP) is the number of predicted positive values matching the actual positive values, and true negative (TN) is the number of predicted negative values matching the actual negative values. False positive (FP) is the number of actual negatives that the algorithm incorrectly predicts as positives, and false negative (FN) is the number of actual positives that it incorrectly predicts as negatives. A summary of results for road wetness estimation (RWE) is shown in Table 4. The ENet model showed an accuracy of 71.43% for the damp category and 100% for the wet and very wet categories. The overall accuracy was 90.48%.
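For reference, the textbook definition of accuracy in terms of these four counts is given in the first line below. It is assumed, not confirmed, to match the form used in this study. The second line is the per-category ratio that reproduces the reported 71.43% for the damp category (5 of 7 images).

```latex
\begin{align}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Accuracy}_{\text{category}} &= \frac{\text{correct predictions in the category}}{\text{test images in the category}} \times 100\%
\end{align}
```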

The results for road surface condition (RSC) are shown in Table 5. The SqueezeNet model showed an accuracy rate of 90% for both the dry and wet categories. The overall accuracy was 90%.
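The reported percentages can be checked directly from the counts of correct predictions given in the text. The short script below is a sketch of that arithmetic; the function and variable names are illustrative.

```python
def accuracy_percent(correct, total):
    """Accuracy as a percentage, rounded to two decimal places."""
    return round(100.0 * correct / total, 2)


def summarize(counts):
    """Return per-category accuracies and the overall accuracy."""
    per_category = {
        name: accuracy_percent(correct, total)
        for name, (correct, total) in counts.items()
    }
    total_correct = sum(correct for correct, _ in counts.values())
    total_images = sum(total for _, total in counts.values())
    return per_category, accuracy_percent(total_correct, total_images)


# (correct, total) pairs taken from the results reported in the text.
rwe_counts = {"damp": (5, 7), "wet": (7, 7), "very wet": (7, 7)}
rsc_counts = {"dry": (9, 10), "wet": (9, 10)}

rwe_per_category, rwe_overall = summarize(rwe_counts)
rsc_per_category, rsc_overall = summarize(rsc_counts)
print("RWE:", rwe_per_category, "overall:", rwe_overall)
print("RSC:", rsc_per_category, "overall:", rsc_overall)
```

The overall figures follow from pooling the categories: 19 correct out of 21 images for ENet and 18 correct out of 20 for SqueezeNet.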

4. Conclusions and Recommendation

We developed a system for road surface classification and wetness estimation using deep learning models, specifically the SqueezeNet and ENet models. After the implementation and testing of the system with Raspberry Pi 4 and camera module 3, the system accurately determined the road surface condition (dry or wet) and road wetness (damp, wet, or very wet). The SqueezeNet model achieved 90% accuracy, while the ENet model showed an accuracy of 90.48%. For the advancement of the system, the SqueezeNet and ENet models need to be further trained. More data must be added to the dataset, and the quality of the data needs to be improved. The data for training must be preprocessed to correctly classify the road conditions. Real-time inference of the SqueezeNet and ENet models helps the system determine the road surface condition and road wetness more effectively.

Author Contributions

Conceptualization, M.S.C.C. and L.A.O.; methodology, L.A.O.; software, M.S.C.C.; validation, M.S.C.C., L.A.O. and A.N.Y.; formal analysis, M.S.C.C.; investigation, L.A.O.; resources, L.A.O.; data curation, M.S.C.C.; writing—original draft preparation, M.S.C.C.; writing—review and editing, A.N.Y.; visualization, A.N.Y.; supervision, A.N.Y.; project administration, A.N.Y.; funding acquisition, L.A.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Datasets were downloaded from open access websites. Datasets newly created by the authors may be requested by sending an email to any of the authors.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Zoleta, V. Road Safety Tips to Remember When Driving This Rainy Season; Moneymax: Makati, Philippines, 2022.

2. Cordes, K.; Reinders, C.; Hindricks, P.; Lammers, J.; Rosenhahn, B.; Broszio, H. RoadSaW: A Large-Scale Dataset for Camera-Based Road Surface and Wetness Estimation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, New Orleans, LA, USA, 19–20 June 2022; pp. 4439–4448.

3. Morris, C.; Yang, J.J. A machine learning model pipeline for detecting wet pavement condition from live scenes of traffic cameras. Mach. Learn. Appl. 2021, 5, 100070.

4. Roychowdhury, S.; Zhao, M.; Wallin, A.; Ohlsson, N.; Jonasson, M. Machine Learning Models for Road Surface and Friction Estimation using Front-Camera Images. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018.

5. Du, Y.; Liu, C.; Song, Y.; Li, Y.; Shen, Y. Rapid Estimation of Road Friction for Anti-Skid Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2020, 21, 2461–2470.

6. Yumang, A.N.; Juana, M.C.M.S.; Diloy, R.L.C. Detection and Classification of Defective Fresh Excelsa Beans Using Mask R-CNN Algorithm. In Proceedings of the 2022 14th International Conference on Computer and Automation Engineering, ICCAE, Brisbane, Australia, 25–27 March 2022; pp. 97–102.

7. Yumang, A.; Magwili, G.; Montoya, S.K.C.; Zaldarriaga, C.J.G. Determination of Shelled Corn Damages using Colored Image Edge Detection with Convolutional Neural Network. In Proceedings of the 2020 IEEE 12th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management, HNICEM, Manila, Philippines, 3–7 December 2020.

8. Manlises, C.O.; Santos, J.B.; Adviento, P.A.; Padilla, D.A. Expiry Date Character Recognition on Canned Goods Using Convolutional Neural Network VGG16 Architecture. In Proceedings of the 2023 15th International Conference on Computer and Automation Engineering (ICCAE), Sydney, Australia, 3–5 March 2023; pp. 394–399.

9. Sio, G.A.; Guantero, D.; Villaverde, J. Plastic Waste Detection on Rivers Using YOLOv5 Algorithm. In Proceedings of the 2022 13th International Conference on Computing Communication and Networking Technologies, ICCCNT, Kharagpur, India, 3–5 October 2022.

10. Yumang, A.N.; Banguilan, D.E.S.; Veneracion, C.K.S. Raspberry PI based Food Recognition for Visually Impaired using YOLO Algorithm. In Proceedings of the 2021 5th International Conference on Communication and Information Systems, ICCIS, Chongqing, China, 15–17 October 2021; pp. 165–169.

11. Al Gallenero, J.; Villaverde, J. Identification of Durian Leaf Disease Using Convolutional Neural Network. In Proceedings of the 2023 15th International Conference on Computer and Automation Engineering, ICCAE, Sydney, Australia, 3–5 March 2023; pp. 172–177.

12. Buenconsejo, L.T.; Linsangan, N.B. Classification of Healthy and Unhealthy Abaca leaf using a Convolutional Neural Network (CNN). In Proceedings of the 2021 IEEE 13th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management, HNICEM, Manila, Philippines, 28–30 November 2021.

13. Tenorio, A.J.F.; Desiderio, J.M.H.; Manlises, C.O. Classification of Rincon Romaine Lettuce Using convolutional neural networks (CNN). In Proceedings of the 2022 IEEE 14th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management, HNICEM, Kota Kinabalu, Malaysia, 13–15 September 2022.

14. Cruz, R.D.; Guevarra, S.; Villaverde, J.F. Leaf Classification of Costus Plant Species Using Convolutional Neural Network. In Proceedings of the 2023 15th International Conference on Computer and Automation Engineering, ICCAE, Sydney, Australia, 3–5 March 2023; pp. 309–313.

15. Pagarigan, M.A.L.; Reyes, J.R.F.; Padilla, D.A. Detection of Face Mask Wearing Conditions with Lightweight CNN Models on Raspberry Pi 4 and Jetson Nano. In Proceedings of the 2022 7th International Conference on Image, Vision and Computing, ICIVC, Xi’an, China, 26–28 July 2022; pp. 269–275.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).