1. Introduction

Art has always been influenced by technological advances, and machine learning represents one of the most groundbreaking tools for artists and technologists alike. With techniques such as Generative Adversarial Networks (GANs) and neural style transfer, machine learning has provided the ability to emulate, evolve, and even originate new art styles that challenge traditional norms of creativity [1].

Machine learning has introduced new dimensions to the world of art, pushing the boundaries of creativity through algorithmic methods [2]. We investigate how machine learning, particularly deep learning techniques, is used to generate evolving art styles, examining both its technical aspects and the broader implications for creativity and art. We also explore the performance of different models, compare traditional and machine-generated art, and provide visual insights into the transformation of styles. Additionally, diagrams and graphs are provided to illustrate algorithmic processes and performance comparisons.

This study aims to review the use of machine learning models in evolving art styles and to explore the technological aspects of these models and their implications for creative processes. Specifically, we focus on the contribution of algorithmic creativity, that is, art generated by machines that learn, adapt, and produce novel styles.

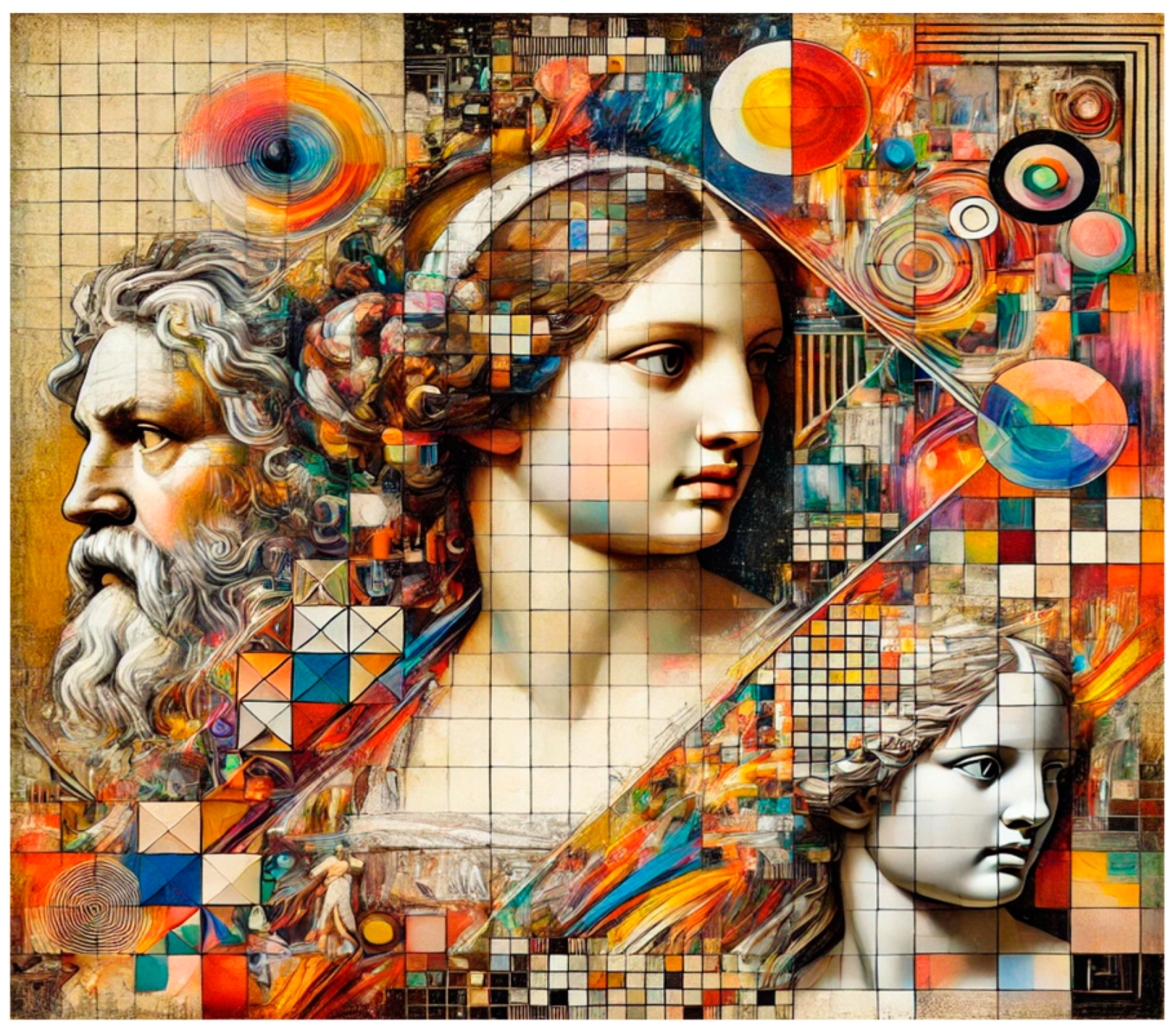

Figure 1 demonstrates a blend of classical Renaissance-style figures and modern abstract elements, suggesting an algorithmic creative process.

2. Literature Review

The concept of using computers for creative purposes began in the 1960s when early pioneers started using computers to generate visual patterns and simple forms of art. Harold Cohen, an influential figure in this space, developed AARON, one of the first AI programs capable of creating abstract artworks autonomously. AARON used a rule-based approach to produce art, marking an important step towards algorithmic art.

During this period, the focus was primarily on basic geometric compositions, often in black and white, with simple shapes and line patterns that were visually intriguing but far from the complex, dynamic art generated by modern algorithms. By the 1990s, advancements in neural networks paved the way for more sophisticated forms of computer-generated art. Artificial neural networks (ANNs) provided a means for computers to learn from data, a concept that would later be instrumental in artistic applications.

However, the breakthrough came in the 2010s with the introduction of convolutional neural networks (CNNs) and deep learning techniques [3]. These advances allowed computers to understand intricate visual elements and patterns, enabling the development of complex art pieces that reflected a combination of content and style. The advent of neural style transfer (NST), introduced by Gatys et al. in 2015 [2], marked a significant milestone in machine learning’s impact on art. NST uses pre-trained CNNs to blend the content of one image with the style of another, generating a third image that preserves the content of the first while adopting the stylistic elements of the second.

The method minimizes a combined loss function that accounts for both content similarity and style similarity. Content loss measures the difference between the feature representations of the generated image and the content image, while style loss compares the Gram matrices of the feature maps of the generated image and the style image.
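To make the style term concrete, a Gram matrix collects the correlations between feature channels at a given layer. The notation below is an assumption introduced for illustration ($F_i$ for the feature map at layer $i$, reshaped to $C_i$ channels by $N_i$ spatial positions) and follows the common presentation of NST rather than anything specific to this review.

$$G_i = F_i F_i^{\top}, \qquad (G_i)_{jk} = \sum_{p=1}^{N_i} (F_i)_{jp}\,(F_i)_{kp}$$

Because the sum runs over all spatial positions $p$, $G_i$ records which features co-occur but not where they occur, which is why it tends to capture texture and style rather than layout.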

NST has enabled artists to combine classical and modern styles, experiment with new aesthetics, and generate unique interpretations of well-known artworks. The technique quickly gained popularity because it is intuitive: anyone can create art by selecting a photo and an artwork as inputs. NST has thus made algorithmic art more accessible to non-experts, democratizing the creative process.

Another breakthrough in the intersection of machine learning and art came in 2014 when Ian Goodfellow and colleagues [1] introduced GANs. GANs consist of two models, a generator and a discriminator, that work against each other to create realistic images. The generator tries to produce images that mimic real art, while the discriminator tries to identify whether the images are real or generated. GANs have been instrumental in creating diverse styles of art. For example, StyleGAN and ArtGAN have allowed for the generation of portraits, landscapes, and abstract art that blend elements from various artistic movements. GANs are particularly powerful because they generate entirely new images rather than merely transforming existing ones [4]. This capability has made GANs a popular choice for generating novel and imaginative artworks that challenge conventional artistic norms.

GANs have also inspired artists to collaborate with algorithms in co-creating artworks that merge human creativity with machine-generated features. Such collaborations raise questions about the nature of creativity and who should be considered the author of the final piece.

DeepDream, developed by Google in 2015, is another notable technique that uses CNNs to enhance features found in images [3]. By applying gradient ascent to amplify patterns, DeepDream produces visually rich, dream-like imagery. This approach highlights the inner workings of neural networks by exaggerating the features that the model perceives, resulting in highly surreal visuals. DeepDream was among the first widely used tools to give users insight into the features neural networks learn during training. It opened up opportunities for artists to visualize and creatively exploit the hallucinations of deep learning models.

3. Methodology

3.1. Machine Learning Models in Artistic Creation

3.1.1. NST

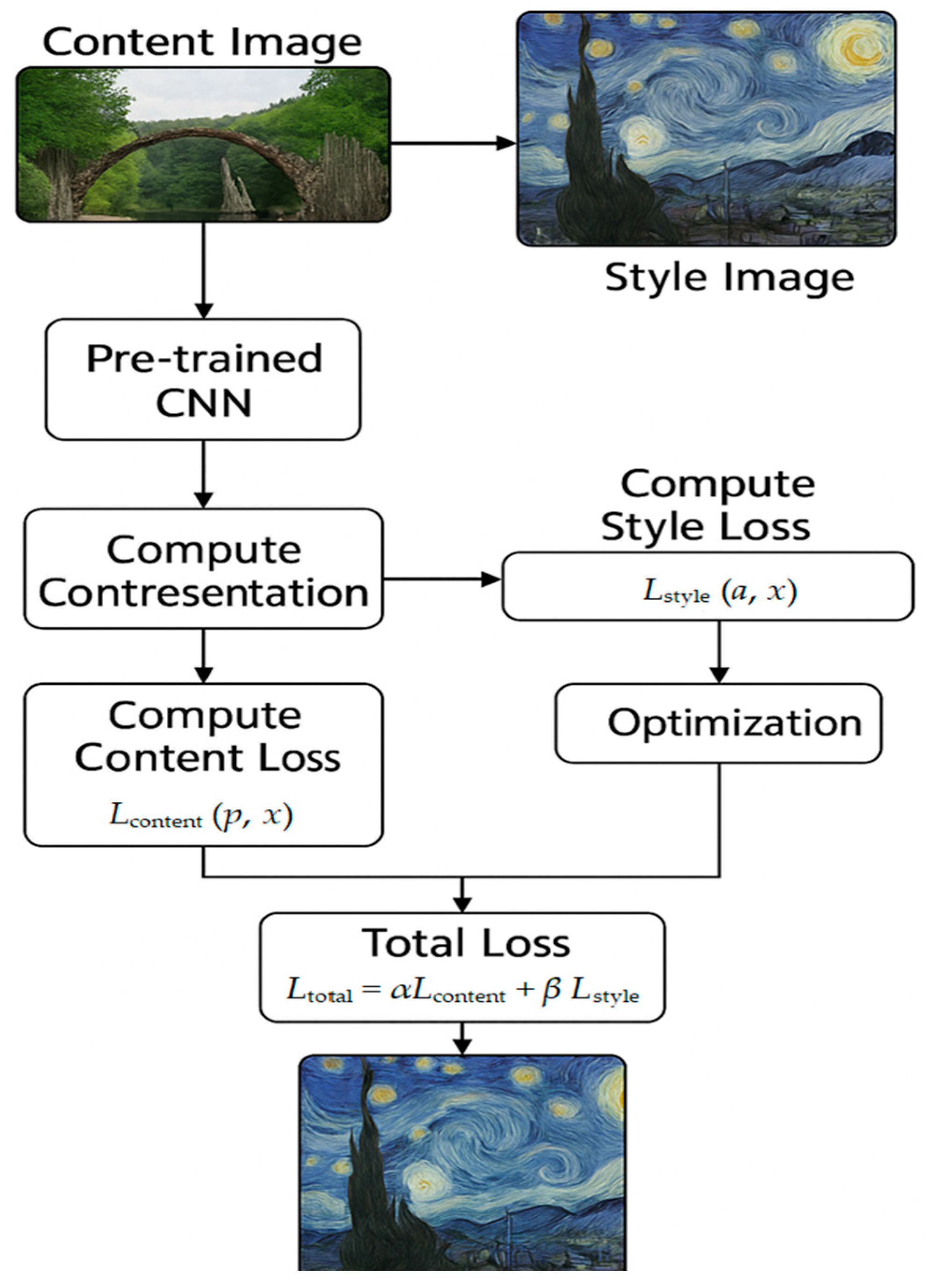

NST uses CNNs to combine the content of one image with the style of another, creating a novel piece of art. The method takes a content image (often a photo) and a style image (typically a famous artwork) and produces an output that blends the two. The goal of NST is to minimize a loss function composed of two components: content loss ($L_{content}$) and style loss ($L_{style}$). These losses are minimized through backpropagation. Content loss measures the difference between the feature representations of the content image and the generated image. In the standard formulation of Gatys et al. [2], it is given by

$$L_{content} = \frac{1}{2} \left\lVert F_i^{gen} - F_i^{c} \right\rVert_2^2$$

where $F_i^{gen}$ and $F_i^{c}$ are the feature representations of the generated image and the content image at layer $i$, respectively.

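Style loss measures the similarity between the style of the generated image and the style image by comparing the Gram matrices of their feature maps. Up to per-layer normalization constants, it is given by

$$L_{style} = \sum_i \left\lVert G_i^{gen} - G_i^{s} \right\rVert_F^2$$

where $G_i^{gen}$ and $G_i^{s}$ are the Gram matrices of the generated and style images at layer $i$.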

The total loss ($L_{total}$) is given by

$$L_{total} = \alpha L_{content} + \beta L_{style}$$

where $\alpha$ and $\beta$ are weights that control the importance of content and style, respectively.
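The following is a minimal NumPy sketch of how the three NST loss terms fit together. It assumes the unnormalized squared-error forms of the content and style losses; the random arrays stand in for CNN activations, and the layer shapes and the default values of `alpha` and `beta` are hypothetical choices for illustration, not values taken from this study.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel correlations of a (channels, positions) feature map."""
    return features @ features.T

def content_loss(gen_feats, content_feats):
    """Half the summed squared difference between two feature maps."""
    return 0.5 * np.sum((gen_feats - content_feats) ** 2)

def style_loss(gen_feats_per_layer, style_feats_per_layer):
    """Sum over layers of the squared Frobenius distance between Gram matrices."""
    return sum(
        np.sum((gram_matrix(g) - gram_matrix(s)) ** 2)
        for g, s in zip(gen_feats_per_layer, style_feats_per_layer)
    )

def total_loss(content_term, style_term, alpha=1.0, beta=1e3):
    """Weighted sum of the two terms; alpha and beta here are illustrative defaults."""
    return alpha * content_term + beta * style_term

# Random arrays stand in for CNN activations (hypothetical shapes).
rng = np.random.default_rng(0)
content_feats = rng.normal(size=(8, 16))
style_feats = [rng.normal(size=(8, 16)), rng.normal(size=(4, 64))]
gen_feats = [content_feats.copy(), rng.normal(size=(4, 64))]

l_content = content_loss(gen_feats[0], content_feats)  # zero: features match the content image
l_style = style_loss(gen_feats, style_feats)            # positive: Gram matrices differ
l_total = total_loss(l_content, l_style)
```

In an actual NST run the generated image itself is the variable being optimized, and the feature maps are recomputed by the pre-trained CNN after every update; raising `beta` relative to `alpha` pushes the result towards the style image.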

Figure 2 describes the transformation process, showing the content image, the style image, and the generated artwork that combines both elements, with arrows indicating the flow of the process.

3.1.2. GANs

GANs were introduced by Ian Goodfellow in 2014 [1] and consist of two neural networks, a generator and a discriminator, which are trained adversarially. GANs have been instrumental in the generation of new art styles by learning from existing datasets of artworks.

The generator creates new images based on random noise, while the discriminator assesses whether the images are real or fake. The generator is trained to produce images that are indistinguishable from real artworks. GANs are well-known for their ability to create highly realistic and imaginative artworks as shown in Figure 3.

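The training process of GANs is formulated as a min-max optimization problem:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]$$

where $G$ represents the generator, which creates new images from random noise $z$, and $D$ represents the discriminator, which outputs the probability that a given image is real. The generator attempts to minimize $\log(1 - D(G(z)))$, while the discriminator tries to maximize the full objective by assigning high probability to real images $x$ and low probability to generated ones.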
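As a rough numerical illustration of the adversarial objective, the sketch below evaluates the standard GAN value function, $V(D, G) = \mathbb{E}[\log D(x)] + \mathbb{E}[\log(1 - D(G(z)))]$, on a few hand-picked discriminator outputs. The probabilities are hypothetical and do not come from a trained model; the function names are likewise introduced only for this example.

```python
import numpy as np

def value_function(d_real, d_fake):
    """Monte Carlo estimate of V(D, G) from discriminator outputs in (0, 1)."""
    return np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake))

def generator_loss(d_fake):
    """The term the generator minimizes: the mean of log(1 - D(G(z)))."""
    return np.mean(np.log(1.0 - d_fake))

# Hypothetical discriminator outputs, not taken from a trained model.
d_real = np.array([0.9, 0.8, 0.95])        # D(x) on real artworks
d_fake_poor = np.array([0.1, 0.2, 0.05])   # D(G(z)) when fakes are easy to spot
d_fake_good = np.array([0.5, 0.6, 0.45])   # D(G(z)) when fakes are more convincing

v_poor = value_function(d_real, d_fake_poor)
v_good = value_function(d_real, d_fake_good)

# When the discriminator can do no better than guessing (0.5 everywhere),
# the value function equals -log(4).
v_equilibrium = value_function(np.full(4, 0.5), np.full(4, 0.5))
```

Here `v_good` is lower than `v_poor`: as the generator's images become more convincing, the quantity the discriminator is trying to maximize falls, which is the tension the min-max formulation expresses.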

3.1.3. DeepDream and Algorithmic Enhancement

DeepDream, developed by Google, enhances and overinterprets images using convolutional neural networks, creating dream-like visuals by accentuating features detected by the model [3]. The model applies feature visualization techniques to enhance edges, patterns, and colors, producing images that often resemble psychedelic versions of the original.
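DeepDream's amplification step is usually described as gradient ascent on the input image: the image is nudged in the direction that increases the activations of a chosen layer. The toy sketch below illustrates that idea under a strong simplifying assumption, namely that a single random linear map (`filters`) stands in for a CNN layer; the names, sizes, and step size are hypothetical.

```python
import numpy as np

def activation_energy(image, filters):
    """Objective being increased: half the mean squared activation of the layer."""
    return 0.5 * np.mean((filters @ image) ** 2)

def dream_step(image, filters, step_size=0.1):
    """One gradient-ascent update of the image with respect to activation_energy."""
    acts = filters @ image
    grad = filters.T @ acts / acts.size   # gradient of activation_energy w.r.t. image
    grad /= np.abs(grad).mean() + 1e-8    # normalize so the step size is scale-free
    return image + step_size * grad

rng = np.random.default_rng(0)
filters = rng.normal(size=(16, 64))  # toy linear "layer": 16 features over a 64-pixel image
image = rng.normal(size=64)

energies = [activation_energy(image, filters)]
for _ in range(20):
    image = dream_step(image, filters)
    energies.append(activation_energy(image, filters))
```

Each step increases the layer's response to the image, so whatever patterns the layer already detects become more pronounced. With a real CNN the gradient would be obtained by backpropagation through the network rather than from a closed-form expression.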

4. Performance Comparisons: Models and Art Style Evolution

To evaluate the impact and quality of different machine learning approaches on art creation, we compare models including NST, GANs, and DeepDream. The performance is assessed based on three key parameters: quality of output, computation time, and creative adaptability.

4.1. Quality of Output

NST tends to produce coherent images that effectively blend content and style, making it appropriate for generating a specific artistic interpretation of a given image. GANs, in contrast, have the advantage of creating entirely new compositions, often yielding more imaginative and visually appealing outputs.

Figure 4 shows the quality score comparison of different machine learning models in art generation. This graph compares the quality scores of NST, GANs, and DeepDream on a scale of 1 to 10, highlighting their relative strengths in generating artistic outputs.

4.2. Computation Time

NST is generally faster than GANs to put into use because it relies on a pre-trained CNN and requires no training of its own; only the generated image is optimized. GANs are more computationally intensive to train but have shown better quality in generating diverse and unique outputs.

4.3. Creative Adaptability

GANs exhibit high adaptability, capable of learning from diverse datasets and producing novel styles that do not directly replicate the training examples. DeepDream provides limited adaptability but is highly effective at generating enhanced, surreal visuals from existing content.

4.4. Algorithmic Creativity: Impact on Artistic Practices

4.4.1. Expanding Definition of Art

The use of machine learning has sparked discussions about what qualifies as art and who (or what) should be considered the “creator”. Algorithmic art generated by models such as GANs challenges traditional notions of authorship, as the creative process is shared between the human and the machine as shown in Figure 5.

4.4.2. Collaboration Between Artists and Algorithms

Artists have begun collaborating with algorithms to produce artworks that neither humans nor machines could create independently. Machine learning models provide a new palette for artists, enabling them to experiment with styles that are beyond their usual capabilities [4].

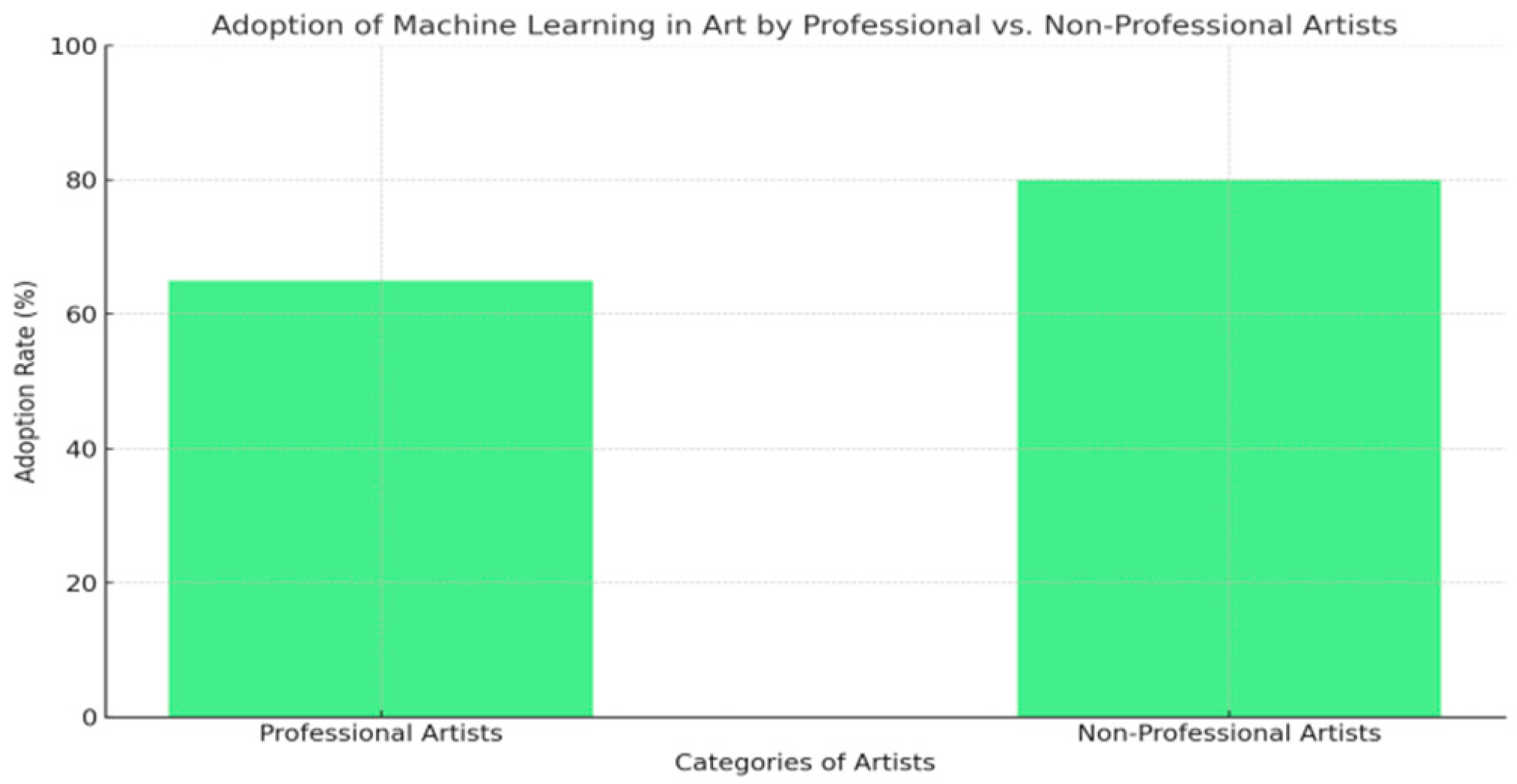

4.4.3. Democratization of Art Creation

Machine learning models have made art creation accessible to a wider audience, allowing individuals without formal training in painting or drawing to create complex artworks as shown in Figure 6. Tools including DeepArt and Runway ML offer interfaces that allow users to generate artworks simply by uploading images and choosing desired styles.

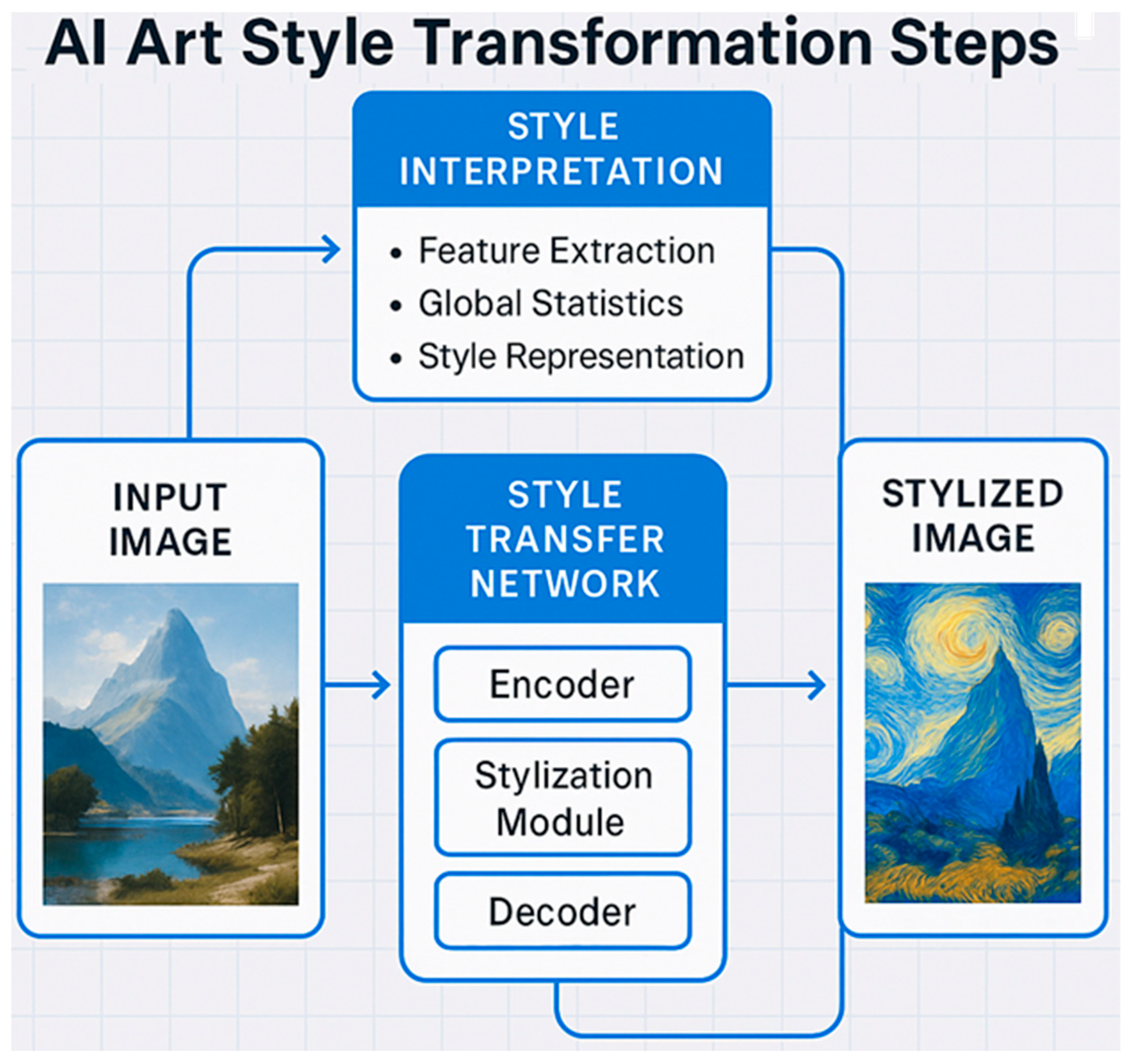

Figure 7 represents the transformation from an original image through blending with a style image to the final output, showcasing the different stages of applying an art style using AI.

Figure 8 depicts the iterative and collaborative process between an AI model and a human artist, emphasizing their partnership in refining and producing creative artwork [5].

5. Challenges and Future Directions

Training GANs requires significant computational power and resources, which can limit accessibility for independent artists. Models trained on biased datasets may produce biased or unoriginal outputs, limiting the diversity of the generated artworks [

6]. The use of machine learning for art creation raises ethical concerns about authorship and ownership [

7]. If an algorithm produces a unique work of art, questions arise regarding who owns the rights to that artwork, the programmer, the user, or the model [

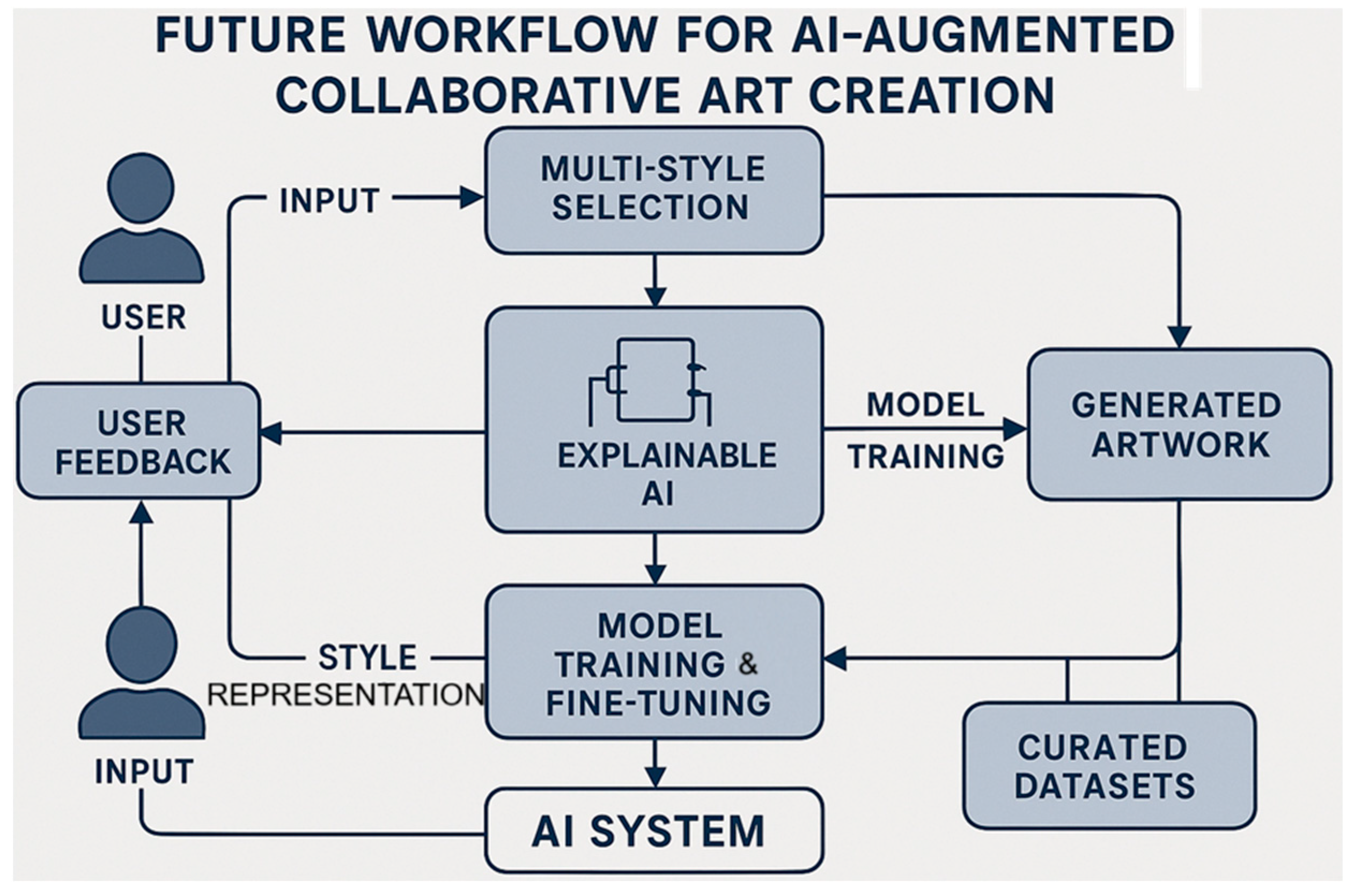

8]. As AI models evolve, there must be an increase in human-machine collaborations, where artists use models not just as tools, but as creative partners [

9]. Future models are needed to explore evolving styles in real time, adapting to audience feedback and generating art that is continually changing [

10].

Machine learning (ML) models rely heavily on training data. In the context of evolving art styles, datasets are often biased toward popular or historically well-documented styles (e.g., Renaissance, Impressionism), while niche, indigenous, or contemporary movements are underrepresented. Models may therefore reinforce dominant aesthetics while marginalizing less mainstream styles, limiting true exploration of stylistic diversity. For example, StyleGAN trained on Western classical art often fails to adequately reproduce indigenous Aboriginal or contemporary urban art without extensive retraining [11].

Creativity and artistic value are inherently subjective, varying greatly across cultures, contexts, and individual perceptions. There is no universally accepted metric for evaluating "creative success" in machine-generated art. Most current evaluation methods, such as the Fréchet Inception Distance (FID) or human surveys, fail to capture the nuanced emotional and cultural impacts of art. An ML model might produce visually complex outputs but fail to evoke the intended emotional resonance in different audience groups [12].
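To see why a metric such as FID says little about emotional or cultural impact, it helps to look at what it computes: the Fréchet distance between two Gaussians fitted to feature vectors of real and generated images, $\lVert \mu_r - \mu_g \rVert^2 + \mathrm{Tr}\left(\Sigma_r + \Sigma_g - 2(\Sigma_r \Sigma_g)^{1/2}\right)$. The sketch below is a minimal NumPy version under stated assumptions: the function name is introduced here, and the random 4-dimensional arrays stand in for the Inception-v3 features that FID normally uses.

```python
import numpy as np

def frechet_distance(feats_real, feats_gen):
    """Fréchet distance between Gaussians fitted to two (samples, dims) feature sets."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)
    # Tr((cov_r cov_g)^{1/2}) equals the sum of the square roots of the
    # eigenvalues of cov_r @ cov_g, which are real and non-negative in theory.
    eigvals = np.linalg.eigvals(cov_r @ cov_g)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu_r - mu_g) ** 2)
    return float(mean_term + np.trace(cov_r) + np.trace(cov_g) - 2.0 * trace_sqrt)

# Stand-in features: random vectors instead of real network activations.
rng = np.random.default_rng(0)
feats_real = rng.normal(size=(500, 4))
feats_shifted = feats_real + np.array([3.0, 4.0, 0.0, 0.0])

score_same = frechet_distance(feats_real, feats_real)        # approximately 0
score_shifted = frechet_distance(feats_real, feats_shifted)  # approximately 25 = 3**2 + 4**2
```

The score depends only on the means and covariances of the feature vectors, so two sets of artworks with similar feature statistics receive a similar score regardless of how audiences respond to them.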

Most machine learning models, particularly those based on supervised or unsupervised learning (e.g., CNNs, GANs), excel at imitation rather than true innovation. ML-generated art often recombines existing elements rather than producing genuinely novel styles or paradigms. Deep creativity, such as the founding of Cubism or Surrealism, is still beyond current algorithmic capabilities. Despite variations, outputs of neural style transfer techniques often appear as interpolations between known styles, lacking disruptive originality [13].

Models trained extensively on specific art datasets risk overfitting, where the generative outputs converge narrowly around particular style parameters. Overfitting reduces model generalization, leading to repetitive and predictable artworks that fail to explore new stylistic territories [14]. For example, a model trained mainly on Van Gogh paintings might consistently output swirling brushstroke textures even when tasked to generate a "new style."

Training datasets often include copyrighted artworks without explicit permission from the artists. This raises serious legal and ethical concerns about ownership, credit attribution, and the right to remix or monetize derivative works. Several lawsuits have been filed against AI companies for using copyrighted art without licenses in training generative models.

6. Conclusions

Machine learning has reshaped the landscape of art creation, enabling new forms of creativity that blend algorithmic capabilities with human intent. NST, GANs, and DeepDream are among the most notable techniques that have influenced modern artistic practices, offering a fresh perspective on style, form, and the role of technology in art. While these technologies present numerous opportunities, they also challenge the traditional understanding of authorship, creativity, and the nature of art. As machine learning continues to evolve, the boundary between artist and algorithm becomes increasingly blurred, opening up new possibilities for what art can be and who or what can create it.