Abstract

Unmanned aerial vehicles (UAVs), commonly known as drones, are used for many purposes, such as delivering goods, surveillance of opponents, aerial image identification, firefighting, and spraying agricultural fields. Because a single UAV can carry several functions, it can be adapted to the user's requirements. UAVs also enable rapid communication of detected information and can enter hazardous atmospheres, such as collapsed paths, without putting humans at risk. Building on these capabilities, a UAV system is designed to classify atmospheric conditions in the environment and transmit this information to a central controller. The UAV is equipped with sensors capable of detecting air pollutants such as carbon monoxide (CO), carbon dioxide (CO2), methane (CH4), ammonia (NH3), and hydrogen sulfide (H2S). As the UAV navigates through different areas, these sensors continuously monitor air quality, and the UAV transmits real-time data, including the concentration of each pollutant, to a central unit. The central unit analyzes the sensor data and checks whether the air quality meets atmospheric standards. If any sensed pollutant level exceeds its threshold, the UAV's system triggers a warning alert, which is communicated to local authorities and the public so that they can take the necessary precautions. The UAV is also fitted with cameras that capture real-time images of the environment, which are processed using the YOLO V3 algorithm to identify the context and source of pollution, such as industrial activity, traffic congestion, or natural sources like wildfires.

1. Introduction

Detecting weeds at the smallest resolvable pixel size (2–4 cm/px) is challenging because weeds closely resemble the sugarcane crop that forms the background, particularly in color; to distinguish them, both color and texture are used as key feature representations [1]. A lightweight deep-learning model has been developed for detecting outfall objects in aerial images [2]; it achieves an accuracy of 81.5% with only 2.47 million parameters and 3.95 GFLOPs, and visualization analysis indicates that it focuses on true outfall objects. A multi-agent maximum entropy reinforcement learning algorithm, referred to as MASAC, has also been proposed, in which each UAV base station operates as an intelligent node and plans its own flight path following the principle of "distributed training, distributed execution". The PHSI-RTDETR technique is designed for small-target detection in UAV aerial infrared imagery and uses an enhanced backbone feature extraction network built from the RPConv-Block [3]. A refined Double Deep Q-Network (DDQN)-based path planning algorithm is proposed in [4], and a technique that segments images to retrieve foreground objects is introduced in [5]. A multi-strategy approach to UAV 3D path planning based on the Improved Dung Beetle Optimization (IDBO) algorithm is presented in [6]. In [7], a cellular-connected multi-UAV network with mobile edge computing is considered, in which several UAVs carrying out missions fly from a starting point to an end point within a predetermined time; an efficient iterative algorithm based on successive convex approximation and block coordinate descent is proposed. A collaborative offloading technique for UAV applications that exploits the capabilities of fog and cloud computing is presented in [8].
The strategy in [8] seeks to deliver the necessary resources and services in real time while minimizing the service latency and energy consumption of UAVs. Flying at a higher altitude covers a larger region in less time, at the cost of lower image resolution [9]. Despite the difficulties caused by overlapping vegetation, varying ground sampling distance (GSD), and shadow effects, the method in [9] produced positive results, particularly when the GSD was below 0.45 cm. A method termed C DeepLabV3+ was proposed for road segmentation [10]; its new coordinate attention (CA) module gathers precise information on segmentation targets and produces smoother target edges. A trajectory and offloading decision-making system (H-TAOD) based on hierarchical reinforcement learning for UAVs with multi-channel parallel processing is developed in [11]. In [12], a feedback linearization transformation is used to handle the nonlinear, coupled flight dynamics in attitude control and to reduce the computational load of model predictive control (MPC). A low-cost LiDAR–camera spatiotemporal synchronization system [13] combines a timestamp-driven pulse width modulation (PWM) sampling method with salient ground features, enabling the UAV to adapt to various landscapes during flight. FocusTrack is a framework that adaptively narrows the search area and enhances feature representations, balancing computational efficiency and tracking precision [14].
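
The altitude and resolution trade-off mentioned for [9] follows from the standard ground sampling distance relation for a camera pointing straight down: GSD = (sensor width × altitude) / (focal length × image width). The sketch below evaluates it; the camera parameters (13.2 mm sensor, 8.8 mm lens, 5472 px image width) are hypothetical values chosen for illustration, not those of the cited study or of the UAV in this work.

```python
def ground_sampling_distance_cm(sensor_width_mm: float,
                                focal_length_mm: float,
                                image_width_px: int,
                                altitude_m: float) -> float:
    """Ground sampling distance in cm/px for a nadir (straight-down) view.

    GSD = (sensor width * altitude) / (focal length * image width).
    Sensor width and focal length share units (mm), so they cancel;
    the altitude in metres is converted to centimetres (x100).
    """
    if min(sensor_width_mm, focal_length_mm, image_width_px, altitude_m) <= 0:
        raise ValueError("all parameters must be positive")
    return (sensor_width_mm * altitude_m * 100.0) / (focal_length_mm * image_width_px)


# Hypothetical camera: 13.2 mm sensor, 8.8 mm lens, 5472 px wide image.
for altitude in (10, 30, 60):
    gsd = ground_sampling_distance_cm(13.2, 8.8, 5472, altitude)
    print(f"{altitude:>3} m -> {gsd:.2f} cm/px")
```

Because GSD grows linearly with altitude, doubling the flight height doubles the ground footprint of every pixel, which is why small targets such as weeds become harder to resolve from higher up.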

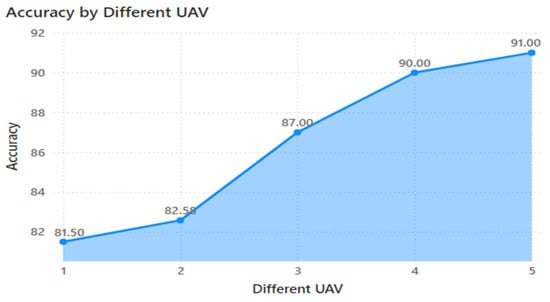

The review above indicates that a UAV model typically performs only a single task at a time, and that running multiple tasks on a single UAV can lead to improper outputs. Real-time processing on UAVs also suffers from latency because of limited bandwidth and energy supply, and the classification of small objects remains limited, with low accuracy. For complex detection and classification, a lightweight algorithm achieves only about 81.5% accuracy [2], and the classification of dynamic objects is insufficient. To address these gaps, this work presents a quadcopter that performs two different processes at the same time:

- Classifies garbage present in the environment and passes the information to the municipal department.

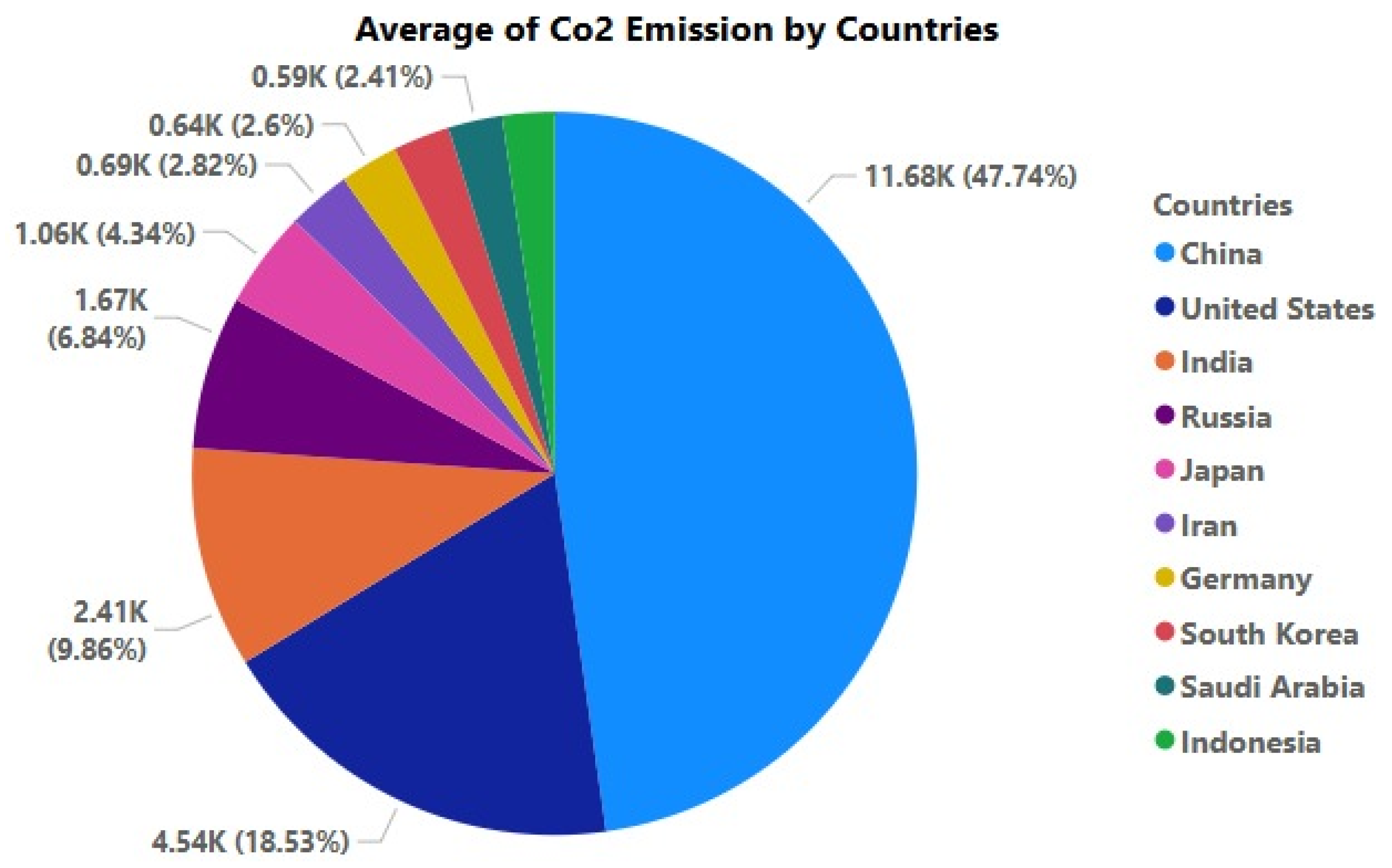

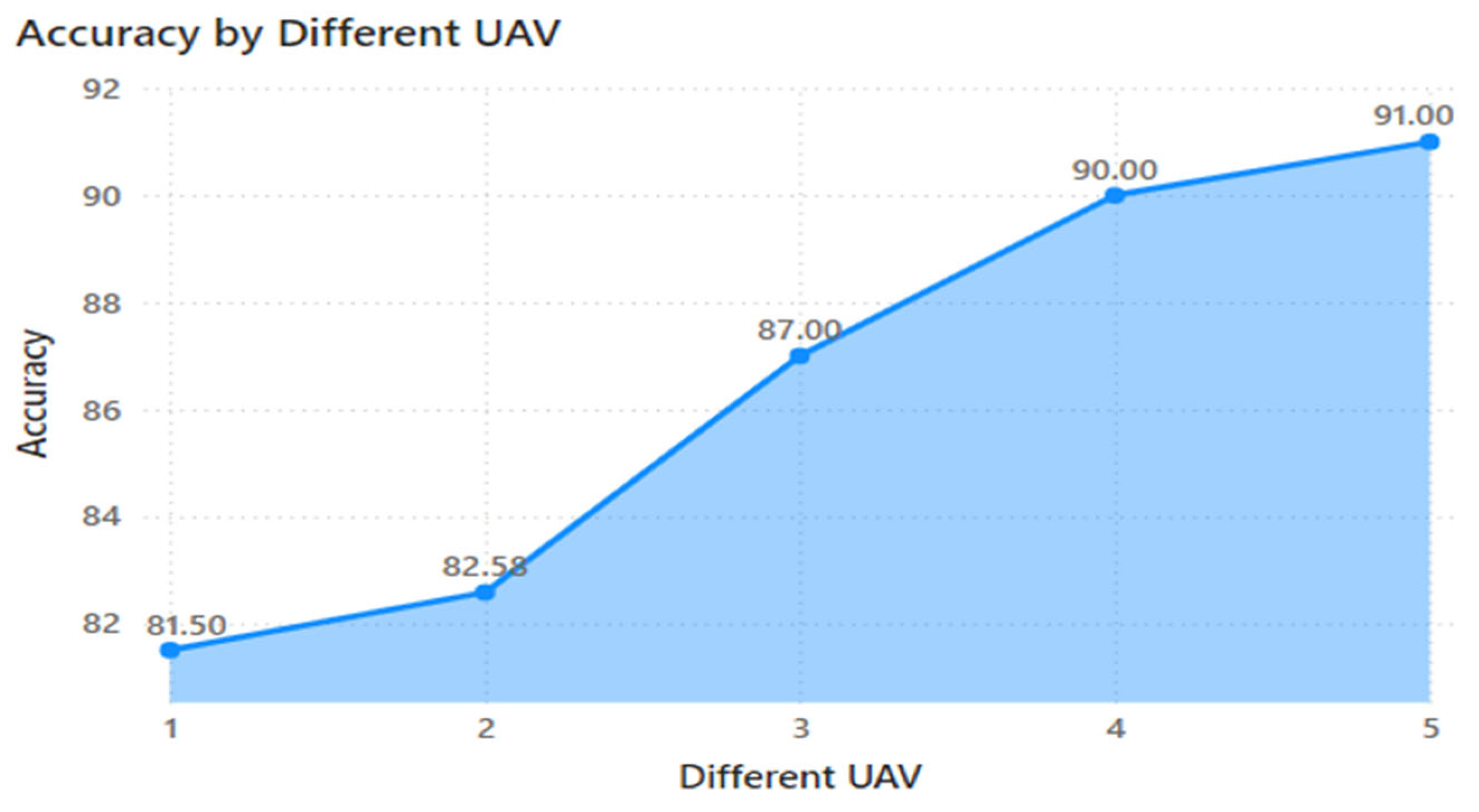

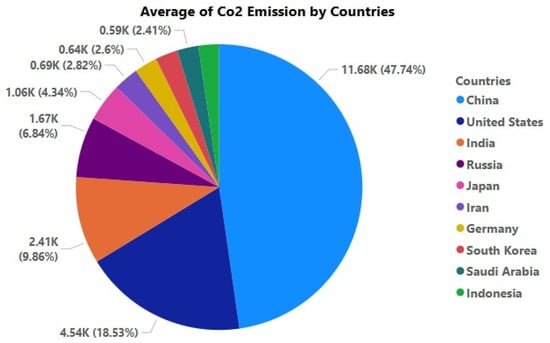

- Monitors air pollution in the surrounding community and sounds an alarm to warn people of the current condition.

The accuracy attained by the UAV model is 91%, which is higher than that of the previous models. Table 1 presents the comparative study. Figure 1 shows the average CO2 emissions of selected countries, based on an analysis of data from Worldometer.

Table 1. Recent research.

Figure 1. Analysis on CO2 emission rate.

2. Simulation Design of Air Monitoring System

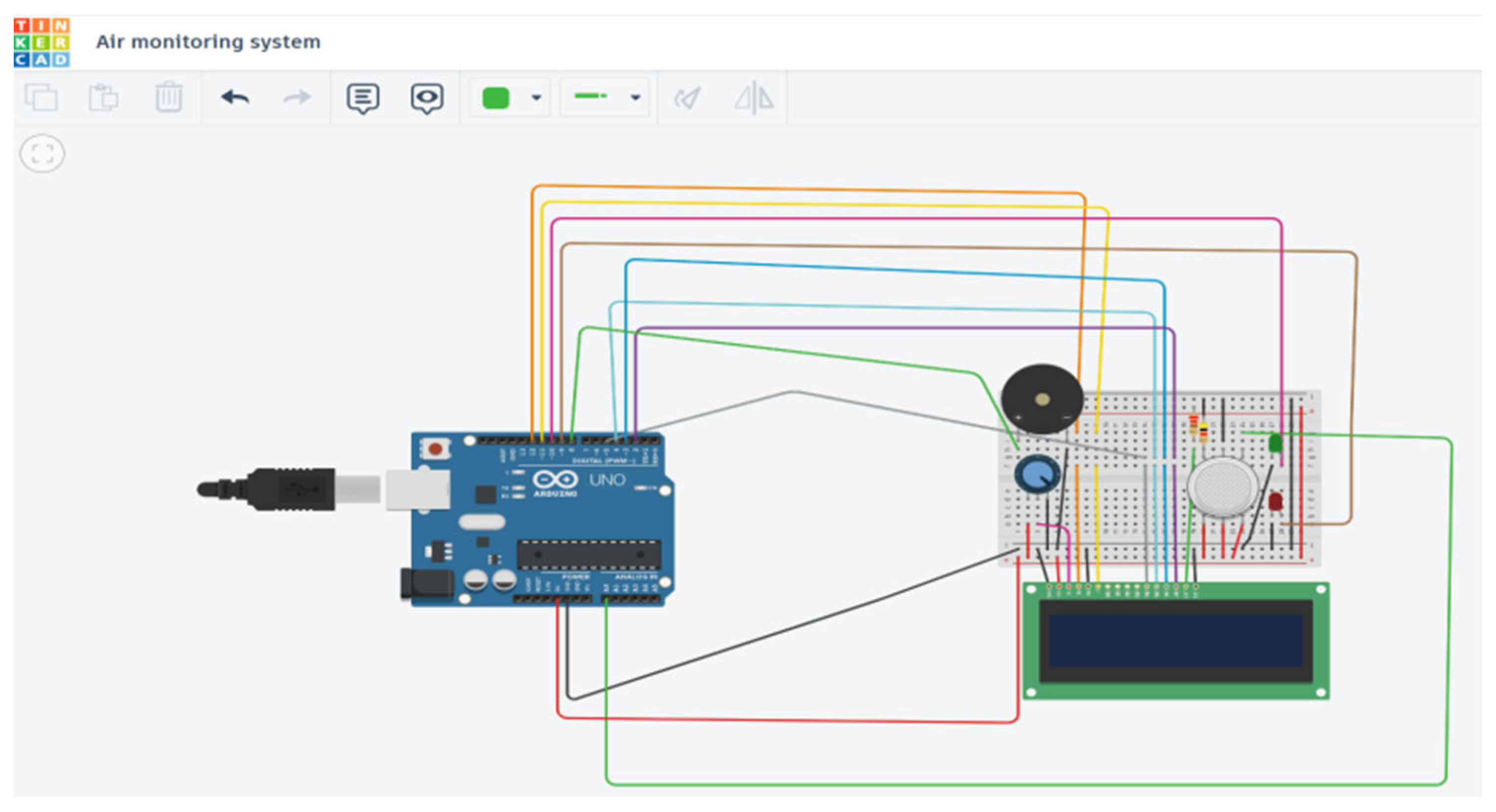

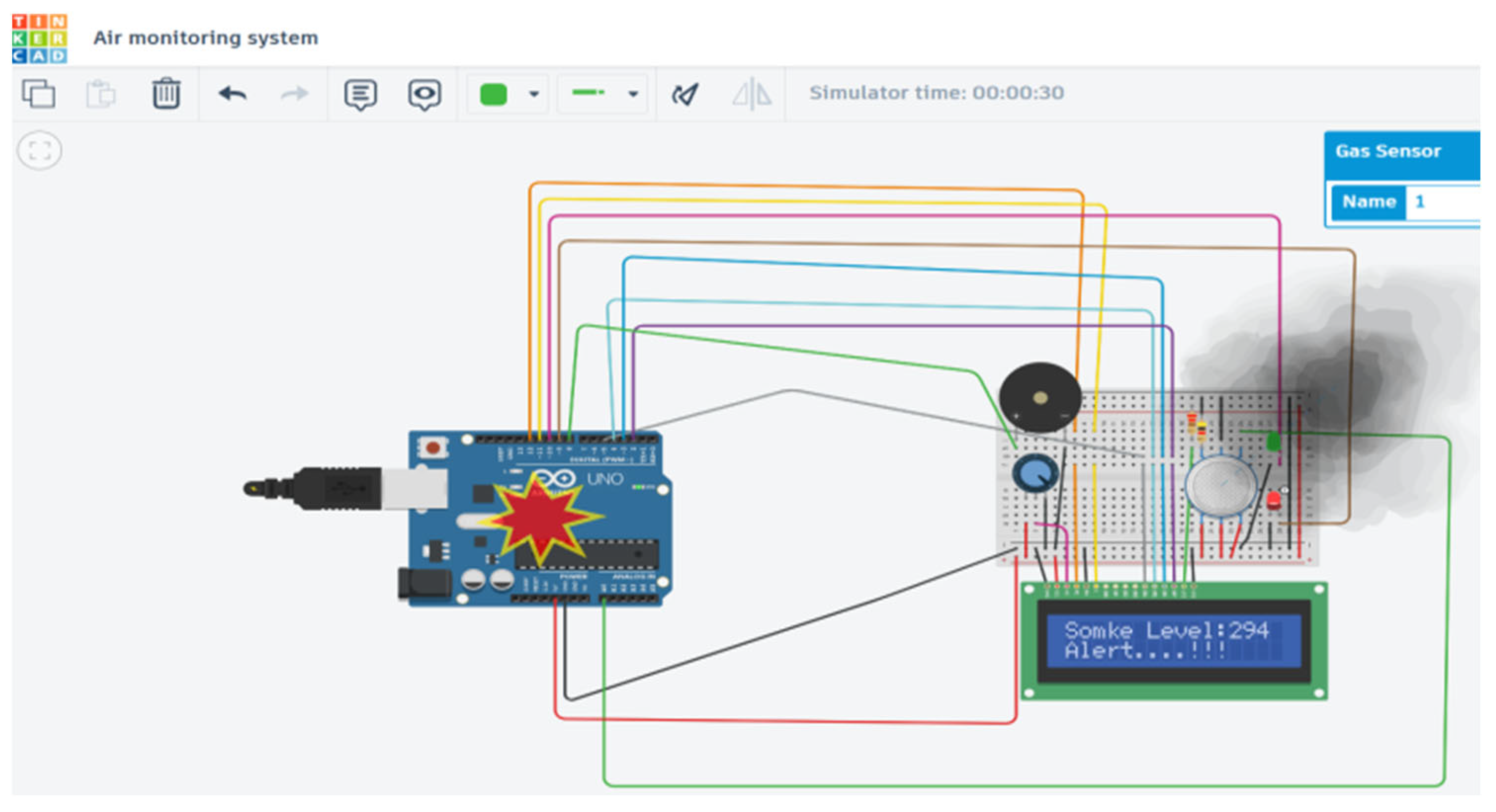

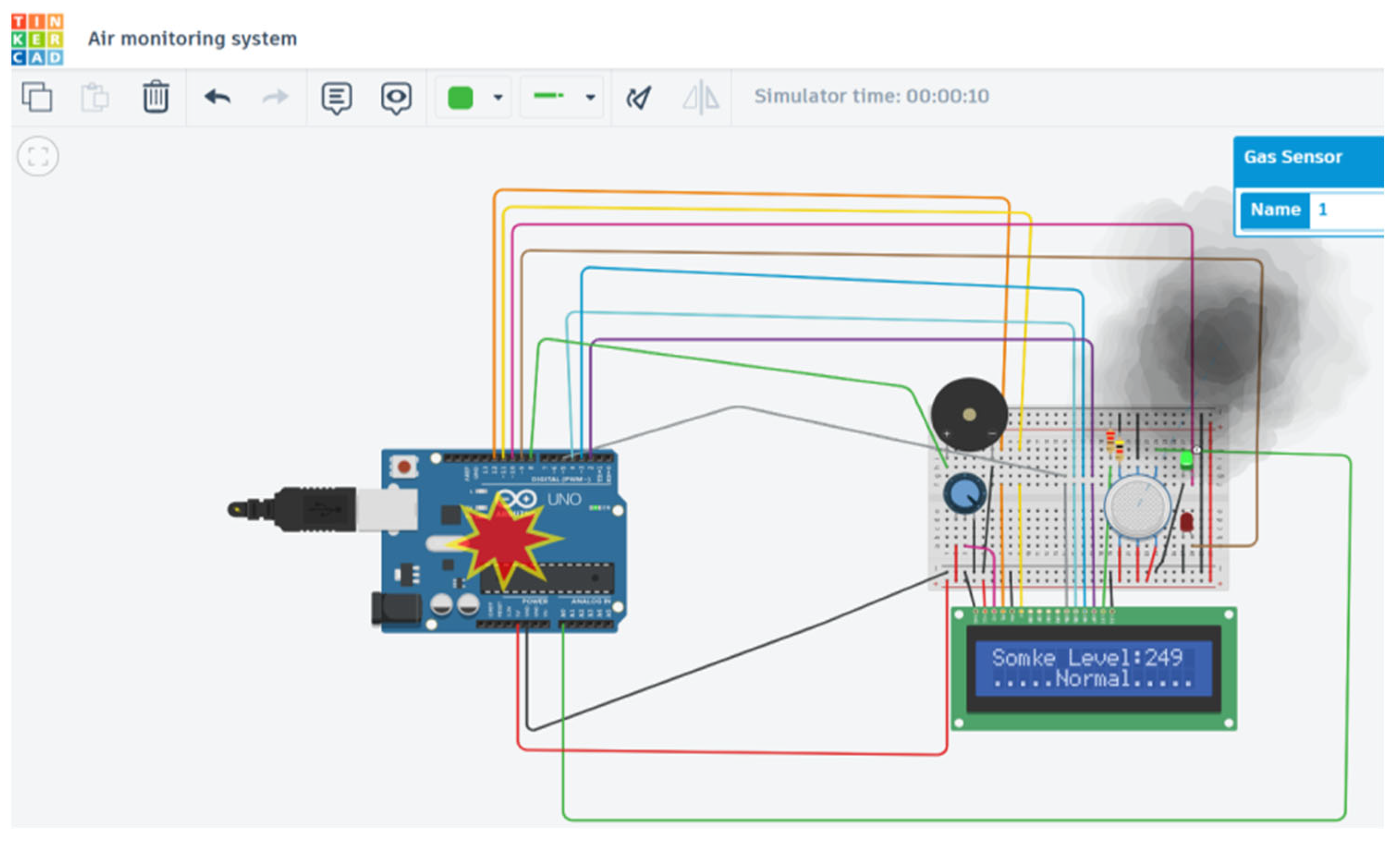

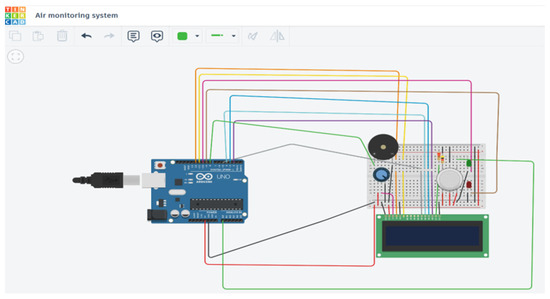

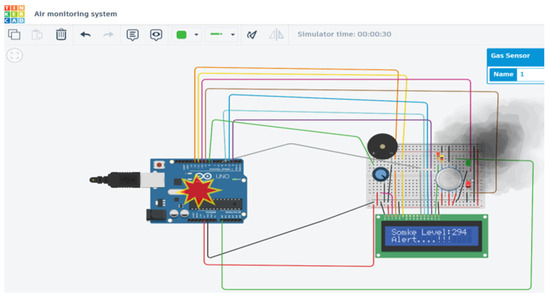

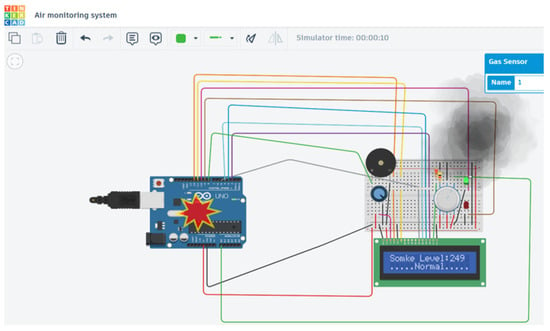

Herein, the air pollution monitoring model was simulated using Tinkercad software (V6), incorporating components such as a breadboard, a microcontroller, an air quality sensor, an alert system, and an LED display; Figure 2 shows this model in the OFF state, where the analysis begins. Figure 3 shows the system's output when it is turned ON and the reading is within the threshold limit, which is set at 250 for the Air Quality Index (AQI). When the AQI exceeds this limit, as shown in Figure 4, the system activates an alert through a speaker, warning that the air quality is poor and advising individuals to avoid prolonged exposure in the area.
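
The OFF, within-threshold, and threshold-exceeded states described above can be modelled in a few lines. This is a minimal Python sketch, not the Tinkercad circuit itself: the threshold of 250 comes from the text, while the `MonitorOutput` type, the `evaluate_air_quality` function, the message strings, and the assumption that the sensor reading is compared directly with the threshold on the simulation's AQI scale are all hypothetical.

```python
from dataclasses import dataclass

AQI_THRESHOLD = 250  # threshold limit used in the simulation


@dataclass
class MonitorOutput:
    display: str        # text shown on the LED display (hypothetical format)
    speaker_on: bool    # True when the audible alert is active


def evaluate_air_quality(system_on: bool, aqi_reading: float,
                         threshold: float = AQI_THRESHOLD) -> MonitorOutput:
    """Hypothetical model of the simulated monitor's three states."""
    if not system_on:
        # OFF state: nothing is displayed and no alert sounds.
        return MonitorOutput(display="", speaker_on=False)
    if aqi_reading > threshold:
        # Threshold exceeded: warn people to avoid prolonged exposure.
        return MonitorOutput(
            display=f"AQI {aqi_reading:.0f}: POOR - avoid prolonged exposure",
            speaker_on=True,
        )
    # Within the threshold limit: show the reading, keep the speaker silent.
    return MonitorOutput(display=f"AQI {aqi_reading:.0f}: OK", speaker_on=False)


for state, reading in [(False, 0), (True, 120), (True, 310)]:
    print(state, reading, evaluate_air_quality(state, reading))
```

The comparison is strict (`>`), matching the text's wording that the alert fires when the AQI exceeds the limit; a reading of exactly 250 is treated as within the limit.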

Figure 2. Simulation model in OFF state.

Figure 3. Simulation output within threshold limit.

Figure 4. Simulation output when threshold limit exceeded.

3. Drone Design

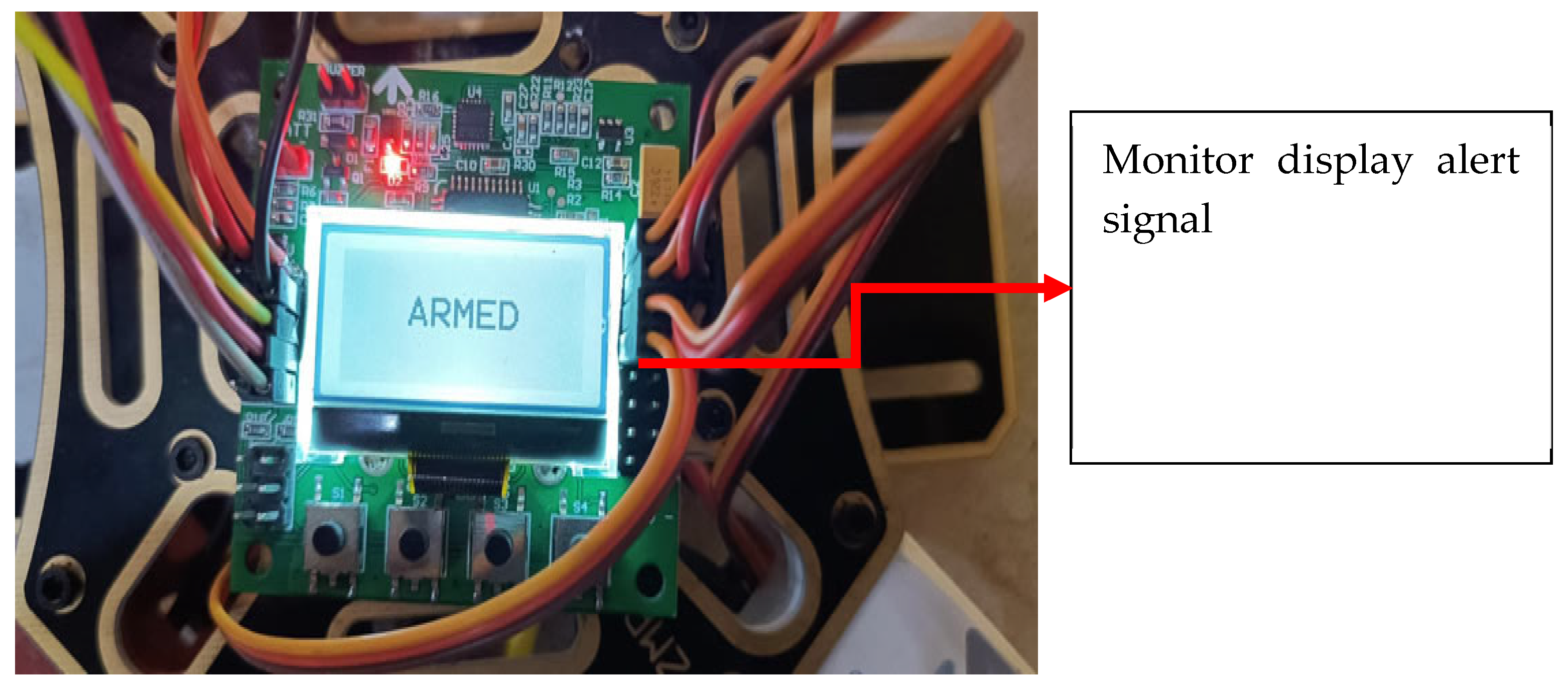

The system includes a microcontroller that processes tasks, a gas sensor that monitors the surrounding AQI level, a speaker that emits warning sounds under hazardous conditions, and an LED monitor that displays the current air quality status in digital form. The alert components activate only under warning conditions and remain idle under normal conditions.

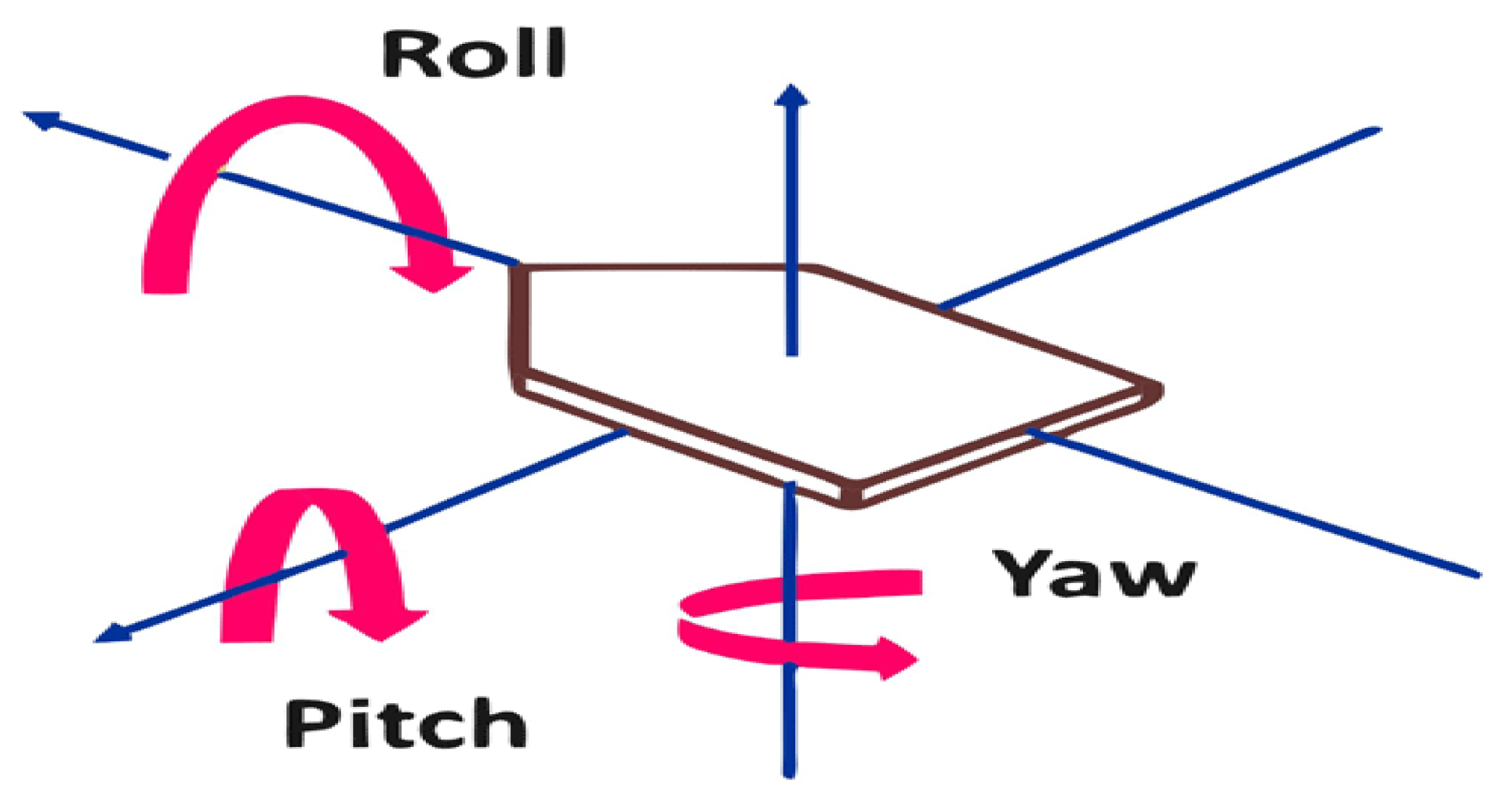

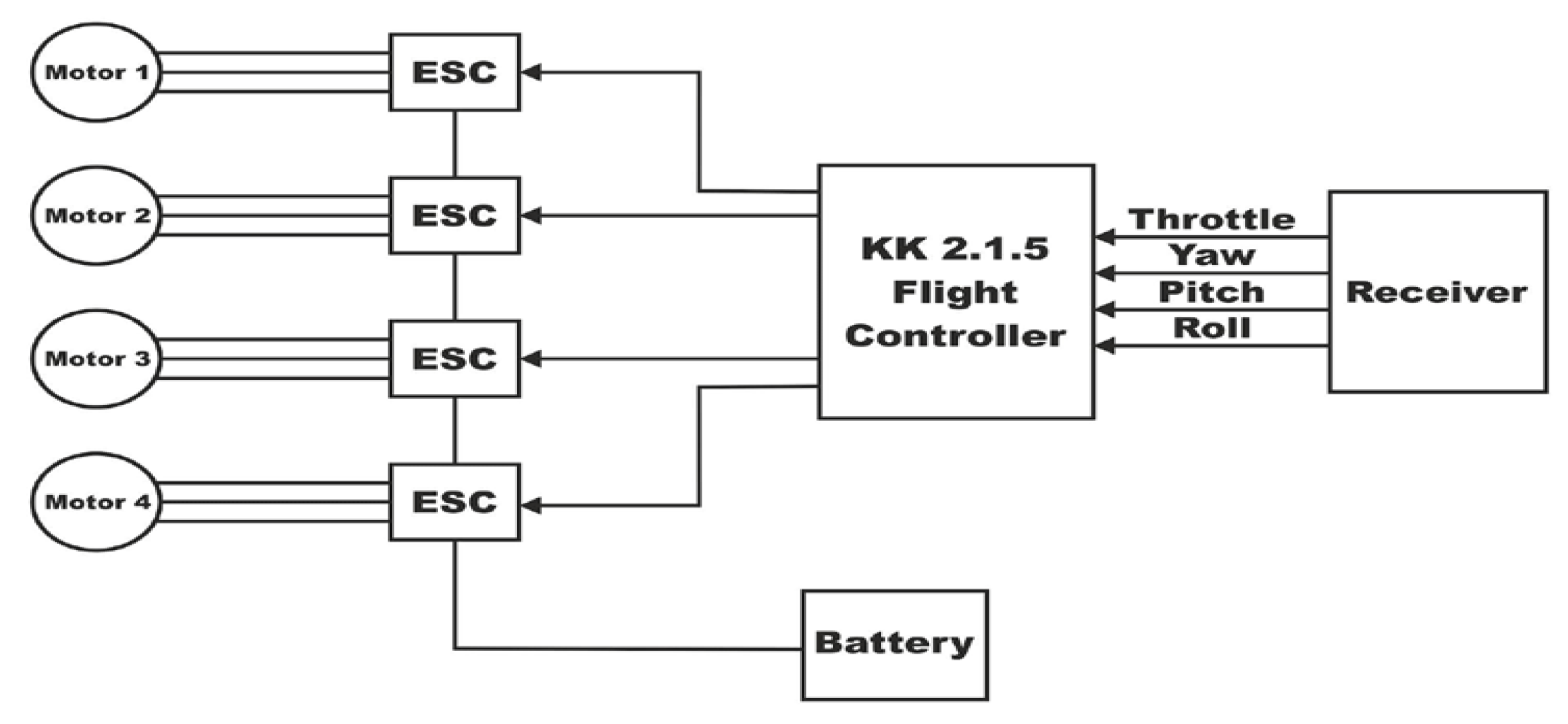

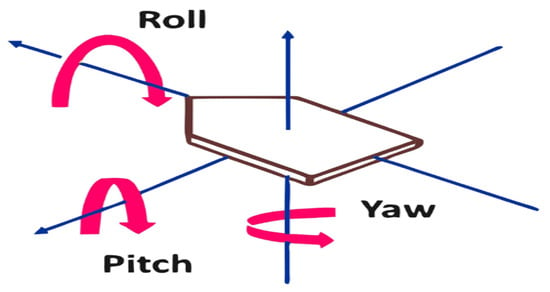

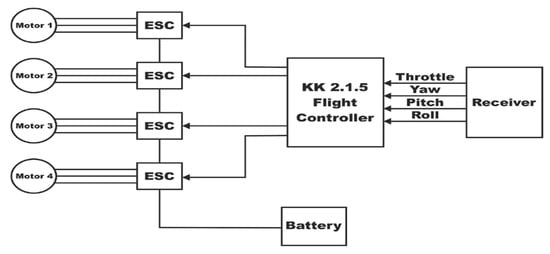

In general, the motion of a UAV is described by three rotational characteristics, shown in Figure 5: roll, pitch, and yaw. Roll is the tilting of the drone to the left or right, and pitch is the tilting of the drone forward or backward. Yaw is the rotation of the drone clockwise or counterclockwise, which is essential for classification in this model. Figure 6 shows the schematic design of the quadcopter. The receiver carries the control signals and direction commands, and the UAV performs the operation requested by each received command. Electronic speed controllers (ESCs) regulate the speed of each motor.
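
To make the roll, pitch, and yaw description concrete, the sketch below shows one common way a flight controller can turn these three commands, plus throttle, into the four ESC outputs of a quadcopter in X configuration. It is an illustrative mixer, not the firmware used on this UAV: the motor positions, the propeller spin directions (front-left and rear-right clockwise), the sign conventions, and the normalised 0 to 1 output range are all assumptions.

```python
from typing import Dict


def clamp(value: float, low: float = 0.0, high: float = 1.0) -> float:
    """Saturate a command to the range an ESC accepts."""
    return max(low, min(high, value))


def mix_quad_x(throttle: float, roll: float, pitch: float, yaw: float) -> Dict[str, float]:
    """Map throttle/roll/pitch/yaw commands to four normalised motor outputs.

    Assumed conventions (hypothetical, X layout viewed from above):
      * roll  > 0 rolls right     -> speed up left motors, slow right motors
      * pitch > 0 pitches nose up -> speed up front motors, slow rear motors
      * yaw   > 0 yaws clockwise  -> speed up the counter-clockwise props
        (front-right, rear-left), whose reaction torque turns the frame clockwise
    """
    outputs = {
        "front_left":  throttle + roll + pitch - yaw,   # clockwise prop
        "front_right": throttle - roll + pitch + yaw,   # counter-clockwise prop
        "rear_left":   throttle + roll - pitch + yaw,   # counter-clockwise prop
        "rear_right":  throttle - roll - pitch - yaw,   # clockwise prop
    }
    # ESCs accept only a bounded command, so saturate each output.
    return {motor: clamp(value) for motor, value in outputs.items()}


print(mix_quad_x(0.5, 0.0, 0.0, 0.0))   # hover: all motors equal
print(mix_quad_x(0.5, 0.1, 0.0, 0.0))   # roll right: left motors faster
```

Each attitude command is added to two motors and subtracted from the other two, so as long as no output saturates, the four outputs still sum to four times the throttle and the collective thrust command is unchanged.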

Figure 5. Quadcopter characteristics graph.

Figure 6. UAV schematic diagram.

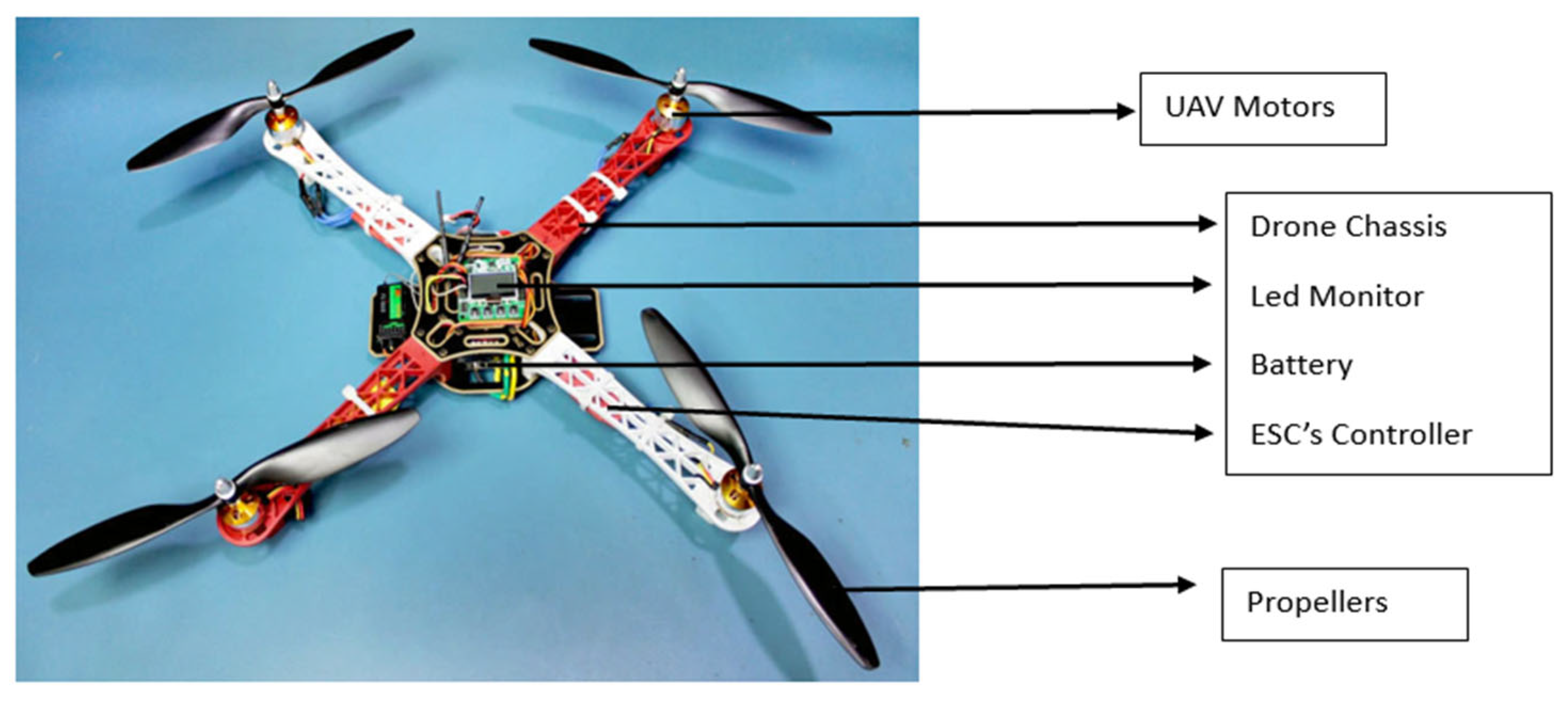

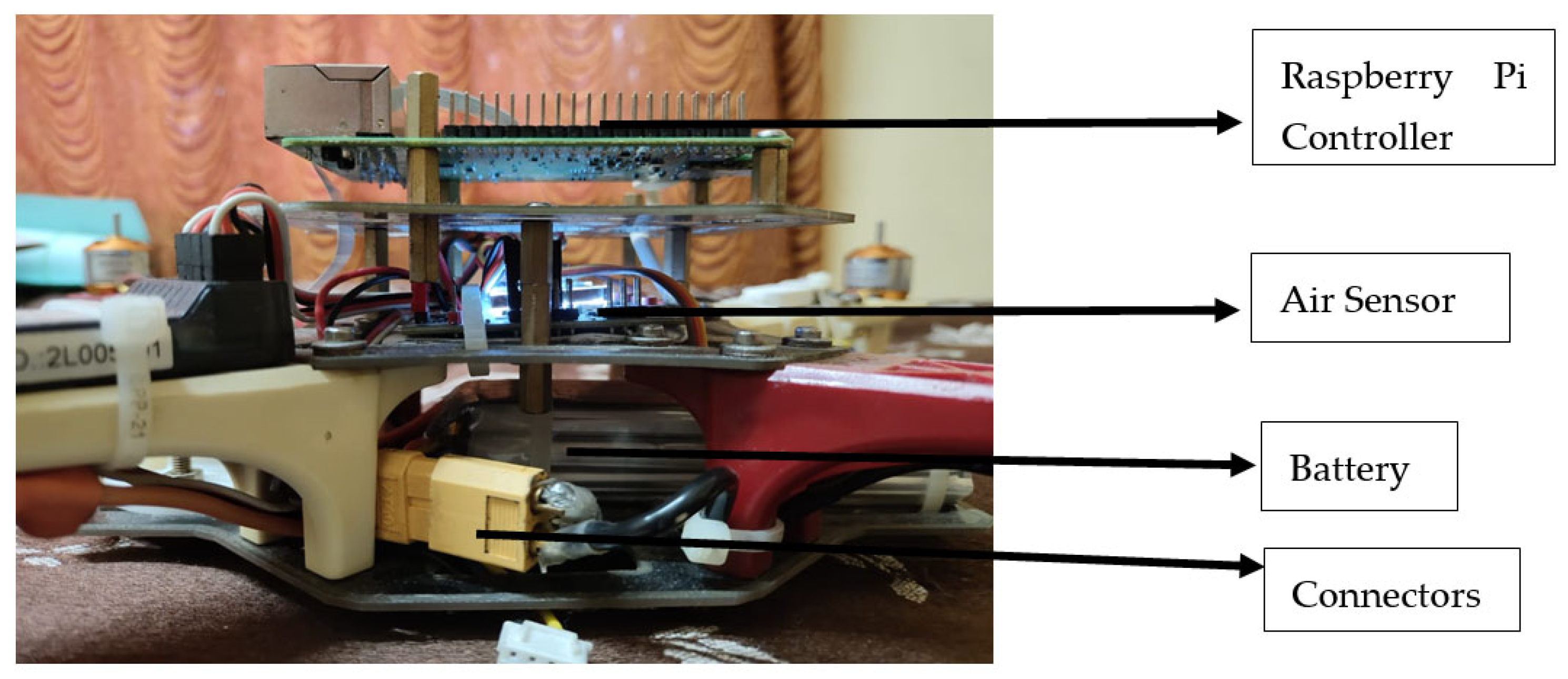

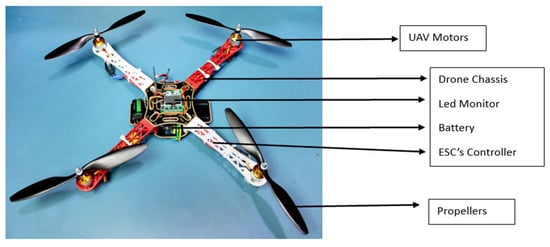

Power is supplied by connecting the receiver's power (often 5 V) and ground leads to the matching pins on the flight controller, and the receiver's signal output is linked to the corresponding input channel of the flight controller. Depending on the receiver and flight controller, this link is a single serial bus (SBUS) line, a single pulse position modulation (PPM) line, or several pulse width modulation (PWM) channels. The flight controller is typically powered by the built-in battery eliminator circuit (BEC) of one of the ESCs or by a separate power distribution board (PDB). The signal input of each of the four ESCs is connected to one of the flight controller's signal outputs, which are usually labeled M1, M2, M3, and M4.

Each ESC drives one motor, and the motor's three wires must be connected to the ESC's three-phase outputs; swapping any two of these wires reverses the motor's direction of rotation, which is how the spin direction of each motor is set. ESCs with their own power leads can connect directly to the battery or to the PDB; normally, the PDB distributes the battery supply to all ESCs. The motors spin the propellers to provide thrust, and their 2000 KV rating means that they turn at about 2000 RPM per volt applied under no load. The flight controller reads onboard sensor data, such as accelerometer and gyroscope measurements, together with the pilot's commands from the receiver, and uses the ESCs to adjust the motor speeds so as to regulate the drone's movement and preserve stability. The whole system is powered by the battery: its capacity (2200 mAh), together with the current drawn by the motors, determines how long the drone can fly before it must be recharged, while its discharge rate (C rating) limits the maximum current it can safely deliver. Figure 7 shows the assembled quadcopter: a chassis carrying four motors (M1, M2, M3, M4) that drive four propellers to lift the drone, a controller unit that controls the UAV and its functions, a battery that powers the entire model, and an LED monitor that displays the UAV's state.
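
The motor and battery ratings above lend themselves to a quick back-of-the-envelope check. The sketch below uses the 2000 KV and 2200 mAh figures from the text; the 3S pack (11.1 V nominal), the 25C discharge rating, the 15 A average current, and the 80% usable-capacity margin are hypothetical values, since the section does not state them. Real flight time also depends on payload, propellers, and wind.

```python
def no_load_rpm(kv_rating: float, voltage_v: float) -> float:
    """KV is RPM per volt with no load, so unloaded speed = KV * V."""
    return kv_rating * voltage_v


def max_continuous_current_a(capacity_mah: float, c_rating: float) -> float:
    """The C rating scales capacity (in Ah) into a maximum safe current (A)."""
    return c_rating * capacity_mah / 1000.0


def flight_time_min(capacity_mah: float, avg_current_a: float,
                    usable_fraction: float = 0.8) -> float:
    """Flight time = usable charge (Ah) / average current (A), in minutes."""
    if avg_current_a <= 0:
        raise ValueError("average current must be positive")
    return (capacity_mah / 1000.0) * usable_fraction / avg_current_a * 60.0


KV = 2000             # from the text
CAPACITY_MAH = 2200   # from the text
PACK_VOLTAGE = 11.1   # assumption: 3S LiPo, nominal voltage
C_RATING = 25         # assumption
AVG_CURRENT_A = 15    # assumption: average draw of all four motors

print(f"no-load speed : {no_load_rpm(KV, PACK_VOLTAGE):.0f} RPM")
print(f"max current   : {max_continuous_current_a(CAPACITY_MAH, C_RATING):.0f} A")
print(f"flight time   : {flight_time_min(CAPACITY_MAH, AVG_CURRENT_A):.1f} min")
```

With these assumed numbers the pack supplies roughly 7 minutes of flight, and the 55 A ceiling implied by a 25C rating sits well above the assumed 15 A average draw, which illustrates why capacity sets endurance while the C rating sets the current limit.
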
The UAV's alert system warns the general public so that they know whether the environment around them is safe for their health. All classifications are made during flight, as shown in Figure 8: the UAV itself detects garbage at a particular location and informs the garbage collection department of its presence.
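
A detector such as YOLO V3 typically outputs several overlapping candidate boxes per object, so a confidence filter followed by non-maximum suppression (NMS) is normally applied before the UAV decides that garbage is present and reports it. The following framework-free sketch shows that post-processing step under stated assumptions: the `(x1, y1, x2, y2)` box format, the 0.5 confidence and 0.45 IoU thresholds, the `garbage_report` function, its report fields, and the sample location are hypothetical. Returning the string "NULL" when nothing survives mirrors the NULL that the UAV shows on its LED monitor when no issue is found.

```python
from typing import Dict, List, Tuple, Union

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(dets: List[Tuple[Box, float]],
                        iou_thresh: float = 0.45) -> List[Tuple[Box, float]]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it."""
    kept: List[Tuple[Box, float]] = []
    for box, score in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(box, k[0]) < iou_thresh for k in kept):
            kept.append((box, score))
    return kept


def garbage_report(dets: List[Tuple[Box, float]], location: Tuple[float, float],
                   conf_thresh: float = 0.5) -> Union[str, Dict]:
    """Return 'NULL' if no garbage is found, else a hypothetical report dict."""
    confident = [d for d in dets if d[1] >= conf_thresh]
    kept = non_max_suppression(confident)
    if not kept:
        return "NULL"
    return {"class": "garbage", "count": len(kept), "location": location,
            "boxes": [box for box, _ in kept]}


detections = [((10, 10, 50, 50), 0.90), ((12, 11, 52, 49), 0.80),  # same object
              ((200, 200, 240, 260), 0.70), ((300, 30, 320, 60), 0.20)]
print(garbage_report(detections, location=(10.0, 20.0)))  # hypothetical GPS fix
```

In the sample data, the two heavily overlapping boxes collapse into one detection and the low-confidence box is discarded, so the report counts two garbage objects.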

Figure 7. Prototype of the UAV model.

Figure 8. Identification when the image classification condition is TRUE.

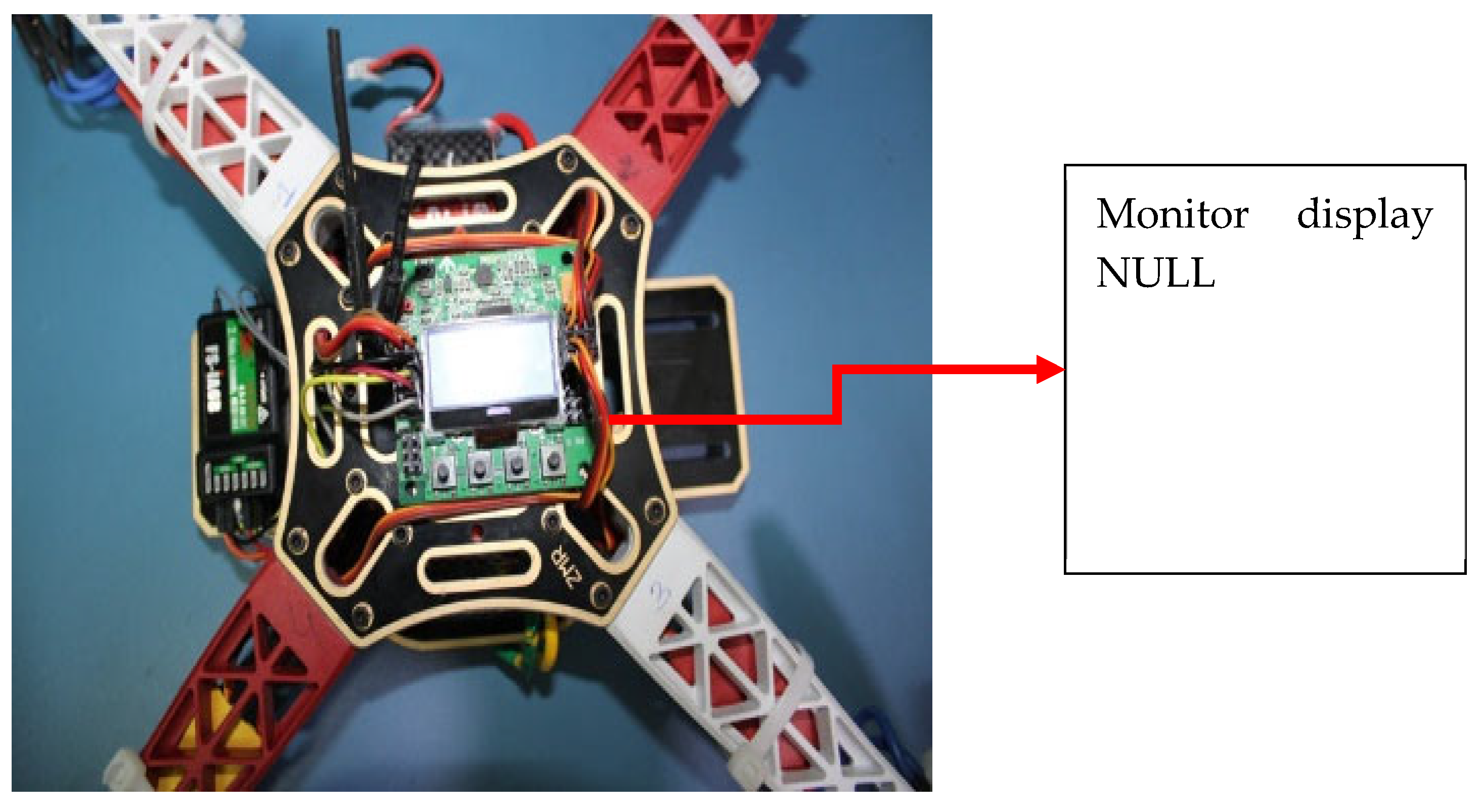

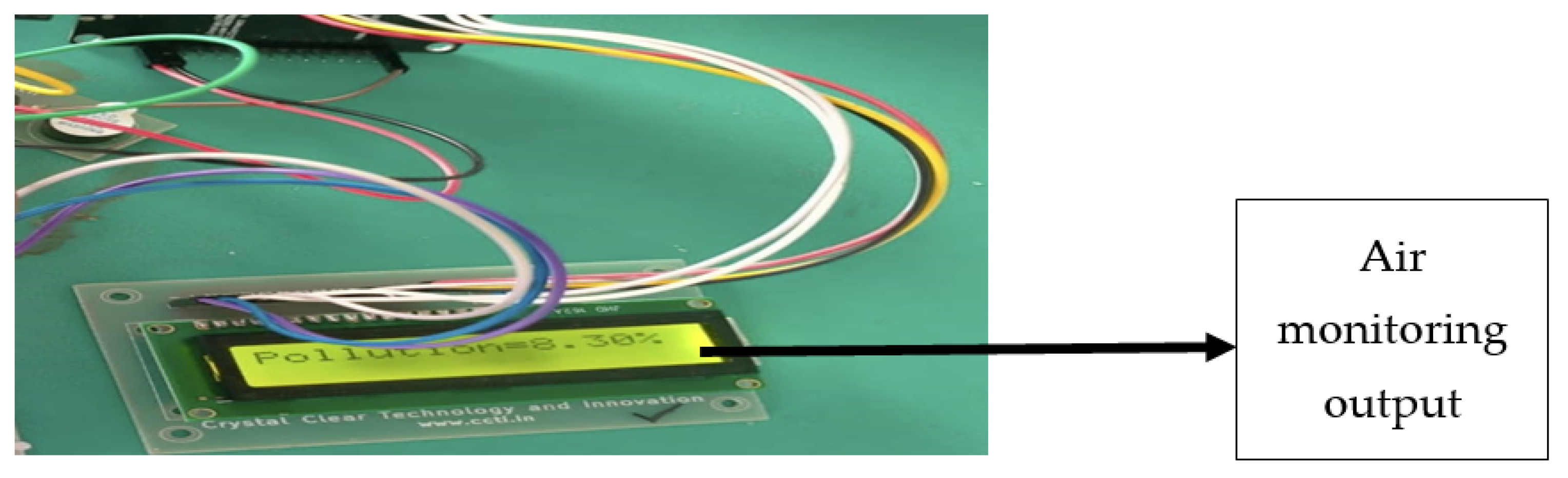

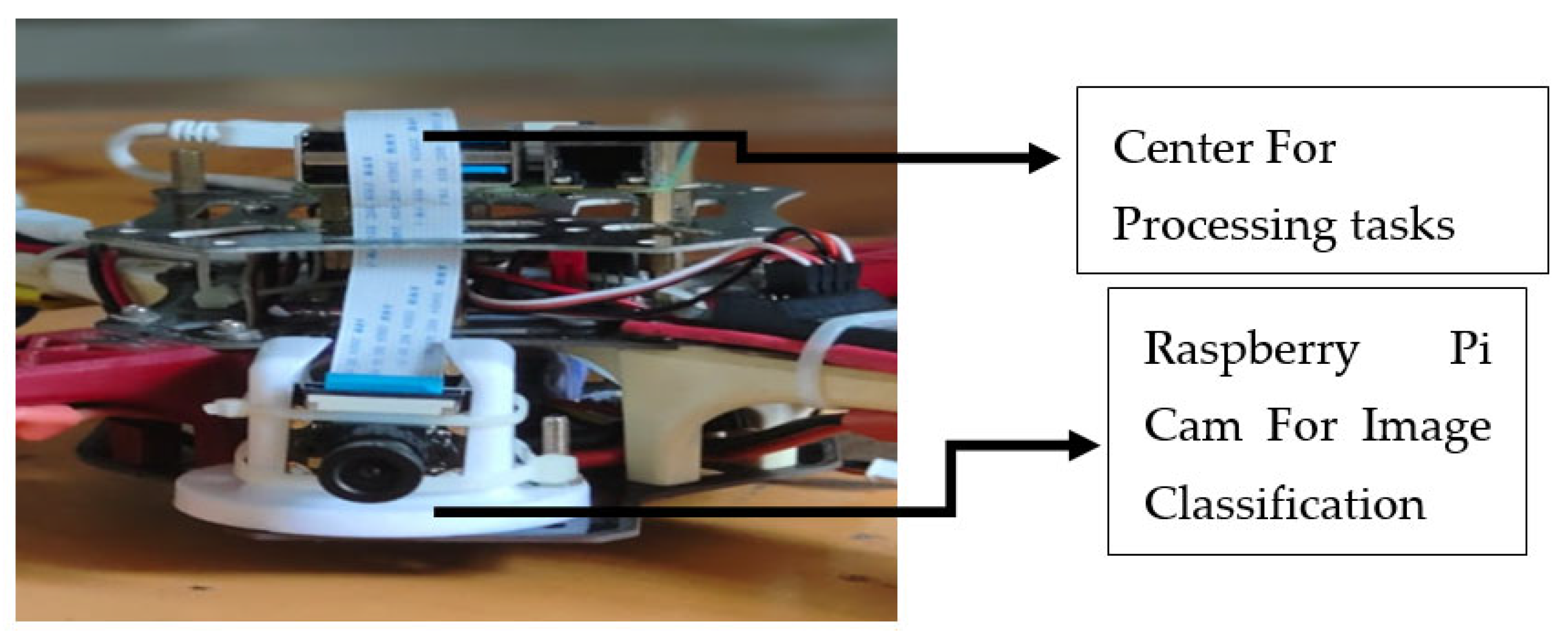

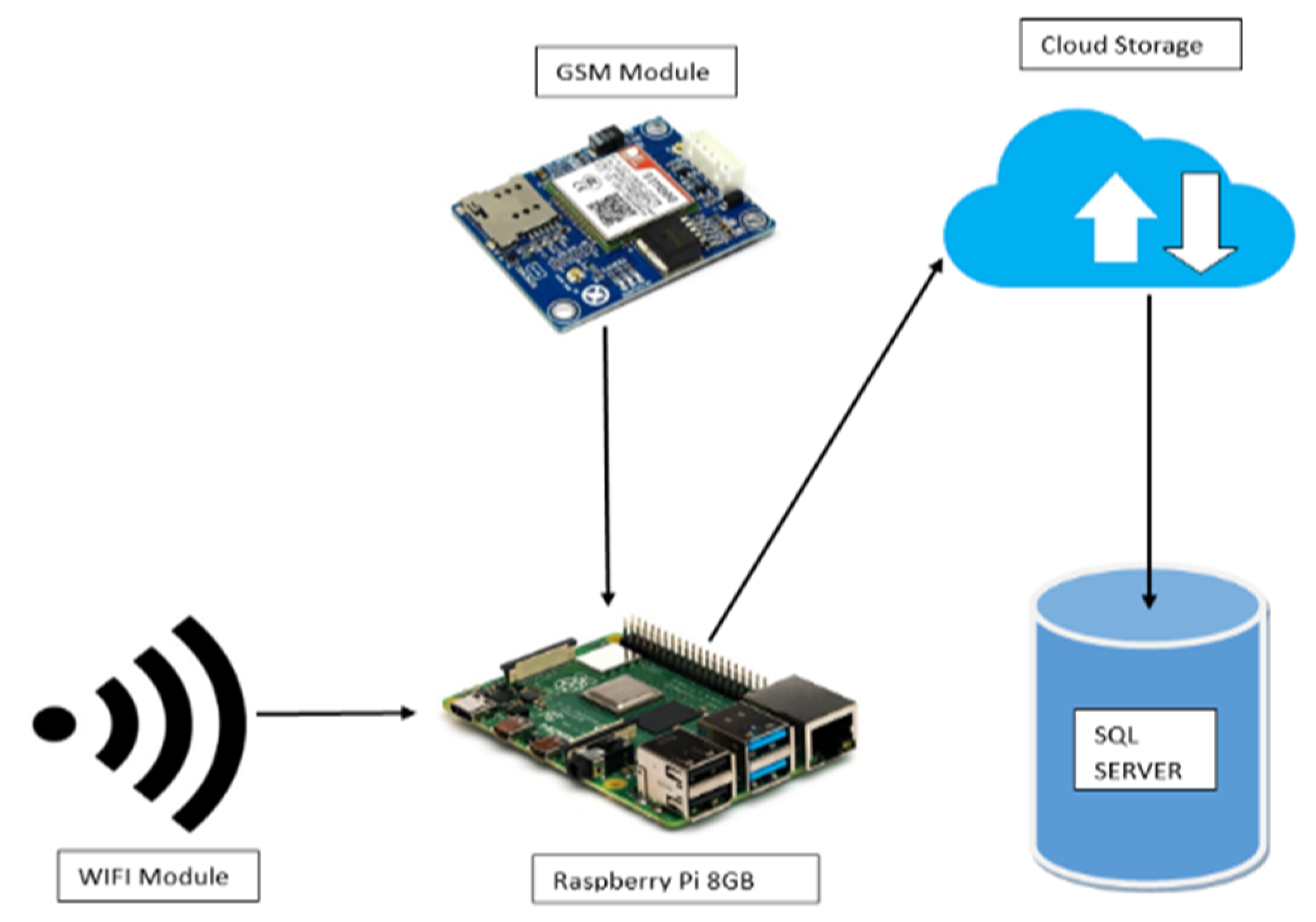

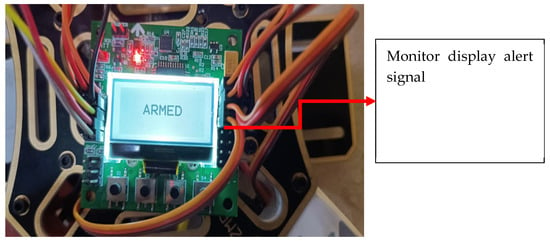

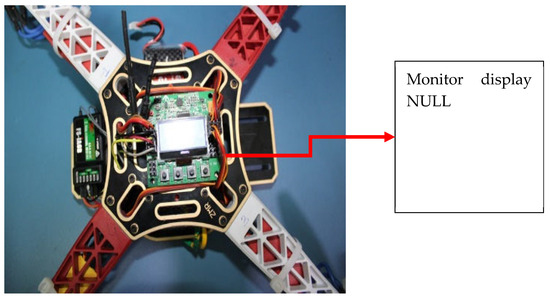

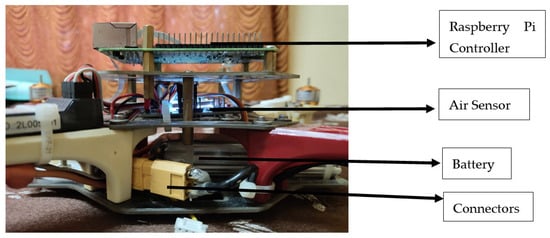

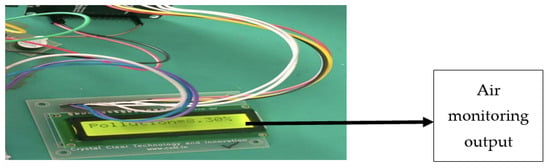

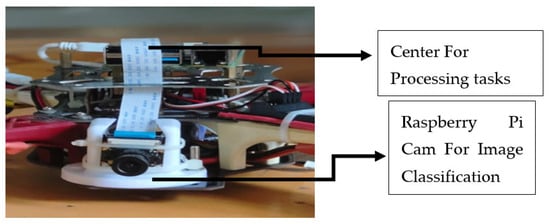

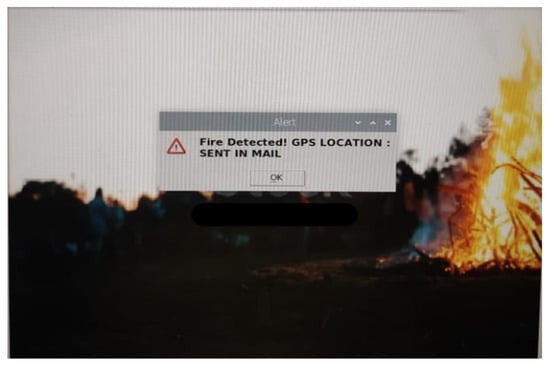

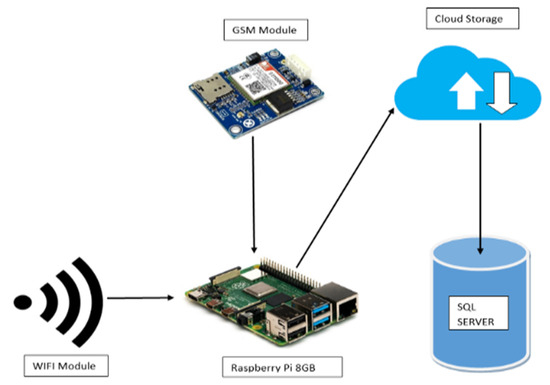

Figure 9 shows the output of the UAV's image classification during flight when nothing is detected: if none of the issues passed to the UAV model as commands is present, the UAV displays NULL on the LED monitor to indicate that no issue has been identified yet. The air quality monitoring setup integrated into the UAV measures the AQI of the air around it, as shown in Figure 10, and the output of the air quality monitoring system is provided in Figure 11. Readings of important air pollutants such as CO2, O3, and CO are collected and classified, and the result is issued as an alert so that people nearby are aware of the air quality in the environment. Figure 12 illustrates the image classification technique that the UAV runs during flight, which is executed by the YOLO V3 algorithm. As shown in Figure 13, the UAV automatically sends warning messages indicating that the surrounding environment is unsafe for travel, allowing people to avoid the area where the issue has occurred.

The data flow of the UAV model is shown in Figure 14, where Wi-Fi and GSM links handle communication between the Raspberry Pi and the cloud. The Raspberry Pi collects and processes data and sends it to the cloud, where cloud services receive, process, and store it, and an SQL database stores the structured data for retrieval and analysis. This setup supports efficient data collection, processing, and storage, enabling robust analytics and monitoring.
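
The last stage of that data flow, storing structured readings in an SQL database for later retrieval and analysis, can be sketched with Python's built-in `sqlite3` module. An in-memory SQLite database stands in here for the cloud-hosted SQL database, whose engine and schema are not specified; the `readings` table, its columns, the `store_reading` helper, and the sample values are hypothetical. The final query illustrates the kind of analysis the stored data enables: finding locations whose average AQI exceeds the 250 alert threshold used in the simulation.

```python
import sqlite3
from datetime import datetime, timezone

conn = sqlite3.connect(":memory:")  # stand-in for the cloud SQL database
conn.execute("""
    CREATE TABLE readings (
        id        INTEGER PRIMARY KEY AUTOINCREMENT,
        taken_at  TEXT NOT NULL,      -- UTC timestamp (ISO 8601)
        latitude  REAL NOT NULL,
        longitude REAL NOT NULL,
        aqi       REAL NOT NULL,
        co2_ppm   REAL,
        co_ppm    REAL,
        o3_ppb    REAL,
        garbage_detected INTEGER NOT NULL DEFAULT 0   -- 0/1 flag from the camera
    )
""")


def store_reading(conn: sqlite3.Connection, lat: float, lon: float, aqi: float,
                  co2: float, co: float, o3: float, garbage: bool) -> None:
    """Insert one record as the Raspberry Pi might forward it (hypothetical schema)."""
    conn.execute(
        "INSERT INTO readings (taken_at, latitude, longitude, aqi, co2_ppm, co_ppm,"
        " o3_ppb, garbage_detected) VALUES (?, ?, ?, ?, ?, ?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), lat, lon, aqi, co2, co, o3, int(garbage)),
    )
    conn.commit()


# Hypothetical sample readings from two locations.
store_reading(conn, 10.001, 20.002, 310, 620, 9.5, 70, True)
store_reading(conn, 10.001, 20.002, 280, 590, 8.1, 64, False)
store_reading(conn, 10.050, 20.010, 95, 420, 1.2, 30, False)

# Locations whose average AQI exceeds the alert threshold of 250.
rows = conn.execute(
    "SELECT latitude, longitude, AVG(aqi) AS avg_aqi, COUNT(*) "
    "FROM readings GROUP BY latitude, longitude HAVING AVG(aqi) > ? "
    "ORDER BY avg_aqi DESC",
    (250,),
).fetchall()
print(rows)
```

Parameterised queries (`?` placeholders) are used throughout so that values arriving from the field are never spliced into SQL text directly.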

Figure 9. NULL output when the condition is FALSE.

Figure 10. Side view of UAV.

Figure 11. Air pollution monitoring output.

Figure 12. Image classification (YOLO V3) model on UAV.

Figure 13. Alert message output.

Figure 14. Data flow of the UAV model.

Table 2 compares the proposed UAV-based air pollution monitoring with terrestrial and satellite-based instruments.

Table 2. Advancement of proposed UAV.

4. Conclusions

The analysis shows that the proposed UAV model achieves an accuracy of 91%. Figure 15 presents a comparison of accuracy, highlighting the improvements in the classification technique. These improvements result in faster classification, allowing the UAV to recognize objects more quickly, which in turn enables faster communication by the drone. Using AI and drone technology to monitor air pollution and identify litter is an effective tool for public health and environmental management. As day-to-day activities severely pollute the globe, land and air pollution levels are rising sharply, forcing people to live in these polluted environments. This model can help prevent the further spread of pollution and preserve the environment for future generations.

Future enhancements will aim to improve the system's accuracy and extend its coverage by adding solar panels for battery charging and backup sensors for long-term operation. Real-time data analytics should also be incorporated, and the system's use expanded to additional environmental monitoring and management tasks. Implementing autonomous systems for these operations could further improve efficiency. In addition, enabling the system to forecast outcomes from data analyzed at specific locations could help devise effective strategies to reduce pollution in those areas, sustainably protecting the environment. A website archiving the forecasting data for future reference would make it possible to identify the most polluted areas and implement the measures needed to mitigate pollution.

Figure 15. Comparison graph.

Author Contributions

Conceptualization, V.L. and D.R.; methodology, M.P.M., G.R.K.K. and S.S.; software, G.R.K.K.; validation, V.L., G.R.K.K. and R.K.; investigation, R.K. and M.P.M.; writing—original draft preparation, V.L. and G.R.K.K.; writing—review and editing, supervision, V.L., M.P.M., G.R.K.K. and S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data are provided within the manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Singh, V.; Singh, D.; Kumar, H. Efficient Application of Deep Neural Networks for Identifying Small and Multiple Weed Patches Using Drone Images. IEEE Access 2024, 12, 71982–71996.

- Yu, M.; Zhang, J.; Zhu, L.; Liang, S.; Lu, W.; Ji, X. An Intelligent System for Outfall Detection in UAV Images Using Lightweight Convolutional Vision Transformer Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 6265–6277.

- Wang, S.; Jiang, H.; Li, Z.; Yang, J.; Ma, X.; Chen, J.; Tang, X. PHSI-RTDETR: A Lightweight Infrared Small Target Detection Algorithm Based on UAV Aerial Photography. Drones 2024, 8, 240.

- Zhu, Y.; Tan, Y.; Chen, Y.; Chen, L.; Lee, K.Y. UAV Path Planning Based on Random Obstacle Training and Linear Soft Update of DRL in Dense Urban Environment. Energies 2024, 17, 2762.

- Yusuf, M.O.; Hanzla, M.; Rahman, H.; Sadiq, T.; Al Mudawi, N.; Almujally, N.A.; Algarni, A. Enhancing Vehicle Detection and Tracking in UAV Imagery: A Pixel Labeling and Particle Filter Approach. IEEE Access 2024, 12, 72896–72911.

- Lyu, L.; Jiang, H.; Yang, F. Improved Dung Beetle Optimizer Algorithm with Multi-Strategy for Global Optimization and UAV 3D Path Planning. IEEE Access 2024, 12, 69240–69257.

- Xia, J.; Liu, Y.; Tan, L. Joint Optimization of Trajectory and Task Offloading for Cellular-Connected Multi-UAV Mobile Edge Computing. Chin. J. Electron. 2024, 33, 823–832.

- Aldossary, M. Optimizing Task Offloading for Collaborative Unmanned Aerial Vehicles (UAVs) in Fog–Cloud Computing Environments. IEEE Access 2024, 12, 74698–74710.

- Sângeorzan, D.D.; Păcurar, F.; Reif, A.; Weinacker, H.; Rușdea, E.; Vaida, I.; Rotar, I. Detection and Quantification of Arnica montana L. Inflorescences in Grassland Ecosystems Using Convolutional Neural Networks and Drone-Based Remote Sensing. Remote Sens. 2024, 16, 2012.

- Xiao, M.; Min, W.; Yang, C.; Song, Y. A Novel Network Framework on Simultaneous Road Segmentation and Vehicle Detection for UAV Aerial Traffic Images. Sensors 2024, 24, 3606.

- Wang, T.; Na, X.; Nie, Y.; Liu, J.; Wang, W.; Meng, Z. Parallel Task Offloading and Trajectory Optimization for UAV-Assisted Mobile Edge Computing via Hierarchical Reinforcement Learning. Drones 2025, 9, 358.

- Yuan, X.; Xu, J.; Li, S. Design and Simulation Verification of Model Predictive Attitude Control Based on Feedback Linearization for Quadrotor UAV. Appl. Sci. 2025, 15, 5218.

- Dai, K.; Zhu, J.; Hou, H.; Li, Q.; Ma, X.; Yu, H. A LiDAR–Camera Spatiotemporal Synchronization Method for Unmanned Aerial Vehicle-Based Ground Target Perception. IEEE Trans. Instrum. Meas. 2025, 74, 1008715.

- Wang, Y.; Xu, T.; Li, J. FocusTrack: A Self-Adaptive Local Sampling Algorithm for Efficient Anti-UAV Tracking. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5003214.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).